Abstract

The predictive capacity of a marker in a population can be described using the population distribution of risk (Huang et al. 2007; Pepe et al. 2008a; Stern 2008). Virtually all standard statistical summaries of predictability and discrimination can be derived from it (Gail and Pfeiffer 2005). The goal of this paper is to develop methods for making inference about risk prediction markers using summary measures derived from the risk distribution. We describe some new clinically motivated summary measures and give new interpretations to some existing statistical measures. Methods for estimating these summary measures are described along with distribution theory that facilitates construction of confidence intervals from data. We show how markers and, more generally, how risk prediction models, can be compared using clinically relevant measures of predictability. The methods are illustrated by application to markers of lung function and nutritional status for predicting subsequent onset of major pulmonary infection in children suffering from cystic fibrosis. Simulation studies show that methods for inference are valid for use in practice.

1. Background

Let D denote a binary outcome variable, such as presence of disease or occurrence of an event within a specified time period, and let Y denote a set of predictive markers used to predict a bad outcome, D = 1, or a good outcome, D = 0. For example, elements of the Framingham risk score (age, gender, total and high-density lipoprotein cholesterol, systolic blood pressure, treatment for hypertension and smoking) are used to predict occurrence of a cardiovascular event within 10 years (http://hp2010.nhlbihin.net/atpiii/calculator.asp). We write the risk associated with marker value Y = y as risk(y) = P[D = 1|Y = y].

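Huang et al. (2007) proposed the predictiveness curve to describe the predictive capacity of Y. It displays the population distribution of risk via the risk quantiles, R(ν) versus ν, where

$$P[\mathrm{risk}(Y) \le R(\nu)] = \nu, \qquad \nu \in (0, 1).$$

The inverse of the predictiveness curve is simply the cumulative distribution function (cdf) of risk(Y),

$$R^{-1}(p) = P[\mathrm{risk}(Y) \le p] \equiv F_{\mathrm{risk}}(p),$$

and correspondingly

$$R(\nu) = F_{\mathrm{risk}}^{-1}(\nu).$$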

Gail and Pfeiffer (2005) noted that standard statistical measures used to quantify the predictive capacity of a risk prediction model can be calculated from the risk distribution function, Frisk(p). These include measures derived from the receiver operating characteristic (ROC) curve and the Lorenz curve, predictive values, misclassification rates, and measures of explained variation. Bura and Gastwirth (2001) used the risk quantiles, R(ν), to assess predictors in binary regression models. They proposed a summary index which they called the total gain.
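To make the two definitions concrete, here is a minimal sketch of the empirical predictiveness curve R(ν) and its inverse F_risk(p) computed from a list of fitted risks risk(Y_i) in a cohort sample. The function names and the order-statistic convention for the quantile are illustrative choices of ours, not the estimators developed in Section 4, and the five risks are hypothetical.

```python
import math

def predictiveness_curve(risks, nu):
    """Empirical risk quantile R(nu): the smallest observed risk r such that
    at least a fraction nu of subjects have risk <= r."""
    ordered = sorted(risks)
    k = max(1, math.ceil(nu * len(ordered)))  # 1-based order statistic
    return ordered[k - 1]

def risk_cdf(risks, p):
    """Empirical F_risk(p) = R^{-1}(p): proportion of subjects with risk <= p."""
    return sum(1 for r in risks if r <= p) / len(risks)

# Hypothetical fitted risks risk(Y_i) for five subjects.
risks = [0.1, 0.2, 0.3, 0.6, 0.8]
print(predictiveness_curve(risks, 0.5))  # 0.3, the median risk
print(risk_cdf(risks, 0.25))             # 0.4, proportion with risk <= 0.25
```

Reading R(ν) and F_risk(p) off the same sorted list mirrors the fact that the two functions are inverses of each other.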

Summary indices are often used to compare prediction models. The area under the ROC curve is widely used in practice for this purpose. However, there is controversy about its use, particularly in the cardiovascular research community (Cook 2007; Pencina et al. 2008). This has motivated another approach to evaluating risk prediction markers that relies on defining clinically meaningful categories of risk. Several summary indices based on this notion have been proposed. The reclassification percent and the net reclassification improvement (NRI) are such summary measures, derived from reclassification tables, and they have recently gained popularity in the applied literature (Ridker et al. 2008; D’Agostino et al. 2008).

In this paper, we explicitly relate existing and new summary measures of prediction to the risk distribution, i.e. to the predictiveness curve. We contrast them qualitatively, paying particular attention to their clinical interpretations and relevance. We then derive distribution theory that can be used for making statistical inference. Note that rigorous methods for inference have not previously been available for several of the existing summary measures; rather, the measures are used informally in practice. Small-sample performance of the new and existing summary measures is investigated with simulation studies.

The methods are illustrated with data from 12,802 children with cystic fibrosis. We describe the data and risk modelling methods in detail in Section 7. Briefly, we compare the capacities of lung function and nutritional measures made in 1995 to predict onset of a pulmonary exacerbation event during the following year. Overall, 41% of children had a pulmonary exacerbation in 1996. Figure 1 displays predictiveness curves (estimated using methods described in Section 7) for two risk models, one based on lung function (FEV1) and one based on weight. We see from Figure 1 that lung function is more predictive in the sense that more subjects have lung-function-based risks at the high and low ends of the risk scale than is true for weight-based risks. Since a good risk marker is one that is helpful to individuals making medical decisions, and because decisions are more easily made when an individual’s risk is high or low than when it is in the middle, we conclude informally from the curves that lung function is a better predictor than weight. We next define formal summary indices that can be used for descriptive and comparative purposes and illustrate them with the cystic fibrosis data.

Figure 1.

Predictiveness curves for FEV1 (solid curve) and weight (dashed curve) as predictors of the risk of having at least one pulmonary exacerbation in the following year in children with cystic fibrosis. The horizontal line indicates the overall proportion of the population with an event, ρ=41%. Using the low risk threshold, pL=0.25, 11% of subjects are classified as low risk according to weight while 32% are classified as low risk according to FEV1.

2. Summary Indices Involving Risk Thresholds

In clinical practice, a subject’s risk is calculated to assist in medical decision making. If his risk is high, he may be recommended for diagnostic, treatment or preventive interventions. If his risk is low, he may avoid interventions that are unlikely to benefit him. In certain clinical contexts, explicit treatment guidelines exist that are based on individual risk calculations. For example, the Third Adult Treatment Panel recommends that if a subject’s 10-year risk of a cardiovascular disease exceeds 20%, he should consider low density lipoprotein (LDL)-lowering therapy (Adult Treatment Panel III 2001). The risk threshold that leads one to opt for an intervention depends on anticipated costs and benefits. These may vary with individuals’ perceptions and preferences (Vickers and Elkin 2006; Hunink et al. 2006). The choice of threshold may also vary with the availability of health care resources. In this section we discuss summary indices that depend on specifying a risk threshold. To be concrete, we suppose that the overall risk in the population, ρ = P[D = 1], is high and that the goal of the risk model is to identify individuals at low risk, risk(Y) < pL, where pL is the risk threshold that defines low risk in the specific clinical context. An analogous discussion would pertain to a low risk population in which a risk model is sought to identify a subset of individuals at high risk. Settings with more than two risk categories arise in practice when multiple treatment options are available; we discuss extensions to them at the end of this section.

For illustration with the cystic fibrosis data, we choose the low risk threshold pL = 0.25, which contrasts with the overall incidence ρ = 0.41. Patients with cystic fibrosis now routinely receive inhaled antibiotic treatment to prevent pulmonary exacerbations, but this was not the case in the 1990s, when our data were collected. If subjects who are at low risk in the absence of treatment, risk(Y) < pL, could be identified, they could forgo treatment and thereby avoid the inconvenience, monetary costs and potentially increased risk of developing therapy-resistant bacterial strains associated with inhaled prophylactic antibiotics.

2.1. Population Proportion at Low Risk

A simple, compelling summary measure is the proportion of the population deemed to be at low risk according to the risk model. This is R−1(pL), the inverse function of the predictiveness curve evaluated at pL, as noted earlier. A good risk prediction marker should identify more people at low risk so that more people can avoid the negative consequences of treatment that is unnecessary for them. That is, a better model will have larger values of R−1(pL). In the cystic fibrosis example, we see from Figure 1 that 32% of subjects in the population are in the low risk stratum based on lung function, while 11% are in the low risk stratum according to weight. A completely uninformative marker would put no one in the low risk stratum, since it assigns risk(Y) = ρ > pL to all subjects.

2.2. Cases and Controls Classified as Low Risk

Another important perspective from which to evaluate risk prediction markers is classification accuracy (Pepe et al. 2008a, Janes, Pepe and Gu 2008). This is characterized by the risk distribution in cases, subjects for whom D = 1, and in controls, subjects with a good outcome D = 0. Specifically, a better risk model will classify fewer cases and more controls as low risk (Pencina et al. 2008). This is desirable because cases should not forego treatment as they may benefit from it. On the other hand, treatment should be avoided for controls since they only suffer its negative consequences. Corresponding summary measures are termed true and false positive rates,

$$\mathrm{TPR}(p_L) = P[\mathrm{risk}(Y) > p_L \mid D = 1], \qquad \mathrm{FPR}(p_L) = P[\mathrm{risk}(Y) > p_L \mid D = 0].$$

Higher TPR(pL) and lower FPR(pL) are desirable.
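A minimal sketch of the empirical TPR(p) and FPR(p), using the convention that a subject is classified as high risk when risk(Y) > p; the data and the function name are hypothetical. The last computed quantity checks the decomposition R−1(p) = ρ{1 − TPR(p)} + (1 − ρ){1 − FPR(p)}, which ties these rates back to the proportion at low risk.

```python
def tpr_fpr(risks, outcomes, p):
    """Empirical TPR(p) = P[risk > p | D = 1] and FPR(p) = P[risk > p | D = 0]."""
    case_risks = [r for r, d in zip(risks, outcomes) if d == 1]
    control_risks = [r for r, d in zip(risks, outcomes) if d == 0]
    tpr = sum(r > p for r in case_risks) / len(case_risks)
    fpr = sum(r > p for r in control_risks) / len(control_risks)
    return tpr, fpr

risks = [0.1, 0.2, 0.3, 0.6, 0.8]   # hypothetical fitted risks
outcomes = [0, 1, 0, 1, 1]          # D = 1 for cases
tpr, fpr = tpr_fpr(risks, outcomes, 0.25)
rho = sum(outcomes) / len(outcomes)
# Proportion at low risk, R^{-1}(0.25), rebuilt from the case and control rates
low_risk = rho * (1 - tpr) + (1 - rho) * (1 - fpr)
print(tpr, fpr, low_risk)
```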

Figure 2 shows cumulative distributions of risk(Y) in cases and controls separately. From this, TPR(p) and FPR(p) can be gleaned for any value of p. We see that the proportion of controls in the low risk stratum is much larger when using lung function as the risk prediction marker than for weight, 1-FPR(pL) = 46% for lung function as opposed to 15% for weight. However, the proportion of cases whose risks exceed pL is also lower for the lung function model (TPR(pL) = 87%) than for the weight model (TPR(pL) = 93%).

Figure 2.

Cumulative distributions of risk based on FEV1 and weight in predicting the risk of having at least one pulmonary exacerbation in the following year in children with cystic fibrosis. Distributions are shown separately for subjects who had events (cases, solid curve) and for subjects who did not (controls, dashed curve). According to FEV1, 13% of cases and 46% of controls are classified as low risk, while only 7% of cases and 15% of controls are assigned low risk status according to weight.

Observe that TPR(pL) and FPR(pL) are indexed by the threshold pL. This contrasts with the display of TPR and FPR that constitutes the ROC curve. ROC curves (Figure 3) suppress the risk thresholds by showing TPR only as a function of FPR, rather than TPR and FPR as functions of the risk threshold (Figure 2). When specific risk thresholds define clinically meaningful risk categories, the TPR and FPR associated with those risk category definitions are of intrinsic interest, more so than the TPR achieved at a fixed FPR value.

Figure 3.

ROC curves for FEV1 (solid curve) and weight (dashed curve) as predictors of risk of having at least one pulmonary exacerbation in the following year in children with cystic fibrosis. The circles mark the (FPR, TPR) points corresponding to the low risk threshold pL = 0.25. The areas under the ROC curve are 0.771 for FEV1 and 0.639 for weight.

2.3. Event Rates in Risk Strata

Another pair of summary measures is the event rates in the two risk strata. These can be thought of as predictive values, PPV(pL) and 1-NPV(pL), defined as

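$$\mathrm{PPV}(p_L) = P[D = 1 \mid \mathrm{risk}(Y) > p_L], \qquad 1 - \mathrm{NPV}(p_L) = P[D = 1 \mid \mathrm{risk}(Y) \le p_L]. \qquad (1)$$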

PPV(pL) is the event rate in the high risk stratum and 1-NPV(pL) is the event rate in the low risk stratum. For a good marker, the event rate PPV(pL) will be high and the event rate 1-NPV(pL) will be low.

By applying Bayes’ theorem to (1), PPV and NPV can be written in terms of TPR and FPR:

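$$\mathrm{PPV}(p) = \frac{\rho\,\mathrm{TPR}(p)}{\rho\,\mathrm{TPR}(p) + (1-\rho)\,\mathrm{FPR}(p)}, \qquad \mathrm{NPV}(p) = \frac{(1-\rho)\{1 - \mathrm{FPR}(p)\}}{\rho\{1 - \mathrm{TPR}(p)\} + (1-\rho)\{1 - \mathrm{FPR}(p)\}}. \qquad (2)$$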

These expressions facilitate estimation of PPV(p) and NPV(p), which we discuss in Section 4.
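The Bayes expressions translate directly into code. A minimal sketch (the function name is ours), fed with the rounded lung-function values reported in Section 2.2, ρ = 0.41, TPR = 0.87 and FPR = 0.54:

```python
def predictive_values(rho, tpr, fpr):
    """PPV(p) and NPV(p) from prevalence, TPR(p) and FPR(p) via Bayes' theorem."""
    ppv = rho * tpr / (rho * tpr + (1 - rho) * fpr)
    npv = (1 - rho) * (1 - fpr) / (rho * (1 - tpr) + (1 - rho) * (1 - fpr))
    return ppv, npv

# Rounded lung-function values from Section 2.2
ppv, npv = predictive_values(rho=0.41, tpr=0.87, fpr=0.54)
print(f"PPV = {ppv:.3f}, 1 - NPV = {1 - npv:.3f}")
```

This gives PPV ≈ 0.53 and 1 − NPV ≈ 0.16. The event rates quoted in Section 2.3 (53% and 17%) agree to within the rounding of the TPR and FPR inputs.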

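Event rates are also functions of the predictiveness curve. Specifically, they are averages of the curve over the ranges (νL, 1) and (0, νL), where νL = R−1(pL):

$$\mathrm{PPV}(p_L) = \frac{1}{1-\nu_L}\int_{\nu_L}^{1} R(\nu)\,d\nu, \qquad 1 - \mathrm{NPV}(p_L) = \frac{1}{\nu_L}\int_{0}^{\nu_L} R(\nu)\,d\nu.$$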
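Because E(D | Y) = risk(Y), the event rate in a risk stratum equals the mean risk in that stratum, i.e. the average of R(ν) over the corresponding range of ν. A small simulation sketch of this, assuming the binormal setting used later for Figure 4 (Y standard normal in controls, normal with mean 1 and variance 1 in cases), for which logit risk(y) = logit(ρ) − 1/2 + y holds exactly. The choices ρ = 0.41, pL = 0.25, the sample size and the seed are arbitrary.

```python
import math
import random

def simulate_binormal(rho, n, seed=2024):
    """Return (true risk, outcome) pairs with Y ~ N(0, 1) in controls and
    Y ~ N(1, 1) in cases; then logit risk(y) = logit(rho) - 1/2 + y exactly."""
    rng = random.Random(seed)
    shift = math.log(rho / (1 - rho)) - 0.5
    data = []
    for _ in range(n):
        d = 1 if rng.random() < rho else 0
        y = rng.gauss(1.0 if d else 0.0, 1.0)
        data.append((1.0 / (1.0 + math.exp(-(shift + y))), d))
    return data

def stratum_summary(data, in_stratum):
    """Observed event rate and mean predicted risk among subjects whose risk
    satisfies in_stratum(risk); the latter averages R(nu) over the stratum."""
    stratum = [(r, d) for r, d in data if in_stratum(r)]
    event_rate = sum(d for _, d in stratum) / len(stratum)
    mean_risk = sum(r for r, _ in stratum) / len(stratum)
    return event_rate, mean_risk

p_low = 0.25
data = simulate_binormal(rho=0.41, n=200_000)
ppv_obs, ppv_curve = stratum_summary(data, lambda r: r > p_low)     # PPV(pL)
onpv_obs, onpv_curve = stratum_summary(data, lambda r: r <= p_low)  # 1 - NPV(pL)
print(f"high stratum: event rate {ppv_obs:.3f} vs mean risk {ppv_curve:.3f}")
print(f"low stratum:  event rate {onpv_obs:.3f} vs mean risk {onpv_curve:.3f}")
```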

For the cystic fibrosis example, estimates of the event rates, 1-NPV(pL) and PPV(pL), are 17% and 53% for the risk strata defined by lung function. In contrast, the event rates are much closer to each other, 24% and 43%, in the two risk strata defined by weight. Again, lung function appears to be the better predictor of low risk. Not only is R−1(pL), the size of the low risk stratum, bigger when using lung function, but 1-NPV(pL), the event rate in the low risk stratum, is also smaller.

2.4. νth Risk Percentile

In the applied literature, variables are often categorized using quantiles. In this vein, categories of risk are sometimes defined using risk quantiles, for which we have used the notation R(ν). For example, Ridker et al. (2000) used quartiles of risk and noted that high sensitivity C-reactive protein (hs-CRP) was more predictive of cardiovascular risk than standard lipid screening, because being in the highest versus the lowest quartile of hs-CRP was associated with a much higher relative risk of future coronary events than was the case for standard lipid measurements.

Another context in which R(ν) is well motivated is when availability of medical resources is limited. Suppose resources are available to provide an intervention to a fraction 1 − ν of the population, those 1 − ν at highest risk. Since R(ν) is the corresponding risk quantile, subjects given the intervention have risks ≥ R(ν). A marker or risk model for which R(ν) is larger is preferable because it ensures that those receiving intervention are at greater risk of a bad outcome in the absence of the intervention.

In the cystic fibrosis example, suppose the 10% of the population deemed to be at highest risk will be treated. If lung function is used to calculate risk, R(0.9) = 0.76, so subjects with risks at or above 0.76 receive treatment. On the other hand, if weight is used to calculate risk, R(0.9) = 0.52, and subjects whose risks are as low as 0.52 will be offered treatment.

2.5. Risk Threshold Yielding Specified TPR or FPR

In a diagnostic setting, it may be important to flag most people with disease as high risk so that they get necessary treatment. In other words, we may require that the TPR reach a certain minimum value, TPR = t. The corresponding risk threshold is an important entity to report. We denote it by R(νT(t)), where νT(t) is the value of ν satisfying TPR(R(ν)) = t. The decision rule that yields TPR = t requires people whose risks are as low as R(νT(t)) to undergo treatment. If the treatment is cumbersome or risky and R(νT(t)) is low, the decision rule may be unacceptable or unethical.

In screening healthy populations for a rare disease such as ovarian cancer, the false positive rate must be very low in order to avoid large numbers of subjects undergoing unnecessary medical procedures. The risk threshold that yields an acceptable FPR must also be acceptable to individuals as a threshold for deciding for or against medical procedures. To maintain a very low FPR, the risk threshold may have to be very high, in which case the decision rule would not be ethical. Reporting the risk threshold that yields a specified FPR = t is therefore often important in practice; we denote this threshold by R(νF(t)), where νF(t) is the value of ν satisfying FPR(R(ν)) = t.

Unlike other predictiveness summary measures, R(νT (t)) and R(νF (t)) may not be suited to the task of comparing markers. It is not clear that a specific ordering of thresholds is always preferable. In the cystic fibrosis example, the risk threshold that yields TPR=0.85 is 0.27 when the calculation is based on lung function, but 0.32 when weight is used. Observe that another consideration is the corresponding false positive rate which is 0.50 for lung function and 0.72 for weight. If one wanted to control the false positive rate, at FPR=0.15 say, the corresponding risk thresholds are 0.54 for lung function and 0.51 for weight. Observe that the lung function based risk threshold is lower than that for weight when controlling the TPR but higher when controlling the FPR.
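Since TPR(p) is the proportion of case risks above p, the threshold R(νT(t)) is essentially the (1 − t) quantile of the risk distribution in cases, and R(νF(t)) the (1 − t) quantile in controls. A minimal sketch with hypothetical data; the function name and the order-statistic convention (return the k-th largest risk in the group, k = ⌈t·n⌉, and classify risk at or above it as high risk) are our own choices.

```python
import math

def threshold_for_rate(group_risks, t):
    """Largest observed risk p such that at least a fraction t of the group has
    risk >= p. Pass case risks for a target TPR, control risks for a target FPR."""
    ordered = sorted(group_risks, reverse=True)
    k = max(1, math.ceil(t * len(ordered) - 1e-9))  # guard against float round-up
    return ordered[k - 1]

case_risks = [0.2, 0.4, 0.6, 0.8, 0.9]      # hypothetical
control_risks = [0.05, 0.1, 0.2, 0.3, 0.5]  # hypothetical
p_tpr = threshold_for_rate(case_risks, 0.6)     # threshold giving TPR = 0.6
p_fpr = threshold_for_rate(control_risks, 0.2)  # threshold giving FPR = 0.2
print(p_tpr, p_fpr)
```

Computing both thresholds side by side, as in the cystic fibrosis comparison above, makes it easy to see the FPR that accompanies a TPR-controlled rule and vice versa.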

2.6. Risk Reclassification Measures

Several summary measures that rely on defined risk categories have been proposed recently. They were defined for comparing a baseline risk model with one that adds a novel marker to the baseline predictors, using risk reclassification tables that involve 3 or more categories of risk. It is illuminating to consider these measures in a much simpler context, where only 2 risk categories defined by a single risk threshold pL are of interest and the baseline model involves no covariates at all, so that the baseline risk equals ρ for all subjects. We discuss the more complex setting later.

Cook (2007) proposes the reclassification percent to summarize predictive information in a model. In our context, all subjects are considered high risk under the baseline model because ρ > pL. The reclassification percent is therefore the proportion of subjects classified as low risk according to the risk model involving Y. This is exactly the summary index R−1(pL) discussed earlier.

Pencina et al. (2008) criticize the reclassification percent because it does not distinguish between desirable risk reclassifications (up for cases and down for controls) and undesirable risk reclassifications (down for cases and up for controls). They propose the net reclassification improvement (NRI) summary statistic as an alternative. We use “up” and “down” to denote changes of one or more risk categories in the upward and downward directions, respectively, for a subject between their baseline and augmented risk values. The NRI is defined as

$$\mathrm{NRI} = \{P(\text{up} \mid D = 1) - P(\text{down} \mid D = 1)\} - \{P(\text{up} \mid D = 0) - P(\text{down} \mid D = 0)\}.$$

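In our simple context, no subject can move up from the baseline high risk category, while cases and controls move down with probabilities 1 − TPR(pL) and 1 − FPR(pL), so it is easy to see that

$$\mathrm{NRI} = \{0 - (1 - \mathrm{TPR}(p_L))\} - \{0 - (1 - \mathrm{FPR}(p_L))\} = \mathrm{TPR}(p_L) - \mathrm{FPR}(p_L),$$
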
where TPR(pL) and FPR(pL) were discussed earlier. We see that in the 2 category setting the NRI statistic is equal to Youden’s index (Youden 1950). Youden’s index has been criticized because it implicitly weighs equally the consequences of classifying a case as low risk, i.e. a case failing to receive intervention, and classifying a control as high risk, i.e. a control subjected to unnecessary intervention. Most often the costs and consequences of these mistakes will differ greatly for cases and controls. Therefore we recommend reporting the two components of the NRI separately, TPR(pL) and FPR(pL). Values were reported for the cystic fibrosis study above. The corresponding NRI values are 0.33 = 0.87 − 0.54 for lung function and 0.08 = 0.93 − 0.85 for weight.
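A sketch that computes the NRI directly from the up/down moves in a two-category reclassification table and confirms, on hypothetical data, that with an uninformative baseline (everyone high risk because ρ > pL) it reduces to TPR(pL) − FPR(pL). The function name is ours.

```python
def nri_two_category(baseline_high, new_high, outcomes):
    """NRI = [P(up|D=1) - P(down|D=1)] - [P(up|D=0) - P(down|D=0)] with two risk
    categories, where 'up' is low -> high and 'down' is high -> low."""
    def net_up(moves):
        up = sum(1 for before, after in moves if not before and after)
        down = sum(1 for before, after in moves if before and not after)
        return (up - down) / len(moves)

    cases = [(b, a) for b, a, d in zip(baseline_high, new_high, outcomes) if d == 1]
    controls = [(b, a) for b, a, d in zip(baseline_high, new_high, outcomes) if d == 0]
    return net_up(cases) - net_up(controls)

risks = [0.1, 0.2, 0.3, 0.6, 0.8]    # hypothetical risks under the model with Y
outcomes = [0, 1, 0, 1, 1]
p_low = 0.25
baseline_high = [True] * len(risks)  # baseline risk rho = 0.6 > p_low for everyone
new_high = [r > p_low for r in risks]
nri = nri_two_category(baseline_high, new_high, outcomes)
print(nri)  # equals TPR(0.25) - FPR(0.25) = 2/3 - 1/2
```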

2.7. Extensions and Discussion

A key use of summary measures is to compare different risk models. One can quantify the difference in performance between two risk models by taking the difference between summary measures derived from the two models. In the cystic fibrosis example discussed here, the two risk models involve completely different markers. However, one could also entertain two models that involve some common predictors. Risk reclassification ideas emerged in the setting where one model involves standard baseline predictors and the other includes a novel marker in addition to the baseline predictors. Taking the difference in summary measures for the two models is a sensible way of assessing improvement in performance in this context too.

Recall that when only 2 risk categories (low versus high) exist, Cook’s reclassification percent is equal to R−1(pL) when the baseline risk does not depend on baseline covariates. However, the reclassification percent is not equal to the difference of values for R−1(pL) between the baseline and augmented models when the baseline model does involve covariates. In general, even when two models have exactly the same predictive performance, the reclassification percent is typically non-zero. In fact it has been shown to vary dramatically with correlations between predictors in one model versus another (Janes et al. 2008). This measure therefore does not seem well suited for gauging the difference between predictive capacities of two models. Instead we suggest that one simply focus on the difference in proportions of subjects classified as low (or high) risk with the two models, i.e. differences in R−1(pL).

We represented the NRI statistic as TPR(pL)-FPR(pL) in the simple setting. It is easy to show that when two models involve covariates, the NRI statistic to compare the two models is the difference (TPR1(pL)-TPR2(pL))-(FPR1(pL)-FPR2(pL)) where subscripts 1 and 2 are used to index the two models. In analogy with our earlier discussion, we recommend reporting the two comparative components separately, TPR1(pL)-TPR2(pL) and FPR1(pL)-FPR2(pL), rather than their difference, the NRI, because typically changes in TPR should be weighted differently than changes in FPR.

Summarizing data is difficult when more than two risk categories are involved. Statistics such as the NRI have been criticized because they do not distinguish between changes of one risk category and more than one risk category (Pepe et al. 2008b). In a similar vein, when 3 risk categories exist with specific treatment recommendations for each, misclassifying a case as being in the lowest risk level may be more serious than misclassifying him as in the middle category. Similarly, misclassifying a control as being in the highest risk level may be more serious than misclassifying him as being in the middle category. Without specifying utilities associated with different types of misclassifications, any accumulation of data across risk categories is difficult to justify. For these settings we propose use of a vector of summary statistics distinguished by the risk thresholds. For example, suppose we consider three risk categories for the cystic fibrosis study defined by two thresholds pL = 0.25 and pH = 0.75. We could report: the proportions of subjects in the highest and lowest categories, (1−R−1(pH), R−1(pL)); the proportions of cases and controls in each category, (TPR(pH), 1−TPR(pL)) and (FPR(pH), 1−FPR(pL)); and so forth.
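The vector of summaries proposed above for three risk categories can be tabulated in a few lines. A minimal sketch with hypothetical data and the thresholds pL = 0.25 and pH = 0.75; the function name is ours, and "low" is taken as risk ≤ pL so that it is the complement of the high-risk rule risk > p used for TPR and FPR.

```python
def category_summary(risks, outcomes, p_low, p_high):
    """Proportions in the lowest (risk <= p_low) and highest (risk > p_high) of
    three risk categories, overall and separately for cases and controls."""
    def low_high(group):
        low = sum(r <= p_low for r in group) / len(group)
        high = sum(r > p_high for r in group) / len(group)
        return low, high

    cases = [r for r, d in zip(risks, outcomes) if d == 1]
    controls = [r for r, d in zip(risks, outcomes) if d == 0]
    return {
        "all": low_high(risks),          # (R^{-1}(pL), 1 - R^{-1}(pH))
        "cases": low_high(cases),        # (1 - TPR(pL), TPR(pH))
        "controls": low_high(controls),  # (1 - FPR(pL), FPR(pH))
    }

risks = [0.1, 0.2, 0.3, 0.6, 0.8]   # hypothetical
outcomes = [0, 1, 0, 1, 1]
summary = category_summary(risks, outcomes, p_low=0.25, p_high=0.75)
print(summary)
```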

Although statistical summaries that depend on clinically meaningful risk thresholds are appealing, the choice of risk thresholds is often uncertain. Different clinicians or policy makers may choose different risk categorizations. This argues for displaying the risk distributions as continuous curves since one can then read from them summary indices described here using any risk threshold of interest to the reader.

3. Threshold Independent Summary Measures

Classic measures that describe the predictive strength of a model can be interpreted as summary indices for the predictiveness curve. We describe the relationships next. These measures can complement the display of risk distributions for several models when no specific risk thresholds are of key interest. In addition, formal hypothesis tests to compare predictiveness curves can be based on them.

3.1. Proportion of Explained Variation

The proportion of explained variation, also called R², is the most popular measure of predictive power for continuous outcomes and is popular for binary outcomes too. It is most commonly defined as

$$\mathrm{PEV} = \frac{\mathrm{var}(D) - E\{\mathrm{var}(D \mid Y)\}}{\mathrm{var}(D)}.$$

But it can also be written as

$$\mathrm{PEV} = \frac{\mathrm{var}\{\mathrm{risk}(Y)\}}{\rho(1-\rho)},$$

because var(D) = E{var(D|Y)} + var{E(D|Y)}, E(D|Y) = P(D = 1|Y) = risk(Y) and var(D) = ρ(1 − ρ). PEV is a standardized measure of the variance in risk(Y), since ρ(1 − ρ) in the denominator is the risk variance for an ideal marker that predicts risk(Y) = 1 for cases and risk(Y) = 0 for controls. Hu et al. (2006) noted that PEV can also be written as the squared correlation between D and risk(Y).

An unintuitive but interesting and simple interpretation of PEV is as the difference between the averages of risk(Y) for cases and controls (Pepe, Feng and Gu 2008b):

$$\mathrm{PEV} = E\{\mathrm{risk}(Y) \mid D = 1\} - E\{\mathrm{risk}(Y) \mid D = 0\}.$$

In summary, for the cystic fibrosis data, PEV, calculated as 0.22 for the lung function measure and 0.05 for weight, can be interpreted as variances of risk distributions displayed in Figure 1 standardized by the ideal variance of 0.41 × (1 − 0.41) = 0.24, or as differences in means of distributions shown in Figure 2. In Figure 1, var(risk(Y)) = 0.053 for lung function and 0.012 for weight, yielding 0.22 and 0.05 respectively when divided by 0.24. On the other hand, in Figure 2, case and control mean risks are 0.54 and 0.32 for lung function while they are 0.44 and 0.39 for weight, again yielding 0.54 − 0.32 = 0.22 and 0.44 − 0.39 = 0.05 for the PEV values calculated as the differences in means.
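The three interpretations of PEV (standardized variance of risk(Y), difference in mean risks between cases and controls, squared correlation between D and risk(Y)) coincide in the population when risk(Y) is the true risk. A simulation sketch, assuming the binormal model with controls N(0, 1) and cases N(1, 1), so that logit risk(y) = logit(ρ) − 1/2 + y; ρ = 0.41 only mimics the cystic fibrosis event rate, and the sample estimates agree up to sampling error.

```python
import math
import random

def simulate_binormal(rho, n, seed=7):
    """(true risk, outcome) pairs: Y ~ N(0, 1) in controls, Y ~ N(1, 1) in cases,
    so that logit risk(y) = logit(rho) - 1/2 + y."""
    rng = random.Random(seed)
    shift = math.log(rho / (1 - rho)) - 0.5
    out = []
    for _ in range(n):
        d = 1 if rng.random() < rho else 0
        y = rng.gauss(1.0 if d else 0.0, 1.0)
        out.append((1.0 / (1.0 + math.exp(-(shift + y))), d))
    return out

data = simulate_binormal(rho=0.41, n=200_000)
n = len(data)
n_cases = sum(d for _, d in data)
rho_hat = n_cases / n
mean_risk = sum(r for r, _ in data) / n
var_risk = sum((r - mean_risk) ** 2 for r, _ in data) / n

# 1. Standardized variance of risk(Y)
pev_var = var_risk / (rho_hat * (1 - rho_hat))
# 2. Difference between mean risks in cases and controls
pev_diff = (sum(r for r, d in data if d == 1) / n_cases
            - sum(r for r, d in data if d == 0) / (n - n_cases))
# 3. Squared correlation between D and risk(Y)
cov = sum((r - mean_risk) * (d - rho_hat) for r, d in data) / n
pev_corr2 = cov ** 2 / (var_risk * rho_hat * (1 - rho_hat))

print(round(pev_var, 3), round(pev_diff, 3), round(pev_corr2, 3))
```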

Pencina et al. (2008) employ the PEV summary measure to gauge the improvement in risk prediction when clinically relevant risk thresholds do not exist. They do not recognize it as the proportion of explained variation, but call it the integrated discrimination improvement (IDI) and note that it has another interpretation as Youden’s index integrated uniformly over (0, 1):

$$\mathrm{PEV} = \int_0^1 \mathrm{YI}(p)\,dp,$$

where YI(p) = P(risk(Y) > p|D = 1) − P(risk(Y) > p|D = 0) is Youden’s index for the binary decision rule that is positive when risk(Y) > p. In other words, PEV can also be interpreted as the difference between the integrals of the TPR(p) and FPR(p) functions defined earlier.
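Because risks lie in [0, 1], the integral of P(risk > p) over p ∈ (0, 1) is the mean risk, so integrating YI(p) recovers the difference in mean risks. A numerical sketch on hypothetical case and control risks, using a simple midpoint rule of our own choosing:

```python
def youden(case_risks, control_risks, p):
    """YI(p) = P(risk > p | D = 1) - P(risk > p | D = 0) for empirical risks."""
    tpr = sum(r > p for r in case_risks) / len(case_risks)
    fpr = sum(r > p for r in control_risks) / len(control_risks)
    return tpr - fpr

def integrated_youden(case_risks, control_risks, grid_size=10_000):
    """Midpoint-rule approximation to the integral of YI(p) over (0, 1)."""
    h = 1.0 / grid_size
    return h * sum(youden(case_risks, control_risks, (i + 0.5) * h)
                   for i in range(grid_size))

case_risks = [0.2, 0.6, 0.8]      # hypothetical
control_risks = [0.1, 0.3]
idi = integrated_youden(case_risks, control_risks)
mean_diff = (sum(case_risks) / len(case_risks)
             - sum(control_risks) / len(control_risks))
print(round(idi, 6), round(mean_diff, 6))
```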

In a commentary on the Pencina et al. (2008) paper, Ware and Cai (2008) suggest that IDI, denoted here by PEV, does not depend on the overall event rate, ρ = P(D = 1). We disagree. To illustrate, suppose we have a single marker with risk function risk(Y) increasing in Y. Then

where risk(y) = p. Here, the conditional probabilities, P(Y > y|D = 1) and P(Y > y|D = 0), are independent of prevalence, ρ, but is a function of ρ. To demonstrate, consider a simple linear logistic regression model,

| (3) |

And note that

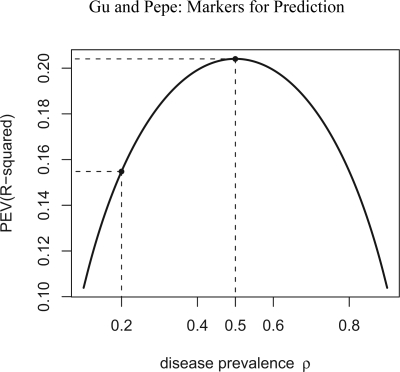

Since , clearly varies with ρ, so does its derivative. Figure 4 shows the relationship between PEV and ρ for a marker Y that is standard normally distributed in controls and normally distributed with mean 1 and variance 1 in cases. The risk is a simple linear logistic risk function (equation (3)). As ρ increases from 0 to 1, we see that PEV increases then decreases with maximum occurring at ρ = 0.5. Janssens et al. (2006) also demonstrated dependence of PEV on ρ through a simulation study.
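The dependence in Figure 4 can be reproduced numerically. A sketch, assuming the binormal model with controls N(0, 1) and cases N(1, 1), where logit risk(y) = logit(ρ) − 1/2 + y; the population PEV is computed as the difference in mean risks by a plain Riemann sum, and the integration range and step are arbitrary choices. In this particular model the substitution y → 1 − y also shows PEV(ρ) = PEV(1 − ρ), consistent with the maximum at ρ = 0.5.

```python
import math
from statistics import NormalDist

def pev_binormal(rho, lo=-10.0, hi=11.0, steps=21_000):
    """Population PEV = E[risk|D=1] - E[risk|D=0] when Y ~ N(0,1) in controls,
    Y ~ N(1,1) in cases and logit risk(y) = logit(rho) - 1/2 + y."""
    h = (hi - lo) / steps
    shift = math.log(rho / (1 - rho)) - 0.5
    cases, controls = NormalDist(1.0, 1.0), NormalDist(0.0, 1.0)
    total = 0.0
    for i in range(steps + 1):
        y = lo + i * h
        risk = 1.0 / (1.0 + math.exp(-(shift + y)))
        total += risk * (cases.pdf(y) - controls.pdf(y))
    return total * h

for rho in (0.05, 0.2, 0.5, 0.8, 0.95):
    print(rho, round(pev_binormal(rho), 4))
```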

Figure 4.

Relationship between the proportion of explained variation, PEV, and the prevalence. A linear logistic risk model with controls standard normally distributed and cases normally distributed with mean 1 and variance 1 was used to generate the data. Maximum PEV occurs at ρ=0.5.

The proportion of explained variation has been defined in other ways, notably based on notions of log likelihood (deviance). Gail and Pfeiffer (2005) note that these can also be calculated from the risk distribution. However, Zheng and Agresti (2000) make the point that these summary measures are difficult to interpret and we concur wholeheartedly. Therefore, we do not pursue them further in this paper but note that methods for inference could be developed in analogy with those we develop here for PEV.

3.2. Total Gain

Total gain, proposed by Bura and Gastwirth (2001), is defined as

$$\mathrm{TG} = \int_0^1 |R(\nu) - \rho|\,d\nu. \qquad (4)$$

This is the area sandwiched between the predictiveness curve and the horizontal line at ρ, which is the predictiveness curve for a completely uninformative marker assigning risk(Y) = ρ to all subjects. TG is appealing because it can be visualized directly from the predictiveness curve. For a perfect risk prediction model, the predictiveness curve is a step function rising from 0 to 1 at ν = 1 − ρ. The corresponding TG is 2ρ(1 − ρ).
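Because R(ν) is the quantile function of risk(Y), the area in (4) equals the mean absolute deviation E|risk(Y) − ρ|, with ρ = E{risk(Y)} for a well-calibrated model. A minimal empirical sketch (function name ours, data hypothetical) that reproduces the two reference values above: 2ρ(1 − ρ) for a perfect model and 0 for an uninformative one.

```python
def total_gain(risks):
    """TG = area between the empirical predictiveness curve and the line at rho,
    i.e. the mean absolute deviation of risk(Y) about its mean."""
    rho = sum(risks) / len(risks)
    return sum(abs(r - rho) for r in risks) / len(risks)

perfect = [1.0, 1.0, 0.0, 0.0, 0.0]   # perfect model with rho = 0.4
uninformative = [0.4] * 5             # everyone assigned risk rho
print(total_gain(perfect), 2 * 0.4 * 0.6)
print(total_gain(uninformative))
```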

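Other interpretations can be made for TG. Huang and Pepe (2008b) have shown that TG is equivalent to the Kolmogorov-Smirnov measure of distance between risk distributions for cases and controls. This is an ROC summary index (Pepe 2003, page 80):

$$\mathrm{KS} = \max_{p}\,\{\mathrm{TPR}(p) - \mathrm{FPR}(p)\} = \max_{t \in (0,1)}\,\{\mathrm{ROC}(t) - t\}, \qquad (5)$$

where ROC(t) denotes the TPR achieved at FPR = t, and

$$\mathrm{TG} = 2\rho(1-\rho)\,\mathrm{KS} = 2\rho(1-\rho)\max_{p}\,\{\mathrm{TPR}(p) - \mathrm{FPR}(p)\}. \qquad (6)$$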

In fact we can write this more simply.

Result

$$\mathrm{TG} = 2\rho(1-\rho)\{\mathrm{TPR}(\rho) - \mathrm{FPR}(\rho)\}. \qquad (7)$$

Proof

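Let ν* be the point where R(ν*) = ρ. We have

$$\mathrm{TG} = \int_0^{\nu^*} \{\rho - R(\nu)\}\,d\nu + \int_{\nu^*}^1 \{R(\nu) - \rho\}\,d\nu.$$

Furthermore, because $\int_0^1 R(\nu)\,d\nu = E\{\mathrm{risk}(Y)\} = \rho$ and $\int_0^1 \rho\,d\nu = \rho$, setting these two terms equal it follows that

$$\int_0^{\nu^*} \{\rho - R(\nu)\}\,d\nu = \int_{\nu^*}^1 \{R(\nu) - \rho\}\,d\nu.$$

Therefore TG can be written as

$$\mathrm{TG} = 2\int_{\nu^*}^1 \{R(\nu) - \rho\}\,d\nu = 2\{\rho\,\mathrm{TPR}(\rho) - \rho(1 - \nu^*)\} = 2\rho\{\mathrm{TPR}(\rho) - 1 + R^{-1}(\rho)\},$$

because $\int_{\nu^*}^1 R(\nu)\,d\nu = P[D = 1, \mathrm{risk}(Y) > \rho] = \rho\,\mathrm{TPR}(\rho)$ and ν* = R−1(ρ). Moreover, since 1 − R−1(ρ) = ρTPR(ρ) + (1 − ρ)FPR(ρ), the above representation of TG can be further simplified to 2ρ(1 − ρ){TPR(ρ) − FPR(ρ)}.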

This representation of TG is useful for estimation and for deriving asymptotic distribution theory. Interestingly, by equating (6) and (7), we find that the maximum value of TPR(p) − FPR(p) occurs at the risk threshold p = ρ. Another short proof follows by differentiation. In particular, since

$$\mathrm{TPR}(R(\nu)) - \mathrm{FPR}(R(\nu)) = \frac{1}{\rho}\int_{\nu}^{1} R(u)\,du - \frac{1}{1-\rho}\int_{\nu}^{1} \{1 - R(u)\}\,du, \qquad (8)$$

taking the derivative of the right side with respect to ν and setting it to 0, we have

$$-\frac{R(\nu)}{\rho} + \frac{1 - R(\nu)}{1-\rho} = 0$$

at the solution. That is, the solution is at R(ν) = ρ. In the illustrative setting used above, now with ρ = 0.2 (Y standard normal in controls and normal with mean 1 and variance 1 in cases), Figure 5 shows how TPR(p) − FPR(p) varies with p. The maximum value, 0.39, is achieved at p = 0.2, i.e. at p = ρ.
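In the binormal setting the curve in Figure 5 has a closed form: risk(Y) > p exactly when Y > c(p) = logit(p) − logit(ρ) + 1/2, so TPR(p) − FPR(p) = Φ(c) − Φ(c − 1). A sketch that scans p on a grid (grid spacing ours) and locates the maximum; in this population version the maximum equals 2Φ(0.5) − 1 ≈ 0.383 and sits at p = ρ.

```python
import math
from statistics import NormalDist

PHI = NormalDist().cdf  # standard normal cdf

def logit(x):
    return math.log(x / (1 - x))

def youden_at_threshold(p, rho):
    """TPR(p) - FPR(p) when Y ~ N(0, 1) in controls, Y ~ N(1, 1) in cases and
    logit risk(y) = logit(rho) - 1/2 + y, so risk(Y) > p  <=>  Y > c(p)."""
    c = logit(p) - logit(rho) + 0.5
    tpr = 1 - PHI(c - 1)   # P(Y > c | D = 1)
    fpr = 1 - PHI(c)       # P(Y > c | D = 0)
    return tpr - fpr

rho = 0.2
grid = [i / 1000 for i in range(1, 1000)]
values = [youden_at_threshold(p, rho) for p in grid]
best = max(range(len(grid)), key=values.__getitem__)
print(grid[best], round(values[best], 3))   # 0.2 0.383
```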

Figure 5.

Association between TPR(p) − FPR(p) and p. A linear logistic risk model with controls standard normally distributed and cases normally distributed with mean 1 and variance 1 was used to generate the data. Overall prevalence of event ρ = 0.2. The maximum value, also known as the Kolmogorov-Smirnov distance, occurs at p = ρ.

Another appealing feature of TG is that after it is standardized by 2ρ(1 − ρ), the total gain for a perfect marker, it is functionally independent of ρ. Let $\widetilde{\mathrm{TG}}$ denote the standardized total gain,

$$\widetilde{\mathrm{TG}} = \frac{\mathrm{TG}}{2\rho(1-\rho)} = \mathrm{TPR}(\rho) - \mathrm{FPR}(\rho),$$

so that $\widetilde{\mathrm{TG}}$ ∈ [0, 1]. We focus on $\widetilde{\mathrm{TG}}$ here. It is independent of disease prevalence because of its interpretation as the Kolmogorov-Smirnov ROC summary index. Moreover, based on the results above, $\widetilde{\mathrm{TG}}$ is simply interpreted as the difference between the proportions of cases and controls with risks above the average, ρ = P(D = 1) = E(risk(Y)).

In the cystic fibrosis example, TG based on lung function is 0.20, while TG based on weight is 0.09. Since the overall event rate is ρ = 41%, the corresponding standardized TG values are 0.42 for lung function and 0.20 for weight.

3.3. Area Under the ROC Curve and Further Discussion

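The area under the ROC curve is widely used to summarize and compare predictive markers and models. It can be interpreted simply as the probability of correctly ordering subjects with and without events using risk(Y):

$$\mathrm{AUC} = P\{\mathrm{risk}(Y_D) > \mathrm{risk}(Y_{\bar D})\},$$

where YD and YD̄ are the marker values of a randomly chosen case and a randomly chosen control.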
However, it has been criticized widely for having little relevance to clinical practice (Cook 2007; Pepe and Janes 2008; Pepe et al. 2007). In particular, the task facing the clinician in practice is not to order risks for two individuals. Part of the appeal of the AUC, however, lies in the fact that it depends neither on prevalence, ρ, nor on risk thresholds. Yet in the context of risk prediction within a specific clinical population, these attributes may be weaknesses. In particular, when specific risk thresholds are of interest, the ROC curve hides them. In Figure 3, we plot the ROC curves for risk based on lung function and on weight. The AUC values are 0.771 and 0.639, respectively.

Interestingly, all of the measures discussed here can be thought of as distances between the risk distributions for cases and controls (Figure 2), measured in different ways. The PEV is the difference between the means of the case and control risk distributions. The TG is the Kolmogorov-Smirnov distance, which we have shown equals the difference between the proportions of cases and controls with risks larger than ρ. The AUC is equivalent to the Wilcoxon measure of distance between the risk distributions for cases and controls.

4. Estimation of Summary Measures

We now turn to estimation of the summary indices from data. We focus on the scenario where Y is a single continuous marker, but Y may also be a predefined combination of multiple markers. For example, a combination score may be derived from a training dataset, and our task is then to evaluate it using a test dataset.

We use the following notation: Y, YD and YD̄ are marker measurements from the general, case and control populations, respectively. Let F, FD and FD̄ be the corresponding distribution functions and f, fD and fD̄ the corresponding density functions. We assume that the risk, risk(Y) = P(D = 1|Y), is monotone increasing in Y. Under this assumption R(ν) = P{D = 1|Y = F−1(ν)}. Thus the curve R(ν) versus ν is the same as the curve risk(Y) versus F(Y), and the predictiveness curve can be obtained by first estimating the risk model risk(Y) and then the marker distribution F. Let YDi, i = 1, ..., nD, be nD independent identically distributed observations from cases, and YD̄i, i = 1, ..., nD̄, be nD̄ independent identically distributed observations from controls. We write Yi, i = 1, ..., n, for {YD1, ..., YDnD, YD̄1, ..., YD̄nD̄}, where n = nD + nD̄.

Suppose the risk model is risk(Y) = P(D = 1|Y) = G(θ, Y), where

$$\mathrm{logit}\{G(\theta, Y)\} = \theta_0 + \theta_1 h(Y), \qquad \theta = (\theta_0, \theta_1),$$

and h is some monotone increasing function of Y. This is a very general formulation. As a special case, logit{G(θ, Y)} could be as simple as θ0 + θ1Y with θ1 > 0, the ordinary linear logistic model. We consider estimation first under a cohort or cross-sectional design and later discuss case-control designs, for which the logistic regression formulation is particularly helpful.

4.1. Cohort Design

Suppose we have n independent identically distributed observations (Yi, Di) from the population. From these we obtain the maximum likelihood estimate θ̂ of θ, together with empirical estimates F̂, F̂D, F̂D̄ and ρ̂ of F, FD, FD̄ and ρ. We use these to calculate the estimated summary indices. The summary measures that involve risk thresholds are:

- the risk quantile, R(ν);
- the population proportion with risk below p, R−1(p);
- the proportions of cases and controls with risks above p, TPR(p) and FPR(p);
- the event rates in the risk strata, PPV(p) and 1 − NPV(p);
- the risk thresholds yielding a specified TPR or FPR, R(νT(t)) and R(νF(t)).

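We plug θ̂ and F̂ into G to obtain estimators of R(ν) and R−1(p):

$$\hat R(\nu) = G\{\hat\theta, \hat F^{-1}(\nu)\}, \qquad \hat R^{-1}(p) = \hat F\{G^{-1}(\hat\theta, p)\},$$

where G−1(θ, p) denotes the marker value y solving G(θ, y) = p.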
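The sketch below shows these two plug-in estimators. It assumes the linear logistic special case h(Y) = Y with θ̂1 > 0, and it takes θ̂ as already estimated. The helper names (G, G_inv, ecdf, empirical_quantile) are illustrative. The empirical quantile follows the common convention "smallest y with F̂(y) ≥ ν"; other quantile conventions would change results slightly in small samples.

```python
import math
from bisect import bisect_right


def G(theta, y):
    """Linear logistic risk model (h(Y) = Y assumed): logit G = theta0 + theta1*y."""
    theta0, theta1 = theta
    return 1.0 / (1.0 + math.exp(-(theta0 + theta1 * y)))


def G_inv(theta, p):
    """Marker value y solving G(theta, y) = p; requires theta1 > 0."""
    theta0, theta1 = theta
    return (math.log(p / (1.0 - p)) - theta0) / theta1


def ecdf(sample, t):
    """Empirical CDF: proportion of observations <= t."""
    return bisect_right(sorted(sample), t) / len(sample)


def empirical_quantile(sample, nu):
    """Smallest observation y with ecdf(y) >= nu, for 0 < nu <= 1."""
    xs = sorted(sample)
    # the small tolerance guards against floating-point error in nu * n
    k = min(max(math.ceil(nu * len(xs) - 1e-9), 1), len(xs))
    return xs[k - 1]


def R_hat(theta, sample, nu):
    """Estimated predictiveness curve: G(theta_hat, F_hat^{-1}(nu))."""
    return G(theta, empirical_quantile(sample, nu))


def R_inv_hat(theta, sample, p):
    """Estimated proportion with risk <= p: F_hat(G^{-1}(theta_hat, p))."""
    return ecdf(sample, G_inv(theta, p))


theta = (0.0, 1.0)               # hypothetical fitted parameters
sample = [0.0, 1.0, 2.0, 3.0]    # hypothetical marker values
print(R_inv_hat(theta, sample, 0.5), round(R_hat(theta, sample, 0.5), 4))  # 0.25 0.7311
```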

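Estimates of the proportions of cases and controls with risks above p are:

$$\widehat{\mathrm{TPR}}(p) = 1 - \hat F_D\{G^{-1}(\hat\theta, p)\}, \qquad \widehat{\mathrm{FPR}}(p) = 1 - \hat F_{\bar D}\{G^{-1}(\hat\theta, p)\}.$$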
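Because the fitted risk is increasing in Y, 1 − F̂D{G−1(θ̂, p)} is simply the proportion of cases whose fitted risk exceeds p, and likewise for controls. The sketch below computes the estimates in that form. The linear logistic model and the toy data in the usage line are assumptions for illustration.

```python
import math


def fitted_risk(theta, y):
    """Linear logistic fitted risk (assumed model): logit = theta0 + theta1*y."""
    theta0, theta1 = theta
    return 1.0 / (1.0 + math.exp(-(theta0 + theta1 * y)))


def tpr_fpr_hat(theta, cases, controls, p):
    """Proportions of cases and of controls whose fitted risk exceeds p."""
    tpr = sum(fitted_risk(theta, y) > p for y in cases) / len(cases)
    fpr = sum(fitted_risk(theta, y) > p for y in controls) / len(controls)
    return tpr, fpr


print(tpr_fpr_hat((0.0, 1.0), [-1.0, 0.5, 1.0, 2.0], [-2.0, -1.0, 0.5, -0.5], 0.5))  # (0.75, 0.25)
```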

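We write the event rates in the risk strata in terms of TPR(p) and FPR(p) to facilitate their estimation:

$$\mathrm{PPV}(p) = \frac{\rho\,\mathrm{TPR}(p)}{\rho\,\mathrm{TPR}(p) + (1-\rho)\,\mathrm{FPR}(p)}, \qquad 1-\mathrm{NPV}(p) = \frac{\rho\{1-\mathrm{TPR}(p)\}}{\rho\{1-\mathrm{TPR}(p)\} + (1-\rho)\{1-\mathrm{FPR}(p)\}},$$

and estimate them by substituting ρ̂ and the estimates of TPR(p) and FPR(p).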
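These two expressions are Bayes' theorem applied to the risk strata. The sketch below takes ρ, TPR(p) and FPR(p) as inputs, so ρ̂ may come from a separate cohort, as in the case-control design. As a check, plugging in the true simulation values at p = 0.1 from Table 1 (ρ = 0.2, TPR = 0.905, FPR = 0.622) recovers the tabulated PPV(0.1) = 0.267 and NPV(0.1) = 0.941.

```python
def ppv(rho, tpr, fpr):
    """P(D = 1 | risk > p), by Bayes' theorem."""
    return rho * tpr / (rho * tpr + (1.0 - rho) * fpr)


def npv(rho, tpr, fpr):
    """P(D = 0 | risk <= p); 1 - npv(...) is the event rate in the low-risk stratum."""
    low_cases = rho * (1.0 - tpr)
    low_controls = (1.0 - rho) * (1.0 - fpr)
    return low_controls / (low_cases + low_controls)


print(round(ppv(0.2, 0.905, 0.622), 3), round(npv(0.2, 0.905, 0.622), 3))  # 0.267 0.941
```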

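In a cohort study, these estimates equal the empirical proportions of cases among subjects with estimated risks above and below p, respectively. The formulations are equally valid in a case-control study. Finally, the risk thresholds yielding a specified TPR or FPR are obtained by first calculating the corresponding quantile of Y and then plugging it into the fitted risk model:

$$\hat R\{\nu_T(t)\} = G\{\hat\theta, \hat F_D^{-1}(1-t)\}, \qquad \hat R\{\nu_F(t)\} = G\{\hat\theta, \hat F_{\bar D}^{-1}(1-t)\},$$

where t is the specified TPR or FPR, respectively.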
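The sketch below implements the two threshold estimators. It makes the same illustrative assumptions as before: a linear logistic model, a given θ̂, and an empirical quantile taken as the smallest observation with F̂ ≥ q. In the usage example, hypothetical θ̂ = (0, 1) and four case values give the threshold 0.5, which three of the four cases exceed (TPR = 0.75).

```python
import math


def G(theta, y):
    """Linear logistic fitted risk (assumed model)."""
    theta0, theta1 = theta
    return 1.0 / (1.0 + math.exp(-(theta0 + theta1 * y)))


def empirical_quantile(sample, q):
    """Smallest observation y with empirical CDF >= q, for 0 < q <= 1."""
    xs = sorted(sample)
    k = min(max(math.ceil(q * len(xs) - 1e-9), 1), len(xs))
    return xs[k - 1]


def threshold_for_tpr(theta, cases, t):
    """Risk threshold with a proportion t of cases above it: G(theta_hat, F_D_hat^{-1}(1 - t))."""
    return G(theta, empirical_quantile(cases, 1.0 - t))


def threshold_for_fpr(theta, controls, t):
    """Risk threshold with a proportion t of controls above it."""
    return G(theta, empirical_quantile(controls, 1.0 - t))


print(threshold_for_tpr((0.0, 1.0), [0.0, 1.0, 2.0, 3.0], 0.75))  # 0.5
```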

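Summary measures that do not involve specific risk thresholds are the proportion of explained variation (PEV), the standardized total gain (TG) and the area under the ROC curve (AUC). Recall that PEV is the difference between the mean risks in cases and in controls. Sample means of the estimated risks yield an estimator of PEV:

$$\widehat{\mathrm{PEV}} = \frac{1}{n_D}\sum_{i=1}^{n_D} G(\hat\theta, Y_{Di}) - \frac{1}{n_{\bar D}}\sum_{i=1}^{n_{\bar D}} G(\hat\theta, Y_{\bar D i}).$$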
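The PEV estimator is a difference of two sample means of fitted risks. In the sketch below, the caller supplies a hypothetical linear logistic θ̂.

```python
import math
from statistics import fmean


def G(theta, y):
    """Linear logistic fitted risk (assumed model)."""
    theta0, theta1 = theta
    return 1.0 / (1.0 + math.exp(-(theta0 + theta1 * y)))


def pev_hat(theta, cases, controls):
    """Mean fitted risk among cases minus mean fitted risk among controls."""
    return fmean(G(theta, y) for y in cases) - fmean(G(theta, y) for y in controls)


print(round(pev_hat((0.0, 1.0), [1.0, 2.0], [-1.0, -2.0]), 4))  # 0.6119
```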

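TG, on the other hand, can be expressed as the difference between the proportions of controls and of cases with risks at or below ρ. We write:

$$\widehat{\mathrm{TG}} = \hat F_{\bar D}\{G^{-1}(\hat\theta, \hat\rho)\} - \hat F_D\{G^{-1}(\hat\theta, \hat\rho)\}.$$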
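To compute this estimator, first find the marker value c at which the fitted risk equals ρ̂. Then take the difference between the empirical CDFs of controls and cases at c. The sketch below does exactly that; the linear logistic form and the toy data are assumptions for illustration.

```python
import math
from bisect import bisect_right


def ecdf(sample, t):
    """Empirical CDF: proportion of observations <= t."""
    return bisect_right(sorted(sample), t) / len(sample)


def tg_hat(theta, cases, controls, rho_hat):
    """Standardized total gain: F_Dbar_hat(c) - F_D_hat(c), with c = G^{-1}(theta_hat, rho_hat)."""
    theta0, theta1 = theta
    c = (math.log(rho_hat / (1.0 - rho_hat)) - theta0) / theta1  # risk equals rho_hat at Y = c
    return ecdf(controls, c) - ecdf(cases, c)


print(round(tg_hat((0.0, 1.0), [-1.0, 1.0, 2.0], [-2.0, -1.0, 1.0], 0.5), 4))  # 0.3333
```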

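Finally, AUC is estimated as the proportion of case-control pairs in which the estimated risk for the case exceeds that for the control:

$$\widehat{\mathrm{AUC}} = \frac{1}{n_D n_{\bar D}}\sum_{i=1}^{n_D}\sum_{j=1}^{n_{\bar D}} I\{G(\hat\theta, Y_{Di}) > G(\hat\theta, Y_{\bar D j})\}.$$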

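Since G(θ, Y) is increasing in Y, this is the same as the standard empirical estimator of the AUC based on Y:

$$\widehat{\mathrm{AUC}} = \frac{1}{n_D n_{\bar D}}\sum_{i=1}^{n_D}\sum_{j=1}^{n_{\bar D}} I(Y_{Di} > Y_{\bar D j}).$$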
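The equivalence can be checked directly. The sketch computes the pairwise proportion twice: once on the raw marker values and once on fitted risks from a hypothetical increasing logistic model. The two results agree. Ties count as non-wins, matching the strict inequality above; for discrete markers, a common alternative counts ties as 1/2.

```python
import math


def G(theta, y):
    """Linear logistic fitted risk (assumed model, increasing when theta1 > 0)."""
    theta0, theta1 = theta
    return 1.0 / (1.0 + math.exp(-(theta0 + theta1 * y)))


def auc_hat(case_scores, control_scores):
    """Proportion of (case, control) pairs in which the case score is strictly larger."""
    wins = sum(1 for a in case_scores for b in control_scores if a > b)
    return wins / (len(case_scores) * len(control_scores))


cases, controls = [2.0, 3.0, 0.5], [1.0, 0.0, -1.0]
theta_hat = (-1.886, 1.0)   # hypothetical fitted values with theta1 > 0
on_marker = auc_hat(cases, controls)
on_risk = auc_hat([G(theta_hat, y) for y in cases], [G(theta_hat, y) for y in controls])
print(on_marker == on_risk, round(on_marker, 4))  # True 0.8889
```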

4.2. Case-Control Design

Case-control studies are often conducted in the early phases of marker development (Pepe et al. 2001; Baker et al. 2002). Compared to cohort studies, they are smaller and more cost efficient. Since early phase studies dominate biomarker research, it is crucial that estimates of statistical measures of performance accommodate case-control designs. In this section, we describe estimation under a case-control design assuming that an estimate of prevalence, ρ̂ is available. The value ρ̂ may be derived either from a cohort which is independent from the case-control sample, or from the parent cohort within which the case-control sample is nested. As a special case one can assume ρ is known or fixed without sampling variability. In determining populations where risk markers may or may not be useful, predictiveness curves could be evaluated for various specified fixed values of ρ.

In case-control studies, we sample fixed numbers of cases and controls, nD and nD̄, respectively. As a consequence, the intercept of the logistic risk model is not directly estimable, but by adjusting the intercept we can still estimate the true risk in the population. Let S indicate case-control sampling. In the case-control study the risk model can be written as

$$\mathrm{logit}\,P(D = 1 \mid Y, S) = \theta_{0S} + \theta_{1S}\,h(Y),$$

where θ0S = θ0 + log{nD(1 − ρ)/(nD̄ ρ)} and θ1S = θ1, with θ0 and θ1 the population intercept and slope. Therefore, having calculated maximum likelihood estimates of θ0S and θ1S from the case-control study, we use

$$\hat\theta_0 = \hat\theta_{0S} - \log\frac{n_D(1-\hat\rho)}{n_{\bar D}\,\hat\rho}$$

to estimate the population intercept θ0.
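The intercept correction is one line of arithmetic. As a check, take the simulation model of Section 6 (assumed here) with ρ = 0.2 and equal numbers of cases and controls. The case-control intercept is then −1/2, and the correction recovers the population intercept logit(0.2) − 1/2 ≈ −1.886.

```python
import math


def population_intercept(theta0_cc, n_cases, n_controls, rho_hat):
    """theta0_hat = theta0S_hat - log{ n_D (1 - rho_hat) / (n_Dbar * rho_hat) }."""
    return theta0_cc - math.log(n_cases * (1.0 - rho_hat) / (n_controls * rho_hat))


print(round(population_intercept(-0.5, 100, 100, 0.2), 3))  # -1.886
```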

The marker distribution in the population, F, cannot be estimated directly because of the case-control sampling design. However, since the case and control samples are representative of their respective populations, the empirical estimates F̂D and F̂D̄ remain valid. We therefore estimate F by F̂ = ρ̂F̂D + (1 − ρ̂)F̂D̄.
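The sketch below evaluates this mixture estimate of the population marker CDF at a single point t. The toy inputs in the usage line are hypothetical.

```python
from bisect import bisect_right


def mixture_cdf(cases, controls, rho_hat, t):
    """F_hat(t) = rho_hat * F_D_hat(t) + (1 - rho_hat) * F_Dbar_hat(t)."""
    fd = bisect_right(sorted(cases), t) / len(cases)
    fdbar = bisect_right(sorted(controls), t) / len(controls)
    return rho_hat * fd + (1.0 - rho_hat) * fdbar


print(mixture_cdf([1.0, 2.0, 3.0, 4.0], [0.0, 1.0], 0.25, 1.5))  # 0.8125
```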

Estimates of the predictiveness summary measures can then be obtained by plugging the corresponding values of θ̂, F̂, F̂D, F̂D̄ and ρ̂ into the expressions given earlier. Huang and Pepe (2008a) call these semiparametric "empirical" estimates because FD and FD̄ are estimated empirically. The semiparametric likelihood framework also allows FD and FD̄ to be estimated by maximum likelihood (Qin and Zhang 1997, 2003; Qin 1998). Huang and Pepe (2008a) compared the performance of semiparametric "empirical" estimators of the predictiveness curve with semiparametric maximum likelihood estimators, and found that gains in efficiency from maximum likelihood are typically small. We use empirical estimators of FD and FD̄ here because this approach is intuitive and easy to implement. Moreover, the resulting estimators target meaningful quantities even when the risk model is misspecified. For example, the estimated TPR(p) is the proportion of cases whose calculated risks (calculated under the assumed model) exceed p, the estimated PEV is the difference in mean calculated risk between cases and controls, and so forth.

5. Asymptotic Distribution Theory

In this section, we present asymptotic distribution theory for all of the summary measures defined in previous sections. Results for pointwise estimators of R(ν) and R−1(p) were previously reported by Huang et al. (2007) and Huang and Pepe (2008a), but we restate them here for completeness. Theory for the empirical estimator of AUC is not reported here since it is well established (Pepe 2003, page 105). Derivations of our results are provided in the Appendix, where we also detail the components of the asymptotic variance expressions separately for case-control and cohort study designs.

Assume the following conditions hold:

G(s, Y) is a differentiable function with respect to s and Y at s = θ, Y = F−1(ν).

G−1(s, p) is continuous, and ∂G−1(s, p)/∂s exists at s = θ.

Theorem. As n → ∞, each of the following random variables converges in distribution to a mean-zero normal random variable: (i) , with variance

(ii) , with variance

(iii) , with variance

(iv) , with variance

where is the asymptotic variance of and

| (9) |

| (10) |

| (11) |

(v) , with variance

(vi) , with variance

(vii) , where TPR=1−νT (t) is pre-specified, with variance

(viii) , where FPR=1−νF (t) is pre-specified, with variance

(ix) , with variance

(x) , with variance

where

| (12) |

| (13) |

| (14) |

6. Simulation Studies

We performed simulation studies to investigate the validity of using large sample theory for making inference in finite sample studies, and to compare it with inference using bootstrap resampling. Data were simulated under a linear logistic risk model. Specifically we employed a population prevalence of ρ = 0.2 and generated marker data according to YD̄ ∼ N(0, 1) and YD ∼ N(1, 1). The correct form for G(θ, Y) was employed in fitting the risk model, namely a linear logistic model. For each simulated dataset, estimates of summary indices were calculated and their corresponding variances were estimated using the analytic formulae from the asymptotic theory. Variance estimates were also calculated using bootstrap resampling. Sample sizes ranged from 100 to 2000 and 5000 simulations were conducted for each scenario.
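The sketch below simulates one cohort under this design and fits a linear logistic model by Newton-Raphson, using only the Python standard library. The seed, cohort size and iteration count are arbitrary choices, not the settings behind the reported tables. Under this model the true parameters are θ0 = logit(0.2) − 1/2 ≈ −1.886 and θ1 = 1.

```python
import math
import random


def simulate_cohort(n, rho=0.2, seed=1):
    """Draw (Y, D) pairs: D ~ Bernoulli(rho), Y | D=0 ~ N(0, 1), Y | D=1 ~ N(1, 1)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        d = 1 if rng.random() < rho else 0
        data.append((rng.gauss(float(d), 1.0), d))
    return data


def fit_logistic(data, iters=25):
    """Maximum likelihood fit of logit P(D=1|Y) = t0 + t1*Y by Newton-Raphson."""
    t0 = t1 = 0.0
    for _ in range(iters):
        s0 = s1 = 0.0            # score vector
        i00 = i01 = i11 = 0.0    # observed information matrix
        for y, d in data:
            p = 1.0 / (1.0 + math.exp(-(t0 + t1 * y)))
            w = p * (1.0 - p)
            s0 += d - p
            s1 += (d - p) * y
            i00 += w
            i01 += w * y
            i11 += w * y * y
        det = i00 * i11 - i01 * i01
        # Newton step: theta <- theta + I^{-1} s, using the explicit 2x2 inverse
        t0 += (i11 * s0 - i01 * s1) / det
        t1 += (i00 * s1 - i01 * s0) / det
    return t0, t1


data = simulate_cohort(5000)
theta_hat = fit_logistic(data)
true_theta = (math.log(0.2 / 0.8) - 0.5, 1.0)   # about (-1.886, 1.0)
print(theta_hat, true_theta)
```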

Simulation studies were conducted for case-control study designs as well as for cohort study designs. For the case-control scenario, we simulated nested case-control samples within the main study cohort employing equal numbers of cases and controls and with size of the cohort equal to 5 times that of the case-control study. The estimator ρ̂ is calculated from the main study cohort and sampling variability in summary estimates due to ρ̂ is acknowledged in making inference. Separate resampling of cases and controls was done for the nested case-control scenarios.
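The separate resampling of cases and controls can be sketched as a stratified bootstrap over a generic statistic of (cases, controls). For brevity this sketch holds ρ̂ fixed, whereas the simulations also propagated the sampling variability of ρ̂. Resampling the parent cohort as well would be one way to do that, but it is not shown here. As written, the sketch may therefore understate the variance of prevalence-dependent indices. The toy data and the function names are hypothetical.

```python
import random
from statistics import stdev


def stratified_bootstrap(cases, controls, statistic, n_boot=500, seed=2):
    """Resample cases and controls separately, with replacement, keeping n_D and
    n_Dbar fixed, and return the bootstrap replicates of statistic(cases, controls)."""
    rng = random.Random(seed)
    replicates = []
    for _ in range(n_boot):
        boot_cases = rng.choices(cases, k=len(cases))
        boot_controls = rng.choices(controls, k=len(controls))
        replicates.append(statistic(boot_cases, boot_controls))
    return replicates


def empirical_auc(cases, controls):
    """Example statistic: proportion of case-control pairs with the case value larger."""
    wins = sum(1 for a in cases for b in controls if a > b)
    return wins / (len(cases) * len(controls))


cases = [0.5 + 0.1 * i for i in range(20)]     # toy data, not from the paper
controls = [0.1 * i for i in range(20)]
reps = stratified_bootstrap(cases, controls, empirical_auc)
print(round(stdev(reps), 3))   # bootstrap standard deviation of the AUC estimate
```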

A full report of our simulation results can be found in Gu and Pepe (2009a). Tables 1 and 2 display results for a subset of the summary indices under case-control study designs. Huang and Pepe (2008a) report extensive simulation results for estimates of points on the predictiveness curve, R(ν) and R−1(p), so these are not repeated here. We found little bias in the estimated values for moderate to large samples. Moreover, estimated standard deviations based on asymptotic theory agree well with the actual standard deviations and with those estimated from bootstrap resampling. Coverage probabilities were excellent for moderate to large sample sizes. With small samples (n = nD + nD̄ = 100) we observed some under-coverage and some over-coverage. Not surprisingly, this occurred primarily at the boundaries of the case and control distributions and was not an issue for the overall summary measures PEV, TG and AUC. Coverage based on percentiles of the bootstrap distribution is generally somewhat better than coverage based on normality assumptions, but the difference shrinks as n increases.
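The two bootstrap interval types in Tables 1 and 2 can be read as follows; this reading is an assumption, since the text does not spell them out. Bootstrap-N is the estimate ± 1.96 bootstrap standard deviations. Bootstrap-P takes the 2.5th and 97.5th percentiles of the bootstrap replicates. The sketch below computes both from a list of replicates. Percentile intervals need not be symmetric about the estimate, which may help near the boundaries of the risk distributions.

```python
from statistics import NormalDist, quantiles, stdev


def bootstrap_intervals(estimate, replicates):
    """Return (bootstrap-N, bootstrap-P) 95% intervals from bootstrap replicates."""
    z = NormalDist().inv_cdf(0.975)                 # about 1.96
    se = stdev(replicates)                          # bootstrap standard deviation
    normal_ci = (estimate - z * se, estimate + z * se)
    cuts = quantiles(replicates, n=40, method="inclusive")  # 2.5%, 5%, ..., 97.5%
    percentile_ci = (cuts[0], cuts[-1])
    return normal_ci, percentile_ci


reps = [i / 100 for i in range(101)]   # hypothetical replicates spread evenly on [0, 1]
normal_ci, percentile_ci = bootstrap_intervals(0.5, reps)
print(percentile_ci)
```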

Table 1.

Results of simulations evaluating inference based on asymptotic distribution theory and on bootstrap resampling in finite samples. The study design employs case-control sampling from a parent cohort with prevalence 0.2. Marker data are standard normally distributed for controls and normally distributed with mean 1 and variance 1 for cases. The case-control subset is randomly selected and is one-fifth the size of the parent cohort. Shown are results for TPR(p), FPR(p), PPV(p) and NPV(p). Bootstrap-N and bootstrap-P denote bootstrap confidence intervals based on a normal approximation and on percentiles of the bootstrap distribution, respectively.

| Statistic | n | Method | TPR(0.1) | TPR(0.35) | TPR(0.6) | FPR(0.1) | FPR(0.35) | FPR(0.6) | PPV(0.1) | PPV(0.35) | PPV(0.6) | NPV(0.1) | NPV(0.35) | NPV(0.6) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| True value | | | 0.905 | 0.395 | 0.098 | 0.622 | 0.103 | 0.011 | 0.267 | 0.490 | 0.691 | 0.941 | 0.856 | 0.814 |
| % bias | 100 | | 0.22 | −0.69 | 21.42 | −0.52 | −4.09 | 3.32 | 2.07 | 3.16 | −18.73 | −0.21 | 0.14 | 0.39 |
| % bias | 500 | | 0.05 | −0.27 | 4.26 | −0.22 | −0.41 | 2.38 | 0.51 | 0.75 | −0.11 | −0.03 | 0.05 | 0.09 |
| % bias | 2000 | | 0.01 | −0.19 | 1.18 | −0.19 | −0.26 | 0.79 | 0.09 | 0.11 | 0.66 | 0.00 | 0.01 | 0.02 |
| Standard deviation | 100 | observed | 0.037 | 0.117 | 0.090 | 0.120 | 0.037 | 0.019 | 0.037 | 0.101 | 0.189 | 0.032 | 0.023 | 0.020 |
| Standard deviation | 100 | asymptotic | 0.039 | 0.114 | 0.091 | 0.115 | 0.041 | 0.018 | 0.037 | 0.113 | 0.205 | 0.025 | 0.022 | 0.020 |
| Standard deviation | 100 | bootstrap | 0.037 | 0.110 | 0.089 | 0.112 | 0.038 | 0.016 | 0.038 | 0.112 | 0.200 | 0.028 | 0.022 | 0.018 |
| Standard deviation | 500 | observed | 0.017 | 0.053 | 0.041 | 0.054 | 0.017 | 0.007 | 0.017 | 0.042 | 0.135 | 0.010 | 0.010 | 0.009 |
| Standard deviation | 500 | asymptotic | 0.016 | 0.052 | 0.042 | 0.052 | 0.017 | 0.008 | 0.016 | 0.041 | 0.141 | 0.009 | 0.010 | 0.009 |
| Standard deviation | 500 | bootstrap | 0.015 | 0.050 | 0.041 | 0.050 | 0.015 | 0.007 | 0.016 | 0.045 | 0.132 | 0.011 | 0.010 | 0.008 |
| Standard deviation | 2000 | observed | 0.008 | 0.027 | 0.021 | 0.027 | 0.008 | 0.004 | 0.008 | 0.020 | 0.065 | 0.005 | 0.005 | 0.005 |
| Standard deviation | 2000 | asymptotic | 0.008 | 0.026 | 0.021 | 0.026 | 0.008 | 0.004 | 0.008 | 0.020 | 0.065 | 0.005 | 0.005 | 0.005 |
| Standard deviation | 2000 | bootstrap | 0.007 | 0.025 | 0.020 | 0.025 | 0.008 | 0.004 | 0.008 | 0.021 | 0.066 | 0.005 | 0.005 | 0.005 |
| 95% coverage (%) | 100 | asymptotic | 92.9 | 92.5 | 89.2 | 92.6 | 96.7 | 89.0 | 94.2 | 97.5 | 95.8 | 90.3 | 93.3 | 94.7 |
| 95% coverage (%) | 100 | bootstrap-N | 94.4 | 90.6 | 90.4 | 91.0 | 92.2 | 86.0 | 93.0 | 97.2 | 93.2 | 94.2 | 92.8 | 92.4 |
| 95% coverage (%) | 100 | bootstrap-P | 97.4 | 92.2 | 93.2 | 92.4 | 92.6 | 89.4 | 92.8 | 98.2 | 90.5 | 95.8 | 93.6 | 94.4 |
| 95% coverage (%) | 500 | asymptotic | 93.4 | 94.1 | 93.1 | 93.7 | 96.0 | 94.2 | 94.2 | 95.3 | 94.6 | 92.8 | 94.1 | 94.9 |
| 95% coverage (%) | 500 | bootstrap-N | 92.0 | 93.8 | 92.2 | 92.4 | 93.4 | 92.4 | 93.2 | 96.0 | 95.2 | 95.6 | 92.2 | 93.6 |
| 95% coverage (%) | 500 | bootstrap-P | 94.4 | 94.2 | 93.0 | 93.4 | 93.6 | 94.0 | 93.4 | 97.4 | 96.2 | 96.8 | 92.2 | 94.8 |
| 95% coverage (%) | 2000 | asymptotic | 95.6 | 93.7 | 94.4 | 94.2 | 95.5 | 95.1 | 94.4 | 95.3 | 95.1 | 94.0 | 94.1 | 94.8 |
| 95% coverage (%) | 2000 | bootstrap-N | 95.8 | 94.2 | 93.4 | 93.8 | 94.7 | 94.2 | 95.0 | 94.8 | 96.2 | 95.0 | 94.2 | 94.6 |
| 95% coverage (%) | 2000 | bootstrap-P | 95.0 | 94.8 | 93.2 | 94.2 | 95.7 | 95.7 | 94.4 | 96.6 | 95.6 | 96.4 | 94.6 | 95.2 |

Table 2.

Results of simulations to evaluate the application of inference based on asymptotic distribution theory and bootstrap resampling to finite sample studies. Data are generated as described for Table 1. Shown are results for PEV, standardized total gain, TG and AUC.

| Statistic | n | Method | PEV (true value 0.154) | TG (true value 0.383) | AUC (true value 0.760) |
|---|---|---|---|---|---|
| % bias | 100 | | 7.09 | 0.97 | 0.05 |
| % bias | 500 | | 1.24 | 0.56 | 0.06 |
| % bias | 2000 | | 0.62 | −0.13 | −0.01 |
| Standard deviation | 100 | observed | 0.064 | 0.092 | 0.047 |
| Standard deviation | 100 | asymptotic | 0.071 | 0.095 | 0.047 |
| Standard deviation | 100 | bootstrap | 0.064 | 0.096 | 0.047 |
| Standard deviation | 500 | observed | 0.029 | 0.041 | 0.021 |
| Standard deviation | 500 | asymptotic | 0.031 | 0.042 | 0.021 |
| Standard deviation | 500 | bootstrap | 0.028 | 0.042 | 0.021 |
| Standard deviation | 2000 | observed | 0.015 | 0.021 | 0.011 |
| Standard deviation | 2000 | asymptotic | 0.016 | 0.021 | 0.011 |
| Standard deviation | 2000 | bootstrap | 0.016 | 0.021 | 0.011 |
| 95% coverage (%) | 100 | asymptotic | 95.7 | 94.8 | 94.3 |
| 95% coverage (%) | 100 | bootstrap-N | 93.2 | 95.2 | 93.0 |
| 95% coverage (%) | 100 | bootstrap-P | 92.8 | 96.8 | 94.2 |
| 95% coverage (%) | 500 | asymptotic | 95.8 | 95.0 | 94.3 |
| 95% coverage (%) | 500 | bootstrap-N | 95.4 | 94.4 | 95.0 |
| 95% coverage (%) | 500 | bootstrap-P | 94.4 | 94.2 | 94.6 |
| 95% coverage (%) | 2000 | asymptotic | 96.5 | 95.2 | 94.8 |
| 95% coverage (%) | 2000 | bootstrap-N | 96.4 | 94.6 | 95.0 |
| 95% coverage (%) | 2000 | bootstrap-P | 95.4 | 95.0 | 95.0 |

7. The Cystic Fibrosis Data

Cystic fibrosis is an inherited chronic disease that affects the lungs and digestive system. A defective gene and its protein product cause the body to produce unusually thick, sticky mucus. This mucus clogs the lungs, leading to life-threatening lung infections, and obstructs the pancreas, preventing natural enzymes from helping the body break down and absorb food. The main event culminating in death is acute pulmonary exacerbation, that is, lung infection requiring intravenous antibiotics.

The data analyzed here are from the Cystic Fibrosis Registry, a database maintained by the Cystic Fibrosis Foundation that contains annually updated information on over 20,000 people diagnosed with CF and living in the USA. To illustrate our methodology, we use FEV1 (a measure of lung function) and weight (a measure of nutritional status), both measured in 1995, to predict the occurrence of pulmonary exacerbation in 1996. Data from 12,802 patients aged 6 years and older are analyzed; 5,245 of them (41%) had at least one pulmonary exacerbation. A child's weight is standardized for age and gender by reporting the child's placement value, which equals 1 minus the child's percentile in a healthy population of children of the same age and gender (Hamill et al. 1977). FEV1 is standardized for age, gender and height in a different way, using explicit formulae given by Knudson et al. (1983). We modelled the risk functions using logistic regression models with weight and FEV1 entered as linear terms. Both markers were negated to satisfy the assumption that increasing values are associated with increasing risk. Figure 1 shows the predictiveness curves for the entire cohort, and Figure 2 shows the risk distributions separately for cases (those who had a pulmonary exacerbation) and for controls.

First, we use the entire cohort to estimate the predictiveness summary measures for weight and lung function. Table 3 shows the point estimates of the measures discussed in Sections 2 and 3, together with confidence intervals based on asymptotic distribution theory and on bootstrap resampling. The standard deviations are all small, and the corresponding confidence intervals are very tight. Bootstrap confidence intervals are almost identical to those based on asymptotic theory.

Table 3.

Point estimates and 95% confidence intervals for the summary indices using FEV1 and weight as markers of risk for subsequent pulmonary exacerbation in patients with cystic fibrosis. Results based on the entire cohort.

| Index | Marker | Estimate | Standard deviation (asymptotic) | 95% CI (asymptotic) | 95% CI (percentile bootstrap) | p-value |
|---|---|---|---|---|---|---|
| R(0.9) | FEV1 | 0.76 | 0.007 | (0.745, 0.773) | (0.746, 0.773) | |
| | weight | 0.52 | 0.006 | (0.503, 0.537) | (0.503, 0.527) | < 0.001 |
| R−1(0.25) | FEV1 | 0.32 | 0.010 | (0.305, 0.344) | (0.303, 0.342) | |
| | weight | 0.11 | 0.009 | (0.095, 0.131) | (0.095, 0.134) | < 0.001 |
| R(ν(TPR = 0.85)) | FEV1 | 0.27 | 0.007 | (0.253, 0.280) | (0.254, 0.279) | |
| | weight | 0.32 | 0.007 | (0.304, 0.330) | (0.305, 0.332) | < 0.001 |
| R(ν(FPR = 0.15)) | FEV1 | 0.54 | 0.008 | (0.525, 0.556) | (0.524, 0.554) | |
| | weight | 0.51 | 0.006 | (0.503, 0.527) | (0.503, 0.527) | < 0.001 |
| TPR(0.25) | FEV1 | 0.87 | 0.007 | (0.853, 0.880) | (0.852, 0.879) | |
| | weight | 0.93 | 0.007 | (0.920, 0.947) | (0.921, 0.946) | < 0.001 |
| FPR(0.25) | FEV1 | 0.54 | 0.013 | (0.517, 0.569) | (0.519, 0.570) | |
| | weight | 0.85 | 0.011 | (0.832, 0.877) | (0.831, 0.875) | < 0.001 |
| PPV(0.25) | FEV1 | 0.53 | 0.005 | (0.515, 0.535) | (0.513, 0.536) | |
| | weight | 0.43 | 0.002 | (0.427, 0.435) | (0.428, 0.437) | < 0.001 |
| NPV(0.25) | FEV1 | 0.83 | 0.005 | (0.820, 0.842) | (0.819, 0.840) | |
| | weight | 0.76 | 0.011 | (0.747, 0.780) | (0.746, 0.778) | < 0.001 |
| PEV | FEV1 | 0.22 | 0.005 | (0.202, 0.229) | (0.203, 0.230) | |
| | weight | 0.05 | 0.003 | (0.041, 0.056) | (0.041, 0.056) | < 0.001 |
| TG | FEV1 | 0.42 | 0.008 | (0.407, 0.440) | (0.407, 0.439) | |
| | weight | 0.20 | 0.009 | (0.183, 0.218) | (0.182, 0.218) | < 0.001 |
| AUC | FEV1 | 0.77 | 0.004 | (0.762, 0.779) | (0.762, 0.780) | |
| | weight | 0.64 | 0.005 | (0.630, 0.649) | (0.630, 0.649) | < 0.001 |

We used the summary indices as the basis of hypothesis tests to compare formally the predictive capacities of FEV1 and weight. For each index, the difference between the two estimates was standardized using a bootstrap estimate of the variance of the difference. P-values were calculated by comparing these test statistics with quantiles of the standard normal distribution. The differences between lung function and weight as predictive markers are statistically significant no matter which summary index is employed. Note, however, that each test addresses a different question about predictive performance, depending on the summary index on which it is based. Asking whether the PEVs for weight and lung function are equal is not the same as asking whether the proportions of subjects with risks below 0.25, R−1(0.25), are equal. Nor is it the same as asking whether the AUCs for risks based on weight and FEV1 are equal.
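The sketch below implements this comparison. It assumes bootstrap replicates of the difference between the two markers' indices are available. One way to obtain them is to resample subjects and recompute both indices on each resample, which retains the correlation between markers measured on the same subjects. The numbers in the usage line are hypothetical.

```python
from statistics import NormalDist, stdev


def compare_markers(est_a, est_b, diff_replicates):
    """Z statistic and two-sided p-value for H0: index_a == index_b.

    diff_replicates are bootstrap replicates of (index_a - index_b); their
    standard deviation estimates the standard error of the observed difference.
    """
    z = (est_a - est_b) / stdev(diff_replicates)
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return z, p_value


z, p = compare_markers(2.0 * 2.0 ** 0.5, 0.0, [-1.0, 1.0])
print(round(z, 2), round(p, 4))  # 2.0 0.0455
```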

Next, we randomly sampled 1,280 cases and 1,280 controls from the entire cohort to form a nested case-control sample about one-fifth the size of the cohort. Table 4 presents results that use data on FEV1 and weight from the case-control subset, together with the estimate of the overall incidence of pulmonary exacerbation from the entire cohort, ρ̂ = 0.41. Estimates of the summary indices are very close to the cohort estimates, but the corresponding confidence intervals are much wider. Nevertheless, the conclusions are essentially unchanged: every comparison except the one based on R(ν(FPR = 0.15)) remains statistically significant. This illustrates that, in a study where predictive markers are costly to obtain, a nested case-control design could yield considerable cost savings.

Table 4.

Point estimates and 95% confidence intervals for the summary indices using FEV1 and weight as markers of risk for subsequent pulmonary exacerbation in patients with cystic fibrosis. Results based on prevalence estimated from the entire cohort and marker data from a randomly selected case-control subset with 1,280 cases and 1,280 controls.

| Index | Marker | Estimate | Standard deviation (asymptotic) | 95% CI (asymptotic) | 95% CI (percentile bootstrap) | p-value |
|---|---|---|---|---|---|---|
| R(0.9) | FEV1 | 0.76 | 0.014 | (0.731, 0.785) | (0.735, 0.788) | |
| | weight | 0.52 | 0.011 | (0.494, 0.535) | (0.496, 0.534) | < 0.001 |
| R−1(0.25) | FEV1 | 0.35 | 0.014 | (0.318, 0.375) | (0.314, 0.367) | |
| | weight | 0.12 | 0.020 | (0.086, 0.157) | (0.089, 0.151) | < 0.001 |
| R(ν(TPR = 0.85)) | FEV1 | 0.26 | 0.008 | (0.247, 0.277) | (0.250, 0.278) | |
| | weight | 0.31 | 0.008 | (0.299, 0.330) | (0.300, 0.331) | < 0.001 |
| R(ν(FPR = 0.15)) | FEV1 | 0.53 | 0.010 | (0.513, 0.551) | (0.517, 0.550) | |
| | weight | 0.52 | 0.011 | (0.498, 0.541) | (0.500, 0.543) | 0.332 |
| TPR(0.25) | FEV1 | 0.86 | 0.006 | (0.848, 0.875) | (0.850, 0.874) | |
| | weight | 0.93 | 0.011 | (0.911, 0.954) | (0.915, 0.950) | < 0.001 |
| FPR(0.25) | FEV1 | 0.51 | 0.021 | (0.465, 0.552) | (0.476, 0.568) | |
| | weight | 0.84 | 0.027 | (0.794, 0.888) | (0.792, 0.885) | < 0.001 |
| PPV(0.25) | FEV1 | 0.54 | 0.011 | (0.519, 0.561) | (0.517, 0.558) | |
| | weight | 0.43 | 0.007 | (0.423, 0.447) | (0.422, 0.445) | < 0.001 |
| NPV(0.25) | FEV1 | 0.84 | 0.008 | (0.819, 0.854) | (0.819, 0.851) | |
| | weight | 0.77 | 0.016 | (0.739, 0.804) | (0.738, 0.806) | < 0.001 |
| PEV | FEV1 | 0.21 | 0.017 | (0.181, 0.248) | (0.184, 0.245) | |
| | weight | 0.04 | 0.008 | (0.022, 0.054) | (0.025, 0.053) | < 0.001 |
| TG | FEV1 | 0.41 | 0.019 | (0.368, 0.442) | (0.370, 0.444) | |
| | weight | 0.21 | 0.020 | (0.171, 0.251) | (0.171, 0.252) | < 0.001 |
| AUC | FEV1 | 0.77 | 0.009 | (0.749, 0.786) | (0.750, 0.787) | |
| | weight | 0.65 | 0.011 | (0.625, 0.669) | (0.628, 0.670) | < 0.001 |

Some predictiveness summary measures, such as R(ν) and R−1(p), are based on a single point on the predictiveness curve. Others, such as true and false positive rates and event rates, accumulate differences over part of the curve. PEV, TG and AUC accumulate differences over the entire curve. One might conjecture that measures which accumulate differences would often be more powerful for testing whether two predictiveness curves are equal. To investigate this, we varied the case-control sample size and evaluated the p-values associated with differences in the various summary measures. With a reasonably large case-control sample (n = 2560, Table 4), almost all summary indices differed significantly between the two markers. However, Table 5 shows what happens as the case-control study shrinks from medium (n = 500) to small (n = 100). The point estimates of threshold-based measures become much more variable, and in most cases the p-values for differences between lung function and weight are no longer statistically significant. In contrast, conclusions about the superiority of lung function as a predictive marker remained firm when PEV, TG or AUC was used as the basis of tests for equality of the curves, even with very small samples (n = 100).

Table 5.

Point estimates and 95% confidence intervals for the summary indices using FEV1 and weight as markers of risk for subsequent pulmonary exacerbation in patients with cystic fibrosis. Results are based on prevalence estimated from the entire cohort and marker data from a randomly selected case-control subset with equal numbers of cases and controls. The total number of cases and controls is denoted by n. Confidence intervals and p-values were based on bootstrap resampling.

| Index | n | FEV1: estimate (95% CI) | weight: estimate (95% CI) | p-value |
|---|---|---|---|---|
| R(0.1) | 500 | 0.15 (0.100, 0.203) | 0.28 (0.212, 0.371) | < 0.001 |
| | 200 | 0.11 (0.049, 0.179) | 0.22 (0.107, 0.335) | 0.04 |
| | 100 | 0.17 (0.077, 0.283) | 0.16 (0.041, 0.299) | 0.89 |
| R−1(0.25) | 500 | 0.29 (0.173, 0.373) | 0.12 (0.022, 0.216) | < 0.001 |
| | 200 | 0.32 (0.125, 0.453) | 0.14 (0, 0.286) | 0.02 |
| | 100 | 0.37 (0.126, 0.516) | 0.10 (0, 0.331) | 0.09 |
| R(ν(TPR = 0.85)) | 500 | 0.25 (0.203, 0.304) | 0.32 (0.283, 0.368) | 0.002 |
| | 200 | 0.27 (0.209, 0.346) | 0.36 (0.273, 0.443) | 0.008 |
| | 100 | 0.25 (0.167, 0.366) | 0.32 (0.227, 0.433) | 0.10 |
| R(ν(FPR = 0.15)) | 500 | 0.58 (0.508, 0.626) | 0.50 (0.439, 0.562) | 0.006 |
| | 200 | 0.55 (0.460, 0.632) | 0.50 (0.414, 0.591) | 0.25 |
| | 100 | 0.48 (0.330, 0.607) | 0.55 (0.404, 0.654) | 0.42 |
| TPR(0.25) | 500 | 0.89 (0.837, 0.922) | 0.93 (0.865, 0.974) | 0.09 |
| | 200 | 0.90 (0.845, 0.972) | 1 (0, 1) | 0.58 |
| | 100 | 0.88 (0.731, 1) | 0.96 (0, 1) | 0.81 |
| FPR(0.25) | 500 | 0.58 (0.474, 0.807) | 0.82 (0.695, 0.956) | 0.003 |
| | 200 | 0.57 (0.386, 0.816) | 0.83 (0.553, 0.990) | 0.03 |
| | 100 | 0.72 (0.384, 0.980) | 1 (0, 1) | 0.53 |
| PPV(0.25) | 500 | 0.50 (0.454, 0.543) | 0.44 (0.408, 0.461) | 0.01 |
| | 200 | 0.49 (0.437, 0.575) | 0.43 (0.409, 0.461) | 0.05 |
| | 100 | 0.46 (0.410, 0.613) | 0.40 (0.396, 0.501) | 0.29 |
| NPV(0.25) | 500 | 0.85 (0.807, 0.885) | 0.76 (0.696, 0.840) | 0.02 |
| | 200 | 0.85 (0.753, 0.909) | 0.76 (0.448, 0.865) | 0.42 |
| | 100 | 0.80 (0.598, 0.907) | 0.74 (0, 0.859) | 0.77 |
| PEV | 500 | 0.22 (0.158, 0.293) | 0.04 (0.016, 0.082) | < 0.001 |
| | 200 | 0.22 (0.119, 0.337) | 0.07 (0.020, 0.148) | 0.004 |
| | 100 | 0.31 (0.163, 0.480) | 0.08 (0.012, 0.215) | 0.006 |
| TG | 500 | 0.45 (0.391, 0.543) | 0.21 (0.120, 0.291) | < 0.001 |
| | 200 | 0.48 (0.354, 0.590) | 0.25 (0.111, 0.375) | 0.004 |
| | 100 | 0.56 (0.380, 0.743) | 0.24 (0.062, 0.418) | 0.004 |
| AUC | 500 | 0.76 (0.718, 0.800) | 0.63 (0.581, 0.677) | < 0.001 |
| | 200 | 0.76 (0.689, 0.823) | 0.64 (0.564, 0.712) | 0.002 |
| | 100 | 0.83 (0.752, 0.908) | 0.70 (0.606, 0.790) | 0.02 |

8. Concluding Remarks

This paper presents some new clinically relevant measures that quantify the predictive performance of a risk marker. New measures formally defined include TPR(p), FPR(p), PPV(p), NPV(p), R(νT) and R(νF), although several of these are already used informally in the applied literature. We have previously argued for the use of R(ν) and R−1(p) in practice. In addition, we have provided new intuitive interpretations for some existing predictive measures. These include the popular proportion of explained variation, PEV, which Pencina et al. (2008) call the IDI; the standardized total gain, TG; and recently proposed risk reclassification measures, namely the NRI and the risk reclassification percent. We demonstrated that all of these measures are functions of the risk distribution, also known as the predictiveness curve. A fundamental initial step in the assessment of any risk model is to evaluate whether the risks calculated from the model reflect the probabilities P(D = 1|Y). The predictiveness curve can also be useful in making this assessment (Pepe et al. 2008), complemented by the Hosmer-Lemeshow statistic (Hosmer and Lemeshow 1989). In our cystic fibrosis example, the linear logistic risk models for lung function and for weight both yield Hosmer-Lemeshow p-values > 0.05, indicating no evidence of lack of fit. When the risk model is misspecified, the summary measures relate to the distributions of 'fitted' risk. Performance summaries such as TPR, FPR, PPV, NPV, R(νT), R(νF), R(ν) and R−1(p) remain of interest even when the fitted risk is not the true risk. For example, TPR, the proportion of cases detected by the (incorrect) risk calculator, is still clinically relevant.

A second contribution of this paper is distribution theory for estimators of the summary indices. Such theory was not previously available for most of these measures, including the popular PEV. Our methods can now be used to construct confidence intervals for the summary indices, and simulation studies indicate that they are valid for application with finite samples in practice.

We also demonstrated in an example how the summary indices can be used to make formal, rigorous comparisons between markers. Previously this was possible only for comparisons based on the AUC or on point estimates of the predictiveness curve, R(ν) and R−1(p) (Huang, Pepe and Feng 2007; Huang and Pepe 2008).

Our methods accommodate cohort or case-control study designs. The latter are particularly important in the early phases of biomarker development, when biomarker assays are expensive or procurement of biological samples is difficult (Pepe et al. 2001). In such settings, nested case-control studies are much more feasible (Baker et al. 2002; Pepe et al. 2008d). Our methodology is currently restricted to risk models that include a single marker or a predefined combination of markers. In practice, studies often involve both development of a marker combination and assessment of its performance. Compelling arguments have been made in the literature for splitting a dataset into training and test subsets (Simon 2006; Ransohoff 2004). In these circumstances, our methods apply to evaluating, with the test data, the combination developed with the training data. It would be worthwhile, however, to explore cross-validation techniques for simultaneous development and assessment of risk models using the summary indices we have described.

Which summary index should be recommended for use in practice? In our opinion, a summary index should not replace the display of the risk distributions (Figures 1 and 2) but should only complement it. The choice of summary indices to report should be driven by the scientific objectives of the study. For example, suppose the objective is to stratify the population by a risk threshold pL, below which treatment is not indicated and above which it is. Then the proportions of the population falling into the two risk strata, R−1(pL) and 1 − R−1(pL), would be key performance measures to report. Additional measures, including TPR(pL), FPR(pL), PPV(pL) and NPV(pL), would also be of interest in this setting and should be reported. When no risk thresholds have been defined as clinically relevant, PEV, TG or AUC could complement the displays of risk distributions. They could also serve as the basis of test statistics for comparing, across markers, the separation between case and control risk distributions.

The final stages of evaluating a model for use in practice should incorporate notions of costs and benefits (i.e. utilities) that may be associated with decisions based on risk(Y). However, specifying costs and benefits is typically very difficult in practice. Vickers and Elkin (2006) have recently proposed a standardized measure of expected utility for a prediction model that does not require explicit specification of costs and benefits. The net benefit at risk threshold p is defined as NB(p) = ρTPR(p) − (1 − ρ)FPR(p)p/(1 − p), and their decision curve plots NB(p) versus p. This weighted difference of true and false positive rates could complement descriptive plots of risk distributions. Moreover, the methods for inference presented here also yield methods for inference about decision curves.
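The net benefit formula can be computed directly from a sample, because ρTPR(p) is the fraction of the whole sample that is a true positive at threshold p and (1 − ρ)FPR(p) is the fraction that is a false positive. The sketch below is a minimal empirical version under that reading. It treats risk > p as "treat", and the helper names `net_benefit` and `decision_curve` are hypothetical; it ignores sampling variability, which is what the inference methods above would add.

```python
def net_benefit(risks, outcomes, p):
    """Empirical NB(p) = rho*TPR(p) - (1 - rho)*FPR(p)*p/(1 - p).

    rho*TPR(p) equals the fraction of the sample that is a true positive,
    and (1 - rho)*FPR(p) the fraction that is a false positive.
    """
    if not 0.0 < p < 1.0:
        raise ValueError("threshold p must lie strictly between 0 and 1")
    n = len(risks)
    tp = sum(1 for r, d in zip(risks, outcomes) if r > p and d == 1)
    fp = sum(1 for r, d in zip(risks, outcomes) if r > p and d == 0)
    return tp / n - (fp / n) * p / (1.0 - p)


def decision_curve(risks, outcomes, thresholds):
    """Pairs (p, NB(p)) over a grid of thresholds, i.e. a decision curve."""
    return [(p, net_benefit(risks, outcomes, p)) for p in thresholds]


# Toy data (hypothetical).
risks = [0.05, 0.10, 0.20, 0.30, 0.60, 0.80]
outcomes = [0, 0, 1, 0, 1, 1]
curve = decision_curve(risks, outcomes, [0.1, 0.25, 0.5])
for p, nb in curve:
    print(f"p = {p:.2f}  NB = {nb:.4f}")
```

Plotting the second element of each pair against the first gives the decision curve; a finer threshold grid simply traces it more smoothly.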

APPENDIX: ASYMPTOTIC THEORY

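To simplify notation, we suppose the risk model is logistic linear in Y:

$$\text{risk}(Y) = P(D = 1 \mid Y) = \frac{\exp(\theta_0 + \theta_1 Y)}{1 + \exp(\theta_0 + \theta_1 Y)}.$$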

I. Cohort Design

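In a cohort study the log likelihood function is

$$l(\theta) = \sum_{i=1}^{n} \left[ D_i(\theta_0 + \theta_1 Y_i) - \log\{1 + \exp(\theta_0 + \theta_1 Y_i)\} \right]. \qquad (15)$$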

Let θ̂0, θ̂1 be the maximum likelihood estimators (MLEs) of θ0, θ1 based on (15). The following results are well known.

Result 1 Let

and A(θ) = A(θ, ∞). If A(θ)−1 exists,

We can also write , where ℓθ(Yi) = A(θ)−1lθ(Yi), i = 1, ..., n. And

Result 2 As n → ∞,

where η ≡ nD/n.

Lemma 1 Let

and use BD,0, BD,1, BD̄,0 and BD̄,1 for the limits at t = ∞.

Then we have

Proof:

We prove the first result; the proofs of the other two follow from similar arguments.
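Lemma 1 supplies the covariance between F̂D (or F̂D̄) and θ̂, which links Results 1 and 2 in the proofs that follow. Its displays are lost here, so as a hedged sketch we show one generic plug-in route to the same kind of quantity: write each estimator to first order as a sum of per-subject terms (an influence-function expansion) and sum the products of those terms. The function `cov_FD_theta` is hypothetical; it is evaluated at a user-supplied θ, normally the MLE, and it assumes the logistic model (15).

```python
import math


def cov_FD_theta(t, theta, y, d):
    """Plug-in estimate of cov(F_D-hat(t), theta-hat) from first-order expansions:
      F_D-hat(t) - F_D(t) ~ (1/n_D) * sum_i D_i * (1{Y_i <= t} - F_D(t))
      theta-hat - theta   ~ sum_i H^{-1} s_i
    where s_i is subject i's score and H the total observed information.
    """
    t0, t1 = theta
    n_case = sum(d)
    FD_t = sum(1 for yi, di in zip(y, d) if di == 1 and yi <= t) / n_case
    # Total observed information H and per-subject scores s_i.
    h00 = h01 = h11 = 0.0
    scores = []
    for yi, di in zip(y, d):
        p = 1.0 / (1.0 + math.exp(-(t0 + t1 * yi)))
        w = p * (1.0 - p)
        h00 += w
        h01 += w * yi
        h11 += w * yi * yi
        scores.append((di - p, (di - p) * yi))
    det = h00 * h11 - h01 * h01
    h_inv = ((h11 / det, -h01 / det), (-h01 / det, h00 / det))
    cov = [0.0, 0.0]
    for yi, di, (s0, s1) in zip(y, d, scores):
        if di != 1:
            continue  # controls contribute nothing to the F_D-hat expansion
        a = ((1.0 if yi <= t else 0.0) - FD_t) / n_case
        cov[0] += a * (h_inv[0][0] * s0 + h_inv[0][1] * s1)
        cov[1] += a * (h_inv[1][0] * s0 + h_inv[1][1] * s1)
    return cov


# Tiny hand-checkable example (hypothetical data), evaluated at theta = (0, 0).
y = [0.0, 1.0, 2.0]
d = [1, 1, 0]
c = cov_FD_theta(0.5, (0.0, 0.0), y, d)
print(c)
```

When t exceeds every case value, F̂D(t) is identically 1 and the estimated covariance is zero, which is a useful sanity check.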

Proof of Theorem items (i), (ii) and (iii)

We show the proof for item (i). The proofs for items (ii) and (iii) follow similar arguments.

where

by equicontinuity of the process . Earlier results and the delta method then imply:

| (16) |

The last equality follows from Result 2 (for variance of F̂), Result 1 (for variance of θ̂) and Lemma 1 (for covariance of (F̂, θ̂)).
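The delta-method step behind (16) multiplies a gradient into the joint covariance matrix of the estimates (here, of F̂ and θ̂, whose blocks come from Result 2, Result 1 and Lemma 1). Because the display for (16) is lost, the sketch below shows only the generic first-order computation, using a central-difference gradient. The ratio function and covariance values are illustrative assumptions, not quantities from the paper.

```python
def numerical_gradient(g, x, h=1e-6):
    """Central-difference gradient of a scalar function g at point x."""
    grad = []
    for k in range(len(x)):
        up = list(x)
        up[k] += h
        dn = list(x)
        dn[k] -= h
        grad.append((g(up) - g(dn)) / (2.0 * h))
    return grad


def delta_method_variance(g, x_hat, cov):
    """First-order delta method: var(g(X_hat)) ~ grad^T * cov * grad."""
    grad = numerical_gradient(g, x_hat)
    k = len(grad)
    return sum(grad[i] * cov[i][j] * grad[j] for i in range(k) for j in range(k))


# Hypothetical example: the ratio of two correlated estimates.
cov = [[0.01, 0.002],
       [0.002, 0.04]]
v = delta_method_variance(lambda x: x[0] / x[1], [2.0, 4.0], cov)
print(v)
```

In item (i) the argument vector would instead be (F̂, θ̂0, θ̂1), and an analytic gradient would normally replace the numerical one.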

Proof of Theorem items (iv) and (v)

We write

The asymptotic distribution of is given in Result 2 as are the distributions of and because:

And similarly,

In the following, we calculate the covariances between ( ), ( ) and ( ). The asymptotic variance of , σ4(p)2, then follows from the delta method.

Consider the covariance between and :

Because F̂D and F̂D̄ are uncorrelated, the above covariance can be written as

| (17) |

| (18) |

The last equality uses Result 1 (for variance of θ̂) and Lemma 1 (for covariance of (F̂D, θ̂) and (F̂D̄, θ̂)).

The second covariance (equation (10)) is between ; and :

where and .

Observe that

where R(v) ≡ G(θ, Y) and . Both and are bounded in probability and therefore Hn converges to 0 as n → ∞.

We next derive expressions for An and Bn.

| (19) |

And Bn is

| (20) |

where cov ( ) is given by Lemma 1.

Combining the two terms yields a value for cov2. The derivation of cov3 follows from a similar argument.

The proof of item (v) of the Theorem uses exactly the same techniques.

Proof of Theorem items (vi), (vii) and (viii)

We prove item (vii); the proofs of (vi) and (viii) are similar. The proof follows Huang and Pepe (2008a), who derived the asymptotic distribution of when R(ν) is estimated under a case-control design.

When the value of TPR is 1 − νT (t), by a Taylor series expansion,

It follows that the asymptotic variance is

| (21) |

The variances of and of are provided in Result 2, and their covariance is provided in Lemma 1. Putting these together, we have the following result:
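As a hedged, simplified illustration of the Taylor-expansion step in item (vii): one estimated function is evaluated at a threshold that is itself estimated, so the error splits into the error of the function plus its slope times the error of the threshold. The paper's version works on the risk scale, with θ̂ and the covariance from Lemma 1. The sketch below is a fully nonparametric analog under our own assumptions (no risk model, independent case and control samples, so the covariance term vanishes). It computes the first-order variance of the empirical FPR at a fixed TPR t and checks it by simulation under a hypothetical binormal model.

```python
import random
from statistics import NormalDist


def fpr_at_tpr(cases, controls, t):
    """Empirical FPR at the threshold c-hat whose empirical TPR is about t (0 < t < 1)."""
    xs = sorted(cases)
    c_hat = xs[int((1.0 - t) * len(xs))]  # empirical (1 - t) quantile of cases
    return sum(1 for x in controls if x > c_hat) / len(controls)


def taylor_variance(t, n_case, n_ctrl, case_dist, ctrl_dist):
    """First-order variance of FPR-hat at fixed TPR t:
    FPR(1 - FPR)/n_ctrl + (f_ctrl(c)/f_case(c))^2 * t(1 - t)/n_case,
    where c solves TPR(c) = t. The cross term is zero because the case
    and control samples are independent."""
    c = case_dist.inv_cdf(1.0 - t)
    fpr = 1.0 - ctrl_dist.cdf(c)
    slope = ctrl_dist.pdf(c) / case_dist.pdf(c)
    return fpr * (1.0 - fpr) / n_ctrl + slope ** 2 * t * (1.0 - t) / n_case


# Monte Carlo check under a hypothetical binormal model.
random.seed(2)
case_dist, ctrl_dist = NormalDist(1.0, 1.0), NormalDist(0.0, 1.0)
n_case = n_ctrl = 200
t = 0.8
estimates = []
for _ in range(1500):
    cases = [random.gauss(1.0, 1.0) for _ in range(n_case)]
    controls = [random.gauss(0.0, 1.0) for _ in range(n_ctrl)]
    estimates.append(fpr_at_tpr(cases, controls, t))
mean = sum(estimates) / len(estimates)
mc_var = sum((e - mean) ** 2 for e in estimates) / (len(estimates) - 1)
approx_var = taylor_variance(t, n_case, n_ctrl, case_dist, ctrl_dist)
print(mc_var, approx_var)
```

The second term of `taylor_variance` is the contribution of the estimated threshold; dropping it noticeably understates the Monte Carlo variance in this setting.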

Proof of Theorem item (ix)

where . It is easy to see that Pn converges to zero as n → ∞ since is bounded in probability and F̂D−FD (or F̂D̄ −FD̄) converges in probability to 0. We define , , and .

Now we have,

| (22) |

where

| (23) |

From a similar argument,

| (24) |

where

| (25) |

Because F̂D and F̂D̄ are independent, the covariance between An and Cn is zero. Observe also that from previous derivations we have

| (26) |

The asymptotic variance of , , can be obtained by combining var(An + Bn), var(Cn + Dn) and cov(An + Bn, Cn + Dn).

Proof of Theorem item (x)

The result in the Theorem follows. Now we derive expressions for the variance components in a cohort study. Observe that

where we define

and note that as n → ∞ due to the equicontinuity of the process.

| (27) |

The variance of Bn follows from that of given in Result 1, and the variances of An and Cn both follow from Result 2. cov(An, Bn) follows from Lemma 1. Furthermore, cov(An, Cn) and cov(Bn, Cn) concern the covariances of (F̂D, ρ̂) and of (θ̂, ρ̂), both of which can be found in the proof of Theorem item (iv), cov2 (see equations (19) and (20)).

Similarly, we have

| (28) |

The variance of , Σ2 (equation (13)), depends on the variances and covariances of the three terms

The variance of En can be obtained using Result 1, and the variances of Dn and Fn can be found using Result 2. The covariance between Dn and En follows from Lemma 1. The covariances of (Dn, Fn) and (En, Fn) follow from an argument similar to that used in deriving cov(An, Cn) and cov(Bn, Cn) (equations (19) and (20)).

The asymptotic variance of , , is therefore

where Σ1,2 is

| (29) |

All of these covariance terms can be obtained from the corresponding Results and Lemmas: cov(An, En) and cov(Bn, Dn) from Lemma 1; cov(Bn, En) from Result 1; cov(Cn, Fn) from Result 2. cov(An, Fn) and cov(Bn, Fn) concern the covariances of (F̂D, ρ̂) and (θ̂, ρ̂), and expressions for them were derived in the proof of Theorem item (iv) above, cov2 (equations (19) and (20)); cov(Cn, Dn) and cov(Cn, En) concern the covariances of (F̂D̄, ρ̂) and (θ̂, ρ̂) and are derived by a similar argument.
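The bookkeeping for item (x) reduces to summing entries of the 6 × 6 covariance matrix of the components An through Fn. The sketch below assumes, as the surrounding text suggests, that one estimator expands as An + Bn + Cn (variance Σ1) and the other as Dn + En + Fn (variance Σ2). Because the final display is lost, it also assumes the target is their difference, so the variance is Σ1 + Σ2 − 2Σ1,2. The covariance values are hypothetical. Under the case-control design, the covariances listed as zero are simply zero entries of the matrix.

```python
def quad_form(w, cov):
    """w^T cov w: variance of the linear combination sum_k w[k] * X_k."""
    k = len(w)
    return sum(w[i] * cov[i][j] * w[j] for i in range(k) for j in range(k))


def sigma_blocks(cov):
    """Split a 6x6 covariance of (A, B, C, D, E, F) into
    Sigma1 = var(A + B + C), Sigma2 = var(D + E + F),
    Sigma12 = cov(A + B + C, D + E + F)."""
    s1 = sum(cov[i][j] for i in range(3) for j in range(3))
    s2 = sum(cov[i][j] for i in range(3, 6) for j in range(3, 6))
    s12 = sum(cov[i][j] for i in range(3) for j in range(3, 6))
    return s1, s2, s12


# Hypothetical covariance: unit variances plus cov(A, D) = 0.5.
cov = [[1.0 if i == j else 0.0 for j in range(6)] for i in range(6)]
cov[0][3] = cov[3][0] = 0.5
s1, s2, s12 = sigma_blocks(cov)
var_difference = s1 + s2 - 2.0 * s12  # assumes the target is a difference
print(s1, s2, s12, var_difference)
```

Computing the same quantity as `quad_form([1, 1, 1, -1, -1, -1], cov)` gives a cross-check on the block sums.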

II. Case-Control Design

Let ρ̂ be the estimate of disease prevalence ρ obtained either from a cohort independent of the case-control sample or from the parent cohort within which the case-control sample is nested. Assume the size of the cohort is λ times the size of the case-control sample. Denote

The following results are well established.

Result 3 Let

where k ≡ nD/nD̄ and A = A(∞). If A−1 exists,

where

A proof can be found in Prentice and Pyke (1979), Qin and Zhang (1997) and Zhang (2000).
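A standard consequence of the Prentice and Pyke (1979) results is that a logistic model fitted to case-control data estimates the slope θ1 consistently, while its intercept is shifted by log k − log{ρ/(1 − ρ)}, with k ≡ nD/nD̄ as in Result 3. The sketch below, under hypothetical simulation settings, fits the model to a nested case-control sample and then corrects the intercept using ρ̂ from the parent cohort. It is one simple route to population-scale risks, not a reproduction of the semiparametric estimators of Qin and Zhang (1997) or Zhang (2000).

```python
import math
import random


def fit_logistic(y, d, iters=30):
    """Newton-Raphson MLE for logit P(D = 1 | Y) = a + b * Y."""
    a = b = 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for yi, di in zip(y, d):
            p = 1.0 / (1.0 + math.exp(-(a + b * yi)))
            w = p * (1.0 - p)
            g0 += di - p
            g1 += (di - p) * yi
            h00 += w
            h01 += w * yi
            h11 += w * yi * yi
        det = h00 * h11 - h01 * h01
        a += (h11 * g0 - h01 * g1) / det
        b += (h00 * g1 - h01 * g0) / det
    return a, b


def correct_intercept(a_cc, n_case, n_ctrl, rho):
    """Population-scale intercept: a_cc - log(n_case / n_ctrl) + log(rho / (1 - rho))."""
    return a_cc - math.log(n_case / n_ctrl) + math.log(rho / (1.0 - rho))


# Hypothetical parent cohort: Y ~ N(0, 1), true theta = (-2.5, 1.0).
random.seed(3)
theta0, theta1 = -2.5, 1.0
cohort = []
for _ in range(20000):
    yi = random.gauss(0.0, 1.0)
    di = 1 if random.random() < 1.0 / (1.0 + math.exp(-(theta0 + theta1 * yi))) else 0
    cohort.append((yi, di))
rho_hat = sum(di for _, di in cohort) / len(cohort)  # prevalence from the parent cohort

# Nested case-control sample: all cases plus an equal number of random controls.
cases = [yi for yi, di in cohort if di == 1]
controls = random.sample([yi for yi, di in cohort if di == 0], len(cases))
y_cc = cases + controls
d_cc = [1] * len(cases) + [0] * len(controls)
a_cc, b_cc = fit_logistic(y_cc, d_cc)
theta0_hat = correct_intercept(a_cc, len(cases), len(controls), rho_hat)
print(a_cc, b_cc, theta0_hat)
```

The uncorrected intercept `a_cc` reflects the 1:1 sampling rather than the population prevalence, which is why it sits far from θ0 before correction.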

Results 4–7 below were proved by Huang and Pepe (2008a).

Result 4 As n → ∞, converges to a normal random variable with mean 0 and variance

Result 5

Result 6

Result 7

Most of the following proofs are exactly the same as those for a cohort study, so we do not repeat them. We do, however, provide expressions for the components of the asymptotic variances that differ, referring frequently to expressions in Results 4–7.

Proof of Theorem items (i), (ii) and (iii)

The proof is the same as that for cohort studies. Based on equation (16),

Expressions for the three individual components can all be found in Results 6 and 7. Proofs for items (ii) and (iii) of the Theorem follow similar arguments.

Proof of Theorem items (iv) and (v)

According to equation (17),

Results 6 and 7 provide expressions for the three individual terms.

However, the expressions for cov2 and cov3 are different from those under a cohort study design,

Similarly,

Item (v) of the Theorem follows from a similar argument.

Proof of Theorem items (vi), (vii) and (viii)

These all follow similar arguments. We use item (vii) to illustrate.

According to equation (21),

The result follows by substituting the corresponding expressions from Results 2, 6 and 7. Proofs of items (vi) and (viii) follow similar arguments.

Proof of Theorem item (ix)

The proof of Theorem item (ix) is exactly the same as for a cohort study. Equations (22), (24) and (26) define the components of the asymptotic variance of , . We need only replace l(θ) in the definitions of and (equations (23) and (25)) with the likelihood function for case-control data, as defined by Prentice and Pyke (1979), Qin and Zhang (1997) and Zhang (2000).

Proof of Theorem item (x)

According to equations (27), (28) and (29), the three components of var( ), , are

The following Results provide corresponding expressions for each of the individual components:

Result 2: var(An) and var(Dn).

Result 3: cov(Bn, En).

Result 6: var(Bn), var(Cn), var(En), cov(Bn, Cn), var(Fn), cov(En, Fn), cov(Bn, Fn), cov(Cn, En) and cov(Cn, Fn).

Result 7: cov(An, Bn), cov(Dn, En), cov(Bn,Dn) and cov(An, En).

Furthermore, cov(An, Cn)=cov(Dn, Fn)=cov(An, Fn)=cov(Cn,Dn)=0.

Footnotes

This work is supported in part by grants from the National Institutes of Health (R01 GM054438 and U01 CA086368).

REFERENCES

- Baker SG, Kramer BS, Srivastava S. “Markers for Early Detection of Cancer: Statistical Guidelines for Nested Case-Control Studies.” BMC Medical Research Methodology. 2002;2:4. doi: 10.1186/1471-2288-2-4.

- Bura E, Gastwirth JL. “The Binary Regression Quantile Plot: Assessing the Importance of Predictors in Binary Regression Visually.” Biometrical Journal. 2001;43:5–21.

- Cook NR. “Use and Misuse of the Receiver Operating Characteristic Curve in Risk Prediction.” Circulation. 2007;115:928–935. doi: 10.1161/CIRCULATIONAHA.106.672402.

- Cook NR, Buring JE, Ridker PM. “The Effect of Including C-Reactive Protein in Cardiovascular Risk Prediction Models for Women.” Annals of Internal Medicine. 2006;145(1):21–29. doi: 10.7326/0003-4819-145-1-200607040-00128.

- D’Agostino RB Sr, Vasan RS, Pencina MJ, Wolf PA, Cobain M, Massaro JM, Kannel WB. “General Cardiovascular Risk Profile for Use in Primary Care: The Framingham Heart Study.” Circulation. 2008;117:743–753. doi: 10.1161/CIRCULATIONAHA.107.699579.

- Expert Panel on Detection, Evaluation, and Treatment of High Blood Cholesterol in Adults. “Executive Summary of the Third Report of the National Cholesterol Education Program (NCEP) Expert Panel on Detection, Evaluation, and Treatment of High Blood Cholesterol in Adults (Adult Treatment Panel III).” The Journal of the American Medical Association. 2001;285(19):2486–2497. doi: 10.1001/jama.285.19.2486.

- Gail MH, Pfeiffer RM. “On Criteria for Evaluating Models of Absolute Risk.” Biostatistics. 2005;6(2):227–239. doi: 10.1093/biostatistics/kxi005.

- Green DM, Swets JA. Signal Detection Theory and Psychophysics. New York: Wiley; 1996.

- Gu W, Pepe MS. “Estimating the Capacity for Improvement in Risk Prediction with a Marker.” Biostatistics. 2009;10(1):172–186. doi: 10.1093/biostatistics/kxn025.

- Gu W, Pepe MS. “Measures to Summarize and Compare the Predictive Capacity of Markers.” UW Biostatistics Working Paper Series, Working Paper 342; 2009a.

- Hamill PV, Drizd TA, Johnson CL, Reed RB, Roche AF. “NCHS Growth Curves for Children Birth–18 Years, United States.” Vital and Health Statistics, Series 11. Washington, DC; 1977. pp. 1–74.

- Hosmer DW, Lemeshow S. Applied Logistic Regression (section 5.2.2). New York: Wiley; 1989.

- Hu B, Palta M, Shao J. “Properties of R² Statistics for Logistic Regression.” Statistics in Medicine. 2006;25(8):1383–1395. doi: 10.1002/sim.2300.

- Huang Y, Pepe MS. “Semiparametric and Nonparametric Methods for Evaluating Risk Prediction Markers in Case-Control Studies.” UW Biostatistics Working Paper Series, Working Paper 333; 2008a.

- Huang Y, Pepe MS. “A Parametric ROC Model Based Approach for Evaluating the Predictiveness of Continuous Markers in Case-Control Studies.” Biometrics; 2008b. doi: 10.1111/j.1541-0420.2005.00454.x.

- Huang Y, Pepe MS, Feng Z. “Evaluating the Predictiveness of a Continuous Marker.” Biometrics. 2007;63(4):1181–1188. doi: 10.1111/j.1541-0420.2007.00814.x.

- Hunink M, Glasziou P, Siegel J, Weeks J, Pliskin J, Elstein A, Weinstein M. Decision Making in Health and Medicine. Cambridge University Press; 2006.

- Janes H, Pepe MS, Gu W. “Assessing the Value of Risk Predictions Using Risk Stratification Tables.” Annals of Internal Medicine. 2008;149(10):751–760. doi: 10.7326/0003-4819-149-10-200811180-00009.

- Janssens ACJW, Aulchenko YS, Elefante S, Borsboom GJJM, Steyerberg EW, van Duijn CM. “Predictive Testing for Complex Diseases Using Multiple Genes: Fact or Fiction?” Genetics in Medicine. 2006;8(7):395–400. doi: 10.1097/01.gim.0000229689.18263.f4.

- Knudson RJ, Lebowitz MD, Holberg CJ, Burrows B. “Changes in the Normal Maximal Expiratory Flow-Volume Curve with Growth and Aging.” The American Review of Respiratory Disease. 1983;127(6):725–734. doi: 10.1164/arrd.1983.127.6.725.

- Pencina MJ, D’Agostino RB Sr, D’Agostino RB Jr, Vasan RS. “Evaluating the Added Predictive Ability of a New Marker: From Area Under the ROC Curve to Reclassification and Beyond.” Statistics in Medicine. 2008;27:157–172. doi: 10.1002/sim.2929.