Abstract

The “weighted ensemble” method, introduced by Huber and Kim [Biophys. J. 70, 97 (1996)], is one of a handful of rigorous approaches to path sampling of rare events. Expanding earlier discussions, we show that the technique is statistically exact for a wide class of Markovian and non-Markovian dynamics. The derivation is based on standard path-integral (path probability) ideas, but recasts the weighted-ensemble approach as simple “resampling” in path space. Similar reasoning indicates that arbitrary nonstatic binning procedures, which merely guide the resampling process, are also valid. Numerical examples confirm the claims, including the use of bins which can adaptively find the target state in a simple model.

INTRODUCTION

In 1996, Huber and Kim1 introduced a path sampling method which they dubbed “weighted ensemble Brownian dynamics” (WEBD) simulation. Their focus was the diffusively controlled binding of a ligand to a receptor, and the WEBD method was designed to guarantee (statistically) that some ligands would not simply wander away while also permitting the correct calculation of kinetic rates. The method was later extended to configuration space in the folding of coarse-grained proteins.2 In our own work, we applied the approach to a conformational transition and demonstrated that the method produces not only kinetic information but also the full transition path ensemble.3 All these studies considered only Markovian dynamics. We note that “forward flux” sampling4, 5 uses a strategy similar to WEBD; there are also related steady-state methods6, 7 and Monte Carlo (MC) approaches.8 Furthermore, there are other rigorous path sampling and generating techniques.9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19

The aim of the present note is to establish or expand upon two important facts about the WE method. (i) The WE method produces unbiased results for a broad class of stochastic dynamics, including non-Markovian processes. Thus the “BD” in Huber and Kim’s original title was overly restrictive. Non-Markovian dynamics can arise, for instance, in self-avoiding walks20 or as a means for accounting for the effects of degrees of freedom which have been suppressed—for instance, the hydrodynamic effects of solvent molecules not explicitly modeled.21 (ii) Further, the WE method can use arbitrary bins which can change over time. This point was made in the original WE paper1 and reiterated in our own work.3

To demonstrate (i) and (ii), we will show that WE is simply a resampling process, i.e., it generates an alternative but equivalent statistical sample of trajectories at occasional time points. Further, this leads to no bias in the future evolution of the system, subsequent to resampling. Because all dynamical information, including the configurational distribution, derives from the trajectory ensemble, WE produces fully unbiased results. In WE simulation, the resampling is controlled by the choice of bins. Because of the flexibility intrinsic to resampling, however, there is great flexibility in bin selection—including the freedom to dynamically redefine bins on the fly. As shown below, it is even possible to redefine bins adaptively—without using knowledge of the target state—which eventually lead to successful transitions. This opens up the possibility to explore configurational changes not already described by experimental structures.

THEORY

The WE procedure

The weighted ensemble procedure of Huber and Kim1 is straightforward to describe. First, the space of interest (real or configurational) is divided into regions called “bins.” The choice of bins is arbitrary and may change during a simulation, as discussed below. For concreteness, however, it may be presumed that the bins are static and chosen so that some or all bins lead sequentially to a “target state” of interest, which might be a binding site in real space or a configuration-space state. Let N be the number of bins.

In conventional WE simulations M stochastic trajectories are initiated from one bin and each is assigned a weight (probability) of 1∕M. Any distribution of initial configurations may be used, whether confined to a single bin or not. The trajectories are run for a short interval of time and stopped, at which point the current bin of each trajectory is determined. Trajectories arriving in new bins are split into identical daughter trajectories sharing the weight and history of the parent trajectory. Typically, M trajectories are created in each newly visited bin, and each daughter inherits a suitable fraction of the parent’s weight (e.g., 1∕M of it if only one trajectory arrives). All trajectories are then restarted and run for another short interval of time, after which the process of splitting is repeated as necessary. Whenever more than M trajectories occupy the same bin, some are “killed” by a probabilistic resampling process (see below), maintaining a total weight of one and no more than NM trajectories based on N bins. The whole process is repeated until the desired information is obtained.

The WE procedure generates a weighted trajectory ensemble, which is rich in information. For instance, the transition rate from the initial state to the target can be calculated based on the probability arriving in the target state as a function of time; in simple cases, the arrival probability per unit time rapidly plateaus to the value of the rate.1, 3 (More generally, the rate can also be calculated based on a steady-state WE procedure, as we have shown in Ref. 22.) The trajectory ensemble also includes information on more detailed transition probabilities and the evolving configurational distribution, both of which can be used to analyze or identify “structural” features3 such as intermediate states.

Resampling

The first goal is to get a feel for “resampling,”23, 24 which is a method for generating an alternative sample of a probability distribution, given some initial sample. Consider a simple static example, where we initially generate, say, 100 numbers distributed according to a Gaussian. We could now resample this distribution in a number of ways which preserve the distribution. For instance, we could (a) discard 50 numbers at random or (b) duplicate each number exactly twice. It is clear that such processes are statistically correct by a “repeated simulation” argument: if we average over many repetitions of the sample generation and resampling process—e.g., repeated generation of many sets of 50 numbers by process (a)—then we will generate a Gaussian distribution to any desired precision.

Resampling can also be done in a nonuniform way, by keeping track of weights. Consider the same set of 100 “Gaussian numbers,” with zero mean. In the initial “ordinary” statistical sample, we can say that each number has a constant weight which can be set to one without loss of generality. We could randomly discard half the numbers on the left (x<0) and assign each of the remaining left-side numbers a weight of two. Again by the repeated simulation argument, this is correct resampling. Alternatively, we could duplicate all the numbers on the right (x>0) and assign each a weight of one-half. More strangely at first glance, we could simultaneously do both resampling procedures, which would lead to a sparse sample on the left and a dense sample on the right. The repeated simulation argument also demonstrates the validity of this procedure.
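The nonuniform resampling just described can be sketched in a few lines of Python. This is an illustrative sketch rather than code from the study: the function names are hypothetical, and the left-side thinning keeps each number independently with probability 1/2 (an assumed variant of "discard half") so that the procedure is unbiased for any sample size.

```python
import random

def resample_left_right(samples, rng):
    """Return (value, weight) pairs after nonuniform resampling.

    Left-side numbers (x < 0) are each kept with probability 1/2 and given
    weight 2; right-side numbers are duplicated, each copy with weight 1/2.
    """
    resampled = []
    for x in samples:
        if x < 0:
            # Bernoulli thinning (an assumption): unbiased on average, although
            # the number of survivors fluctuates between repetitions.
            if rng.random() < 0.5:
                resampled.append((x, 2.0))
        else:
            resampled.append((x, 0.5))
            resampled.append((x, 0.5))
    return resampled

def left_weight(pairs):
    """Total weight carried by left-side (x < 0) elements."""
    return sum(w for x, w in pairs if x < 0)

rng = random.Random(0)
samples = [rng.gauss(0.0, 1.0) for _ in range(100)]
n_left = sum(1 for x in samples if x < 0)

# "Repeated simulation": average the resampled left-side weight over many repetitions.
repeats = 2000
mean_left = sum(left_weight(resample_left_right(samples, rng))
                for _ in range(repeats)) / repeats
print(n_left, round(mean_left, 2))
```

Averaged over repetitions, the weight on the left should approach the original count of left-side numbers, even though each individual resampled set is sparse on the left and dense on the right.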

Resampling can be defined as a process which creates an alternative, but statistically equivalent, sample of a fixed probability distribution based on an initial sample. The resampled sample will contain only a subset of elements in the original sample but with potentially different frequencies and compensating weights. The new sample can be either larger or smaller than the original, as desired.

Importantly, the elements in a sample—which can be resampled—can have arbitrary dimensionality. They can be vectors or “objects” of any kind and, in particular, a vector can represent a dynamical process∕history. For instance, a vector can represent the sequence of configurations in a discretized trajectory. Distributions of trajectories will now be considered more carefully.

Statistical description of discretized stochastic trajectories

We would like to construct a probabilistic description of stochastic processes, and in particular, the probability distribution of trajectories.9, 12, 13 Because our goal is to perform more effective computer simulations, we will restrict ourselves to discretized dynamics. That is, for our purposes, the exact dynamics will be enacted by a computer simulation of the form

$$x_j = f(x_{j-k}, x_{j-k+1}, \ldots, x_{j-1}), \tag{1}$$

which indicates that the present system configuration $x_j$ is a (probabilistic) function of the full history of the trajectory. In other words, in the notation of Ref. 25, $x_j$ is chosen from the conditional probability distribution $p_{1|k}(x_j \mid x_{j-k}, x_{j-k+1}, \ldots, x_{j-1})$, which depends on the previous $k$ steps of the trajectory. We are restricting ourselves here to processes which are homogeneous in time, i.e., the function $f$ (or the conditional probability) is independent of the time step $j$.

The important special case of a Markov process corresponds to $k=1$. For $k>1$, an operational rule is required to initialize the trajectory: if $j<k$, the function $f$ must embody some rule for using the distribution $p_{1|k}$, e.g., setting all earlier $x_i$ (for $i<0$) to some arbitrary value(s).

Such a dynamical process implies that we can construct the full probability distribution $P_{\text{path}}$ for $n$-step trajectories as the product of the initial distribution $p_1(x_0)$ and subsequent conditional probabilities $p_{1|k}(x_j \mid x_{j-k}, \ldots, x_{j-1})$. That is, we have

$$P_{\text{path}}(x_0, x_1, \ldots, x_n) = p_1(x_0) \prod_{j=1}^{n} p_{1|k}(x_j \mid x_{j-k}, \ldots, x_{j-1}). \tag{2}$$

This formal—but explicit—equation for the probability distribution of trajectories shows that for the broad class of stochastic dynamics governed by Eq. 1, the distribution of trajectories can be considered a high dimensional “equilibrium” distribution.9, 12, 13 Indeed, it is this fact which permits the use of path integrals.
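A discretized process of the kind governed by Eq. 1 can be sketched as follows. The sketch is only illustrative: `run_trajectory` and `persistent_step` are hypothetical names, the k=2 "persistent walk" rule is an invented toy example, and padding the missing early history with the initial configuration is one assumed choice of initialization rule.

```python
import random

def run_trajectory(step, x0, n_steps, k, rng):
    """Generate x_0..x_n, drawing each x_j given the previous k configurations."""
    traj = [x0]
    for _ in range(n_steps):
        window = traj[-k:]
        # Initialization rule (an assumption): pad missing early history with x0.
        window = [x0] * (k - len(window)) + window
        traj.append(step(window, rng))
    return traj

def persistent_step(window, rng):
    """Toy k=2 rule: repeat the previous displacement with probability 0.8."""
    last_move = window[-1] - window[-2]
    if last_move != 0 and rng.random() < 0.8:
        return window[-1] + last_move
    # Otherwise take an unbiased unit step.
    return window[-1] + rng.choice([-1, 1])

traj = run_trajectory(persistent_step, 0, 20, 2, random.Random(1))
print(traj)
```

Because the next configuration depends on the two preceding ones, the coordinate alone is non-Markovian, yet each trajectory is still a single draw from a well-defined path distribution.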

It is also of interest to consider the configuration-space distribution. This evolving distribution can be derived from Eq. 2 at any point in time simply by integrating over all possible histories.26 That is, the distribution at time point n which depends on the initial distribution is given by (again in the notation of Ref. 25)

$$p_1(x_n) = \int dx_0 \, dx_1 \cdots dx_{n-1} \, P_{\text{path}}(x_0, x_1, \ldots, x_n). \tag{3}$$

In the case of a continuous-time Markov process, this is the distribution that would be calculated from solutions of the Fokker–Planck equation. Note that the distribution $p_1$ in Eq. 3 is not the same as that in Eq. 2; rather we are following van Kampen’s convention,25 where the subscript refers to the number of variables in the argument and the conditionality if present.

Resampling a distribution of trajectories

Because the distribution of trajectories $P_{\text{path}}(x_0, \ldots, x_n)$ is an ordinary, albeit high-dimensional, distribution, it can be resampled just as described for equilibrium distributions. That is, given one sample of trajectories, we can generate another (statistically) correct sample using deletions and duplications of entire trajectories, provided that we attach the correct weights to the remaining trajectories. The means for doing this are identical to those for the equilibrium case, and the specific procedure used in WE simulation is described later.

The distribution of trajectories at future times, subsequent to a resampling process, will also be correct. That is, if we resample the trajectory distribution at time step $n$, preserving $P_{\text{path}}(x_0, \ldots, x_n)$, the trajectories which evolve from the new sample via the dynamics of Eq. 1 will also have the correct distribution at a later time step $n+m$. This can be seen formally by decomposing the full distribution according to the relation

$$P_{\text{path}}(x_0, \ldots, x_{n+m}) = P_{\text{path}}(x_0, \ldots, x_n) \prod_{j=n+1}^{n+m} p_{1|k}(x_j \mid x_{j-k}, \ldots, x_{j-1}), \tag{4}$$

which is equivalent to propagating the dynamics via Eq. 1 from step n to step n+m.

WE as resampling

Weighted ensemble simulation simply performs occasional resamplings of the trajectory distribution. In operational terms, it follows a set of simultaneous trajectories simulated in parallel. Thus, the only difference between WE and brute force simulation of this set of trajectories is that the WE method entails resampling at occasional time points. Between resamplings, the WE simulations employ ordinary brute force dynamics.1

Resampling in WE is performed only among trajectories at the same point in time, and this is done in two ways. First, an entire trajectory may be replicated $M$ times, with each replica assigned a weight of $w_i/M$ if the original trajectory had weight $w_i$. Such replicas are assigned a history identical to that of the original trajectory. Second, two trajectories, $i$ and $j$, may be “combined”: the full weight $w_i + w_j$ is assigned to one of the two trajectories, which is selected with probability proportional to its own weight. The surviving reweighted trajectory retains its original history. Such combination is, in fact, simply a removal process, like those described in the examples above. The two types of resampling processes in WE, by construction, preserve the correct probability distribution for trajectories for the time at which resampling is performed. Therefore, as discussed above, the correct trajectory distribution is preserved at all future times. Because the WE resampling preserves the distribution of trajectories, it also preserves the correct configurational distribution, which is derived from the trajectory distribution via Eq. 3.
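The two resampling operations can be written compactly. The following is a minimal sketch under stated assumptions, not the authors' code: trajectories are represented as plain Python lists of configurations, and the names `split` and `merge` are hypothetical.

```python
import random

def split(traj, weight, m):
    """Replicate one trajectory m times; each replica gets weight/m and the same history."""
    return [(list(traj), weight / m) for _ in range(m)]

def merge(traj_i, w_i, traj_j, w_j, rng):
    """Combine two trajectories into one carrying the summed weight.

    The survivor is chosen with probability proportional to its own weight
    and keeps its own history; the other trajectory is discarded.
    """
    total = w_i + w_j
    survivor = traj_i if rng.random() < w_i / total else traj_j
    return list(survivor), total

rng = random.Random(0)
replicas = split([0.0, 0.1, 0.3], 0.2, 4)
print(sum(w for _, w in replicas))
print(merge([0.0, 0.1], 0.3, [0.0, -0.2], 0.1, rng))
```

Both operations leave the total weight unchanged. In `merge`, the expected weight carried by each original history after the operation equals the weight it carried before, which is the sense in which the combination is an unbiased removal.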

Resampling in WE simulation can be achieved with arbitrary dynamically changing bins

In its simplest form, weighted ensemble simulation divides configuration space into a fixed set of bins or regions. Resampling is performed to ensure that the number of trajectories in each bin is equal (once a bin has been visited). For details, see, for example, Ref. 3.

The arguments above have emphasized that WE simulation is more general than originally thought. Specifically, we have argued that (i) resampling can be performed correctly in myriad ways, and (ii) that any correct resampling procedure will preserve the correct stochastic dynamics in a WE simulation. Thus, WE simulation can exploit alternative resamplings.

Most importantly, as noted by Huber and Kim1 in their original paper, the bin choices which govern resampling in a WE study can be adjusted during the simulation. Arbitrary changes to the binning during a WE simulation serve only to generate different—but statistically correct—resamplings. We now turn to numerical illustrations.

NUMERICAL RESULTS

To confirm our previous conclusions by simulations, we consider several examples.

Colored noise

We first study one-dimensional stochastic dynamics27 governed by the overdamped Langevin equation,

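$$\frac{dx}{dt} = \frac{F(x)}{\gamma} + R(t), \tag{5}$$

where F(x) is the physical conservative force and γ is the friction constant. The noise R(t) can be taken as Gaussian white noise with zero mean and correlation

$$\langle R(t) R(t') \rangle = 2D\,\delta(t - t'), \qquad D = \frac{k_B T}{\gamma}, \tag{6}$$

or colored noise with zero mean and exponential correlation

$$\{\langle R(t) R(t') \rangle\} = D\lambda\, e^{-\lambda |t - t'|}, \tag{7}$$

where {⋯} denotes the average over the distribution of the initial value of R(t) according to

$$P(R(0)) = \frac{1}{\sqrt{2\pi D\lambda}} \exp\!\left[-\frac{R(0)^2}{2D\lambda}\right]. \tag{8}$$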

Here kB is Boltzmann’s constant, T is the temperature, and D is the diffusion constant. The inverse of λ in Eq. 7 is the correlation time for the colored noise. If we use the simulation time step size dt as a unit, this timescale can be expressed as

$$s = \frac{1}{\lambda\, dt}. \tag{9}$$

In the case of colored noise (Eq. 7), the process of Eq. 5 is non-Markovian for the single coordinate x.25 The particular choice of noise correlations in Eq. 7, related to an Ornstein–Uhlenbeck process, can be generated using an auxiliary Markovian variable as a matter of convenience. However, we consider only the single non-Markovian variable, x, in which case Eq. 5 represents a simple stochastic differential equation with additive noise.
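Exponentially correlated noise of this type can be generated with an auxiliary Ornstein–Uhlenbeck variable. The sketch below uses the standard exact one-step update for such a process; it is not taken from the paper. The function names are hypothetical, and the stationary variance is left as a free input (`variance`) rather than tied to a particular normalization of the noise strength.

```python
import math
import random

def colored_noise(n_steps, lam, dt, variance, rng):
    """Sample an exponentially correlated Gaussian series with rate lam.

    The initial value is drawn from the stationary distribution, and each
    subsequent value uses the exact Ornstein-Uhlenbeck one-step update.
    """
    decay = math.exp(-lam * dt)
    kick = math.sqrt(variance * (1.0 - decay * decay))
    r = rng.gauss(0.0, math.sqrt(variance))
    series = [r]
    for _ in range(n_steps - 1):
        r = decay * r + kick * rng.gauss(0.0, 1.0)
        series.append(r)
    return series

def autocovariance(series, lag):
    """Estimate <R(t) R(t + lag*dt)> for a zero-mean series."""
    n = len(series) - lag
    return sum(series[i] * series[i + lag] for i in range(n)) / n

noise = colored_noise(200_000, lam=0.05, dt=1.0, variance=1.0, rng=random.Random(2))
ratio = autocovariance(noise, 20) / autocovariance(noise, 0)
print(round(ratio, 3), round(math.exp(-1.0), 3))
```

With a correlation time of 20 time steps, the autocovariance at a lag of 20 steps should be close to exp(−1) of its zero-lag value, which is what the final line compares.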

Both the WE method and brute force simulation are applied to study the duration of transition events28, 29 for the double-well potential

$$U(x) = 5.0\,k_B T\,[1 - x^2]^2, \tag{10}$$

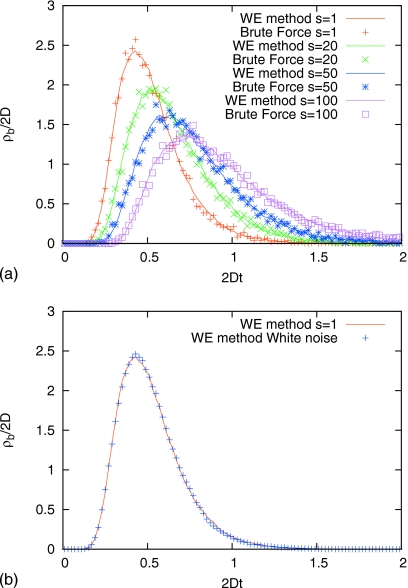

with F(x)=−dU(x)∕dx. The duration of a transition is defined to be the time interval between the last time the trajectory leaves x=−1 and the first time it reaches x=1.29 In Fig. 1a, we compare brute force and WE simulation results for colored noise with s=1, s=20, s=50, and s=100. The two types of numerical results match very well. Fig. 1b shows the WE simulation results for colored noise with s=1 and for white noise, which are almost identical, as one would expect. By contrast, for s⪢1 the white and colored noise results are dramatically different, and the WE method reproduces the effects in detail.

Figure 1.

The event-duration distribution $\rho_b$ for the double well potential $U(x) = 5.0\,k_B T\,[1 - x^2]^2$. Panel (a) shows the results from brute force and WE simulation for different colored noises with s=1, s=20, s=50, and s=100. Panel (b) shows the WE simulation results for colored noise with s=1 and white noise. For any s⪢1, the white and colored noise results are dramatically different, and the WE method reproduces the effects in detail.

Myopic self-avoiding walk

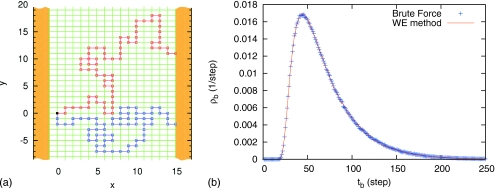

We consider a self-avoiding random walk on a two-dimensional “simple cubic” grid as a more extreme non-Markovian example: the “dynamics” (i.e., transition probabilities) at any step depend on the complete previous history. In particular, we study a “myopic self-avoiding walk,” following the definition in Madras and Slade’s20 book. Walkers always look one step forward before they move. If there are nearest neighbors of the current position which have never been visited, the next step is chosen uniformly from them. Otherwise the next step is chosen uniformly from those neighbors which have been visited least often. This definition ensures that the walk will not be trapped, and thus contains more dynamical flavor than the strictly nonintersecting self-avoiding random walk.20 As shown in Fig. 2a, two absorbing walls are placed at x=−1 and x=15, and all the walkers start from the origin (0, 0). Walks which reach the right absorbing wall before being absorbed by the left absorbing wall are defined as successful transition events.
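The stepping rule described above can be sketched as follows. This is an illustrative reading of the rule rather than the authors' program: `myopic_step` and `run_walk` are hypothetical names, visit counts are kept in a `Counter`, and the `max_steps` guard is an added safety assumption. Since unvisited sites have a count of zero, "choose among the least-visited neighbors" covers both cases of the rule.

```python
import random
from collections import Counter

MOVES = [(1, 0), (-1, 0), (0, 1), (0, -1)]

def myopic_step(position, visits, rng):
    """Move to a nearest neighbor chosen uniformly among the least-visited ones."""
    x, y = position
    neighbors = [(x + dx, y + dy) for dx, dy in MOVES]
    fewest = min(visits[site] for site in neighbors)
    return rng.choice([site for site in neighbors if visits[site] == fewest])

def run_walk(rng, left_wall=-1, right_wall=15, max_steps=1_000_000):
    """Walk from the origin until a wall is hit.

    Returns (reached_right_wall, number_of_steps_taken).
    """
    position = (0, 0)
    visits = Counter({position: 1})
    for step in range(1, max_steps + 1):
        position = myopic_step(position, visits, rng)
        visits[position] += 1
        if position[0] == right_wall:
            return True, step
        if position[0] == left_wall:
            return False, step
    raise RuntimeError("walk did not reach a wall within max_steps")

outcomes = [run_walk(random.Random(seed)) for seed in range(300)]
print(sum(ok for ok, _ in outcomes), "successes out of", len(outcomes))
```

The `visits` counter is the full trajectory history in compressed form, which makes explicit why the transition probabilities at each step cannot be written in terms of the current position alone.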

Figure 2.

“Myopic self-avoiding random walk” on a two-dimensional grid. Panel (a) shows two successful transition events. The walkers, which started from the origin (0, 0), reached the right absorbing wall at x=15 before being absorbed by the left absorbing wall at x=−1. The upper trajectory crossed itself once at (4, 10), and the lower one avoided itself successfully during the whole transition. Panel (b) compares the distributions of the transition-event durations, obtained from brute force and WE simulation.

Brute force simulation and the WE method are applied to obtain the distribution of the durations of the transition events. The results are shown in Fig. 2b, and they match very well. In WE simulation (10.865±0.015)% of all the walkers reached the right absorbing wall successfully, which is in agreement with the brute force result, (10.854±0.004)%. It is noteworthy that when applied to self-avoiding walk simulations, the WE method is operationally very similar to the early work of Wall and Erpenbeck,30 as well as to later refinements (e.g., Refs. 31, 32).

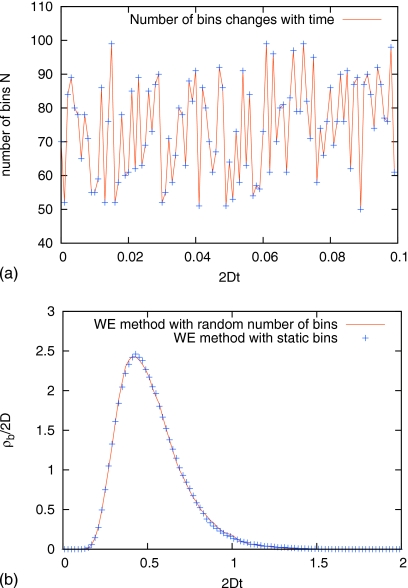

WE method with random number of bins

The WE method can use arbitrary bins which can change over time. To check this important fact, the WE program was changed to randomly choose the number of bins N from a range before every resampling (splitting and combination). Because such random binning is not selected to aid the efficiency of WE simulation, it should provide a good test for the correctness of WE under somewhat “adverse” conditions. The modified WE program is applied to study the duration of transition events for a Brownian particle in the double well potential described by Eq. 10. In the WE simulations, the region x<−1 is treated as the first bin and the initial state, and the region x>1 is the last bin and the final state. The region between them, −1≤x≤1, is divided evenly into N−2 bins. As shown in Fig. 3, the modified WE program with fluctuating bins yields excellent agreement with the result from static bins.
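The fluctuating binning described above amounts to redrawing N and recomputing each trajectory's bin before every resampling step. A minimal sketch follows; the function names are hypothetical, the default range of 50 to 100 is taken from the Fig. 3 caption, and assigning the boundary points x=−1 and x=1 to the interior bins is an assumed convention.

```python
import random

def choose_n_bins(rng, n_min=50, n_max=100):
    """Draw the number of bins uniformly from n_min..n_max (inclusive)."""
    return rng.randint(n_min, n_max)

def bin_index(x, n_bins):
    """Map a coordinate x to a bin index in 0..n_bins-1."""
    if x < -1.0:
        return 0                      # first bin: initial state
    if x > 1.0:
        return n_bins - 1             # last bin: final state
    width = 2.0 / (n_bins - 2)        # equal-width interior bins on [-1, 1]
    return min(1 + int((x + 1.0) / width), n_bins - 2)

rng = random.Random(3)
for _ in range(3):
    n_bins = choose_n_bins(rng)       # redrawn before every resampling step
    print(n_bins, bin_index(0.3, n_bins))
```

The same configuration generally lands in a different bin each time N is redrawn, so the grouping of trajectories for splitting and combination changes from one resampling to the next while the weights remain untouched.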

Figure 3.

The modified WE program with a fluctuating number of bins N (chosen uniformly at random from 50≤N≤100) is applied to study the duration of transition events for a Brownian particle in the double well potential $U(x) = 5.0\,k_B T\,[1 - x^2]^2$. Panel (a) shows the time series of the number of bins N; only the first 100 values of N are included. Panel (b) shows that the modified WE program with fluctuating bins reproduces the results from static bins.

WE method with adaptive Voronoi bins

The WE approach can adopt clustering ideas to divide multiple simulations into groups, and change the bins during the simulation for better performance; see also Refs. 7, 33, 34. In a particularly intriguing approach, the bins can be constructed and adjusted without using information about the target state. Such an adaptive strategy could be important in biomolecular simulations, where only a single state (e.g., experimental protein structure) is known, but the presence of other states is suspected.

The idea in adaptive WE simulation is for the bins to follow the evolving probability distribution. For example, the simulation can employ bins arising from the Voronoi diagram35 based on N reference configurations. These reference configurations are chosen as follows.

(1) The first reference configuration is randomly chosen from the current set of M×N configurations (i.e., M configurations per bin).

(2) Suppose we already have n chosen references (n<N). For every configuration i, calculate the distances to each of these n previous references, and then find the minimum of these distances, denoted Dmin(i).

(3) The configuration with the maximum Dmin(i) will be the next reference.
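The selection steps above can be sketched as a farthest-point procedure, followed by assignment of each configuration to its nearest reference (its Voronoi bin). This is a sketch under stated assumptions: the function names are hypothetical, configurations are taken to be coordinate tuples, and the Euclidean distance is an assumed choice of metric.

```python
import math
import random

def choose_references(configs, n_refs, rng):
    """Pick n_refs reference configurations by repeated farthest-point selection."""
    refs = [rng.choice(configs)]                        # step (1): random first reference
    d_min = [math.dist(c, refs[0]) for c in configs]    # step (2): distance to nearest reference
    while len(refs) < n_refs:
        far = max(range(len(configs)), key=d_min.__getitem__)   # step (3): maximum Dmin
        refs.append(configs[far])
        # Update each configuration's distance to its nearest chosen reference.
        d_min = [min(d, math.dist(c, configs[far])) for d, c in zip(d_min, configs)]
    return refs

def voronoi_bin(config, refs):
    """Index of the nearest reference, i.e., the Voronoi bin containing config."""
    return min(range(len(refs)), key=lambda r: math.dist(config, refs[r]))

rng = random.Random(4)
configs = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(200)]
refs = choose_references(configs, 5, rng)
print([voronoi_bin(c, refs) for c in configs[:10]])
```

Keeping a running list of minimum distances avoids recomputing distances to all earlier references each time a new one is added. If fewer distinct configurations exist than the requested number of references, this sketch will return duplicates.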

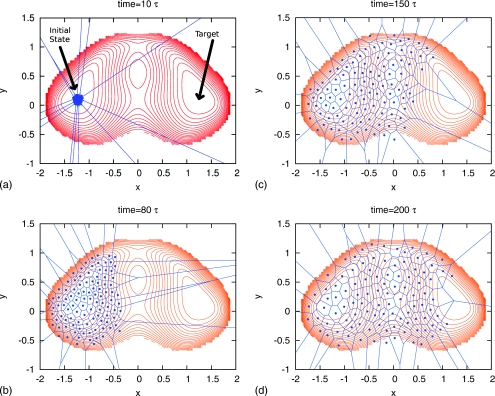

This procedure guarantees that the reference configurations will spread evenly over the represented configurational space. Furthermore the definition of bins will evolve with the simulation as shown in Fig. 5.

Figure 5.

An adaptive WE method which defines Voronoi bins based on the evolving distribution. The dots are the reference configurations for the Voronoi bins. At the beginning, all the reference configurations are in the initial state. Subsequently the reference configurations and bins follow the evolving probability distribution. The target is found spontaneously after 200τ, where τ is the time interval between resamplings (splitting and combination).

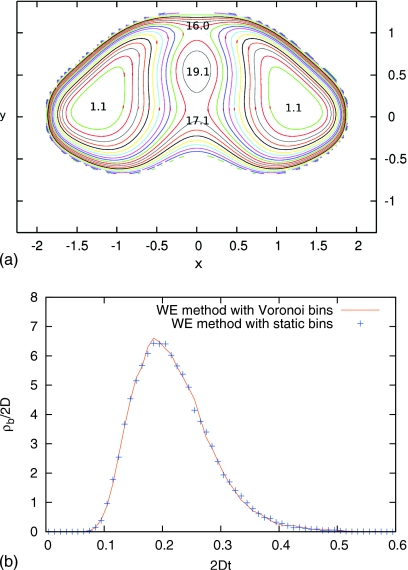

We apply this adaptive WE method to a toy two-dimensional system with two distinct pathways. The two-dimensional double well potential is inspired by Chen, Nash, and Horing’s work,36 and defined via

| (11) |

as shown in Fig. 4a. The barrier between the two wells is about 15kBT along each pathway. The WE method is applied with both dynamic Voronoi bins and static bins to obtain the distribution of transition-event durations. The initial state and the final state are defined as the regions where the potential satisfies U(x,y)<(Umin+2kBT), where Umin is the lowest potential in the left and right wells.

Figure 4.

Panel (a) shows the two-dimensional double well potential defined via Eq. 11 and the values of U∕kBT at extreme points. Panel (b) compares the distributions of transition-event durations obtained by the WE method with dynamic Voronoi bins and with static bins. They are in good agreement.

We compare the results obtained via WE simulations with dynamic Voronoi bins and static bins in Fig. 4b. Again, the WE method with dynamic bins reproduces the result from static bins. In Fig. 5, we show the evolution of Voronoi bins in one of the WE simulations. The bins follow the evolving probability distribution and find the target spontaneously after ∼200τ, where τ is the time interval between resamplings (splitting and combination).

DISCUSSION

How resampling can improve efficiency in WE simulation

A simple example illustrates how WE simulation can improve the efficiency of estimating dynamical quantities like the transition rate or of sampling the transition path ensemble. Consider a simple double-well potential, and imagine starting 100 brute force simulations from the minimum of the left well, state A. At some time t later, one expects to sample approximately 100kt transitions to the right well (state B), where k is the rate for that process. For t⪡1∕k, it is unlikely to observe any transitions.

Also consider a WE simulation of the same system, using 100 simulations also started from state A, with just a single resampling process at time t∕2. At such an intermediate time, if the rate is low, we expect that roughly half the trajectories (∼50) have diffused to the left of the state A minimum and half to the right. Assume that the WE resampling process at t∕2 removes about half of the left trajectories and replicates half of the right trajectories (with appropriate reweighting), maintaining 100 trajectories overall. Because successful transitions must proceed from the right side of the minimum, and because there will now be ∼75 trajectories on the right instead of the ∼50 present without resampling, the resampling will increase the chance of observing a transition trajectory. With repeated resampling and a series of bins covering the reaction coordinate, a kind of “statistical ratcheting” is achieved.
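The bookkeeping in this example can be checked with a short sketch. It assumes exactly half of the left trajectories are removed (so each survivor's weight doubles) and that each replicated right trajectory is split into two copies of half weight; the function name is hypothetical.

```python
def resample_halves(n_left, n_right):
    """Return (side, weight) pairs after the single resampling step described above.

    Assumes n_left is even, so that doubling the survivors' weights exactly
    compensates for the removed left-side trajectories.
    """
    w0 = 1.0 / (n_left + n_right)      # initial uniform weight
    kept_left = n_left // 2            # half of the left trajectories survive
    replicated = n_right // 2          # half of the right trajectories are duplicated
    return (
        [("left", 2 * w0)] * kept_left
        + [("right", w0)] * (n_right - replicated)
        + [("right", w0 / 2)] * (2 * replicated)
    )

walkers = resample_halves(50, 50)
n_right_after = sum(1 for side, _ in walkers if side == "right")
total_weight = sum(w for _, w in walkers)
print(len(walkers), n_right_after, round(total_weight, 12))
```

The trajectory count stays at 100 and the total weight stays at one, while the number of trajectories positioned to the right of the minimum rises from 50 to 75. Each side still carries half of the total probability.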

There is a price paid for the increased efficiency of estimating dynamical quantities, namely, a decreased precision in the sampling of the initial state, A. This is especially true when there is a low transition rate out of state A. However the typical goal of WE simulation is to sample transitions and not the initial state.

Improved efficiency is not guaranteed

On a practical level, we note that WE simulation (i.e., resampling a set of dynamical trajectories) does not necessarily increase the efficiency with which a certain dynamical process is characterized. To see this, consider again the example of a double-well potential with trajectories initiated from the left well. If, perversely, the trajectories were resampled so as to have fewer in the transition region between the two wells (and perhaps more to the left of the left minimum), such a WE simulation would likely be less efficient than simple brute force simulation with no resampling. In fact, we found that the WE method could be more or less efficient than brute force depending on the particular random walk problem selected (see the myopic self-avoiding walk results above).

There is therefore, as with many other simulation strategies, a certain amount of “art” to optimizing the efficiency of the WE method, even though it will remain rigorous if performed based on rigorous resampling. This aspect has been discussed in Ref. 3 but bears further investigation.

Connection to other methods

In a sense, we have shown that the WE approach accounts properly for the trajectory “history” and therefore is applicable to non-Markovian dynamics. Other path sampling methods can also account for trajectory history, including “forward flux sampling” (FFS),4 “dynamic importance sampling” (DIMS),13 “transition path sampling” (TPS),12 “transition interface sampling” (TIS),17 etc. The MC method “Russian roulette and splitting,”8 which is closely related to the WE method, apparently can also account for history effects. Failure to account for trajectory history rules out an exact description of non-Markovian processes.

CONCLUSIONS

We used probabilistic arguments and numerical tests to demonstrate the generality of weighted ensemble path sampling simulation. The method, in fact, applies to a broad class of Markovian and non-Markovian dynamics. We also confirmed that the bins used for “sampling” trajectories can be changed during a simulation. In a toy system, we demonstrated an adaptive approach to changing bins which did not require knowledge of the target state.

ACKNOWLEDGMENTS

We benefited from many helpful discussions about the WE approach with group members Divesh Bhatt and Andrew Petersen. This work was supported by NIH (Grant No. GM070987) (to D.M.Z.).

References

1. Huber G. A. and Kim S., Biophys. J. 70, 97 (1996). doi:10.1016/S0006-3495(96)79552-8

2. Rojnuckarin A., Kim S., and Subramaniam S., Proc. Natl. Acad. Sci. U.S.A. 95, 4288 (1998). doi:10.1073/pnas.95.8.4288

3. Zhang B. W., Jasnow D., and Zuckerman D. M., Proc. Natl. Acad. Sci. U.S.A. 104, 18043 (2007). doi:10.1073/pnas.0706349104

4. Allen R. J., Warren P. B., and ten Wolde P. R., Phys. Rev. Lett. 94, 018104 (2005). doi:10.1103/PhysRevLett.94.018104

5. Escobedo F. A., Borrero E. E., and Araque J. C., J. Phys.: Condens. Matter 21, 333101 (2009). doi:10.1088/0953-8984/21/33/333101

6. Warmflash A., Bhimalapuram P., and Dinner A. R., J. Chem. Phys. 127, 154112 (2007). doi:10.1063/1.2784118

7. Dickson A., Warmflash A., and Dinner A. R., J. Chem. Phys. 130, 074104 (2009). doi:10.1063/1.3070677

8. Kahn H., Use Of Different Monte Carlo Methods, Symposium on Monte Carlo Methods (Wiley, New York, 1956), pp. 146–190.

9. Pratt L. R., J. Chem. Phys. 85, 5045 (1986). doi:10.1063/1.451695

10. Schlitter J., Engels M., Krüger P., Jacoby E., and Wollmer A., Mol. Simul. 10, 291 (1993). doi:10.1080/08927029308022170

11. Izrailev S., Stepaniants S., Balsera M., Oono Y., and Schulten K., Biophys. J. 72, 1568 (1997). doi:10.1016/S0006-3495(97)78804-0

12. Dellago C., Bolhuis P. G., Csajka F. S., and Chandler D., J. Chem. Phys. 108, 1964 (1998). doi:10.1063/1.475562

13. Zuckerman D. M. and Woolf T. B., J. Chem. Phys. 111, 9475 (1999). doi:10.1063/1.480278

14. Eastman P., Gronbech-Jensen N., and Doniach S., J. Chem. Phys. 114, 3823 (2001). doi:10.1063/1.1342162

15. E W., Ren W., and Vanden-Eijnden E., Phys. Rev. B 66, 052301 (2002). doi:10.1103/PhysRevB.66.052301

16. Wales D. J., Mol. Phys. 100, 3285 (2002). doi:10.1080/00268970210162691

17. van Erp T. S., Moroni D., and Bolhuis P. G., J. Chem. Phys. 118, 7762 (2003). doi:10.1063/1.1562614

18. Faradjian A. K. and Elber R., J. Chem. Phys. 120, 10880 (2004). doi:10.1063/1.1738640

19. Yang Z., Májek P., and Bahar I., PLOS Comput. Biol. 5, e1000360 (2009). doi:10.1371/journal.pcbi.1000360

20. Madras N. and Slade G., The Self-Avoiding Walk (Birkhäuser, Boston, 1996).

21. Forster D., Hydrodynamic Fluctuations, Broken Symmetry, And Correlation Functions (Westview, Boulder, CO, 1995).

22. Bhatt D., Zhang B. W., and Zuckerman D. M., e-print arXiv:0910.5255v1/physics.bio-ph.

23. Liu J. S., Monte Carlo Strategies in Scientific Computing (Springer, Berlin, 2002).

24. Frenkel D. and Smit B., Understanding Molecular Simulation: From Algorithms to Applications, 2nd ed. (Academic, Boston, 2001).

25. van Kampen N. G., Stochastic Processes in Physics and Chemistry (North Holland, Amsterdam, 1992).

26. Dykman M. I., McClintock P. V. E., Smelyanski V. N., Stein N. D., and Stocks N. G., Phys. Rev. Lett. 68, 2718 (1992). doi:10.1103/PhysRevLett.68.2718

27. Fox R. F., Gatland I. R., Roy R., and Vemuri G., Phys. Rev. A 38, 5938 (1988). doi:10.1103/PhysRevA.38.5938

28. Crehuet R., Field M. J., and Pellegrini E., Phys. Rev. E 69, 012101 (2004). doi:10.1103/PhysRevE.69.012101

29. Zhang B. W., Jasnow D., and Zuckerman D. M., J. Chem. Phys. 126, 074504 (2007). doi:10.1063/1.2434966

30. Wall F. T. and Erpenbeck J. J., J. Chem. Phys. 30, 634 (1959). doi:10.1063/1.1730021

31. Grassberger P., Phys. Rev. E 56, 3682 (1997). doi:10.1103/PhysRevE.56.3682

32. Grassberger P., Comput. Phys. Commun. 147, 64 (2002). doi:10.1016/S0010-4655(02)00205-9

33. Borrero E. E. and Escobedo F. A., J. Chem. Phys. 127, 164101 (2007). doi:10.1063/1.2776270

34. Borrero E. E. and Escobedo F. A., J. Chem. Phys. 129, 024115 (2008). doi:10.1063/1.2953325

35. Aurenhammer F., ACM Comput. Surv. 23, 345 (1991). doi:10.1145/116873.116880

36. Chen L. Y., Nash P. L., and Horing N. J. M., J. Chem. Phys. 123, 094104 (2005). doi:10.1063/1.2013213