Abstract

The possibility that “dead regions” or “spectral holes” can account for some of the differences in performance between bilateral cochlear implant (CI) users and normal-hearing listeners was explored. Using a 20-band noise-excited vocoder to simulate CI processing, this study examined the effects of spectral holes on speech reception thresholds (SRTs) and spatial release from masking (SRM) in difficult listening conditions. Prior to processing, stimuli were convolved with head-related transfer functions to provide listeners with free-field directional cues. Processed stimuli were presented over headphones under binaural or monaural (right ear) conditions. Using Greenwood’s [(1990). J. Acoust. Soc. Am. 87, 2592–2605] frequency-position function and assuming a cochlear length of 35 mm, spectral holes of two sizes (6 and 10 mm) were created at three locations (base, middle, and apex). Results show that middle-frequency spectral holes were the most disruptive to SRTs, whereas high-frequency spectral holes were the most disruptive to SRM. Spectral holes generally reduced binaural advantages in difficult listening conditions. These results suggest the importance of measuring dead regions in CI users. It is possible that customized programming of bilateral CI processors, based on knowledge about dead regions, can enhance performance in adverse listening situations.

INTRODUCTION

Bilateral cochlear implantation has been provided to an increasing number of deaf patients with the goal that bilateral stimulation would enable optimal performance in adverse listening situations. This approach is mainly driven by the substantial benefit that binaural hearing is known to provide to normal-hearing listeners (NHLs) as compared with monaural hearing, in particular, in complex listening situations (e.g., Bronkhorst and Plomp, 1988; Blauert, 1997; Hawley et al., 1999, 2004). Specifically, when listening under binaural conditions, NHLs experience significant improvement in speech recognition when an interfering sound is spatially separated from target speech. This improvement in performance is known as the advantage of spatial separation or spatial release from masking (SRM) (Hawley et al., 1999, 2004; Drullman and Bronkhorst, 2000; Freyman et al., 2001; Litovsky, 2005).

In recent years, some benefits of bilateral cochlear implants (CIs) have been demonstrated when performance is compared to that with a single CI. These benefits include enhancement of speech recognition in a multi-source environment, whether target and interfering sources are co-located or spatially separated (Muller et al., 2002; Schon et al., 2002; Tyler et al., 2002; Vermeire et al., 2003; Schleich et al., 2004; Litovsky et al., 2006a, 2009). In addition, improved sound localization abilities have been reported in both adults (e.g., Tyler et al., 2002; van Hoesel and Tyler, 2003; Litovsky et al., 2009) and children (Litovsky et al., 2006b; Litovsky et al., 2006c). Nonetheless, the measured benefits from bilateral implantation vary amongst individuals (e.g., Muller et al., 2002; Wilson et al., 2003; Litovsky et al., 2004, 2006a, 2009; van Hoesel, 2004), with a large range of outcomes and effect sizes. Furthermore, few patients reach the level of performance seen in NHLs. These findings suggest that there exist limitations in the ability of bilateral CIs to provide substantial benefits to all users, but the sources of these limitations remain to be identified. In the context of the current work, two of these limitations are addressed: reduced spectral resolution and loss of spectral information, with the latter being the primary focus.

Reduced spectral resolution, or the inability to use the full spectral information provided by the number of electrodes actually stimulated along the implant array, has been noted in a number of studies (Friesen et al., 2001; Fu et al., 2004). Whether this problem can be ameliorated by providing two implants rather than one is not well understood and remains an open question. Within each electrode array, the utility of spectral information is limited by channel interaction (Throckmorton and Collins, 2002; Fu and Nogaki, 2005) and by alterations in spectral-tonotopic mapping (Fu and Shannon, 1999), both of which are mediated monaurally at the peripheral level. In the context of bilateral implantation, one could argue that, because the two CIs operate independently, across-ear differences in these monaural limitations might allow the two ears to partially compensate for each other in a summation-like manner. However, limitations in using the available spectral information are likely to persist to some extent even when a patient has a CI in each ear, because it is not clear to what extent bilateral implants increase the overall number of functionally independent channels.

Another source of limitation is related to the local loss of hair cells or auditory neurons. Such regions are known as “dead regions” (Nadol, 1997; Kawano et al., 1998; Moore et al., 2000) and are highly relevant to cochlear implantation. Modern CI speech processors use multiple-electrode arrays specifically designed to take advantage of the natural tonotopic organization of the cochlea and its innervation patterns. The presence of a local anomaly along the tonotopic axis is likely to disrupt these innervation patterns (Liberman and Kiang, 1978; Hartmann et al., 1984; Shepherd and Javel, 1997); hence, CI listeners are forced to integrate information from frequency bands that are non-contiguous, i.e., “disjoint bands.” The perceptual consequences of this effect can be examined by creating “holes” in the spectrum (Shannon et al., 2002).

An intriguing question that has been the focus of studies with non-CI users is how listeners integrate information from disjoint bands and what each band contributes to overall speech intelligibility. A hallmark of this work has been the development of the articulation index (AI), which attempts to predict speech intelligibility from the individual spectral components of speech (Fletcher and Galt, 1950). In general, the AI assumes that each frequency band contributes to the total AI independently of all other bands and that the contributions of individual bands are additive. However, subsequent studies demonstrated a synergistic effect when listeners integrated information from widely separated bands (Kryter, 1962; Grant and Braida, 1991; Warren et al., 1995). These results suggest that AI theory does not account for the way listeners combine information from disjoint bands and therefore fails to predict the intelligibility of band-pass speech.
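The additivity assumption can be made concrete with a short sketch. Here an AI-style index is the sum, over bands, of a band-importance weight times the proportion of that band's speech information that is available. The four equal weights are hypothetical placeholders, not values from Fletcher and Galt (1950) or from any standard band-importance function.

```python
def articulation_index(importance, audibility):
    """Additive AI-style index: sum over bands of importance * audibility.

    importance: per-band weights (hypothetical values here), summing to 1.
    audibility: per-band proportion of speech information available (0 to 1).
    """
    if len(importance) != len(audibility):
        raise ValueError("importance and audibility must have the same length")
    return sum(w * a for w, a in zip(importance, audibility))


# Hypothetical 4-band example with equal importance weights.
weights = [0.25, 0.25, 0.25, 0.25]
full = articulation_index(weights, [1, 1, 1, 1])
# Two disjoint bands removed: the additive model predicts a loss equal to their
# summed weights, regardless of whether the remaining bands are contiguous.
disjoint = articulation_index(weights, [1, 0, 1, 0])
print(full, disjoint)
```

Under this additive form, removing bands always costs exactly their summed weights; the synergy between widely separated bands reported in the studies cited above is precisely what such a sum cannot represent.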

A similar line of investigation is needed to understand how CI listeners integrate auditory information from disjoint bands, given that they have been shown to weight frequency information differently from NHLs (Mehr et al., 2001). While CI users can tolerate a relatively large loss of spectral information (e.g., Shannon et al., 2002; Başkent and Shannon, 2006), studies to date have been conducted in quiet, which may not predict CI users’ performance in realistic, complex auditory environments. In addition, given the growing number of individuals who undergo bilateral implantation, it is important to understand the extent to which the benefits provided by bilateral CIs, such as better speech understanding when target and interfering sounds are spatially separated, are affected by loss of spectral information.

The current study investigated the effect of spectral holes on speech intelligibility and on the benefit of spatially separating target and interferers. The paradigm builds on the concept of the “cocktail party” environment, in which multiple sources are presented from a number of predetermined locations. First, by including conditions in quiet and with interferers, we can compare the effects of holes in background noise with those in the previously studied quiet condition. Second, by simulating spatial locations using virtual-space stimuli over headphones, we can directly compare performance under monaural vs binaural conditions and assess binaural benefit. This approach is similar to that used previously in the absence of spectral holes or degraded speech (Hawley et al., 2004; Culling et al., 2004). By using a noise-excited CI vocoder, in which the spectrum is already degraded, and then imposing spectral holes, we tested the hypothesis that spectral holes would be detrimental to sentence recognition because of the fragile nature of the spectrally sparse information in the vocoder. Further, we tested the hypothesis that the extent of degradation in speech understanding would depend on the location of the spectral hole along the simulated cochlear array. This approach would therefore enable identification of cochlear regions that, if not intact, leave the listener particularly susceptible to disruption. Finally, we hypothesized that a loss of spectral information caused by spectral holes would interact significantly with listening mode (binaural vs monaural), such that binaural hearing may reduce the deleterious effects of spectral holes through binaural redundancy and the availability of spatial cues.

While the effect of spectral holes should ultimately be tested directly in CI users, the identification of dead regions in these listeners currently remains challenging (Bierer, 2007). As a first step, vocoder simulation of CI listening was used to identify some of the important variables that could potentially affect performance under idealized conditions. Another clinical issue is that matching tonotopic information across the ears is difficult to achieve and to verify clinically; the precise interaural matching in the present study is therefore idealized relative to true bilateral CI frequency mapping. In addition, in the binaural conditions, spectral holes were deliberately created at matched locations in the two ears, which again may be unrealistic: spectral holes arising in CI patients from inactive or atrophied auditory nerve fibers are unlikely to occur at identical places in the right and left cochleae. This general approach was chosen because of growing concern about the need to maximize the salience of binaural cues in CI patients by matching the stimulated cochleotopic regions in the right and left ears (Long, 2000; Long et al., 2003; Wilson et al., 2003). Matching information across the two ears has been a hallmark of studies on binaural sensitivity, in which electrically pulsed signals are delivered to a single pair of binaurally matched electrodes (van Hoesel and Clark, 1997; Litovsky et al., 2010; Long et al., 2003; van Hoesel, 2004; van Hoesel et al., 2009). However, similar work with speech stimuli remains to be done to establish the potential effectiveness of matching in the clinical realm.

METHODS

Listeners

Twenty adults with normal hearing (4 males and 16 females; 19–30 years old) participated in this study. Participants were native speakers of American English recruited from the student population at the University of Wisconsin-Madison. Normal hearing was verified by pure-tone air-conduction thresholds of 15 dB hearing level (HL) or better at octave frequencies from 250 to 8000 Hz (ANSI, 2004). In addition, interaural threshold differences did not exceed 10 dB at any test frequency. All participants signed consent forms approved by the University of Wisconsin Health Sciences IRB and were paid for their participation.

Setup

Testing was conducted in a standard double-walled Industrial Acoustics Company (IAC) sound booth. Participants sat at a small desk facing a computer monitor, with a mouse and keyboard for response input. Target and interfering stimuli were first convolved with head-related transfer functions (HRTFs)1 (described in Sec. 2E), digitally mixed, and then processed through CI simulation filters to create vocoded speech (see Sec. 2F). A Tucker-Davis Technologies (TDT) System 3 RP2.1 real-time processor was used to attenuate the stimuli before sending them to the TDT headphone buffer (HB7). Stimuli were then delivered to listeners through headphones (Sennheiser HD 580). Stimuli were calibrated using a Larson Davis System 824 digital precision sound level meter and a 6-cc coupler (AEC101 IEC 318 artificial ear coupler, Larson Davis, Depew, New York). Listening conditions were either binaural or monaural (right ear only).
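The order of operations (spatialize, mix, then vocode each ear) can be sketched as follows. This is a minimal illustration, not the authors' MATLAB/TDT implementation: the head-related impulse responses and the `vocoder` callable are hypothetical inputs, scaling only the target is one assumed way of setting the SNR with the interferer level fixed, and applying the vocoder independently to each ear's mixture is an assumption. In the monaural mode the left channel is simply silenced.

```python
import numpy as np


def spatialize(mono, hrir_left, hrir_right):
    """Convolve a mono source with a left/right head-related impulse response pair."""
    return np.convolve(mono, hrir_left), np.convolve(mono, hrir_right)


def render_trial(sources, hrir_pairs, vocoder, target_gain_db=0.0, mode="binaural"):
    """Spatialize, mix, and vocode one trial (hypothetical helper).

    sources: list of mono arrays; sources[0] is the target, the rest are interferers.
    hrir_pairs: one (hrir_left, hrir_right) tuple per source (assumed inputs).
    vocoder: callable applied to each ear's mixture; must preserve signal length.
    target_gain_db: gain applied to the target only, used here to set the SNR.
    mode: "binaural" (both ears) or "monaural" (right ear only).
    """
    if len(sources) != len(hrir_pairs):
        raise ValueError("need one HRIR pair per source")
    ears = []
    for i, (src, (h_left, h_right)) in enumerate(zip(sources, hrir_pairs)):
        gain = 10 ** (target_gain_db / 20) if i == 0 else 1.0
        ears.append(spatialize(gain * np.asarray(src, dtype=float), h_left, h_right))
    # Digitally mix all spatialized sources, zero-padding to the longest one.
    n = max(max(len(left), len(right)) for left, right in ears)
    mix = np.zeros((2, n))
    for left, right in ears:
        mix[0, : len(left)] += left
        mix[1, : len(right)] += right
    out = np.vstack([vocoder(mix[0]), vocoder(mix[1])])
    if mode == "monaural":
        out[0] = 0.0  # only the right ear receives the stimulus
    elif mode != "binaural":
        raise ValueError("mode must be 'binaural' or 'monaural'")
    return out
```

Mixing before vocoding means the target and interferers share each band's envelope, as they would in a real CI processor receiving a mixture at its microphone.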

Stimuli and procedure

Open-set sentences from the MIT recordings of the Harvard IEEE corpus (Rothauser et al., 1969) were used. The complete corpus consists of 72 phonetically balanced lists of ten sentences each, and each sentence has five key words that require identification. These sentences are grammatically and semantically appropriate but have relatively low predictability. Examples include “The wide road shimmered in the hot sun,” “The small pup gnawed a hole in the sock,” and “The glow deepened in the eyes of the sweet girl.” This corpus was chosen for the large number of sentences available. Because these sentences have previously been used in a number of studies with NHLs (e.g., Hawley et al., 1999, 2004) and with CI users (e.g., Stickney et al., 2004; Loizou et al., 2009) under difficult listening conditions, they are well suited to the tasks used in the present study. As in previous studies, short sentences from the corpus were used as targets, while 15 of the longest sentences were reserved as interferers and used throughout the experiment in random order. On each trial, the interferer sentences began approximately 1 s before the target sentence. Target sentences were recorded by two different male talkers, each contributing half of the lists. The testing order of the target lists was fixed across participants; however, the order of sentences within each list was randomized. The interferers consisted of two female talkers, each uttering a different sentence from the interferer corpus, in random order from trial to trial.

Listeners were instructed to attend to the sentences spoken by a male voice and to ignore those spoken by the females; they were encouraged to guess when unsure and to type everything they judged to be spoken by the male voice. Spelling errors and typos were checked online by an examiner seated in the observation room. Except for the first sentence in each list, once listeners finished typing the target sentence they pressed the return key, and the correct target sentence was displayed on the computer monitor. The first sentence was treated differently: it was repeated at incrementally higher levels until three key words were identified, and only after this first correct response was the sentence revealed to the listener. All remaining sentences were displayed after the listener’s response regardless of the number of correct words, because each was used only once. The key words in each sentence were marked in uppercase letters in the displayed transcript. Listeners were instructed to compare the two transcripts, count the number of correct key words, enter that number, and press the return key to proceed to the next trial; these self-marked scores were verified online by the examiner. Each SRT run was logged in a data file that the examiner could use to verify data and scoring reliability if needed. This method has been used in previous studies (Hawley et al., 2004; Culling et al., 2004).
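In the study, listeners scored their own responses and an examiner verified them online. As a hypothetical illustration of how a logged run could be re-scored automatically, the sketch below treats fully uppercase tokens (longer than one letter) in the transcript as key words and counts how many appear in the typed response, ignoring case. The capitalization in the example is invented for illustration and is not the corpus's official key-word marking; exact matching also means uncorrected typos count as errors, which is one reason a human check of spelling is useful.

```python
import re
from collections import Counter


def keywords(transcript):
    """Key words = fully uppercase tokens of length > 1 (assumed marking convention)."""
    return [w.lower() for w in re.findall(r"[A-Za-z']+", transcript)
            if w.isupper() and len(w) > 1]


def score_response(transcript, response):
    """Number of key words reproduced in the typed response (case-insensitive).

    The Counter intersection credits a repeated key word only as many times as
    it appears in both the transcript and the response.
    """
    typed = Counter(w.lower() for w in re.findall(r"[A-Za-z']+", response))
    return sum((Counter(keywords(transcript)) & typed).values())


# Hypothetical marking of a corpus sentence and a typed response.
marked = "The WIDE ROAD SHIMMERED in the HOT SUN."
print(score_response(marked, "the wide road glimmered in hot sun"))
```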

Estimation of speech reception threshold

The level of the interferers was fixed at 50 dB sound pressure level (SPL) throughout the experiment. SRTs were measured using an approach similar to that described by Plomp (1986) and used by us previously (Hawley et al., 2004; Culling et al., 2004; Loizou et al., 2009). For each SRT measurement, one list of ten sentences was used. At the beginning of each list, the target sentence was presented at a level that yielded poor performance, i.e., a disadvantageous signal-to-noise ratio (SNR). If the listener judged the target to be inaudible, the instruction was to press the return key, and the same target sentence was repeated at an SNR that was 4 dB more favorable; this was repeated until 3 or more of the 5 key words were correctly identified. The remaining nine sentences were then presented at varying SNRs using a one-down/one-up adaptive tracking algorithm targeting 50% correct (Levitt, 1971). The level was decreased by 2 dB if three or more key words were identified correctly; otherwise, it was increased by 2 dB. A single SRT value was determined by averaging the levels presented on the last eight trials (trials 4–11). Because each list allowed only ten trials to be measured, the level at which the 11th trial would have been presented was estimated from the result of the tenth trial (Hawley et al., 1999, 2004; Culling et al., 2004).
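The adaptive rule can be written as a short function. This is a sketch under stated assumptions: the `respond` callable stands in for the listener, the starting level and safety ceiling are hypothetical, and trial 1 is assumed to be the level at which the first sentence was finally passed. The function applies the 4-dB ascending steps to the first sentence, the 2-dB one-down/one-up rule to sentences 2–10, extrapolates the 11th level, and averages trials 4–11.

```python
import numpy as np


def measure_srt(respond, start_db=-20.0, first_step_db=4.0, step_db=2.0,
                n_sentences=10, criterion=3, max_db=30.0):
    """Estimate an SRT from one 10-sentence list with a one-down/one-up track.

    respond(sentence_index, level_db) -> key words correct (0-5); simulates the listener.
    start_db, max_db: hypothetical starting level and safety ceiling (not from the study).
    Returns (srt_db, levels), where levels holds trials 1-11 (trial 11 extrapolated).
    """
    level = start_db
    # Sentence 1: repeat in 4-dB steps until at least `criterion` key words are correct.
    while respond(0, level) < criterion:
        level += first_step_db
        if level > max_db:
            raise RuntimeError("criterion never reached on the first sentence")
    levels = [level]  # assumed: trial 1 = level at which sentence 1 was passed
    level -= step_db  # a correct response lowers the next level by 2 dB
    for i in range(1, n_sentences):
        levels.append(level)
        level += -step_db if respond(i, level) >= criterion else step_db
    levels.append(level)  # trial 11: level the next sentence would have received
    return float(np.mean(levels[3:11])), levels  # mean of trials 4-11


# Idealized listener: all 5 key words at or above -6 dB SNR, none below.
srt, levels = measure_srt(lambda i, snr: 5 if snr >= -6 else 0)
print(srt)  # -7.0
```

With this idealized listener the track alternates between −6 and −8 dB, so the average of trials 4–11 falls midway between the highest failing and lowest passing levels.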

Virtual spatial configurations

The unprocessed stimuli were convolved with non-individualized HRTFs (Gardner and Martin, 1994) to provide listeners with spatial cues for both the target and the interferers. For each intended virtual spatial configuration, stimuli were convolved with the HRTFs for the right and left ears. Target and interfering stimuli were then digitally mixed and subsequently passed through the CI simulation filters described below. Measurements were obtained over headphones for each listener in the following virtual spatial configurations: (1) quiet: target at 0° azimuth and no interferers; (2) front: target and interferers both at 0° azimuth; (3) right: target at 0° azimuth and interferers at 90° azimuth; and (4) left: target at 0° azimuth and interferers at −90° azimuth (see Fig. 1). SRM, which quantifies the improvement in SRT that occurs when the target and interfering stimuli are spatially separated, was calculated from the SRTs in conditions (2)–(4) as $\mathrm{SRM}_{\mathrm{right}} = \mathrm{SRT}_{\mathrm{front}} - \mathrm{SRT}_{\mathrm{right}}$ and $\mathrm{SRM}_{\mathrm{left}} = \mathrm{SRT}_{\mathrm{front}} - \mathrm{SRT}_{\mathrm{left}}$.
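A direct transcription of the two SRM definitions is shown below; the SRT values are hypothetical and serve only to show the sign convention (positive SRM means that separating the interferers from the target helped).

```python
def spatial_release(srt_db):
    """SRM (dB) for each separated configuration: SRT_front - SRT_separated.

    srt_db: dict with keys "front", "right", and "left" (SRTs in dB SNR).
    """
    return {side: srt_db["front"] - srt_db[side] for side in ("right", "left")}


# Hypothetical SRTs for one listener in one processing condition (not study data).
srm = spatial_release({"front": 2.0, "right": -3.0, "left": -4.5})
print(srm)
```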

Figure 1.

Schematic representation of the virtual spatial conditions used in the study: quiet (target at 0° and no interferers), front (target and interferers at 0°), right (target at 0° and interferers at 90°), and left (target at 0° and interferers at −90°). These configurations were used in both the binaural and monaural conditions.

CI signal processing

Stimuli were processed through MATLAB simulations of the signal processing strategy described by Shannon et al. (1995). Speech signals covering the frequency range of 150–10 800 Hz were divided into 20 contiguous frequency bands using sixth-order elliptic infinite impulse response (IIR) band-pass filters. The cutoff frequencies of these bands were calculated using the Greenwood map (Greenwood, 1990). The envelope of each band was extracted by full-wave rectification followed by low-pass filtering with a second-order Bessel IIR filter (50 Hz cutoff). Each band envelope was then used to modulate a broadband white-noise carrier, which was subsequently passed through the same band-pass filter as the corresponding analysis band to remove spectral splatter. Finally, the modulated outputs of all bands were summed to create vocoded speech. On each trial, the target and interferers were processed in the same manner.
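A SciPy sketch of this processing chain follows; it is an illustration, not the authors' MATLAB code. Several details are assumptions: the 150–10 800 Hz span is taken from the band edges in Table 1; band edges are spaced equally in cochlear place using Greenwood constants A = 165.4, a = 0.06 mm⁻¹, and k = 1; "sixth order" is read as a third-order elliptic prototype, which SciPy turns into a sixth-order band-pass; the 1 dB passband ripple and 50 dB stopband attenuation are placeholders; each band gets an independent noise carrier; and fs = 44.1 kHz.

```python
import numpy as np
from scipy.signal import bessel, ellip, sosfilt


def greenwood_edges(f_lo=150.0, f_hi=10800.0, n_bands=20, A=165.4, a=0.06, k=1.0):
    """Band edges (Hz) equally spaced in cochlear place (mm from the apex).

    Greenwood map F = A * (10**(a*x) - k); A, a, and k are assumed human constants.
    """
    place = lambda f: np.log10(f / A + k) / a
    x = np.linspace(place(f_lo), place(f_hi), n_bands + 1)
    return A * (10 ** (a * x) - k)


def vocode_bands(signal, fs, edges, env_cutoff_hz=50.0, seed=0):
    """Noise-excited vocoder; returns one output row per band (sum rows for the stimulus)."""
    rng = np.random.default_rng(seed)
    # Second-order Bessel low-pass used to smooth the rectified envelope.
    env_sos = bessel(2, env_cutoff_hz, btype="low", fs=fs, output="sos")
    out = np.zeros((len(edges) - 1, len(signal)))
    for b, (lo, hi) in enumerate(zip(edges[:-1], edges[1:])):
        # Order-3 elliptic prototype -> 6th-order band-pass (ripple/attenuation assumed).
        band_sos = ellip(3, 1, 50, [lo, hi], btype="bandpass", fs=fs, output="sos")
        # Full-wave rectification followed by low-pass smoothing.
        envelope = sosfilt(env_sos, np.abs(sosfilt(band_sos, signal)))
        carrier = rng.standard_normal(len(signal))  # white-noise carrier
        # Re-filter the modulated noise with the analysis filter to limit splatter.
        out[b] = sosfilt(band_sos, envelope * carrier)
    return out


fs = 44100  # assumed sampling rate; must exceed 2 x 10 800 Hz
x = np.random.default_rng(1).standard_normal(fs // 5)  # stand-in for a speech waveform
vocoded = vocode_bands(x, fs, greenwood_edges()).sum(axis=0)
```

Returning the per-band outputs before summation makes it straightforward to impose spectral holes by zeroing selected rows.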

In the spectral hole conditions, holes were created by setting the outputs of the corresponding analysis bands to zero. The omitted bands were drawn from the following frequency ranges, which correspond to three tonotopic locations: 150–1139 Hz (apical), 749–3616 Hz (middle), and 2487–10 800 Hz (basal). Within these ranges, two hole sizes (6 and 10 mm) were created for each tonotopic location by varying the range of dropped bands (see Table 1 for specifics). The locations of the spectral holes were determined using Greenwood’s frequency-position function, assuming a 35 mm cochlear length (Greenwood, 1990). The overall level of stimuli with holes was normalized to the level of the condition without a hole, so hole size had no effect on overall level.

Table 1.

Summary of the frequency ranges dropped to create the spectral holes. The outputs of the bands within each range were set to zero to eliminate stimulation in the corresponding cochlear region.

| Spectral hole size (mm) | Apex (Hz) | Middle (Hz) | Base (Hz) |
|---|---|---|---|
| 6 | 150–600 | 1139–2487 | 4350–10 800 |
| 10 | 150–1139 | 749–3616 | 2487–10 800 |
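Assuming a Greenwood map F = A(10^(ax) − k) with A = 165.4 Hz, a = 0.06 mm⁻¹ (2.1 over a 35 mm cochlea), and k = 1, dividing 150–10 800 Hz into 20 bands of equal cochlear length gives band edges that agree with the cutoffs in Table 1 to within about 1 Hz. The sketch below, an illustration rather than the authors' code, computes those edges, finds the bands inside a Table 1 range, and zeroes them with the level normalization described above. Under these assumptions each band spans about 1.28 mm, so the nominal 6 mm holes remove four or five bands and the 10 mm holes remove eight.

```python
import numpy as np

# Assumed Greenwood constants for a 35 mm human cochlea; x in mm from the apex.
GW_A, GW_ALPHA, GW_K = 165.4, 0.06, 1.0


def place_mm(f_hz):
    """Cochlear place (mm from apex) for a frequency in Hz."""
    return np.log10(np.asarray(f_hz, dtype=float) / GW_A + GW_K) / GW_ALPHA


def freq_hz(x_mm):
    """Frequency (Hz) at a cochlear place in mm from the apex."""
    return GW_A * (10 ** (GW_ALPHA * np.asarray(x_mm, dtype=float)) - GW_K)


def band_edges(f_lo=150.0, f_hi=10800.0, n_bands=20):
    """Analysis band edges equally spaced in cochlear place."""
    return freq_hz(np.linspace(place_mm(f_lo), place_mm(f_hi), n_bands + 1))


def hole_bands(edges, f_lo, f_hi, tol=0.01):
    """Indices of bands lying entirely inside [f_lo, f_hi] (1% tolerance for rounding)."""
    lo, hi = edges[:-1], edges[1:]
    return np.flatnonzero((lo >= f_lo * (1 - tol)) & (hi <= f_hi * (1 + tol)))


def apply_hole(band_outputs, drop):
    """Zero the dropped bands, sum the rest, and rescale to the no-hole RMS level."""
    ref_rms = np.sqrt(np.mean(band_outputs.sum(axis=0) ** 2))
    kept = band_outputs.copy()
    kept[drop] = 0.0
    out = kept.sum(axis=0)
    return out * ref_rms / np.sqrt(np.mean(out ** 2))


edges = band_edges()
drop = hole_bands(edges, 749, 3616)        # 10 mm middle hole (Table 1)
hole_len = place_mm(3616) - place_mm(749)  # hole length in mm along the cochlea
```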

Experimental conditions and design

Participants were randomly assigned to one of two equal-sized listening-mode groups (binaural or monaural; n = 10 per group). Each listener completed the study in five 2-hour sessions. SRTs were measured for seven processing conditions (baseline + 2 hole sizes × 3 locations). For each of these conditions, testing was conducted in the four spatial configurations described in Sec. 2E. Thus, each listener contributed 28 SRTs. Data collection was blocked by processing condition, yielding seven testing blocks; these blocks were presented in random order for each subject, and spatial configurations were randomized within each block.
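The design can be summarized by generating one listener's test order. The sketch assumes that the spatial order within the repeated first block (the learning check described in the next paragraph) is re-randomized, which the text does not specify, and it omits the four training SRTs that preceded each block; the condition labels are illustrative.

```python
import random

# Seven processing conditions: baseline plus 2 hole sizes x 3 locations.
PROCESSING = ["baseline"] + [f"{size} mm {loc}" for size in (6, 10)
                             for loc in ("basal", "middle", "apical")]
SPATIAL = ["quiet", "front", "right", "left"]


def session_schedule(subject_seed):
    """Order of the 32 test SRTs for one listener (training SRTs not included)."""
    rng = random.Random(subject_seed)
    blocks = PROCESSING[:]
    rng.shuffle(blocks)  # block order randomized per subject
    schedule = []
    for proc in blocks + [blocks[0]]:  # first block repeated at the end (assumed order)
        spatial = SPATIAL[:]
        rng.shuffle(spatial)  # spatial order randomized within each block
        schedule.extend((proc, s) for s in spatial)
    return schedule


sched = session_schedule(subject_seed=3)
```

The first 28 entries cover every processing-by-spatial combination exactly once; the final four repeat the first block.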

Prior to each testing block, listeners received training with the same processing condition as in that block. Four SRTs (two in quiet and two with speech interferers in front) were collected during training and discarded from the final analysis. This training procedure has been shown to help stabilize performance when listening to vocoded speech (Garadat et al., 2009). After completing all testing conditions, each subject was retested on the first testing block to control for possible learning during the first session. This increased the total number of SRT measurements per subject to 32 but did not change the number of SRTs per subject used in the data analysis.

RESULTS

Results were analyzed separately for each listening-mode group, and across-group effects of listening mode were also examined. Within each group, SRT and SRM values were subjected to two-way repeated-measures analyses of variance (ANOVAs) with spectral hole placement (baseline, basal, middle, and apical) and spatial configuration (quiet, front, right, and left for SRTs; right and left for SRM) as within-subject variables. The analyses were conducted separately for the 6 and 10 mm hole data, and each analysis used the same baseline (no-hole) data. An α criterion of 0.05 was used to determine statistical significance in the omnibus F tests. Across-group comparisons were conducted as mixed-nested ANOVAs (detailed below). For post hoc t-tests, α values were corrected for multiple comparisons using the Holm–Bonferroni procedure.
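Holm's step-down procedure sorts the m p values, compares the k-th smallest (k = 1 to m) with α/(m − k + 1), and stops at the first comparison that fails. A minimal sketch of this standard algorithm (not the authors' statistics software) is shown below with hypothetical p values.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Reject/retain decision for each p value using Holm's step-down procedure."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        if p_values[i] > alpha / (m - rank):
            break  # once one test fails, all larger p values are retained
        reject[i] = True
    return reject


# Hypothetical post hoc p values (not study data).
decisions = holm_bonferroni([0.01, 0.04, 0.03, 0.005])
print(decisions)
```

Compared with a plain Bonferroni correction (α/m for every test), Holm's procedure controls the same family-wise error rate while rejecting at least as many hypotheses.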

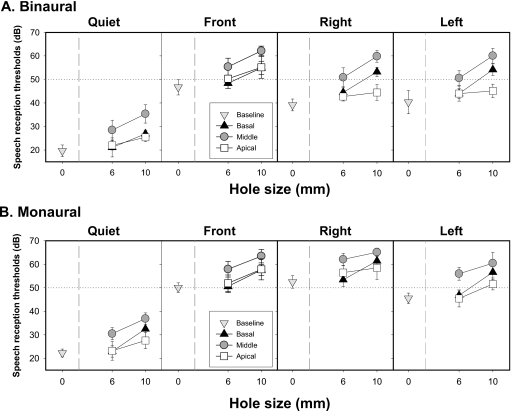

Effect of spectral holes on SRTs and SRM in binaural listening

Figure 2 (top panels) shows SRT values obtained in the 6 and 10 mm binaural conditions. A significant main effect of hole placement was found for both 6 mm [F(3,9)=13.6, p<0.001] and 10 mm [F(3,9)=51.35, p<0.0001] hole conditions. For both hole sizes, SRTs increased significantly (i.e., performance worsened) relative to baseline for all hole locations (p<0.0001). Middle holes, regardless of hole size, resulted in higher SRTs than basal (p<0.0001) and apical (p<0.0001) holes. While 6 mm apical and basal holes produced comparable SRTs, in the 10 mm condition SRTs were higher with basal than with apical holes (p<0.0001).

Figure 2.

Average SRTs (±SD) in decibels are plotted as a function of spectral hole size for the baseline and the three different hole placement conditions (basal, middle, and apical). Upper panels represent binaural data obtained for the four spatial configurations and bottom panels represent monaural data. The horizontal dotted line represents 0 dB SNR.

A significant main effect of spatial configuration was found for both the 6 mm [F(3,9)=240.7, p<0.0001] and 10 mm [F(3,9)=378.4, p<0.0001] conditions. Relative to the quiet condition, SRTs increased in the presence of speech interferers, as expected, regardless of interferer location (p<0.0001). In addition, front SRTs were higher than both left and right SRTs (p<0.0001), with no difference between left and right. These results were similar for the 6 and 10 mm conditions. However, in the 10 mm condition, a significant interaction was found between hole placement and spatial configuration [F(9,81)=7.1, p<0.0001]. SRTs were comparable for basal and apical holes in the quiet and front-interferer conditions, i.e., when there was no masking or when the interferers and target arrived from the same location. However, SRTs were higher for basal than apical holes in the right and left interferer conditions (p<0.0001), that is, when the interferers and target were spatially separated. This interaction suggests that the high-frequency information lost with 10 mm basal holes may be needed for spatially segregating target and interferers, and hence for listening in complex environments.

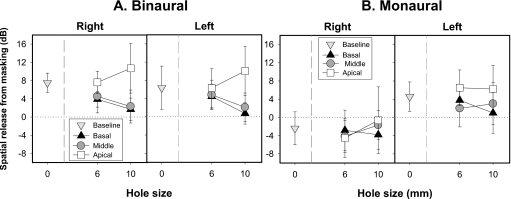

SRM values calculated from the SRTs under binaural conditions are summarized in Fig. 3 (panel A). There was no main effect of hole placement for the 6 mm conditions, suggesting that 6 mm spectral holes did not change the amount of SRM. However, there was a significant main effect of hole placement on SRM for the 10 mm conditions [F(3,9)=4.9, p<0.05]. SRM in the apical-hole condition was comparable to that in the no-hole condition, and both were larger than SRM in the basal (p<0.0001) and middle (p<0.005) hole conditions. No differences in SRM were found between basal and middle holes. There was no main effect of spatial configuration, suggesting that, in the binaural conditions, the amount of SRM was comparable for the left and right spatial configurations.

Figure 3.

Average SRM (±SD) in decibels is plotted as a function of spectral hole size for the baseline and the three hole placement conditions (basal, middle, and apical). Panel A shows binaural data and panel B shows monaural data. The horizontal dotted line represents 0 dB SRM.

Effect of spectral holes on SRTs and SRM in monaural listening

Figure 2 (bottom panels) shows SRT values obtained in the 6 and 10 mm monaural conditions. A significant effect of hole placement on SRTs was found for the 6 mm [F(3,9)=19.55, p<0.0005] and 10 mm [F(3,9)=42.1, p<0.0005] conditions. Unlike in the binaural condition, post hoc comparisons showed that 6 mm holes in the apical and basal regions did not significantly elevate SRTs relative to baseline. However, Fig. 2 shows a modest trend toward higher SRTs in these two spectral hole conditions; this is discussed further in Sec. 4C. As in the binaural conditions, 6 mm middle holes resulted in higher SRTs than no hole or holes in the base or apex (p<0.0001). In contrast, in the 10 mm case, SRTs were elevated for all hole conditions relative to baseline (p<0.0001). In addition, in the 10 mm case, middle holes resulted in higher SRTs than basal and apical holes (p<0.0001), and basal holes led to higher SRTs than apical holes (p<0.005).

A main effect of spatial configuration was found for both 6 mm [F(3,9)=381.43, p<0.00001] and 10 mm [F(3,9)=311.7, p<0.0005] conditions. As in the binaural listening mode, masking was evident: relative to the quiet condition, SRTs increased in the presence of speech interferers regardless of interferer location (p<0.0001). Unlike in the binaural listening mode, SRTs were higher when speech interferers were on the right than in front in the 6 mm condition (p<0.005); however, front and right SRTs were comparable with 10 mm holes. Additionally, left SRTs were lower than right (p<0.0001) and front (p<0.005) SRTs for both 6 and 10 mm holes.

From these monaural SRT values, SRM was computed as the difference between SRTs in the front condition and SRTs in the left or right condition (see Fig. 3, panel B). No main effect of hole placement was found for either the 6 or 10 mm conditions, suggesting that, in the monaural listening mode, SRM was similar regardless of hole placement. These results are partially inconsistent with the binaural data, which showed significantly reduced SRM in the presence of 10 mm spectral holes; this is revisited in Sec. 4B. As expected for the monaural conditions, a significant main effect of spatial configuration was found for both 6 mm [F(3,9)=108.39, p<0.0001] and 10 mm [F(3,9)=103.06, p<0.00001] conditions. As can be seen in Fig. 3 (panel B), there was substantially greater release from masking when the interferers were on the left than on the right. In addition, a significant two-way interaction of hole placement by spatial configuration was found for the 6 mm conditions [F(3,27)=294.67, p<0.05]. Specifically, when interferers were on the right, SRM was reduced relative to baseline only with apical holes, with no differences across the other conditions. Conversely, when interferers were on the left, apical spectral holes produced larger SRM than that obtained in the baseline (p<0.05), basal (p<0.005), or middle (p<0.0001) conditions. In addition, SRM was smaller in the middle than in the baseline condition (p<0.01), and SRM was comparable for the middle and basal conditions (see Fig. 3, panel B). Taken together, these results suggest that spatial segregation of target and interfering speech was easier when the interferers were on the left than on the right, presumably because, with only the right ear available, the head attenuated the left-side interferers at the listening ear (head shadow). However, the size of this effect depended on the information available in particular spectral regions.

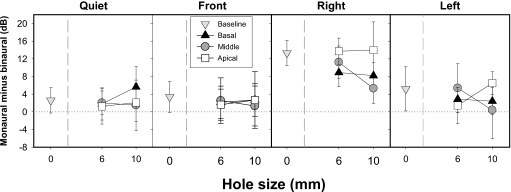

Effect of listening mode

This section focuses on differences between the binaural and monaural listening modes. SRT and SRM values were each subjected to a mixed-nested ANOVA with spatial configuration as a within-subject variable and listening mode as a between-subject variable. Analyses were conducted separately for the baseline and for each of the six hole conditions (6 and 10 mm holes at the base, middle, and apex). Because of the large number of analyses, results are primarily reported in Tables 2–4. Figure 4 illustrates the effect of listening mode by plotting the difference between monaural and binaural SRTs; a value of zero represents no difference, and positive values indicate better performance (i.e., lower SRTs) for the binaural group than for the monaural group. The ANOVA, which was conducted on the raw SRT values, yielded a significant main effect of listening mode, indicating that the binaural group performed significantly better than the monaural group (see Table 2(a)). A significant main effect of spatial configuration was also found (see Table 3(a)).
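Because listening mode was a between-subject factor, the differences plotted in Fig. 4 compare group means rather than paired scores from the same listeners. A minimal sketch with hypothetical SRTs (not study data):

```python
import numpy as np


def binaural_advantage(monaural_srts, binaural_srts):
    """Mean monaural SRT minus mean binaural SRT (dB) for one condition.

    The inputs come from different listener groups, so group means are compared
    rather than per-listener differences. Positive = binaural group did better.
    """
    return float(np.mean(monaural_srts) - np.mean(binaural_srts))


# Hypothetical group SRTs (dB SNR) for a single condition.
advantage = binaural_advantage([0.0, 2.0, 4.0], [-3.0, -1.0])
print(advantage)
```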

Table 2.

Results of main effect of listening mode.

| Condition | df | F | p |
|---|---|---|---|
| (a) SRTs | | | |
| Baseline | 1,18 | 44.960 | <0.0001 |
| 6 mm basal | 1,18 | 23.541 | <0.0001 |
| 10 mm basal | 1,18 | 42.078 | <0.0001 |
| 6 mm middle | 1,18 | 24.161 | <0.0001 |
| 10 mm middle | 1,18 | 5.686 | <0.05 |
| 6 mm apical | 1,18 | 25.624 | <0.0001 |
| 10 mm apical | 1,18 | 55.924 | <0.0001 |
| (b) SRM | | | |
| Baseline | 1,18 | 18.015 | <0.0001 |
| 6 mm basal | 1,18 | 12.593 | <0.005 |
| 10 mm basal | 1,18 | 4.220 | Not sig. |
| 6 mm middle | 1,18 | 16.263 | <0.0001 |
| 10 mm middle | 1,18 | 1.433 | Not sig. |
| 6 mm apical | 1,18 | 17.529 | <0.005 |
| 10 mm apical | 1,18 | 9.565 | <0.01 |

Table 3.

Results of main effect of spatial configuration.

| Condition | df | F | p |
|---|---|---|---|
| (a) Overall binaural and monaural SRTs | | | |
| Baseline | 3,18 | 189.9 | <0.0001 |
| 6 mm basal | 3,18 | 218.496 | <0.0001 |
| 10 mm basal | 3,18 | 218.446 | <0.0001 |
| 6 mm middle | 3,18 | 159.137 | <0.0001 |
| 10 mm middle | 3,18 | 157.39 | <0.0001 |
| 6 mm apical | 3,18 | 178.97 | <0.0001 |
| 10 mm apical | 3,18 | 189.9 | <0.0001 |
| (b) Overall binaural and monaural SRM | | | |
| Baseline | 1,18 | 13.408 | <0.005 |
| 6 mm basal | 1,18 | 21.326 | <0.0001 |
| 10 mm basal | 1,18 | 8.970 | <0.01 |
| 6 mm middle | 1,18 | 17.189 | <0.005 |
| 10 mm middle | 1,18 | 4.552 | <0.05 |
| 6 mm apical | 1,18 | 27.552 | <0.0001 |
| 10 mm apical | 1,18 | 10.052 | <0.01 |

Table 4.

Results for the two-way interaction of spatial configuration × listening mode.

| Condition | df | F | p |
|---|---|---|---|
| (a) SRTs | | | |
| Baseline | 3,54 | 21.787 | <0.0001 |
| 6 mm basal | 3,54 | 10.664 | <0.0001 |
| 10 mm basal | 3,54 | 6.852 | <0.005 |
| 6 mm middle | 3,54 | 12.403 | <0.0001 |
| 10 mm middle | 3,54 | 3.322 | <0.05 |
| 6 mm apical | 3,54 | 28.974 | <0.0001 |
| 10 mm apical | 3,54 | 11.429 | <0.0001 |
| (b) SRM | | | |
| Baseline | 1,18 | 26.541 | <0.0001 |
| 6 mm basal | 1,18 | 15.269 | <0.005 |
| 10 mm basal | 1,18 | 20.271 | <0.0001 |
| 6 mm middle | 1,18 | 14.362 | <0.005 |
| 10 mm middle | 1,18 | 5.519 | <0.05 |
| 6 mm apical | 1,18 | 43.896 | <0.0001 |
| 10 mm apical | 1,18 | 14.709 | <0.005 |

Figure 4.

Average SRTs (±SD) plotted as the difference in decibels between monaural and binaural SRTs. A positive value indicates an improvement in performance due to binaural listening; a value near zero indicates minimal difference between monaural and binaural SRTs. Data are plotted as a function of spectral hole size, with 0 mm referring to the baseline condition, for the basal, middle, and apical hole conditions.

The interaction between spatial configuration and listening mode was also significant [see Table 4(a)] and was followed up with post hoc independent-samples t-tests. In the baseline condition, binaural SRTs were lower than monaural SRTs for all spatial configurations (p<0.05); all values shown in Fig. 4 at hole size=0 reflect significant group differences. However, when spectral holes were introduced, group differences depended on the spatial configuration. The two groups performed comparably in quiet and with the interferers in front, conditions in which the SNR was identical at the two ears. As can be seen in Fig. 4, an exception occurred in the 10 mm basal condition, in which the binaural group had lower SRTs in quiet than the monaural group (p<0.0001). When the interferers were located to the right, the binaural group performed substantially better than the monaural group (p<0.0001) in every spectral hole condition. However, when the interferers were located to the left, the binaural group performed better only in the 6 mm basal (p<0.05), 6 mm middle (p<0.005), and 10 mm apical (p<0.0001) hole conditions.

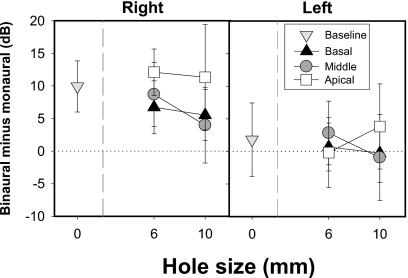

Figure 5 shows group differences in SRM. The data are plotted such that a value of zero reflects no group difference and positive values indicate greater SRM in the binaural group. Relative to the monaural group, the binaural group had greater SRM in all but the basal and middle 10 mm hole conditions [see Table 2(b)]. As can also be seen in Fig. 5, right SRM was greater than left SRM [see Table 3(b)]. The group difference is accounted for by the binaural group having greater right SRM than the monaural group, whereas the two groups had comparable left SRM, as is evident from values close to zero [see Table 4(b)]. This latter finding is a good example of the robust advantage conferred by the head shadow effect, which is naturally available in the monaural condition when listening with the right ear and the interferers are on the left.

Figure 5.

Average SRM values (±SD) plotted as the difference in decibels between binaural and monaural SRM. A positive value indicates greater SRM due to binaural listening; a value near zero indicates minimal difference between monaural and binaural SRM. Data are plotted as a function of spectral hole size, with 0 mm referring to the baseline condition, for the basal, middle, and apical hole conditions.

DISCUSSION

In this study, measures of SRT and SRM were used to evaluate the effect of simulated dead regions, or spectral holes, on sentence recognition. Previous studies addressed this issue in quiet listening conditions, which provide only a limited representation of the real world (Kasturi and Loizou, 2002; Shannon et al., 2002). In addition, those studies used either binaural or monaural listening, so the role of binaural hearing could not be determined. Given that listeners typically operate in less than ideal listening situations, the current study provides an estimate of the effect of spectral holes on performance under conditions that mimic the cocktail party situation (for a review, see Bronkhorst, 2000). By simulating a complex auditory environment with virtual-space stimuli, we were able to examine the interaction between the limitations imposed by spectral holes and the contribution of binaural hearing to performance.

Effect of size and tonotopic location of spectral holes on SRTs

Using a 20-band noise-excited vocoder, the current results demonstrated the deleterious effect of spectral holes on SRTs for sentences. As can be seen in Fig. 2, one important finding is the lack of a difference in SRTs between the 6 mm basal (4350–10 800 Hz) and apical (150–600 Hz) holes. However, when the size of the spectral hole was increased to 10 mm, SRTs for the basal hole conditions were higher than those for the apical hole conditions. This suggests that basal holes spanning 2487–10 800 Hz are more detrimental to overall performance than apical holes spanning 150–1139 Hz, and it points to the importance of high-frequency information in the basal region for sentence recognition. Consistent with these data, individuals with high-frequency hearing loss often complain of great difficulty understanding speech, particularly in the presence of background noise (Chung and Mack, 1979; Pekkarinen et al., 1990). The current findings also agree with anatomical, electrophysiological, and behavioral data showing that loss of basal cells in non-human mammals produces a greater deterioration in threshold than loss of apical cells (Schuknecht and Neff, 1952; Stephenson et al., 1984; Smith et al., 1987). One interpretation is that, because of redundancy in the mechanisms that encode low-frequency information, a relatively small number of apical hair cells can account for hearing at low frequencies (Prosen et al., 1990).
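The frequency ranges quoted above follow from Greenwood's (1990) frequency-position function. The sketch below maps hole sizes in millimeters to frequency ranges under several assumptions: Greenwood's human constants (A = 165.4 Hz and a slope of 2.1 per cochlear length, i.e., 0.06/mm for the 35 mm cochlea assumed in this study), k = 0.88 (some studies use k = 1), an analysis range of 150–10 800 Hz, and a middle hole centered on the array. Because the study built its holes from whole vocoder bands, the edges computed here are only approximate and need not match the reported values; the helper names are hypothetical.

```python
import math

# Greenwood (1990) human constants; x is distance from the apex in mm.
A_HZ = 165.4                 # scaling constant (Hz)
SLOPE_PER_MM = 2.1 / 35.0    # assumes a 35 mm cochlea
K = 0.88                     # integration constant (assumed; some studies use 1.0)


def place_to_freq(x_mm):
    """Characteristic frequency (Hz) at x_mm from the apex."""
    return A_HZ * (10 ** (SLOPE_PER_MM * x_mm) - K)


def freq_to_place(f_hz):
    """Distance from the apex (mm) whose characteristic frequency is f_hz."""
    return math.log10(f_hz / A_HZ + K) / SLOPE_PER_MM


def hole_range(lo_hz, hi_hz, size_mm, where):
    """Frequency range (Hz) removed by a hole of size_mm at 'base', 'middle', or 'apex'.

    The simulated array spans lo_hz..hi_hz; quantization to band edges is ignored.
    """
    x_lo, x_hi = freq_to_place(lo_hz), freq_to_place(hi_hz)
    if where == "apex":
        start = x_lo
    elif where == "base":
        start = x_hi - size_mm
    elif where == "middle":
        start = (x_lo + x_hi - size_mm) / 2.0  # centered on the array
    else:
        raise ValueError(f"unknown location: {where}")
    return place_to_freq(start), place_to_freq(start + size_mm)


for where in ("base", "middle", "apex"):
    for size in (6, 10):
        f_lo, f_hi = hole_range(150.0, 10800.0, size, where)
        print(f"{size:>2} mm {where:<6}: {f_lo:7.0f} - {f_hi:7.0f} Hz")
```

Under these assumptions the 150–10 800 Hz array occupies roughly 26 mm of simulated cochlear length, so a 10 mm hole removes more than a third of it, and the same length of cochlea covers far more hertz at the base than at the apex.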

More important than basal stimulation, however, appears to be mid-cochlear stimulation. Our results demonstrated a substantial increase in SRTs when spectral holes were created in the middle of the simulated electrode array. This degradation in performance was greater than that produced by basal or apical holes, which suggests the importance of the mid-frequency range for sentence recognition. Several lines of evidence indicate that the mid-frequency region carries most of the spectral cues for speech, such as the second and third formants, which are important for vowel and consonant identification (Stevens, 1997). In addition, studies using filtered speech have shown that bands in the mid-frequency range (1500–3000 Hz) yield better speech recognition than those in the lower-frequency range (e.g., French and Steinberg, 1947; Stickney and Assmann, 2001). Direct measures of frequency-importance functions for a variety of speech materials also showed that the frequency band near 2000 Hz contributes the most to speech intelligibility (e.g., Bell et al., 1992; DePaolis et al., 1996). Finally, it appears that while low-frequency information is important for vowel recognition and high-frequency information for consonant recognition, mid-frequency information is important for both (Kasturi and Loizou, 2002). Taken together, the mid-frequency range seems to be the richest information-bearing region of the speech spectrum; hence, a significant deterioration in sentence recognition is likely when this range is missing. Overall, the current results are consistent with most frequency-importance functions.

In contrast to these results, however, previous work using a band-pass filtering approach in NHLs demonstrated that high levels of speech recognition can be achieved even when a large portion of the mid-frequency range is disrupted (e.g., Breeuwer and Plomp, 1984; Warren et al., 1995; Lippmann, 1996). Several issues need to be taken into account when considering this difference. First, in the current study, an additional reduction in spectral information was introduced by the vocoder. This difference supports our hypothesis that spectral holes are detrimental to sentence recognition because of the fragile nature of spectrally sparse information in the vocoder and the reduced ability to seamlessly combine information from disjointed bands. Future work might examine more closely the interaction between the number of channels and susceptibility to degradation.
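The hypothesis above can be explored by simulation. The following is a minimal NumPy sketch of a noise-excited vocoder with a simulated spectral hole. It is not the processing used in this study: the FFT brick-wall filters (real vocoders typically use IIR or FIR filter banks), half-wave rectification with a 160 Hz envelope cutoff, Greenwood-spaced band edges with k = 0.88, and implementing a hole by dropping whole channels are all assumptions, and the function names are hypothetical.

```python
import numpy as np


def greenwood_edges(lo_hz, hi_hz, n_bands, a_hz=165.4, k=0.88):
    """Band edges equally spaced in cochlear place (place is linear in log10(f/A + k))."""
    u = np.linspace(np.log10(lo_hz / a_hz + k), np.log10(hi_hz / a_hz + k), n_bands + 1)
    return a_hz * (10 ** u - k)


def bandpass_fft(x, fs, f_lo, f_hi):
    """Zero-phase brick-wall filter: keep FFT bins with f_lo <= f < f_hi."""
    spec = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spec[(freqs < f_lo) | (freqs >= f_hi)] = 0.0
    return np.fft.irfft(spec, n=len(x))


def envelope(band, fs, cutoff_hz=160.0):
    """Half-wave rectify, low-pass, and clip small negative ripples."""
    return np.maximum(bandpass_fft(np.maximum(band, 0.0), fs, 0.0, cutoff_hz), 0.0)


def noise_vocoder(x, fs, edges_hz, hole_bands=(), seed=0):
    """Sum of noise carriers modulated by band envelopes; bands in hole_bands are dropped."""
    rng = np.random.default_rng(seed)
    out = np.zeros(len(x))
    for i, (f_lo, f_hi) in enumerate(zip(edges_hz[:-1], edges_hz[1:])):
        if i in hole_bands:
            continue  # simulated dead region: this channel contributes nothing
        env = envelope(bandpass_fft(x, fs, f_lo, f_hi), fs)
        carrier = bandpass_fft(rng.standard_normal(len(x)), fs, f_lo, f_hi)
        out += bandpass_fft(env * carrier, fs, f_lo, f_hi)  # confine output to the band
    return out


# Usage with a toy amplitude-modulated 500 Hz tone (not speech).
fs = 22050
t = np.arange(int(0.5 * fs)) / fs
x = np.sin(2 * np.pi * 500 * t) * (1 + np.sin(2 * np.pi * 4 * t))
edges = greenwood_edges(150.0, 10800.0, 20)
full = noise_vocoder(x, fs, edges)
holed = noise_vocoder(x, fs, edges, hole_bands=range(8))  # drop the 8 most apical bands
```

Running the same comparison on real sentences, while varying the number of bands and the position of `hole_bands`, is one way to probe the interaction between channel number and susceptibility to holes suggested above as future work.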

Our results also suggest that the extent of degradation in speech understanding depends on the location of the spectral hole along the simulated cochlear array; thus, the approach used here could help identify regions along the cochlea whose loss leaves the listener particularly susceptible to disruption. Nonetheless, one might object that meaningful sentences are a poor choice for measuring speech recognition because of their linguistic redundancy. Meaningful sentences are likely to provide semantic predictability and contextual information that listeners can exploit to facilitate speech understanding (Miller et al., 1951; Pickett and Pollack, 1963; Rabinowitz et al., 1992). This concern is supported by Stickney and Assmann (2001), who replicated a previous investigation showing that speech intelligibility can remain high even when listening through narrow spectral slits (Warren et al., 1995). Using sentences with high and low context predictability, Stickney and Assmann (2001) found that while sentences with high predictability led to high recognition rates, performance dropped by 20% when sentences with low predictability were used. It is important to note, however, that the sentence corpus used in this study has relatively low word-context predictability, with almost no contextual information present (Rabinowitz et al., 1992); hence, contextual cues were not a confounding factor in the current study. Rather, these results support the importance of mid-frequency information to overall speech recognition.

Findings from the current study are at variance with aspects of previous work using a similar approach (Shannon et al., 2002), in which apical holes were more disruptive to sentence recognition than basal holes. Methodological differences are likely responsible for this discrepancy. Specifically, the two studies differ in the frequency partitioning of the bands used to create vocoded speech. Frequency-to-place mapping can be determined either with the Greenwood equation (this study) or with one of the 15 frequency allocation tables (Cochlear Corporation, 1995), such as Table 9, which uses linear spacing up to 1550 Hz and logarithmic spacing for the remaining bands (Shannon et al., 2002). The range of frequency information dropped to create the spectral holes therefore also differed between the two studies. In Shannon et al. (2002), the frequency range of 350–2031 Hz was eliminated to create the largest apical holes, whereas the current study eliminated the ranges 1139–2487 and 749–3616 Hz to create 6 and 10 mm spectral holes, respectively, in the middle region. Thus, the frequencies removed for the apical holes in Shannon et al. (2002) overlap with those removed for the middle holes in the current study. Hence, regardless of the discrepancy in the frequency partitioning of the bands, both studies confirm the importance of this frequency range for speech discrimination.

Effect of size and tonotopic location of spectral holes on SRM

In adverse listening environments, listeners take advantage of spatial cues to segregate target speech from interfering sounds. SRM is the improvement in speech recognition that occurs when a target source is spatially separated from interfering sounds. In this study, we examined the extent to which SRM is affected when speech is spectrally impoverished.

It was hypothesized that, despite the presence of spectral holes, spatial cues would remain available and be sufficient for SRM. Results from the binaural group (Fig. 3, panel A) indicate that the 6 mm holes had no significant impact on SRM, although there was a tendency toward a modest decrease in SRM for the 6 mm basal and middle hole conditions that did not reach statistical significance. In contrast, under monaural stimulation, SRM was reduced by the 6 mm spectral holes. When 10 mm spectral holes were introduced, however, SRM was reduced in the binaural conditions, with the extent of the reduction depending on the cochlear place of the hole. Specifically, binaural SRM was particularly reduced by the 10 mm middle and basal holes. In comparison, the 10 mm spectral holes had no effect on SRM in the monaural conditions. These results raise an important question about the binaural/monaural group differences for the 10 mm conditions. When considering these data, however, one should take into account that the 6 mm spectral holes already reduced SRM in the monaural conditions. It is therefore reasonable to predict that monaural SRM would decrease further as hole size increases. Indeed, this decrease in SRM is noticeable in Fig. 3 for the 10 mm basal conditions (panel B). However, while this decrease in SRM was statistically significant in the binaural condition, it did not reach significance in the monaural conditions. The possibility that inter-subject differences masked this effect cannot be ruled out. Notably, these individual differences are larger than those observed in NHLs tested under similar conditions with natural speech (Hawley et al., 2004). Part of this variability could be introduced by the uncertainty of listening to spectrally limited stimuli.

Interestingly, the finding of reduced SRM in the presence of spectral holes is consistent with previous reports of reduced SRM in hearing-impaired individuals (Duquesnoy, 1983; Gelfand et al., 1988). It has been suggested that reduced SRM in hearing-impaired individuals is related to their inability to take advantage of interaural level differences because of reduced audibility (Bronkhorst and Plomp, 1989, 1992). Findings from the current study do not support that idea, as increasing the level of the target signal did not preserve the spatial cues; rather, these results are more consistent with the idea that reduced SRM in hearing-impaired individuals is related to reduced or absent spectral cues underlying head shadow effects (Dubno et al., 2002). The head shadow effect becomes evident at approximately 1500 Hz and is largest between 2000 and 5000 Hz (Nordlund, 1962; Tonning, 1971; Festen and Plomp, 1986). In this study, creating basal and middle spectral holes systematically removed parts of this frequency range, depending on the exact location and extent of the holes. Specifically, the 10 mm basal holes removed 2487–10 800 Hz, and the 10 mm middle holes removed 749–3616 Hz. A large reduction in SRM was observed in these conditions. On the other hand, when the 10 mm apical holes removed 150–1139 Hz, no change in SRM was observed. Therefore, preserving the spectral information underlying head shadow cues might aid sound segregation, which is essential for overcoming the problem of listening in noise.
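To make the overlap argument concrete, the sketch below computes what fraction of the head-shadow region each 10 mm hole removes, measuring overlap on a logarithmic (octave) frequency scale. The octave measure and the crisp region boundaries are simplifying assumptions (head shadow actually grows gradually with frequency), the hole ranges are the 10 mm values given in this discussion (basal 2487–10 800 Hz, middle 749–3616 Hz, apical 150–1139 Hz), and the names are hypothetical. This is a back-of-envelope calculation, not an analysis performed in the study.

```python
import math

# 10 mm hole ranges (Hz) as given in this discussion.
HOLES_HZ = {
    "basal": (2487.0, 10800.0),
    "middle": (749.0, 3616.0),
    "apical": (150.0, 1139.0),
}

# Head-shadow regions cited in the text: onset ~1500 Hz, largest 2000-5000 Hz.
REGIONS_HZ = {"1500-5000 Hz": (1500.0, 5000.0), "2000-5000 Hz": (2000.0, 5000.0)}


def octaves(lo_hz, hi_hz):
    """Width of a frequency range in octaves (0 if the range is empty)."""
    return math.log2(hi_hz / lo_hz) if hi_hz > lo_hz else 0.0


def share_removed(hole, region):
    """Fraction of a region's octave width that falls inside the hole."""
    lo, hi = max(hole[0], region[0]), min(hole[1], region[1])
    return octaves(lo, hi) / octaves(*region)


for label, region in REGIONS_HZ.items():
    for name, hole in HOLES_HZ.items():
        print(f"{label}: {name:<6} hole removes {share_removed(hole, region):.0%}")
```

Under these assumptions the middle hole removes about 73% of the 1500–5000 Hz range and the basal hole about 58%, whereas over the 2000–5000 Hz core the order reverses (about 65% vs 76%); the apical hole removes none of either. The reversal is at least consistent with basal holes being the most disruptive to SRM.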

Contribution of binaural hearing

This study was partly motivated by the clear and documented limitations to the advantages offered by bilateral CIs (e.g., Wilson et al., 2003; Shannon et al., 2004, p. 366; Tyler et al., 2006; Ching et al., 2007). In CI users, speech understanding in the presence of interferers has been shown to improve to some extent with bilateral CIs, although much of this benefit seems to derive from the head shadow effect (van Hoesel and Tyler, 2003; Litovsky et al., 2006a, 2009), a physical effect that is monaurally mediated. In general, binaural advantages in CI users are minimal or absent relative to those reported in NHLs (e.g., van Hoesel and Clark, 1997; Muller et al., 2002; Schon et al., 2002; Gantz et al., 2002; Litovsky et al., 2006a; Buss et al., 2008; Loizou et al., 2009; Litovsky et al., 2009). These differences suggest that unknown factors limit CI users’ performance. The current study examined one such potential factor: spectral holes.

The binaural group had lower SRTs than the monaural group; however, the extent of the binaural advantage depended on the presence of spectral holes. In the baseline condition (no holes), the binaural advantage was present in quiet and with interferers in front, right, and left, consistent with previous reports of binaural advantages in quiet and in adverse listening environments (e.g., MacKeith and Coles, 1971; Bronkhorst and Plomp, 1989; Arsenault and Punch, 1999). With the introduction of spectral holes, the binaural group performed better than the monaural group only in the spatially separated conditions, with the exception of the quiet 10 mm basal condition. These results suggest that the advantages of binaural listening in quiet and with interferers in front were reduced by spectral holes regardless of hole location and size. In the absence of spatial cues, listeners have been shown to exploit other perceptual cues, such as spectral and temporal differences between target and interfering sources, to extract information from multiple speech signals (Tyler et al., 2002; Assmann and Summerfield, 1990; Leek and Summers, 1993; Bird and Darwin, 1998; Bacon et al., 1998; Vliegen and Oxenham, 1999; Summers and Molis, 2004). For example, Hawley et al. (2004) reported that the effects of binaural listening and F0 differences seem to be interdependent: advantages of F0 cues were larger in binaural than in monaural listening, and binaural advantages were greater when F0 cues were present. Such perceptual cues are likely to be limited or less distinct when spectral holes are present, which may explain why the binaural advantage was reduced in the quiet and front conditions.

The greatest advantage of binaural listening occurred when interferers were spatially separated to the right. Under monaural stimulation (right ear), listeners had substantially elevated SRTs when speech interferers were ipsilateral to the functional ear, a configuration that created an unfavorable SNR. When interferers were placed on the contralateral (left) side, monaural SRTs improved substantially, yet they remained higher than binaural SRTs in some conditions. Specifically, the binaural group had lower left SRTs than the monaural group in the 6 mm basal and middle hole conditions and in the 10 mm apical hole condition. These results suggest that binaural hearing might offer additional mechanisms that improve SRTs when spectral information is reduced. However, these advantages did not occur for the 10 mm basal and middle holes or the 6 mm apical hole; these results are likely driven by the reduced SRM in the basal and middle conditions. Additionally, because left SRM in the monaural conditions was greater with apical holes than in the baseline, monaural speech perception improved considerably, which minimized the difference between binaural and monaural performance for the left spatial configuration. In general, these results indicate that the extent of binaural benefit for the left spatial configuration was susceptible to the size and location of the spectral holes.

Results further showed a significant main effect of 6 mm basal and apical holes on SRTs for the binaural conditions but not for the monaural conditions. These results do not necessarily indicate that binaural hearing is more susceptible to spectral holes than monaural hearing, for two reasons. (1) Although this effect was pronounced in the binaural conditions, right and left SRTs were still significantly lower (better) in the binaural than in the monaural listening mode. (2) Because the effect of spectral holes was determined from the change in SRTs relative to the baseline conditions, these results could reflect lower baseline SRTs in the binaural conditions and higher baseline SRTs in the monaural conditions. These considerations suggest that spectral holes degraded SRTs to a similar extent in the binaural and monaural conditions.

Similar comparisons of SRM data showed a robust advantage for binaural hearing in the right spatial configuration, because this configuration produced an unfavorable SNR in the monaural conditions owing to the proximity of the interferers to the functional ear. This result points to the susceptibility of unilateral CI users to interfering speech. However, the amount of SRM for the left configuration was comparable for the binaural and monaural listening modes, both with spectral holes and in the baseline condition. Overall, these findings are consistent with previous results showing a similar extent of spatial unmasking for binaural and monaural (shadowed ear) conditions in bilateral CI users (van Hoesel and Tyler, 2003).

SUMMARY AND CONCLUSION

Findings from the current study demonstrated that spectral holes are detrimental to speech intelligibility, as evidenced by elevated SRTs and reduced SRM; the extent of this deterioration depended on the cochleotopic location and size of the simulated spectral holes as well as on the listening environment. A substantial increase in SRTs occurred when spectral holes were created in the middle of the simulated electrode array; this deterioration in performance was greater than that produced by basal and apical holes. The current results further demonstrated that the loss of high-frequency information caused by basal holes produced the greatest detriment to SRM. When spectral information is limited, binaural hearing seems to offer greater advantages over monaural stimulation in acoustically complex environments, as demonstrated by lower SRTs. However, these advantages were reduced in the presence of spectral holes: whereas binaural advantages occurred in all spatial configurations in the baseline condition, with spectral holes they were observed mostly when target and interfering speech were spatially separated. Regarding SRM, the greatest advantage of binaural over monaural (right ear) hearing was found when interfering speech was placed to the right, on the side of the monaurally stimulated ear, where the SNR was poor. SRM was reduced in the presence of basal and middle holes but slightly increased with apical holes, suggesting that the loss of low-frequency input may be associated with greater dependence on spatial cues for unmasking. In general, these findings imply that listening under simulated CI conditions makes listeners particularly susceptible to holes in the mid-to-high-frequency regions of the speech spectrum. However, the extent of this degradation in actual CI users may differ because of factors not controlled here, such as the neural-electrode interface, current spread, and real holes in hearing due to poor neural survival. Finally, the results suggest that, when spectral information is limited, spectral cues are more important than temporal cues for speech intelligibility.

ACKNOWLEDGMENTS

The authors are grateful to Shelly Godar, Tanya Jensen, and Susan Richmond for assisting with subject recruitment. This work was supported by NIH-NIDCD Grant No. R01DC030083 to R.L.

Footnotes

HRTFs were obtained from the MIT Media Laboratory.

References

- ANSI (2004). ANSI S3.6-2004, American National Standards specification for audiometers, American National Standards Institute, New York.

- Arsenault, M., and Punch, J. (1999). “Nonsense-syllable recognition in noise using monaural and binaural listening strategies,” J. Acoust. Soc. Am. 105, 1821–1830. 10.1121/1.426720 [DOI] [PubMed] [Google Scholar]

- Assmann, P., and Summerfield, Q. (1990). “Modeling the perception of concurrent vowels: Vowels with different fundamental frequencies,” J. Acoust. Soc. Am. 88, 680–97. 10.1121/1.399772 [DOI] [PubMed] [Google Scholar]

- Bacon, S., Opie, J., and Montoya, D. (1998). “The effect of hearing loss and noise masking on the masking release for speech in temporally complex backgrounds,” J. Speech Lang. Hear. Res. 41, 549–563. [DOI] [PubMed] [Google Scholar]

- Başkent, D., and Shannon, R. (2006). “Frequency transposition around dead regions simulated with a noiseband vocoder,” J. Acoust. Soc. Am. 119, 1156–1163. 10.1121/1.2151825 [DOI] [PubMed] [Google Scholar]

- Bell, T. S., Dirks, D. D., and Trine, T. D. (1992). “Frequency-importance functions for words in high-and low-context sentences,” J. Speech Hear. Res. 35, 950–959. [DOI] [PubMed] [Google Scholar]

- Bierer, J. A. (2007). “Threshold and channel interaction in cochlear implant users: Evaluation of the tripolar electrode configuration,” J. Acoust. Soc. Am. 121, 1642–1653. 10.1121/1.2436712 [DOI] [PubMed] [Google Scholar]

- Bird, J., and Darwin, C. J. (1998). “Effects of a difference in fundamental frequency in separating two sentences,” in Psychophysical and Physiological Advances in Hearing, edited by Palmer A. R., Rees A., Summerfield A. Q., and Meddis R. (Whurr, London: ). [Google Scholar]

- Blauert, J. (1997). Spatial Hearing: The Psychophysics of Human Sound Localization (MIT Press, Cambridge, MA: ). [Google Scholar]

- Breeuwer, M., and Plomp, R. (1984). “Speechreading supplemented with frequency-selective sound-pressure information,” J. Acoust. Soc. Am. 76, 686–691. 10.1121/1.391255 [DOI] [PubMed] [Google Scholar]

- Bronkhorst, A. (2000). “The cocktail party phenomenon: A review of research on speech intelligibility in multiple-talker conditions,” Acust. Acta Acust. 86, 117–128. [Google Scholar]

- Bronkhorst, A., and Plomp, R. (1988). “The effect of head-induced interaural time and level differences on speech intelligibility in noise,” J. Acoust. Soc. Am. 83, 1508–1516. 10.1121/1.395906 [DOI] [PubMed] [Google Scholar]

- Bronkhorst, A., and Plomp, R. (1989). “Binaural speech intelligibility in noise for hearing-impaired listeners,” J. Acoust. Soc. Am. 86, 1374–1383. 10.1121/1.398697 [DOI] [PubMed] [Google Scholar]

- Bronkhorst, A., and Plomp, R. (1992). “Effect of multiple speechlike maskers on binaural speech recognition in normal and impaired hearing,” J. Acoust. Soc. Am. 92, 3132–3139. 10.1121/1.404209 [DOI] [PubMed] [Google Scholar]

- Buss E., Pillsbury, H., Buchman, C., Pillsbury, C., Clark, M., Haynes, D., Labadie, R., Amberg, S., Roland, P., Kruger, P., Novak, M., Wirth, J., Black, J., Peters, R., Lake, J., Wackym, P., Firszt, J., Wilson, B., Lawson, D., Schatzer, R., D'Haese, PS, and Barco, A. (2008). “Multicenter U.S. bilateral MED-EL cochlear implantation study: speech perception over the first year of use,” Ear Hear. 29, 20–32 [DOI] [PubMed] [Google Scholar]

- Ching, T., van Wanrooy, E., and Dillon, H. (2007). “Binaural-bimodal fitting or bilateral implantation for managing severe to profound deafness: A review,” Trends Amplif. 11, 161–192. 10.1177/1084713807304357 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chung, D., and Mack, B. (1979). “The effect of masking by noise on word discrimination scores in listeners with normal hearing and with noise-induced hearing loss,” Scand. Audiol. 8, 139–143. [DOI] [PubMed] [Google Scholar]

- Cochlear Corporation (1995). Technical reference manual, Engle-wood, Co.

- Culling, J., Hawley, M., and Litovsky, R. (2004). “The role of head-induced interaural time and level differences in the speech reception threshold for multiple interfering sound sources,” J. Acoust. Soc. Am. 116, 1057–1065. 10.1121/1.1772396 [DOI] [PubMed] [Google Scholar]

- DePaolis, R. A., Janota, C. P., and Frank, T. (1996). “Frequency importance functions for words, sentences, and continuous discourse,” J. Speech Hear. Res. 39, 714–723. [DOI] [PubMed] [Google Scholar]

- Drullman, R., and Bronkhorst, A. (2000). “Multichannel speech intelligibility and talker recognition using monaural, binaural, and three-dimensional auditory presentation,” J. Acoust. Soc. Am. 107, 2224–2235. 10.1121/1.428503 [DOI] [PubMed] [Google Scholar]

- Dubno, J., Ahlstrom, J., and Horwitz, A. (2002). “Spectral contributions to the benefit from spatial separation of speech and noise,” J. Speech Lang. Hear. Res. 45, 1297–1310. 10.1044/1092-4388(2002/104) [DOI] [PubMed] [Google Scholar]

- Duquesnoy, A. (1983). “Effect of a single interfering noise or speech source upon the binaural sentence intelligibility of aged persons,” J. Acoust. Soc. Am. 74, 739–743. 10.1121/1.389859 [DOI] [PubMed] [Google Scholar]

- Festen, J., and Plomp, R. (1986). “Speech-reception threshold in noise with one and two hearing aids,” J. Acoust. Soc. Am. 79, 465–471. 10.1121/1.393534 [DOI] [PubMed] [Google Scholar]

- Fletcher, H., and Galt, R. H. (1950). “The perception of speech and its relation to telephony,” J. Acoust. Soc. Am. 22, 89–151. 10.1121/1.1906605 [DOI] [Google Scholar]

- French, N., and Steinberg, J. (1947). “Factors governing the intelligibility of speech sounds,” J. Acoust. Soc. Am. 19(1), 90–119. 10.1121/1.1916407 [DOI] [Google Scholar]

- Freyman, R., Balakrishnan, U., and Helfer, K. (2001). “Spatial release from informational masking in speech recognition,” J. Acoust. Soc. Am. 109, 2112–2122. 10.1121/1.1354984 [DOI] [PubMed] [Google Scholar]

- Friesen, L., Shannon, R., Başkent, D., and Wang, X. (2001). “Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implant,” J. Acoust. Soc. Am. 110, 1150–1163. 10.1121/1.1381538 [DOI] [PubMed] [Google Scholar]

- Fu, Q., and Nogaki, G. (2005). “Noise susceptibility of cochlear implant users, the role of spectral resolution and smearing,” J. Assoc. Res. Otolaryngol. 6, 19–27. 10.1007/s10162-004-5024-3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fu, Q., and Shannon, R. (1999). “Recognition of spectrally degraded and frequency shifted vowels in acoustic and electric hearing,” J. Acoust. Soc. Am. 105, 1889–1900. 10.1121/1.426725 [DOI] [PubMed] [Google Scholar]

- Fu, Q., Chinchilla, S., and Galvin, J. (2004). “The role of spectral and temporal cues in voice gender discrimination by normal hearing listeners and cochlear implant users,” J. Assoc. Res. Otolaryngol. 5, 523–260. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gantz, B., Tyler, R., Rubinstein, J., Wolaver, A., Lowder, M., Abbas, P., Brown, C., Hughes, M., and Preece, J. (2002). “Binaural cochlear implants placed during the same operation,” Otol. Neurotol. 23, 169–180. 10.1097/00129492-200203000-00012

- Garadat, S., Litovsky, R., Yu, G., and Zeng, F. G. (2009). “Role of binaural hearing in speech intelligibility and spatial release from masking using vocoded speech,” J. Acoust. Soc. Am. 126, 2522–2535. 10.1121/1.3238242

- Gardner, W., and Martin, K. (1994). HRTF measurements of a KEMAR dummy-head microphone, The MIT media lab machine listening group. http://sound.media.mit.edu/resources/KEMAR.html (Last viewed 2/4/2009).

- Gelfand, S., Ross, L., and Miller, S. (1988). “Sentence reception in noise from one versus two sources: Effect of aging and hearing loss,” J. Acoust. Soc. Am. 83, 248–256. 10.1121/1.396426

- Grant, K., and Braida, L. (1991). “Evaluating the articulation index for auditory-visual input,” J. Acoust. Soc. Am. 89, 2952–2960. 10.1121/1.400733

- Greenwood, D. (1990). “A cochlear frequency-position function for several species—29 years later,” J. Acoust. Soc. Am. 87, 2592–2605. 10.1121/1.399052

- Hartmann, R., Topp, G., and Klinke, R. (1984). “Discharge patterns of cat primary auditory fibers with electrical stimulation of the cochlea,” Hear. Res. 13, 47–62. 10.1016/0378-5955(84)90094-7

- Hawley, M., Litovsky, R., and Colburn, H. (1999). “Speech intelligibility and localization in a multi-source environment,” J. Acoust. Soc. Am. 105, 3436–3448. 10.1121/1.424670

- Hawley, M., Litovsky, R., and Culling, J. (2004). “The benefits of binaural hearing in a cocktail party: Effect of location and type of interferer,” J. Acoust. Soc. Am. 115, 833–843. 10.1121/1.1639908

- Kasturi, K., and Loizou, P. (2002). “The intelligibility of speech with holes in the spectrum,” J. Acoust. Soc. Am. 112, 1102–1111. 10.1121/1.1498855

- Kawano, A., Seldon, H., Clark, G., Ramsden, R., and Raine, C. (1998). “Intracochlear factors contributing to psychophysical percepts following cochlear implantation,” Acta Oto-Laryngol. 118, 313–326. 10.1080/00016489850183386

- Kryter, K. (1962). “Validation of the articulation index,” J. Acoust. Soc. Am. 34, 1698–1702. 10.1121/1.1909096

- Leek, M., and Summers, V. (1993). “The effect of temporal waveform shape on spectral discrimination by normal-hearing and hearing-impaired listeners,” J. Acoust. Soc. Am. 94, 2074–2082. 10.1121/1.407480

- Levitt, H. (1971). “Transformed up-down methods in psychophysics,” J. Acoust. Soc. Am. 49, 467–477. 10.1121/1.1912375

- Liberman, M., and Kiang, N. (1978). “Acoustic trauma in cats. Cochlear pathology and auditory-nerve activity,” Acta Oto-Laryngol., Suppl. 358, 1–63.

- Lippmann, R. (1996). “Accurate consonant perception without mid-frequency speech energy,” IEEE Trans. Speech Audio Process. 4, 66–69. 10.1109/TSA.1996.481454

- Litovsky, R. (2005). “Speech intelligibility and spatial release from masking in young children,” J. Acoust. Soc. Am. 117, 3091–3099. 10.1121/1.1873913

- Litovsky, R. Y., Johnstone, P., and Godar, S. (2006c). “Benefits of bilateral cochlear implants and/or hearing aids in children,” Int. J. Audiol. 45, S78–S91. 10.1080/14992020600782956

- Litovsky, R. Y., Johnstone, P. M., Godar, S., Agrawal, S., Parkinson, A., Peters, R., and Lake, J. (2006b). “Bilateral cochlear implants in children: Localization acuity measured with minimum audible angle,” Ear Hear. 27, 43–59. 10.1097/01.aud.0000194515.28023.4b

- Litovsky, R. Y., Jones, G. L., Agrawal, S., and van Hoesel, R. (2010). “Effect of age at onset of deafness on binaural sensitivity in electric hearing in humans,” J. Acoust. Soc. Am. 126(6) (in press).

- Litovsky, R. Y., Parkinson, A., and Arcaroli, J. (2009). “Spatial hearing and speech intelligibility in bilateral cochlear implant users,” Ear Hear. 30, 419–431. 10.1097/AUD.0b013e3181a165be

- Litovsky, R., Parkinson, A., Arcaroli, J., and Sammeth, C. (2006a). “Clinical study of simultaneous bilateral cochlear implantation in adults: A multicenter study,” Ear Hear. 27, 714–731. 10.1097/01.aud.0000246816.50820.42

- Litovsky, R., Parkinson, A., Arcaroli, J., Peters, R., Lake, J., Johnstone, P., and Yu, G. (2004). “Bilateral cochlear implants in adults and children,” Arch. Otolaryngol. Head Neck Surg. 130, 648–655. 10.1001/archotol.130.5.648

- Loizou, P., Hu, Y., Litovsky, R. Y., Yu, G., Peters, R., Lake, J., and Roland, P. (2009). “Speech recognition by bilateral cochlear implant users in a cocktail party setting,” J. Acoust. Soc. Am. 125, 372–383. 10.1121/1.3036175

- Long, C. (2000). “Bilateral cochlear implants: Basic psychophysics,” Ph.D. thesis, MIT, Cambridge, MA.

- Long, C., Eddington, D., Colburn, S., and Rabinowitz, W. (2003). “Binaural sensitivity as a function of interaural electrode position with a bilateral cochlear implant user,” J. Acoust. Soc. Am. 114, 1565–1574. 10.1121/1.1603765

- MacKeith, N., and Coles, R. (1971). “Binaural advantages in hearing of speech,” J. Laryngol. Otol. 85, 213–232. 10.1017/S0022215100073369

- Mehr, M. A., Turner, C. W., and Parkinson, A. (2001). “Channel weights for speech recognition in cochlear implant users,” J. Acoust. Soc. Am. 109, 359–366. 10.1121/1.1322021

- Miller, G., Heise, G., and Lichten, W. (1951). “The intelligibility of speech as a function of the context of the test material,” J. Exp. Psychol. 41, 329–335. 10.1037/h0062491

- Moore, B., Huss, M., Vickers, D., Glasberg, B., and Alcantara, J. (2000). “A test for the diagnosis of dead regions in the cochlea,” Br. J. Audiol. 34, 205–224.

- Muller, J., Schon, F., and Helms, J. (2002). “Speech understanding in quiet and noise in bilateral users of the MED-EL COMBI 40∕40+ cochlear implant system,” Ear Hear. 23, 198–206. 10.1097/00003446-200206000-00004

- Nadol, J. B., Jr. (1997). “Patterns of neural degeneration in the human cochlea and auditory nerve: Implications for cochlear implantation,” Otolaryngol.-Head Neck Surg. 117, 220–228. 10.1016/S0194-5998(97)70178-5

- Nordlund, B. (1962). “Physical factors in angular localization,” Acta Oto-Laryngol. 54, 75–93. 10.3109/00016486209126924

- Pekkarinen, E., Salmivalli, A., and Suonpaa, J. (1990). “Effect of noise on word discrimination by subjects with impaired hearing, compared with those with normal hearing,” Scand. Audiol. 19, 31–36.

- Pickett, J., and Pollack, I. (1963). “Intelligibility of excerpts from fluent speech: Effects of rate of utterance and duration of excerpt,” Lang. Speech 6, 151–164.

- Plomp, R. (1986). “A signal-to-noise ratio model for the speech-reception threshold of the hearing impaired,” J. Speech Hear. Res. 29, 146–154.

- Prosen, C., Moody, D., Stebbins, W., Smith, D., Sommers, M., Brown, N., Altschuler, R., and Hawkins, J. (1990). “Apical hair cells and hearing,” Hear. Res. 44, 179–194. 10.1016/0378-5955(90)90079-5

- Rabinowitz, W., Eddington, D., Delhorne, L., and Cuneo, P. (1992). “Relations among different measures of speech reception in subjects using a cochlear implant,” J. Acoust. Soc. Am. 92, 1869–1881. 10.1121/1.405252

- Rothauser, E., Chapman, W., Guttman, N., Nordby, K., Silbiger, H., Urbanek, G., and Weinstock, M. (1969). “IEEE recommended practice for speech quality measurements,” IEEE Trans. Audio Electroacoust. 17, 227–246.

- Schleich, P., Nopp, P., and D’Haese, P. (2004). “Head shadow, squelch, and summation effects in bilateral users of the Med-El COMBI 40∕40+ cochlear implant,” Ear Hear. 25, 197–204. 10.1097/01.AUD.0000130792.43315.97

- Schon, F., Muller, J., and Helms, J. (2002). “Speech reception thresholds obtained in a symmetrical four-loudspeaker arrangement from bilateral users of MED-EL cochlear implant,” Otol. Neurotol. 23, 710–714. 10.1097/00129492-200209000-00018

- Schuknecht, H., and Neff, W. (1952). “Hearing after apical lesions in the cochlea,” Acta Oto-Laryngol. 42, 263–274. 10.3109/00016485209120353

- Shannon, R., Fu, Q., Galvin, J., and Friesen, L. (2004). In Cochlear Implants: Auditory Prostheses and Electric Hearing, edited by Zeng F. G., Popper A. N., and Fay R. R. (Springer, New York), pp. 334–376.

- Shannon, R., Galvin, J., III, and Baskent, D. (2002). “Holes in hearing,” J. Assoc. Res. Otolaryngol. 3, 185–199. 10.1007/s101620020021

- Shannon, R., Zeng, F. G., Kamath, V., Wygonski, J., and Ekelid, M. (1995). “Speech recognition with primarily temporal cues,” Science 270, 303–304. 10.1126/science.270.5234.303

- Shepherd, R., and Javel, E. (1997). “Electrical stimulation of the auditory nerve. I. Correlation of physiological responses with cochlear status,” Hear. Res. 108, 112–144. 10.1016/S0378-5955(97)00046-4

- Smith, D., Brown, N., Moody, D., Stebbins, W., and Nuttall, A. (1987). “Cryoprobe-induced apical lesions in the chinchilla. II. Effects on behavioral auditory thresholds,” Hear. Res. 26, 311–317. 10.1016/0378-5955(87)90066-9

- Stephenson, L., Moody, D., Rarey, K., Norat, M., Stebbins, W., and Davis, J. (1984). “Behavioral and morphological changes following low-frequency noise exposure in the guinea pig,” Assoc. Res. Otolaryngol. St. Petersburg, 88–89.

- Stevens, K. (1997). “Articulatory-acoustic relationship,” in Handbook of Phonetic Science, edited by Hardcastle W. J. and Laver J. (Blackwell, Oxford).

- Stickney, G., and Assmann, P. (2001). “Acoustic and linguistic factors in the perception of band-pass-filtered speech,” J. Acoust. Soc. Am. 109, 1157–1165. 10.1121/1.1340643

- Stickney, G., Zeng, F. G., Litovsky, R., and Assmann, P. (2004). “Cochlear implant recognition with speech maskers,” J. Acoust. Soc. Am. 116, 1081–1091. 10.1121/1.1772399

- Summers, V., and Molis, M. (2004). “Speech recognition in fluctuating and continuous maskers: Effects of hearing loss and presentation level,” J. Speech Lang. Hear. Res. 47, 245–256. 10.1044/1092-4388(2004/020)

- Throckmorton, C., and Collins, L. (2002). “The effect of channel interactions on speech recognition in cochlear implant subjects: Predictions from an acoustic model,” J. Acoust. Soc. Am. 112, 285–296. 10.1121/1.1482073

- Tonning, F. (1971). “Directional audiometry II. The influence of azimuth on the perception of speech,” Acta Oto-Laryngol. 72, 352–357. 10.3109/00016487109122493

- Tyler, R., Gantz, B., Rubinstein, J., Wilson, B., Parkinson, A., Wolaver, A., Preece, J., Witt, S., and Lowder, M. (2002). “Three-month results with bilateral cochlear implants,” Ear Hear. 23, 80S–89S. 10.1097/00003446-200202001-00010

- Tyler, R., Noble, W., Dunn, C., and Witt, S. (2006). “Some benefits and limitations of binaural cochlear implants and our ability to measure them,” Int. J. Audiol. 45, S113–S119. 10.1080/14992020600783095

- van Hoesel, R. (2004). “Exploring the benefits of bilateral cochlear implants,” Audiol. Neuro-Otol. 9, 234–246. 10.1159/000078393

- van Hoesel, R. J. M., Jones, G. L., and Litovsky, R. Y. (2009). “Interaural time-delay sensitivity in bilateral cochlear implant users: Effects of pulse-rate, modulation-rate, and place of stimulation,” J. Assoc. Res. Otolaryngol. 10, 557–567. 10.1007/s10162-009-0175-x

- van Hoesel, R., and Clark, G. (1997). “Psychophysical studies with two binaural cochlear implant subjects,” J. Acoust. Soc. Am. 102, 495–507. 10.1121/1.419611

- van Hoesel, R., and Tyler, R. (2003). “Speech perception, localization, and lateralization with bilateral cochlear implants,” J. Acoust. Soc. Am. 113, 1617–1630. 10.1121/1.1539520

- Vermeire, K., Brokx, J., van de Heyning, P., Cochet, E., and Carpentier, H. (2003). “Bilateral cochlear implantation in children,” Int. J. Pediatr. Otorhinolaryngol. 67, 67–70. 10.1016/S0165-5876(02)00286-0

- Vliegen, J., and Oxenham, A. (1999). “Sequential stream segregation in the absence of spectral cues,” J. Acoust. Soc. Am. 105, 339–346. 10.1121/1.424503

- Warren, R., Riener, K., Bashford, J., and Brubaker, B. (1995). “Spectral redundancy: Intelligibility of sentences heard through narrow spectral slits,” Percept. Psychophys. 57, 175–182.

- Wilson, B., Lawson, D., Muller, J., Tyler, R., and Kiefer, J. (2003). “Cochlear implants: Some likely next steps,” Annu. Rev. Biomed. Eng. 5, 207–249. 10.1146/annurev.bioeng.5.040202.121645