Abstract

Intraoperative imaging offers a means to account for morphological changes occurring during the procedure and to resolve geometric uncertainties via integration with a surgical navigation system. Such integration requires registration of the image and world reference frames, conventionally a time-consuming, error-prone manual process. This work presents a method of automatic image-to-world registration of intraoperative cone-beam computed tomography (CBCT) and an optical tracking system. Multimodality (MM) markers consisting of an infrared (IR) reflective sphere with a 2 mm tungsten sphere (BB) placed precisely at the center were designed to permit automatic detection in both the image and tracking (world) reference frames. Image localization is performed by intensity thresholding and pattern matching directly in the 2D projections acquired in each CBCT scan, with 3D image coordinates computed using backprojection and accounting for C-arm geometric calibration. The IR tracking system localized MM markers in the world reference frame, and the image-to-world registration was computed by rigid point matching of image and tracker point sets. The accuracy and reproducibility of the automatic registration technique were compared to conventional (manual) registration using a variety of marker configurations suitable to neurosurgery (markers fixed to the cranium) and head and neck surgery (markers suspended on a subcranial frame). The automatic technique exhibited subvoxel marker localization accuracy (<0.8 mm) for all marker configurations. The fiducial registration error of the automatic technique was (0.35±0.01) mm, compared to (0.64±0.07) mm for the manual technique, indicating improved accuracy and reproducibility. The target registration error (TRE) averaged over all configurations was 1.14 mm for the automatic technique, compared to 1.29 mm for the manual technique, although the difference was not statistically significant (p=0.3).
A statistically significant improvement in precision was observed—specifically, the standard deviation in TRE was 0.2 mm for the automatic technique versus 0.34 mm for the manual technique (p=0.001). The projection-based automatic registration technique demonstrates accuracy and reproducibility equivalent or superior to the conventional manual technique for both neurosurgical and head and neck marker configurations. Use of this method with C-arm CBCT eliminates the burden of manual registration on surgical workflow by providing automatic registration of surgical tracking in 3D images within ∼20 s of acquisition, with registration automatically updated with each CBCT scan. The automatic registration method is undergoing integration in ongoing clinical trials of intraoperative CBCT-guided head and neck surgery.

Keywords: image-guided interventions, cone-beam CT, surgical navigation, image registration, head and neck surgery

INTRODUCTION

Image-guided surgery (IGS) is becoming a common part of the modern surgical arsenal for a variety of procedures in orthopedic, neuro-, and head and neck surgeries.1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16 Conventionally, IGS uses preoperative computed tomography (CT) or magnetic resonance (MR) images to assist the surgeon in planning and executing a surgical procedure with increased precision and accuracy by helping to resolve geometric uncertainties. Most IGS systems report a mean accuracy of ∼1–2 mm or less, which is suitable for a variety of procedures.4 More recently, intraoperative imaging technologies have emerged that allow guidance with respect to images that accurately reflect morphological change at the time of surgery (e.g., tissue deformation or excision). A specific technology considered in this work is cone-beam CT (CBCT) on a mobile C-arm with a flat-panel detector (FPD), offering sub-mm, isotropic 3D spatial resolution and soft-tissue visualization.12, 13, 14, 15, 16, 17, 18 The underlying geometric accuracy of the surgical navigation system may be unchanged (∼1–2 mm, owing to errors in the registration and∕or tracking system), and from the clinical perspective of bulk (nonmicroscopic) disease, improving tracking precision below ∼1 mm may yield diminishing returns (owing to errors in physical treatment delivery and uncertainty in disease extent). The main advantage of intraoperative imaging is therefore navigation in the context of 3D images acquired at the most up-to-date point in the procedure, and the system described below for automatic image-to-world registration is intended to perform at a level of precision comparable to existing techniques (∼1–2 mm).

Real-time surgical navigation (i.e., the tracking of surgical tools within the reference frame of the image) requires registration of the image and tracking coordinate systems, referred to as image-to-world registration. Following registration, the tracking system—e.g., an infrared (IR) or electromagnetic (EM) tracking system used in conjunction with passive or active markers attached to surgical tools—allows localization and visualization of the tool in image coordinates. A variety of image-to-world registration techniques are known, ranging from rigid point-based registration to surface matching and deformable registration.1, 2, 3, 19 The first is the most prevalent, involving the colocalization of specific points in both reference frames (e.g., markers affixed to a stereotactic frame or rigid anatomy2).

Conventionally, markers are localized manually in image coordinates (via mouse click), and tracking coordinates are determined with a handheld trackable pointer. Because manual colocalization takes minutes, the process can present a bottleneck to surgical workflow. An initial registration is usually performed just prior to the procedure, and subsequent perturbation of the markers would degrade the accuracy of surgical navigation. Repeating the registration during the procedure is often untenable. Intraoperative imaging (such as C-arm CBCT) not only provides images that accurately reflect changes in tissue morphology but also presents an opportunity to update the image-to-world registration during the procedure. Moreover, and as the specific topic of investigation below, it presents a means by which the image-to-world registration may be determined automatically, potentially removing an otherwise substantial workflow bottleneck. Specifically, intraoperative imaging is shown to allow the image-to-world registration to be computed automatically with each intraoperative scan, based on markers that are visible to both the imaging and tracking systems.

A variety of techniques for automatic image-to-world registration have been reported.20, 21, 22, 23, 24, 25, 26 Potential solutions include tracking of the C-arm itself, patient registration masks, and automatic headset registration. Tracking of the C-arm is expected to carry inherently greater registration error owing to the distant positioning of the tracking system and nonidealities in the C-arm gantry orbit. The mask and headset potentially improve registration accuracy (fiducials in proximity to the surgical target) but are largely limited to a single, preincision use. Previous work by Bootsma et al.23 automatically registered the image and world reference frames by colocalizing markers within the 3D field of view (FOV) of CBCT reconstructions. The current work extends that approach to allow automatic registration directly from the projection data, permitting markers to be placed outside the 3D FOV and requiring only that markers be present in some subset of the projection data. The approach is analogous to techniques developed for seed localization in brachytherapy, which segment seeds in two or more 2D projections to derive their 3D positions.27, 28, 29, 30 As shown below, such an approach allows automatic registration of image and world reference frames with every CBCT scan and facilitates the development of novel marker tools that are better suited to subcranial surgical sites, allowing marker configurations that are not attached to the cranium or a conventional head frame.

MATERIALS

Intraoperative cone-beam CT

A mobile C-arm for intraoperative CBCT has been developed in collaboration with Siemens Healthcare (Siemens SP, Erlangen, Germany) as previously described7, 8, 9, 14, 15, 16, 17 and illustrated in Fig. 1a. The main modifications to the C-arm include replacement of the x-ray image intensifier with a large-area FPD (PaxScan 4030CB, Varian Imaging Products, Palo Alto, CA) allowing a FOV of 20×20×15 cm³ at the isocenter and soft-tissue imaging capability, motorization of the C-arm orbit and development of a geometric calibration method,31, 32 and integration with a computer-control system for image readout and 3D reconstruction by a modified Feldkamp33 algorithm. Applications under investigation range from image-guided brachytherapy7 to orthopedic12, 13 and head and neck surgery.8, 9, 10, 11, 14

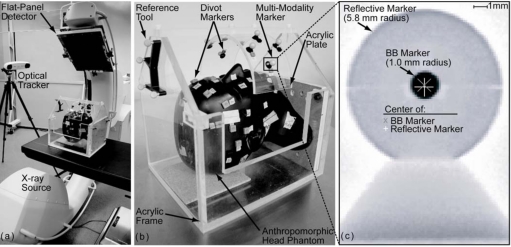

Figure 1.

(a) Illustration of experimental setup showing the C-arm, optical tracking system, and head phantom. (b) Closeup of the anthropomorphic head phantom setup. Divot markers are used to manually localize landmarks and target points, while custom MM markers are used in automatic registration. (c) Radiograph of MM markers mounted on an x-ray translucent post. Markers consist of a reflective spherical marker with tungsten sphere (BB) placed at center. The gray “X” and white “+” indicate the BB marker and reflective marker centers, respectively.

Volume images are reconstructed from x-ray projections acquired over the C-arm orbit (∼178°; as described previously, an orbit of less than 180° plus the fan angle imparts a limited-angle artifact).16 The FPD has 2048×1536 pixels with a pitch of 0.194 mm, which are binned upon readout to 1024×768 pixels (dynamic gain readout, in which the gain of the detector readout amplifiers scales dynamically with the pixel signal as a means of improving dynamic range and CBCT image quality34, 35). CBCT imaging entails acquisition of 100–500 projections reconstructed at 0.2–1.6 mm voxel size (depending on speed and image quality requirements), nominally 200 projections reconstructed at 0.8 mm voxel size (256×256×192 voxels). Filtered backprojection is performed using a modified Feldkamp algorithm, with mechanical nonidealities of the C-arm accommodated using a geometric calibration. The resulting CBCT images demonstrate sub-mm 3D spatial resolution and soft-tissue visibility. All images in this study were acquired at an imaging dose to isocenter of 9.6 mGy [dose to the center of a 16 cm diameter water-equivalent cylinder (“head” phantom)], sufficient for both bony detail and soft-tissue visibility.17 Acquisition time was typically ∼60 s, and reconstruction of 256×256×192 voxel images on a PC workstation (Quad Core 2.8 GHz, 4 GB RAM, Dell, Round Rock, TX) takes ∼20 s.

A component of the system critical to the automatic registration algorithm described below is the geometric calibration, described in detail in previous work. In general, geometric calibration relates the 3D coordinates of voxels in the reconstructed image to 2D pixels in the projection domain.32 The geometric calibration operates on projections of a phantom designed to yield complete pose determination for any projection angle. Geometric parameters are defined according to four right-handed Cartesian coordinate systems denoted by the symbols I (image coordinate system), r (real detector coordinate system), i (virtual detector coordinate system), and p (detector pixel coordinate system). The geometric parameters, determined for each projection j acquired, include the piercing point (Uo,Vo)p, the x-ray source position, the detector position, the detector tilt and rotation, and the gantry angle (γj)I. These parameters and the derived source-to-axis distance (SADj) and axis-to-detector distance (ADDj) provide a complete pose description of the C-arm.

Tracking system and MM markers

The IR tracking system (Polaris Vicra, NDI, Waterloo, ON) illustrated in Fig. 1a was used to measure the position of reflective spherical markers. According to the manufacturer specifications, the camera provides an RMS accuracy of 0.25 mm in marker localization within a measurement volume shaped as a rectangular frustum that begins 55 cm from the face of the camera, with a small base of 49×39 cm² and a large base of 94×89 cm² separated by 78 cm, sufficient to encompass the imaging FOV of the C-arm. The markers were affixed to trackable tools (e.g., a pointer) or objects [e.g., the anthropomorphic head phantom or acrylic frame in Fig. 1b]. A reference tool was used with the tracking system to ensure that perturbation of the camera or object would not result in loss of registration.

Custom MM markers were designed to allow localization by both the IR tracking system and the x-ray imaging system. As illustrated in Fig. 1c, each MM marker consists of a 2.0 mm diameter tungsten sphere (BB) at the center of a reflective spherical marker mounted on an x-ray translucent (polycarbonate) support. The centers of the BB and reflective markers are coincident within 0.15±0.04 mm, as measured by segmentation in orthogonal radiographs of 30 MM markers (i.e., the centroid of the BB segmentation compared to the center of a circular fit to the edge of the reflective marker). Precise centering was achieved by designing the support posts such that the BB rests at the center of the superior-inferior plane of the reflective marker and creating a well at the center of the reflective marker for accurate BB placement in the anterior-posterior and left-right planes. In the automatic image-to-world registration approach detailed below, the BB markers are localized by segmentation in CBCT projection data (to give an “image point set”) which is rigidly registered to reflective markers localized by the tracking system (“tracker point set”). These point sets represent the same locations in the coordinate systems of the imaging and tracking systems.

Anthropomorphic head phantom

As shown in Fig. 1b, an anthropomorphic head phantom containing a natural human skeleton and soft-tissue-simulating material17, 36 was used to provide a realistic context in which to develop and evaluate the automatic registration algorithm. The head was rigidly mounted on an acrylic frame with the reference tool attached. The acrylic frame is x-ray transparent and nonobstructive to the optical tracking system. The frame incorporated a curved acrylic plate placed over the head phantom (∼13.9 cm above the isocenter), as illustrated in Fig. 1b. Two types of markers were affixed to the head and∕or the curved plate—the MM markers described above (used by the automatic registration algorithm) and divot markers (used for manual localization by a hand-held pointer). Various configurations of MM markers placed on the skin surface or acrylic plate were used to evaluate the automatic registration algorithm detailed below. Divot markers were used to compare the performance of manual and automatic registration: Those placed on the skin surface mimicked conventional clinical configurations, while those on the curved plate reproduced the MM marker locations for fair performance comparison. In addition, four divot markers on the skin surface represented targets for evaluation of target registration error (TRE).

AUTOMATIC IMAGE-TO-WORLD REGISTRATION ALGORITHM

The automatic registration algorithm consists of processes illustrated in Fig. 2 to localize markers in both the image reference frame (C-arm image data) and the world reference frame (tracking system). As detailed in the sections below, markers are first automatically localized within 2D projections acquired in any CBCT scan, then transformed to 3D image coordinates, defining the image point set, via the geometric calibration of the C-arm, and finally matched and rigidly registered to the tracker point set to allow tool tracking within a common coordinate system, referred to as surgical navigation.

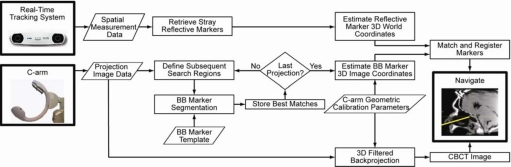

Figure 2.

Flowchart illustrating the automatic registration algorithm. Tracking and imaging processes operate independently up to marker matching and rigid registration. The tracking system reports the location of stray reflective markers. The imaging system searches each projection for BB markers and records the 2D detector pixel location. Using the C-arm geometric calibration, the 3D location of each marker is determined. Image and tracker point sets are matched and registered, and surgical navigation proceeds according to the resulting registration.

3D localization of reflective markers (tracker point set)

The tracking system is capable of localizing up to 50 reflective spherical markers within its FOV. Markers may be defined as a set associated with a predefined tool (e.g., a pointer) or as independent points (referred to as “strays”). The MM markers are treated as strays rigidly affixed to the patient, a stereotactic frame, or an auxiliary device (e.g., the curved plate). The locations of the stray markers are retrieved through an application programming interface (API) command to the camera, implemented for the studies reported below in custom software developed on the open-source IGSTK platform (v2.0, Kitware Inc., Clifton Park, NY). The reflective marker locations (the tracker point set) are then ready for registration to the image point set, as described below.

3D localization of BB markers (image point set)

As illustrated in Fig. 2, localization of the BB markers in 3D image coordinates is a two-step process: (i) segmentation of BBs in the 2D projection image data, followed by (ii) estimation of BB locations in 3D image coordinates in a manner that accounts for the C-arm geometric calibration.

Segmentation of BB markers in 2D projections

Figure 3a shows a CBCT projection of the MM markers affixed to the surface of the head phantom, from which the (U,V)p coordinates of the BBs may be determined by intensity thresholding and template matching (circles). Intensity thresholding is used to identify local maxima (i.e., regions of high attenuation, such as bone and metallic structures). Threshold values giving reliable segmentation of the tungsten BBs (along with other dense objects) were determined empirically and found to be fairly robust, without need of user intervention. Segmented regions are treated as having uniform intensity and are subjected to pattern matching using a priori knowledge of the BB size and shape. The template applied is a circle of uniform intensity with a radius ranging from 2 to 5 pixels. In each projection j (nominally 200 projections), the centroids of all template-matched objects are calculated, and their FPD pixel locations, denoted $(U,V)_p^j$, are stored. These locations comprise the 2D image point set and include all BB markers found in the projections as well as any false positives caused by regions of high x-ray attenuation that match the template. False positives are subsequently rejected based on the (known) total number of “true” markers and the consistency of presentation in the projection sinogram (i.e., markers are expected to follow a smooth sinusoid in the (U,θ) domain). Typically, a segmented region was classified as a false positive if it appeared in fewer than ten projections (to rule out spurious signals or high-attenuation objects that might resemble the BB pattern when truncated at the edge of the search region), allowing the segmentation process to proceed without user intervention.
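The candidate-detection step can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the projection is assumed to be a 2D list already converted to the attenuation (line-integral) domain so that BBs appear bright, 4-connected regions above the threshold stand in for the segmented regions, and a simple equivalent-radius, fill-ratio, and aspect-ratio test replaces true template matching against a 2–5 pixel circle. The function names and tolerance values (`r_min`, `r_max`, `fill_range`, `max_aspect`) are hypothetical; only the ten-projection persistence rule comes from the text.

```python
from collections import deque
import math


def segment_bb_candidates(image, threshold, r_min=1.5, r_max=5.5,
                          fill_range=(0.45, 0.9), max_aspect=1.34):
    """Return (u, v) centroids of compact, roughly circular regions >= threshold.

    Assumes `image` is a list of rows in the attenuation domain (BBs bright).
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for v0 in range(rows):
        for u0 in range(cols):
            if seen[v0][u0] or image[v0][u0] < threshold:
                continue
            # Flood-fill one 4-connected region above threshold.
            region, queue = [], deque([(v0, u0)])
            seen[v0][u0] = True
            while queue:
                v, u = queue.popleft()
                region.append((v, u))
                for dv, du in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nv, nu = v + dv, u + du
                    if (0 <= nv < rows and 0 <= nu < cols and not seen[nv][nu]
                            and image[nv][nu] >= threshold):
                        seen[nv][nu] = True
                        queue.append((nv, nu))
            # Simplified circle test standing in for template matching.
            area = len(region)
            r_eq = math.sqrt(area / math.pi)
            vs = [p[0] for p in region]
            us = [p[1] for p in region]
            h = max(vs) - min(vs) + 1
            w = max(us) - min(us) + 1
            fill = area / (w * h)
            aspect = max(w, h) / min(w, h)
            if (r_min <= r_eq <= r_max and fill_range[0] <= fill <= fill_range[1]
                    and aspect <= max_aspect):
                # Uniform-intensity assumption: unweighted pixel centroid.
                centroids.append((sum(us) / area, sum(vs) / area))
    return centroids


def reject_short_tracks(tracks, min_projections=10):
    """Drop candidate tracks seen in fewer than `min_projections` projections."""
    return {k: idx for k, idx in tracks.items() if len(idx) >= min_projections}
```

Applied to every projection, the returned centroids would form the raw 2D point set, which could then be pruned with `reject_short_tracks` once candidates have been associated into tracks across projections (the association step itself is not shown).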

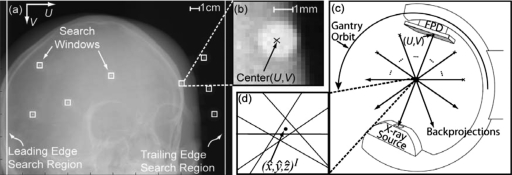

Figure 3.

Determination of marker locations from 2D projections. (a) Segmentation of BB markers in 2D projections. The 2D center of the BB marker is determined by segmentation from projection data using intensity thresholding and pattern matching. (b) Example search window for BB segmentation (20×20 pixels). (c) Estimation of BB marker 3D location in image coordinates by transformation of FPD pixel coordinate system (U,V)p to image coordinate system (x,y,z)I (analogous to backprojection). (d) The 3D estimate of marker location is the point of minimum distance from all backprojections over the gantry orbit.

Defining constrained search regions was found to considerably reduce the complexity of BB segmentation and improve the robustness of automatic 2D localization. Following initial localization of a BB marker in 2D and using the corresponding estimate of its 3D location (as described below), the position of the marker in the subsequent projection can be predicted by forward projection from the 3D image location. A constrained search window can therefore be defined in which the BB marker is expected to appear in subsequent projections. In the studies below, search windows of 20×20 pixels centered on the predicted 2D location of the BB centroid were used, as shown in Figs. 3a, 3b. In addition, search regions at the left and right edges of the projection were defined to better detect BBs entering the 2D FOV. Since the C-arm gantry rotates in a simple semicircle (apart from the geometric nonidealities described below), edge search regions were required only on the leading and trailing edges of rotation. In the studies below, edge search regions 30 pixels wide were sufficient to segment BBs in at least two consecutive projections, which allowed definition of the (20×20 pixel) search windows. Any area of an edge search region that overlaps a search window is omitted during segmentation. Also, the first 9 projections (out of 200) were ignored to avoid slight irreproducibility in gantry vibration at the start of rotation.
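The search-window bookkeeping can be sketched as follows. The 3×4 projection matrix used by the hypothetical `forward_project` is an assumed (common) way of packaging one projection's geometry; the actual system derives its forward projection from the geometric calibration parameters. The 20×20 pixel window, 30 pixel edge strips, and 1024×768 binned detector follow the text; the function names are illustrative.

```python
import math


def forward_project(P, xyz):
    """Project a 3D image-coordinate point with an assumed 3x4 projection matrix P."""
    x, y, z = xyz
    h = [P[r][0] * x + P[r][1] * y + P[r][2] * z + P[r][3] for r in range(3)]
    return h[0] / h[2], h[1] / h[2]


def search_window(center, size=20, width=1024, height=768):
    """Half-open (u0, u1, v0, v1) window of `size` pixels around a predicted
    BB centroid, clipped to the detector."""
    u, v = center
    u0 = math.floor(u) - size // 2
    v0 = math.floor(v) - size // 2
    return (max(0, u0), min(width, u0 + size), max(0, v0), min(height, v0 + size))


def in_edge_search_region(u, v, windows, edge=30, width=1024):
    """True if pixel (u, v) lies in a leading/trailing edge strip but not in any
    existing search window (overlapping areas are omitted, as in the text)."""
    in_edge = u < edge or u >= width - edge
    in_window = any(u0 <= u < u1 and v0 <= v < v1 for u0, u1, v0, v1 in windows)
    return in_edge and not in_window
```

In use, the window for projection j+1 would be centered on `forward_project` of the current 3D estimate, while the edge strips catch BBs that have not yet been localized.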

Localization of BB markers in 3D image coordinates

The 3D image coordinate location of each BB centroid is estimated by transforming the 2D FPD pixel locations (U,V)p for projection j using the C-arm geometric calibration and a linear least squares (LLS) method. First, the C-arm geometric calibration is used to determine the position of the segmented BB centroids in the real detector coordinate system (r),31, 32

$$x_r = -(U - U_o)\,a_u \tag{1}$$

$$y_r = -(V - V_o)\,a_v \tag{2}$$

$$z_r = 0 \tag{3}$$

where Pr(x,y,z) is a 3D position in the real detector coordinate system, (U,V)p and (Uo,Vo)p are the BB centroid location and piercing point of the detector in the pixel coordinate system, respectively, and au and av are the pixel size in the projection domain (au=av=0.388 mm). Note that the directions of the Up and Vp axes are opposite to xr and yr. The real detector position of the BB is then transformed to the image coordinate system using

$$\mathbf{P}_I = \mathbf{R}_{i\to I}\,\mathbf{R}_{r\to i}\,\mathbf{P}_r + \mathbf{T}_{i\to I}, \tag{4}$$

where $\mathbf{P}_I$ is a 3D position on the surface of the FPD in image coordinates, $\mathbf{R}_{r\to i}$ and $\mathbf{R}_{i\to I}$ are rotation matrices from the “real detector” to the “virtual detector” and from the virtual detector to the “image” coordinate systems, respectively, and $\mathbf{T}_{i\to I}$ is a translation vector from the virtual detector to the image coordinate system. The position in the jth projection, $\mathbf{P}_I^j$, is determined for each BB segmented, as illustrated in Fig. 3c. The C-arm geometric calibration also provides the 3D source position, $\mathbf{S}_j$, in image coordinates at each projection j. The 3D image coordinate of the BB therefore lies somewhere along the line segment from the detector position $\mathbf{P}_I^j$ to the source $\mathbf{S}_j$.
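Eqs. 1–4 map a segmented centroid from pixel coordinates to a 3D point on the detector in image coordinates: the pixel offset from the piercing point is scaled by the 0.388 mm pixel size with the axis directions flipped, then rotated from the real to the virtual detector frame, rotated into the image frame, and translated. A minimal Python sketch follows; the per-projection rotation matrices and translation vector would come from the geometric calibration, and the function and argument names are hypothetical.

```python
def pixel_to_real(U, V, U0, V0, au=0.388, av=0.388):
    """Pixel (U, V) -> real-detector (x_r, y_r, z_r) in mm.

    The minus signs reflect that the U/V pixel axes run opposite to x_r/y_r;
    z_r = 0 because the point lies on the detector surface.
    """
    return (-(U - U0) * au, -(V - V0) * av, 0.0)


def _matvec(R, p):
    """3x3 matrix times 3-vector."""
    return tuple(sum(R[r][c] * p[c] for c in range(3)) for r in range(3))


def real_to_image(p_r, R_r_to_i, R_i_to_I, T_i_to_I):
    """P_I = R_{i->I} R_{r->i} P_r + T_{i->I} for one projection."""
    rotated = _matvec(R_i_to_I, _matvec(R_r_to_i, p_r))
    return tuple(a + t for a, t in zip(rotated, T_i_to_I))
```

Evaluating `real_to_image(pixel_to_real(...), ...)` with the calibration of projection j would give the detector-side endpoint of that projection's backprojected ray.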

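In an ideal system, the lines backprojected from a given marker’s detector positions $\mathbf{P}_I^j$ to the corresponding source positions $\mathbf{S}_j$ over the gantry orbit would intersect at a common point, (x,y,z)I. Due to nonidealities in the C-arm gantry rotation and inaccuracy in the 2D segmentation process, however, these backprojected lines do not intersect perfectly, as illustrated in Fig. 3d. Therefore, an LLS method was employed to determine the point of minimum distance, denoted $\hat{\mathbf{P}}_I$, from all backprojected lines j over a range m to n, where m and n are the first and last of a possibly discontinuous set $\mathcal{J} \subseteq \{m,\dots,n\}$ of projections in which the BB was segmented. The LLS method takes a generic expression for the summed squared 3D distance from a point $\mathbf{P} = (x,y,z)_I$ to the backprojected lines,

$$D(\mathbf{P}) = \sum_{j \in \mathcal{J}} \left\| \left(\mathbf{I} - \hat{\mathbf{u}}_j \hat{\mathbf{u}}_j^{\mathsf{T}}\right)\left(\mathbf{P} - \mathbf{S}_j\right) \right\|^2, \qquad \hat{\mathbf{u}}_j = \frac{\mathbf{P}_I^j - \mathbf{S}_j}{\left\|\mathbf{P}_I^j - \mathbf{S}_j\right\|}, \tag{5}$$

where $\mathbf{I}$ is the 3×3 identity matrix, and minimizes it by setting the partial derivatives with respect to xI, yI, and zI to zero. The resulting equations can be reorganized to form an overdetermined linear system, given generically by

$$\mathbf{X}\boldsymbol{\beta} = \mathbf{y}, \tag{6}$$

where $\mathbf{X}$ is a coefficient matrix assembled from the partial derivatives, $\boldsymbol{\beta} = (x,y,z)_I^{\mathsf{T}}$, and $\mathbf{y}$ is a vector of constants. The LLS estimate of $\boldsymbol{\beta}$ is

$$\hat{\boldsymbol{\beta}} = \left(\mathbf{X}^{\mathsf{T}}\mathbf{X}\right)^{-1}\mathbf{X}^{\mathsf{T}}\mathbf{y}, \tag{7}$$

giving a computationally simple, closed-form solution for the 3D position of the BB marker in image coordinates, $\hat{\mathbf{P}}_I$, calculated using MATLAB (R2007a, The MathWorks, Natick, MA).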
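One compact way to realize such an LLS estimate is to accumulate the normal equations directly: for each backprojected line with unit direction u_j through source S_j, the matrix (I − u_j u_j^T) removes the along-ray component, and summing these projectors gives a 3×3 system whose solution is the point of minimum summed squared distance to all lines. Because each projector is symmetric and idempotent, this 3×3 system is the normal-equation form of the stacked per-line overdetermined system. The sketch below is pure Python (solved with Cramer's rule) rather than the MATLAB code used in the study, and its names are hypothetical.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def closest_point_to_lines(sources, detector_points):
    """Least-squares point nearest to the lines S_j -> P_I^j.

    Solves sum_j (I - u_j u_j^T) P = sum_j (I - u_j u_j^T) S_j.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for S, D in zip(sources, detector_points):
        d = [D[k] - S[k] for k in range(3)]
        norm = math.sqrt(sum(c * c for c in d))
        u = [c / norm for c in d]
        for r in range(3):
            for c in range(3):
                m = (1.0 if r == c else 0.0) - u[r] * u[c]  # projector entry
                A[r][c] += m
                b[r] += m * S[c]
    det = _det3(A)
    if abs(det) < 1e-12:
        raise ValueError("backprojected lines are (nearly) parallel")
    solution = []
    for i in range(3):  # Cramer's rule: replace column i with b
        Ai = [row[:] for row in A]
        for r in range(3):
            Ai[r][i] = b[r]
        solution.append(_det3(Ai) / det)
    return tuple(solution)
```

Only the projections in which the BB was actually segmented need to be passed in, so gaps in the sinogram require no special handling.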

Registration of tracker and image point sets

The resulting tracker point set (Sec. 3A, defined in 3D world coordinates) and image point set (Sec. 3B, defined in 3D image coordinates) are related by an image-to-world registration. The registration in this study used the rigid, point-based method described by Horn,37 which uses unit quaternions to obtain a closed-form least-squares solution for the rotation, translation, and scale between two Cartesian coordinate systems. Previous studies have demonstrated accurate registration by this method, given a proper configuration of markers (e.g., noncollinear configurations).23, 38, 39 In the context of head and neck surgery, this method was shown to facilitate marker configurations offering low, uniform TRE throughout the FOV in CBCT-guided surgery.39 For example, good registration was demonstrated in a previous study involving eight markers placed in noncollinear configurations in which the configuration centroid was in close proximity to the surgical target.40 This approach is the basis for the number of markers and the geometric configurations used in this study.
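For reference, a pure-Python sketch of Horn's closed-form quaternion solution is given below, restricted to the rigid case (scale fixed to 1, whereas Horn's method can also estimate a scale factor). The optimal rotation is the unit quaternion given by the eigenvector of the largest eigenvalue of a 4×4 symmetric matrix built from the cross-covariance of the centered point sets; here that eigenvector is found with a small Jacobi eigenvalue routine. The function names, the use of Jacobi iteration, and the RMS residual helper `fre` are illustrative assumptions, not the study's implementation.

```python
import math


def _jacobi_eigen(a, sweeps=50):
    """Eigenvalues and eigenvectors (columns of v) of a small symmetric matrix."""
    n = len(a)
    a = [row[:] for row in a]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        if sum(a[p][q] ** 2 for p in range(n) for q in range(n) if p != q) < 1e-24:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # rotate columns p, q of A and V
                    a[k][p], a[k][q] = c * a[k][p] - s * a[k][q], s * a[k][p] + c * a[k][q]
                    v[k][p], v[k][q] = c * v[k][p] - s * v[k][q], s * v[k][p] + c * v[k][q]
                for k in range(n):  # rotate rows p, q of A
                    a[p][k], a[q][k] = c * a[p][k] - s * a[q][k], s * a[p][k] + c * a[q][k]
    return [a[i][i] for i in range(n)], v


def horn_rigid(src, dst):
    """R, t minimizing sum |R a_i + t - b_i|^2 via Horn's unit quaternion (scale = 1)."""
    n = len(src)
    ca = [sum(p[k] for p in src) / n for k in range(3)]
    cb = [sum(p[k] for p in dst) / n for k in range(3)]
    S = [[0.0] * 3 for _ in range(3)]  # S[x][y] = sum of a'_x * b'_y
    for a, b in zip(src, dst):
        for r in range(3):
            for c in range(3):
                S[r][c] += (a[r] - ca[r]) * (b[c] - cb[c])
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    N = [[sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
         [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
         [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
         [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz]]
    vals, vecs = _jacobi_eigen(N)
    k = max(range(4), key=lambda i: vals[i])
    q0, qx, qy, qz = (vecs[r][k] for r in range(4))  # unit quaternion
    R = [[q0*q0 + qx*qx - qy*qy - qz*qz, 2*(qx*qy - q0*qz), 2*(qx*qz + q0*qy)],
         [2*(qy*qx + q0*qz), q0*q0 - qx*qx + qy*qy - qz*qz, 2*(qy*qz - q0*qx)],
         [2*(qz*qx - q0*qy), 2*(qz*qy + q0*qx), q0*q0 - qx*qx - qy*qy + qz*qz]]
    t = [cb[r] - sum(R[r][c] * ca[c] for c in range(3)) for r in range(3)]
    return R, t


def fre(src, dst, R, t):
    """RMS fiducial registration error after mapping src with (R, t)."""
    sq = 0.0
    for a, b in zip(src, dst):
        mapped = [sum(R[r][c] * a[c] for c in range(3)) + t[r] for r in range(3)]
        sq += sum((m - bb) ** 2 for m, bb in zip(mapped, b))
    return math.sqrt(sq / len(src))
```

In the registration described here, `src` would hold one point set (e.g., the BB image point set) and `dst` the matched reflective-marker tracker point set; correspondences are assumed to be established beforehand.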

EXPERIMENTAL METHODS

Image-to-world registration techniques

Two methods of point-set localization were compared: the conventional manual technique and the automatic projection-based technique described above. In the manual technique, localization of divot markers defined the image and tracker point sets to be registered. The image point set was defined as the average of ten manual localizations of all eight divot markers in the reconstructed image; this point set is also referred to as the true locations of the divot markers. The true locations of the MM markers were determined in a similar manner. The tracker point sets were defined by the locations of the corresponding divot markers, manually localized ten times by the tracking system using a trackable pointer. Each of the tracker point sets was registered to the true point set.

The automatic registration technique colocalized tracker and image point sets as described in Secs. 3A, 3B. The tracker point set (reflective markers) was localized ten times, and each set was rigidly registered to the image point set (BB markers) as described above.

Marker configurations

Two experiments were performed to characterize the accuracy and precision of the automatic registration algorithm. The first validated the automatic technique in comparison to the conventional manual approach. Eight MM markers (denoted M1–M8) were placed on the surface of the head phantom at locations analogous to rigid anatomy, as shown in Fig. 4a, with divot markers placed immediately adjacent (within ∼1–2 cm). Divot markers were also affixed to the surface as target points (denoted T1–T4, also shown in Fig. 4) for measurement of TRE in both registration techniques. The true locations of the MM and divot markers for this configuration exhibited a mean standard deviation of 0.17 mm, owing to observer variability but still within one voxel.

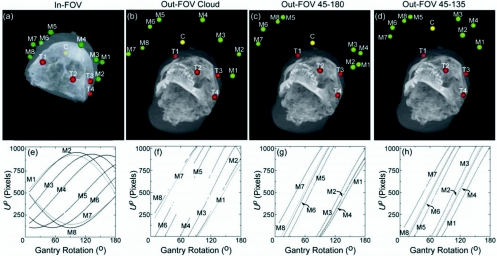

Figure 4.

Illustration of marker configurations, targets, and location of segmented BB centroids. Images (a)–(d) show maximum intensity projection renderings of CBCT images superimposed with the estimated locations of eight MM markers (M1–M8, green). The configuration centroid of the eight markers is also shown (C, yellow), as are the four target points (divot markers, T1–T4, red). In-FOV configurations involve MM markers affixed to the surface of the phantom, while Out-FOV configurations involve markers affixed to a curved acrylic plate [Fig. 1b] such that markers are present only in a subset of projections. The plots of (e)–(h) show the Up coordinate of each of the markers versus the C-arm rotation angle. These sinograms illustrate that in-FOV markers exist in all projections, while out-FOV markers enter and exit the projection FOV during gantry rotation, creating gaps in the sinogram. The smaller gaps are due to interference from bony anatomy. Each point in the Up coordinate also represents a line segment from the corresponding detector position to the source, demonstrating the angular increment Δθ used to estimate the 3D location of each BB marker over all j projections.

This configuration of MM markers, referred to as “in-FOV,” allowed all markers to be detected in every projection throughout the gantry orbit. That is, every marker was always within the projection FOV and was therefore within the 3D FOV of the CBCT image. This is verified in the sinogram of Fig. 4e, where the Up coordinate of each of the eight BB centroids is plotted versus gantry angle. Each point along the sinogram corresponds to a line backprojected from the BB’s detector position to the source, illustrating the number of rays and the angular increment (Δθ) used to estimate the 3D location of each BB marker.

The second experiment explored the possibility of placing MM markers in configurations that are potentially better suited to head and neck surgery. Three “out-FOV” configurations were considered in which MM markers were attached to the curved acrylic plate shown in Fig. 1b. The rationale for this approach was (i) to overcome the lack of rigid anatomy inferior to the cranium; (ii) to place the configuration centroid nearer to subcranial targets (thereby improving TRE),39 and (iii) to allow flexible marker configurations that would be surgically unobtrusive. Such configurations, in combination with automatic projection-based registration, could address the challenges to accurate guidance of head and neck surgery. Clinical implementations of this model could replace the curved plate by a cranial stereotactic frame or tools (marker “paddles”) snapped into position during scanning.

Three out-FOV MM marker configurations are illustrated in Figs. 4b, 4c, 4d. As in the previous experiment, divot markers were placed adjacent to each MM marker for comparison to the manual localization technique. While the out-FOV markers may not appear within the 3D FOV of the CBCT image, they do appear in a subset of the CBCT projection data [as seen in the discontinuous sinograms of Figs. 4f, 4g, 4h], such that their position in image coordinates can be estimated as described in Sec. 3B.
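The gaps in the out-FOV sinograms can be reproduced qualitatively with an idealized model. The sketch below assumes a perfect circular orbit about the z axis with a source-to-axis distance of 600 mm and a source-to-detector distance of 1200 mm (hypothetical values, chosen because a magnification of 2 with 1024 columns at 0.388 mm gives a ≈20 cm lateral FOV at isocenter, consistent with the FOV stated earlier). It ignores the gantry nonidealities handled by the geometric calibration, so it illustrates only where markers enter and leave the projection FOV; the function names are illustrative.

```python
import math


def ideal_projection_u(point, theta_deg, sad=600.0, sdd=1200.0,
                       pixel=0.388, u0=512.0):
    """Detector column U of a 3D point for an ideal circular orbit about z.

    The source sits at angle theta on a circle of radius `sad`; the detector is
    perpendicular to the central ray at distance `sdd` from the source.
    """
    th = math.radians(theta_deg)
    src = (sad * math.cos(th), sad * math.sin(th), 0.0)
    central = (-math.cos(th), -math.sin(th), 0.0)  # source -> isocenter
    lateral = (-math.sin(th), math.cos(th), 0.0)   # detector U direction
    rel = [p - s for p, s in zip(point, src)]
    depth = sum(r * c for r, c in zip(rel, central))
    u_mm = sum(r * l for r, l in zip(rel, lateral)) * sdd / depth
    return u0 + u_mm / pixel


def sinogram(point, angles, n_cols=1024, **geom):
    """(theta, U) pairs, with U = None where the marker falls off the detector."""
    out = []
    for th in angles:
        u = ideal_projection_u(point, th, **geom)
        out.append((th, u if 0.0 <= u < n_cols else None))
    return out
```

For example, a marker 139 mm from isocenter along y in this model falls off the detector at θ = 0° but projects onto the central column at θ = 90°, so its sinogram is discontinuous, whereas a marker at isocenter stays at U = 512 for every angle.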

To determine the true locations of the out-FOV markers, scans of the head phantom in the acrylic frame were acquired on a diagnostic CT scanner (Aquilion ONE, Toshiba, Tokyo, Japan) offering a 3D FOV sufficient to encompass the entire phantom, frame, and curved plate. The true locations were taken as the average of ten manual localizations in CT (0.15 mm average standard deviation) transformed into the CBCT image coordinate system (RMS transformation error of 0.51±0.05 mm) via rigid registration using divot markers affixed to the surface of the head phantom.

The three out-FOV configurations are referred to as “cloud,” “45-180,” and “45-135,” as shown in Figs. 4b, 4c, 4d. The cloud configuration placed the eight MM markers in an evenly scattered pattern on the curved plate, placing the configuration centroid fairly close to surgical targets [Fig. 4b] but presenting markers sporadically in the projection data [Fig. 4f]. The 45-180 configuration placed four MM markers at 45° and four at 180° (each with respect to the FPD start position), as shown in Fig. 4c. This configuration also placed the centroid fairly close to surgical targets; depending on the height of the curved plate, the markers at 45° appear in the early and middle projections, while the 180° markers appear in the middle and late projections [Fig. 4g]. The 45-135 configuration placed four MM markers each at 45° and 135°, placing the configuration centroid farther from (more anterior to) surgical targets, with markers presenting in the projection data as shown in the sinograms of Fig. 4h.

Target registration error

An important metric of the accuracy of image-to-world registration is the TRE. The TRE is the postregistration distance between homologous image and tracker points other than those in the registration point sets (e.g., the target divot markers T1–T4 illustrated in Figs. 4a, 4b, 4c, 4d) and may be measured experimentally23, 39 and/or computed theoretically.37, 40, 41, 42, 43, 44 Measurements of TRE were performed for each target marker (T1–T4) for both the manual and automatic registration techniques for all four marker configurations in Fig. 4. The true locations of the targets were defined as in Sec. 4A. The tracker location of each target was recorded by the tracking system (trackable pointer), and the TRE for the manual (or automatic) registration technique was measured as the distance between the true target location and the recorded tracker location after manual (or automatic) registration, with measurements repeated ten times for statistical analysis.
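The TRE measurement described above can be summarized in a few lines. This is a hypothetical sketch: it assumes each trial supplies a rigid tracker-to-image transform (R, t), with x_image = R x_tracker + t, together with one pointer-tip position of the target recorded by the tracker, and it reports the mean and sample standard deviation of the TRE over trials.

```python
import numpy as np


def tre_statistics(trials, true_target):
    """Mean and sample SD of the TRE for one target over repeated trials.

    trials      : iterable of (R, t, p), where (R, t) is the tracker-to-image
                  registration for that trial and p is the tracked target position
    true_target : (3,) true target location in image coordinates
    """
    true_target = np.asarray(true_target, dtype=float)
    dists = np.array([
        # Map the tracked point into the image frame and measure its distance to truth
        np.linalg.norm(np.asarray(R, float) @ np.asarray(p, float)
                       + np.asarray(t, float) - true_target)
        for R, t, p in trials
    ])
    return float(dists.mean()), float(dists.std(ddof=1))
```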

The mean TRE can also be calculated theoretically based on the marker configuration with respect to the location of the surgical target.41, 42 As described by Wiles et al.,42 the mean TRE is ideally given by

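$$\langle \mathrm{TRE}^2 \rangle \approx \frac{\langle \mathrm{FRE}^2 \rangle}{N-2}\left(1+\frac{1}{3}\sum_{k=1}^{3}\frac{d_k^2}{f_k^2}\right) \tag{8}$$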

where FRE is the mean fiducial registration error (i.e., the expected distance between the true MM marker location and that estimated by the registration), N is the number of MM markers, k indexes the three principal axes of the marker configuration, fk is the RMS distance of the markers from the kth principal axis (a measure of marker separation), and dk is the distance of the surgical target from the kth principal axis (a measure of the distance from the configuration centroid to the target). Calculations of TRE for the four MM marker configurations under consideration are shown in Fig. 5 as color maps within a sagittal slice. These calculations assume FRE fixed at a constant value of 0.3 mm, a reasonable value for eight markers given an FLE of 0.3 mm (the average result over all configurations, in basic agreement with Wiles and co-workers42, 43 and West et al.44), using Sibson’s estimate of FRE (Ref. 45)

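$$\langle \mathrm{FRE}^2 \rangle \approx \left(1-\frac{2}{N}\right)\langle \mathrm{FLE}^2 \rangle \tag{9}$$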

where N is the number of markers. Consistent with the notion that the configuration centroid should lie close to the surgical target, the TRE maps in Fig. 5 suggest the best TRE (e.g., in the nasopharynx or skull base) for the in-FOV configuration and the worst TRE for the out-FOV 45-135 configuration. Such theoretical calculations provide an understanding of how a configuration affects accuracy throughout the image. As shown in previous work,37 the measured TRE is expected to agree with the theoretical TRE in trend (e.g., best for in-FOV) but not necessarily in magnitude [typically larger due to experimental factors not contained in Eq. 8, such as camera precision and vibration, anisotropic FLE,42 and exclusion of tip position tracking error when using a reference tool43, 44].
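Equations 8 and 9 can be evaluated directly for a candidate marker layout, which is in principle how maps such as Fig. 5 are produced. The sketch below assumes the principal-axis definitions of fk and dk given with Eq. 8; the function names are hypothetical, and this is not the code used to generate Fig. 5 or Table 2.

```python
import numpy as np


def fre_from_fle(fle, n):
    """Eq. 9 (Sibson): expected RMS FRE for n fiducials with RMS localization error fle."""
    return fle * np.sqrt(1.0 - 2.0 / n)


def predicted_tre(markers, target, fre):
    """Eq. 8: expected RMS TRE at `target` for the given marker configuration.

    markers : (N, 3) marker positions (N >= 3, not collinear)
    target  : (3,) target position, same units as markers
    fre     : RMS fiducial registration error
    """
    markers = np.asarray(markers, dtype=float)
    n = len(markers)
    centroid = markers.mean(axis=0)
    X = markers - centroid
    # Principal axes of the configuration: eigenvectors of the scatter matrix
    _, axes = np.linalg.eigh(X.T @ X)
    r = np.asarray(target, dtype=float) - centroid
    ratio_sum = 0.0
    for k in range(3):
        a = axes[:, k]
        f2 = np.mean(np.sum(X**2, axis=1) - (X @ a) ** 2)  # f_k^2: mean sq. distance of markers from axis k
        d2 = r @ r - (r @ a) ** 2                           # d_k^2: sq. distance of target from axis k
        ratio_sum += d2 / f2
    return float(np.sqrt(fre**2 / (n - 2) * (1.0 + ratio_sum / 3.0)))
```

Evaluating `predicted_tre` over a grid of target points in a sagittal plane yields a TRE map. The predicted TRE is smallest at the configuration centroid, where it reduces to FRE/√(N−2), and grows with distance from the principal axes.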

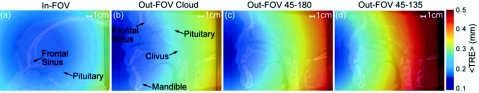

Figure 5.

Estimated target registration error computed from Eq. 8. (a)–(d) show color maps of the estimated TRE for the four marker configurations superimposed on CBCT sagittal slices. The in-FOV configuration mimics conventional marker placement normally targeting neurosurgical anatomy. The out-FOV configurations place markers in a manner that shifts the centroid toward subcranial anatomy more relevant to head and neck procedures. The basis for the number and configuration of markers is derived from previous studies (Refs. 38, 39).

RESULTS

Image point-set localization accuracy

To evaluate the automatic registration technique, the estimated 3D locations of the in-FOV markers were compared to their true locations. Figures 6a, 6b, 6c, 6d show the mean and standard deviation of the difference between true and estimated locations for the individual xI, yI, and zI coordinates, as well as the magnitude of the 3D localization error (d). The in-FOV marker localization has an average error of 0.39±0.11 mm, about half the voxel size of the CBCT reconstructions (0.8 mm). The localization error appears to be dominated by a systematic offset in the x coordinate (d∼xI∼0.4 mm, compared to yI and zI each <0.1 mm). The source of the x-coordinate error is under investigation and may be associated with detector lag,46 which would cause a slight lateral shift in BB centroid location in the projection domain.

Figure 6.

[(a)–(d)] Difference between the 3D marker locations estimated by the automatic technique and the true locations for each of the four marker configurations. The mean and standard deviation of the difference are shown for each coordinate (x,y,z)I and for the magnitude (d), averaged over all eight markers in each configuration.

The out-FOV configurations exhibit a higher localization error of 0.72±0.16 mm, as shown in Fig. 6. A systematic offset in the x coordinate similar to that noted above is observed and represents the largest contributor to localization error in most cases. Considering the three out-FOV configurations individually, the cloud configuration exhibited the poorest performance overall (0.86±0.16 mm error), followed by the 45-180 configuration (0.67±0.21 mm error) and the 45-135 configuration (0.63±0.11 mm error). The reduced accuracy of the out-FOV configurations has two causes. First, because the MM markers were not contained within the limited 3D FOV of the CBCT images, the true marker locations had to be transferred from diagnostic CT to CBCT by rigid registration, which carries an inherent RMS error of 0.51±0.05 mm (Sec. 4B).

Second, the MM markers in the out-FOV configurations were segmented in only ∼1/3 to 1/2 as many projections as in the in-FOV configuration, and over a reduced angular range. The difference in the number of projections (Nproj) and angular range (Δθ) over which the MM markers were segmented is evident in the sinograms of Figs. 4e, 4f, 4g, 4h and in Table 1. The sinogram in Fig. 4e verifies that all MM markers were visible in all projections for the in-FOV configuration, representing the best possible case (Nproj∼191 and Δθ∼172°). By comparison, the out-FOV configurations exhibit discontinuous sinograms (with large gaps resulting from markers exiting and re-entering the projection FOV and smaller gaps due to interference from bony anatomy), with correspondingly reduced Nproj (∼60–100) and Δθ (∼90°). The reduced angular range likely contributes to the reduced accuracy of the out-FOV configurations; that is, the accuracy with which a marker can be localized in 3D decreases as the tomographic angle over which its rays are backprojected is reduced. Further, the poorer performance of the cloud configuration may be due to the lower Nproj (∼60) for some markers, compared to Nproj∼100 for the 45-180 and 45-135 configurations.
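The effect of angular range can be illustrated with a generic least-squares ray intersection, in which each projection contributes a ray from the x-ray source through the detected BB centroid. This is a hedged sketch, not the backprojection procedure of Sec. 3B: it assumes an ideal circular orbit in the xy plane with a hypothetical source-to-isocenter distance of 600 mm, and it uses the smallest eigenvalue of the normal matrix A as a simple proxy for how well the 3D position is constrained.

```python
import numpy as np


def triangulate(sources, directions):
    """Least-squares 3D point closest to a bundle of rays.

    Each ray starts at an x-ray source position and points toward the detected
    BB centroid. Returns (point, A), where A is the 3x3 normal matrix.
    """
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for s, u in zip(np.asarray(sources, float), np.asarray(directions, float)):
        u = u / np.linalg.norm(u)
        P = np.eye(3) - np.outer(u, u)  # projects onto the plane normal to the ray
        A += P
        b += P @ s
    return np.linalg.solve(A, b), A


def circular_arc_rays(point, arc_deg, n_proj, sad=600.0):
    """Noise-free rays toward `point` from sources on a circular orbit (hypothetical geometry)."""
    thetas = np.deg2rad(np.linspace(0.0, arc_deg, n_proj))
    sources = np.stack([sad * np.cos(thetas), sad * np.sin(thetas), np.zeros_like(thetas)], axis=1)
    return sources, np.asarray(point, float) - sources
```

With noise-free rays the point is recovered exactly for either arc; what changes is the conditioning. For the same 191 rays, the smallest eigenvalue of A for a 90° arc is substantially lower than for a 172° arc, so per-projection centroid errors are amplified more along the least-constrained direction.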

Table 1.

The total number of projections in which markers were segmented and the corresponding total angle over which markers were observed for each marker (M1–M8) and configuration. In-FOV represents the best possible case for both metrics (i.e., markers visible in all projections across the full orbital arc): Nproj=total number of segmented projections; Δθ (°)=the change in angle between the first and last segmented projection.

| Marker configuration | Metric | M1 | M2 | M3 | M4 | M5 | M6 | M7 | M8 |
|---|---|---|---|---|---|---|---|---|---|
| In-FOV | Nproj | 191 | 191 | 191 | 191 | 189ᵃ | 191 | 191 | 191 |
| | Δθ (°) | 171.9 | 171.9 | 171.9 | 171.9 | 171.9 | 171.9 | 171.9 | 171.9 |
| Out-FOV cloud | Nproj | 96 | 69ᵃ | 99ᵃ | 54ᵃ | 59ᵃ | 60ᵃ | 99 | 60ᵃ |
| | Δθ (°) | 86.0 | 88.6 | 93.1 | 88.6 | 90.5 | 97.6 | 171.9 | 171.9 |
| Out-FOV 45-180 | Nproj | 98 | 103 | 108 | 93ᵃ | 97ᵃ | 102 | 96 | 106ᵃ |
| | Δθ (°) | 87.7 | 92.3 | 96.8 | 90.4 | 89.6 | 91.3 | 86.0 | 94.9 |
| Out-FOV 45-135 | Nproj | 108 | 100ᵃ | 99 | 102 | 94ᵃ | 103ᵃ | 99ᵃ | 105ᵃ |
| | Δθ (°) | 96.8 | 90.4 | 88.7 | 91.3 | 90.5 | 93.2 | 91.4 | 94.9 |

ᵃ Marker was within the projection FOV but not segmented in some projections, typically due to interference from overlying bony anatomy (e.g., two projections for M5 in the in-FOV configuration).
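The two metrics in Table 1 follow directly from per-projection segmentation flags. The sketch below assumes gantry angles listed in acquisition order and one Boolean flag per projection indicating whether the marker centroid was segmented; `coverage_metrics` is a hypothetical name.

```python
import numpy as np


def coverage_metrics(gantry_angles_deg, segmented):
    """Nproj and Δθ (Table 1 definitions) for one marker.

    gantry_angles_deg : (P,) gantry angle of each projection, in acquisition order
    segmented         : (P,) True where the marker centroid was segmented
    """
    angles = np.asarray(gantry_angles_deg, dtype=float)
    mask = np.asarray(segmented, dtype=bool)
    n_proj = int(mask.sum())
    if n_proj == 0:
        return 0, 0.0
    seen = angles[mask]
    # Δθ: angle between the first and last segmented projection (gaps included)
    return n_proj, float(abs(seen[-1] - seen[0]))
```

Because Δθ is measured from the first to the last segmented projection, a marker seen only near the start and end of the orbit can have an Nproj well below 191 but a Δθ spanning the full arc, as for markers M7 and M8 of the cloud configuration.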

The average computational time to segment the eight BB centroids from all acquired projections was 30 s and 22 s for the in-FOV and out-FOV arrangements, respectively (MATLAB R2007a, The MathWorks, Natick, MA, running on a 2.4 GHz dual-core PC, Dell Computers, Round Rock, TX). The difference in computing time is due to the number of search windows: the in-FOV configuration used 8 windows (one per MM marker) throughout the segmentation process, whereas the out-FOV configurations used 0–8 windows per projection, depending on how many markers fell within the projection FOV.
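One way to restrict segmentation to markers that actually fall on the detector is to project each marker's current 3D estimate into each view and open a search window only when the projection lands inside the image. This sketch assumes a 3×4 projection matrix per view from the geometric calibration (mapping homogeneous 3D coordinates to homogeneous pixel coordinates) and a hypothetical fixed window half-width; it illustrates why the out-FOV configurations need 0–8 windows per projection and is not a description of the actual implementation.

```python
import numpy as np


def active_search_windows(P, markers_3d, det_shape, half_width=10):
    """Search windows for markers whose predicted projection lies on the detector.

    P          : (3, 4) projection matrix for one view (geometric calibration)
    markers_3d : (K, 3) current 3D estimates of the marker positions
    det_shape  : (rows, cols) of the projection image, in pixels
    half_width : hypothetical half-size of each square search window, in pixels
    Returns a list of (marker_index, (row0, row1, col0, col1)) with half-open bounds.
    """
    pts = np.asarray(markers_3d, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    uvw = homog @ np.asarray(P, dtype=float).T
    cols_px = uvw[:, 0] / uvw[:, 2]  # predicted column (u) coordinate
    rows_px = uvw[:, 1] / uvw[:, 2]  # predicted row (v) coordinate
    n_rows, n_cols = det_shape
    windows = []
    for k, (u, v) in enumerate(zip(cols_px, rows_px)):
        if not (0 <= u < n_cols and 0 <= v < n_rows):
            continue  # marker outside the projection FOV in this view
        r, c = int(round(v)), int(round(u))
        windows.append((k, (max(r - half_width, 0), min(r + half_width + 1, n_rows),
                            max(c - half_width, 0), min(c + half_width + 1, n_cols))))
    return windows
```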

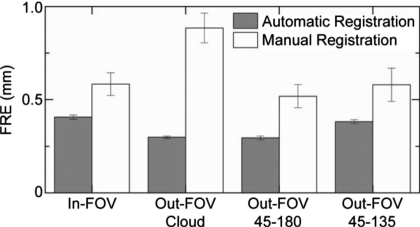

Automatic versus manual registration

The FRE and TRE were measured to evaluate the accuracy of both the automatic and manual registration techniques. As mentioned in Sec. 4B, the FRE was measured as the difference in 3D location between the true and registered point sets, with the mean and standard deviation over ten trials plotted in Fig. 7 for each of the four marker configurations. The FRE for the manual registration technique (∼0.5–0.8 mm) agrees with previous studies.39 The automatic registration technique exhibits a consistently lower FRE (∼0.3–0.4 mm) and greater reproducibility (lower standard deviation). This indicates more accurate fiducial alignment for the automatic technique, a somewhat expected result: FRE depends only on the fiducial localization error and the number of markers [Eq. 9],45 so for the same eight markers a lower FRE reflects a lower FLE.

Figure 7.

Fiducial registration error of the automatic and manual registration techniques for the four marker configurations. Bars represent the mean and error bars the standard deviation over all registrations. The automatic registration technique appears to provide smaller, more reproducible FRE in each case.

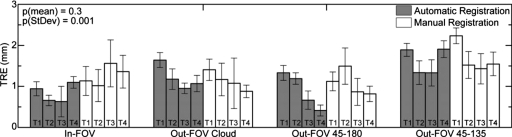

The TRE was measured for both registration techniques for each of the four marker configurations using the four target divot markers [Figs. 4a, 4b, 4c, 4d, red dots labeled T1–T4], as described in Sec. 4C. As shown in Fig. 8, the TRE for the automatic technique was consistently at or below that of the conventional manual technique, with an overall mean of 1.14±0.20 mm for the former compared to 1.29±0.34 mm for the latter. The lower mean TRE of the automatic technique is not statistically significant (p=0.3, two-tailed heteroscedastic Student’s t-test computed on the average TRE of each target point, four points per configuration), although its lower standard deviation suggests greater reproducibility. The results are in basic agreement with the TRE reported previously for an automatic registration technique23 (2.11±0.13 mm) that localized markers directly in the CBCT image (requiring markers to be contained within the 3D FOV). The lower TRE measured in the current work is likely associated with the intrinsically higher resolution of the projection data (0.2 mm detector pixel pitch) compared to the 3D reconstructions (0.8 mm voxel size), the position of the tracking system,47 and variations in trackable tools.43, 44
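The heteroscedastic (Welch) two-tailed t-test used above can be reproduced with the Python standard library alone. This sketch is illustrative: the per-target average TREs are not listed in the text, so any inputs must be supplied by the reader. Where SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same test, and `equal_var=True` gives the homoscedastic version used later in this section.

```python
import math
from statistics import mean, variance


def t_two_tailed_p(t, df, n_steps=20000):
    """Two-tailed p-value of Student's t with `df` degrees of freedom.

    Integrates the t density from 0 to |t| with Simpson's rule
    (n_steps must be even).
    """
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))

    def pdf(x):
        return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

    x_max = abs(t)
    h = x_max / n_steps
    total = pdf(0.0) + pdf(x_max)
    for i in range(1, n_steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    area = total * h / 3.0  # P(0 <= T <= |t|)
    return max(0.0, 1.0 - 2.0 * area)


def welch_t_test(a, b):
    """Welch's unequal-variance t-test; returns (t, df, two-tailed p)."""
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite effective degrees of freedom
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df, t_two_tailed_p(t, df)
```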

Figure 8.

TRE measurements for all target points (T1–T4; as labeled in Fig. 4) and marker configurations. The bars represent the mean and the error bars the standard deviation. The automatic and manual registration methods exhibit overall mean TRE of 1.14 and 1.29 mm, respectively, with overall average standard deviations of 0.20 and 0.34 mm, respectively.

The TRE was also calculated using Eq. 8 for each of the marker configurations in Figs. 4a, 4b, 4c, 4d. As illustrated in Fig. 5, the in-FOV configuration is expected to yield the best (lowest) TRE, owing primarily to the configuration centroid lying deeper within the head, followed by the out-FOV cloud, 45-180, and 45-135 configurations. To confirm these expectations, the RMS marker separation [fk in Eq. 8] and the centroid-to-target distances [dk in Eq. 8] were calculated for each arrangement, as summarized in Table 2. In terms of marker separation (fk), the cloud and 45-180 configurations give the best dispersal of fiducials. In terms of centroid-to-target distance (dk), the in-FOV configuration places the centroid in best proximity to the targets (as expected), and the 45-135 configuration is the worst (centroid most anterior to the targets). Overall, the calculations suggest that the TRE increases (worsens) in the rank order of Figs. 5a, 5b, 5c, 5d, i.e., best for the in-FOV configuration, followed by out-FOV cloud, 45-180, and 45-135.

Table 2.

Estimated TRE and associated parameters [Eq. 8] for each target point and marker configuration. The centroid-to-target distance, dk, is smallest on average for the in-FOV configuration and largest for out-FOV 45-135, whereas the RMS marker separation, fk, is largest for the out-FOV 45-180 and cloud configurations; both parameters are slightly more favorable for out-FOV 45-180 than for cloud.

| Configuration | RMS marker separation, fk (mm) | dk, T1 (mm) | dk, T2 (mm) | dk, T3 (mm) | dk, T4 (mm) | TRE, T1 (mm)ᵃ | TRE, T2 (mm)ᵃ | TRE, T3 (mm)ᵃ | TRE, T4 (mm)ᵃ |
|---|---|---|---|---|---|---|---|---|---|
| In-FOV | 76.6 | 72.0 | 83.4 | 81.7 | 106.6 | 0.19 | 0.21 | 0.18 | 0.23 |
| Out-FOV cloud | 101.2 | 66.3 | 70.4 | 125.0 | 144.1 | 0.16 | 0.16 | 0.23 | 0.25 |
| Out-FOV 45-180 | 103.9 | 72.8 | 60.6 | 114.5 | 133.8 | 0.18 | 0.17 | 0.23 | 0.26 |
| Out-FOV 45-135 | 82.5 | 86.9 | 93.0 | 142.8 | 166.0 | 0.23 | 0.24 | 0.33 | 0.38 |

ᵃ Computed from Eq. 8 with FRE = 0.3 mm.

Comparing these calculations to Fig. 8 for the automatic registration technique, the in-FOV marker configuration exhibits a slightly lower TRE (0.83±0.20 mm) than the out-FOV configurations (1.24±0.20 mm), but the difference is not statistically significant (p=0.79, two-tailed homoscedastic Student’s t-test computed on the average TRE of each target point, four points per configuration). The out-FOV configurations performed nearly equivalently, with the 45-180 configuration suggesting a slightly lower TRE (0.90±0.17 mm) that was not statistically significant (p=0.28) relative to the cloud and 45-135 configurations. The overall discrepancy between the magnitude of the theoretically calculated TRE (Table 2) and the measured TRE (Fig. 8) is expected and attributable to factors not included in Eq. 8, such as camera precision and vibration. The rank order of TRE suggested by theory is broadly consistent with measurement, but the differences are at the level of experimental error. The results are encouraging overall because (i) the automatic technique demonstrated equivalent or superior performance to the manual technique and (ii) the out-FOV configurations exhibited TRE similar to that of the in-FOV configuration, supporting the development of novel auxiliary marker tools for head and neck surgery.

DISCUSSION AND CONCLUSIONS

The automatic registration technique exhibits accuracy comparable to that of the conventional manual technique and demonstrates slightly improved reproducibility. Although the time associated with each technique was not rigorously evaluated, the automatic technique is almost certainly less time-consuming: the conventional procedure takes minutes to complete, compared to seconds for the automatic procedure. In addition, much of the process (e.g., segmentation) can be conducted in parallel with CBCT image reconstruction, allowing the registration to be updated automatically with each CBCT scan. It is foreseeable that the registration process could operate seamlessly without user intervention, allowing accurate surgical navigation in the context of up-to-date intraoperative images while reducing the workflow burden of image-to-world registration.

In estimating the 3D coordinates of MM markers from 2D projections, greater Nproj and Δθ were found to provide more accurate estimation of the 3D marker location. This is evident in the comparison of the in-FOV and out-FOV configurations, as well as among the out-FOV configurations. Factors affecting Nproj and Δθ include the ability to segment markers without interference (e.g., from dense metal or bony anatomy) and the distance from isocenter to a given MM marker.

Future improvements in the automatic technique could include increasing the robustness of the segmentation algorithm, e.g., by altering its intensity and pattern filters. The current filter uses intensity gradients to identify regions of high intensity and then matches them to a uniform-intensity circular pattern of varying radius. A more robust filter could accurately determine the size of the marker in the projection domain and employ an intensity gradient threshold appropriate to the overlying anatomy. The 3D location of the marker estimated during segmentation could be used to determine the approximate diameter of the marker in each projection (rather than the variable 2–5 pixel radius described above). Using the estimated diameter, a pattern with varying intensity (edges slightly lower in intensity than the center) could be used for segmentation. Further, a more adaptive intensity gradient threshold could be determined by sampling the background (edges of the search window) to determine what surrounds and overlays the marker. For example, markers overlain by bony anatomy would have a lower intensity gradient threshold than markers surrounded by soft tissue or air.
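The proposed refinements can be prototyped on a single search window. The following is a hypothetical NumPy sketch, not the segmentation code used in this work. For simplicity it thresholds intensity rather than intensity gradient, sets the threshold adaptively from the window border (assumed here as the border median plus k standard deviations, with k = 3), scores uniform-intensity disk templates of several radii by normalized cross-correlation, and returns the intensity-weighted centroid of supra-threshold pixels around the best match. `predicted_radius_px` shows how a 3D position estimate could fix the expected radius from the geometric magnification; its inputs are assumptions.

```python
import numpy as np


def predicted_radius_px(bb_diameter_mm, sdd_mm, sod_mm, pixel_pitch_mm):
    """Expected BB radius in pixels from magnification M = SDD / SOD (SOD: source-to-marker)."""
    return 0.5 * bb_diameter_mm * (sdd_mm / sod_mm) / pixel_pitch_mm


def disk_template(radius):
    """Zero-mean, unit-norm template of a uniform-intensity disk (radius in pixels)."""
    r = int(np.ceil(radius))
    yy, xx = np.mgrid[-r:r + 1, -r:r + 1]
    tpl = (xx**2 + yy**2 <= radius**2).astype(float)
    tpl -= tpl.mean()
    return tpl / np.linalg.norm(tpl)


def locate_marker(window, radii=(2, 3, 4, 5), k=3.0):
    """Return the (row, col) centroid of a bright disk-like blob, or None."""
    w = np.asarray(window, dtype=float)
    # Adaptive threshold from the window border (what surrounds the marker)
    border = np.concatenate([w[0], w[-1], w[1:-1, 0], w[1:-1, -1]])
    threshold = np.median(border) + k * border.std()

    best_score, best = -np.inf, None
    for radius in radii:
        tpl = disk_template(radius)
        h = tpl.shape[0] // 2
        for i in range(h, w.shape[0] - h):
            for j in range(h, w.shape[1] - h):
                patch = w[i - h:i + h + 1, j - h:j + h + 1]
                # Normalized cross-correlation (template is already zero-mean, unit-norm)
                score = np.sum(patch * tpl) / (np.linalg.norm(patch - patch.mean()) + 1e-12)
                if score > best_score:
                    best_score, best = score, (i, j, h)
    if best is None:
        return None

    i, j, h = best
    m = h + 2  # slightly larger neighborhood so a 1-pixel peak offset does not clip the blob
    r0, r1 = max(i - m, 0), min(i + m + 1, w.shape[0])
    c0, c1 = max(j - m, 0), min(j + m + 1, w.shape[1])
    weights = np.clip(w[r0:r1, c0:c1] - threshold, 0.0, None)
    if weights.sum() == 0:
        return None
    yy, xx = np.mgrid[r0:r1, c0:c1]
    return float((weights * yy).sum() / weights.sum()), float((weights * xx).sum() / weights.sum())
```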

Given that the 2D projection FOV of the C-arm is fixed, decreasing the isocenter-to-marker distance will increase Nproj and Δθ and thereby improve localization accuracy. The isocenter-to-marker distance in this study was selected arbitrarily; in practice, markers could be positioned just above the surface of the surgical site. This could be facilitated by a reference tool that allows the markers to be removed from the surgical field after imaging (so that they are in position only during image acquisition and do not interfere with surgical access). A variety of tools could be developed to position the markers reproducibly in a manner that minimizes both the isocenter-to-marker distance and the marker-to-target distance, improving both localization accuracy and TRE. For example, such a tool could attach rigidly to the cranium or a stereotactic frame and reproducibly suspend markers above the targeted head and neck anatomy. This could remove the usual restrictions associated with affixing markers to rigid anatomy.
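The geometric argument can be checked with a simplified 2D fan-beam model in which a marker counts as visible in a projection when its detector coordinate lies within the detector half-width. All geometry values here (600 mm source-to-isocenter distance, 1200 mm source-to-detector distance, 150 mm detector half-width, 191 projections over 172°) are hypothetical choices for illustration, not the prototype's calibrated parameters.

```python
import numpy as np


def projection_coverage(marker_xy, arc_deg=172.0, n_proj=191,
                        sad=600.0, sdd=1200.0, half_width=150.0):
    """Nproj and Δθ for a marker in the rotation plane (simplified 2D fan beam).

    marker_xy  : (x, y) marker position relative to isocenter, in mm
    sad, sdd   : hypothetical source-to-isocenter and source-to-detector distances
    half_width : hypothetical half-width of the detector, in mm
    """
    p = np.asarray(marker_xy, dtype=float)
    thetas = np.deg2rad(np.linspace(0.0, arc_deg, n_proj))
    visible = np.zeros(n_proj, dtype=bool)
    for i, th in enumerate(thetas):
        src = sad * np.array([np.cos(th), np.sin(th)])
        central = -src / sad                           # unit vector, source -> isocenter
        lateral = np.array([-np.sin(th), np.cos(th)])  # in-plane detector axis
        v = p - src
        depth = v @ central
        if depth <= 0:
            continue                                    # marker behind the source
        u = sdd * (v @ lateral) / depth                 # marker position on the detector
        visible[i] = abs(u) <= half_width
    n = int(visible.sum())
    seen = np.rad2deg(thetas[visible])
    return n, (float(seen[-1] - seen[0]) if n else 0.0)
```

In this model a marker 60 mm from isocenter stays in the projection FOV for the whole orbit, whereas a marker 120 mm from isocenter along the same direction is visible only near the start and end of the arc, reproducing the qualitative trend in Table 1.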

Another factor affecting the accuracy of 3D marker localization is detector lag. This well-known phenomenon results primarily from charge trapping and release in the FPD pixel components. Analogous to the “comet” artifact in CBCT reconstructions, the localization error associated with lag results from spatial blurring in the direction opposite to the gantry rotation. Since the FPD path is limited to the upper hemicircle of the gantry rotation, lag affects localization only in xI: for the orthogonal component, yI, the lag-induced shifts from 0° to 90° and from 90° to 180° are opposite in sign and cancel, and the zI component is orthogonal to the gantry rotation and therefore unaffected. A variety of lag correction techniques48 are applicable and could reduce the systematic error in xI.
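The cancellation argument can be checked numerically with a toy model. The sketch assumes that the lag-induced shift in each projection has constant magnitude and points opposite to the instantaneous direction of source motion on a counterclockwise 180° arc through the upper hemicircle, and it takes x as the in-plane axis perpendicular to the arc's bisector and y as the bisector (a hypothetical mapping onto xI and yI). It ignores the backprojection geometry entirely, so it shows only the symmetry, not the size of the bias.

```python
import numpy as np


def mean_lag_shift(arc_deg=180.0, n_proj=181, magnitude=1.0):
    """Mean in-plane lag displacement over a circular arc (toy model).

    The source moves counterclockwise along the tangent (-sin θ, cos θ);
    lag is modeled as a constant-magnitude shift opposite to that motion.
    Returns the mean (x, y) shift over all projections.
    """
    thetas = np.deg2rad(np.linspace(0.0, arc_deg, n_proj))
    travel = np.stack([-np.sin(thetas), np.cos(thetas)], axis=1)
    shifts = -magnitude * travel  # lag trails the rotation
    return shifts.mean(axis=0)
```

The y component averages to zero because the shifts at θ and 180° − θ cancel, while the x components add, leaving a net offset of roughly 2/π times the per-projection shift.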

An automatic image-to-world registration method has been developed that demonstrates accuracy and reproducibility consistently equal to or better than those of the conventional manual technique. The method identifies fiducial markers directly in 2D x-ray projections, offering the potential for novel marker configurations designed for head and neck surgery that minimize TRE for subcranial targets (sinus, oro-, and nasopharyngeal) and satisfy clinical constraints (e.g., lack of rigid anatomy and surgically unobtrusive markers). The method removes the requirement that markers be within the 3D FOV (Ref. 23), and the results provide an initial investigation of marker configurations that could improve performance and facilitate clinical translation in head and neck surgery. Clinical application should consider marker configurations consistent with the surgical setup and the line-of-sight limitations of the optical tracking system. Similarly, a reference marker could be rigidly affixed to the patient to allow the optical tracker to be freely repositioned in the room to maintain line of sight. As future work, one could consider an automatic registration approach analogous to that reported here based on electromagnetic (EM) tracking (which is free from line-of-sight limitations), using MM EM markers/transponders that are “visible” to both the C-arm x-ray system and the EM tracker. The automatic registration technique could eliminate the time required for manual registration and provide registered 3D images for visualization and guidance within ∼15–20 s of image acquisition. The automatic registration method using the prototype C-arm is undergoing integration in ongoing clinical trials of intraoperative CBCT-guided head and neck surgery.

ACKNOWLEDGMENTS

The authors extend sincere thanks to G. Bootsma and S. Nithiananthan (University of Toronto) for help with the 3D tracking and visualization systems and Dr. H. Chan (Ontario Cancer Institute) for help with the C-arm system. The work was supported in part by the Guided Therapeutics (GTx) program at University Health Network (Toronto ON), an NSERC graduate scholarship (CGS Master’s Award), and the National Institutes of Health (NIH Grant No. R01-CA-127944).

References

- Sindwani R. and Bucholz R. D., “The next generation of navigational technology,” Otolaryngol. Clin. North Am. 38(3), 551–562 (2005). 10.1016/j.otc.2004.11.003

- Galloway R. L., “The process and development of image-guided procedures,” Annu. Rev. Biomed. Eng. 3, 83–108 (2001). 10.1146/annurev.bioeng.3.1.83

- Yaniv Z. and Cleary K., “Image-guided procedures: A review,” Computer Aided Intervention and Medical Robotics, Georgetown University (Imaging Science and Information Systems Center, Washington, DC, 2006), Paper No. TR 2006-3.

- Labadie R. F., Davis B. M., and Fitzpatrick J. M., “Image-guided surgery: What is accuracy?,” Curr. Opin. Otolaryngol. Head Neck Surg. 13, 27–31 (2005). 10.1097/00020840-200502000-00008

- Palmer J. N. and Kennedy D. W., “Historical perspective on image-guided sinus surgery,” Otolaryngol. Clin. North Am. 38(3), 419–428 (2005). 10.1016/j.otc.2004.10.023

- Metson R., Cosenza M., Gliklich R. E., and Montgomery W. W., “The role of image-guidance systems for head and neck surgery,” Arch. Otolaryngol. Head Neck Surg. 125(10), 1100–1104 (1999).

- Jaffray D. A., Siewerdsen J. H., Edmundson G. K., Wong J. W., and Martinez A., “Flat-panel cone-beam CT on a mobile isocentric C-arm for image-guided brachytherapy,” Proc. SPIE 4682, 209–217 (2002). 10.1117/12.465561

- Rafferty M. A., Siewerdsen J. H., Chan Y., Moseley D. J., Daly M. J., Jaffray D. A., and Irish J. C., “Investigation of C-arm cone-beam CT-guided surgery of the frontal recess,” Laryngoscope 115(12), 2138–2143 (2005). 10.1097/01.mlg.0000180759.52082.45

- Rafferty M. A., Siewerdsen J. H., Chan Y., Daly M. J., Moseley D. J., Jaffray D. A., and Irish J. C., “Intra-operative cone-beam CT for guidance of temporal bone surgery,” Otolaryngol.-Head Neck Surg. 134(5), 801–808 (2006). 10.1016/j.otohns.2005.12.007

- Chan Y., Siewerdsen J. H., Rafferty M. A., Moseley D. J., Jaffray D. A., and Irish J. C., “Cone-beam computed tomography of a mobile C-arm: Novel intraoperative imaging technology for guidance of head and neck surgery,” J. Otolaryngol. 37(1), 81–90 (2008).

- Sindwani R. and Metson R., “Image-guided frontal sinus surgery,” Otolaryngol. Clin. North Am. 38(3), 461–471 (2005). 10.1016/j.otc.2004.10.026

- Khoury A., Siewerdsen J. H., Whyne C. M., Daly M. J., Kreder H. J., Moseley D. J., and Jaffray D. A., “Intraoperative cone-beam CT for image guided tibial plateau fracture reduction,” Comput. Aided Surg. 12(4), 195–207 (2007). 10.1080/10929080701526872

- Khoury A., Whyne C. M., Daly M. J., Moseley D. J., Bootsma G., Skrinskas T., Siewerdsen J. H., and Jaffray D. A., “Intraoperative cone-beam CT for correction of periaxial malrotation of the femoral shaft: A surface-matching approach,” Med. Phys. 34(4), 1380–1387 (2007). 10.1118/1.2710330

- Siewerdsen J. H., Chan Y., Rafferty M. A., Moseley D. J., Jaffray D. A., and Irish J. C., “Cone-beam CT with a flat-panel detector on a mobile C-arm: Pre-clinical investigation in image-guided surgery of the head and neck,” Proc. SPIE 5744, 789–797 (2005). 10.1117/12.595690

- Siewerdsen J. H., Jaffray D. A., Edmundson G. K., Sanders W. P., Wong J. W., and Martinez A., “Flat-panel cone-beam CT: A novel imaging technology for image-guided procedures,” Proc. SPIE 4319, 435–444 (2001). 10.1117/12.428085

- Siewerdsen J. H., Moseley D. J., Burch S., Bisland S. K., Bogaards A., Wilson B. C., and Jaffray D. A., “Volume CT with a flat-panel detector on a mobile, isocentric C-arm: Pre-clinical investigation in guidance of minimally invasive surgery,” Med. Phys. 32(1), 241–254 (2005). 10.1118/1.1836331

- Daly M. J., Siewerdsen J. H., Moseley D. J., Jaffray D. A., and Irish J. C., “Intraoperative cone-beam CT for guidance of head and neck surgery: Assessment of dose and image quality using a C-arm prototype,” Med. Phys. 33(10), 3767–3780 (2006). 10.1118/1.2349687

- Schmidgunst C., Ritter D., and Lang E., “Calibration model of a dual gain flat panel detector for 2D and 3D x-ray imaging,” Med. Phys. 34(9), 3649–3664 (2007). 10.1118/1.2760024

- Nithiananthan S., Brock K. K., Irish J. C., and Siewerdsen J. H., “Deformable registration for intra-operative cone-beam CT guidance of head and neck surgery,” IEEE Proceedings of Engineering in Medicine and Biology Society, 1, 2008, pp. 3634–3637.

- Grimson W. L., Ettinger G. J., White S. J., Lozano-Perez T., Wells W. M., and Kikinis R., “An automatic registration method for frameless stereotaxy, image guided surgery, and enhanced reality visualization,” IEEE Trans. Med. Imaging 15(2), 126–140 (1996). 10.1109/42.491415

- Klein T., Traub J., Hautmann H., Ahmandian A., and Navab N., “Fiducial-free registration procedure for navigated bronchoscopy,” Med. Imaging: Comp. Assisted Interventions 10, 475–482 (2007).

- Knott P. D., Maurer C. R., Gallivan R., Roh H. J., and Citardi M. J., “The impact of fiducial distribution on headset based registration in image-guided sinus surgery,” Otolaryngol.-Head Neck Surg. 131(5), 666–672 (2004). 10.1016/j.otohns.2004.03.045

- Bootsma G., Siewerdsen J. H., Daly M. J., and Jaffray D. A., “Initial investigation of an automatic registration algorithm for surgical navigation,” IEEE Proceedings of Engineering in Medicine and Biology Society, 1, 2008. (unpublished), pp. 3638–3642.

- Papavasileiou P., Flux G. D., Flower M. A., and Guy M. J., “Automated CT marker segmentation for image registration in radionuclide therapy,” Phys. Med. Biol. 46, N269–N279 (2001). 10.1088/0031-9155/46/12/402

- Mao W., Wiersma R. D., and Xing L., “Fast internal marker tracking algorithm for onboard MV and kV imaging systems,” Med. Phys. 35(5), 1942–1949 (2008). 10.1118/1.2905225

- Mitschke M. and Navab N., “Optimal configuration for dynamic calibration of projection geometry of x-ray C-arm systems,” in IEEE Workshop on Mathematical Methods in Biomedical Image Analysis, 2000, pp. 204–209.

- Tubic D., Zaccarin A., Pouliot J., and Beaulieu L., “Automated seed detection and three-dimensional reconstruction. I. Seed localization from fluoroscopic images or radiographs,” Med. Phys. 28(11), 2265–2271 (2001). 10.1118/1.1414308

- Whitehead G., Chang Z., and Ji J., “An automated intensity-weighted brachytherapy seed localization algorithm,” Med. Phys. 35(3), 818–827 (2008). 10.1118/1.2836418

- Tubic D. and Beaulieu L., “Sliding slice: A novel approach for high accuracy and automatic 3D localization of seed from CT scans,” Med. Phys. 32(1), 163–174 (2005). 10.1118/1.1833131

- Su Y., Davis B. J., Herman M. G., and Robb R. A., “Prostate brachytherapy seed localization by analysis of multiple projections: Identifying and addressing the seed overlap problem,” Med. Phys. 31(5), 1277–1287 (2004). 10.1118/1.1707740

- Cho Y. B., Moseley D. J., Siewerdsen J. H., and Jaffray D. A., “Accurate technique for complete geometric calibration of cone-beam computed tomography systems,” Med. Phys. 32(4), 968–983 (2005). 10.1118/1.1869652

- Daly M. J., Siewerdsen J. H., Cho Y. B., Jaffray D. A., and Irish J. C., “Geometric calibration of a mobile c-arm for intraoperative cone beam CT,” Med. Phys. 35(5), 2124–2136 (2008). 10.1118/1.2907563

- Feldkamp L. A., Davis L. C., and Kress J. W., “Practical cone-beam algorithm,” J. Opt. Soc. Am. A 1(6), 612–619 (1984). 10.1364/JOSAA.1.000612

- Siewerdsen J. H., Daly M. J., Bachar G., Moseley D. J., Bootsma G., Brock K. K., Ansell S., Wilson G. A., Chharbra S., Jaffray D. A., and Irish J. C., “Multi-mode C-arm fluoroscopy, tomosynthesis, and cone-beam CT for image-guided interventions: From proof of principle to patient protocols,” Proc. SPIE 6510, 65101A-1–65101-11 (2007). 10.1117/12.713642

- Roos P. G., Colbeth R. E., Mollov I., Munro P., Pavkovich J., Seppi E. J., Shapiro E. G., Tognina C. A., Virshup G. F., Yu J. M., Zentai G., Kaissl W., Matsinos E., Richters J., and Riem H., “Multiple gain ranging readout method to extend the dynamic range of amorphous silicon flat panel imagers,” Proc. SPIE 5368, 139–149 (2004). 10.1117/12.535471

- Chiarot C. B., Siewerdsen J. H., Haycocks T., Moseley D. J., and Jaffray D. A., “An innovative phantom for quantitative and qualitative investigation of advanced x-ray imaging technologies,” Phys. Med. Biol. 50(21), N287–N297 (2005). 10.1088/0031-9155/50/21/N01

- Horn B. K., “Closed-form solution of absolute orientation using unit quaternions,” J. Opt. Soc. Am. A 4(4), 629–642 (1987). 10.1364/JOSAA.4.000629

- West J. B., Fitzpatrick J. M., Toms S. A., Maurer C. R., and Maciunas R. J., “Fiducial point placement and the accuracy of point-based, rigid body registration,” Neurosurgery 48(4), 810–817 (2001). 10.1097/00006123-200104000-00023

- Hamming N. M., Daly M. J., Irish J. C., and Siewerdsen J. H., “Effect of fiducial configuration on target registration error in intraoperative cone-beam CT guidance of head and neck surgery,” IEEE Proceedings of Engineering in Medicine and Biology Society, 2008, pp. 3643–3648.

- Fitzpatrick J. M. and West J. B., “The distribution of target registration error in rigid-body point-based registration,” IEEE Trans. Med. Imaging 20(9), 917–927 (2001). 10.1109/42.952729

- Fitzpatrick J. M., West J. B., and Maurer C. R., “Predicting error in rigid-body point-based registration,” IEEE Trans. Med. Imaging 17(5), 694–702 (1998). 10.1109/42.736021

- Wiles A. D., Likholyot A., Frantz D. D., and Peters T. M., “A statistical model for point-based target registration error with anisotropic fiducial localizer error,” IEEE Trans. Med. Imaging 27(3), 378–390 (2008). 10.1109/TMI.2007.908124

- Wiles A. D. and Peters T. M., “Improved statistical TRE model when using a reference frame,” Medical Imaging: Computer Assisted Interventions, 2007, Vol. 10, pp. 442–449.

- West J. B. and Maurer C. R., “Designing optically tracked instruments for image-guided surgery,” IEEE Trans. Med. Imaging 23(5), 533–545 (2004). 10.1109/TMI.2004.825614

- Sibson R., “Studies in the robustness of multidimensional scaling: Perturbational analysis of classical scaling,” J. R. Stat. Soc. Ser. B (Methodol.) 41(2), 217–229 (1979).

- Siewerdsen J. H. and Jaffray D. A., “Cone-beam computed tomography with a flat-panel imager: Effects of image lag,” Med. Phys. 26(12), 2635–2647 (1999). 10.1118/1.598803

- Khadem R., Yeh C. C., Tehrani M. S., Bax M. R., Johnson J. A., Welch J. N., Wilkinson E. P., and Shahidi R., “Comparative tracking error analysis of five different optical tracking systems,” Comput. Aided Surg. 5, 98–107 (2000). 10.3109/10929080009148876

- Mail N., O’Brien P., and Pang G., “Lag correction model and ghosting analysis for an indirect-conversion flat-panel imager,” J. Appl. Clin. Med. Phys. 8(3), 137–146 (2007).