Abstract

Sensorimotor-based theories of semantic memory contend that semantic information about an object is represented in the neural substrate invoked when we perceive or interact with it. We used fMRI adaptation to test this prediction, measuring brain activation as participants read pairs of words. Pairs shared function (flashlight–lantern), shape (marble–grape), both (pencil–pen), were unrelated (saucer–needle), or were identical (drill–drill). We observed adaptation for pairs with both function and shape similarity in left premotor cortex. Further, degree of function similarity was correlated with adaptation in three regions: two in the left temporal lobe (left medial temporal lobe, left middle temporal gyrus), which has been hypothesized to play a role in multimodal integration, and one in left superior frontal gyrus. We also found that degree of manipulation (i.e., action) and function similarity were both correlated with adaptation in two regions: left premotor cortex and left intraparietal sulcus (involved in guiding actions). Additional considerations suggest that the adaptation in these two regions was driven by manipulation similarity alone; thus, these results imply that manipulation information about objects is encoded in brain regions involved in performing or guiding actions. Unexpectedly, these same two regions showed increased activation (rather than adaptation) for objects similar in shape. Overall, we found evidence (in the form of adaptation) that objects that share semantic features have overlapping representations. Further, the particular regions of overlap provide support for the existence of both sensorimotor and amodal/multimodal representations.

Keywords: semantic representations, semantic features, semantic attributes, adaptation, fMRI, action, repetition suppression

Introduction

Many theories of semantic memory posit that the meanings of concepts can be described as patterns of activation that are distributed over semantic features (e.g., Allport, 1985; Barsalou, 1999; Tyler et al., 2000). A benefit of this kind of architecture is that relationships between concepts can be captured via overlapping representations (McRae et al., 1997; Masson, 1995). Some distributed theories of semantic memory further suggest that semantic information about an object is represented in the neural substrate that is invoked when we perceive and/or interact with that object. Specifically, these sensorimotor-based theories suggest that the meaning of a (concrete) concept is not distributed in an amodal, unitary semantic system, but instead different aspects of meaning are stored in physically distal networks, according to the modality in which the information was acquired (e.g., Warrington & McCarthy, 1987; Glenberg, 1997; Barsalou, 1999). Amodal theories (e.g., Caramazza & Shelton, 1998), on the other hand, do not posit that representations are sensorimotor-based and so need not predict that concepts are situated in modality specific cortices.

Regardless of whether it is posited that meanings are distributed over features in an amodal system or across multiple, modality specific systems, all distributed models of semantic memory assume that the representations of concepts that share features overlap. This means that activating a particular concept should also partially activate other concepts that share its features. The semantic priming effect, wherein identifying a target word is facilitated when it is preceded by a (conceptually) related prime word (e.g., Meyer & Schvaneveldt, 1971), can therefore be interpreted as support for distributed models. However, semantic priming studies typically use primes and targets from the same semantic category, and as many have pointed out (e.g., Kellenbach et al., 2000), category co-exemplars are usually related in multiple ways (e.g., crayon and pencil are both thin, oblong, used for marking paper, and grasped with the thumb and the second and third fingers). Therefore, although the results of these studies show that semantically related words partially activate each other, they cannot identify which of these features are responsible for the facilitation effect. Identifying the responsible features would help distinguish between sensorimotor and amodal distributed models of semantic memory; if concepts that are related via sensorimotor features partially activate each other, this would suggest that their meanings are represented (at least in part) as patterns of activation over sensorimotor-based attributes.

A handful of studies have explicitly manipulated the semantic relationship between primes and targets (e.g., Schreuder et al., 1984). Most of these studies explored whether semantic priming would be obtained when primes and targets have the same shape or function (function is defined here as the purpose for which an object is used, e.g., flashlight and lantern have the same function), and both shape and function priming have been observed1 (Schreuder et al., 1984; Flores d'Arcais et al., 1985; Taylor & Heindel, 2001). Semantic priming has also been observed for objects that are manipulated (i.e., interacted with) similarly (e.g., piano and typewriter, which are both tapped with the fingers [Myung et al., 2006]). Results from visual world eyetracking studies, in which preferential fixations were observed on objects related (in function, shape, or manipulation) to a named object, are also consistent with the hypothesis that related objects have overlapping representations (Yee, et al., under review; Myung et al., 2006). Behavioral studies therefore suggest that objects with similar functions, manipulations or shapes do in fact have partially overlapping representations. If this is true, then this overlap should be instantiated at the neural level. The experiment reported here examines whether feature overlap is instantiated at the neural level by using an fMRI adaptation paradigm that permits identification of the particular brain regions in which the overlap is located.

The assumption underlying the fMRI adaptation paradigm is that repeated presentation of the same visual or verbal stimulus results in reduced fMRI signal levels in brain regions that process that stimulus, either because of neuronal “fatigue” (e.g., firing-rate adaptation) or because the initial activation of a stimulus' representation is less neurally efficient than subsequent activation (see Grill-Spector et al., 2006 for a review). In a typical fMRI adaptation experiment, stimuli are presented which are either identical (which produces an adaptation/reduced hemodynamic response) or completely different (producing a recovery response). However, it is possible to use stimuli pairs that are related, rather than identical, and several recent fMRI studies of semantically related word pairs have found less activation for related than unrelated word pairs, predominantly in temporal and/or inferior frontal cortices (Kotz et al., 2002; Rossell et al., 2003; Rissman et al., 2003; Copland et al., 2003; Giesbrecht et al., 2004; Matsumoto et al., 2005; Gold et al., 2006; Tivarus et al., 2006; Kuperberg et al., 2007; Bedny, McGill & Thompson-Schill, 2008).

The adaptation paradigm can also be employed while varying the level of stimulus similarity (e.g., Kourtzi & Kanwisher, 2001; Epstein et al., 2003; Fang et al., 2005) in order to obtain a measure of neurally perceived difference: the greater the similarity, the greater the expected adaptation. Because of this sensitivity to similarity, the adaptation paradigm is a natural fit for examining whether, as predicted by distributed models, semantically related objects have overlapping representations; for if they do, when one object's representation is activated, semantically related objects should also be partially activated. Thus the presentation of semantically related objects should produce adaptation, and the greater the relatedness, the greater the adaptation. Two recent fMRI studies found just such an ordered effect of relatedness (but cf. Raposo et al., 2006). In Wheatley et al. (2005), subjects silently read pairs of words that were either unrelated in meaning, semantically related, or identical. In left ventral temporal cortex and in anterior left inferior frontal gyrus, the greatest activity was found for unrelated word pairs, less for semantically related pairs, and least of all for identical pairs. Similarly, in Wible et al. (2006), subjects heard pairs of words that were either strongly connected (in that they shared many associates), weakly connected (sharing fewer associates), or unrelated. In bilateral posterior superior and middle temporal cortex, activation was greatest for unrelated pairs, less for weakly connected pairs, and least of all for strongly connected pairs.

The fact that many of these semantic adaptation studies found adaptation in the inferior frontal and middle temporal gyri in particular, could be interpreted as support for amodal representations, as it has been suggested that these areas underlie amodal semantic processing (e.g., Postler et al., 2003). Crucially, however, none of these prior adaptation studies attempted to test specific sensorimotor features. Therefore, they do not address whether concepts' representations may also be comprised of sensorimotor features that are represented in sensorimotor areas.

Brain imaging studies that have addressed particular semantic features have demonstrated that when retrieving information about a specific attribute of an object (e.g., its color or shape), brain areas in, or just anterior to, those implicated in perceiving that attribute become active (at least for color, shape, and manipulation; for a review, see Thompson-Schill, Kan & Oliver, 2006). While it is possible that these sensorimotor regions were activated as a consequence of tasks that directed attention to their corresponding features, there is some evidence to the contrary: Even under conditions that do not require attending to manipulation information (i.e., under passive viewing) pictures of tools produce greater activation in left ventral premotor and left posterior parietal cortex than non-manipulable objects (Chao & Martin, 2000). The fact that motor regions were automatically activated when tools were viewed implies that these motor regions are part of tools' conceptual representations. This is consistent with the notion that concepts are represented in or near perceptual cortices. However, these studies do not address whether objects that share semantic features have overlapping (rather than nearby) representations.

If, as sensorimotor-based theories suggest, the meaning of a (concrete) concept is distributed over the multiple brain regions involved in perceiving and/or interacting with the object, then conceiving of an object should automatically activate these sensorimotor regions. An object's shape and the way it is manipulated can both be directly linked to sensorimotor information. On the other hand, function, in the way we define it (the purpose for which an object is used, e.g., flashlight and lantern share the same function), does not by itself have a direct sensorimotor correlate. It is therefore possible that shape and manipulation similarity will be reflected in adaptation in regions devoted to processing object form and to motor programming, respectively, but that function similarity will be instantiated in regions that have been hypothesized to represent more abstract, higher-order relationships.

We use an adaptation paradigm to focus on two features in particular: function and shape, asking specifically whether pairs of objects with similar functions and/or shapes have overlapping neural representations (as indicated by eliciting a smaller neural response than unrelated pairs), and also whether the extent of any such overlap can be predicted by the degree of similarity. Because there is considerable variability across such pairs in manipulation similarity (e.g., things that are similar in both function and shape tend to be manipulated more similarly, while things similar in shape but not function tend not to be), we also examine whether objects that are manipulated similarly have overlapping representations.

Materials and Methods

Behavioral Experiment

Subjects

Subjects were 30 right-handed, monolingual, native speakers of American English, aged 20-33, from the University of Pennsylvania and Drexel University communities. They were paid $10 for participating.

Stimuli

We selected an initial set of 195 word pairs. Seventy-two were from Thompson-Schill (1999), and an additional one hundred twenty-three were developed using the MRC Psycholinguistics Database (Coltheart, 1981) and the University of South Florida Free Association Norms (Nelson et al., 1998). Words were chosen to be high in familiarity and imageability. In a separate norming study, each word pair was rated by sixty volunteers on a 1-7 scale for similarity in function: “rate the following pairs of objects according to how similar their functions are”, shape: “picture the things that the words refer to and rate them according to how similar their shapes are”, or manipulation: “consider the typical movements you make when you use these objects and rate how similar the movements are”. Sixteen separate norming participants also rated each item on tactile experience: “How much experience have you had touching this object?”. 2

Based on the function and shape ratings, 144 of the 195 pairs were divided into 4 conditions (of 36 pairs each), made up of pairs that shared: (1) function only (flashlight – lantern), (2) shape only (marble – grape), (3) both shape and function (pencil – pen) or (4) were unrelated (saucer – needle) (Table 1). A fifth condition of 36 pairs involved repetition of the same word (drill – drill), thus sharing all features. There were therefore 180 pairs in all3. Each object word was prefaced with the definite article “the” (“the drill”) to avoid part of speech ambiguity. We also created thirty-nine probe items (e.g., “bumpy?”) (See Appendix).

Table 1.

Average attribute ratings (from 1-7) for each condition. Standard errors in parentheses.

| Attribute rating | Unrelated | Shape | Function | Shape+Function |
|---|---|---|---|---|
| Shape Similarity | 1.1(0.1) | 5.4(0.1) | 2.7(0.2) | 5.8(0.1) |
| Function Similarity | 1.2(0.1) | 1.8(0.1) | 5.9(0.1) | 6.1(0.1) |
| Manipulation Similarity | 1.4(0.1) | 3.7(0.3) | 4.8(0.2) | 6.8(0.1) |

Procedure

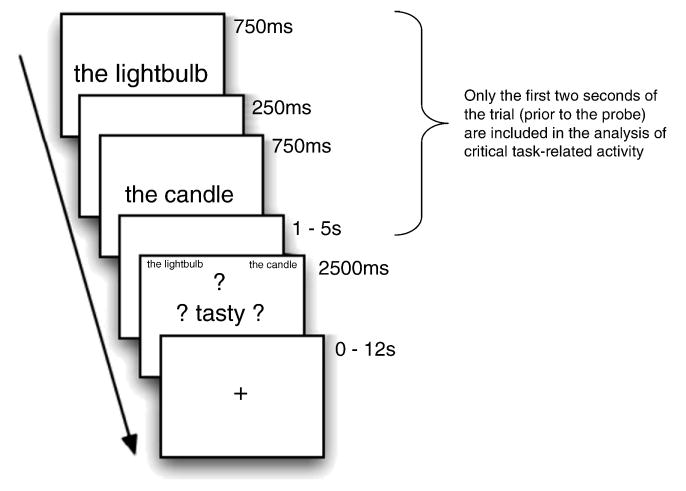

Stimuli were presented with E-Prime experimental software. Instructions were presented both verbally and on-screen. One word from the pair was presented in the center of the screen (e.g., the lightbulb) for 750 ms. After a 250 ms ISI, the second word was presented in the center of the screen (e.g., the candle) for 750 ms. The sequential presentation of the pair of items was followed by a fixation-only ISI, the duration of which was randomly varied (1000, 3000, or 5000 ms). After this “jittered” delay, the probe screen was presented. At this point, the two words were presented again, in the upper left and right corners (to help ensure that the task did not require verbal rehearsal of the test items during the delay), and a probe word appeared in the center of the screen, surrounded by question marks (e.g., ? tasty ?; see Figure 1). At the probe, participants indicated whether either one of the two words had the property described by the probe word. Trials were separated by a 2000 ms inter-trial interval (ITI) consisting of a fixation point. Participants were instructed to respond as quickly as possible without sacrificing accuracy. Response times were measured from the onset of the probe display, which appeared for 2500 ms regardless of the participant's response or lack thereof. Each word pair was seen exactly twice; each probe was seen nine or ten times. Trial and probe order were randomized for each subject (making it unlikely that a subject would be presented with the same probe twice for a given pair). A one-minute rest break was provided after every forty-five trials. The experiment lasted one hour.

Figure 1.

The structure of a trial in the imaging study. The critical task-related activity was modeled from only the first two seconds of the trial. The structure of behavioral-only trials was identical except that the ITI was always 2 s (rather than ranging from 0-12 s).

Imaging Experiment

Subjects

Subjects were 18 right-handed, monolingual, native speakers of American English, aged 19-33 years from the University of Pennsylvania community. They were paid $40 for participating. None of the participants had a history of neurological or psychiatric illness or were currently using medication affecting the central nervous system. Four subjects were excluded from further analyses due to excessive motion (excursion of over 6mm or degrees), so the results reported below were obtained from a sample of 14 participants.

Image Acquisition

MRI data was collected on a 3-Tesla Siemens Trio scanner at the Hospital of the University of Pennsylvania using a Bruker quadrature volume head coil. Axial T1-weighted structural images were collected using the MPRAGE sequence with isotropic voxels of 1mm thickness. T2* weighted BOLD data was then collected in echoplanar 3mm isotropic voxels. A total of 33 interleaved axial slices were acquired (TR = 2000ms, TE = 30ms, matrix size = 64 × 64 pixels). Functional data was collected in 8 runs of about 9 minutes each. Subjects viewed a fixation point during the first 12 seconds of each run to allow for steady state magnetization; data from this time period was discarded before analysis.

Stimuli and Procedure

Stimuli and procedure were identical to the behavioral experiment with the following exceptions: Stimuli were displayed via an Epson 8100 3-LCD projector with a Buhl long-throw rear-view/rear-projection lens, projecting onto a Mylar screen visible to the subject via a mirror mounted inside the head coil. Video signals were fed into the magnet room via a Lightwave FiberLynx fiber-optic VGA connection. Trials were separated by an ITI ranging from zero to twelve seconds. These ITIs were determined by the OPTSEQ program4. Responses were monitored using a fiber-optic button box.

Image Processing

Raw data were converted from native Siemens 2D images to VoxBo 4D images. The data were sinc interpolated to correct for the slice acquisition sequence, thresholded to remove artifacts and non-brain data, and motion corrected with a least squares six parameter rigid body realignment routine using the first functional image as reference. The VoxBo software's modified general linear model was used to analyze voxelwise BOLD activity as a function of condition on each trial. Signal change was modeled by creating independent covariates of interest for each event type, including “same shape”, “same function”, “unrelated”, “shape+function”, “same word”, “probe”, “jitterdelay”, and “ITI”. For item analyses, a single, independent binary covariate of interest was created for each item. (We then correlated the beta values from these covariates with the similarity ratings, as described below.) Covariates of interest were convolved with a standard impulse response function. Global signal and differences between runs were included in the model as variables of no interest (after testing to ensure that the global signal had low collinearity with the conditions of interest). Noise was filtered using a 1/f noise model empirically derived from the average of all of the subject's runs. Raw data for all runs from each subject were transformed to standardized MNI space.
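To illustrate the convolution step, the sketch below builds a predicted BOLD time course for one event type by convolving event onsets with an impulse response function. It is a minimal sketch and not the VoxBo implementation: the single-gamma response shape, the names `gamma_hrf` and `convolve_events`, and the example onsets are assumptions made for illustration. Only the 2 s TR is taken from the acquisition parameters reported above.

```python
from math import exp, factorial

TR = 2.0  # seconds per volume, as in the acquisition parameters


def gamma_hrf(t, shape=6, scale=1.0):
    """Illustrative single-gamma impulse response (assumed shape).

    The continuous function peaks at (shape - 1) * scale = 5 s.
    """
    if t < 0:
        return 0.0
    return t ** (shape - 1) * exp(-t / scale) / (factorial(shape - 1) * scale ** shape)


def convolve_events(onset_scans, n_scans, hrf_length_s=24.0):
    """Convolve a stick function (1.0 at each onset scan) with the sampled response."""
    kernel = [gamma_hrf(i * TR) for i in range(int(hrf_length_s / TR) + 1)]
    sticks = [0.0] * n_scans
    for scan in onset_scans:
        sticks[scan] = 1.0
    predicted = [0.0] * n_scans
    for t in range(n_scans):
        for lag, weight in enumerate(kernel):
            if t - lag >= 0:
                predicted[t] += sticks[t - lag] * weight
    return predicted


# Hypothetical onsets (in scans) for one event type within a 20-volume run.
regressor = convolve_events(onset_scans=[2, 10], n_scans=20)
```

Each covariate produced this way would enter the general linear model as one column, and the fitted weight (beta) for that column is the quantity compared across conditions or items.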

Results

Behavioral Results

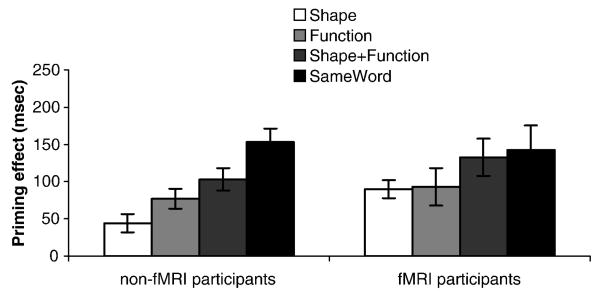

Property verification response times were analyzed. Accuracy information was not calculated because norms for what constituted a correct response for each probe-item pair combination were not available. Because the task was to decide whether the probe word applied to either word in the pair, for ‘yes’ responses, it is conceivable that when responding to the probe participants only considered one of the items. We therefore included in the analysis only trials in which subjects answered ‘no’ (76% of responses). For each related condition (i.e., shape, function, shape+function and sameword), we calculated a priming effect by subtracting the mean response time in that condition from that in the unrelated condition. For participants in the behavioral experiment, all conditions showed reliable priming relative to the unrelated condition, and a one-way repeated measures ANOVA of the four related conditions revealed a main effect of condition, F(3, 27) = 16.2, p<.001. Priming effects displayed the following pattern: sameword > shape+function > function > shape (Figure 2, left panel). Follow-up paired t-tests revealed that all conditions differed significantly from each other at the p<.05 level. There was no effect of jitter-delay. The behavioral responses from the fMRI participants were subjected to an identical analysis. Due to equipment failure, response times from 4 of the 14 participants were not collected. All conditions showed reliable priming, but there were no differences between the four related conditions, F(3, 7) = 1.7, p=.25. However, the ordering of conditions was the same as in the behavioral experiment (Figure 2, right panel).
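The priming effect described above is a simple difference of condition means. The following minimal sketch shows the computation for a single participant; the function name `priming_effects` and the response times are hypothetical and are not data from the study.

```python
from statistics import mean


def priming_effects(rts_by_condition, baseline="unrelated"):
    """Return the baseline mean RT minus each related condition's mean RT (ms).

    Positive values indicate faster responses than in the baseline condition.
    """
    baseline_mean = mean(rts_by_condition[baseline])
    return {
        condition: baseline_mean - mean(rts)
        for condition, rts in rts_by_condition.items()
        if condition != baseline
    }


# Hypothetical "no"-response RTs (ms) for one participant.
example_rts = {
    "unrelated": [900, 940, 920],
    "shape": [880, 900, 890],
    "function": [850, 870, 860],
    "shape+function": [820, 840, 830],
    "sameword": [760, 780, 770],
}
effects = priming_effects(example_rts)
```

With these made-up values the effects are ordered sameword > shape+function > function > shape, mirroring the pattern reported for the behavioral experiment.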

Figure 2.

Priming effect in each condition (“no” responses only; each condition subtracted from “unrelated” condition) in non-fMRI participants and fMRI participants

Imaging Results

The data from the initial presentation of the word pair (i.e., 750 ms for each word, plus a 250 ms ISI after each word) were modeled separately from the probes; the analyses focused on this initial time period only. During this period (prior to the probe), participants could not yet know which response (“yes” or “no”) they were going to make. Therefore, we included all trials in the analysis (i.e., those that ultimately received “yes” and “no” responses).

We conducted two sets of analyses: an fROI analysis comparing responses to each condition, and a whole-brain item analysis using the (previously collected) function, shape, or manipulation similarity ratings for each word pair (irrespective of condition) as parametric variables. This latter kind of item-based approach (averaging over items across subjects) is commonly used with behavioral data, but it has only recently been applied to fMRI (Bedny, Aguirre & Thompson-Schill 2007). Such an analysis has the potential to be extremely powerful because obtaining parameter estimates for individual items permits finer-grained explorations into representation than is possible with traditional fMRI analyses.

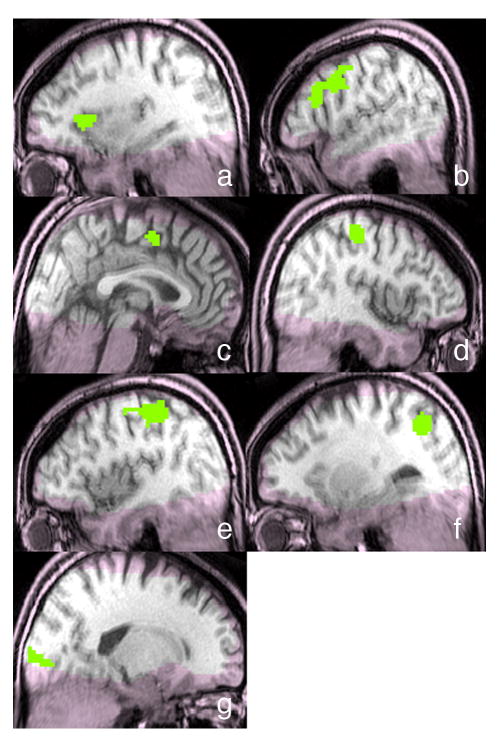

fROI Condition-based analyses

For the fROI analysis, we functionally defined ROIs by performing a random effects group analysis to detect areas for which activity during the initial presentation of the word pair (averaged across all five conditions) differed from activity during the ITI5. A permutation analysis was used to control the familywise error rate at p<0.05 (two-tailed) corrected for multiple comparisons across voxels. This analysis produced seven functional ROIs of more than 20 contiguous voxels (Table 2 and Figure 3). No other cortical regions met these criteria. Exploratory analyses employing a more lenient threshold (p=.30, corrected) expanded the size of these seven regions, but no additional regions appeared.
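The text does not specify how the permutation analysis was implemented. One common way to control the familywise error rate across voxels is a sign-flipping permutation test on the maximum statistic, sketched below under that assumption. The function name `max_stat_threshold`, the use of the absolute group mean as the statistic, and the example values are all hypothetical; the contiguity criterion (more than 20 voxels) is not implemented here.

```python
from itertools import product
from math import ceil
from statistics import mean


def max_stat_threshold(contrasts_by_voxel, alpha=0.05):
    """Two-tailed familywise threshold from exhaustive sign flipping.

    contrasts_by_voxel: one list per voxel, holding one task-minus-ITI
    value per subject. For every pattern of sign flips across subjects,
    the largest absolute group mean over voxels is recorded; the
    (1 - alpha) quantile of those maxima is returned.
    """
    n_subjects = len(contrasts_by_voxel[0])
    max_stats = []
    for signs in product((1, -1), repeat=n_subjects):
        max_stats.append(max(
            abs(mean([s * v for s, v in zip(signs, voxel)]))
            for voxel in contrasts_by_voxel
        ))
    max_stats.sort()
    return max_stats[ceil((1 - alpha) * len(max_stats)) - 1]


# Hypothetical contrasts for two voxels and six subjects.
contrasts = [
    [2.0, 2.1, 1.9, 2.2, 1.8, 2.0],       # consistently task > ITI
    [0.1, -0.2, 0.15, -0.05, 0.1, -0.1],  # no consistent difference
]
threshold = max_stat_threshold(contrasts)
significant = [abs(mean(voxel)) > threshold for voxel in contrasts]
```

Because the threshold is taken from the distribution of the maximum over all voxels, any voxel exceeding it is significant after correction for multiple comparisons. Exhaustive enumeration is feasible for a sample of 14 subjects (2^14 sign patterns), although random sampling of sign patterns is also widely used.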

Table 2.

Seven brain areas identified as more responsive to the task (averaged across all conditions) than to the inter-trial interval.

| Region | Peak voxel MNI coordinates | Number of voxels |
|---|---|---|
| left insula | [-29, 20, 6] | 47 |
| left precentral cortex with extension into inferior frontal gyrus; BA 6/9 | [-47, 12, 28] | 198 |
| cingulate sulcus; BA 24 | [3, 2, 52] | 72 |
| right postcentral gyrus; BA 4 | [39, -26, 54] | 104 |
| left postcentral gyrus; BA 3 | [-40, -28, 54] | 208 |
| left intraparietal sulcus; BA 7 | [-27, -62, 45] | 93 |
| right calcarine sulcus; BA 17 | [17, -91, 2] | 97 |

Figure 3.

Seven fROIs defined by task > ITI: a) left insula; b) left precentral cortex (extending into inferior frontal gyrus); c) cingulate sulcus; d) right postcentral gyrus; e) left postcentral gyrus; f) left intraparietal sulcus; g) right calcarine sulcus. Regions in which coverage was poor are shaded pink.

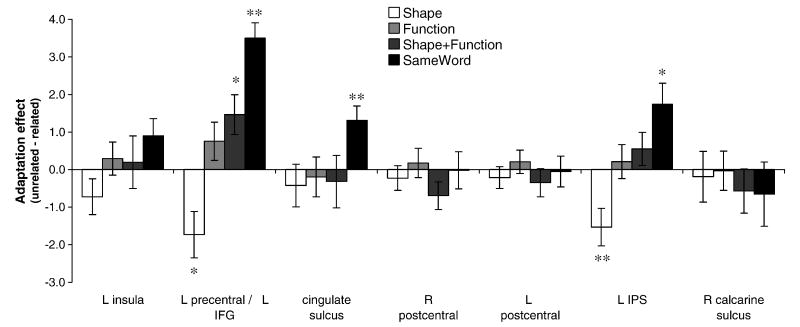

To determine whether there was adaptation in these regions, activity (i.e., mean signal in the region) in each of the related conditions (i.e., shape, function, shape+function and sameword) was compared to the “unrelated” condition (Figure 4). Three of the regions exhibited adaptation for the “same word” condition (left precentral [extending into inferior frontal gyrus], cingulate sulcus, and left intraparietal sulcus). There was also adaptation for the shape+function condition in the left precentral region. Although none of the other conditions produced reliable adaptation effects, there was an inverse adaptation effect for the “shape” condition in both the left precentral and left intraparietal regions. Further, in two regions (left precentral and left intraparietal sulcus) the relative amounts of adaptation for the different conditions corresponded to the order of RTs observed in the behavioral data: sameword > shape+function > function > shape (the match is not exact, however, because there was greater activation for the shape condition relative to the “unrelated” condition).

Figure 4.

Adaptation effects for pairs related in shape, function, shape+function or identity (i.e., same word) in the seven functionally defined ROIs. Significant effects are marked with asterisks (* p≤.05, ** p<.01).

Whole-brain Item-based analyses

To determine whether there were any brain regions in which degree of function, shape or manipulation relatedness correlated negatively with activation (i.e., showing adaptation), we conducted whole-brain analyses treating the similarity ratings for each word pair (irrespective of condition) as parametric variables, and correlating them with voxelwise BOLD activity. (To avoid the possibility that an observed correlation would be driven exclusively by “sameword” pairs, this condition was not included.) This item-based correlational analysis was thresholded as described above, i.e., a permutation analysis-corrected p<0.05 (corresponding to r = -.17) had to be exceeded by more than 20 contiguous voxels. When the correlation was conducted with shape ratings, no brain areas exceeded this threshold6. In contrast, when the correlation was conducted with function ratings, five regions exceeded threshold, and when the correlation was conducted with manipulation ratings, two exceeded threshold. Table 3 and Figure 5 provide information on these regions. No other cortical regions survived the thresholding for any of the attributes.
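The core of the item-based analysis is a correlation, computed at each voxel, between the similarity rating of each word pair and the beta value estimated for that pair. The sketch below illustrates this under simplifying assumptions: the names `pearson_r` and `adaptation_voxels`, the voxel labels, and the data are hypothetical, and the contiguity criterion (more than 20 voxels) is omitted. The default cutoff of -.17 is the corrected threshold reported above.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def adaptation_voxels(ratings, betas_by_voxel, r_threshold=-0.17):
    """Return {voxel: r} for voxels whose item-wise correlation between
    similarity ratings and betas is at or below the (negative) threshold."""
    result = {}
    for voxel, betas in betas_by_voxel.items():
        r = pearson_r(ratings, betas)
        if r <= r_threshold:
            result[voxel] = r
    return result


# Hypothetical similarity ratings (1-7) for six word pairs, with one
# beta per pair at each of two voxels.
ratings = [1, 2, 3, 4, 5, 6]
betas_by_voxel = {
    "voxel_a": [6.0, 5.0, 4.0, 3.0, 2.0, 1.0],  # activity falls as similarity rises
    "voxel_b": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],  # activity rises with similarity
}
adapting = adaptation_voxels(ratings, betas_by_voxel)
```

Only `voxel_a` is returned here, since a negative correlation (less activation for more similar pairs) is the signature of adaptation in this analysis.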

Table 3.

Regions in which degree of shape, function or manipulation similarity (according to norming) correlates negatively with activation.

| Similarity ratings included in correlation analysis | Region in which activation correlates with ratings [peak correlation MNI coordinates] |
|---|---|
| Shape | None |
| Function | left precentral cortex (extending into inferior frontal gyrus); 671 voxels; BA 6/9 [-39, 9, 30] |
| | left posterior superior frontal gyrus; 101 voxels; BA 6 [-18, -6, 58] |
| | left medial temporal lobe; 44 voxels; BA 36 [-36, -27, -15] |
| | left posterior middle temporal gyrus; 74 voxels; BA 37 [-45, -54, -9] |
| | left intraparietal sulcus; 116 voxels; BA 7 [-24, -60, 45] |
| Manipulation | left precentral cortex; 405 voxels; BA 6/9 [-36, 9, 30] |
| | left intraparietal sulcus; 22 voxels; BA 7/19 [-27, -69, 33] |

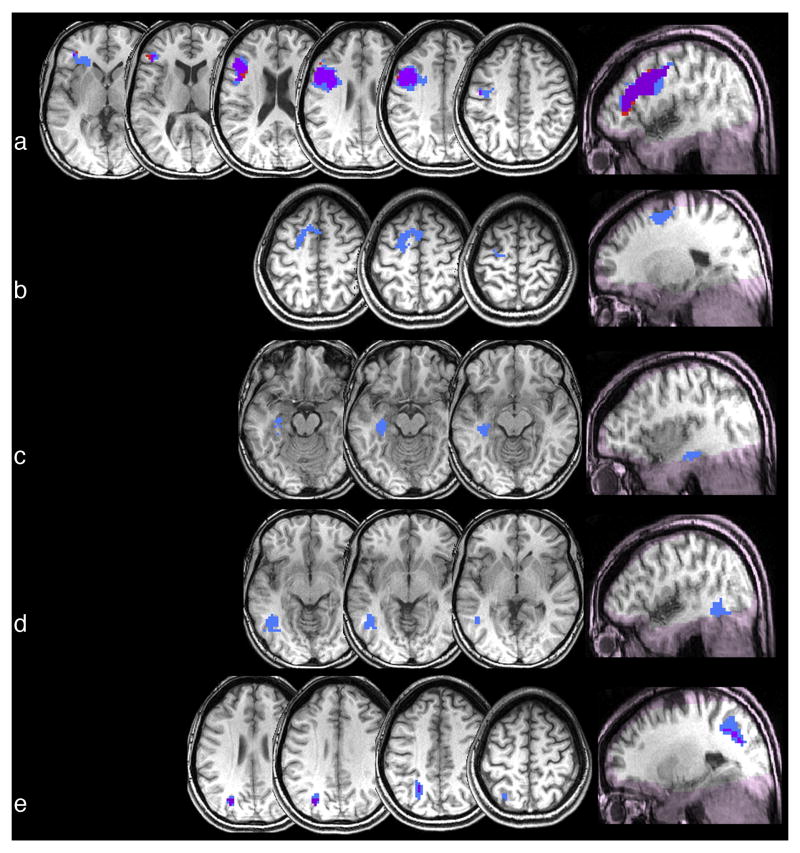

Figure 5.

Regions in which similarity (according to norming) of function (blue), manipulation (red), or both (purple) correlates negatively with activation: a) left precentral cortex (extending into inferior frontal gyrus); b) left posterior superior frontal gyrus; c) left medial temporal lobe; d) left posterior middle temporal gyrus; e) left intraparietal sulcus. Regions in which coverage was poor are shaded pink.

Discussion

In the current study, we used an fMRI adaptation paradigm to explore the nature of semantic representations. In particular, we asked whether objects with similar functions and/or shapes or manipulations have overlapping neural representations. Another goal was to investigate the extent to which the representations of objects with similar sensorimotor features (shapes or manipulations) overlap in sensorimotor cortices, and the extent to which the representations of objects with similar abstract features (function) overlap in regions linked to amodal/multimodal processing.

There were four main findings: 1) objects that are similar in both function and shape have overlapping representations (indicated by significant adaptation effects) in left precentral cortex; 2) the degree of manipulation similarity predicts the degree of adaptation in left precentral cortex and left intraparietal sulcus; 3) shape similarity produces an inverse adaptation effect in both of the above regions; and 4) degree of function similarity predicts the degree of adaptation in the above two regions and also in two left temporal regions (medial temporal lobe and posterior MTG) as well as in the left superior frontal gyrus. The first three findings, we will argue, highlight the role of manipulation knowledge and the fact that this knowledge is represented in sensorimotor cortices. The fourth finding suggests, in contrast, that the more abstract feature, function, is represented in areas linked to amodal/multimodal processing. Hence, we obtained evidence for distributed representations that include both perceptually grounded and amodal/multimodal components. Below we elaborate on these findings and discuss their implications.

In a region of interest analysis, we functionally defined ROIs as regions that responded more to the initial presentation of the word pair relative to the ITI. We observed seven such regions (Table 2 / Figure 3). In three of these regions (left precentral cortex, cingulate sulcus and left intraparietal sulcus) we observed adaptation for repeated presentation of the same word. The first finding related to our current questions, however, was that left precentral cortex (i.e., premotor cortex) also showed adaptation for objects that are similar in both function and shape. Why might a region involved in performing and imagining actions (Decety et al., 1994) show adaptation for word pairs related in function and shape? Since objects with similar functions and shapes are almost invariably manipulated similarly, we hypothesize that this adaptation may be due to similarity in manipulation rather than function and shape per se. Consistent with this interpretation, we observed no adaptation for function alone in the premotor region, and an inverse adaptation effect for shape alone; hence, the combined effects of function alone and shape alone would not account for the observed adaptation for the shape+function condition. If manipulation, on the other hand, is in fact driving the adaptation effect in premotor cortices, then in this region (and irrespective of condition) how similarly objects are manipulated should correlate with degree of adaptation. Our second main finding was exactly that: a whole-brain voxel-wise correlation analysis using manipulation ratings revealed that the more similarly two objects are manipulated, the greater the adaptation in left precentral cortex. This analysis also revealed a relationship between manipulation similarity and adaptation in left IPS (part of the dorsal stream involved in planning skilled actions [Goodale & Milner, 1992]).

It should be noted that adaptation in premotor cortex and IPS was also predicted by function ratings (Table 3), and that across all item pairs similarity ratings for function and manipulation were very highly correlated (r=.777). Thus the whole-brain analyses by themselves cannot distinguish between whether the adaptation in premotor cortex and IPS should be attributed to manipulation or function; to decisively demonstrate that manipulation alone is responsible for the effects in these two regions, an experiment that is explicitly designed to measure the effects of manipulation independently of other variables is clearly needed. However, as noted above, in the fROI analysis, the function condition (in which manipulation similarity was relatively low) did not produce significant adaptation in premotor cortex or IPS (Figure 4). This implies that the correlations in these regions are not driven by function alone, making manipulation the more likely candidate.

A relationship between similarity of manipulation and adaptation in premotor cortex and IPS is consistent with several neuroimaging studies of manipulable objects which (though varying in the extent to which explicit retrieval of manipulation information was required by the task) have found effects in premotor cortex (Grafton et al., 1997; Gerlach et al., 2002; Kable et al., 2005), intraparietal sulcus and/or inferior parietal lobule (Binkofski et al., 1999; Boronat et al., 2005) or both (Chao & Martin, 2000; Kellenbach et al., 2003; Wheatley et al., 2005; Buxbaum et al., 2006; Canessa et al., 2007). However, the present result extends these findings by suggesting that similarity of manipulation (rather than only the possibility of manipulation) is encoded in these regions.

This finding regarding the encoding of manipulation similarity has significance to a question that is often raised when sensorimotor regions are activated during conceptual processing: Might any such activation be epiphenomenal, rather than part of the conceptual representation? There are many possible epiphenomena one might refer to when raising this objection; for example, activation in visual areas during semantic processing could be related to the process of mental image generation and not to the activation of stored visual features per se. The nature of fMRI is such that the methodology does not allow for inferring that any particular activated region is necessary to a representation. However, the current findings advance this discussion in novel ways: rather than determining the mere presence or absence of activation in sensorimotor areas during semantic retrieval, we characterize the pattern of activation across items. Our data constrain the set of possible epiphenomenal accounts to those in which the neural pattern associated with the putative epiphenomenal process tracks the similarity among the concepts evoking that process8. The visual imagery account just described, for example, would not be expected to have this feature. In contrast, this pattern falls naturally out of a sensorimotor account in which objects that share sensorimotor features have overlapping representations.

Thus, we argue that our findings provide support for the hypotheses that: 1) Knowledge about how objects are manipulated is stored in, or near, the systems that act on those objects, and 2) Objects that are manipulated similarly have overlapping representations in these areas. This second hypothesis receives additional support from two recent behavioral studies indicating that objects that are manipulated similarly partially activate each other (Myung et al., 2006), but that this activation is abnormal in apraxic patients with lesions in left inferior parietal lobule and precentral gyrus/premotor cortex (Myung et al., accepted).

We now turn to our third finding. We found no adaptation for shape. Instead, reading the names of objects with similar shapes resulted in an increased neural response relative to completely unrelated objects (i.e., an inverse adaptation effect) in left precentral cortex and left IPS. The absence of a positive adaptation effect for shape (we shall come back to the presence of an inverse adaptation effect) is somewhat surprising given that two prior studies have provided some suggestion that objects similar in shape do partially activate each other: event-related potentials showed that target words related in shape to prime words elicited reduced N400s relative to unrelated target words (Kellenbach et al., 2000); and viewing similarly shaped objects produced adaptation in regions involved in object shape processing (Kourtzi & Kanwisher, 2001). However, there are several possible reasons for the absence of adaptation in the current study.

First, and importantly given our prediction that we would observe adaptation in areas involved in processing object shape, in several subjects data acquisition was poor in temporal areas including the ventral temporal lobe (see Figure 3), which is known to be involved in the processing of object shape (Kourtzi & Kanwisher, 2001). Second, it is possible that because most of the items we employed were non-living9, shape is not an automatically activated component of their representations (Thompson-Schill, Aguirre, D'Esposito & Farah, 1999). However, this second account is difficult to reconcile with our behavioral data, in which there was a priming effect for shape-related pairs (even though few of the probe words were visual). A third possibility is that shape information becomes active early during concept retrieval, but rapidly decays (e.g., Schreuder et al., 1984; Yee et al., under review). If true, the neural response to shape may have dissipated by the time the second word appeared10. If shape information is only briefly active, though, then why did we observe behavioral priming in the shape condition? Perhaps because, unlike the neural measure, which was derived from the response to two words presented sequentially, the behavioral response was made while the prime and target were presented together on the screen. This simultaneous presentation may have reactivated their representations, allowing the behavioral measure to detect the brief activation of their shape information.

To the extent to which these accounts are accurate, they can only be part of the explanation for our findings regarding shape, however, because we did not observe a null result in the shape condition – we instead observed an inverse adaptation effect. Increased neural activation in response to semantically related stimuli (a.k.a. “response enhancement”) has been reported in several other studies (Rossell et al., 2001; Kotz et al., 2002; Rossell et al., 2003; Raposo et al., 2006; Copland et al., 2007), but adaptation (or “response suppression”) is much more common. It has been suggested that response enhancement usually reflects controlled processing or expectancy generation (e.g., Kuperberg et al., 2008). However, it would be difficult to attribute the inverse adaptation effect in the current study to controlled processing because the shape+function condition (in which the relationship was more obvious than that in the shape-only condition) produced a standard adaptation effect.

Reflecting on how our shape stimuli were created, however, may shed light on this unexpected finding. Our shape-related pairs were carefully selected to be dissimilar in function; and objects that are similar in shape but not function are likely to be dissimilar in manipulation. It is possible that in brain regions that represent manipulation information, it is necessary for objects that are similar in shape but dissimilar in manipulation to have very distinct activation patterns – to ensure that the overlap on shape does not result in accidentally manipulating these objects in the same way (e.g., eating marbles due to their similarity in shape to grapes). This could account for the inverse-adaptation effect in the motor-associated areas; the more similar in shape two dissimilarly manipulated objects are, the more distinct the representation in these areas would be.

If true, after excluding pairs from the analysis that are similar in shape and supposed to be manipulated similarly, we would expect adaptation in regions that represent manipulation to be negatively correlated with shape similarity (reflecting that objects that are different in manipulation but similar in shape need to have particularly distinct representations in motor areas, whereas objects that are equally different in manipulation but not as similar in shape need not have such distinct motor representations). We tested this prediction and found it to be at least partially supported: After excluding pairs that are similar in shape and are supposed to be manipulated similarly11, we conducted an item analysis correlating shape similarity with brain activation (averaged across the region) in the two regions (left precentral and left IPS, see Figure 5) that showed adaptation for manipulation. In the left precentral region we found a significant positive correlation between shape similarity and activation (r=.27, p<.01). In the left intraparietal sulcus region, the correlation with shape was also positive but not significant (r=.07, p=.47). This suggests that at least in the precentral region, the inverse-adaptation effect for shape may result from the emergence (during learning) of maximally-distinctive representations of manipulation information between similarly-shaped objects that have different functions. A similar idea is found in discussions of the mapping between phonological representations and semantic representations of homophones (i.e., words that have the same sound but different meanings; e.g., Kawamoto et al., 1994). In future work, it would be interesting to explicitly test the predictions about representations suggested by the inverse adaptation effects we observed.
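
The logic of this item analysis can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used in the study: the item values are invented for demonstration, the names `keep_pair` and `pearson_r` are hypothetical, and the exclusion rule (a rating greater than 4.0 on both shape and manipulation, on a 1-7 scale) follows the operational definition given in the footnotes.

```python
from math import sqrt

# Exclusion threshold from the operational definition (1-7 rating scale).
THRESHOLD = 4.0


def keep_pair(shape: float, manipulation: float) -> bool:
    """Keep a pair unless it is rated above threshold on BOTH attributes."""
    return not (shape > THRESHOLD and manipulation > THRESHOLD)


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


# Hypothetical items: (label, shape rating, manipulation rating,
# mean response in the region of interest). Values are made up.
items = [
    ("pair_a", 6.1, 1.8, 0.42),
    ("pair_b", 5.2, 2.0, 0.35),
    ("pair_c", 2.0, 1.5, 0.10),
    ("pair_d", 3.1, 2.2, 0.18),
    ("pair_e", 6.0, 6.2, -0.20),  # high on both ratings, so excluded
    ("pair_f", 1.4, 5.5, 0.05),
]

kept = [item for item in items if keep_pair(item[1], item[2])]
r = pearson_r([item[1] for item in kept], [item[3] for item in kept])
print(f"{len(kept)} pairs kept; shape/activation r = {r:.2f}")
```

In this toy data set a positive correlation between shape similarity and regional response corresponds to the inverse-adaptation pattern described above, because a larger response to the second word of a pair means less adaptation.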

Finally, we turn to our fourth finding. None of the functionally-defined task-sensitive regions exhibited adaptation for objects similar in function only. This finding is consistent with a PET study that did not observe regions more activated by function than action judgments (Kellenbach et al., 2003). However, in a more sensitive test – a whole-brain parametric analysis – we found that degree of function similarity (based on similarity ratings) predicts degree of adaptation in five regions. As noted above, in two of these regions (left premotor cortices and left IPS) adaptation was also correlated with similarity of manipulation, making it difficult to attribute the pattern to one or the other attribute (and function and manipulation similarity were too strongly correlated to partial out one from the other). Nevertheless, we have argued that because there was no effect in these regions in the function-only condition of the fROI analysis, manipulation is more likely to account for the relationship. On the other hand, in the three regions in which adaptation was correlated with function but not manipulation (left superior frontal gyrus, left medial temporal lobe and left posterior middle temporal gyrus) we can more confidently ascribe the effect to function similarity. The left medial temporal lobe and MTG have both been characterized as multi-modal areas (see Mesulam, 1998, for a review), serving to integrate information from unimodal cortices. One possibility is that these regions integrate shape and manipulation information arriving from other areas, allowing for function similarity to be encoded. 
With respect to the MTG, such an organization would be consistent with the hypothesis that motion information in the occipito-temporal cortex is organized along a concrete to abstract gradient, with concrete motion information encoded more posteriorly near human MT/MST (just posterior to the MTG region we observed), and more abstract information (which should require integration) encoded in more anterior areas (Kable et al., 2005). A role for the left posterior MTG in integration receives additional support from studies suggesting that it is involved in contextual combination during phrase interpretation (e.g., Gennari et al., 2007).

Several other studies, while not controlling for the particular overlapping feature, have also observed adaptation for semantically related pairs in regions in left medial temporal lobe (Rossell et al., 2001; Rossell et al., 2003; Wheatley et al., 2005; Wible et al., 2006) and left posterior MTG (Wheatley et al., 2005; Gold et al., 2006; Giesbrecht et al., 2004; Kuperberg et al., 2008). Further, the two studies that have looked for parametric effects have also observed parametric adaptation effects for semantically related pairs in similar medial (Wheatley et al., 2005) and lateral temporal areas (Wible et al., 2006; but cf. Raposo et al., 2006). As described in the introduction, the category co-exemplars that often comprise “semantically” related pairs are usually related on multiple features. Hence it is possible that the adaptation found in these earlier studies in part reflects multi-modal (e.g., function) similarity.

Together, our findings for function and manipulation suggest that these two attributes are represented independently (at least in some brain regions).12 This is consistent with patient studies that have found that information about how an object is manipulated can be intact despite impaired knowledge of its function (Sirigu, Duhamel & Poncet, 1991; Buxbaum & Saffran, 2002), or vice-versa (Riddoch et al., 1989; Tranel et al., 2003). Further, consistent with the current work, these patient studies have linked knowledge about object function to temporal cortices (Sirigu et al., 1991) and object manipulation knowledge to fronto-parietal cortices (Buxbaum & Saffran, 2002; Tranel et al., 2003).

It is worth explicitly considering how it might be possible to reconcile sensorimotor-based theories of semantic memory with more categorical accounts of semantic representation (for example, the notion that tools and animals are represented in different brain regions because of their taxonomic category; see Mahon & Caramazza, 2009). The Wheatley et al. (2005) study described in the introduction is relevant here. They observed that manmade (generally manipulable) objects produced greater activation in dorsal areas involved in controlling movement (left premotor cortex, left anterior cingulate, and bilateral inferior parietal lobule) compared to animate (generally non-manipulable) objects. In contrast, animate objects produced greater activation in posterior and ventral areas involved in processing visual color and form (left lateral fusiform gyri, right superior temporal sulcus, and medial occipital lobe). Their findings are consistent with the hypothesis (originally articulated by Warrington & McCarthy, 1987) that category effects may be a consequence of different kinds of objects tending to depend on different types of information to different extents, such that even without semantic memory being categorically organized per se, an organization that partially adheres to category boundaries could emerge. Our findings for function also speak to this topic; the involvement of left medial temporal lobe and MTG (areas previously implicated in multimodal integration) is consistent with the existence of a system that integrates sensory information from multiple modalities, allowing higher-order relationships (e.g., perhaps emergent categories corresponding to tools vs. animals) to be represented (see Patterson et al., 2007 for a review).

Conclusions

We found adaptation for words similar in function and shape, and also that degree of adaptation was predicted by degree of manipulation similarity in two regions, and by degree of function similarity in three additional regions. Specifically, adaptation correlated with manipulation in dorsal stream regions that have been implicated in motor imagery and motor behavior, suggesting that manipulation information is encoded in sensorimotor areas. Similarity in function, on the other hand (a feature that does not have an obvious sensorimotor correlate), was uniquely correlated with activity in the left superior frontal gyrus, the left medial temporal lobe and the left middle temporal gyrus. The latter two regions have been hypothesized to encode multi-modal information, consistent with function information being encoded in multi-modal areas. Hence, our findings demonstrate that objects with similar features have overlapping representations and suggest that these representations are encoded in both sensorimotor and multi-modal cortices. This is consistent with models of semantic memory that posit that representations include both sensorimotor and amodal/multimodal components.

Acknowledgments

This research was supported by NIH Grant (R01MH70850) awarded to Sharon Thompson-Schill and by a Ruth L. Kirschstein NRSA Postdoctoral Fellowship awarded to Eiling Yee. We are grateful to Geoff Aguirre for assistance with data analysis, and to Katherine White, Sarah Drucker and members of the Thompson-Schill lab for helpful comments.

Appendix

| Unrelated | Shape | Function | Shape+Function | Identity | Probes |
|---|---|---|---|---|---|
| ambulance-bagpipe | ball-planet | airplane-subway | apple-peach | bandage | bright |
| anchor-slingshot | ball-plum | backpack-suitcase | apron-smock | blade | bumpy |
| antennae-igloo | balloon-melon | bathtub-shower | bed-cot | blanket | comfortable |
| cake-scarf | banjo-racket | bell-gong | bench-sofa | boot | curved |
| canoe-comb | block-dice | belt-suspenders | blanket-quilt | bridge | cutting |
| emerald-harbour | bowl-helmet | bike-car | boat-ship | chair | damaging |
| flask-tweezers | box-aquarium | birdcage-doghouse | bucket-pail | clarinet | dangerous |
| fountain-tooth | bracelet-handcuff | bomb-gun | can-bottle | dart | delicate |
| funnel-guitar | bracelet-hoop | bread-cake | canoe-kayak | drill | enjoyable |
| glove-raspberry | cigarette-chalk | broom-vacuum | carpet-rug | drum | fast |
| hammer-peach | clock-compass | bullet-arrow | coat-jacket | fork | flat |
| hat-towel | cylinder-post | cabinet-closet | crayon-marker | hose | fragile |
| key-cart | cymbal-frisbee | cannon-slingshot | crib-cage | jeep | gleaming |
| ladle-knob | flag-sheet | car-bus | deck-patio | key | glowing |
| lampshade-baton | fork-rake | cassette-CD | desk-table | kite | greasy |
| marble-grass | grate-fence | chopsticks-fork | fence-gate | microscope | heavy |
| meadow-dog | hose-rope | clarinet-harmonica | glass-mug | mirror | hissing |
| microphone-magazine | leash-whip | dice-cards | gown-robe | necklace | marking |
| padlock-rope | lemon-football | dress-suit | hammer-mallet | needle | melodic |
| penny-carrot | marble-grape | flashlight-lantern | hotel-inn | oven | noisy |
| pie-duck | marble-pearl | globe-map | motor-engine | pants | opening |
| radio-bracelet | oar-spatula | gun-sword | muffin-cupcake | pickle | playful |
| razor-iron | pearl-snowball | hourglass-clock | napkin-tissue | | precise |
| saucer-needle | pizza-plate | lightbulb-candle | package-box | quilt | round |
| ski-dress | plank-ruler | lighter-match | pants-jeans | sandal | sharp |
| snow-missile | puck-coaster | orange-banana | pencil-crayon | scissors | silky |
| soap-helmet | safe-oven | pipe-cigar | piano-organ | sink | silver |
| sock-umbrella | soap-brick | saw-axe | pickle-cucumber | spade | smooth |
| stamp-vase | spike-pencil | scissors-knife | pistol-gun | stove | soft |
| teapot-wheel | spoon-lollipop | stairs-elevator | plate-dish | strawberry | solid |
| telephone-house | staple-handle | staple-paperclip | river-creek | toaster | sour |
| tornado-coffee | sugar-sand | stove-microwave | shoe-sneaker | toilet | sturdy |
| tray-mop | television-box | telescope-binoculars | stable-barn | truck | sweet |
| wallet-mattress | tent-pyramid | tent-cabin | street-road | trumpet | swift |
| yacht-pillow | thimble-cup | wallet-purse | string-rope | typewriter | tasty |
| zipper-seaweed | tire-doughnut | zipper-button | yarn-thread | violin | useful |
| | | | | | valuable |
| | | | | | warm |
| | | | | | wrinkled |

Footnotes

However, shape priming has emerged more consistently when responses are faster, while function priming has emerged more consistently when responses are slower. To accommodate this pattern of results it has been suggested that perceptual information becomes available earlier than conceptual information (Schreuder et al., 1984; Flores d'Arcais et al., 1985). We return to this timing difference and its implications for our findings in the general discussion.

This measure was inspired by Oliver et al. (2009), who demonstrated that prior tactile experience with an object (gauged using an almost identical question) affected left inferior parietal lobule activity during semantic retrieval.

Across the five conditions, items did not differ in: familiarity (ratings obtained from the MRC psycholinguistic database) (F=.88, p=.48), frequency (F=1.4, p=.23), or tactile experience (F=.33, p=.86). There was a difference in imageability (MRC data: F=3.8, p<.01) with items in the unrelated and same word condition being more imageable than those in the shape or function conditions. However, the unrelated and shape conditions showed very different patterns of BOLD response from each other, making imageability unlikely to account for the results. To confirm that any observed differences between conditions are due to similarity on the attributes of interest rather than imageability, all analyses (of both behavioral and BOLD data) were repeated on a subset of items (18 pairs across four conditions were removed) that were balanced for imageability (F=1.1, p=.37). There were no differences between the pattern of results observed in the subset vs. the full set of items.

OPTSEQ is part of the FS-FAST collection of analysis tools (http://surfer.nmr.mgh.harvard.edu/optseq). It determines stimulus optimization (i.e., the optimal word pair onsets) given the repetition time (TR), number of event types, time per event type, and number of acquisitions. A description of the calculations used in the implementation of this program can be found in Burock et al., 1998 and Dale, 1999.

A whole brain analysis comparing initial presentation of the word pair to ITI revealed no effects of condition.

Exploratory analyses employing a more lenient threshold (p=.30, corrected) also yielded no brain areas with a supra-threshold relationship between adaptation and shape similarity.

Because these attributes were so highly correlated it was not possible to include function as a covariate in the analysis.

It is worth noting that at this point, an epiphenomenal account becomes difficult to distinguish from the distributed semantic architecture we propose. Further, the question of the “essential” vs. “nonessential” aspects of conceptual representations seems ill-posed in light of the nature of a distributed semantic architecture, in which attention can be focused on different aspects of a concept via partial activation.

Shape-related pairs never consisted of two living things, no animals were included at all, and in only four (of 36) pairs could one object (a fruit) be classified as “living”.

We can speculate that timecourse may also help explain the discrepancy between studies that suggest that shape is automatically activated for non-living things (e.g., Schreuder et al., 1984) and those suggesting it may not be (Thompson-Schill et al., 1999). It may be that for non-living things, shape information becomes active only briefly before decaying (and hence must be probed within the active period to be detected), whereas for living things, because it is a more essential component (Farah & McClelland, 1991) shape remains active longer. Future work is needed to explore the possibility that different features have different timecourses depending on object category.

Operationally defined as having a similarity rating greater than 4.0 (on a 1-7 scale) on both shape and manipulation.

In light of studies demonstrating the importance of the anterior temporal lobes in semantic processing (see Patterson, et al., 2007 for review), it is important to note that in the current work, as in many fMRI studies (see Devlin et al., 2000 for discussion), data acquisition in these regions was poor.

References

- Allport DA. Distributed memory, modular subsystems and dysphasia. In: Newman SK, Epstein R, editors. Current Perspectives in Dysphasia. Edinburgh: Churchill Livingstone; 1985. pp. 32–60.

- Barsalou LW. Perceptual symbol systems. Behavioral and Brain Sciences. 1999;22:577–660. doi: 10.1017/s0140525x99002149.

- Bedny M, McGill M, Thompson-Schill SL. Semantic adaptation and competition during word comprehension. Cerebral Cortex. 2008;18(11):2574–2585. doi: 10.1093/cercor/bhn018.

- Bedny M, Aguirre GK, Thompson-Schill SL. Item analysis in functional magnetic resonance imaging. Neuroimage. 2007;35(3):1093–1102. doi: 10.1016/j.neuroimage.2007.01.039.

- Binkofski F, Buccino G, Stephan KM, Rizzolatti G, Seitz RJ, Freund HJ. A parieto-premotor network for object manipulation: evidence from neuroimaging. Experimental Brain Research. 1999;128:210–213. doi: 10.1007/s002210050838.

- Boronat CB, Buxbaum LJ, Coslett HB, Tang K, Saffran EM, Kimberg DY, Detre JA. Distinctions between manipulation and function knowledge of objects: evidence from functional magnetic resonance imaging. Cognitive Brain Research. 2005;23(2–3):361–373. doi: 10.1016/j.cogbrainres.2004.11.001.

- Burock MA, Buckner RL, Woldorff MG, Rosen BR, Dale AM. Randomized event-related experimental designs allow for extremely rapid presentation rates using functional MRI. NeuroReport. 1998;9:3735–3739. doi: 10.1097/00001756-199811160-00030.

- Buxbaum LJ, Saffran EM. Knowledge of object manipulation and object function: dissociations in apraxic and nonapraxic subjects. Brain and Language. 2002;82:179–199. doi: 10.1016/s0093-934x(02)00014-7.

- Buxbaum LJ, Kyle KM, Tang K, Detre JM. Neural substrates of knowledge of hand postures for object grasping and functional object use: Evidence from fMRI. Brain Research. 2006;1117(1):175–185. doi: 10.1016/j.brainres.2006.08.010.

- Canessa N, Borgo F, Cappa SF, Perani D, Falini A, Buccino G, Tettamanti M, Shallice T. The different neural correlates of action and functional knowledge in semantic memory: an FMRI study. Cereb Cortex. 2008;18(4):740–751. doi: 10.1093/cercor/bhm110.

- Caramazza A, Shelton JR. Domain-specific knowledge systems in the brain: the animate-inanimate distinction. J Cogn Neurosci. 1998;10:1–34. doi: 10.1162/089892998563752.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. NeuroImage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Coltheart M. The MRC Psycholinguistics database. Quarterly Journal of Experimental Psychology. 1981;33A:497–505.

- Copland DA, de Zubicaray GI, McMahon K, Wilson SJ, Eastburn M, Chenery HJ. Brain activity during automatic semantic priming revealed by event-related functional magnetic resonance imaging. NeuroImage. 2003;20:302–310. doi: 10.1016/s1053-8119(03)00279-9.

- Copland DA, de Zubicaray GI, McMahon K, Eastburn M. Neural correlates of semantic priming for ambiguous words: an event-related fMRI study. Brain Research. 2007;1131(1):163–172. doi: 10.1016/j.brainres.2006.11.016.

- Dale AM. Optimal experimental design for event-related fMRI. Hum Brain Mapp. 1999;8:109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W.

- Decety J, Perani D, Jeannerod M, Bettinardi V, Tadary B, Woods R, Mazziotta JC, Fazio F. Mapping motor representations with positron emission tomography. Nature. 1994;371:600–602. doi: 10.1038/371600a0.

- Devlin JT, Russell RP, Davis MH, Price CJ, Wilson J, Moss HE, Matthews PM, Tyler LK. Susceptibility-Induced Loss of Signal: Comparing PET and fMRI on a Semantic Task. NeuroImage. 2000;11:589–600. doi: 10.1006/nimg.2000.0595.

- Epstein R, Graham KS, Downing PE. Viewpoint-specific scene representations in human parahippocampal cortex. Neuron. 2003;37:865–876. doi: 10.1016/s0896-6273(03)00117-x.

- Fang F, Murray SO, Kersten D, He S. Orientation-tuned fMRI adaptation in human visual cortex. Journal of Neurophysiology. 2005;94:4188–4194. doi: 10.1152/jn.00378.2005.

- Farah MJ, McClelland JL. A computational model of semantic memory impairment: Modality-specificity and emergent category-specificity. Journal of Experimental Psychology: General. 1991;120:339–357.

- Flores d'Arcais G, Schreuder R, Glazenborg G. Semantic activation during recognition of referential words. Psychological Research. 1985;47:39–49.

- Gennari SP, MacDonald MC, Postle BR, Seidenberg MS. Context-dependent interpretation of Words: Evidence for interactive neural processes. NeuroImage. 2007;35:1278–1286. doi: 10.1016/j.neuroimage.2007.01.015.

- Gerlach C, Law I, Paulson OB. When action turns into Words. Activation of motor-based knowledge during categorization of manipulable objects. Journal of Cognitive Neuroscience. 2002;14(8):1230–1239. doi: 10.1162/089892902760807221.

- Giesbrecht B, Camblin CC, Swaab TY. Separable effects of semantic priming and imaginability on word processing in human cortex. Cerebral Cortex. 2004;14:521–529. doi: 10.1093/cercor/bhh014.

- Glenberg A. What memory is for. Behavioral and Brain Sciences. 1997;20:1–55. doi: 10.1017/s0140525x97000010.

- Gold BT, Balota DA, Kirchhoff BA, Buckner RL. Common and dissociable activation patterns associated with controlled semantic and phonological processing: Evidence from fMRI adaptation. Cerebral Cortex. 2005;15:1438–1450. doi: 10.1093/cercor/bhi024.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends in Neurosciences. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- Grafton ST, Fadiga L, Arbib MA, Rizzolatti G. Premotor cortex activation during observation and naming of familiar tools. NeuroImage. 1997;6(4):231–236. doi: 10.1006/nimg.1997.0293. [DOI] [PubMed] [Google Scholar]

- Grill-Spector K, Henson R, Martin A. Repetition and the brain: neural models of stimulus-specific effects. Trends in Cognitive Sciences. 2006;10(1):14–23. doi: 10.1016/j.tics.2005.11.006. [DOI] [PubMed] [Google Scholar]

- Kable JW, Kan IP, Wilson A, Thompson-Schill SL, Chatterjee A. Conceptual representations of action in lateral temporal cortex. Journal of Cognitive Neuroscience. 2005;17:855–870. doi: 10.1162/089892905775008625. [DOI] [PubMed] [Google Scholar]

- Kawamoto AH, Farrar WT, Kello CT. When two meanings are better than one: Modeling the ambiguity advantage using a recurrent distributed network. Journal of Experimental Psychology: Human Perception and Performance. 1994;20:1233–1247. [Google Scholar]

- Kellenbach ML, Brett M, Patterson K. Actions speak louder than functions: the importance of manipulability and action in tool representation. J Cogn Neurosci. 2003;15:30–46. doi: 10.1162/089892903321107800. [DOI] [PubMed] [Google Scholar]

- Kellenbach ML, Wijers AA, Mulder G. Visual semantic features are activated during the processing of concrete words: Event-related potential evidence for perceptual semantic priming. Cognitive Brain Research. 2000;10(12):67–75. doi: 10.1016/s0926-6410(00)00023-9. [DOI] [PubMed] [Google Scholar]

- Kotz SA, Cappa SF, von Cramon DY, Friederici AD. Modulation of the lexical-semantic network by auditory semantic priming: An event-related functional MRI study. NeuroImage. 2002;17:1761–1772. doi: 10.1006/nimg.2002.1316. [DOI] [PubMed] [Google Scholar]

- Kourtzi Z, Kanwisher N. The human lateral occipital complex represents perceived object shape. Science. 2001;293:1506–1509. doi: 10.1126/science.1061133.

- Kuperberg G, Deckersbach T, Holt D, Goff D, West WC. Increased temporal and prefrontal activity to semantic associations in schizophrenia. Archives of General Psychiatry. 2007;64:138–151. doi: 10.1001/archpsyc.64.2.138.

- Kuperberg GR, Lakshmanan BM, Greve DN, West WC. Task and semantic relationship influence both the polarity and localization of hemodynamic modulation during lexico-semantic processing. Human Brain Mapping. 2008;29:544–561. doi: 10.1002/hbm.20419.

- Mahon BZ, Caramazza A. Concepts and Categories: A Cognitive Neuropsychological Perspective. Annual Review of Psychology. 2009;60:27–51. doi: 10.1146/annurev.psych.60.110707.163532.

- Masson MEJ. A distributed memory model of semantic priming. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1995;21:3–23.

- Matsumoto A, Iidaka T, Haneda K, Okada T, Sadato N. Linking semantic priming effect in functional MRI and event-related potentials. NeuroImage. 2005;24(3):624–634. doi: 10.1016/j.neuroimage.2004.09.008.

- McRae K, de Sa VR, Seidenberg MS. On the nature and scope of featural representations of word meaning. Journal of Experimental Psychology: General. 1997;126:99–130. doi: 10.1037//0096-3445.126.2.99.

- Mesulam MM. From sensation to cognition. Brain. 1998;121(6):1013–1052. doi: 10.1093/brain/121.6.1013.

- Meyer DE, Schvaneveldt RW. Facilitation in recognizing pairs of words: Evidence of a dependence between retrieval operations. Journal of Experimental Psychology. 1971;90:227–234. doi: 10.1037/h0031564.

- Myung J, Blumstein SE, Sedivy J. Playing on the typewriter and typing on the piano: Manipulation Features of Man-made Objects. Cognition. 2006;98:223–243. doi: 10.1016/j.cognition.2004.11.010.

- Myung J, Blumstein SE, Yee E, Sedivy JC, Thompson-Schill SL, Buxbaum LJ. Impaired access to manipulation features in apraxia: Evidence from eyetracking and semantic judgment tasks. Brain and Language. 2010;112:101–112. doi: 10.1016/j.bandl.2009.12.003.

- Nelson DL, McEvoy CL, Schreiber TA. The University of South Florida word association, rhyme, and word fragment norms. 1998. doi: 10.3758/bf03195588. http://www.usf.edu/FreeAssociation/

- Oliver RT, Geiger EJ, Lewandowski BC, Thompson-Schill SL. Remembrance of things touched: How sensorimotor experience affects the neural instantiation of object form. Neuropsychologia. 2009;47:239–247. doi: 10.1016/j.neuropsychologia.2008.07.027.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews Neuroscience. 2007;8:976–987. doi: 10.1038/nrn2277.

- Postler J, De Bleser R, Cholewa J, Glauche V, Hamzei F, Weiller C. Neuroimaging of the semantic system(s). Aphasiology. 2003;17(9):799–814.

- Raposo A, Moss HE, Stamatakis EA, Tyler LK. Repetition suppression and semantic enhancement: An investigation of the neural correlates of priming. Neuropsychologia. 2006;44:2284–2295. doi: 10.1016/j.neuropsychologia.2006.05.017.

- Riddoch M, Humphreys G, Price C. Routes to action: Evidence from apraxia. Cognitive Neuropsychology. 1989;6(5):437–454.

- Rissman J, Eliassen JC, Blumstein SE. An event-related FMRI investigation of implicit semantic priming. Journal of Cognitive Neuroscience. 2003;15(8):1160–1175. doi: 10.1162/089892903322598120.

- Rossell SL, Bullmore ET, Williams SC, David AS. Brain activation during automatic and controlled processing of semantic relations: A priming experiment using lexical-decision. Neuropsychologia. 2001;39:1167–1176. doi: 10.1016/s0028-3932(01)00049-5.

- Rossell SL, Price CJ, Nobre AC. The anatomy and time course of semantic priming investigated by fMRI and ERPs. Neuropsychologia. 2003;41:550–564. doi: 10.1016/s0028-3932(02)00181-1.

- Schreuder R, Flores D'Arcais GB, Glazenborg G. Effects of perceptual and conceptual similarity in semantic priming. Psychological Research. 1984;45:339–354.

- Sirigu A, Duhamel J, Poncet M. The role of sensorimotor experience in object recognition: A case of multimodal agnosia. Brain. 1991;114:2555–2573. doi: 10.1093/brain/114.6.2555.

- Taylor JR, Heindel W. Priming perceptual and conceptual semantic knowledge: Evidence for automatic and strategic effects. Cognitive Neuroscience Society 8th Annual Meeting Program. 2001.

- Thompson-Schill SL, Aguirre GK, D'Esposito M, Farah MJ. A neural basis for category and modality specificity of semantic knowledge. Neuropsychologia. 1999;37:671–676. doi: 10.1016/s0028-3932(98)00126-2.

- Thompson-Schill SL, Gabrieli JDE. Priming of visual and functional knowledge on a semantic classification task. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1999;25:41–53. doi: 10.1037//0278-7393.25.1.41.

- Thompson-Schill SL, Kan IP, Oliver RT. Neuroimaging of semantic memory. In: Cabeza R, Kingstone A, editors. Handbook of Functional Neuroimaging. 2nd ed. Cambridge, MA: MIT Press; 2006. pp. 149–190.

- Tivarus ME, Ibinson JW, Hillier A, Schmalbrock P, Beversdorf DQ. An fMRI study of semantic priming: modulation of brain activity by varying semantic distances. Cognitive and Behavioral Neurology. 2006;19(4):194–201. doi: 10.1097/01.wnn.0000213913.87642.74.

- Tranel D, Kemmerer D, Adolphs R, Damasio H, Damasio AR. Neural Correlates of Conceptual Knowledge for Actions. Cognitive Neuropsychology. 2003;20(3):409–432. doi: 10.1080/02643290244000248.

- Tyler LK, Moss HE, Durrant-Peatfield M, Levy JP. Conceptual structure and the structure of concepts: A Distributed Account of Category Specific Deficits. Brain and Language. 2000;75(2):195–231. doi: 10.1006/brln.2000.2353.

- Warrington EK, McCarthy RA. Categories of knowledge. Further fractionations and an attempted integration. Brain. 1987;110(Pt 5):1273–1296. doi: 10.1093/brain/110.5.1273.

- Wheatley T, Weisberg J, Beauchamp MS, Martin A. Automatic priming of semantically related words reduces activity in the fusiform gyrus. Journal of Cognitive Neuroscience. 2005;17:1871–1885. doi: 10.1162/089892905775008689.

- Wible CG, Han SD, Spencer MH, Kubicki M, Niznikiewicz MH, Jolesz FA, et al. Connectivity among semantic associates: An fMRI study of semantic priming. Brain and Language. 2006;97:294–305. doi: 10.1016/j.bandl.2005.11.006.