Abstract

The variational inequality problem (VIP) is considered here. We present a general algorithmic scheme which employs projections onto hyperplanes that separate balls from the feasible set of the VIP instead of projections onto the feasible set itself. Our algorithmic scheme includes the classical projection method and Fukushima’s subgradient projection method as special cases.

1 Introduction

We consider the variational inequality problem (VIP) in the Euclidean space Rn. Given a nonempty closed convex set X ⊆ Rn and a function f : Rn → Rn, the VIP is to find a point x* ∈ X such that

$$ \langle f(x^*), x - x^* \rangle \ge 0 \quad \text{for all } x \in X. \tag{1} $$

This problem has been well studied over the last decades; see, e.g., the treatise of Facchinei and Pang [7] and the review papers by Noor [12] and by Xiu and Zhang [13]. In particular, algorithmic approaches that use projections of different types to generate a sequence of iterates converging to a solution have been investigated. See, e.g., Yang [15], Yamada and Ogura [14], Auslender and Teboulle [3] or Censor, Iusem and Zenios [5], to name but a (very) few out of the vast existing literature. The importance of VIPs stems from the fact that several fundamental problems can be cast in this form; see, e.g., [7, Volume I, Subsection 1.4].

Some algorithms for solving the VIP fit into the framework of the following general iterative scheme.

Algorithm 1

Initialization

Let $\{\rho_k\}_{k=0}^{\infty}$ be a user-chosen positive real sequence, select an arbitrary starting point x0 ∈ Rn and set the iteration index k = 0.

Iterative step

Given the current iterate xk, calculate the next iterate

$$ x^{k+1} = P_X\left(x^k - \rho_k G^{-1} f(x^k)\right), $$

where G is a symmetric positive definite matrix and PX is the projection operator onto X with respect to the G-norm ∥z∥G = ⟨z, Gz⟩^{1/2}.

See Auslender [2] and consult [7, Volume II, Subsection 12.1] for more details. Such methods are particularly useful when the set X is simple enough to project onto. In general, however, one has to solve at each iterative step the minimization problem

$$ \min \left\{ \left\| x - \left(x^k - \rho_k G^{-1} f(x^k)\right) \right\|_G \;\middle|\; x \in X \right\}. $$

The efficiency of such a projection method may be seriously affected by the need to solve such optimization problems at each iterative step.
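As an illustration only, the following Python sketch runs the iteration of Algorithm 1 in the easiest setting we could think of, which is an assumption not made in the text: G is diagonal and X is a box, so the G-projection onto X reduces to componentwise clipping. The names `projection_method` and `project_box_g` are hypothetical.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def project_box_g(y: Sequence[float], lower: Sequence[float],
                  upper: Sequence[float]) -> Vector:
    """G-norm projection onto a box when G is diagonal.

    With G = diag(g_1, ..., g_n) the objective sum_i g_i (x_i - y_i)^2
    separates by coordinate, so the projection is componentwise clipping.
    """
    return [min(max(yi, lo), hi) for yi, lo, hi in zip(y, lower, upper)]


def projection_method(f: Callable[[Vector], Vector], g_diag: Sequence[float],
                      lower: Sequence[float], upper: Sequence[float],
                      x0: Sequence[float], rho: Callable[[int], float],
                      max_iter: int = 1000) -> Vector:
    """x^{k+1} = P_X(x^k - rho_k G^{-1} f(x^k)) for a box X and diagonal G."""
    x = list(x0)
    for k in range(max_iter):
        fx = f(x)
        # G^{-1} f(x^k) is an elementwise division when G is diagonal.
        shifted = [xi - rho(k) * fi / gi for xi, fi, gi in zip(x, fx, g_diag)]
        x_next = project_box_g(shifted, lower, upper)
        if x_next == x:  # a fixed point of the iteration solves the VIP
            return x_next
        x = x_next
    return x


# Usage: f(x) = x - c is strongly monotone; over the box [0, 1]^2 the VIP
# solution is the clipped point (1.0, 0.5).
c = [2.0, 0.5]
sol = projection_method(lambda x: [xi - ci for xi, ci in zip(x, c)],
                        g_diag=[1.0, 4.0], lower=[0.0, 0.0], upper=[1.0, 1.0],
                        x0=[0.0, 0.0], rho=lambda k: 0.5)
```

For a general X, the single call to `project_box_g` is exactly where the minimization problem above would have to be solved at every iteration.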

An orthogonal projection of a point z onto a set X can be viewed as an orthogonal projection of z onto the hyperplane H which separates z from X, and supports X at the closest point to z in X. But, of course, at the time of performing such an orthogonal projection, neither the closest point to z in X, nor the separating and supporting hyperplane H are available. In view of the simplicity of an orthogonal projection onto a hyperplane, it is natural to ask whether one could use other separating supporting hyperplanes instead of that particular hyperplane H through the closest point to z. Aside from theoretical interest, this may lead to algorithms useful in practice, provided that the computational effort of finding such other hyperplanes favorably competes with the work involved in performing orthogonal projections directly onto the given sets.
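The simplicity referred to here can be made explicit. For a hyperplane H = {u ∈ Rn | ⟨a, u⟩ = b} with a ≠ 0 and its half-space H− = {u ∈ Rn | ⟨a, u⟩ ≤ b} (the symbols a and b are introduced only for this illustration), the orthogonal projections, and the projection onto H− with respect to the G-norm, are given by the standard closed-form expressions:

```latex
P_H(z) = z - \frac{\langle a, z\rangle - b}{\|a\|^2}\, a, \qquad
P_{H^-}(z) = z - \frac{\max\{0,\ \langle a, z\rangle - b\}}{\|a\|^2}\, a, \qquad
P^{G}_{H^-}(z) = z - \frac{\max\{0,\ \langle a, z\rangle - b\}}{\langle a, G^{-1} a\rangle}\, G^{-1} a .
```

Each formula costs a couple of inner products (plus one linear solve with G in the G-norm case), in contrast with the general minimization problem above.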

To circumvent the difficulties associated with orthogonal projections onto the feasible set of (1), Fukushima [10] developed a method that utilizes outer approximations of X. His method replaces the orthogonal projection onto the set X by a projection onto a half-space containing X, which is easier to calculate. Letting X ≔ {x ∈ Rn | g(x) ≤ 0}, where g : Rn → R is convex, Fukushima’s algorithm is as follows.

Algorithm 2

Initialization

Let $\{\rho_k\}_{k=0}^{\infty}$ be a user-chosen positive real sequence, select an arbitrary starting point x0 ∈ Rn and set the iteration index k = 0.

Iterative step

Given the current iterate xk,

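- Choose a subgradient ξk ∈ ∂g(xk) of g at xk and let

$$ T_k := \{ z \in \mathbb{R}^n \mid g(x^k) + \langle \xi^k, z - x^k \rangle \le 0 \}. $$

- Calculate the “shifted point”

$$ z^k := \begin{cases} x^k - \rho_k \dfrac{G^{-1} f(x^k)}{\| f(x^k) \|}, & \text{if } f(x^k) \neq 0, \\ x^k, & \text{if } f(x^k) = 0, \end{cases} $$

and then the next iterate xk+1 is the projection of zk onto the half-space Tk with respect to the G-norm, namely, $x^{k+1} = P_{T_k}(z^k)$. If xk+1 = xk, then stop; otherwise, set k = k + 1 and return to the beginning of the iterative step.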
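The following Python sketch of Algorithm 2 is ours, not Fukushima's code. It assumes a diagonal G (so G^{-1} is an elementwise division), the normalized shifted point z^k = x^k − ρ_k G^{-1} f(x^k)/∥f(x^k)∥ with z^k = x^k when f(x^k) = 0 (our reading, consistent with the case distinction on f(xk) made throughout Section 3), and the exact stopping test x^{k+1} = x^k. The projection onto Tk uses the closed form for a G-projection onto a half-space; all function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(ui * vi for ui, vi in zip(u, v))


def project_halfspace_g(z: Sequence[float], a: Sequence[float], b: float,
                        g_diag: Sequence[float]) -> Vector:
    """G-norm projection of z onto {u : <a, u> <= b} with G = diag(g_diag)."""
    ginv_a = [ai / gi for ai, gi in zip(a, g_diag)]
    violation = dot(a, z) - b
    denom = dot(a, ginv_a)
    if violation <= 0.0 or denom == 0.0:
        # z already lies in the half-space. With a zero subgradient (a = 0) and
        # X nonempty, g(x^k) <= 0 holds, so T_k is all of R^n and z is kept.
        return list(z)
    lam = violation / denom
    return [zi - lam * ci for zi, ci in zip(z, ginv_a)]


def fukushima_relaxed_projection(f: Callable[[Vector], Vector],
                                 g: Callable[[Vector], float],
                                 subgrad: Callable[[Vector], Vector],
                                 g_diag: Sequence[float], x0: Sequence[float],
                                 rho: Callable[[int], float],
                                 max_iter: int = 10_000) -> Vector:
    x = list(x0)
    for k in range(max_iter):
        fx = f(x)
        norm_fx = math.sqrt(dot(fx, fx))
        if norm_fx == 0.0:
            z = list(x)
        else:  # shifted point z^k = x^k - rho_k G^{-1} f(x^k) / ||f(x^k)||
            z = [xi - rho(k) * fi / (gi * norm_fx)
                 for xi, fi, gi in zip(x, fx, g_diag)]
        xi_k = subgrad(x)
        # T_k = {u : g(x^k) + <xi^k, u - x^k> <= 0}
        #     = {u : <xi^k, u> <= <xi^k, x^k> - g(x^k)}
        x_next = project_halfspace_g(z, xi_k, dot(xi_k, x) - g(x), g_diag)
        if x_next == x:  # stopping rule x^{k+1} = x^k
            return x_next
        x = x_next
    return x


def disk(u: Vector) -> float:
    """X = closed unit disk written as {u : g(u) <= 0}."""
    return u[0] ** 2 + u[1] ** 2 - 1.0


def disk_grad(u: Vector) -> Vector:
    return [2.0 * u[0], 2.0 * u[1]]


c = [2.0, 0.0]  # f(x) = x - c, so the VIP solution is the point of X nearest c
sol = fukushima_relaxed_projection(lambda x: [x[0] - c[0], x[1] - c[1]],
                                   disk, disk_grad, [1.0, 1.0], [0.0, 0.0],
                                   lambda k: 1.0 / (k + 1))
```

With X the unit disk and f(x) = x − c, the VIP solution is the point of X nearest to c; for c = (2, 0) the sketch reaches (1, 0) after two steps and then stops because xk+1 = xk.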

Since the bounding hyperplanes of the subgradient half-spaces Tk used by Fukushima separate the current iterate xk from the set X, the question again arises whether other separating hyperplanes can be used in the algorithm while retaining convergence to the solution.

This question presents a theoretical challenge, and we offer here answers that hold under some not too restrictive conditions. Under these conditions we show that the hyperplanes need to separate not just the point xk from the feasible set of (1), but rather a “small” ball around xk from X. This is inspired by our earlier work [1] on the convex feasibility problem. Whether or not our current restrictions can be relaxed or removed remains to be seen.

Our goal is to present the structural algorithmic discovery that both Algorithm 1 and Algorithm 2 are realizations of a more general algorithmic principle. Having achieved this, we are, for the moment, less concerned with the minimal strength of the conditions under which our results hold. This question, and generalizations of the new algorithmic structure to algorithms that use two projections per iteration for the VIP, are currently under investigation. Our work is admittedly a theoretical development, and no numerical advantages are claimed at this point. The large “degree of freedom” in choosing, from a wide family of half-spaces, the supersets onto which the projections of the algorithm are performed may include specific algorithms that have not yet been explored. In Section 2 we present the algorithmic scheme, and in Section 3 we give our convergence analysis. Section 4 discusses special cases.

2 The Algorithmic Scheme

2.1 Assumptions

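Let X ≔ {x ∈ Rn | g(x) ≤ 0}, where g : Rn → R is convex. Let G be a symmetric positive definite matrix and denote the distance from a point x ∈ Rn to the set X with respect to the norm ∥ · ∥G by

$$ \operatorname{dist}(x, X) := \min \{ \| x - y \|_G \mid y \in X \}. $$

For any ε > 0, we denote

$$ X_\varepsilon := \{ x \in \mathbb{R}^n \mid \operatorname{dist}(x, X) < \varepsilon \}. $$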

Following [10], we assume that the following conditions are satisfied.

Condition 3

f is continuous on Xε for some ε > 0.

Condition 4

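f is strongly monotone with constant α > 0 on Xε for some ε > 0, i.e.,

$$ \langle f(x) - f(y), x - y \rangle \ge \alpha \| x - y \|_G^2 \quad \text{for all } x, y \in X_\varepsilon. \tag{2} $$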

Condition 5

For some y ∈ X, there exist a β > 0 and a bounded set D ⊂ Rn such that

$$ \langle f(x), x - y \rangle \ge \beta \| f(x) \| \quad \text{for all } x \notin D. $$

It is well known that under Conditions 3 and 4 problem (1) has a unique solution; see, e.g., Kinderlehrer and Stampacchia [11, Corollary 4.3, p. 14].

2.2 The algorithmic scheme

In order to present the algorithmic scheme a few definitions are needed.

Definition 6

Let G be a symmetric positive definite matrix. Given 0 ≤ δ ≤ 1, a closed convex set X ⊆ Rn and a point x ∈ Rn,

- B(x,X,δ) ≔ B(x, δ dist(x, X)) = {z ∈ Rn | ∥x − z∥G ≤ δ dist(x, X)} is the G-ball centered at x with radius δ dist(x, X),

- for any x ∉ int X, denote by ℋ(x,X,δ) the set of all hyperplanes which separate B(x,X,δ) from X,

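- for x, y ∈ Rn, define the mapping

$$ \mathcal{A}_{X,\delta}(x, y) := \begin{cases} \{ P_{H^-}(y) \mid H \in \mathcal{H}(x, X, \delta) \}, & \text{if } x \notin \operatorname{int} X, \\ \{ y \}, & \text{if } x \in \operatorname{int} X, \end{cases} $$

where PH− is the projection operator, with respect to the G-norm, onto the half-space H− whose bounding hyperplane is H and such that X ⊆ H−.

The mapping 𝒜 defined above maps a quadruple (x, y, X, δ) onto a set. A selection from 𝒜X,δ(x,y) means that, if x ∉ int X, a specific hyperplane H ∈ ℋ(x,X,δ) is chosen and PH−(y) is selected. If x ∈ int X, then y itself is selected.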
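Whether a given hyperplane belongs to ℋ(x, X, δ) can be tested directly when dist(x, X) happens to be known, as in toy examples. The sketch below assumes a diagonal G, a hyperplane H = {u | ⟨a, u⟩ = b} for which the caller already guarantees X ⊆ {u | ⟨a, u⟩ ≤ b}, and a known value of dist(x, X); it then checks only that the G-ball B(x, X, δ) lies on the other side of H. The function names are hypothetical.

```python
import math
from typing import Sequence


def ball_beyond_hyperplane(a: Sequence[float], b: float, x: Sequence[float],
                           radius: float, g_diag: Sequence[float]) -> bool:
    """True if the G-ball {u : ||u - x||_G <= radius} lies in {u : <a, u> >= b}.

    The minimum of <a, u> over the ball equals <a, x> - radius * sqrt(<a, G^{-1} a>),
    because the dual norm of ||.||_G is the G^{-1}-norm. Here G = diag(g_diag).
    """
    dual_norm = math.sqrt(sum(ai * ai / gi for ai, gi in zip(a, g_diag)))
    return sum(ai * xi for ai, xi in zip(a, x)) - radius * dual_norm >= b


def in_family_h(a: Sequence[float], b: float, x: Sequence[float],
                dist_x: float, delta: float, g_diag: Sequence[float]) -> bool:
    """Hypothetical test of H = {u : <a, u> = b} being in the family H(x, X, delta).

    The caller must guarantee X lies in {u : <a, u> <= b} and must supply
    dist_x = dist(x, X); only the ball side of the separation is checked.
    """
    return ball_beyond_hyperplane(a, b, x, delta * dist_x, g_diag)


# X = closed unit disk, G = I, x = (2, 0), so dist(x, X) = 1.
print(in_family_h([1.0, 0.0], 1.0, [2.0, 0.0], 1.0, 0.5, [1.0, 1.0]))  # True
print(in_family_h([1.0, 0.0], 1.8, [2.0, 0.0], 1.0, 0.5, [1.0, 1.0]))  # False
```

The half-space {u1 ≤ 1.8} contains the disk but its boundary passes too close to x for δ = 0.5, so it is rejected, while the supporting line u1 = 1 qualifies even for δ = 1.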

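Let $\{\rho_k\}_{k=0}^{\infty}$ be a sequence of positive numbers satisfying

$$ \lim_{k \to \infty} \rho_k = 0 \quad \text{and} \quad \sum_{k=0}^{\infty} \rho_k = \infty. \tag{3} $$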
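As a concrete instance, a harmonic-type sequence has both properties of the step sizes that the convergence analysis relies on: ρk → 0 (used in the proof of Lemma 13) and unbounded partial sums (used, as we read it, when the inequalities (29) are added up in the proof of Theorem 17). The constant c is an arbitrary illustrative choice.

```latex
\rho_k = \frac{c}{k+1}\ \ (c > 0):\qquad
\lim_{k\to\infty} \rho_k = 0,
\qquad
\sum_{k=0}^{K} \rho_k = c \sum_{j=1}^{K+1} \frac{1}{j} \;\ge\; c \ln(K+2) \;\longrightarrow\; \infty \quad (K \to \infty).
```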

Our algorithmic scheme for the VIP is as follows.

Algorithm 7

Initialization

Let $\{\rho_k\}_{k=0}^{\infty}$ be a user-chosen positive real sequence that fulfills (3). Choose a constant δ such that 0 < δ ≤ 1, select an arbitrary starting point x0 ∈ Rn and set k = 0.

Iterative step

Given the current iterate xk,

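- Calculate the “shifted point”

$$ z^k := \begin{cases} x^k - \rho_k \dfrac{G^{-1} f(x^k)}{\| f(x^k) \|}, & \text{if } f(x^k) \neq 0, \\ x^k, & \text{if } f(x^k) = 0. \end{cases} \tag{4} $$

- Calculate the next iterate by

$$ x^{k+1} \in \mathcal{A}_{X,\delta}(x^k, z^k), \tag{5} $$

that is, xk+1 is a selection from 𝒜X,δ(xk, zk). If xk+1 = xk, stop; otherwise, set k = k + 1 and return to the beginning of the iterative step.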

In what follows, we denote by Pk the projection operator, with respect to the G-norm, onto the half-space $H_k^-$, where Hk ∈ ℋ(xk, X, δ) is the selected hyperplane. Thus, in (5) we have

$$ x^{k+1} = P_k(z^k). \tag{6} $$

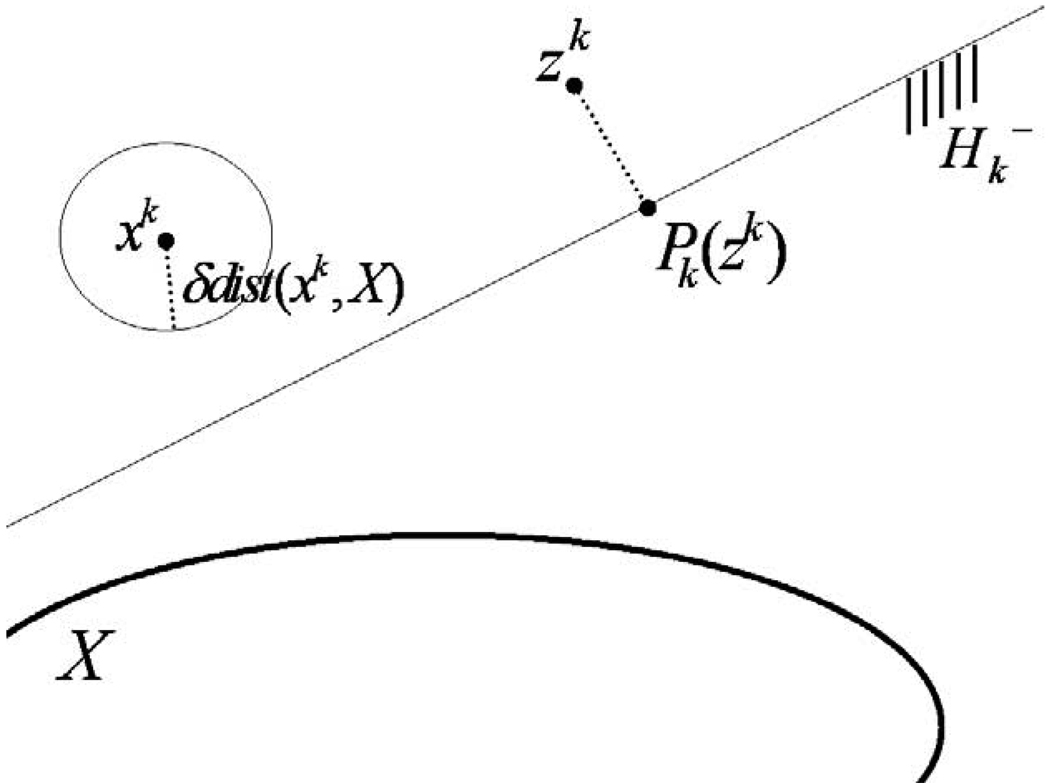

The iterative step of this algorithmic scheme is illustrated in Figure 1.

Figure 1.

Illustration of the algorithmic scheme in Algorithm 7.
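A compact Python sketch of the scheme follows, under several assumptions of ours: G is diagonal, the shifted point is the normalized step (4) as we read it, and the choice of hyperplane is delegated to a hypothetical `oracle` that returns a half-space (a, b), meaning {u | ⟨a, u⟩ ≤ b}, which contains X and whose boundary separates B(xk, X, δ) from X, or `None` when xk ∈ int X, in which case the shifted point zk is kept. The demo oracle covers only the unit disk with G = I and δ = 1: it returns the half-space supporting X at the projection of xk onto X.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Vector = List[float]
HalfSpace = Tuple[Vector, float]  # (a, b) encodes {u : <a, u> <= b}


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(ui * vi for ui, vi in zip(u, v))


def project_halfspace_g(z: Sequence[float], a: Sequence[float], b: float,
                        g_diag: Sequence[float]) -> Vector:
    """G-norm projection of z onto {u : <a, u> <= b} with G = diag(g_diag)."""
    ginv_a = [ai / gi for ai, gi in zip(a, g_diag)]
    violation = dot(a, z) - b
    denom = dot(a, ginv_a)
    if violation <= 0.0 or denom == 0.0:
        return list(z)
    lam = violation / denom
    return [zi - lam * ci for zi, ci in zip(z, ginv_a)]


def algorithm7(f: Callable[[Vector], Vector],
               oracle: Callable[[Vector], Optional[HalfSpace]],
               g_diag: Sequence[float], x0: Sequence[float],
               rho: Callable[[int], float], max_iter: int = 20_000) -> Vector:
    """Shifted point, then G-projection onto the half-space chosen by the oracle."""
    x = list(x0)
    for k in range(max_iter):
        fx = f(x)
        nfx = math.sqrt(dot(fx, fx))
        if nfx == 0.0:
            z = list(x)
        else:  # z^k = x^k - rho_k G^{-1} f(x^k) / ||f(x^k)||
            z = [xi - rho(k) * fi / (gi * nfx) for xi, fi, gi in zip(x, fx, g_diag)]
        h = oracle(x)  # selection of H_k, or None when x^k is interior
        x_next = z if h is None else project_halfspace_g(z, h[0], h[1], g_diag)
        if x_next == x:  # stopping rule x^{k+1} = x^k (Theorem 9)
            return x_next
        x = x_next
    return x


def unit_disk_support_oracle(x: Vector) -> Optional[HalfSpace]:
    """Hypothetical oracle for X = unit disk, G = I, delta = 1."""
    r = math.sqrt(dot(x, x))
    if r < 1.0:
        return None
    p = [xi / r for xi in x]  # projection of x onto the unit disk
    return p, 1.0             # {u : <p, u> <= 1} supports X at p


c = [2.0, 1.0]  # f(x) = x - c; the VIP solution is c / ||c||
sol = algorithm7(lambda x: [x[0] - c[0], x[1] - c[1]],
                 unit_disk_support_oracle, [1.0, 1.0], [0.0, 0.0],
                 lambda k: 1.0 / (k + 1))
```

Swapping the oracle is the only change needed to obtain other members of the scheme, and the oracle never needs the radius δ dist(xk, X) itself.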

Remark 8

Observe that in practice there is no need to calculate the radius δ dist(xk, X) of the ball B(xk, X, δ). If this radius had to be calculated, it would amount to performing a projection of xk onto X, which is the very thing that we are trying to avoid. When deriving a specific algorithm from the algorithmic scheme, all that is needed is to show that the specific algorithm indeed “chooses” its hyperplanes in accordance with the requirement that they separate such balls B(xk, X, δ) from the feasible set of (1). We demonstrate this in Section 4 below.

3 Convergence

First we show that if Algorithm 7 stops then it has reached the solution of the VIP.

Theorem 9

If xk+1 = xk occurs for some k ≥ 0 in Algorithm 7, then xk is the solution of problem (1).

Proof

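First assume that f(xk) ≠ 0. Then xk+1 = xk is possible only if the radius of B(xk, X, δ) is zero, which implies that xk ∈ X since δ > 0. By definition, Pk(ȳ) is the solution of the problem $\min\{\tfrac{1}{2}\|x - \bar{y}\|_G^2 \mid x \in H_k^-\}$, where $H_k^-$ is the half-space determined by Hk and X ⊆ $H_k^-$. Applying [4, Theorem 2.4.2] with the Bregman function $h(x) = \tfrac{1}{2}\|x\|_G^2$, whose zone is Rn, for the set $H_k^-$, we get

$$ \langle \nabla h(\bar{y}) - \nabla h(P_k(\bar{y})), w - P_k(\bar{y}) \rangle \le 0 \quad \text{for all } w \in H_k^-, $$

where here ∇h(ȳ) = Gȳ and ∇h(Pk(ȳ)) = GPk(ȳ). So we have

$$ \langle G(\bar{y} - P_k(\bar{y})), w - P_k(\bar{y}) \rangle \le 0 \quad \text{for all } w \in H_k^-. \tag{7} $$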

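Taking w = x̄ ∈ X and ȳ = zk in (7) (recall that X ⊆ $H_k^-$), we get

$$ \langle G(z^k - P_k(z^k)), \bar{x} - P_k(z^k) \rangle \le 0 \quad \text{for all } \bar{x} \in X, \tag{8} $$

which, by (4) and (6), implies that for all x̄ ∈ X

$$ \left\langle G\left(x^k - \rho_k \frac{G^{-1} f(x^k)}{\|f(x^k)\|} - x^{k+1}\right), \bar{x} - x^{k+1} \right\rangle \le 0. $$

Since we assume that xk+1 = xk, this reads

$$ \left\langle -\rho_k \frac{f(x^k)}{\|f(x^k)\|}, \bar{x} - x^k \right\rangle \le 0, $$

so we get

$$ \frac{\rho_k}{\|f(x^k)\|} \langle f(x^k), \bar{x} - x^k \rangle \ge 0, $$

and then ρk/∥f(xk)∥ > 0, together with xk ∈ X, leads to

$$ \langle f(x^k), \bar{x} - x^k \rangle \ge 0 \quad \text{for all } \bar{x} \in X, $$

that is, xk solves (1).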

On the other hand, if f(xk) = 0, then (8) holds with zk = xk, i.e.,

or, by (6) and x k+1 = xk,

which is true for all x̄ ∈ X since and the proof is complete.

In the remainder of this section we suppose that Algorithm 7 generates an infinite sequence $\{x^k\}_{k=0}^{\infty}$ and establish several lemmas that will be useful in proving the convergence of our algorithmic scheme. The next lemma was proved in [8] for G = I and under the assumption that E is compact.

Lemma 10

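Let A, E and F be nonempty closed convex sets in Rn such that A ⊂ E ⊂ F. For any point x ∈ F, let y be the point in E closest to x with respect to the G-norm. Then we have

$$ \| y - a \|_G^2 \le \| x - a \|_G^2 - \| x - y \|_G^2 \quad \text{for all } a \in A. \tag{9} $$

Furthermore,

$$ \operatorname{dist}(y, A)^2 \le \operatorname{dist}(x, A)^2 - \| x - y \|_G^2. \tag{10} $$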

Proof

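Since y is the optimal solution of the problem $\min\{\|x - e\|_G \mid e \in E\}$, it must satisfy the inequality (see, e.g., [4, Theorem 2.4.2])

$$ \langle G(x - y), e - y \rangle \le 0 \quad \text{for all } e \in E. $$

Thus, for all e ∈ E,

$$ \| x - e \|_G^2 = \| x - y \|_G^2 + \| y - e \|_G^2 + 2 \langle G(x - y), y - e \rangle \ge \| x - y \|_G^2 + \| y - e \|_G^2, $$

and, since A ⊂ E, we get

$$ \| x - a \|_G^2 \ge \| x - y \|_G^2 + \| y - a \|_G^2 \quad \text{for all } a \in A, $$

which proves (9). In order to prove (10), let z̃ ∈ A be the closest point to x ∈ F. Then, using (9), we obtain

$$ \operatorname{dist}(y, A)^2 \le \| y - \tilde{z} \|_G^2 \le \| x - \tilde{z} \|_G^2 - \| x - y \|_G^2 = \operatorname{dist}(x, A)^2 - \| x - y \|_G^2, $$

which completes the proof.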
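A quick numerical sanity check of Lemma 10 in a toy instance of our choosing: G = I, A the closed unit disk, E = {u ∈ R2 | u1 ≤ 1} and F = R2, so that A ⊂ E ⊂ F and the closest point of E to x is obtained by clipping the first coordinate. The check samples points a ∈ A and tests the three-point inequality ∥y − a∥² ≤ ∥x − a∥² − ∥x − y∥² derived in the proof, together with dist(y, A)² ≤ dist(x, A)² − ∥x − y∥². It is a sketch for intuition only; `check_lemma10` is a hypothetical name.

```python
import math
import random
from typing import Tuple

Point = Tuple[float, float]


def sq(u: Point, v: Point) -> float:
    """Squared Euclidean distance (G = I in this toy example)."""
    return (u[0] - v[0]) ** 2 + (u[1] - v[1]) ** 2


def dist_unit_disk(p: Point) -> float:
    return max(0.0, math.hypot(p[0], p[1]) - 1.0)


def check_lemma10(x: Point, samples: int = 2000, seed: int = 0) -> bool:
    # A = unit disk, E = {u : u_1 <= 1}, F = R^2.
    y = (min(x[0], 1.0), x[1])          # closest point to x in E
    gap = sq(x, y)
    rng = random.Random(seed)
    for _ in range(samples):
        t = rng.uniform(0.0, 2.0 * math.pi)
        s = math.sqrt(rng.random())     # uniform sample from the disk A
        a = (s * math.cos(t), s * math.sin(t))
        if sq(y, a) > sq(x, a) - gap + 1e-12:    # three-point inequality (9)
            return False
    # distance inequality (10)
    return dist_unit_disk(y) ** 2 <= dist_unit_disk(x) ** 2 - gap + 1e-12


print(check_lemma10((3.0, 2.0)))  # True
```

In this instance the slack in the three-point inequality equals 2(x1 − 1)(1 − a1) ≥ 0, which is the inner-product term dropped in the proof.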

Lemma 11

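Let ȳ ∈ Rn be an arbitrary point. Then, in Algorithm 7, for all k ≥ 0, we have the inequalities

$$ \| P_k(\bar{y}) - x \|_G^2 \le \| \bar{y} - x \|_G^2 - \| \bar{y} - P_k(\bar{y}) \|_G^2 \quad \text{for all } x \in X $$

and

$$ \operatorname{dist}(P_k(\bar{y}), X)^2 \le \operatorname{dist}(\bar{y}, X)^2 - \| \bar{y} - P_k(\bar{y}) \|_G^2. $$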

Proof

This follows from Lemma 10 with A = X, E = $H_k^-$ and F = Rn. The next lemma is quoted from [10, Lemma 2].

Lemma 12

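Let $\{a_k\}_{k=0}^{\infty}$ and $\{b_k\}_{k=0}^{\infty}$ be sequences of nonnegative numbers, and let μ ∈ [0,1) be a constant. If the inequalities

$$ a_{k+1} \le \mu a_k + b_k, \quad k = 0, 1, 2, \ldots, $$

hold and if $\lim_{k\to\infty} b_k = 0$, then $\lim_{k\to\infty} a_k = 0$.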

Lemma 13

If Condition 5 is satisfied, then any sequence $\{x^k\}_{k=0}^{\infty}$ generated by Algorithm 7 is bounded.

Proof

The proof is structured along the lines of [10, Lemma 3]. First assume that f(xk) ≠ 0. Let y ∈ X be a point for which Condition 5 holds and let M > 0 be such that ∥x − y∥G < M, for all x ∈ D, where D is a bounded set given in Condition 5. Lemma 11 implies that, for each zk ∈ Rn,

| (11) |

Therefore,

| (12) |

Thus, if ∥xk − y∥G ≥ M, then we have, by (12) and Condition 5,

| (13) |

From Demmel [6, (5.2), page 199] we have

where υ is the largest eigenvalue of G−1, so that

| (14) |

where ν is the smallest eigenvalue of G. By (13) and (14)

Since limk→∞ ρk = 0, the last inequality implies

| (15) |

provided that k is sufficiently large. On the other hand, since y ∈ X,

Using the triangle inequality with G-norms and (14) we obtain

so that

| (16) |

for all sufficiently large k, where ε > 0 is a small constant. Inequalities (15) and (16) imply that $\{x^k\}_{k=0}^{\infty}$ is bounded. If f(xk) = 0, we have by (6) and (11)

which implies (16) and the rest follows.

Lemma 14

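Let X ⊆ Rn be a nonempty closed convex set and let 0 < δ ≤ 1. Let W ⊂ Rn be a nonempty convex compact set and let W\X ≔ {x ∈ W | x ∉ X}. Denote by σ(x) a selection from 𝒜X,δ(x,x). Then there exists a constant μ ∈ [0,1) such that

$$ \operatorname{dist}(\sigma(x), X) \le \mu \operatorname{dist}(x, X) \quad \text{for all } x \in W \setminus X. $$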

Proof

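Since σ(x) is, by definition, the closest point to x in the convex set H−, and X ⊆ H−, we get

$$ \operatorname{dist}(\sigma(x), X)^2 \le \operatorname{dist}(x, X)^2 - \| x - \sigma(x) \|_G^2. \tag{17} $$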

This holds because

| (18) |

and, by Lemma 10, we get

| (19) |

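(18) and (19) imply (17). On the other hand, since σ(x) is a selection from 𝒜X,δ(x,x) and x ∉ X, we have σ(x) = PH−(x), where H ∈ ℋ(x,X,δ). Because H separates the ball B(x,X,δ) from X ⊆ H−, every point of H− lies at G-distance at least δ dist(x, X) from x, so

$$ \| x - \sigma(x) \|_G \ge \delta \operatorname{dist}(x, X) \tag{20} $$

(if x ∈ X, then both sides of (20) are zero). Since δ is a positive constant such that 0 < δ ≤ 1, we have

$$ \delta^2 \operatorname{dist}(x, X)^2 \le \| x - \sigma(x) \|_G^2, $$

so, by (17), for all x ∈ W\X

$$ \operatorname{dist}(\sigma(x), X)^2 \le (1 - \delta^2) \operatorname{dist}(x, X)^2. $$

Since also 0 ≤ 1 − δ² < 1, taking μ ≔ (1 − δ²)^{1/2} ∈ [0,1), we get

$$ \operatorname{dist}(\sigma(x), X) \le \mu \operatorname{dist}(x, X) \quad \text{for all } x \in W \setminus X, $$

which completes the proof.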

Lemma 15

For any sequence $\{x^k\}_{k=0}^{\infty}$ generated by Algorithm 7, we have limk→∞ dist(xk,X) = 0.

Proof

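Assume that f(xk) ≠ 0. By (10) of Lemma 10 we have

$$ \operatorname{dist}(P_k(x^k), X)^2 \le \operatorname{dist}(x^k, X)^2 - \| x^k - P_k(x^k) \|_G^2 $$

and, by Lemma 14, there exists a constant μ ∈ [0,1) such that

$$ \operatorname{dist}(P_k(x^k), X) \le \mu \operatorname{dist}(x^k, X). \tag{21} $$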

On the other hand, the nonexpansiveness of the projection operator and reuse of (14) imply

Therefore,

| (22) |

Let sk = PX (Pk (xk)), namely,

Then, by the triangle inequality, we get

Now, since sk ∈ X, we have

From the last three inequalities we get

and, using (21) and (22), we have

So the result of this case is obtained by Lemma 12 and (3). In case f(xk) = 0, (11) becomes

and, by Lemma 12 with and bk = 0, for all k ≥ 0, the desired result is obtained.

Lemma 16

For any iterative sequence $\{x^k\}_{k=0}^{\infty}$ generated by Algorithm 7, $\lim_{k\to\infty} \|x^{k+1} - x^k\|_G = 0$.

Proof

Assume first that f(xk) ≠ 0. Using the triangle inequality for the G-norm, we have

| (23) |

where the last inequality follows from (22) and the equality

Since , we have

thus,

By Lemma 15 and (3) we now obtain the required result. In case f(xk) = 0, (23) becomes

where the last inequality follows from . By Lemma 15 and taking limits as k → ∞, we get the required result.

Theorem 17

Consider the VIP(X, f) (1) and assume that Conditions 3–5 hold. Then any sequence $\{x^k\}_{k=0}^{\infty}$ generated by Algorithm 7 converges to the unique solution x* of problem (1).

Proof

By Lemma 15, xk ∈ Xε, for all sufficiently large k, where Xε is the set given in Conditions 3 and 4. (We assume without loss of generality that the value of ε is common in both conditions.) From Condition 4 we have

and

So, we obtain

| (24) |

Let λ be an arbitrary positive number. Since x* satisfies (1), it follows from Lemmas 13 and 15 that the following inequalities hold for all sufficiently large k:

| (25) |

Using the Cauchy-Schwarz inequality

By Lemma 13, $\{x^k\}_{k=0}^{\infty}$ is bounded, and with Condition 3 we get that $\{f(x^k)\}_{k=0}^{\infty}$ is also bounded. Lemma 16 guarantees

| (26) |

for all sufficiently large k. Applying (25) and (26) to (24), we obtain

| (27) |

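Let us divide the indices of $\{x^k\}_{k=0}^{\infty}$ as follows:

$$ \Gamma := \{ k \ge 0 \mid f(x^k) = 0 \}, \qquad \bar{\Gamma} := \{ k \ge 0 \mid f(x^k) \neq 0 \}. $$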

Equation (27) implies, due to the arbitrariness of λ, that for f(xk) = 0

Now consider the indices in Γ̄ and suppose that there exists a ζ > 0 such that

| (28) |

By Lemma 11

So, we get

Since λ in (27) is arbitrary, we choose λ = αζ²/4,

provided that k is sufficiently large. So,

By Lemma 13, $\{x^k\}_{k=0}^{\infty}$ is bounded; thus Condition 3 implies that $\{f(x^k)\}_{k=0}^{\infty}$ is also bounded. So there exists a τ > 0 such that

so that,

provided that k is sufficiently large. Then there exists an integer k̄ ∈ Γ̄ such that

| (29) |

By adding the inequalities (29) from k = k̄ to k̄ + ℓ, over indices k ∈ Γ̄, we have

for any ℓ > 0. However, this is impossible due to (3), so there exists no ζ > 0 such that (28) is satisfied. Thus, {xk}k∈Γ̄ contains a subsequence {xk}k∈Γ̂, Γ̂ ⊆ Γ̄, converging to x*, and so there is a subsequence {xk}k∈Γ∪Γ̂ of the whole sequence converging to x*. In order to prove that the entire sequence converges to x*, suppose to the contrary that there exists a subsequence of $\{x^k\}_{k=0}^{\infty}$ converging to x̂ ∈ X, x̂ ≠ x*. Since, by Lemma 16, $\lim_{k\to\infty}\|x^{k+1} - x^k\|_G = 0$, there must exist a ζ > 0 and an arbitrarily large integer j ∈ Γ̄ such that

| (30) |

However, if j is sufficiently large, we may apply an argument similar to that used to derive (29), and obtain the inequality

which contradicts (30). Therefore, the sequence $\{x^k\}_{k=0}^{\infty}$ must converge to the solution x*.

4 Special Cases of the Algorithmic Scheme

Our general algorithmic scheme (Algorithm 7) includes as a special case the classical projection method of Algorithm 1. This can be seen by using δ = 1 in Algorithm 7, which reduces the family of potential half-spaces to a singleton which includes only the half-space that supports X at the projection of zk (of (4)) onto X.

Another important special case is obtained from Algorithm 7 if we choose the convex function g(x) ≔ dist(x, X). This is no restriction, since any closed convex set X can be represented in this way, and Fukushima’s method of Algorithm 2 is then obtained as a special case of our algorithmic scheme.

4.1 An interior anchor point algorithm

To illustrate that additional algorithms can be derived from Algorithm 7, we present below an algorithm that uses other hyperplanes to project onto. This particular realization requires the interior int X to be nonempty. The idea of using an interior point as an anchor to generate a separating hyperplane appeared previously in [1] for the convex feasibility problem and in [9] for an outer approximation method.

Algorithm 18

Initialization

Let y ∈ int X be fixed and given. Select an arbitrary starting point x0 ∈ Rn and set k = 0.

Iterative step

Given the current iterate xk,

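- If xk ∈ X, set xk+1 = xk and stop.

- Otherwise, calculate the “shifted point”

$$ z^k := \begin{cases} x^k - \rho_k \dfrac{G^{-1} f(x^k)}{\| f(x^k) \|}, & \text{if } f(x^k) \neq 0, \\ x^k, & \text{if } f(x^k) = 0, \end{cases} \tag{31} $$

and construct the line Lk through the points xk and y. Denote by wk the point closest to xk in the set Lk ∩ X.

- Construct a hyperplane Hk separating xk from X and supporting X at wk.

- Compute $x^{k+1} = P_{H_k^-}(z^k)$, where $H_k^-$ is the half-space whose bounding hyperplane is Hk and X ⊆ $H_k^-$, set k = k + 1 and return to the beginning of the iterative step.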

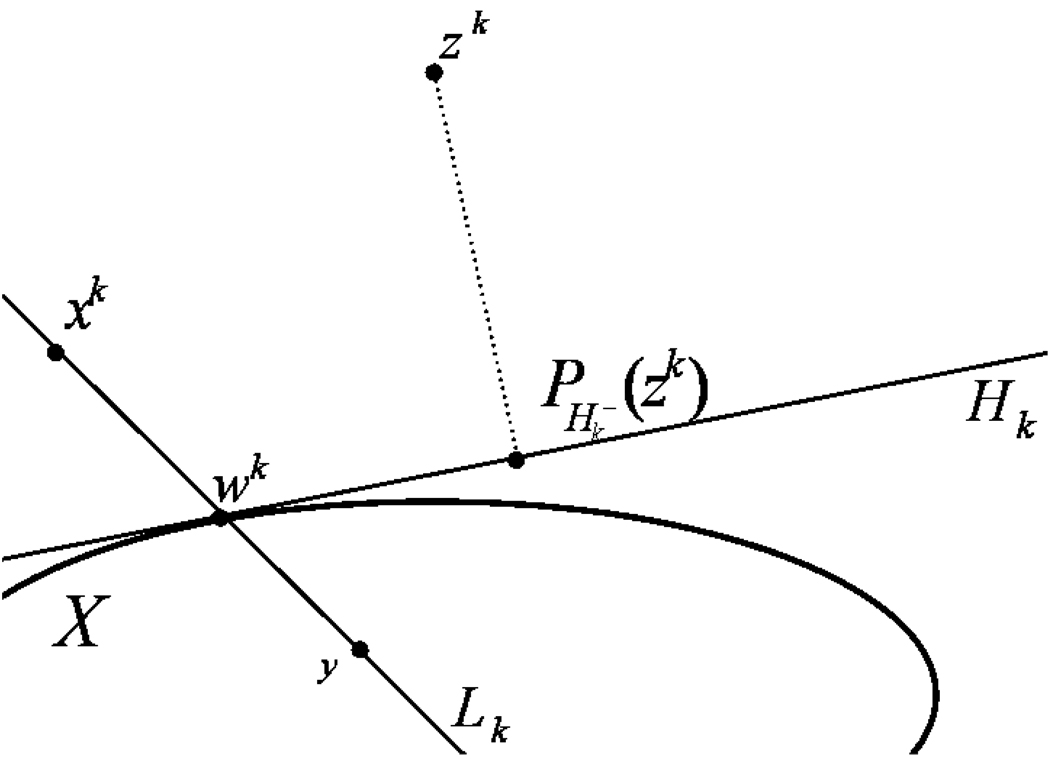

The iterative step of this algorithm is illustrated in Figure 2. We show that Algorithm 18 generates sequences that converge to a solution of problem (1) by showing that it is a special case of Algorithm 7.

Figure 2.

Illustration of Algorithm 18: Interior anchor point
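The geometric steps of Algorithm 18 that produce Hk can be sketched as follows for G = I. The sketch assumes, beyond the text, that X = {u | g(u) ≤ 0} with g convex and differentiable (so that ∇g(wk) is the normal of a hyperplane supporting X at wk), that g(y) < 0 and that xk ∉ X. Under these assumptions the point of Lk ∩ X closest to xk is where the segment from y to xk crosses the boundary of X, which bisection on g locates. The name `anchor_halfspace` is hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def anchor_halfspace(g: Callable[[Vector], float],
                     grad_g: Callable[[Vector], Vector],
                     y: Sequence[float], x: Sequence[float],
                     tol: float = 1e-12) -> Tuple[Vector, Vector, float]:
    """Build (w, a, b) with H_k^- = {u : <a, u> <= b}, as in Algorithm 18, G = I.

    Assumes g convex and differentiable, g(y) < 0 (y in int X) and g(x) > 0.
    """
    lo, hi = 0.0, 1.0  # invariant: g(y + lo (x - y)) <= 0 < g(y + hi (x - y))
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        point = [yi + mid * (xi - yi) for yi, xi in zip(y, x)]
        if g(point) <= 0.0:
            lo = mid
        else:
            hi = mid
    w = [yi + lo * (xi - yi) for yi, xi in zip(y, x)]  # w lies in X
    a = grad_g(w)  # normal of a hyperplane supporting X at (approximately) w
    b = sum(ai * wi for ai, wi in zip(a, w))
    return w, a, b


def disk(u: Vector) -> float:
    """X = closed unit disk written as {u : g(u) <= 0}."""
    return u[0] ** 2 + u[1] ** 2 - 1.0


def disk_grad(u: Vector) -> Vector:
    return [2.0 * u[0], 2.0 * u[1]]


# Anchor y = 0 inside the disk, current iterate x = (2, 0) outside it.
w, a, b = anchor_halfspace(disk, disk_grad, [0.0, 0.0], [2.0, 0.0])
```

The returned pair (a, b) can be handed to a half-space projection to compute xk+1 from the shifted point. With a nondifferentiable g, a subgradient of g at wk could be used instead of ∇g(wk).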

Theorem 19

Consider the VIP(X, f) (1) and assume that Conditions 3–5 hold and that int X ≠ ∅. Then any sequence $\{x^k\}_{k=0}^{\infty}$ generated by Algorithm 18 converges to the solution x* of problem (1).

Proof

First assume that G = I, the identity matrix. Algorithm 18 is obviously a special case of Algorithm 7 in which, at each step, we choose a separating hyperplane that also supports X at the point wk. The stopping criterion is valid by Theorem 9. In order to invoke Theorem 17, we have to show that the hyperplanes of such an algorithm always separate balls B(xk, X, δ) from X for some fixed δ > 0. By Lemma 13, $\{x^k\}_{k=0}^{\infty}$ is bounded and, since xk ∉ X, we have

| (32) |

and we also have

Defining d ≔ dist(y, bd X), since y ∈ int X,

From the boundedness of $\{x^k\}_{k=0}^{\infty}$ (recall that wk lies on the segment between y and xk) we know that there exists a positive N such that ∥y − wk∥2 ≤ N, for all k ≥ 0. Combining these inequalities with (32) implies that

which shows that the algorithm is of the same type as Algorithm 7 with δ ≔ d/N > 0.

To show convergence for a general symmetric positive definite matrix G, we recall that all norms on Rn are equivalent, so that there exist constants M1 and M2 such that, for all x ∈ Rn,

Actually,

where ρ(G) is the largest eigenvalue of G (see, e.g., [6, Equation (5.2), page 199]). So, we get

thus,

where d̂ ≔ dist(y, bd X). Also,

So, by (32) and the last three inequalities, we get

| (33) |

where M̂ ≔ M1/(M2(ρ(G))²), and this completes the proof.

Acknowledgments

We are grateful to Masao Fukushima for his constructive comments on an earlier version of the paper. This work was supported by grant No. 2003275 of the United States-Israel Binational Science Foundation (BSF) and by a National Institutes of Health (NIH) grant No. HL70472.

References

- 1. Aharoni R, Berman A, Censor Y. An interior points algorithm for the convex feasibility problem. Advances in Applied Mathematics. 1983;4:479–489.

- 2. Auslender A. Optimisation: Méthodes Numériques. Paris: Masson; 1976.

- 3. Auslender A, Teboulle M. Interior projection-like methods for monotone variational inequalities. Mathematical Programming, Series A. 2005;104:39–68.

- 4. Censor Y, Zenios SA. Parallel Optimization: Theory, Algorithms, and Applications. New York, NY, USA: Oxford University Press; 1997.

- 5. Censor Y, Iusem AN, Zenios SA. An interior point method with Bregman functions for the variational inequality problem with paramonotone operators. Mathematical Programming. 1998;81:373–400.

- 6. Demmel JW. Applied Numerical Linear Algebra. Philadelphia, PA, USA: SIAM; 1997.

- 7. Facchinei F, Pang JS. Finite-Dimensional Variational Inequalities and Complementarity Problems, Volume I and Volume II. New York, NY, USA: Springer-Verlag; 2003.

- 8. Fukushima M. An outer approximation algorithm for solving general convex programs. Operations Research. 1983;31:101–113.

- 9. Fukushima M. On the convergence of a class of outer approximation algorithms for convex programs. Journal of Computational and Applied Mathematics. 1984;10:147–156.

- 10. Fukushima M. A relaxed projection method for variational inequalities. Mathematical Programming. 1986;35:58–70.

- 11. Kinderlehrer D, Stampacchia G. An Introduction to Variational Inequalities and Their Applications. New York, NY, USA: Academic Press; 1980.

- 12. Aslam Noor M. Some developments in general variational inequalities. Applied Mathematics and Computation. 2004;152:197–277.

- 13. Xiu N, Zhang J. Some recent advances in projection-type methods for variational inequalities. Journal of Computational and Applied Mathematics. 2003;152:559–585.

- 14. Yamada I, Ogura N. Hybrid steepest descent method for variational inequality problem over the fixed point set of certain quasi-nonexpansive mappings. Numerical Functional Analysis and Optimization. 2004;25:619–655.

- 15. Yang Q. On variable-step relaxed projection algorithm for variational inequalities. Journal of Mathematical Analysis and Applications. 2005;302:166–179.