Abstract

The benefit of wearing hearing aids in multitalker, reverberant listening environments was evaluated in a study of speech-on-speech masking with two groups of listeners with hearing loss (younger/older). Listeners selectively attended a known spatial location in two room conditions (low/high reverberation) and identified target speech in the presence of two competing talkers that were either colocated or symmetrically spatially separated from the target. The amount of spatial release from masking (SRM) with bilateral aids was similar to that when listening unaided at or near an equivalent sensation level and was negatively correlated with the amount of hearing loss. When using a single aid, SRM was reduced and was related to the level of the stimulus in the unaided ear. Increased reverberation also reduced SRM in all listening conditions. Results suggest a complex interaction between hearing loss, hearing aid use, reverberation, and performance in auditory selective attention tasks.

Keywords: hearing aids, informational masking, reverberation, spatial benefit, selective attention

Difficulty in understanding speech in background noise is a common complaint of listeners with hearing loss (HL) seeking care in audiology clinics. Often the noise that patients describe actually refers to a background of speech, such as having a conversation in a busy restaurant or trying to talk to one's spouse while the kids carry on their own conversation. When the issue is selectively attending to one talker in the presence of multiple competing talkers, researchers often describe it as the “cocktail party problem” (Cherry, 1953), which has been the focus of recent literature reviews (e.g., Bronkhorst, 2000; Ebata, 2003; Yost, 1997).

In considering the literature concerning the cocktail party problem, it is striking that there have been relatively few studies of selective listening in multisource acoustic environments for listeners wearing hearing aids. However, it is well known that listeners with HL frequently experience difficulty understanding speech in these situations, and there is strong evidence that this problem is not fully remediated by amplification. For example, on the Speech, Spatial, and Qualities of Hearing scale (SSQ), ratings of perceived difficulty in complex and dynamic auditory environments, such as following simultaneous or rapidly altering speech streams, were highly correlated with self-assessed social limitations and emotional distress resulting from HL (Gatehouse & Noble, 2004). Also, Harkins and Tucker (2007) surveyed more than 400 adults with HL (78% wore hearing aids; 22% had cochlear implants) to identify situations in which listeners continue to have difficulty understanding speech despite amplification, how often they are in those situations, and whether they use assistive listening devices in addition to their hearing aids or cochlear implants. The situation in which the greatest percentage of respondents reported continued communication difficulty was a noisy group environment (94%), and 41% of the respondents reported being in such a situation often or very often. About 65% of the respondents reported difficulty when conversing with one or two people in noisy situations, and 82% indicated that they were frequently in this situation. The motivation for the present study is to better understand the cause of these communication difficulties and the benefit provided by hearing aids.

In multitalker environments, listeners (regardless of whether they are wearing hearing aids) typically focus attention on a target talker but also maintain awareness of the full auditory scene to shift attention to another source (cf. Broadbent, 1958). This is a complex problem of speech-on-speech masking, where the reception of the target talker is adversely affected by the presence of the competing talkers. To communicate successfully in such situations the listener must perceptually segregate the target talker and direct attention to him or her. This is not a simple task, however, and the interference between talkers has both peripheral and central components. When the target and masker occur in the same frequency region at the same time, the target may be energetically masked due to an overlap of the target and masker representations in the peripheral auditory system. In ongoing speech, however, this masking varies from moment to moment because of fluctuations of the sounds in frequency and amplitude. A second component of masking that cannot be accounted for by peripheral masking effects is referred to as informational masking. In this type of masking, the target and masker are both audible, but the listener cannot perceptually segregate the target from the background or is unable to successfully direct attention to the target. This may occur if there is a high degree of similarity between the background talkers and the target talker, if the background is highly uncertain, or if the background is simply difficult to ignore (see review by Kidd, Mason, Richards, Gallun, & Durlach, 2008).

Listeners may use a number of acoustic cues to segregate multiple competing talkers (see reviews by Bregman, 1990; Darwin & Carlyon, 1995), such as differences in fundamental frequency, spatial location, onsets and offsets, prosody, and intensity. Higher-level factors, such as a priori knowledge about the sources or the message content, may also help direct the focus of attention. In the current study, we examined the use of separation of sources in azimuth as a means of providing spatial release from masking (SRM). Differences in spatial location between sources produce binaural cues, including interaural time differences (ITDs) and interaural level differences (ILDs), that form the basis for SRM. Recent evidence has suggested that SRM for multiple simultaneous talkers may involve release from both energetic masking and informational masking (e.g., Arbogast, Mason, & Kidd, 2002; Colburn, Shinn-Cunningham, Kidd, & Durlach, 2006; Darwin, 2008; Freyman, Helfer, McCall, & Clifton, 1999; Shinn-Cunningham, Ihlefeld, Satyavarta, & Larson, 2005). Historically, however, the factors involved in reducing energetic masking have been the most commonly studied in the context of SRM. These include the better-ear advantage (an improvement in target-to-masker ratio [T/M] in one ear due to head shadow) and binaural analysis (within-channel masking-level difference [MLD]). The higher-level factors related to informational masking and release from informational masking have received considerably less study and are less well understood. Kidd, Arbogast, Mason, and Gallun (2005a) found that speech identification in a highly uncertain three-talker listening situation was significantly improved by a priori knowledge about target location.
When listeners were uncertain about the location of the target, speech identification performance was, on average, about 67% correct, but when the listeners knew where to direct their attention performance improved to greater than 90% correct. These results were replicated recently by Singh, Pichora-Fuller, and Schneider (2008) who found similar advantages of a priori knowledge in both younger and older listener groups, but generally poorer performance overall for the older group. This suggests that age may be a factor in some SRM conditions.

In a previous speech-on-speech masking study in normal-hearing (NH) young-adult listeners, Marrone, Mason, and Kidd (2008a) concluded that a large component of SRM was a reduction in informational masking. In their experiment, the speech materials—the target and both maskers—were sentences from the coordinate response measure (CRM) test (Bolia, Nelson, Ericson, & Simpson, 2000) and were presented through loudspeakers in a sound field. The CRM test has been shown to produce large amounts of informational masking under certain conditions (see Arbogast et al., 2002; Brungart et al., 2006). The target was always presented from a loudspeaker directly in front of the listener, while the two independent speech maskers were either colocated with the target or were symmetrically spatially separated from the target. The maximum SRM (difference in speech reception thresholds for colocated versus spatially separated maskers) found was about 13 dB.

To determine whether the same trends reported by Marrone et al. (2008a) would be observed in listeners with HL, a similar approach was taken in a second study (Marrone, Mason, & Kidd, in press) that was intended to create large amounts of informational masking in a multitalker speech identification task. In that study, a total of 40 listeners were tested, 20 of whom had sensorineural HL. The other 20 listeners were age-matched NH controls. The stimuli and procedures were the same as in the earlier study, although only a subset of spatial separations was tested. Consistent with the report by Marrone et al. (2008a), listeners with NH demonstrated a large benefit in performance when the sources were spatially separated. This effect was obtained without the availability of a simple better-ear advantage (as revealed by the absence of any SRM in a control condition that simulated monaural listening) and was relatively robust with respect to increased reverberation. However, the listeners with bilateral sensorineural HL received significantly less benefit from spatial separation of sources than their NH counterparts. Both listener groups had similar speech identification thresholds when the three talkers were colocated. However, when the talkers were spatially separated, listeners with HL required much higher target-to-masker ratios at threshold than NH listeners, particularly in the reverberant environment. Although a few listeners with HL had SRM within the range of the NH listeners, for others, performance was as poor in the spatially separated condition as in the colocated condition. There was a strong inverse relationship between the amount of HL (as estimated by the listener's threshold for speech in quiet) and the benefit of spatial separation between the talkers.

Several possible explanations were offered for the much reduced SRM in listeners with HL. One possibility is increased energetic masking. Generally, conditions in which energetic masking dominates yield less benefit from the perceptual cues that normally provide a release from informational masking (see Kidd et al., 2008). Evidence for increased energetic masking in listeners with sensorineural HL, and a concomitant reduction in SRM, has been reported by Arbogast, Mason, and Kidd (2005). In that study, which used speech targets and maskers processed into mutually exclusive narrow frequency bands, SRM occurred when a single masker talker was separated from the target talker by 90° azimuth. The authors concluded that the reduced SRM in the hearing-impaired listeners could have been due to wider auditory filters that smeared the representations of the target and masker, causing greater energetic masking. Another possibility is that the degraded spectral and temporal representation of the stimuli affected the ability of the listeners with HL to segregate the target stream and maintain it over time.

One question raised by the results from listeners with HL in conditions producing large amounts of informational masking (Arbogast et al., 2005; Marrone et al., in press) is whether the benefit of spatial separation between talkers observed unaided¹ would be different when wearing hearing aids. Beyond the obvious benefit hearing aids provide in restoring the audibility of sounds, which is a prerequisite to comprehension, questions remain about whether and to what extent hearing aids allow listeners to make use of spatial cues, especially in complex and highly uncertain multisource environments. In the current study, the same listeners from Marrone et al. (in press) were tested with their personal hearing aids to determine whether amplification would alter the benefit of spatial separation. Listeners wore their personal hearing aids at user-adjusted settings, ensuring that they were accustomed to the amplification provided.

Because the two hearing aids in a current bilateral fitting process incoming sounds independently of one another, differences between the aids in compression, noise reduction, and other adaptive algorithms could alter the natural interaural level and timing cues. There is evidence that these types of distortions can negatively impact localization abilities (Byrne & Noble, 1998; Keidser et al., 2006; Van den Bogaert, Klasen, Moonen, Van Deun, & Wouters, 2006). Because performance in the experimental task depends on binaural processing and the effective use of spatial cues (see Marrone et al., 2008a), aided performance could be worse than unaided performance as a consequence of the hearing aids operating independently at each ear. Alternatively, the frequency-specific gain applied by the hearing aids could, for example, improve performance by restoring high-frequency audibility. As in Marrone et al. (in press), here the target speech was presented at a fixed sensation level above the listener's quiet (and in this case, aided) threshold so that the speech was highly intelligible in isolation. Given that hearing aids provide benefit for speech recognition in noise by amplifying low-level sounds that would otherwise be inaudible to the listener, the question in the current study was more specifically whether, at a suprathreshold level, there would be a performance difference attributable to the frequency-specific amplification and/or other processing by the instrument(s). The assumption underlying this hypothesis is that improved high-frequency audibility may translate into improved binaural cues that would facilitate perceptual segregation of the talkers based on their perceived spatial location.
Dubno, Ahlstrom, and Horwitz (2002) found that listeners with sensorineural HL showed little benefit from spatially separated speech and noise that were high-pass filtered as compared to NH listeners in the same condition and hypothesized that this was a consequence of reduced high-frequency head-shadow cues (ILDs) because the listeners had the most HL in the high frequencies. Thus, high-frequency amplification could restore these cues and potentially yield better representations of the spatial locations of the sources, which in turn may improve perceptual segregation of the sound sources. Preliminary results by Ahlstrom, Horwitz, and Dubno (2006) suggested that the high-frequency audibility that is restored by hearing aids leads to an improvement in the benefit of spatial separation between a target talker (located directly ahead) and multitalker babble (to the side). When the low-pass cutoff frequency of the speech stimuli was increased and mid-to-high frequency speech information was restored with amplification, listeners had improved speech recognition in the spatially separated condition. They suggested that the improved audibility likely restores head shadow cues in the high frequencies.

There has been little previous study of SRM in listeners wearing hearing aids. Festen and Plomp (1986) examined the benefit of spatial separation between a speech target presented from straight ahead and a masking noise shaped to match the long-term average of the speech stimuli and presented from either straight ahead, to the right side, or to the left side. Listeners were tested with their personal hearing aids at user-adjusted settings in an anechoic room and listened either unaided, with one aid, or with both hearing aids. Overall, performance was about 2 dB better unaided than in any of the aided conditions. The amount of SRM was, on average, 6.5 dB for listeners with pure tone averages (PTA) less than 50 dB HL and 5.5 dB for listeners with a PTA greater than 50 dB HL. This was significantly reduced as compared to results from NH listeners in identical conditions reported by Plomp and Mimpen (1981). In that study, they found a 10 dB SRM for speech masked by a single noise source. By comparison, Festen and Plomp (1986) reported that SRM in the unilateral and bilateral amplification conditions was 4.5 dB on average. However, when the noise source was located on the same side as the hearing aid, listeners with a PTA greater than 50 dB HL showed less SRM using one aid than two aids. Recently, Kalluri and Edwards (2007) studied the effect of compression on SRM in NH listeners. They found that compression acting independently at the ears reduced the benefit of spatial separation between multiple talkers in an ILD-only condition but not when the sounds were spatialized using head-related transfer functions (with ITD and ILD cues).
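The concern that compression acting independently at each ear can distort interaural level cues can be made concrete with a static input/output rule. The sketch below assumes a hypothetical single-band compressor at each ear (20 dB gain, 50 dB SPL knee point, 3:1 ratio); these values are illustrative only and are not measurements of the listeners' aids.

```python
def wdrc_output_level(input_db: float, gain_db: float = 20.0,
                      knee_db: float = 50.0, ratio: float = 3.0) -> float:
    """Static input/output rule (dB SPL) of a hypothetical single-band compressor.

    Linear below the knee point (output = input + gain); above it, each 1 dB
    increase in input yields only 1/ratio dB more output.
    """
    if input_db <= knee_db:
        return input_db + gain_db
    return knee_db + gain_db + (input_db - knee_db) / ratio


def aided_ild(left_in_db: float, right_in_db: float, **params: float) -> float:
    """ILD (left minus right, dB) after each ear's aid compresses independently."""
    return wdrc_output_level(left_in_db, **params) - wdrc_output_level(right_in_db, **params)


if __name__ == "__main__":
    # Source on the left: head shadow makes the left-ear input 10 dB higher.
    left, right = 70.0, 60.0
    print(f"unaided ILD: {left - right:.1f} dB")              # 10.0 dB
    print(f"aided ILD:   {aided_ild(left, right):.1f} dB")    # 3.3 dB
```

With both inputs above the knee point, each ear's output grows by only 1/ratio dB per dB of input, so a 10-dB head-shadow ILD shrinks to about 3.3 dB; below the knee the aids are linear and the ILD is preserved. Real instruments compress in several bands with finite attack and release times, so the actual distortion depends on the signal.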

In the present study, we also examined whether the benefit of spatial separation between talkers while using amplification would be affected by an increase in room reverberation. Based on previous findings (Marrone et al., 2008a; Nabelek & Pickett, 1974; Novick, Bentler, Dittberner, & Flamme, 2001), the underlying hypothesis was that an increase in reverberation would negatively affect performance. Second, because the choice of using one versus two hearing aids in noisy situations affects binaural hearing, we compared conditions of bilateral amplification to unilateral amplification. This tested the hypothesis that there would be a bilateral advantage observed because the experimental task is fundamentally based on binaural listening. In Noble and Gatehouse (2006), SSQ questions that are related to the listening situation tested in the current experiment (e.g., having a conversation in an echoic environment, ignoring an interfering voice of the same pitch) revealed benefit from a unilateral fitting and additional benefit from a bilateral fitting in groups of experienced hearing aid wearers. However, in Walden and Walden (2005), listeners (aged 50–50 years) performed better when using a single hearing aid than when using two hearing aids on the QuickSIN (Quick Speech-In-Noise) test, where the task was to repeat keywords from sentences in the presence of four-talker babble. In their study, the target and maskers were presented from the same loudspeaker located directly in front of the listener, so there were no spatial difference cues available. There was a trend for better performance with a single aid as listener age increased. Consequently, our approach was to recruit both younger and older listeners with HL to examine the effect of age. 
Finally, the focus of the current study was on a sample of listeners using their personal hearing aids with omnidirectional microphones and thus we did not investigate the processing parameters, directionality, and so on, which might optimize performance on the task.

Methods

Listeners

A total of 40 listeners were recruited from the university and the greater-Boston area via newspaper advertisements and word of mouth. There were two groups of 20 listeners: a group of listeners with HL who reported regular use of bilateral hearing aids in daily life and an age-matched NH group. These were the same listeners as tested in Marrone et al. (in press). All listeners were native English speakers, and both the NH and HL groups included 10 younger and 10 older participants (HL group ages: 19–42 and 57–80 years, respectively; see Table 1). Audiometric measurements were conducted in a sound-treated double-walled booth to determine eligibility for participation. Criteria for participation included hearing that was essentially the same in both ears (asymmetry was defined as a difference of more than 10 dB between ears at two or more audiometric test frequencies) and no significant air-bone gap (a difference of no more than 10 dB between air- and bone-conduction thresholds at any audiometric test frequency). Table 1 gives demographic information for the listeners with HL, including sex, age, etiology of HL, duration of HL, duration of hearing aid use, PTA for the right and left ears (average threshold at 500, 1000, and 2000 Hz), and audiometric slope for each ear. The HL listeners had mild to moderately severe symmetric sensorineural HL that was either flat or gradually sloping in configuration. The NH listeners had audiometric thresholds ≤ 25 dB HL at octave frequencies from 250–250 Hz. In the aided conditions, the HL listeners were tested with their own aids at their normal settings in omnidirectional mode.
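The eligibility rules above are simple enough to state as predicates on an audiogram. A minimal sketch, assuming audiograms stored as hypothetical `{frequency_Hz: threshold_dB_HL}` dictionaries; the function names are illustrative, and the 10-dB and 25-dB limits are the ones given in the text.

```python
from typing import Mapping

# An audiogram maps test frequency (Hz) to threshold (dB HL).
Audiogram = Mapping[int, float]


def ears_symmetric(right: Audiogram, left: Audiogram,
                   max_diff_db: float = 10.0) -> bool:
    """False if the ears differ by more than max_diff_db at two or more frequencies."""
    n_asymmetric = sum(1 for f in right.keys() & left.keys()
                       if abs(right[f] - left[f]) > max_diff_db)
    return n_asymmetric < 2


def no_air_bone_gap(air: Audiogram, bone: Audiogram,
                    max_gap_db: float = 10.0) -> bool:
    """True if air minus bone conduction is at most max_gap_db at every common frequency."""
    return all(air[f] - bone[f] <= max_gap_db for f in air.keys() & bone.keys())


def normal_hearing(thresholds: Audiogram, limit_db: float = 25.0) -> bool:
    """NH criterion: every tested threshold at or below limit_db."""
    return all(t <= limit_db for t in thresholds.values())


if __name__ == "__main__":
    # Hypothetical audiograms, not data from Table 1.
    right = {250: 30, 500: 35, 1000: 40, 2000: 50, 4000: 55}
    left = {250: 30, 500: 40, 1000: 45, 2000: 65, 4000: 55}
    print(ears_symmetric(right, left))  # True: only 2000 Hz differs by > 10 dB
```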

Table 1.

Listener Demographics for the HL (Hearing Loss) Group

| Listener | Sex | Age (years) | Etiology | HL duration (years) | HA use (years) | PTA, R (dB HL) | PTA, L (dB HL) | Slope, R (dB/octave) | Slope, L (dB/octave) |
|---|---|---|---|---|---|---|---|---|---|
| Y1 | M | 19 | Alport's Syndrome | 11 | 11 | 58 | 62 | 6.25 | 6.25 |
| Y2 | M | 19 | Hereditary | 19 | 11 | 42 | 45 | 7.5 | 6.25 |
| Y3 | M | 20 | Unknown | 20 | 15 | 63 | 60 | 8.75 | 6.3 |
| Y4 | F | 21 | Unknown | 16 | 11 | 50 | 38 | 8.75 | 9.25 |
| Y5 | F | 22 | Meniere's Disease | 22 | 7 | 42 | 47 | −1.25 | −2.5 |
| Y6 | F | 27 | Unknown | 27 | 23 | 68 | 67 | 7.5 | 6.25 |
| Y7 | F | 36 | Scarlet fever | 34 | 20 | 48 | 43 | 5 | 8.75 |
| Y8 | F | 38 | Unknown | 38 | 33 | 62 | 62 | 8.75 | 11.25 |
| Y9 | F | 41 | Hereditary | 41 | 35 | 45 | 45 | 6.25 | 7.5 |
| Y10 | F | 42 | Unknown | 42 | 10 | 43 | 42 | 10 | 10 |
| O1 | F | 57 | Unknown | 50 | 22 | 58 | 53 | 15 | 15 |
| O2 | F | 59 | Meniere's Disease | 14 | 1 | 47 | 50 | 8.75 | 10 |
| O3 | F | 63 | Rubella | 63 | 55 | 52 | 53 | 7.5 | 6.25 |
| O4 | F | 66 | Unknown | 55 | 42 | 70 | 68 | 7.5 | 7.5 |
| O5 | F | 71 | Unknown | 65 | 45 | 58 | 58 | 6.25 | 6.25 |
| O6 | F | 72 | Presbycusis | 3 | 1 | 52 | 45 | 8.75 | 7.5 |
| O7 | F | 75 | Unknown | 20 | 17 | 60 | 67 | 2.5 | 3.75 |
| O8 | F | 78 | Presbycusis | 3 | 1 | 47 | 50 | 1.25 | 1.25 |
| O9 | F | 78 | Presbycusis | 9 | 8 | 58 | 62 | 3.75 | 5 |
| O10 | M | 80 | Presbycusis | 4 | 2 | 38 | 40 | 10 | 8.75 |

NOTES: F = female; HL = hearing loss; HA use = hearing aid use; L = left ear; M = male; O = older listeners; PTA = pure tone average; R = right ear; Y = younger listeners. Listener demographics for the HL group include sex, age (years), etiology of HL, duration of HL (years), duration of hearing aid use (years), PTA (average air conduction threshold in dB HL at 500, 1000, and 2000 Hz), and average slope over the range between 250 and 4000 Hz (dB/octave). Listeners are sorted by age and are divided into two subgroups (younger/older).
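The two summary measures in Table 1 can be computed from an audiogram as sketched below. The PTA definition comes from the table note; for the slope, the note says only "average slope over the range between 250 and 4000 Hz," so the sketch assumes it is the mean of the four octave-to-octave threshold changes, which reduces to (T4000 − T250)/4. That reading is consistent with most tabulated slopes being multiples of 1.25 dB (a 5-dB audiometric step divided by four), but it remains an assumption. The example audiogram is hypothetical.

```python
from statistics import mean

PTA_FREQS = (500, 1000, 2000)               # Hz, as in the Table 1 note
SLOPE_FREQS = (250, 500, 1000, 2000, 4000)  # octave frequencies, 250-4000 Hz


def pure_tone_average(thresholds: dict[int, float]) -> float:
    """Mean air-conduction threshold (dB HL) at 500, 1000, and 2000 Hz."""
    return mean(thresholds[f] for f in PTA_FREQS)


def average_slope(thresholds: dict[int, float]) -> float:
    """Mean octave-to-octave threshold change (dB/octave) from 250 to 4000 Hz.

    Assumption: 'average slope' is the mean of the four octave steps; the sum
    telescopes, so this equals (T4000 - T250) / 4.
    """
    steps = [thresholds[hi] - thresholds[lo]
             for lo, hi in zip(SLOPE_FREQS, SLOPE_FREQS[1:])]
    return mean(steps)


if __name__ == "__main__":
    ear = {250: 30, 500: 35, 1000: 40, 2000: 50, 4000: 55}  # hypothetical
    print(f"PTA = {pure_tone_average(ear):.1f} dB HL")      # 41.7
    print(f"slope = {average_slope(ear):.2f} dB/octave")    # 6.25
```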

Stimuli

The stimuli were recordings of the four female talkers from the coordinate response measure (CRM) corpus (Bolia et al., 2000). Every sentence in this corpus has the structure, “Ready [call sign] go to [color] [number] now.” The corpus has sentences with all combinations of eight call signs (Arrow, Baron, Charlie, Eagle, Hopper, Laker, Ringo, Tiger), four colors (blue, green, red, white), and eight numbers (digits 1–8). On every trial, the listener heard three sentences spoken by different talkers. The talkers and sentences varied from trial to trial. The target sentence was identified by the call sign “Baron.” The masker sentences were also from the CRM corpus, spoken by two different female talkers. The masker talkers, call signs, colors, and numbers were different from the target and from each other.
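The trial structure described above can be expressed as a sampling rule: three distinct talkers, the target marked by “Baron,” and masker call signs, colors, and numbers that differ from the target and from each other. A minimal sketch, assuming hypothetical talker indices 0–3 for the four female talkers and uniform random sampling (the text does not say how sentences were drawn):

```python
import random
from dataclasses import dataclass

CALL_SIGNS = ("Arrow", "Baron", "Charlie", "Eagle", "Hopper", "Laker", "Ringo", "Tiger")
COLORS = ("blue", "green", "red", "white")
NUMBERS = tuple(range(1, 9))
TALKERS = (0, 1, 2, 3)       # hypothetical indices for the four female talkers
TARGET_CALL_SIGN = "Baron"


@dataclass(frozen=True)
class Sentence:
    talker: int
    call_sign: str
    color: str
    number: int

    def text(self) -> str:
        return f"Ready {self.call_sign} go to {self.color} {self.number} now."


def draw_trial(rng: random.Random) -> tuple[Sentence, Sentence, Sentence]:
    """Return (target, masker1, masker2): talkers, call signs, colors, and
    numbers are mutually distinct, and only the target uses 'Baron'."""
    talkers = rng.sample(TALKERS, 3)
    others = [c for c in CALL_SIGNS if c != TARGET_CALL_SIGN]
    call_signs = [TARGET_CALL_SIGN] + rng.sample(others, 2)
    colors = rng.sample(COLORS, 3)
    numbers = rng.sample(NUMBERS, 3)
    target, masker1, masker2 = (Sentence(*fields) for fields in
                                zip(talkers, call_signs, colors, numbers))
    return target, masker1, masker2


if __name__ == "__main__":
    for s in draw_trial(random.Random(1)):
        print(s.talker, s.text())
```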

Description of Amplification Used and Electroacoustic Measures

The listeners wore their own hearing aids with their regular fitting set in omnidirectional mode. None of the other characteristics of the aids were adjusted. Consequently, a variety of manufacturers, models, and styles were represented in the group. In all, 80% were digital signal-processing devices. The majority were custom in-the-ear devices (23/40), and the remainder were behind-the-ear devices, three of which were open canal fittings. The hearing aids were required to be in working order, but no attempt was made to change or improve the fitting. This was based on the decision to have a sample that was representative of the hearing aids in current use by the listeners recruited for the study.

Electroacoustic measurements of each hearing aid were made at each of the two listening sessions. Coupler measurements were made using a Frye Systems 7000 test box and hearing aid analyzer to characterize the frequency response, determine input/output transfer functions, assess attack/release time and processing delay, and to determine gain at user-adjusted settings. Of the 40 hearing aids tested, 11 provided linear amplification, and none of these reached an output limit within the range of sound levels presented in the current experiment. The remaining 29 hearing aids were nonlinear, multiband compression instruments. The time constants for this type of hearing aid can be broadly described as fitting one of two classes, as outlined by Moore (2008): fast- versus slow-acting compression. In the current group, most of the hearing aids (19/29) had fast-acting compression (an attack time of 0.5–20 ms and a recovery time of 5–5 ms).

Following otoscopic examination, probe microphone measurements in the listener's ear were made using the modulated noise test signal (digital speech) on the Frye Systems 7000 real-ear analyzer. These measurements were conducted in a sound-treated, double-walled audiometric test booth using the test protocol for real-ear verification described by Hawkins and Mueller (1998). The real-ear-unaided response was measured using a 65 dB SPL (sound pressure level) input signal. The real-ear-aided response measurements were made using 50, 65, and 80 dB SPL signals. The aided measurements were made with the hearing aid set at the listener's preferred settings in omnidirectional mode, the same settings that were then used for the full experiment. The measured insertion gain was compared to the target insertion gain values prescribed by the National Acoustic Laboratories–Revised, Profound prescriptive method (NAL-RP; Byrne, Parkinson, & Newall, 1990). The average measured and target real-ear insertion gain (REIG) values (in dB) at audiometric frequencies are given for the right and left ears in Table 2. With the exception of one listener (Y5), whose measured REIG across frequencies exceeded the NAL-RP prescribed gain by 12–12 dB, most listeners tended to use settings that were below the prescriptive targets based on their current hearing thresholds.

Table 2.

Means and Standard Deviations (in Parentheses) of Measured Real-Ear Insertion Gain (REIG), Target REIG Calculated Using the National Acoustic Laboratories–Revised, Profound (NAL-RP) Prescriptive Method, and Deviation From Target (Measured Minus Target REIG) for Right and Left Ears.

| Frequency | Right ear: measured REIG (dB) | Right ear: target REIG (dB) | Right ear: difference (dB) | Left ear: measured REIG (dB) | Left ear: target REIG (dB) | Left ear: difference (dB) |
|---|---|---|---|---|---|---|
| 250 Hz | 5.8 (6.2) | 3.2 (3.9) | 2.6 (5.7) | 6.7 (9.2) | 3.6 (4.2) | 3.0 (8.2) |
| 500 Hz | 11.3 (9.3) | 14.2 (5.0) | −2.9 (8.8) | 11.2 (10.8) | 14.2 (5.7) | −3.0 (8.6) |
| 1000 Hz | 21.6 (10.1) | 26.1 (5.0) | −4.5 (8.7) | 20.6 (12.0) | 26.1 (5.2) | −5.5 (10.2) |
| 2000 Hz | 23.4 (9.7) | 25.6 (4.8) | −2.2 (8.5) | 22.9 (10.4) | 25.4 (4.3) | −2.5 (9.4) |
| 4000 Hz | 16.1 (9.0) | 25.9 (4.9) | −9.8 (9.1) | 15.6 (7.7) | 25.6 (4.8) | −10.0 (6.6) |
| 6000 Hz | 8.2 (11.8) | 26.3 (5.7) | −18.1 (14.0) | 6.3 (14.6) | 26.7 (5.9) | −20.5 (13.5) |
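The quantities in Table 2 follow from two subtractions: REIG is the real-ear-aided response minus the real-ear-unaided response, and the deviation is measured minus target REIG for each ear. Because a mean of differences equals the difference of means, the Difference column should match Measured minus Target to within rounding (e.g., 16.1 − 25.9 = −9.8 at 4000 Hz, right ear), whereas its SD can only be computed from per-listener differences. A minimal sketch with hypothetical values (not the study's data):

```python
from statistics import mean, stdev


def reig(rear_db: float, reur_db: float) -> float:
    """Real-ear insertion gain (dB): aided minus unaided real-ear response."""
    return rear_db - reur_db


def deviation_summary(measured: list[float], target: list[float]) -> tuple[float, float]:
    """Mean and SD (dB) of per-listener deviation, measured REIG minus target REIG."""
    if len(measured) != len(target):
        raise ValueError("need one target value per measured value")
    diffs = [m - t for m, t in zip(measured, target)]
    return mean(diffs), stdev(diffs)


if __name__ == "__main__":
    # Hypothetical 4000 Hz values for three ears.
    measured = [reig(80.0, 62.0), reig(75.0, 63.0), reig(78.0, 60.0)]  # 18, 12, 18 dB
    target = [26.0, 24.0, 28.0]
    m, sd = deviation_summary(measured, target)
    print(f"mean deviation {m:.1f} dB, SD {sd:.1f} dB")  # -10.0 dB, SD 2.0 dB
```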

Room Conditions

The study was conducted at the Soundfield Laboratory at Boston University. This space includes a single-walled IAC sound booth (12'4” long, 13' wide, and 7'6” high) that was designed to allow changing the sound absorption characteristics of the sound field. This is done by covering all surfaces (ceiling, walls, floor, and door) with panels of different acoustic reflectivity, such as acoustic foam or Plexiglas©. For the current experiment, there was a low-reverberation and a high-reverberation condition. In the low-reverberation condition, the room configuration was that of a standard IAC booth: The ceiling, walls, and door had a perforated metal surface, and the floor was carpeted (referred to as the BARE room condition because no surface coverings were applied). In the more reverberant condition, all surfaces were covered with reflective Plexiglas© panels (the PLEX room condition), creating a noticeable increase in reverberation. The measured reverberation time increased from about 60 to 250 ms, whereas the direct-to-reverberant ratio decreased from about 6.3 to −0.9 dB. Furthermore, for a given voltage input to the loudspeakers, the SPL in the PLEX room was approximately 3 dB higher than in the BARE room. Additional acoustic measurements in this room for the different surfaces are described in Kidd, Mason, Brughera, and Hartmann (2005b). The listener was seated in the center of a semicircle of seven loudspeakers positioned at head height and located 5' from the approximate center of the listener's head. Only the loudspeakers directly in front of the listener (0° azimuth) and to the right and left of the listener (±90° azimuth) were used in the experiment.
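The reverberation times and direct-to-reverberant ratios above are reported without the measurement method (acoustic details are in Kidd et al., 2005b). As a hedged illustration only, the sketch below shows one standard way to obtain both quantities from a room impulse response: Schroeder backward integration with a line fit over the −5 to −25 dB range of the decay (a T20 estimate extrapolated to 60 dB), and a direct-to-reverberant ratio that treats energy up to 2.5 ms after the strongest peak as direct sound. The fit range, the 2.5-ms window, and the synthetic test signal are assumptions, not the procedure used for this room.

```python
import numpy as np


def schroeder_edc_db(ir: np.ndarray) -> np.ndarray:
    """Energy decay curve (dB re: total energy) by Schroeder backward integration."""
    energy = np.asarray(ir, dtype=float) ** 2
    edc = np.cumsum(energy[::-1])[::-1]
    return 10.0 * np.log10(edc / edc[0])


def rt60_s(ir: np.ndarray, fs: float, start_db: float = -5.0,
           stop_db: float = -25.0) -> float:
    """Reverberation time from a line fit to the EDC between start_db and stop_db."""
    edc = schroeder_edc_db(ir)
    t = np.arange(edc.size) / fs
    in_range = (edc <= start_db) & (edc >= stop_db)
    slope_db_per_s, _ = np.polyfit(t[in_range], edc[in_range], 1)
    return -60.0 / slope_db_per_s  # time for a 60-dB decay at the fitted rate


def drr_db(ir: np.ndarray, fs: float, direct_window_s: float = 0.0025) -> float:
    """Direct-to-reverberant ratio: energy up to direct_window_s after the peak vs. the rest."""
    energy = np.asarray(ir, dtype=float) ** 2
    split = int(np.argmax(energy)) + int(round(direct_window_s * fs)) + 1
    return 10.0 * np.log10(energy[:split].sum() / energy[split:].sum())


if __name__ == "__main__":
    fs, t60 = 16000, 0.25
    t = np.arange(fs) / fs                      # 1 s of synthetic response
    rng = np.random.default_rng(0)
    # Exponentially decaying noise whose energy falls 60 dB in t60 seconds.
    tail = rng.standard_normal(t.size) * 10.0 ** (-3.0 * t / t60)
    print(f"RT60 estimate: {rt60_s(tail, fs):.3f} s")
```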

Procedures

The task was a one-interval, 32-alternative (4 colors × 8 numbers) forced-choice identification task with feedback. Listeners used a handheld keypad with a liquid crystal display (Q-term II) to enter their responses and receive feedback on each trial. They were instructed to identify the color and number from the sentence with the call sign “Baron” and were informed that this sentence would always be presented from the loudspeaker directly ahead. At the beginning of each trial, the word Listen appeared on the display. After stimulus presentation, the prompts “Color [B R W G]?” and “Number [1–8]?” appeared, and the listener entered a choice for each. Responses were scored as correct only if the listener identified both the color and the number accurately. Feedback consisted of a message indicating whether the response was correct and what the target color and number had been on that trial (e.g., “Incorrect, it was red two.”). Listeners completed a short practice block of target identification in quiet at a comfortable listening level to familiarize them with the procedures and keypad.
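Because a response is scored correct only when both keywords match, the chance rate for the joint color-number response is 1/(4 × 8) = 1/32, or about 3.1%, well below the 25% and 12.5% rates for guessing the color or the number alone. A minimal scoring sketch, with a hypothetical `ColorNumber` type standing in for a keypad response:

```python
from dataclasses import dataclass
from fractions import Fraction

N_COLORS, N_NUMBERS = 4, 8  # blue/green/red/white x digits 1-8


@dataclass(frozen=True)
class ColorNumber:
    """Hypothetical record for a keypad response or a target's keywords."""
    color: str
    number: int


def is_correct(response: ColorNumber, target: ColorNumber) -> bool:
    """Correct only if both the color and the number match the target."""
    return response.color == target.color and response.number == target.number


def percent_correct(trials: list[tuple[ColorNumber, ColorNumber]]) -> float:
    """Percent of (response, target) pairs scored correct."""
    return 100.0 * sum(is_correct(r, t) for r, t in trials) / len(trials)


def chance_probability() -> Fraction:
    """Probability of a correct joint response by guessing: 1 / (4 * 8)."""
    return Fraction(1, N_COLORS * N_NUMBERS)


if __name__ == "__main__":
    target = ColorNumber("red", 2)
    trials = [(ColorNumber("red", 2), target), (ColorNumber("red", 3), target)]
    print(percent_correct(trials))          # 50.0
    print(float(chance_probability()))      # 0.03125
```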

For the NH listeners, there were two monaural earplug-plus-earmuff conditions (right ear occluded, left ear occluded). The plug-and-muff condition was intended as a “monaural” control, even though it is not strictly monaural listening, and provides a potentially useful comparison for the unilateral aided conditions in the HL group. There were three aided listening conditions for the HL listeners: bilateral aided, right ear aided, and left ear aided.

These conditions were conducted in two experimental sessions, one for each room condition. The two sessions typically occurred within one week of each other. The order of the listening conditions and room conditions was counterbalanced across participants. Audiometric testing was completed during the first listening session. Thresholds were rechecked at the second listening session for one listener who had a history of fluctuating HL (Y5). Electroacoustic measurements were completed at both listening sessions to confirm that the hearing aids were set in the same way for both sessions.

Testing in each room condition began with two quiet conditions. First, unmasked identification thresholds for the target CRM sentences at the target location (0°) were obtained in both unaided and aided conditions. A one-up, one-down adaptive procedure was used to estimate the 50% correct point on the psychometric function (Levitt, 1971). Each adaptive track continued until at least 30 trials had been presented and at least 10 reversals had been obtained. The initial step size was 4 dB and was reduced to 2 dB after three reversals. The threshold estimate was computed after discarding the first three or four reversals (whichever left an even number for averaging) and thus was based on at least the last six reversals. Two estimates of threshold in quiet were measured and averaged. If the two estimates differed by more than 5 dB, two additional estimates were collected and all four were averaged.
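The quiet-threshold procedure can be sketched as follows. This is a minimal simulation under stated assumptions, not the laboratory software: `run_track`, `threshold_db`, and `quiet_threshold` are hypothetical names, a reversal is recorded at the level of the trial on which the track changed direction, and the simulated listener's logistic psychometric function is invented for illustration.

```python
import math
import random
from statistics import mean
from typing import Callable


def run_track(respond: Callable[[float], bool], start_db: float,
              big_step_db: float = 4.0, small_step_db: float = 2.0,
              switch_after: int = 3, min_trials: int = 30,
              min_reversals: int = 10) -> list[float]:
    """One-up, one-down track on stimulus level; returns the reversal levels."""
    level, last_dir, trials = start_db, 0, 0
    reversals: list[float] = []
    while trials < min_trials or len(reversals) < min_reversals:
        direction = -1 if respond(level) else +1  # correct -> lower the level
        trials += 1
        if last_dir and direction != last_dir:
            reversals.append(level)               # level at which the track turned
        last_dir = direction
        step = big_step_db if len(reversals) < switch_after else small_step_db
        level += direction * step
    return reversals


def threshold_db(reversals: list[float]) -> float:
    """Drop the first 3 or 4 reversals (whichever leaves an even count); average the rest."""
    n_drop = 3 if (len(reversals) - 3) % 2 == 0 else 4
    return mean(reversals[n_drop:])


def quiet_threshold(run_once: Callable[[], float], max_spread_db: float = 5.0) -> float:
    """Average two estimates; if they differ by more than max_spread_db, add two more."""
    estimates = [run_once(), run_once()]
    if abs(estimates[0] - estimates[1]) > max_spread_db:
        estimates += [run_once(), run_once()]
    return mean(estimates)


if __name__ == "__main__":
    rng = random.Random(3)

    def listener(level_db: float) -> bool:
        """Hypothetical listener: logistic psychometric function, 50% correct at 25 dB."""
        p_correct = 1.0 / (1.0 + math.exp(-(level_db - 25.0) / 2.0))
        return rng.random() < p_correct

    est = quiet_threshold(lambda: threshold_db(run_track(listener, 45.0)))
    print(f"estimated threshold in quiet: {est:.1f} dB")
```

Because a one-up, one-down rule moves down after every correct response and up after every incorrect one, it settles where the two are equally likely, which is why it targets the 50% correct point.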

Next, performance in an unmasked fixed-level identification task was measured for the target at the level at which it would be presented in the masked conditions. For the NH listeners, the target level in the masked conditions was set to 60 dB SPL. For the HL group, the target was set to 30 dB sensation level (SL) re: quiet aided speech identification thresholds whenever possible, and at lower SLs in a few cases to avoid excessively high masker levels (20 dB SL for listeners O4, O7, and O8). This same sensation level was used across aided listening conditions and across room conditions with two exceptions (listeners Y3 and Y8, who were tested at 20 dB SL in one of the unilateral aided conditions because of an asymmetry in unilateral aided speech identification thresholds). For most listeners, the fixed level for the target stimulus in the aided listening conditions was comparable to that of normal conversational speech (e.g., 65 dB SPL ± 5 dB). Quiet speech identification performance was nearly perfect at the test level of the target for all listeners (98.6% correct on average, standard deviation (SD) = 2.7%).
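As a rough check on the statement that the aided target levels were comparable to conversational speech, the SL arithmetic can be applied to the group-mean bilateral aided thresholds in quiet for the low-reverberation room (Table 3). This sketch uses group means rather than individual thresholds, so it is only illustrative; individual listeners, and those tested at 20 dB SL, will differ. The function name `target_level_db_spl` is hypothetical.

```python
def target_level_db_spl(quiet_threshold_db_spl, sensation_level_db=30.0):
    """Target presentation level = aided speech identification threshold in quiet + SL."""
    return quiet_threshold_db_spl + sensation_level_db


# Group-mean bilateral aided thresholds in quiet, BARE room (Table 3), in dB SPL.
for group, threshold in {"younger": 35.5, "older": 38.2}.items():
    level = target_level_db_spl(threshold)
    print(f"{group}: {level:.1f} dB SPL, within 65 +/- 5 dB: {abs(level - 65.0) <= 5.0}")
# prints 65.5 dB SPL (younger) and 68.2 dB SPL (older), both within 65 +/- 5 dB
```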

For the measurements of masked speech identification thresholds, listeners heard three sentences played concurrently (one target with two independent maskers) in every trial. The maskers were either colocated with the target at 0° or symmetrically spatially separated (target at 0°, masker 1 from −90°, masker 2 from +90°). The target level was fixed and the level of the maskers was varied adaptively to estimate threshold. The two masker talkers were presented at the same rms level on each trial of the adaptive track. The initial masker level was 20 dB below the target. The masker level adapted in 4 dB steps initially and was reduced to 2 dB steps after the third reversal. The threshold estimate was computed after discarding the first three or four reversals (whichever produced an even number) and thus was based on at least the last six reversals. Threshold estimates were averaged over four tracks per condition.
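In the masked conditions the adaptive variable is the level of each masker rather than the target level, so the track runs in the opposite direction: a correct response makes the task harder by raising the maskers, and an incorrect response lowers them. The sketch below is illustrative only. It assumes a hypothetical deterministic listener who is correct whenever T/M is at or above a fixed value, uses the 60 dB SPL NH target level, and runs a fixed 40 trials instead of a reversal-based stopping rule; the 20 dB starting offset, the 4-to-2 dB step change, and the reversal-averaging rule follow the text.

```python
def masker_track(target_db=60.0, listener_tm_threshold_db=5.0, n_trials=40):
    """One-up, one-down track on the level of each masker, target level held fixed.

    The listener is hypothetical and deterministic: correct whenever T/M >= threshold.
    Returns the threshold expressed as T/M in dB.
    """
    masker_db, step = target_db - 20.0, 4.0  # maskers start 20 dB below the target
    last_direction, reversals = None, []
    for _ in range(n_trials):
        correct = (target_db - masker_db) >= listener_tm_threshold_db
        direction = +1 if correct else -1  # correct response -> raise maskers (harder)
        if last_direction is not None and direction != last_direction:
            reversals.append(masker_db)
            if len(reversals) == 3:
                step = 2.0  # smaller steps after the third reversal
        last_direction = direction
        masker_db += direction * step
    # Discard the first 3 or 4 reversals so that an even number is averaged.
    n_discard = 3 if (len(reversals) - 3) % 2 == 0 else 4
    kept = reversals[n_discard:]
    masker_threshold_db = sum(kept) / len(kept)
    return target_db - masker_threshold_db


print(masker_track())  # 5.0: the track oscillates between maskers at 54 and 56 dB SPL
```

With a real listener, the four track estimates per condition would then be averaged, for example `sum(tracks) / 4` over four independent runs.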

In the plug-and-muff conditions, the NH listeners wore commercially available hearing protectors on one ear and were tested in quiet and in the masked speech conditions at 0° and ±90°. The hearing protectors used were disposable E-A-R® plugs and the AOSafety® Economy Earmuff, both manufactured by the Aearo Company. To estimate the amount of attenuation obtained, listeners wore earplugs and earmuffs on both ears while speech identification thresholds in quiet were measured. If they did not achieve at least 35 dB of attenuation (± 5 dB) relative to their unoccluded speech thresholds, the earplugs were reinserted, the earmuffs were repositioned, and new threshold estimates were obtained. An earplug was then removed from one ear and the earmuff for that ear was removed from the headband, which had been modified so that it could be positioned comfortably but still tightly on the listener's head. Listeners were tested in a monaural right and a monaural left condition in each of the two room conditions (order counterbalanced across listeners).

Results

Preliminary Measurements: Identification in Quiet

Speech identification thresholds in quiet for the three aided listening conditions are given in Table 3, along with unaided thresholds for comparison. On average, bilateral aided thresholds were 13 dB better (lower) than unaided thresholds in the low-reverberation room, with individual improvements ranging from −1 to 28 dB, and 15 dB better in the more reverberant room, with individual improvements ranging from 3–3 dB. In both room conditions, bilateral aided thresholds were on average 2 dB better than unilateral aided thresholds.
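The average improvements quoted above can be approximately recovered from the group means in Table 3. This is a sketch that assumes every listener contributed to every cell, so that the mean of the individual differences equals the difference of the means; because the two groups are the same size (n = 10), averaging the two group values is equivalent to averaging over all 20 listeners. The individual ranges cannot be recovered from the table. The names `TABLE3` and `mean_improvements` are illustrative.

```python
# Group-mean speech identification thresholds in quiet (dB SPL), from Table 3.
TABLE3 = {
    ("younger", "BARE"): {"unaided": 45.0, "bilateral": 35.5, "right": 37.4, "left": 36.2},
    ("younger", "PLEX"): {"unaided": 48.4, "bilateral": 36.4, "right": 38.5, "left": 37.6},
    ("older", "BARE"): {"unaided": 55.1, "bilateral": 38.2, "right": 40.3, "left": 41.3},
    ("older", "PLEX"): {"unaided": 58.2, "bilateral": 40.0, "right": 41.4, "left": 43.8},
}


def mean_improvements(room):
    """Return (unaided - bilateral, mean unilateral - bilateral), averaged over groups."""
    aided_benefit, bilateral_advantage = [], []
    for (_group, r), t in TABLE3.items():
        if r != room:
            continue
        aided_benefit.append(t["unaided"] - t["bilateral"])
        unilateral = (t["right"] + t["left"]) / 2  # right and left aided collapsed
        bilateral_advantage.append(unilateral - t["bilateral"])
    return (sum(aided_benefit) / len(aided_benefit),
            sum(bilateral_advantage) / len(bilateral_advantage))


for room in ("BARE", "PLEX"):
    benefit, advantage = mean_improvements(room)
    print(f"{room}: bilateral vs. unaided {benefit:.1f} dB, "
          f"bilateral vs. unilateral {advantage:.1f} dB")
```

The results (about 13.2 and 15.1 dB for the aided benefit, and about 2 dB for the bilateral advantage in both rooms) round to the values given in the text.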

Table 3.

Means and Standard Deviations in dB SPL (sound pressure level) for Speech Identification Thresholds in Quiet.

| HL Listener Group | Room Condition | Unaided | Bilateral Aided | Right Aided | Left Aided |
|---|---|---|---|---|---|
| Younger (n = 10) | BARE | 45.0 (10.4) | 35.5 (5.6) | 37.4 (6.2) | 36.2 (8.2) |
| | PLEX | 48.4 (9.2) | 36.4 (5.7) | 38.5 (5.0) | 37.6 (7.3) |
| Older (n = 10) | BARE | 55.1 (13.4) | 38.2 (6.5) | 40.3 (6.0) | 41.3 (9.8) |
| | PLEX | 58.2 (12.0) | 40.0 (5.5) | 41.4 (4.6) | 43.8 (7.5) |

NOTES: Unaided thresholds from Marrone et al. (2008b) are given for comparison. BARE indicates bare room condition with no surface coverings applied. PLEX indicates room covered with reflective Plexiglas© panels.

The speech identification thresholds in quiet in the low-reverberation room were highly correlated with both the audiometric PTA (r = .94, p < .001) and the standard audiometric speech recognition threshold (SRT; r = .96, p < .001) obtained using recorded spondaic words (the PTA and SRT values used for analysis were the averages of the right- and left-ear values). As described in the Methods section, speech identification performance in quiet was nearly perfect when the CRM sentences were presented at the test level used for the target in both room conditions and for all listeners.

Speech Identification Thresholds With Two Competing Talkers

The masked speech identification thresholds are expressed in terms of T/M and SRM in dB. The T/M was calculated by subtracting the level of the individual maskers at threshold from the fixed target level. SRM is the difference in the T/M at threshold for the colocated and spatially separated conditions so that a positive SRM indicates a benefit of spatial separation.
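The two definitions can be written as a pair of one-line functions. This sketch only restates the definitions above; the function names are illustrative. Because SRM is computed per listener and averaging is linear, the group-mean SRM in Table 4 equals the difference of the group-mean T/Ms up to rounding, which the example checks against the younger listeners' unaided, low-reverberation entries.

```python
def target_to_masker_ratio(target_db, masker_db):
    """T/M in dB: fixed target level minus the level of each individual masker at threshold."""
    return target_db - masker_db


def spatial_release(tm_colocated_db, tm_separated_db):
    """SRM in dB: colocated T/M minus separated T/M; positive values mean a spatial benefit."""
    return tm_colocated_db - tm_separated_db


# Group means for younger listeners, unaided, low reverberation (Table 4).
srm = spatial_release(tm_colocated_db=4.8, tm_separated_db=-0.1)
print(round(srm, 1))  # 4.9, matching the SRM entry in Table 4
```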

The group mean results in T/M are given in Table 4 for all listeners and conditions. Data from unaided conditions for the same listeners, stimuli, and procedures from Marrone et al. (in press) are given for comparison. In the unaided conditions from Marrone et al. (in press), the target was set to 30 dB SL re: speech identification threshold in quiet, which is roughly equivalent to applying linear uniform gain of 30 dB. Inspection of Table 4 indicates that when the three talkers were colocated, group mean performance was similar across listening conditions and room conditions. Threshold T/Ms ranged from 4.5 to 6.5 dB, indicating that the target was just higher in level than the combined two-talker masker (i.e., the T/M re: each individual masker exceeded 3 dB). However, a somewhat larger range of performance was observed when the three talkers were spatially separated. In that case, the lowest (best) T/Ms at threshold occurred in the low-reverberation room and the highest (poorest) T/Ms at threshold occurred in the unilateral aided conditions in the more reverberant room. In general, the older listeners had slightly higher T/Ms at threshold than the younger listeners (about 1–4 dB across conditions).
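The 3 dB criterion follows from adding the powers of the two maskers. The sketch below assumes the two masker talkers are independent, so that their intensities sum incoherently; two equal-level maskers are then 10·log10(2) ≈ 3.01 dB more intense than either one alone. The function names are illustrative.

```python
import math


def combined_level_db(*levels_db):
    """Level of independent (incoherently summed) sources: 10*log10 of summed intensities."""
    return 10.0 * math.log10(sum(10.0 ** (level / 10.0) for level in levels_db))


def tm_re_combined_maskers(tm_per_masker_db, n_maskers=2):
    """Convert T/M re: one masker to T/M re: the summed (equal-level) maskers."""
    return tm_per_masker_db - 10.0 * math.log10(n_maskers)


print(round(combined_level_db(50.0, 50.0), 2))  # 53.01: two equal maskers add about 3 dB
print(round(tm_re_combined_maskers(4.5), 1))    # 1.5: target still above the summed maskers
print(round(tm_re_combined_maskers(6.5), 1))    # 3.5
```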

Table 4.

Means and Standard Deviations for Target-to-Masker Ratios (T/Ms) at Coordinate Response Measure (CRM) Identification Threshold (in dB) in the Colocated and Spatially Separated Conditions for the Bilateral Aided, Unilateral Aided, and Unaided Listening Conditions.

| Measure | HL Listener Group | Unaided, Low Reverberation | Unaided, High Reverberation | Bilateral Aided, Low Reverberation | Bilateral Aided, High Reverberation | Unilateral Aided, Low Reverberation | Unilateral Aided, High Reverberation |
|---|---|---|---|---|---|---|---|
| T/M at 0° | Younger | 4.8 (1.5) | 4.9 (2.3) | 4.8 (1.7) | 4.6 (1.9) | 4.5 (1.5) | 4.8 (2.3) |
| | Older | 6.4 (2.2) | 6.3 (1.8) | 5.5 (1.1) | 6.5 (1.5) | 5.4 (1.2) | 6.1 (1.4) |
| T/M at ±90° | Younger | −0.1 (3.5) | 1.9 (4.0) | 0.4 (3.3) | 1.9 (4.0) | 1.3 (3.6) | 3.7 (3.5) |
| | Older | 3.5 (4.4) | 4.9 (2.3) | 3.7 (3.9) | 5.9 (3.3) | 4.2 (3.5) | 6.0 (3.0) |
| SRM | Younger | 4.9 (2.5) | 3.0 (2.1) | 4.4 (2.3) | 2.7 (2.5) | 3.2 (2.9) | 1.1 (1.7) |
| | Older | 2.9 (2.7) | 1.4 (1.9) | 1.8 (2.9) | 0.6 (2.4) | 1.3 (2.8) | 0.1 (2.1) |

NOTES: HL = hearing loss. SRM = spatial release from masking. SRM in dB is also given (difference in T/M between the spatially separated and colocated conditions calculated on an individual listener basis, then averaged). Data for the unaided condition are from Marrone et al., in press. This condition shows performance without hearing aids, although uniform gain was applied to the loudspeakers to raise the target level to 30 dB SL re: speech identification threshold in quiet.

Spatial Benefit

The benefit of spatial separation between talkers was measured on an individual listener basis by subtracting the T/M at threshold when the talkers were spatially separated from the T/M at threshold when the talkers were colocated. This gives the amount of SRM for an individual listener and was calculated for each aided listening condition in both room conditions. Because there was not an ear difference in SRM between the right and left aided conditions in either room condition (paired t tests; BARE: t = 0.19, p = .849; PLEX: t = 1.52, p = .144), data were collapsed across ear condition to simplify group comparisons between the unilateral aided condition and the other listening conditions.
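The ear comparison and the subsequent collapsing across ears can be sketched as follows. The SRM values below are hypothetical and are not the study's data; the paired t statistic is computed from the within-listener differences with the standard library. A p value would additionally require the t distribution, for example via `scipy.stats.ttest_rel`, which is not used here so that the sketch needs no third-party packages.

```python
import math
import statistics


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for matched samples x and y."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


def collapse_ears(srm_right, srm_left):
    """Average right- and left-aided SRM per listener before the group comparisons."""
    return [(r + l) / 2 for r, l in zip(srm_right, srm_left)]


# Hypothetical SRM values (dB) for five listeners; not data from the study.
srm_right = [3.0, 1.0, 2.5, 0.5, 4.0]
srm_left = [2.0, 1.5, 2.0, 1.0, 3.0]
t, df = paired_t(srm_right, srm_left)
print(f"t({df}) = {t:.2f}")                # small t: no evidence of an ear difference
print(collapse_ears(srm_right, srm_left))  # [2.5, 1.25, 2.25, 0.75, 3.5]
```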

The amount of SRM was used as the dependent variable in statistical analysis by repeated-measures analysis of variance (ANOVA). The within-subjects factors were room reverberation (BARE/PLEX) and listening condition (unaided, bilateral aided, unilateral aided); the between-subjects factor was listener age (younger/older). The unaided data were from the Marrone et al. (in press) study. There were significant main effects of reverberation, F(1, 18) = 29.24, p < .001, and listening condition, F(2, 36) = 10.9, p < .001. The effect of listener age approached but did not reach statistical significance, F(1, 18) = 4.16, p = .056, and, therefore, will not be considered as a separate factor in SRM in the discussion below. There were no statistically significant interactions. Post hoc pairwise comparisons with a Bonferroni correction between the listening conditions revealed that there was not a significant difference (p = .26) in SRM between the bilateral aided condition and the unaided condition (at a similar sensation level) from Marrone et al. (in press). Listeners showed a small but statistically significant advantage (1 dB on average) in SRM when using two aids instead of one (p = .028). There was also a slight but significant difference between listening with one hearing aid and the unaided condition (p = .001), where listening with one aid was 1.6 dB worse on average.
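For the three pairwise comparisons among listening conditions, a Bonferroni correction multiplies each raw p value by the number of comparisons and caps the result at 1. The sketch below illustrates only that step; the raw p values are arbitrary placeholders, not values from the study, and the text does not state how the reported p values were computed beyond naming the correction.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p values: raw p times the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Arbitrary placeholder raw p values for three pairwise comparisons.
comparisons = ["bilateral vs. unaided", "bilateral vs. unilateral", "unilateral vs. unaided"]
adjusted = bonferroni([0.02, 0.2, 0.5])
for name, p in zip(comparisons, adjusted):
    print(f"{name}: adjusted p = {p:.2f}, significant at .05: {p < 0.05}")
```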

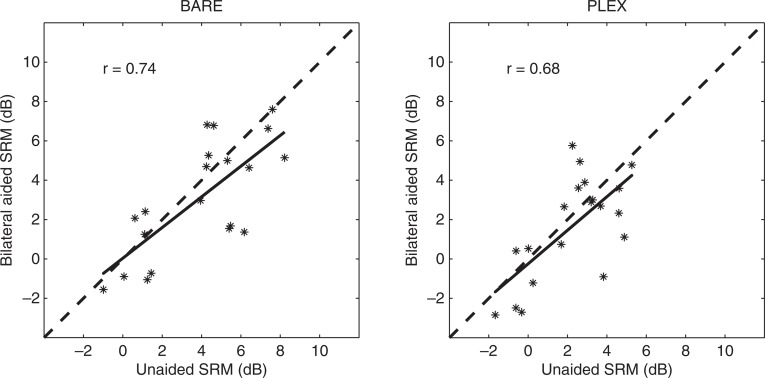

On an individual subject basis, there was a moderately strong and statistically significant correlation between the amount of SRM in the unaided condition and the amount of SRM in the bilateral aided condition in both the low-reverberation room (r = .74, p < .001) and the more reverberant room (r = .68, p < .001). This is illustrated in Figure 1, which shows individual listener data for the amount of SRM in the unaided condition from Marrone et al. (in press) along the abscissa and SRM in the bilateral aided condition along the ordinate for both room conditions (in separate panels). The dashed line represents where the values would fall if the amount of SRM were equivalent in both listening conditions. In all, 14 of the 20 listeners had less than a 2 dB difference between the bilateral aided condition and the unaided condition at an equivalent sensation level. There was no relationship between the listener's threshold in quiet (an estimate of the amount of HL) and the difference in performance between the bilateral aided condition and the unaided condition in the low-reverberation room (r = −.04, p = .88). The mild inverse correlation in the more reverberant room did not reach statistical significance (r = −.43, p = .062).

Figure 1.

A comparison of bilateral aided SRM and unaided SRM from Marrone et al. (in press). Results were obtained at a comparable sensation level on an individual listener basis. The dashed line along the diagonal illustrates equivalent SRM in the two conditions. The solid line represents the best fit for the data.
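The two summaries used with Figure 1, the Pearson correlation between unaided and bilateral aided SRM and the count of listeners within 2 dB of the diagonal, can be computed as below. The SRM values are hypothetical and serve only to exercise the functions; Python 3.10 and later also provide `statistics.correlation` for the same coefficient.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between paired samples x and y."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def n_near_identity(x, y, tolerance_db=2.0):
    """Count listeners whose two SRM values differ by less than the tolerance."""
    return sum(abs(a - b) < tolerance_db for a, b in zip(x, y))


# Hypothetical per-listener SRM (dB); not data from the study.
srm_unaided = [5.0, 3.0, 1.0, 6.0, 2.0]
srm_bilateral = [4.0, 3.5, -1.5, 5.0, 2.5]
print(round(pearson_r(srm_unaided, srm_bilateral), 2))
print(n_near_identity(srm_unaided, srm_bilateral))  # 4 of 5 within 2 dB
```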

Given that there was not a significant difference in spatial benefit observed in the bilateral aided condition and the unaided condition at a comparable sensation level, the question arose whether the uniform gain provided at the input to the loudspeakers was similar to the gain applied by the hearing aids. An analysis of the difference in level at the eardrum between the two conditions was performed. A comparison of the long-term average spectrum for the Frye digital speech signal used in the real-ear measurements and the CRM stimuli used in the experiment revealed that the spectra were quite similar and in particular had the same high-frequency roll-off (6 dB per octave after 1000 Hz). Consequently, the real-ear measurements were used to estimate the frequency response in the ear at the target levels used in the two listening conditions. The real-ear unaided response (REUR) at the target level used in the unaided condition was subtracted from the real-ear-aided response (REAR) at the target level used in the bilateral aided condition. For all listeners, the aided levels were higher than those in the unaided condition for frequencies above 1000 Hz, meaning that the hearing aids provided frequency-specific improvements in audibility over the uniform gain provided by the amplifier and loudspeaker in the unaided condition.
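The per-frequency comparison amounts to subtracting the REUR from the REAR at each measurement frequency and checking the sign above 1000 Hz. The eardrum levels in this sketch are hypothetical values for a single ear, chosen only to illustrate the computation; they are not measurements from the study.

```python
# Hypothetical eardrum levels (dB SPL) for one ear at the respective target levels.
REAR = {250: 62.0, 500: 66.0, 1000: 65.0, 2000: 62.0, 3000: 58.0, 4000: 52.0}
REUR = {250: 63.0, 500: 65.0, 1000: 64.0, 2000: 55.0, 3000: 50.0, 4000: 46.0}


def aided_minus_unaided(rear_db, reur_db):
    """Per-frequency REAR - REUR; positive values mean the aids delivered more level."""
    return {f: rear_db[f] - reur_db[f] for f in sorted(rear_db)}


def aided_higher_above(diff_db, cutoff_hz=1000):
    """True if the aided level exceeds the unaided level at every frequency above the cutoff."""
    return all(d > 0 for f, d in diff_db.items() if f > cutoff_hz)


diff = aided_minus_unaided(REAR, REUR)
print(diff)                      # {250: -1.0, 500: 1.0, 1000: 1.0, 2000: 7.0, 3000: 8.0, 4000: 6.0}
print(aided_higher_above(diff))  # True
```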

To test the hypothesis that the amount of SRM could be related to the additional high-frequency amplification in the aided condition, a correlation analysis was conducted. To obtain a single value for the difference in high-frequency response between the conditions (high-frequency benefit), the real-ear unaided response values at the target level at 2 and 3 kHz for each ear were averaged and subtracted from the corresponding average of the real-ear aided response values at the target level. The correlation between high-frequency benefit and SRM was stronger for the more reverberant room, but neither correlation reached statistical significance (BARE: r = .2, p = .393; PLEX: r = .43, p = .058).
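The high-frequency benefit can be sketched as a difference of two means. This assumes that the four values (2 and 3 kHz in each ear) were pooled into one average for each response type; the data layout, function name, and levels below are hypothetical. The resulting per-listener values would then be correlated with SRM across listeners.

```python
def high_frequency_benefit(rear, reur, freqs_hz=(2000, 3000)):
    """Mean REAR at 2 and 3 kHz across both ears minus the corresponding mean REUR (dB).

    rear, reur: dicts mapping ear -> {frequency_hz: level_db}.
    """
    aided = [rear[ear][f] for ear in rear for f in freqs_hz]
    unaided = [reur[ear][f] for ear in reur for f in freqs_hz]
    return sum(aided) / len(aided) - sum(unaided) / len(unaided)


# Hypothetical levels (dB SPL) for one listener; not data from the study.
rear = {"right": {2000: 62.0, 3000: 58.0}, "left": {2000: 60.0, 3000: 56.0}}
reur = {"right": {2000: 55.0, 3000: 50.0}, "left": {2000: 54.0, 3000: 49.0}}
print(high_frequency_benefit(rear, reur))  # 7.0 (59.0 aided minus 52.0 unaided)
```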

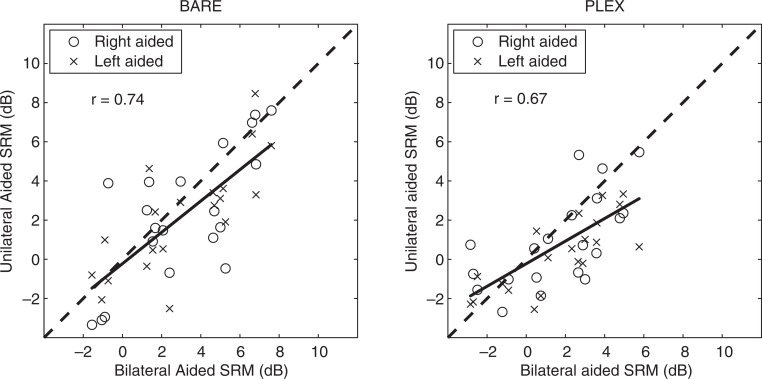

Figure 2 shows individual data for the unilateral versus bilateral aided comparison for both room conditions. Each listener has two points in each panel; the right aided values are represented by circles and the left aided values are represented by crosses. Note that some listeners were still able to achieve a small amount of SRM with one hearing aid. The amount of spatial release in the unilateral aided condition was correlated with that in the bilateral aided condition in both room conditions (BARE: r = .74, p < .001; PLEX: r = .67, p < .001). There was considerable interindividual variability in the amount of unilateral aided SRM (ranging from −2.6 to 7.9 dB in BARE and from −2 to 4 dB in PLEX). One possible explanation is that the amount of unilateral aided SRM depended on how audible the target was in the unaided ear. This was explored in an analysis presented in Figure 3, where the amount of SRM in the unilateral aided condition is plotted as a function of the sensation level of the target in the unaided ear (re: unaided threshold in quiet). Data are shown for the low-reverberation condition; results for the more reverberant room were equivalent. Individual values for the right aided condition are represented as circles and the crosses represent the left aided condition. The square with error bars represents the mean and standard deviation for the NH group listening with an earplug and earmuff on one ear (n = 36 ears). There is a dashed line drawn at 0 dB SRM for reference, and the straight line represents the best-fit regression line to the data. There was a moderately strong correlation between the amount of unilateral aided SRM and the sensation level of the target in the unaided ear (r = .62, p < .001).

Figure 2.

The relationship between the amount of SRM in the bilateral aided condition and the amount of release in the unilateral aided conditions. The dashed line along the diagonal represents performance that is equivalent in the two conditions. The solid line represents the best fit for the data.

Figure 3.

The amount of SRM in the unilateral aided condition as a function of the sensation level of the stimuli in the unaided ear. The square symbol represents the mean performance for the NH listeners when using an earplug and earmuff to occlude one ear and the error bars represent ±1 standard error of the mean. The dashed line represents the best-fit line to the data and the dotted line is a reference line to illustrate 0 dB SRM.
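The Figure 3 analysis can be sketched as an ordinary least-squares regression of unilateral aided SRM on the sensation level of the target in the unaided ear. This assumes that SL is the target presentation level minus the listener's unaided threshold in quiet, which is how the parenthetical in the text is read here; the function names and data points are hypothetical.

```python
def sensation_level(target_db_spl, unaided_threshold_db_spl):
    """SL of the target in the unaided ear (assumed: level minus unaided threshold in quiet)."""
    return target_db_spl - unaided_threshold_db_spl


def least_squares_line(x, y):
    """Ordinary least-squares slope and intercept of y regressed on x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx


# Hypothetical listeners: unilateral SRM rises with the SL in the unaided ear.
sl_db = [-5.0, 0.0, 5.0, 10.0, 15.0]
srm_db = [-1.0, 0.0, 1.0, 2.0, 3.0]
slope, intercept = least_squares_line(sl_db, srm_db)
print(f"SRM = {slope:.2f} * SL + {intercept:.2f}")  # SRM = 0.20 * SL + 0.00
print(sensation_level(65.5, 45.0))                 # 20.5 dB SL
```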

To investigate whether there was a relationship between listener age and unilateral SRM, additional correlation analyses were performed. There was not a correlation between unilateral aided SRM and listener age in either room condition (BARE: r = −.28, p = .24; PLEX: r = −.25, p = .28). There was also not a correlation between age and the difference in the amount of SRM in the bilateral and unilateral aided conditions in either room (BARE: r = −.18, p = .45; PLEX: r = −.34, p = .14).

Discussion

The purpose of the current study was to examine the benefit of spatial separation between multiple talkers in a sample of experienced hearing aid users wearing their personal hearing aids. On average, the amount of SRM with bilateral hearing aids was slightly less than, but not significantly different from, that obtained without hearing aids at a comparable sensation level. This result should not be interpreted as implying that the hearing aids do not provide benefit in this type of listening situation, because the presentation level in the unaided condition was equivalent to providing a substantial amount of uniform amplification and is, therefore, not representative of unaided listening in daily life. There was slightly, but significantly, less SRM in the unilateral aided condition than in either the bilateral aided condition or the unaided condition.

Overall, the listeners' hearing aids did provide additional high-frequency gain as compared to the relatively uniform gain provided by the loudspeakers in the unaided condition. However, this was not related to the amount of SRM observed, although there was a trend toward improved spatial benefit in the more reverberant room with more high-frequency gain (and presumably better audibility). The measured high-frequency gain at the listeners' preferred hearing aid settings was often lower than a widely used prescriptive target (NAL-RP) that was calculated based on their current hearing thresholds. Therefore, it is possible that the performance observed in this group, although representative of their current hearing aid use, may not reflect their best possible performance.

Although we might have hoped that bilateral amplification would increase SRM by improving perceptual segregation based on improved high-frequency audibility, it could also have had the opposite effect if the two aids altered important ITDs or were poorly matched such that integration of information across the ears was adversely affected. Instead, the current result is somewhat encouraging and suggests that improvements in hearing aid design, fitting, and use may lead to greater benefits. Furthermore, Neher, Behrens, Kragelund, and Petersen (2007) have recently found that some listeners can achieve aided performance benefits in multitalker spatially separated conditions through training. These possible benefits, acoustic and otherwise, could only be realized, though, if the fundamental limitation on SRM is not determined by the degree and nature of the hearing impairment. It remains unclear why listeners with HL do not benefit more from spatial separation between multiple talkers. In comparison to the NH listeners in Marrone et al. (in press), bilateral aided spatial benefit is around 8 dB less on average. In this task, there is greatly reduced opportunity to benefit from better ear listening (cf. Marrone et al., 2008a). We speculate that under these conditions SRM is reduced in listeners with HL because of poorer perceptual segregation of sources and/or a reduced ability to focus attention at a point in space. It may also be limited by increased energetic masking. Consequently, it may be that the best spatial benefit that can occur with hearing aid fittings is to preserve whatever SRM is present without hearing aids, unless the amplification provided can strengthen access to perceptual segregation cues.

The symmetric placement of the maskers eliminates the complication of whether a single hearing aid is on the acoustically better or poorer ear. Listeners were able to achieve a small amount of spatial release with one hearing aid and performance with one aid was correlated with performance with two aids. Overall, the unilateral aided results suggest that listeners are using input from the unaided ear to obtain a small binaural benefit. This was in contrast to the monaural control condition, in which NH listeners with one ear occluded by an earplug and earmuff did not show SRM. Several other studies have shown binaural benefit with interaural asymmetries in the input signals (Festen & Plomp, 1986; MacKeith & Coles, 1971; McCullough & Abbas, 1992). Of particular relevance, Festen and Plomp (1986) found that listeners were able to use input from the unaided ear when only one ear was aided, particularly when the listener's PTA was less than 50 dB HL such that the noise level was always above threshold in the unaided ear. Two aids were better than one when the noise was on the same side as the hearing aid and the listener's PTA was greater than 50 dB HL. In the current data, there was a positive correlation between the sensation level of the target in the unaided ear and unilateral SRM. Stated differently, this means that the degree to which listeners are able to use information from two ears with only one hearing aid is dependent on the amount of HL in the unaided ear. Similarly, an interaction between hearing level and the effect of unilateral versus bilateral amplification has been reported in studies of horizontal localization. For example, Byrne, Noble, and LePage (1992) found that localization is relatively good when listeners are fit either unilaterally or bilaterally for mild HL up to 50 dB HL, but that poorer localization accuracy was apparent at greater degrees of loss for unilateral fittings than for bilateral fittings.

Overall, the trends in the current data are the same as those observed in the study of spatial separation between speech and a single speech-shaped noise masker by Festen and Plomp (1986). Threshold T/Ms in the Festen and Plomp study were lower than in the current study, likely as a result of differences in the number and type of masking sources used. Despite these differences, it is interesting to note that the interactions between spatial benefit and listening condition were similar between the two studies. Finally, listeners in both studies used their own hearing aids at their preferred settings. Much like the current findings, Festen and Plomp found that, on average, listeners chose a level of applied gain that did not necessarily compensate for their HL in some situations. In fact, several of their listeners used an average gain close to zero.

Based in part on the results of Novick et al. (2001), it was hypothesized that the bilateral advantage might have been larger in this study in the condition having the most reverberation. However, there was not a difference in bilateral advantage between the two room conditions tested here. In the Novick et al. study, 10 listeners with mild-to-moderate sensorineural HL were tested on the hearing in noise test (HINT) and speech in noise (SPIN) test in two room conditions, an anechoic room and a reverberant classroom (reverberation time listed at 0.67 sec.), while listening with hearing aids (Oticon DigiFocus ITE). They found no difference between unilateral and bilateral fittings on the HINT in either room or on the SPIN in the anechoic chamber. However, in the reverberant room, listeners performed significantly better on the SPIN test with two hearing aids as compared to one. They speculated that this was because the sound field is asymmetric in the reverberant room, so that cues at one ear are not the same as those at the other ear. Specifically, because the multitalker babble in the SPIN was not spectrally matched to the target talker, they hypothesized that the listener might be able to make use of small differences between the target and the babble at the two ears. They concluded that a bilateral fitting may, therefore, be more important in a reverberant environment. In the current study, SRM was on average approximately 1 dB better in the bilateral aided condition than in the unilateral aided condition for both reverberation conditions. Across individual subjects, this difference ranged from −2.9 to 4.5 dB SRM in the low reverberant room and from −2.1 dB to 3.0 dB SRM in the more reverberant room. However, there was an interaction between the aided listening condition and room reverberation on T/M at threshold. 
The best aided T/Ms were obtained for bilateral fittings in the low-reverberant condition and the worst T/Ms at threshold across the aided conditions occurred when listeners used a single hearing aid in the more reverberant room. This result is contrary to the position that listeners would benefit by removing one hearing aid in a noisy environment (e.g., Walden & Walden, 2005). Furthermore, there was not a relationship between increasing age and the difference between SRM with a single hearing aid as compared to SRM with two hearing aids. Although the older listeners in the current study tended to show less SRM than their younger counterparts, their performance was essentially the same across listening conditions, and the effect of age approached but did not reach statistical significance.

Summary

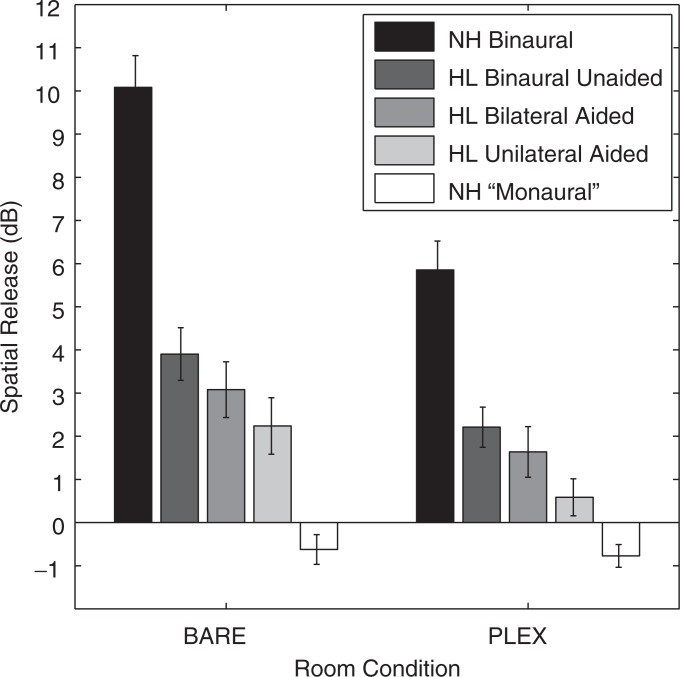

This study evaluated the effect of amplification on the benefit of spatial separation between multiple talkers in rooms with reverberation. We compared aided performance with one or two hearing aids to an unaided condition (Marrone et al., in press) in which the speech was presented at or near an equivalent sensation level. A summary of the results, including data from Marrone et al. (in press) for comparison, is given in Figure 4. Overall, with or without hearing aids, hearing-impaired listeners showed much less SRM than age-matched NH listeners. There was not a significant difference in SRM between listening to speech with uniform gain applied to the source and listening with personal hearing aid amplification at user-adjusted settings. There was a small but significant advantage of using bilateral aids as compared to unilateral aids for SRM. Further, the amount of SRM obtained with a unilateral aid was strongly related to the amount of spatial release obtained with bilateral hearing aids. Listeners with milder HL were able to make use of binaural information from the unaided ear and achieve a small SRM in the unilateral aided listening condition. In a control condition with NH listeners, SRM was not observed when listeners had one ear occluded by an earplug and earmuff that greatly reduced the sensation level of the stimuli in one ear. Finally, in the cases where the talkers were spatially separated, there was an interaction between aided listening condition and the amount of room reverberation such that the best performance when using hearing aids was obtained for a bilateral fitting in low reverberation and the worst performance occurred when listening with a single hearing aid in higher reverberation.

Figure 4.

A summary of the amount of SRM in the aided listening conditions for the two levels of room reverberation (BARE and PLEX). The NH binaural and HL binaural unaided data are replotted from Marrone et al. (2008b) for comparison. Error bars represent ± 1 standard error of the mean.

Acknowledgments

This work was supported by grants from the National Institute on Deafness and Other Communication Disorders (NIDCD). Portions of the data were presented at the 153rd meeting of the Acoustical Society of America in Salt Lake City, UT, June 4–4, 2007 and at the International Symposium on Auditory and Audiological Research in Helsingør, Denmark, August 29–29, 2007. The authors wish to thank the listeners who participated in the experiment for their time and effort, as well as Virginia Best, Nathaniel Durlach, Barbara Shinn-Cunningham, H. Steven Colburn, and Melanie Matthies for many helpful discussions of the results. The authors also acknowledge the constructive suggestions of the two anonymous reviewers and Dr. Arlene Neuman.

Note

The term unaided as used in this article means that the listener was not wearing his or her personal hearing aids. However, under many conditions the stimuli were presented through loudspeakers at specified suprathreshold levels providing essentially uniform amplification.

References

- Ahlstrom J. B., Horwitz A. R., Dubno J. R. (2006, August). Spatial benefit of hearing aids. Presented at the International Hearing Aids Research Conference, Lake Tahoe, CA.

- Arbogast T. L., Mason C. R., Kidd G., Jr. (2002). The effect of spatial separation on informational and energetic masking of speech. Journal of the Acoustical Society of America, 112, 2086–2098 [DOI] [PubMed] [Google Scholar]

- Arbogast T. L., Mason C. R., Kidd G., Jr. (2005). The effect of spatial separation on informational masking of speech in normal-hearing and hearing-impaired listeners. Journal of the Acoustical Society of America, 117, 2169–2180 [DOI] [PubMed] [Google Scholar]

- Bolia R. S., Nelson W. T., Ericson M. A., Simpson B. D. (2000). A speech corpus for multitalker communications research. Journal of the Acoustical Society of America, 107, 1065–1066 [DOI] [PubMed] [Google Scholar]

- Bregman A. (1990). Auditory scene analysis: The perceptual organization of sound. Cambridge, MA: MIT Press [Google Scholar]

- Broadbent D. E. (1958). Perception and communication. London: Pergamon Press [Google Scholar]

- Bronkhorst A. (2000). The cocktail party phenomenon: A review of research on speech intelligibility in multiple-talker conditions. Acustica Acta Acustica, 86, 117–128 [Google Scholar]

- Byrne D., Noble W. (1998). Optimizing sound localization with hearing aids. Trends in Amplification, 3, 51–73 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Byrne D., Noble W., LePage B. (1992). Effects of long-term bilateral and unilateral fitting of different hearing aid types on the ability to locate sounds. Journal of the American Academy of Audiology, 3, 369–382 [PubMed] [Google Scholar]

- Byrne D., Parkinson A., Newall P. (1990). Hearing aid gain and frequency response requirements for the severely/profoundly hearing impaired. Ear and Hearing, 11, 40–49 [DOI] [PubMed] [Google Scholar]

- Cherry E. C. (1953). Some experiments on the recognition of speech, with one and with two ears. Journal of the Acoustical Society of America, 25, 975–979 [Google Scholar]

- Colburn H. S., Shinn-Cunningham B. A., Kidd G., Jr., Durlach N. I. (2006). The perceptual consequences of binaural hearing. International Journal of Audiology, 45, 34–44 [DOI] [PubMed] [Google Scholar]

- Darwin C. J. (2008). Spatial hearing and perceiving sources. In Yost W. A., Popper A. N., Fay R. R. (Eds.), Auditory perception of sound sources (pp. 215–232). New York: Springer Science + Business Media LLC [Google Scholar]

- Darwin C. J., Carlyon R. P. (1995). Auditory grouping. In Moore B. C. J. (Ed.), Hearing (pp. 387–424). London: Academic Press [Google Scholar]

- Dubno J. R., Ahlstrom J. B., Horwitz A. R. (2002). Spectral contributions to the benefit from spatial separation of speech and noise. Journal of Speech, Language, and Hearing Research, 45, 1297–1310 [DOI] [PubMed] [Google Scholar]

- Ebata M. (2003). Spatial unmasking and attention related to the cocktail party problem. Acoustical Science and Technology, 24, 208–219 [Google Scholar]

- Festen J. M., Plomp R. (1986). Speech-reception threshold in noise with one and two hearing aids. Journal of the Acoustical Society of America, 79, 465–471.

- Freyman R. L., Helfer K. S., McCall D. D., Clifton R. K. (1999). The role of perceived spatial separation in the unmasking of speech. Journal of the Acoustical Society of America, 106, 3578–3588.

- Gatehouse S., Noble W. (2004). The Speech, Spatial, and Qualities of Hearing Scale (SSQ). International Journal of Audiology, 43, 85–99.

- Harkins J., Tucker P. (2007). An internet survey of individuals with hearing loss regarding assistive listening devices. Trends in Amplification, 11, 91–100.

- Hawkins D. B., Mueller H. G. (1998). Procedural considerations in probe-microphone measurements. In Mueller H. G., Northern J. L., Hawkins D. B. (Eds.), Probe microphone measurements: Hearing aid selection and assessment (pp. 67–89). San Diego, CA: Singular Publishing.

- Kalluri S., Edwards B. (2007, September). Impact of hearing impairment and hearing aids on benefits due to binaural hearing. Proceedings of the International Congress on Acoustics. Madrid, Spain.

- Keidser G., Rohrseitz K., Dillon H., Hamacher V., Carter L., Rass U., et al. (2006). The effect of multi-channel wide dynamic range compression, noise reduction, and the directional microphone on horizontal localization performance in hearing aid wearers. International Journal of Audiology, 45, 563–579.

- Kidd G., Jr., Arbogast T. L., Mason C. R., Gallun F. J. (2005a). The advantage of knowing where to listen. Journal of the Acoustical Society of America, 118, 3804–3815.

- Kidd G., Jr., Mason C. R., Brughera A., Hartmann W. M. (2005b). The role of reverberation in release from masking due to spatial separation of sources for speech identification. Acta Acustica united with Acustica, 91, 526–536.

- Kidd G., Jr., Mason C. R., Richards V. M., Gallun F. J., Durlach N. (2008). Informational masking. In Yost W. A., Popper A. N., Fay R. R. (Eds.), Auditory perception of sound sources (pp. 143–190). New York: Springer Science + Business Media LLC.

- Levitt H. (1971). Transformed up-down methods in psychoacoustics. Journal of the Acoustical Society of America, 49, 467–477.

- MacKeith N. W., Coles R. R. (1971). Binaural advantages in hearing of speech. Journal of Laryngology and Otology, 85, 213–232.

- Marrone N., Mason C. R., Kidd G., Jr. (2008a). Tuning in the spatial dimension: Evidence from a masked speech identification task. Journal of the Acoustical Society of America, 124, 1146–1158.

- Marrone N., Mason C. R., Kidd G., Jr. (in press). Effects of hearing loss and age on the benefit of spatial separation between multiple talkers in reverberant rooms. Journal of the Acoustical Society of America.

- McCullough J. A., Abbas P. J. (1992). Effects of interaural speech-recognition differences on binaural advantage for speech in noise. Journal of the American Academy of Audiology, 3, 255–261.

- Moore B. C. J. (2008). The choice of compression speed in hearing aids: Theoretical and practical considerations and the role of individual differences. Trends in Amplification, 12, 103–112.

- Nabelek A., Pickett J. M. (1974). Monaural and binaural speech perception through hearing aids under noise and reverberation with normal and hearing-impaired listeners. Journal of Speech and Hearing Research, 17, 724–739.

- Neher T., Behrens T., Kragelund L., Petersen A. S. (2007, August). Spatial unmasking in aided hearing-impaired listeners and the need for training. In Dau T., Buchholz J. M., Harte J. M., Christiansen T. U. (Eds.), Auditory signal processing in hearing-impaired listeners. 1st International Symposium on Auditory and Audiological Research (pp. 515–522). Copenhagen, Denmark: Centertryk A/S.

- Noble W., Gatehouse S. (2006). Effects of bilateral versus unilateral hearing aid fitting on abilities measured by the Speech, Spatial, and Qualities of Hearing Scale (SSQ). International Journal of Audiology, 45, 172–181.

- Novick M. L., Bentler R. A., Dittberner A., Flamme G. (2001). Effects of release time and directionality on unilateral and bilateral hearing aid fittings in complex sound fields. Journal of the American Academy of Audiology, 12, 534–544.

- Plomp R., Mimpen A. M. (1981). Effect of the orientation of the speaker's head and the azimuth of a noise source on the speech-reception threshold for sentences. Acustica, 48, 325–328.

- Shinn-Cunningham B. G., Ihlefeld A., Satyavarta, Larson E. (2005). Bottom-up and top-down influences on spatial unmasking. Acta Acustica united with Acustica, 91, 967–979.

- Singh G., Pichora-Fuller K., Schneider B. (2008). The effect of age on auditory spatial attention in conditions of real and simulated spatial separation. Journal of the Acoustical Society of America, 124, 1294–1305.

- Van den Bogaert T., Klasen T. J., Moonen M., Van Deun L., Wouters J. (2006). Horizontal localization with bilateral hearing aids: Without is better than with. Journal of the Acoustical Society of America, 119, 515–526.

- Walden T. C., Walden B. E. (2005). Unilateral versus bilateral amplification for adults with impaired hearing. Journal of the American Academy of Audiology, 16, 574–584.

- Yost W. A. (1997). The cocktail party problem: Forty years later. In Gilkey R. A., Anderson T. R. (Eds.), Binaural and spatial hearing in real and virtual environments (pp. 329–348). Mahwah, NJ: Lawrence Erlbaum.