Abstract

A software system, SPATS (patent pending), that tests and trains important bottom-up and combined bottom-up/top-down speech-perception skills is described. Bottom-up skills are the abilities to identify the constituents of syllables (onsets, nuclei, and codas) in quiet and in noise as produced by eight talkers. Top-down skills are the abilities to use knowledge of linguistic context to identify words in spoken sentences. The sentence module in SPATS emphasizes combined bottom-up/top-down abilities in perceiving sentences in noise. The word-initial onsets, stressed nuclei, and word-final codas are ranked in importance and grouped into subsets based on their importance. Testing uses random presentation of all the items included in a subset. Training in Quiet (SNR = 40 dB) or in Noise (SNR = 5 dB) is adaptively focused on each listener’s learnable items of intermediate difficulty. Alternatively, SNR-adaption training uses Kaernbach’s algorithm to find the SNR required for a target percent correct. The sentence module trains the combination of bottom-up (hearing) with top-down (use of linguistic context) abilities to identify words in meaningful sentences in noise. Scoring in the sentence module is objective and automatic.

I. INTRODUCTION

This paper describes the Speech Perception Assessment and Training System (SPATS, patent pending) developed by the authors at Communication Disorders Technology, Inc. The original motivation for the work was to provide a much more detailed assessment of the speech-perception problems encountered by hearing-impaired clients than was previously available and then, based on that assessment, to offer a training program designed to improve the clients’ abilities to understand speech in everyday situations. The companion paper (ASA154-4aPP10) gives the results of the use of SPATS with users of hearing aids and cochlear implants. The present paper describes SPATS and the measures of performance it produces.

II. BACKGROUND

There is growing evidence that computer-based auditory training is beneficial and can be accomplished in the hearing clinic or at home (Burk and Humes, 2007; Burk and Humes, 2008; Burk et al., 2006; Fu and Galvin, 2007; Miller et al., 2004; Miller et al., 2005; Miller et al., 2007; Stecker et al., 2006; Sweetow and Henderson-Sabes, 2004; Sweetow and Palmer, 2005; Sweetow and Sabes, 2006). SPATS was developed over approximately the same time span as many of the cited papers, but differs from them in the level of detail of the assessment component and in the kinds of training regimes available. More generally, there is a growing consensus that appropriately selected training regimes can exploit the mechanisms of plasticity inherent in the nervous system (Kraus and Banai, 2007; Bacon, Ed., 2007).

III. MODULES

SPATS has two main modules: A. The Sentence Module and B. The Syllable-Constituent Module. These modules and their elements can be used in isolation or in any combination the supervisor chooses, as explained below in section IV. OPERATING MODES. However, in actual use we have found it advantageous to systematically intermix the use of the sentence module and the syllable-constituent module. It is believed that such intermixing encourages transfer from constituent training to listening to everyday sentences and helps maintain client interest and motivation. The end goals of the system are to evaluate and train the perception of everyday speech.

A. The Sentence Module

The Sentence Module (patent pending) provides practice in top-down and combined top-down and bottom-up speech-perception skills. One thousand sentences were recorded by 15 different talkers. Each is spoken naturally, and the rates of speech, intonation patterns, and stress patterns vary among the talkers. Therefore, most of the usual kinds of phonetic accommodations that occur in everyday speech are included in this corpus. Scoring of the sentence task is objective and entirely computer based.

The Basic Sentence Task

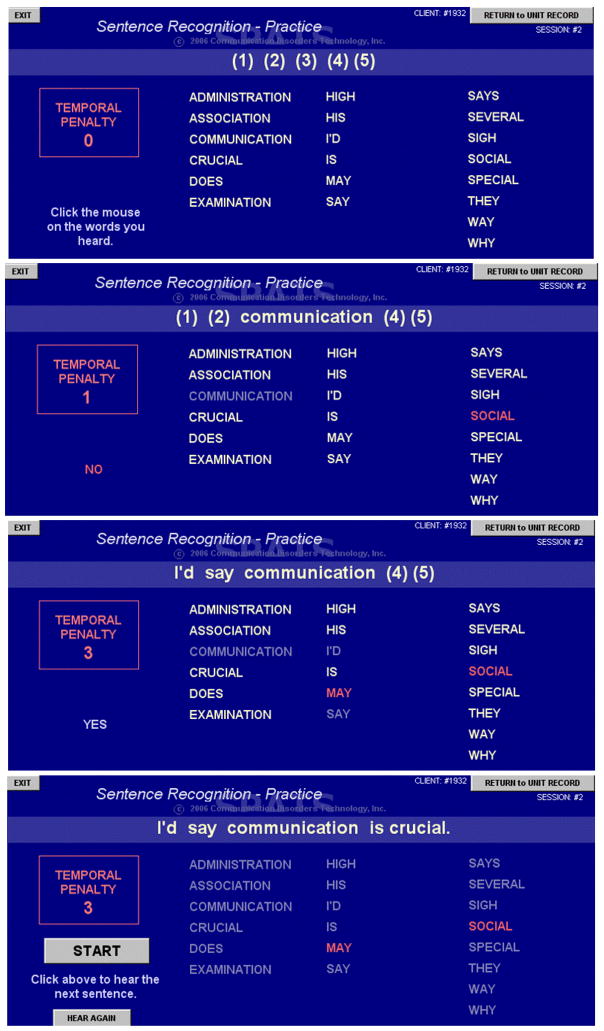

As shown in Figure 1, a spoken sentence of four to seven words is presented. A screen then appears that shows “slots” for each word at the top, while below is an alphabetical list of words that contains the spoken words plus three foils for each. The foils are selected to differ from the spoken words (target words) in the onset, nucleus, or coda. Thus, if a target word was “pat,” a foil might be “brat,” “path,” or “pit.” For many words, foils that differed in only one constituent could not be found. In that case, near approximations were used as foils. The user is instructed to click on the words that they think they heard. Correctly selected words change to blue in the list and also appear in the appropriate slot in the header. Errors turn red and cause the sentence to be replayed. Whenever the listener goes 5 sec without a response, a “temporal penalty” is assessed. Sample screens for a five-word sentence are shown in Figure 1.

Sentences are presented in groups of three. After each group of three sentences, a score is computed based on the number of errors normalized for sentence length and on the number of temporal penalties. The SNR at the beginning of sentence training is set to 0 dB. After each group of three sentences, the SNR is decreased or increased based on the sentence scores. While exact procedures are still under development, the aim is that the client work at SNRs of about 0 to −10 dB. This range requires the combined use of top-down and bottom-up skills. In applications of SPATS to date, we have intermixed training with the sentence module with constituent testing and training. While this sequencing is not required, it is intended to maintain interest in the training and to encourage transfer from constituent training to the perception of fluent, naturally spoken speech.

FIGURE 1.

This shows the sentence response screen for four stages of solution. The upper panel shows the screen the client sees after the first hearing of the sentence. The second panel shows the screen with 1 correct response, 1 incorrect response, and 1 temporal penalty. The third panel shows the screen after 3 correct responses, 2 errors, and 3 temporal penalties. The lowest panel shows the screen at the completion of the sentence with 5 correct responses, 2 errors, and 3 temporal penalties.

B. Syllable Constituent Module

Organization, Ranking, and Lists of Syllable Constituents

The syllable constituent module is designed to test and train the identification of all of the significant components of syllables: onsets, nuclei, and codas. An “Americanized” Celex database of English was used to determine the lexical and textual frequencies of occurrence of onsets in word-initial syllables, of nuclei in the stressed syllables of words, and of word-final codas. This resulted in a list of 45 onsets, 28 nuclei, and 36 codas that were considered to be the significant constituents of stressed syllables in American English (AE). The members of each group of constituents were ranked in importance based on their average ranks in textual and lexical frequency of occurrence. The constituents were then placed in nested groups: Level 1 being the 25% most important of the significant items, Level 2 the 50% most important, Level 3 the 75% most important, and Level 4 being 100% (all) of the items judged to be significant. These sets are shown in Chart 1 below.

Chart 1.

Lists of syllable constituents used in SPATS

Each constituent was spoken by 8 talkers, 4 male and 4 female. The onsets were combined with four vowels (/i/, /ɑ/, /u/, & /ɚ/). The nuclei were only recorded in the hVd consonantal context. The codas were recorded with 5 nuclei (/i/, /ɑ/, /u/, /ɚ/ & /εɫ/). Each constituent type is divided into 4 nested levels. Level 1 = Col. 1, Level 2 = Cols. 1 & 2, Level 3 = Cols. 1, 2 & 3, Level 4 = Cols. 1, 2, 3, & 4. Testing and training can be done at selected Levels or in progressive fashion that proceeds from Level 1 and upgrades to the next Level whenever a criterion score is achieved. The ranks next to each constituent give their relative ranks based on their lexical and textual frequencies of occurrence.

Types of testing and training

Testing (also termed “Benchmark runs”) and training can be done at fixed signal-to-noise ratios (SNRs). SPATS defaults for fixed SNR conditions are: 1) SNR = 40 dB (“Quiet”) and 2) SNR = 5 dB (“Noise”). SPATS noise is 12-talker babble. Testing and training can also be done with SNR Adaption to a target percent correct (TPC). SNR Adaption uses the weighted up-down algorithm of Kaernbach (1991). The TPC is selected based on the client’s percent correct in Quiet (PCquiet, measured at SNR = 40 dB): TPC = 0.67*PCquiet + 13.33. For clinical application, SNR Adaption is not recommended for TPCs less than 50%.
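As a rough illustration of the two rules in this section, the sketch below computes the TPC from the Quiet score and applies one weighted up-down step in the sense of Kaernbach (1991), in which the ratio of the upward to the downward step equals p/(1 − p) for a target proportion correct p. The function names and the 1-dB downward step are assumptions made for the example; the step sizes actually used in SPATS are not stated here.

```python
def target_percent_correct(pc_quiet: float) -> float:
    """TPC = 0.67 * PCquiet + 13.33, with both values in percent."""
    return 0.67 * pc_quiet + 13.33


def weighted_up_down_steps(tpc_percent: float, step_down_db: float = 1.0):
    """Return (step_up_db, step_down_db) for a target percent correct.

    At equilibrium p * step_down == (1 - p) * step_up, so the track
    converges on the SNR that yields proportion correct p.
    The 1-dB default step is an assumption, not a SPATS value.
    """
    p = tpc_percent / 100.0
    if not 0.0 < p < 1.0:
        raise ValueError("target must lie strictly between 0 and 100 percent")
    step_up_db = step_down_db * p / (1.0 - p)
    return step_up_db, step_down_db


def next_snr(snr_db: float, correct: bool, tpc_percent: float,
             step_down_db: float = 1.0) -> float:
    """Lower the SNR after a correct response, raise it after an error."""
    step_up_db, step_down_db = weighted_up_down_steps(tpc_percent, step_down_db)
    return snr_db - step_down_db if correct else snr_db + step_up_db


if __name__ == "__main__":
    tpc = target_percent_correct(88.0)   # client scored 88% in Quiet
    print(round(tpc, 2))
    print(next_snr(5.0, correct=True, tpc_percent=tpc))
    print(next_snr(5.0, correct=False, tpc_percent=tpc))
```

With a 75% target, for example, the upward step is three times the downward step, so one error offsets three correct responses.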

Syllable Constituents Versus Phonemes

In SPATS, we assume that onsets, nuclei, and codas represent the acoustic elements of spoken American English. To illustrate: the allophones of the phoneme /t/ have different acoustic characteristics in /tr-/, /st-/, /str-/, /-nt/, /-st/, or /-lt/. Therefore, consonants and consonant clusters are considered separately as syllable onsets or codas. Also, consonants or clusters are considered to be different when they occur as an onset (e.g. st-) or as a coda (e.g. -st). There are multiple reasons for this approach, but the most compelling one is the lack of predictability from performance on one constituent to that on another. For example, one may be able to discriminate /b-/ from /p-/ but not /br-/ from /pr-/, /bl-/ from /pl-/, or /-p/ from /-b/.

Post-Trial Rehearing

SPATS provides a post-trial rehearing option. After a response, the client may “rehear” the target stimulus and may compare it to the constituent that was incorrectly identified. After an unsure correct response, the client may listen to the stimulus up to three times before proceeding to the next trial. After an incorrect response, the client may rehear the stimulus and the incorrect response (same talker and context) each up to three times before proceeding to the next trial. The post-trial rehearing option allows the client to listen for differences between the actual stimulus and the sound mistaken for it.

Benchmark Runs (Tests)

Benchmark runs (Tests) are conducted as follows. Each item in a set (e.g. Nuclei Level 1 or Onsets Level 3) is presented n times (usually, n = 4). Items are selected at random without replacement until each item has been presented n times. Talkers and contexts are selected at random without replacement for a given run. For example, if the stimulus set is Onsets Level 3, there are 33 items on the screen. Each item would be presented 4 times for a total of 132 trials. The exact combinations of talkers and contexts are chosen randomly for each such test. A benchmark run always begins with all items assigned an Item Mastery Score of 50. The Item Mastery Score is defined in the section entitled “Adaptive Item Selection (AIS)” below. See also the section entitled “List of Five Performance Measures for Constituent Benchmark and Training Runs.”
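The item-ordering rule for a benchmark run can be sketched as a shuffle of a pool that holds n copies of each item, which is equivalent to drawing items at random without replacement until each has been presented n times. This is only an illustration: the function name and the placeholder item labels are hypothetical, and the random pairing of talkers and contexts is not modeled.

```python
import random
from collections import Counter


def benchmark_trial_order(items, n=4, rng=None):
    """Return a random trial order in which every item occurs exactly n times."""
    rng = rng or random.Random()
    pool = [item for item in items for _ in range(n)]  # n copies of each item
    rng.shuffle(pool)                                  # sampling without replacement
    return pool


if __name__ == "__main__":
    # Placeholder labels standing in for the 33 onsets of Onsets Level 3.
    onsets_level_3 = [f"onset_{i:02d}" for i in range(1, 34)]
    trials = benchmark_trial_order(onsets_level_3, n=4, rng=random.Random(1))
    print(len(trials))                    # 33 items x 4 presentations
    print(set(Counter(trials).values()))  # every item presented 4 times
```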

Training Runs

The number of trials in a training run is n times the number of response items (usually, n = 4). However, items are not presented equally often. Rather, Adaptive Item Selection (AIS, patent pending) is used as described below. By this method, items of intermediate difficulty are presented with greater probability than others. Talkers and contexts are always selected at random. At the beginning of a series of training runs, each item has the Item Mastery Score (IMS) it had at the end of the preceding benchmark run. Thereafter, the IMS’s are carried forward from one training run to the next. When a new benchmark run is inserted, all items are returned to an IMS value of 50 and the process is started over. By inserting a Benchmark run after every few training runs the possibility that an item would be incorrectly trapped at 100 (Very Easy) or at 0 (Very Difficult) is eliminated.

Comparing Performance on Benchmark and Training Runs

One cannot directly compare percent-correct scores on Benchmark and Training runs. Because AIS reduces the probability of easy or very difficult items, a client’s percent correct during training can be either higher or lower than on a Benchmark run. Because for most clients AIS plays relatively more items of intermediate difficulty and relatively fewer easy items, the percent correct scores are lower in training than in benchmark runs. It has been found that the Average Item Mastery Score at the end of a training run provides a good measure of performance and progress. As the client learns the items in the set, the Average IMS for the set increases. An interesting process occurs during training runs. The distribution of IMS’s becomes bimodal as items tend to migrate into the very easy (100) and very difficult (0) groups. When an item in the very easy category is missed, it and the item confused with it drop into the intermediate category and are likely to be presented. Similarly, when an item in the very difficult category is correctly identified, it moves into the intermediate category and its probability of being presented increases. Combined with the post-trial rehearing option, this allows the client to improve his performance based on both short-term and long-term memory. During a training run the client often masters items based on short-term memory and the Average Item Mastery Score at the end of a training run tends to be higher than the overall Percent Correct. As the learning consolidates and becomes secure in long-term memory, the percent correct increases to match the Average IMS and the gap between them disappears.

Adaptive Item Selection (AIS)

Adaptive Item Selection (AIS, patent pending) is used during SPATS training runs at fixed SNRs. The goal is to present learnable items of intermediate difficulty with a much higher probability than those that are either very easy or very difficult. To understand the AIS algorithm, one must first grasp the concept of the Item Mastery Score (IMS). Each item in a set being trained is assigned an IMS of 100, 75, 50, 25, or 0. At the beginning of a Benchmark run, all items are assigned an IMS of 50. When an item is correctly identified, it moves up one step, say from 50 to 75. When an item is presented and missed, or when it is used incorrectly as a response, it moves down one step, say from 100 to 75. [Items with an IMS of 100 cannot move to a higher score. Items with an IMS of 0 cannot move to a lower score.] Each IMS represents a mastery category. As training proceeds, items move from one category to another. To choose an item for presentation, AIS first selects a category. After a category has been selected, an item within the category is randomly selected. The basic paradigm, as used for hearing-impaired listeners, is shown in Table 1.

Table 1.

Probabilities of selecting Item Mastery Categories for a training trial.

| Item Mastery Category | 4 or more Items in All | None in 75 | None in 75, 50 | None in 50, 25 | None in 75, 50, 25 | None in 100, 75 | None in 100, 0 | None in 75, 0 |
|---|---|---|---|---|---|---|---|---|
| 100 very easy | 0.135 | 0.245 | 0.450 | 0.208 | 0.675 | 0.000 | 0.000 | 0.278 |
| 75 easy | 0.450 | 0.000 | 0.000 | 0.692 | 0.000 | 0.000 | 0.563 | 0.000 |
| 50 moderate | 0.250 | 0.455 | 0.000 | 0.000 | 0.000 | 0.602 | 0.313 | 0.515 |
| 25 difficult | 0.100 | 0.182 | 0.333 | 0.000 | 0.000 | 0.241 | 0.125 | 0.206 |
| 0 very difficult | 0.065 | 0.118 | 0.217 | 0.100 | 0.325 | 0.157 | 0.000 | 0.000 |
| Sum | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 |

The probability that a particular item will be selected is the probability that the mastery category will be selected, divided by the number of items in the category [p(IMS)*1/NIMS]. When there are no items in a selected category, the process of category selection is repeated until an item can be found. The middle categories tend to “empty out” as items that can be learned migrate toward IMS = 100, while those that cannot be learned or are very difficult to learn, migrate down to IMS = 0. Special rules govern sampling from categories containing only 1, 2, 3, or 4 items to prevent predictable repetitions of those items. The AIS algorithm has been found to be very effective because it focuses training on the items that the client needs to learn and is able to learn. In effect, the AIS automatically focuses training on each individual client’s learnable, problem sounds.
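The selection and update rules described in this section can be sketched as follows. The base probabilities are those of the first column of Table 1; the other columns of the table are reproduced by renormalizing those values over the occupied categories, which has the same effect as repeating category selection until a non-empty category is drawn. This is an illustrative sketch, not the SPATS implementation: the function names are hypothetical, and the special rules for categories holding only a few items are not modeled.

```python
import random

# Category-selection probabilities when all categories are occupied (Table 1).
BASE_P = {100: 0.135, 75: 0.450, 50: 0.250, 25: 0.100, 0: 0.065}


def category_probabilities(ims_by_item):
    """Renormalize the base probabilities over the occupied categories."""
    occupied = set(ims_by_item.values())
    total = sum(BASE_P[c] for c in occupied)  # assumes at least one item
    return {c: (BASE_P[c] / total if c in occupied else 0.0) for c in BASE_P}


def select_item(ims_by_item, rng):
    """Pick a mastery category, then a random item within that category."""
    probs = category_probabilities(ims_by_item)
    categories = list(probs)
    weights = [probs[c] for c in categories]
    category = rng.choices(categories, weights=weights, k=1)[0]
    members = [item for item, ims in ims_by_item.items() if ims == category]
    return rng.choice(members)


def update_ims(ims_by_item, stimulus, response):
    """Move items one 25-point step, bounded by 0 and 100."""
    if response == stimulus:
        ims_by_item[stimulus] = min(100, ims_by_item[stimulus] + 25)
    else:
        # The missed stimulus and the incorrectly used response both drop.
        ims_by_item[stimulus] = max(0, ims_by_item[stimulus] - 25)
        ims_by_item[response] = max(0, ims_by_item[response] - 25)


if __name__ == "__main__":
    ims = {"p-": 100, "b-": 50, "t-": 25, "d-": 0}  # no item at IMS = 75
    probs = category_probabilities(ims)
    print({c: round(p, 3) for c, p in probs.items()})
    update_ims(ims, stimulus="p-", response="b-")   # "p-" played, "b-" chosen
    print(ims)
    print(select_item(ims, random.Random(0)))
```

For the example state, the renormalized probabilities match the “None in 75” column of Table 1.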

Response Screens

The constituents are organized on response screens as shown in Figures 2, 3, & 4 below. The screens for onsets and codas, Figures 2 & 4, are organized by place, voicing, and manner of articulation. Place of articulation is coded with left-to-right representing front-to-back place of articulation of the distinctive element within a row. Manner and voicing are color coded. Voiceless sounds are on white backgrounds and voiced sounds are on dark or colored backgrounds. Sounds involving laterals are in “lemon-colored” rows. Sounds involving rhotics are in “rose-colored” rows. Sounds involving nasals are in “mango-colored” or “nectarine-colored” rows. Sounds involving glides are in “water-colored” or “aqua” rows. The nucleus screen is shown in Figure 3. Unlike the onset and coda screens, its left-hand column is organized alphabetically. The rhotic monophthongs and diphthongs and the vowels followed by dark-el are aligned with the corresponding items in the left-hand column. Clients are familiarized with a screen before testing or training on its set of constituents. Our experience is that they have little difficulty using any of these screens if their first experience is at Level 1 or Level 2. Most importantly, this organization of the screens may enable the clients to recognize the patterning of their errors.

FIGURE 2.

Response screen for onset testing and training at Level 3. Clients are familiarized with the screens by graduated, programmed introductions. Only the color coding is explained. Notice that the screens for Levels 1 & 2 would have fewer onsets shown and that the screen for Level 4 would have more. The layout of the screen remains the same across levels.

FIGURE 3.

Response screen for nucleus testing and training at Level 3. The left-hand column is organized alphabetically. The rhotic monophthongs and diphthongs (middle column) and the vowels followed by dark-el (right-hand column) are aligned with the corresponding items in the left-hand column. Clients are familiarized with the screens by graduated, programmed introductions. Notice that the screens for Levels 1 & 2 would have fewer nuclei shown and that the screen for Level 4 would have more. The layout of the screen remains the same across levels.

FIGURE 4.

Response screen for coda testing and training at Level 3. Clients are familiarized with the screens by graduated, programmed introductions. Only the color coding is explained. Notice that the screens for Levels 1 & 2 would have fewer codas shown and that the screen for Level 4 would have more. The layout of the screen remains the same across levels.

Trial Procedure and Immediate Feedback

The clients, who are native speakers of English, are encouraged to imitate the syllable that they hear and then to click on the appropriate response button. If the response is correct, the letters on the button turn cyan and the background black. If the response is incorrect the letters and background of the incorrect button immediately reverse, and the letters on the correct button immediately turn to cyan on black. Also, a message at the bottom of the screen describes the error, “No, you chose ‘x’ but ‘y’ was played.” In this way, the client receives immediate informative feedback on each trial. Since the screens are, for the most part, phonetically organized, the correct and incorrect buttons are often nearby and in this way the clients may begin to understand the organization of their errors.

List of Five Performance Measures for Constituent Benchmark and Training Runs

The first two measures are familiar; measures 3–5 are novel and were invented for SPATS (patent pending).

1) Percent Correct (PC): This refers to the hit rate, meaning the rate at which an item is identified correctly when presented. It can be calculated for an item or for the entire set of items used in a test.

2) Percent Information Transmitted (p(IT)): This is the information transmitted divided by the information in the stimulus set, times 100.

3) Percent Correct Usage (PCU): This measure incorporates hits and false alarms into a single statistic. It is the number of correct uses of the response divided by the sum of the number of stimulus presentations and the number of incorrect uses of the response, all multiplied by 100. It reflects how well the listener knows what the sound is and what it isn’t. It can be calculated for each item or for an entire set of items.

4) Item Mastery Score (IMS): In SPATS, at any given moment, each item has an Item Mastery Score of 100, 75, 50, 25, or 0. Initially all items in a Benchmark run (test) have an IMS of 50. As testing or training proceeds, an item moves to the next higher score whenever it is identified correctly. It moves down to the next lower score whenever it is presented and missed or whenever it is used incorrectly as a response. The score cannot go higher than 100 or lower than 0. The Average IMS is the average IMS for a set of items calculated at the end of a run.

5) Percent of Norm (%Norm): Adaptive training in noise (SNR Adaption) is directed toward a target percent correct (TPC), and the listener’s performance is described as a Percent of Norm (%Norm). For every combination of TPC and stimulus set, the SNR achieved by normally hearing, native speakers of English has been determined (SNRN). A client’s performance is described by the relation of their obtained SNR (SNRC) to SNRN by the equation %Norm = [(SNRC − 40)/(SNRN − 40)]*100. A client who does as well as the Norm has a score of 100%. A client who requires SNR = 40 dB to achieve the TPC has a score of 0%, because SNR = 40 dB is considered to be nominal quiet.
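The arithmetic behind several of these measures can be sketched as below. PC, PCU, and %Norm follow the definitions given in the list. For p(IT), the sketch assumes the conventional relative transmitted information, that is, the mutual information between stimulus and response divided by the stimulus entropy; the text does not spell out the exact computation, so that part is an assumption, as are all function names.

```python
import math


def percent_correct(hits, presentations):
    """Hit rate in percent."""
    return 100.0 * hits / presentations


def percent_correct_usage(hits, presentations, incorrect_uses):
    """Correct uses / (presentations + incorrect uses of the response) * 100."""
    return 100.0 * hits / (presentations + incorrect_uses)


def percent_of_norm(snr_client_db, snr_norm_db, quiet_db=40.0):
    """%Norm = [(SNRC - 40) / (SNRN - 40)] * 100."""
    return 100.0 * (snr_client_db - quiet_db) / (snr_norm_db - quiet_db)


def percent_information_transmitted(confusions):
    """confusions[stimulus][response] = count (assumed data layout).

    Assumes at least two different stimuli were presented, so that the
    stimulus entropy is greater than zero.
    """
    total = sum(sum(row.values()) for row in confusions.values())
    stim_totals = {s: sum(row.values()) for s, row in confusions.items()}
    resp_totals = {}
    for row in confusions.values():
        for resp, count in row.items():
            resp_totals[resp] = resp_totals.get(resp, 0) + count
    transmitted = 0.0
    for stim, row in confusions.items():
        for resp, count in row.items():
            if count > 0:
                joint = count / total
                expected = stim_totals[stim] * resp_totals[resp] / total ** 2
                transmitted += joint * math.log2(joint / expected)
    stim_entropy = -sum((n / total) * math.log2(n / total)
                        for n in stim_totals.values() if n > 0)
    return 100.0 * transmitted / stim_entropy


if __name__ == "__main__":
    # An item presented 4 times, always identified, but also used 5 times in error.
    print(round(percent_correct(4, 4), 1))
    print(round(percent_correct_usage(4, 4, 5), 1))
    print(percent_of_norm(40.0, -10.0))  # client needs nominal quiet
```

The example shows why PCU is informative: an item can have a perfect hit rate and still a low PCU when its response label is frequently chosen for other stimuli.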

Reports of Performance

On the completion of a run with a fixed SNR, the client sees his performance, Percent Correct and Average Item Mastery Score as shown in Figure 5.

FIGURE 5.

Screen seen at the end of run with fixed SNR=40. The client’s Average Item Mastery Score and Percent Correct are shown. The Normal Range gives the numerical values of the Average Item Mastery Score and of the Percent Correct attained by normally hearing, native speakers of English.

Although not shown, the client can request a list of the items and their inter-confusions grouped into Mastered, Easy, Hard, and Very Difficult categories. In this way, the client can immediately see the current state of his speech perception capabilities.

Upon the completion of an SNR adaption run, the client sees a screen like that in Figure 6.

FIGURE 6.

In SPATS, the SNR is manipulated while keeping the overall level of signal-plus-noise constant. Thus, when the SNR is changed, both speech and noise levels change and the power sum remains at 65 dB. This chart shows normal levels, ≪NORM≫, for both noise and speech for the constituent type and level, and the TPC. The normal levels are for normally hearing people who are native speakers of English. The client is to strive to get the noise up to its norm and the speech down to its norm. The client’s TPC (72%), actual PC (71%), and SNR (−1.55 dB) are shown at the top of the screen. At the bottom of the screen is the client’s % of Norm (79%). It is found that clients strive to improve their % of Norm.
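The constant-level constraint mentioned in this caption determines the speech and noise levels for any SNR. If S and N are the speech and noise levels in dB, then S − N = SNR and 10^(S/10) + 10^(N/10) = 10^(65/10), which gives N = 65 − 10·log10(1 + 10^(SNR/10)) and S = N + SNR. The short sketch below evaluates these expressions; the function name is hypothetical and the code is a worked example of the arithmetic rather than a description of how SPATS sets its levels.

```python
import math


def speech_and_noise_levels(snr_db, total_db=65.0):
    """Return (speech_db, noise_db) whose power sum equals total_db."""
    noise_db = total_db - 10.0 * math.log10(1.0 + 10.0 ** (snr_db / 10.0))
    speech_db = noise_db + snr_db
    return speech_db, noise_db


if __name__ == "__main__":
    for snr in (40.0, 5.0, 0.0, -10.0):
        speech, noise = speech_and_noise_levels(snr)
        print(f"SNR {snr:6.1f} dB: speech {speech:5.1f} dB, noise {noise:5.1f} dB")
```

At SNR = 0 dB the two levels are equal, each about 3 dB below the 65-dB total; at SNR = 40 dB nearly all of the power is in the speech.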

After a client has completed several training and Benchmark runs, a graph of progress can be requested as shown in Figure 7.

FIGURE 7.

The client’s Average Item Mastery Scores are shown to improve with practice. The thick lines are for Benchmark runs, while the thin lines are for training runs. In this case the scores for Benchmark and training runs are nearly identical; more often the Average Item Mastery Score would be higher on training than on Benchmark runs.

More complete reports can be requested that list all interconfusions, confusion matrices, and graphs showing improvements from run to run. A sample page from a report intended for the audiologist or sophisticated client is shown in Figure 8. This report shows time and date, the percent correct, the average item mastery score, the average PCU, the information transmitted, the distribution of item difficulties at the end of a run, the items listed in order of PCU, and a list of interconfusions. A more traditional confusion matrix is displayed upon request (not shown). This example is for a Benchmark Run for Onsets Level 1. The first item, the onset t-, was identified perfectly every time it was presented and was never used incorrectly as a response. The seventh item on the list, the onset w-, was identified perfectly whenever it was presented. But w- was used as a response 9 times, 5 times in error. It was used as a response to the onset f- twice, to a vowel onset (Vwl) once, and to the onset h- twice. Thus, SPATS details each client’s exact interconfusions for all of the important syllable constituents of English.

FIGURE 8.

Optional session report described in the text above.

IV. OPERATING MODES

SPATS can be operated in two modes: the Manual Selection Mode and the Curriculum Mode. In either mode a benchmark test in Quiet (SNR = 40 dB) is required before one can test or train in Noise (SNR = 5 dB) or with SNR Adaption.

The Manual Selection Mode

Use of the manual selection mode can be explained with reference to Figure 9.

FIGURE 9.

This shows the “Unit Record Screen” when SPATS is run in the “Manual Selection Mode.”

In this example, the client has completed Benchmark runs for all of the conditions shown with green letters. All conditions shown with white letters may then be individually selected for Benchmark or Training runs. Note that Noise and Adaption selections are not available until a Quiet run has been completed. Although not shown, when a condition is shown in red it means that a run was started but not completed. The user can select Sentence runs by clicking “Go to SENTENCE RECOGNITION.” By selecting buttons in the upper left, one can go to a list of the clients, run screen Introductions, or calibrate the sound level. When green buttons are selected, a variety of options are offered including new runs, reports, or graphs of progress.

The Curriculum Mode

In the curriculum mode the client has no choice of which kind of module to do next. The client simply clicks a large button labeled “CLICK HERE to CONTINUE,” which covers the “Go to SENTENCE RECOGNITION” button shown in Figure 9. The client then follows written instructions that appear on the screen. The curriculum mode eliminates client and/or operator errors in the selection of tasks and the sequence of tasks. Although not shown, curricula can be easily written, stored, and selected. Curricula can be constructed that are progressive from level to level, depending on the client’s performance. SPATS displays curricula so that the administrator can create, view, and edit them.

V. SPATS PHILOSOPHY

The following five points guided the development of SPATS.

Assessment should have the potential to provide a detailed inventory of the basic elements of speech, syllable constituents, that can and cannot be recognized.

The system should include objectively scored testing and training with naturally spoken sentences.

Intensive practice can help hearing-impaired people learn to attend to and use the acoustic cues that are available to them even though many such cues may be attenuated, distorted, or otherwise differ from those used by normal hearing listeners.

Optimal procedures to enhance learning were sought. These included immediate informative feedback as well as other forms of feedback, providing, in so far as possible, stimulus-response compatibility or isomorphism, both low variability (post-trial rehearing) and high variability training, and practice in combining top-down and bottom-up perceptual skills required for the recognition of continuous discourse.

The authors also believe, and evidence confirms, that learning new perceptual skills requires intensive practice over periods of many hours and weeks. A client needs the support and guidance of a professional in order to maintain motivation and to progress. While SPATS can and should be used at home, initial training should be conducted under supervision. Home use should include regularly scheduled visits (preferably at least bi-weekly) with the supervisor, during which progress is reviewed, questions are answered, and problems are discussed. A total of 15 to 40 hours of practice spaced over several weeks can be expected to produce improved everyday speech perception in quiet and in noise. Available evidence suggests that improvements achieved are proportional to time devoted to training, without any currently predictable limit.

VI. APPLICATIONS

Demonstrated Successful Applications

1) SPATS has been used to assess and to successfully train speech perception by adult hearing-aid users.

2) SPATS has been used to assess and to successfully train speech perception by adult cochlear-implant users.

3) SPATS has been used to assess and to successfully train the perception of American English by adult ESL-learners.

Potential Applications

4) SPATS may be useful for a wide variety of speech research applications.

5) SPATS has potential for hearing-aid and cochlear-implant research.

6) SPATS has potential for hearing-aid fitting and cochlear-implant fitting.

7) SPATS has potential for use by school-age children with difficulties in speech perception or reading.

8) SPATS, with response screens translated to IPA, has potential for use in teaching phonetics.

9) SPATS may have potential in retraining people with CNS damage.

Acknowledgments

James D. Miller, Charles S. Watson, and Diane Kewley-Port are stockholders in Communication Disorders Technology, Inc. and could profit from the commercialization of SPATS software. Jonathan M. Dalby of Indiana University-Purdue University Fort Wayne contributed to the early phases of this work and is also a stockholder in Communication Disorders Technology, Inc. This work was supported by NIH/NIDCD SBIR Grant R44DC006338.

References

- Bacon S, editor. ASHA 2006 Research Symposium: Issues in the Development and Plasticity of the Auditory System. J Comm Disord. 2007;40(6):433–536.

- Burk M, Humes LE. Effects of training on speech recognition performance in noise using lexically hard words. J Sp Lang Hear Res. 2007;50:25–40. doi: 10.1044/1092-4388(2007/003).

- Burk M, Humes LE. Effects of long-term training on aided speech-recognition performance in noise in older adults. J Sp Lang Hear Res. 2008;51:759–771. doi: 10.1044/1092-4388(2008/054).

- Burk M, Humes LE, Amos NE, Strauser LE. Effect of training on word recognition performance in noise for young-normal hearing and older hearing-impaired listeners. Ear & Hear. 2006;27:263–278. doi: 10.1097/01.aud.0000215980.21158.a2.

- Fu QJ, Galvin JJ. Perceptual learning and auditory training in cochlear implant recipients. Trends in Amplification. 2007;11:193–205. doi: 10.1177/1084713807301379.

- Kaernbach C. Simple adaptive testing with the weighted up-down method. Perception & Psychophysics. 1991;49:227–229. doi: 10.3758/bf03214307.

- Kraus N, Banai K. Auditory processing malleability: Focus on language and music. Curr Dir Psychol Sci. 2007;16:105–109.

- Miller JD, Dalby JM, Watson CS, Burleson DF. Training experienced hearing-aid users to identify syllable-initial consonants in quiet and noise. J Acoust Soc Am. 2004;115:2387.

- Miller JD, Dalby JM, Watson CS, Burleson DF. Training experienced hearing-aid users to identify syllable constituents in quiet and noise. ISCA Workshop on Plasticity in Speech Perception, PSP; 2005; June; London: A46. 2005.

- Miller JD, Watson CS, Kistler DJ, Wightman FL, Preminger JE. Preliminary evaluation of the speech perception assessment and training system (SPATS) with hearing-aid and cochlear-implant users. J Acoust Soc Am. 2007;122:3063. doi: 10.1121/1.2988004.

- Stecker GC, Bowman GA, Yund EW, Herron TJ, Roup CM, Woods DL. Perceptual training improves syllable identification in new and experienced hearing aid users. J Rehabilitative Res and Dev. 2006;43:537–552. doi: 10.1682/jrrd.2005.11.0171.

- Sweetow R, Henderson-Sabes J. The case for LACE: Listening and auditory communication enhancement training. Hearing Journal. 2004;57:32–38.

- Sweetow R, Palmer CV. Efficacy of individual auditory training in adults: A systematic review of the evidence. J Am Acad Audiol. 2005;16:494–504. doi: 10.3766/jaaa.16.7.9.

- Sweetow RW, Sabes JH. The need for and development of an adaptive Listening and Communication Enhancement (LACE) Program. J Am Acad Audiol. 2006;17:538–558. doi: 10.3766/jaaa.17.8.2.