Abstract

Spoken languages are characterized by flexible, multivariate prosodic systems. As natural languages, American Sign Language (ASL) and other sign languages (SLs) are expected to be characterized in the same way. Artificially created signing systems for classroom use, such as signed English, serve as a contrast to natural sign languages. The present article explores the effects of changes in signing rate on signs, on pauses, and, unlike previous studies, on a variety of nonmanual markers.

Rate had a main effect on the duration of signs, the number and duration of pauses, the duration of brow raises, the duration of licensed lowered brows, and the number and duration of blinks, all of which decreased with increased signing rate. This indicates that signers produced their different signing rates not by making dramatic changes in the number of signs, but by varying sign duration, in accordance with previous observations (Grosjean, 1978, 1979). These results bear on three issues: (1) the difference between grammatical and non-grammatical nonmanuals; (2) the fact that nonmanuals in general are not just a modality effect; and (3) whether some nonmanuals are pragmatically determined or are instead overt morphophonological markers reflecting the semantic–syntax–pragmatic interfaces.

Keywords: American Sign Language, facial expressions, pragmatics, prosody, semantics

1 Introduction

Spoken languages are characterized by flexible, multivariate prosodic systems (an extensive review of theories and data is given in Ladd, 1996). As natural languages, American Sign Language (ASL) and other sign languages (SLs) are similarly characterized. This study provides support for the claim that signers systematically regulate the prosodic structure of ASL. At the same time, it has been claimed that certain nonmanual markings (face, head) used in ASL are (1) syntactic in origin and domain (Neidle, Kegl, MacLaughlin, Bahan, & Lee, 2000), or (2) pragmatic in origin and prosodic in domain (Sandler & Lillo-Martin, 2006; Wilbur, 1991), or (3) divided into groups that differ in origin and in what determines their domains (Wilbur, 2000; Wilbur & Patschke, 1999). The results presented here will be evaluated in light of these claims.

The fact that ASL is a language in a different modality (visual/spatial rather than auditory/vocal) implies that there are differences in its organizational principles. A fundamental difference between ASL (and other SLs) and spoken languages is the surface organization. There is a strong tendency for spoken languages to utilize sequential information transfer: phonemes occur in sequence; word formation involves affixing before, inside of, or after the stem; and sentence formation relies on the presentation of syntactic constituents in sequence. This is not to say that simultaneous information transfer does not occur in spoken languages. Examples include simultaneous pitch patterns for intonation and lexical tone; umlaut (e.g., in German plurals); vowel harmony; and nasalization over large domains. However, spoken languages tend to make greater use of sequential information than simultaneous information; this is probably due to the speed of articulation and transmission of sound waves for perception.

In contrast, sign languages have slower production (the hands and arms are larger articulators than those that produce speech), but much faster perception in the visual modality (the speed of light to the eye). SLs rely heavily on simultaneous information transfer (layering) even though, ultimately, signs must be put in sequence for production and perception (Sandler & Lillo-Martin, 2006; Wilbur, 2000; Wilbur, Klima, & Bellugi, 1983). Layering requires that the articulation of each piece of information not interfere with the perception and production of the others. Thus, layering can be viewed as a “conspiracy” of form and meaning to allow more than one linguistically meaningful unit of information to be efficiently transferred simultaneously (this does not mean that there is no coarticulation in sign production, cf. Mauk, 2003). A spoken-language analogue is the use of tone in tone languages, wherein consonantal and vocalic segments are sequentially articulated while tone contours are simultaneously produced with them. Tone articulation uses an available production channel that does not interfere with the articulation of each segmental phoneme; both are distinctly produced and perceived.

One might say that the design features of a “natural language” include, but may not be limited to, a “hierarchically organized, constituent-based system of symbol use that serves the needs of communities of users to efficiently produce and understand infinite numbers of novel messages, and being capable of being learned by babies from birth” (Wilbur, 2003). Artificially created signing systems for classroom use, such as signed English (SE), serve as a contrast to natural sign languages. Over time, ASL has adapted to minimize the number of signs while maximizing the information in a message (Frishberg, 1975), developing simultaneous transmission strategies along with sequential ones. In contrast, SE retains the sequential word order of English, despite the differences resulting from modality of production (signs take longer to make than spoken words). Bellugi and Fischer (1972) demonstrated that sentences in ASL and spoken English with the same number of semantic propositions take roughly the same duration, whereas in SE they take at least 50% longer. One implication of this finding is that, at some level of processing, there is an optimal time or rate for transmission of information regardless of modality. This conclusion is supported by the similarity of spoken and signed syllable duration (about .25 seconds) reported by Wilbur and Nolen (1986). Like spoken syllables, sign syllables can be defined on the basis of production, perception, and/or linguistic utility; sign syllables have been counted and tapped to by Deaf signers, and participate in metrical structure, slips of the hands, backwards signing, and other phenomena (see Brentari, 1998, for further discussion). SLs tend to have predominantly monosyllabic lexical items, although disyllabic and multisyllabic forms do exist, and some signs will have additional syllables due to reduplication for inflection and affixation (e.g., plural). 
The finding that the same story took almost 50% longer when produced in SE can be interpreted as a potential problem for SE usage, in terms of perception, production, and memory processing (Wilbur & Petersen, 1998). It also serves as evidence that SE is not adapted to the manual/visual modality. This lack of adaptation is the exception that proves the rule: the design features of natural languages require an efficient fit with the perception and production requirements of the modality in which they are used; SE is not natural and does not fit its modality efficiently.

A unique aspect of signed languages is the systematic grammatical use of facial expressions and head or body positions. The nonmanual markers (NMs) comprise a number of independent channels (our current count is 14): the head, the shoulders, the torso, the eyebrows, the eyeballs (gaze), the eyelids, the nose, the mouth (upper lip, lower lip, mid-lip, lip corners), the tongue, the cheeks, and the chin. Each of these is capable of independent articulation and, with layering, complex combinations can be produced. In general, nonmanuals may provide lexical or morphemic information on lexical items, or mark the ends or the extent of phrases.

1.1 Prosody in sign languages

Traditionally, prosody includes rhythm, intonation, and stress. In the sign language literature, rhythmic structure and stress have been investigated in more detail than intonation, which has only recently begun to be re-examined.

1.1.1 Stress

Studies of ASL stress marking reveal that within-sign peak velocity (measured with 3D motion capture) is the primary kinematic marker of stress (Wilbur, 1999b), along with signs being raised in the vertical signing space (Wilbur & Schick, 1987). More relevant to the claim that ASL has a prosodic structure integrated with its syntax is the observation that ASL differs from English, which allows sentential stress to shift to different locations in the sentence depending on the focused or contrasted word. Instead, ASL prefers focus, hence sentence stress, to fall in sentence final position (Wilbur, 1997). In this regard, ASL patterns more like Catalan than like English (Vallduví, 1991, 1992; Wilbur, 1997). Stress will not be considered further in this report.

1.1.2 Prosodic constituency and phrasing

There is ample evidence of prosodic constituency in ASL. Grosjean and Lane (1977) analyzed pauses, which group signs together in a hierarchical manner: the longest pauses were placed between sentences, whereas shorter pauses occurred within sentences. Phrase Final Lengthening is also well documented (Coulter, 1993; Liddell & Johnson, 1989; Wilbur, 1999b). Intonational Phrase (IP) boundaries are marked by changes in head or body position, changes in facial expression, and periodic blinks (Brentari & Crossley, 2002; Sandler & Lillo-Martin, 2006; Wilbur, 1994). It should be mentioned that IPs have been identified using different algorithms. For example, Wilbur (1994) used Selkirk’s (1984, 1995) derivation of IPs from syntactic constituents (discussed in more detail in Section 1.2), whereas Sandler and Lillo-Martin (2006) used Nespor and Vogel’s (1986) prosodic hierarchy, which is based on increasing breaks in rhythmic structure from the prosodic word, to larger phonological phrases, to the IP. It is not clear whether the IPs derived by the two methods are actually coextensive, but for this study, we will assume that they are. In this report, we will analyze the number and duration of pauses and the number and duration of eyeblinks as they reflect changes in prosodic constituency.

1.1.3 The notion of intonation for sign languages

In the areas of rhythm and stress, there does not appear to be a modality effect in sign language (aside from form) above the level of the syllable in the prosodic hierarchy (Allen, Wilbur, & Schick, 1991; Nespor & Sandler, 1999; Nespor & Vogel, 1986; Wilbur, 1999b; Wilbur & Allen, 1991). The primary difference in form appears to be one of increased simultaneous occurrence in sign languages as compared to spoken languages (“layering”, Wilbur, 2000). Sandler and Lillo-Martin (2006, p.253) argue that “sign languages have intonational phrases and intonational tunes as well, the latter expressed through facial expression.” It is the layering of the simultaneous nonmanuals that they view as “tune-like.”

To gain a sense of what intonation would be like for SLs, consider first some results from Weast (2008). In a study that measured brow height differences at the pixel level for ASL statements and questions produced with five different emotions, she observed that eyebrow height showed a clear declination across statements and to a lesser extent before sentence-final position in questions, parallel to intonation in languages like English. This finding directly contradicts the claim in Sandler and Lillo-Martin (2006, p.261) that “there is nothing in sign language that directly corresponds to pitch excursions,” and serves as a caution to us as we explore the prosodic system further. Weast also shows that eyebrow height differentiates questions from statements, and yes/no questions from wh-questions, performing a syntactic function. Furthermore, maximum eyebrow heights differed significantly by emotion (sad and angry lower than neutral, happy, and surprise). The syntactic uses of eyebrow height are constrained by emotional eyebrow height, illustrating the simultaneity of multiple messages on the face and the interaction between information channels, in some senses similar to the interaction of linguistic and paralinguistic uses of pitch (Ladd, 1996).

We return then to the question of what Sandler and Lillo-Martin (2006) mean by intonational tunes for SLs. As mentioned above, their notion of intonational phrases is determined by the prosodic hierarchy of Nespor and Vogel (1986). The ends of these phrases are marked by nonmanual articulatory events (as well as by Phrase Final Lengthening and pauses, Section 1.3), such as eyeblinks, head nods, and return to eye contact (cf. Baker & Padden, 1978). During the span between start and end of IPs (and smaller prosodic phrases), nonmanual articulations may be layered – for example, a head tilt and eyebrow raise for question marking may co-occur with a mouth adverbial modifying a verb sign. Sandler and Lillo-Martin’s (2006) notion of intonational tune is based on the analogy of co-occurring and sequential facial articulations to multiple pitch levels of languages with tone and intonation patterns. They claim that this approach is superior to a syntactic-only analysis of nonmanuals (e.g., Neidle et al., 2000) because it can capture the syntactic domain and the prosodic domain and the pragmatic function, which the syntactic approach cannot. I return to this issue in the discussion of the results (Section 4).

1.2 Nonmanual marking in ASL

In general, ASL nonmanuals provide morphemic information on specific lexical items, or indicate the ends of phrases (boundary markers) or their extent (domain markers). It is important to distinguish linguistic uses of these nonmanuals from affective uses of similar looking facial expressions. One difference is that grammatical nonmanuals turn on and off with the constituents they modify, while affective markers turn on and off gradually and not necessarily at syntactic boundaries (Baker-Shenk, 1983; Liddell, 1978, 1980). For example, the negative headshake is a grammatical marker in ASL and is turned on and off abruptly at constituent boundaries; negative headshakes used by non-signing speakers of English are turned on and off gradually and in positions seemingly unconnected with English syntactic structure (Boyes Braem & Sutton-Spence, 2001; Veinberg & Wilbur, 1990). Research on the acquisition of nonmanuals indicates a very clear separation between early use of facial expressions for affective purposes and later development of facial expressions for linguistic functions (Anderson & Reilly, 1998; Reilly & Bellugi, 1996; Reilly, McIntire, & Bellugi, 1990).

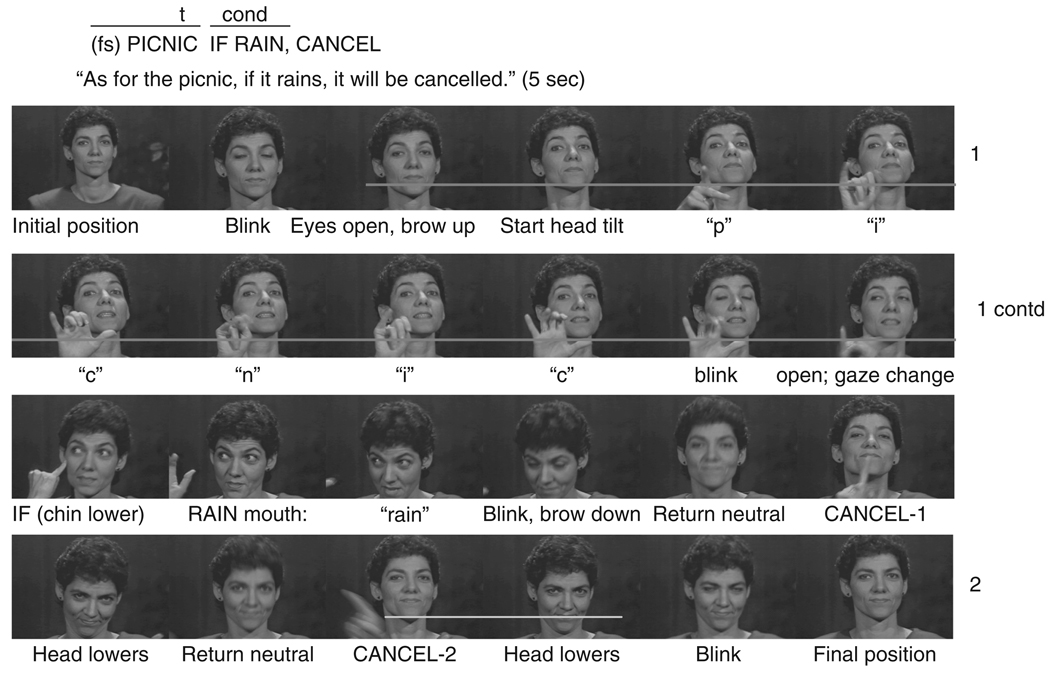

The various nonmanuals of ASL can be roughly divided into two groups. The lower part of the face tends to produce meaningful markers (adjectives, adverbs) that associate with specific lexical items or phrases with those lexical items as heads (e.g., N or NP, V or VP). The upper part of the face (eyebrows, head position, head nods, eyegaze) tends to co-occur with higher syntactic constituents (clauses, sentences) even if such constituents contain only a single sign (e.g., a topicalized noun). Within an ASL phrase, then, the signer’s head may assume a particular position (e.g., tilted back, Figure 1) that is held throughout the phrase, while the mouth, cheeks, and other lower face positions may change during the phrase as the lexical signs are produced (Bahan, 1996; Baker & Padden, 1978; Coulter, 1978, 1979; Liddell, 1978; Neidle et al., 2000; Sandler & Lillo-Martin, 2006; Wilbur, 1991; Wilbur et al., 1983). Recent findings indicate that the use of the lower face to form mouthings (from English words) during ASL signs is much more widespread than previously thought, and that its frequency depends on both context and the grammatical category of the manual sign it occurs with (Nadolske & Rosenstock, 2007; cf. Boyes Braem & Sutton-Spence, 2001). Figure 1 contains an example with the mouthing of the word “rain.”

Figure 1.

A variety of grammatical nonmanuals used in sequence

Notice that the head tilt back (Line 1) continues while the signer produces the topic “picnic (fingerspelled)” but is lost at the beginning of the conditional clause with the sign IF. In contrast, the brow raise starts with the topic and stays up through the conditional clause IF RAIN, coming down at the end of that clause along with an eyeblink. Note also that between the topic and the conditional clause there is an eyeblink and an eyegaze change, marking the prosodic boundary, but to a lesser extent than the boundary between the conditional clause and the matrix clause, where, in fact, the signer returns her head and face practically to the initial (and also final) position. The sign CANCEL, which has two movements, has a head nod (Line 2) on each part. This coordinated head and manual movement (the head follows the up-and-down movement of the hands) may be an extension of what Woll (2001) calls “echo phonology,” which refers specifically to the coordinated movement of mouth and hands. This figure illustrates a variety of sequential and simultaneous nonmanuals. In this article, we will be concerned with brow position, blinks, and head nods. The two general behaviors of interest are: (1) edge marking at the boundary of constituents, and (2) domain marking, where the marker spreads across an entire domain.

Finally, this section closes with the caution that it is insufficient to say that nonmanuals have been “borrowed” from general facial and gestural indicators of conversational functions. For example, it is true that English-speaking hearing people use negative headshakes in negative situations. Veinberg and Wilbur (1990) reported that signers’ negative headshakes begin and end abruptly with the negated syntactic constituent (within 15–19 ms), whereas hearing non-signers’ negative headshakes do not correlate with negated structure. Signers also use combinations of eye/eyebrow and mouth/chin positions to signal negation. Furthermore, ASL does not require negative headshakes in negative situations. Negation can also be marked nonmanually by a backward lean, which can be used to deny a preceding proposition (Veinberg & Wilbur, 1990; Wilbur & Patschke, 1998). It is more appropriate to say that nonmanuals may have been “derived” or grammaticalized from such gestures, and even then, caution is necessary, as Wood (1996) documents the existence of a repeated nose wrinkle for referential and pragmatic purposes that has no obvious non-signing gestural origin. It is also the case that different sign languages use different nonmanual markers to perform the same function (Šarac, Schalber, Alibašić, & Wilbur, 2007); thus specific nonmanuals do not have universal meanings and there is no “universal questioning face,” as suggested by Janzen (1998, 1999) and MacFarlane (1998). The function of each nonmanual articulation needs to be evaluated individually.

I will describe in some detail the three nonmanuals explored in this study: eyebrow position, eyeblinks, and head nods. Other nonmanuals have been identified as linguistically significant in ASL and other sign languages. These include eyegaze, head tilt, body leans, head thrusts, and negative headshakes. Interested readers are referred to Boyes Braem and Sutton-Spence (2001), Liddell (1986), Neidle et al. (2000), Sandler and Lillo-Martin (2006), and Wilbur (2000). Recent reanalysis of lower face nonmanuals, in particular the behavior and function of mouth positions and changes in position, can be found in Grose (2008), Schalber (2004), and Schalber and Grose (2006).

1.2.1 Eyebrow

There are three linguistically significant eyebrow positions in ASL: raised, lowered, and neutral (Wilbur & Patschke, 1999). I have claimed that these brow positions are each associated with a different semantic operator: [−wh], [+wh], and none, respectively. Thus, lowered brow occurs uniquely and exclusively with wh-questions and true embedded wh-complements. Neutral brows occur on assertions. Previous approaches to explaining brow raise behavior have claimed that it performs a pragmatic function, such as indicating that information is presupposed, given, or otherwise not asserted (Coulter, 1978) or “predicting that information will follow” (Sandler & Lillo-Martin, 2006, citing Dachkovsky, 2004). However, Wilbur and Patschke (1999) observe structures with brow raise on new information, as well as non-asserted information without brow raise. Suggestions that the function of brow raise is primarily pragmatic do not account for the many cases in which unasserted information, or information preceding further predicating information, lacks brow raise despite being in the appropriate pragmatic environment. Raised brows occur on a large collection of seemingly unrelated structures: topics; focalized/topicalized constituents; left dislocations; conditionals; relative clauses; yes/no questions; wh-clauses of wh-cleft constructions; preposed focus associates of the signs SAME “even” and ONLY-ONE “only”; the focus of THAT-clefts (the ASL equivalent of it-clefts, S. Fischer, personal communication); and everything that precedes (non-doubled) modals and negatives that are in right-peripheral focus position.
To use brow raise correctly in ASL, one must master a sophisticated level of linguistic complexity: the commonality found in brow raise usage is that these clauses are associated with [−wh] restrictive operators and the brow raise spreads only across the constituent associated with the operator’s semantic restriction (for accessible discussion of restrictive semantic operators, see Krifka, 1995; Krifka et al., 1995). No other generalization accounts for all and only this particular set of semantic brow raise triggers.

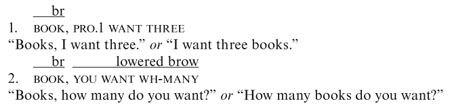

The distinction between the behavior of [−wh]-marking brow raise and [+wh]-marking brow lowering is relevant to the results reported here. A simple example would be a topic with brow raise followed by an assertion with neutral brow position or by a wh-question with brow lowering. Boster (1996) discusses such examples in Quantifier Phrase splits, in which a noun is separated from its modifying quantifier or wh-word. The noun receives brow raise marking because it is topicalized, and the remaining clause is either unmarked if it is an assertion (1) or marked with lowered brow as part of the wh-question face (2) (Lillo-Martin & Fischer, 1992). Critically, the brow raise from the topic does not extend over the whole statement or question:

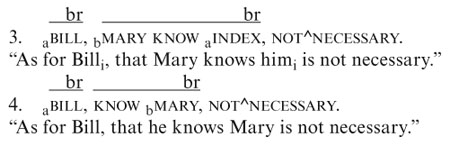

Because brow raise may be associated with different [−wh] operators, there may be more than one in a sentence (this fact creates another problem for a purely pragmatic analysis). Lillo-Martin (1986) cites the following examples, in which there is both a topic brow raise and a second brow raise-marked sentential subject associated with negative modal compound not^necessary:

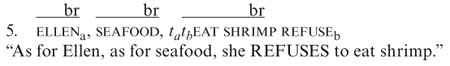

A final example with three brow raise markers comes from our data:

In this example, the first brow raise is on the left-dislocated ellen, the second is on the topic seafood, and the main verb refuse has been focused (moved to final position; cf. Wilbur, 1997, 1999a), which results in the third brow raise on eat shrimp as the associate of the focus operator (Wilbur, 1996). Thus, brow raise-marking differs from brow lowering for wh-clauses in a number of important respects: (a) the domain of brow raise is limited to the restrictive scope position of the operator rather than larger syntactic (c-command) domains; and (b) there can be multiple brow raise-marked phrases associated with different functions in the same sentence. For our purposes, it should be noted that signing rate can affect whether the brows drop to neutral between sequential brow raises; for example, it can determine whether (5) is produced with three distinct brow raises or with one long brow raise. As can be seen in Figure 1 above, the brow raise extends across the topic picnic and the conditional if rain, despite a blink marking the juncture between them and despite the fact that this signer is hyperarticulating facial expressions in a teaching video on ASL nonmanuals while signing at normal rate. The two measures of brow position addressed in this study are number and duration for both brow raise and brow lowering.
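The point that signing rate can turn three sequential brow raises into one long raise can be made concrete as an interval-merging problem. The sketch below is illustrative only: brow raises are hypothetical (start, end) intervals in milliseconds, and `min_neutral_ms`, the minimum time the brows would have to rest at neutral for a drop to count, is an assumed threshold, not a value measured in this study.

```python
from typing import List, Tuple

Interval = Tuple[int, int]  # (start_ms, end_ms) of one annotated brow raise


def merge_brow_raises(raises: List[Interval], min_neutral_ms: int) -> List[Interval]:
    """Merge brow raises separated by a neutral gap shorter than min_neutral_ms.

    min_neutral_ms is a hypothetical threshold chosen for illustration only.
    """
    merged: List[Interval] = []
    for start, end in sorted(raises):
        if merged and start - merged[-1][1] < min_neutral_ms:
            # Brows do not rest at neutral long enough: one continuous raise.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


# Invented timings for three raises (left dislocation, topic, focus associate).
normal_rate = [(0, 400), (550, 900), (1050, 1500)]
# At a faster rate the raises are shorter and the neutral gaps shrink.
fast_rate = [(0, 260), (300, 560), (620, 900)]

print(merge_brow_raises(normal_rate, min_neutral_ms=80))  # [(0, 400), (550, 900), (1050, 1500)]
print(merge_brow_raises(fast_rate, min_neutral_ms=80))    # [(0, 900)]
```

With the same threshold, the normal-rate gaps (150 ms) survive as three raises, while the compressed fast-rate gaps (40 and 60 ms) collapse into a single raise, which is one way rate could reduce the number of brow raises counted.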

1.2.2 Eyeblinks

Baker and Padden (1978) first brought eyeblinks to the attention of sign language researchers as one of four components contributing to the conditional nonmanual marker (the others being contraction of the distance between the lips and nose, brow raise, and the initiation of head nodding). Stern and Dunham (1990) distinguish three main types of blinks: startle reflex blinks, involuntary periodic blinks (for wetting the eye), and voluntary blinks. Startle reflex blinks are not used linguistically, whereas both periodic blinks and voluntary blinks serve specific linguistic functions in ASL. The frequency of periodic blinks is affected by perceptual and cognitive demands (for example, 18.4 blinks per minute while talking and only 3.6 while watching video screens). Blink location (when someone blinks) is also affected by task demands; for example, readers tend to place their blinks at semantically appropriate places in the text, such as the end of a sentence, paragraph, or page. These controlled blinks are referred to as inhibited periodic blinks.

As Grosjean and Lane (1977) indicated, speakers do not breathe in the middle of a spoken word but signers may breathe anywhere they please. Conversely, Baker and Padden (1978) reported that signers do not blink in the middle of a sign whereas speakers may blink anywhere they please. As documented in Wilbur (1994), these inhibited periodic blinks are a primary marker of the end (right edge) of IPs in ASL, as determined by Selkirk’s (1984, 1995) algorithm for deriving IPs from syntactic structure in spoken languages. Selkirk’s algorithm uses a purely syntactic notion, the ‘ungoverned maximal projection’, to determine IPs. When the algorithm is applied to ASL, the locations where a signer might blink can be predicted with over 90% accuracy, although it cannot be predicted whether the signer will actually blink at any given location. Thus eyeblink location provides information about constituent structure, both syntactic and prosodic.
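The difference between predicting where a blink may occur and predicting whether one will occur corresponds to the familiar distinction between precision and recall. The sketch below is one hedged way to score it, assuming that both observed blinks and algorithm-predicted IP boundaries are coded as junctures after a given sign; the function name, the encoding, and the example data are hypothetical and are not Wilbur’s (1994) counts.

```python
from typing import Iterable, Tuple


def blink_placement_scores(predicted: Iterable[int], blinks: Iterable[int]) -> Tuple[float, float]:
    """Return (placement accuracy, coverage) of blinks against predicted IP edges.

    Positions are indices of the juncture after a sign (hypothetical encoding).
    Placement accuracy: share of observed blinks that fall on a predicted boundary.
    Coverage: share of predicted boundaries that actually receive a blink.
    """
    predicted_set = set(predicted)
    blink_list = list(blinks)
    if not blink_list or not predicted_set:
        return 0.0, 0.0
    on_boundary = sum(1 for b in blink_list if b in predicted_set)
    covered = len(predicted_set & set(blink_list))
    return on_boundary / len(blink_list), covered / len(predicted_set)


# Invented utterance: IP edges predicted after signs 3, 7, 12, 15 and 20,
# but the signer blinks at only three of them.
accuracy, coverage = blink_placement_scores([3, 7, 12, 15, 20], [3, 12, 20])
print(accuracy, coverage)  # 1.0 0.6
```

In this toy case every blink is well placed (high accuracy) even though many predicted boundaries go unmarked (lower coverage), which mirrors the claim that blink location, but not blink occurrence, is predictable.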

There are also blinks that co-occur with lexical signs. These blinks are slower and longer than edge-marking blinks. Such lexical blinks are voluntary, not periodic, and they perform a semantic and/or prosodic function of emphasis, assertion, or stress, with a domain limited to the lexical item that they occur with. Voluntary blinks on lexical signs in final position in a clause can be followed immediately by periodic blinks at the end of the constituent, reflecting their different functions. Indeed, it is this pattern of blinking – long slow followed by short fast – that led us to investigate functions other than eyewetting for ASL blinks (Wilbur, 1994). In this study, only non-lexical eyeblinks are analyzed. The measures are again number and duration.

1.2.3 Head nods (HN)

Head nods perform a variety of functions. Liddell (1977, 1980) observed that head nods co-occurring with signs mark emphasis, assertions, and/or existence. Head nods have also been noted to correlate with syntactic locations where verbs are missing (Aarons, 1994; Liddell, 1977, 1980). Wilbur (1991) suggested that such head nods mark focus, that is, they may be the emphatic correlate of voluntary eyeblinks, rather than the overt reflection of a missing verb. The full range of functions and the exact phonological specifications (repetitions, speed of production) for each type of head nod are an area open to further research (indeed, Neidle et al., 2000, do not think it necessary to take such formational distinctions into account). It seems that there are at least three head nod functions corresponding to three different articulations: (a) single head nod as phrase boundary marker; (b) slowed/deliberate head nod as a focus marker co-occurring with lexical signs; and (c) repetitive head nod covering constituent domains and conveying the speaker’s commitment to the truth of the assertion (large, slow nodding marking strong assertion; small, rapid nodding for hedging or counterfactuals). However, the presence of a boundary-marking single head nod may also be the result of a specific semantic function. Grose (2003) argues that the perfect tense (the event occurs before the reference time) is marked by a single downward head nod.

1.2.4 Other strategies for reducing signs per utterance

As discussed in this article, nonmanuals perform prosodic, syntactic, and semantic functions. Similarly, sign languages use spatial arrangement to convey syntactic, semantic, and morphological information. If a verb is inflected for its arguments by showing starting (source) and ending (goal) locations in space, then the argument nouns or pronouns do not need to be separately signed. Aspectual information carried in English by adverbs and prepositional phrases can be conveyed by modifying the verb’s temporal and rhythmic characteristics; for example, different kinds of reduplicative rhythmic patterns indicate whether an activity occurred for a long time, habitually, frequently, and so on (Klima & Bellugi, 1979; Wilbur, 2005, 2009, in press). ASL does not need separate signs for many of the concepts that English has separate words for. In this respect, the fact that ASL is a naturally evolved language in the visual/manual modality can be fully appreciated – more information is conveyed simultaneously than in comparable English renditions. A few of these strategies will be relevant to the discussion of our results below.

1.3 Effects of rate of production

In a series of studies investigating performance variables (pausing, rate of signing, breathing), Grosjean (1977, 1978; Grosjean & Lane, 1977) reported similarities and differences between speaking and signing. For speech, rib-cage expansion and contraction were monitored with a pneumograph (Grosjean & Collins, 1978). Subjects were asked to read a 116-word passage first at a normal rate, and then at the normal rate and four other rates, requested in random order. Speech duration, breathing pauses (BP), and non-breathing pauses (NBP) were measured from simultaneous oscillographic recordings of speech and rib-cage movement following standard definitions in the field (Goldman-Eisler, 1968).

The same passage was translated into ASL. Five signers produced the story at five different rates, four times each. The mean signs per minute for each rate for each signer was calculated, and the production that most closely approximated that mean was selected for measurement and analysis (hence, n = 25). Two signing judges pressed a computer key at the start of a pause and held it down until the pause was completed; a pause was identified when a sign was held in final position without movement. In addition, a sign-naïve adult was asked to analyze one passage to determine whether knowledge of ASL is necessary to identify and measure pauses. Grosjean and Lane (1977) reported that it is not, as the sign-naïve coder agreed with the two judges at least 80% of the time.
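The selection step can be sketched as follows. This is a minimal reconstruction that assumes each production is summarized by its sign count and duration; the function names and all numbers are hypothetical, and Grosjean and Lane’s actual computation may have differed in detail.

```python
from statistics import mean
from typing import List, Tuple

Production = Tuple[str, int, float]  # (label, number of signs, duration in seconds)


def signs_per_minute(n_signs: int, duration_s: float) -> float:
    """Signing rate in signs per minute."""
    return n_signs * 60.0 / duration_s


def closest_to_mean(productions: List[Production]) -> Tuple[str, float, float]:
    """Pick the production whose rate is nearest the mean rate of its set.

    Returns (label, that production's rate, mean rate), rates in signs/min.
    """
    rates = [signs_per_minute(n, d) for _, n, d in productions]
    mean_rate = mean(rates)
    best = min(range(len(productions)), key=lambda i: abs(rates[i] - mean_rate))
    return productions[best][0], rates[best], mean_rate


# Invented data: four repetitions of the story by one signer at one requested rate.
takes = [("take1", 120, 60.0), ("take2", 118, 55.0), ("take3", 121, 62.0), ("take4", 119, 58.0)]
label, rate, mean_rate = closest_to_mean(takes)
print(label, round(rate, 1), round(mean_rate, 1))  # take4 123.1 122.2
```

Repeating this for each of the 5 signers at each of the 5 rates yields one production per cell, which is how the 25 analyzed productions arise.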

Parallel to studies of pausing and syntax in spoken languages (Grosjean & Deschamps, 1975), Grosjean and Lane (1977) found that the longest pauses in a signed story appeared at what might be considered the boundary between two sentences; that shorter pauses occurred between constituents that can be analyzed as parts of a conjoined sentence; and that the shortest pauses appeared between internal constituents of the sentence. Grosjean and Collins (1978) reported that for English, pauses longer than 445 ms occurred at sentence boundaries; pauses between 245 and 445 ms occurred between conjoined sentences, between noun phrases and verb phrases, and between a complement and the following noun phrase; and pauses shorter than 245 ms occurred within phrasal constituents. Grosjean and Lane’s (1977) findings for ASL were that pauses between sentences had a mean duration of 229 ms, pauses between conjoined sentences had a mean duration of 134 ms, pauses between NP and VP had a mean duration of 106 ms, pauses within the NP had a mean duration of 6 ms, and pauses within the VP had a mean duration of 11 ms. These results are interpreted as clearly showing that sign sentences are organized hierarchically and that the signs are not strung together without internal constituents.
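One consequence of these figures is that the ASL pause hierarchy sits lower on the duration scale than the English one. The sketch below encodes the English ranges as a classifier and applies it to the ASL means. The function name and the bin labels are ours, and placing exactly 245 ms and 445 ms in the middle range is an assumption, since the text does not say which side of each cutoff they fall on. This is an illustrative reuse of the published figures, not an analysis from either study.

```python
def classify_english_pause(duration_ms: float) -> str:
    """Bin a pause by the English duration ranges of Grosjean and Collins (1978).

    Assumption: exactly 245 ms and 445 ms are placed in the middle range.
    """
    if duration_ms > 445:
        return "sentence boundary"
    if duration_ms >= 245:
        return "major constituent boundary"
    return "within phrasal constituent"


# Mean ASL pause durations in ms, as reported by Grosjean and Lane (1977).
asl_means = {
    "between sentences": 229,
    "between conjoined sentences": 134,
    "between NP and VP": 106,
    "within NP": 6,
    "within VP": 11,
}

# Every ASL mean, even the 229 ms between-sentence value, falls in the lowest English bin.
for location, ms in asl_means.items():
    print(f"{location:<28}{ms:>4} ms -> {classify_english_pause(ms)}")

# Yet the three boundary-level means are ordered by boundary strength.
boundary_means = [asl_means[k] for k in ("between sentences", "between conjoined sentences", "between NP and VP")]
print(boundary_means == sorted(boundary_means, reverse=True))  # True
```

What carries over from English to ASL, on this reading, is the ordering of pause durations by boundary strength rather than the absolute cutoffs.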

The technique of asking signers to change their rate of signing raised some interesting questions. Grosjean (1977) investigated the effects of rate change in detail, again comparing signers to speakers. When speakers double their actual reading rate, they judge that they have increased it sixfold, whereas listeners perceive a threefold increase (Lane & Grosjean, 1973). Thus, produced rates and perceived rates differ in spoken language. Similarly, when signers double their signing rate, they perceive a sixfold increase, whereas observers (signers or nonsigners) perceive a threefold increase (Grosjean, 1977). However, unlike Lane and Grosjean (1973), Grosjean (1977) found that when readers double their rate of reading, listeners perceive a fourfold increase. Whichever listener estimate is used, the overall pattern seems to be that judgments of one's own speech or signing rate are equivalent for speakers and signers, but that perceived speech rates are overestimated at least as much as, and possibly more than, perceived signing rates. Grosjean suggested that the similarity in the producers' judgments (speaker or signer) may be attributable to similar muscle feedback, despite the different articulators in the two modalities, whereas the difference between perceivers may be attributable to the different perceptual systems in use, visual versus auditory.

Grosjean (1978, 1979) looked at differences in how signers and speakers accomplish these changes in rate. Speakers tend to change the amount of time they pause, whereas signers tend to adjust the time they spend articulating. Grosjean showed that the time spent articulating a sign is modified directly by changes in movement, as opposed to any other parameter (e.g., addition of an extra location, modification of handshape). When signers do modify pause time, it generally affects both the number of pauses and the duration of pauses, whereas speakers primarily alter the number of pauses but not their durations. Interestingly, although speakers, because of the very nature of the speech process, must breathe between words, primarily at syntactic breaks, signers maintain a normal breathing rhythm while signing, although breathing rate may be affected by the physical effort required to sign at, for instance, three times the normal rate. Grosjean also found that signs tend to be longer in sentence-final position than within sentences, and that the first occurrence of a sign within a single conversation is longer than second or subsequent occurrences of the same sign (controlling for syntactic position).

Baker and Padden (1978) report signing rates during rapid turn-taking for two same-sex dyads, one male and one female, calculated from videotape with a synchronized time display in tenths of a second. The signing rate was 3.12 signs per second for the male dyad and 3.00 signs per second for the female dyad.

The present article explores the effects of changes in signing rate on number and duration of signs, pauses, and, extending previous studies, a variety of nonmanual markers.

2 Methods

In order to observe the effects of rate on the number and duration of manual and nonmanual variables, as a test of previous claims in the literature, we asked signers to vary their signing rate. As in all natural language production studies, it was not possible to have absolute control over what was produced. Signers differed from each other in how they told the stories (despite being given a script to memorize with practice and feedback) but were generally consistent across trials and rates.

2.1 Subjects

Six native or near-native adult Deaf ASL users from the Indianapolis Deaf Community participated in this study. The signers’ ages ranged from mid-20s to mid-30s; there were three males and three females.

2.2 Stimuli

With the help of a native ASL user, three short paragraph “stories” were scripted in ASL gloss (see the Appendix for full scripts). These three stories are dubbed “Cars,” “Fingerspelling,” and “Knitting.” The stories included a diverse set of signs and differed in length to provide an opportunity to note variance and to push articulation limits.

Appendix.

Story scripts

| Knitting | Fingerspelling | Cars |
|---|---|---|
| last-week my mother | my friend teach english class | my brother 2-of-us |
| learn knit | all-of-them real trouble | discuss cars |
| struggle knit struggle | not interested look-at-ceiling | pt.(brother) like |
| pt.(mother) bear-down | chat | japanese |
| continue | i look-at-class | i like american |
| succeed make square perfect | i must motivate all-of-them | 2-of-us discuss |
| mistakes none | how? | negatives_a |
| | fingerspell help q? | positives_a |
| | try see | negatives_b |
| | | positives_b |
| | | i-give-up |
| “Last week, my mother learned to knit. She really struggled with it. She continued to work at it and finally made a perfect square with no mistakes.” | “My friend teaches an English class. All of them are real trouble: they’re not interested, they stare at the ceiling and chat with each other. I look at them and wonder how I can motivate them. Perhaps fingerspelling will help. We can try it and see.” | “My brother and I discussed cars. He liked Japanese, I liked American. We discussed back and forth (what are) the positives and negatives. Then I gave up.” |

2.3 Task

Signers were asked to memorize the stories one at a time from the glosses on a card (they were not shown the English translations). They were then asked to produce each story at three signing rates, based on their own perceptions of normal, slow, and fast.¹ After practicing a story to the point of memorization, signers produced it twice at normal rate to confirm memorization; these warm-up productions were not included in the analysis presented here. Signers then produced the story twice at Slow, twice at Normal, and twice at Fast (Ascending rate), followed by twice at Fast (for a total of four productions in a row at Fast rate), twice at Normal, and twice at Slow (Descending rate). Thus each story was produced 12 times after the warm-up, four times at each of the three rates. With three stories, each signer produced a total of 36 trials (except for Subject 2, who failed to produce two of the Fast trials of the Fingerspelling story). For the six signers, the total number of trials is 214 (72 at each rate, minus the two missing Fast trials). Productions were video-recorded for subsequent analysis. A timecode from a timecode generator was recorded onto a copy of the video to provide time in minutes:seconds:milliseconds, which permitted direct entry of start and stop times into a database designed in FoxPro specifically for this project.
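The trial counts follow directly from the elicitation schedule. The sketch below rebuilds the schedule in Python and recounts the trials. Which two of Subject 2's four Fast Fingerspelling trials were missing is not stated, so the sketch drops two arbitrarily; the names `RATE_SEQUENCE` and `build_trials` are illustrative.

```python
from collections import Counter

# Elicitation order for one story after the warm-up, two trials per step:
# Ascending (Slow, Normal, Fast) followed by Descending (Fast, Normal, Slow).
RATE_SEQUENCE = ["Slow", "Slow", "Normal", "Normal", "Fast", "Fast",
                 "Fast", "Fast", "Normal", "Normal", "Slow", "Slow"]
STORIES = ["Cars", "Fingerspelling", "Knitting"]
SUBJECTS = range(1, 7)


def build_trials():
    """Return one (subject, story, rate) tuple per analyzed trial."""
    trials = [(subject, story, rate)
              for subject in SUBJECTS
              for story in STORIES
              for rate in RATE_SEQUENCE]
    # Subject 2 did not produce two Fast Fingerspelling trials; which two is
    # not stated, so the first two matching entries are removed arbitrarily.
    for _ in range(2):
        trials.remove((2, "Fingerspelling", "Fast"))
    return trials


trials = build_trials()
per_rate = Counter(rate for _, _, rate in trials)
print(len(trials), dict(per_rate))  # 214 {'Slow': 72, 'Normal': 72, 'Fast': 70}
```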

2.4 Coding

Coding took over a year and was conducted by multiple pairs of coders with the necessary ASL fluency (undergraduate and graduate students and lab employees), each working for no more than two hours at a time. Coding pairs rotated based on people's schedules; disagreements within a pair were referred to a third person (or more) until either (a) a resolution was reached or (b) the item was marked for omission from further analysis. The result was a coded paper template for each trial, which indicated ascending or descending order, story, requested rate, trial number, and subject. The signs from each script were laid out on the page with additional room for entries of start/stop times of signs (using recognizable handshape as the primary criterion for sign segmentation; Green, 1984), pauses (at least two frames with no observable movement of the hand against the background), brow raises, brow furrows, eyeblinks, and head nods (the duration of head nods was not measured). Added signs and omitted signs were noted on the paper template.
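The pause criterion (at least two frames with no observable movement) can be stated as a run-length rule. In this study the coders applied it by eye; the Python sketch below assumes a hypothetical per-frame `moving` flag and a nominal rate of 30 frames per second, neither of which is specified in the text.

```python
def find_pauses(moving, min_frames=2):
    """Return (start, end) frame spans, end exclusive, of runs of frames with
    no movement that last at least `min_frames` frames."""
    pauses = []
    run_start = None
    for i, is_moving in enumerate(moving):
        if not is_moving:
            if run_start is None:
                run_start = i  # a still run begins
        else:
            if run_start is not None and i - run_start >= min_frames:
                pauses.append((run_start, i))
            run_start = None  # movement ends any still run
    # A still run that continues to the last frame.
    if run_start is not None and len(moving) - run_start >= min_frames:
        pauses.append((run_start, len(moving)))
    return pauses


def pause_durations(pauses, fps=30.0):
    """Convert frame spans to seconds; fps = 30 is an assumed frame rate."""
    return [(end - start) / fps for start, end in pauses]


# A one-frame hold (frame 1) is too short to count as a pause.
frames = [True, False, True, False, False, True, False, False, False]
print(find_pauses(frames))  # [(3, 5), (6, 9)]
```

At the assumed 30 frames per second, the two-frame minimum corresponds to a shortest measurable pause of about 67 ms.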

Data were then entered from the paper into a database. The database has 12,906 records (six subjects, three rates, three stories, four trials for each measured sign, pause, brow raise, lowered brow and eyeblink) and 20 variables (signer, trial number, rate, section [beginning/practice, ascending order, descending order], story, sign name or pause/brow/blink, start time, end time, and the following yes/no variables: on template, pause, added, missing, brow raise, lowered brow, squint, eyeblink, head nod, head thrust, eye gaze change), for a total of 309,744 entries. Each sign was coded for whether it was on the scripted template, whether it was followed by a pause, whether it was accompanied by a brow raise, a brow furrowing, an eyeblink, or a head nod, as well as the other variables which are not dealt with in this article. If a sign was produced in the wrong order as compared to the scripted template, it was coded as “added” (not on the template) where it occurred and “missing” (on the template) where it should have been produced, as well as being marked as a “transposition.” All durations were calculated automatically in the database from the start–stop times.
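The duration computation can be sketched as follows. The original database was built in FoxPro; the Python below is only an illustration, and the `Event` record, its field names, and the exact timecode string format (for example `01:23:456`) are assumptions based on the minutes:seconds:milliseconds timecode described in Section 2.3.

```python
from dataclasses import dataclass


def timecode_to_ms(tc: str) -> int:
    """Convert a 'minutes:seconds:milliseconds' timecode such as '01:23:456' to ms."""
    minutes, seconds, millis = (int(part) for part in tc.split(":"))
    if not (0 <= seconds < 60 and 0 <= millis < 1000):
        raise ValueError(f"malformed timecode: {tc!r}")
    return (minutes * 60 + seconds) * 1000 + millis


@dataclass
class Event:
    """One coded event (sign, pause, brow raise, ...); field names are hypothetical."""
    label: str
    start: str
    end: str

    @property
    def duration_s(self) -> float:
        # Integer milliseconds avoid rounding noise before the final division.
        return (timecode_to_ms(self.end) - timecode_to_ms(self.start)) / 1000


sign = Event("STRUGGLE", "00:01:500", "00:02:100")
print(sign.duration_s)  # 0.6
```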

2.5 Analysis

Data were imported from the database into SPSS. The following variables were calculated: rate (number of signs per second); the number and durations of signs, pauses, brow raises, lowered brows, and eyeblinks; and the number of head nods. Signs per second was calculated by dividing the number of signs produced by the sum of all sign durations; that is, pauses were not included in the determination of signing rate. For the number of signs produced, the effects of story and rate were tested, and within-subject variation was also analyzed with repeated measures across trials. For the remaining variables, the main effects of Order of Rate Change (Ascending, Descending) and Rate were investigated with a general linear model.
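Because pauses are excluded, the rate measure is the number of signs divided by the total time spent articulating them, which is the reciprocal of the mean sign duration within a production. A minimal Python sketch (the function name is illustrative):

```python
def signs_per_second(sign_durations):
    """Signing rate excluding pauses: number of signs / summed sign durations (s)."""
    total_time = sum(sign_durations)
    if total_time <= 0:
        raise ValueError("need at least one sign with positive duration")
    return len(sign_durations) / total_time


print(signs_per_second([0.4, 0.5, 0.6, 0.5]))  # 4 signs in 2.0 s of articulation
```

As a rough consistency check, the reciprocals of the mean sign durations in Table 3 (1/.42 ≈ 2.38, 1/.51 ≈ 1.96, 1/.66 ≈ 1.52) are close to the rates in Table 1; they need not match exactly, since Table 1 averages per-signer rates.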

3 Results

3.1 Order of rate change

Univariate ANOVA revealed no significant effect of Order of Rate Change (Ascending: Slow, Normal, Fast; Descending: Fast, Normal, Slow) on either the number of signs produced (2599 signs in Ascending order, 2572 in Descending order) or the durations of the signs produced (Ascending .458 s, Descending .572 s), F(1, 5165) = .141, p = .708. Order was therefore collapsed in all further analyses.

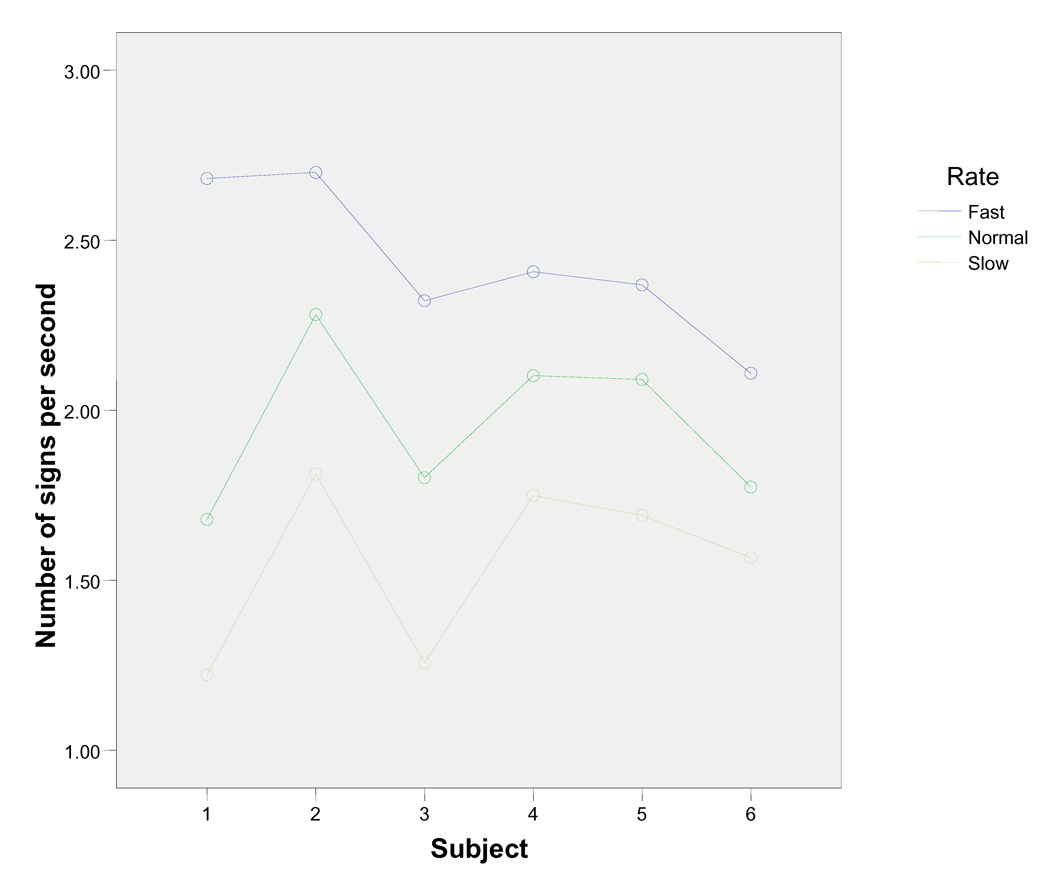

3.2 Rate (signs per second)

In the Fast condition, signers produced an average of 2.43 signs/s; in Normal, 1.95 signs/s; and in Slow, 1.55 signs/s, F(2, 15) = 20.47, p = .000 (Table 1). Post-hoc analysis (Fisher's Least Significant Difference, LSD) indicated that all three Rates differed significantly from each other (Fast – Normal, p = .012; Fast – Slow, p = .000; Normal – Slow, p = .033).

Table 1.

Number of signs per second at each target rate

| Rate | M (signs/s) | SD | n |
|---|---|---|---|
| Fast | 2.43 | .23 | 6 |
| Normal | 1.95 | .24 | 6 |
| Slow | 1.55 | .25 | 6 |
| Total | 1.98 | .43 | 18 |
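Because each rate in Table 1 contributes one value per signer (n = 6), the reported F(2, 15) can be approximately recovered from the tabled means and standard deviations alone. The Python sketch below assumes a one-way between-groups ANOVA, which matches the reported degrees of freedom but is not confirmed as the exact SPSS model used.

```python
def anova_from_summary(groups):
    """One-way between-groups ANOVA from a list of (mean, sd, n) per group.
    Returns (F, df_between, df_within)."""
    total_n = sum(n for _, _, n in groups)
    k = len(groups)
    grand_mean = sum(m * n for m, _, n in groups) / total_n
    ss_between = sum(n * (m - grand_mean) ** 2 for m, _, n in groups)
    ss_within = sum((n - 1) * sd ** 2 for _, sd, n in groups)
    df_between, df_within = k - 1, total_n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# (mean, SD, n) for Fast, Normal, Slow.
TABLE_1 = [(2.43, .23, 6), (1.95, .24, 6), (1.55, .25, 6)]        # signs per second
TABLE_11 = [(90.7, 16.0, 6), (122.7, 31.8, 6), (146.8, 34.1, 6)]  # number of eyeblinks

f_rate, df1, df2 = anova_from_summary(TABLE_1)
f_blink, _, _ = anova_from_summary(TABLE_11)
```

With the Table 1 values the sketch gives F(2, 15) ≈ 20.2, close to the reported 20.47 given that the tabled means and SDs are rounded; the same function applied to Table 11 below gives F(2, 15) ≈ 5.87 against the reported 5.89.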

Thus, despite our inability to control the rates at which subjects actually signed, they nonetheless gauged themselves sufficiently well to differentiate three rates of signing, supporting prior findings from Grosjean and Lane (1977). As Figure 2 shows, this differentiation held for all six subjects.

Figure 2.

Number of signs per second by each subject at each target rate

We note that our fastest rate, an average of 2.43 signs/s, is well below the 3.00 to 3.12 signs/s that Baker and Padden (1978) report for “rapid turn-taking”, which they characterized as “competitive conversation”.

3.3 Number of signs produced

3.3.1 Effect of subject

3.3.1.1 Within-subject variability

Given past reports of extreme phonetic variation across native signers from within a single region of the U.S.A. (Mauk, 2003), Subject was included as a factor in the analysis of the number of signs produced. One measure of within-subject variability was a repeated measures analysis of the number of signs produced by each signer across the four trials of each story at each rate. This analysis showed no main effect of trial on the number of signs produced, F(3, 44) = .973, p = .408, and no interaction of trial with story, F(6, 44) = .107, p = .995, or with rate, F(6, 44) = 1.127, p = .350. Given this lack of within-subject variability, only the mean number of signs per rate per subject per story is given in Table 2. Within-subject variation (as indicated by the standard deviations in Table 2) was greatest for the Fingerspelling story, which had the largest number of signs.

Table 2.

Within-subject variation in number of signs produced across four trials of each story at each target rate

| Subject | Cars Fast M | Cars Fast SD | Cars Normal M | Cars Normal SD | Cars Slow M | Cars Slow SD | Fingerspell Fast M | Fingerspell Fast SD | Fingerspell Normal M | Fingerspell Normal SD | Fingerspell Slow M | Fingerspell Slow SD | Knit Fast M | Knit Fast SD | Knit Normal M | Knit Normal SD | Knit Slow M | Knit Slow SD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 20.0 | 0 | 20.8 | 1.0 | 21.5 | 0.6 | 27.8 | 0.5 | 27.5 | 1.0 | 28.8 | 1.7 | 17.5 | 0.6 | 17.5 | 0.6 | 17.8 | 1.0 |
| 2 | 27.5 | 1.0 | 30.0 | 0.8 | 29.5 | 0.6 | 28.5 | 0.7 | 30.3 | 1.9 | 30.5 | 3.7 | 26.3 | 1.0 | 26.0 | 0.8 | 24.5 | 2.5 |
| 3 | 20.8 | 1.0 | 20.3 | 0.5 | 21.0 | 0.8 | 28.5 | 3.0 | 28.0 | 2.0 | 28.3 | 0.5 | 18.8 | 0.5 | 19.0 | 0.8 | 19.5 | 1.7 |
| 4 | 20.3 | 0.5 | 20.0 | 0 | 21.8 | 1.7 | 33.8 | 1.5 | 33.3 | 1.7 | 33.3 | 1.5 | 18.0 | 0.8 | 19.0 | 1.4 | 19.0 | 1.4 |
| 5 | 21.5 | 0.6 | 23.3 | 1.9 | 21.8 | 1.0 | 27.8 | 1.7 | 27.0 | 0.8 | 29.5 | 3.7 | 17.3 | 0.5 | 18.3 | 0.5 | 17.8 | 1.0 |
| 6 | 24.8 | 1.0 | 25.0 | 0.8 | 25.8 | 0.5 | 29.8 | 1.7 | 30.3 | 0.5 | 31.0 | 1.8 | 20.5 | 0.6 | 20.0 | 0 | 21.8 | 2.1 |
| Total | 22.5 | 0.2 | 23.2 | 0.6 | 23.5 | 0.3 | 29.3 | 2.6 | 29.4 | 0.3 | 30.2 | 0.9 | 19.7 | 0.3 | 20.0 | 0.3 | 20.0 | 0.8 |

3.3.1.2 Between subjects effects

Subjects differed significantly from each other in the number of signs they produced, F(5, 54) = 78.30, p = .000, in the number of signs they produced at different Rates, F(2, 54) = 4.06, p = .023, and in the number of signs they produced for the different Stories, F(2, 54) = 739.29, p = .000. There was also a Subject by Story interaction, F(10, 54) = 22.61, p = .000, but no other interactions. At the Slow rate, larger variation was shown by Subjects 2 and 5 for the Fingerspelling story and by Subjects 2, 5, and 6 for the Knit story, when compared to the other signers. Subject 3 showed greater variation in the Fingerspelling story when produced at Normal and Fast rates compared to the other signers.

3.3.2 Effect of story

Stories differed in the number of signs in their scripts. Thus, it is not surprising that Stories differed in the number of signs in their productions, F(2, 44) = 49.17, p = .000. There was no Story by Rate interaction, F(4, 44) = .054, p = .994.

The average number of signs produced at each signing rate across all Stories did not differ with Rate (F(2, 35) = .106, p = .9). Although not significant by rate, these averages obscure some interesting linguistic effects of signing rate on the number of signs produced. The Fast condition had fewer (1659) total signs than the Slow (1771) or Normal (1741) conditions. First, we note again that the Fast condition was missing two trials of Fingerspelling by Subject 2, who averaged 28.5 signs per trial; assuming this mean for the missing two trials would bring the total for Fast up to an estimated 1716 signs, still fewer than Slow or Normal.

We explored the data to determine the sources of the remaining differences. One source is related to the fact that a definite noun is typically introduced into a discourse preceded or followed by a point to a particular location in space, which will be used for subsequent references to the referent of that noun. However, ASL permits a single-handed noun sign to be produced with the dominant hand while deictic, determiner, or adverbial pointing is produced simultaneously with the nondominant hand. In such cases, what would otherwise have been counted as two signs was counted as, and took up the duration of, only one sign. We kept track of this factor through added and omitted signs. Compared to the scripts, Fast had a net loss of 3 signs (109 added, 112 omitted), whereas Slow had a net gain of 69 (162 added, 93 omitted) and Normal had a net gain of 43 (137 added, 94 omitted).
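The bookkeeping in the two preceding paragraphs reduces to simple arithmetic, sketched below in Python. The dictionary and function names are illustrative, and filling the missing trials with the signer's mean is the simple mean imputation described in the text, not a model-based estimate.

```python
# (added, omitted) signs relative to the scripts, per rate, as reported above.
ADDED_OMITTED = {"Fast": (109, 112), "Normal": (137, 94), "Slow": (162, 93)}


def net_change(rate: str) -> int:
    """Net signs gained (positive) or lost (negative) relative to the scripts."""
    added, omitted = ADDED_OMITTED[rate]
    return added - omitted


def imputed_total(observed: int, missing_trials: int, mean_per_trial: float) -> float:
    """Fill missing trials with the signer's mean signs per trial."""
    return observed + missing_trials * mean_per_trial


for rate in ADDED_OMITTED:
    print(rate, net_change(rate))      # Fast -3, Normal 43, Slow 69
print(imputed_total(1659, 2, 28.5))    # 1716.0
```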

Second, qualitative analysis of the added signs in Slow and Normal revealed that certain scripted signs were repeated in non-template positions. For example, in the Knit story, the sequence MISTAKES NONE was frequently signed as NONE MISTAKES NONE, emphasizing its prominence as the final achievement of the agent in the story. Third, at the Slow rate, additional signs also appeared that were not on the script template: signer commentary (e.g., Whew!), extra pointing (e.g., both before and after a noun, instead of just before or after), and separate articulation of a first person subject pronoun when it was not needed because the verb clearly indicated the subject. An example of this is the glossed sequence I I-GIVE-UP I. The verb GIVE-UP is made in front of the signer’s chest, and without indication of another person as subject, the default interpretation is that the signer is the subject of ‘give up’. I have glossed this as I-GIVE-UP to make it clear that there is already a first person interpretation of the verb as produced. The signer then produces the first person pronoun sign (a pointing sign toward the signer’s chest) both before and after the verb sign. This example, then, includes both an unnecessary sign for the subject and a repetition of that unnecessary sign after the verb (the latter has been called ‘doubling’; Padden, 1988, 1990; Wilbur, 1999c). The net result is that extra signs were added at the Slow rate, where there were also no simultaneous productions of NP + pointing.

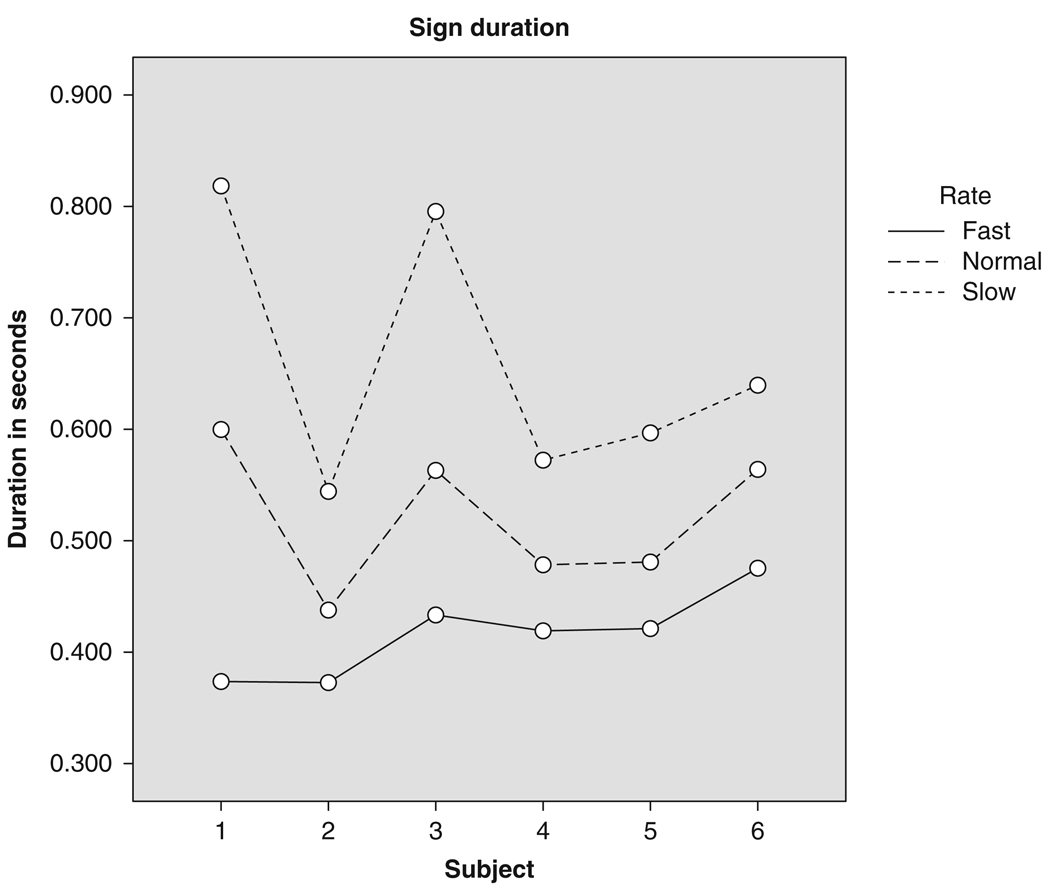

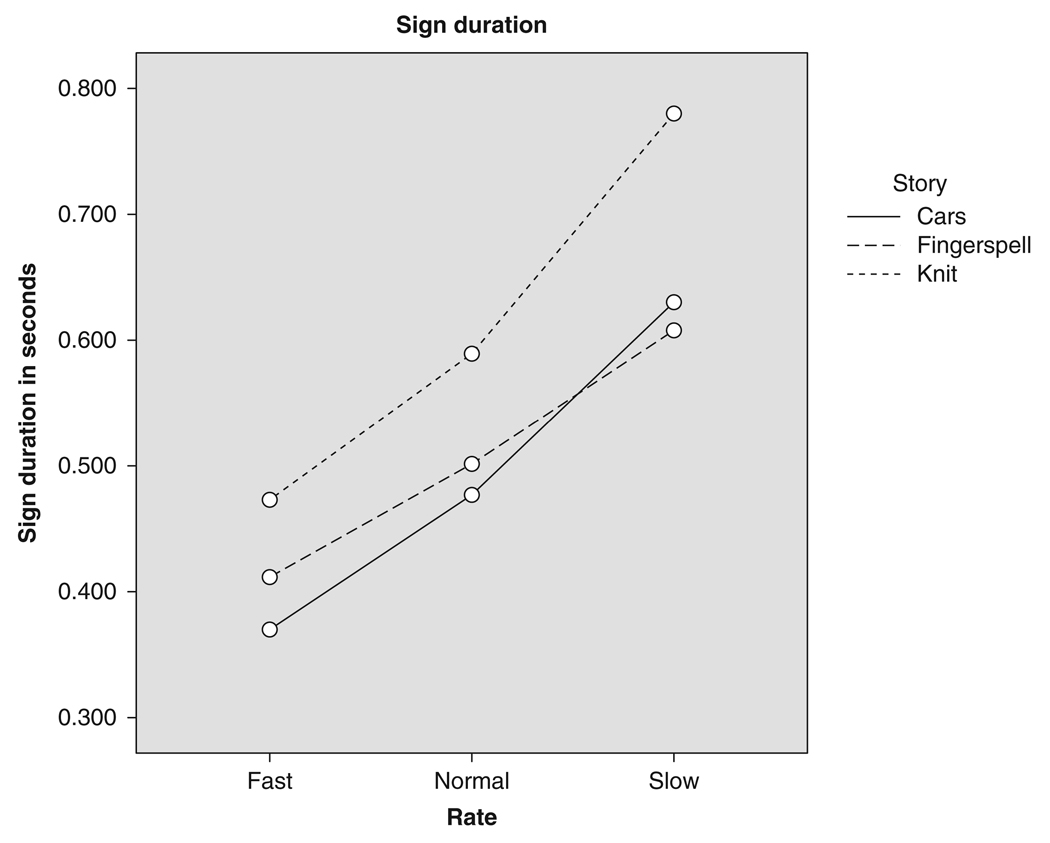

3.4 Sign duration

As can be seen in Table 3, the mean duration of signs decreased (from .66 to .51 to .42 s) as rate increased from Slow to Normal to Fast, F(2, 5167) = 180.49, p = .000. Post-hoc pairwise comparisons (Fisher LSD) revealed that sign duration differed significantly among all three rates: sign duration in Slow was longer than in Normal (mean difference .14 s, p = .000) and in Fast (mean difference .24 s, p = .000), and Normal and Fast also differed significantly from each other (mean difference .1 s, p = .000).

Table 3.

Effects of rate on sign duration (in seconds)

| Rate | M | SD | N |

|---|---|---|---|

| Slow | .66 | .49 | 1770 |

| Normal | .51 | .32 | 1741 |

| Fast | .42 | .26 | 1659 |

| Total | .53 | .39 | 5170 |
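The Total row of Table 3 can be recovered from the three rate rows, since the overall variance is the within-rate sum of squares plus the between-rate sum of squares, divided by N - 1. A minimal Python sketch of this standard identity (the function name is illustrative):

```python
import math


def pooled_summary(groups):
    """Combine (mean, sd, n) per group into the mean, SD, and N of all observations."""
    total_n = sum(n for _, _, n in groups)
    mean = sum(m * n for m, _, n in groups) / total_n
    ss_within = sum((n - 1) * sd ** 2 for _, sd, n in groups)
    ss_between = sum(n * (m - mean) ** 2 for m, _, n in groups)
    sd = math.sqrt((ss_within + ss_between) / (total_n - 1))
    return mean, sd, total_n


# (mean, SD, N) of sign duration in seconds for Slow, Normal, Fast (Table 3).
TABLE_3 = [(.66, .49, 1770), (.51, .32, 1741), (.42, .26, 1659)]
mean_s, sd_s, n_signs = pooled_summary(TABLE_3)
```

With the Table 3 rows this gives a mean of about .53 s and an SD of about .385 s over 5170 signs, in line with the Total row given that the group SDs are themselves rounded.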

Sign duration was also significantly affected by Subject, F(5, 5116) = 23.18, p = .000, and by Story, F(2, 5116) = 50.83, p = .000. There were significant Rate by Subject, F(10, 5116) = 8.99, p = .000, and Rate by Story, F(4, 5116) = 3.43, p = .008, interactions. Signers 1 and 3 had larger sign duration variance at the Normal and Slow rates than the other four signers. The Knit story had larger sign duration variance at all rates, and this difference increased as signing Rate decreased. These are displayed in Figure 3 and Figure 4, respectively.

Figure 3.

Sign duration by subject at each rate; note larger variance in subjects 1 and 3 compared to 2, 4, 5, and 6

Figure 4.

Sign duration by Story at each rate; note greater duration for Knit story, and increasing distance between it and other stories as rate decreases

3.5 Pauses

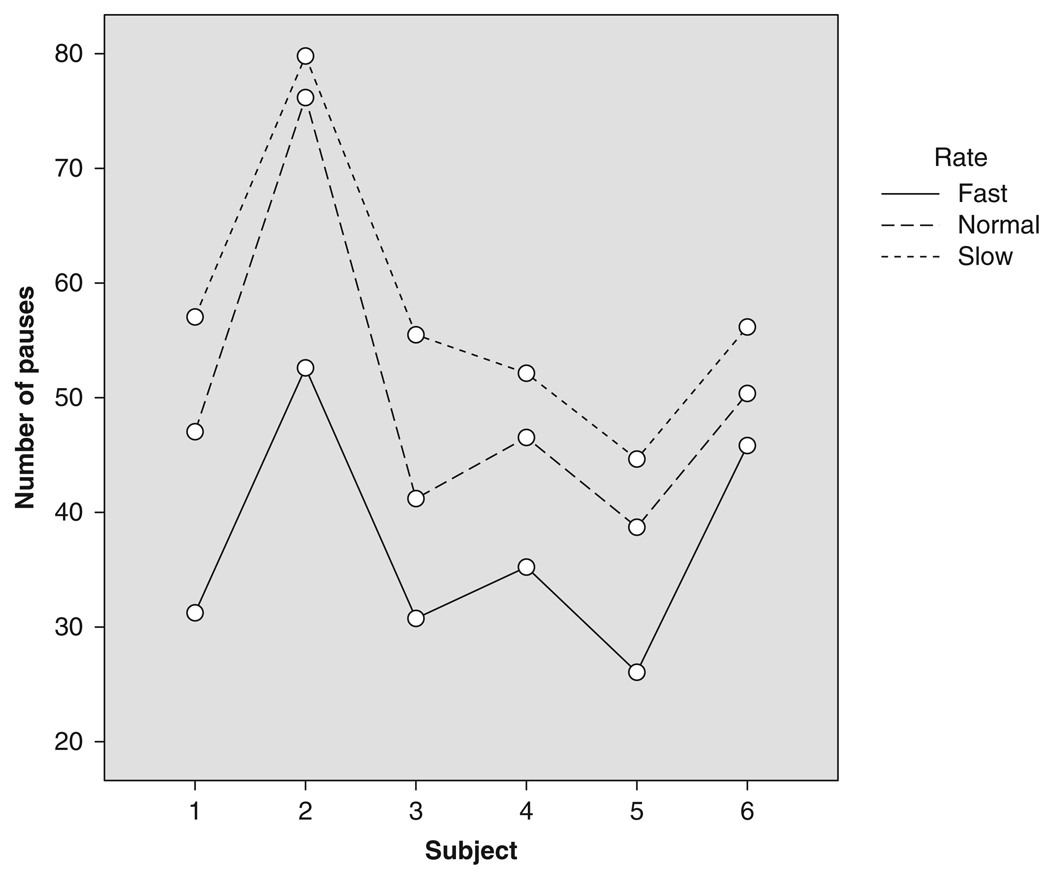

As Rate decreased, the average number of pauses produced by the six signers increased, F(2, 15) = 4.51, p = .03 (Table 4). Post-hoc testing (LSD) indicated that the number of pauses in Slow was significantly greater than in Fast but not than in Normal, and that Normal and Fast did not differ from each other. Figure 5 displays this effect by signer.

Table 4.

Effect of rate on number of pauses

| Rate | Total | M | SD | n |

|---|---|---|---|---|

| Fast | 667 | 37.1 | 19.4 | 18 |

| Normal | 899 | 49.9 | 22.5 | 18 |

| Slow | 1035 | 57.5 | 21.7 | 18 |

| Total | 2601 | 48.2 | 22.5 | 54 |

Figure 5.

Number of pauses produced by signer at each rate; note generally high number of pauses produced by Signer 2, and also a relatively high number of pauses produced by signer 6 even at Normal and Fast rates

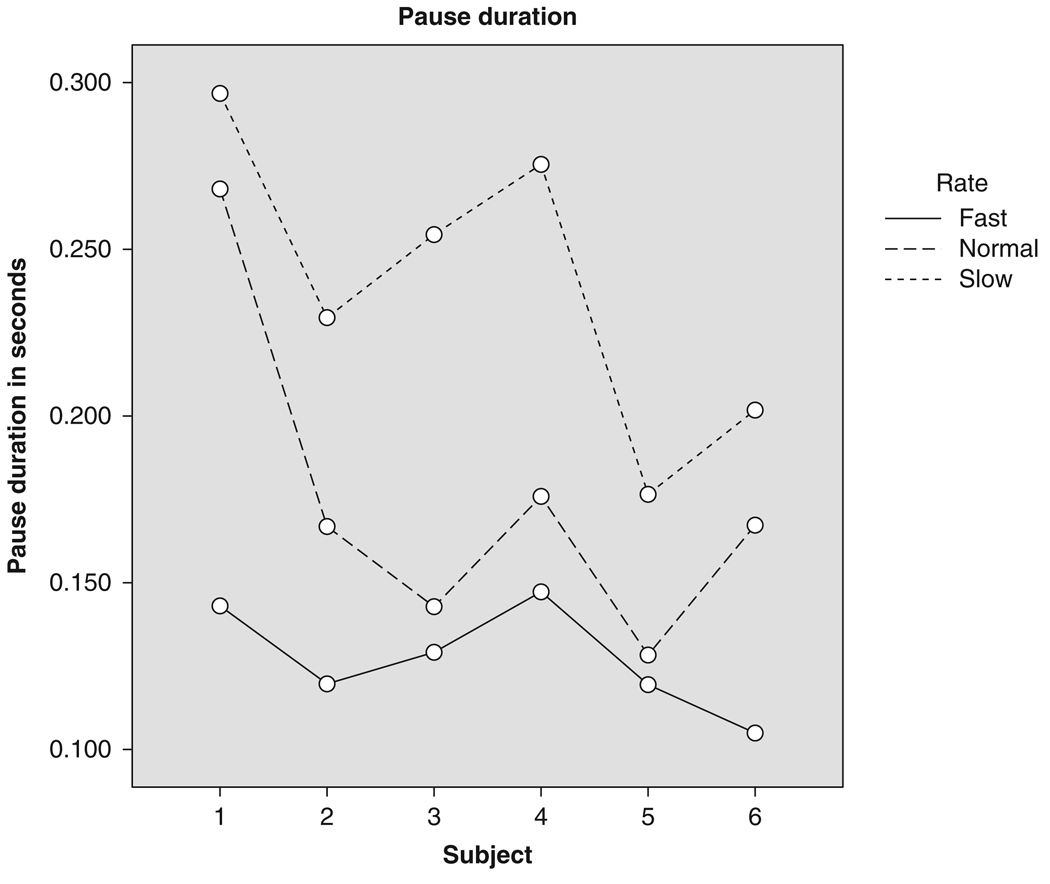

The mean pause duration also increased with decreased Rate, F(2, 2547) = 42.13, p = .000 (Table 5). Post-hoc testing (LSD) indicated that pause duration at each Rate was significantly different from the others (p = .000). Pause duration also varied significantly by Subject, F(5, 2547) = 4.46, p = .000, which is displayed in Figure 6.

Table 5.

Effect of rate on pause duration (in seconds)

| Rate | M | SD | n |

|---|---|---|---|

| Fast | .13 | .15 | 667 |

| Normal | .18 | .21 | 899 |

| Slow | .24 | .27 | 1035 |

| Total | .19 | .23 | 2601 |

Figure 6.

Average pause duration by subject for each rate; note generally longer pause durations for signer 1 and generally shorter for Signer 5

Pause duration differed by Story, F(2, 2547) = 17.16, p = .000 (Figure 7). We attribute this difference primarily to the differing syntactic structures of the stories since, as Grosjean and Lane (1977) demonstrated, relative pause duration depends on the type of syntactic boundary at which a pause occurs.

Figure 7.

Pause duration by story at each rate

The significant changes in both the number and the duration of pauses support Grosjean’s (1978, 1979) earlier report that when signers modify total pause time at different signing rates, they do so by changing both the number of pauses and the duration of pauses.

3.6 Effects of rate on nonmanuals

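Overall, the number of nonmanuals decreased with increased Rate, except in the case of lowered brows. All nonmanuals whose duration was measured (brow raises, lowered brows, and eyeblinks) decreased significantly in duration with increased Rate, although the unlicensed lowered brows of the Knit story did not (Section 3.6.4). We address the nonmanuals in the following order: brow raise, lowered brow, eyeblink, head nod.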

3.6.1 Brow raise

The number of brow raises decreased with increased Rate, but not significantly, F(2, 15) = .87, p = .439 (Table 6). This likely reflects a number of strategies. One such strategy is the “running together” of brow raises at the faster rates, rather than a clear return of the brows to a neutral position between individual brow raises. Such running together would reduce the number of brow raises counted without actually changing the constituents that are properly marked by brow raise. In this sense, brow behavior is modified prosodically but not syntactically. Nonetheless, as illustrated in Figure 1 above, running together of brow raises across separate brow raise-marked phrases also occurs in naturally produced signing at normal rate. Another strategy is essentially the opposite: more careful articulation of brow raises and their returns to neutral (hyperarticulation) at the slower rates.

Table 6.

Effect of rate on number of brow raises

| Rate | Total | M | SD | n |

|---|---|---|---|---|

| Fast | 228 | 38.0 | 11.9 | 6 |

| Normal | 256 | 42.7 | 11.6 | 6 |

| Slow | 284 | 47.3 | 13.2 | 6 |

| Total | 768 | 42.7 | 12.6 | 18 |

The number of brow raises varied significantly by Subject, F(5, 12) = 10.82, p = .000. Figure 8 shows that Subjects 1, 3, and 6 varied the number of brow raises greatly by rate, while Subjects 2, 4, and 5 did not. From the patterns of variance, one may infer that Subject 6 adopted a hyperarticulation strategy for the Slow condition, as there were many more brow raises compared to both Normal and Fast, whereas Subject 4 appears to have used a running together strategy for the Fast condition, as there are many fewer brow raises than at Normal and Slow. Subject 2 had a generally high number of brow raises across all conditions, and Subject 5 had a generally low number across all conditions. Thus with just six subjects, we see four patterns: no change with rate; running together at Fast; hyperarticulation at Slow; and general variation with rate (Subjects 1 and 3). These findings suggest that in order to determine whether a brow raise is required on a given construction, it is necessary to have multiple signers produce the target at a relatively careful (e.g., slower) signing rate. We return to this issue in the discussion in Section 4.

Figure 8.

Number of brow raises by signer at each rate (across 12 trials: four trials for each story)

In contrast to the number of brow raises, the duration of brow raises decreased as signing rate increased, F(2, 750) = 5.89, p = .003, indicating that brow raise duration is adjusted along with the duration of the signs the brow raises cover. Post-hoc testing (LSD) showed that brow raise duration did not differ significantly between Fast and Normal, but that both differed from Slow: Fast was .46 s shorter than Slow, p = .000, and Normal was .29 s shorter than Slow, p = .012. Story was not a significant main effect, F(2, 714) = .572, p = .564 (Table 7).

Table 7.

Effects of rate on brow raise duration (in seconds)

| Rate | M | SD | n |

|---|---|---|---|

| Fast | .82 | 2.12 | 228 |

| Normal | .99 | .69 | 256 |

| Slow | 1.28 | .85 | 284 |

| Total | 1.05 | 1.34 | 768 |

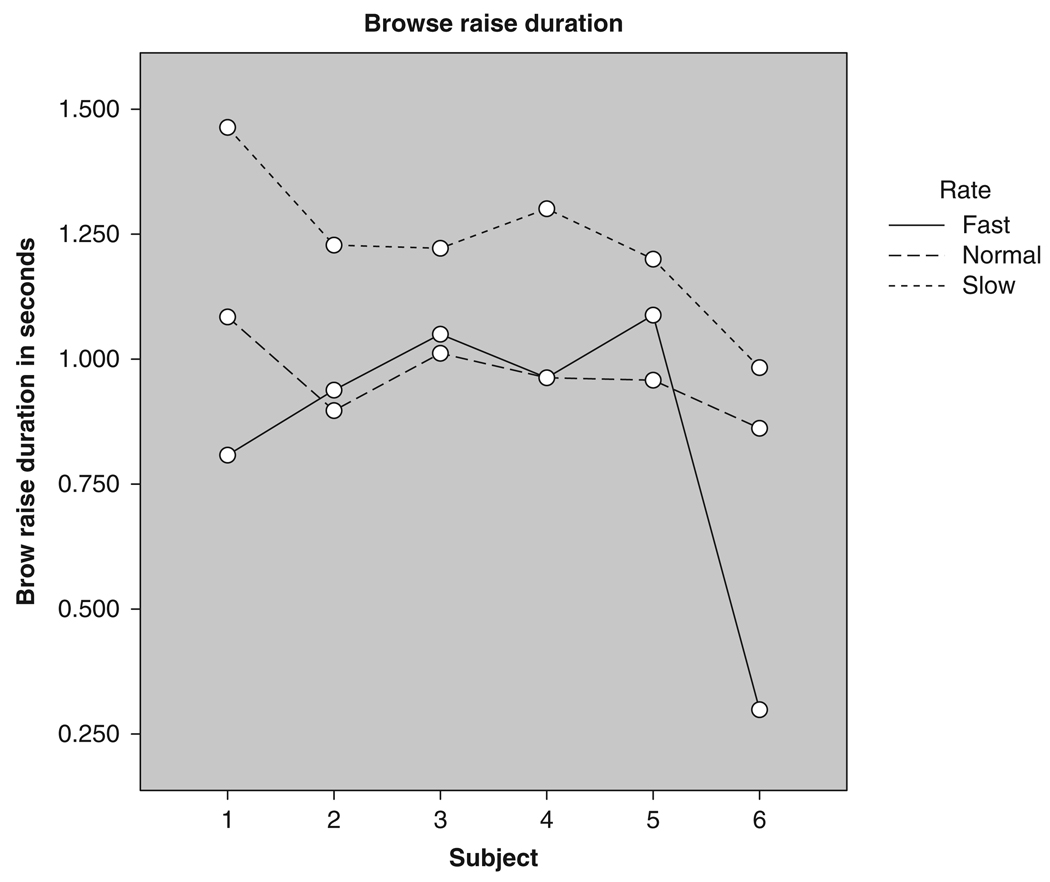

Brow raise duration was significantly affected by Subject, F(5, 750) = 2.31, p = .042. This is displayed in Figure 9. Note that all subjects had longer brow raise durations in the Slow condition, and that Subject 6 had extremely short brow raise durations in the Fast condition compared to Normal, which relates directly to the absence of a difference in the number of brow raises in Fast and Normal seen in Figure 8 above. Subjects 2, 3, and 4 show almost no change in brow raise duration between Fast and Normal, while all three show lengthening in the Slow condition. Comparing these signers’ results with their numbers in Figure 8 shows that Subject 3 differs from the other two, varying the number of brow raises as well as their duration, whereas Subjects 2 and 4 vary duration primarily in Slow and show no variation in the number of brow raises produced. Subjects 2, 3, and 5, the last of whom did not vary the number of brow raises, actually produced longer brow raises in the Fast condition than in the Normal. Thus neither measure alone, number of brow raises or duration of brow raise, comprehensively captures the differing performances of subjects under varying rate conditions.

Figure 9.

Brow raise duration by signer

3.6.2 Lowered brows

As seen for brow raises, the number of lowered brows did not differ significantly with Rate, F(2, 51) = .147, p = .864 (Table 8). They did, however, differ by Story, F(2, 51) = 8.28, p = .001 (Table 9). The explanation for this result lies in differences in the source of lowered brows in the three stories (“licensed” and “unlicensed” lowered brows; see Sections 3.6.3 and 3.6.4 below).

Table 8.

Effect of rate on number of lowered brows (6 subjects × 3 stories)

| Rate | Total | M | SD | n |

|---|---|---|---|---|

| Fast | 137 | 7.7 | 4.2 | 18 |

| Normal | 128 | 7.1 | 4.2 | 18 |

| Slow | 125 | 6.9 | 4.1 | 18 |

| Total | 390 | 7.2 | 4.1 | 54 |

Table 9.

Effect of story on number of lowered brows

| Story | M | SD | n |

|---|---|---|---|

| Cars | 4.4 | 4.3 | 18 |

| Fingerspell | 8.8 | 3.5 | 18 |

| Knit | 8.5 | 3.0 | 18 |

| Total | 7.2 | 4.1 | 54 |

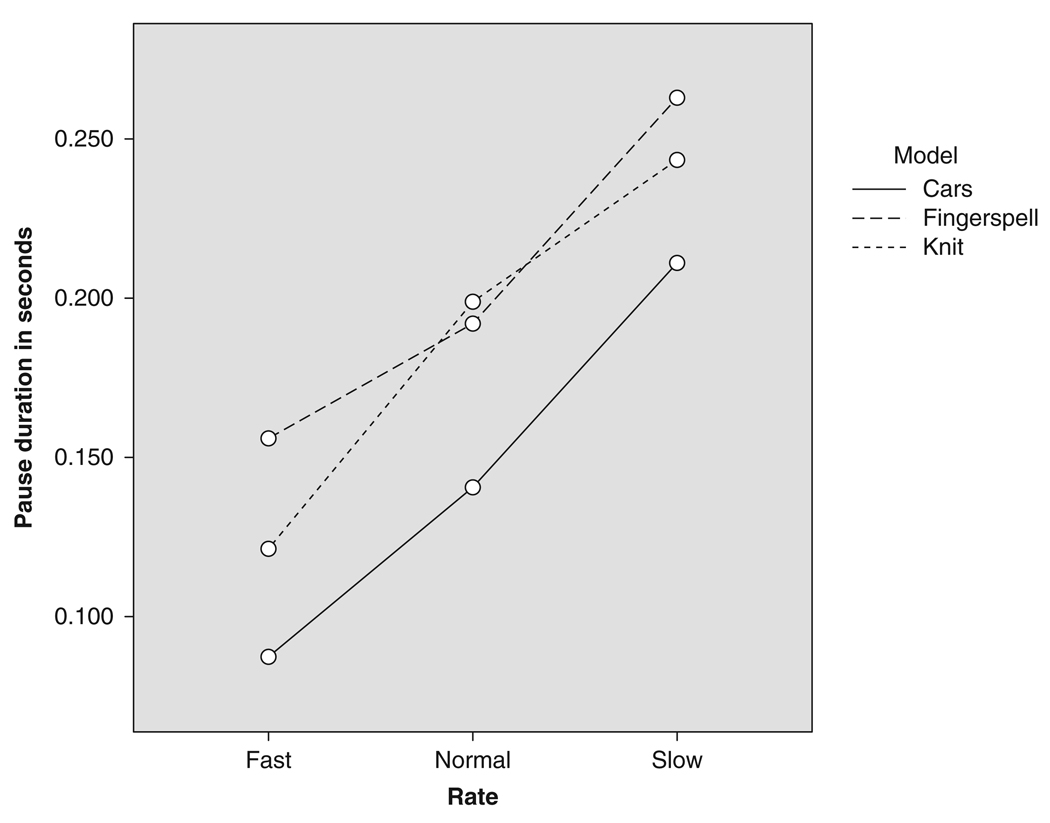

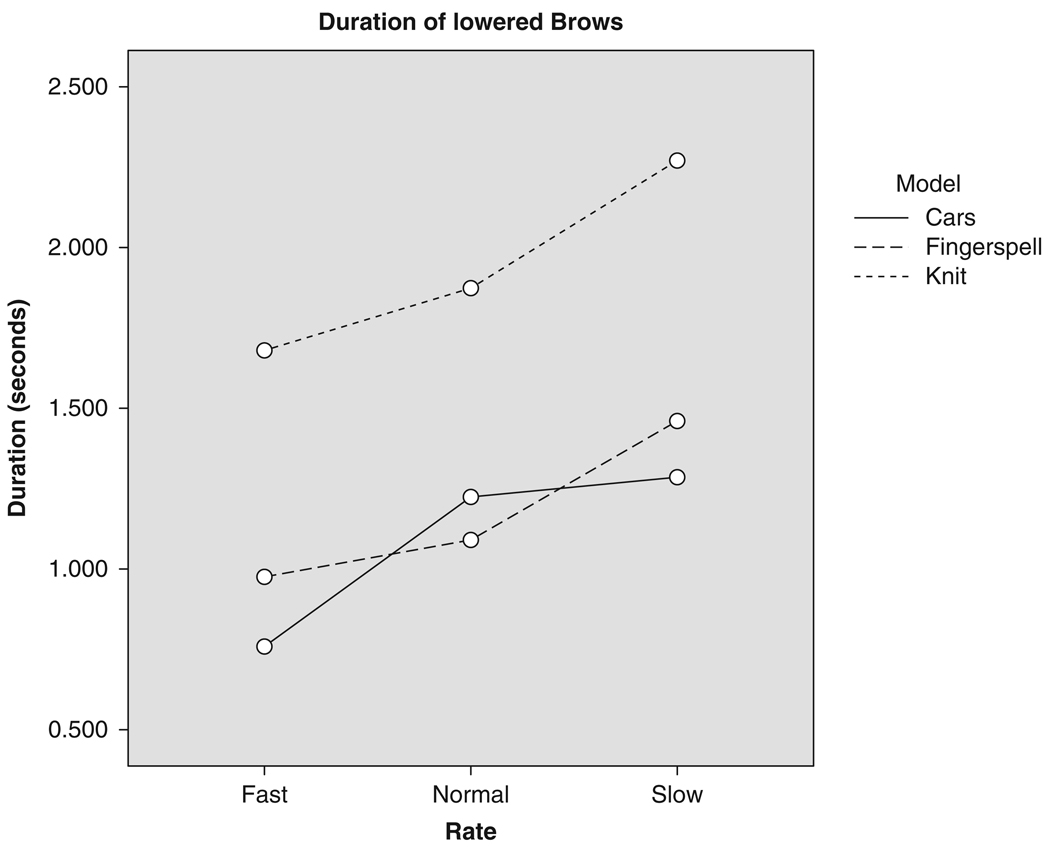

Like brow raise duration, the duration of lowered brows decreased with increased Rate, F(2, 341) = 4.02, p = .019 (Table 10). As reported for the number of lowered brows, Story was also significant for lowered brow duration, F(2, 341) = 16.92, p = .000 (also given in Table 10). Post-hoc analysis (Scheffe, p < .05) indicates that the duration of lowered brows in the Knit story differs significantly from those of the other two stories, which do not differ from each other.

Table 10.

Effect of rate and story on lowered brow duration (in seconds)

| Rate | Fingerspell M | Fingerspell SD | Fingerspell n | Knit M | Knit SD | Knit n | Cars M | Cars SD | Cars n | Overall M | Overall SD | Overall n |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fast | .98 | .69 | 55 | 1.69 | 1.15 | 49 | .75 | .45 | 33 | 1.18 | .93 | 137 |
| Normal | 1.09 | .80 | 57 | 1.87 | 1.39 | 48 | 1.23 | .65 | 23 | 1.41 | 1.10 | 128 |
| Slow | 1.47 | 1.23 | 47 | 2.28 | 1.67 | 55 | 1.28 | .64 | 23 | 1.79 | 1.43 | 125 |

Lowered brows can serve both grammatical and affective functions (as can brow raises, see recent measurements by Weast, 2008). The Fingerspelling story has an overt licenser of lowered brows, Cars has an interpretation which might license lowered brows, and Knit has an affective function that can serve as the source for lowered brows but which does not constitute a grammatical licensing.

3.6.3 “Licensed” lowered brows

Grammatical brow lowering is associated specifically with wh-questions and embedded wh-clauses, and only one per sentence is licensed by the [+wh] feature (Neidle et al., 2000). In the scripted stories, only one, Fingerspelling, contains an overt wh-question. All six subjects used lowered brows at all rates for this story. The main effect of Rate on lowered brow duration (Table 10 above) in this story was significant, F(2, 76) = 7.55, p = .001.

A different situation is seen with the Cars story, which could be interpreted to contain a covert wh-question in the part glossed 2-of-us discuss negatives positives negatives positives “The two of us discuss (what are) the positives and negatives.” Crosslinguistically in sign languages studied to date, when overt wh-signs are not present, the nonmanual marker of wh-questions is generally required (Šarac et al., 2007). Four of the six subjects produced lowered brows with this story at Fast and Normal rates, and five of the six used lowered brows at the Slow rate. One signer did not use lowered brows at all with this story, indicating a non-wh interpretation. As in the Fingerspelling story, the main effect of Rate on lowered brow duration (Table 10 above) in this story was significant, F(2, 76) = 3.98, p = .021.

3.6.4 “Unlicensed” lowered brows

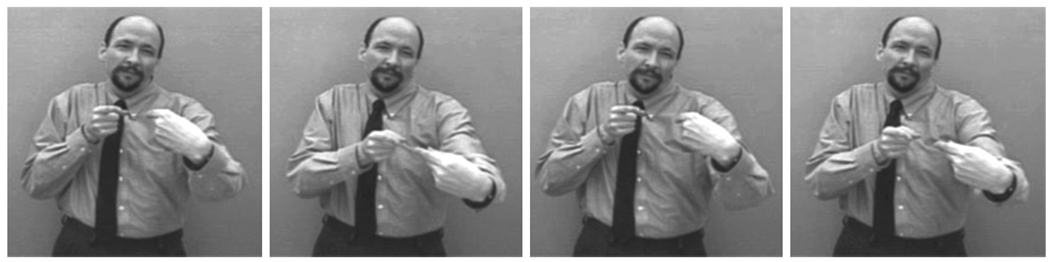

In contrast, the Knit story contained 152 brow lowerings, only one of which was licensed by an overt added wh-word WHAT (Weast, 2008, refers to this as “lexical lowering”). The remaining 151 unlicensed lowered brows served affective functions. For example, certain lexical signs such as STRUGGLE and BEAR-DOWN generally occur with lowered brows (Figure 10). Post-hoc testing (Scheffe, p < .05) indicated that the lowered brows in the Knit story were significantly longer in duration than those in either the Cars or the Fingerspelling story (Figure 11). Also in contrast to those two stories, the main effect of Rate on lowered brow duration was not significant, F(2, 76) = 2.31, p = .103. These findings support the idea that affective facial expressions such as those in the Knit story are not integrated into the grammatical, hence prosodic, structure of ASL (or other SLs) (Anderson & Reilly, 1998; Baker & Padden, 1978; Vos, van der Kooij, & Crasborn, 2006) but perform a constraining function (Weast, 2008). According to Weast, affect determines the relative range of brow height when raising or lowering, but the presence and spread of raising or lowering is determined by the syntax (and, I would add, semantics).

Figure 10.

Lexical sign (citation form) STRUGGLE with lowered brows. Pictures reproduced courtesy of Dr. William Vicars

Figure 11.

Duration of lowered brows by story by rate

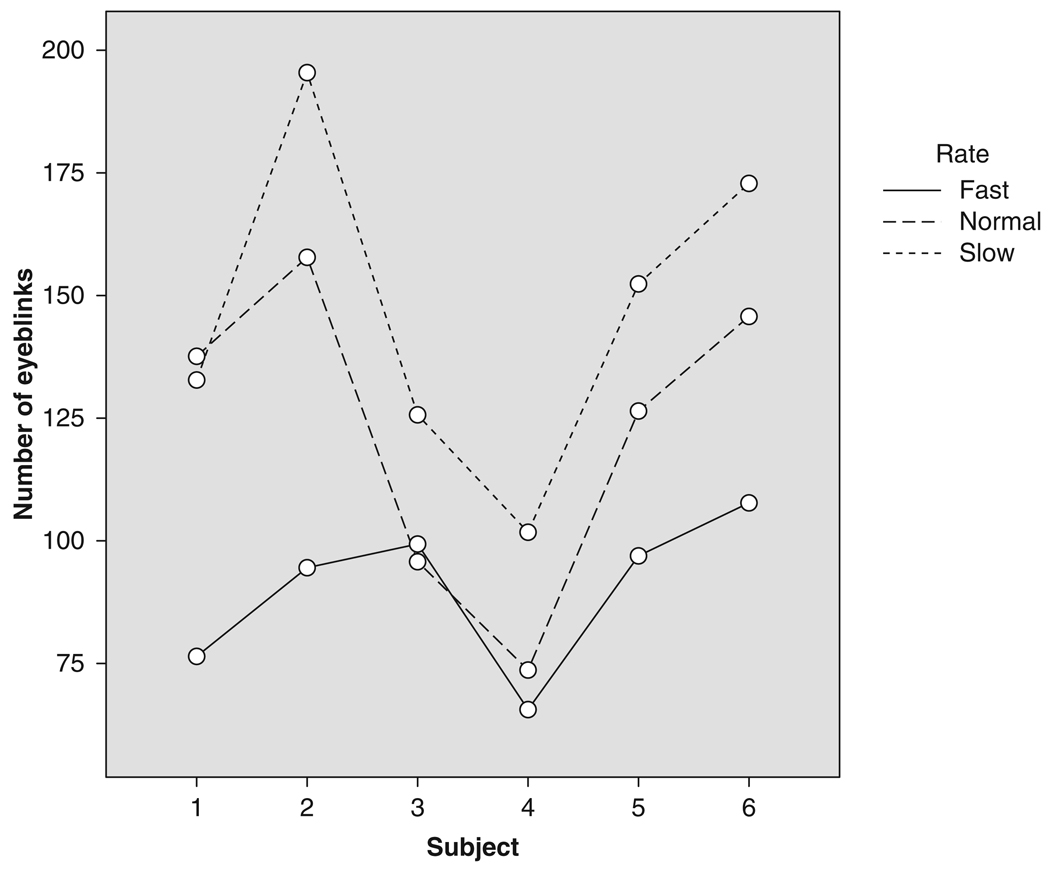

3.6.5 Eyeblink

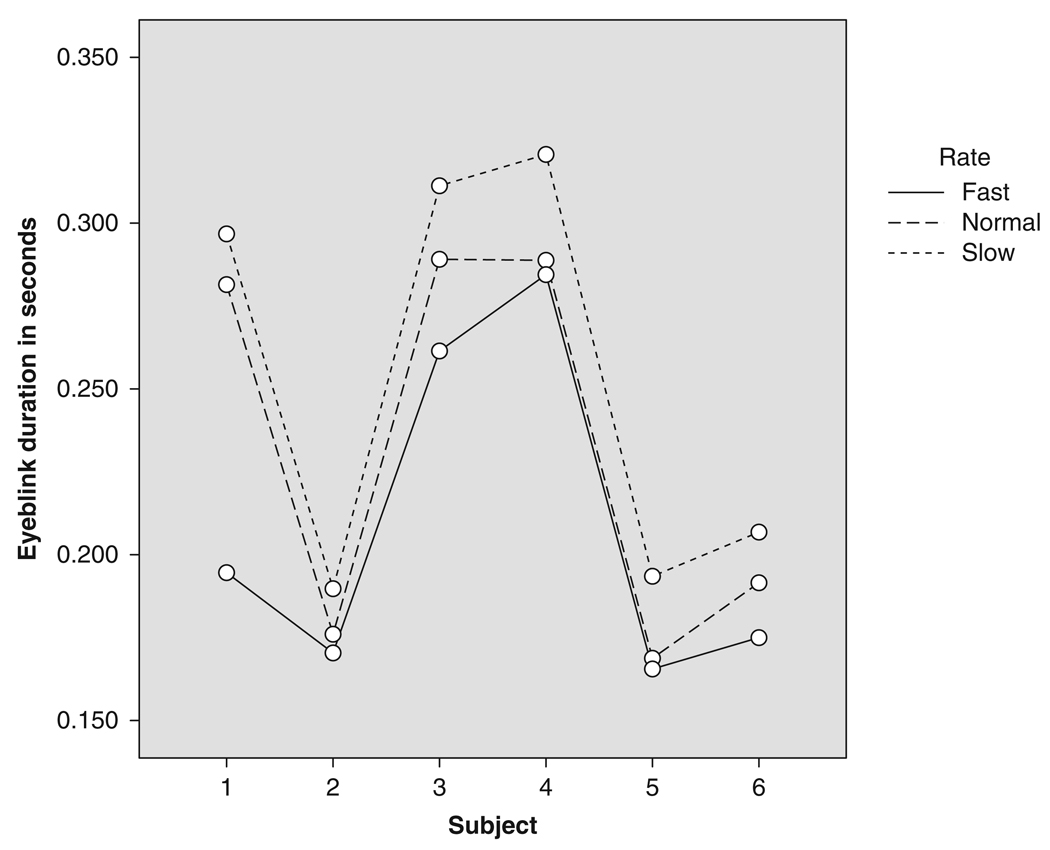

The number of eyeblinks varied with Rate, F(2, 15) = 5.89, p = .013 (Table 11). Figure 12 shows the variation in number of eyeblinks by rate for each subject. Like brow raises and licensed lowered brows, eyeblink durations differed by Rate, F(2, 2158) = 16.65, p = .000, decreasing as Rate increased (Table 12). Figure 13 shows the variation in eye blink duration by rate for each subject.

Table 11.

Number of eyeblinks by rate

| Rate | M | SD | n |
|---|---|---|---|
| Fast | 90.7 | 16.0 | 6 |
| Normal | 122.7 | 31.8 | 6 |
| Slow | 146.8 | 34.1 | 6 |
| Total | 120.1 | 35.7 | 18 |
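Because Table 11 reports summary statistics, the Rate effect on blink counts can be checked directly from its M, SD, and n columns. The sketch below assumes the reported test was a one-way ANOVA over the 18 signer-by-rate counts (the F(2, 15) degrees of freedom fit that design); the function names are illustrative, and the closed-form p-value used here holds only when the numerator df is 2.

```python
from dataclasses import dataclass


@dataclass
class Group:
    mean: float
    sd: float
    n: int


def one_way_anova_from_summary(groups):
    """Return (F, df_between, df_within) from per-group mean, SD, and n."""
    k = len(groups)
    n_total = sum(g.n for g in groups)
    grand_mean = sum(g.mean * g.n for g in groups) / n_total
    # Between-groups sum of squares: spread of group means around the grand mean
    ss_between = sum(g.n * (g.mean - grand_mean) ** 2 for g in groups)
    # Within-groups sum of squares recovered from each group's SD
    ss_within = sum((g.n - 1) * g.sd ** 2 for g in groups)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def f_upper_tail_df1_2(f_stat, df_within):
    """Upper-tail p for F(2, df_within); this closed form is valid only for df1 == 2."""
    return (1 + 2 * f_stat / df_within) ** (-df_within / 2)


# Table 11: number of eyeblinks by rate (M, SD, n = 6 signers per rate)
table_11 = [Group(90.7, 16.0, 6), Group(122.7, 31.8, 6), Group(146.8, 34.1, 6)]

f_stat, df_b, df_w = one_way_anova_from_summary(table_11)
p = f_upper_tail_df1_2(f_stat, df_w)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}, p = {p:.3f}")  # F(2, 15) = 5.87, p = 0.013
```

With the rounded table values this yields F(2, 15) ≈ 5.87 and p ≈ .013, close to the reported F = 5.89, p = .013; the small gap in F is plausibly due to M and SD being rounded to one decimal.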

Figure 12.

Number of eyeblinks by subject

Table 12.

Duration of eyeblinks by rate (in seconds)

| Rate | M | SD | N |
|---|---|---|---|
| Fast | .20 | .09 | 544 |
| Normal | .22 | .12 | 736 |
| Slow | .24 | .14 | 881 |
| Total | .23 | .12 | 2161 |
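Tables 11 and 12 can be cross-checked with simple arithmetic. This is a minimal sketch, assuming that every counted blink was also measured for duration and that the F(2, 2158) test was a one-way ANOVA over individual blinks: the mean number of blinks per signer times six signers should recover the N column of Table 12, and N minus the three rate groups should give the error degrees of freedom.

```python
# Table 11: mean number of eyeblinks per signer at each rate (n = 6 signers)
mean_blinks_per_signer = {"Fast": 90.7, "Normal": 122.7, "Slow": 146.8}
N_SIGNERS = 6

# Table 12: number of eyeblink durations measured at each rate (N)
blinks_measured = {"Fast": 544, "Normal": 736, "Slow": 881}

# Mean count x number of signers should reproduce the number of measured blinks
implied_totals = {rate: round(m * N_SIGNERS) for rate, m in mean_blinks_per_signer.items()}
for rate, measured in blinks_measured.items():
    print(rate, implied_totals[rate], measured)

total_blinks = sum(blinks_measured.values())
k_rates = len(blinks_measured)
df_within = total_blinks - k_rates  # error df for a one-way ANOVA over individual blinks
print(total_blinks, df_within)  # 2161 2158
```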

Figure 13.

Eyeblink duration by signer. Subjects differ widely in their baseline eyeblink duration (Normal condition), but all produced longer eyeblinks in the Slow condition than in the other two conditions. Signer 1 dramatically shortened eyeblink duration in the Fast condition, as did Signers 3 and 6 to a lesser extent.

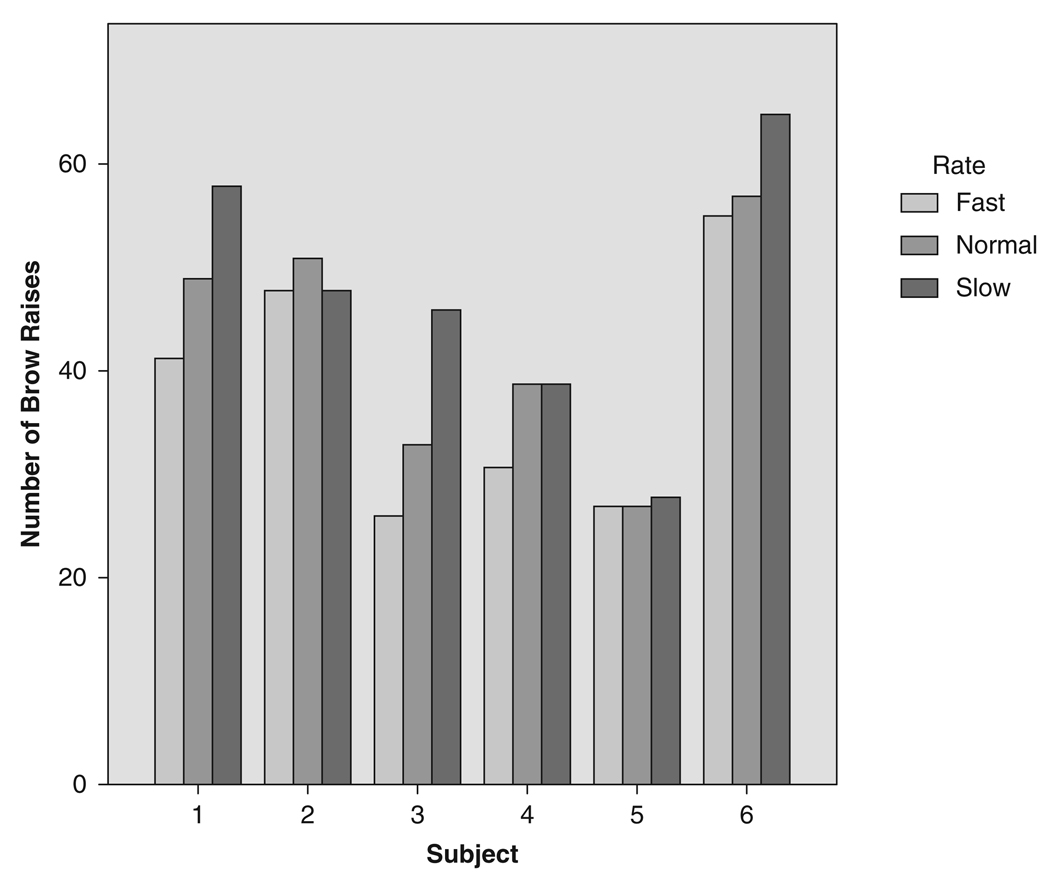

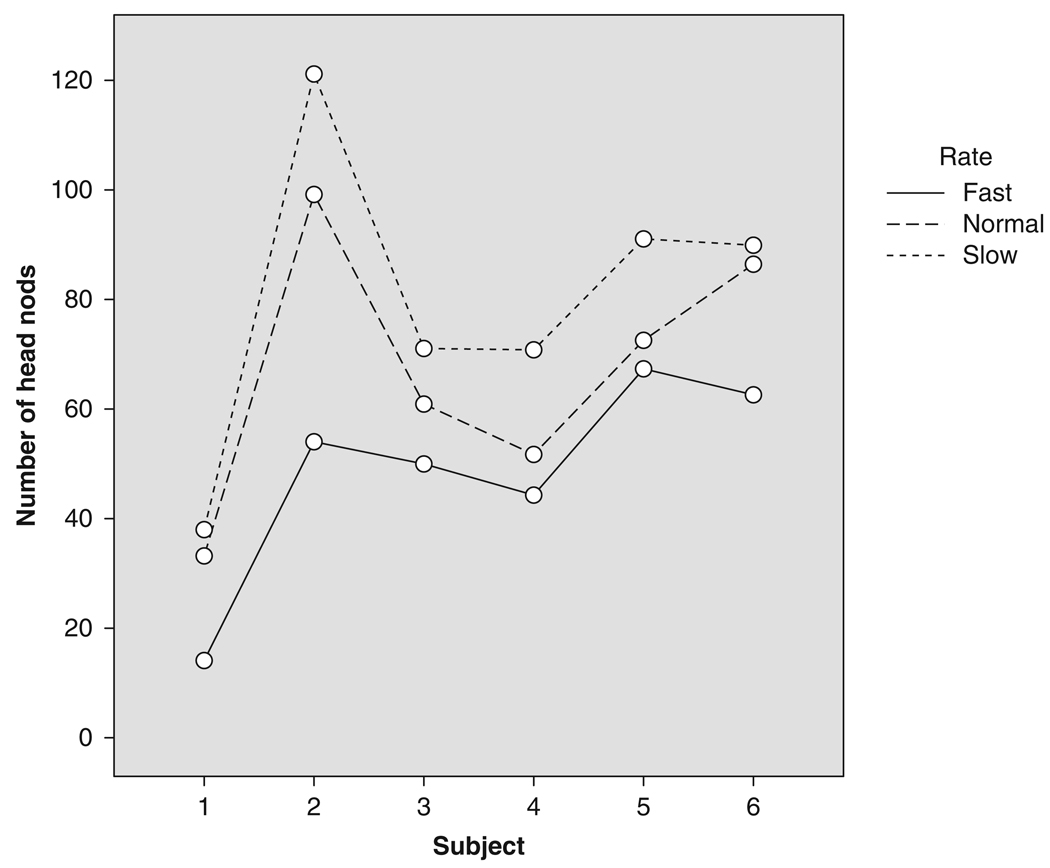

3.6.6 Head nods

The number of head nods differed significantly with Rate, F(2, 36) = 6.8, p = .003 (Table 13), and with Subject, F(5, 36) = 6.67, p < .001 (Figure 14). Post-hoc testing (LSD) indicated that Subject 1 produced significantly fewer head nods than all other subjects, and that Subject 2 produced significantly more head nods than Subjects 1, 3, and 4. There were no other significant differences or interactions.
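The reported degrees of freedom can be reconstructed as a consistency check. This sketch assumes a fully crossed Rate × Subject ANOVA that includes the interaction term, with one head-nod count per story in each cell (three stories, consistent with n = 18 per rate in Table 13); these design details are inferred rather than stated in this section.

$$
\begin{aligned}
N &= 6\ \text{signers} \times 3\ \text{rates} \times 3\ \text{stories} = 54 \\
df_{\text{Rate}} &= 3 - 1 = 2, \qquad df_{\text{Subject}} = 6 - 1 = 5, \qquad df_{\text{Rate} \times \text{Subject}} = 2 \times 5 = 10 \\
df_{\text{error}} &= N - (6 \times 3) = 54 - 18 = 36
\end{aligned}
$$

Without the interaction term the error df would be 54 − 1 − 2 − 5 = 46, so the reported F(2, 36) and F(5, 36) fit a model in which the interaction was estimated.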

Table 13.

Number of head nods by rate

| Rate | Total N | M | SD | n |
|---|---|---|---|---|
| Fast | 292 | 16.2 | 7.9 | 18 |
| Normal | 403 | 22.4 | 10.3 | 18 |
| Slow | 482 | 26.8 | 12.5 | 18 |
| Total | 1177 | 21.8 | 11.1 | 54 |
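The M column of Table 13 follows from the Total N and n columns. A small sketch, assuming (as the n of 18 suggests) that each rate contributes one count per signer per story:

```python
# Table 13: total head nods at each rate
total_nods = {"Fast": 292, "Normal": 403, "Slow": 482}

# Assumed design: 6 signers x 3 stories = 18 observations per rate
N_SIGNERS, N_STORIES = 6, 3
n_per_rate = N_SIGNERS * N_STORIES

# Mean nods per observation, rounded to one decimal as in the table
means = {rate: round(total / n_per_rate, 1) for rate, total in total_nods.items()}
grand_total = sum(total_nods.values())
grand_mean = round(grand_total / (n_per_rate * len(total_nods)), 1)

print(means)  # {'Fast': 16.2, 'Normal': 22.4, 'Slow': 26.8}
print(grand_total, grand_mean)  # 1177 21.8
```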

Figure 14.

Head nods by subject

3.7 Summary of results

In this study, signers produced three significantly different signing rates, measured in signs per second. Subjects were remarkably consistent in the number of signs they produced across the four trials of each story at each rate, although that number differed by subject, by story, and by rate. Thus, while there was significant intersubject variability, there was relatively little within-subject variability in the number of signs produced. Rate had a significant main effect on sign duration, pause number and pause duration, brow raise duration, licensed lowered brow duration, the number and duration of eyeblinks, and the number of head nods. This indicates that signers produced their different signing rates not by making dramatic changes in the number of signs but by varying sign duration. Consistent with Grosjean’s earlier work, we also found that pause number and pause duration were adjusted along with signing rate. The nonmanuals studied here occur on or after the signs themselves. As signing rate increased, the duration of three of the measured nonmanuals decreased: brow raise, lowered brow (licensed only), and eyeblink. The number of blinks also decreased with increased signing rate. In contrast, unlicensed affective/lexical lowered brows did not vary significantly in number or duration with signing rate, and their duration was about twice that of licensed brow lowering. This pattern suggests that signed prosody, like that of speech, is an integrated, multivariate system of units that can be regulated in production across contexts in which the system is stressed to a greater or lesser degree by changes in signing rate.
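The trade-off described in this summary can be written as a simple identity. As a sketch, assume that signing rate r is the number of signs N divided by total production time T, and that T decomposes into sign durations d_i, pause durations p_j, and a residual T_other (for example, transitions between signs); this decomposition is an illustrative assumption, not the study's stated measurement procedure.

$$
r = \frac{N}{T}, \qquad T = \sum_{i=1}^{N} d_i + \sum_{j=1}^{P} p_j + T_{\text{other}}
$$

With N changing little across rates, r can rise only if T shrinks, that is, through shorter signs and fewer or shorter pauses, which matches the pattern reported above.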

4 Discussion and conclusion

This study confirms that signers systematically modulate the prosodic characteristics of their production as a function of signing rate. To increase signing rate, signers do not simply omit signs. This contrasts with the widespread sign omission observed when signers try to speed up in order to sign and speak at the same time (simultaneous communication with signed English) (Wilbur & Petersen, 1998).

When changing signing rates, the signers in this study did adjust sign duration, the number of pauses, and pause duration, in accordance with previous observations (Grosjean, 1978, 1979). Nonmanuals are also affected by changes in signing rate, indicating that they are part of the language planning and production process. These results can be brought to bear on three issues: (1) the difference between grammatical nonmanuals and affective facial expressions; (2) the fact that nonmanuals are not just a general modality effect but are language specific; and (3) the question of how best to characterize nonmanuals in ASL grammar.

4.1 The difference between grammatical nonmanuals and affective facial expressions

The data from this study that bear on the difference between grammatical (syntactic-semantic) and non-grammatical (lexical, affective) nonmanuals are those for lowered brows. As observed, occurrences in the Fingerspelling and Cars stories are licensed by overt and covert [wh]-meaning (henceforth, wh-operator). In contrast, lowered brows in the Knit story may be associated with the effort of struggling to learn to knit. We could say that the lowered brows on STRUGGLE, BEAR-DOWN, and other signs in that story are supported by the lexical items, but the presence or absence of such lowered brows is clearly not distinctive (there is no minimal pair of signs differing only in whether they have lowered brows). The number and duration of these lowered brows contrast sharply with those of lowered brows licensed by the semantic wh-operator. We can therefore conclude that grammatically licensed lowered brows are integrated into the overall prosodic/rhythmic structure and are adjusted along with other prosodic variables as signing rate changes, whereas those that are not grammatically licensed operate independently of the other prosodic adjustments being made. Although the distinction between grammatical and affective facial expressions has long been recognized, this is the first study to show that they behave differently with respect to prosody.

4.2 Nonmanuals are not a general modality effect

Another way to evaluate the results obtained here is to compare them with facial usage in signed English (SE). If nonmanuals were simply a result of the modality of perception and production (a visual signal), then we might expect any kind of signing, including artificially created systems such as classroom sign systems and not just naturally evolved languages like ASL, to develop its own patterns of facial usage, even if unconsciously. In one study, Mallery-Ruganis and Fischer (1991) identified the inappropriate use of facial behaviors as the primary factor reducing effective message transmission in simultaneous communication (speaking and signing English at the same time). Another study compared SE with and without speech produced by two groups of fluent signers: one group had known ASL since birth and used SE daily for professional purposes, and the other did not know ASL and had used SE daily for at least ten years (Wilbur & Petersen, 1998). The ASL-using group produced raised brows on 47 of 48 yes/no questions while signing SE, whereas the non-ASL group did so on only 9 of 48. Furthermore, the non-ASL group incorrectly produced lowered brows on 26 of 48 yes/no questions. This demonstrates that raised brows are neither an automatic result of the pragmatics or function of asking a question (the “universal questioning face hypothesis”) nor a modality effect (under which all SLs would use brow raise for yes/no questions, which is not the case; Šarac et al., 2007).

4.3 How do the current data contribute to our understanding of the best way to characterize nonmanuals?

Weast (2008) notes that the debate has focused on two mutually exclusive options: syntactic or intonational explanations. She proposes an expanded theory in which both can be correct, such that one eyebrow movement can convey syntax, grammatical intonation, and emotional intonation. To reconsider the two approaches in light of the current data, it is first necessary to identify which data are relevant. Note that Weast refers to “one eyebrow movement.” This is because the relevant data must be those that cover a domain, rather than those that mark the edges of domains. Because sign duration (phrase-final lengthening), pausing, eyeblinking, and frequently head nodding all function as markers of phrase ends, they cannot contribute to this discussion. That leaves only brow raise and lowered brow among the current measures (although other potentially relevant nonmanual articulations include mouth postures, cheek positions, eye gaze, and head tilt, which are not addressed here). What accounts for the presence of raised or lowered brows, the domain over which the position is held, and the observed effects of signing rate?

As mentioned earlier, the leading candidate for an intonational analogue in sign languages is the system of nonmanuals. Sandler and colleagues (Nespor & Sandler, 1999; Sandler, 1999; Sandler & Lillo-Martin, 2006) have suggested that some nonmanuals are intonational “tunes,” although they use the neutral term “superarticulation.” For example, the presence of brow raise can mark the distinction between a question and a statement in much the same way that spoken language interrogatives may be marked with rising intonation. Such a proposal raises the question of what is meant by “intonation” in spoken languages.

Ladd (1980) argued strongly for a perspective on intonation that recognizes an intonational lexicon, from which speakers choose appropriate intonational morphemes according to contextual demands and the meanings to be conveyed. He observes that intonation differs from other components of language in its phonetic substance, focusing primarily on the values and changes of fundamental frequency (f0), which is not a major distinctive feature of consonants and vowels. If nonmanuals carry intonation in signed languages, then, like its spoken language counterpart, signed intonation differs from the rest of signing in its phonetic substance: intonational information is carried not on the hands but on the head, face, and body. However, to fit Ladd’s definition, we would have to show that nonmanuals are indeed intonational morphemes, each carrying its own meaning.

More recent approaches to spoken language intonation have taken a compositional perspective in which intonation patterns have internal structure (Beckman & Pierrehumbert, 1986; Ladd, 1996; Pierrehumbert & Hirschberg, 1990; Selkirk, 1995). The theory of IP posited that intonational patterns contain three components per phrase (pitch accent, phrase accent, and boundary tone) and that only these pitch events are specified for a phrase. The intonation pattern may span a single word in a one-word phrase or many words in a longer phrase. Such a model differs from older conceptions of intonation patterns as meaning-carrying global pitch contours that are squeezed or stretched across sentences. Instead, target pitches are specified at landmarks, and the transitions between them are interpolated (i.e., filled in with whatever is needed to get from one specified pitch to the next). The pitch accent is located on the most prominent syllable in the phrase; the boundary tone is located at the end of the phrase. The phrase accent may function as a boundary tone on a smaller intonational domain, the intermediate phrase (Beckman & Pierrehumbert, 1986). In conjunction with this compositional approach to form, Pierrehumbert and Hirschberg (1990) outline a compositional theory of intonational meaning. They suggest that speakers choose an intonational contour to convey the relationship between current, previous, and subsequent utterances, and between the content of the current utterance and the speaker’s assessment of which beliefs are mutually held with the listener. Specifically, they argue:
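The target-and-interpolation idea can be illustrated with a small sketch. It assumes straight-line interpolation between targets and a flat contour before the first and after the last target; both are simplifications chosen for clarity, not claims about any published model. The target times and f0 values, labeled with ToBI-style H*, L-, and H%, are hypothetical.

```python
from bisect import bisect_right


def interpolate_contour(targets, times):
    """Linearly interpolate f0 between (time_s, f0_hz) targets; hold flat outside them."""
    targets = sorted(targets)
    xs = [t for t, _ in targets]
    ys = [f for _, f in targets]
    contour = []
    for t in times:
        if t <= xs[0]:
            contour.append(ys[0])  # before the first target: hold its value
        elif t >= xs[-1]:
            contour.append(ys[-1])  # after the last target: hold its value
        else:
            i = bisect_right(xs, t)  # guarantees xs[i - 1] <= t < xs[i]
            x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
            contour.append(y0 + (y1 - y0) * (t - x0) / (x1 - x0))
    return contour


# Hypothetical targets: pitch accent H* at 0.3 s, phrase accent L- at 0.9 s,
# boundary tone H% at 1.2 s (times in seconds, f0 in Hz)
targets = [(0.3, 220.0), (0.9, 160.0), (1.2, 200.0)]
contour = interpolate_contour(targets, [0.0, 0.6, 0.9, 1.05, 1.5])
print([round(v, 1) for v in contour])  # [220.0, 190.0, 160.0, 180.0, 200.0]
```

Only the three targets are stored; every other f0 value is derived from them, which is the sense in which the phrase-level pattern is specified at landmarks rather than as a global contour.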

pitch accents convey information about the status of discourse referents, modifiers, predicates, and relationships specified by accented lexical items. Phrase accents convey information about the relatedness of intermediate phrases – in particular, whether … one intermediate phrase is to form part of the larger interpretative unit with another. Boundary tones convey information about the directionality of interpretation for the current intonational phrase – whether it is “forward-looking” or not. (1990, p. 308)

Following this analysis, Sandler and Lillo-Martin (2006) suggest that nonmanuals (excluding lexical and adverbial ones) form an intonation system. Because nonmanuals are layered and can co-occur, each contributes to the overall facial pattern, which Sandler and Lillo-Martin liken to the combination of tunes in an intonational pattern. They cite Dachkovsky (2004), who proposes that brow raise carries the meaning “prediction”: the part marked with brow raise will be followed by relevant “information or consequence”; in other words, it is “forward-looking.” Other nonmanuals contribute additional meanings to create a full (and simultaneous) intonation pattern. Furthermore, Sandler and Lillo-Martin argue that the use of a nonmanual depends on factors other than syntax, such as “pragmatic force and sociolinguistic contextual information.” For them, then, the brow lowering associated with wh-questions is related to the discourse function of content questions rather than to the presence of a syntactic [wh] indicator. They note that sentences that are syntactically wh-questions may carry different nonmanuals if the pragmatic intent is not that of a wh-question.