Abstract

In adults, specific neural systems with right-hemispheric weighting are necessary to process pitch, melody, and harmony as well as structure and meaning emerging from musical sequences. It is not known to what extent the specialization of these systems results from long-term exposure to music or from neurobiological constraints. One way to address this question is to examine how these systems function at birth, when auditory experience is minimal. We used functional MRI to measure brain activity in 1- to 3-day-old newborns while they heard excerpts of Western tonal music and altered versions of the same excerpts. Altered versions either included changes of the tonal key or were permanently dissonant. Music evoked predominantly right-hemispheric activations in primary and higher order auditory cortex. During presentation of the altered excerpts, hemodynamic responses were significantly reduced in the right auditory cortex, and activations emerged in the left inferior frontal cortex and limbic structures. These results demonstrate that the infant brain shows a hemispheric specialization in processing music as early as the first postnatal hours. Results also indicate that the neural architecture underlying music processing in newborns is sensitive to changes in tonal key as well as to differences in consonance and dissonance.

Keywords: auditory cortex, functional MRI, neonates, emotion

Music is a cultural phenomenon and an art; in recent years, however, it has also become a fruitful research tool in numerous fields of cognitive neuroscience, such as auditory perception (1), learning and memory (2), brain plasticity (3), sensorimotor processing (4), and the mirror neuron system (5). Consistent results have described brain networks for music processing in adult nonmusicians that involve the superior temporal gyrus and inferior frontal and parietal areas, with a dominance of the right over the left hemisphere (1). These neural networks may be intrinsically related to the emergence of musical abilities in humans. It is, however, difficult to determine to what extent music processing skills, as observed in adults, result from an adaptation of the brain to the musical environment or from biological constraints that lead, with normal experience, to the typical trajectory of brain development. One way to address this issue is to study how the brain processes musical stimuli at a point where exposure to music has been minimal and presumably not sufficient to induce major shaping of the processing networks. We used functional MRI (fMRI) to investigate the neural correlates of music processing in neonates. In recent years, fMRI has been used successfully with pediatric and healthy infant populations (6–8), proving to be a noninvasive and reliable technique that yields valuable information about brain development. The babies who participated in the study had only just begun to hear music outside the uterine environment, allowing observation of the early developmental stages of a capacity that plays an important role in emotional, cognitive, and social development from the first days of life (9).

Music perception relies on sophisticated cognitive skills for the decoding of pitch, rhythm, and timbre and for the processing of sequential elements that form hierarchical structures and convey emotional expression and meaning. Despite the complexity of such cognitive operations, mounting evidence indicates that newborns and young infants are highly sensitive to musical information. Music modulates infants’ attention and arousal levels (10) and evokes pleasure or discomfort. Infants with casual exposure to music are able to process pitch and tempo relationally, to differentiate consonant from dissonant intervals, and to detect variations in rhythm, meter, timbre, and tempo as well as in the duration of tones and musical phrases (12, 13). These musical competences of infants play a crucial role in early language learning, because the processing of speech prosody (e.g., speech melody, speech rhythm) provides important cues for the identification of syllables, words, and phrases (14–16). Neurophysiological studies using near-infrared optical topography (17) and magnetoencephalography (18) in 3-month-old infants showed more prominent activation to normal speech sounds than to flattened speech sounds in right temporoparietal regions. This suggests that processing the slowly changing melodic components of natural speech relies on right-hemispheric neural resources that are already engaged at 3 months of age. Despite this evidence for the importance of musical competences in early childhood, the neural basis of music processing in infants has remained elusive, and no previous study has investigated music processing in neonates with fMRI.

The present study addresses this issue, using excerpts of classical music pieces and counterparts of these excerpts that varied in their syntactic properties and in their degree of consonance and dissonance. The control conditions were chosen so as to be acoustically closely matched to the original stimuli and still musical. This allowed a more precise interpretation of any result observed in the original music condition. In other words, we asked whether the newborn brain would be sensitive to subtle variations of the musical stimuli or whether it would respond indifferently to any music-like stimulus.

Using fMRI, we obtained data from 18 healthy full-term newborns within the first 3 days of life. Babies heard 21-s musical stimuli alternating with 21-s blocks of silence (Fig. 1B). Three sets of musical stimuli were used (Fig. 1A): Set 1 (“original music”) consisted of original instrumental (piano) excerpts drawn from the corpus of major-minor tonal (“Western”) music of the 18th and 19th centuries. This first experimental condition addressed a basic question about the neural network involved in music processing. From these original excerpts, two further sets (altered music: sets 2 and 3) were created. In set 2 (key shifts), all voices were occasionally shifted one semitone up- or downward, thereby moving the tonal center to a key that was only distantly related harmonically to the preceding context (e.g., from C major to C# major). Recognizing such key changes requires listeners to sequence the musical information, to establish and maintain a representation of a tonal center from the different tones of a musical passage, and to notice when this representation differs from the tonal center of incoming musical information (19, 20). The extraction of a tonal center is a basis for the music-syntactic processing of harmonies (19, 20), and processing changes of the tonal center involves prefrontal cortical areas, particularly the pars opercularis of the inferior frontolateral cortex (in the left hemisphere, part of Broca’s area), ventrolateral premotor cortex, and dorsolateral prefrontal cortex in both adults and 10-year-old children (21, 22). Notably, the inferior frontolateral cortex has been shown to serve the processing of syntax in both music and language, although with right-hemispheric weighting in the music domain (21–23) and left-hemispheric weighting in the language domain (24, 25).
We aimed at testing whether the brains of newborns are already sensitive to changes in tonal key and which brain areas would be involved in newborns for the processing of key changes.
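Why a semitone shift yields a "distantly related" key can be made concrete by counting the pitch classes two major scales share. The sketch below is a crude proxy chosen for illustration (it ignores harmony, voice leading, and tonal hierarchies) and is not a measure used in this study: C major and C# major share only two pitch classes, whereas C major and its close neighbor G major share six.

```python
# Pitch classes: C=0, C#=1, ..., B=11
MAJOR_STEPS = (0, 2, 4, 5, 7, 9, 11)  # major-scale intervals above the tonic


def major_scale(tonic: int) -> set[int]:
    """Return the pitch-class set of the major scale on `tonic` (0-11)."""
    return {(tonic + step) % 12 for step in MAJOR_STEPS}


def shared_pitch_classes(tonic_a: int, tonic_b: int) -> int:
    """Count pitch classes common to two major scales (a crude key-distance proxy)."""
    return len(major_scale(tonic_a) & major_scale(tonic_b))


def transpose(pitches: list[int], semitones: int) -> list[int]:
    """Shift every voice (MIDI note numbers) by the same number of semitones."""
    return [p + semitones for p in pitches]


if __name__ == "__main__":
    print(transpose([60, 64, 67], +1))   # C4 E4 G4 -> [61, 65, 68]
    print(shared_pitch_classes(0, 1))    # C major vs C# major: 2
    print(shared_pitch_classes(0, 7))    # C major vs G major: 6
```

Because every voice moves together, the intervals inside each chord are unchanged; only the relation to the previously established key is disrupted.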

Fig. 1.

Examples of stimuli and scanning paradigm. (A) Fragments illustrating the three sets of stimuli: original music, altered music: key shifts, and altered music: dissonance. (B) Experimental paradigm.

In set 3 (dissonance), the upper voice (i.e., melody) of the musical excerpts was permanently shifted one semitone upward, rendering the excerpts permanently dissonant [similar to previous studies (26, 27)]. Dissonance and consonance are basic perceptual properties of tones sounding together, and the perception of consonance and dissonance relies on properties of the auditory pathway, presumably independent of extended auditory experience (11, 28, 29). Particularly in Western listeners, consonant tone combinations are perceived as more pleasant than permanently dissonant ones (26, 27, 29), and 2-month-old infants already show a preference for consonance over dissonance (11, 30, 31). We used the dissonant stimuli of set 3 to investigate whether neonates’ brains are sensitive to dissonance.
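The effect of shifting only the melody can be illustrated by counting dissonant intervals between the top voice and each voice below it. The classification used here is a simplified, context-free assumption (seconds, tritone, and sevenths, modulo the octave, count as dissonant; the perfect fourth counts as consonant) and is not the acoustic analysis reported in the SI Text.

```python
# Interval classes (semitones modulo 12) treated as dissonant in this sketch.
# Simplifying assumption: the perfect fourth (5) is counted as consonant.
DISSONANT = {1, 2, 6, 10, 11}


def dissonant_pairs(chord: list[int]) -> int:
    """Count dissonant intervals between the top voice and each lower voice.

    `chord` lists MIDI note numbers from lowest to highest; the last is the melody.
    """
    *lower, melody = chord
    return sum(1 for note in lower if (melody - note) % 12 in DISSONANT)


def shift_melody(chord: list[int], semitones: int = 1) -> list[int]:
    """Return a copy of the chord with only the top voice shifted."""
    return chord[:-1] + [chord[-1] + semitones]


if __name__ == "__main__":
    chord = [60, 64, 67, 72]                     # C major triad, melody on C5
    print(dissonant_pairs(chord))                # 0
    print(dissonant_pairs(shift_melody(chord)))  # 2 (C#5 against C4 and G4)
```

Because the melody is displaced against an unchanged accompaniment at every chord, such clashes recur throughout the excerpt rather than at isolated points, which is what makes the altered excerpts permanently dissonant.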

Stimulus sets 2 and 3 were also used to investigate neural correlates of emotional responses in newborns. In addition to studies showing that consonant music is usually perceived as more pleasant than permanently dissonant music, previous work with adults has shown that unexpected chord functions and changes in tonal key elicit emotional responses in listeners (32, 33). Up to now, however, the neural origins of human emotion in early life have remained unknown, and the knowledge about emotional processes obtained from adults cannot be extended to children, because the processes underlying the generation of emotions are heavily shaped by lifelong experiences. Studies investigating emotion using musical stimuli in adults revealed that music-evoked emotions involve core structures of emotional processing, such as the amygdala, nucleus accumbens, and orbitofrontal cortex (26, 27, 34). Our study also aimed at exploring the early sensitivity of such core structures of emotional processing, and the specificity of their responses, to original and altered music stimuli.

Note that all three stimulus sets were identical with regard to tempo, meter, rhythm, timbre, and contour and that the stimulus alterations (key shifts and dissonance) did not turn the original music into disorganized noise. Note also that newborns had little or no exposure to music except for the heavily filtered sounds reaching the fetus in utero (35). Thus, if the original music and the stimulus alterations elicit specific brain activity in newborns, this would also indicate predispositions for the processing of these musical features that are largely independent of culture-specific adaptations.

The present study uses fMRI to explore brain specialization for music processing in newborns with minimal exposure to external auditory stimuli.

Results

The main effect of original music (set 1) vs. silence showed an extended right-hemispheric activation cluster centered on the superior temporal gyrus, with its peak in the primary auditory cortex (transverse temporal gyrus), extending into the secondary auditory cortex, anteriorly into the planum polare, and posteriorly into the planum temporale, temporoparietal junction, and inferior parietal lobule (Fig. 2A). In the left hemisphere, activation of the primary and secondary auditory cortices was weaker than in the right hemisphere (Fig. 2A); this observation was confirmed by region of interest (ROI) analysis (see below and Fig. 4). In addition, activation was observed in the right insula and the right amygdala-hippocampal complex (see Fig. 3).

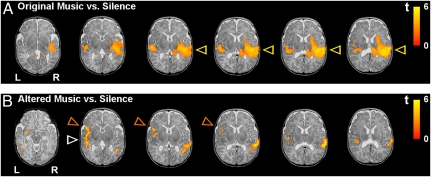

Fig. 2.

Activations elicited by the musical stimuli in newborns (n = 18, random effects group analyses, false discovery rate corrected; P < 0.0002 at the voxel level and P < 0.05 at the cluster level) overlaid on a T2-weighted image from a single newborn subject (note that the spatial resolution of the functional group data is lower than that of the anatomical image). (A) Mean activations for original music vs. silence are shown for six axial slices. Note the right-hemispheric predominance of temporal activation (yellow arrows). (B) Mean activations for altered music (key shifts and dissonance pooled) vs. silence. Note the left-hemispheric activation in the inferior frontal gyrus (orange arrows) and the reduced activation in the right temporal lobe (compared with the contrast of original music vs. silence, white arrow). (Details are provided in Materials and Methods.)

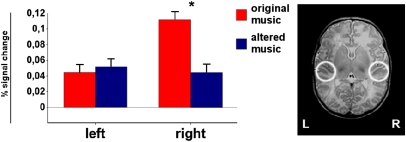

Fig. 4.

ROI analysis. Changes in activation for original music and altered music (key shifts and dissonance pooled) in primary and secondary auditory cortices as measured within spherical ROIs. (Right) ROIs on a T2-weighted image of a single newborn subject. The histograms show the percent signal change measured in each ROI during each of the two stimulus types (original music and altered music). Error bars indicate SEM.

Fig. 3.

Direct contrast of original music vs. altered music in healthy newborns (n = 18, random effects group analysis; P < 0.05 at the voxel level, uncorrected) overlaid on a T2-weighted image from a single newborn (note that the spatial resolution of the functional group data is lower compared with the anatomical image). Regions more active for original music are shown in orange/yellow, and regions more active for altered music are shown in blue. Two axial slices show a stronger activation of the left inferior frontal gyrus in response to altered music. The slices also show a stronger activation of (posterior) auditory cortex in response to original music. The two coronal slices show activation of the left amygdala-hippocampal complex (and of the ventral striatum) for altered music and activation of the right amygdala-hippocampal complex for original music. The two sagittal slices show the larger right superior temporal activation for original music.

We then examined the differences in activation between each of the two altered conditions and silence: key shifts (set 2) vs. silence and dissonance (set 3) vs. silence. Both comparisons showed very similar patterns, namely, less extended activation in the right temporal regions (compared with the main effect of original music vs. silence) and, instead, left-hemispheric activation clusters in the superior and middle temporal regions (including primary auditory cortex), inferior frontal gyrus, amygdala, and ventral striatum (see Fig. 2B for pooled datasets). The direct comparison between the two altered conditions showed no significant differences in activation, except that blood oxygenation level-dependent (BOLD) signal changes were stronger during the key shifts (set 2) in the left amygdala and ventral striatum. Because sets 2 and 3 elicited essentially identical activation patterns, their data were pooled, yielding an equal number of scans for subsequent comparisons between altered music (sets 2 and 3 pooled) and silence and between original music (set 1) and altered music (details provided in Materials and Methods). The main effect of altered music (sets 2 and 3 pooled) vs. silence (Fig. 2B) illustrates that the right-hemispheric activation of the primary auditory cortex and posterior superior temporal gyrus (which was observed up to the temporoparietal junction) was less pronounced than in the main effect of original music vs. silence (Fig. 2A). The main effect of altered music vs. silence also shows activation of the left inferior frontal gyrus (which was not observed in the comparison of original music vs. silence) and activation of the left primary auditory cortex, which was comparable to that observed in the effect of original music vs. silence (see Fig. 3 for the direct contrast between original music and altered music).

The direct contrast of original music vs. altered music shows areas with significantly stronger hemodynamic responses for original music (P < 0.05, voxel level, uncorrected for cluster extent) in the superior and posterior part of the right auditory cortex and in the right amygdala-hippocampal complex (Fig. 3). Areas with stronger hemodynamic responses for altered music were observed in the left inferior frontal gyrus and the left amygdala-hippocampal complex (a more precise identification of these medial temporal lobe structures, given their small size, exceeds the spatial resolution of fMRI at 1.5 T).
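The maps in Fig. 2 were false discovery rate (FDR) corrected, whereas the direct contrast above is reported at an uncorrected voxel threshold. As a sketch of what FDR correction does, the Benjamini–Hochberg step-up procedure below operates on a vector of voxelwise P values. It illustrates the general idea only; the exact FDR implementation and the cluster-level criterion used for these maps are not reproduced here.

```python
import numpy as np


def benjamini_hochberg(p_values, q: float = 0.05) -> np.ndarray:
    """Return a boolean mask of tests declared significant at FDR level q.

    Step-up rule: sort the m P values, find the largest rank k with
    p_(k) <= k * q / m, and reject the null for every test ranked <= k.
    """
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)                      # indices from smallest to largest P
    thresholds = q * np.arange(1, m + 1) / m   # rank-dependent cutoffs
    below = p[order] <= thresholds
    mask = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()         # largest passing rank (0-based)
        mask[order[: k + 1]] = True
    return mask
```

A P value can be declared significant even if it exceeds its own rank threshold, as long as a larger P value passes; this step-up property distinguishes the procedure from a simple per-rank cutoff.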

To address specifically the level of activity in the right and left primary and secondary auditory cortices, we conducted an ROI analysis on subjects’ average percent signal change for original music and altered music (Fig. 4 and Materials and Methods). An ANOVA on these ROI values with the factors hemisphere and stimulus type (two levels: set 1 and pooled sets 2 and 3) revealed a significant interaction between hemisphere and stimulus type (F = 4.74, P = 0.03). This interaction was due to the difference in hemodynamic response between the two stimulus types in the right hemisphere, with stronger activation for original music than for altered music (Tukey’s honestly significant difference test for multiple comparisons, P = 0.05). In the left auditory cortex, no difference in activation was observed between the two stimulus types.
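The ROI measures and the interaction test can be sketched as follows. A spherical mask is built in millimetre space (so the anisotropic 3.75 × 3.75 × 3 mm voxels are respected), the ROI time series is averaged, and the mean signal during a condition is expressed relative to silence. For a 2 × 2 design with both factors varied within subjects, the hemisphere × stimulus interaction reduces to a one-sample t test on the per-subject difference of differences, (right original − right altered) − (left original − left altered), with F(1, n − 1) = t². The ROI centres and radii, the use of silence as baseline, and the fully within-subject model are all assumptions; the ANOVA reported above may have been specified differently (its degrees of freedom are not stated), so this is an illustration, not a reanalysis.

```python
import numpy as np
from scipy import stats


def spherical_mask(shape, voxel_size_mm, center_vox, radius_mm):
    """Boolean mask of voxels whose centres lie within radius_mm of center_vox."""
    grids = np.indices(shape).astype(float)  # (3, X, Y, Z) voxel indices
    offsets = [(g - c) * s for g, c, s in zip(grids, center_vox, voxel_size_mm)]
    dist_mm = np.sqrt(sum(o ** 2 for o in offsets))
    return dist_mm <= radius_mm


def percent_signal_change(data, mask, condition, baseline):
    """Mean ROI signal in `condition` volumes relative to `baseline` volumes, in %.

    data: 4-D array (X, Y, Z, T); condition, baseline: boolean arrays of length T.
    """
    roi_ts = data[mask].mean(axis=0)  # average time series over ROI voxels
    base = roi_ts[baseline].mean()
    return 100.0 * (roi_ts[condition].mean() - base) / base


def interaction_test(r_orig, r_alt, l_orig, l_alt):
    """Hemisphere x stimulus interaction for a 2x2 within-subject design.

    Each argument is a 1-D array of per-subject percent signal change.
    Returns (F, df_error, p), where F is the squared one-sample t of the
    difference-of-differences contrast.
    """
    contrast = (np.asarray(r_orig) - np.asarray(r_alt)) - (
        np.asarray(l_orig) - np.asarray(l_alt))
    t, p = stats.ttest_1samp(contrast, 0.0)
    return float(t) ** 2, contrast.size - 1, float(p)
```

The two-sided P value of the t test equals the upper-tail P value of F with 1 and n − 1 degrees of freedom, so either statistic can be reported.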

Discussion

Our data show that a hemispheric functional asymmetry for music perception is present at birth. Activation with right-hemispheric dominance was observed in the primary, secondary, and higher order auditory cortices in newborns, consistent with data obtained from adult nonmusicians, especially for the processing of pitch relations (36). This right-hemispheric dominance indicates that the newborn brain responds to musical information quite specifically, because the right primary auditory cortex is particularly involved in pitch analysis and integration (i.e., in the decoding of pitch height, pitch direction, and pitch chroma) (37), a prerequisite for the processing of music. Notably, the activations extended beyond Heschl's gyrus toward the right planum polare as well as toward the planum temporale and the inferior parietal lobule. Previous functional neuroimaging studies with adults showed that the right superior temporal gyrus (including the planum polare) becomes increasingly involved with increasing melodic complexity (38) and that right-hemispheric areas of auditory cortex outside the primary zone are specialized in the processing of pitch patterns, in the encoding and recognition of melodies, and in auditory-motor transformation (39, 40). Our data show that these areas are already recruited with right-hemispheric dominance by newborns for the processing of musical information.

In adult brains, the right-hemispheric preference for music processing has been attributed to different specializations of the left and right auditory cortices for the processing of temporal and spectral aspects of acoustic stimuli (41, 42). These cortices might have evolved a complementary specialization, with the left hemisphere having better temporal resolution (crucial for speech analysis) and the right hemisphere having a better frequency resolution (required for pitch processing) (43). These functional hemispheric differences correspond to anatomical findings of volume differences in the left and right auditory cortices (41, 44).

The lateralized specialization of brain structures is not a uniquely human phenomenon, and it is not determined by specific exposure to environmental sounds (45). Gross anatomical asymmetries around the Sylvian fissure have been observed in fetuses from midgestation and in newborns (46). Recently, studies have identified a large number of genes expressed differently in the left and right perisylvian fetal cortices at different weeks of gestation, corresponding to periods of neural proliferation and migration (47, 48). These hemispheric asymmetries may result in early functional asymmetries, even before the onset of hearing (49).

The right-preponderant auditory cortex activation observed in the present study parallels the left-hemispheric auditory cortex activations shown for infants hearing speech (8). Taken together, these results demonstrate the presence of early propensities in the way the auditory nervous system processes sound (8, 50). Notably, the areas activated by hearing music were not confined to primary auditory cortices. This is reminiscent of auditory cortex activations reported for infants hearing sentences (51) and presumably implies that the complex hierarchical organization of auditory language processing is paralleled by a similar hierarchical organization of music processing very early in life.

In addition to the asymmetry of auditory cortex activation for the processing of the original music, we showed that BOLD signals were modulated by alterations of the musical stimuli. In particular, BOLD signal changes in response to the altered music (compared with the original music) were smaller in the right primary and secondary auditory cortex (Figs. 2 and 4) but larger in left-hemispheric superior temporal and inferior frontal cortices (Fig. 2B). When newborns perceived stimuli containing manipulations of the acoustic structure (i.e., dissonance) and of the music-syntactic structure (i.e., shifts of tonal key), we observed rather symmetrical activity of the primary auditory cortices (Fig. 4). These findings indicate that newborns’ neural responses to musical stimuli can be modulated by structural variations of the stimuli. Importantly, a significant difference in right auditory cortex activity was observed when original music was compared directly with altered music, that is, between two conditions in which musical stimuli were presented (Fig. 4). Thus, the right-lateralized auditory cortex activation was attributable to the specific features of the (mainly consonant and structured) original music and was not simply an unspecific response that could have been elicited by any sound. These results are in agreement with behavioral evidence from neonates and older babies showing that infants are highly sensitive to pitch alterations and that this ability is subserved by neural predispositions present from birth (52).

The pattern of activation observed for the altered music condition presumably reflects a sensitivity of the newborn brain to dissonance. Both altered sets contained a higher degree of sensory dissonance than the original music: set 3 contained more dissonant intervals than the original music, and the key shifts in set 2 also introduced a subtle sensory dissonance attributable to the semitone shift of notes (SI Text). Neuropsychological and neuroimaging studies with adults, together with behavioral studies with older babies (12), indicate that infants tested at 7 months perceive sensory dissonance similarly to adults (53), that infants prefer to listen to consonant intervals (11, 30), and that they appear to discriminate consonant and dissonant music shortly after birth (28). The perception of sensory dissonance is a function of the physical properties of auditory stimuli as well as of basic physiological and anatomical constraints, which result from the limited ability of the auditory system to resolve tones that are too close in pitch (54). This phenomenon is independent of specific experience and is observable across species (55, 56). Although the specific weighting of genetic and experiential factors in shaping early sensitivity to consonance and dissonance remains to be specified, our results indicate that the auditory cortex is sensitive to consonance and dissonance already at birth.
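How sensory dissonance depends on the frequency separation of two tones can be illustrated with Sethares’ widely used parameterization of the Plomp–Levelt roughness curve for pairs of equal-amplitude pure tones. This model is swapped in purely for illustration; it is not the auditory model used to analyse the stimuli (SI Text), and complex piano tones would require summing the curve over all pairs of partials.

```python
import math

# Constants of Sethares' fit to the Plomp-Levelt data (pure tones, unit amplitude).
B1, B2 = 3.5, 5.75
D_STAR, S1, S2 = 0.24, 0.0207, 18.96


def roughness(f1: float, f2: float) -> float:
    """Approximate sensory dissonance of two equal-amplitude pure tones (Hz)."""
    f_min = min(f1, f2)
    s = D_STAR / (S1 * f_min + S2)  # frequency-dependent scaling (critical-band width)
    x = s * abs(f2 - f1)
    return math.exp(-B1 * x) - math.exp(-B2 * x)


def equal_tempered(midi_note: int) -> float:
    """Frequency of a MIDI note in 12-tone equal temperament (A4 = 440 Hz)."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)


if __name__ == "__main__":
    c4 = equal_tempered(60)
    for name, semitones in [("minor second", 1), ("major third", 4), ("perfect fifth", 7)]:
        print(f"{name:13s} {roughness(c4, equal_tempered(60 + semitones)):.3f}")
```

Around middle C, the semitone falls near the peak of the curve while the fifth lies far out on its tail, which captures the idea that roughness arises when two tones are too close in pitch to be resolved separately.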

In addition to introducing a sensory dissonance, key shifts introduced pitches that were less congruent with the sensory memory traces established by the previous chords (Fig. S1). However, signal changes in the auditory cortex in response to the key changes do not appear to be attributable to auditory sensory memory operations related to the detection of such pitches. Key shifts led to a reduction of signal change in the auditory cortex in the right hemisphere for altered music (compared with original music), and no difference in BOLD response was observed in the left auditory cortex between altered and original music (Fig. 4). This is in contrast to functional neuroimaging data of automatic change detection in adults (57), which show increased BOLD responses in the auditory cortex in response to acoustic changes.

Another major result is that babies engaged neural resources located in the inferior frontolateral cortex of the left hemisphere in response to altered music, perhaps because the repeated changes of key in the key shifts condition and the tonal ambiguity of the chords in the dissonance condition required more left-hemispheric neural capacity for processing the acoustic relations between chords in altered music than in original music. The engagement of inferior frontolateral cortical resources in newborns is reminiscent of similar activations in adults and 10-year-old children during the processing of structurally irregular chord functions (22) and of dissonant tone clusters embedded in mainly consonant chord sequences (21). As noted previously, it is unlikely that altered music evoked auditory sensory memory activation contributing significantly to the BOLD signal changes. Therefore, the activations of the inferior frontolateral cortex during altered music apparently do not merely reflect automatic change detection. However, note that relations between chords (as well as corresponding tonal hierarchies) are established mainly on the basis of acoustical properties of musical signals such as pitch repetition and pitch similarity (20, 58, 59), and it is likely that the inferior frontolateral cortex is involved in establishing such relations (22). Also note that our results do not indicate that the infants had representations of musical scales or implicit knowledge of the key membership of tones. Notably, the activation of the posterior part of the left inferior frontal gyrus in newborns is likely to be comparable to activations of Broca's area in adults. In adults, Broca's area has been shown to be of fundamental importance for the processing of structured sequential information in language, music, and actions, and this area is critically involved in the detection of irregularities within structured sequences (60).

Finally, our data from newborns also suggest effects of music on the activity of core cerebral structures for emotional music processing, as shown in adults (61). Although these results must be interpreted with caution, a BOLD signal increase was observed in response to the original music in the right amygdala-hippocampal complex and during perception of altered music in the left hippocampal/entorhinal cortex, possibly including the amygdala (Fig. 3). For adults, consonant music has a different emotional valence than dissonant music (26, 27), and adult listeners perceive musical stimuli with unexpected changes of the tonal key as more unpleasant than musical stimuli with no key change (32, 33). Corresponding to these differences in emotional valence, previous behavioral studies in infants showed a clear preference for consonant over dissonant sounds (11, 30, 31). In the present study, the activation of these limbic structures in response to music suggests that newborns already show neural emotional responses to musical stimuli. The observed hemispheric differences in the activation of limbic structures remain to be specified.

In conclusion, our results show that a neuronal architecture serving the processing of music is already present at birth. Right-lateralized auditory cortex activity was observed for the processing of original music, indicating that neonates already show a right-hemispheric predominance for the processing of musical information. This activation was modulated by contrasting original music with altered but still musical stimuli, indicating that the response we obtained was not generically driven by auditory stimulation. Our data show that the newborn's brain is sensitive to changes in the tonal key of music and to differences in the sensory dissonance of musical signals. Such structural manipulations activated the inferior frontolateral cortex, and thus possibly Broca’s area, which becomes fundamentally important for the learning of language during later stages of development. Finally, the activity changes within limbic structures suggest that newborns engage neural resources of emotional processing in response to musical stimuli. One inherent limitation of our study is that it cannot estimate the extent to which uterine input has already shaped the neural systems at birth. The fetus perceives auditory information in the final weeks of gestation, and salient features of this input can be recognized after birth (62–64). Also, our findings do not imply that the specific response we observe when the newborn brain is exposed to musical information evolved for, and is exclusively involved in, instrumental music processing (similar responses are likely to be elicited by song, and by the melodic aspects of speech). Future studies should explore the responses at birth to auditory stimuli with different degrees of musical content, such as melodic singing, child-directed speech, nonlexical prosodic speech, and speech with minimal prosodic content.
This study demonstrates competencies of newborns for the processing of music, providing insight into the neural origins of music, a universal human capability.

Materials and Methods

Subjects.

Eighteen healthy, full-term, nonsedated newborns (8 female, Apgar score ≥ 8) within the first 3 days of life participated in the study. Gestation and birth histories were normal for all subjects. Data from 3 other newborns were not used because of large movement artifacts. The subjects’ immediate family members were mostly right-handed (88%) (65), had no history of learning disabilities or psychiatric and neurological disorders, were of monolingual Italian background (one bilingual English-Italian), and were not musicians. Mothers reported casual exposure to music during pregnancy. Parents gave written consent in accordance with the procedures approved by the Ethical Committee of the San Raffaele Scientific Institute and by the Ethics Review Board of the New and Emerging Science and Technology (NEST) project in the Sixth Framework Program (FP6) of the European Union.

Stimuli.

Three sets of stimuli were created (Fig. 1 A and B). Set 1 (music) consisted of 10 instrumental tonal music excerpts drawn from the corpus of major-minor tonal (“Western”) classical music (details are provided in SI Text). Each excerpt was 21 sec long, with an average tempo of 124 beats per minute (range: 104–144 beats per minute).

Sets 2 and 3 (altered music) were obtained by manipulating the excerpts of set 1. For set 2, all voices were shifted one semitone higher or lower at the end of cadences. For set 3, the leading voice was shifted one semitone higher. The excerpts were created starting from a Musical Instrument Digital Interface sequencer (Rosegarden4) with piano samples (Yamaha Disklavier Pro) and then digitally recorded with a low-latency audio-server (Jack Audio Connection Kit) and a multichannel digital recorder (Ardour Digital Audio Workstation) on a Linux platform (DebianGNU/Linux) with a real-time kernel module. Stimuli were presented via an xmms audio-player and fed as optical inputs into an audio-control unit (DAP Center Mark II, MR Confon) connected to MRI-compatible headphones (MR Confon) (Fig. 1). Auditory modeling was conducted on the stimuli to estimate the degree of acoustical deviance introduced by the changes of tonal key in the excerpts with key change (SI Text).
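The two manipulations can be expressed as operations on a list of note events, as one might apply them to a MIDI sequence before rendering. This is a hypothetical sketch: the `Note` event format, the voice labels, and the list of shift points are assumptions, the source does not describe a scripted procedure, and the sketch further assumes that each key shift stays in force until the next shift point.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Note:
    onset: float  # seconds from excerpt start
    pitch: int    # MIDI note number
    voice: str    # hypothetical label, e.g. "melody", "alto", "bass"


def key_shift(notes, shift_points):
    """Set-2-style alteration: transpose ALL voices from each shift point on.

    shift_points: list of (time_s, semitones) pairs, e.g. [(5.2, +1), (12.8, -1)].
    Offsets accumulate (an assumption), so each shift is relative to the new key.
    """
    points = sorted(shift_points)

    def offset_at(t):
        return sum(delta for time, delta in points if time <= t)

    return [replace(n, pitch=n.pitch + offset_at(n.onset)) for n in notes]


def dissonant_melody(notes, melody_voice="melody", semitones=1):
    """Set-3-style alteration: shift only the melody voice throughout."""
    return [replace(n, pitch=n.pitch + semitones) if n.voice == melody_voice else n
            for n in notes]
```

Both functions leave onsets, and therefore tempo, meter, and rhythm, untouched, mirroring the constraint that the three stimulus sets differ only in pitch content.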

Imaging Protocol.

MRI scans were acquired on a Philips 1.5-T Intera scanner (Philips Medical Systems) that was certified by the European Union for use on all age groups.

fMRI was performed using an optimized echoplanar imaging (EPI) gradient echo pulse sequence with the following acquisition parameters: repetition time (TR)/echo time (TE) = 3,000/40 msec, 3.75 × 3.75 × 3 mm voxel size, 23 slices for each volume, and 140 volumes for each scan. T2-weighted clinical images were reviewed by a licensed pediatric neuroradiologist (C.B.).

Procedure.

When infants were quiet, they were swaddled in a blanket and placed in a custom-made cradle that fit inside the head coil. Infants were fitted with headphones, with a foam pad around their heads for additional noise dampening. Infants’ behavior during scanning was monitored by a camera and a microphone placed inside the magnet bore. All the infants slept naturally through most of the experiment. Scanning was interrupted if the infants became restless.

Sounds were presented via piezoelectric, European Union-certified, MRI-compatible headphones custom-made to fit newborns’ ears and incorporating an active gradient noise-suppression mechanism in addition to passive deadening, leading to noise reduction on the order of 30–40 dB above 600 Hz (MR Confon). With noise reduction, the scanner noise had an intensity of about 66 dB sound pressure level (SPL) at the eardrum of the infant. The sound presentation was adjusted to a comfortable volume level (about 84 dB SPL) allowing the music to be clearly audible above residual scanner noise.
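As a rough worked check of the levels quoted above: a level difference ΔL in decibels corresponds to an intensity ratio of 10^(ΔL/10) and a sound-pressure ratio of 10^(ΔL/20). Taking the approximate broadband values of 84 dB SPL for the music and 66 dB SPL for the residual scanner noise, the 18-dB margin is roughly a 63-fold intensity ratio and an 8-fold pressure ratio. This simple calculation ignores spectral overlap between music and noise, which also affects audibility.

```python
def level_margin(signal_db: float, noise_db: float) -> dict:
    """Convert the difference between two sound levels (dB) into ratios."""
    diff = signal_db - noise_db
    return {
        "difference_db": diff,
        "intensity_ratio": 10 ** (diff / 10),  # power/intensity ratio
        "pressure_ratio": 10 ** (diff / 20),   # sound-pressure (amplitude) ratio
    }


margin = level_margin(84.0, 66.0)  # approximate values from the text
```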

A block design was used, with 21-s blocks alternating between conditions (music, silence, altered music, silence) in a pseudorandom order arranged so that two versions of the same excerpt never followed each other; each sequence lasted 7 min in total (Fig. 1B). Each sequence contained only one kind of altered music. Each infant heard two 7-min sequences, one containing music and key-shift alterations and one containing music and dissonance alterations, and the order of the two sequences was alternated across successive infants. The two kinds of alteration were derived from two different sets of five excerpts, so that no original excerpt was presented twice.
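The timing constraints can be checked with a short scheduling sketch. Beyond what the text states, it assumes that the four-block cycle (music, silence, altered, silence) is fixed and that the pseudorandomization applies to the order of the five excerpts; the function and variable names are hypothetical. Twenty 21-s blocks give 420 s (7 min), which at the 3-s TR of the Imaging Protocol matches the 140 volumes per scan.

```python
import random
from typing import List, Tuple

BLOCK_S = 21   # block length in seconds (from the text)
TR_S = 3       # repetition time in seconds (from the Imaging Protocol)
CYCLE = ("music", "silence", "altered", "silence")  # assumed fixed cycle


def make_schedule(n_excerpts: int = 5, seed: int = 0,
                  max_tries: int = 10_000) -> List[Tuple[str, int]]:
    """Return one (condition, excerpt_id) pair per block; silence uses -1.

    Excerpt orders are redrawn until the original and altered versions of
    the same excerpt never occupy consecutive stimulus blocks.
    """
    rng = random.Random(seed)
    for _ in range(max_tries):
        music = rng.sample(range(n_excerpts), n_excerpts)
        altered = rng.sample(range(n_excerpts), n_excerpts)
        stim = [x for pair in zip(music, altered) for x in pair]  # m0, a0, m1, a1, ...
        if all(a != b for a, b in zip(stim, stim[1:])):
            break
    else:
        raise RuntimeError("no valid excerpt order found")

    order = {"music": iter(music), "altered": iter(altered)}
    return [(cond, next(order[cond]) if cond in order else -1)
            for cond in CYCLE * n_excerpts]


schedule = make_schedule(seed=1)
total_s = len(schedule) * BLOCK_S      # 420 s = 7 min
n_volumes = total_s // TR_S            # 140 volumes
```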

Data Analysis.

Images were processed within the framework of the general linear model in AFNI (66).

After EPI time series reconstruction, every brain volume of each participant's functional runs was examined to identify artifacts attributable to either subject head movement or MRI scanner properties. Sequences from 3 of the 21 scanned subjects showed large-scale movements and were discarded. Sixteen of the remaining 18 newborns provided usable data for both sequences; for the other 2, only one sequence (with original music and key shifts) was usable. For within-subject motion correction and realignment, both functional time series were aligned to an artifact-free "base" image. Motion correction and image realignment were performed with a weighted linear least-squares algorithm (3dvolreg) (67, 68) with gradient descent, using six rigid-body parameters (three shifts and three rotations). Individual brain volumes requiring a motion correction greater than ±3 mm or 3° were excluded from further analyses. On average, 198 volumes were retained per subject (SD = 68). A spatial filter with an rms width of 4 mm was applied to each EPI volume.
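The volume-exclusion rule can be expressed as a mask over the six motion estimates of each volume. A minimal sketch, assuming the estimates are stored in an array whose first three columns are rotations in degrees and last three are translations in mm (check the column order of the actual motion file); it does not reproduce 3dvolreg itself, and the function name is hypothetical.

```python
import numpy as np


def keep_volumes(motion: np.ndarray, max_mm: float = 3.0, max_deg: float = 3.0) -> np.ndarray:
    """Boolean mask of volumes whose motion correction stays within limits.

    motion: (n_volumes, 6) array; columns 0-2 = rotations (deg),
    columns 3-5 = translations (mm). This column order is an assumption.
    """
    motion = np.asarray(motion, dtype=float)
    rot_ok = np.all(np.abs(motion[:, :3]) <= max_deg, axis=1)
    shift_ok = np.all(np.abs(motion[:, 3:]) <= max_mm, axis=1)
    return rot_ok & shift_ok


params = np.array([
    [0.5, -0.2, 0.1, 0.4, -1.0, 0.2],   # small motion: kept
    [0.1, 3.5, 0.0, 0.0, 0.0, 0.0],     # 3.5 deg rotation: dropped
    [0.0, 0.0, 0.0, -4.0, 0.0, 0.0],    # 4 mm shift: dropped
])
mask = keep_volumes(params)
```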

To control for individual differences in absolute values of activation, we computed, for each voxel, the mean intensity of the sequence time series and divided the value at each time point by this mean to obtain the percent signal change at each time point. When both functional sequences were usable, they were concatenated.
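Percent-signal scaling is conventionally computed by dividing each time point by the voxel's mean over the run and multiplying by 100, so that regression weights can be read in percent units. A minimal NumPy sketch, assuming each run is stored as a voxels × time-points array and that each sequence is scaled by its own mean before concatenation; the helper names are hypothetical.

```python
from typing import List

import numpy as np


def percent_signal(run: np.ndarray) -> np.ndarray:
    """Scale a (n_voxels, n_timepoints) run so each voxel's mean becomes 100."""
    run = np.asarray(run, dtype=float)
    mean = run.mean(axis=1, keepdims=True)
    safe = np.where(mean == 0, 1.0, mean)          # avoid dividing by zero outside the brain
    return np.where(mean == 0, 0.0, 100.0 * run / safe)


def concatenate_runs(runs: List[np.ndarray]) -> np.ndarray:
    """Scale each usable run by its own mean, then join the runs along time."""
    return np.concatenate([percent_signal(r) for r in runs], axis=1)


run1 = np.array([[2.0, 4.0, 6.0], [0.0, 0.0, 0.0]])
run2 = np.array([[10.0, 10.0], [5.0, 15.0]])
scaled = concatenate_runs([run1, run2])
```

A constant (mean) regressor in the subsequent regression absorbs the offset of 100, leaving the stimulus weights as percent signal change.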

Template Creation and Image Registration.

Because no suitable newborn template is currently available, we created an ad hoc template from the subjects' whole-head EPI scans (3.75 × 3.75 × 3-mm resolution) (SI Text). For each subject, a 12-parameter general affine transformation (3dWarpDrive) was then used to coregister the subject's average functional volume to the infant template. The same transformation matrix was subsequently applied to the subject's functional scans to align them to the infant template.
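A 12-parameter affine transform consists of a 3 × 3 linear part (rotation, scaling, shear) plus a 3-element translation, usually stored as a 4 × 4 homogeneous matrix that can be estimated once and then reused. 3dWarpDrive estimates these parameters by optimizing an intensity-based match between images; the sketch below instead fits them by least squares from hypothetical corresponding landmark coordinates. That is a different estimation technique, used here only to show the parameterization and the reuse of one matrix for every volume.

```python
import numpy as np


def fit_affine(src, dst) -> np.ndarray:
    """Least-squares 12-parameter affine mapping src (N, 3) -> dst (N, 3).

    Needs at least 4 non-coplanar point pairs. Returns a 4x4 homogeneous matrix.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    X = np.c_[src, np.ones(len(src))]                  # (N, 4) homogeneous coordinates
    params, *_ = np.linalg.lstsq(X, dst, rcond=None)   # (4, 3): 9 linear + 3 shifts
    A = np.eye(4)
    A[:3, :] = params.T
    return A


def apply_affine(A: np.ndarray, points) -> np.ndarray:
    """Map (N, 3) coordinates with a 4x4 affine; the same A can be reused for every volume."""
    pts = np.asarray(points, dtype=float)
    return (np.c_[pts, np.ones(len(pts))] @ A.T)[:, :3]


# Toy check: recover a known transform from 10 random "landmarks" (in mm).
rng = np.random.default_rng(0)
true_A = np.eye(4)
true_A[:3, :3] = [[1.10, 0.05, 0.00], [0.00, 0.90, 0.10], [0.02, 0.00, 1.05]]
true_A[:3, 3] = [2.0, -3.0, 1.5]
landmarks = rng.uniform(-50, 50, size=(10, 3))
fitted_A = fit_affine(landmarks, apply_affine(true_A, landmarks))
```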

Image Analysis: Single-Subject Level.

Multiple linear regression implemented in AFNI's 3dDeconvolve was used to fit stimulus reference vectors to the MRI time series at each voxel for each participant, obtaining an estimate of the BOLD response to each condition of interest (69). For the 16 subjects for whom two sequences were usable, the regression was run with nine regressors of no interest (mean, linear trend, second-order polynomial within each sequence to account for slow changes in MRI signal, and six outputs from the motion-correction algorithm to account for residual variance attributable to subject motion not corrected by registration) and three regressors of interest (one per condition: music, key shifts alterations, and dissonance alterations). Each regressor of interest was obtained by convolving a square wave for each stimulation block of that condition with a gamma variate function approximating the hemodynamic response (70). For each subject, the regression models provided estimates of the response (% signal change of the BOLD response) to each stimulus type in each voxel.
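The regressor construction described above can be sketched in NumPy. Assumptions: the gamma variate uses the defaults of AFNI's GAM response model (b = 8.6, c = 0.547 s, peak at b·c ≈ 4.7 s), since the exact parameters are not stated here; each regressor is scaled to a peak of 1 so that its weight approximates the block-response amplitude; and the toy example uses only a quadratic baseline for one run, with the six motion regressors to be appended as extra nuisance columns. This is not 3dDeconvolve, whose options and normalization may differ, and all names are hypothetical.

```python
import numpy as np

TR = 3.0  # seconds


def gamma_variate(t, b: float = 8.6, c: float = 0.547) -> np.ndarray:
    """Gamma-variate HRF, normalized to a peak of 1 at t = b*c."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = (t[pos] / (b * c)) ** b * np.exp(b - t[pos] / c)
    return out


def block_regressor(onsets_s, duration_s: float, n_vols: int,
                    tr: float = TR, dt: float = 0.1) -> np.ndarray:
    """Square wave over each block, convolved with the HRF, sampled once per TR."""
    step = round(tr / dt)
    t = np.arange(n_vols * step) * dt                  # fine time grid
    box = np.zeros_like(t)
    for onset in onsets_s:
        box[(t >= onset) & (t < onset + duration_s)] = 1.0
    hrf = gamma_variate(np.arange(0.0, 30.0, dt))
    reg = np.convolve(box, hrf)[: len(t)][::step]      # one sample per volume
    return reg / reg.max() if reg.max() > 0 else reg


def baseline(n_vols: int) -> np.ndarray:
    """Constant, linear and quadratic drift terms for one run."""
    x = np.linspace(-1.0, 1.0, n_vols)
    return np.column_stack([np.ones(n_vols), x, x ** 2])


def fit_glm(y, interest, nuisance) -> np.ndarray:
    """Ordinary least squares; returns the weights of the regressors of interest."""
    X = np.column_stack([interest, nuisance])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[: interest.shape[1]]


# Toy voxel: music blocks every 84 s, altered blocks 42 s later (one 7-min run).
n_vols = 140
music = block_regressor(np.arange(5) * 84.0, 21.0, n_vols)
altered = block_regressor(np.arange(5) * 84.0 + 42.0, 21.0, n_vols)
interest = np.column_stack([music, altered])
rng = np.random.default_rng(1)
y = 100 + 1.5 * music + 0.5 * altered + rng.normal(0, 0.1, n_vols)
betas = fit_glm(y, interest, baseline(n_vols))
```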

A second multiple regression was run on all 18 subjects, pooling the dissonance and key-shift alterations into a single "altered music" condition; this allowed the 2 infants with only one usable sequence to be included. This comprehensive regression was run with nine regressors of no interest (as above) and two regressors of interest (one per condition: music and altered music).
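Pooling the two alteration types amounts to merging their regressors into one column. Because convolution with the HRF is linear, summing the two convolved regressors gives the same result as convolving the combined square wave; for an infant with only the key-shift sequence, the dissonance contribution is simply all zeros. The toy arrays below are hypothetical and use an arbitrary short kernel in place of the HRF.

```python
import numpy as np

kernel = np.array([0.0, 0.4, 1.0, 0.6, 0.2])                        # arbitrary stand-in for an HRF
key_box = np.array([1, 1, 0, 0, 0, 0, 0, 0, 0, 0], dtype=float)     # key-shift block
dis_box = np.array([0, 0, 0, 0, 0, 1, 1, 0, 0, 0], dtype=float)     # dissonance block


def hrf_convolve(box: np.ndarray) -> np.ndarray:
    """Convolve a square wave with the stand-in kernel, truncated to the run length."""
    return np.convolve(box, kernel)[: len(box)]


# Separate regressors, as in the three-condition model ...
key_reg, dis_reg = hrf_convolve(key_box), hrf_convolve(dis_box)
# ... and one pooled "altered music" regressor, as in the two-condition model.
altered_reg = key_reg + dis_reg
```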

Group Analysis.

A random-effects model implemented in AFNI’s 3dANOVA was used to create group maps in the common template space. The regression estimates were entered into two-way mixed-effects ANOVAs performed on each voxel in template space, with stimulus type as a fixed factor and subjects as a random factor. For the analysis of the 16 subjects with usable data from both sequences, three stimulus types were included: music, key-shift alterations, and dissonance alterations. For the comprehensive analysis of all 18 subjects, the stimulus types were music and altered music. The ANOVAs generated activation maps for each stimulus type vs. baseline. Planned contrasts were used to identify areas of significantly different response between conditions.
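When every subject contributes one estimate per stimulus type, a planned contrast in a two-way mixed-effects ANOVA (stimulus fixed, subjects random) is conventionally tested against the subject × stimulus interaction mean square. The per-voxel sketch below shows that textbook computation with hypothetical data; it is not AFNI's 3dANOVA code. With only two stimulus types it reduces to a paired t test on the difference scores.

```python
import numpy as np


def planned_contrast(y, weights):
    """t statistic and df for a contrast in a subjects x conditions layout.

    y: (n_subjects, k_conditions) response estimates at one voxel.
    weights: contrast weights summing to zero, e.g. (1, -1, 0).
    The error term is the subject-by-condition interaction mean square.
    """
    y = np.asarray(y, dtype=float)
    c = np.asarray(weights, dtype=float)
    n, k = y.shape
    resid = (y - y.mean(axis=1, keepdims=True)
             - y.mean(axis=0, keepdims=True) + y.mean())
    df = (n - 1) * (k - 1)
    ms_error = (resid ** 2).sum() / df
    estimate = c @ y.mean(axis=0)
    t = estimate / np.sqrt(ms_error * (c ** 2).sum() / n)
    return float(t), df


# Hypothetical % signal change for 6 infants x (music, key shift, dissonance).
rng = np.random.default_rng(2)
psc = rng.normal([0.8, 0.5, 0.4], 0.2, size=(6, 3))
t_music_vs_key, df = planned_contrast(psc, (1, -1, 0))
```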

Probability of a false detection was estimated through a Monte Carlo simulation of the process of image generation, spatial correlation of voxels, voxel intensity thresholding, masking, and cluster identification (71). All reported activations for this analysis survived a voxel-level significance threshold of P < 0.0002 and a cluster-size significance threshold of α = 0.05 (minimum cluster size of 33 voxels) (SI Text).
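The logic of a Monte Carlo cluster-size threshold (71) can be sketched as follows: simulate smooth Gaussian noise volumes, threshold them at the voxel-level P value, record the largest surviving cluster in each simulation, and take the smallest cluster size that chance reaches in no more than α of the simulations. Everything below is a simplifying assumption rather than the authors' settings: the grid size, the smoothness (given as a Gaussian sigma in voxels, because converting the 4-mm rms width depends on the software's definition), one-sided thresholding, face-connected (6-neighbor) clusters, no brain mask, and unhandled edge effects. The function names are hypothetical.

```python
from collections import deque
from statistics import NormalDist

import numpy as np

NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def smooth(vol: np.ndarray, sigma_vox: float) -> np.ndarray:
    """Separable Gaussian smoothing (zero-padded; each axis must exceed the kernel length)."""
    r = int(np.ceil(3 * sigma_vox))
    x = np.arange(-r, r + 1)
    kernel = np.exp(-0.5 * (x / sigma_vox) ** 2)
    kernel /= kernel.sum()
    for axis in range(vol.ndim):
        vol = np.apply_along_axis(np.convolve, axis, vol, kernel, mode="same")
    return vol


def largest_cluster(mask: np.ndarray) -> int:
    """Size of the largest face-connected cluster of True voxels (breadth-first search)."""
    seen = np.zeros(mask.shape, dtype=bool)
    best = 0
    for start in zip(*np.nonzero(mask)):
        if seen[start]:
            continue
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:
            x, y, z = queue.popleft()
            size += 1
            for dx, dy, dz in NEIGHBOURS:
                nb = (x + dx, y + dy, z + dz)
                if (all(0 <= nb[i] < mask.shape[i] for i in range(3))
                        and mask[nb] and not seen[nb]):
                    seen[nb] = True
                    queue.append(nb)
        best = max(best, size)
    return best


def cluster_size_threshold(shape, sigma_vox, p_voxel, alpha=0.05, n_iter=100, seed=0) -> int:
    """Smallest cluster size k with P(largest null cluster >= k) <= alpha."""
    rng = np.random.default_rng(seed)
    z_cut = NormalDist().inv_cdf(1.0 - p_voxel)   # one-sided voxel threshold
    maxima = []
    for _ in range(n_iter):
        noise = smooth(rng.standard_normal(shape), sigma_vox)
        noise /= noise.std()                       # restore unit variance after smoothing
        maxima.append(largest_cluster(noise > z_cut))
    maxima = np.array(maxima)
    k = 1
    while np.mean(maxima >= k) > alpha:
        k += 1
    return k


# Toy run: small grid and few iterations so it finishes quickly.
k_min = cluster_size_threshold((20, 20, 16), sigma_vox=1.0, p_voxel=0.01, n_iter=20)
```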

For ROI analyses, spherical ROIs with a 10-mm radius encompassing the left and right auditory cortices were drawn on the group template. For statistical processing, we used the single-subject statistical maps on which the group ANOVAs were based. Each subject's average (unthresholded) percent signal change in each ROI was entered into a random-effects ANOVA with hemisphere (left/right) and stimulus type (music/altered music) as fixed factors and subjects as a random factor.
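On an anisotropic voxel grid, a spherical ROI has to be defined in millimeters rather than voxels. The sketch below assumes the 3.75 × 3.75 × 3-mm grid quoted for the template and a hypothetical center voxel, and averages an unthresholded map inside the sphere. For the hemisphere × stimulus design it uses the standard result that, in a 2 × 2 within-subject ANOVA, each 1-df effect's F equals the squared paired t of the corresponding per-subject contrast score (df = 1, n - 1). The names and toy data are hypothetical.

```python
import numpy as np

VOXEL_MM = (3.75, 3.75, 3.0)  # template resolution quoted in the text


def sphere_mask(shape, center_vox, radius_mm=10.0, voxel_mm=VOXEL_MM):
    """Boolean mask of voxels whose centers lie within radius_mm of center_vox."""
    grid = np.indices(shape).astype(float)
    dist2 = sum(((grid[i] - center_vox[i]) * voxel_mm[i]) ** 2 for i in range(3))
    return dist2 <= radius_mm ** 2


def roi_mean(stat_map, mask) -> float:
    """Average (unthresholded) value of a single-subject map inside the ROI."""
    return float(np.asarray(stat_map, dtype=float)[mask].mean())


def hemisphere_by_stimulus(roi_means) -> dict:
    """F values (df = 1, n - 1) for a 2 x 2 within-subject design.

    roi_means: (n_subjects, 2, 2) = subjects x hemisphere (L, R) x stimulus (music, altered).
    """
    y = np.asarray(roi_means, dtype=float)
    n = y.shape[0]
    scores = {
        "hemisphere": y[:, 1, :].mean(axis=1) - y[:, 0, :].mean(axis=1),
        "stimulus": y[:, :, 0].mean(axis=1) - y[:, :, 1].mean(axis=1),
        "interaction": (y[:, 1, 0] - y[:, 1, 1]) - (y[:, 0, 0] - y[:, 0, 1]),
    }
    return {name: float((s.mean() / (s.std(ddof=1) / np.sqrt(n))) ** 2)
            for name, s in scores.items()}


mask = sphere_mask((40, 48, 34), center_vox=(10, 20, 15))
subject_map = np.full((40, 48, 34), 0.7)     # toy map: 0.7 % everywhere
value = roi_mean(subject_map, mask)          # about 0.7
```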


Acknowledgments

We thank the parents who graciously agreed to have their infants participate in the study. This work was supported by a grant from the Fondazione Mariani (to D.P. and S.K.) and by the European Union Project BrainTuning FP6-2004 NEST-PATH-028570.

Footnotes

The authors declare no conflict of interest.

*This Direct Submission article had a prearranged editor.

This article contains supporting information online at www.pnas.org/cgi/content/full/0909074107/DCSupplemental.

References

- 1. Peretz I, Zatorre RJ. Brain organization for music processing. Annu Rev Psychol. 2005;56:89–114. doi: 10.1146/annurev.psych.56.091103.070225.

- 2. Gaab N, Gaser C, Schlaug G. Improvement-related functional plasticity following pitch memory training. NeuroImage. 2006;31:255–263. doi: 10.1016/j.neuroimage.2005.11.046.

- 3. Münte TF, Altenmüller E, Jäncke L. The musician's brain as a model of neuroplasticity. Nat Rev Neurosci. 2002;3:473–478. doi: 10.1038/nrn843.

- 4. Zatorre RJ, Chen JL, Penhune VB. When the brain plays music: Auditory-motor interactions in music perception and production. Nat Rev Neurosci. 2007;8:547–558. doi: 10.1038/nrn2152.

- 5. Molnar-Szakacs I, Overy K. Music and mirror neurons: From motion to ‘e’ motion. Soc Cogn Affect Neurosci. 2006;1:235–241. doi: 10.1093/scan/nsl029.

- 6. Anderson AW, et al. Neonatal auditory activation detected by functional magnetic resonance imaging. Magn Reson Imaging. 2001;19:1–5. doi: 10.1016/s0730-725x(00)00231-9.

- 7. Altman NR, Bernal B. Brain activation in sedated children: Auditory and visual functional MR imaging. Radiology. 2001;221:56–63. doi: 10.1148/radiol.2211010074.

- 8. Dehaene-Lambertz G, Dehaene S, Hertz-Pannier L. Functional neuroimaging of speech perception in infants. Science. 2002;298:2013–2015. doi: 10.1126/science.1077066.

- 9. Trehub SE. Musical predispositions in infancy. Ann NY Acad Sci. 2001;930:1–16. doi: 10.1111/j.1749-6632.2001.tb05721.x.

- 10. Trainor LJ, Desjardins RN. Pitch characteristics of infant-directed speech affect infants’ ability to discriminate vowels. Psychon Bull Rev. 2002;9:335–340. doi: 10.3758/bf03196290.

- 11. Zentner MR, Kagan J. Infants’ perception of consonance and dissonance in music. Infant Behav Dev. 1998;21:483–492.

- 12. Trehub SE. The developmental origins of musicality. Nat Neurosci. 2003;6:669–673. doi: 10.1038/nn1084.

- 13. Winkler I, Háden GP, Ladinig O, Sziller I, Honing H. Newborn infants detect the beat in music. Proc Natl Acad Sci USA. 2009;106:2468–2471. doi: 10.1073/pnas.0809035106.

- 14. Jusczyk PW, Houston DM, Newsome M. The beginnings of word segmentation in English-learning infants. Cognit Psychol. 1999;39:159–207. doi: 10.1006/cogp.1999.0716.

- 15. Jusczyk PW. How infants begin to extract words from speech. Trends Cogn Sci. 1999;3:323–328. doi: 10.1016/s1364-6613(99)01363-7.

- 16. Thiessen ED, Saffran JR. When cues collide: Use of stress and statistical cues to word boundaries by 7- to 9-month-old infants. Dev Psychol. 2003;39:706–716. doi: 10.1037/0012-1649.39.4.706.

- 17. Homae F, Watanabe H, Nakano T, Asakawa K, Taga G. The right hemisphere of sleeping infant perceives sentential prosody. Neurosci Res. 2006;54:276–280. doi: 10.1016/j.neures.2005.12.006.

- 18. Sambeth A, Ruohio K, Alku P, Fellman V, Huotilainen M. Sleeping newborns extract prosody from continuous speech. Clin Neurophysiol. 2008;119:332–341. doi: 10.1016/j.clinph.2007.09.144.

- 19. Patel AD. Language, music, syntax and the brain. Nat Neurosci. 2003;6:674–681. doi: 10.1038/nn1082.

- 20. Koelsch S. Music-syntactic processing and auditory memory: Similarities and differences between ERAN and MMN. Psychophysiology. 2009;46:179–190. doi: 10.1111/j.1469-8986.2008.00752.x.

- 21. Koelsch S, et al. Bach speaks: A cortical “language-network” serves the processing of music. NeuroImage. 2002;17:956–966.

- 22. Koelsch S, Fritz T, Schulze K, Alsop D, Schlaug G. Adults and children processing music: An fMRI study. NeuroImage. 2005;25:1068–1076. doi: 10.1016/j.neuroimage.2004.12.050.

- 23. Koelsch S. Neural substrates of processing syntax and semantics in music. Curr Opin Neurobiol. 2005;15:207–212. doi: 10.1016/j.conb.2005.03.005.

- 24. Moro A, et al. Syntax and the brain: Disentangling grammar by selective anomalies. NeuroImage. 2001;13:110–118. doi: 10.1006/nimg.2000.0668.

- 25. Friederici AD. Towards a neural basis of auditory sentence processing. Trends Cogn Sci. 2002;6:78–84. doi: 10.1016/s1364-6613(00)01839-8.

- 26. Blood AJ, Zatorre RJ, Bermudez P, Evans AC. Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nat Neurosci. 1999;2:382–387. doi: 10.1038/7299.

- 27. Koelsch S, Fritz T, V Cramon DY, Müller K, Friederici AD. Investigating emotion with music: An fMRI study. Hum Brain Mapp. 2006;27:239–250. doi: 10.1002/hbm.20180.

- 28. Masataka N. Preference for consonance over dissonance by hearing newborns of deaf parents and of hearing parents. Dev Sci. 2006;9:46–50. doi: 10.1111/j.1467-7687.2005.00462.x.

- 29. Fritz T, et al. Universal recognition of three basic emotions in music. Curr Biol. 2009;19:573–576. doi: 10.1016/j.cub.2009.02.058.

- 30. Trainor LJ, Tsang CD, Cheung VH. Preference for sensory consonance in 2- and 4-month-old infants. Music Percept. 2002;20:187–194.

- 31. Trainor LJ, Heinmiller BM. The development of evaluative responses to music: Infants prefer to listen to consonance over dissonance. Infant Behav Dev. 1998;21:77–88.

- 32. Sloboda JA. Music structure and emotional response: Some empirical findings. Psychol Music. 1991;19:110–120.

- 33. Koelsch S, Kilches S, Steinbeis N, Schelinski S. Effects of unexpected chords and of performer’s expression on brain responses and electrodermal activity. PLoS One. 2008;3:e2631. doi: 10.1371/journal.pone.0002631.

- 34. Blood AJ, Zatorre RJ. Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc Natl Acad Sci USA. 2001;98:11818–11823. doi: 10.1073/pnas.191355898.

- 35. Gerhardt KJ, Abrams RM, Oliver CC. Sound environment of the fetal sheep. Am J Obstet Gynecol. 1990;162:282–287. doi: 10.1016/0002-9378(90)90866-6.

- 36. Zatorre RJ. Neural specializations for tonal processing. Ann NY Acad Sci. 2001;930:193–210. doi: 10.1111/j.1749-6632.2001.tb05734.x.

- 37. Zatorre RJ, Gandour JT. Neural specializations for speech and pitch: Moving beyond the dichotomies. Philos Trans R Soc London B. 2008;363:1087–1104. doi: 10.1098/rstb.2007.2161.

- 38. Patterson RD, Uppenkamp S, Johnsrude IS, Griffiths TD. The processing of temporal pitch and melody information in auditory cortex. Neuron. 2002;36:767–776. doi: 10.1016/s0896-6273(02)01060-7.

- 39. Warren JE, Wise RJ, Warren JD. Sounds do-able: Auditory-motor transformations and the posterior temporal plane. Trends Neurosci. 2005;28:636–643. doi: 10.1016/j.tins.2005.09.010.

- 40. Hickok G, Buchsbaum B, Humphries C, Muftuler T. Auditory-motor interaction revealed by fMRI: Speech, music, and working memory in area Spt. J Cogn Neurosci. 2003;15:673–682. doi: 10.1162/089892903322307393.

- 41. Zatorre RJ, Belin P. Spectral and temporal processing in human auditory cortex. Cereb Cortex. 2001;11:946–953. doi: 10.1093/cercor/11.10.946.

- 42. Tervaniemi M, Hugdahl K. Lateralization of auditory-cortex functions. Brain Res Brain Res Rev. 2003;43:231–246. doi: 10.1016/j.brainresrev.2003.08.004.

- 43. Liégeois-Chauvel C, de Graaf JB, Laguitton V, Chauvel P. Specialization of left auditory cortex for speech perception in man depends on temporal coding. Cereb Cortex. 1999;9:484–496. doi: 10.1093/cercor/9.5.484.

- 44. Penhune VB, Zatorre RJ, MacDonald JD, Evans AC. Interhemispheric anatomical differences in human primary auditory cortex: Probabilistic mapping and volume measurement from magnetic resonance scans. Cereb Cortex. 1996;6:661–672. doi: 10.1093/cercor/6.5.661.

- 45. Toga AW, Thompson PM. Mapping brain asymmetry. Nat Rev Neurosci. 2003;4:37–48. doi: 10.1038/nrn1009.

- 46. Chi JG, Dooling EC, Gilles FH. Left-right asymmetries of the temporal speech areas of the human fetus. Arch Neurol. 1977;34:346–348. doi: 10.1001/archneur.1977.00500180040008.

- 47. Sun T, Collura RV, Ruvolo M, Walsh CA. Genomic and evolutionary analyses of asymmetrically expressed genes in human fetal left and right cerebral cortex. Cereb Cortex. 2006;16(Suppl 1):i18–i25. doi: 10.1093/cercor/bhk026.

- 48. Sun T, et al. Early asymmetry of gene transcription in embryonic human left and right cerebral cortex. Science. 2005;308:1794–1798. doi: 10.1126/science.1110324.

- 49. DeCasper AJ, Prescott P. Lateralized processes constrain auditory reinforcement in human newborns. Hear Res. 2009;255:135–141. doi: 10.1016/j.heares.2009.06.012.

- 50. Peña M, et al. Sounds and silence: An optical topography study of language recognition at birth. Proc Natl Acad Sci USA. 2003;100:11702–11705. doi: 10.1073/pnas.1934290100.

- 51. Dehaene-Lambertz G, et al. Functional organization of perisylvian activation during presentation of sentences in preverbal infants. Proc Natl Acad Sci USA. 2006;103:14240–14245. doi: 10.1073/pnas.0606302103.

- 52. Háden GP, et al. Timbre-independent extraction of pitch in newborn infants. Psychophysiology. 2009;46:69–74. doi: 10.1111/j.1469-8986.2008.00749.x.

- 53. Schellenberg EG, Trainor LJ. Sensory consonance and the perceptual similarity of complex-tone harmonic intervals: Tests of adult and infant listeners. J Acoust Soc Am. 1996;100:3321–3328. doi: 10.1121/1.417355.

- 54. Plomp R, Levelt WJM. Tonal consonance and critical bandwidth. J Acoust Soc Am. 1965;38:548–560. doi: 10.1121/1.1909741.

- 55. Tramo MJ, Cariani PA, Delgutte B, Braida LD. Neurobiological foundations for the theory of harmony in western tonal music. Ann NY Acad Sci. 2001;930:92–116. doi: 10.1111/j.1749-6632.2001.tb05727.x.

- 56. Fishman YI, et al. Consonance and dissonance of musical chords: Neural correlates in auditory cortex of monkeys and humans. J Neurophysiol. 2001;86:2761–2788. doi: 10.1152/jn.2001.86.6.2761.

- 57. Schönwiesner M, et al. Heschl's gyrus, posterior superior temporal gyrus, and mid-ventrolateral prefrontal cortex have different roles in the detection of acoustic changes. J Neurophysiol. 2007;97:2075–2082. doi: 10.1152/jn.01083.2006.

- 58. Leman M. An auditory model of the role of short-term memory in probe-tone ratings. Music Percept. 2000;17:481–509.

- 59. Krumhansl CL. Cognitive Foundations of Musical Pitch. New York: Oxford Univ Press; 1990.

- 60. Schubotz RI, Fiebach CJ. Integrative models of Broca's area and the ventral premotor cortex. Cortex. 2006;42:461–463. doi: 10.1016/s0010-9452(08)70386-1.

- 61. Phillips ML, Drevets WC, Rauch SL, Lane R. Neurobiology of emotion perception I: The neural basis of normal emotion perception. Biol Psychiatry. 2003;54:504–514. doi: 10.1016/s0006-3223(03)00168-9.

- 62. DeCasper AJ, Fifer WP. Of human bonding: Newborns prefer their mothers’ voices. Science. 1980;208:1174–1176. doi: 10.1126/science.7375928.

- 63. Lecanuet JP, Schaal B. Fetal sensory competencies. Eur J Obstet Gynecol Reprod Biol. 1996;68:1–23. doi: 10.1016/0301-2115(96)02509-2.

- 64. Hepper PG. Fetal “soap” addiction. Lancet. 1988;1:1347–1348. doi: 10.1016/s0140-6736(88)92170-8.

- 65. Oldfield RC. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- 66. Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- 67. Cox RW, Jesmanowicz A. Real-time 3D image registration for functional MRI. Magn Reson Med. 1999;42:1014–1018. doi: 10.1002/(sici)1522-2594(199912)42:6<1014::aid-mrm4>3.0.co;2-f.

- 68. Oakes TR, et al. Comparison of fMRI motion correction software tools. NeuroImage. 2005;28:529–543. doi: 10.1016/j.neuroimage.2005.05.058.

- 69. Saad ZS, et al. Functional imaging analysis contest (FIAC) analysis according to AFNI and SUMA. Hum Brain Mapp. 2006;27:417–424. doi: 10.1002/hbm.20247.

- 70. Cohen MS. Parametric analysis of fMRI data using linear systems methods. NeuroImage. 1997;6:93–103. doi: 10.1006/nimg.1997.0278.

- 71. Forman SD, et al. Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): Use of a cluster-size threshold. Magn Reson Med. 1995;33:636–647. doi: 10.1002/mrm.1910330508.
