Abstract

Selective attention confers a behavioral benefit for both perceptual and working memory (WM) performance, often attributed to top-down modulation of sensory neural processing. However, the direct relationship between early activity modulation in sensory cortices during selective encoding and subsequent WM performance has not been established. To explore the influence of selective attention on WM recognition, we used electroencephalography (EEG) to study the temporal dynamics of top-down modulation in a selective, delayed-recognition paradigm. Participants were presented with overlapped, “double-exposed” images of faces and natural scenes, and were instructed to either remember the face or the scene while simultaneously ignoring the other stimulus. Here, we present evidence that the degree to which participants modulate the early P100 (97–129 ms) event-related potential (ERP) during selective stimulus encoding significantly correlates with their subsequent WM recognition. These results contribute to our evolving understanding of the mechanistic overlap between attention and memory.

INTRODUCTION

Goal-directed selective attention influences the magnitude and speed of neural processing in cortical regions where sensory information is actively represented, via a process known as top-down modulation (Desimone & Duncan, 1995; Gazzaley, Cooney, McEvoy, Knight, & D’Esposito, 2005; Kastner & Ungerleider, 2000; Luck, Chelazzi, Hillyard, & Desimone, 1997). Many studies have capitalized on the high temporal resolution of electroencephalography (EEG) to reveal early influences of top-down control on visual processing in humans (Hillyard & Anllo-Vento, 1998), and more recently to establish a direct relationship between neural measures of modulation and indicators of behavioral performance, such as the speed of stimulus detection (Talsma, Mulckhuyse, Slagter, & Theeuwes, 2007; Thut, Nietzel, Brandt, & Pascual-Leone, 2006). Furthermore, evidence has emerged that demonstrates a mechanistic overlap between the processes of selective attention and working memory (WM). Several studies have revealed a major role of working memory in the control of visual selective attention (Awh & Jonides, 2001; de Fockert, Rees, Frith, & Lavie, 2001; Desimone, 1996), while others have shown that selective attention is a key component of working memory (Awh & Jonides, 2001). Recent studies utilizing EEG have investigated the time-course of attentional involvement in WM, presenting a model in which attention is utilized throughout the WM maintenance period (Jha, 2002; Sreenivasan, Katz, & Jha, 2007), likely by biasing cortical processing toward relevant sensory representations and by modulating the processing of distractors (Sreenivasan & Jha, 2007). Although it has been suggested that WM maintenance may depend on temporally early attentional factors (Sreenivasan, Katz, & Jha, 2007), a direct correlation between early neural measures of selective activity modulation during encoding and subsequent WM performance has not yet been described.

Selective attention results in activity modulation at very early stages of visual processing (Khoe, Mitchell, Reynolds, & Hillyard, 2005; López, Rodríguez, & Valdés-Sosa, 2004; Martinez, Teder-Salejarvi, & Vazquez, 2006; Pinilla, Cobo, Torres, & Valdes-Sosa, 2001; Schoenfeld, Hopf, Martinez, & Mai, 2007; Valdes-Sosa, Bobes, Rodriguez, & Pinilla, 1998), including amplitude modulations of the P100 (~100 ms) and N170 (~170 ms) event-related potential (ERP) components (for a review, see Hillyard & Anllo-Vento, 1998), which have been localized to visual cortical areas in lateral extrastriate cortex (Di Russo, Martínez, Sereno, Pitzalis, & Hillyard, 2002; Gomez Gonzalez, Clark, Fan, Luck, & Hillyard, 1994). We hypothesize that such early top-down modulation of cortical activity reflects the fidelity of sensory representations of relevant information in such a manner that it confers a behavioral benefit on maintaining that information in mind.

Here we explore how early markers of visual processing that are modulated when attention is selectively directed to complex, real-world visual objects (i.e., human faces or natural scenes) relate to subsequent WM recognition performance. Our study utilized a delayed recognition task in which participants were instructed to remember two stimuli (800 ms each) over the course of a 4-second delay period (Fig. 1). We used overlapping transparent images of faces and scenes, with either the face or the scene relevant (and the other irrelevant) for the WM task in a design similar to previous studies of object-based attention (Furey et al., 2006; O’Craven, Downing, & Kanwisher, 1999; Serences, Schwarzbach, Courtney, Golay, & Yantis, 2004; Yi & Chun, 2005). Recording posterior EEG measures while participants viewed the overlapped stimuli during the encoding period (equivalent bottom-up input with variations only in instructions) enabled us to evaluate the timing of top-down modulation and correlate these measures with recognition accuracy recorded after the delay period.

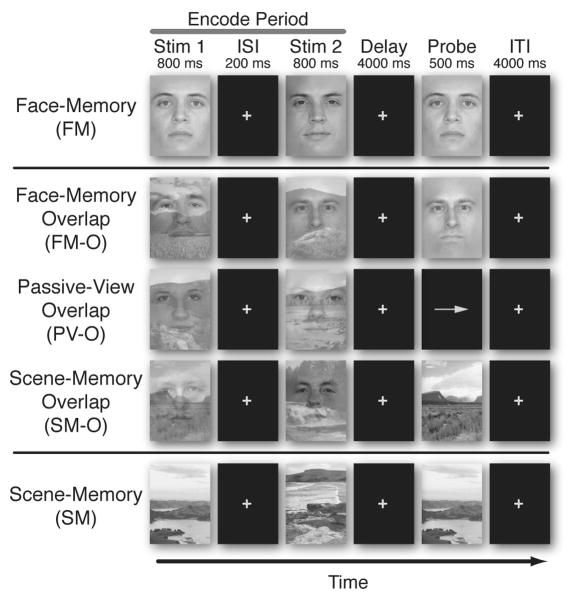

Figure 1.

Experimental Paradigm. Five different tasks were presented in a delayed-recognition task design. All trials involved viewing two images (Stim-1, Stim-2) (Encode), followed by a 4-second period (Delay), and concluded with a third image (Probe). Encoding stimuli on FM and SM consisted of isolated pictures of faces and natural scenes, while encoding stimuli on the three overlap tasks (FM-O, PV-O, and SM-O) consisted of an overlapped scene and a face picture. For the four memory tasks, participants were instructed to remember the relevant encoding stimuli, maintain the images in mind over the delay period and respond with a button press whether or not the Probe image matched one of the two Encoding images. For the passive view task (PV-O), participants were instructed to relax and view the double-exposed images without trying to remember them, after which they responded to the direction of an arrow with a button press.

METHODS

Participants

Nineteen healthy, right-handed individuals (mean age 22.9 years; range 18–34 years; ten males) with normal or corrected-to-normal vision volunteered, gave consent, and were monetarily compensated to participate in the study. Participants were pre-screened, and none used any medication known to affect cognitive state.

Stimuli

The stimuli consisted of grayscale images of faces and natural scenes. All face and scene stimuli were novel across all tasks, across all runs, and across all trials of the experiment. Images were 225 pixels wide and 300 pixels tall (14×18 cm), and were presented foveally, subtending a visual angle of 3 degrees from a small cross at the center of the image. The face stimuli consisted of a variety of neutral-expression male and female faces across a large age range. Hair and ears were removed digitally, and a blur was applied along the contours of the face so as to remove any potential non-face-specific cues. The sex of the face stimuli was held constant within each trial. Images of scenes were not digitally modified beyond resizing and grayscaling. For the tasks consisting of overlapped faces and scenes, one face and one scene were randomly paired, made transparent, and digitally overlapped using Adobe Photoshop CS2 such that both the face and scene were equally visible. Overlapped and isolated images were randomly assigned to the different tasks.
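As an illustration of what "equally visible" could mean computationally, the following minimal sketch averages two grayscale images pixel by pixel. It is not the Photoshop procedure used in the study; the equal 50/50 weighting, the function name `blend_equal`, and the toy pixel values are assumptions made for the example.

```python
def blend_equal(face, scene):
    """Average two same-sized grayscale images (lists of rows) pixel by pixel.

    Assumption: equal visibility is modeled as 50% opacity for each image.
    """
    same_shape = len(face) == len(scene) and all(
        len(f_row) == len(s_row) for f_row, s_row in zip(face, scene)
    )
    if not same_shape:
        raise ValueError("images must have identical dimensions")
    return [
        [(f + s) / 2 for f, s in zip(f_row, s_row)]
        for f_row, s_row in zip(face, scene)
    ]


# Toy 2x2 "images" with 8-bit gray levels (hypothetical values).
face = [[0, 100], [255, 50]]
scene = [[200, 100], [255, 150]]
overlap = blend_equal(face, scene)
```

Each output pixel lies midway between the two inputs, so neither image dominates the composite.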

Experimental Procedures

The experimental paradigm comprised five different tasks in a delayed-recognition WM task design (Fig. 1). Each task consisted of the same temporal sequence, with only the instructions differing across tasks. All tasks involved viewing two images (Stim-1, Stim-2), each displayed for 800 ms (with a 200 ms inter-stimulus interval [ISI]). These images were followed by a 4-second period (Delay) in which the images were to be held in mind (mentally rehearsed). After the delay, a third image appeared (Probe). The participant was instructed to indicate with a button press (as quickly as possible without sacrificing accuracy) whether or not the Probe image matched one of the previous two images (Stim-1, Stim-2). This was followed by an inter-trial interval (ITI) lasting 4 seconds.

For three of the five tasks, the Stim-1 and Stim-2 images each comprised a scene and a face superimposed upon each other. For these double-exposed images, the participants were instructed to focus their attention on and hold in mind either the face or the scene, while ignoring the other. In the face memory-overlap task (FM-O), the faces were held in mind while the scenes were ignored, and vice versa in the scene memory-overlap task (SM-O). When the Probe image appeared, it was composed of an isolated face in the FM-O task, or an isolated scene in the SM-O task. For the passive view (PV-O) task, participants were instructed to relax and view the double-exposed images without trying to hold them in mind, after which they responded to an arrow direction with a button press. For the other two tasks, the Stim-1 and Stim-2 images were each composed of a single stimulus without any distracting information: a face in the face memory task (FM) and a scene in the scene memory task (SM). The experiment was presented in 3 separate runs, each run consisting of all 5 task sets presented in blocks and counterbalanced in random order across all participants. Each task set consisted of a block of 20 trials of that task (60 total trials per task condition for all 3 runs, 120 total Encode Period images). Each blocked task set was preceded by an instruction screen cueing the participant to the specific memory goal of the task (e.g., “remember the faces”).
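The trial counts above follow directly from the block structure, as this short sketch shows. The shuffle is only a stand-in for the counterbalancing procedure, which is not specified in detail, and the names `build_schedule`, `TASKS`, and the seed are illustrative assumptions.

```python
import random

TASKS = ["FM", "SM", "FM-O", "SM-O", "PV-O"]
N_RUNS = 3
TRIALS_PER_BLOCK = 20
STIM_PER_TRIAL = 2  # Stim-1 and Stim-2 in every trial


def build_schedule(seed=None):
    """Return a list of (run, task, n_trials) blocks.

    Assumption: a per-run shuffle stands in for the study's counterbalancing.
    """
    rng = random.Random(seed)
    schedule = []
    for run in range(1, N_RUNS + 1):
        order = TASKS[:]
        rng.shuffle(order)
        schedule.extend((run, task, TRIALS_PER_BLOCK) for task in order)
    return schedule


schedule = build_schedule(seed=0)
trials_per_task = {
    task: sum(n for _, t, n in schedule if t == task) for task in TASKS
}
encode_images_per_task = {
    task: n * STIM_PER_TRIAL for task, n in trials_per_task.items()
}
```

With 3 runs of 20-trial blocks, every task accumulates 60 trials and 120 encoding-period images, matching the totals stated in the text.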

Following the main experiment, participants performed a surprise post-experiment recognition test in which they viewed 320 non-overlapped images, including 160 faces and 160 scenes. Eighty of the faces and 80 of the scenes were novel stimuli that were not included in the main experiment. There were 20 faces each from the FM, FM-O, SM-O, and PV-O tasks, and 20 scenes each from the SM, SM-O, FM-O, and PV-O tasks. No encoded stimulus that had also served as a matching probe in the main experiment was included, so that no stimulus in the post-experiment test had been seen more than once before. All included face and scene stimuli (both novel images and images from the experiment) were randomly ordered, and participants were asked to rate their confidence of recognition of each image as follows: 1, definitely did not see the image during the course of the experiment; 2, think that the image was not seen during the experiment; 3, think that the image was seen during the course of the experiment; 4, definitely saw the image during the experiment. An incidental long-term memory recognition index for each stimulus was calculated by subtracting that participant’s rating of novel stimuli from the rating of the stimulus.
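The recognition index can be sketched as follows. This assumes that "the rating of novel stimuli" refers to the participant's mean rating across novel images, which the text does not state explicitly; the function name and all ratings below are hypothetical.

```python
from statistics import mean


def recognition_index(rating, novel_ratings):
    """Confidence rating (1-4) of a studied image minus the participant's
    mean rating of novel images.

    Assumption: the novel-stimulus baseline is the participant's mean.
    """
    return rating - mean(novel_ratings)


# Hypothetical ratings from one participant.
novel_ratings = [1, 2, 1, 2]   # images never shown in the main experiment
relevant_faces = [3, 4, 2]     # e.g., faces encoded in the FM-O task

indices = [recognition_index(r, novel_ratings) for r in relevant_faces]
condition_mean = mean(indices)
```

A positive index means the image was rated as more familiar than the participant's baseline for unseen images, which removes individual response bias from the comparison across conditions.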

Eye Movement Control Experiment

Eye-tracking was performed on 5 participants (recruited with the same exclusionary criteria) while they performed the main experiment with identical instructions. Data were collected on an ASL EYE-TRAC6 (Applied Science Laboratories, Bedford, MA) sampled at 60 Hz. Eye-blinks were removed, and the data were high-pass filtered at 0.5 Hz in MATLAB (MathWorks, Natick, MA) using a 5th order Butterworth filter to remove drift. Across-condition time-series analysis was performed using paired t-tests with an uncorrected alpha value of 0.05. ANOVAs were calculated using a 2-way, repeated measures ANOVA, and post-hoc t-tests were performed for eye-position differences between conditions, using an alpha value of 0.05 with Tukey-Kramer correction.

Electrophysiological Recordings

Neural data was recorded at 1024 Hz through a 24-bit BioSemi ActiveTwo 64-channel Ag-AgCl active electrode EEG acquisition system in conjunction with BioSemi ActiView software (CortechSolutions, LLC). Electrode offsets were maintained between +/− 20 mV. Precise markers of stimulus presentation were acquired using a photodiode. Trials with excessive peak-to-peak deflections, amplifier clipping, or excessive high frequency (EMG) activity were excluded prior to analysis.

Electrophysiological Data Analysis

Pre-processing was conducted through the EEGLAB toolbox (Swartz Center for Computational Neuroscience, UCSD) for MATLAB. Off-line, the raw EEG data were high-pass filtered (0.5 Hz), referenced to an average reference, and segmented into epochs beginning 200 ms before stimulus onset and ending 800 ms after stimulus onset. Single epochs were baseline-corrected using an average from −200 to 0 ms before stimulus appearance. Eye movements and artifacts were removed through an independent component analysis by excluding components consistent with topographies for blinks and eye movements and electrooculogram time-series. Artifact-free data epochs were then split by task, filtered (0.1–30 Hz), and averaged to create stimulus-locked ERPs.
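The segmentation and baseline-correction steps can be illustrated with a minimal single-channel sketch. It is not the EEGLAB pipeline used in the study: filtering, re-referencing, and ICA are omitted, and the function names, the rounding of millisecond offsets to samples, and the toy signal are assumptions.

```python
FS_HZ = 1024          # sampling rate reported for the recordings
PRE_MS, POST_MS = 200, 800


def ms_to_samples(ms, fs=FS_HZ):
    """Convert a duration in ms to the nearest whole number of samples."""
    return round(ms * fs / 1000)


def extract_epoch(signal, onset_idx, fs=FS_HZ):
    """Cut -200..800 ms around a stimulus onset and subtract the mean of
    the pre-stimulus interval (baseline correction)."""
    pre = ms_to_samples(PRE_MS, fs)
    post = ms_to_samples(POST_MS, fs)
    start, stop = onset_idx - pre, onset_idx + post
    if start < 0 or stop > len(signal):
        raise ValueError("epoch extends beyond the recording")
    epoch = signal[start:stop]
    baseline = sum(epoch[:pre]) / pre
    return [v - baseline for v in epoch]


# Toy channel: a 5 uV offset, stepping up by 3 uV at stimulus onset.
signal = [5.0] * 1000 + [8.0] * 1000
epoch = extract_epoch(signal, onset_idx=1000)
```

After correction the pre-stimulus samples sit at zero, so post-stimulus voltages are expressed relative to the baseline rather than to any slow offset in the recording.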

Localizer Task

An independent functional localizer task was used to define electrodes of interest (EOIs) for each participant (Liu, Harris, & Kanwisher, 2002). The localizer task consisted of a 1-back design in which participants attended to 7 blocks of 20 faces and 7 blocks of 20 scenes. Participants were instructed to attend to the stimuli and to indicate when each 1-back match occurred by pressing a button with both forefingers. Face and scene blocks were randomly inter-mixed. Face and scene trials were then segmented separately and averaged. Epochs to repeated stimuli were not included in the average in order to prevent motor contamination in the ERP. The P100 component was identified at lateral posterior electrodes as the first positive deflection appearing between 50 and 150 ms after stimulus onset. The N170 component was identified at posterior sites as the maximal negative peak between 120 and 220 ms after stimulus onset. As revealed in previous studies, we found a significant preference for faces at both 100 ms (Liu et al., 2002; Herrmann et al., 2005), and 170 ms after stimulus onset (Bentin, Allison, Puce, Perez, & McCarthy, 1996; Herrmann, Ehlis, Ellgring, & Fallgatter, 2005; Liu, Harris, & Kanwisher, 2002) in components for all posterior-lateral electrodes, such that they revealed significantly larger amplitudes for faces vs. scenes (electrodes P10, PO8, P8, O2, P9, PO7, P7, O1; all p-values<0.02). The lateral posterior electrode that showed the largest P100 and N170 amplitude difference between faces and scenes was defined as that participant’s P100 EOI and N170 EOI, respectively. EOIs included the following electrodes: P8, P10, PO4, PO8, O2, P7, P9, PO7, O1.
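The EOI selection rule described above amounts to picking, per participant and per component, the electrode with the largest face-versus-scene amplitude difference. In the sketch below, the use of the absolute difference (so that the same function serves the positive P100 and the negative N170) is an assumption, as are the function name and the amplitudes.

```python
def select_eoi(face_amp, scene_amp):
    """Return the electrode with the largest absolute face-minus-scene
    amplitude difference.

    face_amp, scene_amp: dicts mapping electrode label -> amplitude (uV).
    """
    common = sorted(set(face_amp) & set(scene_amp))
    if not common:
        raise ValueError("no electrodes in common")
    return max(common, key=lambda ch: abs(face_amp[ch] - scene_amp[ch]))


# Hypothetical localizer amplitudes for one participant.
p100_face = {"P8": 6.0, "PO8": 7.5, "O2": 5.0}
p100_scene = {"P8": 4.0, "PO8": 4.5, "O2": 4.8}
n170_face = {"P8": -6.0, "PO8": -4.0}
n170_scene = {"P8": -2.0, "PO8": -3.0}

p100_eoi = select_eoi(p100_face, p100_scene)
n170_eoi = select_eoi(n170_face, n170_scene)
```

Because the localizer data are independent of the main experiment, choosing electrodes this way does not bias the later comparison between attention conditions.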

Event Related Potentials

Epochs from each task of the main experimental task were separately segmented, baselined at −200 to 0 ms relative to stimulus onset, and then averaged. Only encoding-period segments (Stim-1, Stim-2) from correct trials were included. ERPs from each of the tasks included a mean of 116 averaged epochs per participant per task (range 80–120). The peak of the P100 ERP component for each posterolateral electrode was defined as the maximal positive voltage of the first positive deflection appearing between 50 and 150 ms after stimulus onset, while the peak of the N170 component was defined as the maximal negative voltage between 120 and 220 ms after stimulus onset. After the peak was identified for each individual, ERP amplitudes were then calculated as the area +/− 4ms from the peak latency. Across-participant ERP ANOVA and t-test statistics were calculated using amplitudes and latencies from each participant’s EOI.
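The peak and amplitude measures can be sketched as below. Two simplifications are assumed: the peak is taken as the extreme value inside the search window (rather than explicitly locating the first positive deflection), and "area" is computed by trapezoidal integration over the ±4 ms window. The function names and the synthetic waveform are illustrative.

```python
def find_peak(times_ms, volts, lo_ms, hi_ms, polarity=1):
    """Return (latency_ms, voltage) of the extreme sample in [lo_ms, hi_ms].

    polarity=+1 finds the maximal positive voltage (P100);
    polarity=-1 finds the maximal negative voltage (N170).
    """
    window = [(t, v) for t, v in zip(times_ms, volts) if lo_ms <= t <= hi_ms]
    if not window:
        raise ValueError("no samples inside the search window")
    return max(window, key=lambda tv: polarity * tv[1])


def area_around(times_ms, volts, center_ms, half_width_ms=4):
    """Trapezoidal area (uV*ms) within +/- half_width_ms of center_ms."""
    pts = [(t, v) for t, v in zip(times_ms, volts)
           if abs(t - center_ms) <= half_width_ms]
    return sum((t1 - t0) * (v0 + v1) / 2
               for (t0, v0), (t1, v1) in zip(pts, pts[1:]))


# Synthetic ERP sampled every 1 ms: positive peak at 110 ms, negative at 170 ms.
times = list(range(0, 301))
erp = [max(0, 10 - abs(t - 110)) - max(0, 8 - abs(t - 170)) for t in times]

p100 = find_peak(times, erp, 50, 150, polarity=1)
n170 = find_peak(times, erp, 120, 220, polarity=-1)
p100_area = area_around(times, erp, p100[0])
```

Integrating over a short window around the individually determined peak makes the amplitude estimate less sensitive to single-sample noise than the peak voltage alone.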

Statistical Analysis

Behavioral and ERP data were each subjected to a repeated measures, 2×2 ANOVA (with stimuli type and overlap as factors) and checked against a normal distribution using a Lilliefors test. Post-hoc two-tailed t-tests were corrected for multiple comparisons using Tukey’s honestly significant difference criterion and an alpha of 0.05. Time windows for significant divergence of face and scene localizer data were calculated using paired t-tests for each time-point. These were not corrected for multiple-comparisons under the assumption that time-dependent measures are not independent comparisons.
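The time-point-by-time-point comparison can be sketched as an uncorrected paired t-test at every sample, followed by grouping of consecutive significant samples into windows. Instead of computing p-values, the sketch compares |t| with the two-tailed critical value for alpha = 0.05 (2.101 for 18 degrees of freedom, i.e., 19 participants); the function names and toy data are assumptions.

```python
from math import copysign, inf, sqrt
from statistics import mean, stdev

T_CRIT_DF18 = 2.101  # two-tailed critical t, alpha = 0.05, df = 18


def paired_t(a, b):
    """Paired-samples t statistic for two equal-length lists."""
    diffs = [x - y for x, y in zip(a, b)]
    m, sd = mean(diffs), stdev(diffs)
    if sd == 0:
        return 0.0 if m == 0 else copysign(inf, m)
    return m / (sd / sqrt(len(diffs)))


def significant_windows(times_ms, cond_a, cond_b, t_crit=T_CRIT_DF18):
    """cond_x[i][k] is participant i's amplitude at time index k.
    Returns (start_ms, end_ms) runs where |t| exceeds t_crit."""
    windows, start = [], None
    for k, t in enumerate(times_ms):
        a = [p[k] for p in cond_a]
        b = [p[k] for p in cond_b]
        sig = abs(paired_t(a, b)) > t_crit
        if sig and start is None:
            start = t
        elif not sig and start is not None:
            windows.append((start, times_ms[k - 1]))
            start = None
    if start is not None:
        windows.append((start, times_ms[-1]))
    return windows


# Toy data: 3 participants (df = 2, critical t = 4.303), 4 time points.
times = [90, 100, 110, 120]
faces = [[1, 5, 5, 1], [-1, 6, 6, -1], [0, 7, 7, 0]]
scenes = [[0, 0, 0, 0] for _ in range(3)]
windows = significant_windows(times, faces, scenes, t_crit=4.303)
```

Because neighboring time points are strongly correlated, a run of consecutive significant samples is more informative than any single sample, which is the rationale given above for leaving these tests uncorrected.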

RESULTS

Behavioral Results

WM accuracy and response time (RT) data were subjected to separate, repeated-measures, 2×2 analyses of variance (ANOVAs) with the type of stimulus attended (face vs. scene) and overlap status (overlapped vs. non-overlapped) as factors. WM accuracy revealed a main effect of overlap (F1,18 = 55.05; p < 0.0001), such that accuracy was significantly reduced in tasks with overlapped stimuli relative to tasks with face and scene stimuli presented in isolation (FM-O = 82.7% vs. FM = 89.5%, p < 0.01; SM-O = 83.9% vs. SM = 92.9%, p < 0.01) (Fig. 2A). This WM performance reduction for the overlapping stimuli was also evident as an increased response time for overlap tasks (F1,18 = 15.09; p < 0.001; FM-O = 1096 ms vs. FM = 1055 ms, p = 0.09; SM-O = 1103 ms vs. SM = 1029 ms, p < 0.01) (Fig. 2B).

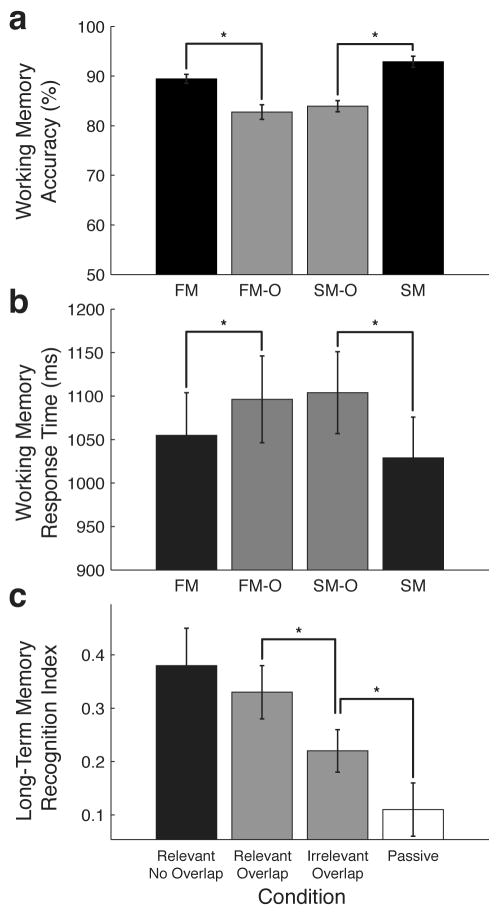

Figure 2.

Behavioral Results. a, Working Memory Accuracy. Tasks utilizing overlapped images showed significantly reduced WM recognition accuracy. b, Working Memory Response Time. Overlapped tasks showed significant increases in response time relative to the non-overlapped task counterparts. c, Long-Term Memory Recognition Index. A post-experiment recognition test revealed significantly better recognition of relevant images in the overlap tasks (faces in FM-O and scenes in SM-O) than irrelevant images from the overlap tasks (faces from SM-O and scenes from FM-O), as well as images from the passive view task (PV-O). Error bars represent standard error of the mean. Asterisks denote significant differences (p<0.05). Face memory-overlap (FM-O), Scene memory-overlap (SM-O), Face memory (FM), Scene memory (SM).

There was a main effect of stimulus for WM accuracy (F1,18 = 4.8; p < 0.05), but no interaction between stimulus and overlap (F1,18 = 1.17; p = 0.287); post-hoc comparisons revealed that accuracy was reduced for faces compared to scenes only in the non-overlapped tasks (SM = 92.9%, FM = 89.5%, p < 0.01). There was no main effect of stimulus for RT, and no interaction between stimulus and overlap for RT. Accuracy in the passive view (PV-O) task was 99.3%; reaction times to arrow direction averaged 593 ms.

Results of the surprise post-experiment recognition test revealed that participants remembered the previously seen stimuli in the long term (d′: non-overlap = 0.58 ± 0.08 SE, relevant overlap = 0.39 ± 0.08 SE, irrelevant overlap = 0.35 ± 0.06 SE). The recognition strength reported by the participants (indexed by confidence ratings 1 through 4) revealed that relevant stimuli from both non-overlapped and overlapped tasks were rated significantly higher than irrelevant stimuli from overlapped tasks (p < 0.05 and p < 0.05, respectively) and stimuli from the passive view task (p < 0.01 and p < 0.01, respectively) (Fig. 2C). These data confirm that participants were performing the experiment as instructed, such that they were selectively directing their attention to the relevant stimuli and ignoring the irrelevant stimuli.

EEG Results

P100 Component

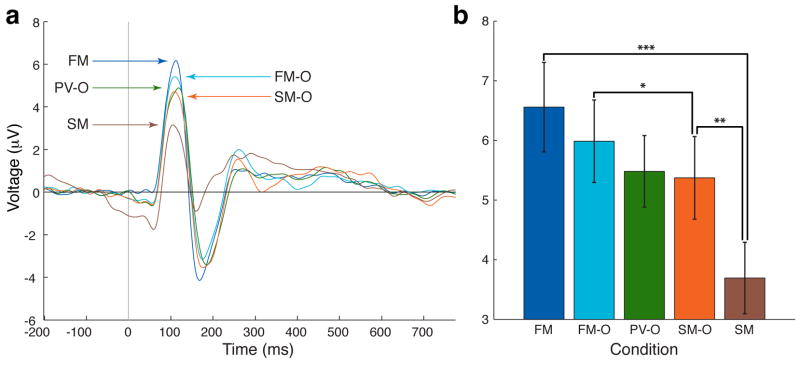

P100 peak latency and amplitude from posterior electrodes of interest (EOIs) were subjected to separate 2×2 ANOVAs with the type of stimulus attended (face vs. scene) and overlap status (overlapped vs. non-overlapped) as factors. P100 measures of peak latency were not significantly different between stimulus type or overlap (ANOVA: main effect of stimulus, F1,18 = 0.86, p = 0.34; overlap, F1,18 = 0.21, p = 0.66; mean latency across participants: FM = 110 ms, FM-O = 113 ms, PV-O = 113 ms, SM-O = 114 ms, SM = 115 ms; all p > 0.17 for all two-tailed comparisons). However, measures of P100 amplitude showed significant differences (main effect of overlap, F1,18 = 11.36, p < 0.005; main effect of stimulus type, F1,18 = 32.28, p < 0.0001; and an interaction between overlap and stimulus type, F2,18 = 16.06, p < 0.001). Post-hoc comparisons revealed that the amplitude of the P100 was significantly greater for the FM task than for the SM task (FM vs. SM, p < 0.0001; all participants exhibited greater P100 amplitude in FM vs. SM) (Fig. 3A and B), revealing a differential response in the P100 component for faces compared to scenes, as reported by others (i.e., bottom-up effect) (Herrmann, Ehlis, Ellgring, & Fallgatter, 2005; Liu, Harris, & Kanwisher, 2002). Importantly, we report that for spatially overlapped images of faces and scenes with equivalent bottom-up information, attention to one stimulus while ignoring the other resulted in significant attentional modulation at this early time point in visual processing (i.e., top-down effect) (FM-O vs. SM-O, p < 0.01; fifteen of nineteen participants exhibited greater P100 amplitude in FM-O vs. SM-O) (Fig. 3A and B). The P100 component of the FM-O task was significantly different from the SM-O task at 97–129 ms (paired, two-tailed t-tests across time-points, p < 0.05). P100 amplitude in the FM-O task was closer to that of the FM task, while the P100 amplitude in the SM-O task was closer to that of the SM task (FM vs. FM-O, p = 0.10; SM-O vs. SM, p < 0.01). Although P100 amplitude in the passive view task (PV-O) was between FM-O and SM-O, it was not significantly different from either overlap task (PV-O vs. FM-O, p = 0.11; PV-O vs. SM-O, p = 0.72).

Figure 3.

Top-Down Modulation of the P1 component. a, Grand Average waveform of P1 EOIs (n=19). b, P100 peak amplitudes (n=19). All peak amplitudes of memory tasks show significant differences across tasks (PV-O is not significantly different from FM-O or SM-O). Error bars represent standard error of the mean. Asterisks denote significant differences (single, p<0.05; double, p<0.01; triple, p<0.0001). Face memory-overlap (FM-O), Scene memory-overlap (SM-O), Passive view-overlap (PV-O), Face memory (FM), Scene memory (SM).

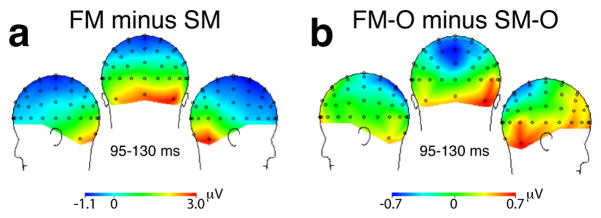

Topography maps of the P100 difference between pairs of tasks are shown in Fig. 4. The lateralized posterior topography of the non-overlapped face and scene difference (FM vs. SM: bottom-up contrast) is comparable to the overlapped face and scene difference (FM-O vs. SM-O: top-down contrast), revealing that top-down modulation occurs in approximately the same visual cortical regions that distinguish the stimuli based on bottom-up, stimulus-driven differences.

Figure 4.

Topographic ERP difference maps at 95–130 ms (P100 component). The lateralized posterior scalp topography of the non-overlapped face and scene difference (FM minus SM: bottom-up contrast) is comparable to the topography of the overlapped face and scene difference (FM-O minus SM-O: top-down contrast).

N170 Component

An ANOVA showed a significant effect of overlap and stimulus type for N170 latency (main effect of stimulus type, F1,18 = 10.93, p < 0.005; main effect of overlap, F1,18 = 21.97, p < 0.0005). Post-hoc t-tests revealed that the mean N170 latencies differed significantly between isolated faces and scenes (FM = 174 ms vs. SM = 157 ms, p < 0.01), but were not significantly different for overlapped tasks (FM-O = 184 ms vs. SM-O = 176 ms, p = 0.13). The N170 peaked significantly later in the presence of distraction (FM-O later than FM, p < 0.01; SM-O later than SM, p < 0.01). However, there was no interaction between stimulus type and overlap (F1,18 = 0.73, p = 0.40).

Analysis of N170 amplitude reveals the classic finding of face-selectivity (N170 face-selective effect, (Bentin, Allison, Puce, Perez, & McCarthy, 1996)), with an ANOVA across tasks showing a main effect of stimulus type (F1,18=13.9, p < 0.005), and post-hoc t-tests revealing a significantly more negative N170 component for isolated faces than scenes (FM vs. SM, p < 0.01). However, the N170 amplitude was not modulated by top-down attention in this experiment; that is, N170 amplitudes in the overlapped tasks were not significantly different from each other (main effect of overlap, F1,18 = 0.31, p = 0.58; FM-O vs. SM-O, p = 0.81). N170 amplitude in the PV-O task was not significantly different from the other overlap tasks (vs. FM-O, p = 0.58; vs. SM-O, p = 0.23).

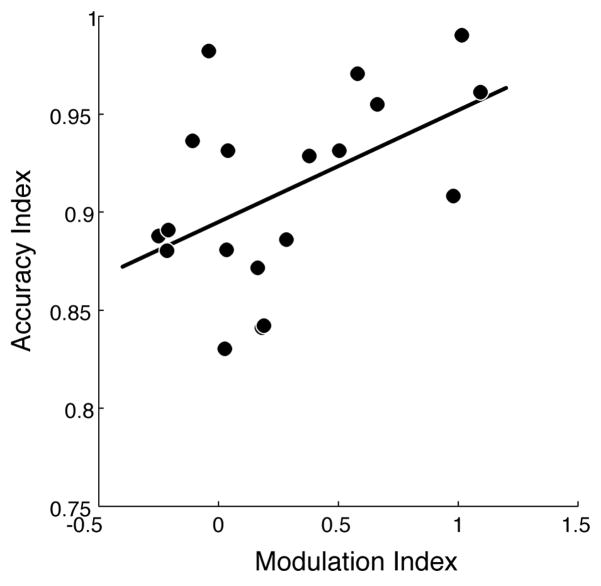

Neural-Behavioral Correlations

We report a significant across-participant correlation between the P100 Modulation Index and WM Accuracy Index (r = 0.45, p < 0.05) (Fig. 5); i.e., the degree to which a participant selectively modulates activity in the first 100 ms of encoding a stimulus is a significant predictor of their ability to accurately recognize the stimulus after a 4 second delay. We found this critical correlation by developing indices that allowed for a comparison of neural activity and behavior. First, to generate an index of top-down modulation of the P100 amplitude, we computed a P100 Modulation Index for each participant as the difference in P100 amplitude in the overlap tasks, corrected by the difference in P100 amplitude in non-overlap tasks:
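Written as a formula, and assuming that "corrected by" denotes a ratio (an assumption; the wording would also admit a subtraction), the index takes the following form, where each $A$ is the participant's P100 amplitude at their EOI in the named task:

$$
\text{P100 Modulation Index} = \frac{A_{\text{FM-O}} - A_{\text{SM-O}}}{A_{\text{FM}} - A_{\text{SM}}}
$$

Under this reading, a value near 1 would indicate that attention alone separates the overlapped conditions almost as much as the physical stimulus difference separates the isolated conditions, and a value near 0 would indicate little attentional modulation.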

Figure 5.

Neural-behavioral correlation. Measures of attentional modulation (P1 Modulation Index) significantly correlate with working memory recognition (Accuracy Index). Subjects with greater attentional modulation of P100 amplitude (~100ms post-stimulus presentation) show greater ability to subsequently remember encoded stimuli after a delay period of working memory maintenance (4 sec post-stimulus presentation), R=0.45, p<0.05.

This index allowed us to normalize for individual differences in bottom-up sensory processing. Second, to generate an index of WM recognition performance, we computed a WM Accuracy Index for each participant, comprised of the participant’s average accuracy in overlap tasks, corrected by their average accuracy in non-overlap tasks:
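As a formula, again assuming that "corrected by" denotes a ratio (an assumption), with $\text{Acc}$ denoting the participant's proportion of correct responses in the named task:

$$
\text{WM Accuracy Index} = \frac{\left(\text{Acc}_{\text{FM-O}} + \text{Acc}_{\text{SM-O}}\right)/2}{\left(\text{Acc}_{\text{FM}} + \text{Acc}_{\text{SM}}\right)/2}
$$

Under this reading, a value of 1 would mean that overlapping distractors cost the participant nothing relative to isolated stimuli, and smaller values would indicate a larger cost of distraction.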

This index allowed us to normalize for individual differences in WM abilities. There was no comparable finding of significant correlation between P100 modulation and long-term memory measures, perhaps due to sparse sampling of long-term memory measures.
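The across-participant analysis can be sketched as two per-participant indices followed by a Pearson correlation. The ratio form of both indices is an assumption about what "corrected by" means, the function names are illustrative, and the only real numbers used are the group-mean accuracies reported in the Behavioral Results; no participant-level data are reproduced here.

```python
from math import sqrt


def modulation_index(fm_o, sm_o, fm, sm):
    """Overlap P100 difference relative to the non-overlap difference.
    Assumption: 'corrected by' is read as a ratio."""
    return (fm_o - sm_o) / (fm - sm)


def accuracy_index(fm_o, sm_o, fm, sm):
    """Mean overlap accuracy relative to mean non-overlap accuracy.
    Assumption: 'corrected by' is read as a ratio."""
    return ((fm_o + sm_o) / 2) / ((fm + sm) / 2)


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Worked example using the group-mean accuracies (percent correct).
group_accuracy_index = accuracy_index(82.7, 83.9, 89.5, 92.9)
```

In an actual analysis, `pearson_r` would be applied to the list of 19 modulation indices and the list of 19 accuracy indices, one pair per participant.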

Eye movement control

To investigate the possibility that a condition-dependent shift in eye-position either before or within 100 ms after stimulus presentation may have resulted in the reported P1 effect (as opposed to selective attention), we performed an additional experiment with eye-tracking alone under identical conditions and instructions to the EEG experiment. Analysis revealed that there were no condition-specific differences in eye-position at any time-point. Furthermore, the median eye-position prior to stimulus onset (−200 ms to 0 ms) and immediately after stimulus onset (0 to 100 ms) showed no dependence on condition in the vertical or horizontal directions (2-way repeated measures ANOVA: vertical-pre, F3,4 = 1.35, p = 0.26; vertical-post, F3,4 = 2.17, p = 0.09; horizontal-pre, F3,4 = 2.08, p = 0.11; horizontal-post, F3,4 = 0.09, p = 0.96. Post-hoc t-tests: vertical-pre, FM vs. SM: p = 0.85, FM-O vs. SM-O: p = 0.59; vertical-post, FM vs. SM: p = 0.28, FM-O vs. SM-O: p = 0.49; horizontal-pre, FM vs. SM: p = 0.41, FM-O vs. SM-O: p = 0.79; horizontal-post, FM vs. SM: p = 0.99, FM-O vs. SM-O: p = 0.97). In addition, measures of WM accuracy for each participant of the eye-tracking experiment were within 2 standard deviations of the mean WM accuracy measures for participants of the main experiment.

Although this experiment cannot definitively demonstrate that eye position was not an influence on the reported P1 effect and behavioral correlation (because eye-tracking data was not obtained for the EEG sessions), these results reveal that participants do not seem to rely on a consistent and differential shift in eye gaze to perform the experiment. Furthermore, reports from participants in the EEG experiment do not suggest that a strategy of fixating their eyes at a particular location was utilized (e.g., repositioning gaze above the center of the screen prior to stimulus onset to more easily detect featural information from the faces, such as the eyes).

DISCUSSION

This study investigated top-down modulation of early visual processing and the influence of such modulation on subsequent WM recognition performance. We capitalized on the presence of well-described EEG signal differences associated with bottom-up processing of isolated face and scene stimuli (Bentin, Allison, Puce, Perez, & McCarthy, 1996; Herrmann, Ehlis, Ellgring, & Fallgatter, 2005; Liu, Harris, & Kanwisher, 2002) to explore attentional influences on sensory cortical processing in the context of interfering information (i.e., overlapped stimuli). By maintaining bottom-up, sensory information constant and manipulating task goals, we were able to isolate the influence of top-down modulation on visual processing. We found that significant modulation of visual cortical activity begins as early as 97 ms after stimulus presentation (P100 component). Importantly, we found that at this early time point the extent to which participants selectively modulate neural representations of task-relevant information, when distracted by irrelevant information, correlates with their ability to successfully recognize the relevant stimuli after a period of WM maintenance. This provides a direct correlative link between neural activity in early visual cortex during selective encoding and behavioral measures of WM performance.

Early Visual Cortex Modulation

Modulation of early ERP components has been well documented during covert spatial-based attention (Hillyard, Vogel, & Luck, 1998), and more recently in feature-based attention tasks (Schoenfeld, Hopf, Martinez, & Mai, 2007). In contrast to spatial- and feature-based attention, object-based attention involves the integration of spatial and feature aspects of an object to yield a holistic representation. In the current study, the use of spatially superimposed faces and scenes minimizes spatial-based mechanisms (Furey et al., 2006; O’Craven, Downing, & Kanwisher, 1999; Serences, Schwarzbach, Courtney, Golay, & Yantis, 2004; Yi & Chun, 2005), and the task goal of successfully recognizing the relevant object after a delay period reduces reliance solely on feature information. While the task design in the current study minimizes both spatial- and feature-based attentional mechanisms, there may still be an influence of feature and spatial information during WM encoding. For example, a shift in covert spatial attention to an anticipated location, such as that containing salient facial features, may occur during or prior to the cue period, although none of the participants reported relying solely on a consistent feature or spatial strategy. Moreover, the eye-tracking control experiment revealed that overt eye movements were not likely a confounding factor in the reported neural results.

We report significant modulation of the P100 component in a selective attention task for complex real-world objects. This finding is consistent with several previously published reports of object-based attention, but is at odds with others. Object-based studies using illusory surface paradigms have documented significant modulation of the P100 (Valdes-Sosa, Bobes, Rodriguez, & Pinilla, 1998), and even the earlier C1 component (Khoe, Mitchell, Reynolds, & Hillyard, 2005). However, some studies have found modulatory changes that begin slightly later in the time-course of visual processing, at the N170 component, ~170 ms (He, Fan, Zhou, & Chen, 2004; Martinez, Ramanathan, Foxe, Javitt, & Hillyard, 2007; Martinez et al., 2006; Pinilla, Cobo, Torres, & Valdes-Sosa, 2001); these studies utilized either the discrimination of illusory surfaces defined by transparent motion or the detection of luminance/shape changes at one end of an object.

It is important to note that unlike other EEG attention studies that did not find P100 selectivity for faces, we observed a P100 amplitude preference for faces both in the main experiment and in an independent localizer task where faces and scenes were presented in separate blocks. While the current study and at least one other study (Herrmann et al., 2005) have revealed P100 selectivity to faces, others that have used face stimuli have found the P100 to reflect more domain-general aspects of visual processing, lacking any particular specificity for faces (Linkenkaer-Hansen et al., 1998; Rossion et al., 1999; Rossion et al., 2003). Interestingly, and consistent with face selectivity as displayed by the N170, the findings in both our study and the Herrmann et al. study showed a lateralized P100 distribution. While all P100 findings likely represent early visual processing, it is possible that our results and those of studies that did not reveal P100 face selectivity may not reflect exactly the same type of processing.

Also, the current findings are in contrast to the results of a magnetoencephalography (MEG) study that utilized similar stimuli (superimposed faces and houses), but in a one-back, repetition detection task. This study showed modulation only at later time points (> 190 ms) (Furey et al., 2006). Our results may have revealed earlier modulation due to greater task demands imposed by a two-item, delayed-recognition task; it has been shown that increasing task difficulty results in enhanced activity modulation (Spitzer, Desimone, & Moran, 1988).

In a recently published study, we utilized face and scene stimuli in a similar two-item, delayed-recognition task, but instead of using simultaneously-presented overlapped stimuli, the face and scene images were presented sequentially and did not overlap (Gazzaley, Cooney, McEvoy, Knight, & D’Esposito, 2005). Interestingly, the study revealed significant N170 modulation, but not significant P100 amplitude modulation by attentional goals. However, we recently increased the number of research participants in the sequential design version of this task and revealed significant top-down modulation of the P100 amplitude for sequentially-presented relevant vs. irrelevant faces (Gazzaley et al., In Press), thus paralleling the current study findings of very early object-based modulation. Because it has been postulated that early bottom-up face processing is rapid and largely automatic (Heisz, Watter, & Shedden, 2006), it is especially significant that top-down modulation can occur at such an early phase in processing these stimuli.

Several studies have revealed that early face processing (P100 component, M100 component) is a reflection of face categorization (Liu, Harris, & Kanwisher, 2002), while later processing (N170 component) reflects the processing of configural information of faces (Goffaux, Gauthier, & Rossion, 2003; Latinus & Taylor, 2006; Liu, Harris, & Kanwisher, 2002; Rossion et al., 2000). If so, it follows that P100 modulation observed in the overlap tasks might represent early successful categorization of a face as being distinct from a scene, as based on low-level feature analysis (Latinus & Taylor, 2006). However, this raises the question as to why the N170 component was not modulated in the current study (i.e., no significant difference between FM-O and SM-O). One potential reason is based on previous findings that configural face processing requires extraction of low spatial frequency (LSF) information (Goffaux, Hault, Michel, Vuong, & Rossion, 2005). In the current study, the application of a transparency filter and an overlapped image obscures LSF information, while largely preserving high spatial frequency (HSF) information. When Goffaux and colleagues applied a filter to face stimuli that eliminated LSF and retained HSF information, face-selective N170 perceptual effects were abolished (Goffaux, Gauthier, & Rossion, 2003). It is thus possible that the bottom-up perceptual modifications to the faces introduced by our experimental design resulted in a loss of LSF information and interfered with top-down influences at this stage. In support of this notion, it has also been revealed that the projection of LSF information to the prefrontal cortex influences top-down modulation of visual cortical areas at ~180 ms (Bar et al., 2006). This may explain why the present results differ from those previously reported using the sequential version of this paradigm, i.e., when LSF information was preserved, significant top-down modulation of the N170 was present (Gazzaley, Cooney, McEvoy, Knight, & D’Esposito, 2005).

However, this explanation does not account for the fact that several previous studies in which LSF information was present have not revealed N170 modulation as a function of attention (Carmel & Bentin, 2002; Cauquil, Edmonds, & Taylor, 2000). It is possible that N170 modulation was not observed in the current study because the salience of the face stimuli was already too high to benefit from additional perceptual modulation at this stage of encoding. Indeed, it has been argued that relative to stimuli with high salience, stimuli with low salience are more likely to benefit from additional attentional modulation (Hawkins, Shafto, & Richardson, 1988).

In considering how activity modulation can occur so early in the processing of the overlapped visual stimuli (i.e., 100 ms after stimulus presentation), it is important to recognize that participants were cued to the relevant information, such that they were aware of the stimulus to be remembered prior to presentation. This aspect of the current study parallels that used in most spatial attention tasks, which also report modulation of the P100 amplitude. In other words, anticipatory gain modulation may pre-activate sensory cortical areas to enhance the efficiency of subsequent sensory processing, as described in other studies (Kastner, Pinsk, De Weerd, Desimone, & Ungerleider, 1999; Luck, Chelazzi, Hillyard, & Desimone, 1997).

Neural-behavioral correlation

It is well established that selective attention confers a behavioral performance advantage for a variety of perceptual tasks, such as visual detection (Posner, Snyder, & Davidson, 1980), discrimination (Carrasco & McElree, 2001), and categorization (Heekeren, Marrett, Bandettini, & Ungerleider, 2004). In a comparable manner, failure to selectively direct attentional resources negatively impacts memory performance (Gazzaley, Cooney, Rissman, & D’Esposito, 2005). The behavioral advantage mediated by selective attention is presumably the result of reduced interference from irrelevant information in a system with limited capacity (Hasher, Lustig, & Zacks, 2008; Vogel, McCollough, & Machizawa, 2005), likely mediated via top-down control mechanisms originating from the prefrontal cortex (for review, see Gazzaley & D’Esposito, 2007). However, only recently have direct correlations between the magnitude of visual cortex activity modulation and behavioral measures of perceptual and memory performance been established (Brewer, Zhao, Desmond, Glover, & Gabrieli, 1998; Gazzaley, Cooney, Rissman, & D’Esposito, 2005; Pessoa, Kastner, & Ungerleider, 2002; Rees, Friston, & Koch, 2000; Vogel & Machizawa, 2004).

By revealing a significant correlation between very early measures of visual cortex activity during selective stimulus encoding and subsequent WM recognition accuracy, our results contribute to a growing literature describing the relationship between visual activity modulation and behavioral performance. Specifically, the degree to which participants modulate the P100 amplitude in overlap tasks predicts their subsequent recognition accuracy. This finding suggests that robust and early modulation generates higher fidelity stimulus representations, which translates to improved maintenance of relevant information across a delay period, resulting in superior recognition ability.
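As an illustration of the kind of neural-behavioral analysis described above, the following is a minimal sketch, not the analysis pipeline used in this study. It assumes a simple modulation index (mean P100 amplitude when the face is relevant minus mean P100 amplitude when the scene is relevant, i.e., FM-O minus SM-O) and a Pearson correlation across participants. The function names, the index definition, and all numerical values are hypothetical and were invented purely for illustration.

```python
from math import sqrt


def modulation_index(p100_attend, p100_ignore):
    """Assumed index: P100 amplitude difference (microvolts) between
    the attend-face and ignore-face conditions for one participant."""
    return p100_attend - p100_ignore


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Hypothetical per-participant values (NOT data from this study).
p100_fm_o = [4.1, 3.6, 5.0, 2.9, 4.4]       # face relevant, overlap task
p100_sm_o = [3.2, 3.3, 3.7, 2.8, 3.4]       # scene relevant, overlap task
wm_accuracy = [0.88, 0.79, 0.93, 0.74, 0.90]  # recognition accuracy

indices = [modulation_index(a, b) for a, b in zip(p100_fm_o, p100_sm_o)]
r = pearson_r(indices, wm_accuracy)
print(f"P100 modulation vs. WM accuracy: r = {r:.2f}")
```

Under these assumptions, a positive r would correspond to the pattern reported here: participants who show larger P100 modulation tend to show higher recognition accuracy. A real analysis would also require a significance test and an appropriate sample size.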

Conclusion

The consistency of goal-directed activity modulation occurring so early in the processing of spatial-, feature- and object-based information suggests that domain-general mechanisms of top-down modulation target early cortical regions of the visual processing stream. The influence of such early top-down modulation of neural representations for real-world objects on WM recognition performance is consistent with a growing appreciation of the dynamic relationship between attention and WM (Awh & Jonides, 2001; de Fockert, Rees, Frith, & Lavie, 2001).

Acknowledgments

This work was supported by National Institutes of Health Grants K08-AG025221 and R01-AG030395, and the American Federation for Aging Research (AFAR). We thank Nick Planet and Derek Wu for their assistance in EEG data acquisition and pre-processing.

References

- Awh E, Jonides J. Overlapping mechanisms of attention and spatial working memory. Trends Cogn Sci. 2001;5(3):119–126. doi: 10.1016/s1364-6613(00)01593-x.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmid AM, Dale AM, et al. Top-down facilitation of visual recognition. Proc Natl Acad Sci U S A. 2006;103(2):449–454. doi: 10.1073/pnas.0507062103.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. J Cogn Neurosci. 1996;8(6):551–565. doi: 10.1162/jocn.1996.8.6.551.

- Brewer JB, Zhao Z, Desmond JE, Glover GH, Gabrieli JD. Making memories: brain activity that predicts how well visual experience will be remembered. Science. 1998;281(5380):1185. doi: 10.1126/science.281.5380.1185.

- Carmel D, Bentin S. Domain specificity versus expertise: factors influencing distinct processing of faces. Cognition. 2002. doi: 10.1016/s0010-0277(01)00162-7.

- Carrasco M, McElree B. Covert attention accelerates the rate of visual information processing. Proc Natl Acad Sci U S A. 2001;98(9):5363–5367. doi: 10.1073/pnas.081074098.

- Cauquil AS, Edmonds GE, Taylor MJ. Is the face-sensitive N170 the only ERP not affected by selective attention? Neuroreport. 2000;11(10):2167–2171. doi: 10.1097/00001756-200007140-00021.

- de Fockert JW, Rees G, Frith CD, Lavie N. The role of working memory in visual selective attention. Science. 2001;291(5509):1803–1806. doi: 10.1126/science.1056496.

- Desimone R. Neural mechanisms for visual memory and their role in attention. Proc Natl Acad Sci U S A. 1996;93(24):13494–13499. doi: 10.1073/pnas.93.24.13494.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Di Russo F, Martínez A, Sereno M, Pitzalis S, Hillyard S. Cortical sources of the early components of the visual evoked potential. Hum Brain Mapp. 2002;15(2):95–111. doi: 10.1002/hbm.10010.

- Furey ML, Tanskanen T, Beauchamp MS, Avikainen S, Uutela K, Hari R, et al. Dissociation of face-selective cortical responses by attention. Proc Natl Acad Sci U S A. 2006;103(4):1065–1070. doi: 10.1073/pnas.0510124103.

- Gazzaley A, Clapp W, Kelley J, McEvoy K, Knight RT, D’Esposito M. Age-related top-down suppression deficit in the early stages of cortical visual memory processing. Proc Natl Acad Sci U S A. (In Press). doi: 10.1073/pnas.0806074105.

- Gazzaley A, Cooney JW, McEvoy K, Knight RT, D’Esposito M. Top-down enhancement and suppression of the magnitude and speed of neural activity. J Cogn Neurosci. 2005;17(3):507–517. doi: 10.1162/0898929053279522.

- Gazzaley A, Cooney JW, Rissman J, D’Esposito M. Top-down suppression deficit underlies working memory impairment in normal aging. Nat Neurosci. 2005;8(10):1298–1300. doi: 10.1038/nn1543.

- Gazzaley A, D’Esposito M. Unifying prefrontal cortex function: executive control, neural networks and top-down modulation. In: Cummings J, Miller B, editors. The Human Frontal Lobes. 2nd ed. New York: The Guilford Press; 2007.

- Goffaux V, Gauthier I, Rossion B. Spatial scale contribution to early visual differences between face and object processing. Brain Res Cogn Brain Res. 2003. doi: 10.1016/s0926-6410(03)00056-9.

- Goffaux V, Hault B, Michel C, Vuong QC, Rossion B. The respective role of low and high spatial frequencies in supporting configural and featural processing of faces. Perception. 2005;34(1):77–86. doi: 10.1068/p5370.

- Gomez Gonzalez CM, Clark VP, Fan S, Luck SJ, Hillyard SA. Sources of attention-sensitive visual event-related potentials. Brain Topogr. 1994;7(1):41–51. doi: 10.1007/BF01184836.

- Hasher L, Lustig C, Zacks JM. Inhibitory mechanisms and the control of attention. In: Conway A, Jarrold C, Kane M, Miyake A, Towse J, editors. Variation in working memory. New York: Oxford University Press; 2008. pp. 227–249.

- Hawkins HL, Shafto MG, Richardson K. Effects of target luminance and cue validity on the latency of visual detection. Percept Psychophys. 1988;44(5):484–492. doi: 10.3758/bf03210434.

- He X, Fan S, Zhou K, Chen L. Cue validity and object-based attention. J Cogn Neurosci. 2004;16:1085–1097. doi: 10.1162/0898929041502689.

- Heekeren HR, Marrett S, Bandettini PA, Ungerleider LG. A general mechanism for perceptual decision-making in the human brain. Nature. 2004;431(7010):859–862. doi: 10.1038/nature02966.

- Heisz JJ, Watter S, Shedden JM. Progressive N170 habituation to unattended repeated faces. Vision Res. 2006. doi: 10.1016/j.visres.2005.09.028.

- Herrmann MJ, Ehlis AC, Ellgring H, Fallgatter AJ. Early stages (P100) of face perception in humans as measured with event-related potentials (ERPs). J Neural Transm. 2005;112(8):1073–1081. doi: 10.1007/s00702-004-0250-8.

- Hillyard SA, Anllo-Vento L. Event-related brain potentials in the study of visual selective attention. Proc Natl Acad Sci U S A. 1998;95(3):781–787. doi: 10.1073/pnas.95.3.781.

- Hillyard SA, Vogel EK, Luck SJ. Sensory gain control (amplification) as a mechanism of selective attention: electrophysiological and neuroimaging evidence. Philos Trans R Soc Lond B Biol Sci. 1998;353(1373):1257–1270. doi: 10.1098/rstb.1998.0281.

- Jha AP. Tracking the time-course of attentional involvement in spatial working memory: an event-related potential investigation. Brain Res Cogn Brain Res. 2002;15(1):61–69. doi: 10.1016/s0926-6410(02)00216-1.

- Kastner S, Pinsk MA, De Weerd P, Desimone R, Ungerleider LG. Increased activity in human visual cortex during directed attention in the absence of visual stimulation. Neuron. 1999;22(4):751–761. doi: 10.1016/s0896-6273(00)80734-5.

- Kastner S, Ungerleider LG. Mechanisms of visual attention in the human cortex. Annu Rev Neurosci. 2000;23:315–341. doi: 10.1146/annurev.neuro.23.1.315.

- Khoe W, Mitchell JF, Reynolds JH, Hillyard SA. Exogenous attentional selection of transparent superimposed surfaces modulates early event-related potentials. Vision Res. 2005;45(24):3004–3014. doi: 10.1016/j.visres.2005.04.021.

- Latinus M, Taylor MJ. Face processing stages: impact of difficulty and the separation of effects. Brain Res. 2006;1123(1):179–187. doi: 10.1016/j.brainres.2006.09.031.

- Liu J, Harris A, Kanwisher N. Stages of processing in face perception: an MEG study. Nat Neurosci. 2002;5(9):910–916. doi: 10.1038/nn909.

- López M, Rodríguez V, Valdés-Sosa M. Two-object attentional interference depends on attentional set. Int J Psychophysiol. 2004. doi: 10.1016/j.ijpsycho.2004.03.006.

- Luck SJ, Chelazzi L, Hillyard SA, Desimone R. Neural mechanisms of spatial selective attention in areas V1, V2, and V4 of macaque visual cortex. J Neurophysiol. 1997;77(1):24–42. doi: 10.1152/jn.1997.77.1.24.

- Martinez A, Ramanathan DS, Foxe JJ, Javitt DC, Hillyard SA. The role of spatial attention in the selection of real and illusory objects. J Neurosci. 2007;27(30):7963–7973. doi: 10.1523/JNEUROSCI.0031-07.2007.

- Martinez A, Teder-Salejarvi W, Vazquez M, Molholm S, Foxe JJ, Javitt DC, et al. Objects are highlighted by spatial attention. J Cogn Neurosci. 2006;18(2):298–310. doi: 10.1162/089892906775783642.

- O’Craven KM, Downing PE, Kanwisher N. fMRI evidence for objects as the units of attentional selection. Nature. 1999;401(6753):584–587. doi: 10.1038/44134.

- Pessoa L, Kastner S, Ungerleider LG. Attentional control of the processing of neutral and emotional stimuli. Brain Res Cogn Brain Res. 2002;15(1):31–45. doi: 10.1016/s0926-6410(02)00214-8.

- Pinilla T, Cobo A, Torres K, Valdes-Sosa M. Attentional shifts between surfaces: effects on detection and early brain potentials. Vision Res. 2001;41(13):1619–1630. doi: 10.1016/s0042-6989(01)00039-6.

- Posner MI, Snyder CR, Davidson BJ. Attention and the detection of signals. J Exp Psychol. 1980;109(2):160–174.

- Rees G, Friston K, Koch C. A direct quantitative relationship between the functional properties of human and macaque V5. Nat Neurosci. 2000;3(7):716–723. doi: 10.1038/76673.

- Rossion B, Gauthier I, Tarr MJ, Despland P, Bruyer R, Linotte S, et al. The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport. 2000;11(1):69–74. doi: 10.1097/00001756-200001170-00014.

- Schoenfeld MA, Hopf JM, Martinez A, Mai HM. Spatio-temporal analysis of feature-based attention. Cereb Cortex. 2007. doi: 10.1093/cercor/bhl154.

- Serences JT, Schwarzbach J, Courtney SM, Golay X, Yantis S. Control of object-based attention in human cortex. Cereb Cortex. 2004. doi: 10.1093/cercor/bhh095.

- Spitzer H, Desimone R, Moran J. Increased attention enhances both behavioral and neuronal performance. Science. 1988;240(4850):338–340. doi: 10.1126/science.3353728.

- Sreenivasan KK, Jha AP. Selective attention supports working memory maintenance by modulating perceptual processing of distractors. J Cogn Neurosci. 2007;19(1):32–41. doi: 10.1162/jocn.2007.19.1.32.

- Sreenivasan KK, Katz J, Jha AP. Temporal characteristics of top-down modulations during working memory maintenance: an event-related potential study of the N170 component. J Cogn Neurosci. 2007;19(11):1836–1844. doi: 10.1162/jocn.2007.19.11.1836.

- Talsma D, Mulckhuyse M, Slagter HA, Theeuwes J. Faster, more intense! The relation between electrophysiological reflections of attentional orienting, sensory gain control, and speed of responding. Brain Res. 2007;1178:92–105. doi: 10.1016/j.brainres.2007.07.099.

- Thut G, Nietzel A, Brandt SA, Pascual-Leone A. Alpha-band electroencephalographic activity over occipital cortex indexes visuospatial attention bias and predicts visual target detection. J Neurosci. 2006;26(37):9494–9502. doi: 10.1523/JNEUROSCI.0875-06.2006.

- Valdes-Sosa M, Bobes MA, Rodriguez V, Pinilla T. Switching attention without shifting the spotlight: object-based attentional modulation of brain potentials. J Cogn Neurosci. 1998;10(1):137–151. doi: 10.1162/089892998563743.

- Vogel EK, Machizawa MG. Neural activity predicts individual differences in visual working memory capacity. Nature. 2004;428(6984):748–751. doi: 10.1038/nature02447.

- Vogel EK, McCollough AW, Machizawa MG. Neural measures reveal individual differences in controlling access to working memory. Nature. 2005;438(7067):500–503. doi: 10.1038/nature04171.

- Yi DJ, Chun MM. Attentional modulation of learning-related repetition attenuation effects in human parahippocampal cortex. J Neurosci. 2005;25(14):3593–3600. doi: 10.1523/JNEUROSCI.4677-04.2005.