Abstract

Perceptual learning is the improvement in perceptual task performance with practice or training. The observation of specificity in perceptual learning has been widely associated with plasticity in early visual cortex representations. Here, we review the evidence supporting the plastic reweighting of readout from stable sensory representations, originally proposed by Dosher & Lu (1998), as an alternative explanation of perceptual learning. A task analysis that identifies the circumstances in which specificity supports representation enhancement and those in which it implies reweighting provides a framework for evaluating the literature; reweighting is broadly consistent with the behavioral results and with almost all of the physiological reports. We also consider the evidence that the primary mode of perceptual learning is augmented Hebbian learning of the reweighted associations, which has implications for the role and importance of feedback. Feedback is not necessary for perceptual learning but can improve it in some circumstances, and in some cases block feedback is also helpful; all of these effects are generally compatible with an augmented Hebbian model (Petrov, Dosher, & Lu, 2005). The two principles, perceptual learning through reweighting of evidence from stable sensory representations and through augmented Hebbian learning, provide a theoretical structure for considering issues such as task difficulty, task roving, and cuing in perceptual learning.

1. Introduction

Practice or training in perceptual tasks improves the quality of perceptual performance, often by substantial amounts. This improvement is called perceptual learning, in contrast with learning in the cognitive or motor domains. Visual perceptual learning is of increasing interest due to its presumed relationship with plasticity in adult visual cortex on the one hand and its potential implications for remediation during recovery or development of visual functions on the other. In this paper we review the evidence for an important form and mode of perceptual learning – incremental learned reweighting of sensory inputs to a decision via augmented Hebbian learning. We argue that while this may not be the only form of perceptual learning, it is certainly one important form, and may be the dominant one.

This paper focuses on a review and analysis of two main propositions:

Perceptual learning is often accomplished through incremental reweighting of early sensory inputs to task-specific response selection without altering early sensory representations.

Perceptual learning is often accomplished through the learning of incremental Hebbian associations, with and without external feedback.

These propositions are assessed for consistency with behavioral and physiological evidence in visual perceptual learning. The principles can be embodied in computational models of visual representations and Hebbian learning, and are consistent with the broad pattern of influence of feedback. This suggests a framework for understanding different perceptual learning tasks, transfer of perceptual learning, and several training phenomena.

2. Reweighting versus Representation Change and Specificity

2.1 Specificity and Implications

Performance improvements through perceptual learning can be quite specific to the trained stimuli and task. For example, improvements in perceptual judgments for stimuli of a particular orientation tend to transfer little to performance of the same task at an orthogonal orientation or in a different location (Ahissar & Hochstein, 1996, 1997; Ahissar et al., 1998; Crist, Kapadia, Westheimer, & Gilbert, 1997; Fahle & Edelman, 1993; Fiorentini & Berardi, 1980, 1981; Karni & Sagi, 1991; Poggio, Fahle, & Edelman, 1992; Schoups, Vogels, & Orban, 1995; Vogels & Orban, 1985). This specificity in the behavioral profile of perceptual learning (e.g., Ahissar & Hochstein, 1996, 1997; Crist, Li, & Gilbert, 2001; Fahle & Morgan, 1996; Liu & Weinshall, 2000; Shiu & Pashler, 1992) has led most researchers to make strong claims of plasticity in early primary visual cortex (for reviews, see Ahissar, Laiwand, Kozminsky, & Hochstein, 1998; Gilbert, 1994; Karni & Bertini, 1997). They conclude that perceptual learning involves experience-driven modification or enhancement of the representations in orientation-tuned cortical areas with relatively small receptive fields, such as V1 and V2. Although higher sensory representations (e.g., V4) almost by definition combine, or reweight, information from earlier sensory inputs, inferred representational enhancement has generally been attributed to the earliest levels of visual analysis (e.g., the lateral connections within V1), where the two hypotheses of representational enhancement and reweighting are distinct. For example, Fahle (1994) concluded about hyperacuity tasks that: “.. orientation specificity … requires that the neurons that learn [emphasis added] are orientation specific … [and] … the position specificity .. indicates that the underlying neuronal processes occur in a cortical area …[without] position invariance… These results suggest Area V1 as the most probably candidate for learning of visual hyperacuity.”

However, we suggest that in many cases the behavioral perceptual learning data are also perfectly compatible with task-specific learned re-weighting of the connections from the early visual representations to response selection areas, with little or no change in the representations themselves (Dosher & Lu, 1998, 1999; Mollon & Danilova, 1997). In the example cited above, the two orthogonal orientations, or the two distinct regions of the visual field, are likely to engage independent sensory representations at the neural level (V1, V2). But the trained changes may reside in the connections from the early representations to task-related decision units rather than in the representations themselves.

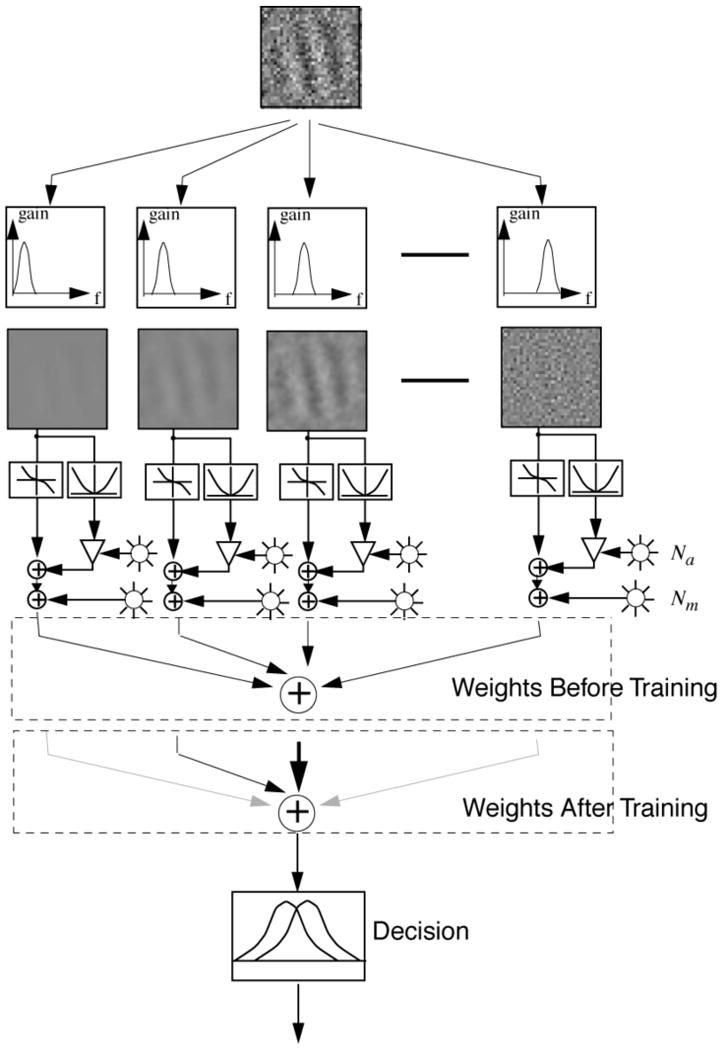

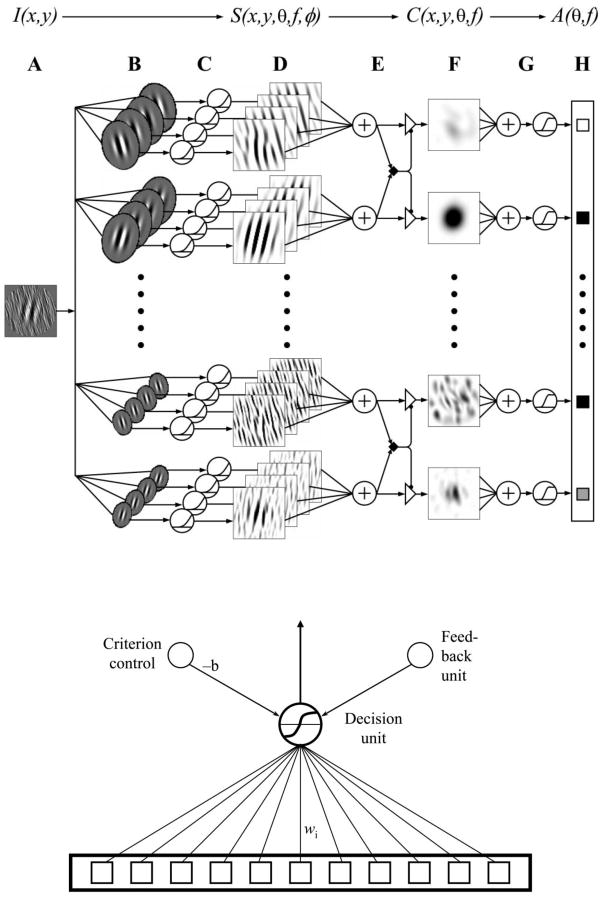

The observation that specificity of perceptual learning may reflect access of information or connections from early visual representations rather than change of the representations themselves was first made by Mollon and Danilova (1997), who remarked, “From the failure of perceptual learning to transfer when stimulus parameters are changed, it cannot necessarily be concluded that the site of learning is distal, rather the learning may be central and the specificity may lie in what is learnt.” Dosher and Lu (1998, 2000) independently claimed that perceptual learning could reflect re-weighting of input from sensory representations to a decision, in the absence of changes in the representations. The idea is that the input of certain sensory representations to a decision, for example, those with location, orientation, and spatial frequency that correspond to the trained stimulus, should be strengthened, while other irrelevant inputs are down-weighted in the decision. Figure 1 illustrates one schematic model in which early spatial frequency filters are reweighted to a decision unit (after Dosher & Lu, 1998).

Figure 1.

A multi-channel re-weighting framework for perceptual learning. Perceptual learning strengthens the connections to a decision process from relevant early visual system filters coding for orientation and spatial frequency, and weakens the connections from irrelevant ones. After Dosher & Lu (1998), Figure 2.
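
A minimal sketch of the idea in Figure 1, assuming a hypothetical bank of five orientation channels with fixed Gaussian tuning and a single linear decision unit. Only the readout weights change; `channel_responses` is never modified by learning. The channel preferences, tuning width, relevance vector, and the simple "move toward relevance" update are illustrative assumptions, not the parameters or learning rule of Dosher & Lu (1998).

```python
import math

# Hypothetical preferred orientations (deg) of a fixed bank of channels.
CHANNEL_PREFS = [-30.0, -15.0, 0.0, 15.0, 30.0]
TUNING_WIDTH = 15.0  # assumed Gaussian tuning width (deg)


def channel_responses(stim_deg):
    """Stable sensory representation: depends only on the stimulus, never on learning."""
    return [math.exp(-((stim_deg - p) ** 2) / (2 * TUNING_WIDTH ** 2))
            for p in CHANNEL_PREFS]


def decision(weights, responses):
    """Linear readout; > 0 means 'tilted right', < 0 means 'tilted left'."""
    return sum(w * r for w, r in zip(weights, responses))


def reweight(weights, relevance, rate=0.5):
    """Toy reweighting step: move each readout weight toward an assumed task
    relevance (+1 favors 'right', -1 favors 'left', 0 = irrelevant)."""
    return [w + rate * (r - w) for w, r in zip(weights, relevance)]


def margin(weights, tilt=5.0):
    """Separation of the decision variable for +tilt versus -tilt stimuli."""
    return (decision(weights, channel_responses(tilt))
            - decision(weights, channel_responses(-tilt)))


weights = [0.2] * len(CHANNEL_PREFS)      # untrained: uniform, uninformative readout
relevance = [-1.0, -1.0, 0.0, 1.0, 1.0]   # assumed task relevance of each channel
before = margin(weights)
for _ in range(10):
    weights = reweight(weights, relevance)
after = margin(weights)
print(f"margin before: {before:.3f}, after: {after:.3f}")
```

Because the uniform starting weights read both tilts identically, the margin starts at zero; after reweighting, the same unchanged channel responses support a clear discrimination.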

So perceptual learning that is specific to basic stimulus features such as retinal location, orientation, or spatial frequency may logically be accomplished through the enhancement of early sensory representations, through selective re-weighting of connections from the early sensory representations to specific responses, or through both. What kinds of experiments can discriminate change in early sensory representations from reweighting as explanations of specificity in perceptual learning? Based on an analysis of the kinds of tasks typically used to evaluate specificity, we suggest that many behavioral studies of perceptual learning are non-diagnostic and are consistent with either explanation.

2.2 Task Classification for Perceptual Learning

A task analysis, first introduced in Petrov, Dosher, and Lu (2005) (see Figure 2), provides a framework for evaluating the diagnostic value of experimental designs for discriminating reweighting and representational enhancement in perceptual learning. Primarily designed for paradigms that collect behavioral data from an initial training task and a subsequent transfer task, the analysis might be extended to interleaved training paradigms. The two forms of plasticity – reweighting versus representational change – may make similar predictions for performance in two tasks (e.g., a “training” task and a “transfer” task) in many cases. A systematic task analysis suggests more diagnostic tests for the level of perceptual learning. The basic idea is that to document representational change, it is necessary to test task pairs that use the same or closely related representations but distinct tasks, where pure reweighting is likely to show task independence. See Table 1 for a summary of the classification of key examples in the literature.

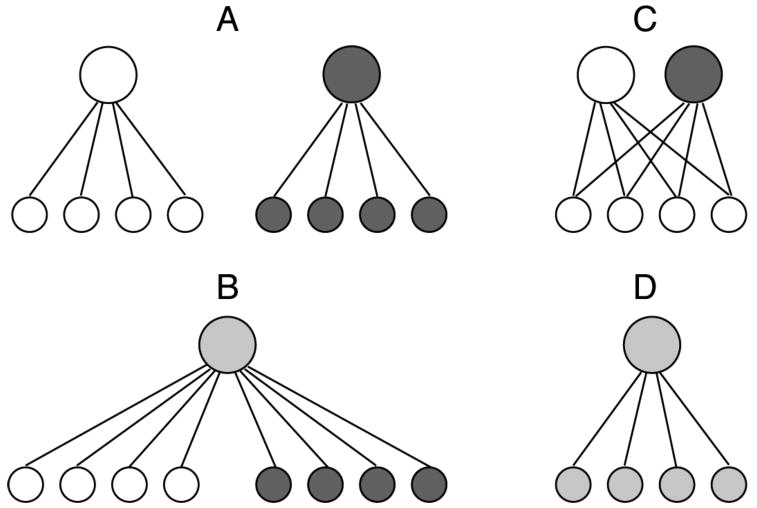

Figure 2.

Task analysis for perceptual learning, specificity, and reweighting versus representational enhancement. (A) distinct representation and response structures; (B) distinct representations but shared task structures; (C) shared representations, but independent task structures; and (D) shared representations and shared task structures. Only scenarios C and D provide an opportunity to distinguish reweighting and representation change. Modified from Petrov, Dosher, & Lu (2005), Figure 1.

Table 1.

Sample Task Classifications from the Literature

| Study | Tasks | Scenario | Conclusion | Notes |
|---|---|---|---|---|
| Matthews, Liu, Geesaman, & Qian, 1995 | Line orientation and 2-dot motion direction | A (or B) | Either | Little transfer between tasks |
| Dosher & Lu, 2007 | Foveal Gabor orientation around two distinct diagonals | B (or A) | Either | High independence (specificity) to different base angles |
| Ahissar & Hochstein, 1996 | Visual search in texture displays of oriented lines | B (or A) | Either | Relative specificity to orientation and locations of targets |
| Crist, Kapadia, Westheimer, & Gilbert, 1997 | Peripheral 3-line bisection at different orientations or at different peripheral locations | B | Either | Specificity to orientation and to distant peripheral locations |
| Schoups, Vogels, & Orban, 1995 | Complex grating orientation discrimination | B (or C) | Either | Some significant specificity to retinal location |
| Fahle, 1997 | Curvature, orientation, and vernier discrimination tasks in the same location | C | Reweighting | No transfer among curvature, orientation, and vernier tasks on similar inputs |
| Fahle & Morgan, 1996 | Vernier and bisection dot judgments | C | Reweighting | Distinct tasks on (nearly) the same input stimuli |
| Ahissar & Hochstein, 1997 | Local and global letter shape judgments | C (or A) | Reweighting (or Either) | Distinct tasks on the same displays, but the relevant coding may be distinct |
| Wilson, 1986 | | C | | |
| Petrov, Dosher, & Lu, 2005, 2006 | Gabor orientation judgments in two different external noise contexts | D (or C) | Reweighting | Persistent switch costs suggest shared inputs and task structure |

In Scenario A (Fig. 2A), two tasks use distinct sensory representation inputs and also distinct task or response structures, and so have distinct weighting connections from the sensory inputs to task decision units. An example might combine an orientation discrimination task in one retinal location and a contrast increment task in another. Since the entire systems (representations and task decision rules) are distinct in the two tasks, perceptual learning could reflect pure representational enhancement, pure reweighting, or a combination of both. The two tasks will be learned independently, and so show strong specificity. There are few, if any, such examples of completely unrelated tasks in the literature.

In Scenario B (Fig. 2B), two distinct sets of sensory representations are used at input, although the task may be the same. An example is orientation discrimination at a location in one hemi-field and another orientation discrimination at a location in another.¹ Perceptual learning could reflect reweighting, representational enhancement, or both, since both the inputs and the connection weights to the decision unit are distinct; independent learning in the two tasks, or specificity, is a natural prediction. This is perhaps the dominant task classification in the literature, including almost all of the studies of specificity to orientation, retinal location, or motion direction (Ahissar & Hochstein, 1996, 1997; Ahissar et al., 1998; Crist, Kapadia, Westheimer, & Gilbert, 1997; Fahle & Edelman, 1993; Fiorentini & Berardi, 1980, 1981; Karni & Sagi, 1991; Poggio, Fahle, & Edelman, 1992; Schoups, Vogels, & Orban, 1995; Vogels & Orban, 1985). These behavioral studies are non-diagnostic with respect to the site of plasticity.

In Scenario C (Fig. 2C), the two tasks rely on the same (or nearly the same) sensory representations, but the tasks differ from each other, so the connections between the inputs and the decision tasks are separate. The single best example is the report of Fahle & Morgan (1996) where the two tasks, vernier and bisection judgments, use a nearly identical set of dots as input. Here, if training alters the representations then either transfer or interference is likely to occur at the switch to the second task since the (changed) representational coding is common (see sample data in Figure 3).

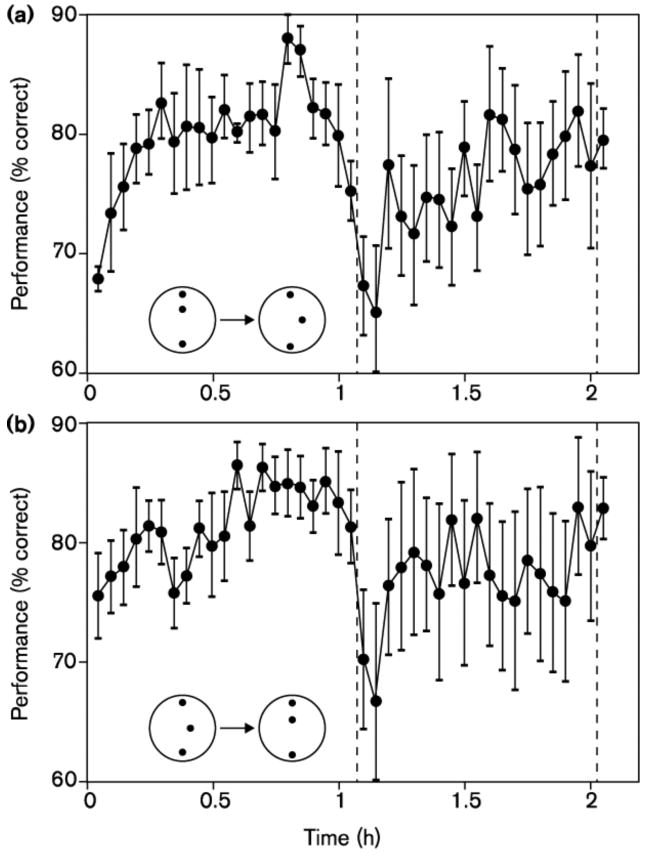

Figure 3.

Performance accuracy for training and transfer (a) from bisection to vernier and (b) from vernier to bisection tasks (from Fahle & Morgan, 1996). Contrary to predictions of representational enhancement, the tasks show near independence of perceptual learning despite using nearly the same input stimuli.

Fahle and Morgan interpret their data as showing task independence, consistent with perceptual learning through reweighting of separate sets of task connections to a decision. Another example, from Ahissar & Hochstein (1997), involves training on local and then global searches, or on global and then local searches, in a local/global letter search task; here too, learning in the two tasks is relatively independent, although it is less clear whether the same sensory inputs are used.

Finally, in Scenario D (Fig. 2D), two tasks share both the same sensory representational system and the same task, and so share the connections from the sensory representation to response. Because the two tasks are identical except for some factor such as context or the relative probability of examples, training on one task must affect the other. The question then is whether the specific pattern of interaction in the data is compatible with representation change or with re-weighting. Evaluating this generally requires a fully specified model of learning. An example of such a task and model (Petrov, Dosher, & Lu, 2005, 2006) is discussed below.
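
The logic of Figure 2 reduces to two yes/no questions about a pair of tasks. The sketch below encodes that mapping; the boolean encoding, the names `TaskPair`, `scenario`, `predictions`, and `is_diagnostic`, and the one-line predictions are our simplification of the text, and real task pairs (as the "or" entries in Table 1 show) often fall between categories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskPair:
    """A training task and a transfer task, described by what they share."""
    shared_representation: bool  # do both tasks read the same sensory units?
    shared_task_structure: bool  # do both tasks use the same readout to a decision?


def scenario(pair: TaskPair) -> str:
    """Map a task pair onto Scenarios A-D of Figure 2."""
    if pair.shared_representation:
        return "D" if pair.shared_task_structure else "C"
    return "B" if pair.shared_task_structure else "A"


def predictions(pair: TaskPair) -> dict:
    """Qualitative transfer predictions of each account, paraphrasing the text."""
    s = scenario(pair)
    if s in ("A", "B"):
        same = "independent learning (specificity)"
        return {"reweighting": same, "representation_change": same}
    if s == "C":
        return {"reweighting": "task independence",
                "representation_change": "transfer or interference"}
    same = "interaction; needs a fitted learning model to discriminate"
    return {"reweighting": same, "representation_change": same}


def is_diagnostic(pair: TaskPair) -> bool:
    """Only Scenarios C and D can separate the accounts (D only with a model)."""
    return scenario(pair) in ("C", "D")


vernier_vs_bisection = TaskPair(shared_representation=True, shared_task_structure=False)
two_hemifields = TaskPair(shared_representation=False, shared_task_structure=True)
print(scenario(vernier_vs_bisection), is_diagnostic(vernier_vs_bisection))  # C True
print(scenario(two_hemifields), is_diagnostic(two_hemifields))              # B False
```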

To summarize, in the prior literature many or most transfer tests focused on conditions in which plausibly distinct stimulus representations are tested in training and transfer conditions (e.g., motion directions around the horizontal and vertical), corresponding to Scenarios A or B. These studies commonly concluded that plasticity in the representations at the early sensory level was the mechanism of perceptual learning. In fact, specificity in these cases is also fully compatible with reweighting. Such studies document many interesting properties of perceptual learning, but they cannot distinguish between reweighting and change of the representation system. A few previously reported perceptual learning studies correspond to Scenario C or perhaps D (Ahissar & Hochstein, 1997; Fahle, 1997; Fahle & Morgan, 1996; Wilson, 1986); these used the same or quite similar stimuli in different tasks and generally exhibited little or no positive (or negative) transfer of learning from one task to a second. Fahle and Morgan (1996) concluded: “the neuronal mechanisms underlying the …. tasks are at least partially non-identical and … learning does not take place on the first common level of analysis” (Fahle, 1997). These views are compatible with our proposal that perceptual learning took place through reweighting.

Petrov, Dosher, and Lu (2005, 2006) tested a situation in which the target stimuli and the discrimination task (and hence the stimulus representations and the weights to the decision unit) are identical, while a background noise context changed between two tasks (or task environments). Practice in one external noise context improved performance in that context but produced switch costs at the transition to the other. Negative transfer between different external noise environments relying on the same target stimuli might seem to favor perceptual learning through representational modification. Instead, the complex qualitative and quantitative pattern of learning and switch costs in this non-stationary, context-varying training protocol was well accounted for by a quantitative network model with incremental reweighting (see Section 3.2).

2.3 Evidence from Physiology

Plastic changes in cortical substrates of perception due to perceptual learning seemed to some to be a logical functional analog to reported reorganization of cortical representations following lesions. Gilbert, Sigman & Crist (2001, p. 685) opined, “Though the capacity for plasticity in adult primary sensory cortices was originally demonstrated as resulting from peripheral lesions, it is reasonable to assume that it didn't evolve for this purpose, but that it is used in the normal functioning of the cerebral cortex throughout life.”

Aside from any lesion-induced changes, plastic modifications in sensory representations with extended practice were reported in auditory (Weinberger et al., 1990; Weinberger, Javid, & Lepan, 1993) and somatosensory (Jenkins, Merzenich, Ochs, Allard, & Guic-Robles, 1990; Recanzone, Merzenich, & Schreiner, 1992) cortices, as well as in some fMRI results (Schiltz et al., 1999; Schwartz, Maquet, & Frith, 2002; Vaina et al., 1998). Perhaps the most dramatic of these involved increases in the cortical regions representing practiced digits (fingers) in somatosensory tactile discrimination tasks (e.g., Recanzone et al., 1992).² Yet against this backdrop of practice-induced changes in auditory and somatosensory cortices, the evidence for practice-induced changes in visual representations is modest indeed.

Several single-unit studies in early visual areas of adult monkeys (Crist et al., 2001; Ghose, Yang, & Maunsell, 2002; Schoups, Vogels, Qian, & Orban, 2001; Yang & Maunsell, 2004) found that learning accompanied by marked improvements in visual behavioral performance did not appear to be associated with neuronal recruitment, increased cortical territory, or other major changes in early visual representations. A range of measures, including the location, size, and orientation selectivity of basic receptive fields, were largely indistinguishable in trained and untrained regions of V1 and V2. Essentially no changes in receptive field parameters were observed as a function of distance from the trained location or of preferred orientation (Ghose et al., 2002). Tuning curves of cells in visual cortex remained evenly distributed over orientation for both trained and untrained neurons (Schoups et al., 2001). Schoups et al. did, however, report small changes in the slopes of the tuning curves of neurons whose preferred orientations were slightly away from the trained orientation; yet these small changes do not provide a compelling account of the large behavioral changes. In contrast, Ghose et al. (2002) found no such changes in tuning-curve slopes in early visual cortex. Modest sharpening of tuning curves was reported in V4 (Yang & Maunsell, 2004). Other reports of task-specific tuning curves (e.g., Li, Piëch, & Gilbert, 2004) seem to reflect the selection of task-relevant stimulus elements during a particular task, possibly reflecting attention, rather than persistent cross-task changes in the tuning of cells in V1.

Law and Gold (2008) studied the effects of practice in a visual motion direction task on the sensory representations in MT and on area LIP, which is often associated with attention, reward anticipation, and decision-making. Consistent with the predictions of Dosher and Lu (1998, 1999), Law and Gold (p. 511) concluded, “…[our] results suggest that the perceptual improvements corresponded to an increasingly selective readout of highly sensitive MT neurons by a decision process, represented in LIP, that instructed the behavioral response.” Finally, other researchers (Smirnakis, Brewer, Schmid, Tolias, Schuz, Augath, Inhoffen, Wandell, & Logothetis, 2005; but see Huxlin, 2008) have called into question the ubiquity of recruitment or remapping in sensory cortex even after lesions.

In sum, these reports reveal early sensory representations that show either no changes with perceptual learning, or modest changes in the slopes of a small subset of tuning functions. We suggest these changes are not sufficient to provide a full explanation of the behavioral improvements.

2.4 Summary

Perceptual learning in the visual domain has been widely associated with long-lasting plasticity of sensory representations in early visual cortex (V1, V2) (Gilbert, Sigman, & Crist, 2001; Seitz & Watanabe, 2006; Tsodyks & Gilbert, 2004). Alteration of sensory cortices through perceptual experience has become the default explanation of perceptual learning. However, there is increasing evidence supporting the proposal (Dosher and Lu, 1998, 1999; Mollon and Danilova, 1996) that the behavioral expression of specificity in visual perceptual learning reflects reweighted decisions, or changed readout, from stationary visual sensory representations. A review of the evidence within the framework of the task analysis suggests that reweighting is a dominant mechanism. Physiological analysis using single-cell recording has documented remarkable robustness and stability of visual representations in the face of training; only a few studies identify very modest changes in tuning functions with perceptual practice that occur outside the original trained task environment.

We conclude that, in the visual domain as distinct from other sensory or sensorimotor domains, reweighting or altered readout at higher levels of the visual system is perhaps the dominant mode of perceptual learning. Indeed, model-based computations (see below) suggest that reweighting may overshadow representational change as a learning mechanism, even if some slight representational change is present.

3. The Mode of Perceptual Learning

3.1 Learning a Task in Different Contexts

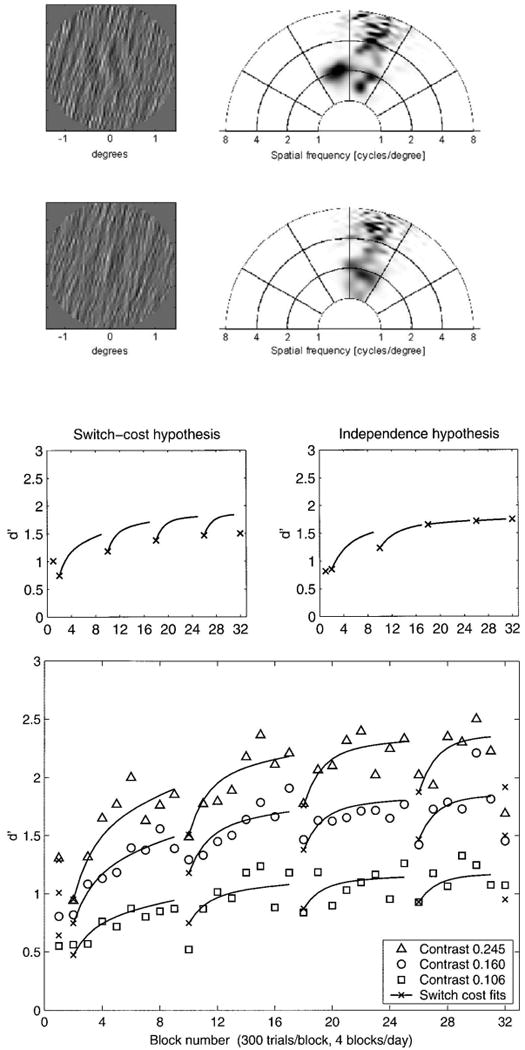

A task analysis of perceptual learning (Section 2.2) identified tasks with shared input representations (Scenario C) or with identical inputs and tasks (Scenario D) as the only diagnostic behavioral tests of the form and level of plasticity. This motivated a new test in which the target stimuli and the discrimination task are shared, while a background noise context differed between two task environments (Petrov, Dosher, & Lu, 2005). Observers discriminated the orientation of a Gabor patch embedded in oriented noise (Fig. 4, top left, shown with right-oriented external noise). Observers trained alternately in the two external noise contexts for several sessions each. Performing the same task in the two contexts requires relying on distinct evidence (different sub-regions of the stimulus representation), as seen in the plots of the spatial frequencies and orientations represented in the stimuli (Fig. 4, top right; the stimulus space for left-oriented noise is its mirror reflection). In this example, the most distinctive energy is at the shallow left tilt at 2 cpd, for the incongruent stimulus.

Figure 4.

Perceptual learning in alternating external noise contexts. Stimulus examples tilted left and right, embedded in right-oriented external noise (top left), along with the spatial-frequency/orientation energy in the stimuli (top right). Independent learning in the two context tasks predicts one pattern across alternating learning blocks, while cross-learning predicts cross-talk between contexts (center panel). Bottom: increases in behavioral discrimination accuracy (d′) in alternating noise contexts as a function of practice block and the contrast of the tilted Gabor target. The persistent pattern of switch costs is a consequence of sequentially optimizing the same learned weights. Modified from Petrov, Dosher, & Lu (2005).

Significant switch costs (interference) occurred between successive training blocks of the two noise contexts, superimposed on a general improvement in performance. This matches the switch-cost pattern of learning, not the independent-learning pattern, shown in Figure 4 (middle). A co-learning analysis in which each task is trained alternately in turn, with multiple phases of testing on each task, is essential for distinguishing between independent co-learning and competitive (push-pull) co-learning. If two tasks are learned completely independently during alternating phases, the result should be equivalent to cutting segments from two independent training curves and piecing them together. Independent learning, assuming some form of diminishing returns, gradually eliminates the “lags” (apparent switch costs) over time and training. When representations are shared, evidence for two independent training curves is consistent only with re-weighting of connections to independent higher decision structures (Scenario C). If both the representations and the connection structures are shared (Scenario D), as in this experiment, persistent switch costs could reflect representation change, re-weighting, or both, and this must be assessed with a model.
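
The "piecing together" argument can be checked numerically. The sketch assumes each noise context ("L" or "R") follows its own exponential learning curve that advances only when that context is practiced; the curve form and the parameters `d0`, `d_max`, and `rate` are illustrative assumptions, not fits to Petrov et al. (2005).

```python
import math


def learning_curve(n_blocks, d0=0.5, d_max=2.0, rate=0.4):
    """Hypothetical exponential approach to asymptote (diminishing returns)."""
    return d_max - (d_max - d0) * math.exp(-rate * n_blocks)


def independent_schedule(n_phases=6, blocks_per_phase=2):
    """Alternate contexts 'L' and 'R'; each context learns only from its own blocks."""
    practiced = {"L": 0, "R": 0}
    trace = []  # (context, d') for every block, in presentation order
    for phase in range(n_phases):
        ctx = "L" if phase % 2 == 0 else "R"
        for _ in range(blocks_per_phase):
            trace.append((ctx, learning_curve(practiced[ctx])))
            practiced[ctx] += 1
    return trace


def switch_costs(trace):
    """Apparent switch cost: last d' before a context change minus first d' after it."""
    costs = []
    for (c_prev, d_prev), (c_next, d_next) in zip(trace, trace[1:]):
        if c_prev != c_next:
            costs.append(d_prev - d_next)
    return costs


costs = switch_costs(independent_schedule())
print([round(c, 3) for c in costs])  # [0.495, -0.331, 0.222, -0.149, 0.1]
```

Because the two contexts take turns being one block ahead, the signed lag alternates, but its magnitude shrinks geometrically toward zero. Switch costs that stay large late in training therefore argue against independent learning and for shared, competitively optimized weights.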

The perceptual learning data (d′ discriminability) for three different Gabor contrasts are shown in Figure 4 (bottom), along with a model fit (Petrov et al., 2005). This is among the most complex data sets in the perceptual learning literature. Surprisingly, the complex qualitative and quantitative patterns of learning and switch-costs in this non-stationary, context-varying training protocol are well accounted for by a quantitative network model with incremental reweighting and stable representations.

3.2 A Hebbian Reweighting Model

A physiologically inspired neural network model of the complex learning dynamics in stochastic non-stationary learning environments (Petrov, Dosher, & Lu, 2005) embodies the multi-channel reweighting framework (Figure 1) outlined in Dosher and Lu (1998, 1999). The model predicts complex perceptual learning patterns such as those observed in the context-varying training experiment. It implements an early visual system representation of the input stimulus and learns to re-weight this information for a decision through augmented Hebbian learning. The multi-channel reweighting model (Petrov et al., 2005) is shown schematically in Figure 5.

Figure 5.

Schematic of the augmented Hebbian reweighting model, which consists of a stable representation system (top) and a weight structure that associates a pattern of input activity with a decision unit (bottom). The representation system computes an activity pattern over 35 units, each reflecting the output of a spatial filter centered at one of 5 spatial frequencies and 7 orientations, combined over phase, normalized, and averaged over the spatial region of the Gabor. The learning system incrementally adjusts the weights to the decision unit using Hebbian associative learning, augmented by input from an external feedback signal and a bias or criterion unit.

The representation system (Figure 5, top) computes the normalized output activities of spatial-frequency- and orientation-selective filters³ for each input stimulus. This pattern of activity over the representation units is connected to a decision unit (Figure 5, bottom) that chooses a response (here, ‘tilted right’ or ‘tilted left’). As in many simpler perceptual learning tasks, the decision space for the encoded stimuli in this task is closely approximated by a linear decision boundary. The model's success in accounting for the complex pattern of contrast effects, switch-cost effects, and congruency effects (high discrimination only for the Gabor tilted opposite to the external noise; data not shown) provides an existence proof of the qualitative and quantitative adequacy of channel reweighting, without any change in stimulus representation, as an explanation of a particularly challenging set of perceptual learning data.
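
A parametric sketch of the representation layer, assuming Gaussian tuning in orientation and in log spatial frequency, phase-invariant (energy-like) responses, and divisive normalization by the pooled energy. The 7-orientation by 5-frequency grid matches the unit count in Figure 5, but the specific orientations, frequencies, bandwidths, and normalization constant are assumptions, the sketch takes Gabor parameters rather than images as input, and it omits the external and internal noise of the full model.

```python
import math

ORIENTATIONS = [-45, -30, -15, 0, 15, 30, 45]  # deg (assumed grid)
FREQUENCIES = [1.0, 1.4, 2.0, 2.8, 4.0]        # cycles/deg (assumed grid)
ORI_BW = 20.0   # assumed orientation tuning width (deg)
SF_BW = 0.5     # assumed spatial-frequency tuning width (octaves)
SIGMA = 0.1     # assumed semi-saturation constant for normalization


def raw_energy(theta, freq, stim_theta, stim_freq, contrast):
    """Phase-invariant response of one filter to a Gabor (squared tuned amplitude)."""
    d_ori = theta - stim_theta
    d_oct = math.log2(freq / stim_freq)
    tuning = math.exp(-d_ori ** 2 / (2 * ORI_BW ** 2) - d_oct ** 2 / (2 * SF_BW ** 2))
    return (contrast * tuning) ** 2


def representation(stim_theta, stim_freq, contrast):
    """35 normalized unit activities, ordered frequency-major (5 x 7)."""
    energies = [raw_energy(t, f, stim_theta, stim_freq, contrast)
                for f in FREQUENCIES for t in ORIENTATIONS]
    pool = sum(energies) / len(energies)       # pooled activity for divisive normalization
    return [e / (SIGMA ** 2 + pool) for e in energies]


acts = representation(stim_theta=10.0, stim_freq=2.0, contrast=0.5)
peak = acts.index(max(acts))
print(len(acts), FREQUENCIES[peak // 7], ORIENTATIONS[peak % 7])  # 35 2.0 15
```

The representation has no learned state: calling `representation` twice with the same stimulus returns the same activities, which is the "stable representation" assumption that reweighting builds on.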

The impact on perceptual task accuracy of various changes in the tuning of the sensory representation system can be estimated within such a model. The two physiological studies that reported any change in the sensory representations found modest sharpening of tuning curves, a bandwidth reduction of about 30% in V1 (Schoups et al., 2001) and of 14% in V4 (Yang & Maunsell, 2004), alongside tenfold or greater improvements in psychophysical thresholds. Within the model, a 50% sharpening of tuning curves could account for at most about a 10% improvement in behavioral performance (Petrov et al., 2005), in a task that actually showed improvements of 120-150%. To the extent that these estimates are representative, reweighting of sensory outputs to a decision must account for the bulk of the observed behavioral improvements, and can account for all of them.
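
The ratio implied by these figures can be made explicit. Treating the two percentages as directly comparable (a simplifying assumption), sharpening of the simulated size would explain well under a tenth of the observed gain.

```python
# Numbers quoted in the paragraph above (percent improvement).
model_gain_from_sharpening = 10.0   # at most ~10% from a 50% tuning sharpening
observed_gain = (120.0, 150.0)      # behavioral improvement actually observed

shares = [model_gain_from_sharpening / g for g in observed_gain]
print([f"{s:.1%}" for s in shares])  # ['8.3%', '6.7%']
```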

The reweighting model shares principles with, but also differs from, the early model of hyperacuity of Poggio, Fahle, and Edelman (1992), which “… raises the possibility that when a certain perceptual task is practiced, the brain quickly synthesizes a specialized neural module that reads out responses in the primary sensory areas of the brain in a way that is optimal for this particular task. Because the responses of sensory neurons are not affected by learning and the synthesized module is not involved in any other tasks, the obtained improvement in the performance is highly specific to the task…” (Tsodyks & Gilbert, 2004, p. 776). The Poggio et al. model postulated a three-level feed-forward network in which the pattern of activity in early Gaussian position filters is remapped onto radial-basis functions (templates), which are connected by a weight structure to a decision unit. It differs from the Hebbian reweighting model in requiring a teaching signal, or feedback, whereas the Hebbian associative mechanisms of the reweighting model do not require feedback or a teaching signal to support learning in a wide range of circumstances.
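
For comparison, the structure described for Poggio, Fahle, and Edelman (1992) can be sketched as Gaussian radial-basis templates over an early input, with template-to-decision weights trained by error correction. The one-dimensional offset input, the template centers and width, and the delta-rule update are illustrative assumptions rather than the published model; the point is that every update uses the error `label - out`, so this kind of learner cannot proceed without a teaching signal.

```python
import math
import random


def rbf_layer(x, centers, width):
    """Gaussian radial-basis 'templates' over an input value (e.g., a vernier offset)."""
    return [math.exp(-((x - c) ** 2) / (2 * width ** 2)) for c in centers]


def train_supervised(samples, centers, width=1.0, rate=0.1, epochs=50, seed=0):
    """Delta-rule training of template-to-decision weights; requires labels."""
    rng = random.Random(seed)
    weights = [0.0] * len(centers)
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:                  # label is +1 or -1: the teaching signal
            h = rbf_layer(x, centers, width)
            out = sum(w * hi for w, hi in zip(weights, h))
            err = label - out                  # error correction needs the correct label
            weights = [w + rate * err * hi for w, hi in zip(weights, h)]
    return weights


def classify(x, weights, centers, width=1.0):
    """Sign of the decision unit's summed template activity."""
    h = rbf_layer(x, centers, width)
    return 1 if sum(w * hi for w, hi in zip(weights, h)) > 0 else -1


centers = [-3.0, -1.5, 0.0, 1.5, 3.0]                 # assumed template centers
offsets = [i * 0.5 for i in range(-6, 7) if i != 0]   # hypothetical offsets
samples = [(x, 1 if x > 0 else -1) for x in offsets]  # labels = teaching signal
weights = train_supervised(samples, centers)
print([classify(x, weights, centers) for x in (-2.0, -1.0, 1.0, 2.0)])  # [-1, -1, 1, 1]
```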

3.3 Hebbian Mode of Perceptual Learning and the Role of Feedback

In the multi-channel reweighting model (Petrov et al., 2005), perceptual learning is based on incremental Hebbian associations of sensory evidence with the response. An error-correcting back-propagation learning algorithm was too powerful, and its predictions were inconsistent with the perceptual learning data, especially for switch costs. In Hebbian learning, feedback is not necessary in circumstances where the responses of the untrained system are already moderately correlated with the correct response early in learning. This analysis suggests boundary conditions on the phenomenon of learning without feedback. The prediction was tested by running an identical experiment without trial-by-trial feedback (Petrov, Dosher, & Lu, 2006).
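
This boundary condition can be illustrated with a toy decision unit. To keep the sketch short and stable it substitutes Oja's normalized Hebbian rule for the bounded update and criterion control of Petrov et al. (2005), replaces the 35-unit representation with four zero-mean noisy channels (`SENS`, an assumption), and adds feedback, when present, to the decision unit's activity. All names and parameter values are hypothetical.

```python
import math
import random

# Assumed tilt sensitivity of four channels: two relevant, two irrelevant.
SENS = [0.5, -0.5, 0.0, 0.0]


def accuracy(w):
    """Expected proportion correct of sign(w . x) for x = s*SENS + unit Gaussian noise."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    if norm == 0.0:
        return 0.5  # no readout at all: chance performance
    z = sum(wi * si for wi, si in zip(w, SENS)) / norm
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def train(w0, feedback, n_trials=2000, rate=0.01, fb_gain=1.0, seed=1):
    """Hebbian readout learning with optional feedback as an extra input.

    Oja's rule (Hebbian term y*x plus a decay term that bounds |w|) stands in
    for the bounded update of the original model."""
    rng = random.Random(seed)
    w = list(w0)
    for _ in range(n_trials):
        s = rng.choice((-1, 1))                            # true tilt: -1 left, +1 right
        x = [s * si + rng.gauss(0.0, 1.0) for si in SENS]  # noisy channel activities
        y = sum(wi * xi for wi, xi in zip(w, x))           # decision-unit activity
        if feedback:
            y += fb_gain * s                               # feedback pushes y toward the answer
        w = [wi + rate * y * (xi - y * wi) for wi, xi in zip(w, x)]
    return w


w_start = [0.2, -0.05, 0.3, -0.3]                           # weak, correctly signed readout
acc_start = accuracy(w_start)                               # about 0.60
acc_no_fb = accuracy(train(w_start, feedback=False))
acc_zero_no_fb = accuracy(train([0.0] * 4, feedback=False))  # 0.5: nothing to bootstrap from
acc_zero_fb = accuracy(train([0.0] * 4, feedback=True))
print(round(acc_start, 3), round(acc_no_fb, 3), acc_zero_no_fb, round(acc_zero_fb, 3))
```

Starting from a weak but correctly signed readout, learning improves accuracy without any feedback; starting from zero weights, the unit's output is always zero, so nothing is learned unless feedback supplies a correlated signal. Without feedback the rule converges on the dominant direction of input variation, which coincides with the task-relevant direction here only because the assumed noise is isotropic, one way to see why learning without feedback has boundary conditions.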

Consistent with the prediction of the augmented Hebbian learning model, the results without feedback were quite similar to those with feedback shown in Figure 4. The two sets of learning curves are similar in all respects in discriminability d′, although there were systematic differences in bias. There was similar initial and continuing learning with and without feedback, and also a very similar pattern of recovery from switches in this non-stationary training environment. The augmented Hebbian learning model accounted for this pattern, given initial task performance sufficient to serve as correlated internal feedback. Feedback, when present, entered as an added input, and a criterion control module served as a corrective to unexpectedly large numbers of one response or the other; this latter device aided systematic learning and recovery from task switches. The model provides an excellent account of the behavior while assuming no changes in early representation. It also provides a framework for considering the literature on perceptual learning and feedback.
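
A minimal sketch of a criterion-control unit of the kind described, assuming it tracks an exponentially weighted average of recent responses and returns a bias of the opposite sign for the next trial. The update form and the parameters `rho` and `gamma` are assumptions, not the published equations.

```python
def criterion_control(responses, rho=0.05, gamma=1.0):
    """Return, for each trial, the bias to apply on the following trial.

    responses: sequence of +1 ('tilted right') / -1 ('tilted left').
    rho:   assumed update rate of the running response average.
    gamma: assumed strength of the corrective bias."""
    running = 0.0
    biases = []
    for r in responses:
        running = rho * r + (1.0 - rho) * running   # exponentially weighted average
        biases.append(-gamma * running)             # push against the over-used response
    return biases


streak = criterion_control([1] * 20)         # a run of 'right' responses
balanced = criterion_control([1, -1] * 10)   # alternating responses
print(round(streak[0], 3), round(streak[-1], 3))    # -0.05 -0.642
print(round(max(abs(b) for b in balanced), 3))      # 0.05
```

Added to the decision unit's input, such a bias makes the less frequent response more likely after a run of one response, which is the kind of correction that helps a learner recover after a switch between noise contexts.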

3.4 Feedback in Perceptual Learning

The role of feedback in perceptual learning has received considerable attention in the literature, with several major findings. Most perceptual learning experiments use trial-by-trial feedback. However, in several reports, perceptual learning occurs with block feedback (Herzog & Fahle, 1997; Shiu & Pashler, 1992). Both forms of feedback have been reported to improve learning in some perceptual tasks. In other tasks, perceptual learning occurs even in the absence of external feedback (Ball & Sekuler, 1987; Crist et al., 1997; Fahle & Edelman, 1993; Herzog & Fahle, 1997; Karni & Sagi, 1991; McKee & Westheimer, 1978; Shiu & Pashler, 1992; but see Vallabha & McClelland, 2007). The study of alternating training in distinct noise contexts (Petrov et al., 2006) joins other reports of successful learning without external feedback. Thus, although learning without feedback is possible, in some cases feedback seems necessary for perceptual learning, especially for difficult stimuli (Herzog & Fahle, 1997; Shiu & Pashler, 1992). In other cases, feedback improves the rate or extent of learning (Ball & Sekuler, 1987; Fahle & Edelman, 1993). When external feedback is introduced after asymptotic performance has been reached with no-feedback training, it may have little effect (Herzog & Fahle, 1997; McKee & Westheimer, 1978).

A few studies have examined false feedback. Perceptual learning was absent with false feedback, but performance rebounded when correct feedback was subsequently provided (Herzog & Fahle, 1997). In other situations, perceptual learning is thought to occur even for task-irrelevant stimuli (Seitz & Watanabe, 2003; Watanabe, Nanez, & Sasaki, 2001; Watanabe et al., 2002), apparently driven by feedback in an independent, primary task.

Overall, these empirical results indicate that: (1) learning can often occur, and sometimes even be optimized, without overt feedback; (2) feedback may benefit perceptual learning, particularly when task difficulty is high and initial performance is low; and (3) inaccurate feedback may damage the ability to learn. These complex results on the role of feedback present an important challenge for modeling. Analysis of perceptual learning models may be important for understanding the role of feedback and the importance of task difficulty in training effectiveness, and it has implications for designing training protocols in practical applications. These issues are considered next.

3.5 Models of Perceptual Learning

In aggregate, then, the literature suggests that perceptual learning is accomplished neither in a purely supervised mode (Hertz, Krogh, & Palmer, 1991) nor in a purely unsupervised mode (Polat & Sagi, 1994; Vaina et al., 1995; Weiss, Edelman, & Fahle, 1993). Purely supervised models would be fully reliant on external teaching signals, or explicit feedback, for successful learning, and so could not account for learning without feedback. A purely unsupervised mode of learning is incompatible with the observation that explicit feedback may, in some circumstances, improve the rate of learning. Herzog and Fahle (1997) reviewed several distinct extensions to unsupervised learning modes of perceptual learning based on the early evidence and observed, “… As a surprising conclusion we find that both supervised and unsupervised (feedforward) neural networks are unable to explain the observed phenomena and that straightforward ad hoc extensions also fail.”

However, we believe that a model based on augmented Hebbian learning offers possible explanations for the full range of these feedback phenomena in perceptual learning (Fahle & Edelman, 1993; Herzog & Fahle, 1997; Petrov, Dosher, & Lu, 2005, 2006). The augmented Hebbian reweighting model (Petrov et al., 2005, 2006) is broadly compatible with this complex empirical literature. Unsupervised Hebbian learning, with possible input from feedback, allows learning without feedback but also accounts for the positive role of feedback in some circumstances. The model also explains why feedback may even be “necessary” (at least over the time scale of a perceptual learning experiment) in some cases. For near-threshold tasks (e.g., Herzog & Fahle, 1997), where initial response accuracy is low, unsupervised Hebbian learning may need a very long time to discover the weak statistical correlations, and may never succeed. It is precisely these cases, where initial accuracy is low or is held low by adaptive staircase methods, that should benefit most from accurate feedback.
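Why low initial accuracy slows unsupervised learning can be illustrated with a toy calculation. Assume, hypothetically, a single decision unit whose input is `signal * y` plus unit Gaussian noise and whose output is a tanh; the correlation between its output and the correct response y then scales the signal-driven drift of the Hebbian weights. The stimulus model, the `feedback_weight` value, and the function names are assumptions for illustration only.

```python
import math

import numpy as np


def response_correlation(signal, feedback_weight=0.0, n_trials=100_000, seed=0):
    """Monte Carlo estimate of E[y * o] in a toy single-unit model.

    y is the correct response (+1 or -1, equally likely); the decision input is
    signal * y plus unit-variance Gaussian noise; o = tanh(input + feedback_weight * y).
    If a channel's activity is s_i * y + noise, the signal-driven part of its
    expected Hebbian change E[a_i * o] is s_i * E[y * o].
    """
    rng = np.random.default_rng(seed)
    y = rng.choice([-1.0, 1.0], size=n_trials)
    decision_input = signal * y + rng.standard_normal(n_trials)
    o = np.tanh(decision_input + feedback_weight * y)
    return float(np.mean(y * o))


def initial_accuracy(signal):
    """Proportion correct of sign(decision input) in the same toy model: Phi(signal)."""
    return 0.5 * (1.0 + math.erf(signal / math.sqrt(2.0)))


for signal in (0.1, 2.0):
    print(f"accuracy {initial_accuracy(signal):.2f}: "
          f"no feedback {response_correlation(signal):.3f}, "
          f"with feedback {response_correlation(signal, feedback_weight=2.0):.3f}")
```

Near threshold (accuracy close to 0.5), the no-feedback correlation is much smaller than at high accuracy, so Hebbian drift is slow; adding feedback as an input restores a large correlation at either level, which is the sense in which feedback should help most when initial accuracy is low.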

Similarly, the Hebbian framework explains why late introduction of feedback may fail to improve performance further (Herzog & Fahle, 1997; McKee & Westheimer, 1978). If the model has already found an optimal or near-optimal weight vector, corresponding to (near-)asymptotic performance, before external feedback becomes available, then introducing feedback at that point should matter little. The augmented Hebbian model may also explain why block feedback can have some positive effects on learning, although the mechanism is less straightforward. One role of feedback may be to promote more stringent criterion (bias) control, which block feedback can support just as well as trial-by-trial feedback. Criterion control refers to corrective inputs that arise when the recent history of responses exhibits a strong bias toward one response (Petrov et al., 2005, 2006).
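A criterion-control module of this kind can be sketched as a running average of recent responses that shifts the decision criterion against whichever response has dominated. The update rule, the `rate` and `gain` parameters, and the class name are hypothetical, not the specific mechanism of Petrov et al. (2005, 2006). Because it depends only on the response history, such a module could plausibly be engaged by block feedback as well as by trial-by-trial feedback.

```python
class CriterionControl:
    """Hypothetical criterion-control module driven by recent response history.

    The bias tracks an exponentially weighted running mean of past responses
    (+1 / -1), so a streak of one response shifts the criterion against it.
    Only the responses themselves are used, not trial-by-trial correctness.
    """

    def __init__(self, rate=0.05, gain=0.5):
        self.rate = rate  # how quickly the running mean follows responses
        self.gain = gain  # how strongly the running mean shifts the criterion
        self.response_mean = 0.0
        self.bias = 0.0

    def respond(self, decision_input):
        """Respond +1 if the decision input reaches the current criterion."""
        return 1 if decision_input - self.bias >= 0 else -1

    def update(self, response):
        """Fold the latest response into the running mean and reset the bias."""
        self.response_mean += self.rate * (response - self.response_mean)
        self.bias = self.gain * self.response_mean


cc = CriterionControl()
print(cc.respond(0.3))  # unbiased criterion: weak positive evidence gives 1
for _ in range(50):
    cc.update(+1)       # a long run of "+1" responses
print(cc.bias, cc.respond(0.3))
```

After the long run of "+1" responses, the same weak positive evidence that first produced "+1" now produces "-1".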

3.6 Summary

The varied effects of feedback on perceptual learning in the broad literature can be understood within the simple and elegant framework of an augmented Hebbian learning hypothesis, which is also compatible with the multi-channel reweighting model, in which perceptual learning optimizes a weight structure to a decision unit or module without requiring modification of the sensory representation itself. Should perceptual experience alter the tuning of sensory representations, a previously optimized weight structure would need to be further altered to compensate for, or integrate, any such sensory changes. A purely associative, or Hebbian, mode of learning predicts the possibility of unsupervised learning without feedback. Augmenting a purely associative process with inputs from feedback and criterion control allows for a positive effect of trial-by-trial feedback, and perhaps even of block feedback. False feedback would push the net response in the wrong direction, producing a misleading, counter-optimizing effect.

4. Implications for Other Perceptual Learning Phenomena

The Hebbian reweighting framework of perceptual learning has a number of implications for other phenomena of perceptual learning. One principle alluded to in Petrov et al. (2006) is the potentially important role, in learning without feedback, of initial accuracy in the early stages of training and of intermixing ‘easy’ or high-accuracy (e.g., high-contrast) trials. The model predicts the reported effect in which the introduction of a number of obvious trials ‘jumpstarts’ perceptual learning even for difficult tasks that otherwise show little learning (Rubin, Nakayama, & Shapley, 1997). The model makes predictions for a wide range of such phenomena; the details of these predictions remain to be computed and tested, although initial results are promising (Liu, Lu, & Dosher, 2008).

Another phenomenon of perceptual learning that might be understood within the multi-channel reweighting framework is the damaging effect of stimulus ‘roving’ in certain learning tasks. This phenomenon refers to the observation that randomly interleaving two kinds of stimuli in the same task can substantially reduce or eliminate perceptual learning that would otherwise occur for each stimulus individually (Yu, Klein, & Levi, 2004; Kuai, Zhang, Klein, Levi, & Yu, 2005; Otto, Herzog, Fahle, & Zhaoping, 2006). For example, little or no learning may occur in contrast discrimination tasks in which the base contrast varies randomly between different levels (e.g., Kuai et al., 2005). Similarly, interleaving two different sets of bisection stimuli with different distances between the outer line elements may produce little or no learning (Otto et al., 2006). Remarkably, however, the ability to learn may be reinstated when distinct contrast (or motion) stimuli are presented with an obvious temporal patterning, such as alternating between two base contrasts or rotating successively through four motion directions (upper right, then lower right, then lower left, then upper left) (Kuai et al., 2005).

One general way to think about these designs within the framework of a reweighting model is that the different conditions require focusing on distinct target-relevant regions of the stimulus representations. If the conditions are randomly intermixed over trials, all trials are naturally treated as the same task or decision structure, and different trials provide different and incompatible evidence for optimizing the weight structure. In contrast, if the observer can clearly mark each successive trial in a temporally patterned design as belonging to a distinct task and decision structure, then the incompatible stimulus patterns can be segregated into different learned weight structures connecting to distinct decision units (see footnote 4). In most such cases, the resulting learning would be compatible with learned reweighting, and less compatible with any significant alteration in stimulus representations.
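The incompatibility argument can be illustrated with a deliberately minimal, noise-free toy. It assumes, hypothetically, that one channel signals the correct response with opposite polarity in the two stimulus sets, that the teaching signal equals the correct response, and that weights are soft-bounded; the set names, the polarity values, and `train_roving` are invented for illustration. Trial order is fixed for reproducibility; the only difference between the two runs is whether the sets share one weight or are routed to separate ones.

```python
def soft_bounded_step(w, delta, w_min=-1.0, w_max=1.0):
    """Move w by delta, with steps shrinking as w approaches either bound."""
    return w + (w_max - w) * delta if delta >= 0 else w + (w - w_min) * delta


def train_roving(n_trials, separate, rate=0.05):
    """Noise-free toy of interleaved training on two stimulus sets, A and B.

    A single hypothetical channel signals the correct response y with polarity
    +1 in set A and -1 in set B, so the two sets call for opposite weights.
    The teaching signal is taken to be y itself (feedback-dominated).
    separate=False: one shared weight, as if all trials were one task.
    separate=True: one weight per set, as if each set were marked as its own task.
    """
    polarity = {"A": 1.0, "B": -1.0}
    weights = {"A": 0.0, "B": 0.0} if separate else {"shared": 0.0}
    for t in range(n_trials):
        stim_set = "A" if t % 2 == 0 else "B"   # fixed order, for reproducibility
        y = 1.0 if (t // 2) % 2 == 0 else -1.0  # correct response on this trial
        channel = polarity[stim_set] * y        # channel activity for this stimulus
        key = stim_set if separate else "shared"
        weights[key] = soft_bounded_step(weights[key], rate * channel * y)
    return weights


print(train_roving(400, separate=False))
print(train_roving(400, separate=True))
```

With a shared weight, successive trials pull in opposite directions and the weight hovers near zero, leaving the readout uninformative; with separate weights, each approaches its bound with the appropriate sign.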

Further computational modeling may reveal the range of phenomena that incremental reweighting and Hebbian associative learning explanations of perceptual learning can predict.

5. Conclusions

We conclude that reweighting of connections between sensory representations and a decision or response unit, rather than altered sensory representations, is a primary – and perhaps the primary – form of perceptual learning. This conclusion rests on three observations: (1) the behavioral evidence of specificity to a stimulus or task usually cited in support of representation change is consistent either with reweighting alone or with both explanations; (2) physiological evidence shows little effect of practice on sensory representations, with inconsistent reports of modest changes in the slope of tuning functions in early visual cortices that are not commensurate with the size of the observed improvements in performance; and (3) even if perceptual learning produces these small to modest changes in sensory representations, learned reweighting of the readout to the decision would be necessary to compensate for them in order to optimize performance.

Second, the evidence strongly suggests that perceptual learning is accomplished through an augmented form of incremental Hebbian learning. The learning takes feedback, when it occurs, as another input, and may also use response monitoring to control bias. This framework accounts for the basic facts about perceptual learning and feedback, including: (1) the ability to learn without feedback in a range of circumstances, (2) the fact that feedback may assist perceptual learning in some circumstances where initial performance is poor, (3) the fact that incorrect feedback may hinder or bias learning, and (4) the fact that introducing feedback has little effect if asymptotic performance has already been attained without it. Additionally, (5) a module for monitoring response bias may provide a mechanism to account for observed effects of block feedback, and may be critical for recovering performance after task or stimulus switches.

Finally, the reweighting framework, along with the proposed comprehensive task analysis, has the potential to explain the effects of easy ‘insight’ trials on learning very difficult discriminations, the interactions of feedback and attained accuracy during perceptual training, and the role of temporal patterning in releasing blocked perceptual learning in roving experiments. The Hebbian reweighting framework supports computational predictions in a range of conditions, and such applications should be further developed. In contrast, the hypothesis that perceptual learning alters sensory representations currently lacks a specific mechanism by which practice changes representations, as well as predictions about the mode of learning, the role of feedback, and when feedback is effective.

Acknowledgments

Supported by the National Eye Institute; our previous work on perceptual learning was supported by the National Institute of Mental Health and the National Science Foundation. We wish to acknowledge Alexander Petrov for prior collaboration in the development of the augmented Hebbian learning model.

Footnotes

Note that even the same task at two distinct locations might de facto be equivalent to two distinct tasks, and hence could be an example of type A.

Even in this classic example of sensory-cortical plasticity, the authors note a decoupling between the observed physiological changes and changes in behavioral discriminability.

The representation in Petrov et al. (2005) spanned 5 spatial frequencies × 7 orientations, combined over phases and relevant spatial regions.

Kuai et al. (2005) report an experiment in which a 1-sec precue of the stimulus condition was not sufficient to reinstate learning, whereas successive rotation through the stimulus types did lead to successful learning. This suggests that a 1-sec precue does not provide sufficient opportunity to set up distinct weight structures and decision units for learning. We might predict that a sufficiently long precue interval would eventually succeed in reinstating learning.

References

- Ahissar M, Hochstein S. Learning pop-out detection: Specificities to stimulus characteristics. Vision Research. 1996;36(21):3487–3500. doi: 10.1016/0042-6989(96)00036-3.

- Ahissar M, Hochstein S. Task difficulty and the specificity of perceptual learning. Nature. 1997;387(6631):401–406. doi: 10.1038/387401a0.

- Ahissar M, Laiwand R, Kozminsky G, Hochstein S. Learning pop-out detection: building representations for conflicting target-distractor relationships. Vision Research. 1998;38:3095–3107. doi: 10.1016/s0042-6989(97)00449-5.

- Ball K, Sekuler R. A specific and enduring improvement in visual motion discrimination. Science. 1982;218(4573):697–698. doi: 10.1126/science.7134968.

- Ball K, Sekuler R. Direction-specific improvement in motion discrimination. Vision Research. 1987;27(6):953–965. doi: 10.1016/0042-6989(87)90011-3.

- Ball K, Sekuler R, Machamer J. Detection and identification of moving targets. Vision Research. 1983;23(3):229–238. doi: 10.1016/0042-6989(83)90111-6.

- Bennett RG, Westheimer G. The effect of training on visual alignment discrimination and grating resolution. Perception & Psychophysics. 1991;49(6):541–546. doi: 10.3758/bf03212188.

- Crist RE, Kapadia MK, Westheimer G, Gilbert CD. Perceptual learning of spatial location: Specificity for orientation, position, and context. Journal of Neurophysiology. 1997;78:2889–2894. doi: 10.1152/jn.1997.78.6.2889.

- Crist RE, Li W, Gilbert CD. Learning to see: Experience and attention in primary visual cortex. Nature Neuroscience. 2001;4(5):519–525. doi: 10.1038/87470.

- DeValois K. Spatial frequency adaptation can enhance contrast sensitivity. Vision Research. 1977;17:1057–1065. doi: 10.1016/0042-6989(77)90010-4.

- Dorais A, Sagi D. Contrast masking effects change with practice. Vision Research. 1997;37(13):1725–1733. doi: 10.1016/s0042-6989(96)00329-x.

- Dosher B, Lu ZL. Perceptual learning reflects external noise filtering and internal noise reduction through channel reweighting. Proceedings of the National Academy of Sciences of the United States of America. 1998;95(23):13988–13993. doi: 10.1073/pnas.95.23.13988.

- Dosher B, Lu ZL. Mechanisms of perceptual learning. Vision Research. 1999;39(19):3197–3221. doi: 10.1016/s0042-6989(99)00059-0.

- Fahle M. Specificity of learning curvature, orientation, and vernier discriminations. Vision Research. 1997;37(14):1885–1895. doi: 10.1016/s0042-6989(96)00308-2.

- Fahle M. Perceptual learning: Gain without pain? Nature Neuroscience. 2002;5(10):923–924. doi: 10.1038/nn1002-923.

- Fahle M, Edelman S. Long-term learning in vernier acuity: Effects of stimulus orientation, range and of feedback. Vision Research. 1993;33(3):397–412. doi: 10.1016/0042-6989(93)90094-d.

- Fahle M, Morgan MJ. No transfer of perceptual learning between similar stimuli in the same retinal position. Current Biology. 1996;6(3):292–297. doi: 10.1016/s0960-9822(02)00479-7.

- Fendick M, Westheimer G. Effects of practice and the separation of test targets on foveal and peripheral stereoacuity. Vision Research. 1983;23(2):145–150. doi: 10.1016/0042-6989(83)90137-2.

- Fine I, Jacobs RA. Perceptual learning for a pattern discrimination task. Vision Research. 2000;40(23):3209–3230. doi: 10.1016/s0042-6989(00)00163-2.

- Fiorentini A, Berardi N. Perceptual learning specific for orientation and spatial frequency. Nature. 1980;287(5777):43–44. doi: 10.1038/287043a0.

- Fiorentini A, Berardi N. Learning in grating waveform discrimination: Specificity for orientation and spatial frequency. Vision Research. 1981;21(7):1149–1158. doi: 10.1016/0042-6989(81)90017-1.

- Gilbert CD, Sigman M, Crist RE. The neural basis of perceptual learning. Neuron. 2001;31:681–697. doi: 10.1016/s0896-6273(01)00424-x.

- Gilbert CD. Early perceptual learning. Proceedings of the National Academy of Sciences, USA. 1994;91:1195–1197. doi: 10.1073/pnas.91.4.1195.

- Ghose GM, Yang T, Maunsell JHR. Physiological correlates of perceptual learning in monkey V1 and V2. Journal of Neurophysiology. 2002;87(10):1867–1888. doi: 10.1152/jn.00690.2001.

- Gibson EJ. Principles of perceptual learning and development. New York: Appleton-Century-Crofts; 1969.

- Gold J, Bennett PJ, Sekuler AB. Signal but not noise changes with perceptual learning. Nature. 1999;402(6758):176–178. doi: 10.1038/46027.

- Green CS, Bavelier D. Action video game modifies visual selective attention. Nature. 2003;423(6939):534–537. doi: 10.1038/nature01647.

- Hertz J, Krogh A, Palmer RG. Introduction to the theory of neural computation. Redwood City, CA: Addison-Wesley; 1991.

- Herzog MH, Fahle M. The role of feedback in learning a vernier discrimination task. Vision Research. 1997;37(15):2133–2141. doi: 10.1016/s0042-6989(97)00043-6.

- Herzog MH, Fahle M. Modeling perceptual learning: difficulties and how they can be overcome. Biological Cybernetics. 1998;78:107–117. doi: 10.1007/s004220050418.

- Hurlbert A. Visual perception: Learning to see through noise. Current Biology. 2000;10:R231–233. doi: 10.1016/s0960-9822(00)00371-7.

- Huxlin KR. Perceptual plasticity in damaged adult visual system. Vision Research. 2008; in press. doi: 10.1016/j.visres.2008.05.022.

- Jenkins WM, Merzenich MM, Ochs MT, Allard T, Guic-Robles E. Functional reorganization of primary somatosensory cortex in adult owl monkeys after behaviorally controlled tactile stimulation. Journal of Neurophysiology. 1990;63:82–104. doi: 10.1152/jn.1990.63.1.82.

- Karni A, Bertini G. Learning perceptual skills: Behavioral probes into adult cortical plasticity. Current Opinion in Neurobiology. 1997;7(4):530–535. doi: 10.1016/s0959-4388(97)80033-5.

- Karni A, Sagi D. Where practice makes perfect in texture discrimination: Evidence for primary visual cortex plasticity. Proceedings of the National Academy of Sciences of the United States of America. 1991;88(11):4966–4970. doi: 10.1073/pnas.88.11.4966.

- Karni A, Sagi D. The time course of learning a visual skill. Nature. 1993;365(6443):250–252. doi: 10.1038/365250a0.

- Kuai SG, Zhang JY, Klein SA, Levi DM, Yu C. The essential role of stimulus temporal patterning in enabling perceptual learning. Nature Neuroscience. 2005;8(11):1497–1499. doi: 10.1038/nn1546.

- Law CT, Gold JI. Neural correlates of perceptual learning in a sensory-motor, but not a sensory, cortical area. Nature Neuroscience. 2008;11:505–513. doi: 10.1038/nn2070.

- Levi DM, Klein SA. Noise provides some new signals about the spatial vision of amblyopes. Journal of Neuroscience. 2003;23(7):2522–2526. doi: 10.1523/JNEUROSCI.23-07-02522.2003.

- Levi DM, Polat U, Hu YS. Improvement in Vernier acuity in adults with amblyopia. Practice makes better. Investigative Ophthalmology & Visual Science. 1997;38(8):1493–1510.

- Li RW, Levi DM. Characterizing the mechanisms of improvement for position discrimination in adult amblyopia. Journal of Vision. 2004;4(6):476–487. doi: 10.1167/4.6.7.

- Li W, Piëch V, Gilbert C. Perceptual learning and top-down influences in primary visual cortex. Nature Neuroscience. 2004;7:651–657. doi: 10.1038/nn1255.

- Liu J, Lu Z, Dosher B. Augmented Hebbian learning hypothesis in perceptual learning: Interaction between feedback and task difficulty. Vision Sciences Society Meeting Abstract Book. 2008.

- Liu JV, Ashida H, Smith AT, Wandell BA. Assessment of stimulus-induced changes in human V1 visual field maps. Journal of Neurophysiology. 2006;96:3398–3408. doi: 10.1152/jn.00556.2006.

- Liu Z, Vaina LM. Simultaneous learning of motion discrimination in two directions. Cognitive Brain Research. 1998;6(4):347–349. doi: 10.1016/s0926-6410(98)00008-1.

- Liu Z, Weinshall D. Mechanisms of generalization in perceptual learning. Vision Research. 2000;40(1):97–109. doi: 10.1016/s0042-6989(99)00140-6.

- Lu ZL, Chu W, Dosher B, Lee S. Independent perceptual learning in monocular and binocular motion systems. Proceedings of the National Academy of Sciences of the United States of America. 2005;102:5624–5629. doi: 10.1073/pnas.0501387102.

- Lu ZL, Dosher B. Perceptual learning retunes the perceptual template in foveal orientation identification. Journal of Vision. 2004;4(1):44–56. doi: 10.1167/4.1.5.

- Matthews N, Liu Z, Qian N. The effect of orientation learning on contrast sensitivity. Vision Research. 2001;41(4):463–471. doi: 10.1016/s0042-6989(00)00269-8.

- Mayer M. Practice improves adults' sensitivity to diagonals. Vision Research. 1983;23:547–550. doi: 10.1016/0042-6989(83)90130-x.

- McKee SP, Westheimer G. Improvement in vernier acuity with practice. Perception & Psychophysics. 1978;24(3):258–262. doi: 10.3758/bf03206097.

- Merzenich MM, Jenkins WM, Johnston P, Schreiner C, Miller SL, Tallal P. Temporal processing deficits of language-learning impaired children ameliorated by training. Science. 1996;271(5245):77–81. doi: 10.1126/science.271.5245.77.

- Mollon JD, Danilova MV. Three remarks on perceptual learning. Spatial Vision. 1996;10(1):51–58. doi: 10.1163/156856896x00051.

- Otto TU, Herzog MH, Fahle M, Zhaoping L. Perceptual learning with spatial uncertainties. Bisection task with spatial uncertainty limited learning. Vision Research. 2006;46:3223–3233. doi: 10.1016/j.visres.2006.03.021.

- Petrov A, Dosher B, Lu ZL. Perceptual learning through incremental channel reweighting. Psychological Review. 2005;112(4):715–743. doi: 10.1037/0033-295X.112.4.715.

- Petrov AP, Dosher B, Lu ZL. Comparable perceptual learning with and without feedback in non-stationary contexts: Data and model. Vision Research. 2006;46:3177–3197. doi: 10.1016/j.visres.2006.03.022.

- Poggio T, Fahle M, Edelman S. Fast perceptual learning in visual hyperacuity. Science. 1992;256(5059):1018–1021. doi: 10.1126/science.1589770.

- Polat U, Ma-Naim T, Belkin M, Sagi D. Improving vision in adult amblyopia by perceptual learning. Proceedings of the National Academy of Sciences, USA. 2004;101(17):6692–6697. doi: 10.1073/pnas.0401200101.

- Polat U, Sagi D. Spatial interactions in human vision: from near to far via experience-dependent cascades of connections. Proceedings of the National Academy of Sciences, USA. 1994;91(4):1206–1209. doi: 10.1073/pnas.91.4.1206.

- Ramachandran VS, Braddick O. Orientation-specific learning in stereopsis. Perception. 1973;2(3):371–376. doi: 10.1068/p020371.

- Recanzone GH, Merzenich MM, Schreiner CE. Changes in the distributed temporal response properties of SI cortical neurons reflect improvements in performance on a temporally based tactile discrimination task. Journal of Neurophysiology. 1992;67(5):1071–1091. doi: 10.1152/jn.1992.67.5.1071.

- Rubin N, Nakayama K, Shapley R. Abrupt learning and retinal size specificity in illusory-contour perception. Current Biology. 1997;7:461–467. doi: 10.1016/s0960-9822(06)00217-x.

- Saarinen J, Levi DM. Perceptual learning in vernier acuity: What is learned? Vision Research. 1995;35(4):519–527. doi: 10.1016/0042-6989(94)00141-8.

- Saarinen J, Levi DM. Integration of local features into a global shape. Vision Research. 2001;41(14):1785–1790. doi: 10.1016/s0042-6989(01)00058-x.

- Schiltz C, Bodart JM, Dejardin S, Michel C, Roucoux A, Crommelinck M, et al. Neuronal mechanisms of perceptual learning: Changes in human brain activity with training in orientation discrimination. Neuroimage. 1999;9:46–62. doi: 10.1006/nimg.1998.0394.

- Schoups A, Vogels R, Orban GA. Human perceptual learning in identifying the oblique orientation: Retinotopy, orientation specificity and monocularity. Journal of Physiology. 1995;483:797–810. doi: 10.1113/jphysiol.1995.sp020623.

- Schoups A, Vogels R, Qian N, Orban GA. Practising orientation identification improves orientation coding in V1 neurons. Nature. 2001;412(6846):549–553. doi: 10.1038/35087601.

- Schwartz S, Maquet P, Frith C. Neural correlates of perceptual learning: A functional MRI study of visual texture discrimination. Proceedings of the National Academy of Sciences, USA. 2002;99(26):17137–17142. doi: 10.1073/pnas.242414599.

- Seitz AR, Watanabe T. Is subliminal learning really passive? Nature. 2003;422(6927):36. doi: 10.1038/422036a.

- Seitz A, Watanabe T. A unified model for perceptual learning. Trends in Cognitive Sciences. 2005;9:329–334. doi: 10.1016/j.tics.2005.05.010.

- Shiu LP, Pashler H. Improvement in line orientation discrimination is retinally local but dependent on cognitive set. Perception & Psychophysics. 1992;52(5):582–588. doi: 10.3758/bf03206720.

- Smirnakis SM, Brewer AA, Schmid MC, Tolias AS, Schüz A, Augath M, Inhoffen W, Wandell BA, Logothetis NK. Lack of long-term cortical reorganization after macaque retinal lesions. Nature. 2005;435:300–307. doi: 10.1038/nature03495.

- Tsodyks M, Gilbert C. Neural networks and perceptual learning. Nature. 2004;431:775–781. doi: 10.1038/nature03013.

- Vaina LM, Belliveau JW, des Roziers EB, Zeffiro TA. Neural systems underlying learning and representation of global motion. Proceedings of the National Academy of Sciences, USA. 1998;95(21):12657–12662. doi: 10.1073/pnas.95.21.12657.

- Vaina LM, Sundareswaran V, Harris JG. Learning to ignore: Psychophysics and computational modeling of fast learning of direction in noisy motion stimuli. Cognitive Brain Research. 1995;2(3):155–163. doi: 10.1016/0926-6410(95)90004-7.

- Vogels R, Orban GA. The effect of practice on the oblique effect in line orientation judgments. Vision Research. 1985;25(11):1679–1687. doi: 10.1016/0042-6989(85)90140-3.

- Watanabe T, Nanez JE, Sasaki Y. Perceptual learning without perception. Nature. 2001;413(6858):844–848. doi: 10.1038/35101601.

- Watanabe T, Nanez JE Sr, Koyama S, Mukai I, Liederman J, Sasaki Y. Greater plasticity in lower-level than higher-level visual motion processing in a passive perceptual learning task. Nature Neuroscience. 2002;5(10):1003–1009. doi: 10.1038/nn915.

- Weinberger NM, Ashe JH, Metherate R, McKenna TM, Diamond DM, Bakin J. Retuning auditory cortex by learning: a preliminary model of receptive field plasticity. Concepts in Neuroscience. 1990;1:91–131.

- Weinberger NM, Javid R, Lepan B. Long-term retention of learning-induced receptive field plasticity in the auditory cortex. Proceedings of the National Academy of Sciences, USA. 1993;90:2394–2398. doi: 10.1073/pnas.90.6.2394.

- Weiss Y, Edelman S, Fahle M. Models of perceptual learning in vernier hyperacuity. Neural Computation. 1993;5(5):695–718.

- Yang T, Maunsell JHR. The effect of perceptual learning on neuronal responses in monkey visual area V4. Journal of Neuroscience. 2004;24(7):1617–1626. doi: 10.1523/JNEUROSCI.4442-03.2004.

- Yu C, Klein S, Levi D. Perceptual learning in contrast discrimination and the (minimal) role of context. Journal of Vision. 2004;4:169–182. doi: 10.1167/4.3.4.