Abstract

Error propagation on the Euclidean motion group arises in a number of areas such as in dead reckoning errors in mobile robot navigation and joint errors that accumulate from the base to the distal end of kinematic chains such as manipulators and biological macromolecules. We address error propagation in rigid-body poses in a coordinate-free way. In this paper we show how errors propagated by convolution on the Euclidean motion group, SE(3), can be approximated to second order using the theory of Lie algebras and Lie groups. We then show how errors that are small (but not so small that linearization is valid) can be propagated by a recursive formula derived here. This formula takes into account errors to second-order, whereas prior efforts only considered the first-order case. Our formulation is nonparametric in the sense that it will work for probability density functions of any form (not only Gaussians). Numerical tests demonstrate the accuracy of this second-order theory in the context of a manipulator arm and a flexible needle with bevel tip.

Keywords: Recursive error propagation, Euclidean group, spatial uncertainty

1 Introduction

Error propagation on the Euclidean motion group arises in a surprising number of different areas. For example, consider a robotic manipulator for which each joint angle has some backlash. If we describe this backlash as a distribution of possible angles around the nominal one, how will these joint errors add up to produce pose errors at the end effector? Similar problems arise in the study of chainlike biological macromolecules that undergo thermal fluctuations in solution. See, for example, [Zhou and Chirikjian 2006] and [Kim and Chirikjian 2005]. As another example, consider a nonholonomic mobile robot that executes an open loop trajectory. Uncertainties in pose will add up along the path, and if many trials are performed, what will the distribution of terminal poses be? Many such problems in ‘probabilistic robotics’ can be imagined with the recent popularity of SLAM [Thrun, Burgard and Fox 2005].

If the errors are small, Jacobian-based methods or first-order error propagation theories can be used. But what if the errors are very large? Here we address the propagation of large errors in rigid-body poses in a coordinate-free way. In this paper we show how errors propagated by convolution on the Euclidean motion group, SE(3), can be approximated to second order using the theory of Lie algebras and Lie groups. We then show how errors of moderate size (but not so small that linearization is valid) can be propagated by a recursive formula derived here. This formula takes into account errors to second order, whereas prior efforts only considered the first-order case. Our formulation is nonparametric in the sense that it will work for probability density functions of any form (not only Gaussians).

In the remainder of this section we review the literature on error propagation, and review the terminology and notation used throughout the paper. In what follows, bold lower case letters denote vectors. N and n are positive integers. G denotes either of the groups SO(3) or SE(3). All upper case letters (Roman or Greek), except for N and G, denote matrices. Lower case letters denote scalars and group elements. A lower case letter followed by parentheses denotes a scalar-valued function.

In Section 2, important definitions from the basic theory of Lie groups and probability and statistics are reviewed. In Section 3, several new theorems are proved. This forms the core of our paper. In Section 4, sampling is discussed and the theory is adapted for the case when a whole pdf is not available. Then numerical tests demonstrate the accuracy of this recursive second-order propagation formula relative to baseline truth generated by brute force. In Section 5 our conclusions are presented. Three appendices provide more detailed background material that is important for understanding the definitions and proofs presented in the main body of the paper.

1.1 Literature Review

The Lie-group-theoretic notation and terminology which has now become standard vocabulary in the robotics community is presented in [Murray, Li and Sastry 1994] and [Selig 1996]. In [Chirikjian 2001] many problems in robot kinematics and motion planning were formulated as the convolution of functions on the Euclidean group. The representation and estimation of spatial uncertainty has also received attention in the robotics and vision literature. Two classic works in this area are [Smith and Cheeseman 1986] and [Su and Lee 1992]. Recent work on error propagation describes the concatenation of Gaussian random variables on groups and applies this formalism to mobile robot navigation [Smith, Drummond and Roussopoulos 2003]. In all three of these works, errors are assumed to be small enough that covariances can be propagated by the formula [Wang and Chirikjian 2006a, Wang and Chirikjian 2006b]

$$\Sigma_{1*2} = Ad(g_2^{-1})\,\Sigma_1\,Ad^T(g_2^{-1}) + \Sigma_2 \tag{1}$$

where Ad is the adjoint operator for SE(3) (see the Appendix for a review of terminology). This equation essentially says that given two ‘noisy’ frames of reference g1, g2 ∈ SE(3), each of which is a Gaussian random variable with 6 × 6 covariance matrices Σ1 and Σ2, respectively, the covariance of g1 ○ g2 will be Σ1*2. This approximation is very good when errors are very small. We extend this linearized approximation to the quadratic terms in the expansion of the matrix exponential parameterization of SE(3). The origin of (1) will become clear for the special case of small errors in our more general nonparametric derivation.
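The first-order rule can be sketched numerically as follows. This is a minimal illustration, assuming that (1) has the form Σ1*2 = Ad(g2⁻¹) Σ1 Adᵀ(g2⁻¹) + Σ2 and that six-vectors are ordered with the rotational components first. The helper names (`hat3`, `inverse`, `adjoint`, `propagate_first_order`) and all numerical values are hypothetical and chosen only for the example.

```python
import numpy as np

def hat3(w):
    """Map a 3-vector to the skew-symmetric matrix with hat3(w) @ u == np.cross(w, u)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def inverse(g):
    """Inverse of a 4x4 homogeneous transform [[R, t], [0, 1]]."""
    R, t = g[:3, :3], g[:3, 3]
    g_inv = np.eye(4)
    g_inv[:3, :3] = R.T
    g_inv[:3, 3] = -R.T @ t
    return g_inv

def adjoint(g):
    """6x6 matrix of Ad(g), acting on coordinates ordered (rotation, translation)."""
    R, t = g[:3, :3], g[:3, 3]
    Ad = np.zeros((6, 6))
    Ad[:3, :3] = R
    Ad[3:, :3] = hat3(t) @ R
    Ad[3:, 3:] = R
    return Ad

def propagate_first_order(sigma1, sigma2, g2):
    """Assumed first-order rule: Ad(g2^-1) Sigma1 Ad(g2^-1)^T + Sigma2."""
    Ad = adjoint(inverse(g2))
    return Ad @ sigma1 @ Ad.T + sigma2

# Illustrative numbers only (not taken from the paper).
c, s = np.cos(0.3), np.sin(0.3)
g2 = np.array([[c, -s, 0.0, 1.0],
               [s, c, 0.0, 2.0],
               [0.0, 0.0, 1.0, 0.5],
               [0.0, 0.0, 0.0, 1.0]])
sigma1 = np.diag([1e-4, 1e-4, 1e-4, 1e-2, 1e-2, 1e-2])
sigma2 = np.diag([2e-4, 2e-4, 2e-4, 5e-3, 5e-3, 5e-3])
sigma12 = propagate_first_order(sigma1, sigma2, g2)
```

When g2 is the identity, the rule reduces to the Euclidean sum Σ1 + Σ2, which is a quick sanity check on the implementation.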

1.2 Review of Rigid-Body Motions

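The Euclidean motion group, SE(3), is the semi-direct product of ℝ³ with the special orthogonal group, SO(3). We represent elements of SE(3) using 4 × 4 homogeneous transformation matrices

$$g = \begin{pmatrix} R & \mathbf{t} \\ \mathbf{0}^T & 1 \end{pmatrix}, \qquad R \in SO(3),\ \mathbf{t} \in \mathbb{R}^3,$$

and identify the group law with matrix multiplication. The inverse of any group element is written as

$$g^{-1} = \begin{pmatrix} R^T & -R^T\mathbf{t} \\ \mathbf{0}^T & 1 \end{pmatrix}.$$

For small translational (rotational) displacements from the identity along (about) the ith coordinate axis, the homogeneous transforms representing infinitesimal motions look like

$$g_i(\epsilon) = \exp(\epsilon \tilde{E}_i) \approx I_4 + \epsilon \tilde{E}_i,$$

where I4 is the 4 × 4 identity matrix and

$$\tilde{E}_1 = \begin{pmatrix} 0&0&0&0\\ 0&0&-1&0\\ 0&1&0&0\\ 0&0&0&0 \end{pmatrix},\quad
\tilde{E}_2 = \begin{pmatrix} 0&0&1&0\\ 0&0&0&0\\ -1&0&0&0\\ 0&0&0&0 \end{pmatrix},\quad
\tilde{E}_3 = \begin{pmatrix} 0&-1&0&0\\ 1&0&0&0\\ 0&0&0&0\\ 0&0&0&0 \end{pmatrix},$$

$$\tilde{E}_4 = \begin{pmatrix} 0&0&0&1\\ 0&0&0&0\\ 0&0&0&0\\ 0&0&0&0 \end{pmatrix},\quad
\tilde{E}_5 = \begin{pmatrix} 0&0&0&0\\ 0&0&0&1\\ 0&0&0&0\\ 0&0&0&0 \end{pmatrix},\quad
\tilde{E}_6 = \begin{pmatrix} 0&0&0&0\\ 0&0&0&0\\ 0&0&0&1\\ 0&0&0&0 \end{pmatrix}.$$

These are related to the basis elements {Ei} for so(3) (the Lie algebra corresponding to the rotation group, SO(3)) as

$$\tilde{E}_i = \begin{pmatrix} E_i & \mathbf{0} \\ \mathbf{0}^T & 0 \end{pmatrix}$$

when i = 1,2,3. Each Ẽi has a corresponding natural unit basis vector ei ∈ ℝ⁶. For example, e1 = [1, 0, 0, 0, 0, 0]T, e2 = [0, 1, 0, 0, 0, 0]T, etc.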

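Large motions are also obtained by exponentiating these matrices. For example,

$$\exp(t\tilde{E}_3) = \begin{pmatrix} \cos t & -\sin t & 0 & 0\\ \sin t & \cos t & 0 & 0\\ 0&0&1&0\\ 0&0&0&1 \end{pmatrix} \quad\text{and}\quad \exp(t\tilde{E}_6) = \begin{pmatrix} 1&0&0&0\\ 0&1&0&0\\ 0&0&1&t\\ 0&0&0&1 \end{pmatrix}.$$

More generally, it can be shown that every element in the neighborhood of the identity of a matrix Lie group G can be described with the exponential parameterization

$$g = g(x_1, x_2, \ldots, x_n) = \exp\left(\sum_{i=1}^{n} x_i E_i\right), \tag{2}$$

where {Ei} is a basis for the Lie algebra and n is the dimension of the group. For SO(3) and SE(3), n = 3 and 6, respectively, and the exponential parameterization extends over the whole group.

One defines the ‘vee’ operator, ∨, such that

$$\left(\sum_{i=1}^{n} x_i E_i\right)^{\vee} = (x_1, x_2, \ldots, x_n)^T = \mathbf{x}.$$

The vector, x ∈ ℝⁿ, can be obtained from g ∈ G from the formula

$$\mathbf{x} = (\log g)^{\vee}. \tag{3}$$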

For SO(3) and SE(3) this is defined except on a set of measure zero, which for all intents and purposes in the probability and statistics problems that we will consider means that the exponential and logarithm maps are ‘effectively’ bijective. See the Appendix for details.
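The exponential coordinates can be exercised numerically. The following is a minimal sketch, assuming six-vectors ordered with rotational components first and using truncated Taylor series for the matrix exponential and logarithm, which is adequate only for elements reasonably close to the identity. The function names `hat`, `vee`, `expm_series` and `logm_series` are hypothetical.

```python
import numpy as np

def hat(x):
    """x = (omega, v) in R^6 -> 4x4 Lie algebra element sum_i x_i E~_i."""
    w, v = x[:3], x[3:]
    X = np.zeros((4, 4))
    X[:3, :3] = [[0.0, -w[2], w[1]],
                 [w[2], 0.0, -w[0]],
                 [-w[1], w[0], 0.0]]
    X[:3, 3] = v
    return X

def vee(X):
    """Inverse of hat: read the six coordinates back out of a 4x4 algebra element."""
    return np.array([X[2, 1], X[0, 2], X[1, 0], X[0, 3], X[1, 3], X[2, 3]])

def expm_series(X, terms=40):
    """Truncated Taylor series of the matrix exponential."""
    result = np.eye(X.shape[0])
    term = np.eye(X.shape[0])
    for k in range(1, terms):
        term = term @ X / k
        result = result + term
    return result

def logm_series(g, terms=80):
    """Truncated Taylor series of the matrix logarithm about the identity."""
    n = g.shape[0]
    delta = g - np.eye(n)
    power = np.eye(n)
    result = np.zeros((n, n))
    for k in range(1, terms + 1):
        power = power @ delta
        result = result + (-1) ** (k + 1) * power / k
    return result

# Round trip x -> g = exp(hat(x)) -> x = vee(log(g)) for a modest displacement.
x = np.array([0.2, -0.1, 0.3, 0.5, -0.4, 0.1])
g = expm_series(hat(x))
x_back = vee(logm_series(g))
```

The series logarithm converges only when the rotational part of g is within roughly 60 degrees of the identity; a closed-form logarithm would be the more robust choice for larger motions.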

When integrating a function over SO(3) or SE(3), a weight w(x) is defined such that

The exact form of the weighting function is

| (4) |

This is derived for SO(3) and SE(3) in the Appendix. The weighting function is even in the sense that w(x) = w(−x).

1.3 The Baker-Campbell-Hausdorff Formula

Given any two elements of a Lie algebra, X and Y, the Lie bracket is defined as [X,Y] = XY − YX. An important relationship called the Baker-Campbell-Hausdorff formula exists between the Lie bracket and matrix exponential (see [Baker 1904, Campbell 1897, Hausdorff 1906]). Namely, the logarithm of the product of two Lie group elements written as exponentials of Lie algebra elements can be expressed as

$$\log(e^{X} e^{Y}) = Z(X, Y),$$

where

$$Z(X,Y) = X + Y + \frac{1}{2}[X,Y] + \frac{1}{12}\left([X,[X,Y]] + [Y,[Y,X]]\right) + \cdots \tag{5}$$

This expression is verified by expanding eX and eY in Taylor series of the form in (37), and then substituting the result into (38) with g = eXeY. If the ∨ operation is applied (see the Appendix for a review), (5) can be written as

$$\mathbf{z} = \mathbf{x} + \mathbf{y} + \frac{1}{2}\,ad(X)\,\mathbf{y} + \frac{1}{12}\left(ad(X)\,ad(X)\,\mathbf{y} + ad(Y)\,ad(Y)\,\mathbf{x}\right) + \cdots$$
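The truncation order of the Baker-Campbell-Hausdorff series can be checked numerically. The sketch below, with hypothetical helper names and arbitrary test vectors, compares log(eXeY) on SE(3) against the first-, second- and third-order truncations of the standard series; it assumes small arguments so that truncated Taylor series for exp and log are accurate.

```python
import numpy as np

def hat(x):
    """x = (omega, v) in R^6 -> 4x4 element of se(3)."""
    w, v = x[:3], x[3:]
    X = np.zeros((4, 4))
    X[:3, :3] = [[0.0, -w[2], w[1]],
                 [w[2], 0.0, -w[0]],
                 [-w[1], w[0], 0.0]]
    X[:3, 3] = v
    return X

def expm_series(X, terms=30):
    """Truncated Taylor series of the matrix exponential."""
    result, term = np.eye(4), np.eye(4)
    for k in range(1, terms):
        term = term @ X / k
        result = result + term
    return result

def logm_series(g, terms=60):
    """Truncated Taylor series of the matrix logarithm about the identity."""
    delta, power, result = g - np.eye(4), np.eye(4), np.zeros((4, 4))
    for k in range(1, terms + 1):
        power = power @ delta
        result = result + (-1) ** (k + 1) * power / k
    return result

def bracket(X, Y):
    """Matrix commutator [X, Y] = XY - YX."""
    return X @ Y - Y @ X

eps = 0.1  # overall size of the two displacements (arbitrary choice)
X = hat(eps * np.array([1.0, 0.0, 0.5, 0.2, 0.0, 1.0]))
Y = hat(eps * np.array([0.0, 1.0, -0.5, 1.0, 0.3, 0.0]))

Z = logm_series(expm_series(X) @ expm_series(Y))
Z1 = X + Y                                   # first order
Z2 = Z1 + 0.5 * bracket(X, Y)                # adds the single bracket
Z3 = Z2 + (bracket(X, bracket(X, Y)) + bracket(Y, bracket(Y, X))) / 12.0

err1, err2, err3 = (np.linalg.norm(Z - Zk) for Zk in (Z1, Z2, Z3))
```

Each added group of terms should reduce the residual by roughly a factor of eps, which is the behavior the second-order theory relies on.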

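1.4 Probability and Statistics in ℝⁿ: Multivariate Analysis

In ℝⁿ, a probability density function (or pdf) is defined by the conditions

$$f(\mathbf{x}) \ge 0 \quad\text{and}\quad \int_{\mathbb{R}^n} f(\mathbf{x})\,d\mathbf{x} = 1,$$

where dx = dx1dx2 … dxn is the usual Lebesgue integration measure. The mean of a pdf, f(x), is defined as

$$\boldsymbol{\mu} = \int_{\mathbb{R}^n} \mathbf{x}\,f(\mathbf{x})\,d\mathbf{x}, \quad\text{or equivalently}\quad \int_{\mathbb{R}^n} (\mathbf{x} - \boldsymbol{\mu})\,f(\mathbf{x})\,d\mathbf{x} = \mathbf{0}. \tag{6}$$

Note that μ minimizes the cost function

$$c(\mathbf{x}) = \int_{\mathbb{R}^n} \|\mathbf{x} - \mathbf{y}\|^2 f(\mathbf{y})\,d\mathbf{y}, \tag{7}$$

where ∥ · ∥ is the 2-norm in ℝⁿ.

The covariance of the same pdf about the mean is defined as

$$\Sigma = \int_{\mathbb{R}^n} (\mathbf{x} - \boldsymbol{\mu})(\mathbf{x} - \boldsymbol{\mu})^T f(\mathbf{x})\,d\mathbf{x}. \tag{8}$$

It follows that

$$\Sigma = C - \boldsymbol{\mu}\boldsymbol{\mu}^T, \qquad C = \int_{\mathbb{R}^n} \mathbf{x}\mathbf{x}^T f(\mathbf{x})\,d\mathbf{x}, \tag{9}$$

where C is the covariance about the origin and Σ is the covariance about the mean.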

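Pdfs are often used to describe distributions of errors. If these errors are concatenated, they ‘add’ by convolution:

$$(f_1 * f_2)(\mathbf{x}) = \int_{\mathbb{R}^n} f_1(\boldsymbol{\xi})\,f_2(\mathbf{x} - \boldsymbol{\xi})\,d\boldsymbol{\xi}. \tag{10}$$

The mean and covariance of convolved distributions are found as

$$\boldsymbol{\mu}_{1*2} = \boldsymbol{\mu}_1 + \boldsymbol{\mu}_2 \quad\text{and}\quad \Sigma_{1*2} = \Sigma_1 + \Sigma_2. \tag{11}$$

In other words, these quantities can be propagated without explicitly performing the convolution computation, or even knowing the full pdfs. This is independent of the parametric form of the pdf. Often one does not have access to the full pdf, but only samples from a process with an underlying pdf. In this case, the unbiased sample mean and covariance are defined as [Anderson 2005]:

$$\bar{\boldsymbol{\mu}} = \frac{1}{N}\sum_{i=1}^{N} \mathbf{x}_i \quad\text{and}\quad \bar{\Sigma} = \frac{1}{N-1}\sum_{i=1}^{N} (\mathbf{x}_i - \bar{\boldsymbol{\mu}})(\mathbf{x}_i - \bar{\boldsymbol{\mu}})^T.$$

The reason for division by N − 1 rather than N is explained in texts on multivariate analysis such as [Anderson 2005]. As the sample size becomes large, the difference between N and N − 1 becomes negligible and these sampled quantities converge to those corresponding to the underlying pdf.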
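The additivity of means and covariances under convolution can be illustrated with finite sample sets. In the sketch below (all names and numbers are hypothetical), two equally weighted point clouds stand in for the pdfs, and the set of all pairwise sums plays the role of their convolution. Covariances are computed with division by N so that the identity holds exactly; the unbiased N − 1 version is shown for comparison.

```python
import numpy as np

def mean_and_cov(samples):
    """Mean and covariance (dividing by N) of equally weighted samples, one per row."""
    mu = samples.mean(axis=0)
    centered = samples - mu
    return mu, centered.T @ centered / len(samples)

rng = np.random.default_rng(0)
# Two sample clouds in R^3; the second is deliberately non-Gaussian.
a = rng.normal(size=(40, 3)) * [0.1, 0.2, 0.3] + [1.0, 0.0, -1.0]
b = rng.uniform(-0.5, 0.5, size=(25, 3))

# All 40 * 25 pairwise sums: samples of the convolution of the two discrete pdfs.
sums = (a[:, None, :] + b[None, :, :]).reshape(-1, 3)

mu_a, cov_a = mean_and_cov(a)
mu_b, cov_b = mean_and_cov(b)
mu_sum, cov_sum = mean_and_cov(sums)

# Unbiased (N - 1) sample covariance of the first cloud, via NumPy.
unbiased_cov_a = np.cov(a, rowvar=False)
```

Here `mu_sum` equals `mu_a + mu_b` and `cov_sum` equals `cov_a + cov_b` up to rounding, regardless of the shapes of the two clouds, which is the nonparametric property that the group-theoretic results aim to generalize.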

Our main purpose in this paper is to develop equations analogous to (11) to describe the propagation of error on the motion group SE(3). In the process we will also do so for the rotation group SO(3).

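It is often convenient to use the Gaussian (or normal) distribution

$$\rho(\mathbf{x}; \boldsymbol{\mu}, \Sigma) = \frac{1}{(2\pi)^{n/2}\,|\det \Sigma|^{1/2}} \exp\left[-\frac{1}{2}(\mathbf{x} - \boldsymbol{\mu})^T \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu})\right]$$

to model errors in ℝⁿ. This parametric distribution is completely defined by its mean and covariance. We will have no need to assume that densities are Gaussian. Our results are nonparametric, and therefore more general.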

2 Definitions and Properties of Mean and Covariance on SE(3)

In this section we provide definitions of the mean and covariance of Lie-group-valued functions and illustrate some of their properties. We note in passing that a pdf that is a symmetric function, ρ(g) = ρ(g−1), always satisfies the condition

$$\int_G (\log g)^{\vee}\,\rho(g)\,dg = \mathbf{0} \tag{12}$$

for G = SO(3) or G = SE(3). This is easy to see if we let ρ0(x) = ρ(eX). Then ρ0(x) = ρ0(−x). This is an even function in the exponential coordinates, and so the odd function xρ0(x) integrates to zero over a symmetric domain of integration in the space of exponential parameters that maps to G. See the Appendix for a discussion of integration measures. In our case this domain is the ball of radius π (for SO(3)), or the Cartesian product of this ball with ℝ³, both of which are symmetric. Hence the integral in (12) vanishes. More generally, if ρ(g) is a symmetric function on G = SO(3) or G = SE(3) then for ,

| (13) |

This is because the integrand is an odd function of the components of x. For example,

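DEFINITION 1: If a unique value μ ∈ G exists for which

$$\int_G [\log(\mu^{-1} \circ g)]^{\vee}\,f(g)\,dg = \mathbf{0}, \tag{14}$$

μ will be called the mean of a pdf f(g) on G, which is a straightforward extension of (6). Furthermore, the covariance about the mean will be computed as

$$\Sigma = \int_G [\log(\mu^{-1} \circ g)]^{\vee}\,\left([\log(\mu^{-1} \circ g)]^{\vee}\right)^T f(g)\,dg. \tag{15}$$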

Note that while in the case of Euclidean space (6) and minimization of (7) both give the same value of the mean, the minimization of a functional of the form

$$c(h) = \int_G \left\| [\log(h^{-1} \circ g)]^{\vee} \right\|^2 f(g)\,dg$$

does not generally return a value hmin that is equal to μ. However, in the special case when f(g) is unimodal and very concentrated, hmin ≈ μ.

The equality (12) can be thought of as a statement of when the mean is at the identity. If ρ(g) has mean at the identity, then f(g) = ρ(a−1 ○ g) has mean at a. We will use ρ(g) to denote pdfs with mean at the identity, and f(g) to denote pdfs that can have the mean at some other group element.

THEOREM 1: If f(g) has mean μ and covariance Σ, then to second order

| (16) |

where the following shorthand is used:

| (17) |

and the matrix-valued function F1(Σ) is defined as

| (18) |

and

| (19) |

Here C is the covariance about the identity, which is defined in analogy with the concept of covariance about the origin in the context of probability and statistics in ℝⁿ.

Proof: Let f(g) = ρ(μ−1 ○ g) where ρ(g) has mean at the identity. Then

Expanding using the BCH formula (5) with μ = exp X and g = exp Y, and using the linearity of the Lie bracket, we find that since ρ(g) is a pdf with mean at the identity,

The first expression in the statement of the theorem results from the definition of the adjoint. Likewise,

Expanding out the product and eliminating terms linear in y results in the second statement of the theorem.

3 Propagation of the Mean and Covariance of PDFs on SE(3)

Let μ1, μ2 ∈ SE(3) be two precise reference frames. Then μ1 ○ μ2 is the frame resulting from stacking one relative to the other. Now suppose that each has some uncertainty. Let {hi} and {kj} be two sets of frames of reference that are distributed around the identity. Let the first have N1 elements, and the second have N2. What will the covariance of the set of N1 · N2 frames {(μ1○μ2)−1○μ1○hi○μ2○kj} (which are also distributed around the identity) look like?

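Let ρi(g) be a unimodal pdf with mean at the identity and which has a preponderance of its mass concentrated in a unit ball around the identity (where distance from the identity is measured as ∥(log g)∨∥). Then fi(g) = ρi(μi−1 ○ g) will be a distribution with the same shape centered at μi. In general, the convolution of two pdfs is defined as

$$(f_1 * f_2)(g) = \int_G f_1(h)\,f_2(h^{-1} \circ g)\,dh,$$

and in particular if we make the change of variables k = μ1−1 ○ h, then

$$(f_1 * f_2)(g) = \int_G \rho_1(k)\,\rho_2(\mu_2^{-1} \circ k^{-1} \circ \mu_1^{-1} \circ g)\,dk.$$

Making the change of variables g = μ1 ○ μ2 ○ q, where q is a relatively small displacement measured from the identity, the above can be written as

$$\rho_{1*2}(\mu_1 \circ \mu_2 \circ q) = \int_G \rho_1(k)\,\rho_2(\mu_2^{-1} \circ k^{-1} \circ \mu_2 \circ q)\,dk. \tag{20}$$

The essence of this paper is the efficient approximation of covariances about the mean of ρ1*2 in (20) when the covariances about the means of ρ1 and ρ2 are known. In cases when μ1*2 = μ1 ○ μ2, the problem reduces to the efficient approximation of

$$\Sigma_{1*2} = \int_G (\log q)^{\vee}\,\left[(\log q)^{\vee}\right]^T \rho_{1*2}(\mu_1 \circ \mu_2 \circ q)\,dq. \tag{21}$$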

LEMMA 1: The convolution of pdfs with mean at the identity results (to second order) in a pdf with mean at the identity. Furthermore, if ρ1 * ρ2 = ρ2 * ρ1 and ρi(g) = ρi(g−1), then this result becomes exact.

Proof:

To second order, all terms in the BCH expansion of log(h ○ k) are linear in either log h or log k (or both), and therefore at least one of the above integrals integrates to zero.

If ρ1 * ρ2 = ρ2 * ρ1 and ρi(g) = ρi(g−1) then it is easy to show that (ρ1 * ρ2)(g) = (ρ1 * ρ2)(g−1), which automatically means that the function (ρ1 * ρ2)(g) has mean at the identity.

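THEOREM 2: If fi(g) has mean μi and covariance Σi for i = 1,2, then to second order, the mean and covariance of (f1 * f2)(g) are respectively

$$\mu_{1*2} = \mu_1 \circ \mu_2 \tag{22}$$

and

$$\Sigma_{1*2} = A + B + F(A, B), \tag{23}$$

where

$$F(A,B) = \frac{1}{4}\,C(A,B) + \frac{1}{12}\left[A''B + (A''B)^T + B''A + (B''A)^T\right],$$

A = Ad(μ2−1) Σ1 AdT(μ2−1), B = Σ2, and C(A,B) and A″ are computed as follows: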

B″ is defined in the same way with B replacing A everywhere in the expression. The blocks of C are computed as

where Dij,kl = D(Aij, Bkl), and the matrix-valued function D(A′,B′) is defined relative to the entries in the 3 × 3 blocks A′ and B′ as

Proof: The approximation in (22) follows directly from Lemma 1. Next, let where k = exp K, and let Y = log q′. Using the Baker-Campbell-Hausdorff formula (5) to evaluate the log terms in the definition of covariance, and retaining all even terms to second order (since first order terms will integrate to zero), we get

| (24) (25) |

Each of these terms can be expanded using the adjoint concept. For example,

| (26) |

In our formulation, (where k = eK and so ). Defining the vector , then

and since q′ = eY,

.

The following complicated looking integral (which is nothing more than (21) written in exponential coordinates)

can be simplified. This is because

and

and since all terms in {(Z(X, Y))∨[(Z(X, Y))∨]T}even can be expressed as weighted sums of such products, it follows that after integration we get

| (27) |

For the SE(3) case

where T∨ = t, V∨ = v and Ω∨ = ω. Then (26) becomes:

and

If we divide the 6 × 6 symmetric matrices A = Ad(μ2−1) Σ1 AdT(μ2−1) and B = Σ2 into 3 × 3 blocks as

then using the specific form of ad(X) and integrating over q′ we get

and

Then integrating over k ∈ G gives

The BCH formula yields several such terms, each of which can be obtained by either transposing those given above or switching the roles of B and A.

4 Sampled Distributions and Numerical Examples

Evaluating the robustness of the first-order (1) and the second-order (23) covariance propagation formulas over a wide range of kinematic errors is essential to understanding their effectiveness. In this section, we test these two covariance propagation formulas with concrete numerical examples.

In many practical situations, discrete data are sampled from ρ1 and ρ2 rather than having complete knowledge of the distributions themselves. Therefore, sampled covariances can be computed by making the following substitutions:

| (28) |

and

| (29) |

where

Here Δ(g) is the Dirac delta function for the group G, which has the properties

Using these properties, if we substitute (28) and (29) into (21), the result is

| (30) |

While this equation is exact, it has the drawback of requiring O(N1 · N2) arithmetic operations. In the first-order theory of error propagation, we made the approximation

or equivalently

where k = exp Y and q = exp X are elements of the Lie group SE(3). This decouples the summations and makes the computation O(N1 + N2). However, the first-order theory breaks down for large errors. Therefore, we explore here the numerical accuracy of the second-order theory developed in the previous section.
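The trade-off between brute-force enumeration and the decoupled first-order computation can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: both clouds are equally weighted and symmetric about the identity, the brute-force covariance is taken over the frames μ2−1 ○ hi ○ μ2 ○ kj described in Section 3, and the first-order value is taken to be Ad(μ2−1) Σ1 AdT(μ2−1) + Σ2. All function names and numerical values are hypothetical.

```python
import numpy as np

def skew(w):
    return np.array([[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]])

def hat(x):
    X = np.zeros((4, 4))
    X[:3, :3] = skew(x[:3])
    X[:3, 3] = x[3:]
    return X

def vee(X):
    return np.array([X[2, 1], X[0, 2], X[1, 0], X[0, 3], X[1, 3], X[2, 3]])

def expm_series(X, terms=30):
    result, term = np.eye(4), np.eye(4)
    for k in range(1, terms):
        term = term @ X / k
        result = result + term
    return result

def logm_series(g, terms=40):
    delta, power, result = g - np.eye(4), np.eye(4), np.zeros((4, 4))
    for k in range(1, terms + 1):
        power = power @ delta
        result = result + (-1) ** (k + 1) * power / k
    return result

def adjoint(g):
    R, t = g[:3, :3], g[:3, 3]
    Ad = np.zeros((6, 6))
    Ad[:3, :3], Ad[3:, :3], Ad[3:, 3:] = R, skew(t) @ R, R
    return Ad

rng = np.random.default_rng(1)
eps = 0.02  # small errors, so first order should be close to brute force

def symmetric_cloud(n):
    """Exponential coordinates x and -x in pairs, so the cloud mean is the identity."""
    half = rng.uniform(-eps, eps, size=(n, 6))
    return np.vstack([half, -half])

xs, ys = symmetric_cloud(10), symmetric_cloud(10)
mu2 = expm_series(hat(np.array([0.3, -0.2, 0.5, 1.0, 0.5, -0.3])))
mu2_inv = np.linalg.inv(mu2)
hs = [expm_series(hat(x)) for x in xs]
ks = [expm_series(hat(y)) for y in ys]

# Brute force: O(N1 * N2) logarithms of the composed frames.
sigma_brute = np.zeros((6, 6))
for h in hs:
    for k in ks:
        z = vee(logm_series(mu2_inv @ h @ mu2 @ k))
        sigma_brute += np.outer(z, z)
sigma_brute /= len(hs) * len(ks)

# First order: O(N1 + N2) work, using only the two sample covariances.
sigma1 = xs.T @ xs / len(xs)
sigma2 = ys.T @ ys / len(ys)
Ad = adjoint(mu2_inv)
sigma_first = Ad @ sigma1 @ Ad.T + sigma2

rel_diff = np.linalg.norm(sigma_brute - sigma_first) / np.linalg.norm(sigma_brute)
```

For errors this small the relative difference is well below one percent; increasing `eps` makes the gap grow, which is the regime the second-order formula (23) is meant to address.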

4.1 Error Propagation in a PUMA Manipulator

Consider a spatial serial manipulator, the PUMA 560. The link-frame assignments of the PUMA 560 for the D-H parameters are the same as those given in [Craig 2005]. Table 1 lists the D-H parameters of the PUMA 560, where a2 = 431.8 mm, a3 = 20.32 mm, d3 = 124.46 mm, and d4 = 431.8 mm. The forward kinematics solution is the sequential product of the homogeneous transformations that describe the relative displacement from one D-H frame to the next.

Table 1. DH Parameters of the PUMA 560

| i | αi−1 | ai−1 | di | θi |
|---|------|------|----|----|
| 1 | 0 | 0 | 0 | θ1 |
| 2 | −90° | 0 | 0 | θ2 |
| 3 | 0 | a2 | d3 | θ3 |
| 4 | −90° | a3 | d4 | θ4 |
| 5 | 90° | 0 | 0 | θ5 |
| 6 | −90° | 0 | 0 | θ6 |

In order to test these covariance propagation formulas, we first need to create some kinematic errors. Since joint angles are the only variables of the PUMA 560, we assume that errors exist only in these joint angles. We generated errors by deviating each joint angle from its ideal value with uniform random absolute errors of ±∊. Therefore, each joint angle was sampled at three values: θi − ∊, θi, θi + ∊. This generates N = 3⁶ = 729 different frames of reference that are clustered around the desired gee. Here gee denotes the position and orientation of the distal end of the manipulator relative to the base in the form of a homogeneous transformation matrix.
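The forward kinematics and the sampling of joint errors can be sketched as follows. The link transform below is the modified D-H form used in Craig's textbook, which is assumed here to match the frame assignments in Table 1; the function names and the choice ∊ = 0.1 rad are illustrative only.

```python
import numpy as np
from itertools import product

A2, A3, D3, D4 = 431.8, 20.32, 124.46, 431.8  # link parameters in mm, from the text

# One row per joint: (alpha_{i-1}, a_{i-1}, d_i), following Table 1.
DH_ROWS = [
    (0.0, 0.0, 0.0),
    (-np.pi / 2, 0.0, 0.0),
    (0.0, A2, D3),
    (-np.pi / 2, A3, D4),
    (np.pi / 2, 0.0, 0.0),
    (-np.pi / 2, 0.0, 0.0),
]

def dh_transform(alpha, a, d, theta):
    """Link transform in the modified D-H convention (assumed to match Table 1)."""
    ca, sa = np.cos(alpha), np.sin(alpha)
    ct, st = np.cos(theta), np.sin(theta)
    return np.array([[ct, -st, 0.0, a],
                     [st * ca, ct * ca, -sa, -sa * d],
                     [st * sa, ct * sa, ca, ca * d],
                     [0.0, 0.0, 0.0, 1.0]])

def forward_kinematics(thetas):
    """Sequential product of the six link transforms."""
    g = np.eye(4)
    for (alpha, a, d), theta in zip(DH_ROWS, thetas):
        g = g @ dh_transform(alpha, a, d, theta)
    return g

def frame_cloud(thetas, eps):
    """All 3**6 end-effector frames with each joint at theta - eps, theta, theta + eps."""
    offsets = product((-eps, 0.0, eps), repeat=6)
    return [forward_kinematics(np.asarray(thetas) + np.asarray(off)) for off in offsets]

theta_config1 = [0.0, np.pi / 2, -np.pi / 2, 0.0, 0.0, np.pi / 2]
g_ee = forward_kinematics(theta_config1)
cloud = frame_cloud(theta_config1, eps=0.1)
```

The list `cloud` contains 729 frames clustered around `g_ee`, which is the input needed for the mean and covariance computations that follow.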

It is important to note that while the cloud of frames is clustered around gee, it may not be the case that gee is actually the mean of this cloud. In the first-order theory, the cloud is assumed to be so tightly focused around gee that the approximation μee ≈ gee can be made without causing significant errors. However, in the second order theory, one needs to be more precise. We can update our estimate of the mean as:

$$\mu_{ee} = g_{ee} \circ \exp\left[\frac{1}{N}\sum_{i=1}^{N} \log\left(g_{ee}^{-1} \circ g_{ee}^{i}\right)\right], \tag{31}$$

where $g_{ee}^{i}$ (i = 1, …, N) denotes the ith frame in the cloud.

In practice, for errors of moderate magnitude, only one such update is required to obtain the exact mean. For very large errors this formula can be iterated with the output, μee, from one iteration serving as the input, gee, for the next iteration. A similar update to obtain μ1 and μ2 from the frame clouds around the frames g1 and g2 (the relative frames from base to mid point and mid point to distal end of the manipulator such that g1 ○ g2 = gee) should also be performed.
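The mean update and the covariance about the mean can be sketched as follows. This is a hedged illustration that assumes the update has the form μ ← μ ○ exp[(1/N) Σ log(μ−1 ○ gi)] and that the covariance is the average outer product of the exponential coordinates of μ−1 ○ gi, with division by N. The synthetic cloud, the helper names and the iteration count are hypothetical.

```python
import numpy as np

def hat(x):
    w, v = x[:3], x[3:]
    X = np.zeros((4, 4))
    X[:3, :3] = [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]
    X[:3, 3] = v
    return X

def vee(X):
    return np.array([X[2, 1], X[0, 2], X[1, 0], X[0, 3], X[1, 3], X[2, 3]])

def expm_series(X, terms=40):
    result, term = np.eye(4), np.eye(4)
    for k in range(1, terms):
        term = term @ X / k
        result = result + term
    return result

def logm_series(g, terms=80):
    delta, power, result = g - np.eye(4), np.eye(4), np.zeros((4, 4))
    for k in range(1, terms + 1):
        power = power @ delta
        result = result + (-1) ** (k + 1) * power / k
    return result

def group_mean(frames, guess, iterations=5):
    """Iterate the assumed update, feeding each output back in as the next guess."""
    mu = guess.copy()
    for _ in range(iterations):
        mu_inv = np.linalg.inv(mu)
        avg_log = sum(logm_series(mu_inv @ g) for g in frames) / len(frames)
        mu = mu @ expm_series(avg_log)
    return mu

def group_covariance(frames, mu):
    """Average outer product of the exponential coordinates of mu^-1 o g_i."""
    mu_inv = np.linalg.inv(mu)
    xs = np.array([vee(logm_series(mu_inv @ g)) for g in frames])
    return xs.T @ xs / len(frames)

# Synthetic cloud whose errors are biased, so the nominal frame is not the mean.
rng = np.random.default_rng(2)
g_nominal = expm_series(hat(np.array([0.4, -0.3, 0.2, 1.0, 2.0, 3.0])))
frames = [g_nominal @ expm_series(hat(x)) for x in rng.uniform(0.0, 0.2, size=(100, 6))]

mu = group_mean(frames, g_nominal)
sigma = group_covariance(frames, mu)
mu_inv = np.linalg.inv(mu)
residual = vee(sum(logm_series(mu_inv @ g) for g in frames) / len(frames))
```

After convergence, `residual` is numerically zero, which is the sampled counterpart of the defining condition for the mean in Definition 1.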

Three different methods for computing the same error covariances for the whole manipulator are compared. The first is to apply brute force enumeration, which gives the actual covariance of the whole manipulator:

$$\Sigma_{actual} = \frac{1}{N}\sum_{i=1}^{N} \mathbf{x}_i \mathbf{x}_i^T, \tag{32}$$

where $\mathbf{x}_i = [\log(\mu_{ee}^{-1} \circ g_{ee}^{i})]^{\vee}$, and (32) is used for all of the 3⁶ different frames of reference $\{g_{ee}^{i}\}$. The second method is to apply the first-order propagation formula (1). The third is to apply the second-order propagation formula (23). For the covariance propagation methods, we only need to find the mean and covariance of each individual link. Then the covariance of the whole manipulator can be recursively calculated using the corresponding propagation formula.

In order to quantify the robustness of the two covariance approximation methods, we define a measure of deviation between the result of the first- or second-order formula and the actual covariance using the Hilbert-Schmidt (Frobenius) norm as

$$\text{deviation} = \frac{\|\Sigma_{prop} - \Sigma_{actual}\|}{\|\Sigma_{actual}\|}, \tag{33}$$

where Σprop is the covariance of the whole manipulator calculated using either the first-order (1) or the second-order (23) propagation formula, Σactual is the actual covariance of the whole manipulator calculated using (32), and ∥ · ∥ denotes the Hilbert-Schmidt (Frobenius) norm.

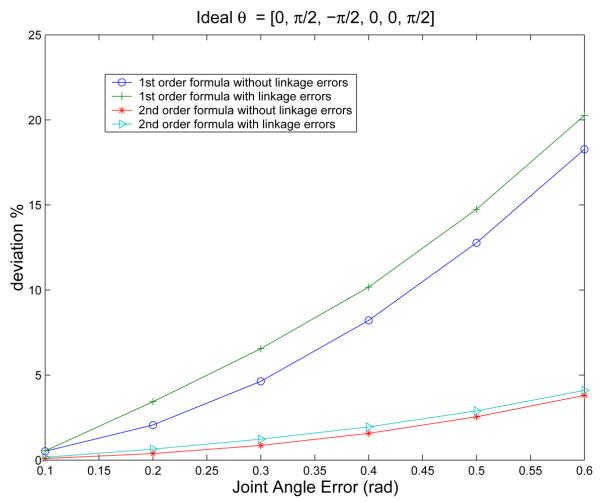

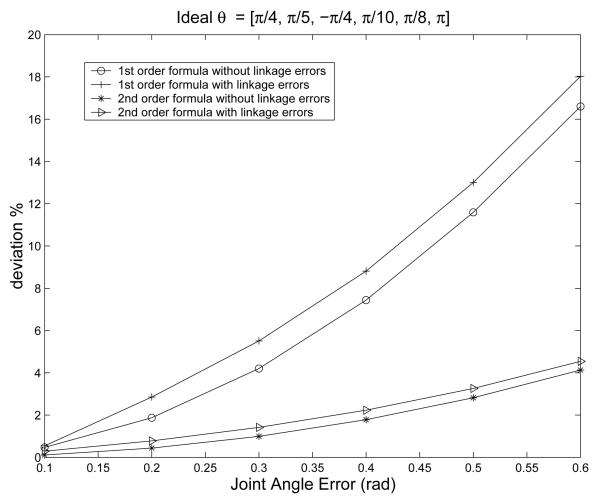

With all the above information, we now can conduct the specific computation and analysis. The results of two different configurations of the manipulator are illustrated here. The ideal joint angles of one configuration from θ1 to θ6 were taken as [0, π/2, −π/2, 0, 0, π/2]. The ideal joint angles of the other configuration were taken as [π/4, π/5, −π/4, π/10, π/8, π]. As an initial test, the joint angle errors ∊ were taken from 0.1 rad to 0.6 rad, and the static DH parameters of the links were assumed to be error free. The covariances of the whole manipulator corresponding to these kinematic errors were then calculated through the three aforementioned methods. The resulting deviations between the covariance matrices computed directly using (32) and the first-order and second-order propagation formulas are plotted in Figures 1 and 2 with (33) on the y-axis for different amounts of noise on the x-axis.

Figure 1.

The Deviation of the First and Second-order Propagation Methods for Configuration I

Figure 2.

The Deviation of the First and Second-order Propagation Methods for Configuration II

Since physical manipulators cannot be manufactured with exact design parameters, and their real linkage parameters such as the static DH parameters (αi, ai, di) may have errors, the propagation theory is applied now to the case with both joint angle errors and linkage errors. The same sets of calculations that were conducted for the case with only joint angle errors are now conducted for this case with the additional linkage errors. Our numerical simulations have shown that if the only static DH parameters that have errors are the translational parameters ai and di, then they have essentially no effect on the value of the deviation. In other words, both the first and second order propagation formulas capture the covariances resulting from these translational errors. However, the linkage errors in the angular DH parameters such as αi create observable effects on the accuracy of the propagation formulas. In the given example, we assume that DH parameters α0, α1, and α5 deviate from their ideal values with uniform random absolute errors of ±0.2 rad. Therefore, they are sampled at three values: αi − 0.2, αi, αi + 0.2. Together with the six joint angle errors, this generates N = 3⁹ = 19,683 different frames of reference that are clustered around the baseline gee. The results of the first-order and second-order propagation formulas for these cases are also graphed in Figures 1 and 2.

The numerical simulation results demonstrate that the propagation formula can efficiently deal with all kinematic errors including errors in joint angles and linkage parameters. It is also clear that the second-order propagation formula makes significant improvements in terms of accuracy when compared to the first-order formula. The second-order propagation theory is much more robust than the first-order formula over a wide range of kinematic errors. These two methods both work well for small errors, and deviate from the actual value more and more as the errors become large. However, the deviation of the first-order formula grows rapidly and breaks down while the second-order propagation method still retains a reasonable value.

To give readers a sense of what these covariances look like, we list below the values of the covariance of the whole manipulator for the joint angle error ∊ = 0.3 rad.

The ideal pose of the end effector can be found easily via forward kinematics to be

The actual covariance of the whole manipulator calculated using equations (32) is

the covariance using the first-order propagation formula (1) is

and the covariance using the second-order propagation formula (23) is

4.2 Continuous-Time Covariance Propagation: The Stochastic Flexible Needle with Bevel Tip

The previous example in this paper illustrated how to obtain the mean and covariance of error pdfs resulting from convolutions of densities centered around discrete joints in a manipulator arm. In contrast, applications such as SLAM can be better described with a model in which the error accumulates continuously over time. This section addresses that problem. In particular, estimates of the mean and covariance of a process described by a stochastic differential equation can be obtained for small time intervals by numerical integration. The second-order propagation formulas derived earlier in the paper are then used to propagate these estimates for larger values of time. The example that is used to illustrate this technique is flexible needle steering.

Recently, a number of works have been concerned with the steering of flexible needles with bevel tips through soft tissue for minimally invasive medical treatments. See, for example, [Webster et al. 2006], [Park et al. 2005], [Alterovitz, Simeon and Goldberg 2007]. In this problem, a flexible needle is rotated with the angular speed ω(t) around its tangent while it is inserted with translational speed v(t) in the tangential direction. Due to the bevel tip, the needle will not follow a straight line when ω(t) = 0 and v(t) is constant. Rather, in this case the tip of the needle will approximately follow a circular arc with curvature κ when the medium is very firm and the needle is very flexible. The specific value of the constant κ depends on parameters such as the angle of the bevel, how sharp the needle is, and properties of the tissue. In practice κ is fit to experimental observations of the needle bending in a particular medium during insertions with ω(t) = 0 and v(t) is constant. Using this as a baseline, and building in arbitrary ω(t) and v(t), a nonholonomic kinematic model then predicts the time evolution of the position and orientation of the needle tip [Webster et al. 2006, Park et al. 2005].

In a reference frame attached to the needle tip with the local x3 axis denoting the tangent to the “backbone curve” of the needle, and x1 denoting the axis orthogonal to the direction of infinitesimal motion induced by the bevel (i.e., the needle bends in the x2 − x3 plane), the nonholonomic kinematic model for the evolution of the frame at the needle tip was developed in [Webster et al. 2006, Park et al. 2005] as:

| (34) |

If everything were certain, and if this model were exact, then g(t) could be obtained by simply integrating the ordinary differential equation in (34). However, in practice a needle that is repeatedly inserted into a medium such as gelatin (which is used to simulate soft tissue) will demonstrate an ensemble of slightly different trajectories.

A simple stochastic model for the needle is obtained by letting [Park et al. 2005, Park et al. 2008]:

$$\omega(t) = \omega_0(t) + \lambda_1 w_1(t)$$

and

$$v(t) = v_0(t) + \lambda_2 w_2(t).$$

Here ω0(t) and v0(t) are what the inputs would be in the ideal case, w1(t) and w2(t) are uncorrelated unit Gaussian white noises, and λi are constants.

Thus, a nonholonomic needle model with noise is

| (35) |

where dWi = Wi(t + dt) − Wi(t) = wi(t)dt are the non-differentiable increments of a Wiener process Wi(t). This noise model is a stochastic differential equation (SDE) on SE(3). As shorthand, we write this as

In this subsection, the second-order covariance propagation formula is demonstrated by “pasting together” two ensembles of needle trajectories from t = 0 to t = 1/2 and t = 1/2 to t = 1 to get the mean and covariance of needle trajectories from t = 0 to t = 1. These needle trajectories are generated by integrating the SDE in (35) for these three time periods with Δt = 0.01 using a modified version of the Euler-Maruyama method for generating sample paths of SDEs [Higham 2001]. The mean and covariance resulting from the second-order propagation formula are then compared with those obtained by integrating the SDE from t = 0 to t = 1 as detailed below.

The reference frame g(t) is generated from ξ(t) = (g−1dg)∨ by the product of exponentials formula at multiples of the small time step Δt as:

A cloud of frames {g(i)(nΔt)} for the trials i = 1, …, 10,000 is created with κ = 0.05 and fixed values of λ1 and λ2. The actual SE(3) means and covariances of the cloud of frames {g(i)(nΔt)} are then computed using brute force enumeration for the three time periods: t1 = [0, 1/2], t2 = [1/2, 1], and t3 = [0, 1] by applying (31) and (32) respectively. With the actual means and covariances of needle trajectories for t1 = [0, 1/2] and t2 = [1/2, 1], the estimated means and covariances of needle trajectories for t3 = [0,1] are derived using the second-order propagation formulas (22) and (23). These estimated means and covariances for period t3 = [0, 1] are compared with their corresponding actual values. The comparison results are expressed quantitatively through a measure of deviation. The measure of deviation on the covariance is defined as in (33). Similarly, the measure of deviation on the mean is defined as

$$\text{deviation} = \frac{\|\mu_{prop} - \mu_{actual}\|}{\|\mu_{actual}\|}, \tag{36}$$

where μprop is the mean calculated using second-order propagation formula (22), μactual is the actual mean calculated using (31), and ∥ · ∥ denotes the Hilbert-Schmidt (Frobenius) norm.
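A sample path of the noisy needle model can be sketched with a product of exponentials. The sketch below is a plain geometric Euler-Maruyama scheme, not the modified version used by the authors. It assumes that the body velocity has drift (κ, 0, ω0, 0, 0, v0) in the coordinates (rotation first, translation second), with noise of strengths λ1 and λ2 entering the third and sixth components; that form, the constant inputs and the function names are assumptions made for illustration.

```python
import numpy as np

def hat(x):
    w, v = x[:3], x[3:]
    X = np.zeros((4, 4))
    X[:3, :3] = [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]
    X[:3, 3] = v
    return X

def expm_series(X, terms=20):
    """Truncated Taylor series of the matrix exponential (small arguments only)."""
    result, term = np.eye(4), np.eye(4)
    for k in range(1, terms):
        term = term @ X / k
        result = result + term
    return result

def simulate_needle(kappa, lam1, lam2, omega0=0.0, v0=1.0,
                    t_end=1.0, dt=0.01, rng=None):
    """One sample path g(t_end), built as a product of small exponentials."""
    rng = np.random.default_rng() if rng is None else rng
    g = np.eye(4)
    for _ in range(int(round(t_end / dt))):
        dW = rng.normal(scale=np.sqrt(dt), size=2)  # Wiener increments
        xi_dt = np.array([kappa, 0.0, omega0, 0.0, 0.0, v0]) * dt
        xi_dt[2] += lam1 * dW[0]  # noise on the twisting rate (assumed)
        xi_dt[5] += lam2 * dW[1]  # noise on the insertion speed (assumed)
        g = g @ expm_series(hat(xi_dt))
    return g

# Noise-free path: a circular arc of curvature kappa in the x2-x3 plane.
g_det = simulate_needle(kappa=0.05, lam1=0.0, lam2=0.0)
# One noisy path with a fixed seed for repeatability.
g_noisy = simulate_needle(kappa=0.05, lam1=0.5, lam2=0.5, rng=np.random.default_rng(0))
```

Running `simulate_needle` many times over [0, 1/2], [1/2, 1] and [0, 1] would give the three clouds of frames whose sample means and covariances are compared in this subsection.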

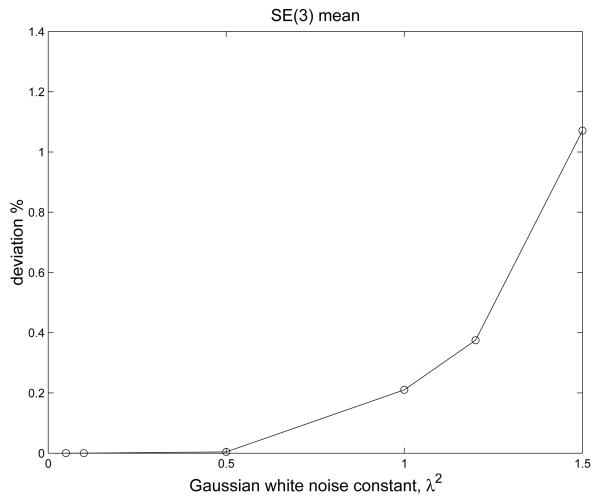

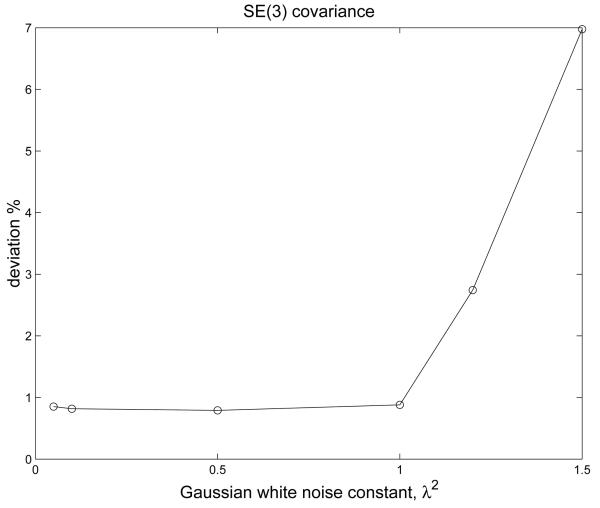

A range of values of λ1 and λ2 are tested to verify the effectiveness of the second order propagation formula. These values are . These comparison results are illustrated through the graphs of deviation versus Gaussian white noise constant λ2 as shown in Figures 3 and 4. It can be observed that the deviation of the mean is less than 0.3% and the deviation of the covariance is less than 1% for λ < 1, where λ = 1 is a fairly large noise constant. These comparisons have shown that the mean and covariance computed from the second-order propagtion formula are very good approximations to those obtained by integrating the SDE from t = 0 to t = 1.

Figure 3.

The Deviation of the Mean

Figure 4.

The Deviation of the Covariance

5 Conclusions

In this paper, first-order kinematic error propagation formulas are modified to include second-order effects. This extends the usefulness of these formulas to errors that are not necessarily small. In fact, in the example to which the methodology is applied, errors in orientation can be as large as a radian or more and the second-order formula appears to capture the error well. The second-order propagation formula is significantly more accurate than the first-order formula, and it is much more robust over a wide range of kinematic errors. This is demonstrated with the example of a PUMA manipulator arm with substantial errors in the joints, as well as stochastic trajectories of a nonholonomic kinematic model of a flexible needle.

Acknowledgements

This work was performed with partial support from the NIH Grants R01EB006435 “Steering Flexible Needles in Soft Tissue” and R01GM075310 “Group-Theoretic Methods in Protein Structure Determination.” We thank Dr. Wooram Park for useful discussions on stochastic simulations of needles.

A Matrix Lie Groups in General

A matrix Lie group G is a Lie group whose elements are square matrices and whose group operation is matrix multiplication. In this work, only the groups SO(3) and SE(3) will be considered.

A.1 The Exponential and Logarithm Maps

Given a general matrix Lie group, elements sufficiently close to the identity are written as $g(t) = e^{tX}$ for some X ∈ 𝒢 (the Lie algebra of G) and t near 0. Explicitly,

$$e^{tX} = I + tX + \frac{t^2}{2!}X^2 + \cdots = \sum_{k=0}^{\infty} \frac{t^k X^k}{k!}. \tag{37}$$

The matrix logarithm is defined by the Taylor series about the identity matrix:

$$\log(g) = \sum_{k=1}^{\infty} \frac{(-1)^{k+1}}{k}\,(g - I)^k. \tag{38}$$

For matrix Lie groups, operations such as g − I and division of g by a scalar are well defined. The exponential map takes an element of the Lie algebra and produces an element of the Lie group. This is written as:

$$\exp : \mathcal{G} \to G.$$

The logarithm map does just the opposite:

$$\log : G \to \mathcal{G}.$$

In other words, log(exp X) = X, and exp(log(g)) = g.
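As an illustration of the two series, the following minimal sketch truncates (37) and (38) and checks the round trip log(exp X) = X numerically. The helper names (`exp_series`, `log_series`), the truncation lengths, and the test matrix are assumptions made here for illustration; the logarithm series only converges when g is close enough to the identity.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def add_scaled(A, s, B):
    """Entrywise A + s * B."""
    return [[a + s * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def eye(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def exp_series(X, terms=30):
    """Truncated series (37) at t = 1: I + X + X^2/2! + ..."""
    total, term = eye(len(X)), eye(len(X))
    for k in range(1, terms):
        term = [[v / k for v in row] for row in matmul(term, X)]  # X^k / k!
        total = add_scaled(total, 1.0, term)
    return total


def log_series(g, terms=80):
    """Truncated series (38): sum over k of (-1)^(k+1) (g - I)^k / k."""
    n = len(g)
    d = add_scaled(g, -1.0, eye(n))  # g - I; its norm must be below 1
    total, power = [[0.0] * n for _ in range(n)], eye(n)
    for k in range(1, terms + 1):
        power = matmul(power, d)
        total = add_scaled(total, (-1.0) ** (k + 1) / k, power)
    return total


# Hypothetical small skew-symmetric test matrix (illustrative values only).
X = [[0.0, -0.3, -0.2], [0.3, 0.0, -0.1], [0.2, 0.1, 0.0]]
g = exp_series(X)
X_back = log_series(g)
round_trip_error = max(
    abs(a - b) for ra, rb in zip(X_back, X) for a, b in zip(ra, rb)
)
print(round_trip_error)  # expected to be close to machine precision
```

For SO(3) and SE(3) the closed-form expressions given in the following appendices are preferable to truncated series, which are used here only because they apply to any matrix Lie group.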

Given any smooth curve g(t) ∈ G, we can compute $g^{-1}\,\frac{dg}{dt}$ and $\frac{dg}{dt}\,g^{-1}$. These will be elements of 𝒢.

A.2 The Lie Bracket and the Adjoint Matrices Ad(g) and ad(X)

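The adjoint operator is defined as

$$\mathrm{Ad}(g_1)X = \frac{d}{dt}\left(g_1 e^{tX} g_1^{-1}\right)\Big|_{t=0} = g_1 X g_1^{-1}. \tag{39}$$

This gives a homomorphism Ad : G → GL(𝒢) from the group into the set of all invertible linear transformations of 𝒢 onto itself. It is a homomorphism because

$$\mathrm{Ad}(g_1)\mathrm{Ad}(g_2)X = g_1\left(g_2 X g_2^{-1}\right)g_1^{-1} = (g_1 g_2)X(g_1 g_2)^{-1} = \mathrm{Ad}(g_1 g_2)X.$$

It is linear because

$$\mathrm{Ad}(g)(c_1 X_1 + c_2 X_2) = g(c_1 X_1 + c_2 X_2)g^{-1} = c_1\mathrm{Ad}(g)X_1 + c_2\mathrm{Ad}(g)X_2.$$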

In the special case of a one-parameter subgroup, when g = g(t) is an element close to the identity, we can approximate g(t) ≈ I + tX for small t. Then, to first order in t, we get Ad(I + tX)Y = Y + t(XY − YX). The quantity

$$[X, Y] = XY - YX \tag{40}$$

is called the Lie bracket of the elements X, Y ∈ 𝒢.

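It is clear from the definition in (40) that the Lie bracket is linear in each entry:

$$[c_1 X_1 + c_2 X_2, Y] = c_1[X_1, Y] + c_2[X_2, Y]$$

and

$$[X, c_1 Y_1 + c_2 Y_2] = c_1[X, Y_1] + c_2[X, Y_2].$$

Furthermore, the Lie bracket is anti-symmetric:

$$[X, Y] = -[Y, X] \tag{41}$$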

and hence [X, X] = 0. Given a basis {E1, …, En} for the Lie algebra 𝒢, any arbitrary element can be written as

$$X = \sum_{i=1}^{n} x_i E_i.$$

The Lie bracket of any two basis elements results in a linear combination of the basis elements. This is written as

$$[E_i, E_j] = \sum_{k=1}^{n} C_{ij}^{k} E_k.$$

The constants $C_{ij}^{k}$ are called the structure constants of the Lie algebra 𝒢. Note that the structure constants are antisymmetric: $C_{ij}^{k} = -C_{ji}^{k}$.

It can be checked that for any three elements of the Lie algebra, the Jacobi identity is satisfied:

$$[X, [Y, Z]] + [Y, [Z, X]] + [Z, [X, Y]] = 0. \tag{42}$$
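The properties of the Lie bracket listed above can be checked numerically. The sketch below does so for so(3), using the skew-symmetric basis introduced in Appendix B; the function names and the test vectors are illustrative assumptions, not part of the original development.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def add(A, B, s=1.0):
    """Entrywise A + s * B."""
    return [[a + s * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def hat(x):
    """Skew-symmetric matrix with coefficients x1, x2, x3."""
    return [[0.0, -x[2], x[1]], [x[2], 0.0, -x[0]], [-x[1], x[0], 0.0]]


def vee(X):
    """Inverse of hat: extract the three independent entries."""
    return [X[2][1], X[0][2], X[1][0]]


def bracket(X, Y):
    """Lie bracket (40): XY - YX."""
    return add(matmul(X, Y), matmul(Y, X), -1.0)


def max_abs(A):
    return max(abs(v) for row in A for v in row)


# Basis of so(3) and its structure constants C[i][j][k].
E = [hat([1.0, 0.0, 0.0]), hat([0.0, 1.0, 0.0]), hat([0.0, 0.0, 1.0])]
C = [[vee(bracket(E[i], E[j])) for j in range(3)] for i in range(3)]

# Hypothetical test elements (illustrative values only).
X, Y, Z = hat([0.3, -0.5, 0.8]), hat([1.0, 0.2, -0.4]), hat([-0.7, 0.6, 0.1])

antisymmetry_residual = max_abs(add(bracket(X, Y), bracket(Y, X)))
jacobi_residual = max_abs(
    add(
        add(bracket(X, bracket(Y, Z)), bracket(Y, bracket(Z, X))),
        bracket(Z, bracket(X, Y)),
    )
)
print(C[0][1], antisymmetry_residual, jacobi_residual)
```

For this basis the structure constants coincide with the Levi-Civita symbol, so [E1, E2] = E3 and cyclic permutations.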

It is often convenient to write the independent entries of any X ∈ 𝒢 as a column vector using the notation x = X∨, where the rule $E_i^{\vee} = e_i$ is used and $e_i$ is the i-th natural basis vector of ℝⁿ. The particular details of the ∨ operator for the cases of SO(3) and SE(3) are given in the following appendices.

A matrix denoted as ad(X) can then be defined such that for any X, Y ∈ 𝒢

$$[X, Y]^{\vee} = \mathrm{ad}(X)\,y, \qquad y = Y^{\vee}.$$

From (41), it follows that

$$\mathrm{ad}(X)\,y = -\mathrm{ad}(Y)\,x.$$

B The Rotation Group, SO(3)

The Lie algebra so(3) consists of skew-symmetric matrices of the form

$$X = \begin{pmatrix} 0 & -x_3 & x_2 \\ x_3 & 0 & -x_1 \\ -x_2 & x_1 & 0 \end{pmatrix} = \sum_{i=1}^{3} x_i E_i. \tag{43}$$

The skew-symmetric matrices {Ei} form a basis for the set of all such 3 × 3 skew-symmetric matrices, and the coefficients {xi} are all real. The ∨ operation is defined to extract these coefficients from a skew-symmetric matrix to form a column vector [x1, x2, x3]ᵀ ∈ ℝ³ such that Xy = x × y for any y ∈ ℝ³, where × is the usual vector cross product.

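In this case, the adjoint matrices are

$$\mathrm{Ad}(R) = R \qquad \text{and} \qquad \mathrm{ad}(X) = X.$$

Furthermore,

$$[X, Y]^{\vee} = x \times y.$$

It is well known (see [Chirikjian 2001] for derivation and references) that

$$R(x) = e^{X} = I + \frac{\sin\|x\|}{\|x\|}\,X + \frac{1 - \cos\|x\|}{\|x\|^2}\,X^2 \tag{44}$$

where $\|x\| = (x_1^2 + x_2^2 + x_3^2)^{1/2}$. Clearly, since the instantaneous rotation axis is preserved under a rotation, R(x)x = x.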

An interesting and useful fact is that except for a set of measure zero, all elements of SO(3) can be captured with the parameters within the open ball defined by ∥x∥ < π, and the matrix logarithm of any group element parameterized in this range is also well defined. It is convenient to know that the angle of the rotation, θ(R), is related to the exponential parameters as |θ(R)| = ∥x∥. Furthermore,

$$\log(R) = \frac{\theta(R)}{2\sin\theta(R)}\left(R - R^{T}\right)$$

where

$$\theta(R) = \cos^{-1}\!\left(\frac{\mathrm{trace}(R) - 1}{2}\right).$$
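The closed-form exponential (44) and the trace-based logarithm for SO(3) can be sketched as follows. The function names, the small-angle threshold, and the test vector are assumptions made for illustration; rotations by an angle of exactly π (the excluded set of measure zero) are not handled.

```python
import math


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def hat(x):
    """Skew-symmetric matrix X with X y = x cross y."""
    return [[0.0, -x[2], x[1]], [x[2], 0.0, -x[0]], [-x[1], x[0], 0.0]]


def vee(X):
    """Inverse of hat."""
    return [X[2][1], X[0][2], X[1][0]]


def exp_so3(x):
    """Rotation matrix from exponential coordinates, following (44)."""
    theta = math.sqrt(sum(c * c for c in x))
    X = hat(x)
    X2 = matmul(X, X)
    if theta < 1e-8:  # limiting values of the two coefficients
        a, b = 1.0, 0.5
    else:
        a, b = math.sin(theta) / theta, (1.0 - math.cos(theta)) / theta**2
    return [
        [(1.0 if i == j else 0.0) + a * X[i][j] + b * X2[i][j] for j in range(3)]
        for i in range(3)
    ]


def log_so3(R):
    """Matrix logarithm of a rotation with angle strictly below pi."""
    c = (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0
    theta = math.acos(max(-1.0, min(1.0, c)))  # clamp against round-off
    f = 0.5 if theta < 1e-8 else theta / (2.0 * math.sin(theta))
    return [[f * (R[i][j] - R[j][i]) for j in range(3)] for i in range(3)]


# Hypothetical exponential coordinates with norm below pi.
x = [0.3, -0.5, 0.8]
R = exp_so3(x)
x_back = vee(log_so3(R))
Rx = [sum(R[i][j] * x[j] for j in range(3)) for i in range(3)]
print(x_back)  # should reproduce x up to round-off
print(Rx)      # the rotation axis is preserved, so this should also equal x
```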

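Invariant definitions of directional (Lie) derivatives and integration measure for SO(3) can be defined. When computing these invariant quantities in coordinates (including exponential coordinates), a Jacobian matrix comes into play. There are two such Jacobian matrices:

$$J_r(x) = \left[\left(R^{T}\frac{\partial R}{\partial x_1}\right)^{\vee},\ \left(R^{T}\frac{\partial R}{\partial x_2}\right)^{\vee},\ \left(R^{T}\frac{\partial R}{\partial x_3}\right)^{\vee}\right]$$

and

$$J_l(x) = \left[\left(\frac{\partial R}{\partial x_1}R^{T}\right)^{\vee},\ \left(\frac{\partial R}{\partial x_2}R^{T}\right)^{\vee},\ \left(\frac{\partial R}{\partial x_3}R^{T}\right)^{\vee}\right].$$

The subscripts r and l denote the side where the partial derivative appears (right or left).

These two Jacobian matrices are related as

$$J_l = R\,J_r. \tag{45}$$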

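Relatively simple analytical expressions have been derived by Park [?] for the Jacobian Jl and its inverse when rotations are parameterized as in (44). These expressions are

$$J_l(x) = I + \frac{1 - \cos\|x\|}{\|x\|^2}\,X + \frac{\|x\| - \sin\|x\|}{\|x\|^3}\,X^2 \tag{46}$$

and

$$J_l^{-1}(x) = I - \frac{1}{2}X + \left(\frac{1}{\|x\|^2} - \frac{1 + \cos\|x\|}{2\|x\|\sin\|x\|}\right)X^2.$$

The corresponding Jacobian Jr and its inverse are then calculated using (45) as [Chirikjian 2001]

$$J_r(x) = I - \frac{1 - \cos\|x\|}{\|x\|^2}\,X + \frac{\|x\| - \sin\|x\|}{\|x\|^3}\,X^2$$

and

$$J_r^{-1}(x) = I + \frac{1}{2}X + \left(\frac{1}{\|x\|^2} - \frac{1 + \cos\|x\|}{2\|x\|\sin\|x\|}\right)X^2.$$

Note that

$$J_l = J_r^{T}.$$

The determinants are

$$|\det(J_l)| = |\det(J_r)| = \frac{2(1 - \cos\|x\|)}{\|x\|^2}.$$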

Given a square-integrable function of rotation, f(R) ∈ L²(SO(3)), the proper (invariant) way to integrate using exponential coordinates is

$$\int_{SO(3)} f(R)\,dR = \frac{1}{8\pi^2}\int_{\|x\| < \pi} f(e^{X})\,|\det(J)|\,dx$$

where dx = dx1dx2dx3 and J can denote either Jr or Jl. The normalization by 8π² ensures that ∫SO(3) 1 dR = 1.
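The Jacobian relations and the 8π² normalization of this appendix can be verified numerically. The sketch below assumes the standard closed-form left Jacobian for exponential coordinates, checks it against a central-difference approximation of the definition of Jr, and integrates |det J| over the ball ∥x∥ < π in spherical shells. All function names, step sizes, and test values are illustrative assumptions.

```python
import math


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def hat(x):
    return [[0.0, -x[2], x[1]], [x[2], 0.0, -x[0]], [-x[1], x[0], 0.0]]


def poly(x, a, b):
    """I + a*X + b*X^2 for the skew-symmetric matrix X = hat(x)."""
    X = hat(x)
    X2 = matmul(X, X)
    return [[(i == j) + a * X[i][j] + b * X2[i][j] for j in range(3)] for i in range(3)]


def exp_so3(x):
    """Closed-form exponential of a skew-symmetric matrix."""
    th = math.sqrt(sum(c * c for c in x))
    if th < 1e-6:
        return poly(x, 1.0, 0.5)
    return poly(x, math.sin(th) / th, (1.0 - math.cos(th)) / th**2)


def jac_left(x):
    """Closed-form left Jacobian in exponential coordinates."""
    th = math.sqrt(sum(c * c for c in x))
    if th < 1e-6:
        return poly(x, 0.5, 1.0 / 6.0)
    return poly(x, (1.0 - math.cos(th)) / th**2, (th - math.sin(th)) / th**3)


def jac_right(x):
    """Right Jacobian: the sign of the term linear in X flips."""
    return jac_left([-c for c in x])


def det3(M):
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


def max_diff(A, B):
    return max(abs(a - b) for ra, rb in zip(A, B) for a, b in zip(ra, rb))


x = [0.3, -0.5, 0.8]  # illustrative exponential coordinates
th = math.sqrt(sum(c * c for c in x))
R, Jl, Jr = exp_so3(x), jac_left(x), jac_right(x)
err_45 = max_diff(Jl, matmul(R, Jr))                       # J_l = R J_r
err_transpose = max_diff(Jl, [list(c) for c in zip(*Jr)])  # J_l = J_r^T
err_det = abs(det3(Jl) - 2.0 * (1.0 - math.cos(th)) / th**2)

# Central-difference check of the first column of J_r, (R^T dR/dx_1)^vee.
h = 1e-6
Rp = exp_so3([x[0] + h, x[1], x[2]])
Rm = exp_so3([x[0] - h, x[1], x[2]])
dR = [[(p - m) / (2.0 * h) for p, m in zip(rp, rm)] for rp, rm in zip(Rp, Rm)]
S = matmul([list(c) for c in zip(*R)], dR)  # approximately skew-symmetric
err_fd = max(abs(a - Jr[i][0]) for i, a in enumerate([S[2][1], S[0][2], S[1][0]]))

# Midpoint rule over spherical shells; |det J| depends only on the radius.
n = 2000
volume = sum(
    4.0 * math.pi * r * r * abs(det3(jac_left([r, 0.0, 0.0]))) * (math.pi / n)
    for r in ((k + 0.5) * math.pi / n for k in range(n))
)
print(volume, 8.0 * math.pi**2)  # the two numbers should agree closely
```

The shell integration exploits the fact that the determinant depends only on ∥x∥, which reduces the three-dimensional integral to a one-dimensional quadrature.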

C The Special Euclidean Group, SE(3)

The Lie algebra se(3) consists of “screw” matrices of the form

$$X = \begin{pmatrix} 0 & -x_3 & x_2 & x_4 \\ x_3 & 0 & -x_1 & x_5 \\ -x_2 & x_1 & 0 & x_6 \\ 0 & 0 & 0 & 0 \end{pmatrix} = \sum_{i=1}^{6} x_i \tilde{E}_i. \tag{47}$$

The matrices {Ẽi} form a basis for the set of all such 4 × 4 screw matrices, and the coefficients {xi} are all real. The tilde is used to distinguish between these basis elements and those for SO(3). The ∨ operation is defined to extract these coefficients from a screw matrix to form a column vector X∨ = [x1, x2, x3, x4, x5, x6]ᵀ ∈ ℝ⁶. The double use of ∨ in the so(3) and se(3) cases will not cause confusion, since the object to which it is applied defines the sense in which it is used.

It will be convenient to define ω = [x1, x2, x3]ᵀ and v = [x4, x5, x6]ᵀ, so that

$$X = \begin{pmatrix} \Omega & v \\ 0^{T} & 0 \end{pmatrix}, \qquad \Omega^{\vee} = \omega.$$

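It can be shown that [Chirikjian 2009]

$$g(x) = \exp(X) = \begin{pmatrix} e^{\Omega} & J_l(\omega)\,v \\ 0^{T} & 1 \end{pmatrix}. \tag{48}$$

This follows from the expression for the matrix exponential given in [Murray, Li and Sastry 1994] and the definition of the SO(3) Jacobian in (46). From the form of (48), it is clear that if g has rotational part R, and translational part t, then the matrix logarithm can be written in closed form as

$$\Omega = \log R, \qquad v = J_l^{-1}(\omega)\,t$$

and

$$\log(g) = \begin{pmatrix} \log R & J_l^{-1}(\omega)\,t \\ 0^{T} & 0 \end{pmatrix}. \tag{49}$$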
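The closed-form exponential and logarithm for SE(3) can be sketched as below, under the assumption that the translational part of exp(X) is the SO(3) left Jacobian applied to v and that the logarithm inverts this relation. The sketch compares the closed form against a truncated Taylor series of the 4 × 4 matrix exponential; function names, thresholds, and test values are illustrative.

```python
import math


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matvec(A, v):
    return [sum(a * b for a, b in zip(row, v)) for row in A]


def hat(w):
    return [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]


def poly(w, a, b):
    """I + a*W + b*W^2 for the skew-symmetric matrix W = hat(w)."""
    W = hat(w)
    W2 = matmul(W, W)
    return [[(i == j) + a * W[i][j] + b * W2[i][j] for j in range(3)] for i in range(3)]


def norm(w):
    return math.sqrt(sum(c * c for c in w))


def exp_so3(w):
    th = norm(w)
    if th < 1e-6:
        return poly(w, 1.0, 0.5)
    return poly(w, math.sin(th) / th, (1.0 - math.cos(th)) / th**2)


def jac_left(w):
    th = norm(w)
    if th < 1e-6:
        return poly(w, 0.5, 1.0 / 6.0)
    return poly(w, (1.0 - math.cos(th)) / th**2, (th - math.sin(th)) / th**3)


def jac_left_inv(w):
    th = norm(w)
    if th < 1e-6:
        return poly(w, -0.5, 1.0 / 12.0)
    return poly(w, -0.5, 1.0 / th**2 - (1.0 + math.cos(th)) / (2.0 * th * math.sin(th)))


def exp_se3(x):
    """Closed-form exponential: rotation exp(Omega), translation J_l(omega) v."""
    w, v = x[:3], x[3:]
    R, t = exp_so3(w), matvec(jac_left(w), v)
    return [R[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def log_se3(g):
    """Closed-form logarithm returned as the 6-vector [omega; v]."""
    R = [row[:3] for row in g[:3]]
    t = [row[3] for row in g[:3]]
    th = math.acos(max(-1.0, min(1.0, (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0)))
    f = 0.5 if th < 1e-6 else th / (2.0 * math.sin(th))
    w = [f * (R[2][1] - R[1][2]), f * (R[0][2] - R[2][0]), f * (R[1][0] - R[0][1])]
    return w + matvec(jac_left_inv(w), t)


def exp_series(X, terms=40):
    """Truncated Taylor series of the matrix exponential, for comparison."""
    n = len(X)
    total = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in total]
    for k in range(1, terms):
        term = [[v / k for v in row] for row in matmul(term, X)]
        total = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(total, term)]
    return total


x = [0.3, -0.5, 0.8, 1.0, 2.0, -1.5]  # illustrative [omega; v]
W = hat(x[:3])
X = [W[i] + [x[3 + i]] for i in range(3)] + [[0.0] * 4]
g = exp_se3(x)
err_exp = max(abs(a - b) for ra, rb in zip(g, exp_series(X)) for a, b in zip(ra, rb))
err_log = max(abs(a - b) for a, b in zip(log_se3(g), x))
print(err_exp, err_log)  # both should be close to machine precision
```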

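The adjoint matrices for SE(3) are

$$\mathrm{Ad}(g) = \begin{pmatrix} R & 0_3 \\ TR & R \end{pmatrix}$$

where T∨ = t and

$$\mathrm{ad}(X) = \begin{pmatrix} \Omega & 0_3 \\ V & \Omega \end{pmatrix}$$

where V∨ = v and Ω∨ = ω.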
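As a consistency check on the two adjoint matrices, the following sketch assumes the block forms Ad(g) = [[R, 0], [TR, R]] and ad(X) = [[Ω, 0], [V, Ω]] with the coordinate ordering [ω; v], and compares them with the defining expressions g X g⁻¹ and XY − YX. The pose, the test vectors, and all function names are illustrative assumptions.

```python
import math


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matvec(A, v):
    return [sum(a * b for a, b in zip(row, v)) for row in A]


def hat(w):
    return [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]


def hat6(x):
    """4x4 screw matrix built from the 6-vector [omega; v]."""
    W = hat(x[:3])
    return [W[i] + [x[3 + i]] for i in range(3)] + [[0.0] * 4]


def vee6(X):
    """Inverse of hat6."""
    return [X[2][1], X[0][2], X[1][0], X[0][3], X[1][3], X[2][3]]


def blocks(A, B, C, D):
    """Assemble the 6x6 matrix [[A, B], [C, D]] from 3x3 blocks."""
    return [A[i] + B[i] for i in range(3)] + [C[i] + D[i] for i in range(3)]


Z3 = [[0.0] * 3 for _ in range(3)]


def Ad(R, t):
    """Assumed block form of Ad(g) for g with rotation R and translation t."""
    return blocks(R, Z3, matmul(hat(t), R), R)


def ad(x):
    """Assumed block form of ad(X) for X with coordinates x = [omega; v]."""
    W, V = hat(x[:3]), hat(x[3:])
    return blocks(W, Z3, V, W)


# Hypothetical pose: rotation by 0.7 rad about the z axis plus a translation.
c, s = math.cos(0.7), math.sin(0.7)
R = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
t = [1.0, 2.0, 3.0]
g = [R[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]
Rt = [list(col) for col in zip(*R)]
g_inv = [Rt[i] + [-sum(Rt[i][j] * t[j] for j in range(3))] for i in range(3)]
g_inv += [[0.0, 0.0, 0.0, 1.0]]

x = [0.3, -0.5, 0.8, 1.0, 2.0, -1.5]
y = [-0.2, 0.4, 0.1, 0.5, -1.0, 0.7]
X, Y = hat6(x), hat6(y)

# (g X g^{-1})^vee should equal Ad(g) x.
conj = vee6(matmul(matmul(g, X), g_inv))
err_Ad = max(abs(a - b) for a, b in zip(matvec(Ad(R, t), x), conj))

# [X, Y]^vee should equal ad(X) y.
XY, YX = matmul(X, Y), matmul(Y, X)
comm = vee6([[a - b for a, b in zip(ra, rb)] for ra, rb in zip(XY, YX)])
err_ad = max(abs(a - b) for a, b in zip(matvec(ad(x), y), comm))
print(err_Ad, err_ad)  # both should be close to machine precision
```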

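The Jacobians for SE(3) using exponential parameters are then

$$\mathcal{J}_r(x) = \left[\left(g^{-1}\frac{\partial g}{\partial x_1}\right)^{\vee},\ \ldots,\ \left(g^{-1}\frac{\partial g}{\partial x_6}\right)^{\vee}\right]$$

and

$$\mathcal{J}_l(x) = \left[\left(\frac{\partial g}{\partial x_1}g^{-1}\right)^{\vee},\ \ldots,\ \left(\frac{\partial g}{\partial x_6}g^{-1}\right)^{\vee}\right].$$

The right Jacobian for SE(3) in exponential coordinates can be computed from (48) as

$$\mathcal{J}_r(x) = \begin{pmatrix} J_r(\omega) & 0_3 \\ e^{-\Omega}\,\dfrac{\partial}{\partial \omega^{T}}\big(J_l(\omega)\,v\big) & J_r(\omega) \end{pmatrix} \tag{50}$$

where 0₃ is the 3 × 3 zero matrix. It becomes immediately clear that

$$|\det(\mathcal{J}_r)| = |\det(J_r)|^2.$$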

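Given a square-integrable function of motion, f(g) ∈ L²(SE(3)), the proper (invariant) way to integrate using exponential coordinates is

$$\int_{SE(3)} f(g)\,dg = \frac{1}{8\pi^2}\int_{v \in \mathbb{R}^3}\int_{\|\omega\| < \pi} f(e^{X})\,|\det(J_r(\omega))|^2\,d\omega\,dv$$

where dω = dx1dx2dx3 and dv = dx4dx5dx6. The normalization by 8π² is carried over from the SO(3) case. It is retained because SE(3) is not compact, so its total volume is infinite and it has no natural normalization of its own.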

Footnotes

Exactly what is meant by a covariance for a Lie group is quantified later in the paper.

In the context of matrix Lie groups, one natural way to measure distance is as a matrix norm of the difference of two group elements.

References

- Alterovitz R, Simeon T, Goldberg K. The Stochastic Motion Roadmap: A Sampling Framework for Planning with Markov Motion Uncertainty. Robotics: Science and Systems; Atlanta, GA. June 2007.

- Anderson TW. An Introduction to Multivariate Statistical Analysis. 3rd ed. Wiley; New York: 2005.

- Baker HF. Alternants and Continuous Groups. Proc. London Mathematical Society (Second Series). 1904;3:24–47.

- Campbell JE. On a Law of Combination of Operators. Proc. London Mathematical Society. 1897;29:14–32.

- Chirikjian GS. Stochastic Models, Information, and Lie Groups. Birkhäuser; New York: 2009.

- Chirikjian GS, Kyatkin AB. Engineering Applications of Noncommutative Harmonic Analysis. CRC Press; Boca Raton, FL: 2001.

- Craig JJ. Introduction to Robotics: Mechanics and Control. 3rd ed. Prentice Hall; 2005.

- Higham DJ. An Algorithmic Introduction to Numerical Simulation of Stochastic Differential Equations. SIAM Review. 2001;43(3):525–546.

- Hausdorff F. Die symbolische Exponentialformel in der Gruppentheorie. Berichte der Sächsischen Akademie der Wissenschaften. Vol. 58. Leipzig; 1906. pp. 19–48.

- Kim J-S, Chirikjian GS. A unified approach to conformational statistics of classical polymer and polypeptide models. Polymer. 2005 Nov 28;46(25):11904–11917. doi: 10.1016/j.polymer.2005.09.012.

- Murray RM, Li Z, Sastry SS. A Mathematical Introduction to Robotic Manipulation. CRC Press; Boca Raton: 1994.

- Park W, Kim JS, Zhou Y, Cowan NJ, Okamura AM, Chirikjian GS. Diffusion-based motion planning for a non-holonomic flexible needle model. IEEE International Conference on Robotics and Automation; 2005. pp. 4600–4605.

- Park W, Liu Y, Moses M, Chirikjian GS. Kinematic State Estimation and Motion Planning for Stochastic Nonholonomic Systems Using the Exponential Map. Robotica. 2008. doi: 10.1017/S0263574708004475. To appear.

- Selig JM. Geometrical Methods in Robotics. Springer; New York: 1996.

- Smith P, Drummond T, Roussopoulos K. Computing MAP trajectories by representing, propagating and combining PDFs over groups. Proceedings of the 9th IEEE International Conference on Computer Vision; Nice. 2003. pp. 1275–1282.

- Smith RC, Cheeseman P. On the Representation and Estimation of Spatial Uncertainty. The International Journal of Robotics Research. 1986;5(4):56–68.

- Su S, Lee CSG. Manipulation and Propagation of Uncertainty and Verification of Applicability of Actions in Assembly Tasks. IEEE Transactions on Systems, Man, and Cybernetics. 1992;22(6):1376–1389.

- Thrun S, Burgard W, Fox D. Probabilistic Robotics. MIT Press; Cambridge, MA: 2005.

- Wang Y, Chirikjian GS. Error Propagation on the Euclidean Group with Applications to Manipulator Kinematics. IEEE Transactions on Robotics. 2006;22(4):591–602.

- Wang Y, Chirikjian GS. Error Propagation in Hybrid Serial-Parallel Manipulators. IEEE International Conference on Robotics and Automation; Orlando, Florida. May 2006. pp. 1848–1853.

- Webster RJ III, Kim JS, Cowan NJ, Chirikjian GS, Okamura AM. Nonholonomic modeling of needle steering. International Journal of Robotics Research. 2006;25:509–525.

- Zhou Y, Chirikjian GS. Conformational Statistics of Semi-Flexible Macromolecular Chains with Internal Joints. Macromolecules. 2006;39(5):1950–1960. doi: 10.1021/ma0512556.