Abstract

At present there is a debate on the number of body representations in the brain. The most commonly used dichotomy is between the body image, thought to underlie perception and shown to be susceptible to bodily illusions, and the body schema, hypothesized to guide actions and so far found to be robust against bodily illusions. In this rubber hand illusion study we investigated the susceptibility of the body schema in three ways. We manipulated the amount of stimulation on the rubber hand and the participant’s hand, adjusted the postural configuration of the rubber hand, and measured a grasping rather than a pointing response. The results showed, for the first time, grasping responses altered by the grip aperture of the rubber hand. This illusion-sensitive motor response challenges one of the foundations of the dichotomy. It also highlights that, when investigating body representations, the way the illusion is induced may matter as much as the type of response measured.

Keywords: Rubber hand illusion, Body schema, Body image, Perception, Action

Introduction

Head and Holmes (1911–1912) were among the first to identify the need for multiple body representations in the brain. The representations they proposed were based on a large, heterogeneous group of neurological patients who showed that different bodily sensations can be lost independently of each other (Head and Holmes 1911–1912). However, to date there is no agreement on the number and characteristics of mental body representations (Berlucchi and Aglioti 1997; Felician et al. 2003; Gallagher 1986; Gallagher 2005; Gallagher and Cole 1995; Paillard 1999; Schwoebel and Coslett 2005; Sirigu et al. 1991). This is partly because body representations have been distinguished along multiple dimensions, including conscious versus unconscious, dynamic versus static, and top–down versus bottom–up. Although numerous body representations have been identified, the most parsimonious and commonly used dissociation is between two general representations. The first is the body image, a cognitive representation that integrates stored knowledge and experience and is thought to underlie perceptual judgments. The second is the body schema, thought to be a more holistic representation, based mainly on proprioceptive input, that governs body movements (e.g., Gallagher 1986; Paillard 1991, 1999).

In line with this dichotomy, research into body representations in the healthy brain has also led to a distinction between (at least) two dissociable body representations (Kammers et al. 2006). This dissociation has been established through the task dependency of bodily illusions in healthy individuals. More specifically, perceptual and motor tasks have been used to localize body parts during the sensory conflict induced by an illusion. When a bodily illusion produced significantly different localizations in the two tasks, this dissociation was taken as evidence for two separate underlying body representations (Kammers et al. 2006, 2009a, b).

Several key assumptions underlie this line of reasoning. First, separate body representations must resolve the multisensory conflict induced by the bodily illusion differently. In other words, although the brain receives the same (conflicting) sensory information, the way it calibrates the location of the illuded body part must depend on the task. Second, this difference in calibrating or weighting sensory information must produce significantly different localizations; otherwise it becomes difficult to dissociate the two modes of response. Third, the two administered tasks must actually tap into different body representations.

There is supporting evidence for all three premises. First, we know from patient studies that localization without perception can be doubly dissociated from perception without localization (Dijkerman and de Haan 2007; Head and Holmes 1911–1912; Paillard 1991, 1999; Rossetti et al. 2001). Second, multisensory information is known to be weighted differently depending on the task. For example, research on multimodal integration has shown that whether vision or proprioception dominates localization depends on the task demands (Scheidt et al. 2005; van Beers et al. 1998, 1999, 2002). Third, a large literature describes a (theoretical) dissociation between a general body representation underlying action (body schema) and one underlying perceptual judgments (body image). This holds even for some theories that identify three or four representations, because many of these “additional” representations are thought to be subdivisions of either the body image or the body schema (e.g., Coslett and Lie 2004; Schwoebel and Coslett 2005; Sirigu et al. 1991). Furthermore, the dissociation between motor and perceptual tasks parallels the ventral “what/perceptual” versus dorsal “how/motor” streams identified in the visual system (Goodale and Milner 1992).

Similarly, task dependency of bodily illusions, with motor responses dissociating from perceptual judgments, has now been established in healthy individuals as well (Kammers et al. 2006, 2009a, b). However, so far this dissociation has largely consisted of highly susceptible perceptual judgments (body image) versus highly robust motor responses (body schema). We have interpreted this difference in susceptibility as follows. The body schema is based mainly on bottom–up proprioceptive information and takes into account information about the body as a whole (Kammers et al. 2006). This more holistic representation is therefore less disturbed by a local, illusion-induced sensory conflict. For example, in a vibrotactile kinaesthetic illusion only the vibrated tendon signals to the brain that it is stretching. The body schema is hypothesized to still incorporate the other senses and afferent information, so that its net estimate is affected little, if at all, by the illusion-induced conflict. The body image, on the other hand, is hypothesized to take into account previous (sensory) experience and stored body knowledge. It therefore incorporates top–down information, “knowing from experience” that stretching of a muscle is accompanied by movement of the attached limb. For perceptual responses, the net result is a shift in the perceived location of the illuded arm.

Thus far we have not been able to show any illusion-dependent kinematic changes in pointing movements using the rubber hand illusion (RHI). This bodily illusion relocates one’s own hand and also changes higher-order bodily experience. In this illusion a multisensory conflict is induced by synchronous stroking of a visible rubber hand, placed in a natural anatomical position, and of the person’s own, unseen hand (Botvinick and Cohen 1998). The RHI has been demonstrated in numerous studies (see for example, Costantini and Haggard 2007; Durgin et al. 2007; Ehrsson et al. 2004; Farne et al. 2000; Lloyd 2007; Longo et al. 2008; Pavani et al. 2000; Tsakiris and Haggard 2005), but consistent effects on action responses have remained elusive. One possible explanation was that the body schema might be affected only when the conflict is induced in the way the body schema represents the body, i.e., for action. However, implementing active movement during induction of the RHI with a modified video paradigm (i.e., manipulating the feeling of agency) still did not produce significant illusion effects on the pointing task (Kammers et al. 2009c).

As such, no double dissociation between body representations has been found in the healthy brain. However, some studies report motor responses that are sensitive to bodily induced multimodal conflicts. Using the mirror illusion, Holmes and colleagues showed altered pointing responses after active or passive exposure to a mirror reflection of either the participant’s contralateral hand or a rubber hand (Holmes et al. 2004, 2006; Holmes and Spence 2005). We return to the implications of these findings in the Discussion. Nevertheless, the body image (perceptual judgment) and the body schema (motor response) still differ intriguingly in their susceptibility to certain bodily illusions. The body schema may still be more strongly affected when an illusion acts on incoming sensory information at the level at which the body schema operates.

In the present experiment we therefore introduced three new manipulations of the RHI. First, we stimulated the participant’s own hand and the rubber hand more globally, whereas the RHI is generally induced by stimulating only one finger. Tsakiris and Haggard (2005) showed that the RHI occurred only locally. Only the felt position of the stimulated finger drifted toward the rubber hand, although a non-stimulated finger could be affected when two stimulated fingers bordered it. In other words, the body image can be fragmented and local, whereas the body schema is hypothesized to be more holistic. However, no study has yet tested whether additional tactile stimulation of the hand has a differential effect on a motor response. We therefore stimulated the thumb as well as the index finger, in an attempt to target the motoric body representation. Second, we positioned the rubber hand in a new orientation, on its side in a ‘grasping orientation’. Instead of a horizontal conflict between the locations of the two hands, this created a vertical conflict between the grip aperture (distance between index finger and thumb) of the rubber hand and that of the participant’s own hand. Third, instead of a pointing task, we investigated the kinematics of a grasping response toward an external object. In addition, on half of the trials we implemented a perceptual scaling response to measure the consciously perceived grip aperture of the illuded hand (body image). We used only half of the trials because otherwise the delay between induction and grasping, the preceding perceptual response, or both might have mediated any effect on the motor task.

Methods

Participants

Eleven right-handed, healthy female university students participated in the experiment (mean age 24.1 years, SD 4.0). All participants gave written informed consent. Handedness was assessed with the Dutch handedness questionnaire (Van Strien 1992), and only participants with a score of 7 or more, indicating a strong right-hand preference in daily activities, were included. All participants were naive to the rationale of the experiment.

Experimental setup

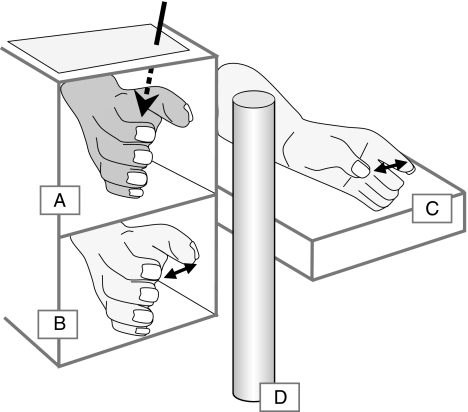

Participants stood in front of a high table, with their right forearm resting in the bottom compartment of a wooden framework. A right rubber hand, specially modified such that its grip aperture (the distance between index finger and thumb) could be adjusted, was placed in the top compartment, directly above the participant’s own hand (see Fig. 1).

Fig. 1.

Experimental setup as seen from the experimenter’s view. The rubber hand (a) is presented in a framework occluding the participant’s own hand (b). The participant’s left hand (c) was used on some trials to make a perceptual matching response, mimicking the perceived grip aperture of the right hand. Note that only the rubber hand was visible to the participant, through a layer of Plexiglas (dashed arrow). A cylinder which was also visible to the participant formed the reaching target (d), although actual grasping movements were made without visual feedback

The framework occluded the participant’s right forearm throughout the experiment. Participants also wore a black smock that hid their upper arms as well as their left hand and forearm. The rubber hand was visible through a Plexiglas cover in the top compartment. It was presented on its side, with the little finger resting on the table (ulnar side down, radial side pointing upwards). A grasping target, a cylinder of either 3 or 5 cm diameter, was visible 9 cm behind the framework. Participants stood on an adjustable platform so that they could comfortably rest their forearms with their elbows at approximately 90°. The left hand was placed on the table outside the framework, 5 cm higher than the right hand. Because the two hands did not rest on the same plane, the left hand could not serve as a height reference for the right. Participants were asked to relax and to refrain from moving their limbs during trials.

Design

Participants made grasping movements toward a target cylinder while the motion of their thumb and index finger was recorded. To induce conflict between the spatial configuration of the rubber hand and that of the participant’s own hand, we independently manipulated the starting grip aperture of each: either small (4 cm) or large (6 cm). This yielded two congruent conditions and two conflict conditions. Additionally, the diameter of the target cylinder was either small (3 cm) or large (5 cm). Finally, on half the trials a perceptual task preceded the grasping movement. In this task participants used their (occluded) left hand to indicate the perceived grip aperture of their stimulated right hand. Participants carried out 2 trials in each of the 16 (2 × 2 × 2 × 2) combinations of conditions. Trial order was counterbalanced in blocks across participants. The experiment was conducted in two separate sessions of about 2 h each.
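The factorial structure described above can be made concrete with a short enumeration. This is a minimal Python sketch, not the authors’ software. The dictionary keys are hypothetical names, and trial order and counterbalancing are deliberately left out because the exact blocking scheme is not specified.

```python
from itertools import product

# Factor levels as described in the Design section (apertures and diameters in cm).
# The key names are illustrative, not taken from any original analysis code.
FACTORS = {
    "own_hand_aperture": (4, 6),
    "rubber_hand_aperture": (4, 6),
    "target_diameter": (3, 5),
    "preceding_perceptual_response": (False, True),
}
TRIALS_PER_CELL = 2


def build_conditions():
    """Return the full 2 x 2 x 2 x 2 factorial list of condition dicts."""
    names = list(FACTORS)
    conditions = []
    for levels in product(*FACTORS.values()):
        cond = dict(zip(names, levels))
        # A cell is 'congruent' when the seen and felt starting apertures match.
        cond["congruent"] = cond["own_hand_aperture"] == cond["rubber_hand_aperture"]
        conditions.append(cond)
    return conditions


conditions = build_conditions()
# Two repetitions of every cell; ordering/counterbalancing is not modeled here.
trials = [c for c in conditions for _ in range(TRIALS_PER_CELL)]
print(len(conditions), len(trials))  # 16 32
print(sum(c["congruent"] for c in conditions))  # 8
```

Half of the 16 cells are congruent, because the own-hand and rubber-hand apertures match in 2 of their 4 combinations.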

Procedure

Rubber hand illusion induction

At the start of each trial, the RHI was induced by synchronously stroking the thumb and index finger of both the rubber hand and the participant’s hand, using two sets of identical paintbrushes. The experimenter delivered the stimulation manually for 60 s. During this period, participants had their eyes open and were instructed to watch the rubber hand. The participant could see the experimenter’s hand stroking the rubber hand but not the experimenter’s other hand. Participants were instructed to attend to the grip aperture of the rubber hand as well as to the grasping target. After induction of the illusion, participants were instructed to close their eyes, and their responses were recorded. Note that stimulation was synchronous in all conditions, so the RHI was induced on every trial.

Perceptual response

On half of the trials, participants were asked to report the perceived grip aperture of their stimulated right hand just before making the grasping movement (i.e., directly after induction of the RHI). They did so by mimicking the perceived grip aperture of their right hand with their non-stimulated, occluded left hand.

Grasping response

In each trial, participants grasped the vertical cylinder that had been visible during the induction phase of the trial. They were instructed to grasp the cylinder at its midpoint in a single, fluid motion. Once the movement was completed, the participant withdrew their hand and the experimenter realigned the participant’s hand with the rubber hand for the next trial. Note that participants had their eyes closed during the grasping movement. Visual feedback was therefore unavailable and the movement was memory-guided. The delay between induction of the illusion and the grasping response was ~2 s without a preceding perceptual judgment and ~10 s with one.

Kinematic recordings

All grasping movements were recorded with a miniBIRD (Ascension Technology) kinematic recording device, which sampled the positions of the participant’s thumb and index finger at 86 Hz. A trial was repeated, without informing the participant, if the grasping movement was not completed within 3 s or if the participant moved their hand after grasping the cylinder. Kinematic data were filtered with a second-order Butterworth filter. On each trial, the grasping movement was defined as the period during which the velocity of either marker exceeded 5 mm/s. The dependent variables of interest were maximum grip aperture (MGA), peak velocity (PV), time to maximum grip aperture (TTMGA), and time to peak velocity as a proportion of movement time (TTPV).
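The kinematic pipeline described above can be sketched as follows. This is a minimal, standard-library Python sketch under several assumptions, not the authors’ analysis code:

- The Butterworth cutoff frequency is not reported, so the usage note assumes a hypothetical 10 Hz.
- The filter is applied forward and then backward for zero phase lag.
- The movement runs from the first to the last inter-sample interval in which either marker exceeds 5 mm/s.
- PV is taken from the midpoint between the two markers, because the marker used for PV is not specified.

The function names are hypothetical.

```python
import math


def butter2_lowpass_zero_phase(signal, fs, cutoff):
    """Second-order Butterworth low-pass (bilinear transform with frequency
    pre-warping), applied forward and then backward for zero phase lag."""
    k = math.tan(math.pi * cutoff / fs)
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b1, b2 = 2.0 * b0, b0
    a1 = 2.0 * (k * k - 1.0) * norm
    a2 = (1.0 - math.sqrt(2.0) * k + k * k) * norm

    def one_pass(x):
        # Start the filter state at the first sample (i.e. assume the signal
        # was at rest before recording) to avoid a start-up transient.
        x1 = x2 = y1 = y2 = x[0]
        out = []
        for xn in x:
            yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
            x2, x1 = x1, xn
            y2, y1 = y1, yn
            out.append(yn)
        return out

    forward = one_pass(list(signal))
    return one_pass(forward[::-1])[::-1]


def _dist(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def grasp_kinematics(thumb, index, fs, threshold=5.0):
    """thumb, index: equal-length lists of (x, y, z) positions in mm,
    sampled at fs Hz (ideally already low-pass filtered)."""
    n = len(thumb)
    # Speed over each inter-sample interval i -> i+1, in mm/s.
    thumb_v = [_dist(thumb[i + 1], thumb[i]) * fs for i in range(n - 1)]
    index_v = [_dist(index[i + 1], index[i]) * fs for i in range(n - 1)]
    moving = [t > threshold or f > threshold for t, f in zip(thumb_v, index_v)]
    if not any(moving):
        raise ValueError("no interval exceeds the velocity threshold")
    first = moving.index(True)  # first supra-threshold interval
    last = len(moving) - 1 - moving[::-1].index(True)  # last one
    onset, offset = first, last + 1  # samples bounding the movement

    # Grip aperture = thumb-index distance; MGA is its maximum during movement.
    aperture = [_dist(t, f) for t, f in zip(thumb, index)]
    i_mga = max(range(onset, offset + 1), key=lambda i: aperture[i])

    # Transport speed from the thumb-index midpoint (an assumption).
    mid = [[(a + b) / 2 for a, b in zip(t, f)] for t, f in zip(thumb, index)]
    mid_v = [_dist(mid[i + 1], mid[i]) * fs for i in range(n - 1)]
    i_pv = max(range(first, last + 1), key=lambda i: mid_v[i])

    return {
        "MGA_mm": aperture[i_mga],
        "TTMGA_s": (i_mga - onset) / fs,
        "PV_mm_s": mid_v[i_pv],
        # Interval i spans samples i..i+1, so its speed is timed at i + 0.5.
        "TTPV_prop": (i_pv + 0.5 - onset) / (offset - onset),
    }
```

In use, each coordinate of each marker would be filtered separately before the positions are passed to `grasp_kinematics`. For example, `butter2_lowpass_zero_phase(thumb_x, 86, 10)` applies the hypothetical 10 Hz cutoff, and the filtered markers then go to `grasp_kinematics(thumb, index, 86)`.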

Results

Grasping response

Maximum grip aperture, time to maximum grip aperture, peak velocity, and time to peak velocity were each entered into a 2 × 2 × 2 × 2 repeated measures analysis of variance. The factors were OWN HAND (small/large), RUBBER HAND (small/large), TARGET SIZE (small/large), and PRECEDING PERCEPTUAL RESPONSE (yes/no). Each of the eleven participants contributed 16 data points, each the mean of two trials. PRECEDING PERCEPTUAL RESPONSE produced no significant main or interaction effects on any of the dependent variables, so trials with and without a preceding perceptual response are collapsed in all figures.
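Every factor in this design has exactly two levels, so each main effect or interaction has one numerator degree of freedom. Its F statistic therefore equals the square of a one-sample t statistic computed on per-participant contrast scores, with F(1, n − 1), here F(1, 10). The plain-Python sketch below illustrates that equivalence. It is not the statistics package used in the study, the function names and data layout are hypothetical, and it does not compute p-values.

```python
import math
import statistics


def contrast_sign(levels, effect):
    """+1/-1 weight of a cell for a main effect or interaction.

    levels: tuple of 0/1 codes, one per factor.
    effect: tuple of factor positions, e.g. (1,) or (0, 1)."""
    sign = 1
    for f in effect:
        sign *= 1 if levels[f] == 1 else -1
    return sign


def within_subject_F(data, effect):
    """F(1, n-1) for one effect in a 2 x ... x 2 repeated-measures design.

    data: one dict per participant, mapping a tuple of 0/1 factor codes to
    that participant's cell mean (here, the mean of two trials)."""
    scores = []
    for cells in data:
        plus = [v for lv, v in cells.items() if contrast_sign(lv, effect) > 0]
        minus = [v for lv, v in cells.items() if contrast_sign(lv, effect) < 0]
        # Per-participant contrast score for this effect.
        scores.append(statistics.mean(plus) - statistics.mean(minus))
    n = len(scores)
    t = statistics.mean(scores) / (statistics.stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)
```

For example, with factors ordered as (OWN HAND, RUBBER HAND, TARGET SIZE, PRECEDING PERCEPTUAL RESPONSE), passing `effect=(0, 1)` would give the OWN HAND × RUBBER HAND interaction. The sketch does not handle the edge case in which every participant has the same contrast score: the standard deviation is then zero and the division fails.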

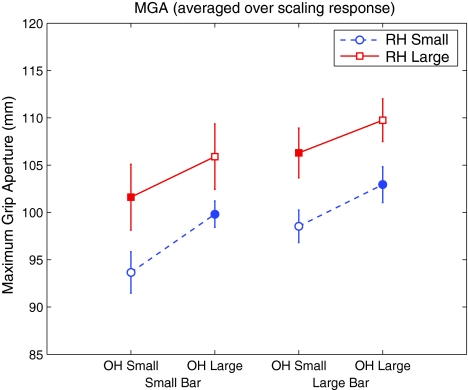

Maximum grip aperture

A significant main effect of TARGET SIZE on MGA was observed (F = 27.89, df = 1, p < 0.001) (Fig. 2): participants opened their hands further when reaching for a large target than when reaching for a small target (mean difference ± SD, 4.1 ± 2.6 mm). Furthermore, there was a significant main effect of OWN HAND: participants’ MGA was larger when the starting grip aperture of their own hand was large than when it was small (F = 59.8, df = 1, p < 0.001; mean difference ± SD, 4.6 ± 2.0 mm). Critically, a main effect of RUBBER HAND was observed: MGA was smaller when participants viewed a rubber hand with a small grip aperture than when the rubber hand had a large grip aperture (F = 6.92, df = 1, p = 0.025; mean difference ± SD, 7.1 ± 9.0 mm). There was no effect of PRECEDING PERCEPTUAL RESPONSE (F = 0.17, df = 1, p = 0.689), and none of the interactions were significant (all p > 0.246). These results are summarized in Fig. 2, which clearly shows that the grip aperture of the rubber hand affected the MGA of the grasping movement.

Fig. 2.

Maximum grip aperture (MGA) as a function of target size, for different starting grip apertures of the participant’s own hand (OH) and rubber hand (RH). Error bars depict standard errors of the mean. The vertical axis indicates the maximum distance between the motion sensors attached to participants’ thumb and finger, and is therefore a relative, rather than absolute measure of grip aperture. Open markers denote congruent conditions, and closed markers denote conflict conditions. Three main effects are evident: MGA increases with OH grip aperture, with RH grip aperture, and with target size

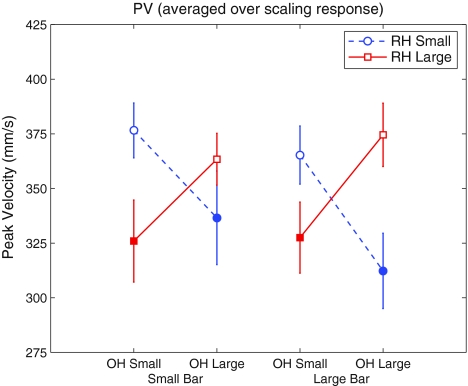

Peak velocity

No main effects were observed for PV (all p > 0.189). However, the two-way interaction between OWN HAND and RUBBER HAND was significant (F = 9.45, df = 1, p = 0.012) (Fig. 3). This was a congruency effect: PV was significantly lower when the grip aperture of the participant’s own hand did not match that of the rubber hand than when the two matched. In other words, grasping movements were slower when there was RHI-induced conflict than when there was none (mean difference ± SD, 44.4 ± 47.9 mm/s). Additionally, there was a significant interaction between RUBBER HAND and TARGET SIZE (F = 6.57, df = 1, p = 0.028). This too was a congruency effect. Grasping movements were faster when the rubber hand grip aperture and the grasping target were both small or both large, and slower when one was large and the other small. None of the other interactions were significant (all p > 0.277).
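The cell means show why a two-way interaction between two-level factors reads as a congruency effect. Let $\bar v_{oh,rh}$ denote mean PV, with the own-hand aperture first and the rubber-hand aperture second (S = small, L = large). This notation is introduced here for illustration only. The interaction contrast is the difference between the simple effects of RUBBER HAND at the two own-hand apertures, and it rearranges into congruent cells minus conflict cells:

```latex
\begin{aligned}
(\bar v_{LL} - \bar v_{LS}) - (\bar v_{SL} - \bar v_{SS})
  &= (\bar v_{SS} + \bar v_{LL}) - (\bar v_{SL} + \bar v_{LS}) \\
  &= 2\left[\tfrac{1}{2}(\bar v_{SS} + \bar v_{LL}) - \tfrac{1}{2}(\bar v_{SL} + \bar v_{LS})\right].
\end{aligned}
```

A positive value means faster movements in the congruent cells. The same rearrangement applies to the RUBBER HAND × TARGET SIZE interaction.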

Fig. 3.

Peak velocity (PV) as a function of target size, for different starting grip apertures of the participant’s own hand (OH) and rubber hand (RH). Error bars depict standard errors of the mean. The vertical axis indicates the maximum velocity of the grasping movement. Open markers denote congruent conditions, and closed markers denote conflict conditions. An interaction effect is evident: when OH grip aperture and RH grip aperture are in conflict, peak velocity is reduced compared to when OH grip aperture and RH grip aperture are congruent

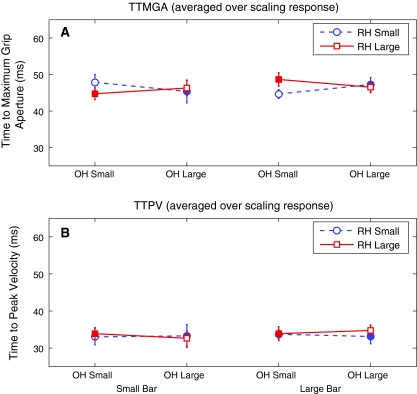

Time to maximum grip aperture and time to peak velocity

No significant effects were found for TTMGA, although the three-way interaction of OWN HAND, RUBBER HAND, and TARGET SIZE approached significance (F = 4.88, df = 1, p = 0.052). No other main or interaction effects approached significance (all p > 0.159) (Fig. 4a). No significant main or interaction effects were observed for TTPV (all p > 0.144) (Fig. 4b). Overall, both TTMGA and TTPV varied very little: in both cases, mean values across conditions fell within a range of just 5 ms. Finally, visual inspection of the grasping trajectories revealed no systematic differences between conditions beyond the effects on MGA and PV reported above.

Fig. 4.

Time to maximum grip aperture (TTMGA) (a) and time to peak velocity (TTPV) (b) as a function of target size, for different starting grip apertures of the participant’s own hand (OH) and rubber hand (RH). Error bars depict standard errors of the mean. Open markers denote congruent conditions, and closed markers denote conflict conditions. No significant effect was observed on either TTMGA or TTPV

Perceptual response

Perceptual matching responses were entered into a 2 × 2 analysis of variance with the grip aperture of the participant’s own right hand (small or large) and the grip aperture of the rubber hand (small or large) as factors. An expected main effect of OWN HAND was observed (F = 178.13, df = 1, p < 0.001): participants indicated a larger aperture with their left hand when the aperture of their right hand was large than when it was small (mean difference ± SD, 24.3 ± 6.0 mm). Importantly, however, we observed a main effect of RUBBER HAND (F = 19.21, df = 1, p = 0.001): participants indicated a larger grip aperture when viewing a rubber hand with a large grip aperture, than when viewing a rubber hand with a small grip aperture (mean difference ± SD, 8.9 ± 6.7 mm). The effect of the rubber hand was greater when the participant’s own grip aperture was large than when it was small (mean difference ± SD, 13.4 ± 9.7 and 4.3 ± 8.0 mm, respectively), as indicated by a significant interaction effect (F = 6.63, df = 1, p = 0.028). In sum, perceptual responses were affected in a way that followed the expected direction of the RHI.
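In a 2 × 2 within-subject design, a main effect is the average of the two simple effects, and the interaction is their difference. The reported values can therefore be cross-checked with simple arithmetic. This is a consistency check on the rounded means, not a new analysis:

```latex
\begin{aligned}
\text{RUBBER HAND main effect} &\approx \tfrac{1}{2}\,(13.4 + 4.3)\ \text{mm} = 8.85\ \text{mm} \approx 8.9\ \text{mm},\\
\text{difference between simple effects} &= (13.4 - 4.3)\ \text{mm} = 9.1\ \text{mm}.
\end{aligned}
```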

Perception–action interactions

On half the experimental trials, participants reported the perceived grip aperture of their stimulated right hand (by matching it with their left hand) before making the grasping movement. We observed no effect of this perceptual judgment on any of the four kinematic parameters of the subsequent movement (MGA, TTMGA, PV, and TTPV; all main effects p > 0.189, all interactions p > 0.144). As such, execution of the grasping movement appears unaffected by whether participants were required to make a preceding perceptual judgment.

Discussion

There are commonly considered to be at least two dissociable body representations in the brain: the body image and the body schema. In the present RHI study we investigated the susceptibility of the body schema, which is thought to underlie actions and has been found to be largely robust against bodily illusions. In order to do so, we (1) manipulated the amount of stimulation on the rubber hand and the participant’s own hand, (2) adjusted the spatial configuration of the rubber hand so it was in a ‘grasping configuration’, and (3) investigated a grasping rather than a pointing motor response.

The present study showed an RHI-dependent effect on perceptual scaling judgments, presumably subserved by the body image. It also demonstrates, for the first time, an RHI effect on kinematic parameters of a grasping movement. Specifically, we observed effects on both MGA and PV during the grasping movement. During the grasp, participants opened their hand in accordance with the grip aperture of the rubber hand. The rubber hand’s grip aperture thus had an effect comparable to that of the starting grip aperture of the participant’s own grasping hand.

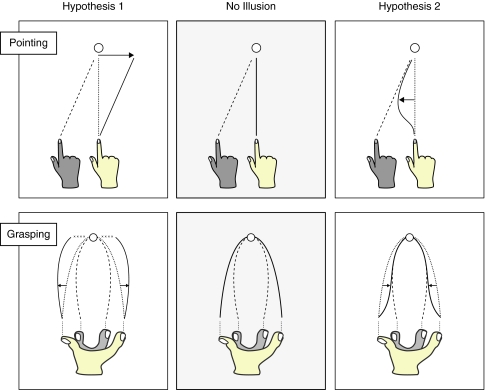

Interestingly, the effect on MGA ran in the opposite direction to that predicted for motor responses by other bodily illusions. Take the traditional RHI as an example. In a pointing task, the illusory displacement would be expected to produce end-point errors away from the rubber hand. Similar end-point errors occur in many manipulations of the mirror illusion and after prism adaptation (see for example Holmes et al. 2004; Kitazawa et al. 1997). The rationale is that the perceived starting position of the participant’s hand shifts toward the location of the visible rubber hand. A motor program to reach a target is therefore planned from this illuded starting position. Executing that program from the actual position of the participant’s hand then produces a pointing error away from the rubber hand (Fig. 5, top left panel). By the same reasoning, MGA should be larger when the participant views a rubber hand with a small grip aperture. The motor program would then have to include additional ‘opening’ from the smaller perceived starting aperture (Fig. 5, bottom left panel). Instead, we observed the opposite: MGA during the grasping movement was larger when participants viewed a rubber hand with a large grip aperture (Fig. 5, bottom right panel).
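A simple linear sketch makes the planning-from-illuded-posture hypothesis explicit. The symbols are introduced here for illustration and are not quantities estimated in the study:

- $a$ is the actual starting grip aperture.
- $r$ is the grip aperture of the rubber hand.
- $w \in (0, 1]$ is an assumed weight with which the perceived aperture $\hat a$ drifts toward the rubber hand.
- $A^{*}$ is the MGA appropriate for the target.

If the opening is planned from $\hat a$ but executed from $a$:

```latex
\begin{aligned}
\hat a &= (1 - w)\,a + w\,r, \\
\Delta &= A^{*} - \hat a, \\
\mathrm{MGA} &= a + \Delta = A^{*} + w\,(a - r),
\qquad \frac{\partial\,\mathrm{MGA}}{\partial r} = -w < 0 .
\end{aligned}
```

Under this sketch, a larger rubber hand aperture predicts a smaller MGA. The observed RUBBER HAND effect on MGA was positive: MGA was 7.1 mm larger with the large rubber hand aperture.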

Fig. 5.

Opposing hypotheses about possible illusion effects on motor responses in the traditional rubber hand illusion (upper panels) and on the present grasping task (lower panels). The light gray hand indicates the participant’s own hand (solid trajectory), and the dark gray hand indicates the rubber hand (dashed trajectory). Dotted lines indicate the expected movement trajectory in the absence of an illusion effect. Arrows indicate the direction of the illusion effect according to each hypothesis. Center panels show unaffected motor responses. Left panels show trajectories in which a motor program is planned from the illuded location or posture of the hand and then carried out from the actual location or posture. Conversely, right panels show trajectories in which a trajectory in space is planned and the motor system brings the limb onto this trajectory soon after the movement begins. Differences are exaggerated for clarity

To make accurate grasping or pointing/reaching movements, the brain needs to know the body’s correct starting position (Rossetti et al. 1995). When reaching without vision, we have only proprioceptive information about the starting position and configuration of the hand. When visual information about the veridical location of the hand is available, the motor response is generally more accurate than when it relies on proprioception alone (Newport et al. 2001; Rossetti et al. 1995; Wann and Ibrahim 1992). This visual information can, however, be altered by mirror illusions (Holmes et al. 2004, 2006; Holmes and Spence 2005), by prism adaptation (Jackson and Newport 2001; Kitazawa et al. 1997; Rossetti et al. 1998), or by showing a rubber hand (e.g., Botvinick and Cohen 1998). All of these methods induce a conflict between the proprioceptively perceived location of the participant’s hand and its visual location (or that of the rubber hand). However, as mentioned earlier, the effects of such illusion-induced multisensory conflict on subsequent reaching or pointing movements have been inconsistent.

Holmes and colleagues have shown altered end-point errors when the unseen (illuded) hand reaches to a visual target, in several well-designed manipulations of the mirror illusion (e.g., Holmes et al. 2004; Holmes et al. 2006; Holmes and Spence 2005). To date, however, we have been unable to induce any RHI-dependent effects on kinematic parameters of reaching movements made either toward or with the illuded hand. Our RHI paradigms differ markedly from the mirror illusion in the discrepancy between the seen and the proprioceptively perceived hand location. This discrepancy averages 15 cm in the mirror illusion paradigms (Holmes et al. 2004; Holmes et al. 2006), compared with ~30 cm in our RHI experiments. In our experiments the rubber hand was moreover often presented on the body midline (Kammers et al. 2009a, b, c). Perhaps a large discrepancy between visual and proprioceptive information about the hand’s location limits the impact of bodily illusions on the motoric body representation. The perceptual body representation has been found to represent the body in a fragmented, local way, and may therefore depend less on the size of this multimodal discrepancy. In any case, it is striking that the present RHI experiment reveals effects on a motor response with an induced conflict of just 2 cm (the difference between the grip apertures of the rubber hand and the participant’s own hand).

An alternative explanation might be that bodily illusions affect the location and the posture of the hand differently. This hypothesis is supported by the finding that a postural mismatch between the visually and the proprioceptively perceived hand reduced the effect of the mirror illusion on reaching movements (Holmes et al. 2006). More specifically, Holmes et al. (2006) showed enhanced end-point errors after exposure to the reflection of either a rubber hand or the participant’s contralateral hand in a conflicting location. These illusion-dependent end-point biases were reduced when the posture of the rubber hand (palm up) did not match that of the participant’s unseen (illuded) hand (palm down) (Experiment 2). However, no such reduction was found when the posture of the participant’s own hand was manipulated (Experiment 3). Combined, these results suggest that the effects of exposure to the reflection of the rubber hand are “an exclusively visual phenomenon, with no significant contribution from postural information relating to the unseen hand” (Holmes et al. 2006, page 12 of 30).

This is in line with the present results for two reasons. First, the rubber hand and the participant’s own hand had the same starting location in the horizontal plane, so the participant could reach the presented object without vision. The difference between the vertical locations of the two hands is irrelevant to the task, because the object is tall and stands in front of both the unseen own hand and the rubber hand. Second, the results of Holmes et al. (2006) are consistent with our finding that the visible posture (i.e., grip aperture) of the rubber hand overrides the starting posture of the participant’s own hand. The perceived position of our hands is known to be a weighted sum of visual and proprioceptive information (van Beers et al. 1999, 2002). The present study suggests that visual information about a hand’s postural configuration can override its proprioceptively perceived configuration, even when the hand’s horizontal location is not manipulated. This override is strong enough to affect subsequently planned trajectories.
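In its simplest one-dimensional form, the weighted-sum account cited here is the minimum-variance (maximum-likelihood) combination of two independent, unbiased Gaussian estimates. Each cue is weighted in inverse proportion to its variance. The symbols below are illustrative, with $a_V$ the seen aperture and $a_P$ the felt aperture. The present study did not estimate these variances.

```latex
\hat a = w_V\,a_V + w_P\,a_P, \qquad
w_V = \frac{\sigma_P^{2}}{\sigma_V^{2} + \sigma_P^{2}}, \qquad
w_P = 1 - w_V, \qquad
\sigma_{\hat a}^{2} = \frac{\sigma_V^{2}\,\sigma_P^{2}}{\sigma_V^{2} + \sigma_P^{2}} .
```

In this formulation, vision “overriding” proprioception corresponds to $w_V$ approaching 1. This happens when the visual estimate is much less variable than the proprioceptive one ($\sigma_V^{2} \ll \sigma_P^{2}$).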

In addition to MGA, we observed an effect of the RHI on PV. PV during grasping was lower when the starting grip apertures of the participant's own hand and the rubber hand were in conflict than when they were in agreement. Reductions in PV have previously been related to reaching uncertainty (Jeannerod 1986), suggesting that the motor system was to some degree able to detect the incongruence between the perceived and veridical grip apertures. One might speculate that grip aperture incongruence led to reductions in PV because the body schema may encode limb posture mainly on the basis of visual information and, when presented with a conflicting proprioceptive update, slows the movement in order to adjust the grip aperture to the originally calibrated (seen) aperture. Note, however, that such an uncertainty explanation cannot account for the observed effect of rubber hand grip aperture on MGA. Uncertainty resulting from grip aperture conflict might be expected to manifest as an interaction effect (an increase in MGA in incongruent conditions). Instead, we observed a main effect of rubber hand grip aperture, indicating that participants were not simply opening their hand wider when they were uncertain. Participants shaped their hand according to the grip aperture of the rubber hand (as if it were their own), indicating that the underlying motoric body representation was affected, rather than that participants were merely less certain in conflict conditions.
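The distinction between a main effect and an interaction can be made concrete with the contrasts of a 2 × 2 design. The sketch below assumes hypothetical factors (starting grip aperture of the participant's own hand × grip aperture of the rubber hand, each "closed" or "open") and invented cell means. It contrasts the pattern expected if the hand is shaped after the rubber hand with the pattern expected if participants simply open their hand wider under conflict. It illustrates the reasoning only and is not the authors' statistical analysis.

```python
def effects_2x2(means):
    """Main effects and interaction contrast for a 2 x 2 design.

    `means` maps (own_hand, rubber_hand) -> cell mean, with levels 'closed'/'open'.
    """
    cc = means[("closed", "closed")]
    co = means[("closed", "open")]
    oc = means[("open", "closed")]
    oo = means[("open", "open")]
    # Main effect of own-hand aperture: open minus closed, averaged over rubber-hand levels.
    own_effect = ((oc + oo) - (cc + co)) / 2
    # Main effect of rubber-hand aperture: open minus closed, averaged over own-hand levels.
    rubber_effect = ((co + oo) - (cc + oc)) / 2
    # Interaction (difference of differences) = congruent cells minus incongruent cells.
    interaction = (cc + oo) - (co + oc)
    return own_effect, rubber_effect, interaction


# Hypothetical MGA means (mm). Pattern A: the hand is shaped after the rubber hand.
follows_rubber_hand = {("closed", "closed"): 80, ("closed", "open"): 90,
                       ("open", "closed"): 80, ("open", "open"): 90}
# Pattern B: the hand opens wider whenever the two apertures conflict.
wider_when_uncertain = {("closed", "closed"): 80, ("closed", "open"): 90,
                        ("open", "closed"): 90, ("open", "open"): 80}

print(effects_2x2(follows_rubber_hand))   # (0.0, 10.0, 0)
print(effects_2x2(wider_when_uncertain))  # (0.0, 0.0, -20)
```

Only pattern B yields a non-zero interaction and no main effect, which is why an uncertainty account predicts an interaction rather than a main effect of rubber hand grip aperture.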

Finally, there was also an RHI effect on the perceptual scaling judgments (thought to be subserved by the body image). Again, participants over- or underestimated the starting grip aperture of their own hand according to the seen grip aperture of the rubber hand. The fact that the RHI in this experiment affected a perceptual task as well as a motor task could be taken as evidence against the theory of two different underlying body representations, since the robustness of the body schema and the susceptibility of the body image to bodily illusions have been among the grounds for distinguishing them. However, we have previously shown that a motor task can affect subsequent perceptual judgments (Kammers et al. 2009a), whereas here we find no effect of a preceding perceptual judgment on subsequent motor responses. This asymmetry could be interpreted as converging evidence that the two tasks used in the present experiment do indeed depend on dissociable body representations.

In sum, the present experiment shows that the motoric body representation can be sensitive to the RHI, as evidenced by two distinct effects on kinematic parameters of a grasping movement (MGA and PV). This was achieved by applying more holistic stimulation, manipulating postural rather than location information, and investigating grasping rather than pointing movements. These findings suggest that although perceptual and motor tasks might be subserved by different body representations, the motoric body representation is not intrinsically robust to bodily illusions. Further research on the holistic or global nature of the motoric body representation, as well as on the nature of its susceptibility to bodily illusions, might shed more light on the precise way in which it represents our body (as an object or as a moving subject), external space, and the interaction between the two.
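For readers less familiar with the two kinematic parameters, the sketch below shows one common way of deriving maximum grip aperture (MGA) and peak velocity (PV) from motion-tracking data. It assumes hypothetical markers on the thumb, index finger, and wrist sampled at a fixed rate, takes PV from the wrist (transport) marker, and uses unfiltered finite differences for speed; real pipelines usually low-pass filter trajectories before differentiating. The function names are hypothetical, and this is not presented as the authors' processing method. `math.dist` requires Python 3.8 or later.

```python
import math


def grip_aperture(thumb, index):
    """Thumb-index distance at each sample; markers are (x, y, z) tuples."""
    return [math.dist(t, i) for t, i in zip(thumb, index)]


def tangential_speed(positions, fs_hz):
    """Speed between consecutive samples (position units per second)."""
    # Distance per sample multiplied by the sampling rate = distance per second.
    return [math.dist(p0, p1) * fs_hz for p0, p1 in zip(positions, positions[1:])]


def mga_and_pv(thumb, index, wrist, fs_hz):
    """Return (maximum grip aperture, peak wrist velocity) for one movement."""
    mga = max(grip_aperture(thumb, index))
    pv = max(tangential_speed(wrist, fs_hz))
    return mga, pv


# Toy trajectory in mm, four samples at a hypothetical 100 Hz.
thumb = [(0, 0, 0)] * 4
index = [(20, 0, 0), (60, 0, 0), (80, 0, 0), (50, 0, 0)]
wrist = [(0, 0, 0), (1, 0, 0), (3, 0, 0), (4, 0, 0)]
print(mga_and_pv(thumb, index, wrist, fs_hz=100))  # (80.0, 200.0)
```

In this toy example the aperture peaks before the fingers close again, as MGA typically does during the approach phase, and PV is expressed in mm/s because positions are in mm and the rate is in Hz.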

Acknowledgments

This research was supported by a VIDI grant from the Netherlands Organization for Scientific Research (NWO) to H.C.D. (No. 452-03-325). Additional support was provided to M.K. by a Medical Research Council–Economic and Social Research Council (MRC–ESRC) fellowship (G0800056/86947).

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

References

- Berlucchi G, Aglioti S. The body in the brain: neural bases of corporeal awareness. Trends Neurosci. 1997;20(12):560–564. doi: 10.1016/S0166-2236(97)01136-3.

- Botvinick M, Cohen J. Rubber hands 'feel' touch that eyes see. Nature. 1998;391(6669):756. doi: 10.1038/35784.

- Coslett HB, Lie E. Bare hands and attention: evidence for a tactile representation of the human body. Neuropsychologia. 2004;42(14):1865–1876. doi: 10.1016/j.neuropsychologia.2004.06.002.

- Costantini M, Haggard P. The rubber hand illusion: sensitivity and reference frame for body ownership. Conscious Cogn. 2007;16(2):229–240. doi: 10.1016/j.concog.2007.01.001.

- Dijkerman HC, de Haan EH. Somatosensory processes subserving perception and action. Behav Brain Sci. 2007;30(2):189–201. doi: 10.1017/S0140525X07001392.

- Durgin FH, Evans L, Dunphy N, Klostermann S, Simmons K. Rubber hands feel the touch of light. Psychol Sci. 2007;18(2):152–157. doi: 10.1111/j.1467-9280.2007.01865.x.

- Ehrsson HH, Spence C, Passingham RE. That's my hand! Activity in premotor cortex reflects feeling of ownership of a limb. Science. 2004;305(5685):875–877. doi: 10.1126/science.1097011.

- Farne A, Pavani F, Meneghello F, Ladavas E. Left tactile extinction following visual stimulation of a rubber hand. Brain. 2000;123(Pt 11):2350–2360. doi: 10.1093/brain/123.11.2350.

- Felician O, Ceccaldi M, Didic M, Thinus-Blanc C, Poncet M. Pointing to body parts: a double dissociation study. Neuropsychologia. 2003;41(10):1307–1316. doi: 10.1016/S0028-3932(03)00046-0.

- Gallagher S. Body image and body schema. J Mind Behav. 1986;7:541–554.

- Gallagher S. How the body shapes the mind. New York: Oxford University Press; 2005.

- Gallagher S, Cole J. Body schema and body image in a deafferented subject. J Mind Behav. 1995;16:369–390.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15(1):20–25. doi: 10.1016/0166-2236(92)90344-8.

- Head H, Holmes HG. Sensory disturbances from cerebral lesions. Brain. 1911–1912;34:102–254.

- Holmes NP, Spence C. Visual bias of unseen hand position with a mirror: spatial and temporal factors. Exp Brain Res. 2005;166(3–4):489–497. doi: 10.1007/s00221-005-2389-4.

- Holmes NP, Crozier G, Spence C. When mirrors lie: "visual capture" of arm position impairs reaching performance. Cogn Affect Behav Neurosci. 2004;4(2):193–200. doi: 10.3758/CABN.4.2.193.

- Holmes NP, Snijders HJ, Spence C. Reaching with alien limbs: visual exposure to prosthetic hands in a mirror biases proprioception without accompanying illusions of ownership. Percept Psychophys. 2006;68(4):685–701. doi: 10.3758/bf03208768.

- Jackson SR, Newport R. Prism adaptation produces neglect-like patterns of hand path curvature in healthy adults. Neuropsychologia. 2001;39(8):810–814. doi: 10.1016/S0028-3932(01)00015-X.

- Jeannerod M. Mechanisms of visuomotor coordination: a study in normal and brain-damaged subjects. Neuropsychologia. 1986;24:41–78. doi: 10.1016/0028-3932(86)90042-4.

- Kammers MPM, van der Ham IJ, Dijkerman HC. Dissociating body representations in healthy individuals: differential effects of a kinaesthetic illusion on perception and action. Neuropsychologia. 2006;44(12):2430–2436. doi: 10.1016/j.neuropsychologia.2006.04.009.

- Kammers MPM, de Vignemont F, Verhagen L, Dijkerman HC. The rubber hand illusion in action. Neuropsychologia. 2009a;47(1):204–211. doi: 10.1016/j.neuropsychologia.2008.07.028.

- Kammers MPM, Verhagen L, Dijkerman HC, Hogendoorn H, De Vignemont F, Schutter D. Is this hand for real? Attenuation of the rubber hand illusion by transcranial magnetic stimulation over the inferior parietal lobule. J Cogn Neurosci. 2009b. doi: 10.1162/jocn.2009.21095.

- Kammers MPM, Longo MR, Tsakiris M, Dijkerman HC, Haggard P. Specificity and coherence of body representations. Perception. 2009c;38(4):1804–1820. doi: 10.1068/p6389.

- Kitazawa S, Kimura T, Uka T. Prism adaptation of reaching movements: specificity for the velocity of reaching. J Neurosci. 1997;17(4):1481–1492. doi: 10.1523/JNEUROSCI.17-04-01481.1997.

- Lloyd DM. Spatial limits on referred touch to an alien limb may reflect boundaries of visuo-tactile peripersonal space surrounding the hand. Brain Cogn. 2007;64(1):104–109. doi: 10.1016/j.bandc.2006.09.013.

- Longo MR, Schuur F, Kammers MP, Tsakiris M, Haggard P. What is embodiment? A psychometric approach. Cognition. 2008;107:978–998. doi: 10.1016/j.cognition.2007.12.004.

- Newport R, Hindle JV, Jackson SR. Links between vision and somatosensation. Vision can improve the felt position of the unseen hand. Curr Biol. 2001;11(12):975–980. doi: 10.1016/S0960-9822(01)00266-4.

- Paillard J. Knowing where and knowing how to get there. In: Paillard J, editor. Brain and space, chap 24. Oxford: Oxford University Press; 1991. pp. 461–481.

- Paillard J. Body schema and body image: a double dissociation in deafferented patients. In: Gantchev GN, Mori S, Massion J, editors. Motor control, today and tomorrow. Sofia: Academic Publishing House; 1999. pp. 197–214.

- Pavani F, Spence C, Driver J. Visual capture of touch: out-of-the-body experiences with rubber gloves. Psychol Sci. 2000;11(5):353–359. doi: 10.1111/1467-9280.00270.

- Rossetti Y, Desmurget M, Prablanc C. Vectorial coding of movement: vision, proprioception, or both? J Neurophysiol. 1995;74(1):457–463. doi: 10.1152/jn.1995.74.1.457.

- Rossetti Y, Rode G, Pisella L, Farne A, Li L, Boisson D, et al. Prism adaptation to a rightward optical deviation rehabilitates left hemispatial neglect. Nature. 1998;395(6698):166–169. doi: 10.1038/25988.

- Rossetti Y, Rode G, Boisson D. Numbsense: a case study and implications. In: De Gelder B, De Haan EHF, Heywood CA, editors. Out of mind, varieties of unconscious processes; 2001. pp. 265–292.

- Scheidt RA, Conditt MA, Secco EL, Mussa-Ivaldi FA. Interaction of visual and proprioceptive feedback during adaptation of human reaching movements. J Neurophysiol. 2005;93(6):3200–3213. doi: 10.1152/jn.00947.2004.

- Schwoebel J, Coslett HB. Evidence for multiple, distinct representations of the human body. J Cogn Neurosci. 2005;17(4):543–553. doi: 10.1162/0898929053467587.

- Sirigu A, Grafman J, Bressler K, Sunderland T. Multiple representations contribute to body knowledge processing. Evidence from a case of autotopagnosia. Brain. 1991;114(Pt 1B):629–642. doi: 10.1093/brain/114.1.629.

- Tsakiris M, Haggard P. The rubber hand illusion revisited: visuotactile integration and self-attribution. J Exp Psychol Hum Percept Perform. 2005;31(1):80–91. doi: 10.1037/0096-1523.31.1.80.

- van Beers RJ, Sittig AC, Denier van der Gon JJ. The precision of proprioceptive position sense. Exp Brain Res. 1998;122(4):367–377. doi: 10.1007/s002210050525.

- van Beers RJ, Sittig AC, Denier van der Gon JJ. Integration of proprioceptive and visual position-information: an experimentally supported model. J Neurophysiol. 1999;81(3):1355–1364. doi: 10.1152/jn.1999.81.3.1355.

- van Beers RJ, Wolpert DM, Haggard P. When feeling is more important than seeing in sensorimotor adaptation. Curr Biol. 2002;12(10):834–837. doi: 10.1016/S0960-9822(02)00836-9.

- Van Strien JW. Classificatie van links- en rechtshandige proefpersonen [Classification of left- and right-handed participants]. Nederlands Tijdschrift voor de Psychologie en haar Grensgebieden. 1992;47:88–92.

- Wann JP, Ibrahim SF. Does limb proprioception drift? Exp Brain Res. 1992;91(1):162–166. doi: 10.1007/BF00230024.