Abstract

Local polynomial estimators are popular techniques for nonparametric regression estimation and have received great attention in the literature. Their simplest version, the local constant estimator, can be easily extended to the errors-in-variables context by exploiting its similarity with the deconvolution kernel density estimator. The generalization of the higher order versions of the estimator, however, is not straightforward and has remained an open problem for the last 15 years. We propose an innovative local polynomial estimator of any order in the errors-in-variables context, derive its design-adaptive asymptotic properties and study its finite sample performance on simulated examples. We not only provide a solution to a long-standing open problem, but also make methodological contributions to errors-in-variables regression, including local polynomial estimation of derivative functions.

Keywords: Bandwidth selector, Deconvolution, Inverse problems, Local polynomial, Measurement errors, Nonparametric regression, Replicated measurements

1. INTRODUCTION

Local polynomial estimators are popular techniques for nonparametric regression estimation. Their simplest version, the local constant estimator, can be easily extended to the errors-in-variables context by exploiting its similarity with the deconvolution kernel density estimator. The generalization of the higher order versions of the estimator, however, is not straightforward and has remained an open problem for the last 15 years, since the publication of Fan and Truong (1993). The purpose of this article is to describe a solution to this long-standing open problem; we also make methodological contributions to errors-in-variables regression, including local polynomial estimation of derivative functions.

Suppose we have an iid sample (X1, Y1), …, (Xn, Yn) distributed like (X, Y), and we want to estimate the regression curve m(x) = E(Y|X = x) or its νth derivative m(ν)(x). Let K be a kernel function and h > 0 a smoothing parameter called the bandwidth. When X is observable, at each point x, the local polynomial estimator of order p approximates the function m by a pth order polynomial $m_p(z) \equiv \sum_{k=0}^{p}\beta_{x,k}(z - x)^k$, where the local parameters βx = (βx,0, …, βx,p)⊤ are fitted locally by a weighted least squares regression problem, via minimization of

$$\sum_{j=1}^{n}\{Y_j - m_p(X_j)\}^2 K_h(X_j - x), \tag{1}$$

where Kh(x) = h−1K(x/h). Then m(x) is estimated by m̂(x) = β̂x,0 and m(ν)(x) is estimated by $\hat m^{(\nu)}(x) = \nu!\,\hat\beta_{x,\nu}$ (see Fan and Gijbels 1996). Local polynomial estimators of order p > 0 have many advantages over other nonparametric estimators, such as the Nadaraya-Watson estimator (p = 0). One of their attractive features is their capacity to adapt automatically to the boundary of the design points, thereby offering the potential of bias reduction with little or no variance increase.
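To make (1) concrete, here is a minimal Python sketch of the error-free local polynomial estimator: it solves the weighted least squares problem directly and returns ν! β̂x,ν. The function name `local_poly` and the choice of a Gaussian kernel K are illustrative assumptions, not part of the method.

```python
import math

import numpy as np


def local_poly(x0, X, Y, h, p=1, nu=0):
    """Error-free local polynomial estimate of m^(nu)(x0).

    Minimizes sum_j {Y_j - sum_k beta_k (X_j - x0)^k}^2 K_h(X_j - x0) and
    returns nu! * beta_nu.  K is taken to be Gaussian (an assumption).
    """
    d = np.asarray(X, dtype=float) - x0
    weights = np.exp(-0.5 * (d / h) ** 2) / (h * math.sqrt(2.0 * math.pi))  # K_h(X_j - x0)
    design = np.vander(d, p + 1, increasing=True)  # columns (X_j - x0)^k, k = 0..p
    sw = np.sqrt(weights)
    # Weighted least squares = ordinary least squares on sqrt-weighted rows
    beta, *_ = np.linalg.lstsq(design * sw[:, None], np.asarray(Y, dtype=float) * sw, rcond=None)
    return math.factorial(nu) * beta[nu]
```

Because a local polynomial of order p reproduces any polynomial of degree at most p exactly, fitting p = 3 to noiseless data Y = 1 + 2X − X³ recovers m(0.3) = 1.573, m′(0.3) = 1.73 and m″(0.3) = −1.8 up to rounding, whatever the bandwidth.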

In this article, we consider the more difficult errors-in-variables problem, where the goal is still to estimate the curve m(x) or its derivative m(ν)(x), but the only observations available are an iid sample (W1, Y1), …, (Wn, Yn) distributed like (W, Y), where W = X + U with U independent of X and Y. Here, X is not observable and instead we observe W, which is a version of X contaminated by a measurement error U with density fU. In this context, when p = 0, mp(Xj) = βx,0, and a consistent estimator of m can simply be obtained after replacing the weights Kh(Xj − x) in (1) by appropriate weights depending on Wj (see Fan and Truong 1993). For p > 0, however,

$$m_p(X_j) = \sum_{k=0}^{p}\beta_{x,k}(X_j - x)^k$$

depends on the unobserved Xj. As a result, despite the popularity of the measurement error problem, no one has yet been able to extend the minimization problem (1) and the corresponding local pth order polynomial estimators for p > 0 to the case of contaminated data. An exception is the recent article by Zwanzig (2007), who constructed a local linear estimator of m in the context where the Ui's are normally distributed, the density of the Xi's is known to be uniform U[0, 1], and the curve m is supported on [0, 1].

We propose a solution to the general problem and thus generalize local polynomial estimators to the errors-in-variables case. The methodology consists of constructing simple unbiased estimators of the terms depending on Xj, which are involved in the calculation of the usual local polynomial estimators. Our approach also provides an elegant estimator of the derivative functions in the errors-in-variables setting.

The errors-in-variables regression problem has been considered by many authors in both the parametric and the non-parametric context. See, for example, Fan and Masry (1992), Cook and Stefanski (1994), Stefanski and Cook (1995), Ioannides and Alevizos (1997), Koo and Lee (1998), Carroll, Maca, and Ruppert (1999), Stefanski (2000), Taupin (2001), Berry, Carroll, and Ruppert (2002), Carroll and Hall (2004), Staudenmayer and Ruppert (2004), Liang and Wang (2005), Comte and Taupin (2007), Delaigle and Meister (2007), Hall and Meister (2007), and Delaigle, Hall, and Meister (2008); see also Carroll, Ruppert, Stefanski, and Crainiceanu (2006) for an exhaustive review of this problem.

2. METHODOLOGY

In this section, we will first review local polynomial estimators in the error-free case to show exactly what has to be solved in the measurement error problem. After that, we give our solution.

2.1 Local Polynomial Estimator in the Error-free Case

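In the usual error-free case (i.e., when the Xi's are observable), the local polynomial estimator of m(ν)(x) of order p can be written in matrix notation as

$$\hat m^{(\nu)}(x) = \nu!\,e_{\nu+1}^\top(\mathbf{X}^\top\mathbf{K}\mathbf{X})^{-1}\mathbf{X}^\top\mathbf{K}\,\mathbf{y},$$

where $e_{\nu+1} = (0, \ldots, 0, 1, 0, \ldots, 0)^\top$ with 1 on the (ν + 1)th position, y⊤ = (Y1, …, Yn), X = {(Xi − x)^j}1≤i≤n,0≤j≤p and K = diag{Kh(Xi − x)} (e.g., see Fan and Gijbels 1996, p. 59).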

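Using standard calculations, this estimator can be written in various equivalent ways. An expression that will be particularly useful in the context of contaminated data, where we observe neither X nor K, is the one used in Fan and Masry (1997), which follows from the equivalent kernel calculations of Fan and Gijbels (1996, p. 63). Let

$$S_n = \{S_{n,j+l}(x)\}_{0\le j,l\le p}, \qquad T_n = \{T_{n,0}(x), \ldots, T_{n,p}(x)\}^\top,$$

where

$$S_{n,k}(x) = \frac{1}{n}\sum_{j=1}^{n}\left(\frac{X_j - x}{h}\right)^k K_h(X_j - x), \qquad T_{n,k}(x) = \frac{1}{n}\sum_{j=1}^{n}Y_j\left(\frac{X_j - x}{h}\right)^k K_h(X_j - x).$$

Then the local polynomial estimator of m(ν)(x) of order p can be written as

$$\hat m^{(\nu)}(x) = \nu!\,h^{-\nu}e_{\nu+1}^\top S_n^{-1}T_n.$$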

2.2 Extension to the Error-case

Our goal is to extend this estimator to the errors-in-variables setting, where the data are a sample (W1, Y1), …, (Wn, Yn) of contaminated iid observations coming from the model

$$Y_j = m(X_j) + \eta_j, \qquad W_j = X_j + U_j, \qquad U_j \sim f_U, \tag{2}$$

where Uj are the measurement errors, independent of (Xj, Yj, ηj), and fU is known.

For p = 0, a rate-optimal estimator has been developed by Fan and Truong (1993). Their technique is similar to the one employed in density deconvolution problems studied in Stefanski and Carroll (1990) (see also Carroll and Hall 1988). It consists of replacing the unobserved Kh(Xj − x) by an observable quantity Lh(Wj − x) satisfying

$$E\{L_h(W_j - x)\mid X_j\} = K_h(X_j - x).$$

In the usual nomenclature of measurement error models, this means that Lh(Wj − x) is an unbiased score for the kernel function Kh(Xj − x).

Following this idea, we would like to replace (Xj − x)kKh(Xj − x) in Sn,k and Tn,k by (Wj − x)kLk,h(Wj − x), where Lk,h(x) = h−1Lk(x/h), and each Lk potentially depends on h and satisfies

$$E\{(W_j - x)^k L_{k,h}(W_j - x)\mid X_j\} = (X_j - x)^k K_h(X_j - x). \tag{3}$$

That is, we propose to find unbiased scores for all components of the kernel functions. Thus, using the substitution principle, we propose to estimate m(ν)(x) by

$$\hat m_p^{(\nu)}(x) = \nu!\,h^{-\nu}e_{\nu+1}^\top\hat S_n^{-1}\hat T_n, \tag{4}$$

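where $\hat S_n = \{\hat S_{n,j+l}(x)\}_{0\le j,l\le p}$ and $\hat T_n = \{\hat T_{n,0}(x), \ldots, \hat T_{n,p}(x)\}^\top$ with

$$\hat S_{n,k}(x) = \frac{1}{n}\sum_{j=1}^{n}\left(\frac{W_j - x}{h}\right)^k L_{k,h}(W_j - x), \qquad \hat T_{n,k}(x) = \frac{1}{n}\sum_{j=1}^{n}Y_j\left(\frac{W_j - x}{h}\right)^k L_{k,h}(W_j - x).$$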

The method explained above seems relatively straightforward, but its actual implementation is difficult, which is why the problem has remained unsolved for more than 15 years. The main difficulty is that it is very hard to find an explicit solution Lk,h(·) to the integral Equation (3). In addition, it is not clear a priori that the solution will be independent of other quantities such as Xj, x, and other population parameters.

The key to finding the solution is the Fourier transform. Instead of solving (3) directly, we solve its Fourier version

$$\varphi_{(\cdot)^k L_k}(t)\,\varphi_U(-t/h) = \varphi_{(\cdot)^k K}(t), \tag{5}$$

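where, for a function g, we let φg denote its Fourier transform, $\varphi_g(t) = \int e^{itu}g(u)\,du$, and $(\cdot)^k g$ denotes the function $u \mapsto u^k g(u)$, whereas for a random variable T, we let φT denote the characteristic function of its distribution.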
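The step from (3) to (5) can be checked directly. The sketch below assumes the convention φg(t) = ∫ e^{itu} g(u) du, writes (3) as E{(Wj − x)^k Lk,h(Wj − x) | Xj} = (Xj − x)^k Kh(Xj − x), and uses only that Uj is independent of Xj; the auxiliary names gL, gK and z are introduced for this derivation alone, and gL, gK are assumed integrable.

$$
\begin{aligned}
&\text{Multiply (3) by } h^{1-k} \text{ and put } z = \tfrac{X_j - x}{h},\; g_L(u) = u^k L_k(u),\; g_K(u) = u^k K(u):\\
&\qquad \int g_L\!\left(z + \tfrac{s}{h}\right) f_U(s)\,ds = g_K(z).\\
&\text{Fourier transform in } z \text{ (substitute } v = z + s/h\text{):}\\
&\qquad \int e^{itz}\!\int g_L\!\left(z + \tfrac{s}{h}\right) f_U(s)\,ds\,dz
 = \int e^{itv} g_L(v)\,dv \int e^{-its/h} f_U(s)\,ds
 = \varphi_{g_L}(t)\,\varphi_U(-t/h),\\
&\text{so (3) holds for every value of } X_j \text{ exactly when } \varphi_{(\cdot)^k L_k}(t)\,\varphi_U(-t/h) = \varphi_{(\cdot)^k K}(t).
\end{aligned}
$$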

We make the following basic assumptions:

Condition A: ∫|φX| < ∞; φU(t) ≠ 0 for all t; $\varphi_K^{(l)}(\cdot)$ is not identically zero and $\int|\varphi_K^{(l)}(t)/\varphi_U(t/h)|\,dt < \infty$ for all h > 0 and 0 ≤ l ≤ 2p.

Condition A generalizes standard conditions of the deconvolution literature, where it is assumed to hold for p = 0. It is easy to find kernels that satisfy this condition. For example, kernels defined by φK(t) = (1 − t2)q·1[−1,1](t), with q ≥ 2p, satisfy this condition.

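Under these conditions, we show in the Appendix that the solution to (5) is found by taking Lk (in the definition of Lk,h) equal to

$$L_k(x) = x^{-k}K_{U,k}(x),$$

with

$$K_{U,k}(x) = i^{-k}\,\frac{1}{2\pi}\int e^{-itx}\,\frac{\varphi_K^{(k)}(t)}{\varphi_U(-t/h)}\,dt.$$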

In other words, our estimator is defined by (4), where

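$$\hat S_{n,k}(x) = \frac{1}{n}\sum_{j=1}^{n}K_{U,k,h}(W_j - x), \qquad \hat T_{n,k}(x) = \frac{1}{n}\sum_{j=1}^{n}Y_j\,K_{U,k,h}(W_j - x), \tag{6}$$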

with KU,k,h(x) = h−1KU,k(x/h). Note that the functions KU,k depend on h, even though, to simplify the presentation, we did not indicate this dependence explicitly in the notation.
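A short verification that this choice solves (5) and makes the entries of (6) conditionally unbiased. It assumes the Fourier convention φg(t) = ∫ e^{itu} g(u) du, that K allows differentiation under the integral sign and Condition A holds, and it writes KU,k(x) = i^{−k}(2π)^{−1} ∫ e^{−itx} φK^(k)(t)/φU(−t/h) dt; Sn,k and Tn,k are the error-free quantities of Section 2.1.

$$
\begin{aligned}
\varphi_K^{(k)}(t) &= \frac{d^k}{dt^k}\int e^{itu}K(u)\,du = i^k\int e^{itu}u^kK(u)\,du = i^k\,\varphi_{(\cdot)^kK}(t),\\
\varphi_{K_{U,k}}(t) &= i^{-k}\,\frac{\varphi_K^{(k)}(t)}{\varphi_U(-t/h)} = \frac{\varphi_{(\cdot)^kK}(t)}{\varphi_U(-t/h)},
\end{aligned}
$$

so $u \mapsto K_{U,k}(u) = u^kL_k(u)$ satisfies (5). Because Uj is independent of (Xj, Yj),

$$
E\{K_{U,k,h}(W_j - x)\mid X_j, Y_j\} = \frac{1}{h}\left(\frac{X_j - x}{h}\right)^k K\!\left(\frac{X_j - x}{h}\right),
\qquad\text{hence}\qquad
E\{\hat S_{n,k}(x)\mid X_1, \ldots, X_n\} = S_{n,k}(x),\quad
E\{\hat T_{n,k}(x)\mid (X_j, Y_j)_{j\le n}\} = T_{n,k}(x).
$$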

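In what follows, for simplicity, we drop the p index from $\hat m_p^{(\nu)}(x)$. It is also convenient to rewrite (4) as

$$\hat m^{(\nu)}(x) = \nu!\,h^{-\nu}\sum_{k=0}^{p}\hat S^{\nu,k}(x)\,\hat T_{n,k}(x),$$

where $\hat S^{\nu,k}(x)$ denotes the (ν + 1, k + 1)th element of the inverse of the matrix Ŝn.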
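The estimator (4) with entries (6) can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: it assumes the kernel φK(t) = (1 − t²)^q·1[−1,1](t) mentioned after Condition A (with q = 8, which satisfies q ≥ 2p for p ≤ 4), Laplace errors with known scale b (so φU(s) = 1/(1 + b²s²) and var(U) = 2b²), and it evaluates the Fourier integral defining KU,k by the trapezoidal rule. The names `trapezoid`, `k_u` and `deconv_local_poly` are hypothetical.

```python
import math

import numpy as np
from numpy.polynomial import Polynomial


def trapezoid(f, dx):
    """Trapezoidal rule along the last axis (avoids np.trapz/np.trapezoid naming differences)."""
    return dx * (f[..., 1:-1].sum(axis=-1) + 0.5 * (f[..., 0] + f[..., -1]))


def k_u(u, k, h, b, q=8, nt=801):
    """K_{U,k}(u) = i^{-k} (2 pi)^{-1} int e^{-itu} phi_K^{(k)}(t) / phi_U(-t/h) dt.

    Assumes phi_K(t) = (1 - t^2)^q on [-1, 1] and Laplace errors with scale b,
    i.e. phi_U(s) = 1 / (1 + b^2 s^2); b = 0 gives the error-free u^k K(u).
    """
    t = np.linspace(-1.0, 1.0, nt)
    dphi = (Polynomial([1.0, 0.0, -1.0]) ** q).deriv(k)(t)  # phi_K^{(k)}(t)
    inv_phi_u = 1.0 + (b * t / h) ** 2  # 1 / phi_U(-t/h) for Laplace errors
    u = np.atleast_1d(np.asarray(u, dtype=float))
    integrand = np.exp(-1j * np.outer(u, t)) * (dphi * inv_phi_u)
    val = (1j) ** (-k) * trapezoid(integrand, t[1] - t[0]) / (2.0 * np.pi)
    return val.real  # imaginary part is rounding noise for symmetric K and U


def deconv_local_poly(x0, W, Y, h, b, p=1, nu=0, q=8):
    """Sketch of (4): nu! h^{-nu} e_{nu+1}^T S_hat^{-1} T_hat with entries from (6)."""
    u = (np.asarray(W, dtype=float) - x0) / h
    # Row k holds K_{U,k,h}(W_j - x0) = h^{-1} K_{U,k}((W_j - x0) / h), k = 0..2p
    kern = np.array([k_u(u, k, h, b, q) / h for k in range(2 * p + 1)])
    s_hat = kern.mean(axis=1)  # S_hat_{n,k}, k = 0..2p
    t_hat = (kern[: p + 1] * np.asarray(Y, dtype=float)).mean(axis=1)  # T_hat_{n,k}, k = 0..p
    S = np.array([[s_hat[j + l] for l in range(p + 1)] for j in range(p + 1)])
    coef = np.linalg.solve(S, t_hat)  # S_hat^{-1} T_hat
    return math.factorial(nu) * h ** (-nu) * coef[nu]
```

Setting b = 0 recovers the error-free estimator of Section 2.1, which gives a convenient correctness check: with noiseless linear data, the local linear fit (p = 1) returns the line and its slope exactly. For Laplace errors of variance σ², take b = σ/√2. Because φK(t) = 1 − qt² + O(t⁴), this kernel has μ2 = 2q, so h lives on a smaller scale than it would for, say, a standard Gaussian kernel.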

3. ASYMPTOTIC NORMALITY

3.1 Conditions

To establish asymptotic normality of our estimator, we need to impose some regularity conditions. Note that these conditions are stronger than those needed to define the estimator, and to simplify the presentation, we allow overlap of some of the conditions [e.g., compare condition A and condition (B1) which follows].

As expected because of the unbiasedness of the score, and as we will show precisely, the asymptotic bias of the estimator, defined as the expectation of the limiting distribution of m̂(ν)(x) − m(ν)(x), is exactly the same as in the error-free case. Therefore, just as in the error-free case, the bias depends on the smoothness of m and fX, and on the number of finite moments of Y and K. Define τ²(u) = E[{Y − m(x)}²|X = u]. Note that, to simplify the notation, we do not put an index x into the function τ, but it should be obvious that the function depends on the point x where we wish to estimate the curve m. We make the following assumptions:

Condition B:

(B1) K is a real and symmetric kernel such that ∫K(x) dx = 1 and has finite moments of order 2p + 3;

(B2) h → 0 and nh → ∞ as n → ∞;

(B3) fX(x) > 0 and fX is twice differentiable such that ||fX(j)||∞ < ∞ for j = 0, 1, 2;

(B4) m is p + 3 times differentiable, τ²(·) is bounded, ||m(j)||∞ < ∞ for j = 0, …, p + 3, and for some η > 0, $E\{|Y_i - m(x)|^{2+\eta}\mid X = u\}$ is bounded for all u.

These conditions are rather mild and, apart from the assumptions on the conditional moments of Y, they are fairly standard in the error-free context. Boundedness of moments of Y is standard in the measurement error context (see Fan and Masry 1992; Fan and Truong 1993).

The asymptotic variance of the estimator, defined as the variance of the limiting distribution of m̂(ν)(x) − m(ν)(x), differs from the error-free case because, as usual in deconvolution problems, it depends strongly on the type of measurement errors that contaminate the X-data. Following Fan (1991a,b,c), we consider two categories of errors. An ordinary smooth error of order β is such that

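$$\lim_{t\to+\infty} t^{\beta}\varphi_U(t) = c \quad\text{and}\quad \lim_{t\to+\infty} t^{\beta+1}\varphi_U'(t) = -c\beta, \tag{7}$$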

for some constants c > 0 and β > 1. A supersmooth error of order β > 0 is such that

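$$d_0|t|^{\beta_0}\exp(-|t|^{\beta}/\gamma) \le |\varphi_U(t)| \le d_1|t|^{\beta_1}\exp(-|t|^{\beta}/\gamma) \quad\text{as } |t|\to\infty, \tag{8}$$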

with d0, d1, γ, β0, and β1 some positive constants. For example, Laplace errors, Gamma errors, and their convolutions are ordinary smooth, whereas Cauchy errors, Gaussian errors, and their convolutions are supersmooth. Depending on the type of the measurement error, we need different conditions on K and U to establish the asymptotic behavior of the variance of the estimator. These conditions mostly concern the kernel function, which we can choose; they are fairly standard in deconvolution problems and are easy to satisfy. For example, see Fan (1991a,b,c) and Fan and Masry (1992). We assume:

Condition O (ordinary smooth case):

||φ′U||∞ < ∞ and for k = 0, …,2p + 1, and and, for 0 ≤k, k′ ≤ 2p, .

Condition S (supersmooth case):

φK is supported on [−1, 1] and, for k = 0, …, 2p, ;

In the sequel we let Ŝ = Ŝn, $\mu_j = \int u^jK(u)\,du$, $S = (\mu_{k+l})_{0\le k,l\le p}$, $\tilde S = (\mu_{k+l+1})_{0\le k,l\le p}$, $\mu = (\mu_{p+1}, \ldots, \mu_{2p+1})^\top$, $\tilde\mu = (\mu_{p+2}, \ldots, \mu_{2p+2})^\top$ and, for any square integrable function g, we define $R(g) = \int g^2$. Finally, we let $S^{i,j}$ denote the (i + 1, j + 1)th element of the matrix $S^{-1}$ and, for c as in (7),

Note that this matrix is always real because its (k, k′)th element is zero when k + k′ is odd, and $i^{k'-k} = (-1)^{(k'-k)/2}$ otherwise.

3.2 Asymptotic Results

Asymptotic properties of the estimator depend on the type of error that contaminates the data. The following theorem establishes asymptotic normality in the ordinary smooth error case.

Theorem 1

Assume (7). Under Conditions A, B, and O, if $nh^{2\beta+2\nu+1}\to\infty$ and $nh^{2\beta+4}\to\infty$, we have

where , and

-

if p − ν is odd

-

if p − ν is even

From this theorem we see that, as usual in nonparametric kernel deconvolution estimators, the bias of our estimator is exactly the same as the bias of the local polynomial estimator in the error-free case, and the errors-in-variables only affect the variance of the estimator. Compare the previous bias formulas with Theorem 3.1 of Fan and Gijbels (1996), for example. In particular, our estimator has the design-adaptive property discussed in Section 3.2.4 of that book.

The optimal bandwidth is found by the usual trade-off between the squared bias and the variance, which gives $h \sim n^{-1/(2\beta+2p+3)}$ if p − ν is odd and $h \sim n^{-1/(2\beta+2p+5)}$ if p − ν is even. The resulting convergence rates of the estimator are, respectively, $n^{-(p+1-\nu)/(2\beta+2p+3)}$ if p − ν is odd and $n^{-(p+2-\nu)/(2\beta+2p+5)}$ if p − ν is even. For p = ν = 0, our estimator of m is exactly the estimator of Fan and Truong (1993) and has the same rate, that is, $n^{-2/(2\beta+5)}$ (recall that we only assume that fX is twice differentiable). For p = 1 and ν = 0, our estimator is different from that of Fan and Truong (1993), but it converges at the same rate. For p > 1 and ν = 0, our estimator converges at faster rates.
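These exponents follow from balancing a squared bias of order h^{2s}, with s = p + 1 − ν (p − ν odd) or s = p + 2 − ν (p − ν even), against a variance of order (nh^{2β+2ν+1})^{−1}. A small Python helper (hypothetical, for illustration only) returns them as exact fractions, assuming β is given as an integer or a `Fraction`:

```python
from fractions import Fraction


def ordinary_smooth_rates(beta, p, nu):
    """Return (a, r): optimal h ~ n^(-a) and convergence rate n^(-r).

    Bias^2 ~ h^(2s) with s = p + 1 - nu (p - nu odd) or p + 2 - nu (p - nu even);
    variance ~ 1 / (n h^(2 beta + 2 nu + 1)).  Balancing gives a = 1 / (2s + 2 beta + 2 nu + 1).
    """
    s = p + 1 - nu if (p - nu) % 2 == 1 else p + 2 - nu
    denom = 2 * s + 2 * beta + 2 * nu + 1
    return Fraction(1, denom), Fraction(s, denom)
```

For Laplace errors (β = 2), `ordinary_smooth_rates(2, 0, 0)` and `ordinary_smooth_rates(2, 1, 0)` both give (1/9, 2/9), matching the n^{−2/(2β+5)} rate of Fan and Truong (1993), whereas p = 2 gives the faster rate n^{−4/13}.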

Remark 1: Which p should one use?

This problem is essentially the same as in the error-free case (see Fan and Gijbels 1996, Sec. 3.3). In particular, although in theory using higher values of p reduces the asymptotic bias of the estimator without increasing the order of its variance, the theoretical improvement for p − ν > 1 is generally not noticeable in finite samples. Indeed, the constant term of the dominating part of the variance can increase rapidly with p. However, the improvement from p − ν = 0 to p − ν = 1 can be quite significant, especially in cases where fX or m are discontinuous at the boundary of their domain, in which case the bias for p − ν = 1 is of smaller order than the bias for p − ν = 0. In other cases, the biases of the estimators of orders p and p + 1, where p − ν is even, are of the same order. See Sections 3.3 and 5.

Remark 2: Higher order deconvolution kernel estimators

As usual, it is possible to reduce the order of the bias by using higher order kernels, i.e., kernels whose moments of orders 1 to k, say, all vanish, and imposing the existence of higher derivatives of fX and m, as was done in Fan and Truong (1993). However, such kernels are not very popular, because it is well known that, in practice, they increase the variability of estimators and can make them quite unattractive (e.g., see Marron and Wand 1992). Similarly, one can also use the infinite order sinc kernel, which has the appealing theoretical property that it adapts automatically to the smoothness of the curves (see Diggle and Hall 1993; Comte and Taupin 2007). However, the trick of the sinc kernel does not apply to the boundary setting. In addition, this kernel can only be used when p = ν = 0, because its Fourier transform is φK(t) = 1[−1,1](t), so that $\varphi_K^{(l)}(t) = 0$ for all l ≥ 1 and t ≠ ±1 and Condition A fails for p > 0. Even when p = ν = 0, it can sometimes work poorly in practice, especially in cases where fX or m have boundary points (see Section 5 for illustration).

The next theorem establishes asymptotic normality in the supersmooth error case. In this case, the variance term is quite complicated and asymptotic normality can only be established under a technical condition, which generalizes Condition 3.1 (and Lemma 3.2) of Fan and Masry (1992). This condition is nothing but a refined version of the Lyapounov condition, and it essentially says that the bandwidth cannot converge to zero too fast. It should be possible to derive more specific lower bounds on the bandwidth, but this would require considerable technical detail and is therefore omitted here. In the next theorem we first give an expression for the asymptotic bias and variance of m̂(ν)(x), defined, respectively, as the expectation and the variance of $h^{-\nu}\nu!\,Z_n$, the asymptotically dominating part of the estimator, where Zn is defined in (A.3). Then, under the additional assumption, we derive asymptotic normality.

Theorem 2

Assume (8). Under Conditions A, B, and S, if $h = d(2/\gamma)^{1/\beta}(\ln n)^{-1/\beta}$ with d > 1, then

Bias{m̂(ν)(x)} is as in Theorem 1 and var{m̂(ν)(x)} = o(Bias²{m̂(ν)(x)});

-

If, in addition, for Un,1 defined in the Appendix at Equation (A.5), there exists r > 0 such that for bn = hβ/(2r+10), , with 0 < C1 < ∞ independent of n, then we also have

When $h = d(2/\gamma)^{1/\beta}(\ln n)^{-1/\beta}$ with d > 1, as in the theorem, it is not hard to see that, as usual in the supersmooth error case, the variance is negligible compared with the squared bias and the estimator converges at the logarithmic rate $(\log n)^{-(p+1-\nu)/\beta}$ if p − ν is odd, and $(\log n)^{-(p+2-\nu)/\beta}$ if p − ν is even. Again, for p = ν = 0, our estimator is equal to the estimator of Fan and Truong (1993) and thus has the same rate.
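As a numerical illustration of the supersmooth case, the sketch below evaluates the bandwidth h = d(2/γ)^{1/β}(ln n)^{−1/β} of Theorem 2 and the corresponding logarithmic rate. For N(0, σ²) errors, |φU(t)| = exp(−σ²t²/2), so the exponential factor in (8) has β = 2 and γ = 2/σ². The function names are hypothetical.

```python
import math


def supersmooth_bandwidth(n, beta, gamma, d=1.5):
    """h = d (2 / gamma)^(1 / beta) (ln n)^(-1 / beta), with d > 1 as in Theorem 2."""
    if d <= 1:
        raise ValueError("Theorem 2 requires d > 1")
    return d * (2.0 / gamma) ** (1.0 / beta) * math.log(n) ** (-1.0 / beta)


def supersmooth_rate(n, beta, p, nu):
    """(log n)^(-s / beta), s = p + 1 - nu (p - nu odd) or p + 2 - nu (p - nu even)."""
    s = p + 1 - nu if (p - nu) % 2 == 1 else p + 2 - nu
    return math.log(n) ** (-s / beta)
```

With n = 10⁶ and σ = 1 (so γ = 2), d = 1.5 gives h ≈ 1.5/√(ln 10⁶) ≈ 0.40, which shows how slowly the bandwidth decreases in this case.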

3.3 Behavior Near the Boundary

Because the bias of our estimator is the same as in the error-free case, it suffers from the same boundary effects when the design density fX is compactly supported. Without loss of generality, suppose that fX is supported on [0, 1] and, for any integer k ≥ 0 and any function g defined on [0, 1] that is k times differentiable on ]0, 1[, let $\tilde g^{(k)}(x) = g^{(k)}(0+)\cdot 1_{\{x=0\}} + g^{(k)}(1-)\cdot 1_{\{x=1\}} + g^{(k)}(x)\cdot 1_{\{0<x<1\}}$. We derive asymptotic normality of the estimator under the following conditions, which are the same as those usually imposed in the error-free case:

Condition C:

(C1)–(C2) Same as (B1)–(B2);

(C3) fX(x) > 0 for x ∈ ]0, 1[ and fX is twice differentiable such that ||fX(j)||∞ < ∞ on [0, 1] for j = 0, 1, 2;

(C4) m is p + 3 times differentiable on ]0, 1[, τ² is bounded on [0, 1] and continuous on ]0, 1[, ||m(j)||∞ < ∞ on [0, 1] for j = 0, …, p + 3, and there exists η > 0 such that $E\{|Y_i - m(x)|^{2+\eta}\mid X = u\}$ is bounded for all u ∈ [0, 1].

We also define , SB(x) = (μk+k′(x))0≤ k,k′≤p, μ (x) = {μp+1(x), …,μ2p+1(x)}⊤ and

For brevity, we only show asymptotic normality in the ordinary smooth error case. Our results can be extended to the supersmooth error case: all our calculations for the bias are valid for supersmooth errors, and the only difference is the variance, which is negligible in that case.

The proof of the next theorem is similar to the proof of Theorem 1 and hence is omitted. It can be obtained from the sequence of Lemmas B10 to B13 of Delaigle, Fan, and Carroll (2008). As for Theorem 2, a technical condition, which is nothing but a refined version of the Lyapounov condition, is required to deal with the variance of the estimator.

Theorem 3

Assume (7). Under Conditions A, C, and O, if $nh^{2\beta+2\nu+1}\to\infty$ and $nh^{2\beta+4}\to\infty$ as n → ∞ and for some finite constant C1 > 0, we have

where and .

As before, the bias is the same as in the error-free case, and thus all well-known results on the boundary problem extend to our context. In particular, the bias of the estimator for p − ν even is of order $h^{p+1-\nu}$, instead of $h^{p+2-\nu}$ in the case without a boundary, whereas the bias of the estimator for p − ν odd remains of order $h^{p+1-\nu}$, as in the no-boundary case. Thus, the bias of the estimator for p − ν = 0 is of order h, whereas it is of order h² when p − ν = 1. For this reason, local polynomial estimators with p − ν odd, and in particular with p − ν = 1, are often considered to be more natural.
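Since the bias is the same with or without measurement error, the boundary effect can be seen in an error-free example. The Python sketch below is an illustration only (it uses an Epanechnikov kernel, not a deconvolution kernel, and a hypothetical helper `local_fit_at`): it fits m(x) = x on a design supported on [0, 1] at the boundary point x = 0. The local constant fit (p = 0) is biased by about 0.375h, the ratio ∫₀¹uK/∫₀¹K times h, whereas the local linear fit (p = 1) reproduces the line exactly.

```python
import numpy as np


def local_fit_at(x0, X, Y, h, p):
    """Intercept of the weighted least squares fit of order p at x0, i.e. the estimate of m(x0)."""
    d = X - x0
    w = 0.75 / h * np.clip(1.0 - (d / h) ** 2, 0.0, None)  # K_h(d) with Epanechnikov K
    design = np.vander(d, p + 1, increasing=True)
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(design * sw[:, None], Y * sw, rcond=None)
    return beta[0]


X = np.linspace(0.0, 1.0, 1001)  # design on [0, 1]; x0 = 0 is a boundary point
Y = X.copy()                     # m(x) = x with no noise, so any error is pure bias
h = 0.1
lce = local_fit_at(0.0, X, Y, h, p=0)  # local constant (Nadaraya-Watson)
lle = local_fit_at(0.0, X, Y, h, p=1)  # local linear
print(f"LCE at 0: {lce:.4f} (about 0.375 h = {0.375 * h:.4f}); LLE at 0: {lle:.2e}")
```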

Note that, because in deconvolution problems kernels are usually supported on the whole real line (see Delaigle and Hall 2006), the presence of the boundary can affect every point of the type x = ch or x = 1 − ch, with c a finite constant satisfying 0 ≤ c ≤ 1/h. For x = ch, it can be shown that

whereas if x = 1 − ch, we have

4. GENERALIZATIONS

In this section, we show how our methodology can be extended to provide estimators in two important cases: (1) when the measurement error distribution is unknown; and (2) when the measurement errors are heteroscedastic. In the interest of space we focus on methodology and do not give detailed asymptotic theory.

4.1 Unknown Measurement Error Distribution

In empirical applications, it can be unrealistic to assume that the error density is known. However, it is only possible to construct a consistent estimator of m if we are able to consistently estimate the error density itself. Several approaches for estimating this density fU have been considered in the nonparametric literature. Diggle and Hall (1993) and Neumann (1997) assumed that a sample of observations from the error density is available and estimated fU nonparametrically from those data. A second approach, applicable when the contaminated observations are replicated, consists of estimating fU from the replicates. Finally, in some cases, if we have a parametric model for the error density fU and additional constraints on the density fX, it is possible to estimate an unknown parameter of fU without any additional observations (see Butucea and Matias 2005; Meister 2006).

We give details for the replicated data approach, which is by far the most commonly used. In the simplest version of this model, the observations are a sample of iid data (Wj1, Wj2, Yj), j = 1, …, n, generated by the model

$$Y_j = m(X_j) + \eta_j, \qquad W_{jk} = X_j + U_{jk}, \quad k = 1, 2, \qquad U_{jk} \sim f_U, \tag{9}$$

where the Ujk’s are independent, and independent of the (Xj, Yj, ηj)’ s.

In the measurement error literature, it is often assumed that the error density fU(·; θ) is known up to a parameter θ, which has to be estimated from the data. For example, if θ = var(U), the unknown variance of U, a consistent estimator is given by

$$\hat\theta = \frac{1}{n}\sum_{j=1}^{n}\sum_{k=1}^{2}(W_{jk} - \bar W_j)^2, \tag{10}$$

where $\bar W_j = (W_{j1} + W_{j2})/2$ (see, for example, Carroll et al. 2006, Equation 4.3). Taking φU(·; θ̂) to be the characteristic function corresponding to fU(·; θ̂), we can extend our estimator of m(ν) to the unknown error case by replacing φU by φU(·; θ̂) everywhere.
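The replicate-based variance estimator can be sketched in Python as follows, assuming exactly two replicates per individual as in (9); the function name is hypothetical. Each term Σk(Wjk − W̄j)² equals (Wj1 − Wj2)²/2 = (Uj1 − Uj2)²/2, whose expectation is var(U).

```python
import numpy as np


def error_variance_from_replicates(W1, W2):
    """theta_hat = n^{-1} sum_j sum_k (W_jk - W_bar_j)^2 for two replicates per individual."""
    W = np.column_stack([W1, W2]).astype(float)
    W_bar = W.mean(axis=1, keepdims=True)  # W_bar_j = (W_j1 + W_j2) / 2
    return float(((W - W_bar) ** 2).sum() / W.shape[0])
```

The averaged observations W̄j then have error variance θ/2, which is how the averaged data are used in Section 5.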

In the case where no parametric model for fU is available, some authors suggest using a nonparametric estimator of fU. For general settings, see Li and Vuong (1998), Schennach (2004a,b), and Hu and Schennach (2008). In the common case where the error density fU is symmetric, Delaigle et al. (2008) proposed to estimate φU(t) by $\hat\varphi_U(t) = \left|n^{-1}\sum_{j=1}^{n}\cos\{t(W_{j1} - W_{j2})\}\right|^{1/2}$. Following the approach they use for the case p = ν = 0, we can extend our estimator of m(ν) to the unknown error case by replacing φU by φ̂U everywhere, adding a small positive number to φ̂U when it gets too small. Detailed convergence rates of this approach have been studied by Delaigle et al. (2008) in the local constant case (p = 0), where they show that the convergence rates of this version of the estimator are the same as those of the estimator with known fU, as long as fX is sufficiently smooth relative to fU. Their conclusion can be extended to our setting.
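A Python sketch of φ̂U(t), assuming symmetric errors and two replicates per individual. The name `phi_u_hat` and the threshold `floor` are hypothetical: when the estimate falls below `floor`, that amount is added, which is one simple way of "adding a small positive number"; the exact rule is a tuning choice not fixed here.

```python
import numpy as np


def phi_u_hat(t, W1, W2, floor=1e-3):
    """|n^{-1} sum_j cos(t (W_j1 - W_j2))|^{1/2}, an estimate of |phi_U(t)|.

    W_j1 - W_j2 = U_j1 - U_j2 has characteristic function |phi_U(t)|^2, so the
    square root estimates |phi_U(t)|, i.e. phi_U(t) for symmetric U with phi_U > 0.
    """
    diff = np.asarray(W1, dtype=float) - np.asarray(W2, dtype=float)
    t = np.atleast_1d(np.asarray(t, dtype=float))
    est = np.sqrt(np.abs(np.cos(np.outer(t, diff)).mean(axis=1)))
    # Hypothetical guard: add `floor` where the estimate is too small to divide by safely
    return np.where(est < floor, est + floor, est)
```

The returned array can then replace φU(−t/h) (evaluated at t/h) inside the Fourier integral that defines KU,k.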

4.2 Heteroscedastic Measurement Errors

Our local polynomial methodology can be generalized to the more complicated setting where the errors Ui are not identically distributed. In practice, this could happen when observations have been obtained under different conditions, for example, if they were collected from different laboratories. Recent references on this problem include Delaigle and Meister (2007, 2008) and Staudenmayer, Ruppert, and Buonaccorsi (2008). In this context, the observations are a sample (W1, Y1), …, (Wn, Yn) of independent observations coming from the model

$$Y_j = m(X_j) + \eta_j, \qquad W_j = X_j + U_j, \qquad U_j \sim f_{U_j}, \tag{11}$$

where the Uj’s are independent of the (Xj, Yj)’s. Our estimator cannot be applied directly to such data because there is no common error density fU, and therefore KU,k is not defined. Rather, we need to construct appropriate individual functions KUj,k and then replace Ŝn,k(x) and T̂n,k(x) in the definition of the estimator, by

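$$\hat S^H_{n,k}(x) = \frac{1}{n}\sum_{j=1}^{n}K_{U_j,k,h}(W_j - x), \qquad \hat T^H_{n,k}(x) = \frac{1}{n}\sum_{j=1}^{n}Y_j\,K_{U_j,k,h}(W_j - x), \tag{12}$$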

where we use the superscript H to indicate that we are treating the heteroscedastic case. As before, we require that and .

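A straightforward solution would be to define KUj,k by

$$K_{U_j,k}(x) = i^{-k}\,\frac{1}{2\pi}\int e^{-itx}\,\frac{\varphi_K^{(k)}(t)}{\varphi_{U_j}(-t/h)}\,dt,$$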

where φUj is the characteristic function of the distribution of Uj. However, theoretical properties of the corresponding estimator are generally not good, because the order of its variance is dictated by the least favorable error densities (see Delaigle and Meister 2007, 2008). This problem can be avoided by extending the approach of those authors to our context by taking

Alternatively, in the case where the error densities are unknown but replicates are available as at (9), we can use instead

| (13) |

where W̄j = (Wj1 + Wj2)/2 and

adding a small positive number to the denominator when it gets too small. This is a generalization of the estimator of Delaigle and Meister (2008).

5. FINITE SAMPLE PROPERTIES

5.1 Simulation Settings

Comparisons between kernel estimators and other methods have been carried out by many authors in various contexts, with or without measurement errors. One of the major advantages of kernel estimators is that they are simple and can be easily applied to problems such as heteroscedasticity (see Section 4.2), nonparametric variance or mode estimation, and detection of boundaries. See also the discussion in Delaigle and Hall (2008). As with any method, kernel methods outperform other methods in some cases and are outperformed by them in others. Our goal is not to rederive these well-known facts, but rather to illustrate the new results of our article.

We applied our technique for estimating m and m(1) to several examples, including curves with several local extrema and/or an inflection point, as well as monotonic, convex and/or unbounded functions. To summarize the work we are about to present, our simulations illustrate in finite samples:

the gain that can be obtained by using a local linear estimator (LLE) in the presence of boundaries, in comparison with a local constant estimator (LCE);

properties of our estimator of m(1) for p = 1 (LPE1) and p = 2 (LPE2);

properties of our estimator when the error variance is estimated from replicates;

the robustness of our estimator against misspecification of the error density;

the gain obtained by using our estimators compared with their naive versions (denoted, respectively, by NLCE, NLLE, NLPE1, or NLPE2), which pretend there is no error in the data;

the properties of the LCE using the sinc kernel (LCES) in the presence of boundary points.

We considered the following examples: (1) m(x) = x³ exp(x⁴/1,000) cos(x) and η ~ N(0, 0.6²); (2) m(x) = 2x exp(−10x⁴/81), η ~ N(0, 0.2²); (3) m(x) = x³, η ~ N(0, 1.2²); (4) m(x) = x⁴, η ~ N(0, 4²). In cases (1) and (2) we took X ~ 0.8X1 + 0.2X2, where X1 ~ fX1(x) = 0.1875x²1[−2,2](x) and X2 ~ U[−1, 1]. In cases (3) and (4) we took X ~ N(0, 1).
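For readers who want to reproduce this setting, here is a minimal Python sampler for case (2). Two assumptions are ours: we read X ~ 0.8X1 + 0.2X2 as a two-component mixture with density 0.8fX1 + 0.2fX2, and we scale Laplace errors so that var(U) equals a given noise-to-signal ratio times var(X). The name `sample_case2` is hypothetical. Under the mixture reading, E(X1²) = 2.4, so var(X) = 0.8·2.4 + 0.2/3 ≈ 1.987.

```python
import numpy as np


def sample_case2(n, nsr, rng):
    """Draw (W, Y, X) for example (2) with Laplace U and var(U) = nsr * var(X).

    X is read as a mixture: with prob. 0.8 from f_X1(x) = 0.1875 x^2 on [-2, 2],
    otherwise from U[-1, 1] (our interpretation of 0.8 X1 + 0.2 X2).
    """
    from_x1 = rng.random(n) < 0.8
    # |X1| / 2 has cdf v^3 on [0, 1], so |X1| = 2 V^(1/3) with V uniform; sign is symmetric
    x1 = 2.0 * rng.random(n) ** (1.0 / 3.0) * rng.choice([-1.0, 1.0], size=n)
    x2 = rng.uniform(-1.0, 1.0, n)
    X = np.where(from_x1, x1, x2)
    var_x = 0.8 * 2.4 + 0.2 / 3.0           # both components have mean zero
    b = np.sqrt(nsr * var_x / 2.0)          # Laplace scale: var(U) = 2 b^2
    U = rng.laplace(0.0, b, n)
    Y = 2.0 * X * np.exp(-10.0 * X ** 4 / 81.0) + rng.normal(0.0, 0.2, n)  # eta ~ N(0, 0.2^2)
    return X + U, Y, X
```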

In each case considered, we generated 500 samples of various sizes from the distribution of (W, Y), where W = X + U with U ~ Laplace or normal of zero mean, for several values of the noise-to-signal ratio var(U)/var(X). Unless otherwise stated, we used the kernel whose Fourier transform is given by

| (13) |

To illustrate the potential gain of using local polynomial estimators without confounding the effect of an estimator with that of the smoothing parameter selection, we used, for each method, the theoretical optimal value of h; that is, for each sample, we selected the value h minimizing the integrated squared error ISE = ∫{m(ν)(x) − m̂(ν)(x)}² dx, where m̂(ν) is the estimator considered.
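The oracle choice of h can be sketched in Python as follows (hypothetical names): compute the ISE of each candidate bandwidth on an equally spaced grid with the trapezoidal rule and keep the minimizer. This is only usable in simulations, where m(ν) is known.

```python
import numpy as np


def ise(m_true, m_hat, grid):
    """Integrated squared error on an equally spaced grid (trapezoidal rule)."""
    sq = (m_true(grid) - m_hat(grid)) ** 2
    dx = grid[1] - grid[0]
    return dx * (sq.sum() - 0.5 * (sq[0] + sq[-1]))


def oracle_bandwidth(m_true, fit, h_grid, grid):
    """Return the h in h_grid minimizing the ISE; fit(h) must return a callable estimate."""
    scores = [ise(m_true, fit(h), grid) for h in h_grid]
    return h_grid[int(np.argmin(scores))], scores
```

In practice `fit(h)` would wrap one of the estimators of Section 2 evaluated on `grid` for a single simulated sample.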

In the case where ν = 0, a data-driven bandwidth procedure has been developed by Delaigle and Hall (2008). For example, for the LLE of m, the quantities NW and DW in Section 3.1 of that article are equal to $N_W = \hat T_{n,0}\hat S_{n,2} - \hat T_{n,1}\hat S_{n,1}$ and $D_W = \hat S_{n,0}\hat S_{n,2} - \hat S_{n,1}^2$, respectively (see also Figure 2 in that article). Note that the fully automatic procedure of Delaigle and Hall (2008) also includes the possibility of using a ridge parameter in cases where the denominator DW(x) gets too small. It would be possible to extend their method to cases where ν > 0, by combining their SIMulation EXtrapolation (SIMEX) idea with data-driven bandwidths used in the error-free case, in much the same way as they combined their SIMEX idea with cross-validation for the case ν = 0. Although not straightforward, this is an interesting topic for future research.
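For the LLE (p = 1, ν = 0), the 2 × 2 inverse in (4) is explicit, so m̂(x) = NW/DW. A Python sketch from the moments Ŝn,0, Ŝn,1, Ŝn,2 and T̂n,0, T̂n,1 (hypothetical function name); the optional `ridge` argument is a hypothetical stand-in that keeps |DW| away from zero and is not the rule used by Delaigle and Hall (2008).

```python
import math


def local_linear_from_moments(s_hat, t_hat, ridge=0.0):
    """m_hat(x) = N_W / D_W for p = 1, nu = 0.

    N_W = T_0 S_2 - T_1 S_1 and D_W = S_0 S_2 - S_1^2 come from the first row of
    the inverse of [[S_0, S_1], [S_1, S_2]] applied to (T_0, T_1).
    """
    s0, s1, s2 = s_hat
    t0, t1 = t_hat
    n_w = t0 * s2 - t1 * s1
    d_w = s0 * s2 - s1 ** 2
    if abs(d_w) < ridge:  # hypothetical guard against a near-zero denominator
        d_w = math.copysign(ridge, d_w)
    return n_w / d_w
```

For example, Ŝ = (1.0, 0.2, 0.5) and T̂ = (0.7, 0.3) give NW = 0.29 and DW = 0.46.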

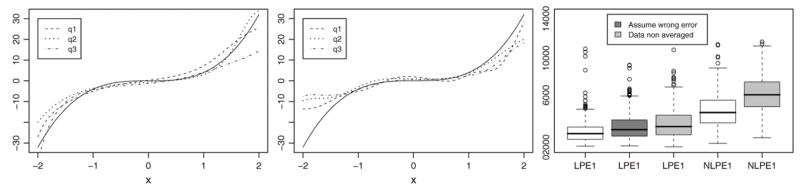

Figure 2.

Estimates of the derivative function m(1) for curve (4) when U is Laplace, var(U) = 0.4var(X) and n = 250, using our local linear method (LPE1, left) and the naive local linear method that ignores measurement error (NLPE1, center), when data are averaged and var(U) is estimated by (10). Right: boxplots of ISEs, var(U) estimated by (10). Data averaged except for boxes 3 and 5. Box 2 wrongly assumes Laplace error.

5.2 Simulation Results

In the following figures, we show boxplots of the 500 calculated ISEs corresponding to the 500 generated samples. We also show graphs with the target curve (solid line) and three estimated curves (q1, q2, and q3) corresponding to, respectively, the first, second, and third quartiles of these 500 calculated ISEs for a given method.

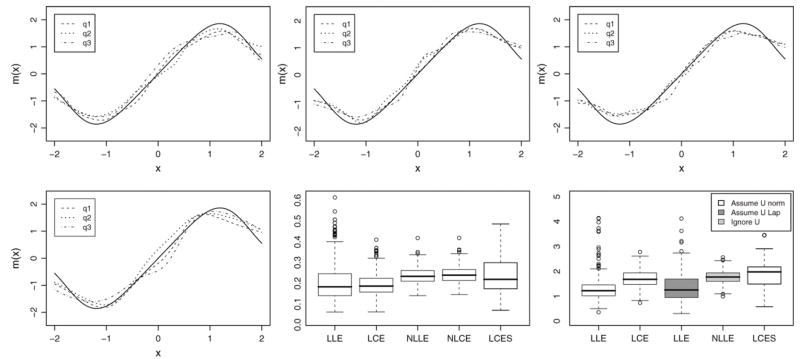

Figure 1 shows the quantile estimated curves of m at (2) and boxplots of the ISEs, for samples of size n = 500, when U is Laplace and var(U) = 0.2var(X). As predicted by the theory, the LCE is more biased than the LLE near the boundary. Similarly, the LCES is more biased and variable than the LLE. As usual in measurement error problems, the naive estimators that ignore the error are oversmoothed, especially near the modes and the boundary. The boxplots show that the LLE tends to work better than the LCE, but also tends to be more variable. Except in a few cases, both outperform the LCES and the naive estimators.

Figure 1.

Estimated curves for case (2) when U is Laplace, var(U) = 0.2var(X) and n = 500. Estimators: local linear estimator (LLE, top left), local constant estimator (LCE, top center), the naive local linear estimator that ignores measurement error (NLLE, top right), the local constant estimator using the sinc kernel (LCES, bottom left), and boxplots of the ISEs (bottom center). Bottom right: boxplots of the ISEs for case (1) when U is normal, var(U) = 0.2var(X) and n = 250. Data are averaged replicates and var(U) is estimated by (10).

Figure 1 also shows boxplots of ISEs for curve (1) in the case where U is normal, var(U) = 0.2var(X) and n = 250. Here we pretended var(U) was unknown, but generated replicated data as at (9) and estimated var(U) via (10). Because the error variance of the averaged data W̄i = (Wi1 + Wi2)/2 is half the original one, we applied each estimator to these averaged data, either assuming U was normal, or wrongly assuming it was Laplace, with unknown variance estimated. We found that the estimator was quite robust against error misspecification, as already noted by Delaigle (2008) in closely connected deconvolution problems. As in that work, assuming a Laplace distribution often worked reasonably well (see also Meister 2004). Except in a few cases, the LLE worked better than the LCE, and both outperformed the LCES and the NLLE (which itself outperformed the NLCE, not shown here).

In Figure 2 we show results for estimating the derivative of curve (4) in the case where U is Laplace, var(U) = 0.4var(X), and n = 250. We assumed the error variance was unknown, and we generated replicated data and estimated var(U) by (10). We applied the LPE1 to both the averaged data and the original sample of non-averaged replicated data. For the averaged data, the error distribution is that of a Laplace convolved with itself, and we either used that distribution or wrongly assumed that the errors were Laplace. We compared our results with the naive NLPE1. Again, taking the error into account worked better than ignoring the error, even when a wrong error distribution was used, and whether we used the original data or the averaged data.

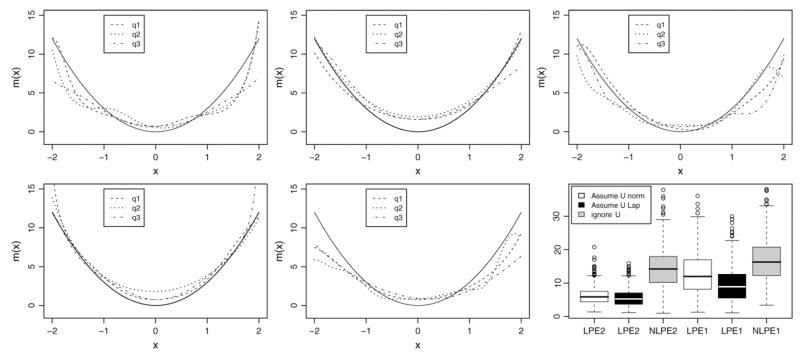

Finally, Figure 3 concerns estimation of the derivative function m(1) in case (3) when U is normal with var(U) = 0.4 var(X) and n = 500. We generated replicates, pretended the error variance was unknown and estimated it via (10), and calculated the LPE1 and LPE2 estimators assuming normal errors or wrongly assuming Laplace errors. In this case as well, taking the measurement error into account gave better results than ignoring it, even when the wrong error distribution was assumed. The LPE2 worked better than the LPE1 at the boundary, whereas the LPE1 worked better in the interior.

Figure 3.

Estimates of the derivative function m(1) for case (3) when U is normal with var(U) = 0.4 var(X) and n = 500, using our local linear method (LPE1, top left) and local quadratic method (LPE2, top center) assuming that U is normal, using the LPE1 (top right) and LPE2 (bottom left) wrongly assuming that the error distribution is Laplace, or using the NLPE2 (bottom center). Boxplots of the ISEs (bottom right). Data are averaged replicates and var(U) is estimated by (10).

6. CONCLUDING REMARKS

In the 20 years since the invention of the deconvoluting kernel density estimator, and the 15 years since its use for local constant (Nadaraya-Watson) kernel regression, the problem of constructing a kernel regression estimator for a function and its derivatives with the same bias properties as in the no-measurement-error case has remained unsolved. By working with estimating equations and in the Fourier domain, we have shown how to solve this problem. The resulting kernel estimators are readily computed and, with appropriate degrees for the local polynomials, have the design-adaptation properties that are so valued in the no-error case.

Acknowledgments

Carroll’s research was supported by grants from the National Cancer Institute (CA57030, CA90301) and by award number KUS-CI-016-04 made by the King Abdullah University of Science and Technology (KAUST). Delaigle’s research was supported by a Maurice Belz Fellowship from the University of Melbourne, Australia, and by a grant from the Australian Research Council. Fan’s research was supported by grants from the National Institute of General Medicine R01-GM072611 and National Science Foundation DMS-0714554 and DMS-0751568. The authors thank the editor, the associate editor, and referees for their valuable comments.

APPENDIX: TECHNICAL ARGUMENTS

A.1 Derivation of the Estimator

We have that

where we used the fact that . Similarly, we find that

Therefore, from (5) and using E[eitWj|Xj] = eitXjφU(t), Lk satisfies

From and the Fourier inversion theorem, we deduce that
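The kernels KU,k are built so that E{KU,k,h(Wj − x) | Xj} = h−1((Xj − x)/h)k K((Xj − x)/h), which is what gives E{KU,k+j,h(Wi − x)} = ∫ vk+jK(v)fX(x + hv) dv in the proof of Proposition A.2. In one case the kernel has a closed form: if U is Laplace with variance σ², then 1/φU(−t/h) = 1 + σ²t²/(2h²) is a polynomial in t, and Fourier inversion turns multiplication by t² into −d²/du², so KU,k(u) = ukK(u) − σ²/(2h²)·(d²/du²){ukK(u)}. The sketch below checks the identity by numerical integration; the Gaussian K, the Laplace error model, the evaluation points and all helper names are illustrative choices for this sketch only.

```python
import math

import numpy as np

SQRT_2PI = math.sqrt(2.0 * math.pi)


def poly_kernel(u, k):
    """u^k K(u) with K the standard normal density (illustrative choice)."""
    return u**k * np.exp(-0.5 * u**2) / SQRT_2PI


def poly_kernel_dd(u, k):
    """Second derivative of u^k K(u) for the Gaussian K."""
    lead = k * (k - 1) * u**(k - 2) if k >= 2 else 0.0
    return (lead - (2 * k + 1) * u**k + u**(k + 2)) * np.exp(-0.5 * u**2) / SQRT_2PI


def deconv_kernel_laplace(u, k, sigma, h):
    """K_{U,k}(u) when U is Laplace with variance sigma^2 (cf 1 / (1 + sigma^2 t^2 / 2))."""
    return poly_kernel(u, k) - sigma**2 / (2.0 * h**2) * poly_kernel_dd(u, k)


def conditional_mean(x_j, x, k, sigma, h, half_width=12.0, n_grid=400_001):
    """E[K_{U,k,h}(x_j + U - x)] by trapezoidal integration against the Laplace density."""
    b = sigma / math.sqrt(2.0)                    # Laplace scale: var(U) = 2 b^2
    u = np.linspace(-half_width, half_width, n_grid)
    f_u = np.exp(-np.abs(u) / b) / (2.0 * b)
    vals = deconv_kernel_laplace((x_j + u - x) / h, k, sigma, h) / h * f_u
    return float(np.sum(0.5 * (vals[1:] + vals[:-1]) * np.diff(u)))


def target(x_j, x, k, h):
    """Error-free counterpart h^{-1} ((x_j - x)/h)^k K((x_j - x)/h)."""
    return float(poly_kernel((x_j - x) / h, k) / h)


for k in range(4):
    print(k, conditional_mean(0.3, 0.0, k, sigma=0.3, h=0.5), target(0.3, 0.0, k, h=0.5))
```

The two printed columns agree to numerical-integration accuracy, whereas using ukK(u) alone (ignoring the error) would not.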

A.2 Proofs of the Results of Section 3

We show only the main results. We first give the proofs of the theorems, which rely on the lemmas and propositions that follow. For technical results that are straightforward extensions of results of Fan (1991a), Fan and Masry (1992), and Fan and Truong (1993), we refer to a longer version of this article, Delaigle et al. (2008), which contains many more details.

Before we provide detailed proofs of the theorems, note that because Ŝν,k, k = 0, … p represents the (ν + 1)th row of , we have

Consequently, it is not hard to show that

(A.1)

where

(A.2)
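For experimentation, here is a minimal sketch of the estimator whose inverse-matrix rows Ŝν,k enter (A.1). It assumes the form m̂(ν)(x) = ν! h−ν Σk Ŝν,k T̂k, where Ŝν,k is the (ν + 1, k + 1) entry of the inverse of the (p + 1) × (p + 1) matrix with (j, l) entry n−1 Σi KU,j+l,h(Wi − x), and T̂k = n−1 Σi Yi KU,k,h(Wi − x). The Gaussian kernel, the Laplace error model (for which KU,k has a closed form) and the helper names `k_u` and `lpe_deconv` are illustrative assumptions, not the implementation used for the simulations.

```python
import math

import numpy as np


def k_u(u, k, sigma, h):
    """Hypothetical helper: K_{U,k}(u) for a Gaussian K and Laplace errors with
    variance sigma**2, i.e. u^k K(u) - sigma^2 / (2 h^2) * (u^k K)''(u)."""
    gauss = np.exp(-0.5 * u**2) / math.sqrt(2.0 * math.pi)
    lead = k * (k - 1) * u**(k - 2) if k >= 2 else 0.0
    second_deriv = (lead - (2 * k + 1) * u**k + u**(k + 2)) * gauss
    return u**k * gauss - sigma**2 / (2.0 * h**2) * second_deriv


def lpe_deconv(x, w, y, p, nu, h, sigma):
    """Sketch of the order-p estimator of m^(nu)(x) from error-prone W and responses Y."""
    u = (w - x) / h
    # Row k holds K_{U,k,h}(W_i - x) = h^{-1} K_{U,k}((W_i - x) / h), k = 0, ..., 2p.
    kern = np.array([k_u(u, k, sigma, h) / h for k in range(2 * p + 1)])
    s_hat = np.array([[kern[j + l].mean() for l in range(p + 1)] for j in range(p + 1)])
    t_hat = (kern[: p + 1] * y).mean(axis=1)
    # Solving S_hat beta = T_hat avoids forming the inverse explicitly;
    # beta[nu] equals sum_k S_hat^{nu,k} T_hat_k.
    beta = np.linalg.solve(s_hat, t_hat)
    return math.factorial(nu) * h ** (-nu) * beta[nu]


rng = np.random.default_rng(0)
x_obs = rng.normal(size=500)
y_lin = 2.0 + 3.0 * x_obs                     # exactly linear regression curve
print(lpe_deconv(0.5, x_obs, y_lin, p=1, nu=0, h=0.4, sigma=0.0))  # 3.5 up to rounding
print(lpe_deconv(0.5, x_obs, y_lin, p=1, nu=1, h=0.4, sigma=0.0))  # 3.0 up to rounding
```

With sigma = 0 the kernels reduce to ((Wi − x)/h)k Kh(Wi − x) and the sketch is the ordinary local polynomial estimator, which reproduces polynomials of degree p exactly; this makes a convenient sanity check before passing contaminated Wi and a positive sigma.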

Proof of Theorem 1

We give the proof in the case where p − ν is odd. The case p − ν even is treated similarly by replacing Sν,k(x) everywhere by Sν,k(x) − hS̆ν,k(x).

From (A.1) and Lemma A.1, we have

(A.3)

where

(A.4)

We deduce from Propositions A.1 and A.2 that the OP(hp+3) term in (A.3) is negligible. Hence, hν(ν!)−1[m̂(ν)(x) − m(ν)(x)] is dominated by Zn, and to prove asymptotic normality of m̂(ν)(x), it suffices to show asymptotic normality of Zn. To do this, write , where

(A.5)

As in Fan (1991a), to prove that

(A.6)

it suffices to show that for some η > 0,

(A.7)

Take η as in (B4) and let κ(u) = E{|Y − m(x)|2+η|X = u}. We have

from Lemma B.5 of Delaigle et al. (2008), and where, here and below, C denotes a generic positive and finite constant. Similarly, we have

and thus E|Un, j|2+η ≤ Ch−β(2+η)−1−η.

For the denominator, it follows from Proposition A.2 and Lemma B.7 of Delaigle et al. (2008) that

We deduce that (A.7) holds and the proof follows from the expressions of E(Un, j) and var(Un, j) given in Propositions A.1 and A.2.
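Display (A.7) is not reproduced here. Assuming it is the usual Lyapunov ratio for the iid summands Un,j, j = 1, …, n, and that the denominator bound gives var(Un,j) ≥ C h−2β−1 (the order suggested by the comparison of var(Qn,i) with var(Pn,i) in the proof of Proposition A.2), the moment bound E|Un,j|2+η ≤ Ch−β(2+η)−1−η combines with it as follows, centering Un,j only changing the constant:

```latex
\frac{\sum_{j=1}^{n} E|U_{n,j}-E U_{n,j}|^{2+\eta}}
     {\bigl\{\sum_{j=1}^{n}\operatorname{var}(U_{n,j})\bigr\}^{1+\eta/2}}
\;\le\; \frac{C\,n\,h^{-\beta(2+\eta)-1-\eta}}{n^{1+\eta/2}\,h^{-(2\beta+1)(1+\eta/2)}}
\;=\; C\,n^{-\eta/2}\,h^{-\beta(2+\eta)-1-\eta+(2\beta+1)(1+\eta/2)}
\;=\; C\,(nh)^{-\eta/2},
```

since the exponent of h simplifies to −η/2. The ratio therefore tends to zero whenever nh → ∞.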

Proof of Theorem 2

Using techniques similar to those of the ordinary smooth error case, together with Lemmas B.8 and B.9 of the more detailed version of this article (Delaigle et al. 2008), it can be proven that (A.3) holds in the supersmooth case as well and that, under the conditions of the theorem, the OP(hp+3) term is negligible. Thus, as in the ordinary smooth error case, it suffices to show that (A.7) holds for some η > 0.

With Pn,i and Qn,i as in the ordinary smooth error case, we have

with β2 = β0·1{β0 < 1/2}, where, here and later, C denotes a generic finite constant, and where we used Lemma B.9 of Delaigle et al. (2008). It follows that . Under the conditions of the theorem, we conclude that (A.7) holds for any η > 0 and (A.6) follows.

Lemma A.1

Under the conditions of Theorem 1, suppose that, for all 0 ≤ k ≤ 2p, we have, when p − ν is odd, (nh)−1/2 {R(KU,k)}1/2 = O(hp+1) and when p − ν is even, (nh)−1/2 {R(KU,k)}1/2 = O(hp+2). Then,

(A.8)

where Rν,k(x) = Sν,k(x) if p − ν is odd and Rν,k(x) = Sν,k(x) − hS̆ν,k(x) if p − ν is even, and where S̆i,j denotes

Proof

The arguments are an extension of the calculations of Fan and Gijbels (1996, pp. 62 and 101–103). We have

By construction of the estimator, is equal to the expected value of its error-free counterpart , which, by Taylor expansion, is easily found to be

(A.9)

Because K is symmetric, it has zero odd moments, and thus if k + p is odd and if k + p is even. Moreover,

where we used

and results derived in the proof of Lemma B.1 of Delaigle et al. (2008).

Using our previous calculations, we see that when k + p is odd,

whereas, for k + p even,

where c1 and c2 denote some finite nonzero constants (depending on x but not on n). Now, it follows from Lemmas B.1 and B.5 of Delaigle et al. (2008) that, under the conditions of the lemma, Ŝ = fX(x)S + hf′X(x)S̃ + OP(h2). Let I denote the identity matrix. By Taylor expansion, we deduce that

Thus, we have Ŝi,j = Si,j − hS̆i,j + OP(h2), where, due to the symmetry properties of the kernel, Si,j = 0 when i + j is odd, whereas S̆i,j = 0 when i + j is even. This concludes the proof.
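The first-order step above rests on the standard identity (A + hB)−1 = A−1 − hA−1BA−1 + O(h²), here with A = fX(x)S and B = f′X(x)S̃, so the h-order correction is proportional to S−1S̃S−1. Assuming S = (μj+l) and S̃ = (μj+l+1) are the usual kernel-moment matrices (as in Fan and Gijbels 1996) and taking K Gaussian purely for illustration, the sketch below checks both the O(h²) remainder (halving h should divide the error by about 4) and the parity pattern stated for the entries of S−1 and of the correction term. The values fX(x) = 0.4 and f′X(x) = −0.3 are arbitrary.

```python
import numpy as np

# Moments mu_j = E(Z^j) of the standard normal kernel (illustrative choice of K).
MU = [1.0, 0.0, 1.0, 0.0, 3.0, 0.0, 15.0, 0.0]


def moment_matrices(p):
    """S = (mu_{j+l}) and S_tilde = (mu_{j+l+1}), 0 <= j, l <= p (needs p <= 3 here)."""
    idx = np.add.outer(np.arange(p + 1), np.arange(p + 1))
    mu = np.array(MU)
    return mu[idx], mu[idx + 1]


def expansion_error(p, f, f_prime, h):
    """Largest entry of (f S + h f' S_tilde)^{-1} minus its first-order expansion."""
    s, s_tilde = moment_matrices(p)
    s_inv = np.linalg.inv(s)
    exact = np.linalg.inv(f * s + h * f_prime * s_tilde)
    first_order = s_inv / f - h * (f_prime / f**2) * (s_inv @ s_tilde @ s_inv)
    return float(np.abs(exact - first_order).max())


for p in (1, 2, 3):
    s, s_tilde = moment_matrices(p)
    s_inv = np.linalg.inv(s)
    s_breve = s_inv @ s_tilde @ s_inv            # proportional to the h-order correction
    i, j = np.indices(s.shape)
    print(p,
          np.abs(s_inv[(i + j) % 2 == 1]).max(initial=0.0),    # ~0: zero when i + j odd
          np.abs(s_breve[(i + j) % 2 == 0]).max(initial=0.0),  # ~0: zero when i + j even
          expansion_error(p, 0.4, -0.3, 0.01) / expansion_error(p, 0.4, -0.3, 0.005))  # ~4
```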

Proposition A.1

Under Conditions A, B, and O, we have for p − ν odd

and, for p − ν even,

Proof

From (A.4), we have where is given at (A.9). It follows that

Recall that Sν,k(x) = 0 unless k + ν is even and S̆ν,k(x) = 0 unless k + ν is odd, and write k + p = (k + ν) + (p − ν). If k + ν is even and p − ν is odd, or if k + ν is odd and p − ν is even, then k + p is odd and thus μk+p+2 = 0. If k + ν and p − ν are both odd or both even, then k + p is even and, by the same argument, μk+p+1 = 0.

Proposition A.2

Under Conditions A, B, and O, we have

Proof

Let Un be as in the proof of Theorem 1. We have

We split the proof into three parts.

(1) To calculate var(Pn,i), note that

and, noting that each KU,k is real, we have,

where we used (B.1) of Delaigle et al. (2008), which states that

with c as in (7). Finally

and thus

Now , which implies that

(2) To calculate var(Qn,i), note that E{KU,k+j,h(Wi − x)} = ∫ vk+jK(v)fX(x + hv) dv = O(1) and |E{KU,k+j,h(Wi − x)KU,k′+j′,h(Wi − x)}| = O(h−2β−1), by Lemma B.6 of Delaigle et al. (2008), which implies that

and var(Qn,i) = O(h−2β+1), which is negligible compared with var(Pn,i).

We conclude from (1) and (2) that var(Un,i) = var(Pn,i){1 + o(1)}, which proves the result.

Contributor Information

Aurore Delaigle is Reader, Department of Mathematics, University of Bristol, Bristol BS8 1TW, UK and Department of Mathematics and Statistics, University of Melbourne, VIC, 3010, Australia (E-mail: aurore.delaigle@bris.ac.uk).

Jianqing Fan is Frederick L. Moore’18 Professor of Finance, Department of Operations Research and Financial Engineering, Princeton University, Princeton, NJ 08544, and Honored Professor, Department of Statistics, Shanghai University of Finance and Economics, Shanghai, China (E-mail: jqfan@princeton.edu).

Raymond J. Carroll is Distinguished Professor, Department of Statistics, Texas A&M University, College Station, TX 77843 (E-mail: carroll@stat.tamu.edu).

References

- Berry S, Carroll RJ, Ruppert D. Bayesian Smoothing and Regression Splines for Measurement Error Problems. Journal of the American Statistical Association. 2002;97:160–169.

- Butucea C, Matias C. Minimax Estimation of the Noise Level and of the Deconvolution Density in a Semiparametric Convolution Model. Bernoulli. 2005;11:309–340.

- Carroll RJ, Hall P. Optimal Rates of Convergence for Deconvolving a Density. Journal of the American Statistical Association. 1988;83:1184–1186.

- Carroll RJ, Hall P. Low-Order Approximations in Deconvolution and Regression with Errors in Variables. Journal of the Royal Statistical Society: Series B. 2004;66:31–46.

- Carroll RJ, Maca JD, Ruppert D. Nonparametric Regression in the Presence of Measurement Error. Biometrika. 1999;86:541–554.

- Carroll RJ, Ruppert D, Stefanski LA, Crainiceanu CM. Measurement Error in Nonlinear Models. 2nd ed. Boca Raton: Chapman and Hall/CRC Press; 2006.

- Comte F, Taupin M-L. Nonparametric Estimation of the Regression Function in an Errors-in-Variables Model. Statistica Sinica. 2007;17:1065–1090.

- Cook JR, Stefanski LA. Simulation-Extrapolation Estimation in Parametric Measurement Error Models. Journal of the American Statistical Association. 1994;89:1314–1328.

- Delaigle A. An Alternative View of the Deconvolution Problem. Statistica Sinica. 2008;18:1025–1045.

- Delaigle A, Fan J, Carroll RJ. Design-adaptive Local Polynomial Estimator for the Errors-in-Variables Problem. 2008. Long version available from the authors.

- Delaigle A, Hall P. On the Optimal Kernel Choice for Deconvolution. Statistics & Probability Letters. 2006;76:1594–1602.

- Delaigle A, Hall P. Using SIMEX for Smoothing-Parameter Choice in Errors-in-Variables Problems. Journal of the American Statistical Association. 2008;103:280–287.

- Delaigle A, Hall P, Meister A. On Deconvolution With Repeated Measurements. Annals of Statistics. 2008;36:665–685.

- Delaigle A, Meister A. Nonparametric Regression Estimation in the Heteroscedastic Errors-in-Variables Problem. Journal of the American Statistical Association. 2007;102:1416–1426.

- Delaigle A, Meister A. Density Estimation with Heteroscedastic Error. Bernoulli. 2008;14:562–579.

- Diggle P, Hall P. A Fourier Approach to Nonparametric Deconvolution of a Density Estimate. Journal of the Royal Statistical Society: Series B. 1993;55:523–531.

- Fan J. Asymptotic Normality for Deconvolution Kernel Density Estimators. Sankhya A. 1991a;53:97–110.

- Fan J. Global Behavior of Deconvolution Kernel Estimates. Statistica Sinica. 1991b;1:541–551.

- Fan J. On the Optimal Rates of Convergence for Nonparametric Deconvolution Problems. Annals of Statistics. 1991c;19:1257–1272.

- Fan J, Gijbels I. Local Polynomial Modeling and Its Applications. London: Chapman & Hall; 1996.

- Fan J, Masry E. Multivariate Regression Estimation with Errors-in-Variables: Asymptotic Normality for Mixing Processes. Journal of Multivariate Analysis. 1992;43:237–271.

- Fan J. Local Polynomial Estimation of Regression Functions for Mixing Processes. Scandinavian Journal of Statistics. 1997;24:165–179.

- Fan J, Truong YK. Nonparametric Regression With Errors in Variables. Annals of Statistics. 1993;21:1900–1925.

- Hall P, Meister A. A Ridge-Parameter Approach to Deconvolution. Annals of Statistics. 2007;35:1535–1558.

- Hu Y, Schennach SM. Identification and Estimation of Non-classical Nonlinear Errors-in-Variables Models with Continuous Distributions. Econometrica. 2008;76:195–216.

- Ioannides DA, Alevizos PD. Nonparametric Regression with Errors in Variables and Applications. Statistics & Probability Letters. 1997;32:35–43.

- Koo J-Y, Lee K-W. B-Spline Estimation of Regression Functions with Errors in Variable. Statistics & Probability Letters. 1998;40:57–66.

- Li T, Vuong Q. Nonparametric Estimation of the Measurement Error Model Using Multiple Indicators. Journal of Multivariate Analysis. 1998;65:139–165.

- Liang H, Wang N. Large Sample Theory in a Semiparametric Partially Linear Errors-in-Variables Model. Statistica Sinica. 2005;15:99–117.

- Marron JS, Wand MP. Exact Mean Integrated Squared Error. Annals of Statistics. 1992;20:712–736.

- Meister A. On the Effect of Misspecifying the Error Density in a Deconvolution Problem. The Canadian Journal of Statistics. 2004;32:439–449.

- Meister A. Density Estimation with Normal Measurement Error with Unknown Variance. Statistica Sinica. 2006;16:195–211.

- Neumann MH. On the Effect of Estimating the Error Density in Non-parametric Deconvolution. Journal of Nonparametric Statistics. 1997;7:307–330.

- Schennach SM. Estimation of Nonlinear Models with Measurement Error. Econometrica. 2004a;72:33–75.

- Schennach SM. Nonparametric Regression in the Presence of Measurement Error. Econometric Theory. 2004b;20:1046–1093.

- Staudenmayer J, Ruppert D. Local Polynomial Regression and Simulation-Extrapolation. Journal of the Royal Statistical Society: Series B. 2004;66:17–30.

- Staudenmayer J, Ruppert D, Buonaccorsi J. Density Estimation in the Presence of Heteroscedastic Measurement Error. Journal of the American Statistical Association. 2008;103:726–736.

- Stefanski LA. Measurement Error Models. Journal of the American Statistical Association. 2000;95:1353–1358.

- Stefanski L, Carroll RJ. Deconvoluting Kernel Density Estimators. Statistics. 1990;21:169–184.

- Stefanski LA, Cook JR. Simulation-Extrapolation: The Measurement Error Jackknife. Journal of the American Statistical Association. 1995;90:1247–1256.

- Taupin ML. Semi-Parametric Estimation in the Nonlinear Structural Errors-in-Variables Model. Annals of Statistics. 2001;29:66–93.

- Zwanzig S. On Local Linear Estimation in Nonparametric Errors-in-Variables Models. Theory of Stochastic Processes. 2007;13:316–327.