Abstract

Functional analysis (FA) methodology is a well-established standard for assessment in applied behavior analysis research. Although used less commonly in clinical (nonresearch) application, the basic components of an FA can be adapted easily in many situations to facilitate the treatment of problem behavior. This article describes practical aspects of FA methodology and suggests ways that it can be incorporated into routine clinical work.

Descriptors: Behavioral assessment, functional analysis methodology

Research methods used in applied behavior analysis provide an excellent model for practice, although standards for evaluating research are admittedly more stringent. The demonstration of experimental control is a good example—it is required in research but not often attempted in practice. Translation of research methodology into practical application often is a matter of what is useful yet feasible, and a demonstration of control, at least during assessment, is both. Most practitioners understand the value of knowing how problem behavior is maintained before attempting to reduce it; perhaps less clear is why practitioners should conduct a functional analysis (FA) when (a) information can be obtained from other sources and (b) practical constraints seem to preclude a thorough analysis. We address both of these issues and suggest ways to incorporate FA methodology into routine clinical assessments.

The term “functional analysis” refers to any empirical demonstration of a cause-effect relation (Baer, Wolf, & Risley, 1968); its application with problem behavior is unique, however. A variety of reinforcement options are available when attempting to establish a new response because nonexistent target responses have no function. Although we may approach the treatment of problem behavior the same way (applying various sorts of contingencies and evaluating their effects), ongoing behavior does have a function based on its history of reinforcement. Thus, the consequences we use to reduce problem behavior must neutralize or compete with those that maintain it, and an FA allows us to identify sources of maintenance prior to treatment.

A great deal of research has shown that the same learning processes that account for the development of socially appropriate behavior—positive and negative reinforcement—are involved in the acquisition and maintenance of problematic behavior (see Iwata, Kahng, Wallace, & Lindberg, 2000, for a more extended discussion). Self-injury, aggression, property destruction, and other harmful acts often produce a necessary reaction from caregivers to interrupt the behavior, which may be combined with other consequences (comfort, “redirection” to other activities, etc.) that may strengthen problem behavior through social-positive reinforcement. These behaviors also are sufficiently disruptive that they may terminate ongoing work requirements, thereby producing escape (social-negative reinforcement). Finally, some problem behaviors (self-injury and/or stereotypy) produce sensory consequences that are automatically reinforcing. Thus, the goal of an FA is to determine which sources of reinforcement account for problem behavior on an individual basis.

Sources of Information about Problem Behavior

A “functional behavioral assessment” consists of any formal method for identifying the reinforcers that maintain problem behavior. Informant responses to rating scales or questionnaires (also called indirect or anecdotal approaches) are easily obtained, which is why these methods are used most often by practitioners (Desrochers, Hile, & Williams-Moseley, 1997; Ellingson, Miltenberger, & Long, 1999). Although indirect methods continue to be recommended (Herzinger & Campbell, 2007), they have been shown repeatedly to be unreliable (Arndorfer, Miltenberger, Woster, Rortvedt, & Gaffaney, 1994; Conroy, Fox, Bucklin, & Good, 1996; Duker & Sigafoos, 1998; Newton & Sturmey, 1991; Sigafoos, Kerr, & Roberts, 1994; Sigafoos, Kerr, Roberts, & Couzens, 1993; Spreat & Connelly, 1996; Sturmey, 1994; Zarcone, Rodgers, Iwata, Rourke, & Dorsey, 1991) and, as a result, are inadequate as the basis for developing an intervention program. Their use seems justifiable only when there are no opportunities whatsoever to collect direct-observation data. Such situations, in which client verbal report defines the extent and cause of the initial problem as well as when it is resolved, more closely resemble a traditional counseling context than the practice of behavior analysis.

The descriptive analysis (Bijou, Peterson, & Ault, 1968), in which observational data are taken on the target behavior and the context in which it occurs, has a longstanding tradition in our field as the primary method for collecting baseline data and evaluating treatment effects. It is not, however, well suited to the identification of functional relations, a fact that was noted by Bijou et al.: “ . . . descriptive studies provide information only on events and their occurrence. They do not provide information on the functional properties of the events or the functional relationships among the events. Experimental studies provide that kind of information” (pp. 176–177). More specifically, descriptive analyses may not reveal differences among social contingencies (e.g., attention vs. escape) that maintain problem behavior (Lerman & Iwata, 1993; Mace & Lalli, 1991), cannot detect extremely thin schedules of reinforcement (Marion, Touchette, & Sandman, 2003), and may incorrectly suggest contingent attention as the source of maintenance because attention is a commonly observed consequence for problem behavior even though it may not be a reinforcer (St. Peter et al., 2005). For these reasons, comparisons of outcomes from independent descriptive and functional analyses of problem behavior generally have shown poor correspondence (Thompson & Iwata, 2007).

In light of limitations with both indirect and descriptive approaches, the functional or experimental analysis has emerged as the standard for assessment in clinical research (see Footnote 1). For example, Kahng, Iwata, and Lewin (2002) examined trends in behavioral research on the treatment of self-injury over a 35-year period and noted a continuing increase in the number of studies incorporating FA methodology, whereas those using other methods have either greatly decreased (descriptive analyses) or ceased altogether (indirect methods).

Key Components of a Functional Analysis

Procedures used for conducting FAs have varied widely, to the point where qualitative and quantitative characteristics of assessment conditions, as well as experimental designs, have been modified to suit a wide range of applications (see Hanley, Iwata, & McCord, 2003, for a review). Still, all methods share a common feature: observation of behavior under well-defined test versus control conditions. A test condition contains the variable (usually some combination of antecedent and consequent events) whose influence is being evaluated. Iwata, Dorsey, Slifer, Bauman, and Richman (1994/1982) described an initial set of test conditions to identify sources of reinforcement previously shown to maintain problem behavior: social-positive reinforcement (contingent-attention condition), social-negative reinforcement (escape-from-demands condition), and automatic reinforcement (alone condition). Variations of test conditions have included divided-attention (Mace, Page, Ivancic, & O'Brien, 1986), access to tangible items (Mace & West, 1986), and social avoidance (Hagopian, Wilson, & Wilder, 2001). Appendix A contains a brief description of commonly used test conditions. Antecedent events are those in effect prior to the occurrence of problem behavior and serve as potential establishing operations or EOs (Laraway, Snycerski, Michael, & Poling, 2003). For example, in the test condition for attention, attention is withheld or is delivered to someone other than the client, either of which may increase the “value” of attention as a reinforcer. Consequent events are those that immediately follow behavior and may serve as reinforcers. The importance of a test condition is obvious; the control condition also is important to rule out the possibility that behavior observed under the test condition would have been seen regardless of what the condition contained.

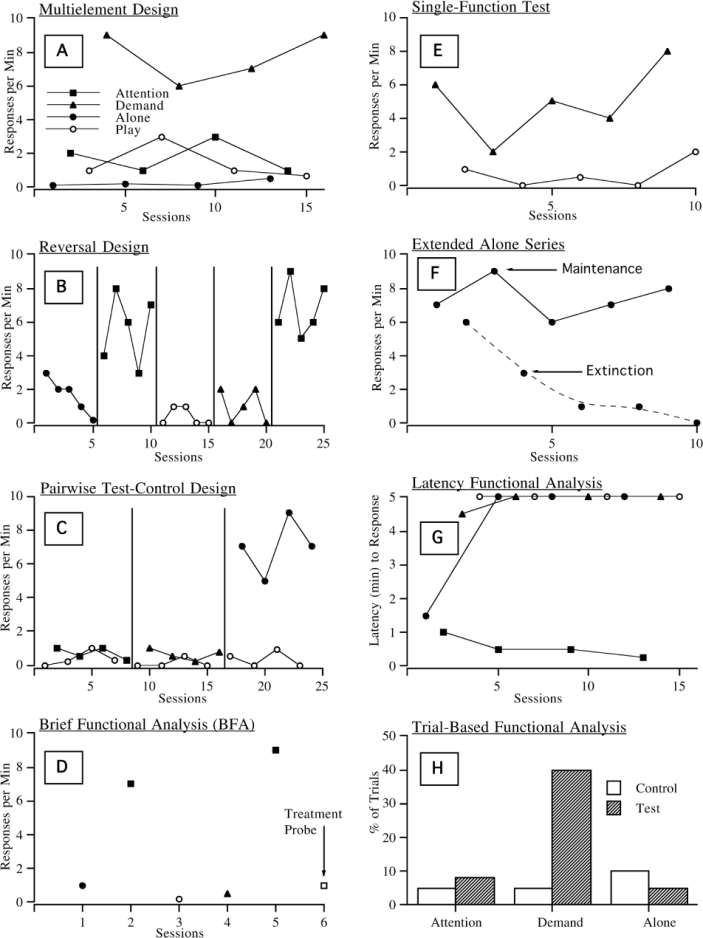

As noted by Baer et al. (1968), a functional analysis of a behavior consists of “ . . . a believable demonstration of the events that can be responsible for the occurrence or non-occurrence of that behavior” (pp. 93–94). From a research perspective, believability requires control over (a) measurement (dependent variable), (b) application of treatment (independent variable), and (c) potential sources of confounding. FAs reported in journals such as the Journal of Applied Behavior Analysis (JABA) typically meet this standard. The multielement design is the most efficient method for conducting multiple comparisons in an FA (see Figure 1, Panel A, illustrating behavior maintained by escape). Because the rapidly alternating conditions of the multielement design sometimes result in discrimination failure, the reversal design (see Figure 1, Panel B, illustrating maintenance by attention) or the pairwise, test-control design, which combines features of the multielement and reversal designs (Figure 1, Panel C, illustrating maintenance by automatic reinforcement), are used as alternatives.

Figure 1. Variations of functional analysis designs (see text for details).
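The test-versus-control comparison at the heart of a multielement FA can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the session data, the 10-min session length, and the "no overlap with the control condition" rule are hypothetical and are not criteria taken from the studies cited here. Visual inspection of graphed data remains the usual method of interpretation.

```python
from collections import defaultdict
from statistics import mean


def rates_by_condition(sessions):
    """Group session response rates (responses per minute) by condition.

    `sessions` is a list of (condition, response_count, session_minutes).
    """
    rates = defaultdict(list)
    for condition, count, minutes in sessions:
        rates[condition].append(count / minutes)
    return dict(rates)


def mean_rate_by_condition(sessions):
    """Average the session rates within each condition."""
    return {c: mean(v) for c, v in rates_by_condition(sessions).items()}


def elevated_conditions(sessions, control="play"):
    """Return test conditions in which every session exceeds every control session.

    The non-overlap rule is an illustrative assumption, not a published criterion.
    """
    rates = rates_by_condition(sessions)
    control_max = max(rates[control])
    return sorted(
        c for c, values in rates.items()
        if c != control and min(values) > control_max
    )


# Hypothetical data: two rapidly alternating cycles of four 10-min sessions.
sessions = [
    ("attention", 1, 10), ("demand", 12, 10), ("alone", 0, 10), ("play", 0, 10),
    ("attention", 0, 10), ("demand", 15, 10), ("alone", 1, 10), ("play", 1, 10),
]

print(mean_rate_by_condition(sessions))
print(elevated_conditions(sessions))
```

With these made-up counts, only the demand condition stands clear of the play (control) condition, the pattern expected for behavior maintained by escape.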

The standards for believability in practice are different yet may approximate those of research in many respects. For example, we expect objective measurement of target behaviors in routine clinical application even though assessment of observer reliability may be less than desirable (or nonexistent). In a similar way, we can incorporate the key components of an FA during assessment even though it may not meet the standards imposed on research because the essential feature—the controlled comparison—can be accommodated in many applied situations. When contingency-management programs are implemented to decrease the frequency of problem behavior, intervention usually is preceded by initial observation of clients and significant others in the setting in which treatment will occur and the collection of baseline data. Both of these provide an opportunity to conduct an FA because the only additional requirement is the inclusion of test and control conditions. Although practical constraints may preclude a demonstration of control similar to that seen in research reports, the methodology has been adapted for use under a number of limiting conditions.

Practical Constraints in the Implementation of Functional Analysis Methodology

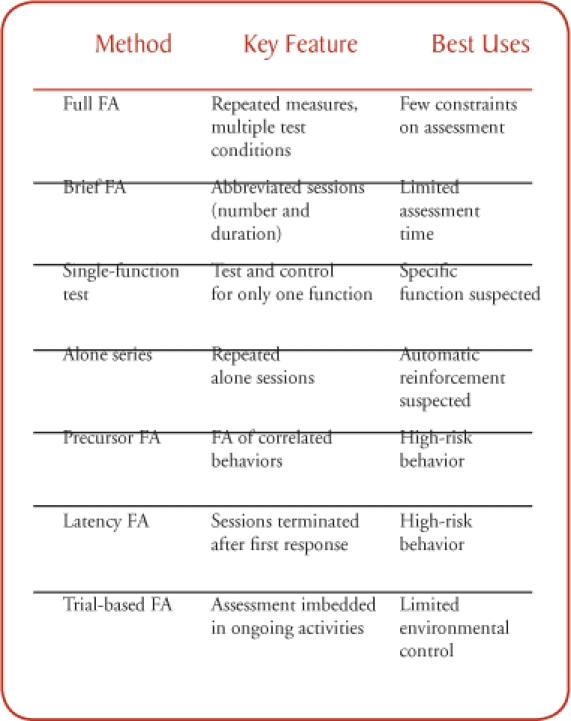

The chief limitations of a typical FA include constraints on the time available for assessment, risk posed by severe problem behavior, and the inability to exert tight control over environmental conditions. Each of these has been addressed through several procedural variations, which are described below and outlined in Table 1.

Table 1. Summary of Functional Analysis (FA) Variations

Limited Assessment Time

When contact with clients is limited, as in outpatient and consultation work, it may be impossible to obtain repeated measures across an extended series of assessment sessions. The “brief functional analysis” or BFA (Northup et al., 1991) was developed exactly for these situations. It consists of a single exposure to 5-min test and control conditions, with replication of a key test condition if time permits, followed by a treatment “probe,” all of which can be accommodated within a 90-min time period (see Figure 1, Panel D, illustrating behavior maintained by attention). Derby et al. (1992) summarized results obtained with the BFA for 79 outpatient cases and reported that they were able to identify the function(s) of problem behavior in approximately 50% of the cases. This finding is remarkable given that the assessment was completed in such a short period of time and under highly novel clinic conditions. Furthermore, the assessment provided evidence of an empirical functional relation (unlike that obtained from indirect or descriptive methods) in less time than what has been required to conduct many descriptive analyses.

Aside from the BFA, a typical, repeated-measures FA may be abbreviated through the use of single-function testing. The FA most often used in research attempts to identify which of several sources of reinforcement maintains problem behavior and therefore includes multiple test conditions. By contrast, when anecdotal report or informal observation strongly suggests a particular source of maintenance, an FA could consist of a single test condition versus a control (see Figure 1, Panel E, illustrating behavior maintained by escape). Thus, preliminary information from rating scales and descriptive analyses, although unreliable or tentative, may enhance the efficiency of an FA. Positive results of a single-function test lead directly to treatment; only negative results require further analysis.

A second type of single-function test might be considered when behavior is presumed to be “self-stimulatory” in nature (i.e., maintained by automatic reinforcement), and consists of observing the individual during repeated “alone” sessions (Vollmer, Marcus, Ringdahl, & Roane, 1995). Although this procedure does not involve a test-control comparison, it provides a simple way to verify that problem behavior persists in the absence of all social stimulation (and therefore is unlikely to be maintained by social reinforcement). By contrast, decreased responding across sessions suggests the possibility of extinction and the need to include test conditions for social contingencies (see Figure 1, Panel F, illustrating two different outcomes in the alone condition: maintenance and extinction).
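One rough way to supplement visual inspection of a series of alone sessions is to compute the trend in response rate across sessions. The sketch below uses an ordinary least-squares slope; the data and the `tolerance` cutoff are hypothetical, and no such cutoff is proposed in the work cited above.

```python
def session_slope(rates):
    """Least-squares slope of response rate across consecutive sessions."""
    n = len(rates)
    if n < 2:
        raise ValueError("need at least two sessions")
    xs = range(1, n + 1)
    x_mean = sum(xs) / n
    y_mean = sum(rates) / n
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, rates))
    denominator = sum((x - x_mean) ** 2 for x in xs)
    return numerator / denominator


def describe_alone_series(rates, tolerance=0.1):
    """Label a series 'decreasing' or 'persisting'.

    `tolerance` (rate change per session) is an arbitrary illustrative cutoff.
    """
    return "decreasing" if session_slope(rates) < -tolerance else "persisting"


# Hypothetical responses per minute across six consecutive alone sessions.
maintained = [2.0, 2.2, 1.9, 2.1, 2.0, 2.1]
extinguishing = [2.0, 1.5, 1.1, 0.6, 0.2, 0.0]

print(describe_alone_series(maintained))
print(describe_alone_series(extinguishing))
```

A "decreasing" label would be a prompt to add test conditions for social contingencies, not a conclusion in itself.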

Potentially Dangerous Behavior

Behaviors such as severe self-injury or aggression are difficult to assess if they cannot be allowed to occur frequently. Although the descriptive analysis is appealing because it simply takes advantage of naturally occurring episodes, severe problem behavior often produces caregiver reactions (e.g., response interruption) that can bias interpretation. The challenge faced when conducting an FA is arranging conditions under which problem behavior may increase while at the same time minimizing risk. The most obvious strategy, in the case of self-injury, consists of having participants wear protective devices. Le and Smith (2002) observed, however, that protective equipment suppressed responding across all FA conditions. As an alternative, Smith and Churchill (2002) noticed that individuals who engaged in self-injury or aggression also engaged in other responses that reliably preceded the occurrence of problem behavior. Results of independent FAs of the “precursor” and target behaviors showed that both had the same functions and that occurrences of severe problem behavior were reduced during the FA of precursors, suggesting that an analysis of precursor behaviors might be helpful in reducing risk.

Another strategy consists of using a measure of responding that is not based on repeated occurrences of behavior. Response rate and duration are the typical measures in research, but latency to the first response also may be sensitive to the effects of contingencies. Thomason, Iwata, Neidert, and Roscoe (2008), for example, conducted independent FAs of problem behavior during sessions in which response rates were measured and during sessions that were terminated following the first occurrence of a target response. Correspondence between results of the two assessments was observed in 9 out of 10 cases, and in every case the latency-based FA resulted in many fewer occurrences of problem behavior (see Figure 1, Panel G, illustrating maintenance by attention. Note: shorter latency indicates responding earlier in a session; 5-min latency indicates that the response never occurred).
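The latency measure can be made concrete with a short sketch. It assumes, following the note on Figure 1, Panel G, that a session is capped at 5 min and that the cap is recorded when the response never occurs. The function names and session data are hypothetical.

```python
from collections import defaultdict
from statistics import mean

SESSION_CAP_S = 300  # 5-min cap, per the Figure 1, Panel G note


def latency_to_first_response(response_times_s, cap_s=SESSION_CAP_S):
    """Seconds from session start to the first target response.

    If no response occurs before the cap, the cap itself is returned,
    so a 5-min latency means the response never occurred.
    """
    valid = [t for t in response_times_s if 0 <= t < cap_s]
    return min(valid) if valid else cap_s


def mean_latency_by_condition(sessions, cap_s=SESSION_CAP_S):
    """Average latency per condition; shorter means earlier responding."""
    latencies = defaultdict(list)
    for condition, times in sessions:
        latencies[condition].append(latency_to_first_response(times, cap_s))
    return {c: mean(v) for c, v in latencies.items()}


# Hypothetical sessions: each ends at the first response, so at most one time.
sessions = [
    ("attention", [14.0]), ("play", []), ("demand", []),
    ("attention", [9.0]), ("play", []), ("demand", [250.0]),
]

print(mean_latency_by_condition(sessions))
```

In this made-up example the attention condition yields much shorter latencies than play or demand, the pattern expected for behavior maintained by attention.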

Limited Control over Environmental Conditions

Almost all FAs reported in research were conducted in settings that facilitated the environmental control needed to isolate the effects of independent variables. This raises the question of whether FAs can be applied under more naturalistic conditions in which the uncontrolled actions of bystanders may compromise results. In addition to conducting FAs in outpatient clinics, David Wacker's group at the University of Iowa has conducted a series of assessment-treatment studies in which FAs were conducted in homes (e.g., Wacker, Berg, Derby, Asmus, & Healey, 1998). Therapists “coached” parents to implement assessment conditions with their children, and procedures were implemented without any loss of precision. Extension to school settings has been shown in studies in which assessment conditions were embedded as probe trials during ongoing classroom routines across the school day (Bloom, Iwata, Fritz, Roscoe, & Carreau, 2008; Sigafoos & Saggers, 1995). For example, a demand probe is conducted in an academic-work context and consists of a 1 min to 2 min control in which no work is presented, followed immediately by a 1 min to 2 min test in which work is presented as the EO and removed contingent on problem behavior (see Figure 1, Panel H, illustrating maintenance by escape). Thus, it seems that the setting per se is not a limiting factor of the FA as long as confounding influences can be minimized for brief periods of time.
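Data from probe trials of this kind could be summarized as sketched below. Each trial pairs a control segment with a test segment, and the summary is the percentage of trials in which problem behavior occurred in each segment. That summary measure, the record layout, and the data are assumptions made for illustration; the text above does not specify how trial data were scored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProbeTrial:
    """One probe: a brief control segment followed by a brief test segment."""
    condition: str             # e.g., "demand"
    behavior_in_control: bool  # problem behavior during the control segment?
    behavior_in_test: bool     # problem behavior during the test segment?


def percent_of_trials(trials, condition):
    """Return (control %, test %) of trials with problem behavior."""
    subset = [t for t in trials if t.condition == condition]
    if not subset:
        raise ValueError(f"no trials recorded for {condition!r}")
    n = len(subset)
    control_pct = 100 * sum(t.behavior_in_control for t in subset) / n
    test_pct = 100 * sum(t.behavior_in_test for t in subset) / n
    return control_pct, test_pct


# Hypothetical demand probes embedded in academic work across a school day.
trials = [
    ProbeTrial("demand", False, True),
    ProbeTrial("demand", False, True),
    ProbeTrial("demand", False, False),
    ProbeTrial("demand", False, True),
    ProbeTrial("demand", False, True),
]

print(percent_of_trials(trials, "demand"))
```

A large gap between the test and control percentages, as in this made-up example, would be consistent with maintenance by escape.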

Other Suggestions for Implementation

Risk Assessment

When problem behavior results in injury to clients or others, more careful consideration of risk is needed than when conducting uncontrolled observations because the therapist explicitly arranges conditions under which problem behavior may increase. Documentation of past or potential risks of the behavior, informed consent, and modifications in assessment procedures (see previous comments on severe problem behavior) are strongly recommended in such cases.

Data Collection and Interpretation

Although a skilled therapist may be able to take data while conducting sessions, this practice is rarely used even in research. Thus, a therapist and observer are needed to conduct most sessions. If an observer is unavailable, sessions may be videotaped for later scoring. Traditional paper-and-pencil methods (data sheets) can be used for actual data recording. However, many inexpensive programs are available for recording data on laptop computers or PDAs and are highly recommended (see Kahng & Iwata, 1998, for a general review and Sarkar et al., 2006, for a recent example). Finally, because data interpretation is a subjective process, criteria for evaluating the results of single-subject designs may be helpful (see Fisher, Kelley, & Lomas, 2003; Hagopian et al., 1997).

Initial Case Selection

The most difficult problem faced by those first attempting to use FA methodology is the absence of a standard for comparison to establish the validity of assessment. That is, results of an assessment cannot be compared to those obtained by a more experienced clinician. Because some assessments may yield clear results only after several modifications, exceedingly complex cases (e.g., those suggesting multiple control or the influence of unusual combinations of events) are not ideal trial cases. A better strategy consists of selecting a case for which there is a strong (perhaps unanimous) suspicion that problem behavior is maintained by a particular social consequence. A positive test for the influence of that consequence provides a measure of face validity, whereas a negative test suggests the need to examine more closely the way in which assessment is conducted (a negative test also may simply reveal flaws in the information obtained initially). An accumulation of positive results, especially when they are combined with positive outcomes from function-based interventions, increases confidence that procedures are being implemented correctly and provides a basis for consideration of more complex cases.

Staff Training

FAs are more difficult to implement than other types of assessment because they require the ability to follow a prescribed sequence of interactions in a consistent manner. Although it can be argued that a behavior analyst who does not have the skills to conduct an FA also cannot implement any subsequent behavioral intervention, a more definitive reply is available by way of data. Results from several studies indicate that undergraduate students, teachers, and workshop participants all can acquire the skills to conduct FA sessions with a high degree of consistency following very brief training (Iwata et al., 2000; Moore et al., 2002; Wallace, Doney, Mintz-Resudek, & Tarbox, 2004). Although actually designing an FA or modifying it if initial results are unclear requires greater skill, neither task should be particularly difficult for a supervising behavior analyst. One especially valuable training aid is video modeling. Observer training seldom is limited to verbal or written instruction, and the same applies to the implementation of FAs. Scripted, role-playing scenarios can be easily produced to demonstrate the correct presentation of antecedent and consequent events, and video samples of actual sessions can serve as the basis for performance feedback.

Summary

The use of FA methodology as an assessment tool was described over 25 years ago (Iwata et al., 1994/1982). Since then, replication and extension have been reported in hundreds of published studies. Thus, the methodology is not new and has been adopted on a wide-scale basis in clinical research. It is unclear whether FA methodology has had a similar impact on practice because survey data (Desrochers et al., 1997; Ellingson et al., 1999) suggest that psychologists and behavior analysts continue to rely more heavily on traditional forms of assessment such as the questionnaire and uncontrolled observation. One possible reason for limited extension from research to practice is that clinicians, having never been trained in the use of FA methodology, view it as impractical except for research purposes. An examination of current research, however, indicates that refinement has been aimed not only at improving control but also at adapting the methodology for real-world application. Procedural variations have been developed for limiting conditions faced by most clinicians, and we hope that this overview will encourage practitioners to adopt, whenever possible, experimental approaches to behavioral assessment.

In closing, it should be noted that medicine was once a profession in which treatment was prescribed based on causes inferred from patient report and observed symptoms. Claude Bernard, widely regarded as the father of modern medicine, suggested an alternative approach: “ . . . experimental analysis is our only means of going in search of the truth . . .” (1865/1927, p. 55). By incorporating experimental procedures into clinical practice, behavior analysis is uniquely positioned to make a similar contribution to the assessment and treatment of “psychological” disorders.

Appendix A

Functional Analysis Conditions

Test Condition for Maintenance by Social-Positive Reinforcement

- Antecedent event:

- a) Attention condition: Begin the session by informing the client that you are busy and “need to do some work.” Then move away and ignore all client behavior except as noted below.

- b) “Divided-attention” variation: Begin the session in the same manner and then proceed to deliver attention to either another adult or to a peer of the client.

- c) “Tangible” variation: Identify an item that is highly preferred by the client and allow the client free access to it just prior to the session. Begin the session by requesting and removing the item and then move away from the client as in the attention condition.

- Consequent event:

- a) Non-target behavior: If the target problem behavior does not occur (or if any behavior other than the target occurs), the antecedent event will remain in effect until the end of the session.

- b) Problem behavior: If the target problem behavior occurs, deliver attention, usually in the form of a mild reprimand, a statement of concern, and some comforting physical contact (or response blocking). In the tangible variation, deliver the tangible item briefly (about 30 s). After delivering attention or the tangible item, reinstate the antecedent event.

Test Condition for Maintenance by Social-Negative Reinforcement

- Antecedent event:

- a) Task-Demand condition: Conduct repeated learning trials throughout the session using academic or vocational tasks that are appropriate to the client's skill level but that are somewhat effortful. Typically, a trial begins with an instruction, followed as needed by prompts consisting of a demonstration and then physical assistance.

- b) Social-Avoidance variation: Initiate social interaction with the client at frequent intervals throughout the session. Do not conduct learning trials (academic or vocational) per se, but simply try to prompt some type of interaction by making comments about things in the room, asking questions, etc.

- Consequent event:

- a) Non-target behavior: Deliver praise following appropriate responses (compliance in the task-demand condition, any appropriate social response in the social-avoidance condition).

- b) Problem behavior: If the target problem behavior occurs, immediately terminate the task (or ongoing interaction) and turn away from the client for about 30 s, then reinstate the antecedent condition.

Test Condition for Maintenance by Automatic-Positive Reinforcement

- Antecedent event: This condition is designed to determine whether problem behavior will persist in the absence of stimulation; if so, it is not likely maintained by social consequences. Therefore, the condition is conducted ideally with the client alone in a relatively barren environment, and there is no programmed antecedent event.

- Consequent event: None.

Control (Play) Condition

This condition is designed to eliminate or minimize the effects likely to be seen in the test conditions. Thus, it typically involves free access to preferred leisure items throughout the session, the frequent delivery of attention, and the absence of demands (Note: If social avoidance is suspected, attention will be deleted). Occurrences of problem behavior produce no consequences, other than the delay of attention for a brief period (5 s to 10 s).
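For training or session scripting, the conditions in this appendix can be written down as a small lookup table. The sketch below paraphrases the descriptions above; the class, field names, and dictionary keys are hypothetical, and the wording of each entry is a condensed restatement, not an official protocol.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Condition:
    """One FA condition, paraphrased from Appendix A."""
    function_tested: Optional[str]  # None for the control condition
    antecedent: str
    consequence_for_problem_behavior: str


CONDITIONS = {
    "attention": Condition(
        "social-positive reinforcement",
        "therapist says they are busy, moves away, and ignores the client",
        "deliver attention, then reinstate the antecedent",
    ),
    "tangible": Condition(
        "social-positive reinforcement",
        "remove a highly preferred item after free access, then move away",
        "deliver the item for about 30 s, then reinstate the antecedent",
    ),
    "demand": Condition(
        "social-negative reinforcement",
        "repeated learning trials with instructions and prompts",
        "terminate the task and turn away for about 30 s",
    ),
    "alone": Condition(
        "automatic reinforcement",
        "client alone in a relatively barren environment",
        "none",
    ),
    "play": Condition(
        None,
        "free access to leisure items, frequent attention, no demands",
        "none, other than a 5 s to 10 s delay of attention",
    ),
}


def consequence(condition_name):
    """Look up the programmed consequence for problem behavior."""
    return CONDITIONS[condition_name].consequence_for_problem_behavior


print(consequence("demand"))
```

The divided-attention and social-avoidance variations are omitted here for brevity; they would be added as further entries in the same form.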

Footnotes

Preparation of this manuscript was supported in part by a grant from the Florida Agency on Persons with Disabilities. Reprints may be obtained from Brian Iwata, Psychology Department, University of Florida, Gainesville, Florida 32611.

1. Two criticisms have been raised about the status of FA methodology as a benchmark standard. First, the FA itself should be considered tentative pending comparison with another standard. This is true, but given the characteristics of a typical FA (repeated measures, control over dependent and independent variables, replication), it is not clear what a more precise standard would be. Second, although results of an FA reveal the effects of contingencies applied during assessment, it is not clear that the same contingencies influence behavior under typical, “real-world” conditions. This criticism also has some merit; however, results from hundreds of studies have shown that the contingencies identified in an FA are “close enough” to form the basis of highly effective treatment. Furthermore, the best way to verify the influence of a suspected “real-world” contingency would be to isolate its effects in an FA.

Contributor Information

Brian A Iwata, University of Florida.

Claudia L Dozier, University of Kansas.

References

- Arndorfer R. E, Miltenberger R. G, Woster S. H, Rortvedt A. K, Gaffaney T. Home-based descriptive and experimental analysis of problem behaviors in children. Topics in Early Childhood Special Education. 1994;14:64–87. [Google Scholar]

- Baer D. M, Wolf M. M, Risley T. R. Some current dimensions of applied behavior analysis. Journal of Applied Behavior Analysis. 1968;1:91–97. doi: 10.1901/jaba.1968.1-91. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Greene H. C, translator. An introduction to the study of experimental medicine. NY: Macmillan; 1927. Trans. (Original work published 1865) [Google Scholar]

- Bijou S. W, Peterson R. F, Ault M. H. A method to integrate descriptive and experimental field studies at the level of data and empirical concepts. Journal of Applied Behavior Analysis. 1968;1:175–191. doi: 10.1901/jaba.1968.1-175. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bloom S. E, Iwata B. A, Fritz J. N, Roscoe E. M, Carreau A. B. Classroom application of a trial-based functional analysis. 2008. Manuscript submitted for publication. [DOI] [PMC free article] [PubMed]

- Conroy M. A, Fox J. J, Bucklin A, Good W. An analysis of the reliability and stability of the Motivation Assessment Scale in assessing the challenging behaviors of persons with developmental disabilities. Education and Training in Mental Retardation and Developmental Disabilities. 1996;31:243–250. [Google Scholar]

- Derby K. M, Wacker D. P, Sasso G, Steege M, Northup J, Cigrand K, Asmus J. Brief functional assessment techniques to evaluate aberrant behavior in an outpatient setting: A summary of 79 cases. Journal of Applied Behavior Analysis. 1992;25:713–721. doi: 10.1901/jaba.1992.25-713. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Desrochers M. N, Hile M. G, Williams-Moseley T. L. Survey of functional assessment procedures used with individuals who display mental retardation and severe problem behaviors. American Journal on Mental Retardation. 1997;101:535–546. [PubMed] [Google Scholar]

- Duker P. C, Sigafoos J. The Motivation Assessment Scale: Reliability and construct validity across three topographies of behavior. Research in Developmental Disabilities. 1998;19:131–141. doi: 10.1016/s0891-4222(97)00047-4. [DOI] [PubMed] [Google Scholar]

- Ellingson S. A, Miltenberger R. G, Long E. S. A survey of the use of functional assessment procedures in agencies serving individuals with developmental disabilities. Behavioral Interventons. 1999;14:187–198. [Google Scholar]

- Fisher W. W, Kelley M. E, Lomas J. E. Visual aids and structured criteria for improving visual inspection and interpretation of single-case designs. Journal of Applied Behavior Analysis. 2003;36:387–406. doi: 10.1901/jaba.2003.36-387. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hagopian L. P, Fisher W. W, Thompson R. H, Owen-DeSchryver J, Iwata B. A, Wacker D. P. Toward the development of structured criteria for interpretation of functional analysis data. Journal of Applied Behavior Analysis. 1997;30:313–326. doi: 10.1901/jaba.1997.30-313. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hagopian L. P, Wilson D. M, Wilder D. A. Assessment and treatment of problem behavior maintained by escape from attention and access to tangible items. Journal of Applied Behavior Analysis. 2001;34:229–232. doi: 10.1901/jaba.2001.34-229. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hanley G. P, Iwata B. A, McCord B. E. Functional analysis of problem behavior: A review. Journal of Applied Behavior Analysis. 2003;36:147–186. doi: 10.1901/jaba.2003.36-147. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Herzinger C. V, Campbell J. M. Comparing functional assessment methodologies: A quantitative synthesis. Journal of Autism and Developmental Disorders. 2007;37:1430–1445. doi: 10.1007/s10803-006-0219-6. [DOI] [PubMed] [Google Scholar]

- Iwata B. A, Dorsey M. F, Slifer K. J, Bauman K. E, Richman G. S. Toward a functional analysis of self-injury. Journal of Applied Behavior Analysis. 1982;27:197–209. doi: 10.1901/jaba.1994.27-197. (Reprinted from Analysis and Intervention in Developmental Disabilities, 1982, 2, 3–20) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Iwata B. A, Kahng S, Wallace M. D, Lindberg J. S. The functional analysis model of behavioral assessment. In: Austin J, Carr J. E, editors. Handbook of applied behavior analysis. Reno, NV: Context Press; 2000. pp. 61–89.

- Iwata B. A, Wallace M. D, Kahng S, Lindberg J. S, Roscoe E. M, Conners J, Hanley G. P, Thompson R. H, Worsdell A. S. Skill acquisition in the implementation of functional analysis methodology. Journal of Applied Behavior Analysis. 2000;33:181–194. doi: 10.1901/jaba.2000.33-181.

- Kahng S, Iwata B. A. Computerized systems for collecting real-time observational data. Journal of Applied Behavior Analysis. 1998;31:253–261.

- Kahng S, Iwata B. A, Lewin A. B. Behavioral treatment of self-injury, 1964–2000. American Journal on Mental Retardation. 2002;107:212–221. doi: 10.1352/0895-8017(2002)107<0212:BTOSIT>2.0.CO;2.

- Laraway S, Snycerski S, Michael J, Poling A. Motivating operations and terms to describe them: Some further refinements. Journal of Applied Behavior Analysis. 2003;36:407–414. doi: 10.1901/jaba.2003.36-407.

- Le D. D, Smith R. G. Functional analysis of self-injury with and without protective equipment. Journal of Developmental and Physical Disabilities. 2002;14:277–290.

- Lerman D. C, Iwata B. A. Descriptive and experimental analyses of variables maintaining self-injurious behavior. Journal of Applied Behavior Analysis. 1993;26:293–319. doi: 10.1901/jaba.1993.26-293.

- Mace F. C, Lalli J. S. Linking descriptive and experimental analyses in the treatment of bizarre speech. Journal of Applied Behavior Analysis. 1991;24:553–562. doi: 10.1901/jaba.1991.24-553.

- Mace F. C, Page T. J, Ivancic M. T, O'Brien S. Analysis of environmental determinants of aggression and disruption in mentally retarded children. Applied Research in Mental Retardation. 1986;7:203–221. doi: 10.1016/0270-3092(86)90006-8.

- Mace F. C, West B. J. Analysis of demand conditions associated with reluctant speech. Journal of Behavior Therapy & Experimental Psychiatry. 1986;17:285–294. doi: 10.1016/0005-7916(86)90065-0.

- Marion S. D, Touchette P. E, Sandman C. A. Sequential analysis reveals a unique structure for self-injurious behavior. American Journal on Mental Retardation. 2003;108:301–313. doi: 10.1352/0895-8017(2003)108<301:SARAUS>2.0.CO;2.

- Moore J. W, Edwards R. P, Sterling-Turner H. E, Riley J, DuBard M, McGeorge A. Teacher acquisition of functional analysis methodology. Journal of Applied Behavior Analysis. 2002;35:73–77. doi: 10.1901/jaba.2002.35-73.

- Newton J. T, Sturmey P. The Motivation Assessment Scale: Inter-rater reliability and internal consistency in a British sample. Journal of Mental Deficiency Research. 1991;35:472–474. doi: 10.1111/j.1365-2788.1991.tb00429.x.

- Northup J, Wacker D, Sasso G, Steege M, Cigrand K, Cook J, DeRaad A. A brief functional analysis of aggressive and alternative behavior in an outclinic setting. Journal of Applied Behavior Analysis. 1991;24:509–522. doi: 10.1901/jaba.1991.24-509.

- Sarkar A, Dutta A, Dhingra U, Verma P, Juyal R, Black R, Monon V, Kumar J, Sazawi S. Development and use of behavior and social interaction software installed on Palm handheld for observation of a child's social interactions with the environment. Behavior Research Methods. 2006;38(3):407–415. doi: 10.3758/bf03192794.

- Sigafoos J, Kerr M, Roberts D. Interrater reliability of the Motivation Assessment Scale: Failure to replicate with aggressive behavior. Research in Developmental Disabilities. 1994;15:333–342. doi: 10.1016/0891-4222(94)90020-5.

- Sigafoos J, Kerr M, Roberts D, Couzens D. Reliability of structured interviews for the assessment of challenging behaviour. Behaviour Change. 1993;10:47–50.

- Sigafoos J, Saggers E. A discrete-trial approach to the functional analysis of aggressive behaviour in two boys with autism. Australia & New Zealand Journal of Developmental Disabilities. 1995;20:287–297.

- Spreat S, Connelly L. Reliability analysis of the Motivation Assessment Scale. American Journal on Mental Retardation. 1996;100:528–532.

- Smith R. G, Churchill R. M. Identification of environmental determinants of behavior disorders through functional analysis of precursor behaviors. Journal of Applied Behavior Analysis. 2002;35:125–136. doi: 10.1901/jaba.2002.35-125.

- St. Peter C. C, Vollmer T. R, Bourret J. C, Borrero C. S. W, Sloman K. N, Rapp J. T. On the role of attention in naturally occurring matching relations. Journal of Applied Behavior Analysis. 2005;38:429–443. doi: 10.1901/jaba.2005.172-04.

- Sturmey P. Assessing the functions of aberrant behaviors: A review of psychometric instruments. Journal of Autism and Developmental Disorders. 1994;24:293–304. doi: 10.1007/BF02172228.

- Thomason J. L, Iwata B. A, Neidert P. L, Roscoe E. M. Evaluation of response latency as the index of problem behavior during functional analyses. 2008. Manuscript submitted for publication.

- Thompson R. H, Iwata B. A. A comparison of outcomes from descriptive and functional analyses of problem behavior. Journal of Applied Behavior Analysis. 2007;40:333–338. doi: 10.1901/jaba.2007.56-06.

- Vollmer T. R, Marcus B. A, Ringdahl J. E, Roane H. S. Progressing from brief assessments to extended experimental analyses in the evaluation of aberrant behavior. Journal of Applied Behavior Analysis. 1995;28:561–576. doi: 10.1901/jaba.1995.28-561.

- Wacker D, Berg W, Derby K, Asmus J, Healey. Evaluation and long-term treatment of aberrant behavior displayed by young children with disabilities. Journal of Developmental and Behavioral Pediatrics. 1998;19:260–266. doi: 10.1097/00004703-199808000-00004.

- Wallace M. D, Doney J. K, Mintz-Resudek C. M, Tarbox R. S. F. Training educators to implement functional analyses. Journal of Applied Behavior Analysis. 2004;37:89–92. doi: 10.1901/jaba.2004.37-89.

- Zarcone J. R, Rodgers T. A, Iwata B. A, Rourke D. A, Dorsey M. F. Reliability analysis of the Motivation Assessment Scale: A failure to replicate. Research in Developmental Disabilities. 1991;12:349–362. doi: 10.1016/0891-4222(91)90031-m.