SYNOPSIS

Instruments designed to measure the performance of public health systems at state and local levels were supported by the Centers for Disease Control and Prevention (CDC) and implemented in 2002. This article describes the development and outcomes of a system and tool to measure the performance of State Public Health Laboratory Systems, created by the Association of Public Health Laboratories (APHL) in partnership with CDC. We describe the process used to develop the instrument and its subsequent pilot testing and field testing in 11 states.

Throughout the field testing and early implementation phases, both CDC and APHL recognized that the core rationale for measuring system performance would be to provide the basis for subsequent system improvement. APHL implemented the Laboratory System Improvement Program (L-SIP) in 2007 and conducted an evaluation of the field testing of the instrument and related materials that same year. We conclude with a summary of future implications for L-SIP, the program's recognition as an international standard for laboratory systems, and the critical importance of its continuation.

The 10 Essential Public Health Services (hereafter, Essential Services) were first introduced in 1994¹ and were later defined as those practices or functions that needed to be in place to assure a fully operational public health system, whether at the local, state, or national level.2 The concept of practicing public health through a systems approach began to grow following elucidation of the Essential Services.

The Centers for Disease Control and Prevention (CDC), in partnership with the Association of State and Territorial Health Officials, the National Association of County and City Health Officials, and the National Association of Local Boards of Health, released three sets of performance standards and instruments in 2002 to measure system performance for state and local public health systems and local governance boards.3 In each case, the standards were based on the Essential Services and measured the performance of the respective systems based on optimal or “gold” standards. CDC continues to promote their use.

In that same year, the Association of Public Health Laboratories (APHL) convened several members and partners to address the unanswered question, “What are the basic functions and capacities of state public health laboratories?” A report was issued defining 11 Core Functions and Capabilities of State Public Health Laboratories (hereafter, Core Functions), together with a set of capacities and activities for each.4

Driven in part by that report, the APHL Board of Directors expressed interest in developing public health laboratory (PHL) system performance standards as a means of supporting broad system improvement and possibly as a component of a future accreditation process.

Under its cooperative agreement with CDC, APHL contracted with Milne & Associates, LLC (M&A)—a public health consulting firm based in Portland, Oregon—in 2004 to conduct a study to address whether the development of system standards was feasible and would be supported by APHL members. The study demonstrated that PHL leaders across the country were broadly supportive of creating standards and a process for system improvement.

With a cooperative agreement from CDC, APHL contracted with M&A to design and facilitate a project to measure laboratory system performance. An initial planning meeting held in October 2005 included representatives from APHL staff, the APHL Board, CDC, and M&A. At that meeting, a number of deliverables were agreed upon for the project:

Performance standards would be developed for PHL systems as opposed to state PHLs;

Performance measures would be defined for each standard;

A performance measurement tool would be developed;

The general intent would be system improvement, with potential application to accreditation;

The project would include field testing to validate the standards, measures, and measurement tool; and

Both the development process and the impact of the standards, measures, and tool would be evaluated.

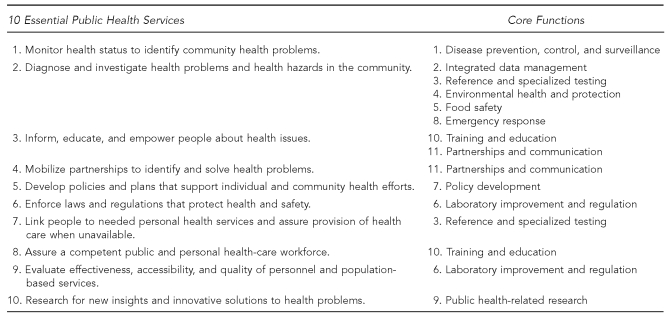

During the planning meeting, it was agreed that the framework for the laboratory performance standards would be the 11 Core Functions framed within the Essential Services (Figure 1). APHL requested that project work be transparent, with all drafts posted on the APHL website and ongoing communication with partners and stakeholders. A Project Charter was completed, defining the mission, purpose, vision, and values to guide the project, and state PHL leaders were identified to serve as a steering committee to help guide policy and project completion. The initial meeting concluded with brainstorming to identify a workgroup of laboratory professionals to develop the standards.

Figure 1.

Crosswalk of the 10 Essential Public Health Servicesᵃ and the Core Functions and Capabilities of State Public Health Laboratoriesᵇ

ᵃPublic Health Functions Steering Committee. Public health in America: the 10 essential public health services. Washington: Public Health Service (US); July 1995.

ᵇWitt-Kushner J, Astles JR, Ridderhof JC, Martin RA, Wilcke B Jr, Downes FP, et al. Core functions and capabilities of state public health laboratories: a report of the Association of Public Health Laboratories. MMWR Recomm Rep 2002;51(RR-14):1-8.

DESIGN OF THE STANDARDS AND ASSESSMENT INSTRUMENT

In preparing for the first work meeting, M&A proposed to APHL that the instrument be based on the attributes and elements of CDC's National Public Health Performance Standards Program (NPHPSP) instruments, but that it adopt the approach used in the Capacity Assessment for State Title V (CAST-5). CAST-5 was developed by the Johns Hopkins University Women's and Children's Health Policy Center in collaboration with the Association of Maternal and Child Health Programs (AMCHP) to measure organizational capacity for state maternal and child health programs.5 CAST-5 is also structured around the Essential Services and uses a rating system similar to NPHPSP. However, rather than employing a large number of specific questions for each standard, as the CDC tools do, CAST-5 poses a small number (three to eight) of broader questions for each indicator. Participants in a CAST-5 assessment discuss the questions for an indicator and then rate the overall system capacity or performance for that indicator based on the discussion.

Having participated in both NPHPSP and CAST-5 assessments, M&A was convinced that the deeper discussion of issues related to standards and indicators with the CAST-5 tool would lead to a significantly more robust exchange of perspectives, including the practical experiences of participants. While discussions do occur during NPHPSP assessments, most of the assessment time is spent rating the several hundred indicators with limited discussion. APHL approved incorporating this approach in the laboratory assessment instrument.

The first of two work meetings was held in Washington, D.C., in December 2005. Participants included several state PHL directors, staff from APHL and CDC, and a team of three from M&A. During the meeting, participants confirmed agreements from the October planning meeting, reviewed the project charter, and split into three workgroups to begin designing the instrument.

By the end of the second day of the meeting, the workgroups had identified key themes for each of the Essential Services, with several discussion questions to guide assessment group dialogue for each theme. The workgroups agreed to continue refining the work, meeting by Web conference once or twice monthly. Each workgroup took responsibility for three Essential Services and shared work on the 10th Essential Service. An APHL staff member, who was assigned responsibility for the technical aspects of Web conferencing, made on-screen revisions of drafts in real time as the respective workgroups discussed revisions and refinements, enabling participants to see suggested revisions as they occurred. Members of the M&A project team facilitated the Web conferences and ensured that the evolving drafts matched the decisions made.

Results of the formative meeting and the initial working session were shared with the steering committee in January 2006. Members of the committee agreed to serve through completion of the development and field testing of project materials, and usually met monthly by telephone to review progress.

The three workgroups continued to meet through April 2006, refining the draft standards and performance measures and developing a glossary of terms as a companion piece to the assessment tool. Updated drafts of the tool and the glossary were posted on a website to accommodate broader review and input and to help assure project transparency. While site utilization data showed that people did visit the website, very few comments were received.

PILOT TESTING THE INSTRUMENT

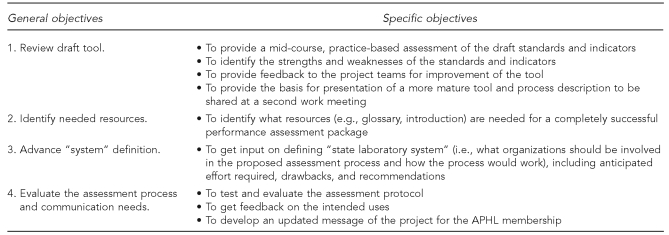

M&A compiled the work of the three groups into a draft instrument and designed a protocol to guide two pilot reviews of the draft. The objectives of the pilots are summarized in Figure 2.

Figure 2.

Objectives for pilot testing the draft public health laboratory system assessment tool

APHL = Association of Public Health Laboratories

Pilot tests were conducted in May 2006 at the Oregon State Public Health Laboratory in Portland and at the Wisconsin State Laboratory of Hygiene in Madison, Wisconsin. Participants included the laboratory director at each site and, in Madison, several middle managers.

Participants in the pilots confirmed strong support for the assessment process, particularly at the system level, and suggested several content changes in the tool. Other results of the pilot testing of the instrument included:

Agreement with the draft scoring system, with a notation that it was compatible with the NPHPSP scoring system;

Recognition that the measurement system is qualitative, not quantitative;

Confirmation that assessments should not be conducted internally, but rather should include partners and be led by outside facilitators trained in the tool and knowledgeable about systems;

Agreement that the concept of the State Public Health Laboratory System (SPH Laboratory System) is very important to employ in the tool, but that it is likely to cause confusion in the absence of a clear definition; and

Confirmation that a glossary is needed to assure consistently applied definitions of terms used in the instrument.

The workgroups incorporated pilot site content suggestions into the draft assessment tool and identified additional terms for which definitions were needed. M&A incorporated the work of the teams into an updated draft instrument and glossary, and drafted a User's Guide that included a tool kit to assist states in organizing assessments of their PHL systems. The three drafts were reviewed by all members of the working committees at a meeting held in conjunction with the APHL Annual Meeting in Long Beach, California, in June 2006. During that meeting, final changes to the tool were identified, additional terms for inclusion in the glossary were suggested, and an initial marketing plan was developed.

Participants in the meeting identified a number of benefits the assessment process would provide for states:

Strengthened relationships with public health, commercial, and other laboratories and partners comprising the broader laboratory system;

A framework for continual improvement of PHL systems;

A concrete way to educate system partners and elected officials about the laboratory system;

A practical tool to help identify areas in need of advocacy and increased resources across the system;

A means to help expand and formalize the National Laboratory System around the country; and

Support for the planned process for accreditation of state PHLs.

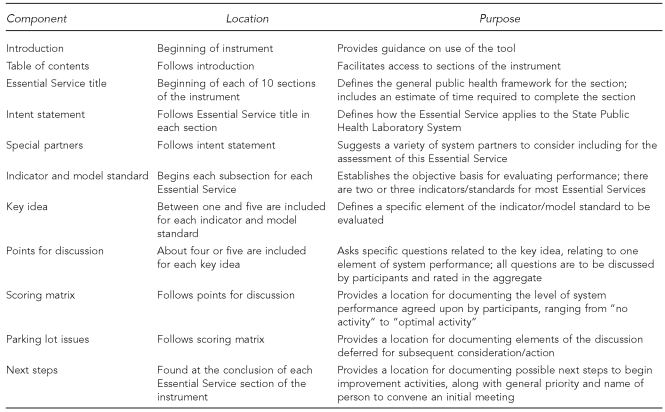

The final design of the tool combined elements from the CAST-5 tool and the NPHPSP assessment tools. Figure 3 includes a listing of the assessment instrument components and their respective functions.

Figure 3.

Components of the APHL State Public Health Laboratory System assessment instrument

APHL = Association of Public Health Laboratories

FIELD TESTING THE MATERIALS

Planning for field testing of the instrument began after the APHL 2006 Annual Meeting. Field tests were designed to test the instrument, assessment process, and supportive materials. Fourteen state PHLs volunteered to participate as field-test sites. The states were arrayed in a matrix by size, complexity, and geographic location. Nine states were selected, representing a cross-section of state PHLs.

The steering committee agreed that four of the field-test sites would be facilitated by M&A, which had facilitated several state and local public health assessments using the NPHPSP instrument. The remaining states would arrange their own facilitation. The states participating in the field tests included Washington State, Maine, New Hampshire, Missouri, Utah, South Carolina, New Jersey, California, and Texas.

Washington State completed the first field test of the instrument in December 2006. Because this was the first use of the instrument that included system partners, several changes in the instrument were made upon completion of the process to further improve clarity. Field tests continued after changes in the document and other materials were made. The remaining eight field tests were completed by March 2007.

M&A provided each of the field-test states with support for their planning and assessment arrangements. Biweekly technical assistance calls were conducted to provide an introduction to the process, review of materials, and information about steps to be taken to convene the assessment. The User's Guide provided a great deal of assistance to the states, identifying partners to consider inviting, providing draft invitational letters, describing recommendations for facilities including assessment layout, and offering sample agendas and additional information to include in packets for the participants. M&A also provided a brief training for people who were to facilitate the state sessions and for those who were designated as theme takers. M&A developed additional resources to share with the states, including a computer presentation to support the orientation of assessment participants and a spreadsheet-based score sheet.

The nine sites completing the field-test assessment followed a similar process. The mean number of external participants was 50 and included representatives from private and public laboratories, public health agencies, universities, law enforcement agencies, and businesses. A mean of five state laboratory employees also participated.

Each session began in the morning with an explanation of the rationale for conducting the assessment and an orientation to the process. All participants then took part in a plenary assessment of one of the Essential Services as a means of introducing all to the assessment and rating process. The facilitator briefly introduced the topic covered by the Essential Service, and then moved to the first key idea (Figure 3), facilitating a dialogue about the points for discussion designed to assess the major criteria to be met for the key idea by the state laboratory system.

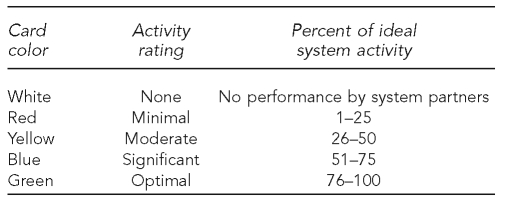

During the discussion, the theme takers documented major conversation points that might be pursued in subsequent improvement work. After a dialogue on the key issues, the facilitator called for a rating of how well the system satisfied the key idea. Participants held up one of five colored index cards, each signifying a different rating (Figure 4).

Figure 4.

Description of scoring cards used during public health laboratory system assessments

The facilitator encouraged an exchange of perceptions about performance for the key idea and called for one or more re-votes if needed to identify a performance rating that all group members could accept. The facilitator then moved group discussion to the next key idea, repeating the process until all the key ideas for the Essential Service were completed.

After completing the plenary assessment session, participants moved into three concurrent breakout sessions to repeat the process during the remainder of the day. At the conclusion of the third breakout session, completing assessments for the final three Essential Services, participants reconvened to hear the scored results of the day's assessment, briefly discuss next steps, and participate in a brief evaluation of the day.

Field-test evaluations

M&A evaluated general results of the nine field tests upon their completion. Among the findings were the following:

- Cost: The range of costs for conducting the assessments was $250 to $5,000 (mean = $2,400). Costs included facility rental, equipment rental, printing of materials, food, and (for some) facilitation.

- Staff time: The mean range of staff time required was 71 to 90 hours for planning, making arrangements, and conducting the assessment.

- Benefits: Among those most frequently mentioned were:

  - Increased connection and improved relationships with partners and stakeholders

  - Improved communication across agencies and organizations

  - Increased orientation around system thinking

  - Creation of baseline data to help inform improvement activities

- Challenges: Among those identified were:

  - Understanding the concept of a PHL system among participants. The concept was new to most participants, including internal and external partners.

  - Some states were prohibited by state policy from purchasing food for participants. States were encouraged to provide lunch and refreshments during the assessment day as a way to manage time, increase participant satisfaction, and create opportunities for informal communication among partners.

  - Knowing how to proceed following the assessment.

PROGRAM IMPLEMENTATION

General results and findings from the field tests were presented in a scientific session at the 2007 APHL Annual Meeting. The presentation also served as an excellent marketing opportunity for the project. During the meeting, several state laboratory directors who had participated in the field tests gave video interviews describing their experiences and the benefits gained from the assessment. APHL staff incorporated many of the interviews into a brief marketing video to help promote the program. During the Annual Meeting, several states indicated an interest in participating in the next round of assessments.

Following the Annual Meeting, continued funding from CDC supported APHL in publishing a request for proposals, soliciting bids for program implementation, creating a four-year plan, formally evaluating the program, and developing an Online Resource Center. M&A was awarded a contract for implementation, development of a four-year plan, and evaluation. The Public Health Foundation was awarded a contract to develop an Online Resource Center.

At the beginning of the implementation work, M&A and APHL staff created a number of additional materials and resources to support states based on lessons learned from the field tests. The new resources included:

A brief video describing the program, including interviews with laboratory directors who completed the assessment process;

A narrated computer presentation designed to support individuals charged with planning and convening assessments for state laboratories;

A video describing the role and process guidelines for facilitators and theme takers;

Written materials, such as a description of the program and M&A's Essential Services in English. The materials also included a definition of the PHL system, developed by a separate APHL committee; and

A Web-based information center to house materials and resources for states planning to launch the program.

A biannual schedule of technical support for states planning to conduct system assessments began in 2008. Each phase includes provision of a series of conference calls during which participants are guided through the assessment planning process, the various tools, and the assessment instrument, and are provided password-protected access to the SharePoint site. Seven states completed assessments in 2008, four additional states began preparing for assessment in early 2009, and another five states expressed interest in participating during the second phase in 2009.

During 2008, the program name, formerly the State Public Health Laboratory System Performance Assessment Program, changed to the Laboratory System Improvement Program (L-SIP). APHL changed the name to reflect the core importance of system improvement as the principal rationale for completing system assessments.

PROGRAM EVALUATION

Program evaluation was based on a review of data collected during the field-testing phase of the project, together with telephone interviews with key informants and focus groups. Data sources used included:

April 2007 survey of field-test sites, conducted after the field tests were completed;

Debriefings of field-test sites, completed shortly after the field tests, between February and April 2007;

Post-assessment reports from three field-test sites;

Invitation and attendance lists from the field-test sites; and

Feedback from invited participants who were unable to attend assessments.

M&A conducted key informant interviews with the state laboratory directors from the nine field-test sites. Six focus groups were conducted—four with internal partners (primarily state laboratory staff) and two with partners external to the state laboratories. Conclusions from the evaluation included:

The assessment process was perceived to be a very positive experience, especially in providing opportunities for a diverse group of people to meet, network, learn, and exchange ideas.

The tools and resources made available to the field-test sites were viewed positively.

The technical assistance provided was seen as being very helpful.

The performance standards instrument provided a useful framework for evaluating the SPH Laboratory System.

The assessments provided many new insights for participants, particularly about roles and services provided by system partners and the importance of a systems approach.

The assessments created some immediate opportunities for partnerships.

The concept of a systems approach resulted in a number of powerful discoveries among partners, particularly that they were part of the system and that achieving optimal system performance depended on their contributions.

Challenges identified through the evaluation included:

Securing resources for planning and conducting the assessment (no state had assessment-specific funding, and each had to find creative ways to cover its costs);

Educating and preparing participants regarding the assessment process, including explanation of the SPH Laboratory System;

Recruitment of a representative group of system partners; and

Moving from the assessment phase to the action phase.

FUTURE OF THE PROGRAM

In operation since 2007, with nearly half of the states having completed or currently preparing for system assessments, the program has demonstrated value and is contributing to laboratory system improvement. Still, the program is in its infancy, and additional work is needed to attract the remaining states and to support the work of system improvement. The project steering committee continues to guide development and enhancement of project components. Leadership from committee members is helping advance adoption of system assessment and improvement activities among colleagues.

L-SIP continues to be a very dynamic program. APHL is leading efforts to define SPH Laboratory System improvement strategies and resources, and has convened a committee of laboratory experts to develop strategies that support improvement practices following initial assessments. Technical assistance calls began in April 2009 to support these activities among the states that have completed performance assessments. Monthly conference calls are being conducted among states to share experiences, successes, and challenges with improvement. The APHL Online Resource Center provides access to a variety of improvement resources, and will capture model practices from states as they are reported.

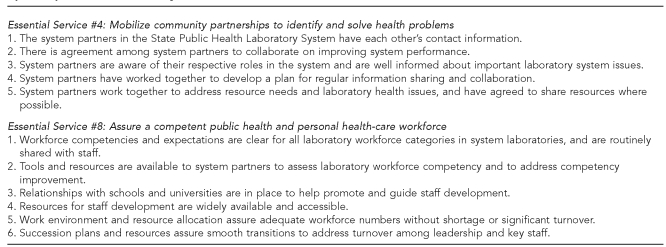

APHL has contracted with M&A to develop a set of basic metrics that describe key elements or capacities that are in place in an SPH Laboratory System functioning at a high (or gold) standard. These key elements or capacities will be developed for each of the Essential Services in the L-SIP assessment. Drafts have been completed and reviewed by the L-SIP Improvement Committee for community mobilization (Essential Service #4) and workforce development (Essential Service #8) (Figure 5). Metrics for the remaining Essential Services are currently being developed. APHL anticipates that these metrics will become instrumental in supporting system improvement and improved practice.

Figure 5.

State Public Health Laboratory System metrics describing optimal performance level by Essential Serviceᵃ

ᵃPublic Health Functions Steering Committee. Public health in America: the 10 essential public health services. Washington: Public Health Service (US); July 1995.

The four-year plan, completed for L-SIP in early 2008, was designed to guide marketing, tool and materials revision, development of new materials, and other aspects of the program. Based on the plan, APHL has made several program enhancements using lessons learned from states' experiences in completing assessments and from the program evaluation conducted in 2008. The organization has increased availability of supportive materials and resources, particularly through its SharePoint site, and is revising the L-SIP User's Guide to incorporate new information about system improvement. In 2009, APHL received a small grant from CDC to provide mini-grants to states that were planning to begin assessments, and to provide paper copies of the tool and other materials. These new resources are helping overcome some of the financial challenges that have delayed L-SIP participation for some states. Finally, APHL has initiated an electronic L-SIP newsletter for its membership, providing information about lessons learned by participating states and how to participate.

The Public Health Agency of Canada identified the APHL assessment instrument as an international standard and contracted with APHL in 2008 to lead the development of instruments and processes for system performance assessment and improvement for the national and provincial PHLs in Canada. The work completed to date is helping inform improvements to the APHL instrument that is scheduled for revision in 2011.

APHL is committed to continual improvement of L-SIP, its tools, processes, and materials. The organization is currently conducting an evaluation of the project among its members. Additionally, the Laboratory Systems and Standards Committee of APHL conducts a biennial survey to evaluate the degree to which state laboratories are providing or assuring services within the framework of the 11 Core Functions.

What is clear is that the challenges faced by state PHLs and their partners are increasingly complex. Lessons learned from experiences such as the 2001 anthrax attacks, 9/11, and the H1N1 pandemic have demonstrated that public safety responses must include an active, well-organized public health system with a highly effective laboratory system. Moreover, effective laboratory response depends on state laboratories working closely with their system partners. Responses to public emergencies are only as effective as the weakest system link. Canada's commitment to PHL system improvement, driven by its experience with severe acute respiratory syndrome (SARS), further demonstrates that this work is compelling and that greater and continuing collaboration among system partners at local, state, national, and international levels will be required.

Further, financial resources supporting services by state PHLs and most, if not all, of their partner organizations are insufficient and increasingly scarce. Collaboration among laboratory system partners may prove to be an important strategy for sustainability of core services.

These challenges will need to be addressed for states to adopt the assessment-improvement-reassessment-improvement cycle as a routine component of laboratory system practice. The longer-term vision of L-SIP is for states to incorporate these activities with their partners into a regular, ongoing process.

CONCLUSIONS

CDC has held a clear vision for, and provided continuing leadership to, the public health system assessment programs it has supported, and it recognizes their importance. Continued resource support from CDC is also central to sustaining the APHL program to advance SPH Laboratory System improvement. The vision for the program, together with the gains already made, justifies the continued investment.

APHL is to be acknowledged for its vision, leadership, continued action, and support in this critical work. Its commitment to improving the performance of SPH Laboratory Systems will help states become better equipped to address new and emerging public health threats with their system partners and will improve laboratory practice.

Footnotes

The work described in this article was supported by Cooperative Agreement #CCU303019 from the Centers for Disease Control and Prevention (CDC). The findings and conclusions in this article are those of the authors and do not necessarily represent the official position of CDC or the Agency for Toxic Substances and Disease Registry.

REFERENCES

1. Public Health Functions Steering Committee. Public health in America: the 10 essential public health services. Washington: Public Health Service (US); July 1995.

2. Institute of Medicine. The future of the public's health in the 21st century. Washington: National Academies Press; 2002.

3. Centers for Disease Control and Prevention (US). National Public Health Performance Standards Program: assessment instruments 2008 to present, current (version 2) [cited 2009 Oct 23]. Available from: URL: http://www.cdc.gov/od/ocphp/nphpsp/TheInstruments.htm.

4. Witt-Kushner J, Astles JR, Ridderhof JC, Martin RA, Wilcke B Jr, Downes FP, et al. Core functions and capabilities of state public health laboratories: a report of the Association of Public Health Laboratories. MMWR Recomm Rep 2002;51(RR-14):1-8.

5. Association of Maternal and Child Health Programs. Capacity Assessment for State Title V (CAST-5) [cited 2009 Oct 23]. Available from: URL: http://www.amchp.org/MCH-Topics/A-G/CAST5/Pages/default.aspx.