Abstract

This study examined a community–university partnership model for sustained, high-quality implementation of evidence-based interventions. In the context of a randomized study, it assessed whether implementation quality for both family-focused and school-based universal interventions could be achieved and maintained through community–university partnerships. It also conducted exploratory analyses of factors influencing implementation quality. Results revealed uniformly high rates of both implementation adherence—averaging over 90%—and of other indicators of implementation quality for both family-focused and school-based interventions. Moreover, implementation quality was sustained across two cohorts. Exploratory analyses failed to reveal any significant correlates for family-intervention implementation quality, but did show that some team and instructor characteristics were associated with school-based implementation quality.

The extant literature clearly indicates the need to evaluate the quality of implementation of preventive interventions, particularly those that are evidence-based (Durlak, 1998; Goggin, Bowman, Lester, & O’Toole, 1990; Greenberg, Domitrovich, Graczyk, & Zins, 2001; Mihalic & Irwin, 2003). Although there is an expanding set of evidence-based interventions (hereafter EBIs) shown to be efficacious in reducing youth problem behaviors and promoting positive youth development, low-quality implementation frequently diminishes positive outcomes (Backer, 2003; Domitrovich & Greenberg, 2000; Fixsen, Naoom, Blasé, Friedman, & Wallace, 2005). Quality monitoring is especially important when implementation occurs under real-world conditions, guided by community-based organizations or partnerships (Dzewaltowski, Estabrooks, Klesges, Bull, & Glasgow, 2004). Sustained, high-quality implementation by communities is essential to achieving greater public health impact of EBIs (Glasgow, Klesges, Dzewaltowski, Bull, & Estabrooks, 2004; Lamb, Greenlick, & McCarty, 1998; Spoth & Greenberg, 2005).

Because of the importance of sustained, quality implementation of EBIs by community-based partnerships, there is a need to systematically evaluate the partnership models guiding such implementation (Spoth & Greenberg, 2005). There is also a need to study factors that potentially influence sustained, quality, community-based implementation of EBIs, in order to better understand how to improve implementation systems (Fixsen et al., 2005; Greenberg et al., 2001). The present study addresses these needs through three objectives. The first objective is to examine adherence rates and other implementation quality ratings achieved through a community-based model for EBI implementation. The second objective, which addresses the sustainability of the partnership model, is to determine how well implementation quality was maintained over time. The third objective is to explore whether community team and intervention instructor factors were correlated with implementation quality for family-focused and school-based EBIs.

EARLIER EMPIRICAL WORK

Spoth, Guyll, Trudeau, and Goldberg-Lillehoj (2002) examined the implementation quality of universal preventive interventions implemented by community–university partnerships. They presented data from two studies: one in which 22 schools were randomly assigned either to a universal, family-focused intervention or to a minimal contact control group, and another in which 36 schools were assigned to a multi-component universal intervention (family-focused and school-based), a school-based intervention alone, or a minimal contact control. Both studies used independent observer-rated implementation adherence scores, consistent with data supporting the higher validity of observer-rated measures (Lillehoj, Griffin, & Spoth, 2004). Most notably, the findings of Spoth et al. (2002) highlight the benefits of community–university partnerships in achieving high levels of intervention adherence. The present study extends this work by examining implementation quality with multiple quality indicators, evaluating whether implementation quality was maintained over time, and exploring a range of factors potentially correlated with implementation quality.

CORRELATES AND PREDICTORS OF IMPLEMENTATION QUALITY

A report on a large-scale study of the implementation of eight different EBIs in 42 sites across the United States (Mihalic & Irwin, 2003) notes that knowledge about factors influencing implementation quality is very limited, such as how quality might be influenced by the nature and structure of organizational factors and of technical assistance. Because so little is known, there is insufficient empirically based information to guide implementers in transporting programs to real-world settings (Mihalic & Irwin, 2003, p. 2). This lack of information and guidelines is especially acute when a local community partnership is the organizational center of an implementation system.

Relevant conceptual and empirical literature guided the selection of the putative correlates analyzed in this study. Several factors similar to those described in the comprehensive reviews of Fixsen et al. (2005) and Greenberg et al. (2001) were examined, with a focus on organizational or team supports for implementation and on instructor characteristics.

Team Factors

Consistent with the limited evaluation of community-based prevention partnership teams in general, our literature search uncovered no investigations of how community team factors specifically influence the implementation quality of universal EBIs (Spoth & Greenberg, 2005). There is, however, an emerging literature on team-related factors that influence how effectively teams perform a range of tasks, such as conducting community improvement or intervention projects (Green, 2001; Green & Kreuter, 2002; Greenberg, Feinberg, Osgood, & Gomez, in press; Hallfors, Cho, Livert, & Kadushin, 2002; Kegler, Steckler, Malek, & McLeroy, 1998; Kreuter, Lezin, & Young, 2000; Roussos & Fawcett, 2000). Among the partnership team characteristics potentially associated with implementation quality, several were chosen for the present study: team effectiveness (indicating cohesive, task-focused efforts and the quality of partnership leadership), team meeting quality, and team attitudes regarding prevention. Selection of these variables was also supported by an earlier study of substance abuse prevention partnerships that suggested an association between limited cohesion in partnership functioning and poorly organized implementation work (Hallfors et al., 2002). Therefore, partnership support for cohesive and task-focused efforts was considered an important correlate to evaluate.

Review of the dissemination literature on factors influencing implementation quality in real-world settings (e.g., Mihalic, Fagan, Irwin, Ballard, & Elliott, 2002) also suggested that the technical assistance (TA) received by teams is associated with implementation quality. For example, Mihalic and Irwin (2003) found that the most consistently important factor in implementation success was the quality of ongoing TA. Thus, it was expected that effective TA collaboration, as indicated both by the team’s responsiveness to TA and by high-quality TA communication, would correlate positively with implementation quality, as would the frequency of TA requests.

Instructor Characteristics

Several studies have indicated that instructor or teacher characteristics influence the implementation of school-based programs (Fagan & Mihalic, 2003; Greenberg et al., 2001; Kegler et al., 1998). For the school-based interventions in the current study, it was hypothesized that instructor affiliation (whether or not the instructor was employed by the sponsoring school) would be associated with implementation quality. That is, instructors employed by the school were expected to have a stronger sense of program ownership and more experience implementing similar programs, and thus to implement with higher quality. It also was considered important to examine instructor lecturing, the proportion of time instructors used lecture-like techniques. All of the EBIs employed in this study required training for prospective instructors that emphasized teaching techniques other than lecturing, including interactive discussion or demonstration and role play (skills practice with feedback). Higher levels of lecturing were therefore considered inconsistent with quality implementation, and it was hypothesized that the percentage of total instructional time devoted to lecturing would be inversely related to the implementation quality variables.

METHOD

Community Selection and Assignment

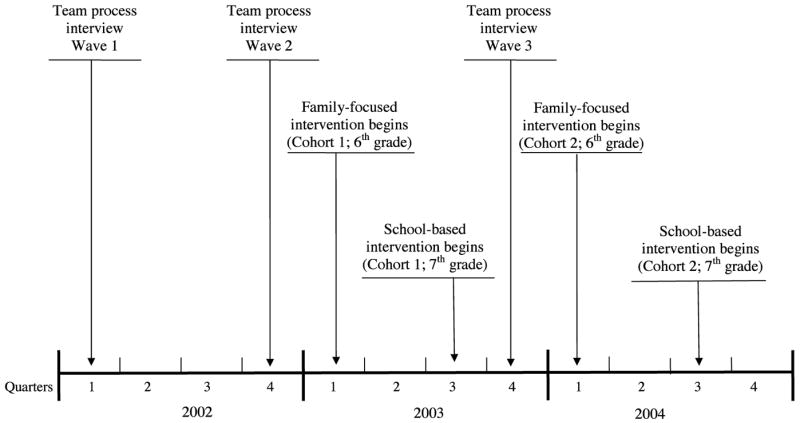

The project recruited 28 school districts from two states (Iowa and Pennsylvania) and used a cohort sequential design involving two cohorts (hereafter Cohort 1 and Cohort 2). Initial eligibility criteria for communities were (a) school district enrollment from 1,300 to 5,200 and (b) at least 15% of the student population eligible for free or reduced-cost school lunches. Communities in which over half of the residents were either employed by or attending a college or university were excluded from the study, as were communities involved in other university-affiliated prevention research projects with youth. Communities were matched on school district size and geographic location and randomly assigned to the partnership intervention or to the “normal programming” control condition. During the first year, two communities (one in each state) withdrew and were replaced. The 28 communities consisted of a variety of rural towns and small cities across the two states; based on the 2000 census, community population ranged from 6,975 to 44,510. Community and participant recruitment procedures are described in detail elsewhere (Greenberg, Feinberg, & Meyer-Chilenski, 2005; Spoth, Clair, Redmond, Shin, & Greenberg, 2005). The participating universities’ Institutional Review Boards approved the study before recruitment began. Each of the two successive cohorts included parents and their children, who were in the sixth grade when they began their involvement with the study: Cohort 1 in 2003 and Cohort 2 in 2004. A timeline of key study procedures is provided in Figure 1.

Figure 1. Study Timeline.ᵃ

ᵃImplementation assessments of the family-focused and school-based interventions in both Cohort 1 and Cohort 2 were completed by observers during each of the four implementations of the interventions. The family-focused interventions were completed in the 2nd Quarter of 2003 for Cohort 1 and in the 1st Quarter of 2004 for Cohort 2. The school-based interventions were completed in the 2nd Quarter of 2004 for Cohort 1 and in the 2nd Quarter of 2005 for Cohort 2.
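The eligibility screen and matched random assignment described under Community Selection and Assignment can be sketched in Python as follows. This is a minimal illustration rather than the study's actual procedure: the `District` record, its field names, the example data, and the operationalization of matching (pairing districts adjacent in enrollment within the same state, then randomizing within each pair) are all hypothetical assumptions.

```python
import random
from dataclasses import dataclass


@dataclass
class District:
    """Hypothetical record for one candidate school district."""
    name: str
    state: str
    enrollment: int
    pct_free_lunch: float          # % of students eligible for free/reduced-cost lunch
    pct_college_affiliated: float  # % of residents employed by or attending a college
    in_other_prevention_study: bool


def is_eligible(d: District) -> bool:
    """Apply the stated inclusion and exclusion criteria."""
    return (
        1300 <= d.enrollment <= 5200
        and d.pct_free_lunch >= 15.0
        and d.pct_college_affiliated <= 50.0  # excluded if over half
        and not d.in_other_prevention_study
    )


def matched_pair_assignment(districts, seed=0):
    """Pair eligible districts within state by enrollment, then randomize each pair.

    Assumption: matching is operationalized as pairing districts that are adjacent
    in enrollment within the same state; an unpaired leftover stays unassigned.
    """
    rng = random.Random(seed)
    by_state = {}
    for d in districts:
        if is_eligible(d):
            by_state.setdefault(d.state, []).append(d)

    assignment = {}
    for members in by_state.values():
        members.sort(key=lambda d: d.enrollment)
        for i in range(0, len(members) - 1, 2):
            pair = [members[i], members[i + 1]]
            rng.shuffle(pair)  # random order decides which member gets the partnership
            assignment[pair[0].name] = "partnership"
            assignment[pair[1].name] = "control"
    return assignment


# Hypothetical candidates: C is too large, D is below the lunch threshold
candidates = [
    District("A", "IA", 1500, 20.0, 5.0, False),
    District("B", "IA", 1600, 30.0, 2.0, False),
    District("C", "IA", 6000, 40.0, 1.0, False),
    District("D", "PA", 2500, 10.0, 1.0, False),
]
assignment = matched_pair_assignment(candidates)
```

Seeding `random.Random` makes the allocation reproducible, which is convenient when an assignment needs to be audited later.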

Partnership Model

The PROSPER model (PROmoting School-community-university Partnerships to Enhance Resilience) is a three-component community–university partnership model that includes community teams, university Extension linking agents, and university researchers (see Spoth & Greenberg, 2005; Spoth, Greenberg, Bierman, & Redmond, 2004). The strategic community teams were designed to be relatively small and to have focused intervention goals. Each community team was co-led by a staff person based in the Land Grant University Extension System and a public school staff person. The Extension-based persons, typically called Extension Agents or Extension Educators, serve an outreach function for the university, disseminating information and programs generated by university-based research. As co-leaders of the PROSPER community teams, they serve as agents who link local team members with resources in the community and with local stakeholders who have an interest in evidence-based prevention programming.

The county Extension staff person and the local public school co-leader recruited other community team members, including health and social service providers, parents, and youth. Following team formation, local team activities included selecting a universal family-focused program from a menu of three EBIs. All teams chose the Strengthening Families Program: For Parents and Youth 10–14 as their family-focused intervention and then recruited families. In the subsequent year, teams were presented with a menu of three school-based EBIs and asked to select one of them. During program selection, recruitment, and implementation, local teams received TA from an intermediate-level team of prevention coordinators (PCs) based in the university Extension system. The PROSPER model and its various functional roles are described more thoroughly in Spoth et al. (2004).

It is important to note that this partnership model represents a hybrid of two models. The first model is one in which intervention implementation is entirely conducted by community volunteers without any direct or indirect scientist involvement. The second model is one in which research staff, supervised by university-based scientists, is more directly involved. The hybrid model in the present study requires that the community teams have primary responsibility for intervention implementation, but also provides them with ongoing TA and support from PCs who, in turn, receive support from and are overseen by university-based prevention scientists.

Family Intervention

The Strengthening Families Program: For Parents and Youth 10–14 (SFP 10–14) (Molgaard, Kumpfer, & Fleming, 1997) is based upon empirically supported family risk and protective factor models (DeMarsh & Kumpfer, 1986; Kumpfer, Molgaard, & Spoth, 1996; Molgaard, Spoth, & Redmond, 2000). The long-range goal of SFP 10–14 is to reduce youth substance use and other problem behaviors. Intermediate goals include the enhancement of parental skills in nurturing, limit-setting, and communication, as well as youth prosocial and peer resistance skills.

The seven SFP 10–14 program sessions were conducted once each week for 7 consecutive weeks when the youth were in the sixth grade. Each session included a separate, concurrent one-hour parent and youth skills-building curriculum, followed by a one-hour conjoint family curriculum during which parents and youth practiced skills learned in their separate sessions.

The SFP 10–14 program sessions were offered in local community facilities after school hours. Each session required three facilitators, one for the parent session and two for the youth session. All three facilitators offered support and assistance to all family members and modeled appropriate skills during the family session. A detailed description of program content can be found at www.extension.iastate.edu/sfp/.

School Interventions

All school-based interventions, like the family-focused intervention, have the long-range goals of reducing youth substance use and other problem behaviors. Their shorter-range goals (e.g., skill development), which are expected to mediate substance use and other problem behavior outcomes, are specified in the individual program descriptions below.

Life Skills Training

Life Skills Training (LST; Botvin, 1996, 2000) is a universal preventive intervention program based on social learning theory (Bandura, 1977) and problem behavior theory (Jessor & Jessor, 1977); it was selected by five of the 14 community teams. The primary goals of LST are to promote skill development (e.g., peer resistance, self-management, general social skills) and to provide information concerning the avoidance of substance use. Students are trained in the various LST skills through interactive teaching techniques, homework exercises, and out-of-class behavioral rehearsal. Teaching and skill development are accomplished through 15 program lessons (Botvin, Baker, Renick, Filazzola, & Botvin, 1984). All five teachers who implemented the seventh-grade program were trained in a 2-day workshop. The program was conducted during 40- to 45-minute classroom periods. Sessions were offered in a variety of scheduling formats, ranging from once per week to 5 days a week.

Project ALERT

Based on the social influence model of prevention, the Project ALERT program integrates three theories of behavior change: the health belief model, which focuses on cognitive factors that motivate health behavior (Rosenstock, 1974; Rosenstock, Strecher, & Becker, 1988); the social learning model, which emphasizes social norms and significant others as key determinants of behavior (Bandura, 1985); and the self-efficacy theory of behavior change, which maintains that the belief that one can accomplish a task is essential to effective action (Bandura, 1977). The Project ALERT program attempts to (a) change students’ beliefs about substance use norms; (b) change students’ beliefs about the social, emotional, and physical consequences of substance use; (c) help students identify and resist prosubstance use pressures from parents, peers, the media, and others; and (d) build resistance self-efficacy. Project ALERT uses interactive teaching methods, such as question-and-answer techniques and small-group activities, which appear to be crucial to the effectiveness of this type of program (Tobler, 1986). The 11-session program was conducted during regular classroom periods when students were in seventh grade. Sessions were offered in a variety of scheduling formats, ranging from once each week to 5 days a week.

All Stars

All Stars is a problem behavior prevention/character education program that is based on social learning theory (Bandura, 1977) and problem behavior theory (Jessor & Jessor, 1977). All Stars has four objectives: (a) to influence students’ perceptions about substance use and violence, (b) to increase the accuracy of students’ beliefs about peer norms regarding substance use and violence, (c) to have students make a personal commitment to avoid substance use and violent behavior, and (d) to increase student school bonding (Hansen, 1996). The All Stars program is interactive, including debates, games, and general discussion, with homework designed to increase parent involvement and parent–child interaction (Harrington, Giles, Hoyle, Feeney, & Yungbluth, 2005). The program is designed to reinforce positive qualities typical of adolescents at this age through strengthening five specific qualities vital to achieving preventive effects: developing positive ideals and future aspirations, establishing positive norms, building strong personal commitments, promoting bonding with school and community organizations, and promoting positive interactions with parents. The 13-session program was conducted during regular classroom periods when students were in seventh grade. Scheduling formats ranged from once per week to 5 days a week.

Data Sources for Team Measures

The measures of team characteristics were derived from two different sources: local team members, including team co-leaders, and the PCs.

Local team members participated in team process interviews at the time of team formation (Wave 1 data) and 6 months later (Wave 2—prior to initiating the interventions for Cohort 1) and, again, 12 months later (Wave 3—prior to initiating the interventions for Cohort 2), as shown in Figure 1. To predict Cohort 1 implementation ratings, results from the Wave 2 process interviews were used; for Cohort 2, ratings from process interviews for Wave 2 and Wave 3 were combined. Each team member interview lasted approximately 60 minutes. The sample of local team members at Wave 1 included 120 individuals in 14 communities located in the two states. Other than team leaders, respondents were local volunteers recruited for the PROSPER project intervention teams described earlier.

The number of team member respondents in each community ranged from seven to 13, with a mean of 8.6. Respondents ranged in age from 24 to 59 years (M = 42.7 years). One third of respondents were men. All respondents indicated completing a minimum of a high school education or general equivalency diploma (GED), with 90.2% of the sample having obtained a college degree. The majority of the team members (87.5%) lived in or near the school district that organized the PROSPER intervention team.

Data were also collected from the PCs, who observed the local teams and provided ratings of team effectiveness. The PC ratings were derived from two types of assessments: a biweekly telephone report and a Web-based quarterly report. Biweekly reports were the result of telephone contacts between the PCs and the PROSPER team leaders. These telephone contacts included a set of specific questions assessing team leader perceptions of PROSPER-related activities, such as whether PROSPER meetings had been held, meeting attendance, problems that occurred during the meetings, and whether any TA was requested. The quarterly report was completed by the PCs and included items that assessed a range of local team characteristics.

Measures

Implementation adherence and quality

The present study defined implementation adherence as the proportion of program-specific content and activities in the intervention protocol that was actually delivered to students or their families by providers (i.e., classroom instructors and trained family intervention facilitators). This operational definition required the development and use of evaluation measures that identified specific program components that could be assessed as delivered (Blakely et al., 1987).

Intervention observation

Independent observation of interventions by trained observers was chosen as the measurement method for several reasons. First, less obtrusive assessment methods were unavailable. Although unobtrusive videotaping could conceivably have reduced adverse reactions by the implementing teachers and facilitators to the presence of an observer, the nature of partnership research precludes the kind of researcher-driven control that would have been needed to require that interventions be held in facilities with unobtrusive videotaping capabilities. Rather, the community teams were responsible for finding and scheduling the facilities in which to conduct the interventions; typical locations included schools, churches, and community centers. Also, sending personnel to videotape sessions for later assessment was considered more obtrusive than the presence of observers without videotaping equipment, particularly in school settings. In addition, anecdotal reports from a large number of studies using this type of observation methodology suggest that implementers being observed will, over time, effectively “tune out” the observers. Further, an earlier study supported the predictive validity of the type of observational assessment used in the present study, especially as compared with teacher–facilitator self-report alone (Lillehoj et al., 2004). Importantly, for situations such as those in the current study, reviews of the literature recommend the use of trained, independent observers to assess implementation quality (Dane & Schneider, 1998).

Independent intervention observers were trained in the observation of particular interventions, with a focus on the valid and reliable completion of observation forms. The purpose of the training was to familiarize observers with the preventive intervention, results of prior studies, key program components, and implementation strategies, along with intervention objectives and rationales. Training also provided an opportunity for learning and practicing the skills needed to successfully observe the program. Generally, a single observer was present during a program session or class. However, approximately one-fourth of these sessions or classes were observed by a second observer to assess interrater reliability.
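Table 1 reports interrater reliability as a correlation (r) between observers. A minimal sketch of that computation for the double-observed sessions follows; the paired adherence proportions are invented for illustration, and treating reliability as a Pearson correlation of the two observers' session scores is an assumption based on the table's (r) label.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a sequence with zero variance has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical adherence proportions for five sessions seen by both observers
primary_observer = [0.95, 0.88, 1.00, 0.75, 0.92]
second_observer = [0.93, 0.90, 0.97, 0.70, 0.95]
interrater_r = pearson_r(primary_observer, second_observer)
```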

School-based intervention observation

Two types of implementation assessments were conducted. First, during the teaching of each classroom lesson, observers completed a program lesson-specific process evaluation form. Lesson-specific process evaluation forms were intended to indicate whether each lesson had been implemented as designed and included the assessment of two process components. The first process component pertained to lesson coverage and included implementation of specific content and activities consistent with the program lesson goals and objectives. Observers were asked to review a series of major content-specific goals, objectives, and activities and to indicate whether or not the information was covered (Yes or No). For example, the LST Decision-making Lesson included eight separate objectives and activities (e.g., “Identify everyday decisions,” and “Identify a process for making decisions”) for which observers indicated either Yes or No concerning whether or not the associated material was implemented as specified in the program manual. For the second process component of the evaluation, observers assessed the degree to which students were engaged in the lesson. Student engagement was assessed with four items rating students’ attitudes toward the lesson, the appropriateness of their behavior, their willingness to engage in discussion, and interest in program material and activities. Student engagement items were rated on a scale that ranged from 1 to 4, in which greater values correspond to more engagement.

Observers were trained to complete the lesson-specific implementation assessments during the intervention-specific training. The proportion of specific goals, objectives, and activities covered was calculated for each occasion upon which a lesson was observed. For each community, the school-based implementation adherence measure was then calculated by averaging these proportions across all observed lessons. Ratings of student engagement were treated in a similar fashion to yield community averages for this variable, as well.
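The two-stage averaging described above (a coverage proportion for each observed lesson, then a mean across a community's observed lessons) can be sketched as follows. The input layout is hypothetical, and scoring each lesson's engagement as the mean of its four items before averaging across lessons is an assumption about how engagement was “treated in a similar fashion.”

```python
from statistics import mean


def lesson_adherence(covered):
    """Proportion of a lesson's listed goals, objectives, and activities marked Yes."""
    return sum(covered) / len(covered)


def community_school_scores(observed_lessons):
    """Return (adherence, engagement) for one community.

    observed_lessons: list of dicts, one per observation occasion, with
      'covered'    -> list of bools (Yes/No per listed objective or activity)
      'engagement' -> the four 1-4 student engagement ratings
    """
    adherence = mean(lesson_adherence(o["covered"]) for o in observed_lessons)
    # Assumption: average the four engagement items within a lesson first
    engagement = mean(mean(o["engagement"]) for o in observed_lessons)
    return adherence, engagement


# Hypothetical community with two observed lessons
lessons = [
    {"covered": [True] * 7 + [False], "engagement": [3, 4, 3, 4]},  # 7 of 8 covered
    {"covered": [True] * 6, "engagement": [4, 4, 4, 4]},             # 6 of 6 covered
]
adherence, engagement = community_school_scores(lessons)
```

Here the community's adherence is the mean of 0.875 and 1.0, and its engagement is the mean of 3.5 and 4.0.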

Family intervention observations

Similar to the school-based forms, multiple family-intervention observation forms were developed so that each one corresponded to a specific intervention session. In addition, there were three forms for each session, one each for the youth, parent, and family portions of the session. Each observation form included three sections. The first section pertained to adherence: the observer marked “Yes” or “No” to indicate whether a particular content item was communicated or a specific activity was performed, as prescribed by the intervention. The second section included two items that assessed how actively family members participated in session activities and how much interest they exhibited (0 = Little participation/Not interested, 4 = Active participation/Very interested). The third section entailed rating each facilitator on a number of positive and negative qualities or characteristics, such as “How accepting and friendly was the group leader?” and “To what extent … was [the group leader] unable to deal effectively with questions?” Response options ranged from 0, indicating that the quality or characteristic was not observed (i.e., the value 0 was associated with labels such as Unfriendly and Never for the two items cited), to 4, indicating that the quality was clearly observed (i.e., the value 4 was associated with labels such as Friendly and Frequently for the two items cited). The negatively worded items were reverse scored so that greater values corresponded to more positive facilitator qualities for all items.

Implementation adherence was quantified for each community by calculating the proportion of prescribed content and activities observed in each session and averaging these proportions across the observed sessions. Group participation was computed by averaging responses to the two relevant items and then averaging those values across all sessions within a community. An average facilitator quality score was obtained by averaging the relevant items for each facilitator in each session and then averaging those values across all facilitators and sessions within a community.
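The community-level family scores, including reverse scoring of negatively worded facilitator items, can be sketched as below. This is a minimal illustration under stated assumptions: the data layout and item names are hypothetical, each record stands for one observed session portion, and reverse scoring is taken to be 4 minus the rating on the 0–4 scale.

```python
from statistics import mean

MAX_RATING = 4  # facilitator and participation items were rated 0-4


def reverse_score(value, max_rating=MAX_RATING):
    """Flip a 0-4 rating so that higher always means a more positive quality."""
    return max_rating - value


def facilitator_session_score(ratings, negative_items):
    """Mean of one facilitator's item ratings in one session, after reverse scoring."""
    return mean(
        reverse_score(v) if item in negative_items else v
        for item, v in ratings.items()
    )


def community_family_scores(sessions, negative_items):
    """Return (adherence, group participation, facilitator quality) for one community."""
    adherence = mean(sum(s["covered"]) / len(s["covered"]) for s in sessions)
    participation = mean(mean(s["participation"]) for s in sessions)
    # Pool every facilitator-by-session score, then average within the community
    facilitator = mean(
        facilitator_session_score(f, negative_items)
        for s in sessions
        for f in s["facilitators"]
    )
    return adherence, participation, facilitator


# Hypothetical item names; "unable_with_questions" is negatively worded
NEGATIVE = {"unable_with_questions"}
sessions = [
    {"covered": [True, True, True, False], "participation": [4, 3],
     "facilitators": [{"accepting_friendly": 4, "unable_with_questions": 0},
                      {"accepting_friendly": 3, "unable_with_questions": 1}]},
    {"covered": [True] * 5, "participation": [4, 4],
     "facilitators": [{"accepting_friendly": 4, "unable_with_questions": 0}]},
]
adherence, participation, facilitator = community_family_scores(sessions, NEGATIVE)
```

Pooling facilitator-by-session scores gives sessions with more facilitators more weight; averaging within sessions first would be an equally defensible alternative.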

Team Factors

The Team Effectiveness measure was the average of 16 items from the team process interview concerning perceptions of team cohesion, task orientation, and quality of leadership. Sample items included: “There is a strong sense of belonging in this team”; “The team leadership has a clear vision for the team”; and “There is strong emphasis on practical tasks in this team.” A second measure, Meeting Quality, was derived from an item in the PC reports based on team leaders’ ratings of average meeting quality on a 5-point scale ranging from 1 = Poor to 5 = Excellent. This item was averaged across four assessments for Cohort 1 and across eight assessments for Cohort 2; as with the team process interviews, data from all applicable assessments were combined for each cohort’s analyses. The final team factor measure, Attitudes Regarding Prevention, was a 2-item composite index from the team process interview. An illustrative item was “Violence prevention programs are a good investment.” Both items were rated on a 4-point scale ranging from 1 = Not at all True to 4 = Very True.

Two team-related measures were used to assess the nature and quality of the ongoing TA received by the teams. The Effective TA Collaboration measure was calculated by averaging seven items reported quarterly by the PCs. These items included “Cooperation with technical assistance” and “Timeliness of reports, applications, materials,” each rated on a 7-point scale from 1 = Poor to 7 = Excellent. The second measure, Frequency of TA Requests, was based on the number of TA requests recorded on the PC biweekly reports.
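Several of the team-level correlates can be assembled as in the following sketch. The function and argument names are hypothetical. The 16-item Team Effectiveness scale, the 1–5 Meeting Quality ratings averaged over four (Cohort 1) or eight (Cohort 2) assessments, the seven quarterly TA collaboration items, and counting TA requests from the biweekly reports follow the text; averaging items within each respondent before averaging across respondents, and pooling Wave 2 and Wave 3 respondents for Cohort 2, are assumptions.

```python
from statistics import mean

# Meeting Quality assessments combined per cohort, as stated in the text
MEETING_ASSESSMENTS = {1: 4, 2: 8}


def team_measures(cohort, respondent_items, meeting_ratings,
                  ta_collaboration_reports, ta_requests_per_report):
    """Build one team's correlate scores for one cohort (hypothetical layout).

    respondent_items: one list of 16 Team Effectiveness ratings per interviewed
        member; for Cohort 2, Wave 2 and Wave 3 respondents are assumed pooled
    meeting_ratings: 1-5 Meeting Quality ratings from the PC reports
    ta_collaboration_reports: one list of seven 1-7 PC ratings per quarterly report
    ta_requests_per_report: TA request counts from each PC biweekly report
    """
    if any(len(items) != 16 for items in respondent_items):
        raise ValueError("each respondent should contribute 16 Team Effectiveness items")
    if len(meeting_ratings) != MEETING_ASSESSMENTS[cohort]:
        raise ValueError("unexpected number of Meeting Quality assessments")
    if any(len(items) != 7 for items in ta_collaboration_reports):
        raise ValueError("each quarterly report should contribute seven TA items")
    return {
        # Assumption: average items within each respondent, then across respondents
        "team_effectiveness": mean(mean(items) for items in respondent_items),
        "meeting_quality": mean(meeting_ratings),
        "effective_ta_collaboration": mean(mean(r) for r in ta_collaboration_reports),
        "ta_request_frequency": sum(ta_requests_per_report),
    }


# Hypothetical Cohort 1 team
cohort1_team = team_measures(
    cohort=1,
    respondent_items=[[3] * 16, [4] * 16],
    meeting_ratings=[4, 5, 3, 4],
    ta_collaboration_reports=[[6] * 7, [5] * 7],
    ta_requests_per_report=[0, 2, 1],
)
```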

Instructor Factors

Instructor affiliation: Agency personnel vs. school teacher

In the school-based interventions, the instructor could either be a member of a social services agency or a teacher at the school. In the analyses, the affiliation of agency personnel was coded as “1” and that of school teachers was coded as “2”. It should be noted that the instructor affiliation variable is relevant only to the school-based interventions.

Instructor lecturing

Observers of the school-based interventions reported the percentage of lesson time spent using each of four teaching techniques: lecture, discussion, demonstration, and practice. The amount of instructor lecturing is the percentage of the lesson spent in lecture. As with the instructor affiliation variable, the instructor lecturing variable is only relevant to the school-based interventions.

Control Variable: Supportive District Policy

As indicated in the report by Fixsen et al. (2005), supportive administrative policy is a contextual, organizational factor that can indirectly influence implementation quality. In the current study, a measure of this construct was used to control for between-district variance and thereby to evaluate whether this aspect of the community context was important in understanding the associations of the team and instructor variables with the implementation quality outcomes. Supportive district policy was assessed by averaging two items from a school resource interview completed by a school district representative. For example, one item asked “In thinking about your school’s policy on alcohol, how much emphasis is placed on prevention?” The items used a 4-point scale ranging from 1 = None to 4 = A lot.

Analytic Procedures

Initially, descriptive analyses of the observation-based implementation adherence and quality measures were conducted, and interrater agreement was assessed. To address the sustainability of implementation over time, paired-sample t-test comparisons of Cohort 1 and Cohort 2 were made for each community to determine whether implementation quality changed over time.
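The paired-sample t statistic compares the mean of the matched differences with its standard error. The sketch below assumes only two equal-length lists of matched Cohort 1 and Cohort 2 scores. Exactly which units were paired is not specified in this section, and the function name `paired_t` and the example scores are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(cohort1, cohort2):
    """Return (t, df) for a paired-sample t test on matched Cohort 1 / Cohort 2 scores."""
    if len(cohort1) != len(cohort2) or len(cohort1) < 2:
        raise ValueError("need two equal-length lists with at least two matched pairs")
    diffs = [c2 - c1 for c1, c2 in zip(cohort1, cohort2)]  # Cohort 2 minus Cohort 1
    se = stdev(diffs) / sqrt(len(diffs))  # standard error of the mean difference
    if se == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean(diffs) / se, len(diffs) - 1


# Hypothetical matched adherence proportions for four units in each cohort
t, df = paired_t([0.90, 0.92, 0.88, 0.95], [0.91, 0.90, 0.89, 0.94])
print(f"t({df}) = {t:.2f}")
```

A p-value then comes from the t distribution with `df` degrees of freedom. In SciPy, `scipy.stats.ttest_rel(cohort1, cohort2)` runs the same test in one call. Its statistic has the opposite sign because it differences the first argument minus the second.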

Following the descriptive analyses, two additional steps were taken to examine correlates of both implementation adherence and implementation quality. The first step entailed examination of the full range of hypothesized correlates. That is, bivariate correlations with the implementation ratings were calculated for each of the hypothesized correlates previously described. The second step focused on exploring whether observed relationships were meaningfully changed by controlling for the supportive district policy variable. To this end, the partial correlations between the correlates and the implementation outcome variables were computed, controlling for supportive district policy.
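A first-order partial correlation can be computed directly from the three pairwise correlations among the correlate (x), the implementation outcome (y), and the control variable (z, here supportive district policy). The sketch uses the standard textbook formula. The variable names and input values are hypothetical and do not reproduce any entry in Table 2.

```python
from math import sqrt


def partial_r(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y with z held constant."""
    denom = sqrt((1 - r_xz**2) * (1 - r_yz**2))
    if denom == 0:
        raise ValueError("z is perfectly correlated with x or y")
    # Remove the part of r_xy attributable to z, then rescale
    return (r_xy - r_xz * r_yz) / denom


# Hypothetical inputs: x = a team factor, y = adherence, z = supportive district policy
print(round(partial_r(0.40, 0.20, -0.30), 2))
```

When z is uncorrelated with both x and y, the partial correlation equals the bivariate one. Controlling for z therefore changes a result only to the extent that district policy is related to both the correlate and the outcome.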

RESULTS

Implementation Quality

Program adherence

As noted in the Method section, all communities selected SFP 10–14 as the family-focused intervention. Results presented in Table 1 show that adherence to SFP 10–14 was high, with over 90% of prescribed program content covered in each session, on average. Communities exhibited similarly high adherence for each of the school-based interventions, with overall adherence averaging more than 90% and ranging from 87% to 94%.

Table 1.

Observer Ratings of Implementation Adherence and Quality of Facilitation

| Program | Implementation variable | Cohort | Nᵃ | M | SD | Range | Interrater reliability (r)ᵇ |
|---|---|---|---|---|---|---|---|
| SFP 10–14 | Adherence | 1 | 411 | 90.9% | 9.2% | 48%–100% | .86 (n = 131) |
| | | 2 | 350 | 90.8% | 10.9% | 24%–100% | .76 (n = 106) |
| | Group participation | 1 | 328 | 3.64 | .53 | 1.50–4.00 | .66 (n = 101) |
| | | 2 | 283 | 3.58 | .56 | 2.00–4.00 | .63 (n = 90) |
| | Facilitation quality | 1 | 894 | 3.76 | .34 | 1.89–4.00 | .71 (n = 271) |
| | | 2 | 754 | 3.71 | .40 | 1.25–4.00 | .66 (n = 229) |
| All Stars | Adherence | 1 | 67 | 94.4% | 9.3% | 47%–100% | .94 (n = 22) |
| | | 2 | 64 | 87.4% | 22.5% | 0%–100% | .67 (n = 22) |
| | Student engagement | 1 | 67 | 3.49 | .59 | 1.75–4.00 | .46 (n = 19) |
| | | 2 | 64 | 3.60 | .53 | 1.50–4.00 | .68 (n = 21) |
| Life Skills Training | Adherence | 1 | 41 | 88.8% | 14.6% | 33%–100% | .84 (n = 11) |
| | | 2 | 48 | 88.5% | 16.4% | 27%–100% | .94 (n = 6) |
| | Student engagement | 1 | 41 | 3.37 | .51 | 1.75–4.00 | .71 (n = 9) |
| | | 2 | 48 | 3.49 | .63 | 1.75–4.00 | .90 (n = 6) |
| Project Alert | Adherence | 1 | 67 | 88.2% | 14.6% | 25%–100% | .24 (n = 25) |
| | | 2 | 50 | 89.3% | 10.2% | 64%–100% | .70 (n = 14) |
| | Student engagement | 1 | 67 | 3.39 | .50 | 1.75–4.00 | .43 (n = 25) |
| | | 2 | 50 | 3.31 | .53 | 2.00–4.00 | .23 (n = 13) |
| All school-based programsᶜ | Adherence | 1 | 175 | 90.7% | 13.1% | 25%–100% | .81 (n = 58) |
| | | 2 | 162 | 88.3% | 17.6% | 0%–100% | .69 (n = 42) |
| | Student engagement | 1 | 175 | 3.42 | .54 | 1.75–4.00 | .55 (n = 53) |
| | | 2 | 162 | 3.48 | .57 | 1.50–4.00 | .55 (n = 40) |

Note. Cohort 1 and Cohort 2 values are shown in separate rows. SFP 10–14 = Strengthening Families Program: For Parents and Youth 10–14.

ᵃ Values of N in this column correspond to the number of ratings on which the mean, standard deviation, and range are based for each variable. The ratings used for these statistics were those made in sessions observed by a single observer. For SFP 10–14, there was one rating of adherence per session observed. The SFP 10–14 observation forms did not include group participation items for all sessions, resulting in an average of fewer than one rating per observed session. Observation of SFP 10–14 sessions resulted in facilitation quality items being rated once for parent sessions (because they had a single facilitator), twice for youth sessions (because they had two facilitators), and three times for family sessions (because they had one parent and two youth facilitators). The school-based programs yielded one rating of adherence and one rating of student engagement for each session observed.

ᵇ Interrater reliability values reflect bivariate correlations between ratings provided by two observers making ratings of the same session. The values of n in this column correspond to the number of rating pairs on which each correlation is based. See footnote a for the number of ratings produced per session for each of the variables from the SFP 10–14 and school-based programs.

ᶜ The school-based programs included All Stars, Life Skills Training, and Project Alert.
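The interrater reliability values in Table 1 are bivariate (Pearson) correlations between two observers' ratings of the same sessions. A minimal sketch of that computation follows. The function name `pearson_r` and the example ratings are hypothetical.

```python
from math import sqrt


def pearson_r(ratings_a, ratings_b):
    """Pearson correlation between two observers' ratings of the same sessions."""
    n = len(ratings_a)
    if n != len(ratings_b) or n < 2:
        raise ValueError("need two equal-length rating lists with at least two pairs")
    mean_a = sum(ratings_a) / n
    mean_b = sum(ratings_b) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(ratings_a, ratings_b))
    ss_a = sum((a - mean_a) ** 2 for a in ratings_a)
    ss_b = sum((b - mean_b) ** 2 for b in ratings_b)
    if ss_a == 0 or ss_b == 0:
        raise ValueError("an observer gave identical ratings; r is undefined")
    return cov / sqrt(ss_a * ss_b)


# Hypothetical 1-4 facilitation-quality ratings from two observers of five sessions
observer_1 = [4.0, 3.5, 3.75, 2.5, 4.0]
observer_2 = [3.75, 3.5, 4.0, 2.75, 3.75]
print(round(pearson_r(observer_1, observer_2), 2))
```

Pearson r captures consistency rather than absolute agreement. An observer who rated every session exactly half a point higher than the other would still yield r = 1. Also, when most ratings cluster near the top of the scale, as they do here, the restricted range tends to attenuate r.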

Qualitative aspects: Group participation, student engagement, and facilitator qualities

Participants tended to participate actively in and to be well engaged by the family-focused and school-based interventions, as evidenced by high scores on the relevant measures (shown in Table 1). In addition, the facilitator qualities described in the measures section—which were assessed only for the family-focused program—were also at the upper end of the scale, indicating that facilitators exhibited positive qualities.

Sustained Implementation Quality

Results indicated that quality of implementation generally was stable from Cohort 1 to Cohort 2. Comparing the implementation quality measures across cohorts with paired-sample t tests revealed no differences across time for any of the five implementation outcome measures. Thus, the community teams sustained quality implementation across time, as evidenced by sustained high levels of adherence, facilitator qualities, and intervention groups’ participation and student engagement.

Correlates of Implementation Quality

Table 2 presents the bivariate correlations of the five indicators of implementation quality measured in Cohort 1 and Cohort 2, with the seven factors that could potentially influence implementation quality. In light of the low statistical power resulting from the small number of observations associated with the community-level analysis (N = 14), we applied a significance level of p ≤ .10. For the family-focused SFP 10–14 intervention, none of the factors were associated with adherence for either cohort. With respect to the school-based interventions, greater adherence was associated with less reliance upon lecturing as a teaching technique in Cohort 1, and with higher quality prevention team meetings, positive team attitudes regarding prevention, and effective team collaboration with TA in Cohort 2. Greater student engagement in intervention activities in Cohort 1 was related to positive team attitudes regarding prevention.
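With only 14 communities, a correlation must be fairly large to reach even p ≤ .10. The sketch below inverts the usual t test for a correlation, t = r·√(df / (1 − r²)), to find that threshold. It assumes two-tailed tests, which the text does not state. The critical t values are taken from standard t tables, and the function name `critical_r` is hypothetical.

```python
from math import sqrt


def critical_r(t_crit, df):
    """Smallest |r| that reaches significance, from the critical t for df degrees of freedom."""
    # Solves t = r * sqrt(df / (1 - r**2)) for r
    return t_crit / sqrt(t_crit**2 + df)


N_COMMUNITIES = 14
# Two-tailed alpha = .10 critical t values from standard t tables (assumed two-tailed)
print(round(critical_r(1.782, N_COMMUNITIES - 2), 3))  # bivariate r: df = N - 2 = 12
print(round(critical_r(1.796, N_COMMUNITIES - 3), 3))  # partial r, one control: df = N - 3 = 11
```

Under these assumptions, the thresholds come out near .46 for a bivariate correlation and .48 for a partial correlation. Moderate associations, in the .30s for example, therefore cannot be distinguished from zero at this sample size.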

Table 2.

Correlations Between Implementation Quality Outcomes and Potential Correlates

| Potential correlates | Cohort | Family-focused: Adherence | Family-focused: Group participation | Family-focused: Facilitator quality | School-based: Adherence | School-based: Student engagement |
|---|---|---|---|---|---|---|
| Team factors | | | | | | |
| Team effectiveness | 1 | −.16 | −.01 | −.12 | .03ᵃ | .25 |
| | 2 | .13 | .07 | .13 | .43 | −.00 |
| Meeting quality | 1 | −.03 | −.10 | −.12 | −.20 | .17 |
| | 2 | −.05 | −.04 | −.12 | .58* | .05 |
| Team attitude regarding prevention | 1 | .02 | .10 | −.02 | −.16 | .55* |
| | 2 | .23 | .35 | .43 | .51+ | .29 |
| Effective technical assistance collaboration | 1 | −.10 | −.12 | −.02 | −.03 | .25 |
| | 2 | −.24 | −.29 | −.29 | .57* | .00 |
| Frequency of technical assistance requests | 1 | .27 | .02 | .05 | .04 | −.09 |
| | 2 | .14 | −.11 | −.06 | −.01 | .08 |
| Instructor factors | | | | | | |
| Instructor affiliation | 1 | n/a | n/a | n/a | .43 | .47 |
| | 2 | n/a | n/a | n/a | .40 | .19 |
| Instructor use of lecturing | 1 | n/a | n/a | n/a | −.72** | −.24 |
| | 2 | n/a | n/a | n/a | .25 | −.09 |

Note. Cohort 1 and Cohort 2 values are shown in separate rows. n/a = not applicable; instructor factors were measured only for the school-based interventions.

ᵃ After controlling for supportive district policy, the relationship between team effectiveness and adherence to the school-based program in Cohort 1 became significant, partial r = .54, p ≤ .10. Controlling for supportive district policy did not cause any other nonsignificant (p > .10) relationship to become significant (p ≤ .10), nor did it cause any significant (p ≤ .10) relationship to become nonsignificant (p > .10).

+ p ≤ .10.

* p ≤ .05.

** p ≤ .01.

It should be noted that only five of the 58 correlations attained statistical significance at p ≤ .10, so it would be unwise to place much interpretive weight on these relationships. Moreover, the pattern of relationships shows little consistency: a given variable and implementation outcome may exhibit a strong correlation in one cohort but only a weak, or even reversed, relationship in the other. The small number of communities (N = 14) likely contributed to this instability across cohorts.
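A rough benchmark for "five of 58" is the number of significant results expected by chance alone at p ≤ .10. The sketch treats the 58 tests as independent, which they are not, since each outcome and correlate appears in several tests. The result is therefore only an approximate reference point, not a reanalysis. The function names are hypothetical.

```python
from math import comb


def expected_chance_hits(n_tests, alpha):
    """Expected number of 'significant' results when every null hypothesis is true."""
    return n_tests * alpha


def prob_at_least(k, n_tests, alpha):
    """P(X >= k) for X ~ Binomial(n_tests, alpha), i.e. k or more chance hits."""
    return sum(
        comb(n_tests, i) * alpha**i * (1 - alpha) ** (n_tests - i)
        for i in range(k, n_tests + 1)
    )


# 58 correlations tested at p <= .10, five of which reached significance
print(round(expected_chance_hits(58, 0.10), 1))
print(round(prob_at_least(5, 58, 0.10), 2))
```

Under the independence assumption, about 5.8 significant correlations would be expected by chance, and five or more would occur roughly 70% of the time. This is consistent with the caution expressed above.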

To further explore potential influences on implementation quality, we controlled for supportive district policy in the calculation of partial correlations between each of the correlates and each of the implementation quality outcome variables, and considered whether this analytic procedure meaningfully altered any of the observed bivariate relationships. Through this procedure, all relationships previously significant at p ≤ .10 remained so. In addition, the previously nonsignificant relationship between team effectiveness and adherence to the school-based intervention in Cohort 2 became significant at p ≤ .10, after controlling for supportive district policy, partial r = .54.

DISCUSSION

Implementation Quality Ratings and Maintenance Over Time

Results of this study showed consistently high implementation quality ratings for both the family-focused and school-based universal interventions, with an average adherence rate of over 90% for both types of interventions. Recent investigations of similar programs reported adherence values ranging from 42% to 86% (Elliott & Mihalic, 2004; Fagan & Mihalic, 2003; Gottfredson, 2001). Thus, the adherence rates for the current research are above the upper end of those reported in the relevant literature. It is worth highlighting that the implementation ratings were obtained from trained independent observers, a fact that compares favorably with the alternative of basing implementation ratings on the self-reports of the implementers themselves, as has been done in many studies (Fixsen et al., 2005).

In addition to the high levels of observed adherence, it is notable that results for the other indicators of implementation quality also were quite positive, in that they averaged at the upper end of the Likert-type scales used for their assessment. In the case of the family-focused intervention, observers’ ratings indicated high levels of group participation and facilitation quality. With respect to the school-based interventions, student engagement ratings also averaged in the upper range of their assessment scales for all three interventions.

In addition, all indicators of quality implementation—adherence and otherwise—were maintained across the two cohorts. There were no significant differences between Cohort 1 and Cohort 2 with regard to any implementation measure for either the family-focused or the school-based interventions. It is likely that the factors that positively influenced the implementation quality for Cohort 1 also were operative for Cohort 2.

The current study replicates Spoth et al. (2002) in that it demonstrates the feasibility of community–university partnerships for implementing universal preventive interventions with quality. In addition, the current research extends previous work in several key respects. First, rather than focus solely on adherence, this investigation considered multiple indicators of implementation quality and found that the partnership model yielded consistently positive results on all implementation outcomes. Second, this study examined implementation quality across two cohorts and found support for the idea that the partnership model would produce community teams with the potential to sustain implementation with quality over time. Third, and perhaps most importantly, the current study has greater ecological validity than does the Spoth et al. (2002) study. Specifically, the current study demonstrates the achievement of positive implementation quality outcomes under field conditions that correspond more closely to those encountered in real-world settings. That is, in the present study the implementation system was more completely under the auspices of a community team, with only indirect or peripheral involvement of university researchers with respect to the implementation of the EBIs. Finally, analyses in this article also tested the relationships between the implementation outcomes and a number of potentially relevant variables that had not been evaluated previously. The discussion next turns to a consideration of the results associated with these relationships.

Correlates of Implementation Quality Ratings

As was the case with the earlier Spoth et al. (2002) study, the consistently high averages for, and limited variability of, the implementation-quality outcome variables are likely to have constrained the degree to which relationships between implementation quality and potentially influential factors could be detected. Further, in the present study the need to perform community-level analyses effectively limited the sample size to the number of communities in the intervention condition (i.e., N = 14). Although this is a considerable number of communities to include in an intervention-control prevention trial, it nonetheless provides very limited statistical power to detect what might be important relationships. This point notwithstanding, findings of the exploratory analyses revealed no significant team-related correlates of family-focused intervention implementation quality. Also, there was little consistency with respect to the pattern of relationships among the potential correlates and the school-based intervention implementation quality outcomes, particularly in light of the number of statistical tests that were conducted and the number of statistical findings that would have been expected to occur by chance alone.

Despite the limited number of statistically significant findings, some relationships and patterns merit comment. One potentially meaningful pattern in the correlational findings is the relative strength and consistency of associations between the instructor or team correlates and the school-based implementation quality outcomes, especially adherence, compared with the pattern of correlates for the family-focused implementation outcomes. For the school-based interventions, all correlations with instructor and team variables that were significant in the original analyses remained significant after controlling for supportive district policy, and the partial correlation of Cohort 2 team effectiveness with adherence became significant at p ≤ .10.

The findings are also consistent with conceptual models proposing that implementation quality can be affected by factors at multiple levels, which implies that stronger associations should be expected for factors more proximal to the core components of the implementation system (Fixsen et al., 2005; Greenberg et al., 2001). By this reasoning, for example, instructor characteristics would generally influence implementation quality more strongly than team characteristics would. Consistent with this expectation, the instructor characteristics pertaining to affiliation with the school system and reliance on the lecture technique showed some evidence of influencing adherence to the school-based programs.

Team factors demonstrated relatively weaker relationships with the implementation quality indicators in the case of the family-focused intervention. One possible explanation for this pattern of findings is that the community team variables measured may not have been sufficiently proximal to the implementation quality outcome variables in the case of the family-focused intervention implementation. Indeed, in the conceptual frameworks referenced in the beginning of this article (Fixsen et al., 2005; Greenberg et al., 2001), team factors would be categorized as an organizational component that includes less causally proximal factors expected to influence implementation outcomes only weakly and indirectly. That is, in the case of the family-focused program, the positive influence of the local team on implementation quality may only occur as a result of the team’s selection of effective facilitators. Although selected by the team members, implementers operated with considerable autonomy in the actual delivery of the interventions.

In consideration of the findings pertinent to the school-based interventions, the team characteristics of effectiveness, meeting quality, attitude regarding prevention, and TA collaboration were associated with positive implementation adherence, but only for Cohort 2. It is not entirely clear why team factors were more in evidence in the case of school-based implementation generally, and specifically with respect to Cohort 2. One possibility is that highly functioning teams had more effective school co-leaders who had a direct and positive influence both on the selection of skilled instructors and on program implementation. The relatively stronger Cohort 2 team factor influences are consistent with anecdotal reports from the PCs indicating that the teams gradually became more involved with and supportive of the school-based implementation process over time.

Significance of Findings

The findings from the current study are important in that they serve to expand the knowledge base regarding community team-based models of preventive intervention implementation. In particular, the current study examined the feasibility of a community-university partnership model in which the implementation system is under the direct auspices and guidance of the community team. Such a demonstration is essential, given that community team-based implementation systems are critical for achieving large-scale dissemination of EBIs and, ultimately, for attaining a positive impact on public health. In addition, this research explored a number of program instructor and team factors considered to have the potential to influence implementation quality. Although few strong correlates of implementation quality emerged in these exploratory analyses, it was instructive that the pattern of stronger and more consistent associations differed for the two different types of interventions considered, and that team factors were of possibly greater relevance to the school-based interventions.

Finally, it is important to note that the high, consistent, and sustained levels of implementation quality, combined with the absence of powerful and pervasive influences on the implementation outcomes, support the strength of the PROSPER partnership model. Specifically, the findings of this report indicate that PROSPER partnerships were successful in generating and retaining the support and resources necessary to implement preventive interventions in multiple communities, and that the partnerships produced high-quality interventions that were largely impervious to potential threats to implementation quality.

Acknowledgments

Work on this paper was supported by research grant DA 013709 from the National Institute on Drug Abuse.

References

- Backer TE. Evaluating community collaborations. New York: Springer; 2003.

- Bandura A. Social learning theory. Englewood Cliffs, NJ: Prentice Hall; 1977.

- Bandura A. Social foundations of thought and action: A social cognitive theory. Englewood Cliffs, NJ: Prentice-Hall; 1986.

- Blakely CH, Mayer JP, Gottschalk RG, Schmitt N, Davidson WS, Roitman DB, Emshoff JG. The fidelity-adaptation debate: Implications for the implementation of public sector social programs. American Journal of Community Psychology. 1987;15:253–268.

- Botvin GJ. Substance abuse prevention through Life Skills Training. In: Peters RDeV, McMahon RJ, editors. Preventing childhood disorders, substance abuse, and delinquency. Thousand Oaks, CA: Sage; 1996. pp. 215–240.

- Botvin GJ. Life Skills Training: Promoting health and personal development. Princeton, NJ: Princeton Health Press; 2000.

- Botvin GJ, Baker E, Renick NL, Filazzola AD, Botvin EM. A cognitive-behavioral approach to substance abuse prevention. Addictive Behaviors. 1984;9:137–147. doi: 10.1016/0306-4603(84)90051-0.

- Dane AV, Schneider BH. Program integrity in primary and early secondary prevention: Are implementation effects out of control? Clinical Psychology Review. 1998;18:23–45. doi: 10.1016/s0272-7358(97)00043-3.

- DeMarsh JP, Kumpfer KL. Family-oriented interventions for the prevention of chemical dependency in children and adolescents. In: Griswold-Ezekoye S, Kumpfer KL, Bukowski WJ, editors. Childhood and chemical abuse: Prevention and intervention. Binghamton, NY: Haworth Press; 1986. pp. 117–151.

- Domitrovich CE, Greenberg MT. The study of implementation: Current findings from effective programs that prevent mental disorders in school-aged children. Journal of Educational and Psychological Consultation. 2000;11:193–221.

- Durlak JA. Why program implementation is important. Journal of Prevention and Intervention in the Community. 1998;17:5–18.

- Dzewaltowski DA, Estabrooks PA, Klesges LM, Bull S, Glasgow RE. Behavior change intervention research in community settings: How generalizable are the results? Health Promotion International. 2004;19:235–245. doi: 10.1093/heapro/dah211.

- Elliott DS, Mihalic S. Issues in disseminating and replicating effective prevention programs. Prevention Science. 2004;5:47–52. doi: 10.1023/b:prev.0000013981.28071.52.

- Fagan AA, Mihalic S. Strategies for enhancing the adoption of school-based prevention programs: Lessons learned from the Blueprints for Violence Prevention replications of the Life Skills Training program. Journal of Community Psychology. 2003;31:235–253.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature (FMHI Publication #231). Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005.

- Glasgow RE, Klesges LM, Dzewaltowski DA, Bull SS, Estabrooks P. The future of health behavior change research: What is needed to improve translation of research into health promotion practice? Annals of Behavioral Medicine. 2004;27:3–12. doi: 10.1207/s15324796abm2701_2.

- Goggin ML, Bowman AO’M, Lester JP, O’Toole LJ Jr. Implementation theory and practice: Toward a third generation. Glenview, IL: Scott, Foresman and Co; 1990.

- Gottfredson DC. Schools and delinquency. New York: Cambridge University Press; 2001.

- Green LW. From research to “best practices” in other settings and populations. American Journal of Health Behavior. 2001;25:165–178. doi: 10.5993/ajhb.25.3.2.

- Green LW, Kreuter MW. Fighting back, or fighting themselves? Community coalitions against substance abuse and their use of best practices. American Journal of Preventive Medicine. 2002;23:303–306. doi: 10.1016/s0749-3797(02)00519-6.

- Greenberg MT, Domitrovich CE, Graczyk PA, Zins JE. The study of implementation in school-based prevention research: Theory, research and practice. Report submitted to the Center for Mental Health Services (CMHS) and Substance Abuse and Mental Health Administration (SAMHSA), U.S. Department of Health and Human Services; 2001.

- Greenberg MT, Feinberg ME, Meyer-Chilenski S. Community and team member factors that influence the early phases of local team partnerships in prevention: The PROSPER project. Manuscript submitted for publication; 2005. doi: 10.1007/s10935-007-0116-6.

- Greenberg MT, Feinberg ME, Gomez BJ, Osgood DW. Testing a community prevention focused model of coalition functioning and sustainability: A comprehensive study of Pennsylvania Communities That Care. In: Stockwell T, Gruenewald P, Toumbourou J, Loxley W, editors. Preventing harmful substance use: The evidence base for policy and practice. Chichester, UK: Wiley; 2005. pp. 129–142.

- Hallfors D, Cho H, Livert D, Kadushin C. Fighting back against substance abuse: Are community coalitions winning? American Journal of Preventive Medicine. 2002;23:237–245. doi: 10.1016/s0749-3797(02)00511-1.

- Hansen WB. Pilot test results comparing the All Stars program with seventh grade D.A.R.E.: Program integrity and mediating variable analysis. Substance Use and Misuse. 1996;31:1359–1377. doi: 10.3109/10826089609063981.

- Harrington NG, Giles SM, Hoyle RH, Feeney GJ, Yungbluth SC. Evaluation of the All Stars character education and problem behavior prevention program: Effects on mediator and outcome variables for middle school students. Health Education Research. 2001;28:533–546. doi: 10.1177/109019810102800502.

- Jessor R, Jessor SL. Problem behavior and psychosocial development: A longitudinal study of youth. New York: Academic Press; 1977.

- Kam CM, Greenberg MT, Walls CT. Examining the role of implementation quality in school-based prevention using the PATHS Curriculum. Prevention Science. 2003;4:55–63. doi: 10.1023/a:1021786811186.

- Kegler MC, Steckler A, Malek SH, McLeroy K. A multiple case study of implementation in 10 local Project ASSIST coalitions in North Carolina. Health Education Research: Theory and Practice. 1998;13:225–238. doi: 10.1093/her/13.2.225.

- Kreuter MW, Lezin NA, Young LA. Evaluating community-based collaborative mechanisms: Implications for practitioners. Health Promotion Practice. 2000;1:49–63.

- Kumpfer KL, Molgaard V, Spoth R. The Strengthening Families Program for the prevention of delinquency and drug use. In: Peters RD, McMahon RJ, editors. Preventing childhood disorders, substance abuse, and delinquency. Thousand Oaks, CA: Sage; 1996. pp. 241–267.

- Lamb S, Greenlick MR, McCarty D, editors. Bridging the gap between practice and research: Forging partnerships with community-based drug and alcohol treatment. Washington, DC: National Academy Press; 1998.

- Lillehoj (Goldberg) CJ, Griffin KW, Spoth R. Program provider and observer ratings of school-based preventive intervention implementation: Agreement and relation to youth outcomes. Health Education and Behavior. 2004;31:242–257. doi: 10.1177/1090198103260514.

- Meyer S, Greenberg MT, Feinberg M. Community readiness as a multidimensional construct. Manuscript submitted for publication; 2005. doi: 10.1002/jcop.20152.

- Mihalic S, Fagan A, Irwin K, Ballard D, Elliott D. Blueprints for violence prevention replications: Factors for implementation success. Boulder: University of Colorado, Center for the Study and Prevention of Violence, Institute of Behavioral Science; 2002.

- Mihalic SF, Irwin K. Blueprints for violence prevention: From research to real-world settings: Factors influencing the successful replication of model programs. Youth Violence and Juvenile Justice. 2003;1:307–329.

- Molgaard V, Kumpfer K, Fleming B. The Strengthening Families Program: For Parents and Youth 10–14. Ames, IA: Iowa State University Extension; 1997.

- Molgaard VM, Spoth R, Redmond C. Competency training: The Strengthening Families Program for Parents and Youth 10–14. OJJDP Juvenile Justice Bulletin (NCJ 182208). Washington, DC: U.S. Department of Justice, Office of Justice Programs, Office of Juvenile Justice and Delinquency Prevention; 2000.

- Rosenstock IM. Historical origins of the Health Belief Model. Health Education Monographs. 1974;2:328–335. doi: 10.1177/109019817800600406.

- Rosenstock IM, Strecher VJ, Becker MH. Social learning theory and the Health Belief Model. Health Education Quarterly. 1988;15:175–183. doi: 10.1177/109019818801500203.

- Roussos ST, Fawcett SB. A review of collaborative partnerships as a strategy for improving community health. Annual Review of Public Health. 2000;21:369–402. doi: 10.1146/annurev.publhealth.21.1.369.

- SAS Institute Inc. SAS/STAT User’s Guide, Version 6, 4th ed., Vol. 2. Cary, NC: Author; 1990.

- Spoth R, Clair S, Redmond C, Shin C, Greenberg M. Toward dissemination of evidence-based family interventions: Community-based partnership effectiveness in recruitment. Manuscript submitted for publication; 2005. doi: 10.1037/0893-3200.21.2.137.

- Spoth R, Greenberg M, Bierman K, Redmond C. PROSPER community-university partnership model for public education systems: Capacity-building for evidence-based, competence-building prevention [Special issue]. Prevention Science. 2004;5:31–39. doi: 10.1023/b:prev.0000013979.52796.8b.

- Spoth RL, Greenberg MT. Toward a comprehensive strategy for effective practitioner–scientist partnerships and larger-scale community health and well-being. American Journal of Community Psychology. 2005;35:107–126. doi: 10.1007/s10464-005-3388-0.

- Spoth R, Guyll M, Trudeau L, Goldberg-Lillehoj C. Two studies of proximal outcomes and implementation quality of universal preventive interventions in a community-university collaboration context. Journal of Community Psychology. 2002;30:499–518.

- Spoth R, Redmond C. Study of participation barriers in family-focused prevention: Research issues and preliminary results. International Quarterly of Community Health Education. 1993;13:365–388. doi: 10.2190/69LM-59KD-K9CE-8Y8B.

- Tobler N. Meta-analysis of 143 adolescent drug prevention programs: Quantitative outcome results of program participants compared to a control or comparison group. Journal of Drug Issues. 1986;16:537–567.