Abstract

One of the unintended consequences of the New Public Management (NPM) in universities is often feared to be a division between elite institutions focused on research and large institutions with teaching missions. However, institutional isomorphisms provide counter-incentives. For example, university rankings focus on certain output parameters such as publications, but not on others (e.g., patents). In this study, we apply Gini coefficients to university rankings in order to assess whether universities are becoming more unequal, at the level of both the world and individual nations. Our results do not support the thesis that universities are becoming more unequal. If anything, we predominantly find homogenisation, both in global comparisons and nationally. In a more restricted dataset (using only publications in the natural and life sciences), we find increasing inequality during the 1990s, but not during the 2000s, for those countries that adopted NPM. Our findings suggest that increased output steering from the policy side leads to global conformity to performance standards.

Keywords: Elite universities, Gini coefficients, Inequality, University ranking, New public management, Output performance

Inequality Among Universities

Universities have increasingly been subject to output performance evaluations and ranking assessments (Frey and Osterloh 2002; Osterloh and Frey 2008). Performance indicators are no longer deployed only to assess university departments in the context of specific disciplines, but increasingly also to assess entire universities across disciplinary divides (Leydesdorff 2008). Well-known examples are the annual Shanghai ranking, the Times Higher Education Supplement ranking, and the Leiden ranking, but governments also collect data at the national level about how their academic institutions perform.

Not unlike restaurant or school ratings, university rankings convey the fascination of numbers despite the ambiguity of what is measured. A variety of interests convene around these numbers. Rankings seem to allow university managers to assess their organisation’s performance, but also to advertise good results in order to attract additional resources. These extra resources can be better students, higher tuition fees, more productive researchers, additional funding, wider media exposure, or similar capital increases. Rankings enable policymakers to assess national universities against international standards. Output indicators hold a promise of comparative performance measurement, suggesting opportunities to spur academic institutions to ever higher levels of production at ever reduced cost.

With university rankings, the competitive performance logic of New Public Management (NPM) further permeated into the academic sector (Martin 2010; Schimank 2005; Weingart and Maasen 2007). The complex changes around NPM in the public sector involve a belief in privatisation (or contractual public–private partnerships) and quasi-market competition, an emphasis on efficiency and public service delivery with budgetary autonomy for service providers, with a shift from steering on (monetary) inputs to outputs, through key performance indicators and related audit practices (Power 2005; Hood and Peters 2004). In the academic sector, NPM has expressed itself with reduced state regulation and mistrust of academic self-governance, insisting instead on external guidance of universities through their clients, under a more managerial regime stressing competition for students and research resources—although the precise mix of changes varies between countries (De Boer et al. 2007).

The expansion of performance measurement in the academic sector has incited substantial debate. Obvious objections concern the adequacy of the indicators. For example, the Shanghai ranking was criticised for failing to address varying publication levels among different research fields (Van Raan 2005). In response to this critique, the methodology of the Shanghai ranking was adjusted: one currently doubles the number of publications in the social sciences in order to compensate for differences in output levels between the social and natural sciences. Going even further, the Leiden ranking attempts to fine-tune output measurement by comparing publication output with average outputs per field (Centre for Science and Technology Studies 2008).1

In this article we focus on the debate about the consequences rather than the methodology of output measurement. There is a growing body of research pointing to unwanted side-effects of counting publications and citations for performance measurement. Weingart (2005) has documented cases of ritual compliance, e.g., with journals attempting to boost impact factors with irrelevant citations. Similar effects are the splitting of articles to the ‘smallest publishable unit’ or the alleged tendency of researchers to shift to research that produces a steady stream of publishable data. Similar objections have been raised against other attempts to stimulate research performance through a few key performance indicators. Schmoch and Schubert (2009) showed that such a reduction may impede rather than stimulate excellence in research. As such, these objections are similar to objections voiced against NPM in other policy sectors, such as police organisations shifting attention to crimes with ‘easy’ output measurement, e.g., intercepted kilos of drugs, or schools grooming students to perform well on tests only. The debate about advantages and disadvantages of NPM is by no means closed (Hood and Peters 2004).

One of the contested issues in the rise of NPM at universities is whether the new assessment regime would lead to increased inequality among universities (Van Parijs 2009). According to the advocates of NPM, performance measurement spurs actors in the public sector into action. By making productivity visible, it becomes possible to compare performance and make actors aware of their performance levels. This can be expected to generate improvements, either merely through heightened awareness and a sense of obligation to improve performance, or through pressure from the actors’ clients.

For example, NPM claims that making the performance of schools visible allows parents to make more informed choices about where to send their children. This transparency is expected to put pressure on under-performing schools. To stimulate actors even further, governments may tie the redistribution of resources to performance, as has been the case in the UK Research Assessment Exercises. The claim of NPM is that this stimulation of actors can be expected to improve the quality of public services and reduce costs. In the university sector, NPM promises more and better research at lower cost to the taxpayer, in line with Adam Smith’s belief in the virtues of the free market.

Opponents of the expansion of NPM into the university sector point to a number of objections that echo those made in other NPM-stricken public sectors. This is not the place to provide a complete overview of the debate; suffice it to say that the inequality in performance in the academic sector has been a crucial issue. While proponents of comparative performance measurement claim that all actors in the system will be stimulated to improve their performance, opponents claim that this ignores the redistributive effects of NPM. By moving university performance in the direction of commodification, NPM could lead to the accumulation of resources in an elite layer of universities, generating inequalities through processes that also produce the Matthew effect (Merton 1968). These critics stress the downsides of the US Ivy League universities, including the creation of old boys’ networks of graduates that produce an increasingly closed national elite, or the large inequalities of working conditions between elite and marginal universities.

In the same vein, critics claim that the aspirations of governments to have top-ranking universities, such as Cambridge or Harvard, may lead to the creation of large sets of insignificant academic organisations, teaching universities or professional colleges, at the other end of the distribution. In the case of Germany, where there has been much debate on inequalities among universities as a result of changes in academic policy, it has been argued that output evaluation practices reproduce status hierarchies between universities, affecting opportunities to attract resources (Münch 2008).2 In contrast to the belief in the general stimulation of actors, these critics appeal to a logic of resource concentration that is reminiscent of Marx’s critique of oligopolistic capitalism.

A third and more constructivist understanding of performance measurement suggests that major shifts in the university sector cannot be expected to lead to an overall increase in performance, nor a shift of resources, but rather a widespread attempt of actors to ‘perform performance’. If output is measured in terms of numbers of publications, then these numbers can be expected to increase, even at the expense of actual output: any activity that is not included in performance measurement will be abandoned in favour of producing good statistics. This reading of rankings considers them to be a force of performance homogenisation and control: a ‘McDonaldisation of universities’ (Ritzer 1998), under a regime of ‘discipline and publish’ (Weingart and Maasen 2007). These authors emphasise that the construction of academic actors, who monitor themselves via output indicators, may have even more detrimental effects than the capital destruction that comes with concentration. Output measurement is regarded as mutilating the very academic quality it claims to measure, through a process of Weberian rationalisation or an even more surreptitious expansion of governmentality, as signalled by Foucault (Foucault 1991).

Considering these serious potential consequences pointed out by the critics, there is surprisingly little systematic information on the changing inequalities among universities. Most of the debates rely on anecdotal evidence. Can one distinguish a top layer of increasingly elite universities that produce ever larger shares of science at the expense of a dwindling tail of marginalised teaching universities? Ville et al. (2006) reported an opposite trend of equalisation in research output among Australian universities (1992–2003) using Gini coefficients for the measurement. In this article, we use the Gini coefficient as an indicator for assessing the development of inequalities in academic output in terms of publications at the global level. The Gini measure of inequality is commonly used for the measurement of income inequalities and has been used extensively in scientometric research for the measurement of increasing (or decreasing) (in)equality (e.g., Bornmann et al. 2008; Cole et al. 1978; Danell 2000; Frame et al. 1977; Persson and Melin 1996; Stiftel et al. 2004; Zitt et al. 1999). Burrell (e.g., 1991) and Rousseau (e.g., 1992, 2001), among others, studied the properties of Gini in the bibliometric environment (cf. Atkinson 1970).

By providing a more systematic look at the distribution of publication outputs of universities and the potential shifts of these distributions over time, we hope to contribute empirical data to the ongoing debate on the merits and drawbacks of comparative performance measurement in the university sector. Although we use indicators such as the Shanghai ranking or output measures in this article, we do not consider these to be unproblematic or desirable indicators of research performance. Rather, we want to investigate how the distribution of outputs between universities changes, irrespective of what these outputs represent in terms of the ‘quality’ of the universities under study. This implies that we do not want to take sides in the debate on the value of output measurement, but rather test the claims that are made about the effects of NPM in terms of the outputs it claims to stimulate. Which version is more plausible: the NPM argument of stimulated performance in line with Adam Smith, the fear of increasing elitism reminiscent of Marx’s logic of capital concentration, or the constructivist reading following Foucault’s spread of governmentality and discipline?

Methods and Data

The Gini indicator is a measure of inequality in a distribution. It is commonly used to assess income inequalities of inhabitants or families in a country. Gini indicators play an important role in the redistributive policies of welfare states, e.g., to assess whether all layers of the population share in collective wealth increases (Timothy and Smeeding 2005). They also play a key role in the debate about whether or not global inequalities are increasing (Dowrick and Akmal 2006; Sala-i-Martin 2006). In the case of income distributions, the Ginis of most Northern European countries are around 0.25 (Netherlands, Germany, Norway), while the Gini coefficient of the USA is 0.37. For Mexico, as an example of the relatively unequal countries in Latin America, the Gini coefficient is 0.47 (Timothy and Smeeding 2005).

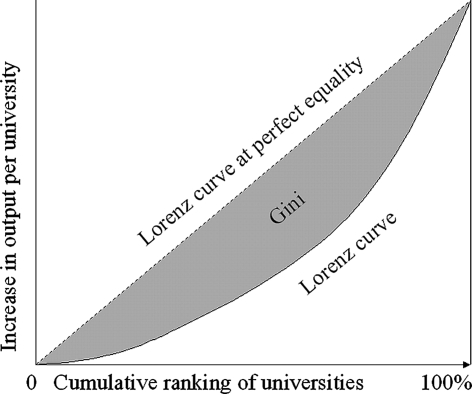

In order to calculate the Gini indicator, one orders the units of analysis (in our case, universities) from the lowest to the highest output and plots a curve that shows the cumulative output: the first point in the plot corresponds to the output of the smallest unit in these terms, the next to the sum of the smallest and the second smallest, etc. This leads to the so-called Lorenz curve. In a perfectly ‘equal’ system, all universities would contribute the same share to the overall output. In that case, the Lorenz curve would be a straight diagonal line. In the most extremely unequal system, all universities but one would produce zero publications. A single university would produce all publications in the system, and the Lorenz curve would follow the x-axis until this last point is reached.
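The construction of the Lorenz curve can be sketched in a few lines of Python. This is a minimal illustration only: the function name `lorenz_curve` and the toy publication counts are assumptions introduced here, not data or code from this study.

```python
from typing import List, Sequence, Tuple


def lorenz_curve(outputs: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (cumulative share of units, cumulative share of output) points."""
    ordered = sorted(outputs)  # lowest to highest output
    total = sum(ordered)
    n = len(ordered)
    if n == 0 or total <= 0:
        raise ValueError("need at least one unit and a positive total output")
    points = [(0.0, 0.0)]
    running = 0.0
    for i, x in enumerate(ordered, start=1):
        running += x
        points.append((i / n, running / total))
    return points


# Hypothetical systems of four universities each.
equal_system = lorenz_curve([10, 10, 10, 10])   # every point lies on the diagonal
extreme_system = lorenz_curve([0, 0, 0, 40])    # flat along the x-axis, then jumps to 1
```

In the equal system every point has identical coordinates, whereas in the extreme system the cumulative output share stays at zero until the last university is added.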

Based on this reasoning, the Gini coefficient measures the relative surface between the Lorenz curve and the straight line (Fig. 1). The Gini coefficient can be formulated as follows (Buchan 2002):

$$G = \frac{\sum_{i=1}^{n} (2i - n - 1)\, x_i}{n \sum_{i=1}^{n} x_i} \qquad (1)$$

with $n$ being the number of universities in the population and $x_i$ being the number of publications of the university with position $i$ in the ranking. Hence, the Gini ranges between zero for a completely equal distribution and (n − 1)/n for a completely unequal distribution, approaching one for large populations. For comparison among smaller populations of varying size, this requires a normalisation that brings Gini coefficients for all populations to the same maximum unity. The formula for this normalised Gini coefficient is:

$$G_N = \frac{n}{n - 1}\, G = \frac{\sum_{i=1}^{n} (2i - n - 1)\, x_i}{(n - 1) \sum_{i=1}^{n} x_i} \qquad (2)$$

Fig. 1. Lorenz curve and Gini coefficient
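As a hedged sketch, the coefficient and its normalised variant can be computed with the rank-weighted form G = Σ(2i − n − 1)·x_i / (n·Σx_i), where units are ordered from lowest to highest output. This form reproduces the stated range from zero to (n − 1)/n, but whether it is literally the expression printed in Buchan (2002) is an assumption; the function names are likewise illustrative.

```python
from typing import Sequence


def gini(outputs: Sequence[float]) -> float:
    """Rank-weighted Gini coefficient; ranges from 0 to (n - 1) / n."""
    xs = sorted(outputs)  # position i runs from the lowest to the highest output
    n = len(xs)
    total = sum(xs)
    if n == 0 or total <= 0:
        raise ValueError("need at least one unit and a positive total output")
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1))
    return weighted / (n * total)


def normalised_gini(outputs: Sequence[float]) -> float:
    """Rescale by n / (n - 1) so that the maximum is 1 for any population size."""
    n = len(outputs)
    if n < 2:
        raise ValueError("normalisation needs at least two units")
    return gini(outputs) * n / (n - 1)


# Hypothetical systems of four universities each.
g_equal = gini([10, 10, 10, 10])             # completely equal distribution
g_extreme = gini([0, 0, 0, 40])              # one university produces everything
g_extreme_norm = normalised_gini([0, 0, 0, 40])
```

For four universities the unnormalised maximum is 3/4, which the normalisation rescales to one; this is what makes small national populations of different sizes comparable.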

Although statistical in nature, the Gini index is a relatively simple and robust measure of inequality. However, there are some complications. First, the Gini coefficient is sensitive to tails at the top or bottom of the distribution. At the top end, the inclusion or omission of one or more highly productive universities would alter the Gini drastically. In our data, however, these top universities are also the most visible ones (e.g., Harvard, Oxford, Tokyo) and hence such an omission is unlikely in this study. At the bottom of the range, the data contains long tails of universities with very small numbers of publications: relatively unknown institutions, often even hard to recognise as universities. This problem can be resolved by comparing only fixed ranges, for example, the top-500 most productive universities. For the world’s leading scientific countries this makes little difference. For example, our counts for the Shanghai ranking systematically include 12 of the 14 Dutch universities, 40 of some 120 universities in the UK and 159 of some 2,000 universities and colleges in the USA. Nevertheless, this admittedly does exclude the very bottom of the range, and it may have an effect when we compare over time, as we shall see below for the case of China.
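The sensitivity to the bottom tail, and the effect of restricting the comparison to a fixed top range, can be illustrated with a small sketch. The numbers are invented for illustration and the helper `top_n` is a hypothetical name; the Gini function uses the common rank-weighted form, which is assumed here.

```python
from typing import List, Sequence


def gini(outputs: Sequence[float]) -> float:
    """Rank-weighted Gini coefficient (unnormalised)."""
    xs = sorted(outputs)
    n, total = len(xs), sum(xs)
    if n == 0 or total <= 0:
        raise ValueError("need at least one unit and a positive total output")
    return sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1)) / (n * total)


def top_n(outputs: Sequence[float], n: int) -> List[float]:
    """Keep only the n most productive units (a fixed range such as a top-500)."""
    return sorted(outputs, reverse=True)[:n]


# Hypothetical population: four sizeable universities plus a tail of tiny institutions.
population = [1, 1, 1, 1, 50, 60, 70, 80]
gini_all = gini(population)              # the long tail inflates measured inequality
gini_top4 = gini(top_n(population, 4))   # fixed range without the tail
```

In this toy case the coefficient drops sharply once the tail is cut off, which is why the size of the range has to be held constant when comparing years.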

A second complication arises from double counts or alternate names of universities. For example, publications may be labelled as university or university medical centre publications; universities may change names over time, merge, or split. All of this creates larger or smaller units that will alter the distribution and hence the Gini. Therefore, it is important that publication data are carefully labelled, or at least consistently labelled over time. This requires a manual check.
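A minimal sketch of such a consistency step is given below. The alias table, the institution labels and the function name `merge_counts` are all hypothetical; in practice the mapping would be the outcome of the manual check.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical alias table: every variant label maps to one canonical name.
ALIASES: Dict[str, str] = {
    "Example Univ": "Example University",
    "Univ Example": "Example University",
}


def merge_counts(records: Iterable[Tuple[str, int]],
                 aliases: Dict[str, str]) -> Dict[str, int]:
    """Sum publication counts per canonical institution name."""
    totals: Dict[str, int] = defaultdict(int)
    for label, count in records:
        # Labels without an alias entry are kept under their own name.
        totals[aliases.get(label, label)] += count
    return dict(totals)


records = [("Example Univ", 120), ("Univ Example", 30), ("Other University", 90)]
merged = merge_counts(records, ALIASES)
```

Applying the same alias table to every year keeps the units of analysis stable, so that changes in the Gini reflect changes in output rather than changes in labelling.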

Third, the Gini remains only a measure of overall inequality. This facilitates comparison from year to year, but the measure does not allow us to locate where changes in the distribution occur. To this end, Gini analysis can be complemented with comparisons of subset shares in overall output, such as the publication share of the top quartile or decile (10%) (cf. Plomp 1990).
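Subset shares of this kind are straightforward to compute. The following sketch splits an ordered population into k equally sized groups and returns each group's share of total output; the function name `quantile_shares` and the toy data are assumptions for illustration.

```python
from typing import List, Sequence


def quantile_shares(outputs: Sequence[float], k: int) -> List[float]:
    """Share of total output per quantile group, from the smallest units to the largest."""
    xs = sorted(outputs)
    n, total = len(xs), sum(xs)
    if k < 1 or n < k or total <= 0:
        raise ValueError("need at least k units and a positive total output")
    shares = []
    for j in range(k):
        start, end = j * n // k, (j + 1) * n // k  # integer group boundaries
        shares.append(sum(xs[start:end]) / total)
    return shares


# Hypothetical outputs 1..20 split into deciles (k = 10), two units per decile.
decile_shares = quantile_shares(list(range(1, 21)), 10)
top_decile_share = decile_shares[-1]
```

Comparing such shares between years shows which part of the distribution gains or loses ground, information that a single Gini value cannot provide.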

In order to calculate inequality among universities, we have first used the university output data provided by the Shanghai rankings at http://www.arwu.org. These rankings consist of a composite indicator, with weighted contributions of total numbers of publications per university, awards won by employees of the university and alumni, and publications per researcher, in addition to numbers of highly cited publications and publications in Nature and Science by the top universities’ scientists. For presentation purposes, the ranking scores of universities are expressed as a percentage of the top university (Harvard), but for the calculation of Gini coefficients this normalisation does not make a difference.
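The reason is that the Gini coefficient is scale-invariant: multiplying every score by the same constant changes numerator and denominator by the same factor. A short check with invented scores (the values and variable names are illustrative assumptions) shows this:

```python
import math
from typing import Sequence


def gini(outputs: Sequence[float]) -> float:
    """Rank-weighted Gini coefficient (unnormalised)."""
    xs = sorted(outputs)
    n, total = len(xs), sum(xs)
    if n == 0 or total <= 0:
        raise ValueError("need at least one unit and a positive total output")
    return sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1)) / (n * total)


# Hypothetical raw publication counts and the same data as a percentage of the top score.
raw_scores = [120, 340, 560, 980]
percent_of_top = [100 * x / max(raw_scores) for x in raw_scores]

same_gini = math.isclose(gini(raw_scores), gini(percent_of_top))
```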

The central part of the Shanghai ranking only pertains to the world’s top-50 universities, but publication data is provided for a larger set of 500 universities covering the years 2003–2008. The data other than numbers of publications for these top-500 is problematic because of cumulative scoring over years (e.g., for awards) or shifts in the data definition (e.g., inclusion of Fields awards in addition to Nobel Prizes). Unfortunately, the number of publications per scientist has also been adjusted during the series. The relevant definition is stable for the period 2005–2008.

Although this data provides us with a solid base for measuring inequalities, the time series is very short. For the precise ranking of each individual university in each year, the precision of total publications as a measure of productivity may be problematic. For our purposes, however, it makes little difference whether a specific university, say Manchester, is ranked at position 40 (in 2008) or 48 (in 2007). The focus is on the shape of the distribution.

In order to investigate longer-term trends, additional calculations were performed on Science Citation Index data. Our data comprise results for the natural sciences only, but allow us to analyse developments over a longer period (1990–2007). Following best practice in scientometrics, we used only citable items, that is, articles, reviews, and letters.3 More than 60% of the addresses are single occurrences; these also include addresses with typos. Using only the institutional addresses which occurred more than once (21,393 in 1990, but 46,339 in 2007), we removed all non-university organisations from the list and merged alternate names of the same universities. We included academic hospitals as separate organisations as part of our effort to limit manual intervention in the data to a minimum. For the analysis of shifts in the distribution over time, we believe that consistency is more important than debatable re-categorisations.
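The first filtering step, keeping only institutional addresses that occur more than once, might look as follows. The address strings and the function name `recurring_addresses` are hypothetical, and the actual SCI address format is not reproduced here.

```python
from collections import Counter
from typing import Dict, Iterable


def recurring_addresses(addresses: Iterable[str], min_count: int = 2) -> Dict[str, int]:
    """Count address strings and keep those occurring at least min_count times."""
    counts = Counter(addresses)
    return {addr: c for addr, c in counts.items() if c >= min_count}


# Hypothetical address field values; "Unvi B" stands in for a one-off typo.
sample = ["Univ A", "Univ A", "Univ B", "Unvi B", "Univ A", "Univ B"]
kept = recurring_addresses(sample)
```

Dropping single occurrences removes most typographical variants at the cost of also discarding genuine institutions that published only once in that year.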

We should stress that our parameter, total SCI publications, can as much be considered as an indicator of size as of productivity. For example, at the top of our list is not Harvard, but the much larger University of Texas (see Table 1 for the top-50 largest universities in 2007). When we talk about the largest or the top universities, we refer to this measure of total SCI-covered publication output. We cannot make any claims about the long tail of small universities, but our analysis reaches as far down as Hunan University (532 SCI publications in 2007), St Louis (540 publications), or Bath (588 publications).

Table 1. The 50 largest universities in the world in 2007, in terms of totals of SCI publications

| Institute | Total | Country |
|---|---|---|
| University of Texas | 12,047 | USA |
| Harvard University | 11,479 | USA |
| University of Tokyo | 7,435 | Japan |
| University of Toronto | 7,120 | Canada |
| University of Calif Los Angeles | 6,803 | USA |
| University of Michigan | 6,603 | USA |
| University of Washington | 6,348 | USA |
| University of Illinois | 5,630 | USA |
| Kyoto University | 5,465 | Japan |
| Johns Hopkins University | 5,455 | USA |
| Stanford University | 5,447 | USA |
| University of Pittsburgh | 5,442 | USA |
| University of Wisconsin | 5,369 | USA |
| University of Penn | 4,977 | USA |
| University of Calif San Francisco | 4,962 | USA |
| University of Calif Berkeley | 4,956 | USA |
| University of Calif San Diego | 4,942 | USA |
| University of Minnesota | 4,742 | USA |
| Seoul Natl University | 4,687 | South Korea |
| Columbia University | 4,645 | USA |
| University of Sao Paulo | 4,628 | Brazil |
| Duke University | 4,587 | USA |
| Tohoku University | 4,579 | Japan |
| University of Florida | 4,450 | USA |
| Osaka University | 4,433 | Japan |
| University of N Carolina | 4,406 | USA |
| University of Calif Davis | 4,379 | USA |
| Ohio State University | 4,342 | USA |
| University of Maryland | 4,283 | USA |
| Yale University | 4,195 | USA |
| University of British Columbia | 4,094 | Canada |
| McGill University | 4,048 | Canada |
| Washington University | 4,036 | USA |
| Cornell University | 4,028 | USA |
| University of Cambridge | 4,018 | England |
| University of Colorado | 4,007 | USA |
| University of Oxford | 3,879 | England |
| MIT | 3,850 | USA |
| Natl Taiwan University | 3,848 | Taiwan |
| Penn State University | 3,654 | USA |
| Northwestern University | 3,621 | USA |
| University of Helsinki | 3,515 | Finland |
| Vanderbilt University | 3,398 | USA |
| Natl University of Singapore | 3,348 | Singapore |
| University of Paris 06 | 3,289 | France |
| University College London | 3,255 | England |
| Zhejiang University | 3,203 | Peoples R China |
| University of Alabama | 3,193 | USA |
| University of Sydney | 3,184 | Australia |
| University of Melbourne | 3,170 | Australia |

Results

Inequality Among the Top-500 Universities: Shanghai Ranking Data

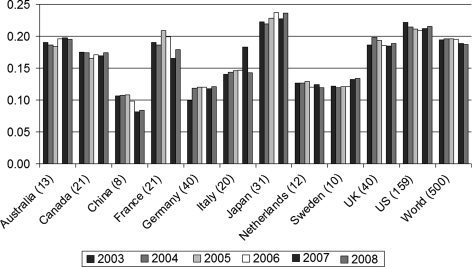

Gini coefficients for university publication output, based on the Shanghai ranking data, seem to remain stable between 2003 and 2008 (Fig. 2). If anything, the overall inequality among universities decreases slightly. In any case, there is no indication of a significant and lasting increase in inequality as predicted on the basis of qualitative observations (e.g., Martin 2010; Van Parijs 2009, at p. 203) (Table 2).

Fig. 2. Normalised Gini coefficients for university publication output. Source: Shanghai ranking at http://www.arwu.org/

Table 2. Normalised Gini coefficients for university publication outputs

| | 2003 | 2004 | 2005 | 2006 | 2007 | 2008 | Avg 03–08 | n |
|---|---|---|---|---|---|---|---|---|
| World | 0.195 | 0.196 | 0.196 | 0.195 | 0.188 | 0.187 | 0.193 | 500 |
| Australia | 0.191 | 0.187 | 0.184 | 0.196 | 0.198 | 0.195 | 0.192 | 13 |
| Canada | 0.175 | 0.175 | 0.166 | 0.171 | 0.169 | 0.174 | 0.172 | 21 |
| China | 0.106 | 0.108 | 0.108 | 0.098 | 0.082 | 0.084 | 0.098 | 8 |
| France | 0.190 | 0.187 | 0.209 | 0.199 | 0.166 | 0.179 | 0.188 | 21 |
| Germany | 0.099 | 0.119 | 0.120 | 0.120 | 0.118 | 0.121 | 0.116 | 40 |
| Italy | 0.141 | 0.143 | 0.146 | 0.147 | 0.183 | 0.143 | 0.150 | 20 |
| Japan | 0.223 | 0.219 | 0.229 | 0.237 | 0.227 | 0.236 | 0.228 | 31 |
| Netherlands | 0.126 | 0.127 | 0.129 | 0.120 | 0.124 | 0.119 | 0.124 | 12 |
| Sweden | 0.122 | 0.120 | 0.121 | 0.121 | 0.132 | 0.134 | 0.125 | 10 |
| UK | 0.187 | 0.198 | 0.194 | 0.185 | 0.184 | 0.189 | 0.190 | 40 |
| US | 0.222 | 0.214 | 0.211 | 0.209 | 0.212 | 0.215 | 0.214 | 159 |

Source: Shanghai ranking data at http://www.arwu.org/

Figure 2 shows remarkable differences in inequality among national systems. Here, we have to proceed with some caution, as the bottom tail of least productive universities may not be included to the same extent for all nations. China, for example, presents a problem, because ten more universities entered the top-500 between 2003 and 2008. All our calculations were made with the largest available set for all the years involved. (Hence, n is the same for every year.)

Figure 2 shows a relative equality in the university systems of the Netherlands, Sweden, and Germany. We must point out that this does not mean that all universities in the respective countries are equally ‘good’, but rather that these universities produce a relatively similar number of publications. Inversely, the relatively high inequalities in Japan, the UK, or the US could just as well be caused by large differences in the size of universities as of their productivity.

Perhaps more remarkably, we do not observe major shifts in inequality over time within each national system. This is especially interesting for countries such as the UK, where increased inequalities could have occurred due to the redistribution effects of the Research Assessment Exercises. These research assessments redistribute research resources to the more productive research units, while reducing the budgets of those that do poorly in the evaluations. France and Italy, both in the middle range, display one or two erratic results, which we fear may be due to data redefinitions.

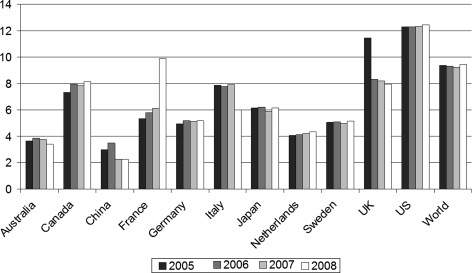

The lack of clear-cut increases in inequality among universities in terms of publication output raises further questions about productivity. What is happening to the output of publications per scientist? Because the use of the Gini coefficient is questionable here, as productivity data cannot be added meaningfully, we have used a simple standard deviation to measure dispersion. This is not quite the same as inequality, but does provide an indication of changes in the spread of productivity. The data is more irregular here, due to adjustments and improvements in the ranking data from year to year (Fig. 3). Here too, one sees no clear sign of growing disparities among universities. The world trend seems slightly in favour of increasingly similar output levels (Leydesdorff and Wagner 2009). Once again, the US ranks high in terms of spread in productivity levels, but Japan is now in the middle range. This implies that Japan may have a relatively large disparity in size between larger and smaller universities, but more equal productivity levels. In the case of Australia, this contrast is even larger: apart from China, it has the most equal distribution of productivity (SD = 3.7) among the countries analysed (Table 3).

Fig. 3. Standard deviations for top-500 universities: productivity in SCI publications per faculty. Source: Shanghai ranking data at http://www.arwu.org/

Table 3. Standard deviations for publication output per scientist

| | 2005 | 2006 | 2007 | 2008 | Avg 05–08 | n |
|---|---|---|---|---|---|---|
| World | 9.4 | 9.3 | 9.2 | 9.4 | 9.3 | 500 |
| Australia | 3.6 | 3.8 | 3.8 | 3.4 | 3.7 | 13 |
| Canada | 7.3 | 8.0 | 7.9 | 8.1 | 7.8 | 21 |
| China | 3.0 | 3.5 | 2.2 | 2.3 | 2.7 | 8 |
| France | 5.3 | 5.8 | 6.1 | 9.9 | 6.8 | 21 |
| Germany | 4.9 | 5.2 | 5.1 | 5.2 | 5.1 | 40 |
| Italy | 7.9 | 7.8 | 7.9 | 6.0 | 7.4 | 20 |
| Japan | 6.2 | 6.2 | 5.9 | 6.2 | 6.1 | 31 |
| Netherlands | 4.0 | 4.1 | 4.2 | 4.3 | 4.2 | 12 |
| Sweden | 5.1 | 5.1 | 5.0 | 5.1 | 5.1 | 10 |
| UK | 11.5 | 8.3 | 8.2 | 8.0 | 9.0 | 40 |
| US | 12.3 | 12.3 | 12.3 | 12.4 | 12.3 | 159 |

Source: Shanghai ranking data at http://www.arwu.org/
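The dispersion measure reported in Fig. 3 and Table 3 can be sketched with Python's standard library. Whether the population or the sample standard deviation was used is not stated, so the default below (population) is an assumption, as are the function name and the toy productivity scores.

```python
import statistics
from typing import Sequence


def productivity_spread(pubs_per_scientist: Sequence[float], sample: bool = False) -> float:
    """Standard deviation of per-scientist output scores across universities."""
    if sample:
        return statistics.stdev(pubs_per_scientist)   # divides by n - 1
    return statistics.pstdev(pubs_per_scientist)      # divides by n (assumed default)


# Hypothetical productivity scores for eight universities in one country.
toy_scores = [2, 4, 4, 4, 5, 5, 7, 9]
spread = productivity_spread(toy_scores)
spread_sample = productivity_spread(toy_scores, sample=True)
```

Unlike the Gini coefficient, the standard deviation is not scale-free, so comparisons between years are only meaningful as long as the underlying score definition remains stable.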

Our results undermine the hypothesis of increasing inequalities among universities. If anything, we see a small decrease in output inequalities among universities, in terms of both overall output and productivity. This raises additional questions. Is this result the product of the methodological flaws of the Shanghai ranking (Van Raan 2005), even if one uses only its least problematic component, that is, publication data derived from the Science Citation Index? Might we have missed the increasing formation of super-universities because the time frame used was too narrow? In order to answer these longitudinal questions, we turned to data sets from the Science Citation Index (SCI) for earlier years.

Inequality Between Universities: SCI Data

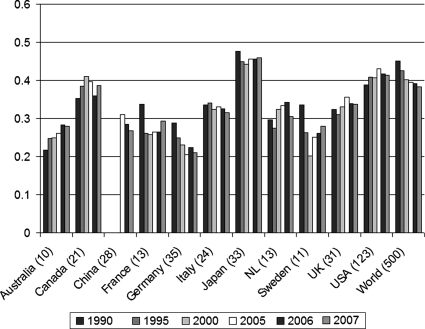

The 500 universities that publish most in the world, using the SCI, are becoming more equal in terms of their publication output. The trend is clear from 1990 to 2005 and continues thereafter for 2006 and 2007, confirming what we have found on the basis of the Shanghai ranking for a shorter time span (Fig. 4). The relative position of the countries is similar to that in the Shanghai ranking, also confirming the measurement.

Fig. 4. Normalised Gini coefficients for top-500 universities. Source: SCI, n of publications (in brackets). The requirement to keep the number of universities per country stable in order to calculate a comparable national Gini coefficient across the years led, in the case of China, to using a cut-off point of 28 universities in the years 2005–2007, disregarding the earlier presence of three Chinese universities among the top-500 in 1990, five in 1995, and 16 in 2000.

The trend per country shows a somewhat different picture. In the UK, the US, the Netherlands, Canada, and Australia, we see increases in inequality between 1990 and 2005, although these seem to decrease for the first three of these countries during recent years. These are also the countries in which NPM was adopted early. However, whereas the UK has attached a redistribution of resources to research assessment, other countries, such as the Netherlands, have not.

France, Italy, and Japan show a stable distribution of outputs, while there is a trend toward more equality in China, Germany, and Sweden, although with some erratic movement in the latter case. Although the overall image is consistent with the above results using data from the Shanghai rankings, the country patterns are different. However, these differences in trends are mainly the result of the expanded time horizon. For recent years at least, the direction of the country trends is consistent with the Shanghai findings. Note that in all cases, the inequalities measured in the SCI are considerably larger than when using the Shanghai ranking. This suggests that output in the natural sciences is more unequally distributed than in the social sciences, because the latter are included in the Shanghai ranking but not in the SCI data.

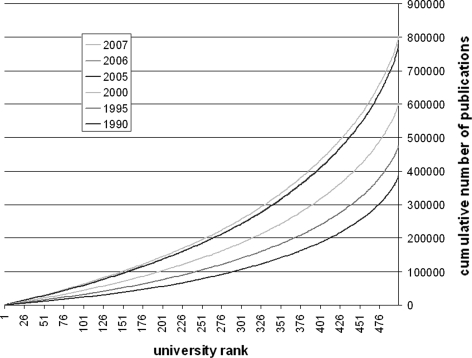

The Lorenz curves (Fig. 5) show first the expansion of the database during the period under study. The 500 largest universities have increased their numbers of SCI publications, accordingly, from just under 400,000 in 1990 to almost 800,000 publications per year in 2007. This figure provides us with an impression of the evolution of the distribution, but in order to obtain a more precise understanding, we need to analyse the distributions in more detail.

Fig. 5. Lorenz curves of SCI publications for the 500 largest universities. Source: SCI

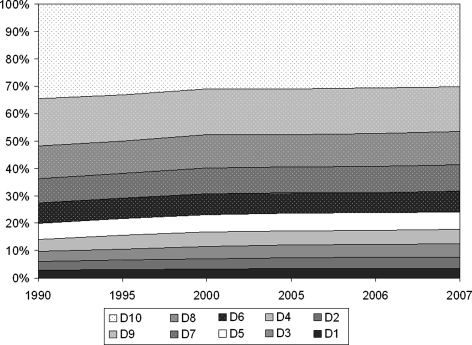

Details of the Distribution

Since much of the policy debate around rankings concerns aspirations to perform like the international top-universities, it is interesting to look in more detail at what the largest universities are doing. To this end, we analysed the shares of total publications produced by every quarter, every tenth (decile), and every hundredth section of the distribution. We report the deciles here, as they provide the clearest indication of where the distribution is shifting (Table 4).

Table 4.

Decile shares of the top-500 universities

| | 1990 (%) | 1995 (%) | 2000 (%) | 2005 (%) | 2006 (%) | 2007 (%) | 2007–1990 (%) |
|---|---|---|---|---|---|---|---|
| D1 | 2.9 | 2.9 | 3.2 | 3.4 | 3.5 | 3.5 | 0.7 |
| D2 | 3.3 | 3.4 | 3.7 | 3.9 | 4.0 | 4.1 | 0.8 |
| D3 | 3.9 | 4.0 | 4.4 | 4.6 | 4.6 | 4.7 | 0.8 |
| D4 | 4.7 | 4.9 | 5.3 | 5.3 | 5.3 | 5.4 | 0.7 |
| D5 | 5.9 | 6.0 | 6.2 | 6.4 | 6.2 | 6.3 | 0.4 |
| D6 | 7.3 | 7.3 | 7.6 | 7.6 | 7.5 | 7.7 | 0.4 |
| D7 | 9.0 | 9.2 | 9.5 | 9.6 | 9.4 | 9.5 | 0.5 |
| D8 | 11.8 | 12.1 | 12.4 | 12.1 | 12.2 | 12.2 | 0.4 |
| D9 | 16.9 | 17.0 | 16.6 | 16.4 | 16.6 | 16.2 | −0.6 |
| D10 | 34.4 | 33.1 | 31.1 | 30.8 | 30.8 | 30.3 | −4.1 |
| Total | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | |

Source: SCI
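Decile shares of the kind reported in Table 4 can be derived from raw publication counts along the following lines. This is a minimal sketch under stated assumptions: the function name `decile_shares` is hypothetical, the example counts are synthetic rather than SCI data, and D1 is taken to be the tenth of universities with the lowest output, as in the table.

```python
def decile_shares(counts):
    """Return the percentage of total output produced by each decile (D1..D10)."""
    ordered = sorted(counts)  # D1 holds the smallest producers
    n = len(ordered)
    total = sum(ordered)
    if n < 10 or total <= 0:
        raise ValueError("need at least ten units and a positive total")
    shares = []
    for d in range(10):
        lo = d * n // 10          # first index of this decile
        hi = (d + 1) * n // 10    # one past the last index of this decile
        shares.append(100.0 * sum(ordered[lo:hi]) / total)
    return shares


# Synthetic counts for twenty hypothetical universities (not SCI data).
example = list(range(1, 21))
shares = decile_shares(example)
```

With 500 universities, each decile contains exactly 50 institutions, so D10 corresponds to the 50 largest.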

The top decile of universities is very slowly but steadily losing ground in terms of output share. Whereas the 50 largest universities produced 34.4% of the SCI publications of the top-500 universities in 1990, this share had decreased to 30.3% in 2007. This is not exactly a landslide but, in any case, it is not an indication of stronger oligopolistic concentration. Combined, the bottom half of the distribution has increased its share from a fifth (20.6%) to almost a quarter (24.0%) of the top-500 output (Fig. 6).
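The aggregate figures in this paragraph can be checked against the rounded entries of Table 4. The short sketch below does so for the 1990 and 2007 columns; the variable names are illustrative only. Because the table entries are rounded to one decimal, the sum for the bottom half in 1990 comes out at 20.7 rather than the reported 20.6, a difference that is consistent with rounding of the individual deciles.

```python
# Decile shares (D1..D10, in %) copied from the 1990 and 2007 columns of Table 4.
table4 = {
    1990: [2.9, 3.3, 3.9, 4.7, 5.9, 7.3, 9.0, 11.8, 16.9, 34.4],
    2007: [3.5, 4.1, 4.7, 5.4, 6.3, 7.7, 9.5, 12.2, 16.2, 30.3],
}

# Share of the bottom half of the distribution (D1 through D5).
bottom_half = {year: round(sum(shares[:5]), 1) for year, shares in table4.items()}

# Change in the share of the top decile (D10) between 1990 and 2007.
top_decile_change = round(table4[2007][9] - table4[1990][9], 1)
```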

Fig. 6.

Cumulative decile shares in total SCI output top-500 universities

A detailed analysis of the top ten percentiles showed that the decline in the top decile's share was spread across the fifty largest universities and was strongest in the top percentiles. Among the 100 largest universities, the Gini coefficient decreased from 0.230 in 1990 to 0.211 in 2007.
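For readers who wish to reproduce a Gini coefficient on their own data, the following minimal sketch uses one common discrete formulation, G = Σ(2i − n − 1)·x_i / (n·Σx_i), with the values x_i sorted in ascending order. It is an assumption that this matches the estimator used in this study (for example, no small-sample correction is applied), and the function name `gini` and the example inputs are hypothetical.

```python
def gini(counts):
    """Gini coefficient of non-negative counts: 0 means perfect equality."""
    ordered = sorted(counts)  # ascending order is required by the formula
    n = len(ordered)
    total = sum(ordered)
    if n == 0 or total <= 0:
        raise ValueError("need at least one unit and a positive total")
    # Rank-weighted sum: low ranks get negative weights, high ranks positive.
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(ordered, start=1))
    return weighted / (n * total)


# Hypothetical examples (not SCI data).
equal = gini([5, 5, 5, 5])        # identical outputs
concentrated = gini([0, 0, 0, 1])  # all output in one unit
```

In this formulation the maximum value for n units is (n − 1)/n, so coefficients computed on sets of different sizes are not strictly comparable without a correction.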

Conclusion

Our results suggest an ongoing homogenisation in terms of publication and productivity patterns among the top-500 universities in the world. In particular, the fifty largest universities are slowly losing ground, while the lowest half of the top-500 catches up. All of this occurs against the background of rising output in all sections and further expansion of the ISI databases. In summary, it appears that the gap between the largest universities and the rest is closing rather than widening. Note that the top-500 universities are concentrated in North America, Western Europe and some Asian countries (Leydesdorff and Wagner 2008). Within this set, we found increasing inequality in some countries between 1990 and 2005 when using the SCI data, notably in the Anglo-Saxon world. However, even in these countries the trend seems to reverse in more recent years. Using a similar methodology, Ville et al. (2006) found decreasing inequality in research outputs among Australian universities during the period 1992–2003 given relatively stable funding distributions within this country.

In terms of Marxist, neo-liberal, and Foucauldian accounts of NPM, these results seem to refute the thesis suggesting oligopolistic tendencies in the university system, at least in terms of output. Further studies would have to analyse whether this trend is also present in the inputs of universities, such as research budgets, number of faculty, or even tuition fees. The Matthew effect, which generates concentration of reputation and resources in the case of individual scientists, if at all at work at the meso level of organisations, may have generated inequalities among universities in the past, but this process seems to have reached its limit. Perhaps the largest universities are now also facing disadvantages of scale.

The question remains whether the slow levelling-off corroborates the idea that the neo-liberal logic of activation is responsible for this result, or whether the Foucauldian reading carries more weight. There are indications that universities are indeed shifting their output more towards what is valued in the rankings and output indicators such as SCI publications. Leydesdorff and Meyer (2010) have observed that, since approximately 2000, the increase in publication output may have been achieved at the expense of patent output. The prevailing trend of levelling-off of productivity differences in recent years also suggests that universities worldwide are conforming to isomorphic pressures to produce the same levels of SCI output. This further suggests that the self-monitoring of research actors increasingly follows the same global standards (DiMaggio and Powell 1983).

There may be a price to pay for such higher output levels, apart from the toll on the family life of researchers. In the Netherlands, one witnesses a devaluation of publications in national journals for the social sciences, to the extent that several Dutch social science journals have recently ceased to exist because of a lack of good copy. Such trends have been criticised for undermining the contributions that the social sciences and humanities can make to national debates and public thought (Boomkens 2008). Anecdotal evidence further suggests that researchers consciously shift to activities that produce a regular stream of publications, or that research evaluations may favour such research lines (Weingart 2005; Laudel and Origgi 2006). Such evidence suggests that the slow levelling-off of scientific output may not support the neo-liberal argument for increased competition at all. Rather, it suggests that researchers become better at ‘performing performance’, i.e., the ritual production of output in order to score on performance indicators, even at the expense of the quality of one’s work. Further research about the effects of NPM on universities will have to provide more clarity on these issues. Hitherto, the NPM wave has been programmatically resilient against counter-indications such as unintended consequences (Hood and Peters 2004).

Whereas the inequality of scientific production has received scholarly attention in the past (Merton 1968; Price 1976), this discussion has focused mainly on the dynamics of reward structures of individuals and departments (Whitley 1984). However, inequality at the institutional level of universities remains topical in the light of the NPM discussion (Martin 2010). Our findings suggest that increased output steering from the policy side leads to a global conformity to performance standards, and thus tends to have an unexpectedly equalising effect. Whether countries adopt NPM or other regimes to promote publication behaviour (e.g., China) does not seem to play a crucial role in these dynamics.

Acknowledgments

The authors wish to thank Heide Hackmann, Ronald Rousseau, and two anonymous reviewers for comments on a previous draft of this article.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Biographies

Willem Halffman is a lecturer in the department of Philosophy and Science Studies at the Radboud University Nijmegen, and is a co-ordinator of the PhD training programme of the Netherlands Graduate Research School of Science, Technology, and Modern Culture.

Loet Leydesdorff reads Science and Technology Dynamics at the Amsterdam School of Communications Research (ASCoR) of the University of Amsterdam. He is Visiting Professor of the Institute of Scientific and Technical Information of China (ISTIC) in Beijing and Honorary Fellow of the Science and Technology Policy Research Unit (SPRU) of the University of Sussex.

Footnotes

The field normalisation is based on the ISI Subject Categories, which are often imprecise and thus to be used only as statistics (Rafols and Leydesdorff 2009).

An analysis of grants awarded by the German science foundation showed no effect of institutional context on the success of individual scientists’ grant applications (Auspurg, Hinz and Güdler 2008; cf. Van den Besselaar and Leydesdorff 2009; Bornmann et al. 2010).

On February 27, 2009, Thomson-Reuters ISI announced a reorganisation of the database in October 2008 (at http://isiwebofknowledge.com/products_tools/multidisciplinary/webofscience/cpci/usingproceedings/; Retrieved on March 11, 2009). An additional category of citable “Proceedings Papers” is now distinguished on the Web-of-Science. Our data are not affected by this change since they are based on the CD-Rom versions of the Science Citation Index.

Contributor Information

Willem Halffman, Phone: +31-24-3652204, Email: w.halffman@gmail.com, www.halffman.net.

Loet Leydesdorff, Email: loet@leydesdorff.net.

References

- Atkinson Anthony B. On the measurement of inequality. Journal of Economic Theory. 1970;2:244–263. doi: 10.1016/0022-0531(70)90039-6.

- Auspurg Katrin, Hinz Thomas, Güdler Jürgen. Herausbildung einer akademischen Elite? Zum Einfluss der Größe und Reputation von Universitäten auf Forschungsförderung. Kölner Zeitschrift für Soziologie und Sozialpsychologie. 2008;60:653–685. doi: 10.1007/s11577-008-0032-7.

- De Boer Harry, Enders Jurgen, Schimank Uwe. On the way towards new public management? The governance of university systems in England, the Netherlands, Austria, and Germany. In: Jansen Dorothea, editor. New forms of governance in research organizations. Disciplinary approaches, interfaces and integration. Dordrecht: Springer; 2007. pp. 137–154.

- Boomkens René. Topkitsch en slow science. Amsterdam: Van Gennep; 2008.

- Bornmann, Lutz, Loet Leydesdorff, and Peter van den Besselaar. 2010. A Meta-evaluation of scientific research proposals: Different ways of comparing rejected to awarded applications. Journal of Informetrics, in print. doi:10.1016/j.joi.2009.10.004.

- Bornmann Lutz, Mutz Rüdiger, Neuhaus Christoph, Daniel Hans-Dieter. Citation counts for research evaluation: Standards of good practice for analyzing bibliometric data and presenting and interpreting results. Ethics in Science and Environmental Politics. 2008;8:93–102. doi: 10.3354/esep00084.

- Buchan, Iain. 2002. Calculating the Gini coefficient of inequality. Northwest Institute for BioHealth Informatics. https://www.nibhi.org.uk/Training/Forms/AllItems.aspx?RootFolder=%2FTraining%2FStatistics&FolderCTID=&View={4223A4850-B4790-4965-4285DB-D4220A4841A5430B}. Accessed on 11 March, 2009.

- Burrell Quentin L. The Bradford distribution and the Gini index. Scientometrics. 1991;21:181–194. doi: 10.1007/BF02017568.

- Centre for Science and Technology Studies. 2008. The Leiden ranking 2008. http://www.cwts.nl/ranking/LeidenRankingWebSite.html. Accessed on 9 March, 2009.

- Cole Stephen, Cole Jonathan R, Dietrich Lorraine. Measuring the cognitive state of scientific disciplines. In: Elkana Yehuda, Lederberg Joshua, Merton Robert K, Thackray Arnold, Zuckerman Harriet, editors. Toward a metric of science: The advent of science indicators. New York: Wiley; 1978. pp. 209–251.

- Danell Rickard. Stratification among journals in management research: A bibliometric study of interaction between European and American journals. Scientometrics. 2000;49:23–38. doi: 10.1023/A:1005605123831.

- DiMaggio Paul J, Powell Walter W. The iron cage revisited: Institutional isomorphism and collective rationality in organizational fields. American Sociological Review. 1983;48:147–160. doi: 10.2307/2095101.

- Dowrick Steve, Akmal Muhammad. Contradictory trends in global income inequality: A tale of two biases. The Review of Income and Wealth. 2006;51:201–229. doi: 10.1111/j.1475-4991.2005.00152.x.

- Foucault Michel. Governmentality. In: Burchell Graham, Gordon Colin, Miller Peter, editors. The Foucault effect: Studies in governmentality, with two lectures by and an interview with Michel Foucault. Chicago: University of Chicago Press; 1991. pp. 87–104.

- Frame J Davidson, Narin Francis, Carpenter Mark P. The distribution of world science. Social Studies of Science. 1977;7:501–516. doi: 10.1177/030631277700700414.

- Hood Christopher, Peters B Guy. The middle aging of new public management: Into the age of paradox? Journal of Public Administration Research and Theory. 2004;14:267–282. doi: 10.1093/jopart/muh019.

- Laudel Grit, Origgi Gloria. Introduction to a special issue on the assessment of interdisciplinary research. Research Evaluation. 2006;15:2–4. doi: 10.3152/147154406781776066.

- Leydesdorff Loet. Caveats for the use of citation indicators in research and journal evaluation. Journal of the American Society for Information Science and Technology. 2008;59:278–287. doi: 10.1002/asi.20743.

- Leydesdorff, Loet, and Martin Meyer. 2010. The decline of university patenting and the end of the Bayh–Dole effect. Scientometrics, in print. doi:10.1007/s11192-009-0001-6.

- Leydesdorff Loet, Wagner Caroline S. International collaboration in science and the formation of a core group. Journal of Informetrics. 2008;2:317–325. doi: 10.1016/j.joi.2008.07.003.

- Leydesdorff Loet, Wagner Caroline S. Is the United States losing ground in science? A global perspective on the world science system. Scientometrics. 2009;78:23–36. doi: 10.1007/s11192-008-1830-4.

- Martin, Ben R. 2010 (forthcoming). Inside the public scientific system: changing modes of knowledge production. In Innovation Policy: Theory and Practice, an International Handbook, eds. Stefan Kuhlmann, Philip Shapira, and Ruud Smits. London: Edward Elgar.

- Merton Robert K. The Matthew effect in science. Science. 1968;159:56–63. doi: 10.1126/science.159.3810.56.

- Münch Richard. Stratifikation durch Evaluation. Mechanismen der Konstruktion von Statushierarchien in der Forschung. Zeitschrift für Soziologie. 2008;37:60–80.

- Osterloh, Margit, and Bruno S. Frey. 2008. Creativity and Conformity: Alternatives to the Present Peer Review System. Paper presented at the workshop on Peer Review Reviewed. Berlin: WZB, 24-25 April 2008.

- Persson Olle, Melin Göran. Equalization, growth and integration of science. Scientometrics. 1996;37:153–157. doi: 10.1007/BF02093491.

- Plomp Reinier. The significance of the number of highly cited papers as an indicator of scientific prolificacy. Scientometrics. 1990;19:185–197. doi: 10.1007/BF02095346.

- Power Michael. The theory of the audit explosion. In: Ferlie Ewan, Lynn Laurence E Jr, Pollitt Christopher, editors. The Oxford handbook of public management. Oxford: Oxford University Press; 2005. pp. 326–344.

- Price Derek de Solla. A general theory of bibliometric and other cumulative advantage processes. Journal of the American Society for Information Science. 1976;27:292–306. doi: 10.1002/asi.4630270505.

- Rafols Ismael, Leydesdorff Loet. Content-based and algorithmic classifications of journals: Perspectives on the dynamics of scientific communication and indexer effects. Journal of the American Society for Information Science and Technology. 2009;60:1823–1853. doi: 10.1002/asi.21086.

- Ritzer, George. 1998. The McDonaldization Thesis. London: Sage.

- Rousseau, Ronald. 1992. Concentration and diversity measures in informetric research. Ph.D. Thesis, University of Antwerp, Antwerp.

- Rousseau Ronald. Concentration and evenness measures as macro-level scientometric indicators (in Chinese). In: Jiang G-h, editor. Research and university evaluation (Ke yan ping jia yu da xue ping jia). Beijing: Red Flag Publishing House; 2001. pp. 72–89.

- Sala-i-Martin Xavier. The world distribution of income: Falling poverty and… convergence, period. Quarterly Journal of Economics. 2006;121:351–397. doi: 10.1162/qjec.2006.121.2.351.

- Schimank Uwe. New public management and the academic profession: Reflections on the German situation. Minerva. 2005;43:361–376. doi: 10.1007/s11024-005-2472-9.

- Schmoch Ulrich, Schubert Torben. Sustainability of incentives for excellent research—the German case. Scientometrics. 2009;81:195–218. doi: 10.1007/s11192-009-2127-y.

- Smeeding Timothy M. Public policy, economic inequality, and poverty: The United States in comparative perspective. Social Science Quarterly. 2005;86:955–983. doi: 10.1111/j.0038-4941.2005.00331.x.

- Stiftel Bruce, Rukmana Deden, Alam Bhuiyan. Faculty quality at U.S. graduate planning schools. Journal of Planning Education and Research. 2004;24:6–22. doi: 10.1177/0739456X04267998.

- Van den Besselaar Peter, Leydesdorff Loet. Past performance, peer review, and project selection: A case study in the social and behavioral sciences. Research Evaluation. 2009;18:273–288. doi: 10.3152/095820209X475360.

- Van Parijs Philippe. European higher education under the spell of university rankings. Ethical Perspectives. 2009;16:189–206. doi: 10.2143/EP.16.2.2041651.

- Van Raan Anthony FJ. Fatal attraction: Conceptual and methodological problems in the ranking of universities by bibliometric methods. Scientometrics. 2005;62:133–143. doi: 10.1007/s11192-005-0008-6.

- Ville Simon, Valadkhani Abbas, O’Brien Martin. Distribution of research performance across Australian universities, 1992–2003, and its implications for building diversity. Australian Economic Papers. 2006;45:343–361. doi: 10.1111/j.1467-8454.2006.00298.x.

- Weingart Peter. Impact of bibliometrics upon the science system: Inadvertent consequences? Scientometrics. 2005;62:117–131. doi: 10.1007/s11192-005-0007-7.

- Weingart Peter, Maasen Sabine. Elite through rankings: The emergence of the enterprising university. In: Whitley Richard, Gläser Jochen, editors. The changing governance of the sciences: The advent of research evaluation systems. Dordrecht: Springer; 2007. pp. 75–99.

- Whitley Richard D. The intellectual and social organization of the sciences. Oxford: Oxford University Press; 1984.

- Zitt Michel, Barré Rémi, Sigogneau Anne, Laville Françoise. Territorial concentration and evolution of science and technology activities in the European Union: A descriptive analysis. Research Policy. 1999;28:545–562. doi: 10.1016/S0048-7333(99)00012-8.