Abstract

Despite much recent interest in the clinical neuroscience of music processing, the cognitive organization of music as a domain of non-verbal knowledge has been little studied. Here we addressed this issue systematically in two expert musicians with clinical diagnoses of semantic dementia and Alzheimer’s disease, in comparison with a control group of healthy expert musicians. In a series of neuropsychological experiments, we investigated associative knowledge of musical compositions (musical objects), musical emotions, musical instruments (musical sources) and music notation (musical symbols). These aspects of music knowledge were assessed in relation to musical perceptual abilities and extra-musical neuropsychological functions. The patient with semantic dementia showed relatively preserved recognition of musical compositions and musical symbols despite severely impaired recognition of musical emotions and musical instruments from sound. In contrast, the patient with Alzheimer’s disease showed impaired recognition of compositions, with somewhat better recognition of composer and musical era, and impaired comprehension of musical symbols, but normal recognition of musical emotions and musical instruments from sound. The findings suggest that music knowledge is fractionated, and superordinate musical knowledge is relatively more robust than knowledge of particular music. We propose that music constitutes a distinct domain of non-verbal knowledge but shares certain cognitive organizational features with other brain knowledge systems. Within the domain of music knowledge, dissociable cognitive mechanisms process knowledge derived from physical sources and the knowledge of abstract musical entities.

Keywords: music, semantic memory, dementia, semantic dementia, Alzheimer’s disease

Introduction

Understanding of the cognitive and neurological bases for music processing has advanced greatly in recent decades (Peretz and Coltheart, 2003; Peretz and Zatorre, 2005; Koelsch and Siebel, 2005; Stewart et al., 2006). However, while the perceptual and affective dimensions of music have received much attention, the cognitive organization of music knowledge has been less widely studied. Knowledge of music is multidimensional, involving abstract objects (compositions, notes), emotions as represented in music, physical sources (instruments), and symbols (musical notation). Each of these dimensions of music could be considered to convey ‘meaning’ beyond the purely perceptual features of the sounds or notations that compose them. The nature of meaning in music is a difficult problem and the subject of much philosophical and neuroscientific debate (Meyer, 1956; Huron, 2006; Patel, 2008). However, the terms ‘meaning’ and ‘knowledge’ are generally used by neuropsychologists to refer to learned facts and concepts about the world at large. Here we use ‘music knowledge’ in this neuropsychological sense to refer to the association of music with meaning based on learned attributes (such as recognizing a familiar tune or identifying the instrument on which it is played); i.e. associative knowledge of music. Musical emotions can also be considered in this framework, and warrant attention as the aspect of music that is most immediately meaningful for many listeners: while emotional responses themselves are not learned, the attributes and conventions that convey emotions in music are at least partly learned to the extent that they are products of a particular musical culture (Meyer, 1956).

The brain processes that mediate associative knowledge of music have a wider extra-musical significance. The organization of brain knowledge systems is an important neurobiological and clinical issue (Warrington, 1975; Wilson et al., 1995; Jefferies and Lambon Ralph, 2006; Hodges and Patterson, 2007). Neuropsychological accounts of brain knowledge systems have been heavily influenced by the study of patients with verbal deficits. However, the extent to which verbally derived models apply to the processing of complex non-verbal objects and concepts remains unresolved. Among the domains of non-verbal knowledge, music is comparable to language in complexity, in its extensive use of both sensory objects and abstract symbols, and in the richness of its semantic associations (Peretz and Coltheart, 2003; Peretz and Zatorre, 2005). While individual variation in musical experience and expertise is wide, music (like language) is universal in human societies. Despite the many formal similarities between music and language, the cognitive status of such similarities, the extent to which the cognitive processes that underpin them are dissociable, and the brain mechanisms responsible all remain controversial (Peretz and Zatorre, 2005; Fitch, 2006; Hebert and Cuddy, 2006; Patel, 2008; Steinbeis and Koelsch, 2008a, b). Within the domain of music, the cognitive framework is even less clearly defined: while it seems clear that music has a modular cognitive organization (Peretz and Coltheart, 2003; Peretz and Zatorre, 2005), the status of the putative modules and their neuropsychological relations are debated. Music knowledge has chiefly been studied in relation to other (e.g. perceptual) musical modules. There is solid evidence for the independence of emotion comprehension from other aspects of music (Peretz et al., 1998; Griffiths et al., 2004; Gosselin et al., 2005, 2006, 2007; Khalfa et al., 2005, 2008). However, components of music knowledge have only infrequently been studied systematically in relation to one another (e.g. Eustache et al., 1990; Ayotte et al., 2000; Schuppert et al., 2000; Platel et al., 2003; Schulkind, 2004; Koelsch, 2005; Hebert and Cuddy, 2006; Stewart et al., 2006; Patel, 2008). The investigation of music knowledge provides an opportunity to probe the detailed organization of a uniquely complex, model non-verbal knowledge system and to elucidate brain processes that mediate non-verbal knowledge.

The brain mechanisms that process meaning in music have been addressed in functional imaging and electrophysiological studies of healthy subjects (Halpern and Zatorre, 1999; Platel et al., 2003; Koelsch, 2005; Satoh et al., 2006; Steinbeis and Koelsch, 2008a, b) and clinical studies of individuals with focal brain damage (Eustache et al., 1990; Ayotte et al., 2000; Schuppert et al., 2000; Mendez, 2001; Stewart et al., 2006). However, there are few systematic studies of music processing in neurodegenerative disease (Crystal et al., 1989; Polk and Kertesz, 1993; Beversdorf and Heilman, 1998; Cowles et al., 2003; Warren et al., 2003; Fornazzari et al., 2006; Baird and Samson, 2009; Drapeau et al., 2009; Hailstone et al., 2009). Although the study of cognitively impaired patients is challenging, the study of music knowledge in dementia offers valuable neurobiological and clinical perspectives. Certain neuropsychological functions relevant to the processing of music are characteristically affected in dementia: examples include semantic memory in semantic dementia (Hodges and Patterson, 2007), and episodic memory and auditory pattern analysis in Alzheimer’s disease (Taler et al., 2008). The nature of the neuropsychological deficits in degenerative disorders offers a perspective on the breakdown of brain knowledge stores that is complementary to the study of acute focal lesions: whereas lesions such as stroke typically disrupt access to stored information, degenerative disorders such as semantic dementia affect knowledge stores proper (Jefferies and Lambon Ralph, 2006). Disorders in the frontotemporal degeneration spectrum (including semantic dementia) have characteristic deficits in the processing of emotion (Rosen et al., 2002; Werner et al., 2007), which may be especially pertinent to music. Anatomically, the common dementia diseases affect regions of the frontal, temporal and parietal lobes that are likely to be critical for music processing (Platel et al., 2003; Satoh et al., 2006; Stewart et al., 2006; Warren, 2008). Finally, improved understanding of music processing, and more specifically musical memory, would provide a rationale for music-based therapies that have been used empirically in dementia populations (Raglio et al., 2008). Consistent with evidence from cases of focal brain damage (Wilson et al., 1995), selectively preserved memory for music despite episodic memory impairment has been described in patients with dementia, including Alzheimer’s disease (Polk and Kertesz, 1993; Beatty et al., 1994; Cowles et al., 2003). Relatively preserved knowledge of musical compositions, despite widespread impairment in other semantic domains, has been described in semantic dementia (Hailstone et al., 2009). However, musical deficits have also been documented in dementia (Bartlett et al., 1995; Baird and Samson, 2009).

Here we addressed the cognitive organization of music knowledge systematically in two expert musicians with characteristic dementia syndromes of semantic dementia and Alzheimer’s disease, in comparison with a control group of healthy expert musicians. In a series of neuropsychological experiments, we investigated associative knowledge of different aspects of music in relation to musical perceptual abilities and extra-musical neuropsychological functions. We hypothesized that semantic dementia and Alzheimer’s disease would be associated with distinct patterns of music knowledge deficits, in line with the characteristic clinico-anatomical profiles of these diseases. Specifically, we hypothesized that semantic dementia would produce deficits of musical instrument and emotion knowledge, reflecting a core deficit in extracting meaning from objects in the world at large associated with anterior temporal lobe dysfunction; while Alzheimer’s disease would produce deficits of musical composition and notation knowledge, reflecting a core deficit in the comprehension of auditory and visual patterns associated with temporo-parietal dysfunction.

Materials and methods

Subject details

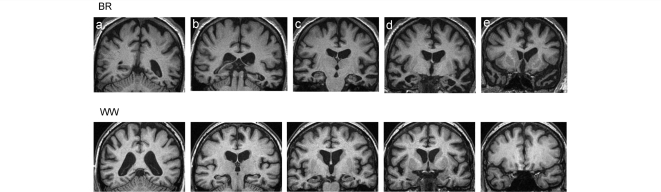

Patient B.R. is a 56-year-old right-handed male professional trumpet player and music teacher with 16 years of music training and a career performing in professional orchestras. He possessed absolute pitch. He presented with a 2-year history of progressive word-finding and naming difficulty, circumlocutory speech, and later, difficulty recognizing the faces and voices of friends. Three months prior to assessment he had relinquished his professional musical commitments, but he continued to play the trumpet for several hours a day and to perform at social events; he reportedly remained highly competent in both playing and sight-reading. He continued to derive pleasure from music with no change in musical preferences. On cognitive examination, Mini-Mental State Examination score was 20/30, Frontal Assessment Battery score was 13/18, and there was evidence of anomia and surface dyslexia. The general neurological examination revealed a positive pout reflex but was otherwise unremarkable. A clinical diagnosis of semantic dementia was made. Brain MRI (Fig. 1) showed selective, predominantly left-sided anterior and inferior temporal lobe atrophy typical of semantic dementia.

Figure 1.

Representative T1-weighted coronal magnetic resonance sections showing profiles of brain atrophy in B.R. (above) and W.W. (below). More posterior sections are toward the left and more anterior sections to the right; the left hemisphere is shown on the right for each section. Sections have been selected to demonstrate the following key structures: (a) parietal lobes; (b) posterior temporal lobes; (c) hippocampi; (d) anterior temporal lobes and amygdalae; (e) frontal lobes and temporal poles. The sections for B.R. show asymmetric (predominantly left sided), selective anterior and inferior temporal lobe atrophy, typical of semantic dementia. The sections for W.W. show generalized cerebral atrophy with disproportionate symmetrical hippocampal atrophy, typical of Alzheimer’s disease.

Patient W.W. is a 67-year-old right-handed retired music librarian and curator with a PhD in musicology and over 50 years’ experience playing first oboe with several orchestras; he was also a competent amateur pianist. He presented with a 3-year history of progressive forgetfulness and word-finding and route-finding difficulties. In the months leading up to the assessment, he had noted increasing difficulty reading music and following the conductor, and he resigned from the last of his three orchestral posts one month prior to testing; he also reported errors playing the oboe and the piano. He continued to derive pleasure from music with no change in musical preferences. On examination, Mini-Mental State Examination score was 24/30, and he exhibited anomia, impaired recall and ideomotor apraxia. A clinical diagnosis of Alzheimer’s disease was made. Brain MRI (Fig. 1) showed a typical pattern of generalized cortical atrophy with disproportionate bilateral hippocampal atrophy, and concurrent mild small-vessel ischaemic changes. He was not taking an acetylcholinesterase inhibitor at the time of the experimental assessment.

Control subjects

Six healthy professional musicians (age range 49–78 years, four males) with similar musical backgrounds to the patients participated as normal control subjects for the assessment of music cognition. Controls had between 11 and 22 years of formal musical training and all were currently performing with professional orchestras as instrumentalists or conductors. Between two and six controls completed each of the tests in the experimental battery.

Background assessment: general neuropsychology, audiometry and music perception

Music knowledge might potentially be influenced by cognitive skills in non-musical domains, and by perceptual encoding of musical information. Moreover, the relations between music knowledge and other neuropsychological functions are of considerable interest in their own right. Accordingly we assessed general neuropsychological functions, peripheral hearing and music perceptual abilities in both patients.

General neuropsychological assessment (Table 1) corroborated the clinical diagnosis in each case. B.R. had profound impairment of semantic memory for both verbal and non-verbal material and severe dyslexia particularly affecting irregular word reading (surface dyslexia), with preserved general intellect, patchy impairment on executive tests, and intact arithmetical and visuoperceptual abilities. W.W. had impairments of general intellectual function, episodic memory, naming and executive skills, with mild weakness of visuoperceptual skills and well preserved literacy, arithmetical and visuospatial skills.

Table 1.

General neuropsychological assessment of patients

| Test | B.R. score | B.R. percentile | W.W. score | W.W. percentile |
|---|---|---|---|---|
| General intellectual function | | | | |
| Raven’s Advanced Matrices (/12) | 11 | 95th | 1 | <5th |
| Memory | | | | |
| Camden Pictorial Memory Test (/30) | 30 | >50th | 30 | >50th |
| Recognition Memory Test-words (/50) | – | – | 28 | <5th |
| Recognition Memory Test-faces (/50) | – | – | 33 | <5th |
| Verbal Paired Associate Learning (/16) | – | – | 0 | <5th |
| Language | | | | |
| Word repetition (/30) | 30 | >5th<sup>a</sup> | 30 | >5th<sup>a</sup> |
| Picture naming (/20) | 1 | <5th<sup>a</sup> | 15 | <5th<sup>a</sup> |
| Word-picture matching (/30) | 7 | <5th<sup>a</sup> | 29 | 50–75th<sup>a</sup> |
| Synonyms test (concrete) (/25) | 13 | <5th<sup>b</sup> | 22 | 50–75th<sup>b</sup> |
| Irregular word reading (/30) | 16 | <5th<sup>a</sup> | 30 | >75th<sup>a</sup> |
| Executive function | | | | |
| Trail Making Test A | 62 s | <5th<sup>c</sup> | 86 s | <5th<sup>c</sup> |
| Trail Making Test B | 109 s | 10–25th<sup>c</sup> | out of time | <5th<sup>c</sup> |
| Number cancellation (number in 45 seconds) | 21 | 20–40th<sup>a</sup> | 13 | <5th<sup>a</sup> |
| Other skills | | | | |
| Famous faces: naming (/12) | 1 | <5th | 0 | <5th |
| Famous faces: recognition (/12) | 3<sup>d</sup> | <5th | 5 | <5th |
| Digit span (forwards, backwards) | – | – | 7, 5 | 25–50th<sup>c</sup> |
| Graded Difficulty Arithmetic Test: addition items (/12) | 6 | 25–50th<sup>e</sup> | 6 | 25–50th<sup>e</sup> |
| VOSP object decision (/20) | 16 | 20–40th | 15 | 10–20th |
| VOSP dot counting (/10) | – | – | 10 | 10–50th |
| VOSP dot counting (mean time taken) | – | – | 2.3 s | 25–50th |

Percentiles calculated from standardized tests, except where marked; – = not attempted; VOSP = Visual Object and Space Perception Battery. Background information about the tests is provided in Supplementary Table S3.

a: Calculated from previous healthy control sample (n = 41–72).

b: Test administered with both visual and auditory presentation of words whereas the standardized percentiles are calculated for auditory presentation only.

c: Approximated from standardized scores.

d: Scored <5th percentile on a recognition test of famous buildings, 50th percentile on Benton test of face perception.

e: Calculated from previous healthy control sample (n = 100–143).

Neither patient gave a clinical history of altered hearing; however, audiometric assessment in B.R. revealed mild bilateral high-frequency hearing loss and abnormal otoacoustic emissions, probably secondary to longstanding noise damage. W.W. had pure tone audiometry and otoacoustic emissions within normal limits for his age.

Musical perceptual abilities were assessed in the patients and musician controls using the Montreal Battery for Evaluation of Amusia, a widely used and normed test of music perception in musically untrained subjects (Peretz et al., 2003), based on a two-alternative (same/different) forced-choice comparison of two short unfamiliar musical sequences. The scale (key), pitch contour (melody), pitch interval and rhythm discrimination subtests of the Montreal Battery for Evaluation of Amusia were administered. In addition, subjects were administered a novel timbre discrimination test (previously described in Garrido et al., 2009) in which the subject was presented with two different, brief melodic excerpts each played by a single instrument; the task was to decide whether the excerpts were played by the same instrument or by different instruments. Excerpts were selected such that the timbre of the instrument was strongly established whereas recognition of the source piece was unlikely; within each pair the two excerpts differed in pitch range, to reduce the use of non-timbral cues. This test comprised 20 trials (10 same, 10 different pairs), including 16 common instrument timbres (Supplementary Table S1; examples of stimuli available from the authors).

Results are summarized in Table 2. On the Montreal Battery for Evaluation of Amusia subtests, B.R. exhibited deficits of contour and interval discrimination and W.W. exhibited a deficit of interval discrimination relative to healthy musicians; on the interval subtest, W.W. (but not B.R.) had a perceptual deficit (P < 0.05) relative to published norms for healthy non-musician controls. On the timbre discrimination task, B.R. exhibited a moderate deficit and W.W. a mild deficit relative to healthy musicians.

Table 2.

Assessment of music cognition

| Experiment | Musical domain | Test | B.R. | W.W. | Controls: mean (SD) | Controls: n |
|---|---|---|---|---|---|---|
| Background | Music perception | MBEA Scale (/30; 15) | 28 | 28 | 28.8 (0.4) | 4 |
| | | MBEA Contour (/30; 15) | 25 | 26 | 29 (1.1) | 4 |
| | | MBEA Interval (/30; 15) | 23 | 18<sup>a</sup> | 27.8 (1.8) | 4 |
| | | MBEA Rhythm (/30; 15) | 30 | 29 | 29.5 (0.5) | 4 |
| | | Timbre discrimination test (/20; 10) | 16 | 18 | 20 (0) | 4 |
| Exp 1 | Knowledge of musical objects: composition-specific | Famous melody matching (/20; 10) | 17 | 14 | 19.2 (0.7) | 6 |
| | | Famous melody naming (/21) | 0 | 5 | 16.5 (2.1) | 4 |
| | | Pieces played from memory from music cueing (/15) | 13 | NA | NA | |
| | | Pieces played from memory from name only (/15) | 2 | NA | NA | |
| | | Vocal–non-vocal test (/40; 20) | NA | 30 | 37 (1.9) | 4 |
| Exp 2a | Knowledge of musical objects: categorical knowledge of compositions | Solo test: era (/20; 7) | NA | 17 | 18.8 (1.1) | 6 |
| | | Solo test: composer (/20; 7) | NA | 13 | 17.2 (1.3) | 6 |
| | | Solo test: instrument (/20; 7) | NA | 10 | 18.3 (1.5) | 6 |
| | | Semantic relatedness: same composer foils (/28; 7) | NA | 14 | 25.7 (0.9) | 3 |
| | | Semantic relatedness: different composer foils (/28; 7) | NA | 21 | 26.7 (1.3) | 3 |
| Exp 2b | Knowledge of musical objects: notes (absolute pitch) | Pitch naming (/20) | 11 | NA | 1.5 (1.1) | 4 |
| | | Number of pitches identified within 1 semitone (/20) | 17 | NA | 2.5 (1.7) | 4 |
| | | | | | 50–100%<sup>b</sup> | |
| Exp 3 | Knowledge of musical emotions | Emotion recognition in music (/40; 10) | 17 | 34 | 33.3 (4.1) | 3 |
| Exp 4 | Knowledge of musical sources: instruments | Instrument sound naming (/20) | 2 | 17 | 19.8 (0.4) | 5 |
| | | Instrument sound recognition (/20) | 8 | 19 | 19.8 (0.4) | 5 |
| | | Instrument picture naming (/20) | 4 | 18 | 20 (0) | 5 |
| | | Instrument picture recognition (/20) | 19 | 20 | 20 (0) | 5 |
| | | Instrument sound-picture matching (/20; 5) | 18 | NA | 20 (0) | 5 |
| Exp 5 | Knowledge of musical symbols | Musical symbol naming (/10) | 6 | 6 | 10 (0) | 4 |
| | | Musical symbol identification (/10) | 10 | 8 | 10 (0) | 4 |
| | | Music theory: keys and pitches identification (/18) | NA | 15 | 17 (1.4) | 2 |
| | | Music theory: ‘musical synonyms’ (/20; 10) | 20 | 15 | 19.3 (1.3) | 4 |

For each test (total score; chance score) is indicated in parentheses. Scores significantly different from control mean (P < 0.05; modified t test, Crawford and Howell, 1998) in bold. See text for details of experimental tests. NA = not attempted; MBEA = Montreal Battery for Evaluation of Amusia.

a: Also abnormal in relation to published norms for healthy non-musicians.

b: Published data for absolute pitch possessors (Levitin and Rogers, 2005).

Experimental assessment of music knowledge: experimental plan and general procedure

We designed a series of experiments to probe various aspects of knowledge of music. Arguably the purest objects of musical knowledge are the compositions (and their more basic musical constituents) that together constitute the corpus of musical pieces in a musical culture (assessed in Experiment 1). Musical pieces can be considered at different levels of analysis (general categories such as genre or era; knowledge of particular pieces): we designed tests (Experiment 2) to assess these different levels, and to determine whether neuropsychological constructs such as superordinate and item-specific knowledge can be applied validly to music. Comprehension of emotion is an essential dimension of musical understanding for most listeners and (based on prior neuropsychological evidence) is likely to warrant assessment in its own right (Experiment 3). Music is typically conveyed from an external source: we aimed to assess the extent to which knowledge of these sources (instruments) might be independent of other aspects of music knowledge (Experiment 4). Finally, music can be coded using a specialized notational system: tests were designed (Experiment 5) to assess music reading in relation both to other dimensions of music knowledge, and the more widespread notational system of text.

Conventionally, knowledge of music is assessed by having the subject label the musical stimulus in some way (e.g. by naming a familiar melody). Here, we aimed to assess associative knowledge of music using procedures tailored to our patients’ particular cognitive and musical abilities (e.g. B.R.’s premorbid possession of absolute pitch and retained performance skills, W.W.’s retained verbal capacity). Musical excerpts used are presented in Supplementary Table S1. In selecting excerpts, we aimed to include pieces with which a person steeped in Western classical music should be at least moderately familiar after a lifetime’s listening. Examples of the stimuli are available from the authors.

The experimental tests assessing dimensions of music knowledge were administered to subjects over several sessions. Auditory stimuli were presented from digital wavefiles on a notebook computer in free field at a comfortable listening level in a quiet room. Visual stimuli were presented and subject responses were collected for off-line analysis in Cogent 2000 (http://www.vislab.ucl.ac.uk/Cogent2000) running under MATLAB 7.0® (http://www.mathworks.com). Where the test required matching between an auditory stimulus and a verbal label, the words corresponding to the verbal choices were simultaneously displayed on the computer monitor and read out to the subject on each trial. Before the start of each test, several practice trials were administered to ensure that the subject understood the task. No feedback was given about performance during the test. No time limit was imposed.

For each experiment, patient performance was compared to healthy musician controls using the modified t-test procedure described by Crawford and Howell (1998) for comparing individual test scores against norms derived from small samples, with a statistical threshold of P < 0.05. Patient and control results for the experimental battery are summarized in Table 2.
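The comparison of a single patient score with a small control sample can be illustrated with a minimal sketch of the modified t-test, in which the control standard deviation is scaled by sqrt((n + 1)/n) and the statistic is referred to a t distribution with n − 1 degrees of freedom. The function names, the two-tailed P value and the numerical integration of the t density are assumptions made for illustration only; the source does not state whether one- or two-tailed values were used, and the inputs below are the rounded summary statistics from Table 2 rather than raw data.

```python
import math

def crawford_howell_t(case_score, control_mean, control_sd, n_controls):
    """Modified t statistic for one case versus a small control sample."""
    if control_sd == 0:
        raise ValueError("control SD is zero; the statistic is undefined")
    se = control_sd * math.sqrt((n_controls + 1) / n_controls)
    return (case_score - control_mean) / se, n_controls - 1

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def two_tailed_p(t, df, steps=2000):
    """Two-tailed P value (assumed here) via Simpson's rule over [0, |t|]."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps
    total = t_pdf(0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1 - 2 * area)

# Famous melody matching (Table 2): controls 19.2 (0.7), n = 6
for label, score in (("B.R.", 17), ("W.W.", 14)):
    t, df = crawford_howell_t(score, 19.2, 0.7, 6)
    print(label, round(t, 2), df, two_tailed_p(t, df) < 0.05)
```

The statistic cannot be computed where the control standard deviation is zero (as for several tests on which all controls scored at ceiling), so the sketch raises an error in that case rather than guessing how such comparisons were handled.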

Experiment 1

Knowledge of musical objects: composition specific

Musical compositions can be considered as ‘musical objects’ about which associative knowledge can be acquired. However, defining a musical object is problematic. Musical works can be altered substantially (e.g. transcribed for other instruments, transposed in key), yet still remain readily identifiable. Musically untrained listeners can recognize well-known melodies such as Happy Birthday after only a few notes (Dalla Bella et al., 2003; Schulkind et al., 2004). In the first experiment, we used various procedures to assess knowledge of particular musical objects (compositions) by B.R. and W.W.

Patients and healthy musician controls first performed a melody matching task. Twenty-one famous tunes derived from the Western classical canon, folk and pop music (Supplementary Table S1) were recorded on a piano by one of the authors (R.O.) using a single melody line, in the same key (G major); 19/20 melodies were in a different key to the original key of the composition. Tunes were selected such that two readily recognizable but distinct melodic fragments could be extracted for each tune (e.g. God Save the Queen). These fragments were arranged in pairs such that a given pair contained fragments from the same or different tunes: the task was to decide whether the two fragments belonged to the same tune or to different tunes. Melodic fragments from the ‘same’ tune could not be matched simply by matching pitch at the end of the first clip to the beginning of the second clip, while ‘different’ tune excerpts were matched for musical style and tempo. This test comprised 20 trials (10 same, 10 different pairs), presented in randomized order. Subjects were subsequently presented with the same excerpts and asked to name each tune.
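One way to assemble a balanced, randomized list of same/different trials of this kind is sketched below. The allocation rule, the function name `build_trials`, the fragment labels 'A' and 'B', the placeholder tune identifiers and the fixed seed are all hypothetical and are not taken from the study; the matching of ‘different’ pairs for style and tempo is not modelled.

```python
import random

def build_trials(tunes, n_same=10, n_different=10, seed=0):
    """Return a shuffled list of (fragment_1, fragment_2, label) trials.

    Each tune is assumed to supply two fragments, labelled 'A' and 'B'.
    """
    if len(tunes) < n_same + 2:
        raise ValueError("not enough tunes to form the 'different' pairs")
    rng = random.Random(seed)
    pool = list(tunes)
    rng.shuffle(pool)
    same_tunes, diff_tunes = pool[:n_same], pool[n_same:]

    # 'Same' trials: both fragments come from one tune.
    trials = [((t, "A"), (t, "B"), "same") for t in same_tunes]

    # 'Different' trials: fragment A of one tune with fragment B of another.
    for i in range(n_different):
        first = diff_tunes[i % len(diff_tunes)]
        second = diff_tunes[(i + 1) % len(diff_tunes)]
        trials.append(((first, "A"), (second, "B"), "different"))

    rng.shuffle(trials)  # randomized presentation order
    return trials

trials = build_trials([f"tune_{i:02d}" for i in range(21)])
for first, second, label in trials[:3]:
    print(first, second, label)
```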

An additional procedure was used with B.R. capitalizing on his retained performance skills. Fifteen pieces of music in B.R.’s trumpet repertoire (Supplementary Table S1) were nominated by his wife. In the first part of the test, B.R. was presented with a musical introduction to each piece, and in the second part of the test B.R. was presented with the names of the same pieces: the task on each trial was to play (or sing) the piece from memory based on the introduction (Part 1) or the name (Part 2). B.R.’s performances were recorded and played back to a blinded assessor (J.E.W.); pieces that were identifiable to the assessor were counted as successfully played.

An alternative additional test was administered to W.W. and the healthy controls. In this ‘vocal–non-vocal’ test, 40 introductory melodic excerpts of orchestral music (popular operas, oratorios, ballets and symphonies) were presented in randomized order (Supplementary Table S1): half the source compositions from which the excerpts were drawn contained human voices, while the remaining half were entirely orchestral; however, no voices were present in the excerpts presented. On hearing each excerpt, W.W. was asked to decide whether the source composition contained a voice.

Results

On the famous melody matching task, both B.R. and W.W. showed deficits relative to healthy musicians (Table 2). There was a suggestion of a dissociation between matching and naming performance: B.R. scored 17/20 on the matching task but was unable to name any tunes, whereas W.W. scored 14/20 on the matching task but was able to name five tunes.

B.R. was able to play only 2/15 pieces from name but played or sang 13/15 pieces from a musical introduction (Table 2), indicating that he was able to access knowledge of particular musical compositions successfully from musical but not from verbal cues. In contrast, on the ‘vocal–non-vocal’ test W.W.’s score of 30/40 (significantly worse than healthy musicians: Table 2) provided further evidence for impaired item-specific knowledge of musical compositions.

Comment

Taken together, these findings suggest that knowledge of particular musical objects (compositions) is at least partly dissociable from the ability to label music verbally. Retained item-specific knowledge of music can be demonstrated even in the face of profound verbal impairment (as in B.R.), and deficits of musical item-specific knowledge can be demonstrated even where (as in W.W.’s performance both on the within-modality melody matching and vocal–non-vocal tasks) there is no requirement for explicit verbal identification. These findings support previous evidence for a defect of familiar tune recognition in Alzheimer’s disease (Baird and Samson, 2009) and relatively preserved knowledge of familiar tunes in semantic dementia (Hailstone et al., 2009).

An important issue in the psychology of music concerns the role of different memory systems (episodic, procedural and semantic). The use of tasks based on stimuli that were altered from their canonical form (piano versions transposed to a key different from the original) or presented as fragments requiring familiarity with a larger whole (the musical introductions played to B.R., the melodic excerpts played to W.W.) is likely to have reduced dependence on musical episodic memory here. While the melody matching and vocal–non-vocal tasks may have involved musical imagery, this is likely to be mediated by brain networks that are at least partially distinct from those mediating episodic memory (Halpern and Zatorre, 1999; Schurmann et al., 2002; Platel et al., 2003). Intact musical procedural memory alone would not predict B.R.’s successful performance of pieces cued from an initial fragment or in a form other than the trumpet arrangement in which he had learned them. In order to access the motor programme required to execute a piece, it was first necessary for B.R. to match the musical introduction with stored information about the composition as a whole. We argue that this matching process accesses musical semantic memory: stored knowledge about the musical characteristics of the piece. We do not, however, wish to over-emphasize this interpretation: it seems likely that any task relying on musical performance skills must engage procedural memory to a degree; nor can we exclude the possibility that episodic memory contributes to successful melody recognition, noting the typical prominent deficits of episodic memory (Table 1) and bilateral hippocampal damage (Fig. 1) in W.W.

Experiment 2

Knowledge of musical objects

Knowledge about musical works can be acquired at different levels of analysis. Non-musicians are able to categorize musical pieces according to genre (jazz, folk, classical etc.) and other associative attributes (e.g. Christmas music, nursery songs) (Halpern, 1984). The categorizations available to trained musicians are more elaborate and may range from single notes or pitch intervals to generic stylistic features linked to knowledge of composers or musical eras. Whereas a particular composition can be assigned to a musical era based on a number of rather broad timbral and melodic characteristics, the association with a particular composer (compositional style) is more specific, but does not rely on knowledge of the particular composition. By analogy with other kinds of sensory objects, these different levels of musical knowledge might equate to superordinate knowledge about compositions versus fine-grained knowledge specific to particular compositions. However, it has not been established whether distinctions between levels of musical knowledge or musical categories are reflected in the brain organization of knowledge about music.

Experiment 2a: categorical knowledge of compositions

For W.W., we designed a test that required matching of a musical excerpt to written words describing different levels of associative knowledge about the source composition. Twenty introductory excerpts of orchestral music (Supplementary Table S1) were selected based on the following criteria: the source composition was written for a prominent solo instrument, but this solo instrument was not present in the excerpt presented; and each excerpt was strongly associated melodically with the source composition. Analogously with the vocal–non-vocal test in Experiment 1, we reasoned that determination of an (unheard) solo instrument would depend on specific knowledge of the source composition. Excerpts covering Baroque, Classical, Romantic and 20th Century eras were presented in randomized order. The task was to match each excerpt with its era, its composer and the solo instrument for which the source composition had been written. On each trial, era, composer and solo instrument choices were presented sequentially as randomized three-item written word arrays; within the composer arrays, choices were selected such that all derived from a single musical era; i.e. era could not be used as a cue to composer identification (for example, on hearing the introduction to Grieg’s Piano Concerto, the subject was presented with the arrays: ‘Baroque–Romantic–20th Century’; followed by: ‘Bruch–Grieg–Schumann’; followed by: ‘piano–cello–viola’; further examples are summarized in Supplementary Table S2).

As an extension to this ‘solo test’, we also administered to W.W. a test to probe for the existence of knowledge category effects in music. If musical knowledge is organized into neuropsychological semantic categories, one might expect more frequent misidentifications of musical items within a category than for items in different categories. The semantic category probed here was ‘composer’. On being presented with an orchestral musical excerpt (Tables S1 and S2), the subject was asked to make a four-alternative forced-choice decision regarding the name of the piece. Twenty-eight excerpts were presented in randomized order; piece names were presented as four-item written arrays. The test was presented in two separate condition blocks, according to the semantic relatedness of the name arrays: in the first, within-category (closely semantically related) condition, foils were names of pieces composed by the same composer; in the second, between-category (less closely semantically related) condition, foils were pieces composed by different composers from the same era.

Results

On the solo test, W.W. performed best for recognition of era, followed by composer, followed by solo instrument (Table 2). W.W.’s ability to recognize musical era was comparable to healthy musicians; in contrast, he performed significantly worse than controls on other components of this test. In the semantic relatedness test, W.W. scored 14/28 when target piece names were presented with foils by the same composer. His performance improved to 21/28 when the foils were titles of pieces by different composers. W.W.’s performance on this test was above chance but inferior to healthy musicians.
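
As an illustrative check (not part of the original analysis), the statement that these scores exceed chance can be examined with an exact binomial tail probability. The sketch below assumes that the chance rate on a four-alternative forced-choice trial is 0.25 and that the 28 trials are independent; the function name `binomial_upper_tail` is hypothetical.

```python
from math import comb


def binomial_upper_tail(k: int, n: int, p: float) -> float:
    """Return P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


N_TRIALS = 28   # excerpts per condition, as stated in the text
CHANCE = 0.25   # assumed guessing rate for four response alternatives

# Scores reported for W.W. in the two conditions.
p_within = binomial_upper_tail(14, N_TRIALS, CHANCE)   # same-composer foils
p_between = binomial_upper_tail(21, N_TRIALS, CHANCE)  # different-composer foils

print(f"expected score under guessing: {N_TRIALS * CHANCE:.0f}/28")
print(f"P(score >= 14 | guessing) = {p_within:.5f}")
print(f"P(score >= 21 | guessing) = {p_between:.2e}")
```

Under these assumptions both tail probabilities fall well below 0.05, which is in keeping with the description of both scores as above chance.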

Comment

The pattern of W.W.’s results on the solo test suggests that superordinate knowledge (musical era) is more robust to the effects of brain damage than item-specific knowledge about particular compositions. In addition, W.W. was more likely to confuse compositions by the same composer with each other than with pieces by other composers with similar style. Together these findings support the existence of categories of musical object knowledge: we suggest that ‘composer’ (compositional style) is one category for organizing knowledge about musical objects (musical compositions).

Experiment 2b: Absolute pitch

This test administered to B.R. capitalized on his reported status as an absolute pitch possessor. Absolute pitch is the capacity, rare amongst musicians, to identify or reproduce musical pitch values without an external reference; it can be considered associative music knowledge at the level of individual notes or pitch values (typically, association of pitch values with verbal labels) (Levitin and Rogers, 2005). While absolute pitch is a highly specialized skill, it indexes a fundamental aspect of musical object knowledge, since individual notes are the ultimate building blocks of music: in non-possessors (the majority of listeners), knowledge of pitch values resides in the relations between pitches (i.e. pitch intervals) rather than individual notes themselves. The experimental task here required identification of 20 musical pitches presented in isolation. Pitches were presented at random drawn from a two octave range A3 to G4 (220–392 Hz) using an electronic piano timbre (synthesized in Sibelius v4®, 2005; http://sibelius.com).

Results

B.R. identified 55% of pitches correctly (Table 2). Absolute pitch possessors typically score 50–100% on such tests (Levitin and Rogers, 2005); moreover, possessors are often correct to within one semitone of the target pitch, and B.R. identified 85% of pitches to within one semitone. His performance was clearly superior to that of healthy musician non-possessors (mean pitch naming score 1.5/20, 12.5% within one semitone of target).
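
The two scoring criteria used here (exactly correct versus correct to within one semitone) can be sketched as follows. This is a minimal illustration only: it assumes that responses are scored as pitch-class names with octave ignored and that the within-one-semitone count includes exact responses. The trial data and function names are hypothetical and are not B.R.'s responses.

```python
# Pitch classes numbered in semitones above C (sharps only, for simplicity).
NOTE_TO_PC = {
    "C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
    "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11,
}


def semitone_error(target: str, response: str) -> int:
    """Smallest distance in semitones between two pitch classes (0 to 6)."""
    diff = abs(NOTE_TO_PC[target] - NOTE_TO_PC[response]) % 12
    return min(diff, 12 - diff)


def score_pitch_naming(trials):
    """Return (number exactly correct, number within one semitone)."""
    errors = [semitone_error(target, response) for target, response in trials]
    exact = sum(e == 0 for e in errors)
    within_one = sum(e <= 1 for e in errors)
    return exact, within_one


# Hypothetical (target, response) pairs, for illustration only.
example_trials = [("A", "A"), ("C", "C#"), ("G", "E"), ("B", "C")]
exact, within_one = score_pitch_naming(example_trials)
print(exact, within_one)
```

Measuring the error on the circle of pitch classes means that a response of C to a target of B counts as a one-semitone error rather than an eleven-semitone error.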

Comment

These results indicate that B.R. retained absolute pitch ability. There is a spectrum of abilities amongst possessors and it is of course possible that B.R.’s absolute pitch ability had declined from premorbid level. However, the findings support previous evidence that absolute pitch may be preserved after extensive left anterior temporal lobe damage (Zatorre, 1989), and suggest that musical pitch may constitute a privileged route to naming in semantic dementia. These results contrast with B.R.’s performance on the famous melody matching test of composition-level knowledge (Experiment 1), in which his ability to identify particular compositions (though superior to his ability to name them) was inferior to healthy musicians.

Interpreted together, these findings in W.W. and B.R. suggest that superordinate knowledge about musical objects (compositions) is dissociable from specific knowledge of those objects. Superordinate generic knowledge about musical style (era and composer) and knowledge about the building blocks of musical objects (individual notes) may be more robust than musical object (composition)-specific knowledge.

Experiment 3

Knowledge of musical emotions

The relations between emotion recognition in music and other aspects of music cognition have not been fully defined. Dissociations between emotion processing and other musical perceptual and associative functions are well-documented (Peretz et al., 1998; Griffiths et al., 2004; Peretz and Zatorre, 2005). Furthermore, music emotion judgements have been found to be relatively resistant to brain damage (Peretz et al., 1998). Recognition of emotion in music is likely to be influenced by the internalization of ‘rules’ or conventions for conveying particular emotions in the listener’s particular musical culture (Juslin and Vastfjall, 2008) as well as by transcultural factors (Fritz et al., 2009).

Here we designed a novel battery to assess recognition of four canonical emotions (happiness, sadness, anger, fear) (Ekman and Friesen, 1976) as represented in music. Stimuli were excerpts drawn from the Western classical canon and film scores (Supplementary Table S1). Forty trials were presented, comprising 10 musical excerpts representing each of the four target emotions in randomized order. On each trial, the subject was asked to choose which one of the four target emotions was best represented by the stimulus. In order to rule out any confound from the use of verbal labels in this test, B.R.’s ability to identify emotions from facial expressions was also assessed using an identical procedure with corresponding stimuli (Ekman and Friesen, 1976).

Results

Recognition of musical emotions by W.W. was comparable to healthy musicians (score 34/40; control mean 33.3: Table 2). B.R.’s recognition of musical emotions was very impaired (score 17/40): this was not attributable to the verbal response procedure, since his recognition of facial emotions was significantly better [score 30/40; χ²(1) = 7.42, P < 0.01]. B.R. had relatively greater difficulty recognizing negative than positive musical emotions (individual scores: anger, 1/10; fear, 4/10; happiness, 7/10; sadness, 5/10). W.W.’s scores on the same stimuli (anger, 9/10; fear, 8/10; happiness, 8/10; sadness, 9/10) indicate that B.R.’s performance profile was not attributable simply to stimulus factors.
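
The comparison between B.R.'s musical and facial emotion scores can be approximately reproduced from the two reported totals. The sketch below rests on an assumption, since the text does not state which variant of the test was used: it applies Pearson's chi-square with Yates' continuity correction to the 2 × 2 table of correct and incorrect responses (music 17/40, faces 30/40), treating the two sets of trials as independent. The function name is hypothetical.

```python
def chi_square_2x2(a: int, b: int, c: int, d: int, yates: bool = True) -> float:
    """Chi-square statistic (1 d.f.) for the 2 x 2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    deviation = abs(a * d - b * c)
    if yates:
        # Continuity correction: shrink the deviation by n/2, not below zero.
        deviation = max(0.0, deviation - n / 2)
    return n * deviation**2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Rows: modality (music, faces); columns: (correct, incorrect).
music_correct, music_wrong = 17, 40 - 17
faces_correct, faces_wrong = 30, 40 - 30

corrected = chi_square_2x2(music_correct, music_wrong, faces_correct, faces_wrong)
uncorrected = chi_square_2x2(
    music_correct, music_wrong, faces_correct, faces_wrong, yates=False
)

CRITICAL_P01_DF1 = 6.635  # chi-square critical value for P = 0.01, 1 d.f.
print(f"with Yates correction: {corrected:.2f}")
print(f"without correction:    {uncorrected:.2f}")
print(f"exceeds P < 0.01 threshold: {corrected > CRITICAL_P01_DF1}")
```

Under these assumptions the corrected statistic is approximately 7.43, close to the reported 7.42, whereas the uncorrected statistic is approximately 8.72; both exceed the critical value for P < 0.01.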

Comment

Together with the results of Experiments 1 and 2, these findings indicate a partial dissociation of emotion recognition from other aspects of musical object knowledge, consistent with findings in previous case studies of patients with focal brain damage (Peretz et al., 1998; Griffiths et al., 2004; Peretz and Zatorre, 2005). The present findings suggest that focal degenerative pathologies (like semantic dementia) may degrade emotion recognition in music. This corroborates previous evidence for multimodal emotion recognition deficits in semantic dementia (Rosen et al., 2002; Werner et al., 2007). B.R. exhibited a more severe deficit for recognition of negative compared with positive musical emotions: this would also be consistent with previous data in other affective modalities, but requires care in interpretation since ‘happiness’ in music (like other modalities) requires less fine-grained differentiation than do individual negative emotions.

Experiment 4

Knowledge of musical sources: instruments

If musical compositions are the objects around which knowledge of music is built, to convey music in general requires an acoustic source. These sources, musical instruments, constitute a specialized category of semantic knowledge (Dixon et al., 2000; Mahon and Caramazza, 2009). The distinction we draw here between musical compositions as ‘objects’ and instruments as ‘sources’ is largely pragmatic, since instrument timbres are ‘auditory objects’ in a broader sense (Griffiths and Warren, 2004). However, much previous work has addressed the recognition of musical instruments from their pictures (i.e. instruments as visual artefacts) whereas it could be argued that the essential character of a musical instrument is auditory. In this experiment we assessed identification, naming and cross-modal matching of musical instruments in the auditory and visual modalities. Audio wavefiles of 20 musical instruments (Supplementary Table S1) were presented sequentially in randomized order and pictures of the same instruments were presented in an alternative randomized order. Subjects were asked to name or otherwise identify the instrument. Apart from naming, instrument recognition could be demonstrated by providing a piece of information about the instrument (e.g. ‘not a clarinet, it begins with “s” ’ to indicate recognition of a saxophone) or by miming how the instrument would be played; as it is difficult to indicate specific identification of some instruments without naming, recognition was also credited if the instrument family (e.g. percussion, woodwind) was identified correctly. A recognition deficit in either modality was further probed using a cross-modal procedure in which the same set of instrument sounds was presented in randomized order together with arrays of four written instrument names and pictures, and the task was to match each instrument sound with the correct name-picture combination.

Results

B.R. was able to name only 2/20 instruments from sound and 4/20 instruments from pictures (Table 2); he was able to provide identifying information for only 8/20 instruments from sound but 19/20 instruments from pictures. On the cross-modal instrument sound to picture four-alternative-forced-choice matching task, his score improved to 18/20, which was still inferior to the flawless performance of healthy musicians on this task. W.W. made errors on naming instruments both from sounds and pictures; however his ability to identify instruments in each modality did not differ significantly from healthy musicians (Table 2).

Comment

Together with the results of Experiments 1 and 2, these findings support a double dissociation between knowledge of musical objects (compositions) and knowledge of musical sources (instruments): W.W. demonstrated normal auditory instrument recognition but impaired composition-specific knowledge, while B.R. showed very impaired auditory instrument recognition despite relatively preserved composition-specific knowledge. Furthermore, within the category of musical instruments, B.R.’s markedly impaired identification of instruments from sound contrasted with his largely intact ability to recognize instruments visually. His auditory identification performance improved (though not to normal level) if cross-modal visual information was available. B.R.’s performance contrasted with that of W.W. who (despite impaired naming ability) was able to identify instruments normally in both the auditory and visual modalities. While it is tempting to ascribe the pattern of deficits exhibited by B.R. to an auditory associative agnosia affecting instruments, the findings on the musical perceptual tasks (Experiment 1, Table 2) suggest a need for some caution. B.R. did have evidence of a perceptual deficit affecting, in particular, timbre discrimination; it is therefore possible that the effects of degraded timbral representations interacted with auditory-based recognition of particular instruments. This interpretation would be consistent with previous neuropsychological evidence implicating ‘basic object level’ processing in the recognition of musical instruments (Palmer et al., 1989; Kohlmetz et al., 2003). On the other hand, a purely perceptual deficit would not easily account for B.R.’s improved performance on the auditory-visual matching task. We propose that B.R. retained sufficient general categorical information about instrument sounds to enable identification to be achieved once more specific visual information was available (Palmer et al., 1989).

Experiment 5

Knowledge of musical symbols

Like many languages, music has a complex system of symbolic written notation with agreed ‘rules’ for how these symbols should be understood and translated into musical output. Here we were interested to probe different levels of musical symbol comprehension.

In the first part of the experiment, 10 common musical symbols were presented sequentially and the task was to identify each symbol. If the subject was unable to name but was able to indicate unambiguously that they recognized the symbol (e.g. describing a crotchet as ‘like a minim but just one not two’), this was recorded. A supplementary test probing knowledge of key signatures and the notation of the clef was administered to W.W. and healthy musicians. On 18 sequential trials, minim notes were presented on a stave with a key signature; a range of keys (half major, half minor) and accidentals either in the treble or the bass clef. On the initial eight trials, the task was to identify the key signature. On the following 10 trials the task was to identify the pitch of the note; in order to identify the pitch correctly, it was necessary both to interpret the key signature and to understand the notation of the clef.

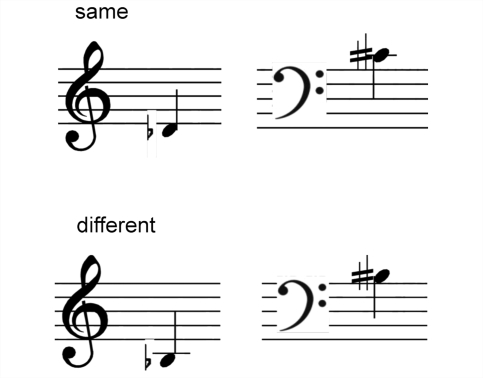

The second part of the experiment was designed as a musical analogue of the ‘Synonyms Test’ on word pairs, which has been widely used to assess single word comprehension (Warrington et al., 1998). While there is no precise equivalent to a ‘synonym’ in musical notation, there are often alternative ways of writing the same musical instruction which differ substantially in surface structure: in order to determine whether two musical notations are equivalent, understanding of the musical meaning of each instruction is required. Twenty pairs of musical notes or rests were presented sequentially in randomized order. The two items in each pair were always notated differently; however, 10 pairs represented the same note (or rest duration) if played (‘musical synonyms’), while the remaining 10 pairs represented notes with different pitch or duration (or rests of different duration) if played (examples shown in Fig. 2). On each trial the subject was asked to determine whether the two notes or rests were equivalent.

Figure 2.

Examples of trials from the ‘Musical Synonyms’ test. In each trial the two items in each pair were notated differently and the task was to determine whether the two notes represented were equivalent (i.e. the same if played): in the examples shown, the notations above signify the ‘same’ note (D-flat – C-sharp) when played (i.e. this pair are ‘musical synonyms’); the notations below signify ‘different’ notes (B-flat and B-sharp) when played.
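
The equivalence judgement illustrated in these two examples can be expressed computationally. The sketch below is a simplification under stated assumptions: it compares pitch class only (octave and duration are ignored), assumes twelve-tone equal temperament, and uses hypothetical function names and a plain-text notation in which `#` denotes a sharp and `b` a flat.

```python
# Semitones above C for the seven natural note letters.
NATURAL_PC = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
ACCIDENTAL_SHIFT = {"": 0, "#": 1, "b": -1}


def pitch_class(name: str) -> int:
    """Map a note name such as 'Db' or 'C#' to a pitch class from 0 to 11."""
    letter, accidental = name[0], name[1:]
    return (NATURAL_PC[letter] + ACCIDENTAL_SHIFT[accidental]) % 12


def same_when_played(first: str, second: str) -> bool:
    """True if two differently notated notes share a pitch class."""
    return pitch_class(first) == pitch_class(second)


# The two example pairs described in the Figure 2 legend.
print(same_when_played("Db", "C#"))  # 'musical synonyms'
print(same_when_played("Bb", "B#"))  # different notes
```

The first pair maps to the same pitch class and the second pair does not, matching the ‘same’ and ‘different’ classifications given in the legend.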

Results

B.R. and W.W. showed diverging patterns of performance on these tests (Table 2). Whereas naming of musical notes was impaired in both patients (both scored 6/10), B.R. performed flawlessly on tests of symbol comprehension, similar to healthy musicians (Table 1), while W.W. made errors even on the easier musical symbol identification task (score 8/10). W.W.’s comprehension of musical symbols benefited from the availability of a musical context (key identification, 6/8; note identification, 9/10; total score, 15/18; not significantly different from healthy controls), but deteriorated on the ‘Musical Synonyms’ task requiring assessment of symbol equivalence (score 15/20).

Comment

The pattern of performance of B.R. and W.W. on the musical symbol comprehension tasks suggests a partial dissociation from other dimensions of music knowledge. B.R. exhibited a retained comprehension of musical symbols despite impaired knowledge of musical sources and emotions, while W.W. showed a deficit of musical symbol comprehension despite intact knowledge of musical sources and emotions. There are several potential caveats on the interpretation of W.W.’s poor performance in this experiment. Musical notation is visuospatially complex; however, W.W. did not show frank visuospatial or visuoperceptual deficits on general neuropsychological assessment (Table 1). Particularly in the Musical Synonyms test, there was a demand for executive processing in switching between stimuli in a given pair and between different symbol transcoding ‘rules’ on sequential trials. However, B.R., who also had evidence of executive dysfunction, performed at ceiling on this task. We therefore propose that W.W. has a specific disorder of music symbol comprehension.

Discussion

Here we have presented neuropsychological profiles that together suggest a cognitive organization for music knowledge. The findings suggest that associative knowledge of music is at least partly dissociable from other neuropsychological functions and from musical perceptual ability. Within the domain of music knowledge, the findings support a modular organization with dissociations (summarized in Table 3) between knowledge of musical objects (compositions) and symbols (notation) versus knowledge of musical sources (instruments) and emotions. With respect to knowledge of musical objects, superordinate knowledge about musical style (eras, composers) and note values (absolute pitch) is less vulnerable than fine-grained, object-specific knowledge about particular compositions. The demonstration of semantic relatedness effects further suggests that musical categories such as composer have neuropsychological validity. Based on these findings, we propose that music constitutes a distinct but fractionated domain of non-verbal associative knowledge. We interpret the present findings as evidence of a relatively independent associative knowledge system for music that may be neuropsychologically equivalent to semantic memory systems in other cognitive domains and share at least some cognitive features with other domains of knowledge (Murre et al., 2001).

Table 3.

Neuropsychological dissociations within the domain of music knowledge

| Musical domain | B.R. (semantic dementia) | W.W. (Alzheimer’s disease) |
|---|---|---|
| Musical objects | ↓ | ↓↓ |
| Musical emotions | ↓↓ | N |
| Musical sources | ↓↓ | N |
| Musical symbols | N | ↓↓ |

N = normal performance; ↓ = impaired performance relative to controls; ↓↓ = impaired performance relative to both controls and other case.

The selectivity of brain damage even in a ‘focal’ dementia such as semantic dementia is relative rather than absolute, and anatomical correlation is necessarily limited. Nevertheless, the pattern of findings here would be consistent with neuroanatomical substrates for musical semantic memory that are partly separable from other domains of semantic memory, and consistent with our prior hypotheses concerning semantic dementia and Alzheimer’s disease. Knowledge of musical instruments and emotions may depend on inferior frontal and anterior temporal areas previously implicated in processing analogous kinds of information in voices and other domains (Griffiths et al., 2004; Khalfa et al., 2005; Gosselin et al., 2006; Schirmer and Kotz, 2006; Bélizaire et al., 2007; Eldar et al., 2007; Gosselin et al., 2007); following this interpretation, the striking involvement of anterior temporal lobe cortex and amygdala in B.R. (Fig. 1) would provide a neural correlate for impaired processing of both musical sources (instruments) and emotions in semantic dementia. In contrast, knowledge of musical objects (compositions) and symbols may have a substrate distinct both from non-musical knowledge domains and from knowledge of musical sources and emotions. The relatively selective sparing of knowledge of musical compositions shown by B.R. is consistent with previous evidence in semantic dementia (Hailstone et al., 2009). Anatomically, this could reflect a relatively greater dependence of musical object and symbol knowledge on brain areas beyond the anterior temporal lobe, as suggested by previous functional imaging (Platel et al., 2003; Satoh et al., 2006) and focal lesion (Stewart et al., 2006) studies implicating a distributed network of perisylvian areas in processes such as familiar melody recognition. This anatomical interpretation would be consistent with the more generalized involvement of perisylvian cortices in W.W. with Alzheimer’s disease (Fig. 1).

In addition to any separation of anatomical substrates, might distinct cognitive operations dissociate musical object and symbol knowledge from other dimensions of musical and extra-musical associative knowledge? There are two important caveats to this interpretation. First, due to the particular profiles of general cognitive competence retained by our patients, it was necessary to use tasks to exploit these competencies, rather than a uniform musical battery: the tasks used to assess a particular musical function in each patient were not equivalent in their processing demands (for example, the performance task used in Experiment 1 with B.R. required intact praxic skills and procedural memory, while the tasks used with W.W. did not). Second, the demands for associative processing are not a priori equivalent between the various dimensions of music knowledge: knowledge of musical compositions is likely to depend on semantic and autobiographical factors, and knowledge of music notation is a specialized skill, whereas knowledge of musical instruments or emotions is likely to be less dependent on past experience, but on the other hand might be more susceptible to cross-modal and other influences. Nevertheless, the finding of doubly dissociated performance profiles between B.R. and W.W. here (Table 3) suggests that relations between the dimensions of musical knowledge are modular, rather than (strictly) hierarchical, in keeping with current theoretical and empirical formulations (Peretz and Coltheart, 2003; Peretz and Zatorre, 2005). Musical instrument sounds and musical emotions are closely associated with physical objects and affective states, respectively, in the extra-musical world: musical instruments exist as artefacts, and musical instrument timbres share many features with animate voices (Bélizaire et al., 2007), while musical emotions align with similar emotions expressed by voices and faces (Eldar et al., 2007). 
It is therefore plausible that the processing of these aspects of musical knowledge should have neuropsychological similarities with the processing of other kinds of sensory object knowledge, and perhaps also with language, which derives meaning exclusively from its external referents. Musical emotion has a further dimension of subjective arousal that was not indexed here, but which may interact with cognitive mechanisms for emotion analysis and musical meaning more generally (Koelsch et al., 2008). From the clinical standpoint, it may also be relevant that understanding of musical emotion (unlike other dimensions of music knowledge) does not necessarily depend on specialized training: this suggests a possible therapeutic opportunity (Sarkamo et al., 2008; Koelsch, 2009). These are important issues for future work. In contrast to instruments and emotions, musical compositions and symbols may constitute a relatively self-contained knowledge system that is more dependent on abstract characteristics that are intrinsic to the musical stimulus and less grounded in the non-musical world (Huron, 2006; Steinbeis and Koelsch, 2008a). Though any parallel must be cautious, we speculate that knowledge of abstract musical entities (such as compositions) may align with knowledge of another abstract non-verbal system, mathematics, some aspects of which may also be relatively spared in semantic dementia (Crutch and Warrington, 2002; Jefferies et al., 2005; Zamarian et al., 2006).

Our findings speak to the longstanding debate concerning the neurobiological status of music (Peretz and Zatorre, 2005; Fitch, 2006; Patel, 2008; Steinbeis and Koelsch, 2008a, b). Direct comparisons between musical and verbal functioning are problematic, for the very reason that music and language have unique processing demands. It is generally not possible to equate these demands between modalities: a music reading test, for example, taps knowledge of a relatively small set of symbols compared with the vast corpus of words, and whereas word familiarity (frequency) can be quantified reliably for native speakers of the language, musical familiarity is heavily dependent on autobiographical experience. On the other hand, while language skills are universal, musical skills are acquired by only a small minority of the population. Taking these caveats into account, the present findings suggest that sophisticated musical understanding can survive even grave impairment of verbal capacity (as in B.R.); on the other hand, aspects of musical understanding may be eroded even where verbal knowledge is more or less unscathed (as in W.W.). We propose that the abstract nature of certain key objects of music knowledge (such as melodies) may make such objects less reliant on knowledge about things in the world at large. We argue that studies of this kind further the case for music as a phenomenon of fundamental neurobiological relevance in human evolution (Fitch, 2006): only music can encode certain kinds of non-verbal symbolic information, and further, that information (whatever its original nature) was of sufficient evolutionary value to acquire a dedicated neural substrate.

The pattern of deficits observed here on tasks assessing comprehension of musical notation corroborates previous observations in the context of focal brain damage (Judd et al., 1983; Basso and Capitani, 1985; Cappelletti et al., 2000; McDonald, 2006). Like verbal symbols, musical symbols can be transcoded into sound-based representations or motor representations, or processed directly for meaning. However, the information coded by musical notation is essentially spatial (pitch values on a stave) and temporal (note and rest duration values, rhythm, metre), and the motor outputs based on this information (as when playing an instrument) involve precise spatio-temporal transformations (Hebert and Cuddy, 2006; Brodsky et al., 2008; Wong and Gauthier, 2009). It is therefore possible that the meaning of musical symbols arises from the interaction of semantic with sensorimotor processes. In anatomical terms, music reading is vulnerable to focal lesions involving the parieto-temporal junction (Hebert and Cuddy, 2006; McDonald, 2006). This is supported by the evidence presented by the neurodegenerative pathologies here (Fig. 1): loss of music reading skills accompanied a disease (Alzheimer’s disease) involving the parietal lobes, whereas music reading was preserved in a disease (semantic dementia) that selectively damages the anterior temporal lobes.

Studies of this kind capitalize on the interaction of strategic forms of brain damage with premorbid specialized knowledge (McNeil and Warrington, 1993; Crutch and Warrington, 2002, 2003; Jefferies et al., 2005); indeed, the unique skills possessed by expert musicians here were an essential prerequisite in order to undertake a detailed analysis of multiple dimensions of music knowledge. The ability to generalize conclusions that are based on the study of individuals with specialist skills is typically limited. However, music offers certain advantages over other domains of specialist knowledge in that musical expertise is not rare in the wider population and there is a widely accepted ‘canon’ of musical skills and compositions, enabling the uniform assessment of music knowledge in a population of healthy individuals with similar musical backgrounds. Furthermore, the experience of music is universal, and musical knowledge in some form is possessed by all normal listeners. Taking these considerations into account, the present findings suggest a rationale for a more detailed clinical analysis of the value of music-based therapies tailored to particular dementia diseases. Furthermore, the findings raise fundamental issues concerning the brain organization of non-verbal knowledge systems and the nature of musical knowledge. On the one hand, the existence of distinctive brain knowledge systems for music suggests that music may have played a specific biological role in human evolution. On the other hand, evidence for a multidimensional neuropsychological organization of music knowledge suggests parallels with other cognitive domains and argues for important similarities in the cognitive architecture of different brain knowledge systems.

Supplementary material

Supplementary material is available at Brain online.

Funding

This work was undertaken at UCLH/UCL, which received a proportion of funding from the Department of Health’s NIHR Biomedical Research Centres funding scheme; The Dementia Research Centre is an Alzheimer’s Research Trust Co-ordinating Centre; Wellcome Trust; UK Medical Research Council; Royal College of Physicians / Dunhill Medical Trust Research Fellowship to RO; Alzheimer’s Research Trust Fellowship to SJC; Wellcome Trust Intermediate Clinical Fellowship to JDW.

Acknowledgements

We are grateful to the patients and controls for their participation. We thank Prof EK Warrington for helpful discussion and Dr Doris-Eva Bamiou for assistance with audiometric assessments.

References

- Ayotte J, Peretz I, Rousseau I, Bard C, Bojanowski M. Patterns of music agnosia associated with middle cerebral artery infarcts. Brain. 2000;123(Pt 9):1926–38. doi: 10.1093/brain/123.9.1926.

- Baird A, Samson S. Memory for music in Alzheimer's disease: unforgettable? Neuropsychol Rev. 2009;19(1):85–101. doi: 10.1007/s11065-009-9085-2.

- Bartlett JC, Halpern AR, Dowling WJ. Recognition of familiar and unfamiliar melodies in normal aging and Alzheimer's disease. Mem Cognit. 1995;23:531–46. doi: 10.3758/bf03197255.

- Basso A, Capitani E. Spared musical abilities in a conductor with global aphasia and ideomotor apraxia. J Neurol Neurosurg Psychiatry. 1985;48:407–12. doi: 10.1136/jnnp.48.5.407.

- Beatty WW, Winn P, Adams RL, Allen EW, Wilson DA, Prince JR, et al. Preserved cognitive skills in dementia of the Alzheimer type. Arch Neurol. 1994;51:1040–6. doi: 10.1001/archneur.1994.00540220088018.

- Bélizaire G, Fillion-Bilodeau S, Chartrand JP, Bertrand-Gauvin C, Belin P. Cerebral response to 'voiceness': a functional magnetic resonance imaging study. Neuroreport. 2007;18:29–33. doi: 10.1097/WNR.0b013e3280122718.

- Beversdorf DQ, Heilman KM. Progressive ventral posterior cortical degeneration presenting as alexia for music and words. Neurology. 1998;50:657–9. doi: 10.1212/wnl.50.3.657.

- Brodsky W, Kessler Y, Rubinstein BS, Ginsborg J, Henik A. The mental representation of music notation: notational audiation. J Exp Psychol Hum Percept Perform. 2008;34:427–45. doi: 10.1037/0096-1523.34.2.427.

- Cappelletti M, Waley-Cohen H, Butterworth B, Kopelman M. A selective loss of the ability to read and to write music. Neurocase. 2000;6:321–32.

- Cowles A, Beatty WW, Nixon SJ, Lutz LJ, Paulk J, Paulk K, et al. Musical skill in dementia: a violinist presumed to have Alzheimer's disease learns to play a new song. Neurocase. 2003;9:493–503. doi: 10.1076/neur.9.6.493.29378.

- Crawford JR, Howell DC. Comparing an individual’s test score against norms derived from small samples. Clin Neuropsychol. 1998;12:482–6.

- Crutch SJ, Warrington EK. Preserved calculation skills in a case of semantic dementia. Cortex. 2002;38:389–99. doi: 10.1016/s0010-9452(08)70667-1.

- Crutch SJ, Warrington EK. Spatial coding of semantic information: knowledge of country and city names depends on their geographical proximity. Brain. 2003;126:1821–9. doi: 10.1093/brain/awg187.

- Crystal HA, Grober E, Masur D. Preservation of musical memory in Alzheimer's disease. J Neurol Neurosurg Psychiatry. 1989;52:1415–6. doi: 10.1136/jnnp.52.12.1415.

- Dalla Bella S, Peretz I, Aronoff N. Time course of melody recognition: a gating paradigm study. Percept Psychophys. 2003;65:1019–28. doi: 10.3758/bf03194831.

- Dixon MJ, Piskopos M, Schweizer TA. Musical instrument naming impairments: the crucial exception to the living/nonliving dichotomy in category-specific agnosia. Brain Cogn. 2000;43:158–64.

- Drapeau J, Gosselin N, Gagnon L, Peretz I, Lorrain D. Emotional recognition from face, voice, and music in dementia of the Alzheimer type. Ann NY Acad Sci. 2009;1169:342–5. doi: 10.1111/j.1749-6632.2009.04768.x.

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Eldar E, Ganor O, Admon R, Bleich A, Hendler T. Feeling the real world: limbic response to music depends on related content. Cereb Cortex. 2007;17:2828–40. doi: 10.1093/cercor/bhm011.

- Eustache F, Lechevalier B, Viader F, Lambert J. Identification and discrimination disorders in auditory perception: a report on two cases. Neuropsychologia. 1990;28:257–70. doi: 10.1016/0028-3932(90)90019-k.

- Fitch WT. The biology and evolution of music: a comparative perspective. Cognition. 2006;100:173–215. doi: 10.1016/j.cognition.2005.11.009.

- Fornazzari L, Castle T, Nadkarni S, Ambrose M, Miranda D, Apanasiewicz N, et al. Preservation of episodic musical memory in a pianist with Alzheimer disease. Neurology. 2006;66:610–1. doi: 10.1212/01.WNL.0000198242.13411.FB.

- Fritz T, Jentschke S, Gosselin N, Sammler D, Peretz I, Turner R, et al. Universal recognition of three basic emotions in music. Curr Biol. 2009;19:573–6. doi: 10.1016/j.cub.2009.02.058.

- Garrido L, Eisner F, McGettigan C, Stewart L, Sauter D, Hanley JR, et al. Developmental phonagnosia: a selective deficit of vocal identity recognition. Neuropsychologia. 2009;47:123–31. doi: 10.1016/j.neuropsychologia.2008.08.003.

- Gosselin N, Peretz I, Noulhiane M, Hasboun D, Beckett C, Baulac M, et al. Impaired recognition of scary music following unilateral temporal lobe excision. Brain. 2005;128:628–40. doi: 10.1093/brain/awh420.

- Gosselin N, Samson S, Adolphs R, Noulhiane M, Roy M, Hasboun D, et al. Emotional responses to unpleasant music correlates with damage to the parahippocampal cortex. Brain. 2006;129:2585–92. doi: 10.1093/brain/awl240.

- Gosselin N, Peretz I, Johnsen E, Adolphs R. Amygdala damage impairs emotion recognition from music. Neuropsychologia. 2007;45:236–44. doi: 10.1016/j.neuropsychologia.2006.07.012.

- Griffiths TD, Warren JD. What is an auditory object? Nat Rev Neurosci. 2004;5:887–92. doi: 10.1038/nrn1538.

- Griffiths TD, Warren JD, Dean JL, Howard D. “When the feeling's gone”: a selective loss of musical emotion. J Neurol Neurosurg Psychiatry. 2004;75:344–5.

- Hailstone JC, Omar R, Warren JD. Relatively preserved knowledge of music in semantic dementia. J Neurol Neurosurg Psychiatry. 2009;80:808–9. doi: 10.1136/jnnp.2008.153130.

- Halpern AR. Organisation in memory for familiar songs. J Exp Psychol. 1984;10:496–512.

- Halpern AR, Zatorre RJ. When that tune runs through your head: a PET investigation of auditory imagery for familiar melodies. Cereb Cortex. 1999;9:697–704. doi: 10.1093/cercor/9.7.697.

- Hebert S, Cuddy LL. Music-reading deficiencies and the brain. Adv Cogn Psychol. 2006;2:199–206.

- Hodges JR, Patterson K. Semantic dementia: a unique clinicopathological syndrome. Lancet Neurol. 2007 Nov;6(11):1004–14. doi: 10.1016/S1474-4422(07)70266-1.

- Huron D. Sweet Anticipation: Music and the Psychology of Expectation. Cambridge: MIT Press; 2006.

- Jefferies E, Bateman D, Lambon Ralph MA. The role of the temporal lobe semantic system in number knowledge: evidence from late-stage semantic dementia. Neuropsychologia. 2005;43(6):887–905. doi: 10.1016/j.neuropsychologia.2004.09.009.

- Jefferies E, Lambon Ralph MA. Semantic impairment in stroke aphasia versus semantic dementia: a case-series comparison. Brain. 2006 Aug;129(Pt 8):2132–47. doi: 10.1093/brain/awl153.

- Judd T, Gardner H, Geschwind N. Alexia without agraphia in a composer. Brain. 1983;106:435–57. doi: 10.1093/brain/106.2.435.

- Juslin PN, Västfjäll D. Emotional responses to music: the need to consider underlying mechanisms. Behav Brain Sci. 2008;31:559–75. doi: 10.1017/S0140525X08005293.

- Khalfa S, Guye M, Peretz I, Chapon F, Girard N, Chauvel P, et al. Evidence of lateralized anteromedial temporal structures involvement in musical emotion processing. Neuropsychologia. 2008;46:2485–93. doi: 10.1016/j.neuropsychologia.2008.04.009.

- Khalfa S, Schon D, Anton JL, Liégeois-Chauvel C. Brain regions involved in the recognition of happiness and sadness in music. Neuroreport. 2005;16:1981–4. doi: 10.1097/00001756-200512190-00002.

- Koelsch S. Neural substrates of processing syntax and semantics in music. Curr Opin Neurobiol. 2005 Apr;15(2):207–12. doi: 10.1016/j.conb.2005.03.005.

- Koelsch S. A neuroscientific perspective on music therapy. Ann NY Acad Sci. 2009;1169:374–84. doi: 10.1111/j.1749-6632.2009.04592.x.

- Koelsch S, Siebel WA. Towards a neural basis of music perception. Trends Cogn Sci. 2005;9:578–84. doi: 10.1016/j.tics.2005.10.001.

- Koelsch S, Kilches S, Steinbeis N, Schelinski S. Effects of unexpected chords and of performer's expression on brain responses and electrodermal activity. PLoS One. 2008;3:e2631. doi: 10.1371/journal.pone.0002631.

- Kohlmetz C, Muller SV, Nager W, Munte TF, Altenmuller E. Selective loss of timbre perception for keyboard and percussion instruments following a right temporal lesion. Neurocase. 2003;9:86–93. doi: 10.1076/neur.9.1.86.14372.

- Levitin DJ, Rogers SE. Absolute pitch: perception, coding, and controversies. Trends Cogn Sci. 2005;9:26–33. doi: 10.1016/j.tics.2004.11.007.

- Mahon BZ, Caramazza A. Concepts and categories: a cognitive neuropsychological perspective. Annu Rev Psychol. 2009;60:27–51. doi: 10.1146/annurev.psych.60.110707.163532.

- McDonald I. Musical alexia with recovery: a personal account. Brain. 2006;129:2554–61. doi: 10.1093/brain/awl235.

- McNeil JE, Warrington EK. Prosopagnosia: a face-specific disorder. Q J Exp Psychol. 1993;46:1–10. doi: 10.1080/14640749308401064.

- Mendez MF. Generalized auditory agnosia with spared music recognition in a left-hander. Analysis of a case with a right temporal stroke. Cortex. 2001;37:139–50. doi: 10.1016/s0010-9452(08)70563-x.

- Meyer LB. Emotion and Meaning in Music. Chicago: University of Chicago Press; 1956.

- Murre JM, Graham KS, Hodges JR. Semantic dementia: relevance to connectionist models of long-term memory. Brain. 2001;124:647–75. doi: 10.1093/brain/124.4.647.

- Palmer CF, Jones RK, Hennessy BL, Unze MG, Pick AD. How is a trumpet known? The "basic object level" concept and perception of musical instruments. Am J Psychol. 1989;102:17–37.

- Patel AD. Music, language and the brain. New York: Oxford University Press; 2008.

- Peretz I, Coltheart M. Modularity of music processing. Nat Neurosci. 2003;6:688–91. doi: 10.1038/nn1083.

- Peretz I, Zatorre RJ. Brain organization for music processing. Annu Rev Psychol. 2005;56:89–114. doi: 10.1146/annurev.psych.56.091103.070225.

- Peretz I, Champod A-S, Hyde KL. Varieties of musical disorders. The Montreal battery for evaluation of amusia. Ann NY Acad Sci. 2003;999:58. doi: 10.1196/annals.1284.006.

- Peretz I, Gagnon L, Bouchard B. Music and emotion: perceptual determinants, immediacy, and isolation after brain damage. Cognition. 1998;68:111–41. doi: 10.1016/s0010-0277(98)00043-2.

- Platel H, Baron JC, Desgranges B, Bernard F, Eustache F. Semantic and episodic memory of music are subserved by distinct neural networks. Neuroimage. 2003;20:244–56. doi: 10.1016/s1053-8119(03)00287-8.

- Polk M, Kertesz A. Music and language in degenerative disease of the brain. Brain Cogn. 1993;22:98–117. doi: 10.1006/brcg.1993.1027.

- Raglio A, Bellelli G, Traficante D, Gianotti M, Ubezio MC, Villani D, et al. Efficacy of music therapy in the treatment of behavioral and psychiatric symptoms of dementia. Alzheimer Dis Assoc Disord. 2008 Apr–Jun;22(2):158–62. doi: 10.1097/WAD.0b013e3181630b6f.

- Rosen HJ, Perry RJ, Murphy J, Kramer JH, Mychack P, Schuff N, et al. Emotion comprehension in the temporal variant of frontotemporal dementia. Brain. 2002;125(Pt 10):2286–95. doi: 10.1093/brain/awf225.

- Särkämö T, Tervaniemi M, Laitinen S, Forsblom A, Soinila S, Mikkonen M, et al. Music listening enhances cognitive recovery and mood after middle cerebral artery stroke. Brain. 2008;131:866–76. doi: 10.1093/brain/awn013.

- Satoh M, Takeda K, Nagata K, Shimosegawa E, Kuzuhara S. Positron-emission tomography of brain regions activated by recognition of familiar music. AJNR Am J Neuroradiol. 2006;27:1101–6.

- Schirmer A, Kotz SA. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn Sci. 2006;10:24–30. doi: 10.1016/j.tics.2005.11.009.

- Schulkind MD. Serial processing in melody identification and the organization of musical semantic memory. Percept Psychophys. 2004;66:1351–62. doi: 10.3758/bf03195003.

- Schuppert M, Munte TF, Wieringa BM, Altenmuller E. Receptive amusia: evidence for cross-hemispheric neural networks underlying music processing strategies. Brain. 2000;123(Pt 3):546–59. doi: 10.1093/brain/123.3.546.

- Schürmann M, Raij T, Fujiki N, Hari R. Mind's ear in a musician: where and when in the brain. Neuroimage. 2002;16:434–40. doi: 10.1006/nimg.2002.1098.

- Steinbeis N, Koelsch S. Shared neural resources between music and language indicate semantic processing of musical tension-resolution patterns. Cereb Cortex. 2008a;18:1169–78. doi: 10.1093/cercor/bhm149.