Abstract

Humans have a unique ability to coordinate their motor movements to an external auditory stimulus, as in music-induced foot tapping or dancing. This behavior currently engages the attention of scholars across a number of disciplines. However, very little is known about its earliest manifestations. The aim of the current research was to examine whether preverbal infants engage in rhythmic behavior to music. To this end, we carried out two experiments in which we tested 120 infants (aged 5–24 months). Infants were exposed to various excerpts of musical and rhythmic stimuli, including isochronous drumbeats. Control stimuli consisted of adult- and infant-directed speech. Infants’ rhythmic movements were assessed by multiple methods involving manual coding from video excerpts and innovative 3D motion-capture technology. The results show that (i) infants engage in significantly more rhythmic movement to music and other rhythmically regular sounds than to speech; (ii) infants exhibit tempo flexibility to some extent (e.g., faster auditory tempo is associated with faster movement tempo); and (iii) the degree of rhythmic coordination with music is positively related to displays of positive affect. The findings are suggestive of a predisposition for rhythmic movement in response to music and other metrically regular sounds.

Keywords: dance, emotion, entrainment, evolution, neurobiology

One of the most curious effects of music is that it compels us to move in synchrony with its beat. This behavior, also referred to as entrainment, includes spontaneous or deliberate finger and foot tapping, head nodding, and body swaying (1). The most striking of these phenomena is dancing: a human universal typically involving whole-body movements (2). Dancing rests on humans’ unique ability to tightly couple auditory-motor circuits. The neural components of these circuits are currently being identified (3). However, neurobehavioral mechanisms involved in adult entrainment are no indication of an endogenous predisposition for auditory-motor coupling. Previous developmental studies show that the ability to entrain to the beat emerges only around preschool age (4–8). Even then, the ability seems modest: it tends to be fraught with inaccuracies up until school age, it requires prompting by an experimenter, and it has been reported to occur for a restricted motor range only, usually finger and hand movements. From this evidence, one could understandably conclude that the skill for coordinating movement to music and other metrically regular sounds is, primarily, an acquired behavior.

Prerequisites for entrainment are present long before preschool age, however. For example, a rudimentary form of beat perception has recently been reported in neonates (9), and infants can perceive changes in rhythm (10) and meter (11). Furthermore, infants listen longer to rhythmic patterns that match the patterns to which they are bounced beforehand (12). A further prerequisite, the ability to produce rhythmically patterned movements, has also been documented in young infants (13). However, what is so striking in humans is not so much their ability to process temporal patterns or to move rhythmically, but their ability to entrain their movements to an external timekeeper, such as a beating drum. Exploratory work and case studies point to the possibility that infants (14, 15), and even parrots (16, 17), may be able to move rhythmically to music. However, evidence from single cases is difficult to interpret. A confluence of idiosyncratic factors may lead to striking yet otherwise species-atypical behavior in single cases, as in a dancing chicken (18).

The overarching aim of the current research was to examine whether preverbal infants engage actively and spontaneously in rhythmic behavior to music and, if so, how such behavior may be best characterized. To this end, we carried out two experiments in which we tested 120 infants (experiment 1: n = 51; experiment 2: n = 69). Experiments followed the logic of replication-plus-extension, with the two studies conducted in different countries for purposes of generalization. To scrutinize the type of auditory stimulation that may drive rhythmic engagement with music, infants were exposed to excerpts of classical music (Mozart, Saint-Saëns), rhythm-only versions of the same excerpts, a children’s song, isochronous drumbeats, and a musical stimulus with rapid tempo shifts. Control stimuli consisted of prerecorded human speech (Audio S1, S2, S3, S4, S5, S6, S7, S8, S9, and S10). An overview of the stimuli, including tempi, is given in Table 1.

Table 1.

Stimulus overview

| Auditory stimulus | Experiment | Tempo, IOI in ms (SD)* | Category |
|---|---|---|---|
| Audio S1: Mozart (music) | 1 | 250 (35) | E |
| Audio S2: Mozart (rhythm) | 1 | 245 (52) | E |
| Audio S3: Saint-Saëns (music) | 1 and 2 | 290 (48) | E |
| Audio S4: Saint-Saëns (rhythm) | 1 and 2 | 288 (55) | E |
| Audio S5: AD speech | 1 | 369 (95) | C |
| Audio S6: ID speech | 2 | 466 (73) | C |
| Audio S7: children's music | 2 | 422 (80) | E |
| Audio S8: beat track (beat 1) | 2 | 285 (0) | E |
| Audio S9: beat track (beat 2) | 2 | 428 (0) | E |
| Audio S10: Saint-Saëns (fluctuating rhythm) | 2 | 346 (109) | (E) |

SDs in parentheses. AD, adult directed; ID, infant directed. C, control; E, experimental; IOI, interonset interval.

*For details on tempo estimation, see Materials and Methods and Fig. S1.

In experiment 1, we chose adult-directed speech (AD speech) as a control stimulus because it is auditory and somewhat rhythmically patterned, but does not exhibit the strict temporal regularity of music. In experiment 2, we used infant-directed speech (ID speech), which is more engaging for infants and slightly more regular than AD speech. It is possible to move to music with tempo shifts (folk dances, for example, use this device), but doing so requires more effort; the tempo-shifting stimulus was therefore expected to elicit an intermediate amount of movement. Finally, given that adults enjoy moving in time with music, we also explored whether rhythmic engagement with music is a source of positive affect in the human infant.

In both experiments, infants were tested on their parent's lap. Of the various alternatives we pretested, this setup proved the best because it allows meaningful comparisons across the entire age range (5–24 months), minimizes separation anxiety, and lets the infant move the relevant body parts (arms/hands, legs/feet, torso, head). We instructed the parents to avoid any movement during the experiment, but they were allowed to reposition the infant if he or she was sliding out of position. A digital video camera, located behind and slightly above the loudspeakers, captured a frontal view of the infant. To ensure that the parents could not hear the stimuli, they wore headphones playing spoken text throughout the experiment.

Infants’ rhythmic movements were assessed by a multimethod approach, involving human coding from the video excerpts and innovative 3D motion-capture technology. In both experiments, the video records were coded by two independent observers for occurrence of rhythmic movements, defined in Thelen's sense, as “a movement of parts of the body or the whole body that was repeated in the same form at least three times at regular short intervals” (13). Interrater agreement between two observers for 25% of the video records was satisfactory (experiment 1: κ = 0.84; experiment 2: κ = 0.82). In experiment 2, infants’ movements were also recorded by a 3D motion-capture tracking system, enabling an exact mapping of movement time onto musical time. Duration of rhythmic movement from the video analysis and from the motion capture analysis (detailed later) converged substantially, r = 0.74, P < 0.001.
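Interrater agreement above is reported as Cohen's κ. The following is a minimal Python sketch of that statistic, assuming each coder's output has been reduced to one categorical label per coded time bin (for example, 1 = rhythmic movement present, 0 = absent). The binning scheme and the function name `cohens_kappa` are illustrative assumptions, not the authors' coding procedure.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders' categorical labels of the same items.

    Hypothetical input: one label per coded time bin, e.g. 1 = rhythmic
    movement present, 0 = absent (the binning is an assumption).
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(coder_a)
    # Observed agreement: proportion of bins given the same label.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement from each coder's marginal label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a) / n**2
    if p_e == 1.0:
        return 1.0  # both coders used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


a = [1, 1, 0, 0, 1, 0, 1, 1]
b = [1, 1, 0, 1, 1, 0, 1, 0]
kappa = cohens_kappa(a, b)  # 6/8 observed vs. 34/64 chance agreement
```

Kappa corrects raw percent agreement for the agreement expected by chance from each coder's base rates, which matters here because "no rhythmic movement" bins are likely to dominate.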

Results

Rhythmic Engagement with Music, Rhythms, and Speech.

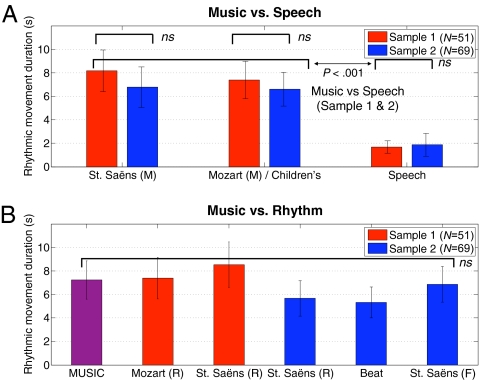

In both experiments, infants engaged in significantly more rhythmic movement to musical and other metrically regular stimuli than to speech (Fig. 1A). The rhythmic versions of the stimuli, including isochronous drumbeats, elicited as much rhythmic movement as did the music (Fig. 1B). The tempo-fluctuating stimulus also generated more movement than did the speech stimuli (Movies S1, S2, and S3). The duration of rhythmic movement to identical stimuli in both experiments (Saint-Saëns) was statistically equivalent, denoting replication (Fig. 1A).

Fig. 1.

Duration of rhythmic movement during all musical stimuli vs. control stimuli across both experiments. (A) Music was much more effective in generating rhythmic movements than was speech (Audio S1, S3, S7, and S10). (B) Rhythm was just as effective as music, and again more effective than speech, in eliciting rhythmic movement (Audio S2, S4, S8, S9, and S10). The MUSIC condition refers to the mean of Saint-Saëns, Mozart, and children’s music versions. Letters after the stimulus name refer to rhythm (R) and fluctuating (F) variants of the musical stimuli. The Beat label denotes the average of beat 1 and 2 conditions. Error bars indicate SEs.

Development of Rhythmic Engagement Across Infancy.

To examine potential age trends, we trichotomized the sample from experiment 1 into three age groups: (i) 5 to 7 months (n = 13), (ii) 9 to 12 months (n = 20), and (iii) 13 to 16 months (n = 18). To examine whether rhythmic movement increases over the course of infancy, we computed a linear contrast across age groups on each infant's contrast score, or L-score, which indexes the degree to which that infant showed the predicted pattern (19). This age × L-score contrast was marginally significant, t(48) = 1.86, P = 0.07, η2 = 0.25. In experiment 2, age trends were examined with the same age groups as in experiment 1 plus an additional, older group: (i) 5 to 7 months (n = 13), (ii) 9 to 12 months (n = 20), (iii) 13 to 16 months (n = 22), and (iv) 18 to 24 months (n = 14). The age × L-score contrast was not significant, t(65) = 0.45, P = not significant (ns). The most notable age trend was the somewhat less consistent behavior of the youngest infants. In experiment 1, these infants moved in the predicted ways but showed less rhythmic movement overall than the older age groups (Table S1). In experiment 2, the youngest infants moved as much to ID speech as to the experimental stimuli (Table S2).
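The age analysis tests a linear trend across age groups in the infants' L-scores. A minimal sketch of such a between-groups contrast (Rosenthal-style, with the pooled within-group variance as error term) follows, assuming the L-scores are already computed. The weights (−1, 0, +1) for three groups are an assumption, since the exact weights are not stated. With groups of 13, 20, and 18 infants, the degrees of freedom are 51 − 3 = 48, and with four groups totaling 69 infants they are 65, matching the t(48) and t(65) reported above.

```python
import math
from statistics import mean, variance


def linear_trend_contrast(groups, weights):
    """Between-groups contrast t on per-infant L-scores.

    groups: list of lists of L-scores, one list per age group, ordered by age.
    weights: contrast weights summing to zero, e.g. (-1, 0, 1) (assumed).
    Returns (t, df) with df = N - number of groups.
    """
    if abs(sum(weights)) > 1e-9:
        raise ValueError("contrast weights must sum to zero")
    n_total = sum(len(g) for g in groups)
    df = n_total - len(groups)
    # Pooled within-group variance (mean square within).
    ss_within = sum(variance(g) * (len(g) - 1) for g in groups)
    ms_within = ss_within / df
    numerator = sum(w * mean(g) for w, g in zip(weights, groups))
    denom = math.sqrt(ms_within * sum(w**2 / len(g) for w, g in zip(weights, groups)))
    return numerator / denom, df


t, df = linear_trend_contrast([[1, 2, 3], [2, 3, 4], [3, 4, 5]], (-1, 0, 1))
```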

Rhythmic Coordination with Music.

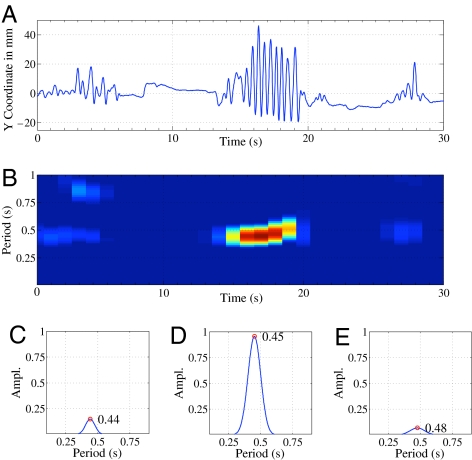

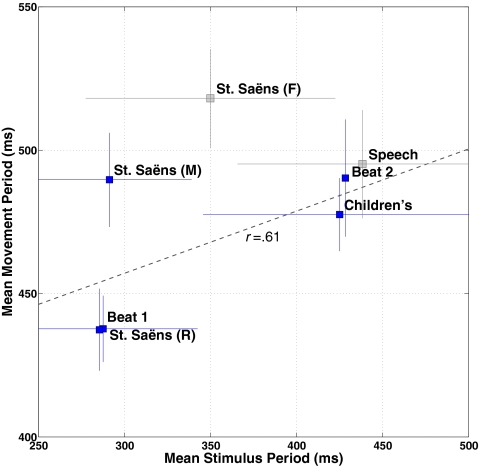

Having demonstrated that infants engage rhythmically with music, we asked whether the timing of infants’ rhythmic movements was coordinated with musical time. We examined this question by capitalizing on the 3D motion-capture technology used in experiment 2. Fig. 2 and Movie S4 illustrate the procedures for data extraction and processing. As shown in Fig. 3, faster auditory tempo was associated with faster movement tempo. This relationship is best described in terms of tempo flexibility rather than entrainment or synchronization, because the absolute periods of movement and music did not match. We tested whether the periodicity of the movements reflected the periodicity of the musical and rhythmic stimuli in predicted ways, by assigning a weight of −2 to the faster stimuli (beat 1, Saint-Saëns’ music and rhythm), and +3 to the slower stimuli (beat 2, children’s music). Results bore out this prediction, if moderately so: t(51) = 0.99, P = 0.08 (one-tailed), η2 = 0.14. Whereas slow vs. fast isochronous drumbeats were well aligned with differences in movement tempo (Fig. 3), other stimuli were less effective in eliciting tempo flexibility. Thus, we entertained the possibility that the degree to which infants are able to coordinate their movements to metrically regular sound patterns may be driven by pulse clarity.

Fig. 2.

Data extraction and periodicity calculation from a 30-s motion-capture excerpt of an infant. (A) Vertical movement trajectory of the right foot, exhibiting short bouts of periodic, oscillating movements (see Movie S4 for an animation). (B) Autocorrelation for lags up to 1 s across the excerpt, highlighting the periodic segments and their prevalent period (darker color). (C–E) Mean autocorrelation curves within each 10-s segment, demonstrating that the period is constant throughout the excerpt (between 440 and 480 ms), whereas the amplitude differs widely across segments.

Fig. 3.

Movement time follows musical time. Stimulus period means (x axis) are plotted against movement period means (y axis), yielding a correlation of r = 0.61 (computed on experimental stimuli only, colored blue). Movement period extraction followed the procedures illustrated in Fig. 2. Stimulus periods were extracted with a tempo-finding algorithm that uses autocorrelation of the spectral energy flux and applies a resonance function emphasizing perceptually salient beat regions.

To test this hypothesis, we derived a computational and a perceptual index of pulse clarity. The former draws on a recent model of pulse clarity, which is based on the autocorrelation of the amplitude envelope of the audio waveform (20). To investigate children’s perceptual pulse clarity, we ran an additional experiment, in which 10 children (mean age 8.1 y, SD = 2.4; six girls) were instructed to tap along with the beat of all stimuli by using a small drum and mallet. We used 20-s excerpts of each stimulus, presented in random order over loudspeakers. A 10-s interstimulus interval, consisting of a distractor stimulus (an aperiodic click pattern) and silence, was inserted to eliminate carryover effects of tempo. Two common consensus measures based on circular statistics (the cumulative length of R and the SD of the tap angles in the circular time representation) were extracted from the tapping (6). As can be seen from the means and confidence intervals of the normalized tapping-consistency index (Fig. S2), tapping-accuracy data and algorithmically derived pulse clarity correlate positively across the six stimuli (r = 0.77).
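The circular-statistics measures above map each tap onto a phase angle of the beat cycle. The following is a minimal sketch of that idea, assuming a known beat period and reference beat time: it returns the mean resultant length R (1 = all taps at the same phase, near 0 = phases spread evenly) and the circular SD, sqrt(−2 ln R). The function name and inputs are hypothetical, and the paper's exact normalization of its tapping-consistency index is not reproduced here.

```python
import cmath
import math


def tapping_consistency(tap_times, beat_period, beat_phase=0.0):
    """Mean resultant length R and circular SD (radians) of tap phases.

    tap_times, beat_period and beat_phase (time of a reference beat) are in
    seconds. Hypothetical helper: each tap becomes an angle on the beat cycle.
    """
    if not tap_times:
        raise ValueError("no taps")
    angles = [2 * math.pi * ((t - beat_phase) % beat_period) / beat_period
              for t in tap_times]
    # Average of unit vectors; its length R lies in [0, 1].
    resultant = sum(cmath.exp(1j * a) for a in angles) / len(angles)
    r = min(abs(resultant), 1.0)  # guard against rounding just above 1
    circ_sd = math.sqrt(-2 * math.log(r)) if r > 0 else math.inf
    return r, circ_sd


r_on, sd_on = tapping_consistency([0.0, 0.5, 1.0, 1.5], beat_period=0.5)
r_anti, _ = tapping_consistency([0.0, 0.25, 0.5, 0.75], beat_period=0.5)
```

Taps that fall consistently on the beat give R = 1 and a circular SD of 0, while taps alternating between on-beat and off-beat positions cancel out, giving R close to 0.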

Subsequently, we examined whether there is a direct relationship between pulse clarity and rhythmic coordination with music as well as other metrically regular sounds. To this end, we created an index that captures the fit between movement and music tempi. Specifically, the mismatch between the movement and music tempo can be defined as the difference between the predicted motor tempo and the actual movement tempo, where the predicted motor tempo is the least-square regression line between the observed motor tempo (movement periodicity) and the music tempo (stimulus periodicity). To simplify the interpretation of this index, we subtracted the values from 1, so that it is an accuracy index (rather than an error index). As expected, the higher the pulse clarity, the better the coordination between musical and motor tempo (r = 0.74), as captured by the residuals of the accuracy index across the six stimuli. Not surprisingly, tempo flexibility was most accurate for the slow vs. fast isochronous drumbeats, t(51) = 2.64, P < 0.01, η2 = 0.35. To examine whether rhythmic coordination with music improved with age, we divided the sample into a younger and an older group of infants with a median split. There were no significant differences between the age groups, t(51) = 0.02, P = ns.
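The tempo-fit index above can be sketched as follows. This is a minimal version that assumes periods are expressed in seconds, one observation per infant and stimulus, and that the "difference" is taken as an absolute value. The function name `tempo_accuracy` and the absolute-value choice are assumptions, not details given in the text.

```python
import numpy as np


def tempo_accuracy(stimulus_period, movement_period):
    """Accuracy index: 1 minus the absolute residual from a least-squares line.

    The predicted motor tempo is the least-squares regression of movement
    period on stimulus period (periods in seconds, assumed). Taking the
    absolute residual is an assumption made for this sketch.
    """
    x = np.asarray(stimulus_period, dtype=float)
    y = np.asarray(movement_period, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)  # degree-1 fit: [slope, intercept]
    predicted = slope * x + intercept
    mismatch = np.abs(y - predicted)        # error index
    return 1.0 - mismatch                   # higher = better tempo fit


acc = tempo_accuracy([1.0, 2.0, 3.0], [1.0, 3.0, 2.0])
```

For these toy values, the fitted line is y = 0.5x + 1, so the residuals are −0.5, 1, and −0.5, and the accuracy values are 0.5, 0, and 0.5.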

Two additional movement variables were considered: velocity and acceleration. Velocity refers to the rate of change in marker position over time, and acceleration is the rate of change in velocity. Here, these were calculated as the mean of the first- (velocity) and second- (acceleration) order time derivatives of the marker positions over the 500-ms analysis window. Whereas periodicity captures oscillatory movements, velocity indexes the overall vigor of the movements regardless of their previous positions (the two measures were unrelated, r = 0.07, P = ns). We asked whether the faster stimuli induced higher velocities than the slower ones, but this was not the case. If anything, there was a trend in the opposite direction, t(51) = −1.59, P = 0.06. It is noteworthy that the stimulus with the most acceleratory passages elicited the greatest degree of movement acceleration, as indicated by a contrast of the fluctuating stimulus against all of the other experimental stimuli on acceleration, t(51) = 1.80, P < 0.05, η2 = 0.25.

Rhythmic Engagement and Positive Affect.

Is rhythmic engagement and coordination with music a source of positive affect in the human infant? The question was addressed by computing the overall correlation between the duration of movement and the duration of smiles, which was r = 0.30 (P < 0.05) in experiment 1 and r = 0.37 (P < 0.01) in experiment 2. Next, we asked whether the degree of rhythmic coordination was positively related to smiling. To this end, eight dancers were recruited from a professional ballet company (Geneva Opera) in experiment 1. We extracted all bouts of rhythmic movement and had the dancers evaluate the infants’ synchronization accuracy on a 7-point Likert scale (1 = totally out of synchronization; 7 = perfect synchronization). The advantage of this holistic approach to synchrony estimation is that the dancers could be instructed to evaluate synchrony of infant movements with rhythm in its entire complexity rather than with isochronous drumbeats only. The same movement segments were then coded for duration of smiles by a separate group of coders. There was an appreciable correlation between infants’ synchronization accuracy and the duration of their smiles during rhythmic movement segments, r = 0.42, P < 0.01. Although a different rhythmic coordination index was used in experiment 2 (above), degree of coordination between musical and motor tempo was again positively related to smiling, r = 0.26 (P = 0.06).

Discussion

We have shown that human infants spontaneously display rhythmic motion of their bodies to music, rhythmical patterns with a regular beat, and isochronous drumbeats. In contrast, infants do not do so in response to AD and ID speech. The pattern suggests that induction of rhythmic behavior in infants requires auditory stimuli that are metrically regular. Indeed, the systematic manipulation of the stimulus material allowed us to discern that it is the beat, rather than other features of the music, that drives rhythmic engagement to music in infants. Infants also exhibited a certain degree of tempo flexibility. Furthermore, the stimulus with the most acceleratory passages elicited the most acceleratory movements. These findings point to a precocious ability to mirror auditory rhythms motorically. It is important to note that all rhythmic behaviors occurred in a completely spontaneous, unsolicited way in the current experiments, whereas children in previous studies needed prompting or practice trials to entrain.

Although these rhythmic behaviors could be seen as a rudimentary form of what later evolves into entrainment, it is important to note that we found no evidence for movement-to-music synchronization. Synchronization, which is characterized by perfectly overlapping music and body-movement phases, requires a degree of motor control that may not be achieved until preschool age. It is possible, of course, that the unfamiliar music and laboratory conditions led us to underestimate the incidence of infants' rhythmic movement to music and other rhythmical stimuli. In addition, the current stimuli were relatively complex, and our findings suggest that stimuli with high beat clarity are more likely to trigger movement that is aligned with musical time.

A few findings were not anticipated. First, the youngest infants engaged rhythmically with ID speech in experiment 2. One possible explanation for this anomalous finding lies in the distinctive appeal that ID speech holds for infants around 6 months of age; preference for ID speech declines thereafter (21). Hence, the greater preference for ID speech in the 6-month-olds may have engendered more positive excitement and greater motor activation. Second, age effects tended to be more modest than expected. It is possible that infants are too limited in their motor control to make significant progress toward whole-body entrainment in the first 2 years of life. This view is consistent with the relatively late onset of tapping accuracy (4–8). Third, the extent of rhythmic engagement with the tempo-fluctuating music was surprising. The most obvious explanation is that we failed to produce a truly irregular stimulus and succeeded only in creating regular music that slowed down and sped up. It is also of note, however, that speeding up and slowing down are devices frequently used in folk dances, and it has recently been shown that they can actually be conducive to entrainment (22).

An important question left untouched concerns the mechanisms that may drive infants’ rhythmic engagement with music and other metrically regular sounds. It has been suggested that movement coordination with music has a social origin and is therefore facilitated by a social context (6). It is all the more surprising that the current behaviors occurred in the absence of any kind of social cues, calling attention to psychological and neurobiological factors. The extent of rhythmic engagement and coordination with our experimental stimuli was positively related to displays of positive affect. This association raises the possibility that surges in positive affect may facilitate, and perhaps even motivate, rhythmic engagement with metrically regular sound patterns. If this were the case, however, could it be that the experimental stimuli led to more extensive movements compared with human speech because of their arousing properties rather than because of their greater metrical regularity? Similarly, could the faster movements in response to faster stimuli have been driven by increases in arousal rather than by increases in tempo?

The fact that the experimental stimuli did not just lead to an increase in any movement, but to an increase in a specific kind of rhythmically regular movement, weakens the alternative explanation for the differential responses to speech vs. music somewhat. Arousal also seems unlikely to be the sole determinant of tempo flexibility because, whereas the tempo dimension of movement (periodicity) was sensitive to stimulus tempo, rate or vigor of movement (velocity) was not. Of course, arousal must be involved in the behaviors observed, even if only because movement is a form of arousal and arousal often finds its natural expression in movement. However, whether forms of psychological arousal are antecedents to bodily changes, or consequences thereof, has remained a matter of controversy since W. James proposed that it is the interoception of bodily changes that constitutes an emotion (23). In this view, what may have generated positive affect in our infants is the interoceptive feedback from moving in time with rhythmic pulses.

Neurobiological mechanisms underlying entrainment in adults provide an additional possible explanation. Recent imaging research suggests that the basal ganglia, the cerebellum, and the connections between the auditory and motor cortices along the dorsal auditory pathway are involved in auditory-motor coordination, with the ventral premotor cortex and left posterior superior temporal parietal junctions having a particularly important role (3, 24, 25). Another area involved in the transfer of auditory beats to locomotion tempo is the evolutionarily ancient and early-developing vestibular system (26). Rather than requiring lengthy and effortful training to develop, these or related mechanisms may operate from an early age in almost automatic ways. This notion, however speculative, is consistent with the finding that the extent of previous exposure to music did not seem to affect infants' rhythmic responses (SI Text).

Assuming that humans possess a brain mechanism for coordinating movement to music and other metrically regular sounds, one that is present from infancy, what kind of adaptive function would this mechanism serve? One possibility is that the involved brain mechanism was a target of natural selection for music; another is that it evolved for some other function that just happens to be relevant for music processing (27). The latter position recognizes that rhythmic pulses, although a salient feature in music, are also a recurrent element in our extramusical daily life. For example, the propensity for coordinating auditory rhythmic pulses with movement might have been a target of natural selection because it facilitates the alignment of movement patterns with environmental and social sounds of adaptational significance. More infant and animal work is needed before a well-founded scrutiny of these alternatives is possible.

Materials and Methods

Samples.

Experiment 1 involved a sample of 51 full-term, healthy infants (24 male and 27 female) aged between 6 and 16 months. Eleven additional infants were tested but excluded because they became fussy (10) or because of experimenter error (1). The parents were middle-class residents of the Geneva metropolitan area. They were recruited through leaflets distributed in pediatricians' and midwives' offices and through a mailing sent to university staff. Experiment 2 involved a sample of 69 full-term, healthy infants (35 male, 34 female) aged between 5 and 24 months. Thirty-four additional infants were tested but excluded because they became fussy (18), interacted excessively with the caregiver (15), or because of technical error (1). The parents were middle-class residents of the Jyväskylä area, a moderate-sized city in Finland. They were recruited in a similar manner to the parents in experiment 1. All caregivers of the infants provided informed consent prior to the experiment.

Stimuli.

In experiment 1, the stimulus material consisted of the last movement of Mozart's Eine Kleine Nachtmusik and the Finale from Saint-Saëns's Carnaval des Animaux. Each excerpt was presented both in the original orchestral version (Audio S1 and S3) and in a rhythm-only version (Audio S2 and S4). For the rhythm-only version, the base rhythm of the original was transferred to a new, empty file by using drumbeat sound functions in Logic Express 6 software. In addition to the four experimental stimuli, there was a control stimulus consisting of a prerecorded children's story taken from a book for blind children, for a total of five trials (Audio S5). In experiment 2, we retained two of the stimuli from experiment 1 (Saint-Saëns's music and rhythm) for replication purposes. A second musical stimulus consisted of children's music that had been successful in setting 2- to 4-year-old toddlers in motion (Audio S7) (8). The AD speech stimulus was replaced by ID speech in a language foreign to the infants (English). The purpose of this alteration was twofold. First, ID speech is not temporally regular, but it is more regular than AD speech. Second, we intended to increase the pleasantness and relevance of the speech stimulus to an infant population while keeping its semantic meaning incomprehensible (Audio S6).

To scrutinize the role of rhythm in entrainment, we created a beat-only stimulus that consisted of a 4/4 pattern with two percussion sounds organized in two tempo sections. One tempo was chosen to be fast [interonset interval (IOI) of 285 ms], in the same range as Saint-Saëns and within the range of the spontaneous motor tempo of infants (Audio S8). For preschool children, the mean spontaneous motor tempo, or preferred tapping rate, is about 300 ms (4, 5). The other tempo was set to an IOI of 428 ms (Audio S9), and the order of the two tempo sections within the beat condition was randomized. A further exploration of the role of rhythmic structure in entrainment was created by distorting the periodic structure of the Saint-Saëns music. The stimulus with tempo fluctuations (Audio S10) was created by randomly stretching and compressing the temporal structure of the original Saint-Saëns music version (Audio S3) while preserving pitch and spectrum, by means of a phase vocoder (28). All stimuli lasted 102 s, and meter was held constant across the experimental stimuli (4/4).
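To make the two tempo sections concrete: if each IOI is taken to be one beat (an assumption), 285 ms corresponds to 60/0.285 ≈ 210.5 beats per minute and 428 ms to 60/0.428 ≈ 140.2 beats per minute. The sketch below generates an onset schedule for such a two-section beat track. The equal split of the 102 s between sections and the assignment of sound "A" to beats 1 and 3 and sound "B" to beats 2 and 4 are hypothetical choices for illustration, as is the function name.

```python
import random


def beat_track_onsets(ioi_fast=0.285, ioi_slow=0.428, total_dur=102.0, seed=None):
    """Onset schedule (time_s, sound, ioi_s) for a two-section 4/4 beat track.

    Hypothetical layout: the two tempo sections share the total duration
    equally, their order is randomized, beats 1 and 3 of each bar use sound
    "A", and beats 2 and 4 use sound "B".
    """
    rng = random.Random(seed)
    sections = [ioi_fast, ioi_slow]
    rng.shuffle(sections)  # randomize the order of the tempo sections
    section_dur = total_dur / len(sections)
    events = []
    start = 0.0
    for ioi in sections:
        n_beats = int(section_dur // ioi)  # whole beats that fit in the section
        for k in range(n_beats):
            sound = "A" if k % 2 == 0 else "B"
            events.append((round(start + k * ioi, 6), sound, ioi))
        start += section_dur
    return events


events = beat_track_onsets(seed=1)
```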

Tempo in all stimuli was extracted by a two-phase process. First, the spectral flux of the signal was calculated (window length of 46 ms, overlap of 78.5%), considering only increases in energy and taking the cosine distance between successive frames. Second, the periodicity of this half-wave rectified signal was estimated by using the enhanced autocorrelation (29). The autocorrelation function was further weighted by a resonance function (30), which was centered on 300 ms to be closer to the assumed preferred rate of the infants (Fig. S1). This method, which is common in beat detection (31, 32), yields results largely similar to those of several alternative methods (energy-based and onset-detection-based methods) in this material, although applying such music-specific techniques to speech is more problematic. All operations were carried out using MIRtoolbox (33).
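The two-phase tempo estimate described above can be sketched in NumPy as follows. This is a simplified analogue, not MIRtoolbox's implementation. It makes three assumptions: a plain half-wave rectified spectral difference stands in for the cosine-distance variant; the enhanced autocorrelation follows the clip, subtract the copy stretched by a factor of 2 in lag, and clip again scheme; and the resonance curve is a hypothetical log-Gaussian centered on 300 ms. All function names are illustrative.

```python
import numpy as np


def spectral_flux(signal, sr, win_s=0.046, overlap=0.785):
    """Half-wave rectified spectral flux curve and its frame rate (frames/s)."""
    win = int(round(win_s * sr))
    hop = max(1, int(round(win * (1.0 - overlap))))
    window = np.hanning(win)
    n_frames = 1 + (len(signal) - win) // hop
    frames = np.stack([signal[i * hop:i * hop + win] * window
                       for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1))
    # Keep only increases in spectral energy between successive frames.
    flux = np.maximum(np.diff(mag, axis=0), 0.0).sum(axis=1)
    return flux, sr / hop


def enhanced_autocorrelation(x):
    """Clip the normalized ACF at zero, subtract a copy stretched by 2, clip."""
    x = x - x.mean()
    acf = np.correlate(x, x, mode="full")[len(x) - 1:]
    if acf[0] <= 0:
        return np.zeros_like(acf)
    acf = np.maximum(acf / acf[0], 0.0)
    lags = np.arange(len(acf))
    stretched = np.interp(lags / 2.0, lags, acf)  # ACF value at half the lag
    return np.maximum(acf - stretched, 0.0)  # suppresses multiples of the period


def estimate_period(signal, sr, center_s=0.3, width_oct=1.0, min_s=0.1, max_s=2.0):
    """Beat period (s): peak of the enhanced ACF weighted by a resonance curve."""
    flux, frame_rate = spectral_flux(np.asarray(signal, dtype=float), sr)
    eacf = enhanced_autocorrelation(flux)
    lags_s = np.arange(len(eacf)) / frame_rate
    valid = (lags_s >= min_s) & (lags_s <= max_s)
    # Hypothetical log-Gaussian resonance centered on center_s (300 ms here).
    weight = np.zeros_like(lags_s)
    weight[valid] = np.exp(-0.5 * (np.log2(lags_s[valid] / center_s) / width_oct) ** 2)
    return float(lags_s[np.argmax(eacf * weight)])


sr = 8000
clicks = np.zeros(sr * 10)
clicks[::sr // 2] = 1.0  # one click every 0.5 s
period = estimate_period(clicks, sr)
```

The resonance weighting biases the choice among candidate periods toward the assumed preferred rate, which is why the text notes that applying such music-specific machinery to speech is problematic.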

Procedure and Equipment.

In experiment 1, auditory stimuli were delivered through two loudspeakers placed at a distance of 1 m, 50 cm in front of the infant and at eye level. Between the two loudspeakers was a personal computer with a 17-inch screen that controlled acoustic stimulus delivery. Flowers were presented on the screen, changing at a very slow rate (10 s). This casual attentional focus was provided to keep the infant's position directed toward the source of sound and to impede excessive visual search behavior, notably visual search of the parent. The stimuli were delivered at a sound pressure level of 65 dB(A), in counterbalanced order and with an interstimulus interval of 10 s.

In experiment 2, the procedures were identical except that three to eight reflective markers were attached to each infant before the experiment. Infants' movements were recorded by a 3D motion-capture tracking system (five Qualisys Pro Reflex cameras; spatial accuracy < 2 mm; temporal resolution 60 frames per second). Complete motion-capture data for computing movement-to-music synchronization were available for 52 infants; data were incomplete for the others because they rejected the reflective markers (13) or because of technical problems (4). The periodicity of movements was analyzed from the movements of all markers in three dimensions by using the enhanced autocorrelation technique in a running window (29, 30). This technique provides an estimate of the periodicity of the movements for each moment in time. To remove noise, that is, rapid periods (<100 ms) and slow oscillations (>900 ms), we modeled the first local maximum of the enhanced autocorrelation by fitting a normal distribution to it. This analysis yielded the period of the movement, the amplitude of the period, and the duration of the periodic movement within each condition (see Fig. 2). Other techniques, such as estimating the period by means of multiple Rayleigh tests in the analysis of circular data and pure fast Fourier transform-based methods, provided converging estimates of the periodicity and its amplitude.
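A minimal sketch of the running-window periodicity analysis for a single marker coordinate at 60 frames per second follows. It restricts the search to lags of 100–900 ms, takes the first local maximum of the enhanced autocorrelation, and refines its position with a three-point Gaussian (log-parabolic) interpolation. That interpolation is a simplified stand-in for the normal-distribution fit described above, and the 3-s window, 0.5-s hop, and function names are assumptions.

```python
import numpy as np


def enhanced_acf(x):
    """Normalized ACF clipped at zero, minus a copy stretched by 2 in lag, clipped."""
    x = x - x.mean()
    acf = np.correlate(x, x, mode="full")[len(x) - 1:]
    if acf[0] <= 0:
        return np.zeros_like(acf)
    acf = np.maximum(acf / acf[0], 0.0)
    lags = np.arange(len(acf))
    return np.maximum(acf - np.interp(lags / 2.0, lags, acf), 0.0)


def movement_periods(position, fps=60.0, win_s=3.0, hop_s=0.5, min_s=0.1, max_s=0.9):
    """Running-window (start_s, period_s, amplitude) for one marker coordinate.

    period_s is None when no local maximum lies within the 100-900 ms range.
    Window length and hop are assumed values.
    """
    win, hop = int(win_s * fps), int(hop_s * fps)
    lo, hi = int(np.ceil(min_s * fps)), int(np.floor(max_s * fps))
    out = []
    for start in range(0, len(position) - win + 1, hop):
        eacf = enhanced_acf(np.asarray(position[start:start + win], dtype=float))
        result = (start / fps, None, 0.0)
        for k in range(max(lo, 1), min(hi, len(eacf) - 2) + 1):
            y0, y1, y2 = eacf[k - 1], eacf[k], eacf[k + 1]
            if y1 > y0 and y1 >= y2:  # first local maximum in range
                delta = 0.0
                if min(y0, y1, y2) > 0:
                    # Gaussian (log-parabolic) interpolation of the peak lag.
                    l0, l1, l2 = np.log([y0, y1, y2])
                    denom = l0 - 2 * l1 + l2
                    delta = 0.5 * (l0 - l2) / denom if denom != 0 else 0.0
                result = (start / fps, (k + delta) / fps, float(y1))
                break
        out.append(result)
    return out


t = np.arange(600) / 60.0
foot = np.sin(2 * np.pi * t / 0.46)  # synthetic 460-ms oscillation
estimates = movement_periods(foot)
```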

Movement velocity and acceleration were calculated as the mean of the first- (velocity) and second- (acceleration) order time derivatives of the marker positions over a 500-ms analysis window, using a Savitzky-Golay filter. Artifacts caused by occluded markers were filtered out by removing observations that deviated by more than 3 SDs from the mean.
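A minimal sketch of this step using SciPy's `savgol_filter` follows. It assumes the 500-ms analysis window is the filter length (30 frames at 60 frames per second, rounded up to an odd 31), a cubic polynomial order, and that "velocity" and "acceleration" are the magnitudes of the 3D derivative vectors, because averaging signed derivatives of oscillating limbs would largely cancel out. These choices are assumptions, not reported parameters.

```python
import numpy as np
from scipy.signal import savgol_filter


def velocity_acceleration(positions, fps=60.0, window_s=0.5, polyorder=3):
    """Mean speed and acceleration magnitude of one marker.

    positions: array (n_frames, 3), e.g. in mm. Window length, polyorder
    and the use of vector magnitudes are assumptions for this sketch.
    """
    pos = np.asarray(positions, dtype=float)
    win = int(round(window_s * fps))
    if win % 2 == 0:
        win += 1  # savgol_filter needs an odd window length
    dt = 1.0 / fps
    vel = savgol_filter(pos, win, polyorder, deriv=1, delta=dt, axis=0)
    acc = savgol_filter(pos, win, polyorder, deriv=2, delta=dt, axis=0)
    speed = np.linalg.norm(vel, axis=1)
    acc_mag = np.linalg.norm(acc, axis=1)

    def trimmed_mean(x):
        # Drop observations more than 3 SDs from the mean (occlusion artifacts).
        keep = np.abs(x - x.mean()) <= 3 * x.std()
        return float(x[keep].mean())

    return trimmed_mean(speed), trimmed_mean(acc_mag)


t = np.arange(600) / 60.0
straight = np.column_stack([100.0 * t, np.zeros_like(t), np.zeros_like(t)])
v_mean, a_mean = velocity_acceleration(straight)  # constant 100 mm/s, no acceleration
```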

Data Analysis.

Data analysis relating to Fig. 1.

In both experiments, repeated measures ANOVAs, with sex and order as the between-subject variables and experimental condition as the repeated measure, yielded a significant main effect of experimental condition on duration of periodic movement, but no effect of sex or order of presentation. Because of the directional nature of our expectations, we analyzed the predicted patterns by using planned contrasts for repeated measures. First, we tested a contrast of all experimental stimuli (musical and rhythmic) against the control stimulus (speech), assigning a weight of +1 to each experimental condition (four in experiment 1, five in experiment 2) and a weight of −4 (experiment 1) or −5 (experiment 2) to the control condition, so that the weights sum to zero (19). The contrasts were highly significant: t(50) = 4.84, P < 0.001, η2 = 0.56 for experiment 1; t(68) = 3.75, P < 0.001, η2 = 0.41 for experiment 2.
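Planned repeated-measures contrasts of this kind reduce each infant's condition durations to a single L-score (the weighted sum of that infant's durations), which is then tested against zero with a one-sample t test on n − 1 degrees of freedom. The following is a minimal sketch with hypothetical function names. As a numerical aside, the effect sizes reported as η2 above coincide with r = sqrt(t² / (t² + df)): for example, t(50) = 4.84 gives 0.56 and t(68) = 3.75 gives 0.41.

```python
import numpy as np
from scipy import stats


def effect_size_r(t, df):
    """Contrast effect size r = sqrt(t^2 / (t^2 + df))."""
    return float(np.sqrt(t**2 / (t**2 + df)))


def planned_contrast(durations, weights):
    """Within-subject planned contrast on L-scores, tested against zero.

    durations: array (n_infants, n_conditions) of rhythmic-movement durations.
    weights: one weight per condition, summing to zero,
             e.g. (+1, +1, +1, +1, -4) for experiment 1.
    Returns (t, df, two-sided p, effect size r).
    """
    w = np.asarray(weights, dtype=float)
    if not np.isclose(w.sum(), 0.0):
        raise ValueError("contrast weights must sum to zero")
    l_scores = np.asarray(durations, dtype=float) @ w  # one L-score per infant
    res = stats.ttest_1samp(l_scores, 0.0)
    df = len(l_scores) - 1
    t = float(res.statistic)
    return t, df, float(res.pvalue), effect_size_r(t, df)


toy = [[10, 10, 10, 10, 2], [8, 9, 7, 8, 4], [12, 11, 13, 12, 1]]  # made-up data
t_toy, df_toy, p_toy, r_toy = planned_contrast(toy, (1, 1, 1, 1, -4))
```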

More specific contrasts comparing the duration of rhythmic movement during the two music stimuli (weights +1, +1) with the duration of movement during the control (speech) stimulus (weight −2) were significant in experiment 1, t(50) = 5.68, P < 0.001, η² = 0.63, and in experiment 2, t(68) = 2.95, P < 0.01, η² = 0.34. Similarly, the two purely rhythmic stimuli generated significantly more movement than did the speech stimuli in experiment 1, t(50) = 4.28, P < 0.001, η² = 0.52, and in experiment 2, t(68) = 3.14, P < 0.01, η² = 0.36. Importantly, the rhythmic versions of the stimuli induced about as much rhythmic movement as the original musical stimuli: the contrast between the musical and the rhythmic versions was not significant in experiment 1, t(50) = 0.15, P = ns, or in experiment 2, t(68) = 0.61, P = ns.
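Each effect size in this section can be recomputed from its t statistic and degrees of freedom. A minimal sketch, assuming the standard conversion r = √(t²/(t² + df)) used for single-df contrasts by Rosenthal and Rosnow (19): applied to the six significant t tests reported above, it gives 0.56, 0.41, 0.63, 0.34, 0.52, and 0.36, which match the reported values to two decimals. This quantity is a correlation-type effect size; its square, t²/(t² + df), is the proportion of variance associated with the contrast.

```python
from math import sqrt


def r_contrast(t: float, df: int) -> float:
    """Effect size r for a single-df contrast: sqrt(t**2 / (t**2 + df))."""
    return sqrt(t * t / (t * t + df))


# (t, df) pairs as reported in the two preceding paragraphs.
REPORTED = [(4.84, 50), (3.75, 68), (5.68, 50), (2.95, 68), (4.28, 50), (3.14, 68)]

for t, df in REPORTED:
    print(f"t({df}) = {t:.2f} -> r = {r_contrast(t, df):.2f}")
```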

Data analysis relating to the section “Rhythmic Engagement and Positive Affect.”

The overall duration of smiles across all conditions was coded from the video records by two independent observers. Interrater agreement, assessed on 20% of the video records, was satisfactory (experiment 1: κ = 0.77; experiment 2: κ = 0.76). In experiment 1, the rhythmic movement segments (n = 346) were split into two equal parts, each of which was rated by four dancers. The average effective interrater reliability was R_Cronbach = 0.78 [0.80 for Part 1; 0.76 for Part 2 (see ref. 19)]. The smile durations within these segments were coded by nine trained psychology students: the segments were divided into three equal parts, each rated by three observers. The average effective interrater reliability was R_Cronbach = 0.84.
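The two reliability indices in this paragraph can be sketched as follows. The sketch assumes that κ is Cohen's kappa computed on categorical codes (for example, smile versus no smile in successive time bins) and that R_Cronbach is Cronbach's α computed with raters treated as items and segments as cases. The coding unit is not specified in the text, so both assumptions, the function names, and the toy data are illustrative only.

```python
from collections import Counter
from statistics import variance
from typing import Hashable, Sequence


def cohens_kappa(codes_a: Sequence[Hashable], codes_b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two observers coding the same units."""
    n = len(codes_a)
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when both observers use one identical category")
    return (observed - expected) / (1.0 - expected)


def cronbach_alpha(ratings: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha with one row per segment and one column per rater."""
    k = len(ratings[0])
    rater_vars = [variance([float(row[j]) for row in ratings]) for j in range(k)]
    total_var = variance([float(sum(row)) for row in ratings])
    return k / (k - 1) * (1.0 - sum(rater_vars) / total_var)
```

When all raters give identical scores, α equals 1; disagreement among raters inflates the individual rater variances relative to the variance of the summed scores and lowers α.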

Acknowledgments

We thank Minna Huotilainen, John Iversen, Jerome Kagan, Aniruddh Patel, Philippe Rochat, and Petri Toiviainen for comments on earlier versions of this manuscript and Anna Buhbe, Eerika Niemelä, Alexandra Russell, and Terhi Väisänen for assisting with data collection and coding. Portions of this work were supported by a grant from the Swiss National Science Foundation (to M.Z.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/cgi/content/full/1000121107/DCSupplemental.

References

- 1. Repp BH. Sensorimotor synchronization: A review of the tapping literature. Psychon Bull Rev. 2005;12:969–992. doi: 10.3758/bf03206433.

- 2. Nettl B. In: The Origins of Music. Wallin NL, Merker B, Brown S, editors. Cambridge, MA: MIT Press; 2000. pp. 463–473.

- 3. Zatorre RJ, Chen JL, Penhune VB. When the brain plays music: Auditory-motor interactions in music perception and production. Nat Rev Neurosci. 2007;8:547–558. doi: 10.1038/nrn2152.

- 4. Provasi J, Bobin-Begue A. Spontaneous motor tempo and rhythmical synchronisation in 2-1/2 and 4-year-old children. Int J Behav Dev. 2003;27:220–231.

- 5. Drake C, Jones MR, Baruch C. The development of rhythmic attending in auditory sequences: Attunement, referent period, focal attending. Cognition. 2000;77:251–288. doi: 10.1016/s0010-0277(00)00106-2.

- 6. Kirschner S, Tomasello M. Joint drumming: Social context facilitates synchronization in preschool children. J Exp Child Psychol. 2009;102:299–314. doi: 10.1016/j.jecp.2008.07.005.

- 7. McAuley J, Jones M, Holub S, Johnston H, Miller N. The time of our lives: Life span development of timing and event tracking. J Exp Psychol Gen. 2006;135:348–367. doi: 10.1037/0096-3445.135.3.348.

- 8. Eerola T, Luck G, Toiviainen P. In: Proceedings of the 9th International Conference on Music Perception and Cognition, Bologna. Baroni M, Addessi AR, Caterina R, Costa M, editors. Bologna, Italy: The Society for Music Perception and Cognition and European Society for the Cognitive Sciences of Music; 2006. pp. 472–476.

- 9. Winkler I, Háden GP, Ladinig O, Sziller I, Honing H. Newborn infants detect the beat in music. Proc Natl Acad Sci USA. 2009;106:2468–2471. doi: 10.1073/pnas.0809035106.

- 10. Trehub SE, Thorpe LA. Infants’ perception of rhythm: Categorization of auditory sequences by temporal structure. Can J Psychol. 1989;43:217–229. doi: 10.1037/h0084223.

- 11. Hannon E, Johnson S. Infants use meter to categorize rhythms and melodies: Implications for musical structure learning. Cognit Psychol. 2005;50:354–377. doi: 10.1016/j.cogpsych.2004.09.003.

- 12. Phillips-Silver J, Trainor LJ. Feeling the beat: Movement influences infants’ rhythm perception. Science. 2005;308:1430. doi: 10.1126/science.1110922.

- 13. Thelen E. Rhythmical stereotypies in normal human infants. Anim Behav. 1979;27:699–715. doi: 10.1016/0003-3472(79)90006-x.

- 14. Moog H. The development of musical experience in children of preschool age. Psychol Music. 1976;4:38–45.

- 15. Trevarthen C. Musicality and the intrinsic motive pulse: Evidence from human psychobiology and infant communication. Music Sci. 1999–2000;(Special Issue):155–215.

- 16. Patel AD, Iversen JR, Bregman MR, Schulz I. Experimental evidence for synchronization to a musical beat in a nonhuman animal. Curr Biol. 2009;19:827–830. doi: 10.1016/j.cub.2009.03.038.

- 17. Schachner A, Brady TF, Pepperberg IM, Hauser MD. Spontaneous motor entrainment to music in multiple vocal mimicking species. Curr Biol. 2009;19:831–836. doi: 10.1016/j.cub.2009.03.061.

- 18. Breland K, Breland M. The misbehavior of organisms. Am Psychol. 1961;16:681–684.

- 19. Rosenthal R, Rosnow RL. Essentials of Behavioral Research: Methods and Data Analysis. New York: McGraw-Hill; 2008.

- 20. Lartillot O, Eerola T, Toiviainen P, Fornari J. Multi-feature modelling of pulse clarity: Design, validation, and optimization. In: Bello JP, Chew E, Turnbull D, editors. Proceedings of the 9th International Conference on Music Information Retrieval. Philadelphia: Drexel University; 2008. pp. 521–526.

- 21. Newman RS, Hussain I. Changes in preference for infant-directed speech in low and moderate noise by 5- to 13-month-olds. Infancy. 2006;10:61–76. doi: 10.1207/s15327078in1001_4.

- 22. Rankin S, Large EW, Fink P. Fractal tempo fluctuation and pulse prediction. Music Percept. 2009;26:401–413. doi: 10.1525/mp.2009.26.5.401.

- 23. Keltner D, Lerner JS. In: The Handbook of Social Psychology. 5th Ed. Gilbert D, Fiske S, Lindzey G, editors. New York: McGraw Hill; 2010. Chapter 9.

- 24. Brown S, Martinez MJ, Parsons LM. The neural basis of human dance. Cereb Cortex. 2006;16:1157–1167. doi: 10.1093/cercor/bhj057.

- 25. Warren J, Wise R, Warren J. Sounds do-able: Auditory–motor transformations and the posterior temporal plane. Trends Neurosci. 2005;28:636–643. doi: 10.1016/j.tins.2005.09.010.

- 26. Trainor L. Do preferred beat rate and entrainment to the beat have a common origin in movement? Empirical Musicology Review. 2007;2:17–20.

- 27. Patel A. Music, Language, and the Brain. New York: Oxford University Press; 2008.

- 28. Ellis DPW. A Phase Vocoder in Matlab. 2002. http://www.ee.columbia.edu/~dpwe/resources/matlab/pvoc/ Accessed March 27, 2009.

- 29. Tzanetakis G, Cook P. Musical genre classification of audio signals. IEEE T Speech Audi P. 2002;10:293–302.

- 30. Toiviainen P, Snyder JS. Tapping to Bach: Resonance-based modeling of pulse. Music Percept. 2003;21:43–80.

- 31. Van Noorden L, Moelants D. Resonance in the perception of musical pulse. J New Music Res. 1999;28:43–66.

- 32. McKinney M, Moelants D, Davies M, Klapuri A. Evaluation of audio beat tracking and music tempo extraction algorithms. J New Music Res. 2007;36:1–16.

- 33. Lartillot O, Toiviainen P. MIR in Matlab (II): A toolbox for musical feature extraction from audio. In: Dixon S, Bainbridge D, Typke R, editors. Proceedings of the 8th International Conference on Music Information Retrieval. Vienna, Austria: Österreichische Computer Gesellschaft; 2007. pp. 127–130.
