Abstract

Learning typically increases the strength of responses and the number of neurons that respond to training stimuli. Few studies have explored representational plasticity using natural stimuli, however, leaving unknown the changes that accompany learning under more realistic conditions. Here, we examine experience-dependent plasticity in European starlings, a songbird with rich acoustic communication signals tied to robust, natural recognition behaviors. We trained starlings to recognize conspecific songs and recorded the extracellular spiking activity of single neurons in the caudomedial nidopallium (NCM), a secondary auditory forebrain region analogous to mammalian auditory cortex. Training induced a stimulus-specific weakening of the neural responses (lower spike rates) to the learned songs, whereas the population continued to respond robustly to unfamiliar songs. Additional experiments rule out stimulus-specific adaptation and general biases for novel stimuli as explanations of these effects. Instead, the results indicate that associative learning leads to single neuron responses in which both irrelevant and unfamiliar stimuli elicit more robust responses than behaviorally relevant natural stimuli. Detailed analyses of these effects at a finer temporal scale point to changes in the number of motifs eliciting excitatory responses above a neuron's spontaneous discharge rate. These results show a novel form of experience-dependent plasticity in the auditory forebrain that is tied to associative learning and in which the overall strength of responses is inversely related to learned behavioral significance.

INTRODUCTION

The response characteristics of neurons can be modified by developmental manipulations (Hubel and Wiesel 1965; Zhang et al. 2001, 2002), sensory deprivation (Robertson and Irvine 1989; Wiesel and Hubel 1963), and learning (Merzenich et al. 1984; Nudo et al. 1996). A common finding in many regions of the brain is that learning enlarges the representations of learned stimuli. In the primary auditory cortex (AI), learning typically causes a shift in the receptive fields of single neurons toward training sounds, resulting in an increased number of neurons responding strongly to the training sounds (Bakin and Weinberger 1990; Fritz et al. 2003; Polley et al. 2006; Recanzone et al. 1993; Rutkowski and Weinberger 2005; Weinberger 2004). Both simple stimulus exposure and noncontingent pairing of stimulus and reward fail to induce tonotopic changes (Blake et al. 2006; Recanzone et al. 1993). Instead, the experience-dependent tonotopic expansion in AI is understood to be mediated by associative learning mechanisms (Weinberger 1995).

The foregoing studies showed that learning induces broad-scale changes in the tonotopic organization of AI. Nonetheless, it remains unclear how experience-dependent plasticity contributes to the processing of complex natural stimuli under the demands of ecologically relevant behaviors. Natural acoustic signals typically vary along multiple spectral and temporal dimensions, and power at single spectral bands is seldom behaviorally meaningful. In animals where auditory learning is an adaptive species-typical behavior, qualitatively different types of neural plasticity may be involved (Galindo-Leon et al. 2009).

Songbirds provide an opportunity to examine sensory plasticity in a neural system where behavioral relevance is tied to complex natural sounds. The songs of individual songbirds, including those of European starlings (Sturnus vulgaris), are composed of unique spectro-temporal features with continuous energy across multiple frequencies (see examples in Figs. 3 and 4). These signals are critical in several adaptive behaviors (Kroodsma and Miller 1996), and the recognition of individual conspecific songs is common among all songbird species studied (Stoddard 1996).

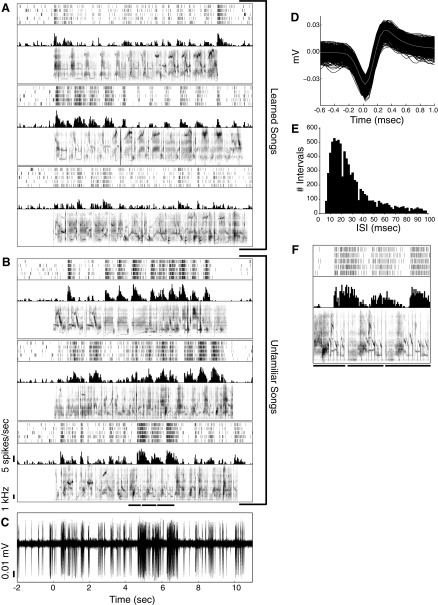

Fig. 3.

Preference for unfamiliar songs in single neurons. A: spectrogram, peristimulus time histogram (PSTH), and raster plot for responses of a single neuron to 5 repetitions of the 3 learned and (B) 3 unfamiliar songs that elicited the strongest mean firing rates from this neuron. Spikes are binned in 20-ms bins for the PSTH. C: sample trace showing raw voltage recorded during the 1st repetition of the bottom song. Zero marks the time of stimulus onset. D: overlay of spike waveforms with the mean (gray line). E: distribution of interspike intervals. Capped at 100 ms to show values near 0. F: zoomed in raster, PSTH, and spectrogram for the 3 motifs underlined in the bottom song in B. The separate lines show the start and stop of the different motifs. The mean spontaneous firing rate of this neuron was 5.46 spikes/s. This neuron was recorded at a depth of 3,020 μm.

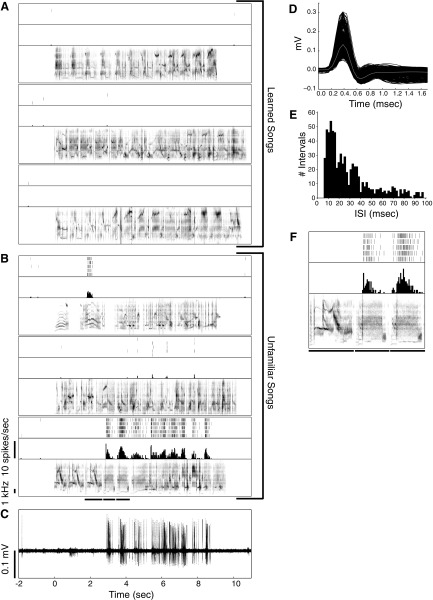

Fig. 4.

Preference for unfamiliar songs in single neurons. As in Fig. 3 for a different NCM neuron. The mean spontaneous firing rate of this neuron was 0.13 spikes/s. This neuron was recorded at a depth of 2,920 μm. In D, there is some variability in spike height caused by an improvement in isolation during recording as spike height increased.

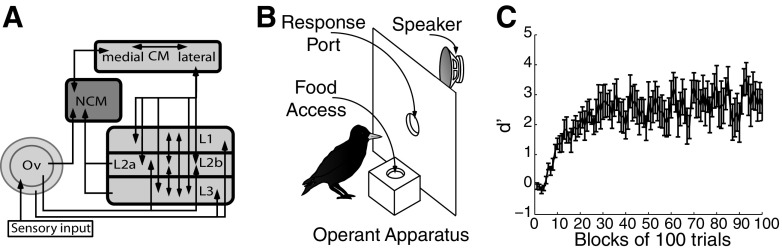

Here, we examine experience-dependent plasticity within the context of individual vocal recognition in European starlings. We focus on the caudomedial nidopallium (NCM), an auditory forebrain region analogous to secondary auditory cortex in mammals (Farries 2004). Neurons in NCM have complex response properties (Müller and Leppelsack 1985; Stripling et al. 1997) that can change with experience (Chew et al. 1995; Phan et al. 2006; Stripling et al. 1997) and are involved in developmental vocal learning (Bolhuis et al. 2000; Gobes and Bolhuis 2007; Phan et al. 2006; Terpstra et al. 2004). NCM is connected directly to field L, the primary thalamo-recipient zone in the avian auditory forebrain, and to the caudomedial mesopallium (CMM), another secondary auditory forebrain region (Fig. 1A). Neurons in these regions respond more strongly to conspecific song than to other complex stimuli (Chew et al. 1996; Stripling et al. 1997; Theunissen and Shaevitz 2006; Theunissen et al. 2004), and CMM neurons show an increased selectivity for the behaviorally relevant components of learned songs (motifs) after recognition training (Gentner and Margoliash 2003). NCM is likely part of a forebrain network involved in processing conspecific song (Gentner et al. 2004; Pinaud and Terleph 2008).

Fig. 1.

Auditory diagram and song recognition training. A: schematic of the songbird auditory forebrain. Ov, nucleus ovoidalis; L, field L; NCM, caudomedial nidopallium; CM, caudal mesopallium. B: schematic of the operant panel used for song recognition learning. C: acquisition curve showing the mean ± SE performance (d-prime) over the 1st 100 blocks of song recognition training for 9 starlings, 100 trials per block.

This study describes an unexpected form of experience-dependent plasticity in NCM after song recognition training. Unlike CMM (or most studies of mammalian A1), single NCM neurons, particularly in the ventral region, respond more strongly to unfamiliar songs than to learned songs. We show that this plasticity is tied to associative learning and not stimulus exposure or novelty. These song-level changes can be explained by a decrease in the number of motifs from learned songs that elicit an excitatory response from neurons in NCM.

METHODS

Subjects

For this study we caught 12 adult (9 male and 3 female) wild European starlings (S. vulgaris) in southern California. Both sexes readily learn to recognize the songs of individual conspecifics (Gentner and Hulse 1998; Gentner et al. 2000), and CMM neurons in both sexes undergo experience-dependent plasticity in auditory responsiveness (Gentner and Margoliash 2003). Before training and testing, the starlings were housed in large, mixed-sex, flight aviaries with free access to food and water. Throughout captivity and testing, light-dark cycles were synchronized to natural photoperiods. Subjects were unfamiliar with all song stimuli used in this study at the start of training. All procedures were conducted in accordance with the University of California, San Diego IACUC guidelines, and adhere to the APS Guiding Principles in the Care and Use of Animals.

Recognition training

We trained nine subjects (6 males and 3 females) to recognize two to three conspecific songs from two individuals (4–6 songs total) using an established Go/No-go operant procedure (Gentner and Margoliash 2003; Gentner et al. 2006). We removed each starling from the aviary and isolated it in a sound attenuation chamber (Acoustic Systems, Austin, TX). Each chamber was equipped with an operant panel containing a response port and a food hopper (Fig. 1B). Experimental contingencies controlled access to the food hopper. Water was freely available. The starlings remained in their chambers 24 h/d during training, and we provided all their food as part of the song recognition training. Each starling learned to use the operant panel through a series of successive shaping procedures. We monitored peck responses and controlled the stimulus presentation, food hopper, and lights with custom software. We maintained natural photoperiods and the starlings performed trials freely from dawn to dusk. At dusk the computer turned off the house light and operant panel.

Subjects initiated a trial by pecking their beak into the response port. This triggered the presentation of a song from a speaker mounted inside the testing chamber and behind the operant panel. On each trial, the computer selected the song randomly (with replacement) from the set of all training songs for that given subject. After the song completed, the starling had to either 1) peck the response port again within 2 s if the song belonged to one singer (Go trials), or 2) withhold a peck to the response port if the song was from the other singer (No-go trials). Although no punishment was given for attempting to respond before the song finished playing, responses made during the song were not counted. We reinforced responses to the port on Go trials by allowing the subject access to the food hopper for 2 s. Responses to the port on No-go trials initiated a short time-out (10–60 s) during which the house light was extinguished and food was not available. We did not reinforce correctly withholding responses on No-go trials or failing to respond on Go trials.
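The trial contingencies described above can be summarized in a short sketch. This is only an illustration of the stated rules, not the custom software used in the study; the function names and outcome labels (`select_song`, `trial_outcome`, `"food"`, `"timeout"`, `"none"`) are hypothetical.

```python
import random

def select_song(training_songs, rng=random):
    """Pick the next stimulus at random, with replacement, from the training set."""
    return rng.choice(training_songs)

def trial_outcome(song_class, pecked_in_window):
    """Return the consequence of one trial.

    song_class: "go" or "nogo" (which singer the song came from).
    pecked_in_window: True if the bird pecked the response port within
        2 s after the song ended (pecks during the song are not counted).
    """
    if song_class == "go":
        # Correct response: 2 s of access to the food hopper.
        # A missed Go trial has no programmed consequence.
        return "food" if pecked_in_window else "none"
    if song_class == "nogo":
        # Incorrect response: time-out (10-60 s) with the house light off.
        # Correctly withholding is not reinforced.
        return "timeout" if pecked_in_window else "none"
    raise ValueError("song_class must be 'go' or 'nogo'")
```

Only two of the four possible trial outcomes carry a consequence, so the two learned song classes differ in their association with food but not in exposure.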

We created song stimuli by sampling 10-s episodes of continuous singing from large recorded libraries of starling song bouts. We chose song libraries recorded from six different adult male starlings that were captured in Maryland, ensuring the songs were unfamiliar to the subjects at the start of this study. For the training songs, we chose two to three songs from one male and two to three songs from another male. Song stimuli from the same male were taken from nonoverlapping segments of the original source song. Some song stimuli from the same male share a few similar but not identical motifs. For each subject, we saved five to nine songs from different singers for later use as unfamiliar songs during electrophysiological testing. We counterbalanced the assignment of different singers' songs across subjects as training and unfamiliar. Each song served as a training stimulus for 24.2% of neurons and as an unfamiliar stimulus for 37.6% of neurons in our sample.

Recognition/passive exposure training

To distinguish the effects of song recognition learning from song exposure, we trained three additional starlings (all male), using a modified version of the training procedure described above. We taught each starling to use the operant panel using normal shaping procedures. Throughout song recognition training, we alternated 1-h blocks of song recognition and passive song exposure. Each starling began the day with a training block in which they learned to recognize two songs from one male and two songs from another male. As above, each starling controlled the initiation of a trial and the same Go/No-go procedures were used. After an hour, a passive block began when we turned off the operant panel and dimmed the house lights. During each passive block we played four songs to the subject. We selected the passive and the training songs from different males and always played the same set of four songs in each passive block. We yoked each passive song to a training song, such that the songs in each passive block were presented the same number of times, in the same order, and with the same interstimulus intervals as the corresponding training songs in the previous block. This regimen matches song exposure between blocks, but removes all operant contingencies from the passive blocks.

After a passive block completed, a new training block began when we turned on the main house light and operant panel. We continued the sequence of a training block followed by a passive block until dusk. For each subject, we reserved four songs (two each from two males) for use as unfamiliar stimuli in subsequent electrophysiological testing. We counterbalanced the assignment of songs as training, passive and unfamiliar such that each song was used once for the training, passive and unfamiliar conditions. We trained each starling until electrophysiological testing. Each starling's final trials were completed ∼12 h before the electrophysiological experiment began.

Electrophysiology

We recorded extracellular single neuron responses to songs in the NCM. We affixed a small steel pin stereotaxically to the skull with dental acrylic. We attached the pin on the day of electrophysiological testing with the starling under 20% urethane anesthesia (7–8 ml/kg; administered in 3 IM injections over 90–120 min) or in the days preceding the electrophysiological testing with the starling under isoflurane anesthesia. For electrophysiological recording, we placed the subject in a cloth jacket and secured the attached pin to a stereotaxic apparatus inside a sound attenuation chamber. We lowered custom-made, high-impedance, glass-coated Pt-Ir microelectrodes into a small craniotomy dorsal to NCM. We used Spike2 (CED, Cambridge, UK) to present song stimuli, record extracellular waveforms, and sort single neuron spike waveforms off-line. Recordings were considered single units only in cases where the signal-to-noise ratio was high, and the sorted waveforms were clearly separate from other spikes (see Figs. 3 and 4 for examples).

In our initial experiments, a starling's stimulus set consisted of the four to six songs used during recognition training (from 2 individuals) and five to nine unfamiliar songs (from 1–4 individuals). For each starling trained in the song recognition/song exposure procedure, the stimulus set consisted of four songs used in recognition training, four songs heard passively, and four unfamiliar songs. We matched the intensity of all songs to 68-dB peak root mean square and presented them free-field. To search for auditory responsive units, we played all songs in a starling's stimulus set. We searched for neurons from dorsal to ventral and typically made more than one penetration per starling. We presented blocks of five repetitions of each song to each recording site. In a block, we played songs in a randomized order with a 4-s interstimulus interval. Once a block was completed, we searched for a new site. We played the same songs at each recording site and collected responses to a minimum of five stimulus repetitions. Sites were confirmed as being driven by the auditory stimuli if at least one stimulus caused a mean firing rate >1 SD above the mean spontaneous firing rate.
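The criterion for an auditory-driven site can be sketched as follows. The text does not say which SD enters the threshold; this sketch assumes it is the SD of the spontaneous rates measured across stimulus presentations. The function name and input layout are hypothetical.

```python
from statistics import mean, stdev

def is_auditory_driven(evoked_rates_by_song, spontaneous_rates):
    """Return True if at least one song drives the site above threshold.

    evoked_rates_by_song: dict mapping song name -> list of per-repetition
        firing rates (spikes/s).
    spontaneous_rates: list of pre-stimulus firing rates (spikes/s),
        one per presentation.
    Assumption: threshold = mean spontaneous rate + 1 SD of the
    spontaneous rates.
    """
    threshold = mean(spontaneous_rates) + stdev(spontaneous_rates)
    return any(mean(rates) > threshold
               for rates in evoked_rates_by_song.values())
```

A single strongly driving song is enough to pass, so sparsely responding neurons such as the one in Fig. 4 are not excluded by this test.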

In total we recorded 119 single neurons from 12 starlings, roughly 10 neurons per starling (mean = 9.9, range = 6–15 neurons). Ninety-two neurons were recorded from males and 27 neurons were recorded from females. We observed no significant differences in the results when the data were split by sex (2-way ANOVA main effect of sex: F(1,234) = 0.00, P = 0.9965; main effect of familiarity: F(1,234) = 6.77, P = 0.0098; interaction between familiarity and sex: F(1,234) = 1.14, P = 0.2867; Supplemental Fig. S1) and therefore report results below for data pooled from both sexes.

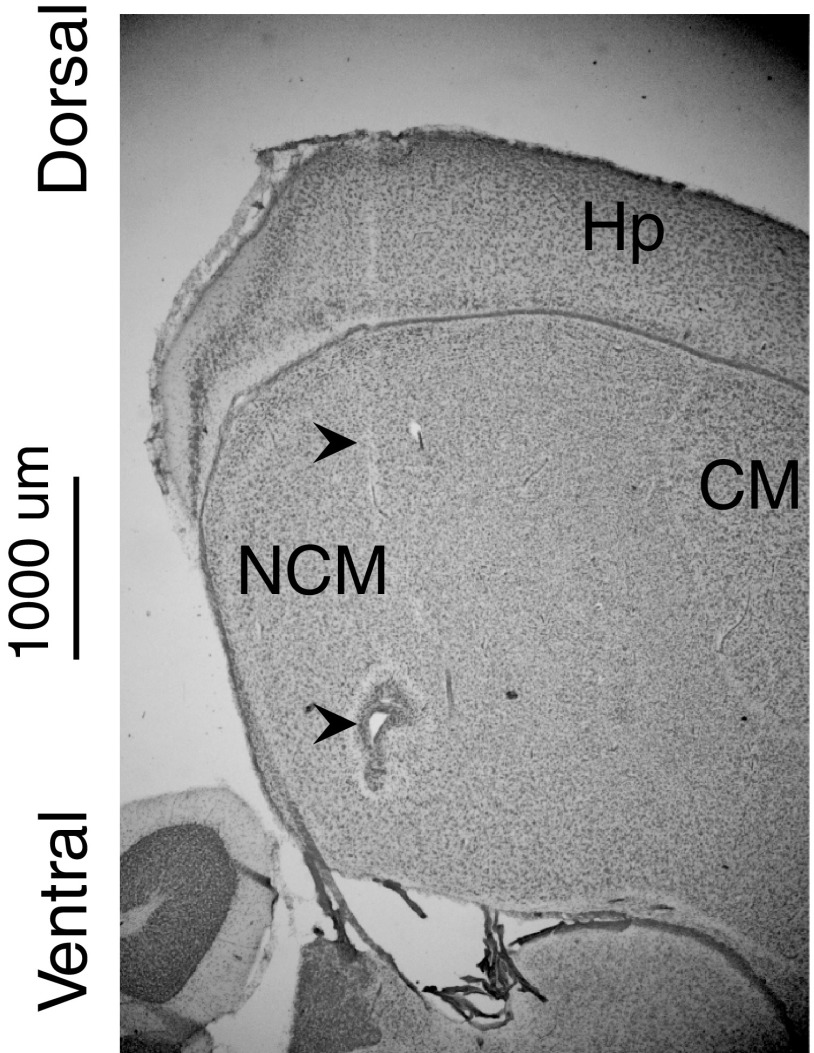

Histology

After the recording session, we injected the starling with a lethal dose of pentobarbital sodium and perfused it transcardially with 10% neutral-buffered formalin. We then extracted the brain and postfixed it in neutral-buffered formalin. After several days, we transferred the brain to 30% sucrose PBS for cryoprotection. We sectioned the brains and stained for Nissl. We confirmed the position of each recording site in NCM by locating its position relative to small electrolytic lesions made following recording (Fig. 2).

Fig. 2.

Recording location. Parasagittal section of a starling brain showing an electrode tract and small fiducial electrolytic lesion in NCM. The arrowheads mark the electrode tract and the lesion. CM, caudal mesopallium; Hp, hippocampus; NCM, caudomedial nidopallium.

Data analysis

To quantify behavioral performance we calculated d-prime (d′) values over blocks of 100 trials as d′ = Z(hit rate) – Z(false alarm rate), where Z is the z-score, hit rate is the proportion of responses on Go trials, and false alarm rate is the proportion of responses on No-go trials. A d′ value of 0 indicates chance recognition and d′ increases with recognition performance.
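As a worked illustration of the d′ definition, the following sketch computes d′ for one block of trials. The clipping of extreme rates is an assumption added here so that hit or false alarm rates of exactly 0 or 1 do not produce infinite z-scores; the text does not state how such blocks were handled.

```python
from statistics import NormalDist

def d_prime(hits, go_trials, false_alarms, nogo_trials):
    """d' = Z(hit rate) - Z(false alarm rate) for one block of trials."""
    def clipped_rate(count, n):
        # Assumption: keep rates inside [0.5/n, 1 - 0.5/n] so Z stays finite.
        return min(max(count / n, 0.5 / n), 1 - 0.5 / n)

    # Z is the inverse of the standard normal cumulative distribution.
    z = NormalDist().inv_cdf
    return (z(clipped_rate(hits, go_trials))
            - z(clipped_rate(false_alarms, nogo_trials)))
```

For example, a block with 45 responses on 50 Go trials and 5 responses on 50 No-go trials gives a d′ of about 2.56, whereas equal response rates on both trial types give d′ = 0.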

We exported spike times from Spike2 into MATLAB (Mathworks, Natick, MA) for all analyses. We calculated the firing rate to each song as the number of spikes elicited during the song divided by the song length. We measured the spontaneous firing rate for a given neuron by taking the mean firing rate over the 2 s before the onset of each song stimulus.
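The two rate measures can be sketched as below. The analyses were run in MATLAB; this Python version is only an illustration. It assumes spike times are expressed in seconds relative to stimulus onset (time 0), and the function names are hypothetical.

```python
def firing_rate(spike_times, start, stop):
    """Spikes per second within the half-open window [start, stop)."""
    n_spikes = sum(1 for t in spike_times if start <= t < stop)
    return n_spikes / (stop - start)

def evoked_and_spontaneous(spike_times, song_duration, baseline=2.0):
    """Return (evoked rate, spontaneous rate) for one stimulus presentation.

    Evoked rate: spikes during the song divided by the song length.
    Spontaneous rate: spikes in the `baseline` seconds before song onset.
    """
    evoked = firing_rate(spike_times, 0.0, song_duration)
    spontaneous = firing_rate(spike_times, -baseline, 0.0)
    return evoked, spontaneous
```

Averaging the spontaneous rate over all presentations gives the per-neuron spontaneous firing rate described in the text.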

We used a bias measure, adapted from previous studies (Janata and Margoliash 1999; Solis and Doupe 1997), to examine a preference for learned or unfamiliar songs in single neurons. The bias measure uses the ratio of mean raw firing rates evoked by learned and unfamiliar song stimuli, calculated as

$$\text{Bias} = \frac{\bar{R}_U - \bar{R}_L}{\bar{R}_U + \bar{R}_L}$$

where $\bar{R}_U$ is the mean firing rate to unfamiliar songs, and $\bar{R}_L$ is the mean firing rate to learned songs for a single neuron. Bias ranges from −1 for a neuron that responds only to the learned songs to +1 for a neuron that responds only to the unfamiliar songs. Bias is 0 for a neuron that responds equally to learned and unfamiliar songs. To determine whether a bias value was significantly higher or lower than chance, we compared it with a distribution of simulated bias values. To simulate bias values, we shuffled the firing rates on each stimulus repetition randomly among the song stimuli. We calculated bias values as above using these shuffled rates. This was repeated 1,000 times, and a bias value was considered significant if it was either higher or lower than 95% of the simulated bias values. We also obtained similar results when we compared real bias values to a distribution of simulated bias values generated by drawing random firing rates from a normal distribution that matched the empirical mean and SD of a given neuron's firing rates to the song stimuli.
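A minimal sketch of the bias measure and the shuffle test follows. It assumes the bias is the normalized difference (R_U − R_L)/(R_U + R_L), which is consistent with the stated range of −1 to +1. It also assumes each song has the same number of repetitions, so the learned and unfamiliar rates can be passed as flat lists of per-repetition firing rates. The function names and the zero-rate guard are hypothetical additions.

```python
import random
from statistics import mean

def bias(learned_rates, unfamiliar_rates):
    """Normalized difference of mean rates: -1 (learned only) to +1 (unfamiliar only)."""
    r_l, r_u = mean(learned_rates), mean(unfamiliar_rates)
    if r_l + r_u == 0:
        return 0.0  # Assumption: a silent neuron is treated as unbiased.
    return (r_u - r_l) / (r_u + r_l)

def shuffle_test(learned_rates, unfamiliar_rates, n_shuffles=1000, seed=0):
    """Compare the observed bias with biases from label-shuffled rates.

    Returns (observed bias, significant), where significant is True if the
    observed value is higher or lower than 95% of the simulated values.
    """
    rng = random.Random(seed)
    observed = bias(learned_rates, unfamiliar_rates)
    pooled = list(learned_rates) + list(unfamiliar_rates)
    n_learned = len(learned_rates)
    simulated = []
    for _ in range(n_shuffles):
        rng.shuffle(pooled)  # Reassign per-repetition rates among the songs.
        simulated.append(bias(pooled[:n_learned], pooled[n_learned:]))
    frac_below = sum(s < observed for s in simulated) / n_shuffles
    frac_above = sum(s > observed for s in simulated) / n_shuffles
    return observed, (frac_below >= 0.95 or frac_above >= 0.95)
```

Because the shuffle reuses each neuron's own firing rates, the null distribution reflects that neuron's response variability rather than a population-wide estimate.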

To enable the comparison of firing rates across neurons with widely varying response rates, we converted each neuron's firing rates to z-scores as

$$z_i = \frac{r_i - \bar{r}}{\sigma}$$

where $r_i$ is the firing rate to the ith stimulus, $\bar{r}$ is the mean firing rate over all stimuli presented to the neuron, and $\sigma$ is the SD of the firing rate for all stimuli presented to the neuron. For a given neuron, $z_i$ is the z-score normalized firing rate evoked by a given stimulus.
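The per-neuron normalization can be illustrated with a short sketch. It assumes the sample SD (N − 1 denominator), which the text does not specify; the function name is hypothetical.

```python
from statistics import mean, stdev

def zscore_rates(rates):
    """Convert one neuron's per-stimulus firing rates to z-scores."""
    r_bar = mean(rates)
    sigma = stdev(rates)  # Assumption: sample SD (N - 1 denominator).
    if sigma == 0:
        raise ValueError("z-scores are undefined when all rates are equal")
    return [(r - r_bar) / sigma for r in rates]
```

For instance, rates of 2, 4, and 6 spikes/s map to z-scores of −1, 0, and 1, so a neuron's normalized responses always average to zero across its stimulus set.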

To normalize the firing rates to motifs, we calculated response strength, RS, as follows

$$RS_i = \frac{FR_i - FR_{spon}}{\sigma(FR)}$$

where $FR_i$ is the mean firing rate associated with the ith motif, $FR$ is the set of rates for all motifs, $\sigma$ is the SD, and $FR_{spon}$ is the mean spontaneous firing rate, calculated over the 2 s before the onset of each stimulus presentation. RS is identical to converting responses to z-scores, except that the resulting distribution is centered on the spontaneous response rate rather than the mean response rate over all stimuli.
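Response strength can be sketched in the same way. The sketch assumes RS is the motif rate minus the spontaneous rate, divided by the SD of the motif rates, as implied by the description of RS as a z-score centered on the spontaneous rate. The sample SD and the function name are assumptions.

```python
from statistics import stdev

def response_strength(motif_rates, spontaneous_rate):
    """Normalize motif firing rates relative to the spontaneous rate.

    motif_rates: mean firing rate (spikes/s) for each motif.
    spontaneous_rate: the neuron's mean spontaneous rate (spikes/s).
    """
    sigma = stdev(motif_rates)  # Assumption: sample SD over all motifs.
    if sigma == 0:
        raise ValueError("RS is undefined when all motif rates are equal")
    return [(fr - spontaneous_rate) / sigma for fr in motif_rates]
```

With this centering, motifs with RS > 0 drive the neuron above its spontaneous rate, which makes it straightforward to count the motifs that elicit an excitatory response.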

Unless otherwise noted, we report the mean and SE to describe the central tendency and variability in each measure. Because our data often did not meet the assumptions of a normal distribution, we used the Wilcoxon signed-rank, Wilcoxon rank-sum test, and the Friedman test to examine differences between groups. The Friedman test was used as a nonparametric equivalent of a repeated-measures ANOVA. Where appropriate, we used two-way ANOVAs to examine the effects of multiple variables. All comparisons were two-tailed (α = 0.05).

RESULTS

Song recognition learning

To examine the neural mechanisms of individual vocal recognition, we taught starlings to recognize the songs of conspecifics. We trained nine adult starlings to classify two to three songs of one starling and two to three songs of another starling using a Go/No-go operant procedure (Fig. 1B). The starlings learned to respond to the songs of one singer (Go songs) and withhold responses to the songs of the other singer (No-go songs). Each starling acquired all food from the operant apparatus, making the learned songs behaviorally important. The starlings quickly learned the recognition task (Fig. 1C), requiring 1.0 ± 0.2 days (968 ± 94 trials, range = 600–1,600) to classify the Go and No-go songs accurately (d′ ≥1 for a 100-trial block; see methods). The starlings continued to train for several weeks, completing 674 ± 31 trials per day. We trained the starlings for numerous trials to ensure that they had extensive experience with the songs. At the time of electrophysiological testing, the starlings had trained for 63.44 ± 11.10 days (range = 23–118 days, 170–766 blocks of 100 trials), and recognition accuracy had increased to high levels (mean d′ = 3.6 ± 0.4, over the 10 final 100-trial blocks of training, corresponds to 89.56 ± 2.45% correct; see Supplemental Table S1 for additional information).

Preference for unfamiliar songs in NCM

After song recognition training, we anesthetized the starlings with urethane and recorded extracellular electrophysiological activity from 93 well-isolated single neurons in NCM (Fig. 2). To each neuron, we presented the four to six songs learned during recognition training and five to nine songs (sung by 1–4 conspecific males) that the subject had never heard before. We refer to these unheard songs as unfamiliar. Many single neurons responded more strongly to the unfamiliar songs than to the learned songs. Figures 3 and 4 show two sample NCM neurons that prefer (i.e., respond with a higher mean firing rate to) unfamiliar songs. These examples show the range of responses over which a preference for unfamiliar songs can be observed. Note that the neuron in Fig. 3 responds to all song stimuli that were presented but responds more strongly on average to the unfamiliar songs than the learned songs. The mean firing rate of this neuron to all unfamiliar songs was 15.72 ± 2.55 spikes/s and to all learned songs was 12.12 ± 1.71 spikes/s. In contrast, the neuron in Fig. 4 responds to only a few songs and responds strongly to a single unfamiliar song. The mean firing rate of this neuron to all unfamiliar songs was 1.86 ± 1.57 and to all learned songs was 0.01 ± 0.01 spikes/s.

To quantify each neuron's response, we calculated a bias measure using a normalized ratio of the mean firing rates for learned and unfamiliar songs (see methods). Bias values were calculated using the mean firing rate over whole songs, and we found no variation in bias values throughout the length of the song (Supplemental Material). Bias values could range from −1 for a neuron that responds only to learned songs to 1 for a neuron that responds only to unfamiliar songs. The neuron in Fig. 3 has a bias value of 0.13 and the neuron in Fig. 4 has a bias value of 0.99. The mean bias across all neurons (n = 93) was 0.088 ± 0.033, which corresponds to roughly a 15% increase in the firing rates elicited by unfamiliar compared with learned songs. A significant majority of single NCM neurons responded more strongly to unfamiliar than learned songs (56/93 neurons; χ2 = 3.88, P < 0.05; bias >0), leading to a mean bias for the population that was significantly greater than zero (1-sample Wilcoxon signed-rank test, P = 0.0144). Therefore a larger number of NCM neurons are driven more strongly by unfamiliar songs than by songs that the starlings have learned are behaviorally relevant.

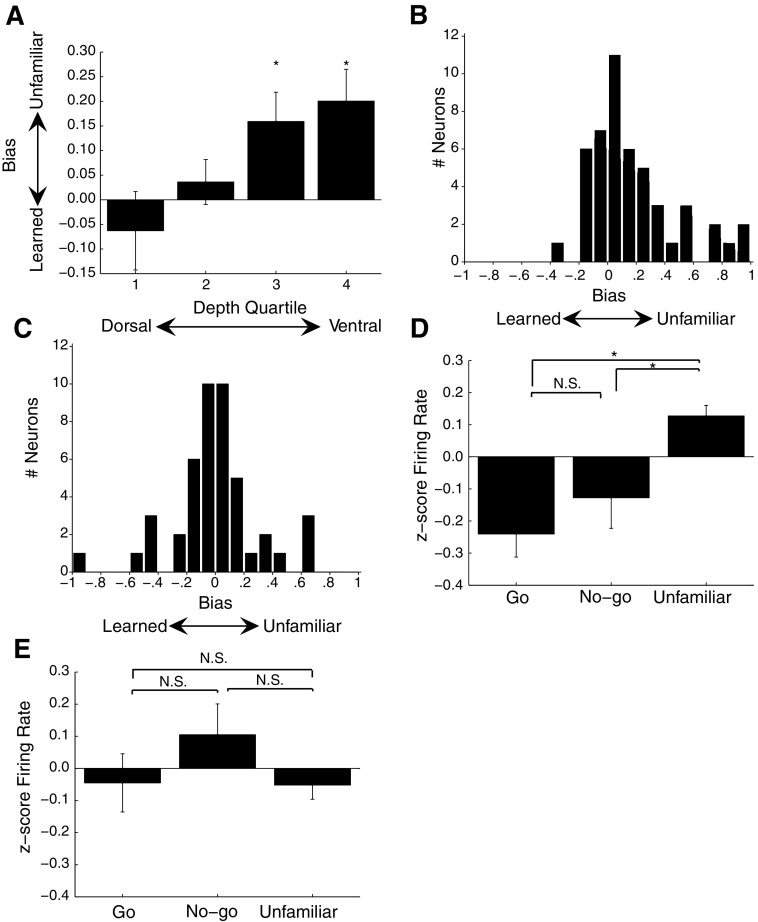

NCM is a large nucleus and, based on connectivity patterns with other auditory regions (Vates et al. 1996) and immediate early gene expression patterns (Chew et al. 1995; Gentner et al. 2004; Ribeiro et al. 1998), previous studies have suggested that different subregions of NCM may be involved in different types of auditory processing. To determine whether plasticity accompanying song recognition is concentrated in a subregion of NCM, we evenly divided our sample of single units into quartiles along the dorsal-ventral axis of each electrode penetration (quartile 1: 1,090–1,870 μm, n = 21; quartile 2: 1,871–2,580 μm, n = 24; quartile 3: 2,581–3,065 μm, n = 24; quartile 4: 3,066–4,091 μm, n = 24). We find significant variability in the bias values of neurons along this dorsal-ventral axis (Kruskal-Wallis, P = 0.0402; Fig. 5A). In the two dorsal quartiles, the mean bias values were not significantly different from zero (quartile 1: mean = −0.063 ± 0.080; quartile 2: mean = 0.046 ± 0.046; 1-sample Wilcoxon signed-rank test, P = 0.4549 and P = 0.6071, respectively). In the two ventral quartiles, however, mean bias values were significantly greater than zero (quartile 3: mean = 0.159 ± 0.060; quartile 4: mean = 0.200 ± 0.065; 1-sample Wilcoxon signed-rank test, P = 0.0140 and P = 0.0119, respectively). In subsequent analyses where depth is included, we collapse the neurons from the two dorsal quartiles and refer to them as “dorsal NCM” and the neurons from the two ventral quartiles and refer to them as “ventral NCM.” The distributions of bias values for neurons in ventral and dorsal NCM are shown in Fig. 5, B and C, respectively. The large mean bias values of neurons in the two ventral quartiles arise from an increase in the fraction of neurons that show a significant preference for unfamiliar over learned songs in this subregion. In ventral NCM, 24/48 neurons have bias values that are significantly different from those expected by chance (methods). A significant majority of these neurons (20/24) have bias values >0, whereas a few (4/24) have bias values <0 (χ2 = 10.667, df = 1, P < 0.005). In dorsal NCM, 20/45 neurons have significant bias values. In contrast to ventral NCM, roughly one half of these neurons (9/20) have bias values >0 and one half (11/20) have bias values <0 (χ2 = 0.200, df = 1, P > 0.500).
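The χ2 values for these counts can be reproduced with a goodness-of-fit test against an equal split between positive and negative bias. The text does not name the exact test, so the sketch below is an assumption; it does, however, return the reported statistics for the counts given in this section. The function names are hypothetical.

```python
import math

def chi_square_equal_split(count_a, count_b):
    """Chi-square statistic (df = 1) for two counts against a 50/50 expectation."""
    expected = (count_a + count_b) / 2
    return ((count_a - expected) ** 2 + (count_b - expected) ** 2) / expected

def p_value_df1(chi2):
    """Upper-tail probability of a chi-square distribution with 1 df."""
    return math.erfc(math.sqrt(chi2 / 2))

# Counts of neurons with bias > 0 and bias < 0 reported in this section.
for label, pos, neg in [("all NCM", 56, 37),
                        ("ventral, significant", 20, 4),
                        ("dorsal, significant", 9, 11)]:
    chi2 = chi_square_equal_split(pos, neg)
    print(f"{label}: chi2 = {chi2:.3f}, P = {p_value_df1(chi2):.4f}")
```

The first pair of counts (56 of 93 neurons with bias >0) yields χ2 = 3.88, matching the value reported for the full sample.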

Fig. 5.

Preference for unfamiliar songs in NCM neurons. A: bar graph showing the bias values for neurons from different depth quartiles. The range of the quartiles from dorsal to ventral was 1,090–1,870 (n = 21), 1,871–2,580 (n = 24), 2,581–3,065 (n = 24), and 3,066–4,091 μm (n = 24), measured from the surface of the brain. *Bias values significantly different from 0. B: distribution of bias values for 48 ventral NCM neurons. Bias values >0 indicate neurons that responded more strongly to unfamiliar songs, whereas bias values <0 indicate neurons that responded more strongly to learned songs. C: distribution of bias values for 45 dorsal NCM neurons. D: bar graph showing the z-scores of the firing rates to the Go, No-go, and unfamiliar songs for 48 ventral NCM neurons. E: bar graph showing the z-scores of the firing rates to the Go, No-go, and unfamiliar songs for 45 dorsal NCM neurons. *P < 0.05. NS, P > 0.05. Error bars show SE.

The reduced firing rates to learned songs induced by recognition training are also observed in the mean firing rates elicited by the various song stimuli. To facilitate comparisons across neurons with widely varying evoked spike rates (range: 0.12–31.97 spikes/s), for each neuron, we converted the mean rates associated with each song to z-scores (methods). Among neurons located within the two ventral quartiles (n = 48), the mean normalized firing rates evoked by the learned songs were significantly weaker than those evoked by the unfamiliar songs (learned: −0.184 ± 0.055 units of SD, unfamiliar: 0.127 ± 0.033; Wilcoxon signed-rank test, P = 0.0005). However, among neurons located within the two dorsal quartiles (n = 45), there was no difference in the mean normalized firing rates evoked by learned and unfamiliar songs (learned: 0.030 ± 0.056 in units of SD, unfamiliar: −0.052 ± 0.044; Wilcoxon signed-rank test, P = 0.5091). Pooling response data from all the NCM neurons in our sample (n = 93), the difference in normalized firing rates evoked by learned (−0.081 ± 0.040, in units of SD) and unfamiliar songs (0.040 ± 0.029) remains statistically significant (Wilcoxon signed-rank, P = 0.0391). NCM displays an overall preference to respond more strongly to unfamiliar than learned songs, which is greatly magnified in the ventral region.

We also examined whether the differences in reinforcement during training had any effect on the strength of the mean evoked response in either the dorsal or ventral portion of NCM. It did not. For this analysis, we divided the responses to learned songs into Go and No-go classes (i.e., those songs associated with food reinforcement and those associated with no reinforcement, respectively). Consistent with the results already reported, neurons in the two ventral-most quartiles showed a significant overall difference between responses to the three classes of song: Go, No-go, and unfamiliar (Friedman test, P = 0.0173; Fig. 5D). For these neurons, the mean normalized firing rates evoked by the Go songs (−0.241 ± 0.072 units of SD) and by the No-go songs (−0.128 ± 0.095) were significantly weaker than those evoked by the unfamiliar songs (0.127 ± 0.033; for comparison with unfamiliar songs, Wilcoxon signed-rank test, P = 0.0240 for No-go songs, P = 0.0005 for Go songs), but did not significantly differ from one another (Wilcoxon signed-rank test, P = 0.4152). Among neurons in the two dorsal-most quartiles, we observed no significant differences between the strength of the evoked responses to the different classes of song stimuli (Go: −0.045 ± 0.091 units of SD, No-go: 0.105 ± 0.096, Unfamiliar: −0.052 ± 0.044; Friedman test, P = 0.9780; Fig. 5E). The experience-dependent decrease of firing rates associated with learned songs is strongest in the ventral portion of NCM and is observable across both classes of learned songs (i.e., Go and No-go).

We note that the weakened responses to learned songs cannot be explained by simple differences in initial spectro-temporal tuning properties of NCM neurons. By design, the song stimuli were balanced across the subjects and neurons so that songs used during recognition training for one starling served as unfamiliar songs for others (see methods). Instead, the response profiles of individual NCM neurons are modified by each animal's behavioral interaction with the training songs. We next examine how the specific characteristics of the behavioral training modify the responses of NCM neurons to songs.

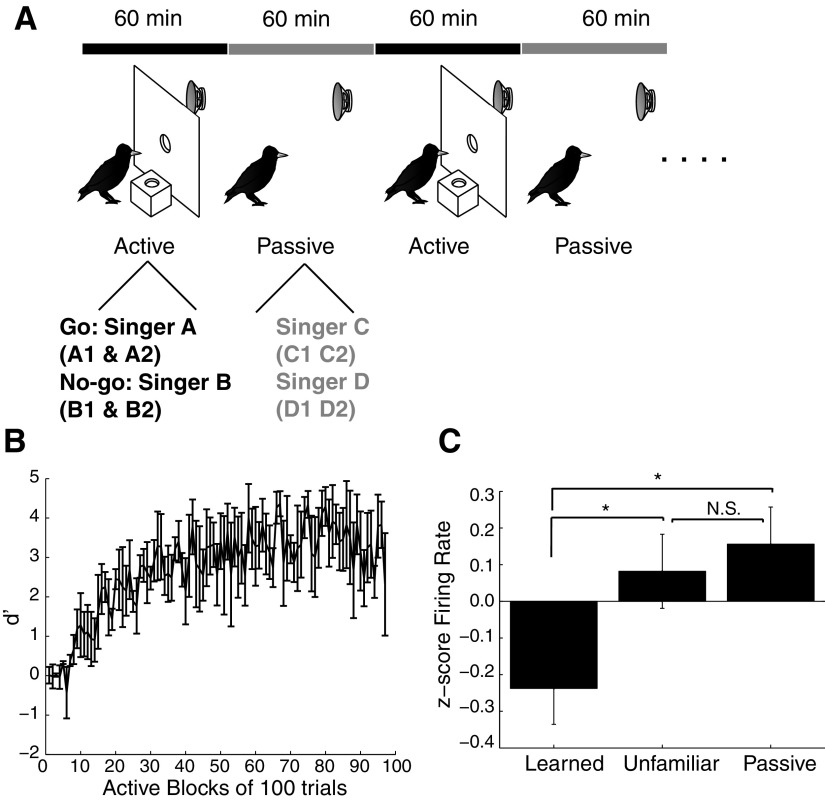

NCM responses are shaped by song learning not song exposure

Repeated presentations of the same song decrease both electrophysiological and immediate early gene (IEG) responses in NCM (Chew et al. 1995; Mello et al. 1995). In principle, this adaptation, which is driven by song exposure, could account for the decreased responses to learned songs observed in this study. To dissociate the effects of song recognition learning and song exposure, we trained a second group of starlings (n = 3) to recognize songs as described in the preceding sections while concurrently exposing them to a different set of songs without explicit behavioral consequences. We termed the latter songs “passive” songs (Fig. 6A; see methods). Even with the concurrent passive song exposure, starlings quickly learned to recognize the songs associated with operant contingencies (mean = 1,133 ± 285 trials to reach a d′ > 1, range = 800–1,700 trials; Fig. 6B). To ensure extensive experience with all the stimuli, including the passive songs, we allowed the starlings to perform numerous trials over several weeks (range = 56–208 days, 97–1,267 blocks of 100 trials). At the time of electrophysiological testing, recognition accuracy had increased to high levels (mean d′ = 3.7 ± 0.7, over the 10 final 100-trial blocks of training, this corresponds to 92.93 ± 4.80% correct).

Fig. 6.

Song exposure does not cause weakened responses to learned songs in NCM. A: diagram showing modified recognition-training procedure where the starlings alternated between 1-h training and passive listening blocks. B: mean acquisition curve for the song recognition learned in the training block for 3 starlings. d′ values were calculated over blocks of 100 trials. Data are shown for the 1st 97 blocks, not the entirety of each starling's training. Error bars show SE. C: bar graph showing the z-scores of the firing rates to learned, unfamiliar, and passively heard songs for 26 NCM neurons. *P < 0.05. NS, P > 0.05. Error bars show SE.

After the song recognition/passive exposure procedure, we anesthetized each subject with urethane and recorded extracellular activity from a total of 26 well-isolated NCM neurons in response to the behaviorally relevant learned songs, the passive songs, and an equal number of unfamiliar starling songs. If the decreased response to the learned songs was caused by simple exposure, the responses to learned and passive songs should both be significantly weaker than those to unfamiliar songs. This was not the case. To normalize responses between neurons, we converted the firing rates of each neuron to z-scores. The passive songs elicited very strong responses. The mean normalized firing rate to the learned songs was −0.238 ± 0.098 (units of SD), to the unfamiliar songs was 0.082 ± 0.101, and to the passive songs was 0.156 ± 0.101; responses varied significantly between the three song classes (Friedman test, P = 0.0111; Fig. 6C). Most importantly, the responses evoked by the learned songs were significantly weaker than those evoked by both the passive songs and the unfamiliar songs (Tukey's LSD, P < 0.05, both post hoc comparisons). Although quantitatively stronger, the responses to the passive songs were not significantly different from responses to the unfamiliar songs (Tukey's LSD, P > 0.50). These results replicate the original learning effects between learned and unfamiliar songs and rule out simple exposure as an explanation. Instead, NCM neurons respond robustly to both unfamiliar songs and to familiar songs made irrelevant by repeated exposure in the absence of behavioral contingencies. In contrast, these same neurons respond weakly to familiar songs with learned behavioral significance.
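The per-neuron normalization described above can be sketched as follows. This is a minimal illustration, assuming that each neuron's mean firing rates are z-scored across all songs presented to it and then averaged within each song class. The function names, the use of the population SD, and the firing rates are hypothetical placeholders, not values or procedures confirmed by the methods.

```python
from statistics import mean, pstdev


def zscore_rates(rates):
    """Convert one neuron's mean firing rates (spikes/s, keyed by song) to z-scores.

    Uses the population SD across songs; whether the population or sample
    SD is appropriate is an assumption of this sketch.
    """
    values = list(rates.values())
    mu, sd = mean(values), pstdev(values)
    if sd == 0:
        # A neuron with identical rates to every song carries no preference.
        return {song: 0.0 for song in rates}
    return {song: (rate - mu) / sd for song, rate in rates.items()}


def class_means(z_rates, song_class):
    """Average the z-scored rates within each song class."""
    grouped = {}
    for song, z in z_rates.items():
        grouped.setdefault(song_class[song], []).append(z)
    return {label: mean(zs) for label, zs in grouped.items()}


# Hypothetical neuron: these rates are placeholders, not data from the study.
rates = {"L1": 2.0, "L2": 3.0, "U1": 6.0, "U2": 5.0, "P1": 7.0, "P2": 7.0}
song_class = {"L1": "learned", "L2": "learned", "U1": "unfamiliar",
              "U2": "unfamiliar", "P1": "passive", "P2": "passive"}
z_rates = zscore_rates(rates)
means = class_means(z_rates, song_class)
print(means)
```

Because every neuron is rescaled to zero mean and unit SD, the class means can be compared across neurons with very different absolute firing rates before applying a nonparametric test such as the Friedman test.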

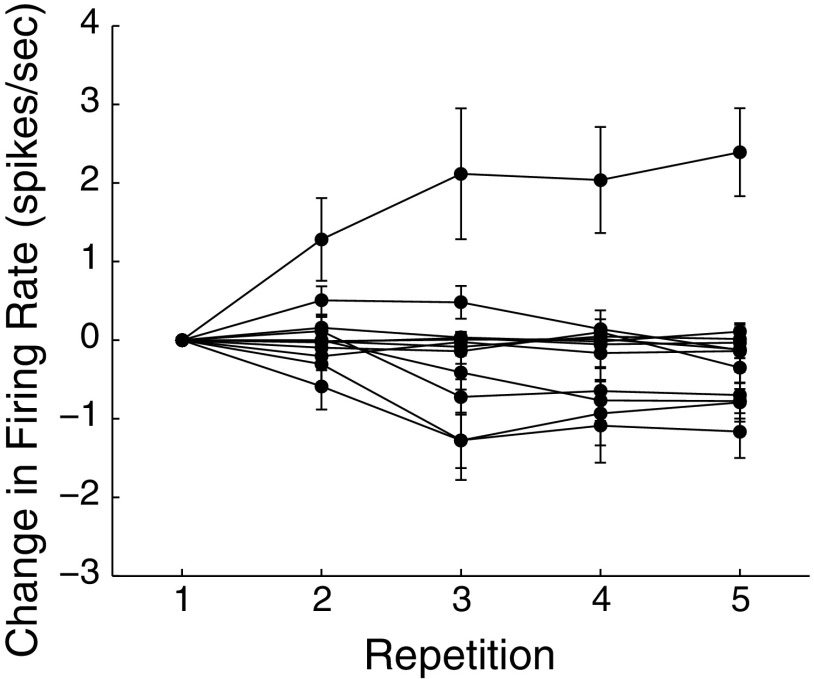

We also examined whether the weakened responses to learned songs in NCM could be the result of firing rate adaptation during electrophysiological recording. In NCM, adaptation occurs rapidly with the largest changes in spike rate occurring in the first few stimulus presentations (Stripling et al. 1997). Because we presented the same song stimuli at each recording site in a starling, we looked for changes in firing rates in the first neuron recorded in each starling (n = 12, all subjects from all experiments). Figure 7 shows the changes in firing rate for each neuron over five song repetitions. Overall, there was no significant change in firing rate with repetition (2-way ANOVA, effect of repetition F4,695 = 0.05, P = 0.9961). Importantly, there was no interaction between repetition and familiarity, indicating that firing rates did not change significantly with repeated presentations of either learned or unfamiliar songs (2-way ANOVA, interaction between repetition and familiarity, F8,695 = 0.02, P = 0.9976). Although the failure to find a significant effect of repetition does not rule out the occurrence of adaptation during our electrophysiological experiments, we find no evidence that it has occurred, and it cannot explain the observed difference in responses to learned and unfamiliar songs. Taken together, these results and others (Supplemental Material) rule out any single mechanism of plasticity that relies only on stimulus exposure, such as adaptation or habituation. The results of the song recognition/song exposure experiment, where decreased responses are seen only for songs that are paired with reinforcement, show that, instead, the plasticity in NCM responses accompanying song recognition learning likely occurs through mechanisms that are tied to associative learning.

Fig. 7.

No evidence for firing rate adaptation during recording experiments. Change in firing rate across 5 repetitions in the 1st neuron recorded in each starling. For visualization, the firing rates of each neuron have the mean firing rate on the 1st repetition subtracted. Each point is the mean firing rate across all song stimuli.

Response weakening to learned songs increases with training

Although we did not make multiple electrophysiological recordings from subjects throughout training, there was a wide range in the total number of trials each starling performed. Starlings performed between 97 and 1,267 blocks of 100 trials during training. This allowed us to examine whether the decrease in the magnitude of responses to learned songs varied with different amounts of training. Neurons from starlings that performed more blocks tended to have larger bias values, indicating a greater preference for unfamiliar songs over learned songs. The mean bias value from each starling was significantly correlated with the total number of blocks the starling performed (data pooled from 12 starlings used in both experiments described above; Pearson correlation, r = 0.7247; P = 0.0077). We note that starlings with short training lengths did not show a strengthening of responses to learned songs. Instead, the mean bias values for starlings with short training lengths were above, but near, zero, indicating a weak preference for unfamiliar songs. For starlings that performed <300 100-trial blocks (n = 3), the mean bias value was 0.0836 ± 0.0765. Some studies have shown that learning leads to an initial expansion of the representation of learned stimuli followed by a contraction (Molina-Luna et al. 2008; Yotsumoto et al. 2008); however, this does not seem to be occurring in NCM. Although the preference to respond more strongly to unfamiliar songs increased with training in NCM, there was not an initial expansion of the representation of learned songs.
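The relationship between training length and bias can be illustrated with a short sketch of the Pearson correlation computed over one value pair per bird. The function name and the numbers below are hypothetical placeholders chosen only to show the calculation; they are not the per-bird values from this study.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical per-bird summaries (placeholders, not data from the study):
# total 100-trial blocks performed, and mean bias across that bird's neurons.
blocks_per_bird = [97, 250, 600, 1267]
mean_bias_per_bird = [0.05, 0.10, 0.20, 0.35]
r = pearson_r(blocks_per_bird, mean_bias_per_bird)
print(round(r, 3))
```

Averaging bias within each bird before correlating keeps the bird, rather than the neuron, as the unit of analysis, so birds that contributed many neurons do not dominate the estimate.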

Song-level training differences reflect motif-level response variability

Starling songs are composed of stereotyped acoustic units called motifs (see examples of motifs in Figs. 3 and 4). The motif structure of songs plays an important role in recognition behavior and in the responses of neurons in the auditory forebrain region CMM (Gentner 2008; Gentner and Margoliash 2003). Variability in the neural responses to motifs was also observed in NCM. As shown in Figs. 3 and 4, some motifs elicited robust responses, whereas others elicited very little or no response. To understand the basis of the decreased response to learned songs on a finer timescale, we divided songs into their component motifs and analyzed the responses to each motif separately. The decreased responses to learned songs could be explained by any combination of 1) a decrease in the number of motifs that elicit excitatory responses from each neuron, 2) an increase in the number of motifs that elicit suppressive responses from each neuron, 3) a decrease in the magnitude of excitatory response to motifs, or 4) an increase in the magnitude of suppressive responses to motifs. We focused on the subset of NCM neurons that respond most weakly to learned songs by limiting the following analyses to ventral NCM (n = 61 neurons, combined from both experiments above). We obtain qualitatively similar results when all neurons are included (Supplemental Material).

For each neuron, we calculated the mean firing rate to each motif from learned and unfamiliar songs. We considered a response more than an SD above the mean spontaneous (i.e., nondriven) firing rate as excitation and more than an SD below as suppression. Overall, the spontaneous rates tended to be low (mean spontaneous firing rate = 1.86 ± 0.42 spikes/s; see Figs. 3 and 4 for examples), and changing the thresholds for a significant response above or below the spontaneous firing rate yields similar results (Supplemental Table S2). At the 1 SD threshold, all neurons (61/61) showed excitation for at least one motif, and most neurons (48/61) showed suppression for at least one motif. Accordingly, 48/61 neurons showed both excitation and suppression for at least one motif.

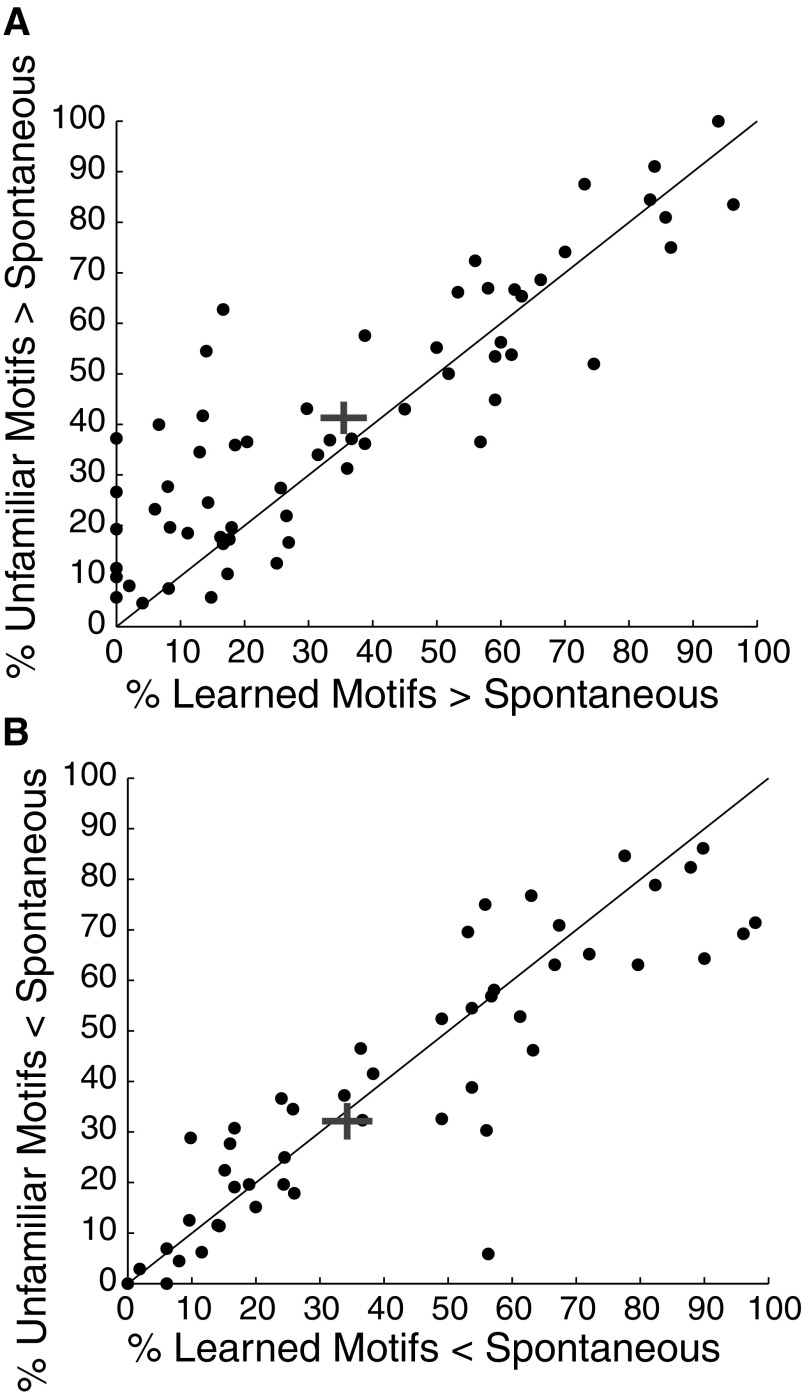

We first examined whether the song-level differences in the firing rates evoked by learned and unfamiliar songs may come from differences in the fraction of learned and unfamiliar motifs that elicit an excitatory or suppressive response. For each neuron (n = 61), we calculated the percentage of motifs from learned and unfamiliar songs that elicited a response more than an SD above the mean spontaneous firing rate. Overall, neurons gave excitatory responses to significantly fewer motifs from learned songs than from unfamiliar songs (Fig. 8A). A mean of 41.31 ± 3.20% of unfamiliar motifs and 35.48 ± 3.61% of learned motifs elicited an excitatory response above spontaneous firing rates (Wilcoxon signed-rank test, P = 0.0043). Suppressive responses were more equally distributed. There was a small difference in the percentage of motifs from unfamiliar and learned songs that evoked a decrease in response rate more than an SD below spontaneous firing rates, although this difference was not significant. A mean of 32.13 ± 3.61% of unfamiliar motifs and 34.25 ± 3.93% of learned motifs elicited a suppressive response from each neuron (Wilcoxon signed-rank test, P = 0.2815; Fig. 8B). After learning, motifs from learned songs are less likely to elicit excitatory responses from ventral NCM neurons than are motifs from unfamiliar songs. Consistent with these results, in ventral NCM, the bias value in individual neurons (computed for responses over whole songs) is negatively correlated with the percentage of motifs from learned songs causing excitation (r = −0.56, P < 0.0001). Bias is not significantly correlated, however, with the percentage of motifs from learned songs causing a suppressive response or the percentage of unfamiliar motifs causing a suppressive or excitatory response (r = 0.19, P = 0.1419; r = 0 0.02, P = 0.8987; and r = −0.24, P = 0.0662; respectively).
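The motif-level classification can be sketched as follows. This minimal example assumes that each motif is summarized by its mean firing rate and compared against the neuron's spontaneous rate, using a threshold of one SD of the spontaneous rate in either direction. The function names, the `n_sd` parameter, and all rates are hypothetical and serve only to illustrate the calculation.

```python
def classify_motif(rate, spont_mean, spont_sd, n_sd=1.0):
    """Label a motif response relative to the neuron's spontaneous firing rate."""
    if rate > spont_mean + n_sd * spont_sd:
        return "excitation"
    if rate < spont_mean - n_sd * spont_sd:
        return "suppression"
    return "none"


def percent_with_label(motif_rates, spont_mean, spont_sd, label, n_sd=1.0):
    """Percentage of motifs whose response receives the given label."""
    labels = [classify_motif(r, spont_mean, spont_sd, n_sd) for r in motif_rates]
    return 100.0 * labels.count(label) / len(labels)


# Hypothetical neuron (placeholder rates in spikes/s, not data from the study).
spont_mean, spont_sd = 2.0, 1.0
learned_motifs = [0.5, 2.2, 4.0, 1.8, 0.2]
unfamiliar_motifs = [5.0, 3.5, 2.0, 0.4, 6.1]

pct_exc_learned = percent_with_label(learned_motifs, spont_mean, spont_sd, "excitation")
pct_exc_unfamiliar = percent_with_label(unfamiliar_motifs, spont_mean, spont_sd, "excitation")
pct_sup_learned = percent_with_label(learned_motifs, spont_mean, spont_sd, "suppression")
print(pct_exc_learned, pct_exc_unfamiliar, pct_sup_learned)
```

Computing one pair of percentages per neuron yields matched samples for learned and unfamiliar motifs, which is the form of data a paired test such as the Wilcoxon signed-rank test requires. Changing `n_sd` corresponds to varying the response threshold.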

Fig. 8.

Motif-level contributions to song-level effects. A and B: scatter plots showing the relationship between the percentages of motifs from learned and unfamiliar songs that elicited significant (A) excitatory or (B) suppressive responses in each neuron. Significant excitatory and suppressive responses are defined as any response an SD above or below the spontaneous rate, respectively. Each circle corresponds to 1 neuron. The gray cross in each plot shows the mean and SE.

We next examined whether the decreased responses to learned songs could also be explained by a difference in the magnitude of the excitatory or suppressive firing rates to motifs from learned and unfamiliar songs. For each neuron, we calculated the mean firing rate for all learned and unfamiliar motifs. To facilitate comparison between neurons with widely varying rates, we normalized the firing rates for each motif presented to a given neuron using a response strength measure, similar to a z-score, in which zero marked the spontaneous firing rate for that neuron (methods). To examine excitatory responses, we included all ventral NCM neurons in our sample that showed a strong increase in firing rate (1 SD or more above the spontaneous firing rate) for one or more motifs from both a learned and unfamiliar song (n = 52). We observed no difference in the strength of the excitatory responses to motifs in learned and unfamiliar songs. The mean excitatory response strength evoked by motifs in learned songs was 1.22 ± 0.08 (in units of SD) and in unfamiliar songs was 1.32 ± 0.06, which are not significantly different (Wilcoxon signed-rank test, P = 0.1451). We examined suppressive responses in a similar way, including all ventral NCM neurons in our sample that showed a strong decrease in firing rate (1 SD or more below the spontaneous firing rate) to one or more motifs from both a learned and unfamiliar song (n = 44). Again, we observed no difference in the strength of suppressive responses to motifs in the learned and unfamiliar songs. The mean suppressive response strength evoked by motifs in learned songs was −0.44 ± 0.07 and in unfamiliar songs was −0.43 ± 0.07, and these are not significantly different (Wilcoxon signed-rank test, P = 0.3305). Across the population of ventral NCM neurons, the magnitude of both the excitatory and suppressive responses to single motifs does not seem to be different for motifs from learned or unfamiliar songs. Instead, the observed differences in the song-level responses are mostly driven by a decrease in the proportion of motifs from learned compared with unfamiliar songs that elicit excitatory responses. We note that the decrease in responsiveness to learned motifs is relatively small given the magnitude of the overall song-level effects. This suggests that changes in the frequency and magnitude of suppression, and in the magnitude of excitatory responses, are likely also involved in some neurons, but not in a manner consistent enough to allow detection across our population of ventral NCM neurons.

DISCUSSION

Our results provide evidence that recognition learning can weaken the sensory representation of acoustically complex, behaviorally relevant auditory stimuli. We found that training a starling to recognize sets of conspecific songs leads to a significant decrease in the responses to learned compared with unfamiliar songs in single NCM neurons, particularly those neurons in ventral NCM. This stimulus-specific response weakening cannot be explained by either stimulus-specific adaptation or by a general bias for novel stimuli. Songs presented during recognition training, but without explicit behavioral contingencies, elicit robust responses similar to unfamiliar songs. Rather, the experience-dependent plasticity in NCM that we observe here is likely associative, coding both stimulus exposure and behavioral relevance.

Although the decrease in firing rates to learned songs was observed across neurons throughout NCM, the effects were stronger in neurons recorded from more ventral parts of the region. The difference in the representations of ventral and dorsal NCM may arise from variation in the connectivity with other regions of the auditory system. Although CMM projects widely throughout NCM, projections from field L (L2a and L3) are much more dense in ventral NCM (Vates et al. 1996). Converging inputs from field L, the region receiving the strongest thalamic input, could provide one possible source for the greater degree of plasticity in ventral NCM.

The experience-dependent weakening of responses to learned songs reported here diverges from canonical studies of plasticity in mammalian primary auditory cortex. Sensory learning is typically tied to expansion of tonotopic representations in AI, shown through an increase in the number of neurons giving excitatory responses or an increase in firing rates to learned frequencies (Bakin and Weinberger 1990; Edeline and Weinberger 1993; Fritz et al. 2003; Gao and Suga 2000; Polley et al. 2006; Recanzone et al. 1993; Rutkowski and Weinberger 2005; Weinberger 2004) or other low-level, stimulus response characteristics (Bao et al. 2004). We examined learning-induced plasticity several synapses afferent to the primary thalamo-recipient zone (Vates et al. 1996) in neurons with complex receptive fields (Stripling et al. 1997) and response properties not captured by simple, linear, tone-evoked frequency tuning functions (Müller and Leppelsack 1985; Ribeiro et al. 1998). We found that recognition learning leads to a significant decrease in the magnitude of responses to the learned compared with unfamiliar songs.

Decreased responses to training stimuli have been observed, albeit less commonly, after habituation (Condon and Weinberger 1991) and backward conditioning tasks (Bao et al. 2003), but in both cases, the training sounds were not behaviorally important. Response decreases in the context of associative behavior are also uncommon in the literature and are accompanied by an enhancement of responses to sounds near the frequency of the training stimuli. This presumably enhances response signal-to-noise ratios by improving spectral (Ohl and Scheich 1996; Witte and Kipke 2005) or other contrast sensitivities (Beitel et al. 2003). It is difficult to project a similar framework onto our own data, because it is unclear what an enhancement in contrast sensitivity means in the context of spectro-temporally complex signals, unless the contrast is instantiated at a higher level of representation, i.e., between complex auditory objects composed of many features rather than between single tones. Under this scenario, the contrast may be between motifs or perhaps whole songs. In this sense, our results may be similar to the response suppression for learned sounds found using simpler auditory stimuli (Ohl and Scheich 1996; Witte and Kipke 2005). In any case, our results extend these earlier findings to show that learning can lead to similar response suppression in the auditory system for spectro-temporally complex natural sounds.

Other results also challenge the simple hypothesis that learning always enhances auditory responses. Recent results indicate that learning-induced response increases depend on the learning strategy used by the animal (Bieszczad and Weinberger 2009). In mice, after mothers naturally learn the behavioral relevance of pup calls, both the timing and the strength of inhibition in AI are significantly altered in spectral bands surrounding the frequency of the pup calls (Galindo-Leon et al. 2009), showing that a natural form of learning (and/or the use of natural stimuli) can alter inhibitory processing. Understanding the full range of response changes induced by learning requires model systems in which complex natural signals can be tied to adaptive behaviors. In this task, we gain direct control over a natural auditory recognition behavior that uses spectro-temporally complex signals, and our results add to the diversity of plastic changes observed after learning. In visual and motor cortex, learning initially leads to an expansion of the representation of training stimuli followed by a contraction once learning performance plateaus (Molina-Luna et al. 2008; Yotsumoto et al. 2008). Our results provide no evidence for an initial strengthening of responses to learned songs in NCM. However, it is possible that we did not examine NCM at early enough stages of learning.

Our results describe a novel form of experience-dependent plasticity in NCM. Previous studies measuring IEG expression and electrophysiological activity in NCM report that both response measures decrease over time as a single song is repeatedly presented (Chew et al. 1995; Mello et al. 1995; Stripling et al. 1997). These effects are often described as stimulus-specific habituation or adaptation (Dong and Clayton 2009; Pinaud and Terleph 2008). In contrast, the decreased response to learned songs observed in these experiments is not caused by song exposure. We found no evidence in our experiments for response adaptation over the course of repeated stimulus exposure (Fig. 7). The difference between our results and those of previous studies may reflect any number of methodological differences, including the parameters for stimulus presentation. In our study, the starlings controlled stimulus presentation rates during initial exposure/training, making the interval between song presentations variable, sometimes lengthy (often several seconds), and likely coincident with shifts in attention. Studies that report response adaptation typically present songs at short, fixed intervals (usually every 2 s). In addition, the starling songs used in our study were much longer (∼10 s) than the zebra finch songs used in previous studies (∼2 s). These factors alone make comparisons between previous studies of NCM and these results difficult. In fact, response adaptation has yet to be studied in the NCM of starlings. It is possible that it does not occur in songbirds such as starlings with more complex and variable songs. Although our results clearly show that associative learning mechanisms are critical in shaping NCM response characteristics over the course of song recognition, they do not rule out a role for nonassociative mechanisms in shaping NCM responses. Indeed, given that NCM is also important for storing the memory of a songbird's tutor song (Bolhuis et al. 2000; Gobes and Bolhuis 2007; Phan et al. 2006), it may be that multiple forms or mechanisms of plasticity are at work in this region. Future studies are needed to understand how different types of NCM plasticity might be involved in song perception and vocal learning.

The responses in ventral NCM may be seen as more selective for learned than unfamiliar motifs in that fewer of the motifs in learned songs evoke strong responses. The responses in NCM are qualitatively different from the selective responses observed in the reciprocally connected area CMM under similar song recognition learning and testing conditions. As in ventral NCM, neurons in CMM respond to small sets of motifs within the training songs. However, unlike NCM, motifs in unfamiliar songs evoke very weak responses from neurons in CMM, leading to a strong preference for learned compared with unfamiliar songs (Gentner and Margoliash 2003). Additionally, NCM and CMM may differ in how plasticity generalizes across different classes of behaviorally relevant songs. In CMM, Go songs, which were paired with food reward, elicited even stronger responses than No-go songs, those never paired with reward. In NCM, there was no significant difference in the responses elicited by Go or No-go songs; however, there was a trend for Go songs to elicit weaker responses than No-go songs. Although CMM seems to be more sensitive to variation in reinforcement, the difference in how CMM and NCM generalize response changes across Go and No-go songs may be quantitative rather than qualitative. The mechanisms that underlie the markedly different responses to unfamiliar songs in these adjacent regions are not clear. The response profile in CMM may be seen as the result of a classic, feed-forward sensory hierarchy that selects for increasingly complex features. Using a similar model to understand NCM is more problematic, however, because it is unclear how NCM neurons could be driven by a small set of acoustic features in familiar motifs and a much larger set of features heard in unfamiliar motifs. Instead, we hypothesize that the apparent selectivity in the evoked response of NCM neurons to learned motifs arises from selective suppression of specific motifs in the learned songs. CMM is a potential source of such selective suppression. It is not yet known how the responses of neurons in NCM and CMM relate in real-time during song recognition. A large proportion of the neurons in both NCM and CMM are inhibitory (Pinaud and Mello 2007; Pinaud et al. 2004), and a large number of these inhibitory neurons show IEG activation that is directly tied to song experience (Pinaud et al. 2004). Earlier work suggests that NCM and CMM, or subsets of IEG positive neurons within these regions, are differentially activated during the acquisition and recall stages of song recognition (Gentner et al. 2004). Future work is needed to understand the role of the bidirectional pathway between NCM and CMM and its relationship to behavior.

Our results tie the response characteristics of NCM neurons directly to associative learning, but the function of the weakened responses to learned songs is not clear. The selective representation of learned songs in NCM shares several similarities with observations in the primate ventral visual stream following object recognition learning (Gross et al. 1972; Logothetis 1998; Tanaka 1996). There, behavioral improvement in visual object recognition is reflected in the activity of inferior temporal and lateral prefrontal cortical neurons by an increase in stimulus selectivity among familiar images (both passively presented and trained) compared with novel images and an overall decrease in firing rates for familiar compared with novel images (Freedman et al. 2006; cf. Kobatake et al. 1998; Rainer and Miller 2000). The selective representation of familiar visual objects in these areas is thought to provide a concentrated and sparse representation of behaviorally important objects that is resistant to noise (Freedman et al. 2006; Rainer and Miller 2000). Similar advantages may be obtained in the songbird auditory system through associative learning. It may be that because ventral NCM neurons are driven by fewer motifs in learned songs, spike rate variability over the course of a learned song becomes more informative than over a similar run of unfamiliar song. It remains to be seen whether the motifs from learned songs that continue to drive NCM neurons after recognition learning are also the most behaviorally relevant for song recognition. In any case, similarities in the neural mechanisms that underlie the recognition of natural visual objects and complex acoustic signals may represent coding strategies for natural stimuli that are heavily conserved, or efficient enough to evolve multiple times.

The representation of songs we observed in NCM could perform additional functions. The increased firing rates for unfamiliar song stimuli in NCM may provide a mechanism for novel (and familiar but behaviorally irrelevant) information to integrate into the auditory system should behavioral relevance change. In addition, the kind of responses observed in NCM could also act as a sensory prediction error to the recognition system, providing a signal when the acoustics of input signals diverge from representations that have acquired strong behavioral relevance.

GRANTS

This work was supported by National Institute on Deafness and Other Communication Disorders Grant DC-008358 to T. Q. Gentner.

ACKNOWLEDGMENTS

We thank members of the Gentner Laboratory and J. Serences for critical comments.

Footnotes

1 The online version contains Supplemental Material.

REFERENCES

- Bakin JS, Weinberger NM. Classical conditioning induces CS-specific receptive field plasticity in the auditory cortex of the guinea pig. Brain Res 536: 271–286, 1990.

- Bao S, Chan VT, Zhang LI, Merzenich MM. Suppression of cortical representation through backward conditioning. Proc Natl Acad Sci USA 100: 1405–1408, 2003.

- Bao S, Chang EF, Woods J, Merzenich MM. Temporal plasticity in the primary auditory cortex induced by operant perceptual learning. Nat Neurosci 7: 974–981, 2004.

- Beitel RE, Schreiner CE, Cheung SW, Wang X, Merzenich MM. Reward-dependent plasticity in the primary auditory cortex of adult monkeys trained to discriminate temporally modulated signals. Proc Natl Acad Sci USA 100: 11070–11075, 2003.

- Bieszczad KM, Weinberger NM. Learning strategy trumps motivational level in determining learning-induced auditory cortical plasticity. Neurobiol Learn Mem In press.

- Blake DT, Heiser MA, Caywood M, Merzenich MM. Experience-dependent adult cortical plasticity requires cognitive association between sensation and reward. Neuron 52: 371–381, 2006.

- Bolhuis JJ, Zijlstra GG, den Boer-Visser AM, Van Der Zee EA. Localized neuronal activation in the zebra finch brain is related to the strength of song learning. Proc Natl Acad Sci USA 97: 2282–2285, 2000.

- Chew SJ, Mello C, Nottebohm F, Jarvis E, Vicario DS. Decrements in auditory responses to a repeated conspecific song are long-lasting and require two periods of protein synthesis in the songbird forebrain. Proc Natl Acad Sci USA 92: 3406–3410, 1995.

- Chew SJ, Vicario DS, Nottebohm F. A large-capacity memory system that recognizes the calls and songs of individual birds. Proc Natl Acad Sci USA 93: 1950–1955, 1996.

- Condon CD, Weinberger NM. Habituation produces frequency-specific plasticity of receptive fields in the auditory cortex. Behav Neurosci 105: 416–430, 1991.

- Dong S, Clayton DF. Habituation in songbirds. Neurobiol Learn Mem 92: 183–188, 2009.

- Edeline JM, Weinberger NM. Receptive field plasticity in the auditory cortex during frequency discrimination training: selective retuning independent of task difficulty. Behav Neurosci 107: 82–103, 1993.

- Farries MA. The avian song system in comparative perspective. Ann NY Acad Sci 1016: 61–76, 2004.

- Freedman DJ, Riesenhuber M, Poggio T, Miller EK. Experience-dependent sharpening of visual shape selectivity in inferior temporal cortex. Cereb Cortex 16: 1631–1644, 2006.

- Fritz J, Shamma S, Elhilali M, Klein D. Rapid task-related plasticity of spectrotemporal receptive fields in primary auditory cortex. Nat Neurosci 6: 1216–1223, 2003.

- Galindo-Leon EE, Lin FG, Liu RC. Inhibitory plasticity in a lateral band improves cortical detection of natural vocalizations. Neuron 62: 705–716, 2009.

- Gao E, Suga N. Experience-dependent plasticity in the auditory cortex and the inferior colliculus of bats: role of the corticofugal system. Proc Natl Acad Sci USA 97: 8081–8086, 2000.

- Gentner TQ. Temporal scales of auditory objects underlying birdsong vocal recognition. J Acoust Soc Am 124: 1350–1359, 2008.

- Gentner TQ, Fenn KM, Margoliash D, Nusbaum HC. Recursive syntactic pattern learning by songbirds. Nature 440: 1204–1207, 2006.

- Gentner TQ, Hulse SH. Perceptual mechanisms for individual vocal recognition in European starlings, Sturnus vulgaris. Anim Behav 56: 579–594, 1998.

- Gentner TQ, Hulse SH, Ball GF. Functional differences in forebrain auditory regions during learned vocal recognition in songbirds. J Comp Physiol [A] Neuroethol Sens Neural Behav Physiol 190: 1001–1010, 2004.

- Gentner TQ, Hulse SH, Bentley GE, Ball GF. Individual vocal recognition and the effect of partial lesions to HVc on discrimination, learning, and categorization of conspecific song in adult songbirds. J Neurobiol 42: 117–133, 2000.

- Gentner TQ, Margoliash D. Neuronal populations and single cells representing learned auditory objects. Nature 424: 669–674, 2003.

- Gobes SM, Bolhuis JJ. Birdsong memory: a neural dissociation between song recognition and production. Curr Biol 17: 789–793, 2007.

- Gross CG, Rocha-Miranda CE, Bender DB. Visual properties of neurons in the inferotemporal cortex of the macaque. J Neurophysiol 35: 96–111, 1972.

- Hubel DH, Wiesel TN. Binocular interaction in striate cortex of kittens reared with artificial squint. J Neurophysiol 28: 1041–1059, 1965.

- Janata P, Margoliash D. Gradual emergence of song selectivity in sensorimotor structures of the male zebra finch song system. J Neurosci 19: 5108–5118, 1999.

- Kobatake E, Wang G, Tanaka K. Effects of shape-discrimination training on the selectivity of inferotemporal cells in adult monkeys. J Neurophysiol 80: 324–330, 1998.

- Kroodsma DE, Miller EH. Ecology and Evolution of Acoustic Communication in Birds. Ithaca, NY: Comstock Publishing Associates, 1996.

- Logothetis N. Object vision and visual awareness. Curr Opin Neurobiol 8: 536–544, 1998.

- Mello C, Nottebohm F, Clayton D. Repeated exposure to one song leads to a rapid and persistent decline in an immediate early gene's response to that song in zebra finch telencephalon. J Neurosci 15: 6919–6925, 1995.

- Merzenich MM, Nelson RJ, Stryker MP, Cynader MS, Schoppmann A, Zook JM. Somatosensory cortical map changes following digit amputation in adult monkeys. J Comp Neurol 224: 591–605, 1984.

- Molina-Luna K, Hertler B, Buitrago MM, Luft AR. Motor learning transiently changes cortical somatotopy. Neuroimage 40: 1748–1754, 2008.

- Müller CM, Leppelsack HJ. Feature extraction and tonotopic organization in the avian forebrain. Exp Brain Res 59: 587–599, 1985.

- Nudo RJ, Milliken GW, Jenkins WM, Merzenich MM. Use-dependent alterations of movement representations in primary motor cortex of adult squirrel monkeys. J Neurosci 16: 785–807, 1996.

- Ohl FW, Scheich H. Differential frequency conditioning enhances spectral contrast sensitivity of units in auditory cortex (field AI) of the alert Mongolian gerbil. Eur J Neurosci 8: 1001–1017, 1996.

- Phan ML, Pytte CL, Vicario DS. Early auditory experience generates long-lasting memories that may subserve vocal learning in songbirds. Proc Natl Acad Sci USA 103: 1088–1093, 2006.

- Pinaud R, Mello CV. GABA immunoreactivity in auditory and song control brain areas of zebra finches. J Chem Neuroanat 34: 1–21, 2007.

- Pinaud R, Terleph TA. A songbird forebrain area potentially involved in auditory discrimination and memory formation. J Biosci 33: 145–155, 2008.

- Pinaud R, Velho TA, Jeong JK, Tremere LA, Leao RM, von Gersdorff H, Mello CV. GABAergic neurons participate in the brain's response to birdsong auditory stimulation. Eur J Neurosci 20: 1318–1330, 2004.

- Polley DB, Steinberg EE, Merzenich MM. Perceptual learning directs auditory cortical map reorganization through top-down influences. J Neurosci 26: 4970–4982, 2006.

- Rainer G, Miller EK. Effects of visual experience on the representation of objects in the prefrontal cortex. Neuron 27: 179–189, 2000.

- Recanzone GH, Schreiner CE, Merzenich MM. Plasticity in the frequency representation of primary auditory cortex following discrimination training in adult owl monkeys. J Neurosci 13: 87–103, 1993.

- Ribeiro S, Cecchi GA, Magnasco MO, Mello CV. Toward a song code: evidence for a syllabic representation in the canary brain. Neuron 21: 359–371, 1998.

- Robertson D, Irvine DR. Plasticity of frequency organization in auditory cortex of guinea pigs with partial unilateral deafness. J Comp Neurol 282: 456–471, 1989.

- Rutkowski RG, Weinberger NM. Encoding of learned importance of sound by magnitude of representational area in primary auditory cortex. Proc Natl Acad Sci USA 102: 13664–13669, 2005.

- Solis MM, Doupe AJ. Anterior forebrain neurons develop selectivity by an intermediate stage of birdsong learning. J Neurosci 17: 6447–6462, 1997.

- Stoddard PK. Vocal recognition of neighbors by territorial passerines. In: Ecology and Evolution of Acoustic Communication in Birds, edited by Kroodsma DE, Miller EH. Ithaca, NY: Comstock/Cornell, 1996, p. 356–374.

- Stripling R, Volman SF, Clayton DF. Response modulation in the zebra finch neostriatum: relationship to nuclear gene regulation. J Neurosci 17: 3883–3893, 1997.

- Tanaka K. Inferotemporal cortex and object vision. Annu Rev Neurosci 19: 109–139, 1996.

- Terpstra NJ, Bolhuis JJ, den Boer-Visser AM. An analysis of the neural representation of birdsong memory. J Neurosci 24: 4971–4977, 2004.

- Theunissen FE, Amin N, Shaevitz SS, Woolley SM, Fremouw T, Hauber ME. Song selectivity in the song system and in the auditory forebrain. Ann NY Acad Sci 1016: 222–245, 2004.

- Theunissen FE, Shaevitz SS. Auditory processing of vocal sounds in birds. Curr Opin Neurobiol 16: 400–407, 2006.

- Vates GE, Broome BM, Mello CV, Nottebohm F. Auditory pathways of caudal telencephalon and their relation to the song system of adult male zebra finches. J Comp Neurol 366: 613–642, 1996.

- Weinberger NM. Dynamic regulation of receptive fields and maps in the adult sensory cortex. Annu Rev Neurosci 18: 129–158, 1995.

- Weinberger NM. Specific long-term memory traces in primary auditory cortex. Nat Rev Neurosci 5: 279–290, 2004.

- Wiesel TN, Hubel DH. Single-cell responses in striate cortex of kittens deprived of vision in one eye. J Neurophysiol 26: 1003–1017, 1963.

- Witte RS, Kipke DR. Enhanced contrast sensitivity in auditory cortex as cats learn to discriminate sound frequencies. Brain Res Cogn Brain Res 23: 171–184, 2005.

- Yotsumoto Y, Watanabe T, Sasaki Y. Different dynamics of performance and brain activation in the time course of perceptual learning. Neuron 57: 827–833, 2008.

- Zhang LI, Bao S, Merzenich MM. Disruption of primary auditory cortex by synchronous auditory inputs during a critical period. Proc Natl Acad Sci USA 99: 2309–2314, 2002.

- Zhang LI, Bao S, Merzenich MM. Persistent and specific influences of early acoustic environments on primary auditory cortex. Nat Neurosci 4: 1123–1130, 2001.

Associated Data
