SUMMARY

The neuroanatomical organization of the visual system is largely similar across primate species [1, 2], predicting similar visual behaviors and perceptions. While responses to trial-by-trial presentation of static images suggest primates share visual orienting strategies [3–8], these reduced stimuli fail to capture key elements of the naturalistic, dynamic visual world in which we evolved [9, 10]. Here, we compared the gaze behavior of humans and macaque monkeys when they viewed three different 3-minute-long movie clips. We found significant inter-subject and inter-species gaze correlations, suggesting that both species attend a common set of events in each scene. Comparing human and monkey gaze behavior with a computational saliency model revealed that inter-species gaze correlations were not driven primarily by low-level saliency cues, but by common orienting toward biologically-relevant social stimuli. Additionally, humans, but not monkeys, tended to gaze toward the targets of viewed individuals’ actions or gaze. Together, these data suggest human and monkey gaze behavior comprises converging and diverging informational strategies, driven by both scene content and context; they are not fully described by simple low-level visual models.

RESULTS

Brains evolved to guide sensorimotor behavior within an immersive, interactive, ever-changing environment. In the laboratory, however, dynamic and interactive environments are problematic because subjects’ instantaneous responses to a stimulus change their perceptual experience. For example, though movie-viewing offers (at best) a minimalistic model of real-world interactions, viewers’ perceptions crucially drive and depend upon ongoing orienting behaviors. Commercially-produced movies nonetheless evoke reliable, selective, time-locked activity in many brain areas [11] (see also [12, 13]). Perceptual responses to movies depend upon gaze behavior, which in turn depends upon expectations, goals and strategy [14–17]; predictably, then, these movies also evoked reproducible gaze behavior [9, 10, 18, 19]. To what extent are these stereotyped experiences and perceptual decisions driven by low-level visual cues, as opposed to higher-order features such as ethologically significant objects, actions, or narrative content? One approach to answering this question is to examine the behavior of a closely-related species, such as the macaque monkey, that shares relevant neural structures involved in gaze control [20]. The gaze control system of the macaque is the best-studied primate model of the nested, iterative, sensorimotor decision loops that make up our natural behavior [21–25], and comprises an important substrate in which to address the evolution of behavior. A second approach is to examine whether gaze behavior can be predicted by neurologically-inspired computational models of visual saliency. Such models have proven effective at locating areas of interest in static scenes based on low-level visual cues [26, 27]. In the present study, we test the hypothesis that humans and monkeys have adapted shared neural mechanisms to identify, localize, and monitor distinct sets of behaviorally-relevant stimuli.

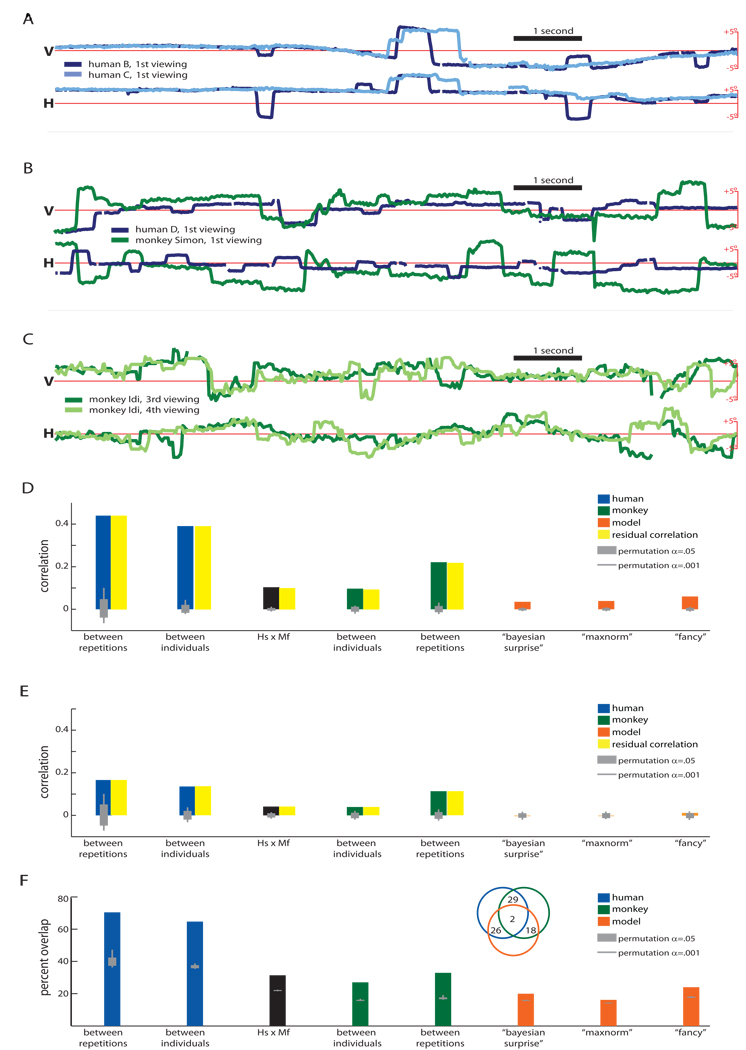

Specifically, we combined behavioral and modeling approaches to compare how humans, monkeys, and computer simulations respond during initial and repeat viewings of movie clips. Clips were taken from three films. One movie featured monkeys in natural environments (the BBC’s Life of Mammals), one featured cartoon humans and animals (Disney’s The Jungle Book), and one featured human social interactions (Chaplin’s City Lights). The movie clips were 3 minutes in duration, converted to black-and-white, and stripped of their soundtrack. Each subject viewed each movie clip multiple times in random sequence. Figure 1A–C shows movie frames with superimposed gaze locations (humans, blue; monkeys, green); Figure 2A–C shows representative human and monkey gaze traces (see also Supplemental Video S1). We found that the patterns of fixations of humans and monkeys across the movie clips were broadly similar. Scanpaths were significantly correlated across different viewings by humans and monkeys. These correlations were especially pronounced among humans, for whom the average inter-scanpath correlation (ISC) was almost as high between (r=0.39, permutation test, p<0.001) as within subjects (r=0.44, p<0.001), consistent with past reports [10]. Correlations between monkeys were also significant, but substantially lower than among humans (average same-monkey r=0.22, p<0.001; between-monkey r=0.10, p<0.001); correlations between species were significant and of comparable size to correlations between individual monkeys (average r=0.10, p<0.001) (Figure 2D, see also Supplemental Figure S1A). Finally, eye movement speed, like gaze position, was correlated between viewers (average same-human r=0.17, p<0.001; between-human r=0.14, p<0.001; across-species r=0.04, p<0.001; between-monkey r=0.04, p<0.001; same-monkey r=0.11, p<0.001; Figure 2E, see also Supplemental Figure S1B).
The most likely way for such correlations to arise is if different primates fixate similar locations at similar times; however, because correlation is invariant to shifting and scaling transformations, additional analyses are necessary to confirm this interpretation. We directly analyzed scanpath overlap, counting the percentage of samples in which one scanpath was within 3.5° of the other: Human scanpaths overlapped, on average, 70% of the time between repetitions and 65% between individuals; monkeys overlapped 33% between repetitions and 27% between individuals; between species, scanpaths overlapped 31% of the time (Figure 2F). Together, these findings suggest that humans and monkeys use similar spatiotemporal visual features to guide orienting behavior.

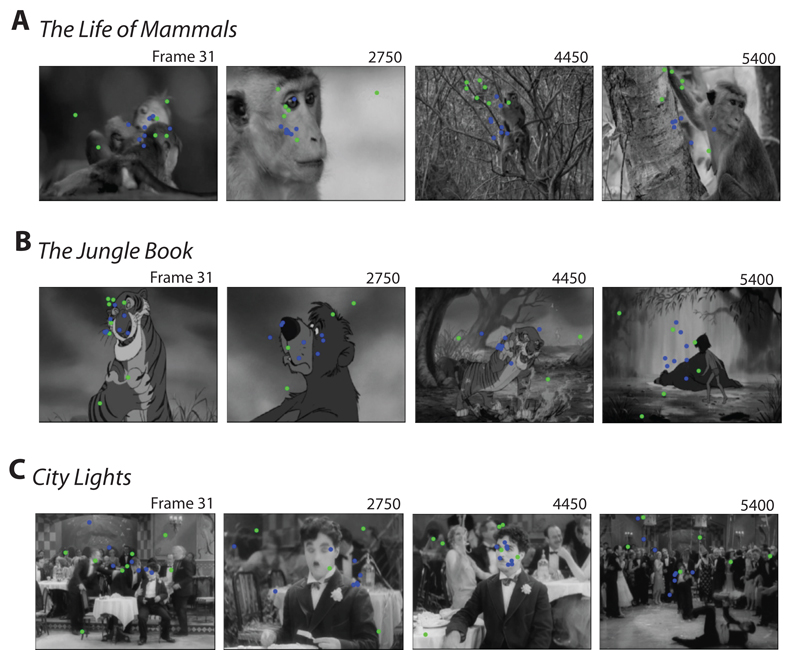

Figure 1. Video scenes with superimposed gaze traces.

Example frames from the Life of Mammals (A), the Jungle Book (B), and City Lights (C), shown with superimposed gaze coordinates (monkeys green, humans blue).

Figure 2. Correlation in gaze location across scanpaths.

Correlations are evident across humans, monkeys, and between species. Here, scanpaths are split into vertical and horizontal coordinates, and plotted for (A) two human scanpaths viewing City Lights (local correlation r=0.66), (B) a human and a monkey viewing the Jungle Book (local correlation r=0.08), and (C) one monkey during repeated viewings of the Life of Mammals (local correlation r=0.36). The local correlations are typical of the inter-human, inter-species, and intra-monkey scanpaths, respectively. Significant correlations existed between primate scanpaths produced in response to the same video clip. Both spatial position (D) and eye movement speed (E) were correlated across primate scanpaths, whether produced by the same individual or a different individual and whether produced by a human (blue) or a monkey (green). Gray bars indicate the permutation baseline for α = 0.05 (thick) or 0.001 (thin): all primate gaze ISCs were significant with α < 0.001. Artificial scanpaths produced by a low-level visual saliency model (orange) were significantly correlated with primate scanpaths in spatial position (α < 0.001) and gaze shift timing (α < 0.05); however, residual inter-primate correlations (yellow) were essentially unchanged despite partialling out shared similarities to artificial scanpaths. Finally, to confirm that behavioral correlations were driven by visual fixation priorities, we compared the percentage of gaze samples which overlapped (±3.5°) across different scanpaths (F): the pattern of results was identical to the pattern observed for inter-subject correlation. Furthermore, we found that samples that overlapped between humans and monkeys rarely overlapped with the best-performing artificial scanpath (2 of 31%, see inset). These data rule out the hypothesis that gaze correlations were driven primarily by low-level visual features, at least as characterized by well-established neuromorphic computational saliency models [27, 29].

Such similar scanpaths can arise in a variety of ways. One possibility is that shared orienting behaviors are driven solely by salient low-level visual features. At the other extreme, shared orienting behaviors might be driven primarily by high-level behaviorally-relevant narrative content. To distinguish between these possibilities, we compared human and monkey scanpaths to artificial scanpaths generated by a well-validated low-level saliency model [26–29]. Indeed, artificial scanpaths did correlate with human and monkey gaze positions in our experiment (Figure 2D, orange), but even the best-correlated artificial scanpath (r=0.06, p<0.001; see Methods) played a negligible role mediating primate ISCs: residual ISCs were just as pronounced after partialling out similarities to artificial scanpaths (average residuals: same-human, r=0.44; between-human, r=0.39; across-species, r=0.10; between-monkey, r=0.09; same-monkey, r=0.22). Artificial scanpaths were less successful at modeling the timing of attention shifts (r=0.01, p<0.05) and did not predict primate ISCs in gaze shift timing. While artificial scanpaths overlapped with observed human and monkey scanpaths 28% and 20% of the time, respectively (for the best-performing simulation, see Figure 2F, orange), they were strikingly poor at predicting human and monkey overlap: of the 31% of samples in which human and monkey gaze overlapped, only 1 in 15 (2.1% of total) also overlapped the simulated scanpath (Figure 2F, inset). Application of multidimensional scaling to average normalized inter-scanpath distances revealed that each video produced a distinct cluster of human, monkey, and simulated scanpaths (Supplemental Figure S2); for The Life of Mammals and The Jungle Book movie clips, human and monkey scanpaths clustered tightly, and were separate from artificial scanpaths.

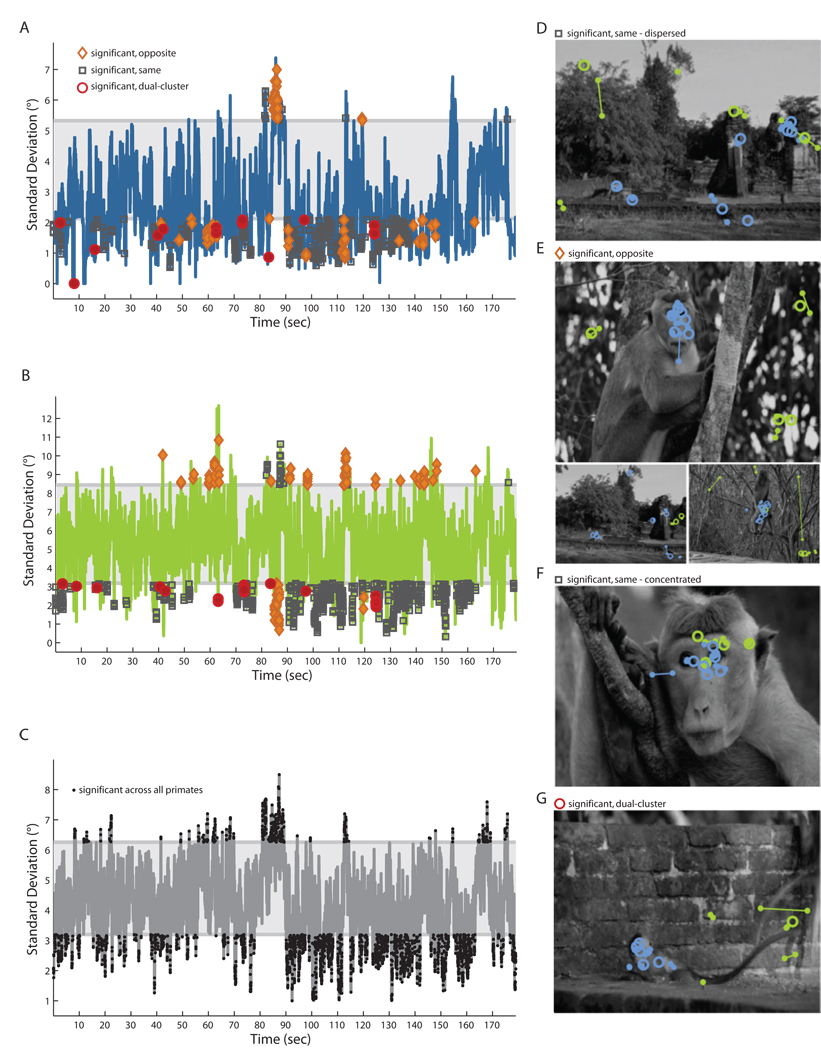

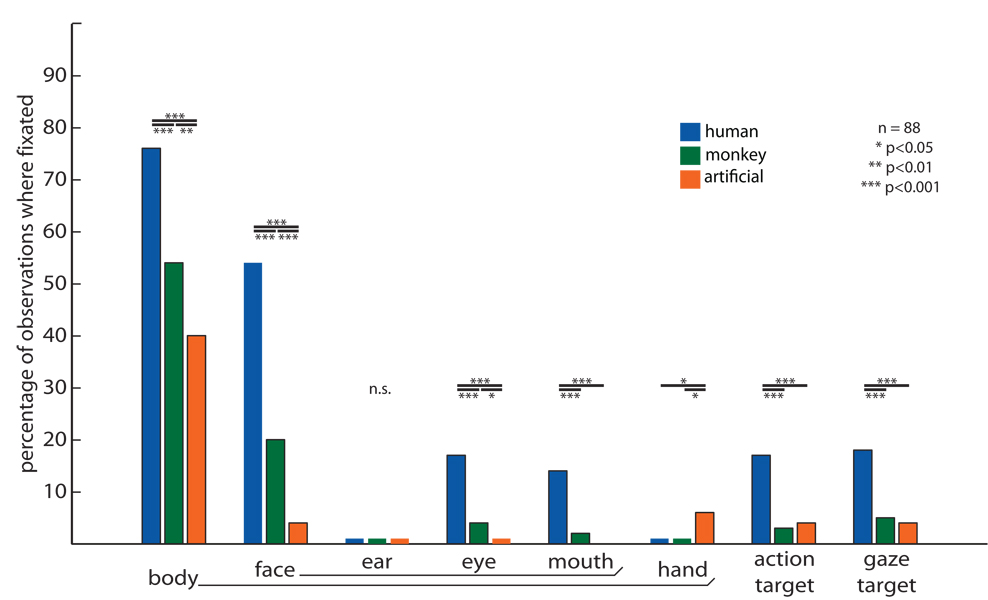

Tracking the standard deviation of gaze coordinates across viewers as a function of time proved to be an effective way of screening for patterns of interactions with the environment. By tracking the standard deviation of human (Figure 3A) and monkey (Figure 3B) gaze coordinates as they watched The Life of Mammals, we identified moments at which gaze was significantly clustered (below shading) or dispersed (above shading). Furthermore, by comparing human and monkey results to one another or to the standard deviation across all primate scanpaths (Figure 3C), we can define scenes of interest in four categories: 1) scenes that significantly dispersed both human and monkey gaze (Figure 3AB, gray boxes above shading; example in Figure 3D); 2) scenes that significantly clustered one species while dispersing another (Figure 3AB, orange diamonds; examples in Figure 3E); 3) scenes that significantly clustered both human and monkey gaze in the same place (Figure 3AB, gray boxes below shading; example in Figure 3F); and 4) scenes that separately clustered human and monkey gaze at different locations (Figure 3AB, red circle; example in Figure 3G). Both humans and monkeys generally looked toward faces and toward interacting individuals (Figure 3F), and while both humans and monkeys sometimes scanned the broader scene (Figure 3D), monkeys shifted gaze away from objects of interest more readily and more often. For example, of the three examples of differential clustering shown in Figure 3E, two occurred when monkeys shifted gaze away from regions of interest to scan the background, and one occurred when monkeys, but not humans, quickly scanned newly-revealed scenery during a camera pan. 
Monkeys and humans sometimes made collectively different decisions about where to look, and these differences sometimes reflected differential understanding of movie content: for example, while humans used cinematic conventions to track an individual of interest, looking to the character appearing centermost on the screen, monkeys instead tracked the more active member of a pair—even as he jumped offscreen (Figure 3G). To identify crucial visual stimuli omitted from the low-level saliency model, we selected frames on which a species' gaze was strongly clustered, but far from the simulated scanpath. We then contrasted image content at three locations on each frame: at a location viewed by a monkey, a location viewed by a human, and the location selected by the artificial scanpath (Figure 4). On each frame, these three locations were scored (blindly, in random order) as including an individual’s body, hands, face, ears, eyes or mouth, and as being the target of another individual’s actions or attention. We found that humans and monkeys gazed toward individuals in a scene significantly more than predicted by low-level visual saliency models. Both species looked particularly often at faces and eyes. Remarkably, humans (but not monkeys) strongly attended objects being manipulated or examined by others.

Figure 3. Comparison of gaze reliability across time, by species.

The standard deviation of simultaneously-recorded gaze coordinates from humans (A), monkeys (B) and both species (C) can, in conjunction, differentiate the visual strategies of monkeys or humans. At right we show frames during a clip from the Life of Mammals that most dispersed attention (D, a long shot featuring a number of monkeys), that sometimes gathered attention (E, social stimuli captured sustained human interest, while newly-visible scenery during a pan was quickly surveyed by monkeys). Faces often captured the attention of all primates (F), while dyadic social interactions sometimes produced separate gaze clusters for humans and macaques (G).

Figure 4. Higher-level social cues contribute to observed gaze correlations.

We selected video frames in which observed gaze was highly clustered at a location far from that chosen by the low-level saliency model, examined scene content at the human, monkey, and simulated gaze coordinates, and tallied the percentage of frames in which scene content comprised biologically-relevant stimuli. Relative to image regions selected by artificial scanpaths, regions that were consistently viewed by humans and monkeys often featured social agents and the targets of their actions or attentions. Humans and monkeys fixated bodies and faces significantly more than low-level simulations, especially the eye and (for humans) mouth regions. Humans, but not monkeys, fixated socially-cued regions – for example, things being reached or gazed toward – significantly more often than predicted by the model. This effect was not mediated just by hand motion, which attracted gaze significantly more often for the simulation than for actual humans or monkeys.

While statistically significant, the inter-species ISCs were low in magnitude. One reason for the low magnitude could be species differences in basic eye movements. For example, although both humans and monkeys displayed a strong central bias, monkeys exhibited a broader spatial range of fixations (Supplemental Figure S3A). Similarly, though the dynamics of eye movement were similar between species, humans exhibited shorter saccades and longer fixations than monkeys (Supplemental Figure S3B). These data are consistent with previous reports [e.g. 30, 31, 32], and suggest that differences in gaze dynamics during movie viewing contribute to lowered ISCs between species.

DISCUSSION

We found that gaze behavior during movie viewing was significantly correlated across repetitions, individuals, and even species. Gaze behavior during temporally-extended video likely depends on species-specific cues, on the ability to integrate events over time, and on familiarity and fluency with videos. ISCs were substantially stronger among human participants than among monkeys or between humans and monkeys. Computational models of low-level video saliency poorly accounted for behavioral correlations within and between species. Primate scanpaths significantly overlapped, but this overlap seemed not to be mediated by low-level visual saliency: in particular, low-level models missed crucial biological stimuli such as faces and their expressions, bodies and their movements, and (particularly for humans) observed social signals and behavioral cues. ISCs during natural viewing suggest that in the absence of explicit, immediate goals—intrinsic or instructed—orienting priorities are overwhelmingly similar [9, 10, 31] and focused on faces and social interactions. The importance of such behaviorally-relevant visual cues has been supported by findings that primates quickly discriminate animate stimuli [33], facial locations [34, 35], facial expressions [reviewed, 36], and gaze directions [34, 37, 38] and encode these social variables in neurons governing attention [36, 39–41].

While ISCs between species and between monkeys were significant, they were lower than among humans. Several accounts may explain why correlations between monkeys were less pronounced than between humans, as has previously been reported for still images [42]. Like Berg et al [30], we found that humans and monkeys have similar gaze behavior, but differ in the degree of central bias, in the duration and regularity of fixation periods, and in the amplitude of saccades (Supplemental Figure S3). This may suggest species-specific visual strategies, with monkeys fixating for short and stereotyped intervals separated by large saccades and humans fixating for more prolonged and variable periods. Such differences might facilitate relatively fast threat and resource detection by monkeys and are also consistent with the finding that monkeys abbreviate fixations toward high-risk social targets, such as high-ranking male faces [43].

Alternatively, monkeys may fail to orient systematically in response to videos because they fail to attend toward or understand the meaning of videos. Our findings echo reports that monkeys poorly integrate and generalize concepts from laboratory experiences [44, 45] and fail to attend videos until they accrue adequate experience with the medium [46]. Most humans are familiar with video broadcast, driving powerful expectations and interest; likewise, cinematographers craft movies to entertain humans, not monkeys. Indeed, human gaze anticipates areas of interest even when viewing novel movie scenes [31]. If monkeys were inadequately engaged by video, it was not solely due to anthropocentric visual content: humans and monkeys had similar responses to the three videos, independent of ecological relevance. For both species, The Jungle Book, a children's cartoon, strongly and consistently captured gaze, while City Lights, a visually-crowded comedy, did so weakly (see Supplemental Figure S1).

Our world is not static, and subtle perceptual behaviors, such as orienting, transform incoming sensation. However, ISCs suggest that across primates, complex and dynamic stimuli nonetheless may evoke consistent cognitive and behavioral responses. Human gaze is nearly as consistent across individuals as across repetitions; furthermore, significant correlations are evident between monkeys and between primate species. These findings extend the pioneering experiments of Buswell [47] and Yarbus [17] to natural temporal sequences: while our data cannot reveal covert orienting decisions, they strongly suggest primates attend similar features and shift attention at similar times. Weaker correlations in monkeys than humans may be due to species differences in vigilance or fluency. Importantly, ISCs within and between species were greater than could be explained by low-level saliency models: in particular, primates respond to biologically-relevant features including animate objects and faces. Finally, we find that humans (but not monkeys) strongly attended the foci of other individuals’ attention and activity. This tendency is provocative, and suggests a synchronizing force at work in human social evolution. Primates tended to passively orient in similar directions, making observed gaze a useful indicator of important environmental features, incentivizing gaze following [40, 48], and further correlating our collective behavior. Inter-scanpath correlation may thus prove important not just because of what it tells us about the evolution of the visual system, but moreover, because of what it reveals about the evolution of primate societies.

METHODS

Participants

Two adult male long-tailed macaques, Macaca fascicularis, and four adult male humans participated in the study. Nonhuman participants were born in captivity and socially housed indoors; all nonhuman experimental procedures were in compliance with the local authorities, NIH guidelines, and IACUC standards for the care and use of laboratory animals. Human participants provided informed consent under a protocol authorized by the Institutional Review Board of Princeton University and were debriefed at the conclusion of the session. In addition to humans and monkeys, data sets collected from an artificially intelligent agent were derived from the iLab neuromorphic vision C++ toolkit (Itti, 2004).

Stimulus Presentation

The three visual stimuli consisted of silent, gray-scale, three-minute digital video clips taken from City Lights (1931), The Jungle Book (1967), or The Life of Mammals: Social Climbers (2003). Charlie Chaplin's film City Lights has been used in earlier fMRI experiments [10] and features humans in indoor environments. The Jungle Book, a cartoon, includes simplified caricatures of human and animal stimuli. Finally, the scene from The Life of Mammals features macaque monkeys in their natural habitat.

Human stimuli were presented on a 60 Hz, 17” LCD monitor operating at 1024×768 resolution at a distance of 85cm. This provided a 22°×18° field of view of the monitor. The 770×584 videos, centrally located, subtended approximately 17°×14°. Human eye data were captured with the Tobii Eyetracker X120 (www.tobii.com) at 120 Hz. Prior to each session, participants completed a 5-point calibration. Following calibration, participants completed a 9-point calibration check three times consecutively, then viewed the three videos separated by 30-second intervals of blank screen. A 90-second break preceded another three consecutive 9-point calibration checks, followed by video presentation again in random order separated by 30-second blanks. A final set of 9-point calibration checks concluded the session. To pass each point in the calibration check, the system was required to report sustained gaze within 2.5° of a 0.4° fixation target.
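The relation between the monitor's field of view and the visual angle subtended by the video can be checked with a short calculation. The following is a minimal sketch, assuming a flat screen viewed head-on with the video centered; the function name `subtended_angle_deg` is hypothetical and is not part of the original analysis.

```python
import math

def subtended_angle_deg(region_px, screen_px, screen_fov_deg):
    """Visual angle of a centered region, given the full-screen field of view.

    Assumes a flat screen viewed head-on, so that on-screen extent is
    proportional to the tangent of the half-angle.
    """
    half_tan = math.tan(math.radians(screen_fov_deg) / 2.0)
    return 2.0 * math.degrees(math.atan((region_px / screen_px) * half_tan))

# Human rig: 1024x768 screen spanning 22 x 18 degrees, 770x584 video.
human_w = subtended_angle_deg(770, 1024, 22.0)
human_h = subtended_angle_deg(584, 768, 18.0)
print(f"{human_w:.1f} x {human_h:.1f} degrees")
```

Under these assumptions the video spans roughly 17°×14° on the human rig, in line with the values reported above.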

Monkey subjects sat in a primate chair fixed 74cm away from a 17” LCD or CRT monitor operating at 60 Hz and 1024×768 resolution and were restrained via head prosthesis. This provided a 25°×20° view of the monitor. All video stimuli were located centrally and occupied an area of 770×584 pixels; this subtended a visual angle of 19°×16°. Monkey eye data were captured with an ASL Eye-Tracker 600 (www.asleyetracking.com) with either an ASL R6/Remote Optics camera operating at 60 Hz (LCD rig) or an ASL High Speed Optics camera operating at 120 Hz (CRT rig). Prior to each session, monkeys completed a 9-point calibration. In the second session only, 9-point calibration checks confirmed gaze tracking accuracy, as described above. The sequence of calibration checks and video playback was identical to humans with the exception that monkeys were rewarded with juice during calibration checks and were randomly given juice throughout the course of the videos.

Quantifying eye movement behavior

Eye data were downsampled in Matlab (www.mathworks.com) to 60 Hz (10740 data points), and all offscreen fixations and signal loss were recoded as ‘not-a-number’. In total, this filtering rejected 16.8% of the monkey eye traces and 11.5% of the human eye traces. To facilitate low-level gaze analysis, eye data were grouped into saccades or fixations using a velocity-based criterion. Fixations were defined as eye movements in which the total velocity did not exceed 20°/s. Fixations shorter than 100 ms (6 samples) were discarded and integrated into the surrounding saccade, while fixations separated by 17 ms (1 sample) or saccades smaller than 2° were merged into single fixation events.

Saliency map and simulated-gaze generation

To model orienting responses to low-level visual stimuli, artificial scanpaths were generated toward each video using the Sun VirtualBox (www.virtualbox.org) implementation of the iLab C++ Neuromorphic Vision Toolkit [29] (http://ilab.usc.edu/toolkit/downloads-virtualbox.shtml, downloaded May 26 2009). Details regarding the development of this toolkit have been published elsewhere [26–30, 49].

Analysis

For each pair of scanpaths, a general correlation was obtained by averaging the r values obtained from the series of horizontal and of vertical coordinates. To compare the similarity in gaze shift timing between pairs of scanpaths, we first smoothed the spatial position across time using a Gaussian kernel 100 ms (6 samples) in standard deviation, then calculated correlations in the absolute value of the first derivative. Additionally, we performed an analysis of scanpath overlap, which we operationalized as the percentage of time points for which paired scanpath coordinates were within 3.5° of one another (2.5° error radius + 1° foveal radius). Finally, to detect high-dimensional scanpath features that may have varied across scanpaths, we performed a multidimensional scaling (MDS) of inter-scanpath distance, normalized by dividing out the average shuffled inter-scanpath distance. MDS maps high-dimensional data to a low-dimensional surface in which map proximity correlates with similarity, and was implemented using the Matlab command mdscale.
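The three pairwise measures can be sketched in Python as follows. This is an illustration under stated assumptions, not the original Matlab implementation: the function names are hypothetical, missing samples are excluded pairwise, the speed measure is computed per coordinate and then averaged, and the smoothing step assumes gap-free traces.

```python
import numpy as np

def nan_corr(a, b):
    """Pearson r over samples that are valid in both series."""
    ok = ~(np.isnan(a) | np.isnan(b))
    return np.corrcoef(a[ok], b[ok])[0, 1]

def position_isc(p, q):
    """Average of the horizontal and vertical correlations.
    p, q: (n_samples, 2) arrays of gaze position in degrees."""
    return 0.5 * (nan_corr(p[:, 0], q[:, 0]) + nan_corr(p[:, 1], q[:, 1]))

def smooth(x, sigma=6.0):
    """Gaussian smoothing; sigma in samples (6 samples = 100 ms at 60 Hz)."""
    t = np.arange(-int(3 * sigma), int(3 * sigma) + 1)
    kernel = np.exp(-0.5 * (t / sigma) ** 2)
    return np.convolve(x, kernel / kernel.sum(), mode="same")

def speed_isc(p, q, sigma=6.0):
    """Correlation in the absolute first derivative of smoothed position."""
    r = []
    for axis in (0, 1):
        dp = np.abs(np.diff(smooth(p[:, axis], sigma)))
        dq = np.abs(np.diff(smooth(q[:, axis], sigma)))
        r.append(nan_corr(dp, dq))
    return float(np.mean(r))

def overlap_fraction(p, q, radius_deg=3.5):
    """Fraction of jointly valid samples in which the two gaze points
    lie within radius_deg of one another."""
    d = np.hypot(p[:, 0] - q[:, 0], p[:, 1] - q[:, 1])
    ok = ~np.isnan(d)
    return float(np.mean(d[ok] <= radius_deg))

# Toy demonstration: a shifted and scaled copy correlates perfectly
# but never overlaps, which is why overlap is analyzed separately.
rng = np.random.default_rng(0)
p = np.cumsum(rng.normal(size=(600, 2)), axis=0)
r_shifted = position_isc(p, 2.0 * p + 10.0)
overlap_shifted = overlap_fraction(p, p + 10.0)
```

The toy example shows why correlation alone is insufficient: a copy of a scanpath displaced by 10° in each coordinate remains perfectly correlated with the original while overlapping it on no samples.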

To determine the significance of inter-scanpath correlations, it was essential to correct for sample-to-sample correlations within scanpaths. To do this, we established baselines using a consecutivity-preserving time-shuffling permutation procedure. Instead of randomly sampling each data point individually, we take the entire sequence of time points, randomly flip the direction, and rotate the indices so as to randomize timestamps while adding a single temporal discontinuity where the last sample looped back to the first. We then recalculate the statistic to be tested using the newly permuted data, and repeat. This population comprises the ‘chance’ baseline against which our observations can be compared: if our observations lie outside the 2.5th and 97.5th percentiles, for example, then they are significant at a two-tailed alpha level of 0.05. All permutation values reported here use this procedure unless otherwise indicated. (As a precaution, we also performed these analyses without randomly reversing the temporal order of samples: results were not significantly altered.)
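The permutation procedure can be sketched as below. This is a hedged illustration in Python, not the original code: the function names, the number of permutations, and the choice to permute only one scanpath of each pair are all assumptions.

```python
import numpy as np

def rotate_and_flip(trace, rng):
    """Randomly reverse a time series, then rotate it circularly.

    Neighboring samples stay neighbors, except at the single point
    where the last sample wraps around to the first.
    """
    if rng.random() < 0.5:
        trace = trace[::-1]
    shift = int(rng.integers(1, len(trace)))
    return np.roll(trace, shift, axis=0)

def pearson(a, b):
    return np.corrcoef(a, b)[0, 1]

def permutation_baseline(stat_fn, p, q, n_perm=1000, alpha=0.05, seed=0):
    """Return the observed statistic and the two-tailed chance interval."""
    rng = np.random.default_rng(seed)
    observed = stat_fn(p, q)
    null = np.array([stat_fn(rotate_and_flip(p, rng), q) for _ in range(n_perm)])
    lo, hi = np.percentile(null, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return observed, (lo, hi)

# Toy example: two noisy copies of one autocorrelated signal.
rng = np.random.default_rng(1)
p = np.convolve(rng.normal(size=2000), np.ones(20) / 20, mode="same")
q = p + 0.05 * rng.normal(size=2000)
obs, (lo, hi) = permutation_baseline(pearson, p, q, n_perm=200)
significant = obs < lo or obs > hi
```

Because the rotation keeps each trace's autocorrelation intact, the chance interval reflects the correlations expected between unrelated but temporally smooth signals, which sample-by-sample shuffling would underestimate.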

Because low-level visual features may have influenced human and monkey gaze in similar ways, we compared primate scanpaths to artificially-generated gaze sequences (described above). Specifically, we correlated human and monkey gaze behavior with the simulated eye movements and recalculated inter-scanpath correlations after partialing out the artificial scanpath using Matlab’s partial correlation function. We likewise compared scanpath overlap between human/monkey scanpaths and simulated scanpaths and, to establish whether overlap in primate scanpaths was mediated by low-level visual features, measured the three-way overlap between human scanpaths, monkey scanpaths, and the best-performing simulation.
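For a single controlling variable, the partial correlation has a closed form that can be written out directly. The sketch below is a Python illustration with a hypothetical function name; it uses the standard first-order partial correlation formula and is not a reproduction of the Matlab routine used in the study.

```python
import numpy as np

def partial_corr(x, y, z):
    """Correlation between x and y after removing what each shares with z.

    First-order formula:
    r_xy.z = (r_xy - r_xz * r_yz) / sqrt((1 - r_xz**2) * (1 - r_yz**2))
    """
    r_xy = np.corrcoef(x, y)[0, 1]
    r_xz = np.corrcoef(x, z)[0, 1]
    r_yz = np.corrcoef(y, z)[0, 1]
    return (r_xy - r_xz * r_yz) / np.sqrt((1 - r_xz**2) * (1 - r_yz**2))

# Toy example: x and y are related only through a shared driver z,
# standing in for an artificial scanpath coordinate.
rng = np.random.default_rng(0)
z = rng.normal(size=5000)
x = z + rng.normal(size=5000)
y = z + rng.normal(size=5000)
raw = np.corrcoef(x, y)[0, 1]
residual = partial_corr(x, y, z)
```

In the toy example the raw correlation is substantial while the residual is near zero, because the shared driver explains the whole relationship. The pattern reported here is the opposite: residual inter-primate correlations were essentially unchanged after partialing out the artificial scanpath.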

To determine those factors that consistently influenced human and monkey gaze, but were missed by the low-level saliency model, we selected frames on which gaze was strongly clustered (the standard deviation of the gaze locations was in the bottom 5% observed for that species), but where the artificial scanpath was unusually far from gaze (more than a standard deviation above average). When multiple frames were identified within the same ½ second period, only the first was accepted. We then scored these frames for image content at three locations (the artificial scanpath, a random human scanpath, and a random monkey scanpath) in a random order unknown to the scorer. Image content at a given location was described as including a social agent or the target of an agent’s action or gaze. Additionally, fixations on social agents that fell on faces or hands were tallied; facial fixations were likewise tallied based upon fixations on ears, eyes, or mouth. Throughout scoring, the observer was blind as to whether they were scoring an artificial, human, or monkey scanpath. Finally, the significance of differential image content at gaze-selected versus model-selected regions was determined by χ² test.
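The frame-selection rule can be illustrated with the following Python sketch. Several details are assumptions made for the example: the function name is hypothetical, horizontal and vertical variances are pooled into one dispersion value, model distance is measured from the mean gaze position, and the ½ second rule is applied relative to the most recently accepted frame.

```python
import numpy as np

def select_frames(gaze, model, fs=60.0, min_gap_s=0.5):
    """Pick samples where gaze is tightly clustered but far from the model.

    gaze:  (n_viewers, n_samples, 2) gaze positions for one species, in degrees
    model: (n_samples, 2) artificial scanpath, in degrees
    """
    # Dispersion across viewers at each sample (assumed pooling of x and y).
    sd = np.sqrt(np.nanvar(gaze[..., 0], axis=0) + np.nanvar(gaze[..., 1], axis=0))
    centroid = np.nanmean(gaze, axis=0)
    dist = np.hypot(centroid[:, 0] - model[:, 0], centroid[:, 1] - model[:, 1])

    clustered = sd <= np.nanpercentile(sd, 5)         # bottom 5% of dispersion
    far = dist > np.nanmean(dist) + np.nanstd(dist)   # > 1 SD above average
    candidates = np.flatnonzero(clustered & far)

    # Keep only the first candidate within any half-second period.
    keep, last = [], -np.inf
    for i in candidates:
        if i - last >= min_gap_s * fs:
            keep.append(int(i))
            last = i
    return keep

# Toy data: four viewers spread around the center, except for samples
# 100-139, where all look at (8, 8) while the model stays at the center.
n = 600
corners = np.array([[-5, -5], [-5, 5], [5, -5], [5, 5]], dtype=float)
gaze = np.repeat(corners[:, None, :], n, axis=1)
gaze[:, 100:140, :] = 8.0
model = np.zeros((n, 2))
frames = select_frames(gaze, model)
```

In the toy data, the 40-sample window of clustered gaze yields two accepted frames spaced half a second apart.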

Supplementary Material

ACKNOWLEDGEMENTS

This work was supported by Princeton University’s Training Grant in Quantitative Neuroscience NRSA T32 MH065214-1 (SVS), NSF BCS-0547760 CAREER Award (AAG), and Autism Speaks (AAG).

REFERENCES

- 1.Kaas JH, Preuss TM. Archontan affinities as reflected in the visual system. In: Szalay FS, Novacek MJ, McKenna MC, editors. Mammalian phylogeny. New York: Springer-Verlag; 1993. pp. 115–128. [Google Scholar]

- 2.Krubitzer LA, Kaas JH. Cortical Connections of Mt in 4 Species of Primates - Areal, Modular, and Retinotopic Patterns. Visual Neuroscience. 1990;5:165–204. doi: 10.1017/s0952523800000213. [DOI] [PubMed] [Google Scholar]

- 3.Ghazanfar AA, Nielsen K, Logothetis NK. Eye movements of monkeys viewing vocalizing conspecifics. Cognition. 2006;101:515–529. doi: 10.1016/j.cognition.2005.12.007. [DOI] [PubMed] [Google Scholar]

- 4.Gothard KM, Brooks KN, Peterson MA. Multiple perceptual strategies used by macaque monkeys for face recognition. Animal Cognition. 2009;12:155–167. doi: 10.1007/s10071-008-0179-7. [DOI] [PubMed] [Google Scholar]

- 5.Gothard KM, Erickson CA, Amaral DG. How do rhesus monkeys (Macaca mulatta) scan faces in a visual paired comparison task? Animal Cognition. 2004;7:25–36. doi: 10.1007/s10071-003-0179-6. [DOI] [PubMed] [Google Scholar]

- 6.Guo K. Initial fixation placement in face images is driven by top-down guidance. Experimental Brain Research. 2007;181:673–677. doi: 10.1007/s00221-007-1038-5. [DOI] [PubMed] [Google Scholar]

- 7.Guo K, Robertson RG, Mahmoodi S, Tadmor Y, Young MP. How do monkeys view faces? - a study of eye movements. Experimental Brain Research. 2003;150:363–374. doi: 10.1007/s00221-003-1429-1. [DOI] [PubMed] [Google Scholar]

- 8.Nahm FKD, Perret A, Amaral DG, Albright TD. How do monkeys look at faces? Journal of Cognitive Neuroscience. 1997;9:611–623. doi: 10.1162/jocn.1997.9.5.611. [DOI] [PubMed] [Google Scholar]

- 9.Hasson U, Landesman O, Knappmeyer B, Vallines I, Rubin N, Heeger DJ. Neurocinematics: The Neuroscience of Film. Projections. 2008;2:1–26. [Google Scholar]

- 10.Hasson U, Yang E, Vallines I, Heeger DJ, Rubin N. A hierarchy of temporal receptive windows in human cortex. J Neurosci. 2008;28:2539–2550. doi: 10.1523/JNEUROSCI.5487-07.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Hasson U, Nir Y, Levy I, Fuhrmann G, Malach R. Intersubject synchronization of cortical activity during natural vision. Science. 2004;303:1634–1640. doi: 10.1126/science.1089506. [DOI] [PubMed] [Google Scholar]

- 12.Bartels A, Zeki S. The chronoarchitecture of the cerebral cortex. Philos Trans R Soc Lond B Biol Sci. 2005;360:733–750. doi: 10.1098/rstb.2005.1627.

- 13.Hasson U, Malach R, Heeger DJ. Reliability of cortical activity during natural stimulation. Trends Cogn Sci. 2009. doi: 10.1016/j.tics.2009.10.011.

- 14.Henderson JM. Human gaze control during real-world scene perception. Trends Cogn Sci. 2003;7:498–504. doi: 10.1016/j.tics.2003.09.006.

- 15.Land MF, Hayhoe M. In what ways do eye movements contribute to everyday activities? Vision Res. 2001;41:3559–3565. doi: 10.1016/s0042-6989(01)00102-x.

- 16.Shinoda H, Hayhoe MM, Shrivastava A. What controls attention in natural environments? Vision Res. 2001;41:3535–3545. doi: 10.1016/s0042-6989(01)00199-7.

- 17.Yarbus AL. Eye Movements and Vision. New York: Plenum; 1967.

- 18.Nakano T, Yamamoto Y, Kitajo K, Takahashi T, Kitazawa S. Synchronization of spontaneous eyeblinks while viewing video stories. Proc Biol Sci. 2009;276:3635–3644. doi: 10.1098/rspb.2009.0828.

- 19.Goldstein RB, Woods RL, Peli E. Where people look when watching movies: do all viewers look at the same place? Comput Biol Med. 2007;37:957–964. doi: 10.1016/j.compbiomed.2006.08.018.

- 20.Ghazanfar AA, Santos LR. Primate brains in the wild: The sensory bases for social interactions. Nature Reviews Neuroscience. 2004;5:603–616. doi: 10.1038/nrn1473.

- 21.Ikeda T, Hikosaka O. Reward-dependent gain and bias of visual responses in primate superior colliculus. Neuron. 2003;39:693–700. doi: 10.1016/s0896-6273(03)00464-1.

- 22.Hikosaka O. Basal ganglia mechanisms of reward-oriented eye movement. Ann N Y Acad Sci. 2007;1104:229–249. doi: 10.1196/annals.1390.012.

- 23.Leon MI, Shadlen MN. Effect of expected reward magnitude on the response of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron. 1999;24:415–425. doi: 10.1016/s0896-6273(00)80854-5.

- 24.Platt ML, Glimcher PW. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- 25.Sugrue LP, Corrado GS, Newsome WT. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304:1782–1787. doi: 10.1126/science.1094765.

- 26.Itti L, Koch C. A saliency-based search mechanism for overt and covert shifts of visual attention. Vision Res. 2000;40:1489–1506. doi: 10.1016/s0042-6989(99)00163-7.

- 27.Itti L, Dhavale N, Pighin F. Realistic Avatar Eye and Head Animation Using a Neurobiological Model of Visual Attention. In: Bosacchi B, Fogel DB, Bezdek JC, editors. Proc. SPIE 48th Annual International Symposium on Optical Science and Technology; Bellingham, WA: SPIE Press; 2003. pp. 64–78.

- 28.Itti L, Koch C, Niebur E. A Model of Saliency-Based Visual Attention for Rapid Scene Analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1998;20:1254–1259.

- 29.Itti L. The iLab neuromorphic vision C++ toolkit: Free tools for the next generation of vision algorithms. The Neuromorphic Engineer. 2004;1:10.

- 30.Berg DJ, Boehnke SE, Marino RA, Munoz DP, Itti L. Free viewing of dynamic stimuli by humans and monkeys. Journal of Vision. 2009;9:1–15. doi: 10.1167/9.5.19.

- 31.Tosi V, Mecacci L, Pasquali E. Scanning eye movements made when viewing film: preliminary observations. Int J Neurosci. 1997;92:47–52. doi: 10.3109/00207459708986388.

- 32.Brasel SA, Gips J. Points of view: where do we look when we watch TV? Perception. 2008;37:1890–1894. doi: 10.1068/p6253.

- 33.Kirchner H, Thorpe SJ. Ultra-rapid object detection with saccadic eye movements: visual processing speed revisited. Vision Res. 2006;46:1762–1776. doi: 10.1016/j.visres.2005.10.002.

- 34.Fletcher-Watson S, Findlay JM, Leekam SR, Benson V. Rapid detection of person information in a naturalistic scene. Perception. 2008;37:571–583. doi: 10.1068/p5705.

- 35.Cerf M, Harel J, Einhaeuser W, Koch C. Predicting human gaze using low-level saliency combined with face detection. Advances in Neural Information Processing Systems (NIPS) 2007;20:241–248.

- 36.Vuilleumier P. Facial expression and selective attention. Curr Opin Psychiatry. 2002;15:291–300.

- 37.Deaner RO, Platt ML. Reflexive social attention in monkeys and humans. Curr Biol. 2003;13:1609–1613. doi: 10.1016/j.cub.2003.08.025.

- 38.Friesen CK, Kingstone A. The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin & Review. 1998;5:490–495.

- 39.Klein JT, Deaner RO, Platt ML. Neural correlates of social target value in macaque parietal cortex. Curr Biol. 2008;18:419–424. doi: 10.1016/j.cub.2008.02.047.

- 40.Shepherd SV. Following Gaze: Gaze-following behavior as a window into social cognition. Frontiers in Integrative Neuroscience. doi: 10.3389/fnint.2010.00005. (under review)

- 41.Shepherd SV, Klein JT, Deaner RO, Platt ML. Mirroring of attention by neurons in macaque parietal cortex. Proceedings of the National Academy of Sciences of the United States of America. 2009. pp. 9489–9494.

- 42.Einhauser W, Kruse W, Hoffmann KP, Konig P. Differences of monkey and human overt attention under natural conditions. Vision Res. 2006;46:1194–1209. doi: 10.1016/j.visres.2005.08.032.

- 43.Deaner RO, Khera AV, Platt ML. Monkeys pay per view: adaptive valuation of social images by rhesus macaques. Curr Biol. 2005;15:543–548. doi: 10.1016/j.cub.2005.01.044.

- 44.Nielsen KJ, Logothetis NK, Rainer G. Discrimination strategies of humans and rhesus monkeys for complex visual displays. Curr Biol. 2006;16:814–820. doi: 10.1016/j.cub.2006.03.027.

- 45.Nielsen KJ, Logothetis NK, Rainer G. Object features used by humans and monkeys to identify rotated shapes. J Vis. 2008;8:9.1–9.15. doi: 10.1167/8.2.9.

- 46.Humphrey NK, Keeble GR. How monkeys acquire a new way of seeing. Perception. 1976;5:51–56. doi: 10.1068/p050051.

- 47.Buswell GT. How people look at pictures; a study of the psychology of perception in art. Chicago, Ill: The University of Chicago Press; 1935.

- 48.Klein JT, Shepherd SV, Platt ML. Social attention and the brain. Curr Biol. 2009;19:R958–R962. doi: 10.1016/j.cub.2009.08.010.

- 49.Itti L, Baldi P. Bayesian Surprise Attracts Human Attention. In: Advances in Neural Information Processing Systems. Vol. 19 (NIPS*2005). Cambridge, MA: MIT Press; 2006. pp. 547–554.
