Abstract

How the brain ‘binds’ information to create a coherent perceptual experience is an enduring question. Recent research in the psychophysics of perceptual binding and developments in fMRI analysis techniques are bringing us closer to an understanding of how the brain solves the binding problem.

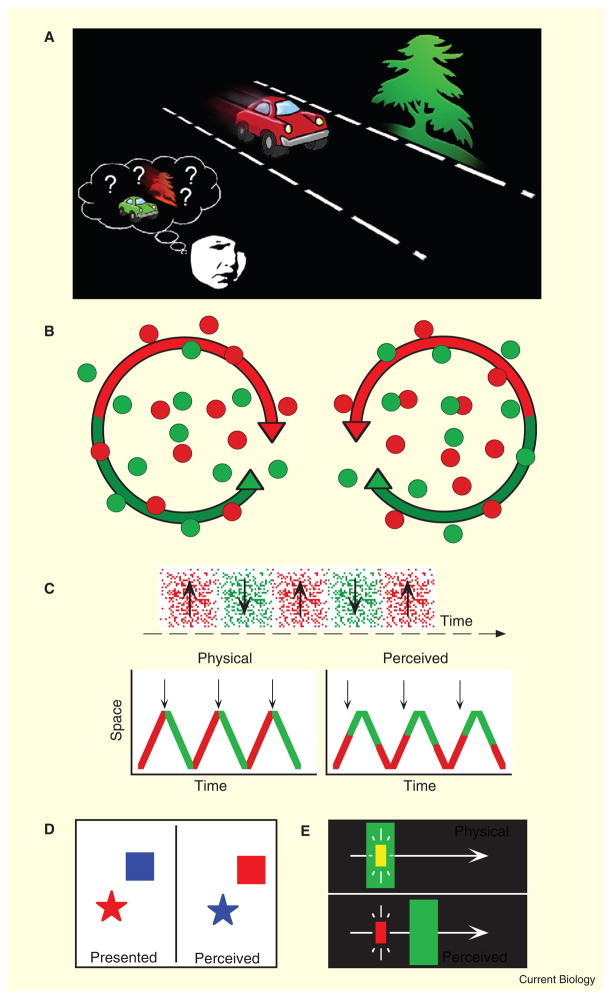

The visual system is organized in a parallel, hierarchical and modular fashion. This distributed processing of visual information is thought to create an intriguing ‘binding’ problem: if the attributes of an object, such as a red car driving down the road, are processed in distinct pathways, regions or modules, how does the visual system bind these features (color, shape and motion) consistently and accurately into a single unified percept (Figure 1A)? Whether the binding problem is really a problem is debated [1,2], but several compelling phenomena support its existence. Many of these demonstrate that, when the visual system is taxed, it can misbind the features of an object; for example, we can misperceive the position of the car while misattributing its redness to a different object in the scene.

Figure 1. The binding problem.

(A) The brain processes the visual attributes of objects (color, motion, shape) in different pathways or regions, and it is generally believed that there must therefore be neural mechanisms that ‘bind’ this information to generate coherent perceptual experience. Without binding, we would more frequently misperceive the features of objects, especially in dynamic and cluttered scenes. (B) Double-conjunction color/motion stimuli used by Seymour et al. [3]. The two conditions had identical feature information (two colors and two directions of motion). The only difference between the conditions was the conjunction of color with motion direction (for example, red dots rotated clockwise on the left but counterclockwise on the right). (C–E) Three of the many visual illusions that reveal ‘misbinding’ and would be powerful tools for extending the combination of techniques used by Seymour et al. [3]. (C) Color–motion asynchrony. An oscillating pattern (top panel) that changes color in synchrony with its direction reversals (vertical arrows on the left) appears asynchronous: the color change appears to lead the motion reversal (vertical arrows on the right). (D) Illusory conjunction. In brief displays, the color or shape of an object can be misperceived as belonging to another object. (E) Color decomposition. A static yellow flash is superimposed on a moving green bar. The green bar appears shifted forward in position and the physically yellow flash appears red, demonstrating a misbinding of color and position. A variety of other visual illusions reveal misbindings of color, motion, position, texture, and shape [14,16–20].

A new study by Seymour et al. [3], reported recently in Current Biology, brings us closer to unraveling the neural mechanisms responsible for successful perceptual binding of visual features. The study combined a novel visual stimulus with recent developments in the analysis of functional magnetic resonance imaging (fMRI) data to show that the features of a pattern, such as color and motion direction, are conjointly represented (bound) even at the earliest stages of cortical visual processing.

Seymour et al. [3] presented sets of red or green dots that rotated clockwise or counterclockwise, for a total of four conditions. In a clever manipulation, two of these conditions were superimposed, creating a double-conjunction stimulus in which both red and green colors, and both clockwise and counterclockwise motion directions, were simultaneously present (Figure 1B). There were two double-conjunction stimuli, both of which contained the same feature information (red, green, clockwise, and counterclockwise). The only difference between the two double-conjunction stimuli was the pairing of color and motion: in one, red was paired with clockwise motion and green with counterclockwise motion; in the other, red was paired with counterclockwise motion and green with clockwise motion.

The key finding of the paper is that, although all four features (two colors, two directions of motion) were present in both double-conjunction stimuli, a classification algorithm was able to discriminate between the two conditions using the fMRI BOLD response in human visual cortex. If the neural responses underlying the BOLD signal were driven only by the individual features (color and motion independently), the responses to the two double-conjunction stimuli should have been indistinguishable. Surprisingly, however, the two stimuli could be discriminated, demonstrating that feature conjunctions are represented as early as V1. In well-designed control analyses, the authors were able to rule out potential salience differences, attentional asymmetries, luminance artifacts in their color stimuli, and other potential confounds.
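The logic of this argument can be made concrete with a toy simulation. The sketch below is not Seymour et al.'s analysis pipeline: the voxel weights, noise level, trial counts, and the nearest-centroid classifier are all hypothetical choices made for illustration only. It shows that if each voxel's response is simply the sum of its responses to the individual features, the two double-conjunction stimuli evoke identical mean patterns and cannot be decoded above chance, whereas adding a conjunction-tuned component makes them separable.

```python
import numpy as np

rng = np.random.default_rng(0)
N_VOXELS = 50
PAIRINGS = [("red", "cw"), ("red", "ccw"), ("green", "cw"), ("green", "ccw")]

# Hypothetical voxel weights (illustration only, not fitted to any data):
# each voxel responds linearly to each individual feature ...
W_FEATURE = {f: rng.normal(size=N_VOXELS) for f in ("red", "green", "cw", "ccw")}
# ... and, optionally, to each specific color-motion pairing (conjunction).
W_CONJ = {p: rng.normal(size=N_VOXELS) for p in PAIRINGS}


def mean_pattern(dot_sets, conj_gain):
    """Noise-free voxel pattern for a stimulus made of (color, direction) dot sets.

    conj_gain = 0 gives a purely feature-based (additive) code.
    """
    resp = np.zeros(N_VOXELS)
    for color, direction in dot_sets:
        resp += W_FEATURE[color] + W_FEATURE[direction]
        resp += conj_gain * W_CONJ[(color, direction)]
    return resp


# The two double-conjunction stimuli: identical features, different pairings.
STIM_A = [("red", "cw"), ("green", "ccw")]
STIM_B = [("red", "ccw"), ("green", "cw")]


def decoding_accuracy(conj_gain, n_trials=30, noise_sd=1.0):
    """Leave-one-out nearest-centroid decoding of STIM_A vs STIM_B from noisy trials."""
    stimuli = [STIM_A] * n_trials + [STIM_B] * n_trials
    X = np.array([mean_pattern(s, conj_gain) + rng.normal(scale=noise_sd, size=N_VOXELS)
                  for s in stimuli])
    y = np.array([0] * n_trials + [1] * n_trials)
    correct = 0
    for i in range(len(y)):
        train = np.arange(len(y)) != i  # hold out trial i
        centroids = [X[train & (y == label)].mean(axis=0) for label in (0, 1)]
        dists = [np.linalg.norm(X[i] - m) for m in centroids]
        correct += int(np.argmin(dists) == y[i])
    return correct / len(y)


print("feature-only code:    ", decoding_accuracy(conj_gain=0.0))
print("with conjunction code:", decoding_accuracy(conj_gain=1.0))
```

Because the feature-only model predicts identical mean patterns for the two stimuli, reliable above-chance decoding implies some conjunction-sensitive component in the measured response. The sketch says nothing about where that component originates, for example locally or via feedback.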

Seymour et al.’s [3] experiments are exciting for several reasons. First, as mentioned above, they reveal that color–motion conjunctions are represented as early as V1. Second, this is one of the first, and perhaps the strongest, demonstrations of conjunction coding using a combination of state-of-the-art fMRI analyses and psychophysically well-controlled visual stimuli that (for the first time) differed as bound conjunctions but were identical in terms of individual features. Finally, the combination of methods opens a new door to the physiological study of visual feature binding using fMRI, one that future studies can readily employ.

By demonstrating conjunction coding of features, this work brings us one step closer to identifying the neural mechanism of feature binding, but many questions remain. Although the mechanism of feature binding could in principle operate as early as V1, there is compelling evidence against this possibility. Previous single-unit and anatomical studies support the prevailing notion that color and motion pathways are segregated in V1 [4]. Clinical studies also support the distributed and modular processing of color and motion, having revealed a double dissociation between the perception of these two features (distinct extrastriate lesions can cause the loss of color perception without a loss of motion perception, and vice versa [5,6]). Moreover, most existing psychological and physiological models of binding rely on higher-level mechanisms ([7–9], but compare [2,10]).

Seymour et al. [3] rightly acknowledge that their results do not unequivocally demonstrate that the binding of features occurs in V1. Indeed, it is well established that the fMRI response in V1 can reflect feedback (for example, spatial attention [11]). A representation of feature conjunctions in V1, then, is not inconsistent with the possibility that feature binding requires attention [9]: V1 responses could reflect feedback from a fronto-parietal attentional network. It therefore remains unclear whether the coding of feature conjunctions in V1 reflects feedback of already bound information, reflects feedback of unbound information that V1 then actively binds, or is completely unrelated to perceptual binding per se.

To fully address the uncertainties above, we need to extend the clever technique of Seymour et al. [3] to test conditions in which features are perceptually misbound and examine whether the conjoint coding of features gates, or is correlated with, perceptual binding itself. Several examples of perceptual misbinding have been demonstrated for a range of different features, including color, position, motion, shape, and texture. For example, synchronous changes in the color and motion of a pattern are perceived as asynchronous [12] (Figure 1C); the color of one briefly viewed object in a crowd can be misperceived as belonging to a different object (illusory conjunctions, Figure 1D) [13]; a static yellow flash superimposed on a moving green object appears to lag behind the green object and appears red [14] (Figure 1E); and an object can even appear to drift in one direction while appearing shifted in position in the opposite direction [15]. These and many other examples of perceptual misbinding (for example [16–20]) occur when the temporal and/or spatial limits of visual processing (or attention) are approached or exceeded.

Taking advantage of these sorts of illusions is necessary for at least three reasons. First, the mechanism of feature binding may not be recruited by unambiguous visual stimuli. Future experiments, building on the work of Seymour et al. [3], will need to demonstrate that the mechanism of binding is actually recruited; without testing a perceptual ‘misbinding’, it is difficult to know whether the mechanism normally responsible for perceptual binding is active. Second, the conjoint coding of features could reflect the physical or the perceptual co-occurrence of those features. Physically bound features do not always lead to perceptually bound ones, so without studying visual illusions like those above, we cannot be certain whether or when the conjoint coding of features is necessarily linked to perception. Third, the representation of conjoint features in early visual cortex could be the result of feedback. Employing visual illusions of misbinding would help determine whether V1 reflects the output of a binding process via feedback (in which case it should selectively code feature conjunctions that are perceived as bound).

The combination of elegant experimental design and sophisticated fMRI analysis in Seymour et al. [3] sets the stage for these future experiments and, in so doing, brings us closer than ever to addressing the binding problem directly.

References

1. Lennie P. Single units and visual cortical organization. Perception. 1998;27:889–935. doi: 10.1068/p270889.

2. Riesenhuber M, Poggio T. Are cortical models really bound by the ‘binding problem’? Neuron. 1999;24:87–93, 111–125. doi: 10.1016/s0896-6273(00)80824-7.

3. Seymour K, Clifford CW, Logothetis NK, Bartels A. The coding of colour, motion and their conjunction in human visual cortex. Curr Biol. 2009;19:177–183. doi: 10.1016/j.cub.2008.12.050.

4. Horwitz GD, Albright TD. Paucity of chromatic linear motion detectors in macaque V1. J Vis. 2005;5:525–533. doi: 10.1167/5.6.4.

5. Damasio AR. Disorders of complex visual processing: Agnosias, achromatopsia, Balint’s syndrome, and related difficulties of orientation and construction. In: Mesulam MM, editor. Principles of Behavioural Neurology. Vol. 1. Philadelphia: Davis; 1985. pp. 259–288.

6. Zihl J, von Cramon D, Mai N. Selective disturbance of movement vision after bilateral brain damage. Brain. 1983;106:313–340. doi: 10.1093/brain/106.2.313.

7. Friedman-Hill SR, Robertson LC, Treisman A. Parietal contributions to visual feature binding: evidence from a patient with bilateral lesions. Science. 1995;269:853–855. doi: 10.1126/science.7638604.

8. Reynolds JH, Desimone R. The role of neural mechanisms of attention in solving the binding problem. Neuron. 1999;24:19–29, 111–125. doi: 10.1016/s0896-6273(00)80819-3.

9. Treisman AM, Gelade G. A feature-integration theory of attention. Cogn Psychol. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

10. Singer W, Gray CM. Visual feature integration and the temporal correlation hypothesis. Annu Rev Neurosci. 1995;18:555–586. doi: 10.1146/annurev.ne.18.030195.003011.

11. Tootell RB, Hadjikhani N, Hall EK, Marrett S, Vanduffel W, Vaughan JT, Dale AM. The retinotopy of visual spatial attention. Neuron. 1998;21:1409–1422. doi: 10.1016/s0896-6273(00)80659-5.

12. Moutoussis K, Zeki S. A direct demonstration of perceptual asynchrony in vision. Proc Biol Sci. 1997;264:393–399. doi: 10.1098/rspb.1997.0056.

13. Treisman A, Schmidt H. Illusory conjunctions in the perception of objects. Cogn Psychol. 1982;14:107–141. doi: 10.1016/0010-0285(82)90006-8.

14. Nijhawan R. Visual decomposition of colour through motion extrapolation. Nature. 1997;386:66–69. doi: 10.1038/386066a0.

15. Bulakowski PF, Koldewyn K, Whitney D. Independent coding of object motion and position revealed by distinct contingent aftereffects. Vision Res. 2007;47:810–817. doi: 10.1016/j.visres.2006.10.020.

16. Cai RH, SJ A new form of illusory conjunction between color and shape. J Vis. 2001;1:127a.

17. Herzog MH, Koch C. Seeing properties of an invisible object: feature inheritance and shine-through. Proc Natl Acad Sci USA. 2001;98:4271–4275. doi: 10.1073/pnas.071047498.

18. Ogmen H, Otto TU, Herzog MH. Perceptual grouping induces non-retinotopic feature attribution in human vision. Vision Res. 2006;46:3234–3242. doi: 10.1016/j.visres.2006.04.007.

19. Pelli DG, Palomares M, Majaj NJ. Crowding is unlike ordinary masking: distinguishing feature integration from detection. J Vis. 2004;4:1136–1169. doi: 10.1167/4.12.12.

20. Shevell SK, St Clair R, Hong SW. Misbinding of color to form in afterimages. Vis Neurosci. 2008;25:355–360. doi: 10.1017/S0952523808080085.