Abstract

The present study investigated the neural bases of phonological onset competition using an eye tracking paradigm coupled with fMRI. Eighteen subjects were presented with an auditory target (e.g. beaker) and a visual display containing a pictorial representation of the target (e.g. beaker), an onset competitor (e.g. beetle), and two phonologically and semantically unrelated objects (e.g. shoe, hammer). Behavioral results replicated earlier research showing increased looks to the onset competitor compared to the unrelated items. fMRI results showed that lexical competition induced by shared phonological onsets recruits both frontal structures and posterior structures. Specifically, comparison between competitor and no-competitor trials elicited activation in two non-overlapping clusters in the left IFG, one located primarily within BA 44 and the other primarily located within BA 45, and one cluster in the left supramarginal gyrus extending into the posterior-superior temporal gyrus. These results indicate that the left IFG is sensitive to competition driven by phonological similarity and not only to competition among semantic/conceptual factors. Moreover, they indicate that the SMG is not only recruited in tasks requiring access to lexical form but is also recruited in tasks that require access to the conceptual representation of a word.

Introduction

In retrieving information in the service of a specific goal, there are many instances in which more than one stimulus representation may be accessed (Desimone & Duncan, 1995; Duncan, 1998; Miller & Cohen, 2001). Thus, competition is created among these multiple representations. However, typically only one of the active representations is appropriate as a response. As a consequence, competition needs to be resolved in order to achieve a specific goal or carry out a discrete response. This scenario is not specific to any one cognitive domain; rather, the maintenance of multiple representations, the resolution of competition, and ultimately response selection are functions associated with the domain general mechanisms of cognitive control. Converging evidence from neuropsychology and neurophysiology suggests that the prefrontal cortex is involved in cognitive control (cf. Miller & Cohen, 2001).

The resolution of competition is critical for the processing of language. In order to recognize a word, for example, the listener must select the appropriate word candidate from the thousands of words in the lexicon, many of which share sound shape properties. Most models of word recognition suggest that as the auditory input unfolds over time, this information is used online to continuously pare down the set of potential competitors. That is, at the onset of a word, all of those words which share onsets are initially activated; as the auditory input unfolds those word candidates that share sound properties remain partially activated until sufficient auditory information is conveyed such that the word is uniquely identified (Marslen-Wilson, 1978; McClelland & Elman, 1986; Norris, 1994; Luce & Pisoni, 1998). Not only is the sound shape of lexical competitors partially activated but so are their meanings. For example, using a cross-modal priming paradigm, subjects show semantic priming for words that share onsets (Marslen-Wilson, 1987). Presentation of a word such as general, which has an onset competitor word generous, primes gift when the prime word is presented before the input string is disambiguated (i.e. gener…).

Recently, effects of lexical competition have been found using an eye tracking or visual world paradigm (e.g. Spivey-Knowlton, 1996; Tanenhaus et al., 1995; Allopenna et al., 1998). In this paradigm, subjects are presented with a visual array of objects and are asked to find specific targets as their eye movements are monitored. One of the advantages of this technique over priming and lexical decision judgments is that it provides information about the time course of lexical access. Tanenhaus et al. (1995) have shown that there is a systematic correspondence between the time course of processing a referent name and the eye movements to a referent object, indicating that monitoring eye movements over time provides a continuous measure of how word recognition unfolds (cf. Yee & Sedivy, 2006). Moreover, the eye tracking paradigm allows for the investigation of lexical processing in a more “ecologically valid” manner than most other paradigms used to investigate word recognition, as subjects are not required to make a metalinguistic judgment about the lexical status, the sound shape, or meaning of a stimulus (cf. Yee & Sedivy, 2006).

Previous studies have shown that when subjects are presented with an auditory target (e.g. beaker) and a visual display of the target object (e.g. beaker), an onset competitor (e.g. beetle), and two phonologically and semantically unrelated objects (e.g. shoe, hammer), they show increased looks to the onset competitor compared to the unrelated items before looking consistently at the correct target (Allopenna et al., 1998; Dahan et al., 2001a; Dahan et al., 2001b; Tanenhaus et al., 1995). These findings suggest that as the auditory input unfolds, both the target stimulus and its onset competitor are partially activated, and that the subject must resolve the competition among this set of potential lexical candidates in order to select the correct item in the visual display.

Despite the richness of the literature investigating lexical competition effects with behavioral methods, less is known about the neural systems underlying the resolution of lexical competition. The present study set out to investigate the neural basis of phonological onset competition using fMRI coupled with the eye tracking paradigm. The paradigm used required subjects to find a target object named auditorily within a visual display containing four objects while their eye movements were being tracked. In some trials, two of the object names shared phonological onsets, while in other trials no competitor was present in the visual display.

A recent study by Yee and colleagues (2008) provides some suggestions about the brain areas that might be recruited under conditions of onset competition. Using the same eye tracking paradigm with Broca’s and Wernicke’s aphasics, they showed that both groups demonstrated pathological patterns. Specifically, they found that Broca’s aphasics with frontal lesions including the inferior frontal gyrus (IFG) showed a very weak onset competitor effect. That is, they made few fixations towards the onset competitor in the visual display. On the other hand, Wernicke’s aphasics with temporo-parietal lesions including the posterior portions of the superior temporal gyrus (STG) showed a larger competitor effect than age-matched controls. These results suggest that both anterior and posterior language processing areas are influenced by phonological onset competition. However, the differences between these two subject groups also suggest that these regions might have different functional roles.

Posterior temporo-parietal brain structures are involved in the initial processing of a lexical entry both at the phonological and the semantic level. Hickok and Poeppel (2000) have proposed that mapping from sound to meaning recruits a network of regions including the posterior superior temporal gyrus (STG), the supramarginal gyrus (SMG), and the angular gyrus (AG). The left temporo-parietal region, including the SMG and the AG, is also involved in mapping of sound structure to a phonological representation and in storing this representation (Paulesu et al., 1993). These regions have also been shown to be sensitive to phonological competition. In particular, using a lexical decision paradigm, Prabhakaran et al. (2006) showed increased activation in the left SMG when subjects performed a lexical decision task on words that were from high density neighborhoods (i.e. had many phonological competitors) compared to words from low density neighborhoods (i.e. had few phonological competitors) (cf. Luce and Pisoni, 1998 for discussion of behavioral effects of neighborhood density on word recognition).

While posterior regions appear to be involved in processing the sound structure and meaning of words, as discussed earlier, frontal regions appear to be involved in “executive control functions” (e.g. Duncan, 2001; Duncan & Owen, 2000; Miller & Cohen, 2001; Smith & Jonides, 1999). Within prefrontal cortex there is evidence that the left IFG is recruited in the resolution of competition and in the ultimate selection of the appropriate response. Thompson-Schill and colleagues (1997; 1998; 1999) have suggested that the left IFG is involved in making a selection among competing conceptual representations. In a recent study, Snyder and colleagues (2007) showed increased activation in the left IFG as a function of semantic conflict during both semantic and phonological tasks. Similarly, Gold and Buckner (2002) found increased activation in the same portions of left inferior prefrontal cortex during controlled retrieval of both semantic and phonological information.

While the IFG is clearly involved in ‘executive control’, several recent proposals have suggested that there is a functional division within the IFG. Some have proposed that the left IFG is partitioned as a function of linguistic domain with a phonology-specific processing mechanism in the posterior portion of the IFG and a semantic-specific processing mechanism in the anterior portion of the IFG (Buckner et al., 1995; Burton et al., 2003; Burton, 2001; Fiez et al., 1997; Poldrack et al., 1999). Others (Badre & Wagner, 2007) have proposed that the left IFG can be divided on the basis of different functional processes. They propose that the most anterior portion is responsible for the maintenance of multiple conceptual representations that have been activated either by top-down or bottom-up processes, and the more posterior portion of the IFG is responsible for selecting the appropriate task-relevant representation (Badre & Wagner, 2007).

The goal of this study is to investigate the neural bases of phonological onset competition. Based on the findings reviewed above, it is hypothesized that both posterior regions, specifically the left SMG, and anterior regions, specifically the left IFG, will be recruited under conditions of phonological onset competition. While the role of the SMG is uncontroversially related to phonological analysis and access of lexical form, the role of the IFG in resolving phonological competition is less clear. Prabhakaran et al. (2006) failed to show increased IFG activation as a function of phonological competition, suggesting that this area may be recruited only in resolving competition among conceptual representations. Activation should occur in the IFG in the current study because the appropriate conceptual representation needs to be selected from the competing conceptual representations in order to look at the correct object in the visual array. However, IFG activation could also be modulated by phonological competition, independent of semantic/conceptual competition. In that case, the IFG would appear to play a domain general role in resolving competition not only as a function of competing conceptual representations but also as a function of competing phonological representations. Given that in our paradigm, competition is based upon similarities in the phonological form among words, and that no specific semantic or phonological judgment is required, we hope to provide evidence to further specify the role of the left IFG in the resolution of lexical competition.

Methods

Norming Experiments

Two preliminary norming experiments were conducted in order to select easily identifiable visual exemplars for each of the object names to be used as stimuli in the eye tracking experiment.

Subjects

Forty college-aged subjects were tested. All subjects were recruited from the Brown University community and were paid for their participation. All subjects were native speakers of American English and had normal or corrected-to-normal vision and no hearing or neurological deficits.

Materials

Sixty names of highly pictureable common objects served as target words. Each of the target words was paired with a highly pictureable noun that shared either the entire first syllable or the onset and the vowel of the first syllable with it (e.g. lamb-lamp) to create a set of sixty onset competitors. The target-onset competitor pairs were chosen from those used in previous experiments and unpublished data that have investigated the behavioral effects of phonological onset competition (McMurray, personal communication; Yee & Sedivy, 2006; Yee et al., 2008). An additional 120 highly pictureable nouns were selected to serve as filler items. The filler items were chosen from the MRC psycholinguistic database (The University of Western Australia, AU), such that there were no significant differences, as assessed by t-tests, in mean word frequency, number of syllables, concreteness, and imageability scores between the sets of targets, onset competitors, and fillers. The visual stimuli consisted of two distinct color photographs of each target, onset competitor, and filler object. The color photographs were taken from the Hemera Photo Object database (Hemera Technologies, Toronto, ON) and Google Images.

Procedure

The first preliminary experiment was a naming task designed to make sure that subjects consistently named each of the pictures chosen, and that the name given to each object corresponded to the name intended to be associated with each picture.

Twenty participants divided into two groups participated in this experiment. Each subject group saw only one exemplar per object, for a total of 240 images. In each trial subjects saw a picture of an object on a white background, and were asked to type the name of the object they thought it represented. The picture remained on the screen for 1s, and subjects had unlimited time to type the name. If more than 1/3 of the subjects misidentified a picture, a new image was selected to be paired with the object in question.

The second preliminary experiment was conducted to validate the new set of images that contained the images that met the identification criterion from the previous experiment plus the new exemplars selected to replace those that did not meet the above specified criterion. In this second experiment, subjects were asked to make a yes/no judgment about the word/picture pairs to provide a measure of identification accuracy, and a measure of ease of identification by recording reaction time latencies.

Two groups of 10 new subjects participated in this experiment. Each subject group saw only one visual exemplar per object name, for a total of 240 images. Each picture appeared on the screen for 1 sec, and was followed by a visually presented word that was either the correct name for the object presented or a different object name. Subjects were asked to decide whether the word they read was the correct name for the object presented or not. Accuracy and reaction times (RT) were measured. Items were eliminated from the stimulus set on the basis of 3 criteria: 1. if more than 1/3 of the subjects gave incorrect answers in the word/picture matching trials; 2. if more than 1/3 of the subjects had RT latencies longer than two standard deviations over their individual mean RT latencies for correct responses; 3. if the across-subjects mean RT for the correct responses to a specific item was more than two standard deviations above the across-subjects mean for the set of correct responses for the matching trials.
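The three exclusion criteria above can be sketched in code. This is a minimal illustration under stated assumptions, not the script used in the study: the trial record layout (`subject`, `item`, `correct`, `rt`) and the function name `items_to_exclude` are hypothetical, only matching trials are assumed to be passed in, and criterion 3 is read here as using the standard deviation of the per-item mean RTs, which the text does not specify.

```python
from collections import defaultdict
from statistics import mean, stdev

def items_to_exclude(trials):
    """Return the set of items failing any of the three norming criteria.

    trials: list of dicts with hypothetical keys 'subject', 'item',
    'correct' (bool) and 'rt' (ms), one matching trial per subject and item.
    """
    by_item = defaultdict(list)
    subject_rts = defaultdict(list)
    for t in trials:
        by_item[t["item"]].append(t)
        if t["correct"]:
            subject_rts[t["subject"]].append(t["rt"])

    # Criterion 2 cutoff: each subject's mean correct RT plus two SDs.
    slow_cutoff = {s: mean(r) + 2 * stdev(r)
                   for s, r in subject_rts.items() if len(r) >= 2}

    # Criterion 3 cutoff (assumed reading): mean of the item means plus two SDs.
    item_mean = {}
    for item, ts in by_item.items():
        correct_rts = [t["rt"] for t in ts if t["correct"]]
        if correct_rts:
            item_mean[item] = mean(correct_rts)
    means = list(item_mean.values())
    item_cutoff = mean(means) + 2 * stdev(means) if len(means) >= 2 else float("inf")

    excluded = set()
    for item, ts in by_item.items():
        n_subjects = len(ts)
        n_wrong = sum(not t["correct"] for t in ts)
        n_slow = sum(t["correct"] and t["rt"] > slow_cutoff.get(t["subject"], float("inf"))
                     for t in ts)
        too_inaccurate = n_wrong > n_subjects / 3            # criterion 1
        too_many_slow = n_slow > n_subjects / 3              # criterion 2
        slow_on_average = item_mean.get(item, 0) > item_cutoff  # criterion 3
        if too_inaccurate or too_many_slow or slow_on_average:
            excluded.add(item)
    return excluded

# Toy data: two of three subjects misjudge the "cane" picture.
demo_trials = [
    {"subject": "s1", "item": "lamb", "correct": True, "rt": 500},
    {"subject": "s1", "item": "lamp", "correct": True, "rt": 520},
    {"subject": "s1", "item": "cane", "correct": False, "rt": 700},
    {"subject": "s2", "item": "lamb", "correct": True, "rt": 510},
    {"subject": "s2", "item": "lamp", "correct": True, "rt": 530},
    {"subject": "s2", "item": "cane", "correct": False, "rt": 710},
    {"subject": "s3", "item": "lamb", "correct": True, "rt": 505},
    {"subject": "s3", "item": "lamp", "correct": True, "rt": 525},
    {"subject": "s3", "item": "cane", "correct": True, "rt": 540},
]
demo_excluded = items_to_exclude(demo_trials)
```

In the toy data only the accuracy criterion fires; with so few items per subject, a two-standard-deviation RT cutoff cannot be exceeded, so the RT criteria only become informative on a full stimulus set.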

Based on the results of the two norming experiments, fifty-four target word-object pairs, fifty-four objects depicting phonological onset competitors, and one hundred and eight filler items were selected as stimuli for the eye tracking experiment. The stimuli used in the experiment are listed in the Appendix.

APPENDIX.

| Target | Competitor | Filler 1 | Filler 2 |
|---|---|---|---|
| ACORN | APRON | NEEDLE | SADDLE |
| BAGEL | BABY | PIPE | SHOVEL |
| BASKET | BATHTUB | NECKLACE | PENGUIN |
| BATTER | BANJO | PACKAGE | JACKET |
| BEAKER | BEETLE | HAMMER | TRAIN |
| BEAVER | BEEHIVE | LADDER | ARMCHAIR |
| BEE | BEACH | CHAIN | POT |
| BELL | BED | HAT | VAN |
| BOWL | BONE | KEY | SHIRT |
| BUCKLE | BUCKET | ENGINE | LEMON |
| BUG | BUS | TELEPHONE | TENT |
| BUTTER | BUTTON | SQUIRREL | RIFLE |
| CABBAGE | CABIN | MEDAL | PILLOW |
| CAMEL | CAMERA | MUFFIN | BLANKET |
| CANDLE | CANDY | HANGER | SHUTTER |
| CANE | CAKE | STATUE | LION |
| CANOE | CASSETTE | OLIVE | FEATHER |
| CARROT | CARRIAGE | SPEAKER | FOOTBALL |
| CASKET | CASTLE | TOASTER | MARBLE |
| CAT | CAB | NET | WATCH |
| CHOCOLATE | CHOPSTICKS | LOBSTER | HELMET |
| CLOWN | CLOUD | BOX | SOAP |
| COAT | COMB | TRUCK | GLASS |
| CRAYON | CRADLE | RACKET | IRON |
| DOLLAR | DOLPHIN | MIRROR | HONEY |
| HEN | HEAD | SHIP | DRILL |
| HOLE | HOME | BEER | DRESS |
| HORN | HORSE | CHAIR | MAP |
| HORNET | HORSESHOE | ONION | DOCTOR |
| LAMB | LAMP | ROCK | GLOVE |
| LETTER | LETTUCE | TRUMPET | SCISSORS |
| MONEY | MONKEY | PEPPER | BRACELET |
| MUSTARD | MUSHROOM | BADGE | WHEEL |
| PADDLE | PADLOCK | LIGHTER | BULLET |
| PANCAKE | PANDA | DANCER | ORANGE |
| PENNY | PENCIL | BANANA | ANCHOR |
| PIER | PEACH | KNIFE | COW |
| PITCHER | PITCHFORK | HAMSTER | BALLOON |
| PIZZA | PEANUT | GUITAR | RIBBON |
| PLATE | PLANE | RING | MOUSE |
| PUPPY | PUPPET | WALNUT | TOILET |
| ROAD | ROLL | CUP | PIANO |
| ROCKER | ROCKET | APPLE | CIGAR |
| ROOSTER | RULER | CHERRY | DRUM |
| ROSE | ROPE | SCALE | BRAIN |
| SANDAL | SANDWICH | RABBIT | CHESTNUT |
| SEAHORSE | SEESAW | DESK | ARROW |
| SNAIL | SNAKE | MATCH | BOTTLE |
| SODA | SOFA | BRUSH | TREE |
| TIRE | TIGER | GARLIC | DAISY |
| TOWER | TOWEL | BELT | NEST |
| TUBA | TULIP | PUMPKIN | BARREL |
| TURKEY | TURTLE | QUARTER | SOLDIER |
| WHISTLE | WINDOW | CANNON | BACON |

fMRI and Eye tracking Experiment

Participants

Eighteen participants (14 females) ranging in age from 18 to 32 (mean age = 23) took part in one scanning session. Participants were all right-handed as assessed by the Oldfield handedness inventory (Oldfield, 1971). Participants reported normal hearing, normal or corrected-to-normal vision, and no neurological impairment. All participants gave written informed consent according to the guidelines of the Human Subjects Committee of Brown University. All participants were screened for MR safety prior to the scanning session. The data from one participant were excluded from both the behavioral and fMRI analyses because of technical difficulties.

fMRI acquisition

Scanning was done on a 3T Siemens TIM Trio scanner at Brown University, using a standard 8-channel head coil, outfitted with a mirror for back projection, an infrared illuminator, and an infrared mirror for eye tracking. High-resolution anatomical images were collected using a 3D T1-weighted magnetization prepared rapid acquisition gradient echo sequence (TR = 2.25 s, TE = 2.98 ms, 1 mm³ isotropic voxel size). Functional images were acquired using a multislice, ascending, interleaved EPI sequence (TR = 2.7 s, TE = 28 ms, FOV = 192, 45 slices, 3 mm × 3 mm in-plane resolution, 3 mm slice thickness). A total of 164 volumes were acquired during each run. In addition, two “dummy” volumes were acquired at the start of the run to allow the MR signal to reach steady state; these volumes were discarded by the scanner.

Materials

The stimulus set consisted of 54 target auditory stimuli (mean duration = 480 ms), and 108 visual displays. The auditory target stimuli were recorded by a male speaker in a sound attenuated room. Each visual display consisted of a 3×3 grid on a white background containing four pictures, one picture in each of the corners of the grid. The visual grid subtended 16×16 degrees of visual angle, with each cell being approximately 5×5 degrees of visual angle.

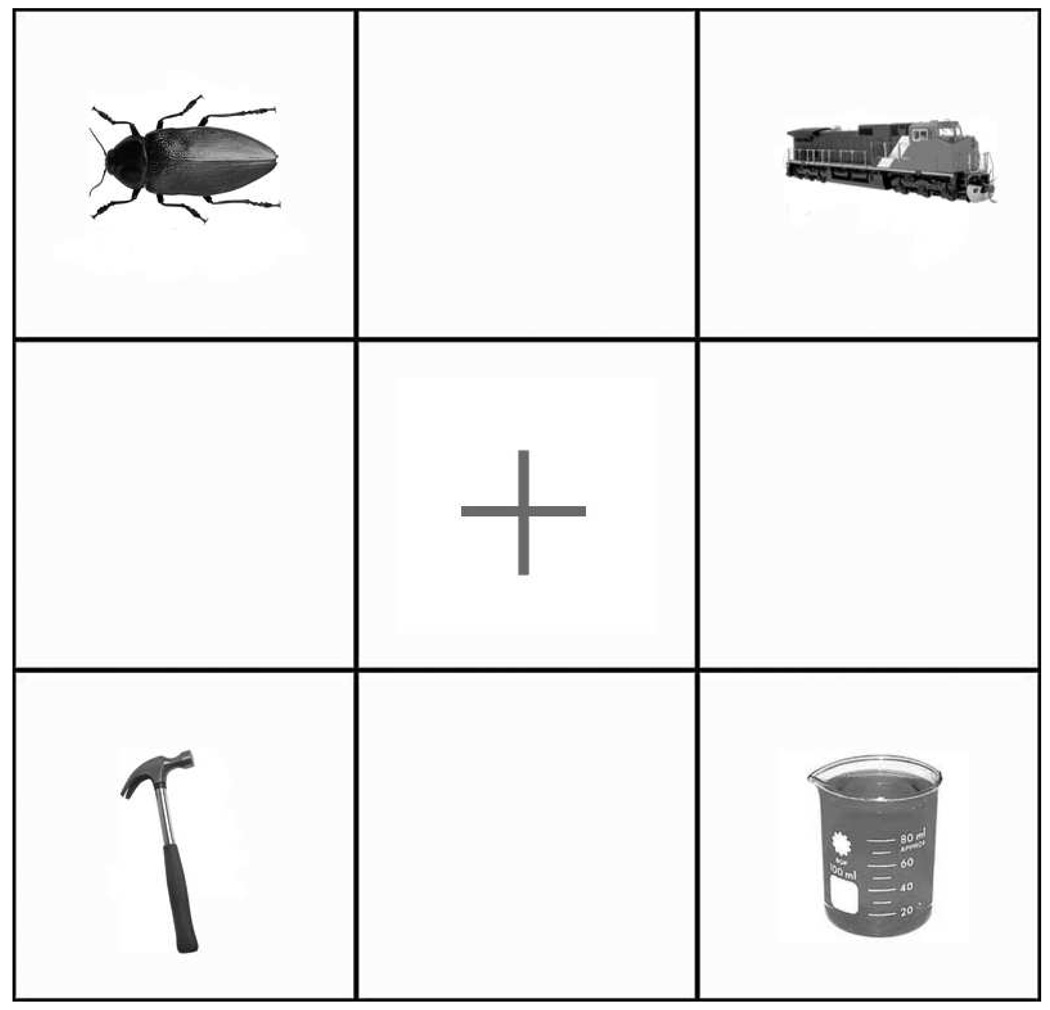

The competitor trials consisted of 54 visual displays containing four objects corresponding to the target auditory stimulus (e.g. beaker), the onset competitor (e.g. beetle), and two fillers (e.g. train, hammer) (see Figure 1). The remaining fifty-four displays served as the no-competitor trials and contained an object corresponding to the target auditory stimulus (e.g. beaker) and three filler objects that were phonologically, semantically, and visually unrelated to the target (e.g. ship, marble, comb). Each auditory target token was paired with two displays, one containing the target, the onset competitor, and two fillers (competitor trial), and one containing the target and three fillers (no-competitor trial). While each auditory token was repeated across the two conditions, none of the object pictures was seen more than once. That is, subjects saw two different exemplars of each object, one in the competitor trials and one in the no-competitor trials. The position of targets and competitors within the grid was counterbalanced, such that targets and competitors appeared an equal number of times in all four positions. Moreover, the two visual exemplars of each object never appeared in the same location across runs.

Figure 1.

Example of a competitor trial display. The target object (beaker) shares the onset with one of the objects in the display (beetle), while the other two objects are unrelated semantically and phonologically.

Procedure

An SMI iView X MRI (SensoMotoric Instruments Inc., Needham, MA) eye tracker was used. An infrared camera located at the edge of the MRI bed monitored participants’ eye movements, recording at 60 Hz with an accuracy better than 1 degree of visual angle. Stimuli were presented with Bliss software (Mertus, 2002) on a Dell laptop. Visual stimuli were sent to an LCD projector and back-projected onto the head coil mirror, and auditory stimuli were delivered through sound-attenuating pneumatic headphones.

Subjects participated in two experimental runs of an event-related design, each consisting of 54 stimulus presentations. In each run they saw an equal number of target-competitor and target-fillers displays in random order and were exposed once to all of the auditory targets; one-half of the targets were paired with target-competitor displays and the other half were paired with target-filler displays. Hence, if an auditory target were paired with a target-competitor display in the first run, the same auditory target would be paired with a target-filler display in the second run. The occurrence of target-competitor and no-competitor displays was counterbalanced across conditions. Overall, subjects were presented with fifty-four target-competitor trials and fifty-four target-filler trials.

Each trial began with the presentation of the four-picture array in order to provide the subject with a chance to briefly scan the display. One second later a red fixation cross appeared in the center of the screen, and the objects disappeared. Participants were instructed to fixate on the cross when it appeared. The fixation cross remained on the screen for one second, followed by the reappearance of the four objects with the simultaneous auditory presentation of the name of one of the objects present in the display (target). The four objects remained on the screen for 1.5 s. Subjects were instructed to find the object that had been named and to look at it until it disappeared. Presentation of stimuli was jittered according to a uniform distribution of nine trial onset asynchronies (TOA values ranging from 4.5 to 11.8 s in 0.9 s steps). During the TOA intervals, the screen was left blank. Each TOA bin was used an equal number of times in each run, and an equal number of competitor and no-competitor trials were assigned to each TOA bin in each run.
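The jitter scheme just described can be made concrete with a short sketch. It is an illustration only, under the assumptions that each run holds 54 trials (27 per condition), that the nine TOA values start at 4.5 s and increase in 0.9 s steps, and that the TOA is measured from one trial onset to the next; the names `toa_bins` and `build_run_schedule` are hypothetical. Note that nine values built this way end at 11.7 s, slightly below the 11.8 s upper bound quoted above.

```python
import random

N_TOA_BINS = 9
TOA_MIN_S = 4.5
TOA_STEP_S = 0.9
TRIALS_PER_RUN = 54
CONDITIONS = ("competitor", "no_competitor")

def toa_bins():
    """Nine trial onset asynchronies, 4.5 s upward in 0.9 s steps."""
    return [round(TOA_MIN_S + i * TOA_STEP_S, 1) for i in range(N_TOA_BINS)]

def build_run_schedule(seed=0):
    """Return a shuffled list of (condition, toa_s) pairs for one run.

    Every TOA bin is used equally often, and within each bin the two
    conditions are represented equally often.
    """
    per_bin = TRIALS_PER_RUN // N_TOA_BINS            # 6 trials per TOA bin
    per_bin_per_condition = per_bin // len(CONDITIONS)  # 3 per condition
    schedule = [(cond, toa)
                for toa in toa_bins()
                for cond in CONDITIONS
                for _ in range(per_bin_per_condition)]
    random.Random(seed).shuffle(schedule)
    return schedule

schedule = build_run_schedule()
total_toa_s = round(sum(toa for _, toa in schedule), 1)
```

Under these assumptions the TOAs of one run sum to 437.4 s, which fits within the 164 volumes × 2.7 s = 442.8 s acquired per run.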

Data Analysis Methods

fMRI data preprocessing

fMRI data were analyzed using AFNI (Cox, 1996; Cox & Hyde, 1997). Preprocessing began with slice acquisition time correction, applied to each run separately. The runs were then concatenated for each subject to carry out head motion correction by aligning all volumes to the fourth collected volume using a 6-parameter rigid body transform. These data were then warped to Talairach and Tournoux (1988) space and resampled to 3-mm isotropic voxels. Lastly, the data were spatially smoothed using a 6-mm full-width half maximum Gaussian kernel.

fMRI statistical analysis

Each subject’s preprocessed EPI data were regressed to estimate the hemodynamic response function for the two experimental conditions (competitor condition vs. no-competitor condition). Response functions were estimated by convolving vectors containing the onset times of each auditory stimulus with a stereotypic gamma-variate hemodynamic response function provided by AFNI (Cox, 1996; Cox & Hyde, 1997). The calculation of the raw fit coefficients for each voxel was carried out using the AFNI 3dDeconvolve program. The raw coefficients were then converted to percent signal change by dividing each voxel coefficient by the experiment-wise mean activation for that voxel. Because of the repetition of auditory targets across the two runs, a group analysis was carried out by entering the percent signal change data into a mixed factor 2-way ANOVA, with subject as a random factor, and condition (competitor vs. no-competitor) and run (1st run vs. 2nd run) as fixed factors. The resulting statistical maps were corrected for multiple comparisons using Monte Carlo simulations. A voxel level threshold of p < 0.025 and a cluster level threshold of p < 0.025 (48 contiguous voxels) were used for all comparisons. The atlases used to locate the anatomical structures were the Anatomy Toolbox atlases (Eickhoff et al., 2005; 2006; 2007).
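As a rough illustration of the regression setup described above, the sketch below builds one condition regressor by summing a gamma-variate response at each stimulus onset and converts a fitted coefficient to percent signal change. It is not the AFNI implementation. The function shape t^b · exp(−t/c) and the values b = 8.6 and c = 0.547 are assumptions, since the text does not report the parameters used, and all function names are hypothetical.

```python
import math

TR_S = 2.7        # repetition time from the acquisition section
N_VOLUMES = 164   # volumes per run

def gamma_variate(t_s, b=8.6, c=0.547):
    """Gamma-variate response t^b * exp(-t/c), scaled to peak at 1.0.

    b and c are assumed illustrative values; the peak falls at t = b * c.
    """
    if t_s <= 0:
        return 0.0
    peak = (b * c) ** b * math.exp(-b)
    return (t_s ** b) * math.exp(-t_s / c) / peak

def build_regressor(onsets_s, n_volumes=N_VOLUMES, tr_s=TR_S):
    """Sample the summed response to all onsets at each volume time."""
    return [sum(gamma_variate(v * tr_s - onset) for onset in onsets_s)
            for v in range(n_volumes)]

def percent_signal_change(coefficient, voxel_mean):
    """Express a raw fit coefficient relative to the voxel's mean signal."""
    return 100.0 * coefficient / voxel_mean

# Two hypothetical auditory onsets, 9 s apart.
regressor = build_regressor([0.0, 9.0])
```

Sampling the response at each volume time, rather than convolving on the TR grid, accommodates onsets that do not coincide with volume acquisitions, as is the case when trial onsets are jittered in steps of one third of a TR.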

Results

Eye tracking results

Eye tracking data were initially processed by counting the number of fixations that each subject made on target, competitor, and filler objects in each trial, sampled every 17 ms. Only fixations initiated after the onset of the auditory target and lasting longer than 100 ms were included in the analysis. We defined four regions that contained target, competitor, and filler object pictures. Each region was a square subtending 3.5×3.5 degrees of visual angle, located in one of the four corners of the display. A fixation to a specific object was defined as lasting from the time that a saccade moved the eye into the specific region until a saccade moved the eye out of that region. Thus, saccades in which the eye did not move out of the region were included as part of the fixation time for that region.
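The fixation definition above amounts to grouping consecutive gaze samples that fall in the same region and then applying the onset and duration filters. A minimal sketch follows, assuming that each 60 Hz sample has already been labeled with the region it falls in (or `None` when outside all four regions); the function name `extract_fixations` and the label strings are hypothetical.

```python
SAMPLE_MS = 1000 / 60   # one gaze sample roughly every 17 ms
MIN_FIXATION_MS = 100   # fixations must last longer than this

def extract_fixations(labels, audio_onset_ms=0.0):
    """Group consecutive same-region samples into fixations.

    labels: per-sample region labels in time order, sample i taken at
    i * SAMPLE_MS. Returns (region, onset_ms, duration_ms) tuples for
    fixations that start at or after the auditory onset and last longer
    than MIN_FIXATION_MS.
    """
    fixations = []
    start = 0
    for i in range(1, len(labels) + 1):
        # Close the current group at the end of data or when the region changes.
        if i == len(labels) or labels[i] != labels[start]:
            region = labels[start]
            onset_ms = start * SAMPLE_MS
            duration_ms = (i - start) * SAMPLE_MS
            if (region is not None
                    and onset_ms >= audio_onset_ms
                    and duration_ms > MIN_FIXATION_MS):
                fixations.append((region, onset_ms, duration_ms))
            start = i
    return fixations

# A brief glance at the target, a gap, then two longer fixations.
demo_labels = ["target"] * 3 + [None] * 2 + ["competitor"] * 7 + ["target"] * 10
demo_fixations = extract_fixations(demo_labels)
```

Because grouping depends only on the region label, small eye movements that stay inside a region are absorbed into the same fixation, which matches the definition given in the text.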

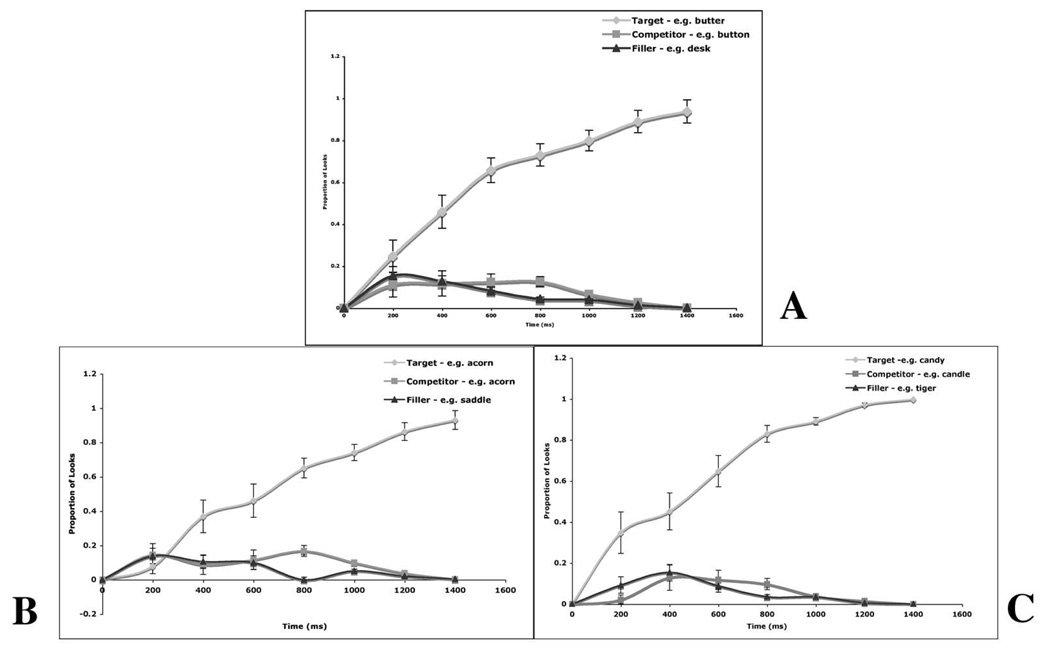

If the subject did not fixate on the target by the end of the trial, that trial was excluded from the analysis. On average, 4% of the trials were excluded for fifteen out of the seventeen subjects. The remaining two subjects proved to be difficult for the eye tracker to calibrate. Thus, approximately one third of their trials were excluded from the analysis. For each subject, the proportion of fixations to target, competitor, and filler objects was computed by averaging the number of fixations to each object in seven 200 ms windows, and dividing it by the total number of fixations across all objects in the same time window. This analysis was performed on the data of both runs averaged together, and on the data from each run alone. Figure 2a shows the mean proportion of looks to the target, onset competitor, and the average of the two unrelated pictures in the competitor trials across both runs. Figure 2b shows the same analysis for the data from the first run, and Figure 2c shows it for the data from the second run.

Figure 2.

Proportion of fixations over time to the target, the onset competitor, and the average of the two unrelated items in competitor trials. Zero corresponds to the onset of the auditory target. Standard error bars are shown for every data point. (A) Data averaged from both runs. (B) Data from the first run. (C) Data from the second run.
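The binned proportions plotted in Figure 2 can be sketched as follows. This is an illustrative reading of the procedure rather than the analysis code used in the study: it assumes gaze samples already labeled by object type, counts samples per object within each of seven 200 ms windows, and divides by the total across objects in that window. The start of the first window is left as a parameter because the text places time zero at auditory onset but begins the statistical analysis 200 ms later. The function name is hypothetical.

```python
from collections import Counter

BIN_MS = 200
N_BINS = 7
OBJECT_TYPES = ("target", "competitor", "filler")

def fixation_proportions(samples, start_ms=0):
    """Proportion of looks to each object type in seven 200 ms windows.

    samples: iterable of (time_ms, label) pairs, with label one of
    OBJECT_TYPES or None when gaze is outside all object regions.
    """
    counts = [Counter() for _ in range(N_BINS)]
    for time_ms, label in samples:
        bin_index = int((time_ms - start_ms) // BIN_MS)
        if label is None or not 0 <= bin_index < N_BINS:
            continue
        counts[bin_index][label] += 1

    proportions = []
    for bin_counts in counts:
        total = sum(bin_counts.values())
        proportions.append({obj: (bin_counts[obj] / total if total else 0.0)
                            for obj in OBJECT_TYPES})
    return proportions

demo_samples = [(0, "competitor"), (17, "competitor"), (34, "target"),
                (51, "filler"), (210, "target"), (230, "target")]
demo_props = fixation_proportions(demo_samples)
```

Averaging the two filler objects, as in the figure, would require keeping separate labels for each filler and halving their combined proportion; the sketch pools them under one label for brevity.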

The data were statistically analyzed to determine whether a significant competitor effect emerged and to ascertain the time course of this effect. For the purpose of the analysis, a trial was defined as starting 200 ms after auditory stimulus onset, since it takes about 200 ms to launch a saccade (Altmann & Kamide, 2004), and ending when the display disappeared from the screen. A two-way repeated measures ANOVA of the proportion of looks with object (competitor, filler) and time bin (seven 200 ms bins) as factors revealed a marginally significant main effect of condition, F(1, 16) = 3.7, p < 0.08, showing that overall competitors were fixated on more often than filler items. There was also a marginally significant main effect for time bin, F(6,16) = 2.1, p < 0.06, suggesting that the overall proportion of looks decreased over time. This is not surprising, given that as time progresses subjects will almost exclusively be fixating on the target object. However, no significant interaction was found, F(8, 128) = 1.4, p < 0.3.

In order to assess the presence of competitor effects across the two runs, separate two-way (Condition × Time Bin) repeated measures ANOVAs were also conducted. Results for the first run revealed a significant main effect for condition, F(1,16) = 5.74, p < 0.05, showing that competitors were fixated on significantly more than filler objects. The analysis also revealed a marginally significant main effect for time bin, F(6,16) = 1.9, p < 0.08, but no significant interaction, F(8,128) = 1.5, p < 0.2. Results from the second run revealed no significant main effect for condition, F(1,16) = 0.5, p < 0.9, and no significant interaction, F(8,128) = 1.8, p < 0.1. However, there was a significant main effect for time bin, F(6,16) = 2.8, p < 0.02.

fMRI results

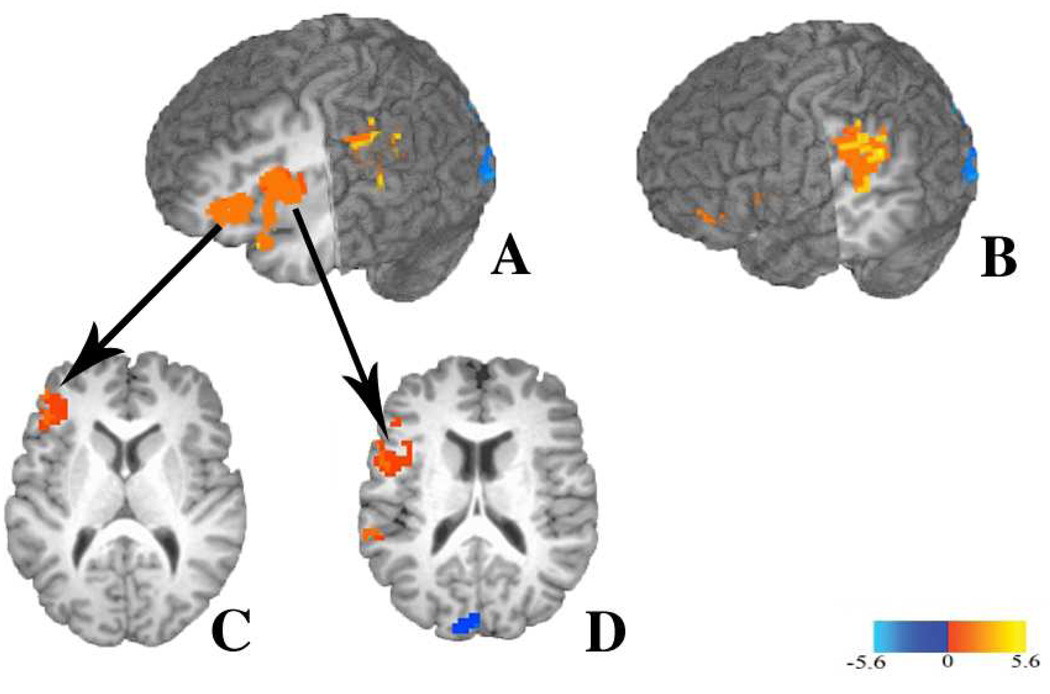

Table 1 provides a summary of the results of the two-way (Condition × Run) ANOVA. Table 1a lists those clusters that showed a main effect of condition and Table 1b lists those clusters that showed a main effect of run (p < 0.025, corrected). No clusters showed a significant condition × run interaction. The focus of discussion will be on those clusters falling within regions previously identified to be involved in language processing (see Figure 3).

Table 1.

Table 1a. Significant clusters activated in the Condition × Run ANOVA

| Cortical Region | Brodmann’s Area | Cluster Size | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|
| Competitor > No-Competitor | | | | | |
| Left Supramarginal Gyrus | 40/2/13 | 139 | 58.5 | 46.5 | 35.5 |
| Left Inferior Frontal | 44/45 | 118 | 49.5 | −4.5 | 17.5 |
| Left Cingulate Gyrus | 24/23 | 77 | 1.5 | 4.5 | 38.5 |
| Left Inferior Frontal Gyrus | 45/46/10 | 70 | 46.5 | −43.5 | 11.5 |
| Right Inferior Parietal Lobule | 40 | 69 | −49.5 | 43.5 | 50.5 |
| Left Insula | 13/47 | 51 | 31.5 | −13.5 | −9.5 |
| Competitor < No-Competitor | | | | | |
| Left Cuneus | 18/17 | 59 | 4.5 | 91.5 | 26.5 |
| Left Lingual Gyrus | 18/17 | 55 | 19.5 | 97.5 | −6.5 |
| Right Lingual Gyrus | 18/17 | 50 | −10.5 | 82.5 | −6.5 |

Table 1b. Significant clusters activated in the Condition × Run ANOVA

| Cortical Region | Brodmann’s Area | Cluster Size | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|
| First Run > Second Run | | | | | |
| Left Declive | 17/18 | 330 | 1.5 | 73.5 | −12.5 |
| Right Superior Parietal Lobule | 7/2/40 | 145 | −34.5 | 46.5 | 62.5 |
| Right Middle Temporal Cortex | 37/19 | 127 | −52.5 | 61.5 | −0.5 |
| Left Superior/Inferior Parietal | 7 | 109 | 31.5 | 55.5 | 56.5 |
| Left Middle Occipital Gyrus | 18/19/37 | 90 | 25.5 | 88.5 | 11.5 |
| Left Postcentral Gyrus | 3/40/22 | 81 | 58.5 | 25.5 | 35.5 |
| First Run < Second Run | | | | | |
| Posterior Cingulate | 31/23/7 | 510 | 1.5 | 49.5 | 17.5 |
| Left Superior Parietal Lobule | 7/5/39/ | 160 | 37.5 | 64.5 | 50.5 |

Main effect of condition. Clusters thresholded at a cluster-level threshold of p < 0.025 with a minimum of 48 contiguous voxels, and at a voxel-level threshold of p < 0.025, t = 2.459 (corrected). Coordinates indicate the maximum intensity voxel for that cluster in Talairach and Tournoux space. The first Brodmann area listed corresponds to the location of the maximum intensity voxel. If the cluster extended into other BAs, those are also listed (see text for details).

Main effect of run. Clusters thresholded at a cluster-level threshold of p < 0.025 with a minimum of 48 contiguous voxels, and at a voxel-level threshold of p < 0.025, t = 2.459 (corrected). Coordinates indicate the maximum intensity voxel for that cluster in Talairach and Tournoux space. The first Brodmann area listed corresponds to the location of the maximum intensity voxel. If the cluster extended into other BAs, those are also listed (see text for details).

Figure 3.

Maps thresholded at a voxel-level threshold of p < 0.025, t = 2.459, and clusters shown correspond to a corrected significance level of p < 0.025. (A) Clusters in the left inferior frontal gyrus showing greater activation for competitor trials compared to no-competitor trials. Sagittal slice shown at x = 35, and coronal slice shown at y = 15. (B) Cluster in the left inferior frontal gyrus (BA 45). Axial slice shown at z = 11. (C) Cluster in the left inferior frontal gyrus (BA 44/45). Axial slice shown at z = 17. (D) Cluster in the left temporo-parietal region showing greater activation for competitor trials compared to no-competitor trials. Sagittal slice shown at x = 50, coronal slice shown at y = 20.
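The combination of a voxel-level threshold and a minimum cluster extent described in the table notes and figure caption can be illustrated with a minimal sketch. This is not the analysis software used in the study. It assumes face-connected (6-neighbour) contiguity, considers only positive values, and runs on a toy 4 × 4 × 4 volume with a toy extent of 4 voxels rather than the 48 voxels used in the study. The function name `cluster_threshold` is hypothetical.

```python
from collections import deque

def cluster_threshold(tmap, t_min, min_voxels):
    """Return clusters (lists of (i, j, k) indices) of voxels with value
    above t_min that form face-connected groups of at least min_voxels."""
    nx, ny, nz = len(tmap), len(tmap[0]), len(tmap[0][0])
    neighbours = [(1, 0, 0), (-1, 0, 0), (0, 1, 0),
                  (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    seen = set()
    clusters = []
    for i in range(nx):
        for j in range(ny):
            for k in range(nz):
                if (i, j, k) in seen or tmap[i][j][k] <= t_min:
                    continue
                # Breadth-first search over suprathreshold neighbours.
                queue = deque([(i, j, k)])
                seen.add((i, j, k))
                cluster = []
                while queue:
                    x, y, z = queue.popleft()
                    cluster.append((x, y, z))
                    for dx, dy, dz in neighbours:
                        p = (x + dx, y + dy, z + dz)
                        inside = (0 <= p[0] < nx and 0 <= p[1] < ny
                                  and 0 <= p[2] < nz)
                        if (inside and p not in seen
                                and tmap[p[0]][p[1]][p[2]] > t_min):
                            seen.add(p)
                            queue.append(p)
                # Keep the cluster only if it meets the extent criterion.
                if len(cluster) >= min_voxels:
                    clusters.append(cluster)
    return clusters

# Toy volume: one 2 x 2 x 2 block of suprathreshold voxels plus one
# isolated suprathreshold voxel that should be discarded.
tmap = [[[0.0] * 4 for _ in range(4)] for _ in range(4)]
for i in range(2):
    for j in range(2):
        for k in range(2):
            tmap[i][j][k] = 3.0
tmap[3][3][3] = 3.0

clusters = cluster_threshold(tmap, t_min=2.459, min_voxels=4)
print([len(c) for c in clusters])  # [8]
```

The isolated voxel exceeds the voxel-level threshold but is removed by the extent criterion, which is the purpose of requiring a minimum number of contiguous voxels.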

Several clusters showed a significant effect of condition with greater activation for competitor trials than no-competitor trials. As Figure 3 shows, the left inferior frontal gyrus (LIFG) contains two clusters of activation that responded more strongly in the competitor condition than in the no-competitor condition. The largest of these clusters was located primarily within the pars opercularis (BA 44, 71% of active voxels), but also extended into the pars triangularis (BA 45, 20% of active voxels). The second cluster was located primarily within the pars triangularis (BA 45, 95% of active voxels), and extended marginally into the middle frontal gyrus (4% of active voxels). A third frontal cluster was located primarily in the left insula (56% of active voxels), but extended into the pars orbitalis (BA 47, 28% of active voxels). It is important to note that, despite the proximity of these three frontal clusters, they are anatomically distinct.

Beyond the frontal regions, there were also significant clusters of activation in the left and right temporo-parietal regions showing increased activation in the competitor condition compared to the no-competitor condition. The left hemisphere cluster was located within the supramarginal gyrus (SMG, 50% of active voxels). This cluster extended into the inferior parietal lobule (22% of active voxels), and the posterior portion of the left superior temporal gyrus (STG, 7% of active voxels). The right hemisphere cluster was primarily located in the right SMG (71% of active voxels), and extended into the inferior parietal lobule (27% of active voxels). No clusters emerged in language areas in which there was greater activation in the no-competitor condition than the competitor condition.
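The percentages of active voxels quoted for each cluster express how a cluster's voxels are distributed over anatomical labels. A minimal sketch of that bookkeeping follows. The voxel labels are hypothetical toy values, not data from the study, and the function name `label_percentages` is an assumption for illustration.

```python
from collections import Counter

def label_percentages(voxel_labels):
    """Percentage of a cluster's voxels assigned to each anatomical label,
    rounded to the nearest whole percent."""
    counts = Counter(voxel_labels)
    total = len(voxel_labels)
    return {label: round(100 * n / total) for label, n in counts.items()}

# Hypothetical 10-voxel cluster: one atlas label per active voxel.
toy_cluster = ["BA 44"] * 7 + ["BA 45"] * 2 + ["unlabeled"] * 1
print(label_percentages(toy_cluster))
# {'BA 44': 70, 'BA 45': 20, 'unlabeled': 10}
```

Because some voxels may fall outside any labeled region, the percentages listed for the named regions of a cluster need not sum to 100.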

The two-way ANOVA also revealed several clusters that showed a significant main effect of run. The clusters that showed a stronger response in the first run compared to the second run were located in the left middle occipital cortex, the superior parietal lobules bilaterally, the left postcentral gyrus extending into the posterior portion of the STG, and the right middle temporal cortex extending into extrastriate visual cortex. The clusters showing a larger response in the second run compared to the first run were located in the left superior parietal lobule and the posterior cingulate cortex.

Discussion

In the present study, the neural bases of phonological onset competition were investigated. The results show that lexical competition induced by shared phonological onsets recruits both frontal structures, i.e. the left inferior frontal gyrus, and posterior structures, i.e. the supramarginal gyrus.

Behavioral Findings

The behavioral results replicate previous findings showing that subjects look more at pictures of objects that share onsets with an auditory target than at pictures of unrelated objects (Allopenna et al., 1998; Dahan et al., 2001a; Dahan et al., 2001b; Tanenhaus et al., 1995). This effect was marginally significant across both runs, and statistically significant in the first run. The lack of significance in the second run suggests that the repetition of auditory targets reduced the strength of the competition effect. However, as shown in Figure 2c, even in the second run competitors attracted a greater proportion of looks than filler items. Thus, the presence of an onset competitor in the stimulus array affects access to the auditorily presented lexical target. It is worth noting that the initial divergence between the proportion of looks to the competitor and filler items emerged about 200–400 ms later than that reported in previous studies, which have shown that a divergence between looks to the competitor and filler objects emerges as early as 200 ms after word onset (Yee & Sedivy, 2006; Yee et al., 2008).

In the current study, the delay of the onset competitor effect may be due to a number of methodological differences. First, traditionally a small number of competitor trials are presented together with a larger number of no-competitor trials to ensure that the subject remains unaware of the experimental manipulation. In the present study, an equal number of competitor and no-competitor trials was used, because the addition of more no-competitor trials would have significantly lengthened the amount of time subjects had to spend in the scanner. It is possible that the shift in the ratio of competitor to no-competitor trials influenced the size and latency of the competitor effect observed here. Second, earlier studies required subjects not only to look at the target but also to overtly point to the target with their finger or with a mouse. In the current study, subjects were only required to look at the target picture. The coupling of a motor and visual action may have a facilitatory effect on subjects’ responses that is not observed when only a visual response is required. Third, in contrast to earlier studies that used a head-mounted eye tracker that allowed subjects to move their heads as they were doing the task, participants were unable to move their heads in the scanner. This difference might have affected the ease with which subjects moved their eyes, as it is more natural to follow eye movements with head movements. Lastly, the stress of doing the task while in the scanner might have also affected the ease with which participants launched eye movements. In fMRI studies it is not uncommon to find slower reaction times in behavioral tasks while in the scanner compared to the same tasks outside of the scanner. For example, reaction time latencies in a lexical decision task were on the order of 200–300 ms slower in the scanner than outside the scanner (cf. Prabhakaran et al., 2006 and Luce and Pisoni, 1998 where the same stimuli were used). It is possible that eye movements are subject to a similar phenomenon.

The effects of competition in temporo-parietal structures

The comparison between competitor and no-competitor trials showed increased activation in the left posterior STG and SMG. The left STG and SMG have previously been identified as being involved in phonological processing (e.g. Binder & Price, 2001; Gelfand & Bookheimer, 2003; Hickok & Poeppel, 2000). More recently, it has been shown that the left SMG is recruited under conditions of phonological competition (Prabhakaran et al., 2006). Prabhakaran and colleagues (2006) observed increased activation in the SMG when subjects performed a lexical decision task on words with many phonological competitors compared to words with few phonological competitors. Of interest, the current study showed activation not only in the left SMG but also in the right SMG. It is worth noting that, although not discussed, other studies have shown that the right SMG is also recruited in phonological tasks (Price et al., 1997). That the right SMG is activated in the current study suggests that right hemisphere mechanisms are also recruited under conditions of phonological competition.

The present findings confirm the recruitment of the SMG under conditions of competition driven by similarities in phonological form. However, these results differ from those of Prabhakaran et al. (2006) in two important ways. First, the findings of the current study show that the SMG is not only recruited in a task requiring access to lexical form but it is also recruited in a task that requires access to the conceptual representation of a word. In the Prabhakaran et al. (2006) study, participants had to make a lexical decision on a singly presented auditory target stimulus. Thus, the subjects had only to overtly access the lexical form of the word to make a decision. In contrast, in the current study, subjects were required to look at a named picture. To do so, they had to access the conceptual representation of the picture in order to match its shape to the auditorily presented target. Second, the findings of the current study show that the SMG is not only recruited when phonological competition is implicit, but is also recruited in a task in which competition is explicitly present in the stimulus array. That is, in the Prabhakaran et al. study the phonological competitors were never presented, whereas in the current study the phonological competitors were presented in the stimulus array. Thus, findings of the present study indicate that the left SMG is sensitive not only to phonological competition intrinsic to a stimulus, but also to phonological competition that is reinforced by conceptual representations. The influence of conceptual representations on activation in the SMG could result from two-way connections with frontal areas assumed to be involved in the manipulation of these representations. This is not surprising given that a previous study (Gold & Buckner, 2002) has shown that the left SMG coactivates with domain-general frontal regions when subjects are performing a controlled phonological judgment.

The effects of competition in frontal structures

There is a large body of evidence suggesting that ventrolateral portions of prefrontal cortex, which include the IFG, are involved in guiding response selection under conditions of conflict or competition (cf. Badre & Wagner, 2004; Desimone & Duncan, 1995; Miller & Cohen, 2001). Selection is undoubtedly present in our task. Thus, it is not surprising that increased left IFG activation was found. However, there are a number of experimental factors that could have contributed to the modulation of IFG activation. In the present task, the presence of an onset competitor in the stimulus array results not only in the activation of the phonological form of the target stimulus and its phonological competitor, but also in activating the conceptual representations of these competing stimuli. Thus, competition needs to be resolved at both phonological and conceptual levels of representation.

Thompson-Schill and colleagues (Thompson-Schill et al., 1997, 1998, 1999) have proposed that the left IFG is a domain-general mechanism that guides selection among competing conceptual representations. In support of this hypothesis, a recent study by Snyder and colleagues (2007) found increased activation in the left IFG when multiple semantic representations were competing regardless of whether the subject performed a phonological or semantic judgment task. Thus, in their study competition was driven by semantic factors. In the current study, competition was driven by phonological factors, and activation of the IFG was modulated by the presence of phonological onset competition. Taken together, these findings support the hypothesis that the IFG is domain-general in that it is responsive to phonological as well as semantic/conceptual competition and it is recruited even when the response is not dependent on either semantic or phonological judgments.

Nonetheless, competitor trials elicited more activation than no-competitor trials in the current study in two non-overlapping clusters in the left IFG. The largest cluster was located primarily within BA 44 (71% of active voxels), and the second cluster was located within BA 45 (95% of active voxels). A third cluster in the insula extended into BA 47. The presence of these distinct clusters of activation suggests that there is a functional division of the IFG. It is to this issue that we now turn.

There are several neuroimaging studies showing a functional distinction between anterior and posterior IFG on the basis of whether semantic or phonological information is processed. These studies have shown that the anterior portion of the left IFG, corresponding to BA 45/47, is activated in tasks requiring semantic processing, while the posterior portion of the left IFG, corresponding to BA 44, is activated in tasks requiring phonological processing (Buckner et al., 1995; Burton et al., 2000; Fiez, 1997; Poldrack et al., 1999). This framework would suggest that the clusters found in the present study are responding separately to competition at the semantic/conceptual level and at the phonological level. Thus, the emergence of a cluster in BA 44 is consistent with the view that it is responsive to phonological factors, whereas the emergence of clusters in BA 45 and including BA 47 is consistent with the view that they are responsive to semantic/conceptual factors. Given that the presence of phonological competition appeared to activate more than one conceptual representation, thus increasing conceptual competition in the competitor condition, it is reasonable to suggest that BA 45/47 and BA 44 are tightly coupled.

Other researchers have proposed that there is a functional subdivision of the IFG based upon different processes involved in cognitive control. Badre & Wagner (2007) suggest that within the IFG two distinct subregions perform two different functions: controlled retrieval and post-retrieval selection. Controlled retrieval refers to the top-down activation of semantic knowledge relevant to the task at hand and it is suggested to recruit BA 47. If more than one knowledge representation becomes active, post-retrieval selection is needed to resolve competition, regardless of the form of these representations (e.g. semantic, phonological, and perceptual). In this framework post-retrieval selection is implemented in BA 45.

In the current study, BA 47 (which was part of a larger cluster including the insula) could be recruited by the activation of task-relevant semantic knowledge. The increased activation found in BA 47 for competitor trials could reflect the higher number of semantic representations that are activated in the competitor condition. Namely, not only are the semantic representations associated with the target stimulus activated, but the semantic representations of the phonological competitor are activated as well. The cluster found in BA 45 could reflect the domain-general post-retrieval selection mechanism. As to the role of BA 44, Badre and Wagner (2007) agree with its involvement in phonological processing, and further suggest that its proximity to speech production regions might implicate it as a selection mechanism tied to a specific overt response. Thus, the activation found in BA 44 could be interpreted as involved in carrying out response-related selection.

To summarize, the present study showed that the left IFG is sensitive to competition driven by phonological similarity, and not only to competition among semantic/conceptual factors. Moreover, the activation found in the left IFG is consistent with a functional segregation of this region into anterior and posterior portions both on the basis of linguistic domain and on the basis of different processes involved in cognitive control. Further studies will be necessary to determine whether these interpretations are mutually exclusive, or whether the IFG can be functionally divided according to both models.

Acknowledgements

This research was supported in part by NIH Grant R01 DC006220 from the National Institute on Deafness and Other Communication Disorders. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Deafness and Other Communication Disorders or the National Institutes of Health. Many thanks to Emily Myers for help with the analysis of the data, Kathleen Kurowski for help in the preparation of the auditory stimuli, and Eiling Yee and Bob McMurray for providing competitor pair candidates.

References

- Allopenna PD, Magnuson JS, Tanenhaus MK. Tracking the time course of spoken word recognition using eye movements: evidence for continuous mapping models. Journal of Memory and Language. 1998;38:419–439.

- Altmann GTM, Kamide Y. Now you see it, now you don’t: mediating the mapping between language and the visual world. In: Henderson J, Ferreira F, editors. The interface of language, vision, and action. Psychology Press; 2004.

- Badre D, Wagner AD. Selection, integration, and conflict monitoring: assessing the nature and generality of prefrontal cognitive control mechanisms. Neuron. 2004;41:473–487. doi: 10.1016/s0896-6273(03)00851-1.

- Badre D, Wagner AD. Left ventrolateral prefrontal cortex and the cognitive control of memory. Neuropsychologia. 2007;45:2883–2901. doi: 10.1016/j.neuropsychologia.2007.06.015.

- Binder JR, Price CJ. Functional neuroimaging of language. In: Cabeza R, Kingstone A, editors. Handbook of functional neuroimaging. Cambridge: MIT Press; 2001. pp. 187–251.

- Buckner RL, Raichle ME, Petersen SE. Dissociation of human prefrontal cortical areas across different speech production tasks and gender groups. Journal of Neurophysiology. 1995;74:2163–2173. doi: 10.1152/jn.1995.74.5.2163.

- Burton MW. The role of inferior frontal cortex in phonological processing. Cognitive Science. 2001;25:695–709.

- Burton MW, Small SL, Blumstein SE. The role of segmentation in phonological processing: An fMRI investigation. Journal of Cognitive Neuroscience. 2000;12:679–690. doi: 10.1162/089892900562309.

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Cox RW, Hyde JS. Software tools for analysis and visualization of fMRI data. NMR in Biomedicine. 1997;10:171–178. doi: 10.1002/(sici)1099-1492(199706/08)10:4/5<171::aid-nbm453>3.0.co;2-l.

- Dahan D, Magnuson JS, Tanenhaus MK, Hogan EM. Subcategorical mismatches and the time course of lexical access: Evidence for lexical competition. Language and Cognitive Processes. 2001a;16:507–534.

- Dahan D, Magnuson JS, Tanenhaus MK. Time course of frequency effects in spoken-word recognition: Evidence from eye movements. Cognitive Psychology. 2001b;42:317–367. doi: 10.1006/cogp.2001.0750.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annual Review of Neuroscience. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- D’Esposito M, Postle BR, Rypma B. Prefrontal cortical contributions to working memory: evidence from event-related fMRI studies. Experimental Brain Research. 2000;133:3–11. doi: 10.1007/s002210000395.

- Duncan J. Converging levels of analysis in the cognitive neuroscience of visual attention. Philosophical Transactions of the Royal Society of London B. 1998;353:1307–1317. doi: 10.1098/rstb.1998.0285.

- Duncan J. An adaptive model of neural function in prefrontal cortex. Nature Reviews Neuroscience. 2001;2:820–829. doi: 10.1038/35097575.

- Duncan J, Owen AM. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends in Neurosciences. 2000;23:475–483. doi: 10.1016/s0166-2236(00)01633-7.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage. 2005;25:1325–1335. doi: 10.1016/j.neuroimage.2004.12.034.

- Eickhoff SB, Heim S, Zilles K, Amunts K. Testing anatomically specified hypotheses in functional imaging using cytoarchitectonic maps. Neuroimage. 2006;32:570–582. doi: 10.1016/j.neuroimage.2006.04.204.

- Eickhoff SB, Paus T, Caspers S, Grosbras MH, Evans AC, Zilles K, Amunts K. Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. Neuroimage. 2007;36:511–521. doi: 10.1016/j.neuroimage.2007.03.060.

- Fiez JA. Phonology, semantics, and the role of the left inferior prefrontal cortex. Human Brain Mapping. 1997;5:79–83.

- Gelfand JR, Bookheimer SY. Dissociating neural mechanisms of temporal sequencing and processing phonemes. Neuron. 2003;38:831–842. doi: 10.1016/s0896-6273(03)00285-x.

- Gold BT, Buckner RL. Common prefrontal regions coactivate with dissociable posterior regions during controlled semantic and phonological tasks. Neuron. 2002;35:803–812. doi: 10.1016/s0896-6273(02)00800-0.

- Hickok G, Poeppel D. Towards a functional neuroanatomy of speech perception. Trends in Cognitive Sciences. 2000;4:131–138. doi: 10.1016/s1364-6613(00)01463-7.

- Luce PA, Pisoni DB. Recognizing spoken words: the neighborhood activation model. Ear and Hearing. 1998;19:1–36. doi: 10.1097/00003446-199802000-00001.

- Marslen-Wilson W, Welsh A. Processing interactions during word-recognition in continuous speech. Cognitive Psychology. 1978;10:29–63.

- Marslen-Wilson W. Functional parallelism in spoken word recognition. Cognition. 1987;25:71–102. doi: 10.1016/0010-0277(87)90005-9.

- McClelland JL, Elman JL. The TRACE model of speech perception. Cognitive Psychology. 1986;18:1–86. doi: 10.1016/0010-0285(86)90015-0.

- Mertus JA. BLISS: The Brown Lab interactive speech system. Providence, Rhode Island: Brown University; 2002.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annual Review of Neuroscience. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Norris D. Shortlist: A connectionist model of continuous speech recognition. Cognition. 1994;52:189–234.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh Inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Paulesu E, Frith CD, Frackowiak RSJ. The neural correlates of the verbal component of working memory. Nature. 1993;362:342–345. doi: 10.1038/362342a0.

- Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JD. Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. Neuroimage. 1999;10:15–35. doi: 10.1006/nimg.1999.0441.

- Prabhakaran R, Blumstein SE, Myers EB, Hutchinson E, Britton B. An event-related investigation of phonological-lexical competition. Neuropsychologia. 2006;44:2209–2221. doi: 10.1016/j.neuropsychologia.2006.05.025.

- Price C, Moore CJ, Humphreys GW, Wise RJS. Segregating semantic from phonological processes during reading. Journal of Cognitive Neuroscience. 1997;9:727–733. doi: 10.1162/jocn.1997.9.6.727.

- Smith EE, Jonides J. Storage and executive processes in the frontal lobes. Science. 1999;283:1657–1661. doi: 10.1126/science.283.5408.1657.

- Snyder HR, Feigenson K, Thompson-Schill SL. Prefrontal cortical response to conflict during semantic and phonological tasks. Journal of Cognitive Neuroscience. 2007;19:761–775. doi: 10.1162/jocn.2007.19.5.761.

- Spivey-Knowlton MJ. Integration of visual and linguistic information: Human data and model simulations. Unpublished Ph.D. thesis. Rochester, NY: University of Rochester; 1996.

- Tanenhaus MK, Spivey-Knowlton MJ, Eberhard KM, Sedivy JC. Integration of visual and linguistic information in spoken language comprehension. Science. 1995;268:632–634. doi: 10.1126/science.7777863.

- Thompson-Schill SL, D’Esposito M, Aguirre GK, Farah MJ. Role of the inferior prefrontal cortex in retrieval of semantic knowledge: a reevaluation. Proceedings of the National Academy of Sciences. 1997;94:14792–14797. doi: 10.1073/pnas.94.26.14792.

- Thompson-Schill SL, Swick D, Farah MJ, D’Esposito M, Kan IP, Knight RT. Verb generation in patients with focal frontal lesions: A neuropsychological test of neuroimaging findings. Proceedings of the National Academy of Sciences of the United States of America. 1998;95:15855–15860. doi: 10.1073/pnas.95.26.15855.

- Thompson-Schill SL, D’Esposito M, Kan IP. Effects of repetition and competition on activity in left prefrontal cortex during word generation. Neuron. 1999;23:513–522. doi: 10.1016/s0896-6273(00)80804-1.

- Talairach J, Tournoux P. A co-planar stereotaxic atlas of a human brain. Stuttgart, Germany: Thieme; 1988.

- Wagner AD, Maril A, Bjork RA, Schacter DL. Prefrontal contributions to executive control: fMRI evidence for functional distinctions within lateral prefrontal cortex. Neuroimage. 2001;14:1337–1347. doi: 10.1006/nimg.2001.0936.

- Wagner AD, Pare-Blagoev EJ, Clark J, Poldrack RA. Recovering meaning: Left prefrontal cortex guides controlled semantic retrieval. Neuron. 2001;31:329–338. doi: 10.1016/s0896-6273(01)00359-2.