Abstract

Publication bias was first described about 50 years ago and has been a recognized concern in the medical research community for almost 20 years. This review briefly summarizes the current status of publication bias, its potential sources, and the methods commonly used to evaluate it. In translational stroke research, publication bias has long been suspected but has not received sufficient attention, and the situation has changed little over the last decade. The author emphasizes the important role that publishers might play in addressing publication bias.

Keywords: Publication bias, stroke, funnel plot, preclinical trials, neuroprotection, efficacy

1. Introduction

Publication bias was first noted about 50 years ago (Sterling 1959) and has been a recognized concern for almost 20 years (Chalmers et al 1990; Dickersin 1990; Sharp 1990). In most cases, the term refers to the tendency for studies with positive and/or statistically significant results to be published more easily and more frequently. Other types of publication bias include preferences based on research direction, authors’ nationalities, and the professional rank of institutes. Publication bias in the medical research reporting system may have distorted the medical research literature and may have influenced the conclusions of some meta-analyses and systematic reviews. A small random sample of 86 studies in medical informatics evaluation research (Machan et al 2006) showed a remarkably high percentage (69.8%) of descriptions of positive results. Of the 54 reviews and meta-analyses analyzed, 19 (36.6%) came to a positive conclusion with regard to the overall effect of the analyzed system, 32 (62.5%) were inconclusive, and only one came to a negative conclusion.

2. Publication bias in translational stroke research

In the field of translational stroke research, the discrepancy in neuroprotective efficacy between preclinical trials and clinical trials has caused growing concern. An extensive review of 1026 experimental treatments (O’Collins et al 2006) revealed that neuroprotective efficacy was superior to control conditions in 62% of the preclinical models of focal ischemia, in 70% of preclinical models of global ischemia, and in 74% of culture models. This rate of positive results in preclinical trials is strikingly high compared with the rate of positive results in clinical trials: there is still no FDA-approved neuroprotective treatment for ischemic stroke.

Factors contributing to the translational failure of neuroprotective treatments for ischemic stroke have been addressed in the Stroke Therapy Academic Industry Roundtable (STAIR) guidelines for preclinical stroke trials (Stroke Therapy Academic Industry Roundtable 1999). They include species differences, inappropriate time windows, ineffective drug levels, inability of drugs to cross the blood-brain barrier (BBB), use of young animals without co-morbidities, failure to model white matter damage, and the heterogeneity of stroke subtypes in patients. However, another issue, publication bias, may also contribute to the discrepancy in the reporting of positive results between preclinical trials and clinical trials in translational stroke research.

An article from Collaborative Approach to Meta Analysis and Review of Animal Data from Experimental Stroke (CAMARADES) dealing with publication bias across stroke studies suggests that at least 15% of experiments remain unpublished, and that this results in an overstatement of efficacy of 30% (unpublished communication with Dr. Macleod).
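The mechanism behind such an overstatement can be illustrated with a toy simulation. The sketch below is not the CAMARADES analysis and does not attempt to reproduce the 15% or 30% figures; the function name, the true effect, the group size, and the probability of leaving a non-significant study unpublished are all arbitrary assumptions chosen for illustration. It simply shows that when non-significant studies are selectively withheld, the mean of the published estimates exceeds the mean of all estimates.

```python
import random
import statistics


def simulate_selective_publication(true_effect=0.2, n_studies=2000,
                                   per_group=10, drop_prob=0.5, seed=0):
    """Toy model of publication bias (all parameters are illustrative).

    Each study estimates a standardized mean difference with unit
    variance per observation. Significant studies are always published;
    non-significant ones are left unpublished with probability drop_prob.
    Returns (mean of all estimates, mean of published estimates,
    fraction of studies left unpublished).
    """
    rng = random.Random(seed)
    # Standard error of a difference between two group means (unit variance).
    se = (2.0 / per_group) ** 0.5
    all_estimates = []
    published = []
    for _ in range(n_studies):
        estimate = rng.gauss(true_effect, se)
        all_estimates.append(estimate)
        significant = estimate / se > 1.96
        if significant or rng.random() >= drop_prob:
            published.append(estimate)
    mean_all = statistics.fmean(all_estimates)
    mean_published = statistics.fmean(published)
    unpublished_fraction = 1.0 - len(published) / n_studies
    return mean_all, mean_published, unpublished_fraction


if __name__ == "__main__":
    mean_all, mean_pub, missing = simulate_selective_publication()
    print(f"mean of all studies:       {mean_all:.3f}")
    print(f"mean of published studies: {mean_pub:.3f}")
    print(f"fraction unpublished:      {missing:.1%}")
```

In this toy model the size of the overstatement depends entirely on the assumed selection rule, which is why empirical estimates of the unpublished fraction, such as the one cited above, are needed.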

Some reviews with detailed systematic analyses have further confirmed the widely suspected publication bias in preclinical stroke trials (Perel et al 2007). On the funnel plot, the most commonly used graphical tool for evaluating publication bias, an inflated efficacy was shown for treatment with recombinant tissue plasminogen activator, and similar effects were shown for tirilazad treatment (Perel et al 2007), but not for hypothermia treatment (van der Worp et al 2007).

3. Sources of bias

Although it is widely noticed that positive or significant results more frequently appear in journals, the fundamental reasons have not been well addressed. The bias that has been noticed in medical research literature might come from the publication process, the experimental process, or both. Currently there is no particular study specifically addressing the bias sources in translational stroke research. It is reasonable to assume that similar bias sources exist in translational stroke research as in other medical research fields.

3.1. Bias from the publishing process

In addition to whether a result is positive or negative (Blackwell et al 2009; Hopewell et al 2009), many other factors may contribute to publication bias, such as the research directions of authors and reviewers (Joyce et al 1998), a manuscript’s potential value for pursuing and maintaining a journal’s high impact factor (Opthof et al 2002b), conflicts of interest (Perlis et al 2005), research funding sources (Liss 2006), publishing cost, language (Egger et al 1997b), authors’ nationality (Opthof et al 2002b; Yousefi-Nooraie et al 2006), the rank and geographic location of the sponsoring institute (Eloubeidi et al 2001; Sood et al 2007), and multiple biases from the review process (Alasbali et al 2009; Goldbeck-Wood 1999; Opthof et al 2001a; Opthof et al 2002a; Opthof et al 2002b). The following paragraphs mainly discuss the influence of sponsorship and impact factor on publication bias.

Research funding sources have been shown to influence the published outcomes of studies. Results favorable to the drugs studied were significantly more common in studies funded by a pharmaceutical company (98% vs. 32%) (Liss 2006). Financial conflicts of interest have been reported to be prevalent in clinical trials and are associated with a greater likelihood of reporting results favorable to the intervention being studied (Perlis et al 2005). Research funded by drug companies was less likely to be published than research funded by other sources (Hall et al 2007; Lexchin et al 2003). Studies sponsored by pharmaceutical companies were more likely to have outcomes favoring the sponsor than were studies with other sponsors (Lexchin et al 2003). Therefore, in addition to a declaration of conflicts of interest, further procedures may be needed to avoid bias.

While the impact of research funding sources on publication bias has been noticed and addressed, the role of a journal’s operating goals and sources of support has rarely been discussed. Although the use of the impact factor as a criterion for measuring a journal’s quality is controversial (Barendse 2007; Boldt et al 2000; Peleg and Shvartzman 2006; Roussakis et al 2007), most journals tend to treat the impact factor as a measure of their achievements. Publisher bias may be encouraged when the impact factor dominates a journal’s operational strategy (Opthof et al 2001b; Opthof et al 2002b). One study assessed the relationship between journal impact factor and publishing outcomes in the neonatology literature (Littner et al 2005). It showed that studies with positive results were more frequently published in journals with high impact factors, suggesting a role of the impact factor in selective publishing or submitting. A different operating strategy for professional journals may be needed to deal with publication bias.

3.2. Bias from the experimental process

Bias arising from the experimental process may mix with publication bias, and it may not be easy to tell whether an observed bias comes from the publication process, the experimental process, or both. Experimental bias has been recognized and addressed in a few systematic reviews (Macleod et al 2008b; van der Worp et al 2007). Some editors may argue that researchers are more likely to produce and recommend manuscripts with positive or significant results. JAMA conducted a specific investigation of publication bias in editorial decision making (Olson et al 2002); after simultaneous adjustment for study characteristics and quality indicators, the publication rates of studies with significant and non-significant results did not differ significantly, with an adjusted odds ratio of 1.30 (95% CI, 0.87–1.96). However, editorial policies and processes differ from journal to journal, and a study based on one journal’s articles is insufficient to reflect the overall role of journals in publication bias. JAMA is to be commended for its constructive efforts in addressing publication bias from the journal’s side.

4. Evaluation methods for publication bias

No evaluation method has been specifically designed or tailored for detecting publication bias in translational stroke research. To assess suspected publication bias in stroke studies, the standard methods widely accepted by the medical research community apply. Publication bias can be detected by several commonly used graphical or statistical methods, such as the funnel plot (Egger et al 1997a) or fail-safe numbers (Persaud 1996; Rosenberg 2005). Other methods, such as selection models using weighted distribution theory (Sutton et al 2000), are also in development, but they have not been used regularly. Brief introductions to the common evaluation methods, and sample funnel plots for the efficacy of some neuroprotectants, are provided in the following paragraphs.

The funnel plot, a plot of trials’ effect estimates against sample size, has been widely used to detect publication bias. It rests on the assumption that the plot resembles a symmetrical inverted funnel in the absence of bias (Egger et al 1997a). Many factors may contribute to a detected asymmetry; therefore, this method should be used with caution, especially when a meta-analysis includes only a limited number of studies (Irwig et al 1998; Stuck et al 1998; Vandenbroucke 1998). Asymmetry in a funnel plot can be detected by several methods, such as visual inspection, “trim and fill”, regression approaches (Soeken and Sripusanapan 2003), and a recently proposed method (Formann 2008) in which the proportion of unpublished studies is estimated by the degree of truncation of a left-truncated normal distribution. Each method has its own advantages and limitations; it is suggested that multiple methods be used when publication bias is suspected.
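As one concrete instance of the regression approach, the sketch below implements the linear regression test described by Egger et al (1997a): each study’s standardized effect (effect divided by its standard error) is regressed on its precision (the reciprocal of the standard error), and an intercept far from zero suggests funnel plot asymmetry. The function name and the example numbers are illustrative assumptions and are not taken from any review cited here; a full analysis would also compare the intercept with its standard error using a t distribution with n − 2 degrees of freedom.

```python
import math


def egger_regression(effects, std_errors):
    """Unweighted Egger regression for funnel plot asymmetry.

    Regresses standardized effects (effect / SE) on precision (1 / SE)
    by ordinary least squares and returns
    (intercept, slope, standard error of the intercept).
    """
    if len(effects) != len(std_errors):
        raise ValueError("effects and std_errors must have the same length")
    n = len(effects)
    if n < 3:
        raise ValueError("at least three studies are required")
    y = [e / s for e, s in zip(effects, std_errors)]  # standardized effects
    x = [1.0 / s for s in std_errors]                 # precisions
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("all studies have the same precision")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - intercept - slope * xi) ** 2
                      for xi, yi in zip(x, y))
    sigma2 = residual_ss / (n - 2)
    se_intercept = math.sqrt(sigma2 * (1.0 / n + mean_x ** 2 / sxx))
    return intercept, slope, se_intercept


if __name__ == "__main__":
    # Hypothetical data: the less precise studies report the larger effects.
    effects = [0.2, 0.3, 0.6, 0.9]
    std_errors = [0.1, 0.2, 0.4, 0.5]
    intercept, slope, se = egger_regression(effects, std_errors)
    print(f"intercept = {intercept:.3f} (SE {se:.3f}), slope = {slope:.3f}")
```

With few studies the test has low power, which is one reason for the caution recommended above.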

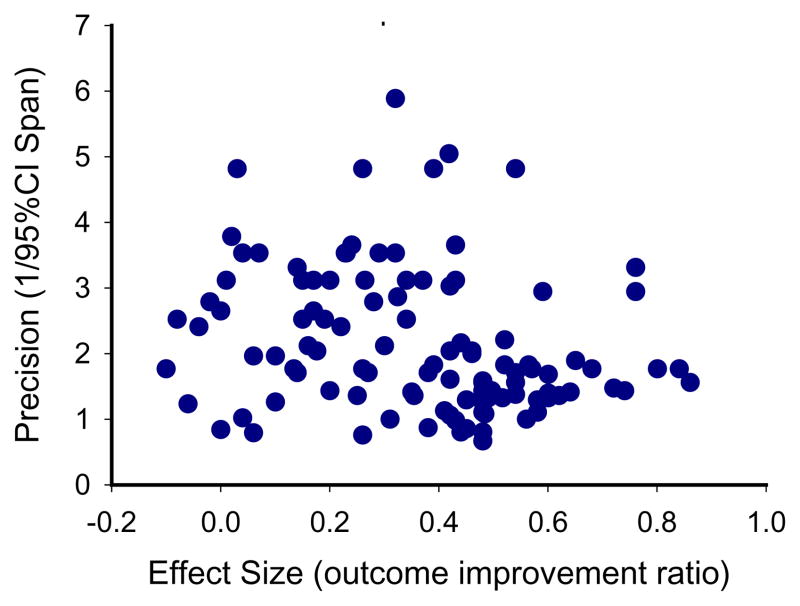

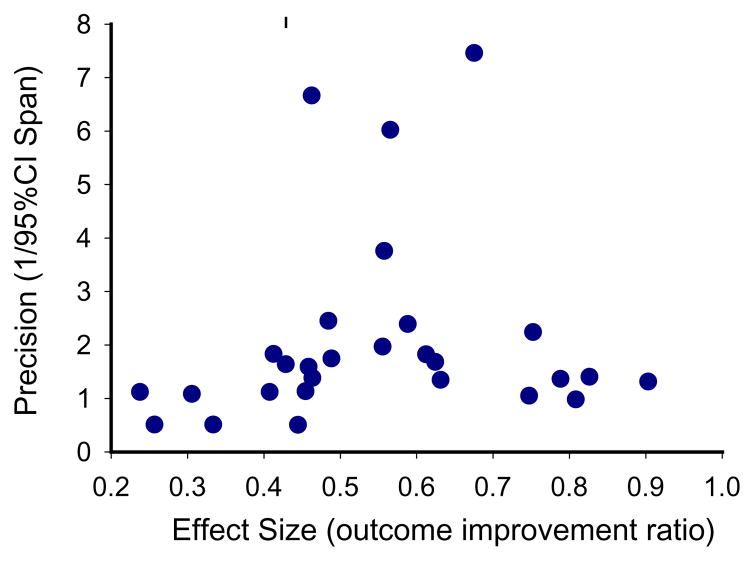

For demonstration purposes, I present a sample funnel plot based on the available data from a systematic review (Macleod et al 2005a) of the neuroprotective efficacy of FK506. As shown in Fig 1, the funnel plot of precision against effect size is asymmetric by visual inspection, with more studies located on the left side. The “missing” studies in the right portion (large efficacy) of the plot may indicate possible publication bias or true heterogeneity among studies. A similar sample funnel plot (Fig 2) for the neuroprotective efficacy of melatonin was constructed from the available data in another systematic review (Macleod et al 2005b). In contrast to Fig 1, the asymmetry of Fig 2 reveals apparent “missing” studies in the left portion (small efficacy), indicating a possibly inflated efficacy. These asymmetries in funnel plots may indicate the existence of publication bias, missing literature, or true heterogeneity among studies. Therefore, addressing suspected publication bias in systematic reviews may provide valuable information for the selection of neuroprotective candidates for acute stroke treatment.

Fig 1.

Funnel plot for FK506 efficacy. Asymmetry can be detected by visual inspection, with more studies being allocated in the left portion, indicating “missing” studies in the right portion of large efficacy.

Fig 2.

Funnel plot for melatonin efficacy. Asymmetry can be detected by visual inspection, with more studies being allocated in the right portion of large efficacy, indicating possibly inflated efficacy.

Fail-safe numbers are to some degree analogous to confidence intervals. They help to assess the degree of confidence in a particular meta-analysis result numerically, as funnel plots do visually. A fail-safe number may be defined as the number of new, unpublished, non-significant studies that would be required to exist to lower the significance of a meta-analysis to some specified level (Persaud 1996). Using fail-safe numbers is a quick way to estimate whether publication bias is likely to be a problem for a specific meta-analysis (Rosenberg 2005).
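The definition above can be made concrete with the classic unweighted fail-safe number commonly attributed to Rosenthal, which is not the weighted method proposed by Rosenberg (2005). Assuming that the unpublished studies average a z-score of zero, the number of such studies needed to bring the combined (Stouffer) z-score of k studies down to the one-tailed critical value is N = (ΣZ)² / z_α² − k. The function name and the example z-scores below are illustrative assumptions.

```python
from statistics import NormalDist


def rosenthal_fail_safe_n(z_scores, alpha=0.05):
    """Unweighted fail-safe number (Rosenthal's formulation).

    z_scores: one z-score per study included in the meta-analysis.
    alpha:    one-tailed significance level to which the combined
              result would have to be lowered.
    Returns the number of additional null studies (mean z of zero)
    needed, floored at zero when the combined result is already
    non-significant.
    """
    k = len(z_scores)
    if k == 0:
        raise ValueError("at least one study is required")
    z_crit = NormalDist().inv_cdf(1.0 - alpha)  # about 1.645 for alpha = 0.05
    n = (sum(z_scores) ** 2) / (z_crit ** 2) - k
    return max(0.0, n)


if __name__ == "__main__":
    # Hypothetical z-scores from four studies.
    print(round(rosenthal_fail_safe_n([2.0, 2.5, 1.8, 3.0]), 1))
```

A small fail-safe number relative to the number of included studies suggests that a modest amount of unpublished null work could overturn the result.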

Some graphic evaluation methods frequently appear in systematic reviews, such as the forest plot, the Galbraith plot, the L’Abbé plot, and the box plot; but they are designed for assessing study variation and heterogeneity, not specifically for assessing publication bias (Bax et al 2008).

5. Multiple initiatives suggested for reducing publication bias

About 50 years have passed since publication bias was first noted (Sterling 1959), yet little has changed. A significant amount of the research conducted never appears in the public forum. It has been estimated that less than half of all studies initially presented as summaries or abstracts at professional meetings are subsequently published as peer-reviewed full-text articles (Scherer et al 1994; Scherer et al 2007), although various factors may account for such a low publication rate.

Publication bias influences the conclusion of systematic reviews; therefore it should be addressed appropriately when there is a suspicion. However, the methods used for dealing with publication bias in most systematic reviews appear merely as a description of the number of pooled studies, the number of searched databases, or the statistical analysis of heterogeneity. In attempts to address this situation, both the general medical research community and the translational stroke research community have started multiple initiatives.

5.1. Initiatives in the general medical research community

Some journals have implemented guidelines, such as the QUOROM statement and the MOOSE guidelines, to ensure that publication bias is dealt with. The Quality of Reporting of Meta-analyses (QUOROM) statement was suggested for meta-analyses of randomized controlled trials (Moher et al 2000a; Moher et al 2000b). The Meta-analysis of Observational Studies in Epidemiology (MOOSE) guidelines, which take the form of a checklist, were suggested for meta-analyses of observational studies (Stroup et al 2000). Some journals implement a general requirement to address publication bias using an appropriate method when necessary. More detailed descriptions of how to deal with publication bias are provided in the Cochrane Handbook for Systematic Reviews of Interventions.

In an effort to counterbalance publication bias in the medical literature, the Journal of Negative Results in BioMedicine (http://www.jnrbm.com/) was started in 2004 to accept manuscripts with unexpected, controversial, provocative and/or negative results or conclusions.

Although it was not established specifically to address publication bias, ClinicalTrials.gov (http://clinicaltrials.gov/) provides a global view of clinical trials. ClinicalTrials.gov is a registry of federally and privately supported clinical trials conducted in the United States and around the world. It currently contains 66,791 trials sponsored by the National Institutes of Health, other federal agencies, and private industry. Studies listed in the database are conducted in all 50 States and in 161 countries. A similar registry for preclinical trials would be expected to provide a global view of preclinical trials, to counter publication bias, and to help with the selection of candidates for clinical trials.

5.2. Initiatives in the translational stroke research community

As in meta-analyses of cardiovascular diseases (Palma and Delgado-Rodriguez 2005), publication bias is rarely assessed in systematic reviews of preclinical and clinical trials for ischemic stroke treatment. Although methodological details and recommendations for assessing publication bias are provided in the Cochrane Handbook for Systematic Reviews of Interventions (http://www.cochrane-handbook.org/), none of the 35 Cochrane systematic reviews (http://www.cochrane.org/reviews/en/subtopics/93.html) on medical therapies for ischemic stroke used the widely accepted methods, such as funnel plots or fail-safe N. Only the heterogeneity of studies has been assessed in these reviews. It is a good sign that a few other reviews have addressed publication bias in detail (Perel et al 2007; van der Worp et al 2007).

Because the bias that appears in published materials may also come from the experimental process, efforts to reduce experimental bias may help to reduce the observed bias in the literature. More details about reducing experimental bias have been described in the Good Lab Practice Guidelines (GLPG) (Macleod et al 2008a). Measures such as random allocation, random sampling, blind assessment, and standardized operational procedures can be used to reduce experimental bias. The GLPG, together with the STAIR criteria, if followed strictly, may help to reduce the inflated efficacy in preclinical trials for the treatment of acute ischemic stroke. However, almost ten years have passed since the first version of the STAIR criteria (Stroke Therapy Academic Industry Roundtable 1999), yet the efficacy discrepancy between preclinical and clinical stroke trials does not seem to have narrowed. An independent registration and validation system may help to reduce bias from both the publication process and the experimental process. A different approach is necessary to reduce the impact of this problem (Hall et al 2007).

The CAMARADES collaboration has been active in addressing publication bias in experimental stroke. Its areas of focus include identifying potential sources of bias in animal work, developing recommendations for improvements in the design and reporting of animal studies, and developing better meta-analysis methodologies for animal studies. The CAMARADES group will soon launch an on-line facility for the registration of animal studies in stroke, with enough detail to help systematic reviewers contact authors of unpublished studies. This would be a good start for preclinical stroke trial registration, although more work will be needed to establish a world-wide registry. Research sponsors and governmental authorities may need to become involved in promoting mandatory registration.

6. How does JESTM address publication bias?

The goal of the Journal of Experimental Stroke & Translational Medicine (JESTM, www.jestm.com) is to foster new concepts and to reflect the status of preclinical trials in the field of experimental and translational stroke research. Therefore, manuscripts with controversial/provocative ideas and negative results are encouraged equally.

In order to increase the quality of our published content and to reduce publication bias, we require authors of review manuscripts to be aware of publication bias and to address it appropriately. We require authors of research studies to conform to the Good Lab Practice Guidelines for reducing experimental bias.

JESTM is operated with support from enthusiastic professional volunteers. Because it is purely an online journal, its operational cost is considerably lower. All articles in JESTM are free to access, and authors are not charged publication fees. Articles will be made available online immediately after completion of the requisite review process.

Acknowledgments

This work was supported by NIH grants 5T32NS051147-02 and NS 21076-24. The author appreciates and acknowledges Dr. Levine and Dr. Winn at Mount Sinai School of Medicine for their contributions to revising this paper.

References

- Alasbali T, Smith M, Geffen N, Trope GE, Flanagan JG, Jin Y, Buys YM. Discrepancy between results and abstract conclusions in industry- vs nonindustry-funded studies comparing topical prostaglandins. Am J Ophthalmol. 2009;147:33–8. e2. doi: 10.1016/j.ajo.2008.07.005.

- Barendse W. The strike rate index: a new index for journal quality based on journal size and the h-index of citations. Biomed Digit Libr. 2007;4:3. doi: 10.1186/1742-5581-4-3.

- Bax L, Ikeda N, Fukui N, Yaju Y, Tsuruta H, Moons KG. More Than Numbers: The Power of Graphs in Meta-Analysis. Am J Epidemiol. 2008 doi: 10.1093/aje/kwn340.

- Blackwell SC, Thompson L, Refuerzo J. Full Publication of Clinical Trials Presented at a National Maternal-Fetal Medicine Meeting: Is There a Publication Bias? Am J Perinatol. 2009 doi: 10.1055/s-0029-1220786.

- Boldt J, Haisch G, Maleck WH. Changes in the impact factor of anesthesia/critical care journals within the past 10 years. Acta Anaesthesiol Scand. 2000;44:842–9. doi: 10.1034/j.1399-6576.2000.440710.x.

- Chalmers TC, Frank CS, Reitman D. Minimizing the three stages of publication bias. JAMA. 1990;263:1392–5.

- Dickersin K. The existence of publication bias and risk factors for its occurrence. JAMA. 1990;263:1385–9.

- Egger M, Davey Smith G, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997a;315:629–34. doi: 10.1136/bmj.315.7109.629.

- Egger M, Zellweger-Zahner T, Schneider M, Junker C, Lengeler C, Antes G. Language bias in randomised controlled trials published in English and German. Lancet. 1997b;350:326–9. doi: 10.1016/S0140-6736(97)02419-7.

- Eloubeidi MA, Wade SB, Provenzale D. Factors associated with acceptance and full publication of GI endoscopic research originally published in abstract form. Gastrointest Endosc. 2001;53:275–82. doi: 10.1016/s0016-5107(01)70398-7.

- Formann AK. Estimating the proportion of studies missing for meta-analysis due to publication bias. Contemp Clin Trials. 2008;29:732–9. doi: 10.1016/j.cct.2008.05.004.

- Goldbeck-Wood S. Evidence on peer review-scientific quality control or smokescreen? BMJ. 1999;318:44–5. doi: 10.1136/bmj.318.7175.44.

- Hall R, de Antueno C, Webber A. Publication bias in the medical literature: a review by a Canadian Research Ethics Board. Can J Anaesth. 2007;54:380–8. doi: 10.1007/BF03022661.

- Hopewell S, Loudon K, Clarke MJ, Oxman AD, Dickersin K. Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database Syst Rev. 2009:MR000006. doi: 10.1002/14651858.MR000006.pub3.

- Irwig L, Macaskill P, Berry G, Glasziou P. Bias in meta-analysis detected by a simple, graphical test. Graphical test is itself biased. BMJ. 1998;316:470. author reply -1.

- Joyce J, Rabe-Hesketh S, Wessely S. Reviewing the reviews: the example of chronic fatigue syndrome. JAMA. 1998;280:264–6. doi: 10.1001/jama.280.3.264.

- Lexchin J, Bero LA, Djulbegovic B, Clark O. Pharmaceutical industry sponsorship and research outcome and quality: systematic review. BMJ. 2003;326:1167–70. doi: 10.1136/bmj.326.7400.1167.

- Liss H. Publication bias in the pulmonary/allergy literature: effect of pharmaceutical company sponsorship. Isr Med Assoc J. 2006;8:451–4.

- Littner Y, Mimouni FB, Dollberg S, Mandel D. Negative results and impact factor: a lesson from neonatology. Arch Pediatr Adolesc Med. 2005;159:1036–7. doi: 10.1001/archpedi.159.11.1036.

- Machan C, Ammenwerth E, Bodner T. Publication bias in medical informatics evaluation research: is it an issue or not? Stud Health Technol Inform. 2006;124:957–62.

- Macleod MM, Fisher M, O’Collins V, Sena ES, Dirnagl U, Bath PM, Buchan A, van der Worp HB, Traystman R, Minematsu K, Donnan GA, Howells DW. Good Laboratory Practice. Preventing Introduction of Bias at the Bench. Stroke. 2008a doi: 10.1161/STROKEAHA.108.525386.

- Macleod MR, O’Collins T, Horky LL, Howells DW, Donnan GA. Systematic review and metaanalysis of the efficacy of FK506 in experimental stroke. J Cereb Blood Flow Metab. 2005a;25:713–21. doi: 10.1038/sj.jcbfm.9600064.

- Macleod MR, O’Collins T, Horky LL, Howells DW, Donnan GA. Systematic review and meta-analysis of the efficacy of melatonin in experimental stroke. J Pineal Res. 2005b;38:35–41. doi: 10.1111/j.1600-079X.2004.00172.x.

- Macleod MR, van der Worp HB, Sena ES, Howells DW, Dirnagl U, Donnan GA. Evidence for the efficacy of NXY-059 in experimental focal cerebral ischaemia is confounded by study quality. Stroke. 2008b;39:2824–9. doi: 10.1161/STROKEAHA.108.515957.

- Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the Quality of Reports of Meta-Analyses of Randomised Controlled Trials: The QUOROM Statement. Onkologie. 2000a;23:597–602. doi: 10.1159/000055014.

- Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the quality of reports of meta-analyses of randomised controlled trials: the QUOROM statement. QUOROM Group. Br J Surg. 2000b;87:1448–54. doi: 10.1046/j.1365-2168.2000.01610.x.

- O’Collins VE, Macleod MR, Donnan GA, Horky LL, van der Worp BH, Howells DW. 1,026 experimental treatments in acute stroke. Ann Neurol. 2006;59:467–77. doi: 10.1002/ana.20741.

- Olson CM, Rennie D, Cook D, Dickersin K, Flanagin A, Hogan JW, Zhu Q, Reiling J, Pace B. Publication bias in editorial decision making. JAMA. 2002;287:2825–8. doi: 10.1001/jama.287.21.2825.

- Opthof T, Coronel R, Janse MJ. Submissions and impact factor 1997–2001: focus on Sweden. Cardiovasc Res. 2001a;51:202–4. doi: 10.1016/s0008-6363(01)00355-8.

- Opthof T, Coronel R, Janse MJ. Impact factor of Cardiovascular Research in 2000: all time high! Cardiovasc Res. 2001b;50:1–2. doi: 10.1016/s0008-6363(01)00219-x.

- Opthof T, Coronel R, Janse MJ. The significance of the peer review process against the background of bias: priority ratings of reviewers and editors and the prediction of citation, the role of geographical bias. Cardiovasc Res. 2002a;56:339–46. doi: 10.1016/s0008-6363(02)00712-5.

- Opthof T, Coronel R, Janse MJ. Submissions, impact factor, reviewer’s recommendations and geographical bias within the peer review system (1997–2002): focus on Germany. Cardiovasc Res. 2002b;55:215–9. doi: 10.1016/s0008-6363(02)00500-x.

- Palma S, Delgado-Rodriguez M. Assessment of publication bias in meta-analyses of cardiovascular diseases. J Epidemiol Community Health. 2005;59:864–9. doi: 10.1136/jech.2005.033027.

- Peleg R, Shvartzman P. Where should family medicine papers be published - following the impact factor? J Am Board Fam Med. 2006;19:633–6. doi: 10.3122/jabfm.19.6.633.

- Perel P, Roberts I, Sena E, Wheble P, Briscoe C, Sandercock P, Macleod M, Mignini LE, Jayaram P, Khan KS. Comparison of treatment effects between animal experiments and clinical trials: systematic review. BMJ. 2007;334:197. doi: 10.1136/bmj.39048.407928.BE.

- Perlis RH, Perlis CS, Wu Y, Hwang C, Joseph M, Nierenberg AA. Industry sponsorship and financial conflict of interest in the reporting of clinical trials in psychiatry. Am J Psychiatry. 2005;162:1957–60. doi: 10.1176/appi.ajp.162.10.1957.

- Persaud R. Misleading meta-analysis. “Fail safe N” is a useful mathematical measure of the stability of results. BMJ. 1996;312:125. doi: 10.1136/bmj.312.7023.125.

- Rosenberg MS. The file-drawer problem revisited: a general weighted method for calculating fail-safe numbers in meta-analysis. Evolution. 2005;59:464–8.

- Roussakis AG, Stamatelopoulos A, Balaka C. What does impact factor depend upon? J Buon. 2007;12:415–8.

- Scherer RW, Dickersin K, Langenberg P. Full publication of results initially presented in abstracts. A meta-analysis. JAMA. 1994;272:158–62.

- Scherer RW, Langenberg P, von Elm E. Full publication of results initially presented in abstracts. Cochrane Database Syst Rev. 2007:MR000005. doi: 10.1002/14651858.MR000005.pub3.

- Sharp DW. What can and should be done to reduce publication bias? The perspective of an editor. JAMA. 1990;263:1390–1.

- Soeken KL, Sripusanapan A. Assessing publication bias in meta-analysis. Nurs Res. 2003;52:57–60. doi: 10.1097/00006199-200301000-00009.

- Sood A, Knudsen K, Sood R, Wahner-Roedler DL, Barnes SA, Bardia A, Bauer BA. Publication bias for CAM trials in the highest impact factor medicine journals is partly due to geographical bias. J Clin Epidemiol. 2007;60:1123–6. doi: 10.1016/j.jclinepi.2007.01.009.

- Sterling TD. Publication Decisions and Their Possible Effects on Inferences Drawn from Tests of Significance--Or Vice Versa. Journal of the American Statistical Association. 1959;54:30–4.

- Stroke Therapy Academic Industry Roundtable. Recommendations for Standards Regarding Preclinical Neuroprotective and Restorative Drug Development. Stroke. 1999;30:2752–8. doi: 10.1161/01.str.30.12.2752.

- Stroup DF, Berlin JA, Morton SC, Olkin I, Williamson GD, Rennie D, Moher D, Becker BJ, Sipe TA, Thacker SB. Meta-analysis of observational studies in epidemiology: a proposal for reporting. Meta-analysis Of Observational Studies in Epidemiology (MOOSE) group. JAMA. 2000;283:2008–12. doi: 10.1001/jama.283.15.2008.

- Stuck AE, Rubenstein LZ, Wieland D. Bias in meta-analysis detected by a simple, graphical test. Asymmetry detected in funnel plot was probably due to true heterogeneity. BMJ. 1998;316:469. author reply 70–1.

- Sutton AJ, Song F, Gilbody SM, Abrams KR. Modelling publication bias in meta-analysis: a review. Stat Methods Med Res. 2000;9:421–45. doi: 10.1177/096228020000900503.

- van der Worp HB, Sena ES, Donnan GA, Howells DW, Macleod MR. Hypothermia in animal models of acute ischaemic stroke: a systematic review and meta-analysis. Brain. 2007;130:3063–74. doi: 10.1093/brain/awm083.

- Vandenbroucke JP. Bias in meta-analysis detected by a simple, graphical test. Experts’ views are still needed. BMJ. 1998;316:469–70. author reply 70-1.

- Yousefi-Nooraie R, Shakiba B, Mortaz-Hejri S. Country development and manuscript selection bias: a review of published studies. BMC Med Res Methodol. 2006;6:37. doi: 10.1186/1471-2288-6-37.