Abstract

This paper concerns task-based image quality assessment for the task of discriminating between two classes of images. We address the problem of estimating two widely-used detection performance measures, SNR and AUC, from a finite number of images, assuming that the class discrimination is performed with a channelized Hotelling observer. In particular, we investigate the advantage that can be gained when either (i) the means of the signal-absent and signal-present classes are both known, or (ii) when the difference of class means is known. For these two scenarios, we propose uniformly minimum variance unbiased estimators of SNR2, derive the corresponding sampling distributions and provide variance expressions. In addition, we demonstrate how the bias and variance for the related AUC estimators may be calculated numerically by using the sampling distributions for the SNR2 estimators. We find that for both SNR2 and AUC, the new estimators have significantly lower bias and mean-square error than the traditional estimator, which assumes that the class means, and their difference, are unknown.

I. Introduction

Task-based assessment of image quality provides a pragmatic paradigm for objective evaluation and comparison of image reconstruction algorithms and imaging systems [1]. One task that is frequently of interest is the detection of a specified object in an image. For example, an important task in radiology is the discrimination of two classes of images: images with a lesion present and with no lesion present.

Lesion detectability may be appraised with both human observers and mathematical model observers [1]. However, human observers come with prominent difficulties. They are highly variable, slow, costly, and subject to fatigue, to the extent that they become impractical, especially for imaging system optimization, as noted by Park et al. [2]. By contrast, mathematical model observers [1] can be implemented efficaciously using computers. Thus, they offer an attractive alternative to human observers for task-based assessment of image quality. One type of model observer which has been given significant attention in medical imaging is the channelized Hotelling observer (CHO) [1]. Specifically, CHO methodology for image quality assessment has been applied in nuclear medicine, e.g., [3]–[8] and in x-ray computed tomography (CT) [9]–[12].

To assess the capability of a given model observer to detect lesions, summary measures of observer performance need to be estimated. Two popular measures of observer performance are the observer signal-to-noise ratio (SNR) and the area under the receiver operating characteristic curve, denoted as AUC [1] (see section II-C). In most situations, it is not possible to calculate SNR and AUC exactly, and they must be estimated from a finite collection of images. Therefore, it is important to understand the small-sample statistical properties of the estimators which are used. We note that two main approaches may be considered for the estimation of SNR and AUC: (i) an SNR-based approach, in which the SNR is first estimated, and then the AUC is obtained from the SNR estimate [1]; (ii) an AUC-based approach, in which the AUC is first estimated, and then the SNR is obtained from the AUC estimate [1]. Under conditions of normality, both approaches are valid because SNR and AUC are functions of each other [1]. Here, we focus on the SNR-based approach. Some researchers have investigated direct estimation of the AUC, e.g., [13]–[15]; these works rely on the Mann-Whitney U statistic.

Note that two main estimation strategies may be selected for the two approaches described above. In the first strategy, all available images are used together to estimate the desired figure of merit [1, p. 972]. In the second strategy, the images are divided into two groups, with one group being used to define (aka train) the observer whereas the second group is used to estimate the performance of the trained observer (aka testing the observer) [1, p. 973]. The second strategy has the advantage of yielding a negative (conservative) bias in the estimated performance [16], [17]. In this paper, we adopt the first strategy, and we will see later that negative biases may also be achieved in this case, provided that the class means, or their difference, are known.

In general, deriving the small-sample properties of estimators is difficult. However, analytical results are known for some cases. For example, if SNR2 for a CHO is estimated using a scalar multiple of the two-sample Hotelling T2 statistic, then the sampling distribution is known for normally distributed measurements [18, p. 216]; see section IV-A. Also, results pertaining to direct AUC estimation via the nonparametric Mann-Whitney U statistic may be found in [15]. When exact analytical results are not readily available, Taylor series approximations may be beneficial [19], [20], or one may resort to analysis based on Monte Carlo simulation and resampling techniques [16], [17], [21]–[27].

As mentioned by Barrett and Myers [1, p. 972], there is potential to improve the estimation accuracy of SNR2 for a CHO if prior knowledge of the mean images with and without a lesion present is incorporated into the estimation procedure. However, to our knowledge, and according to Barrett and Myers [1, p. 973], the statistical properties of such estimators have not been investigated. We have examined this potential and report on our findings here.

Specifically, we propose uniformly minimum variance unbiased (UMVU) estimators of SNR2 for a CHO in the situation when the mean images for the lesion present and lesion absent cases are known, and also when only the difference of the mean images is known. We present analytical results for the sampling distributions of these estimators and provide expressions for their variance. Using the sampling distributions of the SNR2 estimators, we illustrate how the bias and variance for the related AUC estimators may be numerically evaluated, and we compare the results with a traditional estimator which assumes that the class means, and their difference, are unknown. For expositional clarity, all mathematical proofs are deferred to the appendices.

II. Background

In this section, we set our notation and review several necessary concepts. First, some probability distributions that will be used later in the paper are introduced. Afterwards, the classification task and the channelized Hotelling observers which we consider in this paper are covered. Next, two common summary measures for model observer performance, SNR and AUC, are reviewed. The remainder of the section discusses calculation of moments for AUC estimators. Throughout the paper, we write vectors using boldface and denote the transpose of vectors and matrices with the superscript T, e.g., xT is the transpose of the vector x.

A. Some Probability Distributions

We assume that the reader is familiar with both the multivariate normal distribution and the univariate noncentral χ2 distribution, e.g., see [18], [28]. A p×1 random vector X ∈ ℝp following a multivariate normal distribution with mean μ and covariance matrix Σ is denoted X ~ Np(μ, Σ). A random variable X ∈ ℝ following a noncentral χ2 distribution with ν degrees of freedom and noncentrality parameter, λ, is denoted . Recall that when λ = 0, the noncentral χ2 distribution becomes the central χ2 distribution.

1) Inverted Gamma

The inverted gamma distribution arises as the distribution of the reciprocal of a gamma variate, and has two positive parameters, α and β. A random variable X is said to have an inverted gamma distribution if its probability density function (pdf) is of the form [29]

| (1) |

when x > 0, and fX(x) = 0 otherwise. Just as the central χ2 distribution is a special case of the gamma distribution, the inverted central χ2 distribution is a special case of the inverted gamma distribution. In particular, the reciprocal of a central χ2 random variable with ν degrees of freedom is an inverted gamma random variable with α = ν/2 and β = 1/2. An inverted gamma random variable X will be denoted as X ~ IG(α, β). The mean and variance of an inverted gamma deviate are [29]

| (2) |

and

| (3) |

2) Noncentral F

The noncentral F distribution [18], [28]–[30] appears as the distribution of the ratio of a noncentral χ2 random variable to an independent, central χ2 random variable. Specifically, if and X and Y are independent, then the random variable F = (X/ν1)/(Y/ν2) has a noncentral F distribution with ν1 and ν2 degrees of freedom and noncentrality parameter, λ. In this case, we write . An expression for the pdf of the noncentral F may be found in [28].

3) Wishart

The Wishart distribution [18], [28], [31] is a matrix variate generalization of the χ2 distribution and it emerges naturally as the distribution of the sample covariance matrix for multivariate normal measurements [18, p.82], [31, p. 92–93]. Let Z1, Z2, … Zn each be independently distributed according to Np(0, Σ) and consider the p × p random matrix . The matrix W is said to have a Wishart distribution, written as W ~ Wp(n, Σ), with n degrees of freedom and p × p positive definite scale matrix Σ. When n ≥ p, W is invertible and expressions for the pdf of W may be found in [18], [28], [31]. When n < p, W is singular, and the pdf does not exist in the conventional sense, but the distribution is nonetheless defined [18, p. 85].

4) Inverted Wishart

The inverted Wishart distribution originates as the distribution of the inverse of a Wishart distributed random matrix, and it is the matrix variate generalization of the inverted gamma distribution [31, p. 111]. A p × p random matrix V which is distributed as an inverted Wishart with m degrees of freedom and a p × p positive definite parameter matrix, Ψ, will be written as V ~ IWp(m, Ψ). The inverted Wishart distribution is defined if m > 2p and is undefined otherwise. An expression for the pdf is given in [18], [31]. Lemma 4 in appendix A clarifies the relationship between Wishart and Inverted Wishart matrices. According to this lemma, when n ≥ p, S ~ Wp(n, Σ) if and only if S−1 ~ IWp(n + p + 1, Σ−1).

B. Channelized Hotelling Observers

We consider a binary classification task in which the goal is to discriminate between two classes of noisy images. The two classes will be denoted as class 1 and class 2, respectively. When the classification task corresponds to lesion detection, we assume that class 1 consists of images with no lesion present, and that class 2 consists of images with a lesion present.

For each noisy image realization, a model observer generates a statistic, t, which is compared to a threshold, tc, in order to classify the image as belonging to either class 1 or class 2. Specifically, if t > tc, the observer indicates that the image belongs to class 2, otherwise, the observer indicates that the image belongs to class 1 [1].

Channel filters are often added to model observers in order to reduce the dimensionality of the image data and to model human performance. Suppose that the noisy image realization is represented as a m × 1 column vector, g. We denote the number of channels by p, where usually, p is much smaller than m. The weights that make up each channel are organized into a column of a m × p channel matrix, designated as U. A channelized model observer applies the channel matrix to the image to get a p×1 output vector, v, according to v:= UTg. Note that a model observer without channels corresponds to the case when the channel matrix is the identity, i.e., U = I and p = m.

We denote the means of the channel outputs over class 1 and class 2 by μi, for i = 1, 2 respectively, and write the difference of these means as Δμ:= μ2 − μ1. Also, we write the covariance matrices for v over the two classes as Σi, for i = 1, 2 respectively, and designate their average as Σ̄:= 0.5(Σ1 + Σ2).

One type of model observer is the Channelized Hotelling observer (CHO) [1], which is the focus of this paper. This observer computes the observer statistic as the inner product of v with a p × 1 template, defined as wCHO:= Σ̄−1 Δμ, i.e., .

C. Observer Performance Measures

One measure of an observer’s performance is its signal-to-noise ratio (SNR), defined as the difference of the class means for t divided by the pooled standard deviation [1, p. 819]. The SNR is a good measure of class separability when t is normally distributed for each class, higher values of the observer SNR indicating greater class separation [1, p. 819]. The square of the SNR for a CHO may be computed as [1, p. 967]

| (4) |

The performance of an observer may also be characterized by its receiver operating characteristic (ROC) curve, which plots the true positive fraction (TPF) versus the false positive fraction (FPF) for different values of the discrimination threshold [1, p. 814–815]. A useful summary measure for the overall performance of an observer is the area under the ROC curve, denoted as the AUC. The AUC ranges from 0.5 to 1, where higher values of the AUC indicate better class discrimination. The AUC may be interpreted as the average TPF over all FPF values. Another useful interpretation of the AUC is as the probability that the observer makes a correct classification when a randomly selected pair of images from class 1 and class 2 are compared [32, p. 77–78], [1, p. 823]. If the observer statistic, t, is normally distributed for each class, then the AUC may be computed from the SNR as [1, p. 819]

| (5) |

where erf(z) is the conventional error function. When t is computed as a linear combination of channel outputs, the observer statistic is typically well-approximated by normal distributions for each class [1, p. 824].

D. Calculation of Moments for AUC estimators

Given a generic estimator of SNR2, denoted as , we may define an estimator for the AUC by substituting the square root of this generic estimator into equation (5). We denote the resulting AUC estimator as .

When the sampling distribution for is known, we may use it to calculate the kth raw moment of by numerically evaluating the integral

| (6) |

where fX(x) is the pdf for . Therefore, we can compute the mean and variance of by evaluating equation (6) for k = 1, 2.

III. Estimation of SNR2 with Known Class Means or Known Difference of Class Means

In this section, estimation of SNR2 is considered for two scenarios. In the first scenario, the means for classes 1 and 2 are assumed to be known. In the second scenario, only the difference of the class means is assumed to be known. We introduce one estimator for scenario 1 and two different estimators for scenario 2. We assume that we have n independent realizations of channel outputs from class 1, , and n independent realizations of channel outputs from class 2, . Also, we assume that the channel outputs are normally distributed with equal class covariance matrices, i.e., and for i = 1, 2, … n. In this case, Σ̄ = Σ. The normality is a reasonable assumption in many CHO applications thanks to the multivariate central limit theorem, and the equal covariance assumption is easily met for low-contrast signals. After defining each estimator, we provide an analytical expression for the estimator’s sampling distribution and discuss its properties.

A. Scenario 1: Known Class Means

First, we consider the situation when the class means μ1 and μ2 are known, and the class covariance matrix, Σ, is unknown.

1) Estimator Definition

In this scenario, unbiased estimates for the covariance matrices of class 1 and class 2 are [18, p. 17]

| (7) |

for k = 1, 2, respectively. Let Ŝ:= 0.5(Ŝ1 + Ŝ2). From Lemmas 2 and 8(i) in appendix A, it follows that ((2n − p − 1)/(2n))Ŝ−1 is an unbiased estimator for Σ−1 if n > (p + 1)/2. Substituting this estimator for Σ−1 into equation (4) yields an estimator for SNR2, defined as

| (8) |

for n > (p + 1)/2.

2) Sampling Distribution

For normally distributed samples, the sampling distribution for θ̂1 may be expressed as an inverted gamma distribution. We state this result in the following theorem, which is proved in appendix A.

Theorem 1

Suppose that θ̂1 is computed from independent samples and from classes 1 and 2, respectively, where i = 1, 2, … n. Then

for n > (p + 1)/2.

3) Estimator Properties

Since the sampling distribution for θ̂1 is an inverted gamma distribution, one may easily find expressions for the mean and variance of θ̂1 by using Theorem 1 together with equations (2) and (3). We write these expressions in the following corollary.

Corollary 1

If θ̂1 is computed from independent, normally distributed samples as stated in Theorem 1, then θ̂1 is an unbiased estimator of SNR2, i.e., E[θ̂1] = SNR2 for n > (p+1)/2, and its variance is

Also, we have the pleasing result that θ̂1 is the uniformly minimum variance unbiased (UMVU) estimator for SNR2 when the class means are known. For reference, we state this result as a theorem; see appendix B for a proof.

Theorem 2

Suppose that μ1 and μ2 are known, and that Σ is unknown. If θ̂1 is computed from independent, normally distributed samples as stated in Theorem 1 with n > (p+1)/2, then θ̂1 is the unique UMVU estimator of SNR2.

B. Scenario 2: Known Difference of Class Means

Next, we examine the case for which the difference of the class means, Δμ, is known but μ1, μ2, and Σ are unknown. We consider two estimators of SNR2 for this scenario.

1) Estimator Definitions

The first estimator which we consider for scenario 2 incorporates knowledge of Δμ into the estimation of Σ. The conventional unbiased estimators for the means of classes 1 and 2 are [28, p. 77]

| (9) |

for k = 1, 2, respectively. Using equations (9) together with our knowledge of Δμ, we define unbiased estimators for the means of classes 1 and 2 as

| (10) |

and

| (11) |

Using these estimators for the class means, we define estimators for the covariance matrices of classes 1 and 2 as

| (12) |

for k = 1, 2, respectively. Next, we define a pooled estimator of Σ as S̃:= 0.5(S̃1 + S̃2). From Lemmas 2 and 8(ii) in appendix A, it follows that ((2n − p − 2)/(2n − 1))S̃−1 is an unbiased estimator for Σ−1 if n > (p + 2)/2. This suggests the following estimator for SNR2:

| (13) |

which is defined for n > (p + 2)/2.

The second estimator which we consider in this scenario does not use knowledge of Δμ for estimation of Σ. The conventional unbiased estimators for the covariance matrices of classes 1 and 2 have the form [28, p. 77]

| (14) |

for k = 1, 2, respectively. Define a pooled estimator of Σ as S̄:= 0.5(S1 + S2). From Lemmas 2 and 8(iii) in appendix A, it follows that ((2n − p − 3)/(2n − 2)))S̄−1 is an unbiased estimator for Σ−1 if n > (p + 3)/2. This result motivates an estimator for SNR2, defined as

| (15) |

for n > (p + 3)/2.

2) Sampling Distributions

For normally distributed samples, the sampling distributions for θ̂2 and θ̂3 may be expressed as inverted gamma distributions. We state these results below. Proofs are provided in appendix A.

Theorem 3

Suppose that θ̂2 is computed from independent samples and from classes 1 and 2, respectively, where i = 1, 2, … n. Then

for n > (p + 2)/2.

Theorem 4

Suppose that θ̂3 is computed from independent samples and from classes 1 and 2, respectively, where i = 1, 2, … n. Then

for n > (p + 3)/2.

3) Estimator Properties

Similar to section III-A3, expressions for the mean and variance of θ̂2 and θ̂3 may be easily found by using equations (2) and (3) together with Theorems 3 and 4, respectively. These results are collected below.

Corollary 2

If θ̂2 is computed from independent, normally distributed samples as stated in Theorem 3, then θ̂2 is an unbiased estimator of SNR2, i.e., E[θ̂2] = SNR2 for n > (p+2)/2, and its variance is

Corollary 3

If θ̂3 is computed from independent, normally distributed samples as stated in Theorem 4, then θ̂3 is an unbiased estimator of SNR2, i.e., E[θ̂3] = SNR2 for n > (p+3)/2, and its variance is

As in section III-A3, we have a satisfying optimality property. Namely, if only the difference of class means is known, then θ̂2 is the UMVU estimator for SNR2. This property is stated precisely as the following theorem. A proof may be found in appendix B.

Theorem 5

Suppose that Δμ is known, and that μ1, μ2 and Σ are unknown. If θ̂2 is computed from independent, normally distributed samples as stated in Theorem 3 with n > (p + 2)/2, then θ̂2 is the unique UMVU estimator of SNR2.

From the above results, it is clear that θ̂3 is not the UMVU estimator for SNR2 in scenario 2. However, θ̂3 is the UMVU estimator within the family of unbiased estimators of SNR2 that disregard the knowledge that Δμ is equal to μ2 − μ1. This statement is clarified by the following theorem. We omit the proof, which is similar to that of Theorem 5 in appendix B.

Theorem 6

Suppose that and are independent samples from classes 1 and 2, respectively, where i = 1, 2, … n with n > (p + 3)/2, and where μ1, μ2, and Σ are unknown. Let δ be an arbitrary, known p × 1 column vector, and define the estimator

Then φ̂ is the unique UMVU estimator of δTΣ−1δ.

IV. Computational Evaluations

Now, we further explore the theoretical properties of the estimators introduced in the last section. We will compare these estimators to a conventional estimator for SNR2, which is reviewed first. As before, we assume that there are independent realizations of channel outputs from class 1 and independent realizations of channel outputs from class 2. Also, we will be assuming that p = 40 channels are used. There is nothing special about p = 40, as opposed to another value, such as p = 4, which is typical for isotropic channels. Our choice of p = 40 is motivated by our current interest in evaluating the impact of anisotropic noise on lesion detectability [11].

A. An Estimator for SNR2 When the Class Means and Their Difference Are Unknown

Suppose that the class means, μ1 and μ2, and their difference, Δμ, are unknown. In this situation, the conventional method to construct an estimator for SNR2 is to substitute the sample estimates Δv̄:= v̄2 − v̄1 and S̄ for Δμ and Σ in equation (4). This approach is used in [1, p. 972], and yields an estimator defined as

| (16) |

The sampling distribution for θ̂4 is known in the case when the samples are normally distributed with equal covariance matrices, i.e., and for i = 1, 2, … n. Specifically, [18, p. 216]

| (17) |

where is the non-central F distribution with ν1 = p and ν2 = 2n − p − 1 degrees of freedom and noncentrality parameter, λ = (n/2)SNR2. Expressions for the mean and variance for the noncentral F distribution [18], [30] may be used to derive formulae for the bias and variance of θ̂4. These formulae are [33]

| (18) |

for n > (p + 3)/2, and

| (19) |

for n > (p + 5)/2.

B. Comparison of the SNR2 Estimators

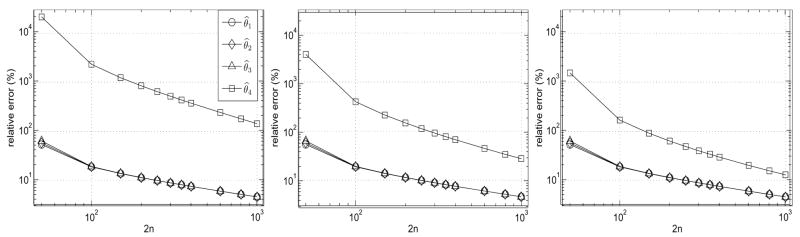

In the first set of comparisons, the relative error for each of the four SNR2 estimators is plotted as a function of the total number of images, 2n; see Figure 1. The plots assume SNR2 values of 0.12837, 0.90987, and 3.2847, corresponding to AUC values of 0.6, 0.75, and 0.9, respectively. Here the relative error (in %) is calculated as the square root of the mean-square error (MSE) divided by the SNR2 value times 100. Using the fact that the MSE is equal to the bias squared plus the variance [34], the MSE for θ̂1, θ̂2, θ̂3 and θ̂4 was calculated using the expressions from Corollaries 1, 2, and 3 and equations (18) and (19).

Fig. 1.

Relative error of SNR2 estimators as a function of the total number of images, 2n. The plots assume p = 40 channels with SNR2 = 0.12837 (left), 0.90987 (middle), and 3.2847 (right), corresponding to AUC values of 0.6, 0.75, and 0.9, respectively. The estimators are denoted as follows: θ̂1 (circles), θ̂2 (diamonds), θ̂3 (triangles), and θ̂4 (squares). The relative error (in %) is calculated as , where MSE denotes the mean-square error.

Comparing θ̂1, θ̂2, and θ̂3 in the plots, we observe that their relative errors are very similar. This is not surprising, since they are all unbiased and their variance expressions are comparable. In addition, we note that for fixed n and p, the relative errors for θ̂1, θ̂2 and θ̂3 are invariant over the different SNR2 values. Although this invariance is only shown in the plots for particular values of n and p, it actually holds for any choice of n and p. This follows from the fact that the standard deviation of each estimator divided by SNR2 only depends on n and p; this quantity is independent of SNR2 and also of the correlation matrix and the class means.

On the other hand, the relative error for θ̂4 decreases as the true SNR2 value increases. Furthermore, we see that the relative error for θ̂4 is significantly larger than that for θ̂1, θ̂2, and θ̂3. For example, in the case of SNR2 = 0.90987 with 2n = 250 total images, the relative error for θ̂1 is 9.8%, compared to a relative error of 112.3% for θ̂4.

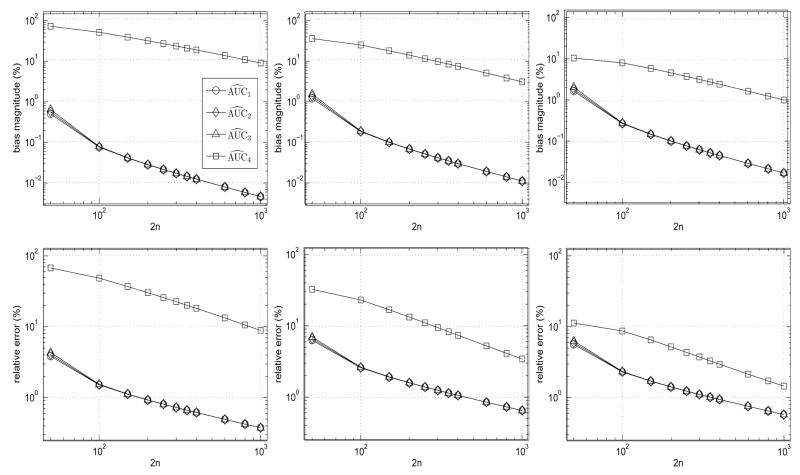

C. Comparison of the AUC Estimators

For the next set of comparisons, we consider the AUC estimators which are found by substituting the square root of θ̂i for SNR in equation (5). For each i ∈ {1, 2, 3, 4}, the resulting AUC estimator will be denoted as .

In Figure 2, the bias magnitude and relative error for each of the AUC estimators are plotted as functions of the total number of images, 2n. The plots compare the estimator behavior for AUC values of 0.6, 0.75, and 0.9. The bias magnitude (in %) is calculated by dividing the absolute value of the bias by the AUC value and multiplying by 100. The relative error (in %) is calculated as the square root of the MSE divided by the AUC value times 100. The bias and MSE for each AUC estimator were computed from the estimator’s mean and variance, which were calculated numerically using the pdf of the corresponding θ̂i and the method described in section II-D. In all cases, the numerical error for the mean and variance results was constrained to be less than 10−6.

Fig. 2.

Bias magnitude and relative error of AUC estimators as a function of the total number of images, 2n. The plots assume p = 40 channels with AUC = 0.6 (left), 0.75 (middle), and 0.9 (right). Bias magnitude is on the top and relative error is on the bottom. The estimators are (circles), (diamonds), (triangles), (squares). For each i ∈ {1, 2, 3, 4}, is calculated by substituting the square root of θ̂i for SNR in equation (5). The bias magnitude (in %) for an AUC estimator, , is calculated as . The relative error (in %) is calculated as , where MSE denotes the mean-square error.

Examining the bias magnitude plots in Figure 2 (top row), we see that the bias magnitudes for , and are very similar, and increase at fixed n with increasing AUC values, but are generally very small. Specifically, for 2n ≥ 100, the bias magnitudes for these three estimators are all less than 0.3%. In contrast, the bias magnitude for decreases with increasing AUC values, but it is always much larger than for the other three estimators. For instance, in the case of 2n = 100, the bias magnitude for has values of 47.1%, 23.1%, and 8.4%, corresponding to AUC values of 0.6, 0.75, and 0.9, respectively. Although not reflected in the plots, the biases for and were negative in all cases and the bias for was positive in all cases.

Directing our attention to the relative error plots in Figure 2 (bottom row), the trends of , and are again seen to be very similar. For 2n ≥ 100, the relative errors for these three estimators are less than 2.7%. On the other hand, the relative error for is significantly larger. For example, when 2n = 100, the relative error for has values of 47.4%, 23.4%, and 8.55% corresponding to AUC values of 0.6, 0.75, and 0.9, respectively. Comparing these relative errors to the bias magnitude values mentioned above, we see that most of the relative error for is due to bias.

V. Discussion and Conclusions

In this work, we were interested in the estimation of classical performance metrics for discrimination between two classes of images. The discrimination was assumed to be performed by a channelized Hotelling observer. Also, we assumed that the estimation is to be carried out using a small number of image realizations, as is typically the case in medical imaging.

Unfortunately, access to a limited number of image realizations implies that the estimated performance values will have some statistical errors, quantified, for example, in terms of estimator bias and variance. Barrett and Myers [1, p. 972] suggested that these statistical errors could potentially be reduced if the class means are assumed to be known. We have investigated this issue thoroughly. Assuming that the channel outputs corresponding to the two classes of images have multivariate normal distributions with equal covariance matrices, we have completely characterized the sampling distributions for three estimators of SNR2 that assume that the class means, or their difference, are known. Using these distributions, we have demonstrated that a significant decrease in estimation error can be achieved when the class means, or their difference, are known.

A useful property of our three SNR2 estimators is the fact that the ratio of each estimator’s standard deviation to its mean is a function of only n and p. This property allows for easy pre-selection of the number of images needed to achieve a desired level of accuracy.

Another interesting property of our three SNR2 estimators is that they yield AUC estimators that have negative bias, just as estimators based on a training/testing paradigm [16], [17]. Thus, we demonstrated that the training/testing paradigm is not the only estimation strategy that can result in negative biases. Moreover, recall from Figure 2 that the biases achieved by our new estimators are fairly small.

Of course, it is natural to ask in which situations the image class means, or their difference, may be found. One situation where this is possible is for image reconstruction from simulated data with the data mean known. For example, in the case of linear reconstruction algorithms (e.g, filtered backprojection (FBP) type), which are still the methods of choice in CT, the image class means may be found by simply reconstructing the means of the data for each class. Similarly, for many types of (nonlinear) iterative reconstruction methods, the image class means may be found to first order by reconstructing the means of the data for each class [35]. This approximation has been found to be fairly accurate for the cases of expectation maximization (EM) [36], [37] and penalized-likelihood reconstruction [35].

When significant uncertainty exists for the class mean estimates, our estimators may not be satisfactory. This could be the case for iterative reconstruction methods if first-order estimates of the means are not accurate enough. This could also be the case for image quality assessment from real data, because the class means of real data are rarely known exactly. For these situations, it would be useful to understand how our estimators are affected by errors in the mean estimates. Moreover, as refined estimates of the class means may possibly be obtained at low cost, we note that knowledge of the distributions for our estimators may pave the way for new attractive estimators that incorporate prior information pertaining to the class means. These topics define interesting areas of further research.

In order to safely apply our results to a particular imaging application, we emphasize that it is necessary to verify that the channel outputs corresponding to the two classes of images are well-approximated by multivariate normal distributions that have the same covariance matrix. We are currently assessing the validity of this assumption for x-ray CT. This assessment will include a comparison of our approach to nonparametric AUC estimation using the Mann-Whitney U statistic.

Last, note that our estimators allow for a significant improvement in efficiency for the computation of detectability maps. These maps plot SNR or AUC as a function of lesion location [1, p. 858] [38]. This is one way to investigate the dependence of lesion detectability on background variation [1, p. 858–859]. In the future, we will evaluate our estimators for the purpose of building detectability maps for commercially-available FBP reconstruction methods in x-ray CT.

Acknowledgments

This work was partially supported by NIH grant R01 EB007236 and by a generous grant from the Ben B. and Iris M. Margolis Foundation. Its contents are solely the responsibility of the authors.

Appendix A

In this appendix, we prove Theorems 1, 3, and 4 which characterize the sampling distributions of θ̂1, θ̂2, and θ̂3. For this task, we first review several useful properties of the Wishart, inverted Wishart, and inverted gamma distributions.

Lemma 1

If A1 ~ Wp(m, Σ) and A2 ~ Wp(n, Σ) are independent, p×p random matrices, then A1 + A2 ~ Wp(m+ n, Σ).

Proof

Lemma 2

Let n > p + 1. If S ~ Wp(n, Σ), then

Proof

Lemma 3

Let n ≥ p. If S ~ Wp(n, Σ) and A is a q × p matrix of rank q ≤ p, then

Proof

Lemma 4

Let n ≥ p. Then S ~ Wp(n, Σ) if and only if S−1 ~ IWp(n + p + 1, Σ−1).

Proof

See [31, Thm 3.4.1, p. 111].

Lemma 5

Let n ≥ p. If S ~ Wp(n, Σ) and A is a q × p matrix of rank q ≤ p, then

Proof

This follows immediately from Lemmas 3 and 4.

Lemma 6

If X ~ IW1(m, Ψ) and m > 2, then .

Proof

Suppose that X ~ IW1(m, Ψ) and m > 2. First, note that since X is an inverted Wishart matrix with p = 1, X and Ψ are scalars. For the p = 1 case, the pdf of the inverted Wishart distribution [31, p. 111] is

| (20) |

Comparing with equation (1), we recognize the above expression as the pdf for an inverted gamma random variable with α = (m − 2)/2 and β = Ψ/2.

Lemma 7

Let c > 0 be an arbitrary constant. If X ~ IG(α, β), then Y = cX ~ IG(α, cβ).

Proof

Suppose that X ~ IG(α, β) and let Y = cX. The pdf for Y may be expressed in terms of the pdf for X by applying the rule for a monotonic transformation of a random variable [39, p. 51], i.e., fY (y) = (1/c)fX(y/c). Using this rule together with equation (1), we may express the pdf for Y as

| (21) |

Hence, Y ~ IG(α, cβ).

Next, we collect some results concerning the distributions of Ŝ, S̃, and S̄.

Lemma 8

Suppose that and are independent samples from classes 1 and 2, respectively, where i = 1, 2, … n. If Ŝ, S̃, and S̄ are computed from these samples according to equations (7), (12), and (14), respectively, then (i) 2nŜ ~ Wp(2n, Σ), (ii) (2n − 1) S̃ ~ Wp (2n − 1, Σ), and (iii) (2n − 2) S̄ ~ Wp(2n − 2, Σ).

Proof

First, observe that Ŝ1 may be expressed as , where . Since Z1, Z2, … Zn are independently distributed according to Np(0, Σ), it follows from the definition of the Wishart distribution [18, p. 82] that nŜ1 ~ Wp(n, Σ). By a similar argument, nŜ2 ~ Wp(n, Σ). Because nŜ1 and nŜ2 are independent, Lemma 1 implies that 2nŜ = nŜ1 + nŜ2 ~ Wp(2n, Σ).

-

From the definition of S̃, we have

(22) Substituting for and for into the definition, and rearranging, we find(23) Inserting the definitions of μ̃ 1and μ̃2 given by equations (10) and (11) into the last equation, and simplifying, yields(24) By a theorem concerning the distribution of the sample covariance matrix [31, Thm 3.3.2, p. 92], the first and second terms in equation (24) are distributed as Wp(n − 1, Σ). Also, using standard results for the sample mean and multivariate normal distribution [18], it follows that . Therefore, by the definition of the Wishart distribution [18, p. 82], we see that the third term in equation (24) has a Wp(1, Σ) distribution.

The first and second terms in equation (24) are clearly independent because they are computed from independent samples. Moreover, for the same reason, we note that the sample means, v̄1 and v̄2, are independent of the second and first terms, respectively. Also, by a standard theorem [31, Thm. 3.3.6, p. 92], v̄1 and v̄2 are independent of the first and second terms, respectively. Hence, the third term is independent of the first and second terms. Because the three terms in equation (24) are independent, we may apply Lemma 1 to conclude that (2n − 1)S̃ ~ Wp(2n − 1, Σ).

By a theorem for the distribution of the sample covariance matrix [31, Thm 3.3.2, p. 92], we have (n−1)S1 ~ Wp(n−1, Σ) and (n−1)S2 ~ Wp(n−1, Σ). In addition, since S1 and S2 are computed from independent samples, they are independent. Thus, Lemma 1 implies that (2n − 2)S̄ = (n − 1)S1 + (n − 1)S2 ~ Wp(2n − 2, Σ).

Now, we are ready to prove Theorems 1, 3, and 4. Recall that each of these estimators is computed from independent samples and from classes 1 and 2, respectively, where i = 1, 2, … n.

Proof of Theorem 1

Assume n > (p+ 1)/2. The definition of θ̂2 in equation (8) may be rewritten as (1/(2n − p − 1)) θ̂1 = ΔμT (2nŜ)−1Δμ. From Lemma 8(i), 2nŜ ~ Wp(2n, Σ). Hence, we may apply Lemma 5 and equation (4) to find that (1/(2n − p − 1)) θ̂1 ~ IW1(2n − p + 3, SNR2). Lemma 6 then yields (1/(2n − p −1)) θ̂1 ~ IG((2n − p + 1)/2, (1/2)SNR2). Finally, application of Lemma 7 gives the desired result.

Proof of Theorem 3

Assume n > (p + 2)/2. The definition of θ̂2 in equation (13) may be rewritten as (1/(2n − p − 2)) θ̂2 = ΔμT [(2n − 1)S̃]−1Δμ. From Lemma 8(ii), (2n − 1)S̃ ~ Wp(2n − 1, Σ). Hence, we may apply Lemma 5 and equation (4) to find that (1/(2n−p−2)) θ̂2 ~ IW1(2n − p + 2, SNR2). Lemma 6 then yields (1/(2n − p − 2)) θ̂2 ~ IG((2n − p)/2, (1/2)SNR2). Finally, application of Lemma 7 gives the desired result.

Proof of Theorem 4

Assume n > (p + 3)/2. The definition of θ̂3 in equation (15) may be rewritten as (1/(2n − p − 3)) θ̂3 = ΔμT [(2n − 2) S̄]−1Δμ. From Lemma 8(iii), (2n − 2)S̄ ~ Wp(2n − 2, Σ). Hence, we may apply Lemma 5 and equation (4) to find that (1/(2n−p−3)) θ̂3 ~ IW1(2n − p + 1, SNR2). Lemma 6 then yields (1/(2n −p −3)) θ̂3 ~ IG((2n − p − 1)/2, (1/2)SNR2). Finally, application of Lemma 7 gives the desired result.

Appendix B

In this appendix, we prove Theorems 2 and 5, which state that θ̂1 and θ̂2 are UMVU estimators. Again, we assume that each of these estimators is computed from independent samples and from classes 1 and 2, respectively, where i = 1, 2, … n.

Proof of Theorem 2

Assume n > (p + 1)/2. Also, suppose that μ1 and μ2 are known, and that Σ is unknown. First, we show that Ŝ is a sufficient statistic for the joint pdf of the sample. The joint pdf of and is

| (25) |

where

| (26) |

Using the additive and cyclic properties of the trace, denoted tr, we may rewrite η as

| (27) |

i.e.,

| (28) |

Hence, the joint pdf has the form

| (29) |

By the Fisher-Neyman factorization theorem [40, Thm. 6.5, p. 35] [41, Prop. IV.C.1, p. 159], Ŝ is a sufficient statistic. Moreover, because the expression in equation (29) has the form of a full rank exponential family [40, p. 23–24], Ŝ is a complete statistic [40, Thm. 6.22, p. 42]. Since (i) Ŝ is a complete sufficient statistic, (ii) θ̂1 is an unbiased estimator of SNR2, and (iii) θ̂1 = E[θ̂1|Ŝ], i.e., θ̂1 is a function of Ŝ only, the Lehmann-Scheffé Theorem [40, Thm. 1.11, p. 88] [41, p. 164] implies that θ̂1 is the unique UMVU estimator of SNR2 in scenario 1.

Proof of Theorem 5

Assume n > (p + 2)/2. Also, suppose that Δμ is known, and that μ1, μ2, and Σ are unknown. The joint pdf of and is given by equations (25) and (26). After lengthy algebra, one may rewrite the joint pdf in the form

| (30) |

By the Fisher-Neyman factorization theorem [40, Thm. 6.5, p. 35] [41, Prop. IV.C.1, p. 159], the statistic

| (31) |

where τ = (2n−1)S̃ −n(v̄1 + v̄2 − Δμ)(v̄1 + v̄2 − Δμ)T, is sufficient. Moreover, because the expression in equation (30) has the form of a full rank exponential family [40, p. 23–24], T is a complete statistic [40, Thm. 6.22, p. 42]. Since (i) T is a complete sufficient statistic, (ii) θ̂2 is an unbiased estimator of SNR2, and (iii) θ̂2 = E[θ̂2|T], i.e., θ̂2 is a function of T only, the Lehmann-Scheffé Theorem [40, Thm. 1.11, p. 88] [41, p. 164] implies that θ̂2 is the unique UMVU estimator of SNR2 in scenario 2.

References

- 1.Barrett HH, Myers KJ. Foundations of Image Science. John Wiley & Sons; 2004. [Google Scholar]

- 2.Park S, Barrett HH, Clarkson E, Kupinski MA, Myers KJ. Channelized-ideal observer using Laguerre-Gauss channels in detection tasks involving non-Gaussian distributed lumpy backgrounds and a Gaussian signal. J Opt Soc Am A. 2007 Dec;24(12):B136–B150. doi: 10.1364/josaa.24.00b136. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Wollenweber S, Tsui B, Lalush D, Frey E, LaCroix K, Gullberg G. Comparison of Hotelling observer models and human observers in defect detection from myocardial SPECT imaging. IEEE Trans Nuc Sci. 1999 Dec;46(6):2098–2103. [Google Scholar]

- 4.Bonetto P, Qi J, Leahy RM. Covariance approximation for fast and accurate computation of channelized Hotelling observer statistics. IEEE Trans Nuc Sci. 2000 Aug;47(4):1567–1572. [Google Scholar]

- 5.Gifford HC, King MA, de Vries DJ, Soares EJ. Channelized Hotelling and human observer correlation for lesion detection in hepatic SPECT imaging. Journ Nucl Med. 2000 Mar;41(3):514–521. [PubMed] [Google Scholar]

- 6.Kim J-S, Miyaoka RS, Harrison RL, Kinahan PE, Lewellen TK. Detectability comparisons of image reconstruction algorithms using the channelized Hotelling observer with bootstrap resampled data. Proc of 2001 IEEE Nucl Sci Symp. 2001:1444–1448. [Google Scholar]

- 7.Zeng GL, Gullberg GT. A channelized-Hotelling-trace collimator design method based on reconstruction rather than projections. IEEE Trans Nuc Sci. 2002 Oct;49(5):2155–2158. [Google Scholar]

- 8.Kim JS, Kinahan PE, Lartizien C, Comtat C, Lewellen TK. A comparison of planar versus volumetric numerical observers for detection task performance in whole-body PET imaging. IEEE Trans Nuc Sci. 2004 Feb;51(1):34–40. [Google Scholar]

- 9.LaRoque SJ, Sidky EY, Edwards DC, Pan X. Evaluation of the channelized Hotelling observer for signal detection in 2D tomographic imaging. Medical Imaging 2007. :651514. ser. Proc. of SPIE, vol. 6515, 2007. [Google Scholar]

- 10.Sidky EY, LaRoque SJ, Pan X. Accurate computation of the Hotelling observer for the evaluation of image reconstruction algorithms in helical, cone-beam CT. Proc of 2007 IEEE Nucl Sci Symp. 2007:3233–3236. [Google Scholar]

- 11.Wunderlich A, Noo F. Evaluation of the impact of tube current modulation on lesion detectability using model observers. Proc. of the 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; August 2008; pp. 2705–2708. [DOI] [PubMed] [Google Scholar]

- 12.Wunderlich A, Noo F. Image covariance and lesion detectability in direct fan-beam x-ray computed tomography. Phys Med Biol. 2008 May;53(10):2471–2493. doi: 10.1088/0031-9155/53/10/002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Bamber D. The area above the ordinal dominance graph and the area below the receiver operating characteristic graph. Journ Math Psych. 1975;12:387–415. [Google Scholar]

- 14.Hanley JA, McNeil BJ. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology. 1982 Apr;143(1):29–36. doi: 10.1148/radiology.143.1.7063747. [DOI] [PubMed] [Google Scholar]

- 15.Yousef WA, Wagner RF, Loew MH. Assessing classifiers from two independent data sets using ROC analysis: A nonparametric approach. IEEE Trans Pattern Analysis and Machine Intelligence. 2006 Nov;28(11):2006. doi: 10.1109/TPAMI.2006.218. [DOI] [PubMed] [Google Scholar]

- 16.Chan HP, Sahiner B. Classifier design for computer-aided diagnosis: Effects of finite sample size on the mean performance of classical and neural network classifiers. Med Phys. 1999 Dec;26(12):2654–2668. doi: 10.1118/1.598805. [DOI] [PubMed] [Google Scholar]

- 17.Sahiner B, Chan HP, Petrick N. Feature selection and classifier performance in computer-aided diagnosis: The effect of finite sample size. Med Phys. 2000 Jul;27(7):1509–1522. doi: 10.1118/1.599017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Muirhead RJ. Aspects of Multivariate Statistical Theory. John Wiley & Sons; 1982. [Google Scholar]

- 19.Fukunaga K, Hayes RR. Effects of sample size in classifier design. IEEE Trans Pattern Analysis and Machine Intelligence. 1989 Aug;11(8):873–885. [Google Scholar]

- 20.Abbey CK, Barrett HH, Eckstein MP. Practical issues and methodology in assessment of image quality using model observers. Medical Imaging 1997. :182–194. ser. Proc. of SPIE, vol. 3032, 1997. [Google Scholar]

- 21.Wagner R, Brown D, J-P G, Myers K, Wear K. Multivariate gaussian pattern classification: Effects of finite sample size and the addition of correlated or noisy features on summary measures of goodnessInformation Processing in Medical Imagingser. In: Barrett H, Gmitro A, editors. Lecture Notes in Computer Science #687. Springer-Verlag; 1993. pp. 507–524. [Google Scholar]

- 22.Wagner R, Chan H-P, Sahiner B, Petrick N, Mossoba J. Finite-sample effects and resampling plans: Applications to linear classifiers in computer-aided diagnosis. Medical Imaging 1997. :467–477. ser. Proc. of SPIE, vol. 3034, 1997. [Google Scholar]

- 23.Beiden SV, Maloof MA, Wagner RF. A general model for finite-sample effects in training and testing of competing classifiers. IEEE Trans Pattern Analysis and Machine Intelligence. 2003 Dec;25(12):1561–1569. [Google Scholar]

- 24.Gallas BD. Variance of the channelized-Hotelling observer from a finite number of trainers and testers. Medical Imaging 2003. :100–111. ser. Proc. of SPIE, vol. 5034, 2003. [Google Scholar]

- 25.Yousef WA, Wagner RF, Loew MH. Proc 33rd Applied Imagery Pattern Recognition Workshop. IEEE Comp. Soc.; 2004. Comparison of non-parametric methods for assessing classifier performance in terms of ROC parameters; pp. 190–195. [Google Scholar]

- 26.Gagne RM, Gallas BD, Myers KJ. Toward objective and quantitative evaluation of imaging systems using images of phantoms. Med Phys. 2006 Jan;33(1):83–95. doi: 10.1118/1.2140117. [DOI] [PubMed] [Google Scholar]

- 27.Sahiner B, Chan HP, Hadjiiski L. Classifier performance prediction for computer-aided diagnosis using a limited dataset. Med Phys. 2008 Apr;35(4):1559–1570. doi: 10.1118/1.2868757. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Anderson TW. An Introduction to Multivariate Statistical Analysis. 3. John Wiley & Sons; 2003. [Google Scholar]

- 29.Evans M, Hastings N, Peacock B. Statistical Distributions. 2. John Wiley & Sons, Inc; 1993. [Google Scholar]

- 30.Johnson NL, Kotz S, Balakrishnan . Continuous Univariate Distributions. 2. Vol. 2 John Wiley & Sons; 1995. [Google Scholar]

- 31.Gupta A, Nagar D. Matrix Variate Distributions. Chapman & Hall/CRC; 2000. [Google Scholar]

- 32.Pepe MS. The Statistical Evaluation of Medical Tests for Classification and Prediction. Oxford University Press; 2003. [Google Scholar]

- 33.Wunderlich A, Noo F. Accuracy of channelized Hotelling observer performance measures estimated from repeated CT scans. IEEE Nucl Sci Symp Conference Record. 2008 [Google Scholar]

- 34.Kay SM. Fundamentals of Statistical Signal Processing. Prentice Hall; 1993. p. 1. Estimation Theory. [Google Scholar]

- 35.Fessler JA. Mean and variance of implicitly defined biased estimators (such as penalized maximum likelihood): applications to tomography. IEEE Trans Image Processing. 1996 Mar;5(3):493–506. doi: 10.1109/83.491322. [DOI] [PubMed] [Google Scholar]

- 36.Barrett HH, Wilson DW, Tsui BMW. Noise properties of the EM algorithm: I. Theory. Phys Med Biol. 1994;39:833–846. doi: 10.1088/0031-9155/39/5/004. [DOI] [PubMed] [Google Scholar]

- 37.Wilson DW, Tsui BMW, Barrett HH. Noise properties of the EM algorithm: II. Monte Carlo simulations. Phys Med Biol. 1994;39:847–871. doi: 10.1088/0031-9155/39/5/005. [DOI] [PubMed] [Google Scholar]

- 38.Pineda AR, Schweiger M, Arridge SR, Barrett HH. Information content of data types in time-domain optical tomography. J Opt Soc Am A. 2006 Dec;23(12):2989–2996. doi: 10.1364/josaa.23.002989. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Casella G, Berger RL. Statistical Inference. 2. Duxbury Press; 2001. [Google Scholar]

- 40.Lehmann E, Casella G. Theory of Point Estimation. 2. Springer; 1998. [Google Scholar]

- 41.Poor HV. An Introduction to Signal Detection and Estimation. 2. Springer; 1994. [Google Scholar]