Abstract

The asymptotic distribution of the multivariate variance component linkage analysis likelihood ratio test has provoked some contradictory accounts in the literature. In this paper we confirm that some previous results are not correct by deriving the asymptotic distribution in one special case. It is shown that this special case is a good approximation to the distribution in many situations. We also introduce a new approach to simulating from the asymptotic distribution of the likelihood ratio test statistic in constrained testing problems. It is shown that this method is very efficient for small p-values, and is applicable even when the constraints are not convex. The method is related to a multivariate integration problem. We illustrate how the approach can be applied to multivariate linkage analysis in a simulation study. Some more philosophical issues relating to one-sided tests in variance components linkage analysis are discussed.

1. Introduction

There are numerous statistical applications for which one-sided tests may be justified. Indeed, any quick search of the literature on one-sided tests or constrained tests will reveal applications in clinical studies, pharmacokinetics, genetic epidemiology and many other fields. This paper is motivated by a variance component testing problem that occurs in multivariate linkage analysis. In most one-sided situations we have a very simple hypothesis to test, such as H0 : μ = 0 vs Ha : μ > 0. Here, something like the one-sided t-test is sufficient. However, sometimes the one-sided hypothesis is more complex and can only be expressed as a special case of H0 : θ ∈ Ω1 vs Ha : θ ∈ Ω2. The statistics available for this kind of test (Silvapulle and Sen 2005) do not necessarily follow simple asymptotic distributions. Perhaps the most obvious choice of statistic is the constrained maximum likelihood ratio test (CLRT) statistic discussed below. Numerous authors, such as Self and Liang (1987), have shown that in some cases this statistic asymptotically follows a mixture of chi-squared distributions, while in other cases the distribution is more complex. In this paper we discuss some results for constrained hypothesis testing that are relevant to multivariate linkage analysis.

In section 2 we briefly review some of the literature on the CLRT. In section 3 we suggest a simple and efficient approach to calculating the asymptotic significance levels of the CLRT even when the null distribution cannot be described as a mixture of chi-squared distributions. We demonstrate that our method has a strong computational advantage over another known method when small p-values are involved. We then show that the asymptotic distribution may be reduced to a hyperspherical integration problem. In section 4, we demonstrate our method for the multivariate variance component linkage analysis problem (Amos, de Andrade et al. 2001). This model has a particularly complex asymptotic distribution. The general consensus seems to be that “this issue warrants further detailed attention” (Marlow, Fisher et al. 2003), and, “there is an urgent need to characterize the asymptotic distribution associated with these multivariate tests” (Evans, Zhu et al. 2004). In a recent paper, Han and Chang (2008) have challenged the correctness of several relevant results in the literature. They also suggest a fairly simple way of simulating from the asymptotic null distribution. In this paper we confirm some of the findings of Han and Chang by showing that, under certain restrictive assumptions, analytical results are available. When these assumptions are not met, we show that much faster simulation methods are available. In section 5 we discuss some philosophical points concerning constrained testing in the variance component linkage setting, and review our results.

2. Asymptotics under Nonstandard Conditions

By definition, a cone C with its vertex at θ0 is a set such that, if x ∈ C, then a (x – θ0) + θ0 ∈ C for all a ≥ 0. We say that a cone C (Ω, θ0) approximates a set Ω at θ0 if: $\inf_{x \in C(\Omega,\theta_0)} \|x - y\| = o(\|y - \theta_0\|)$ ∀y ∈ Ω and $\inf_{y \in \Omega} \|x - y\| = o(\|x - \theta_0\|)$ ∀x ∈ C (Ω,θ0). A function f (z) is said to be o(z) if $f(z)/z \to 0$ as $z \to 0$. The above definition of approximating cones was introduced by Chernoff (1954). Geyer (1994) has discussed its relation to other approximating cones. The primary intuition behind the approximating cone is that, near θ0, there is a similar shape for both C (Ω,θ0) and Ω. We know from the (typical) consistency of the maximum likelihood estimate that, with a large enough sample size, estimates of a parameter θ will be very close to the true value θ0. At this point we may substitute C (Ω,θ0) for the parameter space Ω. The beauty of a cone is that no matter how close we zoom in on the vertex, it looks the same. One fact that falls easily out of the definition of an approximating cone is that all cones approximate themselves. Furthermore, it is quite obvious that the space of positive semidefinite matrices is a cone with vertex at the zero matrix (if M is positive semidefinite, then so is aM for all a ≥ 0). Indeed, the parameter spaces that are relevant to the present work are already cones and they do not need to be approximated.

Chernoff (1954) produced one of the original works on this subject. Perhaps the most well known work in this area was by Self and Liang (1987). It has been shown (Geyer 1994) that the regularity conditions used by Self and Liang are not sufficient to guarantee all the results given, but the results still hold with corrected regularity conditions. There have been numerous reformulations of the basic results presented below under relaxed regularity conditions (Geyer 1994; Vu and Zhou 1997; Delmas and Foulley 2007). The results have been extended to include the non-identically distributed case (Vu and Zhou 1997; Delmas and Foulley 2007). A thorough review of the literature on constrained testing is given by Silvapulle and Sen (2005).

When some of the parameters lie on the boundary of the parameter space, the asymptotic distribution of the CLRT quickly becomes complicated. We first reproduce the main result from Self and Liang (1987) and Chernoff (1954). However, we assume the regularity conditions used in Theorem 4.4 of Geyer (1994). Let θ be a vector with p elements containing all model parameters, and let θ0 be the actual parameters for the generating distribution of y. Let l (θ;y) be the log-likelihood for the data. Let us define the Fisher information as . Suppose we are performing a hypothesis test such that under the null hypothesis θ ∈ Ω0, and under the alternative hypothesis θ ∈ Ω1. The CLRT statistic is defined as:

We have the following well-known result:

Theorem 1: Let Z be a multivariate normal (MVN) random variable with mean θ0 and variance $f^{-1}(\theta_0)$. Let Ω0 and Ω1 be sets with non-empty approximating cones C (Ω0, θ0) and C (Ω1, θ0). Then, under certain regularity conditions, the asymptotic null distribution of the CLRT is the same as the distribution of the likelihood ratio test of θ ∈ C (Ω0, θ0) versus the alternative θ ∈ C (Ω1, θ0) based on a single realization of Z.

To restate Theorem 1, the asymptotic distribution of the CLRT statistic is equivalent to the distribution of a function of a multivariate random variable (Z):

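$$g(Z) = \inf_{\theta \in C(\Omega_0,\theta_0)} (Z-\theta)^T f(\theta_0)\,(Z-\theta) \;-\; \inf_{\theta \in C(\Omega_1,\theta_0)} (Z-\theta)^T f(\theta_0)\,(Z-\theta) \tag{1}$$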

This can be simplified somewhat. Let $f^{1/2}$ be a matrix such that $f^{1/2} f^{1/2} = f$ and let Z̃ be a MVN random variable with mean 0 and variance equal to the identity matrix. Theorem 1 then implies that the asymptotic distribution of the CLRT statistic is the same as that of:

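$$\tilde g(\tilde Z) = \inf_{\theta \in \tilde C(\Omega_0,\theta_0)} \|\tilde Z-\theta\|^2 \;-\; \inf_{\theta \in \tilde C(\Omega_1,\theta_0)} \|\tilde Z-\theta\|^2 \tag{2}$$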

where C̃ (Ωi, θ0) = {$f^{1/2}$ (θ – θ0) | θ ∈ C (Ωi, θ0)}. Note that C̃ (Ωi, θ0) is a cone with vertex at the origin. Also note that g (•) and g̃ (•) are related by the simple identity g̃(V) = g ($f^{-1/2}$V + θ0).
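As a small worked illustration (not part of the original derivation), consider the univariate one-sided case with d = 1, C̃ (Ω0, θ0) = {0} and C̃ (Ω1, θ0) = [0, ∞). Assuming that g̃ is the difference between the squared distances from Z̃ to the two cones, the statistic and its tail probability reduce to:

```latex
\tilde g(\tilde Z)
  = \inf_{\theta \in \{0\}} (\tilde Z - \theta)^2 - \inf_{\theta \ge 0} (\tilde Z - \theta)^2
  = \tilde Z^2 - \min(\tilde Z, 0)^2
  = \max(\tilde Z, 0)^2,
\qquad
P\{\tilde g(\tilde Z) \ge S\} = P\{\tilde Z \ge \sqrt{S}\} = \tfrac{1}{2}\, P\{\chi^2_1 \ge S\}
\quad (S > 0).
```

This is the familiar ½:½ mixture of a point mass at 0 and a chi-squared distribution with one degree of freedom.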

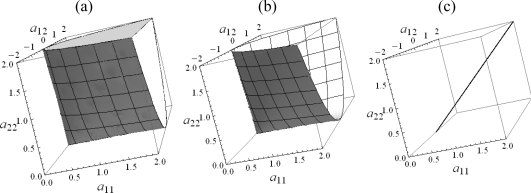

In many cases the asymptotic distribution turns out to be a mixture of chi-squared distributions. It is fairly easy to understand this nice behavior when g(Z) involves projections onto a set of flat surfaces in C (Ωi, θ0). In fact, it can be shown that when testing a hypothesis of the form H0 : θ = θ0 vs Ha : θ ∈ Ω1, where C (Ω1, θ0) is a piecewise smooth convex cone, the asymptotic distribution under the null hypothesis will always be a mixture of chi-squared distributions (Takemura and Kuriki 1997). However, deriving the mixing proportions is often a difficult task. Furthermore, many realistic situations violate these conditions. For example, in variance component linkage analysis it is desirable to use a nonconvex space as the alternative hypothesis. This space is illustrated in Figure 1.b. Also, the existence of nuisance parameters may cause the hypothesis to be of a different form. In this paper we suggest that a direct derivation or estimation of mixing proportions is not necessary. To calculate statistical significance it is easy to implement efficient algorithms that do not assume the asymptotic distribution is a mixture of chi-squared random variables.

Figure 1:

Geometric view of the alternative hypothesis in the bivariate case. (a) Constraint 1: Ωa = {A | A ≥ 0}, (b) Constraint 2: Ωa = {φφT | φ ∈ ℝt}, (c) Constraint 3: .

3. Calculating Statistical Significance

Method 1: Simple Simulation

As discussed earlier, the asymptotic distribution may be represented as a function (g̃(Z̃)) of standard normal variables. Suppose that f is the distribution function of Z̃, and S is a likelihood ratio statistic. Then the p-value for S is:

| (3) |

The simplest possible approach to finding the asymptotic p-value would be to sample from Z̃ and find the fraction of samples for which g̃(Z̃) > S. Various authors have suggested this (Silvapulle and Sen 2005, pp. 78–81). In multivariate linkage analysis this has been suggested by Han and Chang (2008). With very small p-values, often necessary to allow for multiple comparisons, accurate estimates require a large number of simulation samples.
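A minimal sketch of Method 1, assuming a toy problem that is not taken from the paper: H0 : θ = 0 versus Ha : θ ≥ 0 (elementwise) with identity Fisher information, for which g̃(z) is the sum of the squared positive parts of z. The function names `g_tilde_orthant` and `p_value_method1` are hypothetical.

```python
import random


def g_tilde_orthant(z):
    """Toy g-tilde: squared distance to {0} minus squared distance to the
    nonnegative orthant, i.e. the sum of squared positive parts (assumption)."""
    return sum(max(zi, 0.0) ** 2 for zi in z)


def p_value_method1(s, d, n, g_tilde=g_tilde_orthant, seed=0):
    """Estimate P[g_tilde(Z) > s] as the fraction of n standard normal draws
    of dimension d whose statistic exceeds the observed value s."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]
        if g_tilde(z) > s:
            hits += 1
    return hits / n


if __name__ == "__main__":
    print(p_value_method1(3.0, d=2, n=100000))
```

Because each draw contributes only 0 or 1, the number of draws needed for a given relative accuracy grows roughly in inverse proportion to the p-value, which is the weakness noted above.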

Other simulation methods have been suggested that calculate mixing proportions (Silvapulle and Sen 2005, pp. 78–81). However, such methods are not applicable when the distribution is not a mixture of chi-squared distributions.

Method 2: Direction and Length Decomposition

We now suggest a more computationally efficient method. We can decompose the MVN distribution into two pieces: a directional d-vector V = Z̃ / ‖Z̃‖ and a scalar ‖Z̃‖. Thus Z̃ = ‖Z̃‖ V. V can be visualized as a point on the unit hypersphere, while ‖Z̃‖ is the Euclidean length of Z̃. It can be shown that ‖Z̃‖² follows a chi-squared distribution with d degrees of freedom, independently of V, where d is the number of elements in θ and V has a uniform distribution on the surface of a unit hypersphere.

The function g̃(z) has some very interesting properties, including being continuous. Let C̃i = C̃ (Ωi, θ0). The one property that we wish to exploit follows (in line 3 below) from the definition of a cone:

| (4) |

Let Fd be the cumulative distribution function of a chi-squared random variable with d degrees of freedom. Also, define Fd (S / 0) = 1 for S > 0 and Fd (0 / 0) = 0. Using this notation, we can express the p-value as:

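$$p = P\left[\|\tilde Z\|^2\, \tilde g(V) \ge S\right] = E_V\left[1 - F_d\!\left(S / \tilde g(V)\right)\right] \tag{5}$$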

The following simulation can be performed to calculate the p-value:

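1. Simulate a d-vector from a multivariate normal distribution with mean 0 and identity covariance matrix, then divide it by its norm to obtain a sample from V.

2. Calculate g̃(V) = g ($f^{-1/2}$V + θ0). Note: this will usually be the most computationally demanding step.

3. Repeat steps 1 and 2 n times.

4. Find the estimated p-value:

$$\hat p_n = \frac{1}{n} \sum_{i=1}^{n} \left[1 - F_d\!\left(S / \tilde g(V_i)\right)\right] \tag{6}$$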
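The steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the toy function `g_tilde_orthant` (H0 : θ = 0 versus Ha : θ ≥ 0 with identity Fisher information) stands in for the optimization in step 2, the observed statistic S is assumed to be positive, and `chi2_sf` is a hypothetical helper that evaluates 1 – Fd using the standard closed forms for integer degrees of freedom.

```python
import math
import random


def chi2_sf(x, d):
    """Return 1 - F_d(x) for a chi-squared variable with integer d >= 1."""
    if x <= 0.0:
        return 1.0
    if math.isinf(x):
        return 0.0
    if d % 2 == 0:
        # Even d: exp(-x/2) * sum_{r < d/2} (x/2)^r / r!
        term, total = 1.0, 1.0
        for r in range(1, d // 2):
            term *= (x / 2.0) / r
            total += term
        return math.exp(-x / 2.0) * total
    # Odd d: erfc term plus a finite sum of odd powers of sqrt(x)
    chi = math.sqrt(x)
    term, total = chi, 0.0
    for r in range(1, (d - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    tail = math.sqrt(2.0 / math.pi) * math.exp(-x / 2.0) * total
    return math.erfc(chi / math.sqrt(2.0)) + tail


def g_tilde_orthant(v):
    """Toy g-tilde for the nonnegative orthant (illustrative assumption)."""
    return sum(max(vi, 0.0) ** 2 for vi in v)


def p_value_method2(s, d, n, g_tilde=g_tilde_orthant, seed=0):
    """Average 1 - F_d(s / g_tilde(V_i)) over n uniform directions V_i (s > 0)."""
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]
        norm = math.sqrt(sum(zi * zi for zi in z))
        v = [zi / norm for zi in z]
        g = g_tilde(v)
        if g > 0.0:
            acc += chi2_sf(s / g, d)
        # g == 0 contributes 0, following the convention F_d(S / 0) = 1 for S > 0
    return acc / n


if __name__ == "__main__":
    print(p_value_method2(30.0, d=2, n=20000))
```

Each direction contributes a value between 0 and 1 – Fd(S) rather than an indicator, so even very small p-values can be estimated with a modest number of directions.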

Implementing the simulation in this way can involve significant computational gains. Let us denote 1 – Fd (S) as pU for the unconstrained p-value and let p denote the actual p-value. We point out the following inequality which is shown in Appendix A:

| (7) |

As a crude estimate of what we gain in efficiency, we looked at the ratio of the variances achieved by using Method 2 (designated p̂n) versus that using Method 1 (designated p̃n) :

| (8) |

Now note that (pU (S) – p(S))/(1 – p(S)) → 0 as S → ∞. In other words, for very small p-values (and large S), Var (p̂n) is a tiny fraction of Var (p̃n). This improvement is purchased at virtually no increased computational expenditure.
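To give a feel for how quickly the quantity (pU (S) – p(S))/(1 – p(S)) shrinks, the following sketch evaluates it for a toy case that is an assumption of this example, not a case treated in the paper: d = 2, H0 : θ = 0 versus Ha : θ ≥ 0 with identity Fisher information, where the asymptotic distribution is the known ¼:½:¼ mixture of chi-squared distributions with 0, 1 and 2 degrees of freedom.

```python
import math


def variance_ratio_quantity(s):
    """Return (p_U - p) / (1 - p) for the toy d = 2 orthant problem."""
    p_u = math.exp(-s / 2.0)  # unconstrained p-value, 1 - F_2(s)
    p = 0.5 * math.erfc(math.sqrt(s / 2.0)) + 0.25 * p_u  # mixture p-value
    return (p_u - p) / (1.0 - p)


if __name__ == "__main__":
    for s in (5.0, 10.0, 20.0, 30.0):
        print(s, variance_ratio_quantity(s))
```

The printed values decrease steadily as S grows, in line with the limit stated above.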

Consider the special case of testing H0 : θ = θ0 versus Ha : θ ∈ Ω1 where Ω1 is sufficiently regular to define the non-trivial cone C̃ (Ω1, θ0). In this case we may improve upon (8) somewhat. We show in Appendix A that:

| (9) |

Equation (9) shows again that for very small p-values Var (p̂n) is a tiny fraction of Var (p̃n). These equations, however, show only worst case scenarios and in actual practice the benefits of Method 2 may be even greater. In section 4 we show an empirical evaluation of the relative variance of the two estimators in several situations.

One potential way to speed up the simulation is this: for each new Vi we can use – Vi as another observation. This leads to a negative correlation among the observations, thus reducing the variance. Another modification of the basic procedure is to implement a stopping rule to determine how many simulations to perform. In general, very large p-values do not need to be estimated accurately.
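The reflection idea can be sketched as below. This is a hedged illustration for d = 2 only, where 1 – F2(x) = exp(–x/2) and a uniform direction can be drawn as a uniform angle; the toy function `g_tilde_orthant` (nonnegative orthant, identity Fisher information) and the name `p_value_antithetic` are assumptions of this example, and S is assumed to be positive.

```python
import math
import random


def g_tilde_orthant(v):
    """Toy g-tilde for the nonnegative orthant (illustrative assumption)."""
    return sum(max(vi, 0.0) ** 2 for vi in v)


def tail_term(s, g):
    """1 - F_2(s / g) for 2 degrees of freedom; 0 when g == 0 and s > 0."""
    return math.exp(-s / (2.0 * g)) if g > 0.0 else 0.0


def p_value_antithetic(s, n_pairs, seed=0):
    """Average the Method 2 term over each direction V and its reflection -V."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_pairs):
        angle = rng.uniform(0.0, 2.0 * math.pi)
        v = (math.cos(angle), math.sin(angle))
        minus_v = (-v[0], -v[1])
        pair = tail_term(s, g_tilde_orthant(v)) + tail_term(s, g_tilde_orthant(minus_v))
        total += 0.5 * pair
    return total / n_pairs


if __name__ == "__main__":
    print(p_value_antithetic(3.0, n_pairs=20000))
```

Since –V is also uniformly distributed on the unit circle, the paired average remains unbiased; each pair costs two evaluations of g̃, so any gain comes from the negative correlation within a pair.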

Method 3: Numerical Integration Approach

It is possible to avoid completely the use of Monte Carlo simulation. V can be represented in a hyperspherical coordinate system (Blumenson 1960). This involves a transformation from a series of d-1 angles to the d elements of V. So:

| (10) |

where $\varphi_{p-1}$ goes from 0 to 2π, and $\varphi_1$ through $\varphi_{p-2}$ go from 0 to π (with p = d, the number of elements of V). The Jacobian for this transformation is: $J = \sin^{p-2}(\varphi_1)\,\sin^{p-3}(\varphi_2) \cdots \sin(\varphi_{p-2})$. Using this transformation it is possible to evaluate the integral numerically:

| (11) |

Here $S_d = 2\pi^{d/2} / \Gamma(d/2)$ is the surface area of a d-dimensional hypersphere of radius 1. We use the integral representation in our proof of Theorem 2 below.
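For d = 2 the hyperspherical representation has a single angle, the Jacobian is 1 and S2 = 2π, so the p-value is a one-dimensional integral over the unit circle. The sketch below applies a midpoint rule to that integral; the toy function `g_tilde_orthant` (nonnegative orthant, identity Fisher information), the grid size and the function name `p_value_method3` are assumptions of this example, and S is assumed to be positive.

```python
import math


def g_tilde_orthant(v):
    """Toy g-tilde for the nonnegative orthant (illustrative assumption)."""
    return sum(max(vi, 0.0) ** 2 for vi in v)


def p_value_method3(s, g_tilde=g_tilde_orthant, n_grid=20000):
    """Midpoint-rule value of (1 / S_2) * integral over [0, 2*pi) of
    1 - F_2(s / g_tilde(V(phi))), with V(phi) = (cos phi, sin phi)."""
    h = 2.0 * math.pi / n_grid
    total = 0.0
    for k in range(n_grid):
        phi = (k + 0.5) * h
        g = g_tilde((math.cos(phi), math.sin(phi)))
        if g > 0.0:
            total += math.exp(-s / (2.0 * g))  # 1 - F_2(x) = exp(-x / 2)
    return total * h / (2.0 * math.pi)


if __name__ == "__main__":
    print(p_value_method3(3.0))
```

In higher dimensions the same idea needs a (d – 1)-dimensional quadrature rule with the Jacobian weights, and the cost grows quickly with d.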

Nuisance Parameters

Suppose that we divide the parameter space into q parameters to be tested and d-q nuisance parameters: θT = [ψT λT]. Similarly, we partition the Fisher information matrix as:

| (12) |

We wish to test a hypothesis of the form H0 : θ ∈ Ω0 = {θ : ψ = b and λ ∈ Ω*} versus Ha : θ ∈ Ω1 = {θ : ψ ∈ Ωa and λ ∈ Ω*}. We define Ωb = {θ : ψ = b} for notational convenience. In other words, we allow for the nuisance parameters to have constraints imposed upon them. This may be reasonable, for example, in the variance component setting. The first problem with having a large number of nuisance parameters is that the function g (Z) involves optimizing two quadratic forms each with d² terms. Lemma 1, below, states two conditions under which we may reduce these quadratic forms to have only q² terms. Let $M = f_{\psi\psi} - f_{\psi\lambda} f_{\lambda\lambda}^{-1} f_{\lambda\psi}$ be the Schur complement of the block fλλ of f, and . Also, define .

Lemma 1: If either (a) fψλ (θ0) = 0 or (b) C (Ω*, θ0) = $\mathbb{R}^{d-q}$, then PY[h (Y) ≥ S] = PZ[g (Z) ≥ S], where Y and Z are MVN variables with means b and θ0 and variances M and f, respectively.

Proof of Lemma 1 is given in Appendix A. Note that only one of the two conditions must be met for the results to follow. One situation where condition (a) is met is in the normal variance component setting where the fixed effects are being tested. In this situation, the part of the Fisher information corresponding to the covariances between the estimators of the main effects and the variance components is usually 0 and, as a result, enforcing constraints on variance components has no effect on the asymptotic distribution of the test. Note that if condition (a) is met, then we may set M = fψψ. Condition (b) requires that any constraints on the nuisance parameters disappear asymptotically. Condition (b) is satisfied when λ is an interior point of Ω*.

A second problem with nuisance parameters is that using Theorem 1 and Lemma 1 requires us to know the actual parameter values (θ0). One natural approach would be to replace θ0 and f in g (Z;f (θ0); C (Ω0, θ0), C (Ω1, θ0)) with some consistent estimate (θ̂, f̂). Unfortunately, this will not always work because g (Z;f;C (Ω0, θ0), C (Ω1, θ0)) is not a continuous function of θ0. The approximating cone C (Ω, θ0) may behave badly as a function of θ0. As a simple example, consider the space Ω = {θ|θ ≥ 0}. If θ0 > 0, then C (Ω, θ0) = ℝ; but if θ0 = 0, then C (Ω, θ0) = {θ|θ ≥ 0}. This problem has been discussed from a different point of view by Wolak (1991). Under the null hypothesis discussed above, ψ is specified and does not need to be estimated, while λ must be estimated. However, C (Ωb, b) and C (Ωa, b) are not functions of λ and hence the bad behavior of the approximating cone may be avoided when the conditions for Lemma 1 hold. Let f̂n be an estimate of f(θ0), , and . Also, suppose that LRn is an observed test statistic.

Theorem 2: Suppose that under H0. If (a) fψλ (θ0) = 0 or (b) C (Ω*, θ0) = $\mathbb{R}^{d-q}$, then for all 0 ≤ α < 1 – P(g̃ (Z̃) = 0)

| (13) |

where the elements of Ỹ are distributed as independent standard normal random variables.

A sketch of the proof for Theorem 2 is deferred to Appendix A. Hence simulating from h̃n (Ỹ) is equivalent asymptotically to simulating from g̃ (Z̃). This approach has been criticized by some (Silvapulle and Sen 2005, p. 153) because the asymptotic distribution may be quite sensitive to the nuisance parameters. However, as we show below, there are at least some situations where the asymptotic distribution is relatively insensitive to the actual values of the nuisance parameters.

4. Multivariate Variance Component Linkage Analysis

Some Model Properties

First, we present the basic model for multivariate variance component linkage analysis (Amos, de Andrade et al. 2001). Let there be t traits (variates), nk individuals in the kth pedigree, and K pedigrees. Let yki be a t × 1 vector of trait values for individual i in pedigree k, and let . The commonly used genetic model for quantitative traits assumes that yki is formed by adding together a set of independent random effects. For example, there may be a random environmental (including measurement error) effect and a random polygenic effect (i.e. the cumulative effect of alleles in many genes acting together additively). Because family members share on average a certain proportion of their alleles there will be some correlation between the random polygenic effects of family members. In linkage analysis, we are interested in testing whether a particular locus in the genome is linked to the traits of interest, and so we also have a random effect for a single gene of interest. Using genetic marker data we may calculate the proportion of alleles shared identical by descent (IBD) (Haseman and Elston 1972) for each pair of relatives at a particular genomic location. While there are many variations and additional random effects suggested in the literature, we will consider only these three random effects: linked locus, polygenic and environmental. First we define the following:

Πk specifies the proportion of alleles shared identical by descent for each pair of relatives at a particular genomic location.

Φk is a matrix of kinship coefficients.

Ik is an nk × nk identity matrix.

A represents the covariance matrix for the additive component of variance.

P represents a polygenic covariance matrix and

E represents an environmental (including measurement error) covariance matrix.

Note that A, P and E are each t × t covariance matrices containing parameters, while Πk, Φk and Ik are nk × nk matrices containing observed properties of the pedigree. In the multivariate variance component model, it is assumed that, given the number of alleles shared identical by descent for the pairs of members in each pedigree, yk follows a MVN distribution with covariance matrix

| (14) |

where ⊗ represents the Kronecker product.

In general, we wish to test for linkage using the model above. This is a hypothesis test of the form H0 : A = 0 vs Ha : A ∈ Ωa. For example, in the univariate case (t = 1) we have H0 : a = 0 vs Ha : a > 0 because we expect variances to be positive. However, in the multivariate case there are several options for Ωa. As we point out in the discussion, each of these options is valid if decided upon a priori. First, we may set Ωa = {A | A ≥ 0} where A ≥ 0 means that A is positive semi-definite. We will refer to this as constraint 1. Constraint 1 may be justified by the fact that covariance matrices must be positive semidefinite by definition. Second, we may choose Ωa = {φφT | φ ∈ ℝt} which we will refer to as constraint 2 (Todorov, Vogler et al. 1998; Amos, de Andrade et al. 2001; Marlow, Fisher et al. 2003). This is equivalent to constraining all the cross-trait correlations to be ±1. Constraint 2 may be justified when the polymorphisms at a given genomic location responsible for the trait are effectively diallelic. Third, we may have , where 1t is a column t-vector of ones (Evans, Zhu et al. 2004). This kind of constraint may be reasonable for repeated measures if we believe the gene will have the same effect on all measurements. Each of these constraints is illustrated in Figure 1. Wang (2003) and Amos et al. (2001) present some other possible constraints. A number of similar tests of variance components have been presented in contexts other than linkage analysis (Stram and Lee 1994; Stram and Lee 1995).

Under the third constraint, the asymptotic distribution is a ½:½ mixture of $\chi^2_1$ and $\chi^2_0$, where $\chi^2_0$ represents a point mass at 0 (Evans, Zhu et al. 2004). To our knowledge, the literature does not directly address the asymptotic null distribution under constraint 1. However, constraint 2 has prompted some confusion and even contradiction within the literature. As mentioned earlier, Han and Chang (2008) have challenged several claims in the literature regarding constraint 2.

Although pedigrees come in various structures and sizes, it is still possible to force the variance component models discussed in the introduction into an independent identically distributed context. We assume that there is an infinite population of non-overlapping pedigree structures from which we randomly sample. Although the whole human population can be considered as a single pedigree, in practice the way pedigrees are sampled can ensure that they are non-overlapping (Ginsburg, Elston et al. 2006). Thus, the probability P (Πk, Φk, nk) is not a function of the variance component parameters, and the likelihood function is of the form ∏k P (yk | Πk, Φk, nk) P (Πk, Φk, nk). In the likelihood ratio, the factor P (Πk, Φk, nk) will cancel out. We have not proven in this work that the multivariate variance component model meets the regularity requirement for Theorems 1 and 2. However, our simulation results show the usefulness of our approach. In fact, the regularity requirements given by Geyer (1994) require global optima. The likelihood that needs to be optimized for our model is not convex, and it would be difficult to prove that the global maximum was truly achieved. Instead, we depend on the heuristic approach of using multiple starting points.

We have chosen below to enforce the constraint that P and E are positive definite under both the null and alternative hypotheses. However, we assume that the true parameters P and E do not lie on the boundary of the parameter space, and hence these constraints disappear asymptotically. Thus conditions (a) and (b) in Theorem 2 are both satisfied.

We are now ready to define a set of conditions:

(C1) P and E do not lie on the boundary of the parameter space.

(C2) Given the pedigree structure, the data generating process actually follows a MVN distribution with Var (yk) = Ck = Φk ⊗ P + I ⊗ E and E (yki) = μ.

(C3) The random variables Π and Φ may only take on a finite number of possible values.

Next we define the following space:

| (15) |

Lemma 2: If (C1) through (C3) are met, then for constraints 1 and 2 Theorems 1 and 2 may be applied to the variance component likelihood ratio test for linkage. Here the likelihood ratio test is defined for arbitrarily large η and for ɛ > 0 arbitrarily close to 0, and the test statistic is

We have not rigorously proven Lemma 2, but a very brief discussion of how these conditions may be related to the regularity conditions given by Geyer (1994) may be found in Appendix B.

It is convenient to reparametrize the covariance structure to be Σk = (Πk – Φk) ⊗ A + Φk ⊗ P* + Ik ⊗ E, where P* = P – A. Let , where ei is the unit vector of length t in the ith direction, and let If [•] be the indicator function. In this case we can easily derive that, conditional on Πk and the pedigree structure, the Fisher information for A and the cross parameter information between A and P* and that between A and E are respectively:

| (16) |

However, E [Πk] – Φk = 0 and therefore f̂pi1i2aj1j2 and f̂ei1i2aj1j2 will converge in probability to 0.
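To make the Kronecker-product structure concrete, the sketch below assembles the reparametrized covariance Σk = (Πk – Φk) ⊗ A + Φk ⊗ P* + Ik ⊗ E for a single hypothetical sib pair with t = 2 traits. All numerical values, and the helper names `kron` and `sigma_k`, are illustrative assumptions rather than quantities from the paper.

```python
def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [
        [a[i][j] * b[k][m] for j in range(len(a[0])) for m in range(len(b[0]))]
        for i in range(len(a))
        for k in range(len(b))
    ]


def sigma_k(pi_k, phi_k, a, p_star, e):
    """Assemble (Pi_k - Phi_k) (x) A + Phi_k (x) P* + I_k (x) E."""
    n = len(pi_k)
    identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    diff = [[pi_k[i][j] - phi_k[i][j] for j in range(n)] for i in range(n)]
    parts = [kron(diff, a), kron(phi_k, p_star), kron(identity, e)]
    size = n * len(a)
    return [[sum(m[r][c] for m in parts) for c in range(size)] for r in range(size)]


if __name__ == "__main__":
    # Hypothetical sib pair: observed IBD proportion 0.75, expected value 0.5.
    pi_k = [[1.0, 0.75], [0.75, 1.0]]
    phi_k = [[1.0, 0.5], [0.5, 1.0]]
    a = [[0.2, 0.1], [0.1, 0.3]]
    p_star = [[1.0, 0.0], [0.0, 1.0]]
    e = [[1.0, 0.0], [0.0, 1.0]]
    for row in sigma_k(pi_k, phi_k, a, p_star, e):
        print(row)
```

Rows and columns are ordered by individual and then by trait, which is the ordering implied by placing the pedigree-level matrix on the left of each Kronecker product. When Πk equals Φk, the term involving A vanishes.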

The above formulas show the Fisher information conditional on Πk and pedigree structure, but f̂ai1i2aj1j2 will converge in probability to some value fai1i2aj1j2 that is dependent on the pedigree structures in the population. It may also be of interest to know some typical values for fai1i2aj1j2. Assume we have a population of families containing only a father, mother and two siblings each with a fully informative marker. For this population, letting , where ei is the unit vector in the ith direction of length 4, the Fisher information is:

| (17) |

Alternative Hypothesis Constraint 1

Constraint 1, as pictured in Figure 1, requires that A be positive semi-definite. Such a constraint is defensible because variances must be positive. To our knowledge there is no work suggesting an asymptotic distribution for the multivariate linkage model under this constraint. In Theorem 3 we show that in one special case the asymptotic distribution is available. We first define one more condition: (C4) P = γE for some positive scalar γ.

Theorem 3: If (C1) through (C4) are met, then for an arbitrary number of traits the null asymptotic cumulative distribution function (CDF) of the multivariate linkage analysis CLRT statistic under constraint 1 is a mixture of chi-squared random variables with mixing proportions derivable by the recursion given in Kuriki (1993).

Theorem 3 follows immediately from Lemma 2 and Lemmas 3 and 5 in Appendix B and the work of Kuriki (1993). In Table 1 we give some of the mixing proportions derivable from the recursive formulas in Kuriki (1993).

Table 1:

Mixing Proportions for Constraint 1

| DF | t=1 | t=2 | t=3 |
|---|---|---|---|
| 0 | 2⁻¹ | | |
| 1 | 2⁻¹ | | |
| 2 | 0 | | |
| 3 | 0 | | |
| 4 | 0 | 0 | |
| 5 | 0 | 0 | |
| 6 | 0 | 0 | |
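Given a set of mixing proportions, the p-value of a chi-squared mixture is the weighted sum of the individual tail probabilities. The sketch below is a minimal illustration using the t = 1 column (weights ½ on 0 and ½ on 1 degree of freedom); the helper names `chi2_sf` and `mixture_p_value` are hypothetical, and zero degrees of freedom is treated as a point mass at 0.

```python
import math


def chi2_sf(x, df):
    """P(chi-squared_df >= x) for integer df >= 0 (df = 0 is a point mass at 0)."""
    if x <= 0.0:
        return 1.0
    if df == 0:
        return 0.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{r < df/2} (x/2)^r / r!
        term, total = 1.0, 1.0
        for r in range(1, df // 2):
            term *= (x / 2.0) / r
            total += term
        return math.exp(-x / 2.0) * total
    # Odd df: erfc term plus a finite sum of odd powers of sqrt(x)
    chi = math.sqrt(x)
    term, total = chi, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    tail = math.sqrt(2.0 / math.pi) * math.exp(-x / 2.0) * total
    return math.erfc(chi / math.sqrt(2.0)) + tail


def mixture_p_value(s, weights):
    """Weighted tail probability; weights[j] is the proportion on j degrees of freedom."""
    return sum(w * chi2_sf(s, df) for df, w in enumerate(weights))


if __name__ == "__main__":
    print(mixture_p_value(2.705543, [0.5, 0.5]))
```

For other columns of the table the same function applies once the corresponding weights are supplied.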

Of course, it is not reasonable to assume that P = γE, and so we might expect the asymptotic distribution derived in Theorem 3 to be of only theoretical interest. However, we may still use the methods suggested in section 3 if P ≠ γE. Our approach requires us to replace the nuisance parameters P and E with consistent estimates. As mentioned earlier, if the distribution is strongly dependent on P and E, then this approach may be subject to some criticism for finite samples. We briefly investigate this in Figure 2. We recalculated the asymptotic CDF under different possible values for r, where

| (18) |

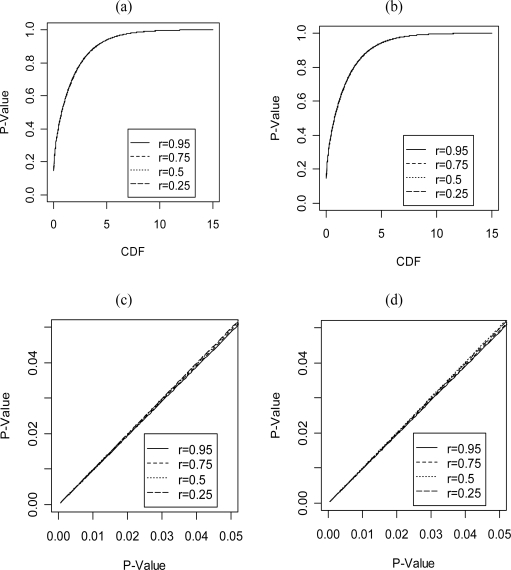

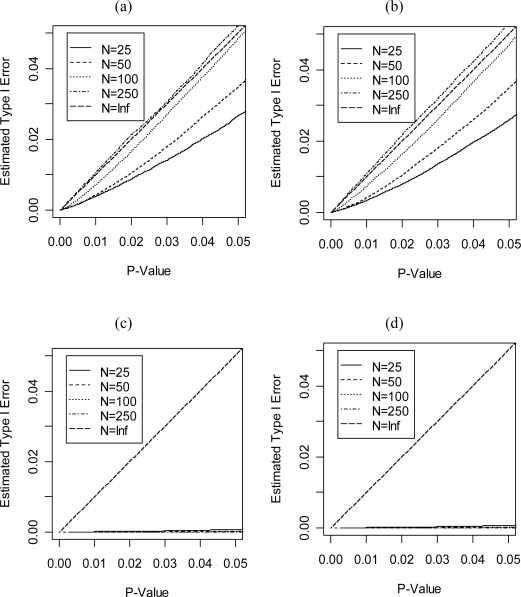

Figure 2:

Comparison of the asymptotic distribution of the CLRT statistic for a population of pedigrees containing only a father, mother and two sibs when P and E are parameterized as in equation (18). The CDFs were calculated based on Method 2 in section 3. (a) CDF under constraint 1. (b) CDF under constraint 2. (c) This comparison is for constraint 1. The x-axis represents a p-value from the analytical formula derived in Theorem 3; the y-axis represents the p-value calculated as in (a). (d) This comparison is for constraint 2. The x-axis represents a p-value from the distribution derived in Theorem 4; the y-axis represents the p-value calculated as in (b).

The results in Figure 2 were obtained from 200,000 simulation samples using Method 2 in section 3. We implemented this method in MATLAB and used the cutting-plane algorithm suggested by Shaw and Geyer (1997) for the optimization. As can be seen, in the cases investigated, the asymptotic distributions obtained using different values for P and E appear to be nearly indistinguishable. While these results seem to indicate that Theorem 3 has some practical value, we have no guarantee that P and E will have such a small effect on the asymptotic distribution for other pedigree structures, numbers of traits or population parameter values. Therefore, we can only recommend using Theorem 3 as a first pass approximation.

Alternative Hypothesis Constraint 2

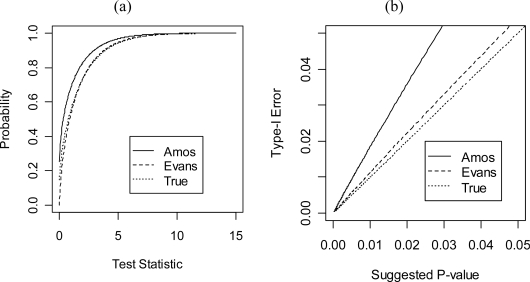

Constraint 2, as pictured in Figure 1, requires that A be of the form φφT (Todorov, Vogler et al. 1998; Amos, de Andrade et al. 2001; Marlow, Fisher et al. 2003). This is equivalent to constraining all the cross-trait correlations to be ±1. In the bivariate case, Evans (2002) seems to have claimed that the asymptotic distribution is while Amos et al. (2001) have claimed that the asymptotic distribution is . In fact, both of these claims are incorrect, although the distribution suggested by Evans closely approximates the true asymptotic distribution in the tails for some values of θ0. Theorem 4 gives the asymptotic distribution in one special case, and Figure 3 displays a graphical comparison of the two previous suggestions and the true distribution in this special case.

Figure 3:

Comparison of some suggested distributions to the true asymptotic distribution. (a) Plot of the CDF function. (b) The x-axis of this plot represents a p-value from one of the suggested distributions; the y-axis represents the p-value that would have been calculated using the distribution in Theorem 4.

Theorem 4: If (C1) through (C3) are met, then the null asymptotic CDF of the bivariate (t = 2) linkage analysis statistic under constraint 2 is: .

Here Φ (x) represents the CDF of the standard normal distribution and If [.] is the indicator function. Proof of Theorem 4 is discussed in Appendix B. Note that the asymptotic distribution in Theorem 4 does not appear to be a mixture of chi-squared random variables. This is precisely what we would expect, because the alternative hypothesis is not convex. Figure 3 shows that using the distribution suggested by Amos et al. (2001) may be asymptotically quite liberal in terms of type 1 error, as may be expected from the simulation results given by Amos et al. (2001). This also confirms the work of Han and Chang (2008).

As with constraint 1, we investigated the sensitivity of the asymptotic distribution to the values of P and E. Figure 2 shows that the models investigated have nearly identical asymptotic distributions. Hence P and E have very little effect, as we may have suspected from the simulation work of Amos et al. (2001).

Empirical Evaluation of Method 2 versus Method 1

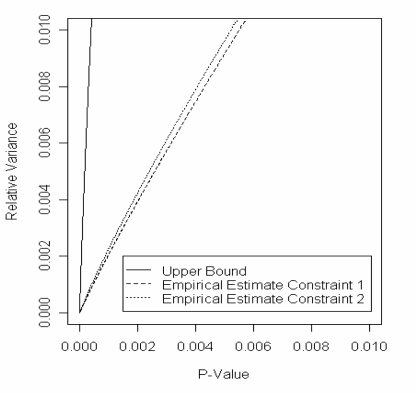

In equation (9) we gave an upper bound for the relative variances of estimation Methods 1 and 2. We now give an empirical evaluation of the relative variances. Consider the situation that P = E = I2. We may use the formulas derived in Appendix B to easily find the Fisher information in this case. We simulated 100,000 samples of V as in Method 2, and in each case we evaluated 1 – Fd (S/g̃(V)) at a large number of possible values for S. At each of those values for S we estimated the associated p-value and its variance for Methods 1 and 2. Figure 4 shows the results. As may be seen, the relative variance is much better than the upper bound, and for small p-values Method 2 is much better than Method 1.

Figure 4:

Empirical evaluation of the benefit of Method 2. The y-axis is the relative variance (Var (p̂n) / Var (p̃n)). The x-axis is the estimated p-value. The upper bound is as derived in (9). The lines for constraints 1 and 2 are based on 100,000 simulations using Method 2.

Simulation Study of Convergence

To see how large a sample is needed for the statistic to approach the asymptotic distribution under the null hypothesis, we performed a small simulation study. Pedigrees with 2 parents and 2 siblings were simulated. A fully informative marker was assumed. The data were simulated to follow the actual variance component model. All simulations were performed using a program written in MATLAB release 2007b. For likelihood maximization, A, P and E were constrained to be positive semi-definite using the Cholesky method (Marlow, Fisher et al. 2003).
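One common way to enforce positive semi-definiteness through a Cholesky factor is to optimize over the entries of a lower-triangular matrix L and set the variance component equal to LLᵀ. The sketch below shows this for a 2 × 2 matrix; it is an assumption about what the Cholesky method involves here, written in Python rather than MATLAB, and the function name is illustrative.

```python
def psd_from_cholesky(l11, l21, l22):
    """Map three unconstrained real numbers to the 2 x 2 matrix L L^T,
    where L = [[l11, 0], [l21, l22]]. The result is always symmetric
    and positive semi-definite, so an optimizer can search over
    (l11, l21, l22) without explicit constraints."""
    a11 = l11 * l11
    a12 = l11 * l21
    a22 = l21 * l21 + l22 * l22
    return [[a11, a12], [a12, a22]]
```

For example, `psd_from_cholesky(1.0, -2.0, 0.0)` gives a singular matrix on the boundary of the parameter space, while any non-zero `l11` and `l22` give a positive definite matrix.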

For our first set of simulations, we assumed that E = I2 and P was either I2 or 0. We let the sample sizes be 25, 50, 100 and 250 pedigrees. We simulated 200,000 replicates for each situation. Note that when P = 0, the assumptions used in this paper are violated because the nuisance parameters are not allowed to be on the boundary of the parameter space. Figure 5 shows the empirical type I error rates when Theorems 3 and 4 are used to calculate statistical significance. As can be seen, when P = I2 the asymptotic distribution nicely approximates the empirical distribution with only around 100 pedigrees. However, when P = 0 the type I error rate is unreasonably conservative. We give some additional comments on this problem in the discussion.

Figure 5:

Empirical Type I error for different sample sizes assuming the statistic follows the distribution given in Theorems 3 and 4 for: (a) Constraint 1 and P = E = I2, (b) Constraint 2 and P = E = I2, (c) Constraint 1 and E = I2, P = 0 and (d) Constraint 2 and E = I2, P = 0.

In a second set of simulations we parameterized P and E as in equation (18) for differing values of r. We then calculated the p-values using Method 2 in section 3. As a stopping rule, for each simulation we tested whether the p-value was statistically different from 0.001 at an alpha level of 0.01. To our knowledge, the simulation algorithm failed to yield a p-value only once, probably due to a failed optimization. Missing values were ignored. For each parameter setup we used 50,000 simulated replicates. Table 2 shows the results. As can be seen, when r comes close to 1, the statistic approaches its asymptotic distribution more slowly. Again we see that using the asymptotic method described in this paper leads to conservative results when the nuisance parameters are near the boundary. We give some additional comments on this problem in the discussion.

Table 2.

Type I error rates for various genetic models parameterized as in equation (18) when α = 0.001. A value of 1 represents the theoretically correct Type I error. Each cell is estimated from 50,000 simulated replicates.

| Constraint | Sample Size | r = 0 | r = 0.25 | r = 0.5 | r = 0.75 | r = 0.95 |
|---|---|---|---|---|---|---|
| 1 | 50 | 0.32 | 0.36 | 0.20 | 0.20 | 0.36 |
| 1 | 100 | 0.38 | 0.36 | 0.40 | 0.32 | 0.20 |
| 1 | 250 | 0.82 | 0.84 | 0.50 | 0.28 | 0.44 |
| 1 | 1000 | 1.18 | 1.06 | 1.18 | 0.50 | 0.40 |
| 2 | 50 | 0.18 | 0.24 | 0.44 | 0.58 | 0.48 |
| 2 | 100 | 0.52 | 0.30 | 0.46 | 0.24 | 0.10 |
| 2 | 250 | 0.90 | 0.66 | 0.64 | 0.50 | 0.44 |
| 2 | 1000 | 1.02 | 1.06 | 0.84 | 0.50 | 0.50 |
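To help read Table 2, the Monte Carlo uncertainty of a single cell can be approximated. The sketch below assumes that each cell is the empirical rejection rate divided by α, that replicates are independent, and that the true rejection rate equals α; the function name is illustrative.

```python
import math

def ratio_standard_error(alpha, n_replicates):
    """Standard error of (empirical rejection rate / alpha) when the
    true rejection rate equals alpha and replicates are independent."""
    return math.sqrt(alpha * (1.0 - alpha) / n_replicates) / alpha

# Setting used for Table 2: alpha = 0.001 with 50,000 replicates per cell.
se = ratio_standard_error(0.001, 50000)
expected_rejections = 0.001 * 50000
```

Under these assumptions about 50 rejections are expected per cell, so each rejection shifts a cell by 0.02 and the standard error of a cell is roughly 0.14.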

5. Discussion

There are two possible motivations for a one-sided test. First, we may only be interested in the results of the study if the deviation from the null hypothesis is in a specific direction. In this case we really have a compound null hypothesis such as H0 : μ ≤ 0 vs Ha : μ > 0. Second, we may be convinced that the parameter could fall on only one side of the null hypothesis. It is this second reason that justifies the use of a one-sided test in the variance component setting. We test H0 : a = 0 against Ha : a > 0 because we have a strong a priori commitment to the notion that a, as a variance, must be positive. In the univariate tests mentioned above, the one-sided p-value will be half the two-sided p-value if the parameter estimate is positive. Hence, one-sided tests cannot be performed after peeking at the data. We must justify the one-sided constraint by substantive knowledge, and not because it produces a lower p-value. Looking at the estimated covariance matrix before choosing a test is not an acceptable statistical procedure. In terms of type I error, any alternative hypothesis is defensible when decided upon a priori, although it may result in lower power. It is this last point that we wish to emphasize in the rather confusing set of possible alternative hypotheses.

We must make one additional cautionary note about one-sided tests in linkage analysis. While each of the constraints suggested above seems intuitively reasonable, we cannot say that A must always be positive semi-definite. It is only the total variance that is mathematically constrained to be positive semi-definite, and not the individual components of variance. Some bizarre scenarios are remotely possible. For example, suppose that there is a gene for contrarianism (i.e., family members intentionally seek to differentiate themselves). In such a situation, many behavioral traits would have a negative familial correlation induced by the locus for contrarianism. We would not be able to detect the linkage due to such behavioral traits using a one-sided test. There are also examples where environmental factors such as competition could result in negative familial correlations (Verbeke and Molenberghs 2003), but in such cases the one-sided test of linkage may still be valid. We also point out that estimation and testing are distinct issues. Within the context of estimation it may not be advisable to restrict variances to be positive because the accumulation of such results across studies may result in upwardly biased estimates (Lynch and Walsh 1998 pp. 563–564).

In this paper we have shown that it is possible to evaluate asymptotic significance levels using fairly simple methods, even in the absence of analytical results. While others (Silvapulle and Sen 2005, pp. 78–81) have discussed similar methods, our results are unique in that they are both efficient and applicable even to non-convex spaces. Our method may be easily transformed into a numerical integration problem. We have also shown that replacing the information matrix with a consistent estimate is sometimes reasonable. With regard to variance component linkage analysis, we have shown that analytical formulas for the distribution function may be derived that can be used to obtain a first pass approximation to the statistical significance. However, we recommend that Method 2 above should generally be used and not the analytical formulas.

In this paper we have investigated a multivariate variance component likelihood ratio test that enforces constraints on the nuisance parameters. We do not recommend that this statistic be routinely used in practice for two reasons. First, when nuisance parameters are too near the boundary, convergence to the asymptotic distribution may be very slow. A similar observation has been made in the univariate case (Shugart, O'Connell et al. 2002). However, in all cases simulated, the statistic was conservative. The most natural approach to deal with this problem is to stop enforcing constraints on the nuisance parameters. This may lead to increased computational difficulties, but it would improve the performance of the methods we have suggested. Second, tests of variance components that make use of the normal likelihood are not necessarily robust to the multivariate normal assumption. This is a well known problem in the univariate case (Allison, Neale et al. 1999). Our simulations assumed that the multivariate normal variance component model was actually correct. In reality, we expect a mixture distribution and many other sources of non-normality. Fortunately, there are many ways to make the variance component framework robust to the normality assumption (Chen, Broman et al. 2005). In future work we plan to address both these concerns by developing a robust score test that does not enforce constraints on the nuisance parameters. The asymptotic approach developed in this paper may also be used with these robust score tests.

Acknowledgments

Research was supported in part by U.S. Public Health Service NHLBI grant HL0756, NIGMS grant GM2835, NCI grant P30CAD43703 and NCRR grants KL2RR024990 and P51RR036551. This work made use of the High Performance Computing Resource in the Core Facility for Advanced Research Computing at Case Western Reserve University.

Appendix A

Explanation of Equation (7):

Notice first that:

| (19) |

because distance measures are greater than 0. Also:

| (20) |

because 0 ∈ C̃ (Ω0, θ0). Putting (19) and (20) together we obtain:

| (21) |

Furthermore, it is clear that , under the very general assumption that C̃ (Ω0, θ0) ⊂ C̃ (Ω1, θ0). As a result,

| (22) |

Now notice that 1 – Fd (S/x) is an increasing function because it is a decreasing function composed with a decreasing function. Note also that 1 – Fd (S/0) = 0 by definition and 1 – Fd (S/1) = 1 – Fd (S). Therefore, from (22) and the fact that 1 – Fd (S/x) is increasing in x:

| (23) |

We note that the variance of each simulated distribution is at its maximum when all its probability is concentrated at either end of the limits described above. That is, its variance is as large as possible when it is distributed as a point mass at 0 and a point mass at pU. We let ζ be the amount of probability concentrated at pU. Then E [p̂n] = p = ζ pU. So ζ = p / pU, and

| (24) |
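As a check on the argument above, the variance of the two-point distribution can be worked out directly. Here X is a symbol introduced only for illustration: a single simulated value that equals pU with probability ζ and 0 otherwise. The calculation is a sketch of the arithmetic and need not match the exact form of (24).

```latex
\begin{aligned}
\mathrm{E}[X]   &= \zeta\, p_U = p, \qquad \zeta = p / p_U, \\
\mathrm{E}[X^2] &= \zeta\, p_U^2 = p\, p_U, \\
\mathrm{Var}(X) &= \mathrm{E}[X^2] - \mathrm{E}[X]^2 = p\,(p_U - p).
\end{aligned}
```

For comparison, an indicator that equals 1 with probability p has variance p(1 – p), which is larger whenever pU < 1.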

Explanation of Equation (9):

Consider the cone C̃ (Ω1, θ0) that has vertex at 0. Because it is nontrivial, there exists some non-zero vector e ∈ C̃ (Ω1, θ0). We define the cone Ω2 = {ae | a > 0}. Define . Clearly Ω2 ⊆ C̃ (Ω1, θ0) and therefore . Thus

| (25) |

But, because 1 – Fd (S/x) is increasing in x, 1 – Fd (S/g̃*(V)) ≤ 1 – Fd (S/g̃(V)). Thus

| (26) |

However, the test statistic for H0 : θ = 0 versus Ha : θ ∈ Ω2 is asymptotically distributed as , and therefore . Combining this with (26) we get that and thus We then have

| (27) |

Equation (9) then follows by combining (27) with (8).

(28)

Proof of Lemma 1:

Define and let Q1 (ψ) = (Z1 – ψ)T M (Z1 – ψ), where . It is easily verified that (Z – θ)T f (Z – θ) = Q1 (ψ) + Q2 (θ). Thus we see that

| (29) |

where Ci = C (Ωi, θ0). Under condition (a), Q2 (θ) is only a function of λ, so . Under condition (b) . Thus under either condition (a) or (b) h (Z1) = g(Z). It is a well known property of the Schur complement (M) of the block fΨΨ of f that M−1 is the first block entry of f̂−1. Thus the distribution of the first q entries of f−1/2Z̃ + θ0, where Z̃ is a vector of independent standard normal random variables, is identical in distribution to that of M−1/2Ỹ + b where Ỹ is a vector of independent standard normal random variables. Therefore

| (30) |

Proof of Theorem 2:

First we prove that is a continuous function of f̂n. Note that M̂n is a continuous function of f̂n because the matrix inverse is a continuous mapping (Lange 2004, p. 31). Also, suppose is the Cholesky decomposition, which is also a continuous mapping of M̂n (Schatzman and Taylor 2002, p. 295). It can be easily shown that is a continuous function of x and A. So

| (31) |

is a continuous function of f̂n.

Let β (B, l) = PỸ [h̃n (Ỹ) ≥ LRn|f̂n = B, LRn = l]. By inspection of equation (11), we have β (B, l) = ∫φ[1 – Fp (l/h (M(B)−1/2Ỹ + b))] Jdφ/Sp. Let (Bi, li) → (B, l) be a convergent sequence with l > 0 and B > 0. Note that 0 ≤ Fp (x) ≤ 1, so, by the dominated convergence theorem, β (Bi, li) → β (B, l) and β is thus continuous for l > 0 and B > 0. A discontinuity at l = 0 occurs because by our definition Fp (S / 0) = 1 for S > 0 and Fp (0 / 0) = 0. To fix this discontinuity define:

| (32) |

But γ (B, l) is fairly clearly a continuous function for B > 0 and l ∈ ℝ. Also and . From Slutsky’s Theorem, . Theorem 2 follows from the definition of convergence in distribution.

Appendix B

Discussion of Lemma 2:

For sufficiently large η and sufficiently small ε the true parameter will be an interior point of Γ(ε, η) and hence this constraint will asymptotically disappear. For i ∈ {0,1}, Ωi ∩ Γ (ε, η) is a compact space with bounded derivatives for the likelihood up to the 3rd derivative. As mentioned earlier, our problem fits into the independent identically distributed framework if we consider pedigree structure and IBD values to be random. Geyer (1994) gives four regularity conditions (excluding the Chernoff regularity of the cone, which is clearly satisfied in our case) for establishing Theorem 1. See Theorem 4.4 and the remark at the end of section 4 in Geyer (1994). The first regularity condition is the existence of a local quadratic approximation of the likelihood. This appears to be obviously satisfied in our case. The second condition is termed stochastic equicontinuity. This condition is more difficult to verify, but it appears that an application of the Equicontinuity Lemma on p. 150 of Pollard (1984) could be used. The third regularity condition is the existence of a central limit theorem for the first derivative, and this again seems to be fairly obviously satisfied. The final condition is the use of a consistent root-K minimizer. Consistency may be established, for example, by Theorem 17 of Ferguson (1996), and the root-K minimizer is guaranteed by how we have defined the likelihood ratio.

Let vecAt×t = [a11 … a1t a21 … att] and vechAt×t = [a11 … a1t a22 … att] as defined, for example, in Henderson and Searle (1979). There exist matrices G and H such that vecA = GvechA and vechA = HvecA, again defined, for example, in Henderson and Searle (1979).
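The relationship between vec and vech can be made concrete with a small sketch. The code below builds G for the row-wise orderings written above and takes H to be (GᵀG)⁻¹Gᵀ, which averages each symmetric pair of entries. That particular choice of H is an assumption made here for illustration (it satisfies HG = I), and all function names are hypothetical.

```python
def vech_positions(t):
    """Positions (i, j) with i <= j, ordered as [a11 ... a1t a22 ... att]."""
    return [(i, j) for i in range(t) for j in range(i, t)]

def build_G(t):
    """G with vec(A) = G vech(A) for symmetric A, where vec stacks rows."""
    pairs = vech_positions(t)
    G = [[0.0] * len(pairs) for _ in range(t * t)]
    for col, (i, j) in enumerate(pairs):
        G[i * t + j][col] = 1.0
        G[j * t + i][col] = 1.0
    return G

def build_H(t):
    """H = (G^T G)^{-1} G^T. The columns of G are orthogonal, so this is
    G^T with each row divided by the number of ones in that column of G
    (1 for a diagonal entry, 2 for an off-diagonal entry)."""
    G = build_G(t)
    n_rows, n_cols = len(G), len(G[0])
    H = []
    for c in range(n_cols):
        count = sum(G[r][c] for r in range(n_rows))
        H.append([G[r][c] / count for r in range(n_rows)])
    return H

def matmul(A, B):
    """Plain matrix product of two lists of lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def transpose(A):
    return [list(row) for row in zip(*A)]
```

With this H, the product HHᵀ is diagonal, with 1 in the positions of the diagonal elements and ½ in the positions of the off-diagonal elements.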

Lemma 3: If A = 0 and P = γE for some positive scalar γ, then M = κ [GT (E−1 ⊗ E−1)G], where M is the Schur complement of the block fΨΨ of f and κ is some constant dependent upon the population.

Proof of Lemma 3:

Let τ represent a random pedigree structure sampled from a population. The covariance structure for a given τ is Cτ = Φτ ⊗ P + Iτ ⊗ E = (γΦτ + Iτ) ⊗ E. Let Δτ = Πτ – Φτ and , where ei is the unit vector of length t in the ith direction. Then:

| (33) |

where κ = EτEΠτ tr [(γΦτ + Iτ)−1Δτ (γΦτ + Iτ)−1Δτ]. Line 4 above follows from line 9 of Henderson and Searle (1979). Applying the same algebraic steps and using the fact that EΠτΔτ = 0, we obtain fai1i2pj1j2 = 0 and fai1i2ej1j2 = 0. Note that G = [vecB11 … vecB1t vecB22 … vecBtt]. Lemma 3 follows immediately.

Lemma 4: If M can be factored as [GT (T−1 ⊗ T−1) G] and P and E are not on the boundary of the parameter space, then the asymptotic null distribution of the variance component CLRT statistic (λ) for H0 : A = 0 vs. Ha : A ≥ 0 is equivalent to the distribution of Here bi represents the eigenvalues of a symmetric random matrix Z̃t×t, and the elements Z̃ are independent with distribution N (0,1) on the diagonals and N (0, ½) on the off diagonals.

Proof of Lemma 4:

Let X and θ be t × t symmetric matrices with Z = vechX distributed as a MVN random variable with mean 0 and variance M−1. Then

| (34) |

Let X̃ = T−1/2XT−1/2. Note that M−1 = H(T ⊗ T)HT. This may be easily verified using line (24) and (20) of Henderson and Searle (1979). Note also that vechX̃ = H (T−1/2 ⊗ T−1/2) GvechX. Hence vechX̃ is distributed as MVN with mean 0 and variance

| (35) |

But HHT is a diagonal matrix with 1 corresponding to the diagonal elements of X̃ and ½ for the off diagonal elements. Define S̃ = {θ̃:θ̃ = T−1/2θT−1/2 for some θ ≥ 0} = {θ̃:θ̃ ≥ 0}. From Theorems 1 and 2, λ has the same asymptotic distribution as:

| (36) |

Lemma 4 then follows from Theorem 8 in Chapter 2 of Demidenko (2005).

Note that Lemmas 3 and 4 show the asymptotic distribution to be identical to the traditional multivariate variance component model in one special case.

Lemma 5: If M can be factored as [GT (T−1 ⊗ T−1)G], where T > 0, then the asymptotic null distribution of the CLRT statistic (λ) for a variance component linkage model, testing H0 : A = 0 vs. Ha : A ≥ 0, is equivalent to the distribution of: λ* = [max (b1, 0)]2. Here b1 ≥ … ≥ bt represent the eigenvalues of a symmetric random matrix Z̃t×t, and the elements of Z̃ are independent with distribution N (0,1) on the diagonals and N (0, ½) on the off-diagonals.
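The limiting variable in Lemma 5 is easy to simulate when t = 2, because the largest eigenvalue of a symmetric 2 × 2 matrix has a closed form. The sketch below takes the statement of the lemma at face value; the function names are illustrative, and the code is not the procedure used to derive Theorem 4.

```python
import math
import random

def largest_eigenvalue_2x2(a, b, c):
    """Largest eigenvalue of the symmetric matrix [[a, b], [b, c]]."""
    return 0.5 * (a + c) + math.sqrt((0.5 * (a - c)) ** 2 + b * b)

def draw_lambda_star(rng):
    """One draw of [max(b1, 0)]^2 with N(0, 1) diagonal entries and an
    N(0, 1/2) off-diagonal entry (standard deviation sqrt(1/2))."""
    a = rng.gauss(0.0, 1.0)
    c = rng.gauss(0.0, 1.0)
    b = rng.gauss(0.0, math.sqrt(0.5))
    return max(largest_eigenvalue_2x2(a, b, c), 0.0) ** 2

def tail_probability(threshold, n, rng):
    """Monte Carlo estimate of P(lambda* >= threshold) for threshold > 0."""
    return sum(draw_lambda_star(rng) >= threshold for _ in range(n)) / n

def zero_fraction(n, rng):
    """Fraction of draws with lambda* = 0, i.e. with both eigenvalues <= 0."""
    return sum(draw_lambda_star(rng) == 0.0 for _ in range(n)) / n
```

One property that can be checked by hand: for t = 2 the matrix has no positive eigenvalue with probability (2 – √2)/4 ≈ 0.146, by a rotational-symmetry argument, so the simulated variable should have a point mass of about that size at 0.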

Proof of Lemma 5:

Define S̃ = {T−1/2φφTT−1/2 | φ ∈ ℝt} = {φφT | φ ∈ ℝt}. Following the same steps as in Lemma 3, we have:

Lemma 5 follows from this.

Sketch of Proof for Theorem 4:

By using Lemmas 3 and 5 we may conclude that the asymptotic distribution is a function of the maximum eigenvalue of a random matrix. The eigenvalue distribution has been given in numerous places, such as Amemiya, Anderson et al. (1990). The distribution function for t = 2 is: . It is a fairly straightforward application of calculus to derive the distribution in Theorem 4 using a symbolic computational system such as Mathematica.

References

- Allison DB, Neale MC, et al. “Testing the Robustness of the Likelihood-Ratio Test in a Variance-Component Quantitative-Trait Loci–Mapping Procedure.” The American Journal of Human Genetics. 1999;65(2):531–544. doi: 10.1086/302487.

- Amemiya Y, Anderson TW, et al. “Percentage points for a test of rank in multivariate components of variance.” Biometrika. 1990;77:637–641. doi: 10.1093/biomet/77.3.637.

- Amos C, de Andrade M, et al. “Comparison of multivariate tests for genetic linkage.” Hum Hered. 2001;51(3):133–44. doi: 10.1159/000053334.

- Blumenson LE. “A Derivation of n-Dimensional Spherical Coordinates.” The American Mathematical Monthly. 1960;67(1):63–66. doi: 10.2307/2308932.

- Chen WM, Broman KW, et al. “Power and robustness of linkage tests for quantitative traits in general pedigrees.” Genetic Epidemiology. 2005;28(1):11–23. doi: 10.1002/gepi.20034.

- Chernoff H. “On the Distribution of the Likelihood Ratio.” The Annals of Mathematical Statistics. 1954;25(3):573–578. doi: 10.1214/aoms/1177728725.

- Delmas C, Foulley JL. “On testing a class of restricted hypotheses.” Journal of Statistical Planning and Inference. 2007;137(4):1343–1361. doi: 10.1016/j.jspi.2006.04.006.

- Demidenko E. Mixed Models: Theory and Applications. Wiley-Interscience; 2005.

- Evans DM. “The power of multivariate quantitative-trait loci linkage analysis is influenced by the correlation between variables.” American Journal of Human Genetics. 2002;70(6):1599–1602. doi: 10.1086/340850.

- Evans DM, Zhu G, et al. “Multivariate QTL linkage analysis suggests a QTL for platelet count on chromosome 19q.” European Journal of Human Genetics. 2004;12:835–842. doi: 10.1038/sj.ejhg.5201248.

- Ferguson TS. A Course in Large Sample Theory. Chapman & Hall/CRC; 1996.

- Geyer CJ. “On the Asymptotics of Constrained M-Estimation.” The Annals of Statistics. 1994;22(4):1993–2010. doi: 10.1214/aos/1176325768.

- Ginsburg E, Elston RC, et al. Theoretical aspects of pedigree analysis. Ramottel Aviv University; 2006.

- Han SS, Chang JT. “Reconsidering the null distribution of likelihood ratio tests for genetic linkage in multivariate variance components models.” 2008. eprint arXiv:0808.2000.

- Haseman JK, Elston RC. “The investigation of linkage between a quantitative trait and a marker locus.” Behavior Genetics. 1972;2(1):3–19. doi: 10.1007/BF01066731.

- Henderson HV, Searle SR. “Vec and vech operators for matrices, with some uses in Jacobians and multivariate statistics.” Canadian Journal of Statistics. 1979;7:65–81. doi: 10.2307/3315017.

- Kuriki S. “One-sided test for the equality of two covariance matrices.” Annals of Statistics. 1993;21:1379–1379. doi: 10.1214/aos/1176349263.

- Lange K. Optimization. New York: Springer; 2004.

- Lynch M, Walsh B. Genetics and Analysis of Quantitative Traits. Sunderland, MA: Sinauer Associates; 1998.

- Marlow AJ, Fisher SE, et al. “Use of Multivariate Linkage Analysis for Dissection of a Complex Cognitive Trait.” The American Journal of Human Genetics. 2003;72(3):561–570. doi: 10.1086/368201.

- Pollard D. Convergence of stochastic processes. Springer; 1984.

- Schatzman M, Taylor J. Numerical Analysis: A Mathematical Introduction. New York: Oxford University Press; 2002.

- Self SG, Liang KY. “Asymptotic Properties of Maximum Likelihood Estimators and Likelihood Ratio Tests Under Nonstandard Conditions.” Journal of the American Statistical Association. 1987;82(398):605–610. doi: 10.2307/2289471.

- Shaw FH, Geyer CJ. “Estimation and testing in constrained covariance component models.” Biometrika. 1997;84(1):95–102. doi: 10.1093/biomet/84.1.95.

- Shugart YY, O'Connell JR, et al. “An evaluation of the variance components approach: type I error, power and size of the estimated effect.” European Journal of Human Genetics. 2002;10(2):133. doi: 10.1038/sj.ejhg.5200772.

- Silvapulle M, Sen P. Constrained Statistical Inference: Inequality, Order, and Shape Restrictions. Hoboken, New Jersey: Wiley-Interscience; 2005.

- Stram DO, Lee JW. “Variance Components Testing in the Longitudinal Mixed Effects Model.” Biometrics. 1994;50(4):1171–1177. doi: 10.2307/2533455.

- Stram DO, Lee JW. “Correction to: variance components testing in the longitudinal mixed effects model.” Biometrics. 1995;51(1):196.

- Takemura A, Kuriki S. “Weights of Chi-Bar Squared Distribution for Smooth or Piecewise Smooth Cone Alternatives.” The Annals of Statistics. 1997;25(6):2368–2387.

- Todorov AA, Vogler GP, et al. “Testing causal hypotheses in multivariate linkage analysis of quantitative traits: general formulation and application to sibpair data.” Genet Epidemiol. 1998;15(3):263–78. doi: 10.1002/(SICI)1098-2272(1998)15:3<263::AID-GEPI5>3.0.CO;2-5.

- Verbeke G, Molenberghs G. “The Use of Score Tests for Inference on Variance Components.” Biometrics. 2003;59(2):254–262. doi: 10.1111/1541-0420.00032.

- Vu HTV, Zhou S. “Generalization of Likelihood Ratio Tests under Nonstandard Conditions.” The Annals of Statistics. 1997;25(2):897–916. doi: 10.1214/aos/1031833677.

- Wang K. “Mapping Quantitative Trait Loci Using Multiple Phenotypes in General Pedigrees.” Hum Hered. 2003;55(1):1–15. doi: 10.1159/000071805.

- Wolak FA. “The local nature of hypothesis tests involving inequality constraints in nonlinear models.” Econometrica. 1991;59(4):981–995. doi: 10.2307/2938170.