Abstract

In recent work, several authors have introduced methods for sparse canonical correlation analysis (sparse CCA). Suppose that two sets of measurements are available on the same set of observations. Sparse CCA is a method for identifying sparse linear combinations of the two sets of variables that are highly correlated with each other. It has been shown to be useful in the analysis of high-dimensional genomic data, when two sets of assays are available on the same set of samples. In this paper, we propose two extensions to the sparse CCA methodology. (1) Sparse CCA is an unsupervised method; that is, it does not make use of outcome measurements that may be available for each observation (e.g., survival time or cancer subtype). We propose an extension to sparse CCA, which we call sparse supervised CCA, which results in the identification of linear combinations of the two sets of variables that are correlated with each other and associated with the outcome. (2) It is becoming increasingly common for researchers to collect data on more than two assays on the same set of samples; for instance, SNP, gene expression, and DNA copy number measurements may all be available. We develop sparse multiple CCA in order to extend the sparse CCA methodology to the case of more than two data sets. We demonstrate these new methods on simulated data and on a recently published and publicly available diffuse large B-cell lymphoma data set.

1. Introduction

Canonical correlation analysis (CCA), due to Hotelling (1936), is a classical method for determining the relationship between two sets of variables. Given two data sets X1 and X2 of dimensions n × p1 and n × p2 on the same set of n observations, CCA seeks linear combinations of the variables in X1 and the variables in X2 that are maximally correlated with each other. That is, w1 ∈ p1 and w2 ∈ p2 maximize the CCA criterion, given by

| (1) |

where we assume that the columns of X1 and X2 have been standardized to have mean zero and standard deviation one. In this paper, we will refer to w1 and w2 as the canonical vectors (or weights), and we will refer to X1w1 and X2w2 as the canonical variables.

In recent years, CCA has gained popularity as a method for the analysis of genomic data. It has become common for researchers to perform multiple assays on the same set of patient samples; for instance, DNA copy number (or comparative genomic hybridization, CGH), gene expression, and single nucleotide polymorphism (SNP) data might all be available. Examples of studies involving two or more genomic assays on the same set of samples include Hyman et al. (2002), Pollack et al. (2002), Morley et al. (2004), Stranger et al. (2005), and Stranger et al. (2007). In the case of, say, DNA copy number and gene expression measurements on a single set of patient samples, one might wish to perform CCA in order to identify genes whose expression is correlated with regions of genomic gain or loss. However, genomic data is characterized by the fact that the number of features generally greatly exceeds the number of observations; for this reason, CCA cannot be applied directly.

To circumvent this problem, Parkhomenko et al. (2007), Waaijenborg et al. (2008), Parkhomenko et al. (2009), Le Cao et al. (2009), and Witten et al. (2009) have proposed methods for penalized CCA. In this paper, we will restrict ourselves to the criterion proposed in Witten et al. (2009), which takes the form

| (2) |

where P1 and P2 are convex penalty functions. Since P1 and P2 are generally chosen to yield w1 and w2 sparse, we call this the sparse CCA criterion. This criterion follows from applying penalties to w1 and w2 and also from assuming that the covariance matrix of the features is diagonal; that is, we replace and in the CCA criterion with and . The sparse CCA criterion results in w1 and w2 unique, even when p1, p2 ≫ n, for appropriate choices of P1 and P2.

It has been shown that sparse CCA can be used to identify genes that have expression that is correlated with regions of DNA copy number change (Waaijenborg et al. 2008, Witten et al. 2009), to identify genes that have expression that is correlated with SNPs (Parkhomenko et al. 2009), and to identify sets of genes on two different microarray platforms that have correlated expression (Le Cao et al. 2009). However, some questions remain:

Sometimes, in addition to data matrices X1 ∈ n×p1 and X2 ∈ n×p2, a vector of outcome measurements in n is also available. For instance, a survival time might be known for each patient. CCA and sparse CCA are unsupervised methods; that is, they do not make use of an outcome. However, if outcome measurements are available, then one might seek sets of variables in the two data sets that are correlated with each other and associated with the outcome.

More than two sets of variables on the same set of observations might be available. For instance, it is becoming increasingly common for researchers to collect gene expression, SNP, and DNA copy number measurements on the same set of patient samples. In this case, an extension of sparse CCA to the case of more than two data sets is required.

In this paper, we develop extensions to sparse CCA that address these situations and others.

The rest of this paper is organized as follows. Section 2 contains methods for sparse CCA when the data consist of matrices X1 and X2. In Section 2.1, we present details of the sparse CCA method from Witten et al. (2009), and in Section 2.2, we explain the connections between that method and those of Waaijenborg et al. (2008), Le Cao et al. (2009), and Parkhomenko et al. (2009). The remainder of Section 2 contains some extensions of sparse CCA for two sets of features on a single set of observations. Section 3 contains an explanation of sparse multiple CCA, an extension of sparse CCA to the case of K data sets X1, ..., XK with features on a single set of samples. In Section 4, we present sparse supervised CCA, a method for performing sparse CCA when the data consist of matrices X1, X2, and y, a vector containing an outcome measurement for each sample. Section 5 contains the discussion. Throughout the paper, methods are applied to the diffuse large B-cell lymphoma (DLBCL) data set of Lenz et al. (2008), which consists of 17350 gene expression measurements and 386165 DNA copy number measurements for 203 patients. For each patient, two clinical outcomes are available: a possibly censored survival time, as well as the subtype of DLBCL to which that patient’s disease belongs.

2. Sparse CCA

2.1. The sparse CCA method

The sparse CCA criterion was given in Equation (2) for general penalty functions P1 and P2. We will be interested in two specific forms of these penalty functions:

P1 is an L1 (or lasso) penalty; that is, P1(w1) = ||w1||1. This penalty will result in w1 sparse for c1 chosen appropriately. We assume that .

P1 is a fused lasso penalty (see e.g. Tibshirani et al. 2005), of the form P1(w1) = ∑j |w1j | + ∑j |w1j – w1(j–1)|. This penalty will result in w1 sparse and smooth, and is intended for cases in which the features in X1 have a natural ordering along which smoothness is expected.

In order to indicate the form of the penalties P1 and P2 in use, we will refer to the method as sparse CCA(P1, P2). That is, if both penalties are L1, then we will call this sparse CCA(L1, L1), and if P1 is an L1 penalty and P2 a fused lasso penalty, then we will call it sparse CCA(L1, FL) (where “FL” indicates fused lasso). Note that when P1 and P2 are L1 or fused lasso penalties, the resulting canonical vectors are unique, even when p1, p2 ≫ n. Witten et al. (2009) propose the use of sparse CCA(L1, FL) in the case where X1 corresponds to gene expression measurements and X2 corresponds to copy number measurements (ordered by position along the chromosomes); this is related to the proposal of Tibshirani & Wang (2008) for estimating copy number for a single CGH sample.

Now, consider the criterion (2) with P1 and P2 convex penalty functions. With w1 fixed, the criterion is convex in w2, and with w2 fixed, it is convex in w1. The objective function of this biconvex criterion increases in each step of a simple iterative algorithm.

Algorithm for sparse CCA:

Initialize w2 to have L2 norm 1.

- Iterate the following two steps until convergence:

- w1 ← arg maxw1 subject to ||w1||2 ≤ 1, P1(w1) ≤ c1.

- w2 ← arg maxw2 subject to ||w2||2 ≤ 1, P2(w2) ≤ c2.

If P1 is an L1 penalty, then the update has the form

| (3) |

where Δ1 = 0 if this results in ||w1||1 ≤ c1; otherwise, Δ1 > 0 is chosen so that ||w1||1 = c1. Here, S(·) denotes the soft-thresholding operator; that is, S(a, c) = sgn(a)(|a| – c)+. Soft-thresholding arises in the update due to the L1 penalty and the assumption that the covariance matrices are independent. Δ1 can be chosen by a binary search. If P1 is instead a fused lasso penalty, then a slightly modified version of the sparse CCA criterion yields the update step

| (4) |

which can be computed using software implementing fused lasso regression. w2 can be updated analogously.

Methods for selecting tuning parameter values and assessing significance of the resulting canonical vectors are presented in Appendix A. The above algorithm is easily extended to obtain multiple canonical vectors, as described in Witten et al. (2009) and summarized in Appendix B. However, to simplify interpretation of the examples presented in this paper, we will only consider the first canonical vectors w1 and w2, as given in the criterion (2).

2.2. Connections with other sparse CCA proposals

This paper extends the sparse CCA proposal of Witten et al. (2009). As mentioned earlier, the Witten et al. (2009) method is closely related to a number of other methods for sparse CCA. We briefly review those methods here.

Waaijenborg et al. (2008) first recast classical CCA as an iterative regression procedure; then an elastic net penalty is applied in order to obtain penalized canonical vectors. An approximation of the iterative elastic net procedure results in an algorithm that is similar to that of Witten et al. (2009) in the case of L1 penalties on w1 and w2. However, Waaijenborg et al. (2008) do not appear to be exactly optimizing a criterion.

Parkhomenko et al. (2009) develop an iterative algorithm for estimating the singular vectors of . At each step, they regularize the estimates of the singular vectors by soft-thresholding. Though they do not explicity state a criterion, it appears that they are approximately optimizing a criterion that is related to (2) with L1 penalties. However, they use the Lagrange form, rather than the bound form, of the constraints on w1 and w2. Their algorithm is closely related to that of Witten et al. (2009), though extra normalization steps are required due to computational problems with the Lagrange form of the constraints. The algorithm of Le Cao et al. (2009) is also closely related to those of Parkhomenko et al. (2009) and Witten et al. (2009), though again Le Cao et al. (2009) use the Lagrange form, rather than the bound form, of the penalties.

Hence, the Waaijenborg et al. (2008), Parkhomenko et al. (2009), Le Cao et al. (2009) and Witten et al. (2009) methods are all closely related; we pursue the criterion (2) in this paper.

2.3. Sparse CCA with nonnegative weights

The sparse CCA method will result in canonical vectors w1 and w2 that are sparse, if the penalties P1 and P2 are chosen appropriately. However, the nonzero elements of w1 and w2 may be of different signs. In some cases, one might seek a sparse weighted average of the features in X1 that is correlated with a sparse weighted average of the features in X2. Then one will want to additionally restrict the elements of w1 and w2 to be nonnegative (or nonpositive). If we require the elements of w1 and w2 to be nonnegative, the sparse CCA criterion becomes

| (5) |

and the resulting algorithm is as follows:

Algorithm for sparse CCA with nonnegative weights:

Initialize w2 to have L2 norm 1.

- Iterate the following two steps until convergence:

- w1 ← arg maxw1 subject to ||w1||2 ≤ 1, w1j ≥ 0, P1(w1) ≤ c1.

- w2 ← arg maxw2 subject to ||w2||2 ≤ 1, w2j ≥ 0, P2(w2) ≤ c2.

Consider the criterion (5) with w1 fixed; we can write the optimization problem for w2 with as

| (6) |

Assume that P2 is an L1 penalty. It is obvious that if aj ≤ 0, then w2j = 0. For j such that aj > 0, w2j can be found by solving the optimization problem

| (7) |

This can be solved using the following update for w2:

| (8) |

where Δ2 = 0 if this results in ||w2||1 ≤ c2; otherwise, Δ2 > 0 is chosen so that ||w2||1 = c2. An analogous update step can be derived for w1 if P1 is an L1 penalty.

2.4. Application of sparse CCA to the DLBCL data

We demonstrate the sparse CCA method on the lymphoma data set of Lenz et al. (2008), which consists of gene expression and array CGH measurements on 203 patients with DLBCL. The data set is publicly available at http://www.ncbi.nlm.nih.gov/geo/query/acc.cgi?acc=GSE11318. There are 17350 gene expression measurements and 386165 copy number measurements. (In the raw data set, more gene expression measurements are available. However, we limited the analysis to genes for which we knew the chromosomal location, and we averaged expression measurements for genes for which multiple measurements were available.) For computational reasons, sets of adjacent CGH spots on each chromosome were averaged before all analyses were performed. In previous research, gene expression profiling has been used to define three subtypes of DLBCL, called germinal center B-cell-like (GCB), activated B-cell-like (ABC), and primary mediastinal B-cell lymphoma (PMBL) (Alizadeh et al. 2000, Rosenwald et al. 2002). For each of the 203 observations, survival time and DLBCL subtype are known.

For chromosome i, we performed sparse CCA(L1, FL) using X1 equal to expression data of genes on all chromosomes and X2 equal to DNA copy number data on chromosome i. Tuning parameter values were chosen by permutations; details are given in Appendix A. P-values obtained using the method in Appendix A, as well as the chromosomes on which the genes corresponding to nonzero w1 weights are located, can be found in Table 1. Canonical vectors found on almost all chromosomes were significant, and for the most part, cis interactions were found. Cis interactions are those for which the regions of DNA copy number change and the sets of genes with correlated expression are located on the same chromosome. The presence of cis interactions is not surprising because copy number gain on a given chromosome could naturally result in increased expression of the genes that were gained.

Table 1:

Column 1: The number indicates the chromosome to which the CGH data corresponds. Sparse CCA was performed using all gene expression measurements, and CGH data from chromosome i only. Column 2: In almost every case, the canonical vectors found were highly significant. P-values were obtained using the permutation approach given in Appendix A. Column 3: For the most part, CGH measurements on chromosome i were found to be correlated with the expression of sets of genes on chromosome i. That is, the canonical vectors found for the expression data had non-zero elements only for genes on chromosome i. Columns 4 and 5: P-values are reported for the Cox proportional hazards and multinomial logistic regression models that use the canonical variables to predict survival and cancer subtype.

| Chr. | P-Value | Chr. of Genes w/Nonzero Weights | P-Value w/Surv. | P-Value w/Subtype |

|---|---|---|---|---|

| 1 | 0 | 1 1 1 | 0.346978 | 0.020115 |

| 2 | 0 | 2 2 | 0.139221 | 0.000336 |

| 3 | 0 | 3 3 3 | 0.000395 | 0 |

| 4 | 0 | 4 4 | 0.473788 | 0.127702 |

| 5 | 0 | 5 5 5 5 5 | 0.607717 | 0.015423 |

| 6 | 0 | 6 6 6 | 0.421753 | 6e-05 |

| 7 | 0 | 7 7 | 0.530759 | 0 |

| 8 | 0 | 8 8 | 0.698352 | 0.000375 |

| 9 | 0 | 9 9 9 9 9 9 9 9 | 0.435872 | 0 |

| 10 | 0 | 10 10 10 10 10 | 0.123747 | 7e-06 |

| 11 | 0 | 11 11 11 | 0.344889 | 0.000265 |

| 12 | 0 | 12 12 | 0.436956 | 0.000557 |

| 14 | 0.02 | 1 14 | 0.006953 | 0 |

| 15 | 0 | 15 15 15 15 15 15 15 15 | 0.000552 | 3.5e-05 |

| 16 | 0 | 16 16 | 0.298069 | 0.029809 |

| 17 | 0 | 17 17 17 17 17 17 17 | 0.047078 | 0.775681 |

| 18 | 0 | 18 18 | 0.00619 | 5e-06 |

| 19 | 0 | 19 19 10 | 0.046235 | 0 |

| 20 | 0 | 20 20 20 2 3 20 20 20 | 0.922793 | 0.004198 |

| 21 | 0 | 21 21 | 0.309212 | 0.012914 |

| 22 | 0.06 | 22 1 1 | 0.441799 | 0.000272 |

We used the CGH and expression canonical variables as features in a multivariate Cox proportional hazards model to predict survival. Note that X1w1 and X2w2 are vectors in n. We also used the canonical variables as features in a multinomial logistic regression to predict cancer subtype. The resulting p-values are shown in Table 1. The Cox proportional hazards models predicting survival from the canonical variables were not significant on most chromosomes. However, on many chromosomes, the canonical variables were highly predictive of DLBCL subtype. Boxplots showing the canonical variables as a function of DLBCL subtype are displayed in Figure 1 for chromosomes 6 and 9. For chromosome 9, Figure 2 shows w2, the canonical vector corresponding to copy number, as well as the raw copy number for the samples with largest and smallest (absolute) value in the canonical variable for the CGH data. It is not surprising that there are many significant p-values for the prediction of cancer subtype in Table 1, since the subtypes are defined using gene expression, and it was found in Lenz et al. (2008) that the subtypes are characterized by regions of copy number change.

Figure 1:

Sparse CCA was performed using CGH data on a single chromosome and all gene expression measurements. For chromosomes 6 and 9, the gene expression and CGH canonical variables, stratified by cancer subtype, are shown. It is clear that the values of the canonical variables differ by subtype. Pvalues reported are replicated from Table 1; they reflect the extent to which the canonical variables predict cancer subtype in a multinomial logistic regression model.

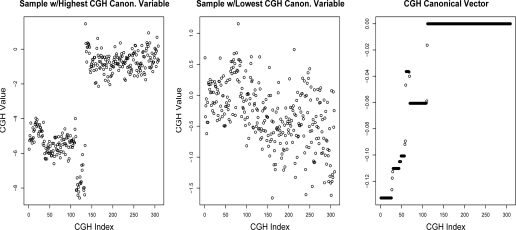

Figure 2:

Sparse CCA was performed using CGH data on chromosome 9, and all gene expression measurements. The samples with the highest and lowest absolute values in the CGH canonical variable are shown, along with the canonical vector corresponding to the CGH data. As expected, the sample with the highest CGH canonical variable is highly correlated with the CGH canonical vector, and the sample with the lowest CGH canonical variable shows little correlation. The sample with highest CGH canonical variable is of subtype PMBL, and the sample with lowest canonical variable is of subtype ABC. The CGH data on chromosome 9 consists of 309 features, of which 111 have non-zero weights in the right-hand panel.

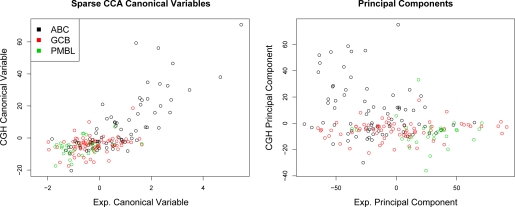

We can also compare the sparse CCA canonical variables obtained on the DLBCL data to the first principal components that arise if principal components analysis (PCA) is performed separately on the expression data and on the copy number data. PCA and sparse CCA were performed using all of the gene expression data, and the CGH data on chromosome 3; Figure 3 shows the resulting canonical variables and principal components. Sparse CCA results in CGH and expression canonical variables that are highly correlated with each other, due to the form of the sparse CCA criterion. PCA results in principal components that are far less correlated with each other, and, as a result, may yield better separation between the three subtypes. But PCA does not allow for an integrated interpretation of the expression and CGH data together.

Figure 3:

Sparse CCA and PCA were performed using CGH data on chromosome 3, and all gene expression measurements. The resulting canonical variables and principal components are shown. The CGH and expression canonical variables are highly correlated with each other. Both sparse CCA and PCA result in some separation between the three DLBCL subtypes, although PCA results in better separation because the first principal components of the CGH and expression data are less correlated with each other.

In this section, we assessed the association between the canonical variables found using sparse CCA and the clinical outcomes in order to determine if the results of sparse CCA have biological significance. However, in general, if a clinical outcome of interest is available, then the sparse sCCA approach of Section 4 may be appropriate.

2.5. Connection with nearest shrunken centroids

Consider now a new setting in which we have n observations on p features, and each observation belongs to one of two classes. Let X1 denote the n × p matrix of observations by features, and let X2 be a binary n × 1 matrix indicating class membership of each observation of X1. In this section, we will show that sparse CCA applied to X1 and X2 yields a canonical vector w1 that is closely related to the nearest shrunken centroids solution (NSC, Tibshirani et al. 2002, Tibshirani et al. 2003).

Assume that each column of X1 has been standardized to have mean zero and pooled within-class standard deviation equal to one. NSC is a high-dimensional classification method that involves defining “shrunken” class centroids based on only a subset of the features; each test set observation is then classified to the nearest shrunken centroid. We first explain the NSC method, applied to data X1. For 1 ≤ k ≤ 2, we define vectors dk, d′k, X̄′1k ∈ p as follows:

| (9) |

Here, X̄1k is the mean vector of the features in X1 over the observations in class k, and where nk is the number of observations in class k. X̄′1k is the shrunken centroid for class k obtained using tuning parameter δ ≥ 0. As in Section 2.1, S is the soft-thresholding operator.

Now, consider the effect of applying sparse CCA with L1 penalties to data X1 and X2. Rescale X2 so that the class 1 values are and the class 2 values are . The sparse CCA criterion is

| (10) |

Since w2 ∈ 1, the constraints on its value result in w2 = 1. The criterion reduces to

| (11) |

which can be rewritten as

| (12) |

The solution to (12) is

| (13) |

where Δ = 0 if that results in ||w1||1 ≤ c1; otherwise, Δ > 0 is chosen so that ||w1||1 = c1. So sparse CCA yields a canonical vector that is proportional to the shrunken centroid X̄′11 when the tuning parameters for NSC and sparse CCA are chosen appropriately.

3. Sparse multiple CCA

3.1. The sparse multiple CCA method

CCA and sparse CCA can be used to perform an integrative analysis of two data sets with features on a single set of samples. But what if more than two such data sets are available? A number of approaches for generalizing CCA to more than two data sets have been proposed in the literature, and some of these extensions are summarized in Gifi (1990). We will focus on one of these proposals for multiple-set CCA.

Let the K data sets be denoted X1, ..., XK; data set k contains pk variables, and each variable has mean zero and standard deviation one as in previous sections. Then, the single-factor multiple-set CCA criterion involves finding w1, ..., wK that maximize

| (14) |

where wk ∈ pk. It is easy to see that when K = 2, then multiple-set CCA simplifies to ordinary CCA. We can develop a method for sparse multiple CCA by imposing sparsity constraints on this natural formulation for multiple-set CCA. In the spirit of our criterion for sparse CCA with two sets of variables (2), we assume that the features are independent within each data set: that is, for each k. Then, our criterion for sparse multiple CCA (sparse mCCA) is as follows:

| (15) |

where Pi are convex penalty functions. Then, wi is the canonical vector associated with Xi. If Pi is an L1 or fused lasso penalty and ci is chosen appropriately, then wi will be sparse.

It is not hard to see that just as (2) is biconvex in w1 and w2, (15) is multiconvex in w1, ..., wK. That is, with wj held fixed for all j ≠ i, (15) is convex in wi. This suggests an iterative algorithm that increases the objective function of (15) at each iteration.

Algorithm for sparse mCCA:

For each i, fix an initial value of wi ∈ pk.

- Repeat until convergence: For each i, let

(16)

For instance, if Pi is an L1 penalty, then the update takes the form

| (17) |

where Δi = 0 if this results in ||wi||1 ≤ ci; otherwise, Δi > 0 is chosen such that ||wi||1 = ci.

We demonstrate the performance of sparse mCCA on a simple simulated example. Data were generated according to the model

| (18) |

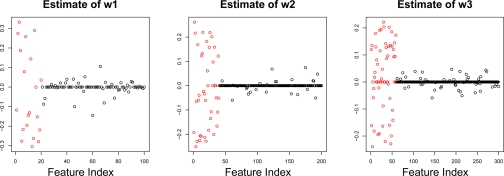

where u ∈ 50, w1 ∈ 100, w2 ∈ 200, w3 ∈ 300. Only the first 20, 40, and 60 elements of w1, w2, and w3 were nonzero, respectively. Sparse mCCA was run on this data, and the resulting estimates of w1, w2, and w3 are shown in Figure 4.

Figure 4:

Three data sets X1, X2, and X3 are generated under a simple model, and sparse mCCA is performed. The resulting estimates of w1, w2, and w3 are fairly accurate at distinguishing between the elements of wi that are truly nonzero (red) and those that are not (black). From left to right, the three canonical vectors shown have 57, 67, and 92 nonzero elements.

A permutation algorithm for selecting tuning parameter values and assessing significance of sparse mCCA can be found in Appendix A. In addition, an algorithm for obtaining multiple sparse mCCA factors is given in Appendix B.

3.2. Application of sparse mCCA to DLBCL copy number data

If CGH measurements are available on a set of patient samples, then one may wonder whether copy number changes in genomic regions on separate chromosomes are correlated. For instance, certain genomic regions may tend to be coamplified or codeleted.

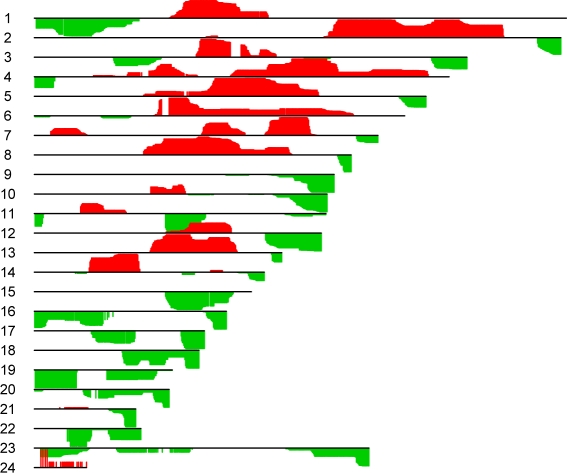

To answer this question for a single pair of chromosomes, we can perform sparse CCA(FL, FL) with two data sets, X1 and X2, where X1 contains the CGH measurements on the first chromosome of interest and X2 contains the CGH measurements on the second chromosome of interest. If copy number change on the first chromosome is independent of copy number change on the second chromosome, then we expect the corresponding p-value obtained using the method of Appendix A not to be small. A small p-value indicates that copy number changes on the two chromosomes are more correlated with each other than one would expect due to chance. However, in general, there are ( ) pairs of chromosomes that can be tested for correlated patterns of amplification and deletion; this leads to a multiple testing problem and excessive computation. Instead, we take a different approach, using sparse mCCA. We apply sparse mCCA to data sets X1, ..., X24, where Xi contains the CGH measurements on chromosome i. A fused lasso penalty is used on each data set. The goal is to identify correlated regions of gain and loss across the entire genome.

This method is applied to the DLBCL data set mentioned previously. We first denoise the samples by applying the fused lasso to each sample individually, as in Tibshirani & Wang (2008). We then perform sparse mCCA on the resulting smoothed CGH data. The canonical vectors that result are shown in Figure 5. From the figure, one can conclude that complex patterns of gain and loss tend to co-occur. It is unlikely that a single sample would display the entire pattern found; however, samples with large values in the canonical variables most likely contain some of the patterns shown in the figure.

Figure 5:

Sparse mCCA was performed on the DLBCL copy number data, treating each chromosome as a separate “data set”, in order to identify genomic regions that are coamplified and/or codeleted. The canonical vectors w1, ..., w24 are shown. Positive values of the canonical vectors are shown in red, and negative values are in green.

4. Sparse supervised CCA

In Section 2.4, we determined that on the lymphoma data, many of the canonical variables obtained using sparse CCA are highly associated with tumor subtype (and for some chromosomes, the canonical variables are also associated with survival time). Though an outcome was available, we took an unsupervised approach in performing sparse CCA. In this section, we will develop a framework to directly make use of an outcome in sparse CCA. Our method for sparse supervised CCA (sparse sCCA) is closely related to the supervised principal components analysis (supervised PCA) method of Bair & Tibshirani (2004) and Bair et al. (2006), and so we begin with an overview of that method.

4.1. Supervised PCA

Principal components regression (PCR; see e.g. Massy 1965) is a method for predicting an outcome y ∈ n from a data matrix X ∈ n×p. Assume that the columns of X have been standardized. Then, PCR involves regressing y onto the first few columns of XV, where X = UDVT is the singular value decomposition of X. Since V is estimated in an unsupervised manner, it is not guaranteed that the first few columns of XV will predict y well, even if some of the features in X are correlated with y.

Bair & Tibshirani (2004) and Bair et al. (2006) propose the use of supervised PCA, which is a semisupervised approach. Their method can be described simply:

On training data, the features that are most associated with the outcome y are identified.

PCR is performed using only the features identified in the previous step.

Theoretical results regarding the performance of this method under a latent variable model are presented in Bair et al. (2006).

4.2. The sparse supervised CCA method

Suppose that a quantitative outcome is available; that is, we have y ∈ n in addition to X1 and X2. Then we might seek linear combinations of the variables in X1 and X2 that are highly correlated with each other and associated with the outcome.

We define the criterion for supervised CCA as follows:

| (19) |

where Q1 is the set of features in X1 that are most correlated with y, and Q2 is the set of features in X2 that are most correlated with y. The number of features in Q1 and Q2, or alternatively the correlation threshold for features to enter Q1 and Q2, can be treated as a tuning parameter or can simply be fixed. If X1 = X2, then the criterion (19) simplifies to supervised PCA; that is, w1 and w2 are equal to each other and to the first principal component of the subset of the data containing only the features that are most associated with the outcome.

sCCA can be easily extended to give sparse sCCA,

| (20) |

where as usual, P1 and P2 are convex penalty functions.

We have discussed the possibility of y being a quantitative outcome (e.g. tumor diameter), but other options exist as well. For instance, y could be a time to event (e.g. a possibly censored survival time) or a class label (for instance, DLBCL subtype). Our definition of sparse sCCA must be generalized in order to accommodate other outcome types. If y is a survival time, then for each feature, we can compute the score statistic (or Cox score) for the univariate Cox proportional hazards model that uses that feature to predict the outcome. Only features with sufficiently high (absolute) Cox scores will be in the sets Q1 and Q2. In the case of a multiple class outcome, only features with a sufficiently high F-statistic for a one-way ANOVA will be in Q1 and Q2. Other outcome types could be incorporated in an analogous way. The algorithm for sparse sCCA can be written as follows:

Algorithm for sparse sCCA:

- Let X͂1 and X͂2 denote the submatrices of X1 and X2 consisting of the features in Q1 and Q2. Q1 and Q2 are calculated as follows:

- In the case of an L1 penalty on wi, Qi is the set of indices of the features in Xi that have highest univariate association with the outcome.

- In the case of a fused lasso penalty on wi, the vector of univariate associations between the features in Xi and the outcome is smoothed using the fused lasso. The resulting smoothed vector is thresholded to obtain the desired number of nonzero cofficients. Qi contains the indices of the coefficients that are nonzero after thresholding.

Perform sparse CCA using data X͂1 and X͂2.

Note that the fused lasso case is treated specially because one wishes for the features included in X͂i to be contiguous, so that smoothness in the resulting wi weights will translate to smoothness in the weights of the original variable set. Algorithms for tuning parameter selection and assessment of significance, as well as a method for obtaining multiple canonical vectors, are given in the Appendix.

We explore the performance of sparse sCCA with a quantitative outcome on a toy example. Data are generated according to the model

| (21) |

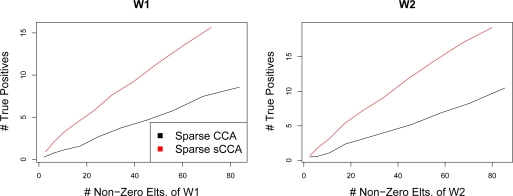

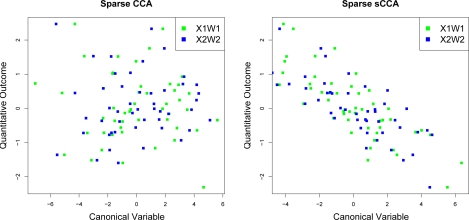

with u ∈ 50, w1 ∈ 500, w2 ∈ 1000, ɛ1 ∈ 50×500, ɛ2 ∈ 50×1000. 50 elements of w1 and 100 elements of w2 are non-zero. The first canonical vectors of sparse CCA and sparse sCCA (using L1 penalties) were computed for a range of values of c1 and c2. In Figure 6, the resulting number of true positives (features that are nonzero in w1 and w2 and also in the estimated canonical vectors) are shown on the y-axis, as a function of the number of nonzero elements of the canonical vectors. It is clear that greater numbers of true positives are obtained when the outcome is used. In Figure 7, the canonical variables obtained using sparse CCA and sparse sCCA are plotted against the outcome. The canonical variables obtained using sparse sCCA are correlated with the outcome, and those obtained using sparse CCA are not. Note that under the model (21), in the absence of noise, the canonical variables are proportional to u; therefore, sparse sCCA more accurately uncovers the true canonical variables.

Figure 6:

Sparse CCA and sparse sCCA were performed on a toy example, for a range of values of the tuning parameters in the sparse CCA criterion. The number of true positives in w1 and w2 is shown as a function of the number of nonzero elements in the estimates of the canonical vectors.

Figure 7:

Sparse CCA and sparse sCCA were performed on a toy example. The canonical variables obtained using sparse sCCA are highly correlated with the outcome; those obtained using sparse CCA are not.

In theory, one could choose Q1 and Q2 in Step 1 of the sparse sCCA algorithm to contain fewer than n features; then, ordinary CCA could be performed instead of sparse CCA in Step 2. However, we recommend using a less stringent cutoff for Q1 and Q2 in Step 1, and instead performing further feature selection in Step 2 via sparse CCA.

4.3. Connection with sparse mCCA

Given X1, X2, and a two-class outcome y, one could perform sparse mCCA by treating y as a third data set. This would yield a different but related method for performing sparse sCCA in the case of a two-class outcome.

Note that the outcome y is a matrix in n×1. We code the two classes (of n1 and n2 observations, respectively) as and . Assume that the columns of X1 and X2 have mean zero and pooled within-class standard deviation equal to one. Consider the sparse mCCA criterion with L1 penalties, applied to data sets X1, X2, and y:

| (22) |

Note that since w3 ∈ 1, it follows that w3 = 1. So we can re-write the criterion (22) as

| (23) |

Now, this criterion is biconvex and leads naturally to an iterative algorithm. However, this is not the approach that we take with our sparse sCCA method. Instead, notice that

| (24) |

where X̄1k ∈ p is the mean vector of the observations in X1 that belong to class k, and where t1 ∈ p is the vector of two-sample t-statistics testing whether the classes defined by y have equal means within each feature of X1. Similarly, for t2 defined analogously. So we can rewrite (23) as

| (25) |

As λ increases, the elements of w1 and w2 that correspond to large |t1| and |t2| values increase in absolute value relative to those that correspond to smaller |t1| and |t2| values.

Rather than adopting the criterion (25) for sparse sCCA, our sparse sCCA criterion results from assigning nonzero weights only to the elements of w1 and w2 corresponding to large |t1| and |t2|. We prefer our proposed sparse sCCA algorithm because it is simple, generalizes to the supervised PCA method when X1 = X2, and extends easily to non-binary outcomes.

4.4. Application of sparse sCCA to DLBCL data

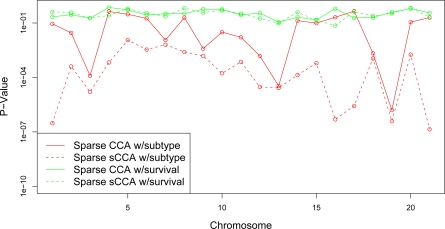

We evaluate the performance of sparse sCCA on the DLBCL data set, in terms of the association of the resulting canonical variables with the survival and subtype outcomes. We repeatedly split the observations into training and test sets (75% / 25%). Let ( , , ytrain) denote the training data, and let ( , , ytest) denote the test data. (y can denote either the survival time or the cancer subtype.) We perform sparse sCCA analysis on the training data. As in Section 2.4, for each chromosome, sparse sCCA is run using CGH measurements on that chromosome, and all available gene expression measurements. An L1 penalty is applied to the expression data, and a fused lasso penalty is applied to the CGH data. Let , denote the canonical vectors obtained. We then use and as features in a Cox proportional hazards model or a multinomial logistic regression model to predict ytest. The resulting p-values are shown in Figure 8 for both the survival and subtype outcomes; these are compared to the results obtained if the analysis is repeated using unsupervised sparse CCA on the training data. On the whole, for the subtype outcome, the p-values obtained using sparse sCCA are much smaller than those obtained using sparse CCA. The canonical variables obtained using sparse CCA and sparse sCCA with the survival outcome are not significantly associated with survival. In this example, sparse CCA was performed so that 20% of the features in X1 and X2 were contained in Q1 and Q2 in the sparse sCCA algorithm.

Figure 8:

On a training set, sparse CCA and sparse sCCA were performed using CGH measurements on a single chromosome, and all available gene expression measurements. The resulting test set canonical variables were used to predict survival time and DLBCL subtype. Median p-values (over training set / test set splits) are shown.

5. Discussion

As it becomes more commonplace for biomedical researchers to perform multiple assays on the same set of patient samples, methods for the integrative analysis of two or more high-dimensional data sets will become increasingly important. The sparse CCA methods previously proposed in the literature (Parkhomenko et al. 2007, Waaijenborg et al. 2008, Parkhomenko et al. 2009, Le Cao et al. 2009, Witten et al. 2009) provide an attractive framework for performing an integrative analysis of two data sets. In this paper, we have developed extensions to sparse CCA that can be used to apply the method to the case of more than two data sets, and to incorporate an outcome into the analysis.

The methods proposed in this paper will be available on CRAN as part of the PMA (Penalized Multivariate Analysis) package.

APPENDIX.

A Tuning parameter selection and calculation of p-values

We first present a permutation-based algorithm for selection of tuning parameters and calculation of p-values for sparse CCA. Note that a number of methods have been proposed in the literature for selecting tuning parameters for sparse CCA (see e.g. Waaijenborg et al. 2008, Parkhomenko et al. 2009, Witten et al. 2009). The method proposed here has the advantage over the proposals of Waaijenborg et al. (2008) and Parkhomenko et al. (2009) that it does not require splitting a possibly small set of samples into a training set and a test set. Witten et al. (2009) present a method for tuning parameter selection for their penalized matrix decomposition; however, it does not extend in a straightforward way to the sparse CCA case.

Algorithm to select tuning parameters and determine significance for sparse CCA:

- For each tuning parameter value (generally this will be a two-dimensional vector) Tj being considered:

- Compute w1 and w2, the canonical vectors using data X1 and X2 and tuning parameter Tj. Compute dj = Cor(X1w1, X2w2).

- For i ∈ 1, ..., N, where N is some large number of permutations:

- Permute the rows of X1 to obtain the matrix , and compute canonical vectors and using data and X2 and tuning parameter Tj.

- Compute .

- Calculate the p-value .

Choose the tuning parameter Tj corresponding to the smallest pj. Alternatively, one can choose the tuning parameter Tj for which is largest, where sd ( ) indicates the standard deviation of . The resulting p-value is pj.

Since multiple tuning parameters Tj are considered in the above algorithm, a strict cut-off for the p-value pj should be used in order to avoid problems associated with multiple testing.

Given the above algorithm, the analogous method for selecting tuning parameters and determining significance for sparse sCCA is straightforward. For simplicity, we assume that the threshold for features to enter Q1 and Q2 in the sparse sCCA algorithm is fixed (not a tuning parameter).

Algorithm to select tuning parameters and determine significance for sparse sCCA:

- For each tuning parameter (generally this will be a two-dimensional vector) Tj being considered:

- Compute w1 and w2, the supervised canonical vectors using data X1, X2, and y and tuning parameter Tj. Compute dj = Cor(X1w1,X2w2).

- For i ∈ 1, ..., N, where N is some large number of permutations:

- Permute the rows of X1 and X2 separately to obtain the matrices and , and compute supervised canonical vectors and using data , , y, and tuning parameter Tj.

- Compute .

- Calculate the p-value .

Choose the tuning parameter Tj corresponding to the smallest pj. Alternatively, one can choose the tuning parameter Tj for which is largest, where sd ( ) indicates the standard deviation of . The resulting p-value is pj.

Note that in the permutation step, we permute the rows of X1 and X2 without permuting y; this means that under the permutation null distribution, y is not correlated with the columns of X1 and X2.

We can similarly use the following permutation-based algorithm to assess the significance of the canonical vectors obtained using sparse mCCA:

Algorithm to select tuning parameters and determine significance for sparse mCCA:

- For each tuning parameter (generally this will be a K-dimensional vector) Tj being considered:

- Compute w1, ..., wK, the canonical vectors using data X1, ..., XK and tuning parameter Tj. Compute dj = ∑s<t Cor (Xsws, Xtwt).

- For i ∈ 1, ..., N, where N is some large number of permutations:

- Permute the rows of X1, ..., XK separately to obtain the matrices , and compute canonical vectors using data and tuning parameter Tj.

- Compute .

- Calculate the p-value .

Choose the tuning parameter Tj corresponding to the smallest pj. Alternatively, one can choose the tuning parameter Tj for which is largest, where sd ( ) indicates the standard deviation of . The resulting p-value is pj.

B Extension of methods to obtain multiple canonical vectors

We first review the method of Witten et al. (2009) for obtaining multiple sparse CCA canonical vectors. Note that the sparse CCA algorithm uses the cross-product matrix and does not require knowledge of X1 and X2 individually.

Algorithm for obtaining J sparse CCA factors:

Let .

- For j ∈ 1, ..., J:

- Compute and by applying the single-factor sparse CCA algorithm to data Yj.

- .

and are the jth canonical vectors.

To obtain J sparse sCCA factors, submatrices X͂1 and X͂2 are formed from the features most associated with the outcome; the algorithm for obtaining J sparse CCA factors is then applied to this new data.

To obtain J sparse mCCA factors, note that the sparse mCCA algorithm requires knowledge only of the ( ) cross-product matrices of the form with s < t, rather than the raw data Xs and Xt.

Algorithm for obtaining J sparse mCCA factors:

For each 1 ≤ s < t ≤ K, let .

- For j ∈ 1, ..., J:

- Compute by applying the single-factor sparse mCCA algorithm to data for 1 ≤ s < t ≤ K.

- .

are the jth canonical vectors.

Footnotes

We thank Andrew Beck, Patrick Brown, Jonathan Pollack, Robert West, and anonymous reviewers for helpful comments. Daniela Witten was supported by a National Defense Science and Engineering Graduate Fellowship. Robert Tibshirani was partially supported by National Science Foundation Grant DMS-9971405 and National Institutes of Health Contract N01-HV-28183.

References

- Alizadeh A, Eisen M, Davis RE, Ma C, Lossos I, Rosenwald A, Boldrick J, Sabet H, Tran T, Yu X, Powell J, Marti G, Moore T, Hudson J, Lu L, Lewis D, Tibshirani R, Sherlock G, Chan W, Greiner T, Weisenburger D, Armitage K, Warnke R, Levy R, Wilson W, Grever M, Byrd J, Botstein D, Brown P, Staudt L. ‘Distinct types of diffuse large B-cell lymphoma identified by gene expression profiling’. Nature. 2000;403:503–511. doi: 10.1038/35000501. [DOI] [PubMed] [Google Scholar]

- Bair E, Hastie T, Paul D, Tibshirani R. ‘Prediction by supervised principal components’. J Amer Statist Assoc. 2006;101:119–137. doi: 10.1198/016214505000000628. [DOI] [Google Scholar]

- Bair E, Tibshirani R. ‘Semi-supervised methods to predict patient survival from gene expression data’. PLOS Biology. 2004;2:511–522. doi: 10.1371/journal.pbio.0020108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gifi A. Nonlinear multivariate analysis. Wiley, Chichester; England: 1990. [Google Scholar]

- Hotelling H. ‘Relations between two sets of variates’. Biometrika. 1936;28:321–377. [Google Scholar]

- Hyman E, Kauraniemi P, Hautaniemi S, Wolf M, Mousses S, Rozenblum E, Ringner M, Sauter G, Monni O, Elkahloun A, Kallioniemi O-P, Kallioniemi A. ‘Impact of DNA amplification on gene expression patterns in breast cancer’. Cancer Research. 2002;62:6240–6245. [PubMed] [Google Scholar]

- Le Cao K, Pascal M, Robert-Granie C, Philippe B. ‘Sparse canonical methods for biological data integration: application to a crossplatform study’. BMC Bioinformatics. 2009;10 doi: 10.1186/1471-2105-10-34. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lenz G, Wright G, Emre N, Kohlhammer H, Dave S, Davis R, Carty S, Lam L, Shaffer A, Xiao W, Powell J, Rosenwald A, Ott G, Muller-Hermelink H, Gascoyne R, Connors J, Campo E, Jaffe E, Delabie J, Smeland E, Rimsza L, Fisher R, Weisenburger D, Chano W, Staudt L. ‘Molecular subtypes of diffuse large Bcell lymphoma arise by distinct genetic pathways’. Proc Natl Acad Sci. 2008;105:13520–13525. doi: 10.1073/pnas.0804295105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Massy W. ‘Principal components regression in exploratory statistical research’. Journal of the American Statistical Association. 1965;60:234–236. doi: 10.2307/2283149. [DOI] [Google Scholar]

- Morley M, Molony C, Weber T, Devlin J, Ewens K, Spielman R, Cheung V. ‘Genetic analysis of genome-wide variation in human gene expression’. Nature. 2004;430:743–747. doi: 10.1038/nature02797. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Parkhomenko E, Tritchler D, Beyene J. ‘Genome-wide sparse canonical correlation of gene expression with genotypes’. BMC Proceedings. 2007;1:S119. doi: 10.1186/1753-6561-1-s1-s119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Parkhomenko E, Tritchler D, Beyene J. ‘Sparse canonical correlation analysis with application to genomic data integration’. Statistical Applications in Genetics and Molecular Biology. 2009;8:1–34. doi: 10.2202/1544-6115.1406. [DOI] [PubMed] [Google Scholar]

- Pollack J, Sorlie T, Perou C, Rees C, Jeffrey S, Lonning P, Tibshirani R, Botstein D, Borresen-Dale A, Brown P. ‘Microarray analysis reveals a major direct role of DNA copy number alteration in the transcriptional program of human breast tumors’. Proceedings of the National Academy of Sciences. 2002;99:12963–12968. doi: 10.1073/pnas.162471999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rosenwald A, Wright G, Chan WC, Connors JM, Campo E, Fisher RI, Gascoyne RD, Muller-Hermelink HK, Smeland EB, Staudt LM. ‘The use of molecular profiling to predict survival after chemotherapy for diffuse large B-cell lymphoma’. The New England Journal of Medicine. 2002;346:1937–1947. doi: 10.1056/NEJMoa012914. [DOI] [PubMed] [Google Scholar]

- Stranger B, Forrest M, Clark A, Minichiello M, Deutsch S, Lyle R, Hunt S, Kahl B, Antonarakis S, Tavare S, Deloukas P, Dermitzakis E. ‘Genome-wide associations of gene expression variation in humans’. PLOS Genetics. 2005;1(6):e78. doi: 10.1371/journal.pgen.0010078. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stranger B, Forrest M, Dunning M, Ingle C, Beazley C, Thorne N, Redon R, Bird C, de Grassi A, Lee C, Tyler-Smith C, Carter N, Scherer S, Tavare S, Deloukas P, Hurles M, Dermitzakis E. ‘Relative impact of nucleotide and copy number variation on gene expression phenotypes’. Science. 2007;315:848–853. doi: 10.1126/science.1136678. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tibshirani R, Hastie T, Narasimhan B, Chu G. ‘Diagnosis of multiple cancer types by shrunken centroids of gene expression’. Proc Natl Acad Sci. 2002;99:6567–6572. doi: 10.1073/pnas.082099299. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tibshirani R, Hastie T, Narasimhan B, Chu G. ‘Class prediction by nearest shrunken centroids, with applications to DNA microarrays’. Statistical Science. 2003:104–117. doi: 10.1214/ss/1056397488. [DOI] [Google Scholar]

- Tibshirani R, Saunders M, Rosset S, Zhu J, Knight K. ‘Sparsity and smoothness via the fused lasso’. J Royal Statist Soc B. 2005;67:91–108. doi: 10.1111/j.1467-9868.2005.00490.x. [DOI] [Google Scholar]

- Tibshirani R, Wang P. ‘Spatial smoothing and hotspot detection for CGH data using the fused lasso’. Biostatistics. 2008;9:18–29. doi: 10.1093/biostatistics/kxm013. [DOI] [PubMed] [Google Scholar]

- Waaijenborg S, Verselewel de Witt Hamer P, Zwinderman A. ‘Quantifying the association between gene expressions and DNA-markers by penalized canonical correlation analysis’. Statistical Applications in Genetics and Molecular Biology. 2008;7 doi: 10.2202/1544-6115.1329. [DOI] [PubMed] [Google Scholar]

- Witten D, Tibshirani R, Hastie T. ‘A penalized matrix decomposition, with applications to sparse principal components and canonical correlation analysis’. Biostatistics. 2009 doi: 10.1093/biostatistics/kxp008. [DOI] [PMC free article] [PubMed] [Google Scholar]