Abstract

Background

Children of depressed mothers are themselves at elevated risk for developing a depressive disorder. We have little understanding, however, of the specific factors that contribute to this increased risk. This study investigated whether never-disordered daughters whose mothers have experienced recurrent episodes of depression during their daughters’ lifetime differ from never-disordered daughters of never-disordered mothers in their processing of facial expressions of emotion.

Method

Following a negative mood induction, daughters completed an emotion identification task in which they watched faces slowly change from a neutral to a full-intensity happy, sad, or angry expression. We assessed both the intensity that was required to accurately identify the emotion being expressed and errors in emotion identification.

Results

Daughters of depressed mothers required greater intensity than did daughters of control mothers to accurately identify sad facial expressions; they also made significantly more errors identifying angry expressions.

Conclusion

Cognitive biases may increase vulnerability to the onset of disorders and should be considered in early intervention and prevention efforts.

Keywords: Affective disorders, cognition, depression, emotion, facial expression, risk factors

Given the high personal and societal costs associated with a diagnosis of major depressive disorder (MDD), efforts to identify vulnerability factors for the onset of this disorder are particularly pressing. In this context, children of parents with depressive disorders are themselves at elevated risk for experiencing depressive episodes; in fact, having parents who have experienced MDD is associated with a threefold increase in the risk to the offspring for developing a depressive episode during adolescence (Hammen, 2008). Moreover, maternal depression has been found to be associated with an earlier onset and more severe course of depression in the offspring (Lieb, Isensee, Höfler, Pfister, & Wittchen, 2002).

Despite repeated demonstrations of the increased likelihood that offspring of depressed mothers will develop depression, the mechanisms underlying this risk are not well understood. Over the past several decades, cognitive models of depression have been formulated in an attempt to understand the etiology of MDD and vulnerability to this disorder (e.g., Beck, 1967). In essence, these models propose that individual differences in the processing of emotional material are related to increased risk for depression. Numerous studies of depressed adults have provided support for the basic tenets of these models (see Mathews & MacLeod, 2005, for a review). Cognitive processing of social cues, and of facial expressions in particular, may play a critical role in the development and maintenance of depression (Joiner & Timmons, 2008). Indeed, individual differences in the accurate identification of facial expressions of emotion have been found to predict the course of a depressive episode (Bouhuys, Geerts, & Gordijn, 1999; Geerts & Bouhuys, 1998), recovery from depression, and recurrence of depressive episodes (Hale, 1998).

Processing of facial expressions of emotion emerges early in development, and the ability to correctly recognize basic emotions is in place by middle childhood (Durand, Gallay, Seigneuric, Robichon, & Jean-Yves, 2007). More specifically, happiness appears to be recognized earliest and most accurately, followed by sadness and then by anger (Durand et al., 2007; Herba et al., 2006, 2008). Durand et al. (2007), for example, reported that full-intensity happiness and sadness are accurately recognized by children as young as 5 or 6 years of age. In contrast, anger recognition does not reach adult levels before 10 years of age, and even then may not be at an adult level for low-intensity expressions. Moreover, age differences appear to be particularly strong in children’s decoding of low-intensity and ambiguous faces (van Beek & Dubas, 2008a).

Although the majority of studies in this area use full-intensity facial expressions, it is important to note that in everyday life, people process a wide range of emotional stimuli, including signals that are far less intense than the prototypical facial expressions contained in standardized picture sets. Consequently, researchers have started to use morphed stimuli to assess the identification of traces of emotion and the processing of subtle changes in facial expressions. Indeed, investigators have found that depressed participants differ from their non-depressed counterparts in the processing of these stimuli (Joormann & Gotlib, 2006; Surguladze et al., 2004). To examine the operation of these processes prior to the onset of depression, however, it is necessary to study people who are at risk for developing, but have not yet experienced, this disorder.

The aim of the present study was to investigate whether daughters at familial risk for depression who have no current or past diagnosis of psychopathology differ from low-risk control daughters in their processing of subtle facial expressions of emotion. We used a morphed-faces task to assess the identification of emotional expressions. In this task, participants watch a series of computerized ‘movies’ of faces whose expressions change gradually from neutral to a full-intensity emotion. For each movie, participants are asked to press a key on the computer keyboard as soon as they detect an emotion, and are then asked to identify the emotion they detected. We drew on diathesis-stress models (Monroe & Simons, 1991) and on previous studies of cognitive processes in high-risk children (e.g., Taylor & Ingram, 1999). Accordingly, we used a negative mood induction procedure as a mild stressor before assessing emotion identification, and compared the functioning of girls at low and high risk for depression.

Method

Participants

Participants were 85 girls between the ages of 9 and 14. None had current psychopathology or a history of any Axis I disorder listed in the Diagnostic and Statistical Manual of Mental Disorders, 4th edition (DSM-IV; American Psychiatric Association, 1994), such as anxiety, mood, or eating disorders. We recruited participants in this age group for two reasons. First, girls younger than 9 are likely to have difficulty with the task instructions. Second, daughters of depressed mothers who are older than 14 are likely to have already experienced a depressive episode themselves (Angold, Costello, & Worthman, 1998). Fifty girls had biological mothers with no current or past DSM Axis I disorder, and 35 girls had biological mothers with a history of recurrent MDD during their daughter’s lifetime. Participants were recruited through advertisements posted in the local community. A telephone screen established that both mothers and daughters were fluent in English and that daughters were between 9 and 14 years of age. Daughters were excluded if they had a history of severe head trauma, a learning disability, or current or past depression. We also report inclusion rates. These rates cover all potential pairs screened, including pairs that were pre-screened by telephone and pairs that completed the full diagnostic interview. Of the potential high-risk pairs, 11% were included; of the potential low-risk pairs, 26% were included.

Assessment of depression

Trained interviewers assessed the diagnostic status of daughters by administering the Schedule for Affective Disorders and Schizophrenia for School-Age Children-Present and Lifetime version (K-SADS-PL; Kaufman, Birmaher, Brent, Ryan, & Rao, 2000) separately to the daughters and to their mothers (about the daughters). The K-SADS-PL has been shown to generate reliable and valid child psychiatric diagnoses. A different interviewer administered the Structured Clinical Interview for DSM-IV (SCID; First, Spitzer, Gibbon, & Williams, 1995) to the mothers. All K-SADS-PL and SCID interviewers had previous experience administering structured clinical interviews. To assess inter-rater reliability, an independent rater who was blind to group membership evaluated 30% of our SCID and K-SADS-PL interviews. These were audiotapes randomly selected in equal numbers from high-risk and control pairs. In all cases, the independent rater’s diagnoses matched those of the original interviewer.

Daughters in the high-risk group (RISK) were eligible to participate in the study if: 1) they did not meet criteria for any past or current DSM Axis-I disorder according to both the parent and child K-SADS-PL; and 2) their mothers met the DSM criteria for at least two distinct episodes of MDD since the birth of their daughters, but did not currently meet criteria for MDD. In addition, mothers in the high-risk group were included only if they had no current diagnosis of any DSM Axis-I disorder, although they could have a past diagnosis. Daughters in the healthy control group (CTL) were eligible to participate if: 1) they did not meet criteria for any past or current DSM Axis-I disorder based on both the parent and child K-SADS-PL; and 2) their mothers did not meet criteria for any DSM Axis-I disorder during their lifetime.

Questionnaires

Daughters completed the 10-item short version of the Children’s Depression Inventory (CDI-S; Kovacs, 1992) and the Multidimensional Anxiety Scale for Children (MASC; March, 1997). Mothers completed the Beck Depression Inventory-II (BDI-II; Beck, Steer, & Brown, 1996). Daughters also rated their mood before and after the mood induction. They used a five-point scale of drawn faces ranging from very sad (1) to very happy (5) (e.g., Taylor & Ingram, 1999). Finally, we administered the Vocabulary subtest of the Wechsler Intelligence Scale for Children-IV (WISC-IV; Wechsler, 2003) to the daughters to verify that the groups did not differ in intellectual ability.

Mood inductions

A meta-analysis of mood induction studies concluded that film clips combined with explicit instructions to enter a specific mood state are the most effective form of induction (Westermann, Spies, Stahl, & Hesse, 1996). Importantly, Silverman (1986) analyzed studies using mood inductions in children and concluded that methods used with adults are also effective with children. We therefore used brief film clips to induce a negative mood state in the daughters. Before completing the morphing task, each participant was shown one of three film clips, assigned at random. Stepmom (Columbus, 1998) depicts a young son and an adolescent daughter saying good-bye to their terminally ill mother. My Girl (Zeiff, 1991) depicts an adolescent girl learning that her best friend has died. Dead Poets Society (Weir, 1989) depicts an adolescent boy learning that his best friend has committed suicide. The film clip was followed by two minutes of guided imagery. This instructed participants to think about how they would feel if they experienced the situation they had just viewed.

Emotion identification task

Stimuli

Stimuli were faces taken from the Facial Expressions of Emotion: Stimuli and Tests set (FEEST; Young, Perrett, Calder, Sprengelmeyer, & Ekman, 2002). In this set, faces from Ekman and Friesen’s (1976) Pictures of Facial Affect have been morphed from a neutral expression to a fully emotive expression in 10% intervals. We selected a male and a female Caucasian face from the morphed series and included the sad, angry, and happy versions of each face. Faces of the same two actors expressing disgust were used in practice trials. Using these pictures as raw material, we refined the morphed series with ‘Morph Studio: Morph Editor’ (Ulead, 2000). We created intermediate images between the 10% intervals, yielding a total of 50 unique faces that change in 2% steps from neutral to full emotion. Each face was presented for 500 ms using E-Prime software, creating the impression of an animated clip of a developing emotional facial expression. The black-and-white faces measured 18.5 × 13 cm. They were presented in the center of the screen against a black background on a high-resolution 17-inch monitor.

Design

Each of the six sequences (a male and a female actor, each expressing anger, happiness, and sadness) was presented 5 times, for a total of 30 sequence presentations. Faces within a sequence were sometimes repeated so that the appearance of the next face in the emotion sequence was ‘jittered.’ This avoided a perfect correlation between time and expression intensity and increased the difficulty of the task. For example, in some sequences the 12% face was repeated 3 times before the 14% face was presented, whereas in other sequences the 12% face was followed immediately by the 14% face. In no case did a sequence move ‘backwards.’ Thus, each sequence consisted of 50 unique faces but 70 face presentations. The presentation of the sequences was randomized across participants. For each sequence, participants were instructed to watch the face change from neutral to an emotion and to press the space bar as soon as they saw an emotion. When the participant pressed the space bar, the sequence stopped and a rating screen asked her to identify the emotion as happy, sad, or angry. We recorded the intensity of the expression on the face at the time of the key press, along with the participant’s identification of the emotion.
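The jittered presentation schedule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions and is not the authors’ E-Prime script. It assumes that the 50 unique faces run from 2% to 100% in 2% steps. It also assumes that the 20 extra presentations (70 minus 50) are assigned to intensity levels uniformly at random. The names `make_jittered_sequence` and `intensity_at_keypress` are hypothetical.

```python
import random

FRAME_MS = 500  # each face is shown for 500 ms


def make_jittered_sequence(n_unique=50, n_presentations=70, step=2, seed=None):
    """Return a non-decreasing list of morph intensities (%) for one movie.

    Hypothetical sketch: levels are assumed to be 2%, 4%, ..., 100%, and the
    extra presentations are assumed to be spread uniformly at random.
    """
    rng = random.Random(seed)
    levels = [step * (i + 1) for i in range(n_unique)]
    counts = [1] * n_unique  # every unique face appears at least once
    for _ in range(n_presentations - n_unique):
        counts[rng.randrange(n_unique)] += 1  # one extra repeat of a random level
    # Repeats of a level are adjacent, so the movie never moves 'backwards'.
    return [level for level, count in zip(levels, counts) for _ in range(count)]


def intensity_at_keypress(sequence, rt_ms):
    """Intensity (%) of the face on screen at an integer response time in ms."""
    frame = min(rt_ms // FRAME_MS, len(sequence) - 1)
    return sequence[frame]


movie = make_jittered_sequence(seed=1)
print(len(movie), len(set(movie)), len(movie) * FRAME_MS / 1000)  # 70 50 35.0
```

Consider first a sequence without jitter (`n_presentations=50`). There, a key press at 10,250 ms always lands on frame 20, which is the 42% face. With jitter, the same response time can correspond to different intensities. That decoupling of elapsed time from intensity is what the design is meant to achieve.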

Procedure

All participants provided informed consent, took part in the clinical interviews, and completed the questionnaires following the interviews. The experimental session began with a mood rating, followed by the negative mood induction, a second mood rating, and the emotion identification task.¹ Finally, a positive mood induction film clip was presented, followed by a final mood rating. At the end of the session, the daughters completed the WISC-IV Vocabulary subtest.

Results

Participant characteristics

Demographic and clinical characteristics of the two participant groups are presented in Table 1. The two groups did not differ with respect to age, t(83) < 1, ns, WISC-IV vocabulary scores or MASC scores, both t(83) < 1, ns, or ethnicity, χ²(4, 84) = 7.79, ns. The RISK girls obtained slightly, but significantly, higher scores on the CDI-S than did the CTL girls, t(83) = 2.34, p < .05. In addition, although they did not meet diagnostic criteria for current MDD, the mothers of the RISK girls obtained significantly higher BDI-II scores than did the mothers of the CTL girls, t(80) = 4.85, p < .05. Six of the mothers indicated that they had experienced too many depressive episodes to count; for all other mothers, the number of depressive episodes ranged from 2 to 20 with a mean of 6.52 (SD = 5.74). Finally, none of the mothers had a current diagnosis of a DSM Axis-I disorder. However, 10 of the 35 mothers in the high-risk group had been diagnosed with a past disorder besides MDD, including anxiety disorders and eating disorders.

Table 1.

Characteristics of participants and mood measures

| | RISK | CTL |
|---|---|---|
| Demographics | | |
| N | 35 | 50 |
| % Caucasian | 71 | 70 |
| Age | 12.51 (1.50)a | 12.50 (1.42)a |
| WISC-IV | 52.06 (6.23)a | 51.52 (7.96)a |
| CDI-S | 2.71 (2.58)a | 1.56 (1.96)b |
| MASC | 39.30 (15.09)a | 42.93 (17.86)a |
| BDI-II (Mother) | 10.47 (9.47)a | 3.11 (4.73)b |
| Mood (1: very sad – 5: very happy) | | |
| Before MI | 4.00 (.59)a | 4.18 (.63)a |
| Before task | 2.91 (1.07)a | 2.38 (1.01)b |
| End of session | 4.29 (.63)a | 4.47 (.58)a |

Note: CTL = control participants; RISK = daughters of formerly depressed mothers; WISC-IV = Wechsler Intelligence Scale for Children-IV, Vocabulary subtest; CDI-S = Children’s Depression Inventory, 10-item short version; MASC = Multidimensional Anxiety Scale for Children; BDI-II = Beck Depression Inventory-II; MI = mood induction. Standard deviations are shown in parentheses. Within each row, means having the same subscript are not significantly different at p < .05.

Mood induction

Mood changes were comparable for all three film clips, F(2,84) < 1. To examine the effectiveness of the mood induction procedure, we conducted a repeated-measures analysis of variance (ANOVA) on the mood ratings with group (RISK, CTL) as the between-subjects factor, and time (before mood induction, before task, and at the end of the session) as the within-subject factor. This ANOVA yielded a significant main effect of time, F(2,162) = 160.61, p < .01, which was qualified by a significant interaction of group and time, F(2,162) = 7.94, p < .01; the main effect of group was not significant, F(1,81) < 1, ns. The means of the mood ratings are presented in Table 1. Follow-up tests indicated that the two groups did not differ at the first or last mood rating (time 1: t(83) = 1.32; time 3: t(83) = 1.30, both p > .05). After the negative mood induction, however, RISK girls reported being less sad than did CTL girls, t(83) = 2.35, p < .05. Despite this group difference, both groups rated their mood as significantly more negative after the mood induction than they did before the mood induction (CTL: t(49) = 13.75, p < .01; RISK: t(34) = 6.75, p < .01). Thus, the mood inductions were effective in both groups.

Emotion identification task

Error rates

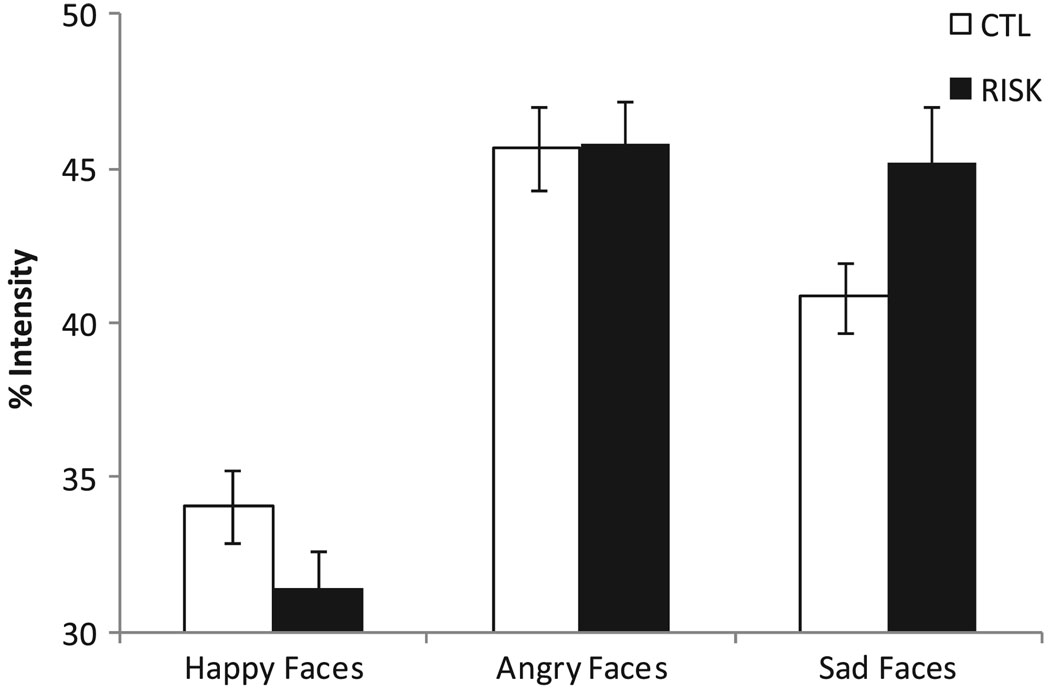

To investigate group differences in the accuracy of emotion identification, we analyzed the percentage of correct identifications (see Figure 1). As is apparent in Figure 1, overall identification accuracy was high. A two-way (group [RISK, CTL] by expression type [happy, angry, sad]) repeated-measures ANOVA was conducted on the percentage of correct responses. It yielded a significant main effect for expression type, F(2,166) = 34.66, p < .001, which was qualified by a significant interaction of group and expression type, F(2,166) = 4.06, p < .05; the main effect for group was not significant, F(1,83) = 2.13, p > .05. Paired t-tests within each group indicated that both CTL and RISK girls were more accurate when identifying happy faces than sad faces (CTL: t(49) = 2.00, p < .05; RISK: t(34) = 3.19, p < .01) or angry faces (CTL: t(49) = 3.99, p < .01; RISK: t(34) = 6.08, p < .01). In addition, both groups were more accurate in identifying sad than angry faces (CTL: t(49) = 2.51, p < .05; RISK: t(34) = 4.32, p < .01). Importantly, RISK participants did not differ from CTL participants in their accuracy in identifying happy or sad faces, both t(83) < 1, ns. The RISK participants were, however, significantly less accurate than the CTL participants when identifying angry faces, t(83) = 2.22, p < .05.

Figure 1.

Mean percentage of correct emotion identifications made by girls at risk for depression (RISK) and control girls (CTL) as a function of facial expression. Error bars represent one standard error.
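The accuracy measure analyzed above is a per-participant percentage of correct labels for each expression type. The Python sketch below computes it under assumptions. The trial record `Trial` and the function `percent_correct` are hypothetical names, not part of the original materials. The sketch counts every trial; the text does not say whether trials answered at or above 80% intensity were dropped from the accuracy analysis.

```python
from collections import defaultdict
from typing import NamedTuple


class Trial(NamedTuple):
    participant: str
    expression: str  # expression shown: "happy", "sad", or "angry"
    response: str    # label chosen on the rating screen
    intensity: int   # morph intensity (%) at the key press


def percent_correct(trials):
    """Map (participant, expression) to the percentage of correct labels."""
    n_trials = defaultdict(int)
    n_correct = defaultdict(int)
    for t in trials:
        key = (t.participant, t.expression)
        n_trials[key] += 1
        n_correct[key] += t.response == t.expression  # True counts as 1
    return {key: 100 * n_correct[key] / n_trials[key] for key in n_trials}
```

Under the design described in the Method section (two actors × five presentations), each participant contributes 10 trials per expression. One misidentification therefore lowers that participant’s accuracy for that expression by 10 percentage points.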

Intensity analyses

Because we were examining the intensity of the emotional facial expression required for correct identification of the presented emotion, we restricted our analyses to trials in which participants accurately identified the facial expressions. We also excluded trials in which participants waited until the presented face had reached 80% intensity or more. Such trials made up less than 3% of responses, and the groups did not differ in the number of excluded trials, t(83) < 1, ns.
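The trial-selection rule above can be expressed as a filter applied before averaging. Below is a minimal Python sketch under assumptions. It uses a hypothetical `Trial` record and a hypothetical `mean_intensity` function. It also assumes that each participant’s mean intensity per expression type is the value entered into the ANOVA, which the text does not state explicitly.

```python
from collections import defaultdict
from statistics import mean
from typing import NamedTuple

CEILING = 80  # responses at or above 80% intensity are excluded


class Trial(NamedTuple):
    participant: str
    expression: str  # expression shown: "happy", "sad", or "angry"
    response: str    # label chosen on the rating screen
    intensity: int   # morph intensity (%) at the key press


def mean_intensity(trials, ceiling=CEILING):
    """Mean key-press intensity per (participant, expression).

    Only correctly identified trials below the ceiling are kept.
    """
    kept = defaultdict(list)
    for t in trials:
        if t.response == t.expression and t.intensity < ceiling:
            kept[(t.participant, t.expression)].append(t.intensity)
    return {key: mean(values) for key, values in kept.items()}
```

Filtering before averaging means that misidentified faces and late, near-ceiling responses do not inflate a participant’s intensity score.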

The intensity scores of the facial expression at the time of the key press were analyzed with a two-way (group [RISK, CTL] by expression type [happy, angry, sad]) repeated-measures ANOVA. This analysis yielded a significant main effect for expression type, F(2,166) = 97.47, p < .001, which was qualified by the predicted significant interaction of group and expression type, F(2,166) = 6.44, p < .01; the main effect for group was not significant, F(1,83) < 1.² Follow-up analyses compared RISK and CTL girls in their responses to the happy, angry, and sad faces (see Figure 2). The two groups did not differ significantly for either happy faces, t(83) = 1.54, p > .05, or angry faces, t(83) < 1, ns. Compared with the CTL girls, however, the RISK girls needed significantly greater intensity to correctly identify the sad faces, t(83) = 2.11, p < .05.

Figure 2.

Mean emotional intensity of correctly identified facial expressions at time of key press made by girls at risk for depression (RISK) and control girls (CTL). Error bars represent one standard error.

Paired t-tests indicated that both the CTL and the RISK girls needed less intensity to correctly identify the happy faces than the sad faces (CTL: t(49) = 4.89, p < .01; RISK: t(34) = 9.45, p < .01) or angry faces (CTL: t(49) = 7.35, p < .01; RISK: t(34) = 14.72, p < .01). Whereas the CTL participants needed less intensity to identify the sad than the angry faces, t(49) = 3.93, p < .01, this difference was not significant within the RISK group, t(34) < 1, ns.³
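The between-group follow-up comparisons in this section are reported with 83 degrees of freedom. That value matches an independent-samples Student’s t-test with pooled variance: df = 35 + 50 − 2 = 83. The within-group paired tests have df = n − 1, which gives 34 and 49. The text does not name the exact t-test variant, so the Python sketch below is an assumption consistent with the reported degrees of freedom. The function name `pooled_t` is hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Student's independent-samples t with pooled variance.

    Returns (t, df) with df = n_a + n_b - 2.
    """
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pooled variance: the two sample variances weighted by their own df.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    se = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / se, df
```

Suppose the inputs are per-participant mean intensities for one expression type: 35 RISK values and 50 CTL values. The function then returns a t statistic on 83 degrees of freedom.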

Discussion

Despite a growing literature demonstrating that children of depressed mothers are at elevated risk for developing a depressive episode, we know relatively little about the factors and mechanisms that underlie this heightened risk. The present study was designed to examine the identification of subtle facial expressions of emotion in carefully diagnosed never-disordered daughters of mothers with recurrent depression compared to never-disordered daughters of never-depressed mothers. Whereas no group differences were found in the processing of happy faces, the high-risk girls needed more intensity to correctly identify sad expressions and made more errors when identifying low-intensity angry expressions.

The morphing task used in this study allows us to assess two aspects of the processing of subtle facial expressions of emotion: (1) the intensity required to correctly identify a specific expression; and (2) the accuracy of differentiating between low-intensity sadness and anger after an emotional expression has been detected. Compared to low-risk girls, high-risk girls needed more intensity to correctly identify sad expressions and they were more likely to mistakenly identify a low-intensity expression of an angry face as sad. This suggests that the high-risk girls have difficulty detecting low-intensity sadness, requiring more intense expressions for correct identification, and tend to incorrectly identify low-intensity anger expressions as sad.

This is one of the first studies to investigate the processing of emotional facial expressions in high-risk children. We used subtle expressions of emotion that changed slowly from neutral to full intensity. The task therefore let us assess the processing of the kinds of facial expressions frequently encountered in everyday life. The pattern of findings in the CTL group is consistent with developmental research. That research suggests that expressions of happiness are easier to identify than expressions of sadness, and that identification of anger, particularly at low intensities, develops later (Thomas, De Bellis, Reiko, & LaBar, 2007). Consistent with this developmental pattern, the CTL girls in our study needed less intensity to accurately identify sad faces than angry faces. For the high-risk girls, however, identifying sad expressions appeared to be as difficult as identifying angry expressions. The high-risk girls may be delayed in developing the ability to decode negative emotional expressions. On this account, we did not observe group differences in intensity for angry faces because anger identification is difficult for both groups. It is also possible that high-risk girls avoid thorough processing of sad faces, which would put them at a disadvantage relative to the CTL girls. Future studies are needed to test these explanations of our findings. In addition, high-risk girls made significantly more errors than did CTL girls when identifying low-intensity angry faces. This suggests that they have difficulty differentiating between subtle expressions of sadness and anger, and tend to interpret low-intensity angry faces as sad.

Two recent studies of adults at high risk reported no risk-associated differences in the processing of emotional faces. Mannie, Bristow, Harmer, and Cowen (2007) found that never-disordered young adults at familial risk for depression did not differ from controls in their identification of facial expressions of emotion with varying intensities. Similarly, Le Masurier, Cowen, and Harmer (2007) found no differences in emotion categorization of full-intensity expressions in non-disordered adult relatives of depressed patients. Neither of these studies, however, included a mood induction before the emotion identification task. Perhaps more important, both studies examined participants at familial risk for depression who had reached adulthood without experiencing a depressive episode. It is possible, therefore, that the participants in these studies were resilient.

Interestingly, our results differ in important ways from findings of research conducted with depressed adults. Although findings are not entirely consistent, most studies of emotion recognition show that depressed people, compared with controls, are faster in classifying negative expressions, have greater difficulty identifying positive facial expressions, or both (e.g., Surguladze et al., 2004). Most of these studies, however, used full-intensity expressions. Only one study has used a morphing task similar to ours with depressed adults. In that study, depression was associated primarily with difficulties identifying positive expressions (Joormann & Gotlib, 2006). Thus, both depressed adults and children at risk for depression show difficulties in emotion processing, but the nature of these difficulties differs. Depressed adults lack sensitivity to happiness, whereas high-risk children have difficulty identifying and differentiating among subtle negative emotional expressions.

The few studies that have investigated emotion recognition in children diagnosed with depression document difficulties in their processing of negative expressions. Van Beek and Dubas (2008b), for example, reported that depressive symptoms in adolescents were associated with higher perceived intensity of anger and lower perceived intensity of joy in low-intensity faces. In their 9–11-year-old subsample, higher levels of depression were also associated with more errors in the detection of anger. Lenti, Giacobbe, and Pegna (2000) found that currently depressed or dysthymic children had difficulty recognizing full-intensity negative emotions. It is difficult, however, to compare findings in children who are already depressed with results obtained with children who are not depressed but are at high risk. It is not clear, for example, that risk factors necessarily overlap with symptoms that are consequences of being, or having been, depressed.

Future studies are needed to investigate the mechanisms that may underlie the group differences obtained in this study. Researchers have proposed that age-related improvements in expression recognition are due to increased efficiency in encoding faces (e.g., De Sonneville et al., 2002). If so, high-risk girls might use emotion regulation strategies that lead them to avoid close inspection of negative facial expressions. Such avoidance could reduce the efficiency of their encoding and increase recognition errors. Other studies have linked the decoding of facial expressions to differences in exposure to negative and positive facial expressions in social relationships (e.g., peer relationships; van Beek & Dubas, 2008a). Because we did not assess these factors, these potential explanations of our data remain speculative.

We carefully selected our sample to control for various confounds that have characterized previous risk studies. These include current psychopathology in the parents and/or the children, and a history of psychopathology in the children. We also included only mothers who had experienced recurrent depressive episodes during their daughter’s lifetime. Controlling for these confounds, however, came at the cost of recruiting a sample that is not representative of the population of children at risk for depression. That is, many children of depressed parents have already developed a disorder by age 9–14, and such children would not have been included in our sample. It is possible, therefore, that we recruited a group of resilient girls. In addition, we examined only female offspring, and the mothers turned out to have a lower rate of comorbid conditions than recent epidemiological studies would predict. These two factors also limit the generalizability of our findings. Our diagnostic interviews ruled out any current or lifetime diagnosis in the daughters, and any current Axis-I diagnosis, including MDD, in the mothers. Nevertheless, the mothers in the high-risk group had higher BDI-II scores than did the never-disordered mothers. Moreover, 10 mothers with a history of recurrent depression also met diagnostic criteria for another lifetime DSM Axis-I disorder. We therefore cannot rule out two alternative explanations for the emotion-processing impairments observed in the daughters. The impairments may be related to the mothers’ greater incidence of lifetime diagnoses other than depression, or to their elevated levels of current depressive symptoms. We should point out, however, that covarying the mothers’ BDI-II scores in our analyses did not affect our results, nor did excluding mothers with a lifetime diagnosis other than depression from the analyses.
In addition, including the mothers’ number of depressive episodes in our analyses did not affect our findings. We did not record the daughters’ ages at the time of the mothers’ depressive episodes, so we were not able to control for this variable. Similarly, the high-risk daughters had slightly but significantly higher CDI-S scores than did the control daughters. These scores were nonetheless well below the suggested cut-off for clinically significant depression (Kovacs, 1992), and we used CDI-S scores as a covariate in our analyses. Therefore, we are confident in concluding that the findings obtained in our study are not attributable to differences in current symptoms of depression.

Another limitation of the current design involves the nature of the negative mood induction. We were guided by the formulations of cognitive models of depression and by previous findings with high-risk children (e.g., Taylor & Ingram, 1999). Accordingly, we exposed all of our participants to a negative mood manipulation. Because no participants completed the task without this manipulation, we cannot determine whether our results depend on the mood manipulation. We also used only adult faces in our emotion identification task. Future studies should include faces of children and adolescents to determine whether high-risk children process faces of peers differently than they do faces of adults (McClure, Pope, Hoberman, Pine, & Leibenluft, 2003). Finally, this study is limited by the lack of follow-up data. It is certainly important to elucidate how high-risk samples differ from controls; indeed, the current research strategy allows us to identify potential causal factors in the onset of depression. Nevertheless, follow-up data are needed to determine whether the processing of facial expressions of emotion is directly implicated in the onset of depression.

Taken together, the results of this study suggest that high-risk children have difficulties detecting subtle expressions of sadness and are prone to making errors when identifying low-intensity angry faces. Individuals use facial expressions of others as cues to regulate their own behavior, as indicators of success during attempts to regulate the emotions of others, and as important reflections of the attitudes of others. The relative inability of the high-risk children to accurately identify subtle changes in sad and angry facial expressions displayed by their interaction partners may contribute to their widely documented interpersonal difficulties and impairments in various aspects of social functioning (Hammen, 2008). Future studies investigating the predictive value of these individual differences in emotion face processing and mechanisms of the intergenerational transmission of risk for depression are clearly needed and could provide important information for interventions designed to prevent the onset of depression.

Key points

Depressed people differ from nondepressed people in their processing of facial expressions of emotion.

Differences in emotion face processing may play a role in the onset, maintenance, and recurrence of depression, but research has not established whether these differences are present before the onset of depressive episodes.

High-risk girls without any current disorder or history of psychopathology differed from low-risk girls in their processing of low-intensity facial expressions of emotion.

High-risk girls needed more intensity to identify sad faces and were more likely to mistake low-intensity angry faces for sad faces.

Individual differences in emotion expression recognition may increase vulnerability for the onset of disorders and should be considered in early intervention and prevention efforts.

Acknowledgements

This research was supported by a Young Investigator Award from the National Alliance for Research on Schizophrenia and Affective Disorders (NARSAD) to Jutta Joormann and NIMH Grant MH074849 to Ian H. Gotlib.

Footnotes

Conflict of interest statement: No conflicts declared.

A dot-probe task and a self-referential encoding task were also administered. The order of the tasks was counterbalanced. Including task order as a factor in our analyses did not affect the current findings.

We included CDI scores, mood ratings after the mood induction, and daughter age as covariates in our analyses. We found no significant effects of the covariates, and including them did not alter our findings. Daughter age was correlated only with the intensity needed to correctly identify happy expressions (r = −.31). Finally, within the high-risk group, neither mothers' BDI scores nor their number of depressive episodes was significantly correlated with any of the dependent measures.

To ensure that group differences in the intensity needed to accurately identify an emotion were not due to group differences in error rates, we reanalyzed the data with number of errors as a covariate. Error rates and intensity were not significantly correlated for any of the emotional expressions; including error rates did not change the findings and did not result in any significant effects involving the covariate.

References

- American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 4th edn. Washington, DC: American Psychiatric Association; 1994.

- Angold A, Costello EJ, Worthman CM. Puberty and depression: The roles of age, pubertal status and pubertal timing. Psychological Medicine. 1998;28:51–61. doi: 10.1017/s003329179700593x.

- Beck AT. Depression: Clinical, experimental, and theoretical aspects. New York: Harper & Row; 1967.

- Beck AT, Steer RA, Brown GK. Manual for the Beck Depression Inventory-II. San Antonio, TX: Psychological Corporation; 1996.

- Bouhuys AL, Geerts E, Gordijn MCM. Depressed patients’ perceptions of facial emotions in depressed and remitted states are associated with relapse: A longitudinal study. Journal of Nervous and Mental Disease. 1999;187:595–602. doi: 10.1097/00005053-199910000-00002.

- Columbus C (director). Stepmom [Film]. 1998.

- De Sonneville LM, Verschoor CA, Njiokiktjien C, Op het Veld V, Toorenaar N, Vranken M. Facial identity and facial emotions: Speed, accuracy, and processing strategies in children and adults. Journal of Clinical and Experimental Neuropsychology. 2002;24:200–213. doi: 10.1076/jcen.24.2.200.989.

- Durand K, Gallay M, Seigneuric A, Robichon F, Baudouin JY. The development of facial emotion recognition: The role of configural information. Journal of Experimental Child Psychology. 2007;97:14–27. doi: 10.1016/j.jecp.2006.12.001.

- Ekman P, Friesen WV. Measuring facial movement. Environmental Psychology and Nonverbal Behavior. 1976;1:56–75.

- First MB, Spitzer RL, Gibbon M, Williams JBW. Structured Clinical Interview for DSM-IV Axis I Disorders – Patient Edition (SCID-I/P, Version 2.0). New York: Biometrics Research Department, New York State Psychiatric Institute; 1995.

- Geerts E, Bouhuys N. Multi-level prediction of short-term outcome of depression: Non-verbal interpersonal processes, cognitions and personality traits. Psychiatry Research. 1998;79:59–72. doi: 10.1016/s0165-1781(98)00021-3.

- Guyer AE, McClure EB, Adler AD, Brotman MA, Rich BA, Kimes AS, et al. Specificity of facial expression labeling deficits in childhood psychopathology. Journal of Child Psychology and Psychiatry. 2007;48:863–871. doi: 10.1111/j.1469-7610.2007.01758.x.

- Hale WW. Judgment of facial expressions and depression persistence. Psychiatry Research. 1998;80:265–274. doi: 10.1016/s0165-1781(98)00070-5.

- Hammen C. Children of depressed parents. In: Gotlib IH, Hammen CL, editors. Handbook of depression. 2nd edn. New York: Guilford Press; 2008. pp. 275–297.

- Herba CM, Benson P, Landau S, Russell T, Goodwin C, et al. Impact of familiarity upon children’s developing facial expression recognition. Journal of Child Psychology and Psychiatry. 2008;49:201–210. doi: 10.1111/j.1469-7610.2007.01835.x.

- Herba CM, Landau S, Russell T, Ecker C, Phillips ML. The development of emotion-processing in children: Effects of age, emotion, and intensity. Journal of Child Psychology and Psychiatry. 2006;47:1098–1106. doi: 10.1111/j.1469-7610.2006.01652.x.

- Joiner TE, Jr, Timmons KA. In: Handbook of depression. 2nd edn. Gotlib IH, Hammen CL, editors. New York: Guilford Press; 2008. pp. 322–339.

- Joormann J, Gotlib IH. Is this happiness I see? Biases in the identification of emotional facial expressions in depression and social phobia. Journal of Abnormal Psychology. 2006;115:705–714. doi: 10.1037/0021-843X.115.4.705.

- Kaufman J, Birmaher B, Brent D, Ryan ND, Rao U. K-SADS-PL. Journal of the American Academy of Child and Adolescent Psychiatry. 2000;39:1208. doi: 10.1097/00004583-200010000-00002.

- Kovacs M. The Children’s Depression Inventory manual. New York: Multi-Health Systems; 1992.

- Le Masurier M, Cowen PJ, Harmer CJ. Emotional bias and waking salivary cortisol in relatives of patients with major depression. Psychological Medicine. 2007;37:403–410. doi: 10.1017/S0033291706009184.

- Lenti C, Giacobbe A, Pegna C. Recognition of emotional facial expressions in depressed children and adolescents. Perceptual and Motor Skills. 2000;91:227–236. doi: 10.2466/pms.2000.91.1.227.

- Lieb R, Isensee B, Hofler M, Pfister H, Wittchen H-U. Parental major depression and the risk of depression and other mental disorders in offspring: A prospective-longitudinal community study. Archives of General Psychiatry. 2002;59:365–374. doi: 10.1001/archpsyc.59.4.365.

- Mannie ZN, Bristow GC, Harmer CJ, Cowen PJ. Impaired emotional categorization in young people at increased familial risk of depression. Neuropsychologia. 2007;45:2975–2980. doi: 10.1016/j.neuropsychologia.2007.05.016.

- March JS. Multidimensional Anxiety Scale for Children: Technical manual. Toronto, ON: Multi-Health Systems; 1997.

- Mathews A, MacLeod C. Cognitive vulnerability to emotional disorders. Annual Review of Clinical Psychology. 2005;1:167–195. doi: 10.1146/annurev.clinpsy.1.102803.143916.

- McClure E, Pope K, Hoberman A, Pine D, Leibenluft E. Facial expression recognition in adolescents with mood and anxiety disorders. American Journal of Psychiatry. 2003;160:1172–1174. doi: 10.1176/appi.ajp.160.6.1172.

- Monroe SM, Simons AD. Diathesis-stress theories in the context of life stress research: Implications for the depressive disorders. Psychological Bulletin. 1991;110:406–425. doi: 10.1037/0033-2909.110.3.406.

- Nowicki S, Jr, Carton E. The relation of nonverbal processing ability of faces and voices and children’s feelings of depression and competence. The Journal of Genetic Psychology. 1997;158:357–363. doi: 10.1080/00221329709596674.

- Silverman WK. Psychological and behavioral effects of mood-induction procedures with children. Perceptual and Motor Skills. 1986;63:1327–1333.

- Surguladze SA, Young AW, Senior C, Brebion G, Travis MJ, Phillips ML. Recognition accuracy and response bias to happy and sad facial expressions in patients with major depression. Neuropsychology. 2004;18:212–218. doi: 10.1037/0894-4105.18.2.212.

- Taylor L, Ingram RE. Cognitive reactivity and depressotypic information processing in children of depressed mothers. Journal of Abnormal Psychology. 1999;108:202–208. doi: 10.1037//0021-843x.108.2.202.

- Thomas LA, De Bellis MD, Graham R, LaBar KS. Development of emotional facial recognition in late childhood and adolescence. Developmental Science. 2007;10:547–558. doi: 10.1111/j.1467-7687.2007.00614.x.

- Ulead. Morph Studio (Version 1.0) [Computer software]. Torrance, CA: Author; 2000.

- van Beek Y, Dubas J. Age and gender differences in decoding basic and non-basic facial expressions in late childhood and early adolescence. Journal of Nonverbal Behavior. 2008a;32:37–52.

- van Beek Y, Dubas J. Decoding basic and non-basic facial expressions and depressive symptoms in late childhood and adolescence. Journal of Nonverbal Behavior. 2008b;32:53–64.

- Walker E. Emotion recognition in disturbed and normal children: A research note. Journal of Child Psychology and Psychiatry. 1981;22:263–268. doi: 10.1111/j.1469-7610.1981.tb00551.x.

- Wechsler D. Wechsler Intelligence Scale for Children–IV. San Antonio, TX: The Psychological Corporation; 2003.

- Weir P (director). Dead Poets Society [Film]. 1989.

- Westermann R, Spies K, Stahl G, Hesse FW. Relative effectiveness and validity of mood induction procedures: A meta-analysis. European Journal of Social Psychology. 1996;26:557–580.

- Young A, Perrett D, Calder A, Sprengelmeyer R, Ekman P. Facial Expressions of Emotion – Stimuli and Tests (FEEST). Bury St. Edmunds, England: Thames Valley Test; 2002.

- Zeiff H (director). My Girl [Film]. 1991.