Abstract

Previous studies have shown that recognition of facial expressions is influenced by the affective information provided by the surrounding scene. The goal of this study was to investigate whether similar effects could be obtained for bodily expressions. Images of emotional body postures were briefly presented as part of social scenes showing either neutral or emotional group actions. In Experiment 1, fearful and happy bodies were presented in fearful, happy, neutral and scrambled contexts. In Experiment 2, we compared happy with angry body expressions. In Experiments 3 and 4, we blurred the facial expressions of all people in the scene. This way, we were able to ascribe possible scene effects to the presence of body expressions visible in the scene and we were able to measure the contribution of facial expressions to the body expression recognition. In all experiments, we observed an effect of social scene context. Bodily expressions were better recognized when the actions in the scenes expressed an emotion congruent with the bodily expression of the target figure. The specific influence of facial expressions in the scene was dependent on the emotional expression but did not necessarily increase the congruency effect. Taken together, the results show that the social context influences our recognition of a person’s bodily expression.

Keywords: Emotion, Body expression, Social scene, Context effects

Facial expressions are by far the most frequently used stimuli in human emotion perception research. Over decades, a large body of evidence has been published showing that emotion perception is not based on facial information alone (Hunt 1941). Indeed, in our natural world, a face is usually encountered not as an isolated object but as an integrated part of a whole body. The face and the body both contribute in conveying the emotional state of the individual. Meeren et al. (2005) show that observers judging a facial expression (fear or anger) are strongly influenced by emotional body language; an enhancement of the occipital P1 component as early as 115 ms after stimulus presentation onset points to the existence of a rapid neural mechanism sensitive to the agreement between simultaneously presented facial and bodily emotional expressions. Aviezer et al. (2008a) positioned prototypical pictures of disgust faces on torsos conveying different emotions. Their results showed that placing a face in a context induced striking changes in the recognition of emotional categories from the facial expressions to the extent where the “original” basic expression was lost when positioned on an emotionally incongruent torso (for the interested reader see Aviezer et al. 2008b). Knowledge of the social situation (Carroll and Russell 1996), body postures (Meeren et al. 2005; Van den Stock et al. 2007; Aviezer et al. 2008a), voices (de Gelder and Vroomen 2000; Van den Stock et al. 2007), scenes (Righart and de Gelder 2006, 2008a, b), linguistic labels (Barrett et al. 2007), or other emotional faces (Russell and Fehr 1987) all influence emotion perception.

Research on context effects has a long tradition in object but not in face recognition. Because of repetitive co-occurrence of objects or co-occurrence of a given object in a specific context, our brains generate expectations (Bar and Ullman 1996; Biederman et al. 1974). A context can facilitate object detection and recognition (Biederman et al. 1982; Boyce and Pollatsek 1992; Boyce et al. 1989; Palmer 1975), even when glimpsed briefly and even when the background can be ignored (Davenport and Potter 2004). Joubert et al. (2008) observed that context incongruence induced a drop of correct hits and an increase in reaction times, affecting even early behavioral responses. They conclude that object and context must be processed in parallel with continuous interactions, possibly through feed-forward co-activation of populations of visual neurons selective to diagnostic features. Facilitation would be induced by the customary co-activation of “congruent” populations of neurons, whereas interference would take place when conflictual populations of neurons fire simultaneously. Bar (2004) proposes a model in which interactions between context and objects take place in the inferior temporal cortex.

In line with the evolutionary significance of the information, the effects of the emotional gist of a scene may occur at an early level and it has been suggested that the rapid extraction of the gist of a scene may be based on low spatial frequency coding (Oliva and Schyns 1997). We previously showed scene context congruency effects on the perception of facial expressions (Righart and de Gelder 2006, 2008a, b). They were seen when participants explicitly categorized the emotional expression of the face (Righart and de Gelder 2008a) but also when they focussed on its orientation (Righart and de Gelder 2006). This indicates that affective gist congruency reflects an early and mandatory process and suggests a perceptual basis. Our EEG studies support this view: the presence of a fearful expression in a fearful context enhanced the face-sensitive N170 amplitude when compared to a face in a neutral context. This effect was absent for contexts-only, indicating that it resulted from the combination of a fearful face in a fearful context (Righart and de Gelder 2006). Righart and de Gelder (2008a) replicated this finding by briefly (200 ms) presenting fearful faces in fearful versus happy scenes.

Similar context effects have already been found for bodies. Using point-light displays, Thornton and Vuong (2004) have shown that the perceived action of a walker depends upon actions of nearby “to-be-ignored” walkers. The task-irrelevant figures could not be ignored and were processed unconsciously to a level where they influenced behavior. Another point-light study demonstrates that the recognition of a person’s emotional state depends upon another person’s presence (Clarke et al. 2005).

If indeed we recognize a person’s emotional behavior in relation to that of the social group, it is important to focus on the specific aspects of group behavior. Group behavior may be considered at different levels, of which three are relevant for understanding the visual process at stake: (1) the relative group size, (2) the dynamic motor and action aspects of the group and (3) the affective significance of the group’s activity (Argyle 1988). Context effects may take place along all three dimensions and therefore require appropriate control conditions. First, group size is not considered as a variable in our study as the different group scenes used all have similar group sizes. The second and third aspects, relating to action and affect, were the focus of our recent brain imaging studies (de Gelder et al. 2004; Grèzes et al. 2007; Kret et al. submitted; Pichon et al. 2008); see de Gelder et al. (2010) for an overview.

Here we investigated whether briefly viewed information from a task-irrelevant social scene influences how observers categorize the emotional body expression of the central figure. For this purpose, we selected scenes that represent a group of people engaged in an intense action that was either neutral or affectively laden. By contrasting the affective meaning and keeping the action representation similar, we manipulated specifically the affective dimension of the social scenes. Our main interests were threefold. First, we aimed to investigate the influence of a congruent versus incongruent scene on body expression recognition. We expected enhanced performance in the congruent conditions. Second, we were interested in disambiguating the contribution from the emotion versus the action component. Our hypothesis was that the similarity along the emotion dimension of the social scene, rather than along the action dimension, influences recognition of the target body expression. If so, bodily expressions may be recognized faster in an emotionally congruent than in a neutral action scene, indicating that the effect derives from target-scene emotional congruency. Third, we aimed to investigate the contribution of facial expressions visible in the scene to the recognition of emotional body expressions. Based on previous studies that report strong mutual influence of face and body expressions, we expected the strongest context effects when scenic facial expressions were visible.

In Experiment 1, these predictions were tested by presenting fearful and happy bodies in fearful, happy, neutral and scrambled contexts. In Experiment 2, we compared happy with angry body expressions. Experiment 3 was similar to Experiment 1, but the faces in the background were blurred to ascertain that possible effects were a result of the body expressions visible in the scene and not of bystanders’ facial expressions. In Experiment 4, faces in the scenes were blurred and the same design as Experiment 2 was kept. We used naturalistic color photographs as color has been shown to improve object and scene recognition (Oliva and Schyns 2000; Wurm et al. 1993). Based on previous results (Righart and de Gelder 2006, 2008a, b) and on studies of rapid scene recognition (Bar et al. 2006; Thorpe and Fabre-Thorpe 2002; Maljkovic and Martini 2005), we used short presentation times.

Angry, fearful, sad and happy expressions are the emotions that are most often used in emotion research (de Gelder 2006). Here we specifically wanted to contrast a positive versus negative body expression in a positive/welcoming versus negative/threatening social scene that one wants to avoid. As an opponent of the positive, happy emotion, we could choose among angry, disgusted, fearful or sad stimuli. We did not opt for sad bodies and scenes since these contain less action than happy bodies and scenes, whereas angry and fearful stimuli contain comparable action intensity. Whereas disgust can be expressed very clearly via the face, the body expressions are more ambiguous and resemble fearful expressions (de Gelder 2006). We did not include anger and fear in one design since that would result in twice as many negative as positive emotions. With the current design, we had an equal number of positive and negative body expressions in each experiment.

The influence of a social context on the perception of an emotional body expression

Experiment 1. Fearful and happy bodies in a social emotional context including faces

Method

Images of emotional body postures were briefly presented in scenes showing intense group activities with either neutral or emotional valence. Participants rapidly categorized the target body expression.

Participants: Twenty-four students of Tilburg University (7 men; mean age: 20 years, range 17–25 years old) with no neurological or psychiatric history and normal or corrected-to-normal vision participated in the study. The experimental procedures were in accordance with the Helsinki Declaration and approved by Tilburg University.

Apparatus, Design and Procedure: We briefly describe the construction and validation of the target body stimuli. A total of 38 male and 46 female amateur actors were recruited. Prior to the photography session, they were instructed with a standardized procedure and received payment. As part of the instructions, the actors were familiarized with a typical scenario corresponding to each emotion; the fearful scenario was an encounter with an aggressive dog and the happy scenario was an encounter with a friend. A total of 869 body stimuli (consisting of fearful, happy, angry, sad, disgusted and neutral instrumental actions) were included in the validation study and were shown to 120 participants. Stimuli were presented for 4 s with an inter-stimulus interval of 7 s. Participants were instructed to categorize the emotion displayed by circling the correct answer on an answer sheet. Eight happy and fearful body images, correctly recognized on average for 91% (standard deviation, SD 10), were included in the experiment.

Scenes were selected from the Internet. We took care to make them gender balanced (for example, the neutral condition included a soccer field with male players; therefore, we also included a female hockey team playing). In a separate validation study, we measured affective gist recognition by presenting each image twice for 100 ms in random order. Fearful (people running away from danger), happy (people dancing at a party) and neutral scenes (people involved in sports) were correctly recognized at rates of 87, 97, and 92%, respectively. Scenes showing bodies involved in neutral actions served as baseline.

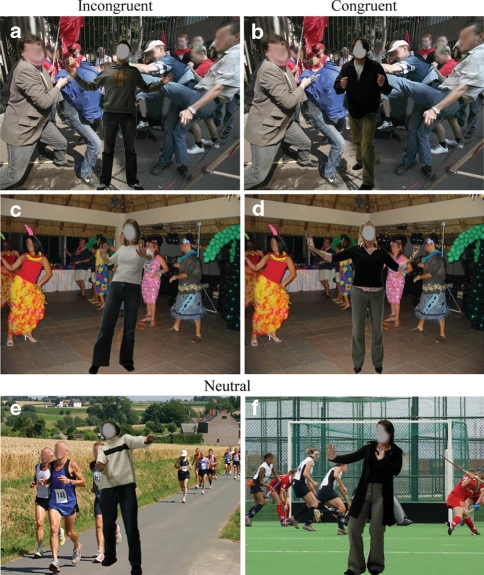

We also validated the stimuli as they were used in the experiments described in this paper. The selected bodies were pasted on fearful, angry, happy and neutral scenes. These were presented with unlimited duration to 24 participants who had to categorize the emotion of the middle target body. The mean (M) recognition rates and SDs were as follows: angry bodies in angry scenes (M = 91%, SD 8), angry bodies in happy scenes (M = 89%, SD 11), angry bodies in neutral scenes (M = 91%, SD 9), fearful bodies in fearful scenes (M = 97%, SD 6), fearful bodies in happy scenes (M = 98%, SD 4), fearful bodies in neutral scenes (M = 96%, SD 8), happy bodies in happy scenes (M = 75%, SD 21), happy bodies in fearful scenes (M = 73%, SD 23), happy bodies in angry scenes (M = 75%, SD 22) and happy bodies in neutral scenes (M = 77%, SD 21). Body expressions were not better or worse recognized in a congruent versus incongruent or in a congruent versus neutral scene when stimuli were presented with unlimited duration. See Fig. 1 for stimulus examples.

Fig. 1.

a The upper left figure shows a man who is joyfully surprised and greets an old friend. Strangely, this man is in the middle of a fight. b The upper right figure shows a man who is threatening another person who also wants to join the fight. c Middle left: the woman in the foreground is frightened by something, but the other people in the scene do not experience the situation as threatening and are still enjoying the party, as can be read from their body language. The incongruence makes recognition of the emotion of the foreground figure difficult. d Middle right: the girl in the foreground is happily welcoming a new visitor/a friend at the party. Her emotion matches the social situation and the emotion of the other people. e/f The bottom figures show a man (left) and a woman (right) who are frightened by something. The people in the scene are involved in sports. Body expressions are easier to recognize when congruent with the social scene.

Mosaic squared scrambles (38 × 28) were created using MATLAB, containing identical luminance, color and contrast as the originals. For each scene category, eight similar scenes were included. There were two emotions (fearful and happy) shown by eight actors (half male), four context categories (fearful, happy, neutral, scrambles) and eight different scenes (versions) per context category, yielding eight conditions of 64 stimuli each (512 stimuli). Stimuli were arranged in two equivalent blocks to allow participants a 2-min break in between. Each block thus contained 256 randomized trials. In order to have the scrambles equally represented in the experiment and not to fatigue the participant with superfluous trials, we included 64 of the 384 scrambled counterparts of all unscrambled stimuli. Since we controlled for handedness by counterbalancing two versions of the experiment across the participants (in version 1, fear was button no. 1 on the response box and happy button no. 2; in version 2, the inverse), we were able to select different scrambles per version and use as many different ones as possible. Initially, we meant to use the scrambled condition as a non-emotion, non-action condition. However, across all experiments, bodies were significantly better recognized in these scrambled contexts than in unscrambled contexts (a possible pop-out effect, or due to the semantic information content of the background) (t (175) ≤ 2.58, P ≤ .01), similar to the results for faces in scrambled contexts observed in Righart and de Gelder (2006). Therefore, the neutral condition, which still contains action (without emotion), was considered a more viable baseline than the scrambled scenes (see also Sommer et al. 2008).
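The factorial structure of the stimulus set can be made explicit with a short enumeration. The following Python sketch is illustrative only: the variable and function names are assumptions, and it reproduces the full 2 × 8 × 4 × 8 crossing (512 stimuli) without modeling the subsampling of scrambled stimuli described above.

```python
from itertools import product
from collections import Counter

# Factor levels taken from the design description; names are illustrative.
BODY_EMOTIONS = ["fearful", "happy"]
CONTEXTS = ["fearful", "happy", "neutral", "scrambled"]
N_ACTORS = 8   # eight actors per emotion
N_SCENES = 8   # eight scene versions per context category


def build_stimulus_list():
    """Return every body x actor x context x scene combination as a dict."""
    return [
        {"body": body, "actor": actor, "context": context, "scene": scene}
        for body, actor, context, scene in product(
            BODY_EMOTIONS, range(N_ACTORS), CONTEXTS, range(N_SCENES)
        )
    ]


stimuli = build_stimulus_list()

# Count stimuli per body-emotion x context condition.
per_condition = Counter((s["body"], s["context"]) for s in stimuli)

print(len(stimuli), len(per_condition), set(per_condition.values()))
```

Each of the eight body × context conditions contains 8 actors × 8 scenes = 64 stimuli, which matches the counts given in the text.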

Participants were seated at a table in a dimly lit, soundproof booth. Distance to the computer screen was 60 cm. Stimuli were presented on a PC screen with a 60 Hz refresh rate and subtended 19.9° of visual angle vertically and 30.8° horizontally. Instructions were given verbally and via an instruction screen. Participants were given a two-alternative forced choice task over two emotions and were instructed to focus on the main figure in the middle of the screen, to categorize its emotion as accurately and rapidly as possible, to respond with their right index and middle finger and not to change the position of their fingers during the experiment. A trial started with a white fixation cross on a gray screen (300 ms), followed by a stimulus (100 ms) and then a gray screen shown until button press (with a maximum duration of 8 s).
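For readers who wish to reproduce the viewing geometry, the reported visual angles and durations can be converted into physical stimulus size and screen refreshes. The sketch below is a minimal illustration under the standard relation size = 2 · d · tan(θ/2); the function names are hypothetical and the physical sizes are derived here, not reported in the study.

```python
import math


def size_from_visual_angle(angle_deg, distance_cm):
    """Physical extent (cm) subtending angle_deg at viewing distance distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def frames_for_duration(duration_ms, refresh_hz):
    """Number of screen refreshes closest to the requested duration."""
    return round(duration_ms * refresh_hz / 1000.0)


# Values from the text: 60 cm viewing distance, 19.9 x 30.8 degrees, 60 Hz screen.
height_cm = size_from_visual_angle(19.9, 60.0)
width_cm = size_from_visual_angle(30.8, 60.0)
stimulus_frames = frames_for_duration(100, 60)   # 100 ms stimulus
fixation_frames = frames_for_duration(300, 60)   # 300 ms fixation cross

print(f"{height_cm:.1f} cm x {width_cm:.1f} cm, "
      f"{stimulus_frames} stimulus frames, {fixation_frames} fixation frames")
```

Under these assumptions the stimuli measured roughly 21 cm by 33 cm on screen, and the 100 ms presentation corresponds to 6 refreshes at 60 Hz.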

Results

Trials with reaction times (RT) below 200 ms or above 2,000 ms were discarded from the analysis, leading to 2.1% outliers. Trials were also excluded from the RT analyses if the response was incorrect. Main and interaction effects of scene and body emotion for mean accuracy (ACC) and RT were tested in a 2 × 3 repeated measures analysis of variance (ANOVA) with two within-participant variables, “body emotion” (fear and happy) and “context emotion” (fear, happy, neutral). T-test planned comparisons were used to test our hypothesis of congruency effects and to compare the perception of an emotional body expression in a congruent emotional scene with a scene that contains action but is emotionally neutral. We expected that a congruent social scene would not only enhance recognition, it would also speed up recognition when compared to an incongruent and also when compared to a neutral scene. All statistical information can be found in Table 1.
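The trial selection rules described above can be summarized in a few lines of Python. This is a sketch, not the analysis code used in the study: the trial representation (a dict with `rt` in milliseconds and a `correct` flag), the function name and the example data are all invented for illustration.

```python
def trim_trials(trials, rt_min=200.0, rt_max=2000.0):
    """Apply the RT window, then keep correct responses for the RT analysis.

    Returns (in_range, rt_trials, outlier_rate):
      in_range     - trials with rt_min <= rt <= rt_max (used for accuracy)
      rt_trials    - the correct trials among in_range (used for RT)
      outlier_rate - proportion of trials outside the RT window
    """
    in_range = [t for t in trials if rt_min <= t["rt"] <= rt_max]
    rt_trials = [t for t in in_range if t["correct"]]
    outlier_rate = 1.0 - len(in_range) / len(trials) if trials else 0.0
    return in_range, rt_trials, outlier_rate


# Invented example data, for illustration only.
example = [
    {"rt": 150.0, "correct": True},    # too fast: discarded
    {"rt": 640.0, "correct": True},    # kept for accuracy and RT
    {"rt": 710.0, "correct": False},   # kept for accuracy, dropped from RT
    {"rt": 2300.0, "correct": True},   # too slow: discarded
]
in_range, rt_trials, outlier_rate = trim_trials(example)
print(len(in_range), len(rt_trials), outlier_rate)
```

Applying the RT window before the correctness filter keeps the outlier percentage independent of accuracy, which is how the outlier rates are reported in the text.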

Table 1.

| Comparison | Accuracy (%), M (SD) | Accuracy test | Reaction time (ms), M (SD) | Reaction time test |
|---|---|---|---|---|
| Experiment 1 (N = 24) | | | | |
| Congruent context vs. Incongruent context | 87 (10) vs. 74 (26) | t = 2.43, P < .05, d = .31 | 665 (110) vs. 682 (129) | t = 1.59, P = .063, d = .14 |
| Congruent context vs. Neutral context | 87 (10) vs. 83 (15) | t = 1.53, P = .07, d = .32 | 665 (110) vs. 688 (137) | t = 1.97, P < .05, d = .19 |
| Neutral context vs. Incongruent context | 83 (15) vs. 74 (26) | t = 3.17, P < .005, d = .42 | 688 (137) vs. 682 (129) | P = .650 |
| Fearful body in fearful context vs. Fearful body in happy context | 87 (10) vs. 72 (26) | t = 2.76, P < .005, d = .76 | 643 (108) vs. 668 (129) | t = 1.79, P < .05, d = .21 |
| Fearful body in fearful context vs. Fearful body in neutral context | 87 (10) vs. 77 (26) | t = 1.89, P < .05, d = .51 | 643 (108) vs. 693 (162) | t = 2.52, P < .01, d = .36 |
| Happy body in happy context vs. Happy body in fearful context | 88 (12) vs. 75 (27) | t = 2.08, P < .05, d = .64 | 687 (115) vs. 696 (138) | P = .228 |
| Happy body in happy context vs. Happy body in neutral context | 88 (12) vs. 89 (10) | P = .160 | 687 (115) vs. 682 (124) | P = .276 |
| Experiment 2 (N = 22) | | | | |
| Congruent context vs. Incongruent context | 87 (8) vs. 74 (16) | t = 4.07, P < .001, d = 1.03 | 659 (170) vs. 683 (196) | t = 3.02, P < .005, d = .13 |
| Congruent context vs. Neutral context | 87 (8) vs. 80 (9) | t = 5.68, P < .001, d = .82 | 659 (170) vs. 661 (174) | P = .302 |
| Neutral context vs. Incongruent context | 80 (9) vs. 74 (16) | t = 2.53, P < .01, d = .46 | 661 (174) vs. 683 (196) | t = 3.19, P < .01, d = .12 |
| Angry body in angry context vs. Angry body in happy context | 83 (15) vs. 70 (17) | t = 6.13, P < .001, d = .81 | 639 (160) vs. 662 (146) | t = 2.54, P < .01, d = .15 |
| Angry body in angry context vs. Angry body in neutral context | 83 (15) vs. 72 (13) | t = 9.45, P < .001, d = .78 | 639 (160) vs. 645 (170) | P = .240 |
| Happy body in happy context vs. Happy body in angry context | 90 (7) vs. 79 (24) | t = 2.70, P < .01, d = .62 | 678 (189) vs. 705 (256) | t = 1.32, P = .101, d = .12 |
| Happy body in happy context vs. Happy body in neutral context | 90 (7) vs. 88 (13) | t = 1.34, P = .096, d = .19 | 678 (189) vs. 678 (187) | P = .485 |
| Experiment 3 (N = 22) | | | | |
| Congruent context vs. Incongruent context | 87 (8) vs. 74 (25) | t = 2.47, P < .05, d = .70 | 691 (145) vs. 714 (174) | t = 1.98, P < .05, d = .14 |
| Congruent context vs. Neutral context | 87 (8) vs. 81 (14) | t = 2.09, P < .05, d = .52 | 691 (145) vs. 724 (166) | t = 3.00, P < .005, d = .21 |
| Neutral context vs. Incongruent context | 81 (14) vs. 74 (25) | t = 2.63, P < .01, d = .35 | 724 (166) vs. 714 (174) | P = .288 |
| Fearful body in fearful context vs. Fearful body in happy context | 88 (9) vs. 73 (27) | t = 2.69, P < .01, d = .77 | 676 (142) vs. 724 (183) | t = 3.12, P < .005, d = .29 |
| Fearful body in fearful context vs. Fearful body in neutral context | 88 (9) vs. 80 (22) | t = 1.92, P < .05, d = .48 | 676 (142) vs. 725 (186) | t = 3.27, P < .005, d = .30 |
| Happy body in happy context vs. Happy body in fearful context | 87 (11) vs. 74 (26) | t = 2.22, P < .05, d = .65 | 705 (180) vs. 705 (157) | P = .499 |
| Happy body in happy context vs. Happy body in neutral context | 87 (11) vs. 83 (16) | P = .105 | 705 (180) vs. 723 (168) | P = .118 |
| Experiment 4 (N = 20) | | | | |
| Congruent context vs. Incongruent context | 85 (10) vs. 77 (18) | t = 2.54, P < .01, d = .55 | 619 (140) vs. 626 (139) | P = .178 |
| Congruent context vs. Neutral context | 85 (10) vs. 81 (13) | t = 2.72, P < .01, d = .34 | 619 (140) vs. 624 (145) | P = .250 |
| Neutral context vs. Incongruent context | 81 (13) vs. 77 (18) | t = 1.85, P < .05, d = .25 | 624 (145) vs. 626 (139) | P = .713 |
| Angry body in angry context vs. Angry body in happy context | 82 (12) vs. 77 (18) | t = 1.67, P < .05, d = .29 | 603 (120) vs. 624 (130) | t = 2.35, P < .05, d = .17 |
| Angry body in angry context vs. Angry body in neutral context | 82 (12) vs. 80 (15) | P = .253 | 603 (120) vs. 612 (126) | P = .150 |
| Happy body in happy context vs. Happy body in angry context | 88 (13) vs. 77 (21) | t = 3.01, P < .005, d = .63 | 636 (166) vs. 628 (154) | P = .449 |
| Happy body in happy context vs. Happy body in neutral context | 88 (13) vs. 81 (17) | t = 3.74, P < .001, d = .46 | 636 (166) vs. 636 (172) | P = .493 |

Congruent = happy body in happy context + angry (or fearful) body in angry (or fearful) context

Incongruent = happy body in angry (or fearful) context + angry (or fearful) body in happy context

Neutral = happy body in neutral context + angry (or fearful) body in neutral context

M mean (in percentage correct or milliseconds), SD standard deviation
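The condition labels defined in the table notes amount to a simple mapping from body emotion and context emotion to a congruency category. The sketch below expresses that mapping in Python; the function name and string labels are assumptions made for illustration, and scrambled contexts are deliberately left out because they are not part of the table.

```python
def congruency(body_emotion, context_emotion):
    """Label a trial following the definitions in the table notes.

    body_emotion:    "happy", "fearful" or "angry"
    context_emotion: "happy", "fearful", "angry" or "neutral"
    """
    if context_emotion == "neutral":
        return "neutral"
    if body_emotion == context_emotion:
        return "congruent"
    return "incongruent"


print(congruency("fearful", "fearful"))  # congruent
print(congruency("happy", "angry"))      # incongruent
print(congruency("angry", "neutral"))    # neutral
```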

Accuracy: There was a main effect of context emotion [F(2, 46) = 5.39, P < .01, η2p = .19] and of body emotion [F(1, 23) = 4.30, P < .05, η2p = .16]. Bonferroni corrected pairwise comparisons revealed that bodies were better recognized in a neutral context than in a happy context (P < 0.05). Happy bodies were better recognized than fearful bodies (P < 0.01). An interaction effect was observed for body emotion × context emotion [F(2, 46) = 5.39, P < .01, η2p = .19].

Bodies, irrespective of the specific emotion, were more accurately recognized in a congruent context versus incongruent context and in a neutral context versus in an incongruent context. There was a trend toward significance for enhanced body recognition in a congruent context versus in a neutral context. Fearful body expressions were more accurately recognized in a fearful context than in a happy context or than in a neutral context. Happy bodies were better recognized in a happy versus in a fearful context but not versus in a neutral context.

Reaction time: An interaction was found for body emotion × context emotion [F(2, 46) = 3.83, P < .05, η2p = .14].

Bodies were recognized faster in a congruent context than in a neutral one. There was a trend toward significance for faster recognition of body expressions in a congruent versus incongruent context, but there was no difference between a neutral and an incongruent context. There was a faster response for a fearful body in a fearful context than for a fearful body in a happy or neutral context. A happy body in a happy context did not differ significantly from a happy body in a fearful context or in a neutral context.
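The partial eta squared values reported alongside the F statistics can be recovered from F and its degrees of freedom through the standard identity η2p = (F · df_effect) / (F · df_effect + df_error). The Python sketch below applies that identity to the values reported for Experiment 1; the function name is an assumption, and the check is offered as a reader's aid rather than as part of the original analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F statistics reported for Experiment 1.
context_acc = partial_eta_squared(5.39, 2, 46)      # main effect of context emotion
body_acc = partial_eta_squared(4.30, 1, 23)         # main effect of body emotion
interaction_rt = partial_eta_squared(3.83, 2, 46)   # RT interaction

print(round(context_acc, 2), round(body_acc, 2), round(interaction_rt, 2))
```

The computed values round to .19, .16 and .14, in agreement with the effect sizes given in the text.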

Discussion

Target body expressions were more accurately recognized in congruent than in incongruent social scenes, with a trend in the same direction relative to the neutral baseline scenes. Recognizing a fearful expression was more accurate in a context of people fleeing from danger, and a happy body expression was better recognized in a context of people dancing at a party than in a fearful context. An emotionally congruent scene possibly speeds up the recognition of fearful body expressions. Although fearful bodies were recognized faster in a fearful context, we cannot conclude that happy bodies were also recognized faster in the congruent condition. Importantly, the ACC congruency effects did not reflect speed-accuracy trade-offs.

Experiment 2. Angry and happy bodies in a social emotional context including faces

Our goal was to measure whether the observed effects of Experiment 1 would generalize to angry expressions. Therefore, we replicated the previous experiment with angry rather than fearful bodily expressions in an angry (congruent), happy (incongruent) or neutral context.

Method

Participants: A new group of 22 students participated (5 men; range 18–25 years old, Mean: 21 years old).

Apparatus, Design and Procedure: Apparatus, design and procedure were identical to the former experiment with the difference of angry rather than fearful stimuli. Angry scenes (people on strike) were 88% correctly recognized as was measured subsequent to the main experiment in a separate validation of the scenes without foreground bodies. Eight angry body images that were on average correctly recognized for 92% (SD 10) replaced the fearful body images that were used in the previous experiment.

Results

ACC and RT were calculated after exclusion of two participants due to recognition at chance level and extremely fast RTs. 2.5% of the trials fell out of the range of 200–2,000 ms and were treated as outliers. Trials were also excluded from the RT analyses if the response was incorrect.

Accuracy: A main effect was found of body emotion [F (1, 21) = 6.31, P < .05, η2p = .23]. Bonferroni corrected pairwise comparisons revealed that happy bodies were better recognized than angry bodies (M = 86, SD 17 vs. M = 75, SD 16) (P < 0.05). There was no main effect of scene emotion. There was a significant interaction between body emotion and context emotion [F (2, 42) = 17.41, P < .001, η2p = .45].

Bodies were better recognized in a congruent versus in an incongruent and versus in a neutral context and also in a neutral versus in an incongruent context. Angry bodies in an angry context were better recognized than in a happy context or in a neutral context. Happy bodies were better recognized in a happy context than in an angry context. Happy bodies were not better recognized in a happy than in a neutral context, although a trend toward significance was observed.

Reaction time: There was a main effect for body emotion [F(1, 21) = 4.32, P < .05, η2p = .17]. Bonferroni corrected pairwise comparisons revealed that angry bodies were recognized faster than happy bodies (M = 649, SD 157 vs. M = 687, SD 210) (P = 0.05). An interaction effect between body emotion and context emotion was found [F(2, 42) = 3.36, P < .05, η2p = .14]. Bodies were recognized faster in a congruent versus in an incongruent context and in a neutral context versus in an incongruent context. Target body expressions were not faster recognized in a congruent context versus in a neutral context. Angry bodies were recognized faster in an angry versus in a happy context but not versus in a neutral context. There was a trend toward significance for happy bodies in a happy versus in an angry context, but not when compared to in a neutral context.

Discussion

The congruency effect we found in Experiment 1 was also present for angry expressions. Angry bodies were more accurately and faster recognized in an angry context. Happy body expressions were better recognized in a happy context. We cannot rule out, however, the possible confounding influence of the presence of faces in the scenes.

The role of facial expressions in interactions between emotion of the context and body expression

Experiment 3. Fearful and happy bodies in a social emotional context with blurred faces

We repeated the first experiment but blurred the faces in the scenes, since the facial expressions visible there may have confounded the results of Experiment 1. Blurring the faces allowed us to measure the influence of pure bodily expressions of bystanders on the recognition of one individual’s body expression.

Method

Participants: A new group of 22 students participated (6 men; range 18–41 years old, Mean: 22 years old).

Apparatus, Design and Procedure: The single difference from Experiment 1 was the blurring of faces in the scenes.

Results

The trials that fell out of the pre-defined range that was used in the former experiments were considered as outliers (2.5%). Trials were also excluded from RT analyses if the response was incorrect.

Accuracy: An interaction effect was observed of body emotion × context emotion [F (2, 42) = 5.14, P < .01, η2p = .20].

Bodies were better recognized in a congruent context versus in an incongruent context and in a congruent context versus in a neutral context. Moreover, bodies were better recognized in a neutral context versus in an incongruent context. Recognition of fearful bodies was better in a fearful context than in a happy context or than in a neutral context. Happy bodies were better recognized in a happy context than in a fearful context but not than in a neutral context, although a trend was observed.

Reaction time: A trend toward significance was found for the interaction body emotion × context emotion [F (2, 42) = 2.41, P < .1, η2p = .11].

Compared to the neutral baseline, recognition of body expressions was faster in a congruent context, and it was slower in an incongruent than in a congruent context. There was no difference between a neutral and incongruent context. RTs in the congruent fear condition were shorter than in the incongruent happy context and were also shorter than in the neutral context. Happy bodies were not recognized faster in a happy context than in a fearful or a neutral context.

Discussion

The enhanced recognition ACC of body postures in congruent versus incongruent scenes found in Experiment 1 was also obtained when faces in the scenes were blurred. Happy bodies were best recognized in a happy context, fearful bodies in a fearful context. RTs for fearful bodies in the fearful context were shorter than for fearful bodies in the happy or neutral scenes, but there was only a trend toward significance for the interaction body emotion × context emotion. The results indicate that the emotional congruency effect that we found in Experiment 1 cannot be attributed to the presence of facial expressions. The presence of people expressing emotions via bodily postures influences the perception of body emotion from the target figure. Our next question is whether the same conclusion can be drawn for angry expressions.

Experiment 4. Angry and happy bodies in a social emotional context with blurred faces

Method

Participants: A new group of twenty students participated (7 men; range 19–30 years old, Mean: 22 years old).

Apparatus, Design and Procedure: Apparatus, design and procedure were identical to those of the previous experiment with blurred faces, the sole difference being that angry instead of fearful stimuli were used.

Results

Due to recognition at chance level, three participants were excluded from analysis. Moreover, 2.4% of the trials were excluded because they fell outside the pre-defined range that was maintained across all experiments. Trials were also excluded from the RT analyses if the response was incorrect.
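The exclusion steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the RT window (`RT_MIN_MS`, `RT_MAX_MS`) is a hypothetical placeholder because the pre-defined range is not restated here, the trial records are made-up toy values, and we assume that out-of-range trials are dropped before both accuracy and RT are computed.

```python
from collections import defaultdict

# Hypothetical RT window in ms (assumption; the study's actual range is not restated here).
RT_MIN_MS = 200
RT_MAX_MS = 1500


def summarize_trials(trials, rt_min=RT_MIN_MS, rt_max=RT_MAX_MS):
    """Return per-condition accuracy and mean correct RT, plus the excluded proportion."""
    trials = list(trials)
    # Step 1: drop trials whose RT falls outside the pre-defined window.
    kept = [t for t in trials if rt_min <= t["rt_ms"] <= rt_max]
    by_condition = defaultdict(list)
    for trial in kept:
        by_condition[trial["condition"]].append(trial)
    summary = {}
    for condition, cond_trials in by_condition.items():
        # Step 2: RT analysis uses correct responses only.
        correct_rts = [t["rt_ms"] for t in cond_trials if t["correct"]]
        summary[condition] = {
            "accuracy": len(correct_rts) / len(cond_trials),
            "mean_rt_ms": sum(correct_rts) / len(correct_rts) if correct_rts else None,
        }
    excluded = (len(trials) - len(kept)) / len(trials) if trials else 0.0
    return summary, excluded


# Toy trials for one hypothetical participant (not data from the study).
toy_trials = [
    {"condition": "congruent", "rt_ms": 520, "correct": True},
    {"condition": "congruent", "rt_ms": 560, "correct": True},
    {"condition": "congruent", "rt_ms": 3000, "correct": True},  # outside the window
    {"condition": "incongruent", "rt_ms": 610, "correct": False},
    {"condition": "incongruent", "rt_ms": 650, "correct": True},
]
summary, excluded = summarize_trials(toy_trials)
print(summary, excluded)
```

Keeping the window as a parameter makes it easy to apply the same range across experiments, as was done here.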

Accuracy: A main effect was found of context emotion [F (2, 38) = 4.57, P < .05, η2p = .19]. Bonferroni corrected pairwise comparisons revealed that bodies were better recognized in a happy context than in an angry context (P < 0.05). There was an interaction effect between body and context emotion [F (2, 38) = 5.22, P < .01, η2p = .22].

Bodies were better recognized in a congruent context versus in a neutral or incongruent context and in a neutral context versus in an incongruent context. Angry bodies in an angry context were better recognized than in a happy context but not than in a neutral context. Happy bodies in a happy context were more accurately recognized than in an angry context or than in a neutral context.

Reaction time: There were no main or interaction effects. In light of the previous experiments, we had clear expectations about a possible facilitating influence of a congruent scene and therefore conducted planned-comparison paired-samples t-tests. These revealed that RTs in the congruent anger condition were shorter than in the happy context but not than in the neutral context. Happy bodies were not recognized faster in happy contexts than in angry or neutral contexts.
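For readers less familiar with planned comparisons, a paired-samples t statistic of the kind used above can be sketched as below. The per-participant RTs are invented toy values rather than data from this experiment, and the helper name `paired_t` is ours. The sketch stops at the t statistic and its degrees of freedom; obtaining a P value would additionally require the t distribution, which is left out to keep the example within the standard library.

```python
import math
from statistics import mean, stdev


def paired_t(cond_a, cond_b):
    """Paired-samples t statistic and degrees of freedom for two matched lists."""
    if len(cond_a) != len(cond_b):
        raise ValueError("both conditions need one value per participant")
    if len(cond_a) < 2:
        raise ValueError("at least two participants are required")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)  # stdev uses the n - 1 denominator
    return mean(diffs) / standard_error, n - 1


# Toy mean RTs (ms) for five hypothetical participants, not the study's data.
rt_congruent = [600, 620, 580, 640, 610]
rt_incongruent = [630, 640, 600, 650, 640]
t_value, df = paired_t(rt_congruent, rt_incongruent)
print(f"t({df}) = {t_value:.2f}")
```

A negative t here means shorter RTs in the first (congruent) condition, which is the direction predicted by a facilitating influence of a congruent scene.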

Discussion

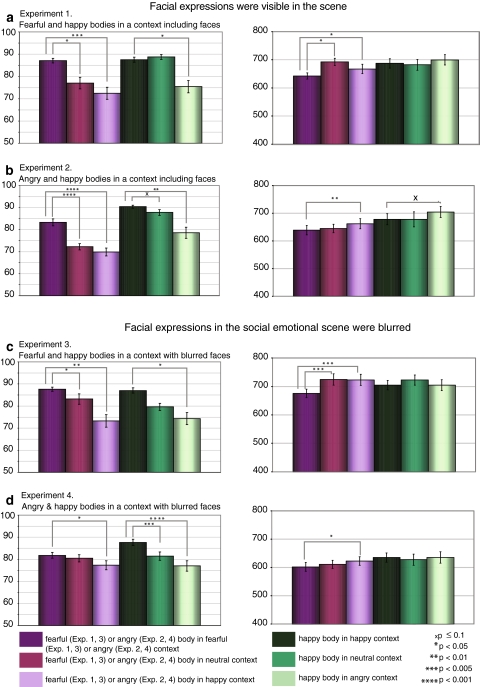

After the faces had been blurred, all effects remained in the ACC data, and in the RT data they remained for the angry but not for the happy body expressions. The results were not related to speed-accuracy trade-offs. See Fig. 2.

Fig. 2.

a Recognition was more accurate and faster for a fearful body in a fearful versus a happy or neutral context. A happy body was better recognized in a happy versus a fearful context. b Angry bodies were recognized more accurately and faster in an angry than in a happy context, and happy bodies were better recognized in a happy versus an angry (or, for the ACC data, neutral) context. c Congruency effects were observed in the ACC data for both body emotions. Moreover, fearful bodies were recognized better and faster in a fearful versus a neutral or happy context. d Angry bodies were recognized more accurately and faster in an angry than in a happy context. Happy bodies were better recognized in a happy context than in an angry or neutral context. In sum, individual body expressions were best recognized in emotionally congruent social scenes.

The presence of facial expressions

Difference scores between the congruent and incongruent conditions were calculated, and independent-samples t-tests were conducted to compare the context congruency effect in the experiments where faces were visible with that in the experiments where they were blurred.

Angry body expressions: The congruency effects in the ACC data of angry body expressions were stronger in the experiments where facial expressions were visible: ACC anger non-blurred, congruent minus incongruent (M difference = .13, SD .10) versus anger blurred, congruent minus incongruent (M difference = .04, SD .12) (t (40) = 2.61, P < .05, d = .80). Subsequent t-tests revealed the origin of this effect: angry body expressions were better recognized in a happy context where faces were blurred than where they were visible (M = 77%, SD 18 vs. M = 70%, SD 17) (t (40) = 1.38, P = .09, d = .43). This was not due to the specific presence of happy facial expressions, since a similar effect was observed for the presence of neutral facial expressions (blurred vs. non-blurred: M = 72%, SD 13 vs. M = 80%, SD 15) (t (40) = 1.89, P < .05, d = .58). The presence of angry facial expressions did not influence ACC or RT.
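To make the between-experiment comparison concrete, the sketch below computes an independent-samples t statistic and Cohen's d from summary statistics, using the congruency difference scores for angry bodies reported above (.13, SD .10 with faces visible; .04, SD .12 with faces blurred). The group sizes are an assumption: we use 21 per group only because this is one split that yields the reported 40 degrees of freedom; the actual group sizes may have differed. The function name is illustrative.

```python
import math


def independent_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Pooled-variance t statistic, degrees of freedom and Cohen's d from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    pooled_sd = math.sqrt(pooled_var)
    mean_diff = m1 - m2
    t_value = mean_diff / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))
    cohens_d = mean_diff / pooled_sd
    return t_value, df, cohens_d


# Reported congruency difference scores; n = 21 per group is an assumption (see text).
t_value, df, cohens_d = independent_t_from_summary(0.13, 0.10, 21, 0.04, 0.12, 21)
print(f"t({df}) = {t_value:.2f}, d = {cohens_d:.2f}")
```

Under these assumptions the statistic comes out close to the reported t and d; small deviations are to be expected because the means and SDs are rounded and the true group sizes are not used.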

Fearful body expressions: A trend in the same direction as for angry body expressions was observed, but it was not significant.

Happy body expressions: The congruency effect in the ACC data of happy bodies (in happy or angry context) was larger when facial expressions were invisible (M difference = −.09, SD .25 vs. M difference = .11, SD .16) (t (40) = 2.97, P < .01, d = .93). Subsequent t-tests did not reveal any differences. There were no differences in the RTs.

In conclusion, the influence of facial expressions in the scene was dependent on the specific emotional expression. The presence of facial expressions was not the crucial factor for a congruency effect. In all four experiments, the body expressions in the scene influenced how the target body expression was perceived.

General discussion

The aim of this study was to investigate the influence of social contexts on the recognition of a single emotional body expression. The effects of congruency on emotional body perception were investigated using manipulated photographs containing a foreground figure that displayed either the same emotion as the people in the background or a different one. In the first experiment, participants categorized the emotion of the actor (fear or happy) as fast as possible. In the second experiment, the task was similar, but we used different emotions (anger and happy). The third experiment was similar to Experiment 1, but the faces of people in the scene were blurred to ascertain that the obtained effects were specifically related to the congruence of the body expressions seen in the background. In the fourth experiment, angry and happy expressions were used and faces in the scenes were blurred. Finally, the effect of the presence of facial expressions was tested by comparing reaction times and accuracy rates of all conditions of Experiment 1 with those of Experiment 3, and of Experiment 2 with those of Experiment 4.

In the human emotion literature, there is thus far no answer to the question of whether our recognition of an individual’s emotional body language is influenced by bodily expressions of other individuals as perceived in a naturalistic scene. It is known that an emotional scene influences the perception of facial expressions. Body expressions, in contrast to facial expressions, represent and implement emotion, but in addition also direct action. Therefore, we go beyond our prior studies by investigating the role of the social emotional scene representing actions from other people on the perception of an individual’s emotional body expression. During our life span, we are confronted more often with situations where people express similar (group) emotions, and it is therefore likely that we are quicker and better at reacting to less ambiguous situations, since this has survival value. Therefore, we predicted an enhanced recognition of body expressions in an emotionally congruent versus an incongruent or neutral social action scene, especially when facial expressions in the scenes were visible.

Indeed, fearful, angry and happy body expressions were more accurately recognized in congruent social emotional scenes. We observed a significant contribution of the presence of facial expressions to the congruency effect when comparing Experiment 4 with Experiment 2. This was merely due to increased incongruence for an angry body in a happy and neutral context with facial expressions visible. The presence of facial expressions did not increase ACC in the congruent conditions, nor did it specifically speed up processing. However, different participants were involved in the different experiments. For the interested reader, see Meeren et al. 2005; Van den Stock et al. 2007; Aviezer et al. 2008a, b.

The actions we see going on in the background may automatically trigger action representation. We hypothesized that when the actions seen in the background have emotional significance similar to that of the central character, the target recognition would speed up. In Experiments 1 and 3, we indeed found that fearful bodies were recognized faster in a fearful than in a happy or neutral context. Furthermore, the presence of other angry people in the scene speeded up the RT in the observer when compared to when the angry body expression was perceived in a happy context (Experiment 2). After having blurred the faces in Experiment 4, we lost the interaction between body and scene emotion in the RTs, but a congruency effect for angry expressions was still present. All predicted effects were present in the ACC data. We found the strongest congruency effects for the RT data in the fearful body scene compounds. Although we expected to find the effect as clearly for the other emotions, this might not be a strange result after all, considering how fast mass panic can spread among many people compared with observers’ ambivalent behavior in an aggressive situation (fight, help or flight?) or the time it takes to “warm up/drink in” at a party.

Our study is in line with earlier studies about scene congruency effects in object recognition. For example, if there is high probability that a certain context surrounds a visual object, the processing of that object is facilitated, whereas unexpected contexts tend to inhibit it (Palmer 1975; Ganis and Kutas 2003; Davenport and Potter 2004; but see also Hollingworth and Henderson 1998). Studies of scene recognition and context effects show that scenes can be processed and scene gist recognized very rapidly (Thorpe and Fabre-Thorpe 2002; Maljkovic and Martini 2005; Bar et al. 2006; Joubert et al. 2007). ERPs recorded from the visual cortices demonstrate differences between emotional and neutral scenes as early as 250 ms from stimulus onset (Junghöfer et al. 2001). In a recent study, Joubert et al. (2008) investigated the time-course of animal/context interactions in a rapid go/no-go categorization task. They conclude that the congruence facilitation is induced by the customary co-activation of “congruent” populations of neurons, whereas interference would take place when conflicting populations of neurons fire simultaneously.

However, our study includes the factor ‘emotion’. Emotions are intimately linked to action preparation. The results of the current study are in line with the few experimental studies that currently exist on the influence of emotional scenes on the perception of faces and bodies and imply that the facilitating effect of context congruence reflects a mandatory process with an early perceptual basis (Righart and de Gelder 2006).

There is a possibility that the congruency effect occurs at the response level. Especially in the case of a more ambiguous stimulus, participants may attend to the context. However, there is not much time for that and it is against the task instructions. A presentation time of 100 ms (although not masked) is too short to make saccades from the fixation point. Biederman et al. (1982) and Davenport and Potter (2004) have shown that 100 ms is sufficient to expect pop-out effects between background and foreground object. In the current experiment, the foreground figure is pasted on the background and although we tried to make the scene as naturalistic as possible, some pop-out effect of the foreground figure may have come through. However, if so, this will hold equally in all conditions, since all the foreground bodies were combined with all scenes. Moreover, when stimuli were presented with unlimited presentation duration and scenes were processed consciously, body expressions were not recognized better or worse in a congruent than in an incongruent or neutral scene, which also argues against a response conflict.

Finally, processes other than the ones measured here may contribute to the observed effects. For example, the tendency to automatically mimic and synchronize facial expressions, vocalizations, postures and movements with those of another person and, consequently, to converge emotionally may play a role (de Gelder et al. 2004; Hatfield et al. 1994). The same brain areas are involved when subjects experience disgust (Wicker et al. 2003) or pain (Jackson et al. 2005) as when they observe someone else experiencing these emotions. Such a process may contribute to observers’ ability to rapidly perceive incongruity between a person’s body language and its social emotional context. This incongruity may create a conflict in emotional contagion processes triggered by the target figure and help to explain the slower and less accurate reaction of the observer. This explanation needs further testing using EMG measurements.

Acknowledgments

Research was supported by Nederlandse Organisatie voor Wetenschappelijk Onderzoek, (NWO, 400.04081), Human Frontiers Science Program RGP54/2004 and European Commission (COBOL FP6-NEST-043403) grants. We are grateful to J. A. Arends for his assistance with editing figures and stimuli and the COBOL consortium for inspiring discussions. We thank M. Tamietto, M. Keetels, S. van Mierlo and A. Takaka for their useful comments on the paper.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

References

- Argyle M. Bodily communication. London: Methuen; 1988.

- Aviezer H, Hassin R, Ryan J, Grady G, Susskind J, Anderson A, et al. Angry, disgusted or afraid? Studies on the malleability of emotion perception. Psychol Sci. 2008;19:724–732. doi: 10.1111/j.1467-9280.2008.02148.x.

- Aviezer H, Hassin R, Bentin S, Trope Y. Putting facial expressions back in context. In: Ambady N, Skowroski J, editors. First impressions. New York: Guilford Press; 2008. pp. 255–288.

- Bar M. Visual objects in context. Nat Rev Neurosci. 2004;5(8):617–629. doi: 10.1038/nrn1476.

- Bar M, Ullman S. Spatial context in recognition. Perception. 1996;25(3):343–352. doi: 10.1068/p250343.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmid AM, Dale AM, Hämäläinen MS, et al. Top-down facilitation of visual recognition. Proc Natl Acad Sci Online (US) 2006;103(2):449–454. doi: 10.1073/pnas.0507062103.

- Barrett LF, Lindquist KA, Gendron M. Language as context in the perception of emotion. Trends Cogn Sci. 2007;11:327–332. doi: 10.1016/j.tics.2007.06.003.

- Biederman I, Rabinowitz JC, Glass AL, Stacy EW Jr. On the information extracted from a glance at a scene. J Exp Psychol. 1974;103(3):597–600. doi: 10.1037/h0037158.

- Biederman I, Mezzanotte RJ, Rabinowitz JC. Scene perception: detecting and judging objects undergoing relational violations. Cogn Psychol. 1982;14(2):143–177. doi: 10.1016/0010-0285(82)90007-X.

- Boyce SJ, Pollatsek A. Identification of objects in scenes: the role of scene background in object naming. J Exp Psychol Learn Mem Cogn. 1992;18(3):531–543. doi: 10.1037/0278-7393.18.3.531.

- Boyce SJ, Pollatsek A, Rayner K. Effect of background information on object identification. J Exp Psychol Hum Percept Perform. 1989;15(3):556–566. doi: 10.1037/0096-1523.15.3.556.

- Carroll JM, Russell JA. Do facial expressions signal specific emotions? Judging emotion from the face in context. J Pers Soc Psychol. 1996;70(2):205–218. doi: 10.1037/0022-3514.70.2.205.

- Clarke TJ, Bradshaw MF, Field DT, Hampson SE, Rose D. The perception of emotion from body movement in point-light displays of interpersonal dialogue. Perception. 2005;34(10):1171–1180. doi: 10.1068/p5203.

- Davenport JL, Potter MC. Scene consistency in object and background perception. Psychol Sci. 2004;15:559–564. doi: 10.1111/j.0956-7976.2004.00719.x.

- de Gelder B. Towards a biological theory of emotional body language. Biol Theory. 2006;1(2):130–132. doi: 10.1162/biot.2006.1.2.130.

- de Gelder B, Vroomen J. The perception of emotions by ear and by eye. Cogn Emot. 2000;14(3):289–311. doi: 10.1080/026999300378824.

- de Gelder B, Snyder J, Greve D, Gerard G, Hadjikhani N. Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc Natl Acad Sci (US) 2004;101(47):16701–16706. doi: 10.1073/pnas.0407042101.

- de Gelder B, Van den Stock J, Meeren HKM, Sinke CBA, Kret ME, Tamietto M. Standing up for the body. Recent progress in uncovering the networks involved in processing bodies and bodily expression. Neurosci Biobehav Rev. 2010;34:513–527.

- Ganis G, Kutas M. Visual scene effects on object identification: an electrophysiological investigation. Cogn Brain Res. 2003;16:123–144. doi: 10.1016/S0926-6410(02)00244-6.

- Grèzes J, Pichon S, de Gelder B. Perceiving fear in dynamic body expressions. NeuroImage. 2007;35(2):959–967. doi: 10.1016/j.neuroimage.2006.11.030.

- Hatfield E, Cacioppo JT, Rapson RL. Emotional contagion. Cambridge: Cambridge University Press; 1994.

- Hollingworth A, Henderson JM. Does consistent scene context facilitate object perception? J Exp Psychol Gen. 1998;127:398–415. doi: 10.1037/0096-3445.127.4.398.

- Hunt WA. Recent developments in the field of emotion. Psychol Bull. 1941;38:249–276. doi: 10.1037/h0054615.

- Itier RJ, Taylor MJ. Face recognition memory and configural processing: a developmental ERP study using upright, inverted, and contrast-reversed faces. J Cogn Neurosci. 2004;16:487–502. doi: 10.1162/089892904322926818.

- Jackson PL, Meltzoff AN, Decety J. How do we perceive the pain of others? A window into the neural processes involved in empathy. NeuroImage. 2005;24(3):771–779. doi: 10.1016/j.neuroimage.2004.09.006.

- Joubert OR, Rousselet GA, Fize D, Fabre-Thorpe M. Processing scene context: fast categorization and object interference. Vis Res. 2007;47:3286–3297. doi: 10.1016/j.visres.2007.09.013.

- Joubert OR, Fize D, Rousselet GA, Fabre-Thorpe M. Early interference of context congruence on object processing in rapid visual categorization of natural scenes. J Vis. 2008;8(13):11,1–18.

- Kret ME, Pichon S, Grèzes J, de Gelder B. Gender specific brain activations in perceiving threat from dynamic faces and bodies. (submitted)

- Junghöfer M, Bradley MM, Elbert TR, Lang PJ. Fleeting images: a new look at early emotion discrimination. Psychophysiology. 2001;38(2):175–178. doi: 10.1017/S0048577201000762.

- Maljkovic V, Martini P. Short-term memory for scenes with affective content. J Vis. 2005;5(3):215–229. doi: 10.1167/5.3.6.

- Meeren HK, van Heijnsbergen CC, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proc Natl Acad Sci Online (US) 2005;102(45):16518–16523. doi: 10.1073/pnas.0507650102.

- Oliva A, Schyns PG. Coarse blobs or fine edges? Evidence that information diagnosticity changes the perception of complex visual stimuli. Cogn Psychol. 1997;34:72–107. doi: 10.1006/cogp.1997.0667.

- Oliva A, Schyns PG. Diagnostic colors mediate scene recognition. Cogn Psychol. 2000;41:176–210. doi: 10.1006/cogp.1999.0728.

- Palmer SE. The effect of contextual scenes on the identification of objects. Mem Cogn. 1975;3:519–526. doi: 10.3758/BF03197524.

- Pichon S, De Gelder B, Grèzes J. Emotional modulation of visual and motor areas by still and dynamic body expressions of anger. Soc Neurosci. 2008;3(3):199–212. doi: 10.1080/17470910701394368.

- Righart R, de Gelder B. Context influences early perceptual analysis of faces: an electrophysiological study. Cereb Cortex. 2006;16(9):1249–1257. doi: 10.1093/cercor/bhj066.

- Righart R, de Gelder B. Rapid influence of emotional scenes on encoding of facial expressions. An ERP study. Soc Cogn Affect Neurosci. 2008;3:270–278. doi: 10.1093/scan/nsn021.

- Righart R, de Gelder B. Recognition of facial expressions is influenced by emotional scene gist. Cogn Affect Behav Neurosci. 2008;8(3):264–272. doi: 10.3758/CABN.8.3.264.

- Russel JA, Fehr B. Relativity in the perception of emotion in facial expressions. J Exp Psychol. 1987;116:233–237.

- Sommer M, Döhnel K, Meinhardt J, Hajak G. Decoding of affective facial expressions in the context of emotional situations. Neuropsychologia. 2008;46(11):2615–2621. doi: 10.1016/j.neuropsychologia.2008.04.020.

- Thornton IM, Vuong QC. Incidental processing of biological motion. Curr Biol. 2004;14(12):1084–1089. doi: 10.1016/j.cub.2004.06.025.

- Thorpe SJ, Fabre-Thorpe M. Fast visual processing and its implications. In: Arbib M, editor. The handbook of brain theory and neural networks. 2002. pp. 255–258.

- Van den Stock J, Righart R, de Gelder B. Body expressions influence recognition of emotions in the face and voice. Emotion. 2007;7(3):487–494. doi: 10.1037/1528-3542.7.3.487.

- Wicker B, Keysers C, Plailly J, Royet JP, Gallese V, Rizzolatti G. Both of us disgusted in my insula: the common neural basis of seeing and feeling disgust. Neuron. 2003;40(3):655–664. doi: 10.1016/S0896-6273(03)00679-2.

- Wurm LH, Legge GE, Isenberg LM, Luebker A. Color improves object recognition in normal and low vision. J Exp Psychol Hum Percept Perform. 1993;19:899–911. doi: 10.1037/0096-1523.19.4.899.