Abstract

Current conceptions of human language include a gestural component in the communicative event. However, determining how the linguistic and gestural signals are distinguished, how each is structured, and how they interact still poses a challenge for the construction of a comprehensive model of language. This study attempts to advance our understanding of these issues with evidence from sign language. The study adopts McNeill’s criteria for distinguishing gestures from the linguistically organized signal, and provides a brief description of the linguistic organization of sign languages. Focusing on the subcategory of iconic gestures, the paper shows that signers create iconic gestures with the mouth, an articulator that acts symbiotically with the hands to complement the linguistic description of objects and events. A new distinction between the mimetic replica and the iconic symbol accounts for the nature and distribution of iconic mouth gestures and distinguishes them from mimetic uses of the mouth. Symbiotic symbolization by hand and mouth is a salient feature of human language, regardless of whether the primary linguistic modality is oral or manual. Speakers gesture with their hands, and signers gesture with their mouths.

Keywords: sign language, gesture, mouth gesture, iconic, hand and mouth, symbolization

The vocal apparatus in spoken languages conveys the lion’s share of linguistic material in that medium. In sign languages used by deaf people, it is the hands that perform this role. But it has become increasingly clear that humans exploit other physical articulators in the process of communication. Spoken languages are universally accompanied by co-speech manual gestures (Kendon 1980, 2004; McNeill 1992; Goldin-Meadow 2003), and by gestures of the face and body as well (special issue of Language and Speech, in press). Similarly, in sign languages, the face, head, and body contribute to the linguistic message conveyed primarily by the hands (e.g., Liddell 1978, 1980; Reilly et al. 1990; Wilbur 2000; Nespor and Sandler 1999; Sandler in press). Yet within this panoply of corporeal activity, most researchers agree that some kinds of expression are prototypically linguistic and others prototypically gestural, while acknowledging that there may be grey areas in between (see Kendon 2004). The crucial point is that language — all language — requires both. Here I will argue that language in the manual modality also includes a simultaneously transmitted, expressive gestural component.

The type of gesture that is the focus of this study is what McNeill (1992) calls iconic gestures. These gestures have the special function of adding meaningful, imagistic information to the symbolic content of the text, and they do so in a simultaneous and complementary fashion. In spoken language, the oral component conveys the text, and the manual component complements the text in an interaction that can be called symbiotic. In sign language, the semiotic components of the symbiosis are reversed: the hands convey the text, and the mouth simultaneously supplies the complementary gesture.

In order to demonstrate that iconic mouth gestures are indeed gestural, it is first necessary to distinguish them from the linguistic structure of sign languages, which is described in Section 1. That section includes a description of non-manual signals in sign language that have linguistic rather than gestural functions. The distinction is sharpened in Section 2 by the criteria McNeill proposes for distinguishing linguistically organized material in the oral modality from gesture. To specify precisely the role of these gestures, a new distinction is necessary: a distinction between the symbolic icon and the mimetic replica. This categorization is supported in Section 2.2, where the semiotic function of symbolization is attributed to the former. The heart of the paper is Section 3, where it is shown that signers of Israeli Sign Language (ISL) produce gestures that are naturally categorized as iconics, and that they do so with their mouths. The gestural properties of these mouth shapes and movements are distinguished from linguistic properties, and their distribution in the language is described.

The central claim made here is that the human “language instinct”1 drives the hand and the mouth to create symbolic images, combining in a simultaneous and complementary fashion linguistically organized material with holistic and iconic expressions. The two modalities participate in what we may call symbolic symbiosis. The use of iconic manual gestures in spoken language is found universally, and similarly, the use of iconic mouth gestures in ISL is no fluke. Section 4 demonstrates that other established sign languages also incorporate mouth gestures. We see there that even early signers of a new sign language that arose recently within an insular community, Al-Sayyid Bedouin Sign Language, accompany their signing with iconic mouth gestures. Section 5 provides an interim summary.

While iconic mouth gestures have a particular role to play in a comprehensive model of language, there are several other widespread functions of the mouth in sign languages, which Section 6 describes briefly. Their existence, and their coordination with the manual linguistic signal, provide further evidence for the drive to use hand and mouth together in language. In Section 7, the findings reported here are integrated into a broader research context that points to a hand-mouth relation in language, with particular attention to studies of language evolution, including certain implications of mirror neuron research. Section 8 is a summary and conclusion.

1. Sign languages are linguistically structured

Sign languages are spontaneously occurring languages that arise whenever a group of deaf people has the opportunity to meet and interact regularly. They are not consciously invented by anyone, nor are they derivative of ambient spoken languages. Sign languages are the product of the same human brain and social interaction as spoken languages, but are self-structured in a different physical modality. Over half a century of intensive research on sign language has demonstrated that there are substantial formal similarities between languages in the two modalities, though they differ from one another in certain interesting ways (see Sandler and Lillo-Martin 2006).

1.1. Phonology

Working against a backdrop in which sign languages were pictured as crude gestural systems with no inherent linguistic structure (e.g., Bloomfield 1933), William Stokoe was the first to demonstrate that sign languages have formal linguistic structure of their own, in his aptly named monograph Sign language structure (Stokoe 1978 [1960]). There he showed that signs are not holistic hand pictures but are composed of a discrete, finite set of meaningless elements belonging to the major categories of handshape, location, and movement. Minimal pairs (corresponding to pairs like “pat” and “bat” in English) are distinguished by these elements (Klima and Bellugi 1979), and rules can alter them discretely in certain environments (Liddell and Johnson 1986; Sandler 1987, 1989, 1993). An example of a minimal pair in Israeli Sign Language (ISL) is shown in Figure 1; the two signs are distinguished only by the meaningless hand configurations shown in Figure 2. Several independent pieces of evidence have led researchers to argue that sign languages have another phonological unit as well: the syllable, which functions as a rhythmic and organizational unit (Coulter 1982; Sandler 1989, 2008; Brentari 1990, 1998; Wilbur 1993; Perlmutter 1992; Sandler and Lillo-Martin 2006). These discrete elements are meaningless, they can make contrasts between words, and they can be manipulated by rules that refer only to their form and not to their meaning; together, these characteristics warrant the use of the term “phonology” in describing this system. The existence in sign languages of a phonological level of structure alongside the meaningful level of morphemes and words shows that these languages have duality of patterning, claimed to be an essential design feature of human language (Hockett 1960).

Figure 1.

The ISL minimal pair (a) INTERESTING, and (b) DANGEROUS, distinguished only by features of hand configuration.

Figure 2.

Handshapes minimally distinguishing ISL signs.

1.2. Morphology

The basic words of sign languages can be made more complex by altering their form in ways that add elements of meaning or grammatical function. Sign languages have morphological systems characterized by both inflectional and derivational morphology and even by abstract linguistic properties such as allomorphy (Brentari 1998; Sandler and Lillo-Martin 2006). While sequential affixation is less common in signed than in spoken languages, it does exist. For example, there is a negative verbal suffix in American Sign Language (ASL) that means “not X at all,” while a group of sense prefixes in ISL generally add the meaning “do X by seeing, hearing, smelling (intuiting), etc.” (Aronoff et al. 2005; Meir and Sandler 2008). Far more common in sign languages is a more simultaneously structured kind of morphological complexity. These typical morphological patterns can be said to characterize sign languages as a language type (Aronoff et al. 2004). For example, many sign languages have verb agreement systems that change the beginning and ending location of the basic form to agree with referential loci in the signing space associated with participants in the discourse (Padden 1988). Specifically, verbs denoting transfer (literally or metaphorically) agree for person and number with source and goal, which in turn are associated with subjects and objects (Meir 2002). Figure 3 illustrates the sign SHOW in ISL with various inflections for person and number.

Figure 3.

ISL SHOW inflected for verb agreement (clockwise from top left): I-SHOW-YOU; YOU-SHOW-ME; S/HE-SHOWS-YOU; I-SHOW-YOU (exhaustive); I-SHOW-YOU (multiple).

Temporal aspect is marked in many sign languages through reduplication and changing the shape and rhythmic properties of the movement (Klima and Bellugi 1979; Sandler 1990). Another morphologically complex system in sign languages is the system of classifier constructions (Supalla 1986). These are complex forms, unique to sign languages, composed of nominal classifiers in the form of handshapes (standing for classes of nouns, e.g., HUMAN, SMALL-ROUND-OBJECT) combined with movement manners and shapes, and with locations (see Emmorey 2003). It is these structures that are most often accompanied by the iconic mouth gestures, and we will have more to say about them in Section 5.

1.3. Syntax

Those sign languages whose syntax has been studied have been shown to have an underlying basic word order, though this order can change for various pragmatic reasons. Sign language sentences can be complex, and syntactic tests have been developed for ASL to distinguish coordinate structures (Babsy hit Bobby and Bobby told his mother) from subordinate structures (Bobby told his mother that Babsy hit him) (Padden 1981, 1988). The existence of subordinate clauses in ASL and other sign languages demonstrates that the property of recursion (a phrase within a phrase of the same type, or a clause within a clause) is present in these languages. Recursion has been argued to be the quintessential and even defining property of human language (Hauser, Chomsky, and Fitch 2002), making its existence in sign languages an important discovery. Constraints on the movement of syntactic elements that have been posited as universal have been argued to hold in ASL (Lillo-Martin 1991), and a number of other kinds of evidence have accrued to demonstrate that sign languages indeed have syntactic structure, comparable in nontrivial ways to that of spoken languages (Neidle et al. 2000; Sandler and Lillo-Martin 2006; Pfau and Quer 2007).

1.4. Prosody

In addition to the primary manual signal, sign languages make use of the face, head, and body in systematic ways. Certain types of structures in ASL are accompanied by conventionalized non-manual displays (Stokoe et al. 1976 [1965]; Bellugi and Fischer 1972; Baker and Padden 1978; Liddell 1978, 1980). For example, ASL relative clauses are typically marked by head back, raised brows, and raised upper lip (Liddell 1978, 1980). Since those discoveries, many researchers have studied this area in ASL and other sign languages, some proposing that conventionalized non-manual signals are among the syntactic devices of the grammar (e.g., Liddell 1980; Petronio and Lillo-Martin 1997; Neidle et al. 2000; Wilbur and Patschke 1999), and others attributing them to the prosodic component (e.g., McIntire and Reilly 1988; Reilly, McIntire, and Bellugi 1990; Nespor and Sandler 1999; Sandler 1999a, 2005, in press; Wilbur 2000; Sandler and Lillo-Martin 2006; van der Kooij et al. 2006).

A model developed on the basis of Israeli Sign Language demonstrates the nature of the system. The model is based on evidence that facial expressions as well as head and body positions align with rhythmic manual features of the signing stream to mark prosodic constituent boundaries at different levels of the prosodic hierarchy (Nespor and Sandler 1999; Sandler 1999a, 2005). In this model, conventionalized facial expressions so aligned are seen as analogous to linguistic intonation in spoken languages. Figure 4 exemplifies this system. It shows the two signs on either side of an intonational phrase boundary in an ISL counterfactual conditional sentence meaning “If the goalkeeper had caught the ball, they would have won the game.” Observable in 4a is the following intonational configuration on CATCH-BALL: head forward, brows raised, eyes squinted. In 4b, the second intonational phrase, WIN, is characterized by a different head position and a neutral facial expression. This change in head or body position and the across-the-board change in facial expression typically mark the boundary between intonational phrases.

Figure 4.

Intonational phrase boundary between two parts of the counterfactual sentence, GOALKEEPER CATCH-BALL, WIN — “If the goalkeeper had caught the ball, they would have won the game.” (a) CATCH-BALL, (b) WIN.

The linguistic prosodic system is conventionalized, and its facial expressions have different properties from those of emotional or paralinguistic expressions (Baker-Shenk 1983). For example, in ISL, linguistic facial expressions use fewer muscles than emotional expressions; unlike emotional facial expressions, they are aligned with prosodic constituents; and they are obligatory in particular pragmatically determined contexts (Dachkovsky 2005, in press; Dachkovsky and Sandler 2007). The complexity of the ISL system is exemplified by the componentiality of facial expressions, where each component supplies an independent element of meaning (Nespor and Sandler 1999; Sandler 1999a; Dachkovsky 2005, 2007; Dachkovsky and Sandler in press). In short, following McNeill’s dichotomy between the linguistic and the gestural described in the next section, non-manual signals of this kind are placed within the linguistic domain: they are conventionalized, combinatoric, and have systematic distribution. Intonational facial expression is conveyed primarily by articulators of the upper face: the brows and eyes. The mouth is less important in the prosodic system, but it does have a number of other linguistic functions, to be discussed in Section 5. Now that the linguistic structure of sign languages has been outlined, we turn to the notions of gesture and iconicity assumed in this study.

2. Gesture

In his recent overview and analysis of gesture research, Kendon defines gesture as “a label for actions that have the features of manifest deliberate expressiveness” (Kendon 2004: 15). That is, gestures are voluntary and performed for the purpose of expression. While this definition rules out certain kinds of actions, such as motions with a practical purpose like combing one’s hair, it still admits a wide range of others, and these have been characterized in various ways by different scholars. McNeill’s criteria for identifying gesture provide a useful point of entry and are adopted here (McNeill 1992: 19–23). Gestures are distinguished from the linguistic signal by being holistic and synthetic, and by lacking hierarchical and combinatoric properties. Gestures are also idiosyncratic: different speakers, or even the same speaker, may use different gestures to represent the same image. And they are context-sensitive: their interpretation depends on the linguistic context. Linguistic structure, on the other hand, is componential, combinatoric, and hierarchically organized. Linguistic signs such as words are highly conventionalized in form, meaning, and distribution.

Though the linguistic and the gestural are distinguishable on a number of criteria, gestures are indispensable to language. Investigations of spoken language have provided compelling evidence that manual gesture must be considered part of the language system. Gestures enable speakers to enrich the communicative message by conveying imagistic information simultaneously with speech. In all known human societies, gestures tend to co-occur with speech. Even very small children communicate with both gesture and speech (Butcher and Goldin-Meadow 2000; Capirci et al. 2005). In addition to its communicative function, gesture can be self-oriented: for example, we gesture while talking on the phone, and even congenitally blind people gesture to each other (Iverson and Goldin-Meadow 1998). Gesture helps to conceptualize information in a way that allows us to package it into units that can then be verbalized (Kita 2000). The idea that gesture helps us think is supported by studies of the way children gesture when talking about math problems (Goldin-Meadow et al. 2001). Gesture is part of language.

2.1. Iconic gesture

The type of gesture I am focusing on here is what McNeill (1992) calls iconics, “reference by virtual ostension” according to Kendon (2004: 307). These are the gestures that picture aspects of the object or event being described by speech. For example, in describing how she bakes hallah, a Jewish ceremonial bread that is braided and has a particular shape, a speaker used the iconic gestures shown in Figure 5 as she said, “You braid it, so it’s higher in the middle and tapered at the ends.”

Figure 5.

Iconic co-speech gesture for the sentence, “You braid it, so it’s higher in the middle and tapered at the ends.” (a) coincided with “higher in the middle” and (b) with “tapered at the ends.”

Iconic gestures provide a pictorial representation of what is being said, but they often also add information not included in speech, and herein lies the symbiosis. In the example, the size and proportions of the bread are gleaned from the gesture alone. McNeill provides several similar examples, in which gestures provide complementary aspects of an event. Enfield’s (2004) study of the complex interaction of the two hands in the gestures of people describing fish traps in Lao provides a series of pictures that reveal the symbiosis of speech and gesture throughout a discourse. The size and dimensions of the trap are indicated by the hands and fingers, and the shape and path of the fish relative to the parts of the trap are also conveyed gesturally, with one hand and forearm representing the side and bottom of the trap and the other the fish. Only part of this information is transmitted in the speech signal; details of size, shape, dimension, and spatial relations appear in the gesture alone.

In McNeill’s taxonomy, iconic gestures are distinguished from other types of gestures, all of which are distinguished from linguistically organized speech. Iconic gestures are distinguished from “beats,” which punctuate the rhythm of speech, from emblems (Ekman and Friesen 1969) — conventionalized gestures such as “OK” or “thumbs up” — and from other gestural types as well (McNeill 1992: 12–18).

2.2. Gestural icons are symbols

The term iconic as used here departs from the meaning attributed to it by Peirce (1987 [1903]) and Deacon (1997), who each distinguish iconic signs from symbolic signs, and by McNeill (1992), who proposes that all gestures are symbols. Here, symbolization is attributed specifically to iconic gestures. A careful treatment of the differences among authors in their notions of symbolization and iconicity would take us too far afield; suffice it to say that investigation of sign language mouth behaviors seems to call for a finer taxonomy, one in which iconic gestures (and only these) may be considered symbolic. The characterization rests on a distinction between an icon and a replica.

Iconic gestures create a likeness of an object or concept symbolically, through a configuration of the hands (or mouth). In contrast, a replica uses the same body part to replicate itself or an action made with it. According to this distinction, a replica is not symbolic in the same sense that an icon is, but is rather imitative or mimetic. The distinction made here reflects qualitatively different types of representation, and facilitates a distinction between different types of mouth gestures in ISL, only one of which functions like the iconic gestures used by speakers.

This distinction between the symbol and the replica helps us to distinguish iconic signs and gestures from pantomime or mimesis. Mimesis is an imitative replica; it uses the body to represent the body, the face to represent the face. In sign languages, the distribution of mimetic gestures is different from that of iconic gestures conveyed by the mouth. We may see the distinction between these two types of gesture by way of an illustration provided in Klima and Bellugi (1979) for the purpose of demonstrating a somewhat different categorization — that of the pantomime versus the conventionalized sign language sign or word. The example, a pantomime of the concept “egg” and the ASL sign EGG, also happens to illustrate the distinction made here between mimetic replica and iconic symbol, and it is reproduced in Figure 6.

Figure 6.

(a) and (b) are a sequence consisting of an iconic gesture (“round object”) and a pantomime of cracking an egg and throwing away the shell. (c) is the conventionalized ASL sign, EGG. Illustrations reproduced with permission from Ursula Bellugi.

I have taken the liberty of decomposing Klima and Bellugi’s original image to make the point relevant here: that the icon is different from the replica.2 The main point of the example is seen by comparing the iconic gesture in 6a with the mimetic gestures in 6b. Figure 6a is a symbolic icon in which the hands create a shape like that of an egg. Figure 6b is mimesis: the hands mimic the action of the hands in breaking an egg and throwing away the shell. Figure 6c is the conventionalized ASL sign EGG. It is neither a mimetic replica nor an iconic gesture, as it is conventionalized, dually patterned, and combinatoric in the syntax of the language. Because of these properties, EGG in 6c is a symbol in the sense of Peirce and Deacon.3 Here, I am making a further bifurcation that includes 6a (but not 6b) as a symbol, albeit an iconic and gestural one.

Pantomime can be elevated to artistic use, but because it is imitative rather than symbolic, pantomime in non-artistic use is more rudimentary than gesture. It is therefore not surprising that it is found more in the signing of the earliest signers of new sign languages than in later generations (Sandler 2007).

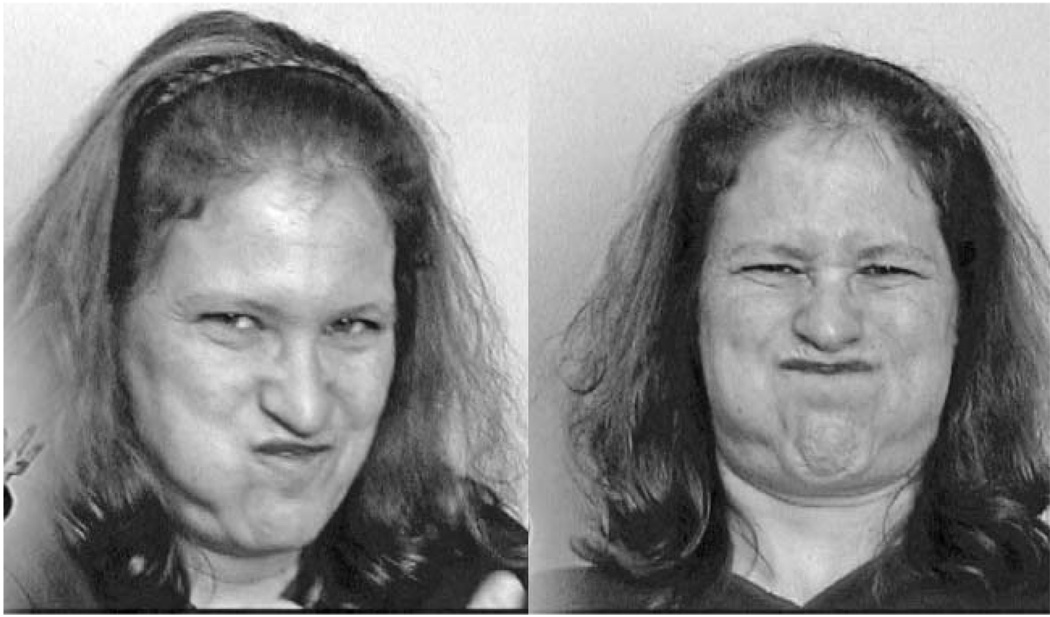

In any face-to-face communication, people make mimetic use not only of the body but of their facial features, including their mouths, to convey affect and attributes of characters in a described event. Signers are no different from speakers in this regard, except that for them the mouth is freer to participate in such displays, since it is not being used to convey words. Examples shown in Figure 7 are an open mouth to convey surprise (7a) and a monkey mouth to represent the cat masquerading as a monkey in the cartoon being described (7b).

Figure 7.

Non-linguistic uses of the mouth: (a) mimetic affect — surprise; and (b) mimetic character attribute — monkey.

This particular mimetic use of the mouth is not linguistic, but it is important to note that it is not iconic either, in the sense intended here. Rather, it replicates the emotional mouth shapes of people or, in the case of the monkey, the actual physical shape of the character’s mouth.4 Such expressions are mimetic replicas, in which the face represents a face; they are not symbolic icons, which use a body part to stand for something else. Emotional expressions of the mouth and other parts of the face do not coincide temporally with the linguistic signal (Dachkovsky 2005). Their occurrence reflects the emotional states of the signer or of a participant being described, and they may begin before an utterance, end after it, and change at any time in between. As for mimetic facial expressions, they may be used discursively to indicate which character is talking or acting, but here too they may persist through entire discourse chunks, and their distribution is determined pragmatically. In Section 3, we will see that the distribution of iconic mouth gestures is different.

Observing the difference between the symbolic icon and the replica is of further use in distinguishing human gesture from that of other primates, since the latter appears to be primarily mimetic. This makes sense, as creating a symbol for something with a part of the body that is not actually involved in the object or activity arguably requires a more abstract or symbolic kind of reasoning than reenacting something directly. Most of the gestures (apart from attention-getting activities) described in Tomasello and Call’s (1997) partial survey of intentional communicative behavior among apes imitate some activity, such as raising the arm to initiate grooming under the arm. While some of these gestures become ritualized and may be used flexibly in different contexts (Plooij 1978; Pollick and de Waal 2007), they are still replicas of actual actions and not symbols created by one part of the body (such as the hands or mouth) to represent the appearance of some other body part, object, or action. According to these criteria, gestures like performing a twisting motion to request help in opening a container with a twist-top lid, though described by Savage-Rumbaugh and colleagues (1986) as iconic and symbolic, are classed as mimetic. In fact, to my knowledge, no gesture in the category of iconic as defined here has been identified in non-human primates. The distinction made here between a (mimetic) replica and an iconic symbol is compatible with Tomasello and Carpenter’s analysis of the different motives that humans and apes have for gesturing in the first place. They state that “apes gesture in order to manipulate others — to get others to do what they want them to do — not, as humans, to inform others of things helpfully or to simply share experience with them” (Tomasello and Carpenter 2007: 112). It seems that social creatures need the cognitive machinery necessary to create iconic symbols only when they have more informative communicative motives like those described for humans.

Therefore, a distinction between the replica and the symbolic icon is useful, allowing us to distinguish between imitation/mimesis and iconic symbolization as communication strategies. For our purposes here, we assume that iconic gestures are a type of symbolization that is qualitatively different from mimetic replicas, and will distinguish different kinds of mouth gestures accordingly.5

2.3. More on complementarity

The conceptual unity of language and gesture put forward by Kendon (1981, 2000, 2004) and McNeill (1992) has gained wide currency. Gestures occur almost exclusively together with speech; they are temporally coordinated with it; and they often fill in substantive information that is missing from the spoken part of the message. The linguistically organized system and the gestural system complement each other in human language (Goldin-Meadow and McNeill 1999; Sandler 2003). In the example in Figure 5, part of the image is created by the words, and additional visual information is supplied by the gesture. This complementarity of information conveyed by speech and gesture is very common (see, e.g., McNeill and Duncan 2000; Enfield 2004) and is reflected in the concept of the growth point (McNeill 1992). McNeill and Duncan define the growth point as “an analytic unit combining imagery and linguistic categorical content” (2000: 144). They also cite the robust temporal synchrony between the two as further evidence for the psychological reality of the combined unit.

The observation that sign languages convey the linguistically organized part of language manually has led some investigators (e.g., McNeill 1992, based on Kendon 1988) to propose that sign languages originated in manual gesture, placing gesture at the least systematic and least conventionalized end of a continuum that has sign language at the other end: gesture > mimesis > emblems > sign language. Such a model does not predict complementarity or symbiosis in either system. The continuum view is reinforced by claims of other researchers that sign languages, though linguistically organized, bear certain imprints of manual gesture (e.g., Liddell and Metzger 1998; Liddell 2003; Duncan 2005). Using gradience versus discreteness as the primary criterion, Liddell (2003) argues that there are more gestural elements in sign languages than commonly thought, and suggests that the same may be true of spoken language, giving (paralinguistic) intonation as an example. Emmorey (1999) shows that signers make use of conventionalized (non-iconic) gestures (such as index finger to lips, meaning “sh”) by interjecting them into the sign stream. While such observations cannot be ignored and call for explanation in a comprehensive model of sign language, neither Emmorey’s gestures nor the aspects of the system claimed by Liddell to be gestural possess two properties that are central to the language-gesture amalgam found in spoken language, and shown in Section 3 to be found in sign language as well: co-temporality of gesture with the verbal message, and conceptual complementarity between the two (Sandler 2003), that is, symbiotic symbolization.

A more symmetrical view of language, suggested by an experimental study by Singleton, Goldin-Meadow, and McNeill (1995), opposes the continuum model. Comparing gestures that accompany speech with gestures used when speech is prohibited reveals a qualitative difference between the two. When hands are used in place of speech, their behavior is altered substantially, and they convey information in a more linguistic manner. But when the linguistic material is conveyed by the hands, the question remains: where is the complementary gesture?

3. Iconic mouth gestures in Israeli Sign Language

There is indeed a parallel gesture channel in sign languages. When the hands are used for encoding the linguistic signal, the mouth is used to perform the function of iconic gesture.6 And these gestures are comparable in distribution and function to the manual iconic gestures of speakers.

The investigation I describe below relies on data from four native signers’ retellings of the animated cartoon Canary Row. Signers included a range of iconic mouth gestures in their retellings of the story. The gestures are classed as iconic because they directly convey physical images or sensations associated with the event being described linguistically by the hands. They represent these properties symbolically, using the mouth to convey properties of other objects or events. The iconic mouth gestures are co-temporal with the manual verbal string, and they complement or embellish it. Finally, they fit the definition of gestures according to the criteria described above, as shown in Section 3.2.

3.1. Method

Each of four native signers described the content of the cartoon Canary Row to another signer. The cartoon was shown and recounted in two parts, to aid memory. Signers were recorded with two video cameras, one capturing the whole body and the other a close-up of the face only. The two video images were combined by computer and placed side by side on a single screen for analysis. Mouth gestures were defined as iconic uses of the mouth to convey images, which in turn were defined as the shape or dimensions of an object, or sensations such as sound (vibration) or texture. With the help of two native signers, linguistic uses of the mouth in ISL, such as conventionalized adverbial and adjectival shapes (Meir and Sandler 2008) and mouthing of Hebrew words, as well as mimicry of character attributes, were excluded from analysis (see Section 6 below for discussion of these other uses of the mouth). The iconic mouth gestures of each signer were coded by two coders certified to use the Ekman and Friesen Facial Action Coding System (FACS; Ekman and Friesen 1978). The coders recorded the action units (AUs) for each mouth gesture and noted the image to which it corresponded, episode by episode. Intercoder agreement was seventy percent, with discrepancies almost exclusively in the quantity (not the choice) of units coded. The more experienced coder noticed more AUs, and the analysis is based on her coding. When the units missed by the second coder were disregarded, agreement was ninety-two percent. Analysis was conducted by comparing the AUs per episode and image within and across signers.
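To make the two agreement figures concrete, the following sketch shows one way they could be computed. It is a hypothetical illustration, not the procedure used in the study, which does not state its formula. It assumes that each coder's output for a gesture is a set of AU labels, that agreement is pooled over gestures as the AUs both coders recorded divided by all AUs either coder recorded, and that "ignoring the units missed by the second coder" means dropping AUs recorded only by the first coder from the denominator. The AU codes are invented for illustration; the first set merely reuses the AUs listed for Figure 10.

```python
"""Percent agreement between two FACS coders: a hypothetical sketch.

Assumptions (not stated in the study): each mouth gesture is coded as a
set of action-unit (AU) labels, and agreement is pooled over gestures.
"""


def pooled_agreement(coder_a, coder_b):
    """AUs recorded by both coders / AUs recorded by either coder."""
    shared = total = 0
    for a, b in zip(coder_a, coder_b):
        shared += len(a & b)
        total += len(a | b)
    return shared / total if total else 1.0


def agreement_ignoring_misses(coder_a, coder_b):
    """Like pooled_agreement, but AUs recorded only by coder A (units
    'missed' by coder B) are dropped from the denominator, which leaves
    the AUs coder B recorded: |A & B| / |B|, pooled over gestures."""
    shared = total = 0
    for a, b in zip(coder_a, coder_b):
        shared += len(a & b)
        total += len(b)
    return shared / total if total else 1.0


# Hypothetical AU codes for three gestures (illustrative, not study data).
CODER_A = [
    {"8c", "14b", "17b", "18b", "25"},  # experienced coder notes more AUs
    {"34"},
    {"L34"},
]
CODER_B = [
    {"8c", "18b", "25"},  # misses 14b and 17b: a difference in quantity
    {"34", "25"},         # adds 25: a difference in choice
    {"L34"},
]

if __name__ == "__main__":
    print(f"pooled agreement:      {pooled_agreement(CODER_A, CODER_B):.3f}")
    print(f"ignoring missed units: {agreement_ignoring_misses(CODER_A, CODER_B):.3f}")
```

Under these assumptions, a discrepancy in quantity (one coder noting fewer AUs) lowers only the first figure, while a genuine difference in choice lowers both, which is consistent with the pattern reported above.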

3.2. Use of Iconic mouth gestures in ISL

A segment of the Canary Row cartoon will illustrate the use of mouth gestures. In the segment, Sylvester the Cat squeezes himself into a drainpipe and climbs up inside it in order to reach Tweety Bird on the window ledge at the top. To foil the cat’s scheme, Tweety drops a bowling ball down the drainpipe. Bowling ball meets cat inside the drainpipe; a bulge is visible making its way back down; and the cat plops out, having swallowed the bowling ball.

Before describing the mouth gestures, a word is in order about illustrating them in still video grabs. These gestures typically involve some movement of the mouth. Although the mouth is a small articulator compared to the hands, even very small movements of the mouth are perceptually salient. We know this from the classic McGurk effect, in which subjects who hear a syllable while viewing the mouth movements of a person pronouncing a different syllable report having perceived something in between (MacDonald and McGurk 1978). In sign language communication, although the words are transmitted by the hands, addressees focus their gaze on the signer’s face, not on the hands (Siple 1978). The mouth gestures pictured here are therefore much more salient when seen in motion during actual signing than in still pictures.

The first mouth gesture shown in Figure 8 conveys the tight fit of the cat inside the narrow pipe. While the hands convey the cat’s journey up the pipe (SMALL-ANIMAL classifier), the mouth gesture complements the image by conveying the narrowness and tight fit. The second mouth gesture indicates the zig-zag bend in the drainpipe where it wraps around a small ledge on the side of the building, followed in the third picture by a return to the “narrow/tight fit” mouth. The fourth reflects the full round shape of the bowling ball and accompanies the sign BOWLING-BALL. This sign is a conventionalized representation of the two-finger-and-thumb grip on the ball and carries no inherent information about the shape of the ball; that information is supplied by the mouth gesture. Here too we see symbiotic symbolization, as the hand signs BOWLING-BALL while the mouth indicates its shape. The sequence continues with an opening and closing mouth gesture (not pictured here), repeated several times to represent the reverberation of the ball rolling down the pipe; the repetition iconically indicates the length of the pipe and the contact that the ball makes with the inside of the pipe as it goes down. The hand moves fluidly downward, while the bump-bump-bump is transmitted by the mouth gesture. The same symbiotic expression of the general movement (linguistic) and the agitation inside the pipe (gestural) is reported for a speaker, who said “but it rolls him out” while wiggling the hand (McNeill and Duncan 2000: 150). Finally, the bulge in the pipe at the point of impact between the cat and the bowling ball is indicated with a puffed-cheeks mouth gesture.7

Figure 8.

Mouth gestures in Canary Row bowling ball scene. (a) tight fit in narrow space (cat in pipe). (b) zig-zag shape (pipe). (c) tight fit in narrow space (cat in pipe). (d) full/round shape (ball).

The gestures are global; they are not composed of discrete meaningless parts like words or signs. The form of a whole gesture may be altered gradiently, depending on relative dimensions or intensity. For example, one signer gestured the volume of the bowling ball with one puffed cheek (AU L34), and that of the cat after he swallowed the bowling ball with two puffed cheeks (AU 34), as shown in Figure 9.

Figure 9.

Gradience. One cheek is puffed for the ball, and two for the image of the cat after swallowing the ball.

The mouth gestures are context sensitive; the meaning of a gesture can change according to context. For example, a mouth gesture used for “narrow” was identical to a gesture used to indicate the wind generated by flying through the air, both shown in Figure 10. The specific Action Units (AUs), which encode the actions and configurations of the mouth area, are listed in the figure caption.

Figure 10.

Context sensitivity: Same mouth gesture conglomerate for “narrow” and “whoosh” (action units 8c, 14b, 17b, 18b, and 25).

The converse is observed as well: different signers produce different gestures for the same event. For example, two signers created a mouth-gestural image of the cat climbing up inside a narrow drainpipe. One signer indicated the bulge of the cat seen from outside with puffed cheeks (AU 34). Another signer (shown in Figure 8 above) gestured the tight fit in the narrow pipe. This is the kind of idiosyncrasy expected of a non-conventionalized system. Even when signers choose to represent the same particular image with a mouth gesture, the gesture may be different. In one scene, the Old Lady hits Sylvester on the head with her umbrella, raising a bump. In the study, signers tended to use mouth gestures to describe this bump, but the gestures varied across signers.8

Throughout the data, signers varied in terms of the number of gestural images produced and the specific images they represented gesturally.9 Of the sixteen cases where more than one signer used a mouth gesture for the same image (out of sixty-eight total mouth gestures), no two signers’ gestures were identical in all AUs, and only forty percent of these “same” gestures shared more than half of their AUs. The variation across signers is characterized in Figure 11.
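The cross-signer comparison can be sketched in the same way. This is again a hypothetical illustration: the study does not define what it means for gestures to share "more than half of their AUs," so the code assumes that two gestures meet the criterion when the AUs common to both exceed half of all AUs either one uses. The code counts pairs of signers; for an image rendered by exactly two signers, a pair and a case coincide, but some of the study's sixteen cases may involve more than two signers. All AU sets are invented; apart from "34" (puffed cheeks) and the set modeled on the "narrow" gesture in Figure 10, the labels are placeholders.

```python
from itertools import combinations


def shares_majority(a, b):
    """Assumed reading of 'shared more than half of their AUs':
    the AUs common to both gestures exceed half of all AUs either used."""
    return len(a & b) > len(a | b) / 2


def compare_same_image(gestures_by_image):
    """Compare every pair of signers' gestures for each image.

    Returns (pairs compared, identical pairs, pairs sharing a majority of AUs).
    """
    pairs = identical = majority = 0
    for gestures in gestures_by_image.values():
        for a, b in combinations(gestures, 2):
            pairs += 1
            identical += int(a == b)
            majority += int(shares_majority(a, b))
    return pairs, identical, majority


# Hypothetical AU sets, one per signer (illustrative, not study data).
SAME_IMAGE = {
    # one signer shows the bulge seen from outside, another the tight fit
    "cat in drainpipe": [{"34"}, {"8c", "14b", "17b", "18b", "25"}],
    # placeholder labels for two renditions of the bump on the cat's head
    "bump on head": [{"22", "25", "34"}, {"22", "25"}],
}

if __name__ == "__main__":
    pairs, identical, majority = compare_same_image(SAME_IMAGE)
    print(f"{pairs} pairs, {identical} identical, {majority} share a majority of AUs")
```

With these invented data the function returns (2, 0, 1): neither pair is identical, and one pair meets the assumed criterion. A stricter reading, requiring more than half of each gesture's own AUs, would be an equally defensible assumption.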

Figure 11.

Individual variation in form of mouth gestures.

Finally, the same signer may choose to gesturally represent different images of the same action occurring in different episodes of the cartoon — e.g., whether the impact of the Old Lady’s umbrella striking the cat creates a “fa” or a “pa” air disturbance.

Gesture is an individual matter: some people gesture more than others, and people vary both with respect to which images they choose to convey with gestures, and how they form the gestures. Signers in this study behaved the same way.

The idiosyncrasy and context-sensitivity exhibited by mouth gestures contrast starkly with the patterns observed in linguistic non-manual signals. For example, in a study that elicited different kinds of isolated sentences in ISL, such as polar questions, wh-questions, and conditionals, facial intonation patterns, measured in terms of AUs, were identical across signers (Dachkovsky and Sandler 2007). Conventionalized adverbial mouth shapes also behave uniformly (see Section 6).

To sum up, these native signers of ISL spontaneously evoke a stream of iconic images and convey them during linguistic communication in a parallel system of expressive mouth gestures.

3.3. Distribution of iconic gestures

Like iconic co-speech gestures, iconic co-sign mouth gestures occur simultaneously with the (signed) verbal message and embellish or complement it. But there is a particular type of sign structure that mouth gestures occur with most often: classifier constructions. These are complex predicates that consist of one or two classifier handshapes combined with different locations and movement shapes and manners. The subsystem exists in many unrelated sign languages (Emmorey 2003). The classifier handshapes fall into three main categories: size and shape specifiers (e.g., SMALL-ROUND-OBJECT; CYLINDRICAL-OBJECT), handling classifiers (representing the shape of the hand or object handling another object), and entity classifiers (e.g., UPRIGHT-OBJECT; SEATED-HUMAN; VEHICLE). Once thought to be entirely mimetic in character, these forms are now understood to be conventionalized and to consist of a finite list of classifier handshapes, which combine with movement and location components (Supalla 1982, 1986). Classifier constructions are typically used to describe spatial relations among participants in a discourse, as well as directions and manners of motion. Figure 12 shows two classifier constructions in ISL.

Figure 12.

Two ISL classifier constructions. (a) “two cars (VEHICLES) drove past each other,” (b) “cup (CYLINDRICAL-OBJECT) next to piece of paper (FLAT-OBJECT).”

The classifier subsystem of sign language grammar is different in many respects from ordinary lexical signs. Each handshape, location, and movement in classifier constructions is a morpheme (rather than a meaningless phonological unit, as in ordinary words); each hand can be an independent classifier (while each hand in two-handed lexical signs is a meaningless formational element); and various phonological constraints on words are not observed (Sandler and Lillo-Martin 2006). Nevertheless, there are many indications that the system is linguistic: classifiers and movements draw from a conventionalized list; the constructions take time for children to master (Supalla 1982; Slobin et al. 2003) and are very difficult for second language learners; and there are constraints on the combination of the morphemes (Supalla 1986). Furthermore, while many unrelated established sign languages have comparable classifier systems (see Emmorey 2003), the new Bedouin sign language that my colleagues and I are investigating, described below, has not yet developed such a system. If they were purely gestural rather than linguistic, classifier constructions would be expected to appear at the outset. Iconic mouth gestures co-occur with these linguistic entities, a distribution that distinguishes them structurally from either emotional or mimetic mouth shapes and actions described in Section 2.2, in addition to the inherent differences in function.

At the same time, these constructions also have certain properties that are not characteristic of linguistic systems: they enjoy more degrees of freedom than regular signs; they do not seem to have duality of patterning; and the system has been shown to have gradient properties (Liddell 2003 for ASL; Duncan 2005 for Taiwan Sign Language). Classifier constructions are a particularly expressive subsystem within sign language, with clearly iconic properties.

It is not surprising that iconic mouth gestures tend to co-occur with classifier constructions, as these are the forms that are most likely to be used to describe the kinds of concrete objects and spatial and dynamic events that iconic co-speech gestures typically complement. A clear example from spoken language for this sort of compatibility can be seen in Japanese auditorily iconic mimetics. These verbal mimetic forms represent such notions as “hurried walk of a human,” indicated by the mimetic sutasuta (Kita 1997) as well as attitudes of the speaker (Hamano 1986). They occur in the same sentences with words carrying similar meaning, as example 1 shows.

(1) Japanese mimetics

Taro  wa     sutasuta to  haya-aruki  o    si-ta
Taro  Topic  Mimetic      haste-walk  Acc  do-Past
‘Taro walked hurriedly.’ (Kita 1997: 338)

According to Kita, it is especially common for manual gestures to co-occur with mimetics, which he takes as evidence that gestures require their own dimension of semantic representation. So, both in Japanese and in ISL, iconic elements exploit two modalities symbiotically to symbolize concepts: hand and mouth.

4. Mouth gestures in other sign languages

Mouth gestures are plentiful in other sign languages as well. In a corpus of retellings of the same Tweety cartoon by signers of several other unrelated sign languages, all signers used mouth gestures in similar ways. The mouth of one signer of German Sign Language became Tweety’s cage. Her tongue was Tweety, flying around frantically to escape Sylvester’s paws, and finally the signer stuck out her tongue to show Tweety escaping through the door of the cage.10

American Sign Language makes less use of mouthing of English words than European sign languages make of mouthing their spoken languages, but it is not at all lacking in mouth articulations of other kinds, and mouth gestures are abundant in ASL. Ben Bahan’s signing of Hans Christian Andersen’s The Little Mermaid11 is accompanied by a rich variety of expressive mouth gestures. A nice example is his description of a drawbridge before a castle, pictured in Figure 13.

Figure 13.

Mouth gestures accompanying DRAWBRIDGE in ASL.

The use of two modalities is so deeply rooted in human language that it is even found in a new language that arose spontaneously in a community of about 100–150 deaf people. Al-Sayyid Bedouin Sign Language (ABSL) is a language that has arisen in the last seventy years in an endogamous community with a high incidence of genetic deafness (Scott et al. 1995). The language, used in a Bedouin village of 3,500 in the Israeli Negev, is young, and the first generations of signers had little outside influence. It therefore offers researchers a rare opportunity to identify the most fundamental ingredients of human language (Sandler et al. 2005; Aronoff et al. 2008).

We have found that even this new language makes use of two channels, augmenting manual signs with mouth gestures. In descriptions of the Laurel and Hardy silent movie, Big Business, signers complement the manual signal with mouth gestures, in particular to indicate different types of impact and a variety of sounds/vibrations. Figure 14 shows a mouth gesture indicating the release of water from a hose tap.

Figure 14.

Mouth gesture in Al-Sayyid Bedouin Sign Language indicating vibration caused by water being released through a hose tap.

In these older signers, such mouth gestures are all the more salient because other articulations of the face are relatively rare in their communication system. It is striking that most of the mouth gestures used by Al-Sayyid signers correspond to what hearing people would call sound, presumably representing vibrations for the deaf signers. The use of these gestures reveals that the sensation of sound or air disturbance is not inconsequential in their experience of the world, although it is perceived differently.

5. The linguistic and the gestural in sign language: Interim summary

Sign languages have structural properties that are attributed to linguistic organization, such as phonology, morphology, and syntax. These levels of structure are conveyed primarily through the manual channel. Sign languages have a prosodic level as well, and there is evidence that this level is hierarchically organized and indirectly linked to syntactic structure. Both the words and sentences, as well as the rhythmic constituency, are conveyed by the hands, and the intonational facial expressions are structurally subordinate to the hands in the prosodic system.

Simultaneously with the linguistic signal, sign languages universally convey gestures that are defined as iconic, and they do this with the mouth. These gestures complement imagistic descriptions presented linguistically by the hands — precisely the reverse distribution from that found in spoken language.

Iconic mouth gestures in sign language, corresponding to iconic hand gestures in spoken language, reveal the bimodal character of symbolic communication in humans. But the mouth has a number of additional non-iconic functions in sign languages, and together these complete the picture of the contribution of this articulator to manual languages. In Section 6, we describe these, before turning to the broader context of language evolution in Section 7.

6. Other uses of the mouth in sign languages

The mouth has many different roles in sign languages, some of them linguistic and others more gestural, but apart from the gestures described in Sections 3 and 4, none are iconic. A more complete picture of the uses of the mouth in sign language will help both to distinguish the other uses from iconic mouth gestures and to convey the extent to which the mouth is used in the sign modality.

6.1. Lexical mouth components

One type of information encoded by the mouth is lexical: certain signs require particular shapes or movements of the mouth. In ISL, for example, a sign meaning THE-REAL-THING requires a movement of the mouth, similar to one that would be used to pronounce the syllable “fa” (Meir and Sandler 2008). These mouth shapes and movements are sometimes referred to as mouth gestures (see articles in Boyes-Braem and Sutton-Spence 2001), but since they are not intended to express meaning and since they obligatorily co-occur with specific lexical items, Bergman and Wallin’s (2001) label “mouth component” is more appropriate. Mouth components are listed as part of the phonological description of lexical items.

6.2. Adverbial and adjectival modification

While lexical specification of mouth shape is infrequent (though present in all documented sign languages), another use of the mouth is common and productive: the articulation of conventionalized shapes that correspond to adverbial and adjectival modification in ASL (Liddell 1980; Reilly, McIntire, and Bellugi 1990), in British Sign Language (Sutton-Spence and Woll 1999), in ISL (Meir and Sandler 2008), and in many other sign languages as well. For example, in ISL, the adverbial modification meaning “protracted motion” is encoded in the open mouth shape shown in Figure 15. These pictures are taken from ISL retellings of the same animated movie described above. They are extracted from the renditions of three signers, each describing a point in the film when Sylvester the Cat is catapulted upwards by a weight falling on the other side of a see-saw contraption, allowing him to snatch Tweety Bird from his window perch, and the two fly through the air. Notice that all three signers use the same, conventionalized mouth shape, in contrast to the variation found across signers in the use of mouth gestures.

Figure 15.

Linguistic use of the mouth: conventionalized adverbial mouth shape in ISL for “protracted motion.”

Like ASL and other sign languages, ISL has a set of such shapes, used for adverbial and adjectival modification (see Meir and Sandler 2008). Shapes of this kind are conventionalized and productive, and constitute part of the grammar of sign languages; they too are linguistic.12

6.3. Mouthing

The last non-gestural use of the mouth that falls under the “linguistic” heading is mouthing. This refers to the (usually non-vocal) articulation of words or word parts from the spoken language: Hebrew in the case of ISL, English in ASL and British SL, and so on. This system should not be confused with speaking Hebrew or English while signing, which is impossible, given the differences in word order and other central aspects of structure between the two languages.13 Instead, mouthing in ISL is sporadic, and follows its own rules. In fact, we do not know much about how mouthing is distributed in ISL, but we can make the following two observations. First, it is sometimes used to disambiguate two meanings of a single sign, such as the ISL sign SIBLING, with Hebrew mouthing for either “brother” or “sister.” Second, mouthing tends to follow ISL prosodic constituency, so that mouthing of a lexical sign is likely to extend over a host and clitic. For example, in the cliticized expression STORE-THERE, comprising a single phonological word, the mouthing of the host, STORE, spans the whole phonological word (Nespor and Sandler 1999; Sandler 1999b). Boyes-Braem (2001) makes a similar observation about what she calls stretched mouthing in Swiss-German Sign Language. This pattern indicates that mouthing enters into the sign language linguistic system, and is not just a sporadic borrowing from the spoken language. The amount of mouthing varies from sign language to sign language, apparently being more frequent in ISL and European sign languages than in ASL, for example.

In a study of various types of mouth actions in Norwegian Sign Language, Vogt-Svendsen (2001) observes that they coincide temporally with manually produced signing in reduplication, extension or shortening, intensity and rhythm, start- and end-points of movement, and number of movements. The coordination of hand and mouth, not only in mouth gestures, but in other mouth actions in sign languages, illustrates the symbiotic relationship between the two articulators in language.

In the next section, we turn to research in biology and evolution of language dealing with the relation between these two channels in the language domain.

7. Hand and mouth in the biology and evolution of language

This study and the conclusions drawn from it are compatible with recent research in the biology and evolution of language that posits a close biological tie between hand and mouth in the evolved human capacity for language. Some models propose that precursors to language employed manual gesture and vocalization together (Armstrong, Stokoe, and Wilcox 1995), or manual gesture first and then vocalization, with a period of overlap (Arbib 2005). Research on mirror neurons in monkeys, which have been proposed to underlie the ability for speech, suggests another link between hand and mouth. Rizzolatti, Fogassi, and colleagues hypothesize that this phenomenon (in which the same neurons are activated when an animal produces an action and when it watches another individual produce the same action) underlies imitation as a learning mechanism used by humans to translate perceived phonetic gestures into motor commands in speech (Gallese et al. 1996; Rizzolatti and Arbib 1998). Of special relevance in the present context is the finding that certain mirror neurons discharge when either the hand or the mouth moves, provided the movements have the same goal, i.e., in response to the same “behavioral meaning” (Gentilucci and Rizzolatti 1990).

Behavioral research on humans strengthens the case for this link. Gentilucci and colleagues found differences in formant frequency when subjects were asked to pronounce a syllable after bringing small vs. large pieces of fruit to the mouth (Gentilucci et al. 2004). The investigators suggest that voice modulation and articulatory movements of the vocal tract may have emerged from a manual action repertoire that was already in place.

An analysis of conventionalized mouth movements obligatorily associated with a subset of signs in British Sign Language points in the same direction (Woll 2001). Woll found that the mouth movements are articulatorily similar to the manual movements. For example, signs of this subset that involve opening the hand also involve opening the mouth (“pa”), and vice versa (“ap”). Woll suggests that this “echo phonology” may be a key to the way in which arbitrary spoken forms derived from nonarbitrary gestural forms in language evolution. This line of reasoning, and the types of mouth actions that Woll found, are compatible with the suggestion that the earliest precursors to spoken language were CV monosyllables uttered as a result of mandibular oscillation during grooming, for example (MacNeilage and Davis 2000). The tendency for humans to activate the mouth and the hands in communicative contexts may have been overlaid with vocalization, leading ultimately to spoken language.

8. Conclusion

In spoken language, the oral-aural channel transmits messages that are multi-leveled, hierarchically organized, highly complex, and bear certain significant characteristics that are shared by all languages but not by the communication systems of any other species. Speakers also gesture with the hands, face, and body, and this gesture augments and enriches the signal. People use all they have for communication. But how do they use it all? How is the information load divided up? How do the various signals interact?

Perhaps surprisingly, investigating sign language helps to bring certain aspects of the organization of the human communication system into sharper focus. By singling out iconic gestures performed by the mouth in sign languages, this study has shown that hand and mouth act in tandem to convey images symbolically in the service of language. Iconic mouth gestures in a sign language simultaneously convey imagistic information that is often complementary to that presented in the primary linguistic signal. There is not so much a continuum between gesture and the primary language signal as a complementary relationship in both modalities: if the primary language signal is oral, the gesture is manual, and vice versa. The evidence presented here provides independent support for the notion that gesture and linguistically organized material are structurally distinct but conceptually intertwined in human language. The gestures have properties that are not associated with linguistic organization: they are holistic, noncombinatoric, idiosyncratic, and context-sensitive. The linguistic signal is the converse: dually patterned, combinatoric, conventionalized, and far less context-dependent in the relevant sense.

In order to understand the gestures of sign language and their distribution, a distinction was made between mimetic replicas and iconic symbols — only the latter are expressed by mouth gestures and pattern symbiotically with linguistic description of the same event. This distinction may reflect different kinds of cognitive operations: nonhuman primates apparently use only the mimetic kind, which is not symbolic in the same sense.

As comprehensive theories of human language develop to encompass gesture, a privileged place in the model should be reserved for symbiotic symbolization by hand and mouth. Human language is universally bimodal, regardless of which modality is selected as primary, and it is versatile, in that each modality may assume the role of transmitting meaningful information that is either linguistically organized or gestural.

Biography

Wendy Sandler (b. 1949) is a professor at University of Haifa <wsandler@research.haifa.ac.il>. Her research interests include phonology, morphology, and prosody of sign languages, facial expression, gesture, and the development of nascent languages. Her publications include Phonological Representation of the Sign (1989); Sign Language and Linguistic Universals (2006, co-authored with Diane Lillo-Martin) and A Language in Space: The Story of Israeli Sign Language (2007, co-authored with Irit Meir).

Footnotes

I am grateful to Peter MacNeilage, Asher Koriat, and Sotaro Kita for comments on earlier stages of this work, and to Irit Meir for helpful comments on this paper. Thanks to Michael Tomasello for discussion of nonhuman primate gestures. I also wish to thank audiences at the International Conference on Gesture Studies 2007 and at the Max Planck Institute for Psycholinguistics for thought-provoking comments and discussion of this study. This research was supported in part by grants from the Israel Science Foundation, the U.S.-Israel Binational Science Foundation, and the National Institute on Deafness and other Communication Disorders of the National Institutes of Health.

1. The compelling term language instinct is borrowed from Pinker (1994).

2. In the original figure, published in Klima and Bellugi (1979), the first gesture, showing a round object and called an iconic gesture here, is included with the following mimetic sequence in the same illustration, together referred to as “pantomime” there. Here, thanks to Photoshop, the two are separated in order to demonstrate the distinction made in the present study between an iconic symbol and a mimetic replica.

3. Note that conventionalized signs in sign languages may originate as replicas. Once they function as words in the language, they become symbolic in every sense of the word.

4. Whether emotional facial expressions are gestural in Kendon’s sense of being intentionally communicative is a subject of controversy (see, e.g., Fridlund 1994). Either way, they are neither linguistically organized nor iconic.

5. Whether the distinction between the icon and the replica is useful in subcategorizing the manual gestures called “iconics” by McNeill is an open question.

6. The question of whether there are gestural elements in the manual part of sign language is beyond the scope of this work.

7. A movie of this sequence in slow motion can be viewed at http://dx.doi.org/10.1515/semi.2009.035_supp-1

8. Two examples can be viewed at http://dx.doi.org/10.1515/semi.2009.035_supp-2

9. The idiosyncrasy of gestures produced with the mouth contrasts starkly with the conventionalized use of the upper face in ISL for intonation (Nespor and Sandler 1999), which is far more uniform across signers (Dachkovsky in press). It also contrasts with the systematic use of the mouth for conventionalized linguistic purposes mentioned in Section 6.

10. Thanks very much to Diane Brentari for generously sharing her Tweety data (collected with the support of NSF grant BCS 0112391).

11. A Hans Christian Andersen Story, The Little Mermaid, signed (in ASL) by Ben Bahan, distributed by Dawn Press.

12. In a highly informative volume on uses of the mouth in sign languages, both mouth components and adverbial/adjectival mouth shapes are referred to as mouth gestures (Boyes-Braem and Sutton-Spence 2001). We reserve that term for the iconic mouth gestures described in this study.

13. As is the case in many countries with deaf education systems, a sign-accompanied speech system has arisen in Israel, which can be called Signed Hebrew or Manually Coded Hebrew. In Manually Coded Hebrew (as in Manually Coded English, Manually Coded Dutch, etc.), bare signs are selected from the sign language lexicon and strung together in the word order of the spoken language, often with contrived manual symbols to stand for bound morphemes of the spoken language. The system, intended to aid in the Hebrew instruction of deaf children, is not a natural language, and is not the object of study here. See Meir and Sandler (2008) for a comparison between a natural sign language and a manually coded system.

References

- Arbib Michael A. From monkey-like action recognition to human language: An evolutionary framework for neurolinguistics. Behavioral and Brain Sciences. 2005;28(2):105–124. doi: 10.1017/s0140525x05000038.

- Armstrong David E, Stokoe William C, Wilcox Sherman. Gesture and the nature of language. Cambridge: Cambridge University Press; 1995.

- Aronoff Mark, Meir Irit, Padden Carol, Sandler Wendy. The roots of linguistic organization in a new language. Interaction Studies. 2008;9(1):133–149. doi: 10.1075/is.9.1.10aro. Special Issue.

- Aronoff Mark, Meir Irit, Padden Carol, Sandler Wendy. Morphological universals and the sign language type. In: Booij G, van Marle J, editors. Yearbook of Morphology 2004. Dordrecht/Boston: Kluwer Academic; 2004. pp. 19–39.

- Aronoff Mark, Meir Irit, Sandler Wendy. The paradox of sign language morphology. Language. 2005;81(2):301–344. doi: 10.1353/lan.2005.0043.

- Baker Charlotte, Padden Carol A. Focusing on the nonmanual components of ASL. In: Siple Patricia, editor. Understanding language through sign language research. New York: Academic Press; 1978. pp. 27–57.

- Baker-Shenk Charlotte. A micro-analysis of the non-manual components of questions in American Sign Language. Berkeley, CA: University of California dissertation; 1983.

- Bergman Brita, Wallin Lars. A preliminary analysis of visual mouth segments in Swedish Sign Language. In: Boyes Braem P, Sutton-Spence R, editors. The hands are the head of the mouth: The mouth as articulator in sign languages. Hamburg: Signum-Verlag; 2001. pp. 51–68. (International Studies of Sign Language and Communication of the Deaf 39)

- Bellugi Ursula, Fischer Susan. A comparison of signed and spoken language. Cognition. 1972;1:173–200.

- Bloomfield Leonard. Language. New York: Henry Holt; 1933.

- Boyes Braem Penny, Sutton-Spence Rachel, editors. The hands are the head of the mouth: The mouth as articulator in sign languages. Hamburg: Signum-Verlag; 2001. (International Studies of Sign Language and Communication of the Deaf 39)

- Boyes Braem Penny. Functions of the mouthings in the signing of Deaf early and late learners of Swiss German Sign Language (DSGS). In: Boyes Braem P, Sutton-Spence R, editors. The hands are the head of the mouth: The mouth as articulator in sign languages. Hamburg: Signum-Verlag; 2001. pp. 99–132. (International Studies of Sign Language and Communication of the Deaf 39)

- Brentari Diane. Theoretical foundations of American Sign Language phonology. Chicago: University of Chicago dissertation; 1990.

- Brentari Diane. A prosodic model of sign language phonology. Cambridge, MA: MIT Press; 1998.

- Butcher Carol, Goldin-Meadow Susan. In: McNeill David, editor. Language and gesture. Cambridge: Cambridge University Press; 2000. pp. 235–257.

- Capirci Olga, Contaldo Annarita, Caselli Maria C, Volterra Virginia. From action to language through gesture: A longitudinal perspective. Gesture. 2005;5(1–2):155–177.

- Coulter Geoffrey. On the nature of ASL as a monosyllabic language. Paper presented at the Annual Meeting of the Linguistic Society of America; San Diego, CA; 1982.

- Dachkovsky Svetlana. Facial expression as intonation in ISL: The case of conditionals. Haifa: University of Haifa dissertation; 2005.

- Dachkovsky Svetlana. Facial expression as intonation in sign language: The case of ISL conditionals. In: Quer Josep, editor. Theoretical issues in sign language research. Hamburg: Verlag; in press.

- Dachkovsky Svetlana, Sandler Wendy. Visual intonation in the prosody of a sign language. Language and Speech. Special issue. In press. doi: 10.1177/0023830909103175.

- Deacon Terrence W. The symbolic species. New York & London: W.W. Norton; 1997.

- Duncan Susan. Gesture in signing: A case study from Taiwan Sign Language. Language and Linguistics. 2005;6(2):279–318.

- Ekman Paul, Friesen Wallace V. The repertoire of nonverbal behavior: Categories, origins, usage, and coding. Semiotica. 1969;1:49–98.

- Ekman Paul, Friesen Wallace V. Facial action coding system. Palo Alto, CA: Consulting Psychologists Press; 1978.

- Emmorey Karen. Do signers gesture? In: Messing Lynn S, Campbell Ruth, editors. Gesture, speech, and sign. Oxford: Oxford University Press; 1999. pp. 133–159.

- Emmorey Karen. Perspectives on classifier constructions in sign languages. Mahwah, NJ: Lawrence Erlbaum Associates; 2003.

- Enfield Nick J. On linear segmentation and combinatorics in co-speech gesture: A symmetry-dominance construction in Lao fish trap descriptions. Semiotica. 2004;149(1/4):57–123.

- Fridlund Alan J. Human facial expression: An evolutionary view. San Diego: Academic Press; 1994.

- Gallese Vittorio, Fadiga Luciano, Fogassi Leonardo, Rizzolatti Giacomo. Action recognition in the premotor cortex. Brain. 1996;119(2):593–609. doi: 10.1093/brain/119.2.593.

- Gentilucci Maurizio, Rizzolatti Giacomo. Cortical motor control of arm and hand movements. In: Goodale Melvyn A, editor. Vision and action: The control of grasping. Norwood, NJ: Ablex; 1990. pp. 147–162.

- Gentilucci Maurizio, Santunione Paola, Roy Alice C, Stefanini Silvia. Execution and observation of bringing a fruit to the mouth affect syllable pronunciation. European Journal of Neuroscience. 2004;19(1):190–202. doi: 10.1111/j.1460-9568.2004.03104.x.

- Goldin-Meadow Susan, McNeill David. The role of gesture and mimetic representation in making language the province of speech. In: Corballis Michael C, Lea Stephen EG, editors. The descent of mind. Oxford: Oxford University Press; 1999. pp. 155–172.

- Goldin-Meadow Susan. Hearing gesture. Cambridge & London: Belknap & Harvard University Press; 2003.

- Goldin-Meadow Susan, Nusbaum Howard, Kelly Spencer D, Wagner Susan M. Explaining math: Gesturing lightens the load. Psychological Science. 2001;12(6):516–522. doi: 10.1111/1467-9280.00395.

- Hamano Shoko Saito. The sound-symbolic system of Japanese. Gainesville, FL: University of Florida dissertation; 1986.

- Hauser Marc D, Chomsky Noam, Fitch W Tecumseh. The faculty of language: What is it, who has it, and how did it evolve? Science. 2002;298(5598):1569–1579. doi: 10.1126/science.298.5598.1569.

- Hockett Charles F. The origin of speech. Scientific American. 1960;203(3):88–96.

- Iverson Jana M, Goldin-Meadow Susan. Why people gesture when they speak. Nature. 1998;396(6708):228. doi: 10.1038/24300.

- Kendon Adam. Gesticulation and speech: Two aspects of the process of utterance. In: Key MR, editor. The relationship of verbal and nonverbal communication. The Hague: Mouton; 1980. pp. 207–227.

- Kendon Adam. Geography of gesture. Semiotica. 1981;37(1/2):129–163.

- Kendon Adam. Language and gesture: Unity or duality? In: McNeill David, editor. Language and gesture. Cambridge: Cambridge University Press; 2000. pp. 47–63.

- Kendon Adam. Gesture: Visible action as utterance. Cambridge: Cambridge University Press; 2004.

- Kita Sotaro. Two-dimensional semantic analysis of Japanese mimetics. Linguistics. 1997;35:379–415.

- Kita Sotaro. How representational gestures help speaking. In: McNeill David, editor. Language and gesture. Cambridge: Cambridge University Press; 2000. pp. 162–185.

- Klima Edward S, Bellugi Ursula. The signs of language. Cambridge, MA: Harvard University Press; 1979.

- Liddell Scott K. Nonmanual signals and relative clauses in American Sign Language. In: Siple Patricia, editor. Understanding language through sign language research. New York: Academic Press; 1978. pp. 59–90.

- Liddell Scott K. American Sign Language syntax. The Hague: Mouton; 1980.

- Liddell Scott K. Grammar, gesture, and meaning in American Sign Language. Cambridge: Cambridge University Press; 2003.

- Liddell Scott K, Johnson Robert E. American Sign Language compound formation processes, lexicalization, and phonological remnants. Natural Language and Linguistic Theory. 1986;4:445–513.

- Liddell Scott K, Metzger Melanie M. Gesture in sign language discourse. Journal of Pragmatics. 1998;30:657–697.

- Lillo-Martin Diane. Universal grammar and American Sign Language: Setting the null argument parameters. Dordrecht: Kluwer; 1991.

- MacDonald John, McGurk Harry. Visual influences on speech perception processes. Perception and Psychophysics. 1978;24:349–357. doi: 10.3758/bf03206096.

- MacNeilage Peter F, Davis Barbara. On the origin of internal structure of word forms. Science. 2000;288(5465):527–531. doi: 10.1126/science.288.5465.527.

- McIntire Marina L, Reilly Judy S. Nonmanual behaviors in L1 & L2 learners of American Sign Language. Sign Language Studies. 1988;17(61):351–375.

- McNeill David. Hand and mind. Chicago: University of Chicago Press; 1992.

- McNeill David, Duncan Susan D. Growth points in thinking-for-speaking. In: McNeill David, editor. Language and gesture. Cambridge: Cambridge University Press; 2000. pp. 141–161.

- Meir Irit, Sandler Wendy. A language in space: The story of Israeli Sign Language. Mahwah, NJ: Lawrence Erlbaum Associates; 2008.

- Meir Irit. A cross-modality perspective on verb agreement. Natural Language and Linguistic Theory. 2002;20(2):413–450.

- Neidle Carol, Kegl Judy, MacLaughlin Dawn, Bahan Benjamin, Lee Robert G. The syntax of American Sign Language: Functional categories and hierarchical structure. Cambridge, MA: MIT Press; 2000.

- Nespor Marina, Sandler Wendy. Prosodic phonology in Israeli Sign Language. Language and Speech. 1999;42(2–3):143–176.

- Padden Carol A. Some arguments for syntactic patterning in American Sign Language. Sign Language Studies. 1981;10(32):239–259.

- Padden Carol A. Interaction of morphology and syntax in American Sign Language. New York: Garland; 1988.

- Peirce Charles Sanders. Logic as semiotic: The theory of signs. In: Buchler Justus, editor. The philosophical writings of Peirce. New York: Dover; 1987 [1903]. pp. 98–119.

- Perlmutter David. Sonority and syllable structure in American Sign Language. Linguistic Inquiry. 1992;23:407–422.

- Petronio Karen, Lillo-Martin Diane. Wh-movement and the position of Spec-CP: Evidence from American Sign Language. Language. 1997;73:18–57. doi: 10.2307/416592.

- Pfau Roland, Quer Josep. On the syntax of negation and modals in Catalan Sign Language and German Sign Language. In: Perniss Pamela, Pfau Roland, Steinbach Markus, editors. Visible variation: Comparative studies on sign language structure. Berlin & New York: Mouton de Gruyter; 2007. pp. 129–161. (Trends in linguistics: Studies and monographs 188)

- Pinker Steven. The language instinct. New York: William Morrow; 1994.

- Plooij FX. Some basic traits of language in wild chimpanzees? In: Lock Andy, editor. Action, gesture, and symbol. London: Academic Press; 1978. pp. 111–131.

- Pollick Amy, de Waal Frans DM. Ape gestures and language evolution. Proceedings of the National Academy of Sciences. 2007;104(19):8184–8189. doi: 10.1073/pnas.0702624104.

- Reilly Judy S, McIntire Marina L, Bellugi Ursula. The acquisition of conditionals in American Sign Language: Grammaticized facial expressions. Applied Psycholinguistics. 1990;11:369–392.

- Rizzolatti Giacomo, Arbib Michael A. Language within our grasp. Trends in Neurosciences. 1998;21:188–194. doi: 10.1016/s0166-2236(98)01260-0.

- Sandler Wendy. Assimilation and feature hierarchy in American Sign Language. In: Papers from the Chicago Linguistic Society: Parasession on autosegmental and metrical phonology. Chicago: Chicago Linguistic Society; 1987. pp. 266–278.

- Sandler Wendy. Phonological representation of the sign: Linearity and nonlinearity in American Sign Language. Dordrecht: Foris; 1989.

- Sandler Wendy. Temporal aspect and American Sign Language. In: Fischer S, Siple P, editors. Theoretical issues in sign language research. Chicago: University of Chicago Press; 1990. pp. 103–129.

- Sandler Wendy. Sign language and modularity. Lingua. 1993;89:315–351.

- Sandler Wendy. The medium and the message: Prosodic interpretation of linguistic content in Israeli Sign Language. Sign Language & Linguistics. 1999a;2:187–216.

- Sandler Wendy. Cliticization and prosodic words in a sign language. In: Hall Tracy, Kleinhenz Ursula, editors. Studies on the phonological word. Amsterdam: Benjamins; 1999b. pp. 223–254.

- Sandler Wendy. On the complementarity of signed and spoken languages. In: Levy Yonata, Schaeffer Jeannette, editors. Language competence across populations: Towards a definition of SLI. Mahwah, NJ: Lawrence Erlbaum Associates; 2003. pp. 383–409.

- Sandler Wendy. Prosodic constituency and intonation in a sign language. Linguistische Berichte. 2005;13:60–86.

- Sandler Wendy. Watching the emergence of linguistic organization. Paper presented at the Max Planck Institute for Psycholinguistics colloquium; 2007.

- Sandler Wendy. The syllable in sign language: Considering the other natural language modality. In: Davis Barbara L, Zajdo Kristine, editors. The syllable in speech production. New York: Taylor & Francis; 2008. pp. 379–408.

- Sandler Wendy. The visual prosody of sign language. In: Woll B, Steinbach M, Pfau R, editors. Handbook of sign language linguistics. Berlin & New York: Mouton de Gruyter; in press.

- Sandler Wendy, Meir Irit, Padden Carol A, Aronoff Mark. The emergence of grammar: Systematic structure in a new sign language. Proceedings of the National Academy of Sciences. 2005;102:2661–2665. doi: 10.1073/pnas.0405448102.

- Sandler Wendy, Lillo-Martin Diane. Sign language and linguistic universals. Cambridge: Cambridge University Press; 2006.