Abstract

This paper uses random assignment in professional golf tournaments to test for peer effects in the workplace. We find no evidence that playing partners’ ability affects performance, contrary to recent evidence on peer effects in the workplace from laboratory experiments, grocery scanners, and soft-fruit pickers. In our preferred specification we can rule out peer effects larger than 0.043 strokes for a one stroke increase in playing partners’ ability. Our results complement existing studies on workplace peer effects and are useful in explaining how social effects vary across labor markets, across individuals, and with the form of incentives faced.

Is an employee’s productivity influenced by the productivity of his or her nearby coworkers? The answer to this question is important for the optimal organization of labor in a workplace and for the optimal design of incentives.1, 2 Despite their importance, however, peer effects questions like this one are notoriously difficult to answer empirically. In this paper we exploit the conditional random assignment of golfers to playing partners in professional golf tournaments to identify peer effects among co-workers in a high-skill professional labor market.3

A few other recent studies have found evidence that peer effects can be important in several work settings: among low-wage workers at a grocery store (Alexandre Mas and Enrico Moretti, 2008), among soft-fruit pickers (Oriana Bandiera, Iwan Barankay and Imran Rasul, 2008), and among workers performing a simple task in a laboratory setting (Armin Falk and Andrea Ichino, 2006). However, the two field studies (Mas and Moretti, 2008; Bandiera, Barankay and Rasul, 2008) rely on observational variation in peers, while the Falk and Ichino study examines the behavior of high-school students in a laboratory. Ours is the first field study of peer effects in the workplace to exploit random assignment of peers. It is also the first to study a high-skill professional labor market. As such, a comparison of results across markets speaks to heterogeneity in the importance of peer effects that is missed by looking at one particular type of labor market.

Random assignment is an important attribute of a peer effects research design (see e.g. Bruce Sacerdote, 2001 and David J. Zimmerman, 2003), but it is not a panacea. Shocks common to a randomly assigned peer group still make causal inference difficult. As we describe below, our design offers a convenient way to test and control for such common shocks (e.g. weather shocks that vary over the course of the day) by examining golfers who play nearby on the golf course and in close temporal proximity, but in different groups, and with whom there are therefore no social interactions.

The main result of this paper is that neither the ability nor the current performance of playing partners affects the performance of professional golfers. Output in golf is measured in strokes, the number of times a player hits the ball in his attempt to get it into the hole. In our preferred specification, we can rule out peer effects larger than 0.043 strokes for a one-stroke increase in playing partners’ ability, and our point estimate is actually negative. The results are robust to alternative peer effects specifications, and we rule out multiple forms of peer effect mechanisms including learning and motivation.

We also point out a bias that is inherent in typical tests for random assignment of peers and suggest a simple correction. Because individuals cannot be their own peers, even random assignment generates a negative correlation in pre-determined characteristics of peers. Intuitively, the urn from which the peers of an individual are drawn does not include the individual. Thus, the population at risk to be peers with high-ability individuals is on average lower ability than the population at risk to be peers with low-ability individuals. As a result, the typical test for random assignment, a regression of i’s predetermined characteristic on the mean characteristic of i’s peers, produces a slightly negative coefficient even when peers are truly randomly assigned. This bias can cause researchers to infer that peers are randomly assigned when in fact there is positive matching. We present results from Monte Carlo simulations showing that this bias can be reasonably large, and that it is decreasing in the size of the population from which peers are selected. We then propose a simple solution to this problem— controlling for the average ability of the population at risk to be the individual’s peers— and show that including this additional regressor produces test statistics that are well-behaved.

Our results compare the performance of professional golfers that are randomly grouped with playing partners of differing abilities. Within a playing group, players are proximate to one another and can therefore observe each others’ shots and scores. This proximity creates the opportunity to learn from and be motivated by peers.

There are several learning opportunities during a round. For example, a player must judge the direction of the wind when hitting his approach shot to the putting green. Wind introduces uncertainty into shot and club selection. Thus, by observing the ball flight of others in the playing group, a player can reduce this uncertainty and increase his chance of hitting a successful shot. Another example is the putting green. Subtle slopes, moisture, and the type of grass all affect the direction and speed of a putt. The chance to learn how a skilled putter manages these conditions may confer an advantage to a peer golfer.4

Motivation can also affect performance in several ways. The chance to visualize a good shot may help a player to execute his own shot successfully. Similarly, seeing a competitor play well may directly motivate a player and help him to focus his mental attention on the task at hand. Moreover, a player’s self-confidence, and in turn his performance (Roland Benabou and Jean Tirole, 2002), may be directly affected by the abilities or play of his peers (Leon Festinger, 1954). Interestingly, there is a popular perception among PGA golfers that these psychological effects may facilitate play.5

In addition to a simple overall measure of skill, we present results based on multidimensional measures of ability that line up nicely with these important potential underlying mechanisms of peer effects. We discuss why these data allow us to distinguish between learning effects and motivational effects, the latter of which are more psychological in nature. The results show no evidence of peer effects of either the learning or motivational sorts.

Golf tournaments are well designed to identify peer effects for two other reasons. First, our results are purged of relative incentive effects because the objective for each player in a golf tournament is to score the lowest, regardless of with whom that player is playing.6 Pay is based on relative performance, but performance is compared to the entire field of entrants in a tournament, not relative to the players within a playing group. This contrasts with other settings, such as classrooms, where the performance of an individual is assessed relative to the individual’s peers. For example, in a classroom where grades are based on relative performance we would expect to see students try hard to perform better than their peers. Such behavior is the result of response to incentives, not of learning from peers.7

And second, we are able to identify peer effects in a setting devoid of most production-technology complementarities, i.e. cross-productivity effects that stem from the production function rather than social or behavioral effects. This is unlike, as one example, the grocery store setting considered in Mas and Moretti (2008). As they mention, in the supermarket checkout setting there is a shared resource, a bagger who helps some checkout workers place items in customers’ bags. If the bagger spends more time helping the fastest checkout worker, this will negatively affect the productivity of others in his shift.

The remainder of this paper is organized as follows: section I reviews the literature, section II briefly describes the PGA Tour and our data, section III describes the methodological point concerning bias in typical peer effects regressions and shows results verifying the random assignment mechanism, section IV discusses our empirical approach, section V discusses the validity of our empirical strategy and our results and section VI concludes.

I. Related Literature

It has long been recognized by psychologists that an individual’s performance might be influenced by his peers. The first study to show evidence of such peer effects was Norman Triplett (1898), who noted that cyclists raced faster when they were pitted against one another, and slower when they raced only against a clock. While Triplett’s study shows that the presence of others can facilitate performance, others found that the presence of others can inhibit performance. In particular, Floyd Allport (1924) found that people in a group setting wrote more refutations of a logical argument, but that the quality of the work was lower than when they worked alone. Similarly Joseph Pessin (1933) found that the presence of a spectator reduced individual performance on a memory task. Robert B. Zajonc (1965) resolved these paradoxical findings by pointing out that the task in these experimental setups varied in a way that confounded the results. In particular, he argued that for well-learned or innate tasks, the presence of others improves performance. For complex tasks however, he argued that the presence of others worsens performance.

Guided by the intuition that peers may affect behavior and hence market outcomes, several economic studies of peer effects have recently emerged in a variety of domains. Examples include education (Bryan S. Graham, 2008), crime (Edward L. Glaeser, Bruce Sacerdote and Jose A. Scheinkman, 1996), unemployment insurance take-up (Kory Kroft, 2008), welfare participation (Marianne Bertrand, Erzo F.P. Luttmer and Sendhil Mullainathan, 2000), and retirement planning (Esther Duflo and Emmanuel Saez, 2003). The remainder of this section reviews three papers that are conceptually most similar to our research, in that they attempt to measure peer effects in the workplace or in a work-like task.

The first economic study of peer effects in a work-like setting is a laboratory experiment conducted by Falk and Ichino (2006). This experiment measures how an individual’s productivity is influenced by the presence of another individual working on the same task: stuffing letters into envelopes. They find moderate and significant peer effects: a 10 percent increase in peers’ output increases a given individual’s effort by 1.4 percent. A criticism of this study is one that applies broadly to other studies in the lab; in particular, that it may have low external validity because of experimenter demand effects or because experimental subjects get paid minimal fees to participate and as a result their incentives may be weak (Steven Levitt and John A. List, 2007).8

Two recent studies of peer effects in the workplace have examined data collected from the field. Mas and Moretti (2008) measure peer effects directly in the workplace using grocery scanner data. There is no explicit randomization, but the authors present evidence that the assignment of workers to shifts appears haphazard. Since grocery store managers do not measure individual output directly in a team production setting, one might expect to observe significant free riding and suboptimal effort. Instead, this study finds evidence of significant peer effects with magnitudes similar to those found by Falk and Ichino (2006): a 10 percent increase in the average permanent productivity of co-workers increases a given worker’s effort by 1.7 percent. As Mas and Moretti discuss in their paper, grocery checkout work is an occupation where compensation is not very responsive to changes in individual effort and output. They conjecture that “economic incentives alone may not be enough to explain what motivates [a] worker to exert effort in these jobs.”

Bandiera, Barankay and Rasul (2008) examine how the identity and skills of nearby workers affect the productivity of soft-fruit pickers on a farm. Assignment of workers to rows of fruit is made by a combination of managers. Though assignment is not explicitly random, the authors present evidence to support the claim that it is orthogonal to worker productivity. The authors find that productivity responds to the presence of a friend working nearby, but do not show evidence that productivity responds to the skill-level of non-friend co-workers. High-skilled workers slow down when working next to a less productive friend, and low-skilled workers speed up when working next to a more productive friend. Workers are paid piece rates in this setting and are willing to forgo income to conform to a social norm along with friends. The authors also find that workers respond to the cost of conforming: on high-yield days the relatively high-skill friend slows down less and the relatively low-skill friend works harder.

In light of the fact that monetary incentives are weaker in the Mas and Moretti (2008) study than in Bandiera et al.’s (2008), it is interesting to note that Mas and Moretti (2008) find more general peer effects. A recent paper by Thomas Lemieux, W. Bentley MacLeod and Daniel Parent (2009) points out that an increasing fraction of US jobs contain some type of performance pay based either on a commission, a bonus, or a piece rate.9 Therefore, it is natural to ask whether peer effects also exist in settings with stronger incentives than either of the aforementioned peer effects studies.10 In the setting that we consider, professional golf tournaments, compensation is determined purely by performance.11 Pay is high and the pay structure is quite convex. For example, during the 2006 PGA season, the top prize money earner, Tiger Woods, earned almost $10 million, and 93 golfers earned in excess of $1 million during the season. We discuss the convexity of tournament payouts later in the paper.

The existing field studies in the literature on peer effects in the workplace have focused on low-skilled jobs in particular industries. It is possible that there is heterogeneity in how susceptible individuals are to social effects at work. Motivated by this, we ask whether peer effects exist in workplaces made up of highly skilled professional workers.12

In terms of research design, our study is related to Sacerdote (2001) and Zimmerman (2003), who measure peer effects in higher education using the random assignment of dorm roommates at Dartmouth and Williams Colleges, respectively. Sacerdote finds that an increase in roommate’s GPA by 1 point increases own freshman year GPA by 0.120. The coefficient on roommate’s GPA, however, drops significantly— from 0.120 to 0.068— when dorm fixed effects are included, suggesting that common shocks might be driving some of the correlation in GPAs between roommates. As we discuss below, we are able to test directly for the most likely source of common shocks to golfers.13

II. Background and Data

A. Institutional Details of the PGA Tour

Relevant Rules14

There is a prescribed set of rules to determine the order of play. The player with the best (i.e. lowest) score from the previous hole takes his initial shot first, followed by the player with the next best score from the previous hole. After that, the player who is farthest from the hole always shoots next.

Selection

Golfers from all over the world participate in PGA tournaments that are held (almost) every week.15 At the end of each season, the top 125 earners in PGA Tour events are made full-time members of the PGA Tour for the following year. Those not in the top 125 and anyone else who wants to become a full-time member of the PGA Tour must go to ‘Qualifying School’, where there are a limited number of spots available to the top finishers. For most PGA Tour tournaments, the players have the right but not the obligation to participate in the tournament. In practice, there is variation in the fraction of tournaments played by PGA Tour players. The median player plays in about 59 percent of a season’s tournaments.16 Some players avoid tournaments that do not have a large enough purse or a high prestige, while other players might avoid tournaments that require a substantial amount of travel. Stephen Bronars and Gerald Oettinger (2008) look directly at several determinants of selection into golf tournaments and they find a substantial effect of the level of the purse on entry decisions. Because membership on the tour for the following year is based on earnings, lower-earning players have an incentive to enter tournaments that higher-skilled players choose not to enter. Conditional on the set of players who enter a tournament, playing partners are randomly assigned within categories (which we define and describe just below). Unconditional on this fully interacted set of fixed effects, assignment is not random.

Assignment Rule

Players are continuously assigned to one of four categories according to rules described in detail in Appendix A. Players in category 1 are typically tournament winners from the current or previous year or players in the top 25 on the earnings list from the previous year. These include players such as Tiger Woods, Phil Mickelson and Ernie Els. Players in category 1A include previous tournament winners who no longer qualify for category 1 and former major championship winners such as Nick Faldo and John Daly. Players in category 2 are typically those between 26 and 125 on the earnings list from the previous year, and players who have made at least 50 cuts during their career or who are currently ranked among the top 50 on the World Golf Rankings. Category 3 consists of all other entrants in the tournament. Within these categories, tournament directors then randomly assign playing partners to groups of three golfers.17 These groups then play together for the first two (of a total of four) rounds of the tournament.
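The conditional random assignment described above can be illustrated with a short sketch. It is not the PGA Tour's actual procedure: the function name, the player labels, and the handling of categories whose size is not a multiple of three are all assumptions made for illustration.

```python
import random
from collections import defaultdict

def assign_groups(entrants, rng, group_size=3):
    """Randomly group entrants within each category.

    entrants: list of (player, category) pairs for one tournament.
    Returns a list of (category, [players]) groups.
    """
    by_category = defaultdict(list)
    for player, category in entrants:
        by_category[category].append(player)

    groups = []
    for category in sorted(by_category):
        players = by_category[category]
        rng.shuffle(players)  # random order within the category
        for start in range(0, len(players), group_size):
            # Assumption: leftover players form a smaller final group.
            groups.append((category, players[start:start + group_size]))
    return groups

# Hypothetical field: six category 1 players and nine category 3 players.
field = [(f"player_{i}", "1") for i in range(6)]
field += [(f"player_{i}", "3") for i in range(6, 15)]
for category, members in assign_groups(field, random.Random(0)):
    print(category, members)
```

Because shuffling happens separately within each category, a player's partners are random only conditional on the tournament and category, which is why the tests below condition on tournament-by-category cells.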

Tournament Details

Tournaments are generally four rounds of 18 holes played over four days. Prizes are awarded based on the cumulative performance of players over all four rounds. At the end of the second round, there is a cut that eliminates approximately half of the tournament field based on cumulative performance. Most tournaments have 130–160 players, the top 70 (plus ties) of which remain to play the final two rounds. We evaluate performance from the first two rounds only, since in the third and fourth rounds players are assigned to playing partners based on performance of the previous rounds.18

Economic Incentives

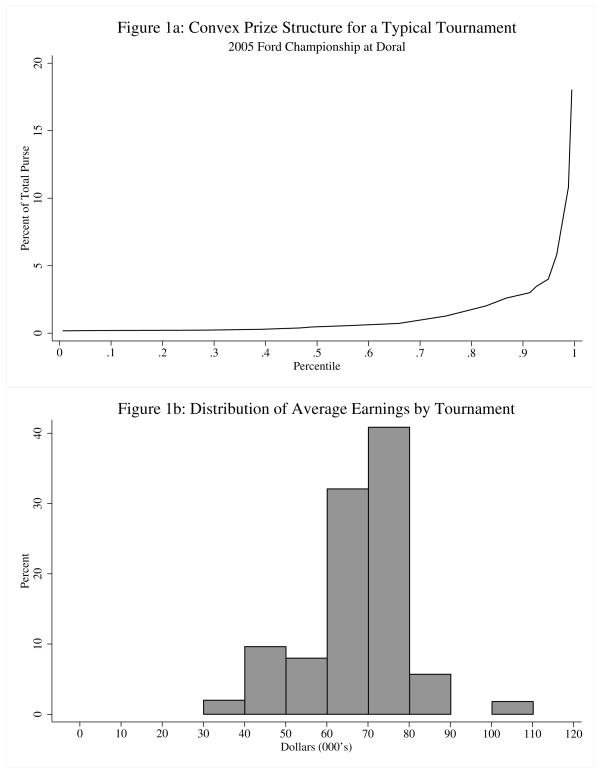

A player must survive the second-round cut to qualify to earn prize money.19 The prize structure is extremely convex: first prize is generally 18 percent of the total purse. Furthermore, the full economic incentives are even stronger than this implies, since better performance generally attracts endorsement compensation. Figure 1a shows the convexity in the prize structure of a typical tournament, and Figure 1b shows the distribution of average earnings in our sample.

Figure 1. Tournament Earnings

Note: Figure 1a shows the distribution of earnings within a typical tournament; the convex prize structure implies that the top few percentiles receive a majority of the purse. Figure 1b shows the distribution in average earnings across all tournaments in our sample.

B. Data

Key Variables

We collected information on tee times, groupings, results, earnings, course characteristics, and player statistics and characteristics from the PGA Tour website and various other websites.20 Most of our data spans the 1999–2006 golf seasons; however, we only have tee times, groupings (i.e. the peer groups) and categories for the 2002, 2005, and 2006 seasons.21 As discussed below, to construct a pre-determined ability measure, we use the 1999, 2000, and 2001 data for the players from the 2002 season and the 2003 and 2004 data for the players from the 2005 and 2006 seasons. Our data therefore allow us to observe the performance of the same individuals playing in many PGA tournaments over three seasons (2002, 2005, and 2006).

We also collected a rich set of disaggregated player statistics for all years of the sample. These variables were collected in hopes of shedding light on the mechanism through which the peer effects operate. These measures of skill include the average number of putts per round, average driving distance, a measure of driving accuracy, and the average number of greens hit in regulation (the fraction of times a golfer gets the ball onto the green in at least two shots less than par). We discuss below how these measures might allow us to separately identify two specific forms of peer effects: learning and motivation.

Sample Selection

As discussed in more detail in Appendix A, the scores from some tournaments must be dropped because random assignment rules are not followed. The four most prestigious tournaments (called ‘major championships’) do not use the same conditionally random assignment mechanism. For example, the U.S. Open openly admits to creating ‘compelling’ groups to stimulate television ratings. The vast majority of PGA tournaments, however, use the same random assignment mechanism, and we have confirmed this through several personal communications with the PGA Tour, and through statistical tests reported below.

C. A Measure of Ability

To estimate peer effects, we require a measure of ability or skill for every player. We construct such a measure based on players’ scores in prior years.22 However, a simple average of scores from prior years understates differences in ability across players because better players tend to self-select into tournaments played on more difficult golf courses.

We address this problem using a simplified form of the official handicap correction used by the United States Golf Association (USGA), the major golf authority that oversees the official rules of the game. For the purpose of computing the handicap correction, the USGA estimates the difficulty of most golf courses in the United States. Using scores of golfers of different skill levels, the USGA assigns each course a slope and a rating, which are related to the estimated slope and intercept from a regression of score on ability. We normalize the slopes of the courses in our sample so that the average slope equals one.23 We then use the courses’ ratings and adjusted slopes to regression-adjust each past score, indexed by n. Specifically, for each past score we compute

$$h_n = \frac{\text{score}_n - \text{rating}_c}{\text{slope}_c}$$

where c indexes golf courses and $\text{slope}_c$ is the normalized slope. For each golfer in each year, we then take the simple average of $h_n$ for the scores from the previous two or three years to be our measure of ability.24 This ability measure is essentially an estimate of the number of strokes more than 72 (i.e. above par) that a golfer typically takes in a round, on an average course that is used for professional golf tournaments. As is true for golf scores generally, higher values are worse. Thus the measure of ability is positively correlated with a golfer’s scores.

This correction varies from the official USGA handicap correction in two ways. First, the official correction predicts scores on the average golf course, whose slope is 113, whereas we calibrate to the average course slope in our sample. This adjustment ensures that our measure of ability is in the same units as the dependent variable of our peer effects regressions. Second, the official handicap formula averages the 10 lowest of the last 20 adjusted scores. Because we are interested in predicted performance, we instead average over all past scores. We have experimented with several other estimates of ability, including the simple average of scores from the previous two years, the average score from the previous two years after adjusting for course fixed effects and a best linear predictor. Estimates based on these alternative measures of ability yield very similar results.
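A minimal sketch of the ability calculation follows. It assumes the adjustment takes the form (score minus course rating) divided by the normalized slope, and that slopes are normalized by their simple average across courses. The course names, ratings, slopes, and scores are hypothetical.

```python
from statistics import mean

def normalize_slopes(slopes):
    """Rescale course slopes so that their average across courses equals 1."""
    average = mean(slopes.values())
    return {course: slope / average for course, slope in slopes.items()}

def ability(past_rounds, ratings, normalized_slopes):
    """Average adjusted score over all past rounds (higher means worse).

    past_rounds: list of (course, score) pairs from prior seasons.
    """
    adjusted = [
        (score - ratings[course]) / normalized_slopes[course]
        for course, score in past_rounds
    ]
    return mean(adjusted)

# Hypothetical courses and rounds, for illustration only.
slopes = {"course_a": 130, "course_b": 150}
ratings = {"course_a": 74.0, "course_b": 76.0}
normalized = normalize_slopes(slopes)
rounds = [("course_a", 72), ("course_b", 70), ("course_b", 75)]
print(round(ability(rounds, ratings, normalized), 3))
```

Averaging over all past rounds, rather than the best 10 of the last 20, mirrors the choice described above to predict typical rather than best-case performance.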

III. Bias in Typical Tests for Random Assignment of Peers

A. Explaining the problem

Before presenting the empirical results, in this section we describe an important methodological consideration. Given the importance of random assignment, papers that report estimates of peer effects typically present statistical evidence to buttress the case that assignment of peers is random, or as good as random. The typical test is an OLS regression of individual i’s predetermined characteristic x on the average x of i’s peers, conditional on any variables on which randomization was conditioned. The argument is made that if assignment of peers is random, or if selection into peer groups is ignorable, then this regression should yield a coefficient of zero. In our case, this regression would be of the form

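$$\text{Ability}_{ikt} = \pi_1 + \pi_2\,\overline{\text{Ability}}_{-i,kt} + \delta_{tc} + \varepsilon_{ikt} \qquad (1)$$

where $\overline{\text{Ability}}_{-i,kt}$ is the mean ability of player i’s playing partners, i indexes players, k indexes (peer) groups, t indexes tournaments25, c indexes categories, and δtc is a fully interacted set of tournament-by-category dummies, including main effects. This is, for example, the test for random assignment reported in Sacerdote (2001).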

This test for the random assignment of individuals to groups is not generally well-behaved. The problem stems from the fact that an individual cannot be assigned to himself. In a sense, sampling of peers is done without replacement— the individual himself is removed from the ‘urn’ from which his peers are chosen. As a result, the peers for high-ability individuals are chosen from a group with a slightly lower mean ability than the peers for low-ability individuals.

Consider an example in which four individuals are randomly assigned to groups of two. To make the example concrete, let the individuals have pre-determined abilities 1, 2, 3, and 4. If pairs are randomly selected, there are three possible sets of pairs. Individual 1 has an equal chance of being paired with either 2, 3, or 4. So, the ex-ante average ability of his partner is 3. Individual 4 has an equal chance of being paired with either 1, 2, or 3, and thus the ex-ante average ability of his partner is 2. This mechanical relationship between own ability and the mean ability of randomly-assigned peers— which is a general problem in all peer effects studies— causes estimates of equation (1) to produce negative values of π̂2. Random assignment appears non-random, and positively matched peers can appear randomly matched.
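The four-person example can be checked by brute force. The sketch below enumerates the three equally likely pairings and averages each individual's partner ability. The function names are ours, and individuals are identified by their (distinct) ability values.

```python
from fractions import Fraction

def all_pairings(people):
    """Yield every way to split an even-sized list into unordered pairs."""
    if not people:
        yield []
        return
    first, rest = people[0], people[1:]
    for idx, partner in enumerate(rest):
        remaining = rest[:idx] + rest[idx + 1:]
        for tail in all_pairings(remaining):
            yield [(first, partner)] + tail

def expected_partner_ability(abilities):
    """Average partner ability for each individual across all pairings."""
    pairings = list(all_pairings(list(abilities)))
    totals = {a: Fraction(0) for a in abilities}
    for pairing in pairings:
        for a, b in pairing:
            totals[a] += b
            totals[b] += a
    return {a: total / len(pairings) for a, total in totals.items()}

expected = expected_partner_ability([1, 2, 3, 4])
for own, partner in sorted(expected.items()):
    print(own, partner)
```

The expected partner ability falls from 3 for individual 1 to 2 for individual 4, so own ability and expected peer ability are negatively related even though the pairing is purely random.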

This bias is decreasing in the size of the population from which peers are drawn, i.e. the size of the ‘urn’. As the urn increases in size, each individual contributes less to the average ability of the population from which peers are drawn, and the difference in average ability of potential peers for low and high ability individuals converges to zero. In settings where peers are drawn from large groups, ignoring this mechanical relationship is inconsequential. In our case, the average urn size is 60, and 25 percent of the time the urn size is less than 18.

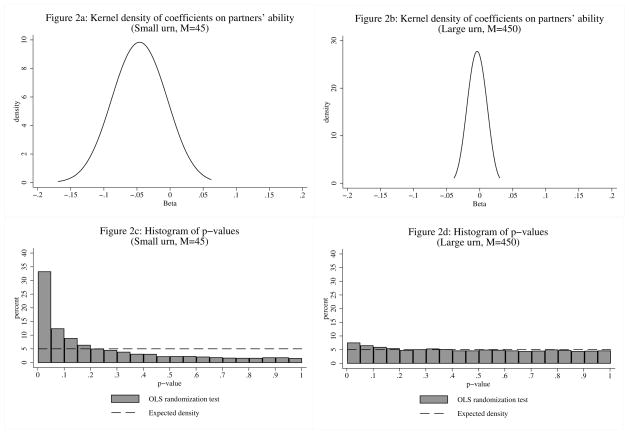

We present Monte Carlo results in Figure 2 which confirm that estimates of (1) are negatively biased and that the bias is decreasing in the size of the urn. We report the results from two simulations. For the first simulation, we created 55 players with ability drawn from a normal distribution with mean zero and standard deviation one. We then created 100 tournaments and for each tournament randomly selected M players. These M players were then assigned to groups of three. M, which corresponds to the size of the urn from which peers were drawn, was randomly chosen to be either 39, 42, 45, 48, or 51 with equal probability for an average M of 45. We explain below the reason for the variation in M. Finally, we estimated an OLS regression of own ability on the average of partners’ ability, controlling for tournament fixed effects, and saved the estimates of π2 and the p-values. This procedure was repeated 10,000 times. For the second simulation, we increased the average size of M by creating 550 players, and allowing M to take on values of 444, 447, 450, 453, and 456 with equal probability for an average M of 450.

Figure 2. Monte Carlo of OLS Randomization Tests

Note: This graph displays the results of a Monte Carlo study to show the bias of the randomization test described in the text. There are two sets of results: (1) the “Small urn” setting (where three-person pairings are randomly drawn from an urn of size 45) and (2) the “Large urn” setting (where three-person pairings are randomly drawn from an urn of size 450). In the Large urn setting the randomization test is fairly well behaved, while in the Small urn setting the distribution of betas is negatively biased and p-values from a randomization test that tests whether beta is statistically significantly different from zero are very far from uniformly distributed. Monte Carlo results are based on 10,000 repetitions.

The results from the first simulation are shown on the left side of Figure 2. As predicted, the typical OLS randomization test is not well behaved. The test substantially overrejects, rejecting at the 5-percent level more than 18 percent of the time. Even though peers are randomly assigned, the estimated correlation between abilities of peers is on average −0.046. This negative relationship is exactly as one should expect, resulting from the fact that individuals cannot be their own peers.
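The magnitude of this bias can be approximated with a back-of-the-envelope calculation. The derivation below is a sketch under simplifying assumptions that are ours: a single urn of N players whose abilities have finite-population variance $\sigma^2$ (divisor N), groups of size g formed uniformly at random, and $\bar{x}_{-i}$ denoting the mean ability of individual i's g − 1 partners.

$$\operatorname{Cov}(x_i, x_j) = -\frac{\sigma^2}{N-1} \quad (i \neq j), \qquad \operatorname{Cov}(x_i, \bar{x}_{-i}) = -\frac{\sigma^2}{N-1}$$

$$\operatorname{Var}(\bar{x}_{-i}) = \frac{\sigma^2}{g-1}\left(1 - \frac{g-2}{N-1}\right) = \frac{\sigma^2\,(N-g+1)}{(g-1)(N-1)}$$

$$\pi_2 \approx \frac{\operatorname{Cov}(x_i, \bar{x}_{-i})}{\operatorname{Var}(\bar{x}_{-i})} = -\frac{g-1}{N-g+1}$$

With g = 3 and N = 45 this gives −2/43 ≈ −0.047, in line with the average estimate of −0.046 in the first simulation. With N = 450 it gives −2/448 ≈ −0.004, and in the four-person pairing example (g = 2, N = 4) it gives −1/3.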

The intuitive argument made above also implies that the size of the bias should be decreasing in M. Indeed, this is the case. The right side of Figure 2 shows results from the second simulation where the urn size was increased by an order of magnitude. The typical randomization test is better behaved. The estimates of π2 center around zero, the test rejects at the 5-percent level 5.4 percent of the time, and p-values are close to uniformly distributed between 0 and 1. In short, the Monte Carlo results show that the typical test for randomization is biased when the set of individuals from which peers are drawn is relatively small.
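The simulation design can be sketched in a few lines of standard-library Python. This is an illustration rather than the code behind Figure 2: it uses far fewer repetitions than the 10,000 reported, records only the slope estimate (no p-values), and absorbs tournament fixed effects by demeaning within tournament. The function name is ours.

```python
import random

def naive_pi2(n_players, urn_sizes, n_tournaments, rng):
    """One simulated slope from regressing own ability on partners' mean
    ability, with tournament fixed effects absorbed by demeaning."""
    ability = [rng.gauss(0.0, 1.0) for _ in range(n_players)]
    sxy = sxx = 0.0
    for _ in range(n_tournaments):
        m = rng.choice(urn_sizes)
        # Sample entrants without replacement; the order is random,
        # so consecutive triples are randomly formed groups.
        entrants = rng.sample(ability, m)
        own, peer = [], []
        for start in range(0, m, 3):
            group = entrants[start:start + 3]
            total = sum(group)
            for a in group:
                own.append(a)
                peer.append((total - a) / 2.0)  # mean of the two partners
        own_mean = sum(own) / m
        peer_mean = sum(peer) / m
        for y, x in zip(own, peer):
            sxy += (x - peer_mean) * (y - own_mean)
            sxx += (x - peer_mean) ** 2
    return sxy / sxx

rng = random.Random(0)
small = [naive_pi2(55, [39, 42, 45, 48, 51], 100, rng) for _ in range(200)]
large = [naive_pi2(550, [444, 447, 450, 453, 456], 100, rng) for _ in range(30)]
print("small urn mean slope:", sum(small) / len(small))
print("large urn mean slope:", sum(large) / len(large))
```

In this sketch the small-urn slopes should average clearly below zero while the large-urn slopes sit much closer to zero, which is the pattern described in the text.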

B. A proposed solution

To our knowledge, this point has not been made clearly in the literature.26 We propose a simple correction to equation (1) that will produce a well-behaved test of random assignment of peers, even with small urn sizes. Since the bias stems from the fact that each individual’s peers are drawn from a population with a different mean ability, we simply control for that mean. Specifically, we add to equation (1) the mean ability of all individuals in the urn, excluding individual i. The modified estimating equation is thus

$$\text{Ability}_{ikt} = \pi_1 + \pi_2\,\overline{\text{Ability}}_{-i,kt} + \phi\,\overline{\text{Ability}}_{-i,tc} + \delta_{tc} + \varepsilon_{ikt} \qquad (2)$$

where $\overline{\text{Ability}}_{-i,tc}$ is the mean ability of all players in the same category × tournament cell as player i, other than player i himself (i.e. all individuals that are eligible to be matched with individual i), and ϕ is a parameter to be estimated. It should be noted that separately identifying π2 and ϕ requires variation in the set of players in player i’s urn.27

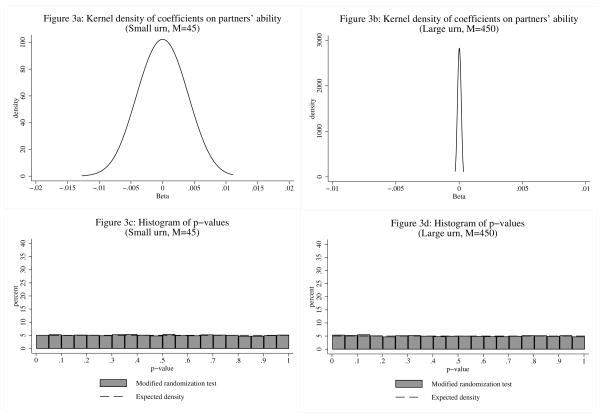

Figure 3 shows the results from Monte Carlo simulations analogous to those reported above. The difference here is that instead of estimating the typical OLS regression, we include the mean ability of all other players in individual i’s urn as an additional regressor. As can be seen clearly in the figure, the addition of this control makes the OLS test of randomization well-behaved regardless of whether average urn size is large or small. In both cases, the estimated correlation of peers’ ability centers around zero, the test rejects at the 5-percent level approximately 5 percent of the time, and p-values are approximately uniformly distributed between 0 and 1. In results not reported here we have also confirmed that the test can detect deviations from random assignment. Going forward, we therefore include this leave-one-out urn mean as a control in tests for random assignment.

Figure 3.

Monte Carlo of Modified Randomization Tests

Note: This graph displays the results of a Monte Carlo study to show that our proposed “correction” of the biased randomization test described in Figure 2 (and the accompanying text) produces a well-behaved randomization test regardless of the size of the urn. There are two sets of results: (1) the “Small urn” setting (where three-person pairings are randomly drawn from an urn of size 45) and (2) the “Large urn” setting (where three-person pairings are randomly drawn from an urn of size 450). In both settings the randomization test is very well behaved (p-values from a test of whether beta is statistically significantly different from zero are uniformly distributed). Monte Carlo results are based on 10,000 repetitions.
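The corrected test can be sketched in the same spirit. The code below is an illustration under assumed settings, not the simulation behind Figure 3: abilities are standard normal, urn sizes are drawn from the hypothetical set {30, 45, 60} so that the two coefficients are separately identified, and urn fixed effects are imposed by within-urn demeaning. It returns the average estimate of π2 with and without the leave-me-out urn mean.

```python
import random
import statistics


def simulate_urns(urn_sizes, rng, group_size=3):
    """Demeaned (own, partner_mean, leave_out_urn_mean) rows under random assignment."""
    rows = []
    for n in urn_sizes:
        ability = [rng.gauss(0.0, 1.0) for _ in range(n)]
        rng.shuffle(ability)
        urn_total = sum(ability)
        urn = []
        for start in range(0, n, group_size):
            group = ability[start:start + group_size]
            g_total = sum(group)
            for a in group:
                partner_mean = (g_total - a) / (group_size - 1)
                leave_out = (urn_total - a) / (n - 1)
                urn.append((a, partner_mean, leave_out))
        # Urn fixed effects via within-urn demeaning of every variable.
        means = [statistics.fmean(col) for col in zip(*urn)]
        rows.extend(tuple(v - m for v, m in zip(r, means)) for r in urn)
    return rows


def pi2_estimates(rows):
    """(typical, corrected) estimates of the coefficient on partners' mean ability."""
    s_yx = sum(y * x for y, x, _ in rows)
    s_yz = sum(y * z for y, _, z in rows)
    s_xx = sum(x * x for _, x, _ in rows)
    s_zz = sum(z * z for _, _, z in rows)
    s_xz = sum(x * z for _, x, z in rows)
    typical = s_yx / s_xx
    # Two-regressor OLS: solve the 2x2 normal equations for the coefficient on x.
    corrected = (s_zz * s_yx - s_xz * s_yz) / (s_xx * s_zz - s_xz ** 2)
    return typical, corrected


def monte_carlo(reps=200, seed=1):
    """Average typical and corrected estimates across repetitions."""
    rng = random.Random(seed)
    sizes = [30, 45, 60] * 10  # urn sizes must vary to identify both coefficients
    draws = [pi2_estimates(simulate_urns(sizes, rng)) for _ in range(reps)]
    return (statistics.fmean([d[0] for d in draws]),
            statistics.fmean([d[1] for d in draws]))


if __name__ == "__main__":
    typical, corrected = monte_carlo()
    print("typical test:  ", round(typical, 4))
    print("corrected test:", round(corrected, 4))
```

If every urn had the same size, the demeaned leave-me-out mean would be an exact multiple of own ability and the second regressor would fit the dependent variable perfectly, which is why the sketch varies urn size.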

An alternative to the regression control approach that we propose is to compare the estimated π2 to a distribution generated by randomly assigning golfers to counterfactual peer groups and estimating π2.28 In our case we would repeatedly assign the golfers in our data to counterfactual groups of three according to the conditional random mechanism assumed by the null hypothesis. For each set of peer group assignments, we would estimate π2 according to the typical OLS randomization test described in section 4.1, repeating the process some large number of times. The π̂2 that we estimate from the real peer group assignments in our data could then be compared to the distribution of π̂2 generated from this process. As we describe below, our estimate of π̂2 from such an exercise lies very close to the median of the randomly generated distribution of π̂2, yielding the same conclusion as our corrected randomization test.
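The re-randomization approach can be sketched as follows. This is an illustrative implementation on simulated data, with hypothetical function names; it reports where the observed slope falls in the distribution obtained by reshuffling players within urns. A rank near 0.5 corresponds to an estimate near the median of the null distribution, while a rank near 0 or 1 signals sorting.

```python
import random


def make_groups(abilities, group_size=3):
    """Split a list of abilities into consecutive groups of `group_size`."""
    return [abilities[i:i + group_size] for i in range(0, len(abilities), group_size)]


def typical_slope(urns):
    """Slope of own ability on partners' mean ability with urn fixed effects.

    `urns` is a list of urns; each urn is a list of groups of abilities.
    """
    s_xy = s_xx = 0.0
    for groups in urns:
        pairs = []
        for g in groups:
            total = sum(g)
            pairs.extend((a, (total - a) / (len(g) - 1)) for a in g)
        y_bar = sum(p[0] for p in pairs) / len(pairs)
        x_bar = sum(p[1] for p in pairs) / len(pairs)
        s_xy += sum((y - y_bar) * (x - x_bar) for y, x in pairs)
        s_xx += sum((x - x_bar) ** 2 for _, x in pairs)
    return s_xy / s_xx


def rerandomize(urns, rng):
    """Reassign players to groups at random within each urn (the null hypothesis)."""
    new_urns = []
    for groups in urns:
        players = [a for g in groups for a in g]
        rng.shuffle(players)
        new_urns.append(make_groups(players, len(groups[0])))
    return new_urns


def null_rank(urns, reps=500, seed=0):
    """Share of re-randomized slopes that are at or below the observed slope."""
    rng = random.Random(seed)
    observed = typical_slope(urns)
    draws = [typical_slope(rerandomize(urns, rng)) for _ in range(reps)]
    return sum(d <= observed for d in draws) / reps


if __name__ == "__main__":
    rng = random.Random(42)
    abilities = [[rng.gauss(0, 1) for _ in range(45)] for _ in range(20)]
    random_urns = [make_groups(a) for a in abilities]
    sorted_urns = [make_groups(sorted(a)) for a in abilities]  # assortative matching
    print("rank under random assignment:", null_rank(random_urns))
    print("rank under sorted assignment:", null_rank(sorted_urns))
```

Because the comparison distribution is generated with the same biased estimator, the small-urn bias affects the observed and counterfactual slopes alike and does not distort the comparison.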

C. Summary Statistics

Before turning to a test of randomization in our sample we first present descriptive statistics, which can be found in Table 1. It is important to understand the units of our primary variables of interest. Score is a variable that represents the number of strokes the player took, and is the actual golf score the player achieved in a given tournament-round. Ability is in the same units as score (i.e. golf strokes). Usually the player’s score is measured relative to the par on the course, which is typically 72 strokes. Ability, while in the same units as score, is typically expressed as deviation of score from par. Throughout the results section, it is helpful to keep in mind that lower scores in golf indicate better performance (and, analogously, a lower Ability measure indicates a higher ability player).

Table 1.

Descriptive Statistics

| Variable | Obs. | Mean | Std. Dev. | 10th pctile | 25th pctile | 50th pctile | 75th pctile | 90th pctile |
|---|---|---|---|---|---|---|---|---|
| Score | 17492 | 71.158 | 3.186 | 67 | 69 | 71 | 73 | 75 |
| Avg(Score), partners | 17492 | 71.158 | 2.606 | 68 | 69.5 | 71 | 73 | 74.5 |
| Ability (Handicap) | 17492 | −2.86 | 0.92 | −3.81 | −3.40 | −2.93 | −2.45 | −1.84 |
| Avg(Ability), partners | 17492 | −2.86 | 0.78 | −3.66 | −3.32 | −2.94 | −2.52 | −2.04 |
| Driving distance (yards) | 17182 | 281.00 | 9.85 | 269.10 | 274.01 | 280.10 | 288.00 | 293.30 |
| Putts per round | 17182 | 28.67 | 1.03 | 27.33 | 28.03 | 28.83 | 29.34 | 29.72 |
| Greens per round | 17182 | 11.60 | 0.65 | 10.90 | 11.33 | 11.66 | 11.97 | 12.32 |
| Years of Experience | 17492 | 11.80 | 6.69 | 3 | 6 | 11 | 18 | 21 |
| Tiger Woods in group | 17492 | 0.00 | 0.06 | 0 | 0 | 0 | 0 | 0 |
| Category 1 players | | | | | | | | |
| Ability | 6377 | −3.14 | 0.77 | −4.10 | −3.62 | −3.20 | −2.65 | −2.17 |
| Avg(Ability), partners | 6377 | −3.14 | 0.60 | −3.86 | −3.53 | −3.15 | −2.77 | −2.41 |
| Category 1A players | | | | | | | | |
| Ability | 6818 | −2.81 | 0.73 | −3.67 | −3.23 | −2.87 | −2.43 | −2.03 |
| Avg(Ability), partners | 6818 | −2.81 | 0.58 | −3.46 | −3.18 | −2.86 | −2.50 | −2.18 |
| Category 2 players | | | | | | | | |
| Ability | 2998 | −2.86 | 0.90 | −3.73 | −3.36 | −3.02 | −2.51 | −1.87 |
| Avg(Ability), partners | 2998 | −2.85 | 0.75 | −3.59 | −3.29 | −2.95 | −2.55 | −2.08 |
| Category 3 players | | | | | | | | |
| Ability | 1299 | −1.68 | 1.45 | −3.13 | −2.50 | −1.65 | −1.13 | −0.29 |
| Avg(Ability), partners | 1299 | −1.71 | 1.25 | −3.12 | −2.50 | −1.79 | −1.13 | −0.35 |

Notes:

See Appendix A for more information on player categories.

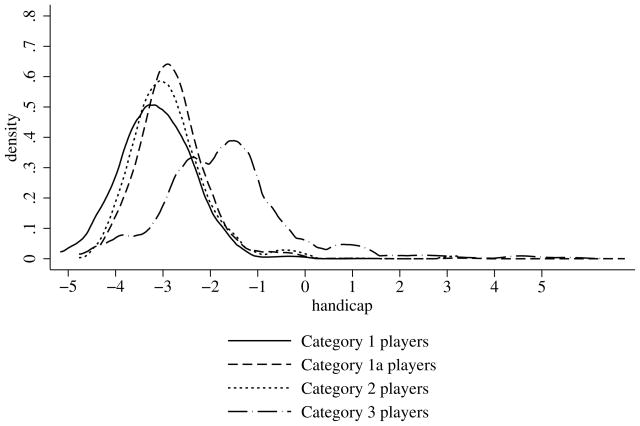

Figure 4 shows the distribution of player handicaps by category. Two things should be noted from the figure. First, there is a reasonable amount of variation in ability even among professional golfers. Pooling all categories, the difference between the 90th percentile and 10th percentile in adjusted average score (our baseline measure of ability) is 1.97 strokes. Second, and perhaps more importantly given the stratification by category prior to random assignment, much of the variance in ability remains after separating by category. As can be seen clearly in Figure 4, the average handicap rises (that is, average ability declines) from category 1 to category 3. However, there is a great deal of overlap in the distributions. The 90-10 differences in measured ability in categories 1, 1A, 2, and 3 are 1.93, 1.64, 1.86, and 2.84, respectively. These differences represent wide ranges in ability. They are differences in average scores per round, and tournaments are typically four rounds long. Using our data on earnings, a reduction in handicap of 1.97 translates into an increase in expected tournament earnings of 87 percent.29 This suggests that differences in strokes on this order of magnitude should be quite salient to players.

Figure 4.

Kernel density of player handicaps, by category

Note: This graph displays the density of handicaps (our primary measure of player ability) for each player category.
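The 90-10 differences quoted in the text follow directly from the percentile columns of Table 1. The short check below simply recomputes them from the 10th and 90th percentiles of the Ability rows; the dictionary name and labels are illustrative.

```python
# 10th and 90th percentiles of the Ability (handicap) rows in Table 1.
percentiles = {
    "All players": (-3.81, -1.84),
    "Category 1": (-4.10, -2.17),
    "Category 1A": (-3.67, -2.03),
    "Category 2": (-3.73, -1.87),
    "Category 3": (-3.13, -0.29),
}

# 90-10 spread in strokes per round, rounded to two decimals as in the text.
spread_90_10 = {
    name: round(p90 - p10, 2) for name, (p10, p90) in percentiles.items()
}

for name, spread in spread_90_10.items():
    print(f"{name}: {spread:.2f} strokes per round")
```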

D. Verifying Random Assignment of Peers in Professional Golf Tournaments

Using our various measures of ability, we now test the claim that assignment to playing groups is random within a tournament-by-category cell.30 In this section, we report results from estimating variations of equation (2), with various measures of pre-determined ability. As discussed earlier, the correct randomization test includes the full set of tournament-by-category fixed effects along with the leave-me-out urn mean as a control. Table 2 reports these results. In column (1) we present results from this “correct” randomization test. The coefficient on partners’ average ability is −0.017 and insignificant. The small insignificant conditional correlation between own ability and partners’ ability is consistent with players being randomly assigned to playing partners.31

Table 2.

Testing for Random Assignment

| | (1) | (2) | (3) | (4) |
|---|---|---|---|---|
| Avg(Ability), partners | −0.017 (0.015) | 0.158 (0.021) | 0.089 (0.020) | −0.087 (0.022) |
| Leave-me-out Avg(Ability), urn | −10.803 (1.629) | | | |
| Tournament F.E. | Y | Y | N | Y |
| Category F.E. | Y | N | Y | Y |
| Tournament × Category F.E. | Y | N | N | Y |
| Leave-me-out urn mean included | Y | N | N | N |
| R² | 0.542 | 0.124 | 0.162 | 0.254 |
| N | 8801 | 8801 | 8801 | 8801 |

Notes:

Results from estimating equation (2).

Avg(Ability) is the average handicap of a player’s playing partners, as described in the text.

Column (1) includes the average of all of the other players in your tournament-by-category urn (not including yourself). This control is necessary to produce a well-behaved randomization test (see text for more details).

Standard errors are in parentheses and are clustered by playing group.

All regressions are unweighted (unlike the specifications in the other tables), since the weighting is not appropriate for the randomization test.

In the remaining columns of Table 2, we present results from “incorrect” randomization tests to illustrate the importance of controlling for the leave-me-out urn mean and to show that tests of this form have the power to detect deviations from random assignment. In column (4), we present estimates of the randomization test that is most typical in the literature. It includes the full set of tournament-by-category fixed effects but excludes the bias correction described in section 4.1 (the leave-me-out urn mean). The correlation between own and partners’ ability is negative and significant (−0.087 with a standard error of 0.022). Ignoring the bias discussed in section 4.1 would lead to the erroneous conclusion that peers were negatively assortatively matched; the test results would be misread as evidence of non-random assignment. In columns (2) and (3) we present estimates that drop the controls for category and tournament fixed effects, respectively. Failing to account for the conditional nature of random assignment generates an inference of positively matched peer groups. The positive and significant estimates from these specifications show that the test has sufficient power to detect deviations from random assignment in a setting where we know assignment is not random.

We also test for random assignment using various disaggregated measures of ability (e.g. driving distance, putts per round, greens in regulation per round, and years of experience).32 We report estimates of equation (2), replacing average adjusted score with these alternative measures of ability, in columns (2) through (6) of Table 3. Panel A reports the correct tests (i.e. those that include the leave-me-out urn mean control, calculated for the respective measure of ability), while Panel B reports results from the typical test excluding the correction term. In specifications with the correction control, the correlations between own and partners’ values of all other predetermined measures are small in magnitude and statistically insignificant. Just as with the measure of overall ability, all specifications that exclude the correction term yield estimated correlations that are negative and significant.33

Table 3.

Robustness Tests of Random Assignment

Panel A: Modified Randomization Test

| Dependent variable, X = | Ability (1) | Driving Distance (2) | Putts per Round (3) | Greens per Round (4) | Years of Experience (5) | Length of Name (6) |
|---|---|---|---|---|---|---|
| Avg(X), playing partners | −0.017 (0.015) | 0.002 (0.015) | −0.002 (0.012) | −0.004 (0.014) | −0.010 (0.012) | −0.013 (0.014) |
| Leave-me-out Avg(X), urn | −10.803 (1.629) | −10.189 (1.210) | −12.529 (1.653) | −12.033 (2.665) | −15.793 (3.795) | −14.587 (1.364) |
| Tournament × Category F.E. | Y | Y | Y | Y | Y | Y |
| Leave-me-out urn mean included | Y | Y | Y | Y | Y | Y |
| R² | 0.542 | 0.632 | 0.579 | 0.580 | 0.593 | 0.515 |
| N | 8801 | 8646 | 8646 | 8646 | 8646 | 8646 |

Panel B: Biased Randomization Test

| Dependent variable, X = | Ability (1) | Driving Distance (2) | Putts per Round (3) | Greens per Round (4) | Years of Experience (5) | Length of Name (6) |
|---|---|---|---|---|---|---|
| Avg(X), playing partners | −0.087 (0.022) | −0.081 (0.021) | −0.071 (0.022) | −0.080 (0.023) | −0.050 (0.020) | −0.063 (0.020) |
| Tournament × Category F.E. | Y | Y | Y | Y | Y | Y |
| Leave-me-out urn mean included | N | N | N | N | N | N |
| R² | 0.254 | 0.382 | 0.163 | 0.251 | 0.167 | 0.035 |
| N | 8801 | 8646 | 8646 | 8646 | 8646 | 8646 |

Notes:

Results from estimating equation (2).

Avg(Ability) is the average handicap of a player’s playing partners, as described in the text.

All specifications in Panel A include the average of all of the other players in your tournament-by-category urn (not including yourself). This control is necessary to produce a well-behaved randomization test.

Standard errors are in parentheses and are clustered by playing group.

All regressions are unweighted (unlike the specifications in the other tables), since the weighting is not appropriate for the randomization test.

IV. Empirical Framework

Having established that peers are randomly assigned, we now turn to the estimation of peer effects. We estimate peer effects using a simple linear model where own score depends on own ability and playing partners’ ability. The key identifying assumption, which was tested in the previous section, is that, conditional on tournament and category, players are randomly assigned to groups. Our baseline specification is

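$$
Score_{iktr} = \alpha_1 + \beta_1 Ability_i + \gamma_1 \overline{Ability}_{-i,kt} + \phi_1 \overline{Ability}_{-i,ct} + \delta_{tc} + e_{iktr} \qquad (3)
$$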

where i indexes players, k indexes groups, t indexes tournaments, r indexes each of the first two rounds of a tournament, c indexes categories, δtc is a full set of tournament-by-category fixed effects to be estimated, α1, β1, γ1, and ϕ1 are parameters, and e is an error term.34 The parameter γ1 measures the effect of the average ability of playing partners on own score, and is our primary measure of peer effects. Its magnitude is generally evaluated in relation to the magnitude of β1, which is the effect of own ability on own score. Since playing partners are randomly assigned, the coefficients in equation (3) can be estimated consistently using OLS.35

Even with the handicap correction described above, a remaining potential problem with estimating equation (3) is that ability might be measured with error. This should be a concern for all studies of peer effects that estimate exogenous effects. A nice feature of the specification above is that it contains a simple correction for measurement error. If each golfer had a single peer and each golfer’s individual measure of ability contained the same amount of measurement error, then the estimates of β̂1 and γ̂1 would be equally attenuated. In this case, the ratio γ̂1/β̂1 would give us a measurement-error-corrected estimate of the reduced-form exogenous peer effect. In addition to reporting this ratio, we also report measurement-error-corrected estimates following Wayne A. Fuller and Michael A. Hidiroglou (1978) and David Card and Thomas Lemieux (1996). This estimator corrects for attenuation bias of a known (and estimated) form and is described in more detail in Appendix B. The advantage of this estimator is that it allows for the degree of measurement error to vary by player and leverages the structure imposed on the measurement error from the fact that the regressor of interest is an average of two error-ridden measures of ability.
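The equal-attenuation argument can be illustrated with a small simulation. The sketch below is not the estimator from Appendix B; it assumes the stylized case described in the text (one randomly assigned peer per golfer, classical measurement error of equal variance for everyone) and uses made-up parameter values (β = 1, γ = 0.2, error variance 0.5). Both coefficients shrink by roughly the same factor, so their ratio stays close to the true γ/β.

```python
import random


def ols_two_regressors(y, x1, x2):
    """OLS of y on an intercept, x1 and x2; returns the two slope coefficients."""
    n = len(y)
    my, m1, m2 = sum(y) / n, sum(x1) / n, sum(x2) / n
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


def simulate(beta=1.0, gamma=0.2, noise_var=0.5, n_pairs=20000, seed=0):
    """Each golfer has one random peer; ability is observed with classical error."""
    rng = random.Random(seed)
    score, own_obs, peer_obs = [], [], []
    noise_sd = noise_var ** 0.5
    for _ in range(n_pairs):
        true = [rng.gauss(0, 1), rng.gauss(0, 1)]
        obs = [t + rng.gauss(0, noise_sd) for t in true]
        for i in (0, 1):
            j = 1 - i
            # Scores depend on TRUE ability; the regression only sees noisy measures.
            score.append(beta * true[i] + gamma * true[j] + rng.gauss(0, 0.5))
            own_obs.append(obs[i])
            peer_obs.append(obs[j])
    return ols_two_regressors(score, own_obs, peer_obs)


if __name__ == "__main__":
    beta_hat, gamma_hat = simulate()
    print("beta_hat: ", round(beta_hat, 3))
    print("gamma_hat:", round(gamma_hat, 3))
    print("ratio:    ", round(gamma_hat / beta_hat, 3))
```

When the peer measure is an average over two partners, as in the actual data, the two coefficients are no longer attenuated equally, which is one motivation for the estimator in Appendix B.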

A key advantage to estimating the reduced-form specification in (3) is that the average ability of playing partners is a pre-determined characteristic. Thus, our estimate of γ1 is unlikely to be biased due to the presence of common unobserved shocks.36 An alternative commonly estimated specification replaces peers’ ability with peers’ score. This outcome-on-outcome specification estimates a combination of endogenous and contextual effects, but intuitively examines how performance relates to the contemporaneous performance of peers, rather than just to peers’ predetermined skills. Even with random assignment, one cannot rule out that a positive relationship between own score and peers’ score is driven by shocks commonly experienced by individuals within a peer group. We nevertheless run regressions of the following form to get an upper bound on the magnitude of peer effects:

$$
Score_{iktr} = \alpha_2 + \beta_2 Ability_i + \gamma_2 \overline{Score}_{-i,ktr} + \phi_2 \overline{Ability}_{-i,ct} + \delta_{tc} + u_{iktr} \qquad (4)
$$

where $\overline{Score}_{-i,ktr}$ is the average score in the current round of player i’s playing partners. Because common shocks are expected to cause positive correlation in outcomes, the estimate of γ2 should be viewed as an upper bound on the extent of peer effects. Since we can observe playing groups playing at the same time nearby on the course, we are able to gauge the magnitude of the bias created by common shocks. We report estimates that take advantage of this feature of the research design in the following section.

V. Results

A. Visual Evidence

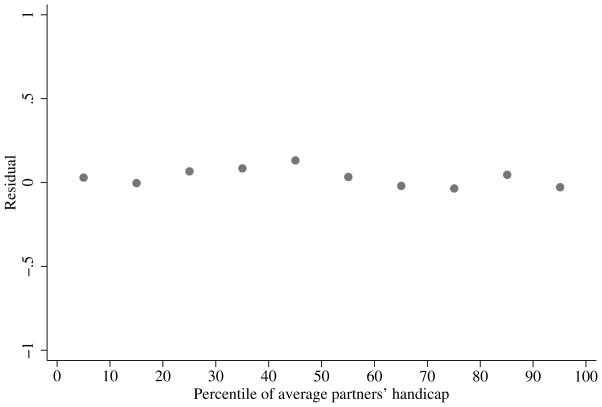

To get a sense of the importance of peer effects, we first plot regression-adjusted scores against playing partners’ handicap. To do this, we first regress each player’s score on tournament and category fixed effects and their interactions and the ‘leave-me-out’ mean of the tournament-by-category urn. Then we take the residuals from this regression, compute means by each decile of the partners’ ability distribution, and graph the average residual against each decile bin. Figure 5 reports this graph for the full sample, which shows zero correlation between own score and the ability of randomly assigned playing partners. Those who were randomly assigned to partners with higher average scores scored no differently than those who were assigned to partners with low average scores. There also does not appear to be evidence of non-linear peer effects. We take this to be a first piece of evidence that peer effects among professional golfers are economically insignificant. To place a confidence interval around this estimate, we next estimate the linear regression model in equation (3).

Figure 5.

Estimates of Peer Effects by Decile of Partners’ Ability

Note: This figure displays the average of a residual by decile of playing partners’ handicap. The residual is from a regression of own score on own handicap and a full set of player category by tournament fixed effects. If these points suggested a positive slope, we would interpret that as evidence of positive peer effects.
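The binning procedure behind a plot of this kind can be sketched in a few lines. The example below follows the figure note (residualizing own score on own handicap and cell fixed effects) and runs on simulated data with no peer effect; the data-generating values and function names are assumptions made purely for illustration.

```python
import random
import statistics


def residualize(score, ability, cell):
    """Residuals from regressing score on own ability and cell fixed effects."""
    def demean(values):
        by_cell = {}
        for v, c in zip(values, cell):
            by_cell.setdefault(c, []).append(v)
        centre = {c: statistics.fmean(vs) for c, vs in by_cell.items()}
        return [v - centre[c] for v, c in zip(values, cell)]

    y, x = demean(score), demean(ability)
    slope = sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)
    return [b - slope * a for a, b in zip(x, y)]


def decile_means(residuals, partner_ability):
    """Average residual within each decile of partners' ability (lowest first)."""
    order = sorted(range(len(residuals)), key=lambda i: partner_ability[i])
    n = len(order)
    means = []
    for d in range(10):
        idx = order[d * n // 10:(d + 1) * n // 10]
        means.append(statistics.fmean([residuals[i] for i in idx]))
    return means


def simulate(n_cells=40, cell_size=45, seed=0):
    """Simulated scores that depend on own ability only (no peer effect)."""
    rng = random.Random(seed)
    score, ability, partner, cell = [], [], [], []
    for c in range(n_cells):
        a = [rng.gauss(-2.9, 0.9) for _ in range(cell_size)]
        for start in range(0, cell_size, 3):
            g = a[start:start + 3]
            for own in g:
                ability.append(own)
                partner.append((sum(g) - own) / 2)
                cell.append(c)
                score.append(71 + 0.7 * (own + 2.9) + rng.gauss(0, 3))
    return score, ability, partner, cell


if __name__ == "__main__":
    score, ability, partner, cell = simulate()
    for d, m in enumerate(decile_means(residualize(score, ability, cell), partner), 1):
        print(f"decile {d:2d}: {m:+.3f}")
```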

B. Regression Estimates of the Effect of Playing Partners’ Ability on Own Score

The results of estimating equation (3) are shown in the first column of Table 4. Since our measure of ability is an average of varying numbers of prior adjusted scores, we weight all regressions by the number of past performance observations used to compute Abilityi. As shown in column (1), the coefficient on own ability is strongly statistically significant and large in magnitude, as expected. A one-stroke increase in a player’s average score in past rounds is associated with an increase in that player’s score of 0.673 strokes. That this coefficient is not equal to 1 suggests there is some measurement error in our measure of ability, but as a conditional reliability ratio this is reasonably large in magnitude. If we think of β̂1 this way, then as we described above γ̂1/β̂1 is a measurement-error corrected estimate of the effect of partners’ ability on own score.

Table 4.

The Effect of Peers’ Ability on Own Score

| | (1) | (2) | (3) |
|---|---|---|---|
| Own Ability | 0.673 (0.039) | 0.950 (0.057) | |
| Avg(Ability), partners | −0.035 (0.040) | −0.035 (0.063) | −0.032 (0.040) |
| Tournament × Category Fixed Effects | Y | Y | Y |
| Measurement Error Correction | N | Y | N |
| Player Fixed Effects | N | N | Y |
| N | 17492 | 17492 | 17492 |

Notes:

Results in column (1) are from the baseline specification in equation (3). Column (2) reports a measurement-error-corrected estimate using the estimator described in Appendix B. Column (3) reports results using player fixed effects instead of own ability.

The dependent variable is the golf score for the round.

The Ability variable is measured using the player’s handicap.

Standard errors are in parentheses and are clustered by playing group.

All regressions weight each observation by the inverse of the sample variance of estimated ability of each player.

The estimate of γ1, the effect of playing partners’ ability on own score, is not statistically significant, and the point estimate is actually negative. The insignificant point estimate suggests that improving the average ability of one’s playing partners by one stroke actually increases (i.e. worsens) one’s score by 0.035 strokes. Our estimates make it possible to rule out positive peer effects larger than 0.043 strokes for an increase in average ability of one stroke. One stroke is 28 percent greater than one standard deviation in partners’ average ability (0.78). If we divide the upper bound of the 95-percent confidence interval by the estimate of β̂1 to correct for measurement error, we can still rule out that a one-stroke increase in partners’ average ability increases own score by more than 0.065 strokes. The results from our baseline specification therefore suggest that there are not significant peer effects overall.
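The bounds quoted in this paragraph can be reproduced from the rounded figures in Tables 1 and 4. The snippet below assumes a normal critical value of 1.96. With the rounded table inputs the rescaled bound comes out at about 0.064, within rounding of the 0.065 quoted in the text, which was presumably computed from unrounded estimates.

```python
# Point estimates and standard errors as reported in column (1) of Table 4.
gamma_hat, gamma_se = -0.035, 0.040  # partners' average ability
beta_hat = 0.673                     # own ability
sd_partner_ability = 0.78            # Table 1, Avg(Ability), partners

z = 1.96  # two-sided 95-percent normal critical value (assumed)

upper_bound = gamma_hat + z * gamma_se    # largest positive effect not rejected
corrected_upper = upper_bound / beta_hat  # rescaled by the own-ability coefficient
stroke_in_sd = 1.0 / sd_partner_ability   # one stroke in standard-deviation units

print(f"upper bound of 95% CI: {upper_bound:.3f}")
print(f"rescaled upper bound:  {corrected_upper:.3f}")
print(f"one stroke = {stroke_in_sd:.2f} SD of partners' average ability")
```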

We address measurement error more formally in column (2), which reports results using the measurement-error-corrected estimator described in more detail in Appendix B. The coefficient on own ability increases from 0.673 to 0.950 and it is no longer statistically significantly different from 1, suggesting that much of the measurement error has been eliminated. The coefficient on partners’ average ability remains essentially unchanged (from −0.035 to −0.036), and the standard error on the peer effect coefficient increases (from 0.040 to 0.063), which results in a slightly larger upper bound of the 95-percent confidence interval of 0.087. Interestingly, the point estimates suggest measurement error affects the coefficient on own ability more than the coefficient on the average ability of playing partners, which is consistent with peers’ ability being an average of two values.

Column (3) verifies that the results are insensitive to controlling for player fixed effects instead of player ability. Going forward, we report results from the specification that includes own ability rather than player fixed effects for ease of interpretation.

C. The Effect of Different Dimensions of Ability: Does the Overall Effect Hide Evidence of Learning or Motivation?

As described earlier, we collected data on various dimensions of player skill. We hypothesize that players might learn about wind conditions or optimal strategies from more accurate players (i.e. those who take the fewest shots to get the ball on the green), or from better putters. In contrast, we assume that players cannot learn how to hit longer drives by playing alongside longer hitters, and therefore that any effect of playing alongside a longer driver must operate through increased motivation.37 If these assumptions are correct, specifications comparable to (3) but replacing partners’ average ability with partners’ average driving distance, putts per round, or greens reached in regulation can separately identify motivation and learning effects. An effect of the accuracy measures (putts per round and greens reached in regulation) would be interpreted as evidence of learning from peers, while an effect of partners’ driving distance would be interpreted as evidence of motivation by peers.38

The results are presented in columns (2)–(5) of Table 5 (column (1) reproduces baseline results with average ability). In column (2), we present results using average driving distance. While the coefficient on own driving distance is negative and strongly statistically significant (longer drives enable a player to achieve a lower score), the point estimate on partners’ driving distance is small and statistically insignificant. The results for putts per round, shown in column (3), are similar. Own putting skill has a large and strongly significant effect on own score, but golfers do not appear to shoot lower scores when they play with better putters. The results for shot accuracy, shown in column (4), similarly show strong effects of own accuracy, but no effect of partners’ accuracy on a golfer’s performance.39 Finally we present a specification that jointly estimates the effects of all three measures of ability in column (5). A golfer’s putting accuracy and number of greens hit in regulation have the most significant effects on his score,40 but as in the separately estimated specifications, no dimension of his partners’ ability appears to have any effect on score.

Table 5.

Peer Effects with Alternate Measures of Player Ability

| | (1) | (2) | (3) | (4) | (5) |
|---|---|---|---|---|---|
| Own Ability | 0.673 (0.039) | | | | |
| Avg(Ability), playing partners | −0.035 (0.040) | | | | |
| Driving Distance | | −0.022 (0.004) | | | −0.009 (0.004) |
| Avg(Driving), playing partners | | 0.003 (0.004) | | | 0.003 (0.004) |
| Putts | | | 0.137 (0.030) | | 0.172 (0.030) |
| Avg(Putts), playing partners | | | −0.039 (0.039) | | −0.045 (0.039) |
| Greens per round | | | | −0.687 (0.049) | −0.683 (0.051) |
| Avg(Greens), playing partners | | | | −0.022 (0.059) | −0.024 (0.060) |
| Tournament × Category Fixed Effects | Y | Y | Y | Y | Y |
| R² | 0.152 | 0.139 | 0.138 | 0.147 | 0.150 |
| N | 17492 | 17182 | 17182 | 17182 | 17182 |

Notes:

Results from baseline specifications as specified in equation (3).

The dependent variable is the golf score for the round.

The Ability variable is measured using the player’s handicap.

Standard errors are in parentheses and are clustered by playing group.

All regressions weight each observation by the inverse of the sample variance of estimated ability of each player.

Another form learning might take is that the effect of playing alongside a better golfer would manifest as time passes. There does not appear to be any evidence of such a pattern in our data. In results not presented here, we find no differential effect of playing partners’ ability in the second nine holes as compared with the first nine holes of the round, no differential effect on the second day played with the same partners, and no carryover effect of partners’ ability one, two or three tournaments (i.e. weeks) later.

D. Using Alternative Measures of Peer Ability

Having seen no evidence that the average ability of peers affects individual performance, we next ask whether the linear-in-means specification obscures peer effects in a different way. It is possible that it is not the mean ability of co-workers that matters, but rather the minimum or maximum ability of co-workers. Possibly playing with bad players matters, but playing with good players does not. Or, maybe playing alongside one very good player or one very bad player affects performance. In each of these cases, the mean ability of peers would not measure the relevant peer environment accurately. Motivated by these possibilities, in Table 6 we present estimates of specification (3) where partners’ average ability is replaced with alternative measures of peers’ ability. We report the baseline specification in column (1) for comparison. In column (2) we replace the average ability with the maximum ability of the player’s peers. The point estimate is slightly smaller in magnitude, but virtually unchanged. In column (3), we show that the estimated effect of the minimum of peers’ ability is again negative and insignificant, and virtually the same as the average ability effect.

Table 6.

Peer Effects with Alternative Measures of Peer Ability

| | (1) | (2) | (3) | (4) | (5) |
|---|---|---|---|---|---|
| Measure of Peer Ability: | Average Ability | Max Ability | Min Ability | Tiger Woods is partner | Avg. Ability × (Avg. Ability − Own Ability) |
| Own Ability | 0.673 (0.039) | 0.673 (0.039) | 0.673 (0.039) | 0.675 (0.039) | 0.673 (0.039) |
| Peer Ability | −0.035 (0.040) | −0.021 (0.032) | −0.032 (0.040) | −0.346 (0.464) | −0.016 (0.014) |
| R² | 0.152 | 0.152 | 0.152 | 0.152 | 0.152 |
| N | 17492 | 17492 | 17492 | 17492 | 17492 |

| | (6) | (7) | (8) | (9) |
|---|---|---|---|---|
| Measure of Peer Ability: | 1{any partner in top 10%} | 1{any partner in top 25%} | 1{any partner in bot 25%} | 1{any partner in bot 10%} |
| Own Ability | 0.674 (0.039) | 0.672 (0.039) | 0.674 (0.039) | 0.674 (0.039) |
| Peer Ability | 0.027 (0.070) | 0.050 (0.059) | −0.039 (0.069) | −0.175 (0.141) |
| R² | 0.152 | 0.152 | 0.152 | 0.152 |
| N | 17492 | 17492 | 17492 | 17492 |

Notes:

Column (1) is reproduced from Table 4. Other columns present results from modifying the baseline specification in equation (3) to allow for heterogeneous peer effects.

The dependent variable is the golf score for the round.

The Ability variable is measured using the player’s handicap.

Standard errors are in parentheses and are clustered by playing group.

All regressions weight each observation by the inverse of the sample variance of estimated ability of each player.

All regressions include tournament-by-category fixed effects.

In column (5), the average ability of playing partners is also included in the regression; the estimated coefficient for this variable is −0.014 (0.048).

In columns (6) through (9), we investigate whether there appears to be a non-linear effect of partners’ ability. To do this, we include indicators for whether individual i was assigned to a player in the top decile, top quartile, bottom quartile, or bottom decile of the ability distribution in his category. None of the four estimates is statistically significant, though suggestively the point estimates for the top-quantile specifications are positive while those for the bottom-quantile specifications are negative. Recall that lower scores are better, so this pattern would suggest that players play worse when they are matched with much better players. We also ask whether playing with Tiger Woods, the best player of his generation, affects performance. The point estimate in column (4) suggests that being partnered with Tiger Woods reduces golfers’ scores, but the standard errors are large enough that we cannot rule out a zero effect. In our sample, there are only 70 golfer-days paired with Tiger Woods.

The specifications thus far have assumed it is the absolute level of peers’ ability that affects performance. An alternative hypothesis is that relative ability also matters. To investigate this possibility we present a specification which allows the effect of peers’ ability to vary with the difference between peers’ and own ability. The results are reported in column (5) of Table 6, and they suggest that the effect of peers’ ability does not vary with relative ability. Similar specifications based on the other measures of ability also yield small and statistically insignificant results.

Finally, we consider whether there exist peer effects more generally, beyond the skill-based peer effects for which we have tested thus far. In particular, we create a set of J partner dummy variables, equal to one if player i is partnered with player j, where j ranges from 1 to J. We then estimate a model where each player’s score depends on this set of playing partner fixed effects. The F-test of the joint significance of these playing partner fixed effects indicates whether performance systematically varies with the identity of one’s playing partner. This test is more general than those presented thus far because it allows playing partners’ effects to be based on unobservable characteristics. For example, the F-test would reject if a group of mediocre golfers improved the scores of their partners because, say, they were pleasant people. The test would also detect peer effects if there were both performance-enhancing and performance-reducing partners. In our data, however, the F-test that the coefficients on the full set of playing partner dummies are jointly zero fails to reject. Consistent with our previous results, we do not find any evidence that there are heterogeneous peer effects.41

E. Endogenous Effect Regressions and Common Shocks

Table 7 reports results of equation (4), the specification that replaces partners’ ability with partners’ score as the regressor of interest. As described earlier, the coefficient estimate on playing partners’ score overstates the true peer effect if there are unobserved common shocks affecting all players in the group. Nevertheless, this regression is informative as an upper bound on γ. The first column in Table 7 shows, contrary to the results above, that the peer effect is positive and statistically significant: an increase of one stroke in the average score of one’s playing partners is associated with an increase in own score of 0.058 strokes. An important point, however, is that even without accounting for the upward bias in this estimate due to common shocks, the coefficient is still small in magnitude. Mas and Moretti’s (2008) elasticities, evaluated at the mean of our dependent variable (own score), would predict that an increase in average partners’ score of one stroke would raise own score by 0.170 strokes, more than two times what we estimate. We do not correct for measurement error here, since each player’s score, and therefore the average score of peers, is measured without error.42

Table 7.

The Effect of Peers’ Score on Own Score

| | (1) | (2) | (3) | (4) | (5) |
|---|---|---|---|---|---|
| Own Ability | 0.677 (0.039) | 0.676 (0.039) | 0.674 (0.039) | 0.669 (0.039) | 0.667 (0.040) |
| Avg(Score), partners | 0.058 (0.015) | 0.032 (0.015) | 0.022 (0.015) | 0.014 (0.016) | 0.006 (0.016) |
| Tournament × Category F.E. | Y | Y | Y | Y | Y |
| Tournament × Time-of-day F.E. | N | Y | N | N | N |
| Time cubic per tournament | N | N | Y | N | N |
| Time quartic per tournament | N | N | N | Y | N |
| Time quintic per tournament | N | N | N | N | Y |
| R² | 0.154 | 0.168 | 0.173 | 0.178 | 0.182 |
| N | 17492 | 17492 | 17492 | 17492 | 17492 |

Notes:

Results from alternate specifications using average partners’ score instead of average partners’ ability as the primary independent variable of interest.

The dependent variable is the golf score for the round.

The Ability variable is measured using the player’s handicap.

Standard errors are in parentheses and are clustered by playing group.

All regressions weight each observation by the inverse of the sample variance of estimated ability of each player.

To look at the importance of common shocks more systematically, we try several additional controls. We hypothesize that the most likely sources of common shocks are variation in weather and crowd size. Because these shocks also affect playing groups that are close by on the golf course at the same time, we construct controls for common shocks that are based on comparing groups with similar starting times.

In column (2) we interact time-of-day (early morning, mid-morning, afternoon) fixed effects with the full set of tournament fixed effects. This should capture weather shocks and other changes in course conditions that affect all groups that play during the same part of the day (e.g. a common complaint on the PGA Tour is that afternoon groups experience more ‘spike marks’ on the green, which make it more difficult to putt effectively). The point estimate on partners’ score drops by about 50 percent, and is marginally significant. To capture the fact that weather and other common shocks vary more smoothly than the dummy specification assumes, in column (3) we introduce a cubic in start-time which is allowed to vary by tournament. The coefficient on partners’ score is further reduced to 0.019 and is no longer significant at conventional levels. In additional specifications, we include higher-order polynomials in start-time, which allow the effect of weather, and other common shocks that change over the course of a day, to vary more and more flexibly. Moving across the columns, as the order of the polynomial increases from a cubic to a quartic to a quintic, the estimated effect of partners’ score decreases. With the control for a quintic in start-time, the endogenous peer effect coefficient is 0.003 and is insignificantly different from zero. Interestingly, adding controls for start-time does not affect the estimated effect of own ability on own score. It appears that the correlations between own score and partners’ score are driven primarily by common shocks.
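The logic of these controls can be illustrated with a small constructed example. The Python sketch below builds hypothetical two-player groups in which there is no peer effect at all: each score is a baseline plus a shock shared by everyone playing in the same time block plus an idiosyncratic term that is uncorrelated across partners by construction. The raw slope of own score on partner's score is nonetheless positive, and it vanishes once scores are demeaned within time block, a crude analogue of the time-of-day fixed effects in column (2). All names and numbers are illustrative assumptions, not the paper's data.

```python
def ols_slope(x, y):
    """Slope from a bivariate least-squares regression of y on x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def demean_within(values, blocks):
    """Subtract each block's mean from the values in that block."""
    totals, counts = {}, {}
    for v, b in zip(values, blocks):
        totals[b] = totals.get(b, 0.0) + v
        counts[b] = counts.get(b, 0) + 1
    return [v - totals[b] / counts[b] for v, b in zip(values, blocks)]


# Idiosyncratic (own, partner) deviations; uncorrelated by construction.
idiosyncratic = [(1, 1), (1, -1), (-1, 1), (-1, -1)]
# Hypothetical common shock per time block, e.g. afternoon wind adds 2 strokes.
block_shock = {"morning": 0, "afternoon": 2}

own, partner, block = [], [], []
for name, shock in block_shock.items():
    for e_own, e_partner in idiosyncratic:
        own.append(70 + shock + e_own)
        partner.append(70 + shock + e_partner)
        block.append(name)

# Raw regression: picks up the shock shared within each time block.
raw_slope = ols_slope(partner, own)
# Within-block regression: the shared shock is differenced out.
within_slope = ols_slope(demean_within(partner, block), demean_within(own, block))
```

In this toy setting the raw slope is 0.5 and the within-block slope is zero, even though no player influences any other. The polynomial start-time controls in columns (3) to (5) play the same role while allowing the shock to vary smoothly over the day.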

As with any peer effects regression of own outcome on peers’ outcome, it is difficult to interpret the regressions shown in Table 7. They should certainly be regarded as upper bounds for peer effects since any remaining common shocks that are not controlled for should bias the estimates upwards. Furthermore, regressions of outcomes on peers’ outcomes suffer from the ‘reflection problem’ described by Manski (1993). In short, the fact that more and more extensive controls for common shocks reduce the estimate of γ2 but do not appreciably affect the estimate of β2, along with the fact that the estimates of γ1 are consistently zero, leads us to conclude that peer effects are negligible among professional golfers.

F. Do Peer Effects Vary with Player Skill or Player Experience?

One possible explanation for why our results differ so much from those of previous studies is that there may exist heterogeneity in the susceptibility of workers to social influences by co-workers. Professional golfers are elite professionals subject to a selection process, and perhaps the most successful professional golfers are those who are able to avoid these social responses. If heterogeneity in this ability across occupations explains the differences between our results and those in Mas and Moretti (2008), it may also be the case that there is heterogeneity among golfers in the susceptibility to social influences. In Table 8, we present estimates of equation (3) that allow the effect of partners’ ability to vary by the reference player’s skill. This interaction tells us, for example, whether low-skill players respond more to high-skill players than high-skill players do.43 The results are shown in column (2). The positive coefficient on this interaction term implies that lower-skill players respond more to their co-workers’ ability than do better players. The coefficient is statistically significant at conventional levels and is consistent with the idea that more skilled workers are less responsive to peer effects. Together with the small point estimate for the average-skilled golfer, this interaction indicates that there are some high-skill players who appear to experience small negative peer effects and some low-skill players who appear to experience small positive peer effects.

Table 8.

Peer Effects by Skill and Experience

| | (1) | (2) | (3) | (4) |
|---|---|---|---|---|
| Own Ability | 0.673 (0.039) | 0.669 (0.039) | 0.660 (0.039) | 0.656 (0.039) |
| Avg(Ability), partners | −0.035 (0.040) | −0.032 (0.040) | −0.038 (0.040) | −0.035 (0.040) |
| Avg(Ability), partners × Own Ability | | 0.078 (0.032) | | 0.082 (0.033) |
| Years of Experience | | | 0.019 (0.004) | 0.019 (0.004) |
| Avg(Ability), partners × Years of Experience | | | 0.015 (0.005) | 0.015 (0.005) |
| Tournament × Category F.E. | Y | Y | Y | Y |
| R² | 0.152 | 0.152 | 0.154 | 0.154 |
| N | 17492 | 17492 | 17492 | 17492 |

Notes:

Column (1) is reproduced from Table 3. Other columns present results from modifying baseline specifications as specified in equation (3) to support heterogeneous peer effects.

The dependent variable is the golf score for the round.

The Ability variable is measured using the player’s handicap.

Standard errors are in parentheses and are clustered by playing group.

All regressions weight each observation by the inverse of the sample variance of estimated ability of each player.
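To see what the skill interaction in Table 8 implies, the short Python sketch below evaluates the marginal effect of partners' ability at different levels of own ability, using the point estimates reported for the specification with the skill interaction only (−0.032 and 0.078). It assumes that own ability enters the interaction as a deviation from the sample mean, so that the main coefficient is the effect for an average-skilled golfer, and that higher values of the ability measure correspond to weaker players. The function name and the one-stroke example deviations are hypothetical.

```python
# Point estimates from Table 8 (specification with the skill interaction only).
MAIN_EFFECT = -0.032   # Avg(Ability), partners
INTERACTION = 0.078    # Avg(Ability), partners x Own Ability


def implied_peer_effect(own_ability_deviation):
    """Marginal effect of partners' ability on own score.

    own_ability_deviation -- own ability minus the sample mean, in strokes
    (assumption: the interaction uses demeaned ability; positive = weaker player).
    """
    return MAIN_EFFECT + INTERACTION * own_ability_deviation


average_player = implied_peer_effect(0.0)    # equals the main coefficient
weaker_player = implied_peer_effect(1.0)     # one stroke worse than average
stronger_player = implied_peer_effect(-1.0)  # one stroke better than average
# Deviation at which the implied effect changes sign.
crossover = -MAIN_EFFECT / INTERACTION
```

With these inputs the implied effect is about 0.046 for the weaker player and about −0.110 for the stronger one, with the sign change at a deviation of roughly 0.41 strokes. The sketch uses point estimates only and ignores sampling uncertainty.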

Columns (3) and (4) report estimates of equation (3) that allow the effect of partners’ ability to vary by the reference player’s experience. We measure experience as the number of years since the player’s first full year on the PGA Tour.44 The positive and statistically significant coefficient on the interaction of experience and partners’ ability (in both columns (3) and (4)) implies that more experienced players respond more to their co-workers’ ability. This is inconsistent with the idea that experience mitigates peer effects. However, more experienced players appear to get higher (i.e. worse) scores, suggesting that comparisons based on experience are complicated by selection. Thus, it is difficult to discern whether the positive experience interaction indicates that players learn to benefit from their peers with experience, or that lower ability players (as proxied by high experience on the PGA Tour) are more prone to influence from their peers.45

VI. Concluding comments

We use the random assignment of playing partners in professional golf tournaments to test for peer effects in the workplace. Contrary to recent evidence on supermarket checkout workers and soft-fruit pickers, we find no evidence that the ability or current performance of playing partners affects the performance of professional golfers. With a large panel data set we observe players repeatedly, and the random assignment of players to groups makes it straightforward to estimate the causal effect of playing partners’ ability on own performance. The design of professional golf tournaments also allows for direct examination of the role of common shocks, which typically make identification of endogenous peer effects difficult. In our preferred specification, we are able to reject positive peer effects of more than 0.043 strokes for a one stroke increase in playing partners’ ability. We are also able to rule out that the peer effect is larger than 6.5 percent of the effect of own ability.
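The 0.043 bound can be reproduced approximately from the rounded estimates in column (1) of Table 8, assuming it is the upper limit of a conventional two-sided 95 percent confidence interval based on the normal critical value of 1.96. This is an assumed reading; the text does not spell out the calculation.

```latex
\hat{\gamma}_1 + 1.96 \times \mathrm{SE}(\hat{\gamma}_1)
  = -0.035 + 1.96 \times 0.040
  \approx 0.043,
\qquad
\frac{0.043}{0.673} \approx 0.064 .
```

With these rounded inputs the ratio to the own-ability coefficient is about 6.4 percent, in line with the 6.5 percent figure quoted above.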

Interestingly, we find a small positive effect of partners’ score on own score, but we interpret this as mostly due to common shocks (and we present evidence that controlling for these common shocks reduces this correlation). The raw correlation in scores, though, might help explain why many PGA players perceive peer effects to be important. Our results suggest that players might be misinterpreting common shocks as peer effects.

Our estimates contrast with a number of recent studies of peer effects in the workplace. Mas and Moretti (2008) find large peer effects in a low-wage labor market where workers are not paid piece rates and do not have strong financial incentives to exert more effort. Bandiera, Barankay and Rasul (2008) find peer effects specific to workers’ friends in a low-skilled job where workers are paid piece rates. Experimental studies (e.g. Falk and Ichino, 2006) find evidence of peer effects in work-like tasks. We close by offering several non-exclusive explanations for our contrasting findings, and we argue that there is much to be learned from the difference between our results and those of other recent studies.

First, ours is the only study of which we are aware that estimates peer effects in a workplace where peers are randomly assigned. As we discuss above, random assignment is not a panacea for estimating peer effects, but it is extremely helpful in overcoming many of the difficult issues associated with identifying such models.

Second, the PGA Tour is a unique labor market that is characterized by extremely large financial incentives for performance. In such a situation, it may be the case that the incentives for high effort are already so high that the marginal effect of social considerations is minimal, or zero. In similar labor markets where there are high-powered incentives for better performance (e.g. surgeons, floor traders at an investment bank, lawyers in private practice, tenure-track professors), the social effects of peers may not be as important as implied by existing studies. Consistent with this view is Mas and Moretti’s (2008) conclusion that the peer effects they observe are mediated by co-worker monitoring. As incentives become stronger and monitoring output becomes easier, monitoring of effort becomes less necessary.

Perhaps just as interesting is the implication that social incentives may be a substitute for financial incentives. This would suggest that when creating strong financial incentives is difficult (such as when monitoring costs are high, or measuring individual output is difficult), firms should optimally organize workers to take advantage of social incentives.

Third and, we speculate, most importantly, the sample of workers under study has been subject to extreme selection. Many people play golf, but only the very best are professional golfers. Even among professionals, PGA Tour players are among the elite. It is quite possible that an important selection criterion is the ability to avoid the influences of playing partners. Relatedly, successful skilled workers may have chosen over the course of their life to invest in human capital whose productivity is not dependent on social spillovers, whether positive or negative, in order to avoid risks out of their control. The results described at the end of the previous section are suggestively consistent with this view. Even among the highly selected group of professional golfers, the least skilled are the only ones whose productivity responds positively to the composition of their peers. We view this as an interesting finding because it suggests that there is a great deal of heterogeneity across individuals in their susceptibility to social influences in the workplace. It is an open question whether professional golfers are rare exceptions or representative of a larger class of high-skill professional workers.

This conclusion also implies that workers may sort across firms according to the potential importance of peer effects. In settings where positive peer effects, such as the learning story described above, are potentially important, we should expect to see workers who respond relatively well to these learning opportunities. Ignoring such market-induced sorting can lead to misleading generalizations about the importance of social effects at work.

Though our results are different from those found in recent studies of peer effects in the workplace, we view our results as complementary. There is much to learn from the differences in findings. Primarily, our results suggest that there is heterogeneity in the importance of peer effects, both across individuals and across settings. Sorting of workers across occupations according to how they are affected by social pressures and other social spillovers, whether positive or negative, is likely an important feature of labor markets. By focusing solely on occupations with low skill requirements, the existing studies miss this rich heterogeneity. Perhaps just as importantly, we show that peer effects are not important in a setting with strong financial incentives. Similarly, Peter Arcidiacono and Sean Nicholson (2005) find no evidence of peer effects among medical school students in their choice of specialty or their medical board exam scores, both of which carry large financial returns. These findings suggest that social effects may be substitutes for incentive pay, and they are consistent at least in spirit with the work of John A. List (2006) and Levitt and List (2007), who argue that the expression of social preferences is likely to vary according to whether behavior is observed in a market setting, the strength of incentives in that setting, and the selection of subjects that researchers observe. We hope our findings will spur other researchers to further explore this heterogeneity in peer effects in the workplace.

Acknowledgments