Abstract

For the detailed analysis of sedimentation velocity data, the consideration of radial-dependent baseline offsets is indispensable. The two main approaches are data differencing (the ‘delta-c’ approach) and explicit inclusion of baseline parameters (‘direct boundary modeling’ of the raw data). The present work aims to clarify the relationships between the two approaches. To this end, a simple model problem is examined. We show that the explicit consideration of the baseline in the model is equivalent to a differencing scheme in which the average value of all data points is subtracted from each data point. Pair-wise differencing in the ‘delta-c’ approach always results in higher parameter uncertainty. For equidistant time points, the increase is smallest when the reference points are taken at intervals of 1/3 or 2/3 of the total number of time points. If the difference data are misinterpreted to be statistically independent samples, errors in the calculation of the parameter uncertainties can occur. Contrary to claims in the literature, we observe that there is no distinction between the approaches regarding their ‘model-dependence’: both approaches arise from the integral or differential form of the same model, and both can and should provide explicit estimates of the baseline values in the original data space for optimal discrimination between macromolecular sedimentation models.

Keywords: analytical ultracentrifugation, model-free analysis, direct boundary modeling, statistical data analysis

Introduction

In the last decade, sedimentation velocity analytical ultracentrifugation (SV) has made great progress in the level of detail that can be reliably extracted from the experiment through mathematical data analysis. This has been exploited in many biophysical studies of macromolecular interactions and multi-protein complexes, fiber formation, macromolecular shapes and size-distributions, as well as applications in other fields, among them protein pharmaceuticals and nanoparticles. For reviews, see [1–3]. One key element of this progress was the development of techniques to account for the baseline profiles that are invariant with time and superimposed on the measured macromolecular sedimentation signals [4, 5] (often referred to as ‘TI noise’). For the Rayleigh interference optics, these baseline offsets are typically 10- to 100-fold the magnitude of the statistical error of data acquisition and often similar in magnitude to the signal from the sedimenting macromolecular species of interest, so it is essential to account for them. When using the absorbance optical system, the time-invariant profile is smaller but still usually similar in magnitude to the statistical noise [5]. With the pseudo-absorbance method [6], where data from samples in both centerpiece sectors can be recorded, data with a noise structure similar to that of the interference optical data are obtained.

An efficient approach to address this problem directly was the time-derivative method g(s*) introduced by Stafford in 1992 [7]. Radial-dependent, time-invariant baseline profiles were eliminated by forming a time-difference $(\Delta c/\Delta t)_{r,t^*}$ (attributed to a particular effective time point t* between t and t + Δt) serving as an approximation of the time-derivative $(dc/dt)_{r,t}$. Numerically this involved the division of the even number of total scans N into two sets, and pair-wise subtraction according to $\Delta c_j(r) = c_j(r) - c_{(N/2)+j}(r)$ with j = 1…N/2 [8]. This step is followed by averaging, rescaling, and transformation along $s^* = (\omega^2 t^*)^{-1} \log(r^*/m)$ into apparent sedimentation coefficient distributions g(s*) [8]. The elimination of time-invariant baseline offsets through differencing brought unprecedented sensitivity to SV analysis and was widely applied at the time. However, the differencing of time-points and the determination of the effective time t* have non-trivial consequences for the resolution and accuracy of the dc/dt method, and ultimately cause significant limitations in the total number of scans that can be included in the analysis [9–11], thus not fully exploiting the experimental data and causing bias due to the pre-selection of scans [12]. Such complications are absent, in principle, in the ‘delta-c’ approach, where the same scheme for differencing of scans is used solely to remove the time-invariant baseline offsets from the data and, similarly, from the model [13].

In 1999, a different approach to address the baseline problems in SV analysis was introduced that consists simply of the idea of accounting for all unknown baseline parameters rigorously by introducing the corresponding parameters explicitly as unknowns into the model [5]. Although there are many such parameters across the radial baseline profile, it was shown that, based on the principle of separation of linear and non-linear parameters [14], the baseline parameters are trivial to optimize analytically and independently of all other parameters, without causing significant computational overhead. This was termed the ‘algebraic noise elimination’ method. This is the standard method of accounting for baseline offsets in the ‘direct boundary modeling’ approach, and is applied widely, for example, in the software SEDFIT in conjunction with the diffusion-deconvoluted sedimentation coefficient distributions c(s) [15, 16], multisignal sedimentation coefficient distributions ck(s) [17], size-and-shape distributions [18], as well as Lamm equation models for reacting systems in SEDPHAT [19]. In addition to allowing the full exploitation of the signal/noise ratio of the raw data and allowing the comparison of data and fitted model in the raw data space, it provides an explicit estimate of the baseline profile that may be compared with the expected baseline profiles as measured from water blanks. After the fit, the estimated baseline profile can be subtracted from the data to visualize directly the macromolecular sedimentation profiles for inspection [20]. This strategy was initially described only for scans with equal radial ranges, but recently generalized to non-overlapping radial ranges in ‘partial boundary modeling’ [12]. The same strategy was shown to be highly useful also in the analysis of sedimentation equilibrium data [21].

The relationship between the two methods has not yet been rigorously examined. Distinctions were perceived and proposed to guide the choice of analysis strategy. For example, the differencing method is sometimes referred to as being ‘model-independent’ [22–24], in contrast to the direct boundary model approach, where the results (and in particular the baselines) are characterized as ‘model-dependent’ and of more limited “validity” [25]. On the other hand, the dogma that no data processing step can increase information would lead one to fit an explicit model in the raw data space. The goal of the present work is to clarify these attributes and to better characterize the different approaches with regard to their statistical implications for the data analysis.

One difficulty in this task is the problem that the error surfaces are quite complex, even for simple models, due to the dimensionality of the raw data sets and due to the non-linear nature of the macromolecular sedimentation parameters. To circumvent this difficulty, we instead examine here a much simpler, linear problem, to which the concepts of differencing or direct boundary modeling can be applied. In this way, the implicit and explicit assumptions as well as the statistical consequences can become more transparent. In particular, we consider data points that have the form

| $y_i = a\,t_i + b + \sigma_i, \quad i = 1, \dots, N$ | (Eq. 1) |

(with a representing a slope, b a baseline offset, and σi normally distributed noise), which are to be fitted by least-squares with the straight line

| $f(t) = a\,t + b$ | (Eq. 2) |

with unknown values for the slope and the offset. We can apply both ideas, data differencing and direct modeling, to account for the baseline offset. The linear nature of the model makes it easy to determine the resulting properties of these models. Some of the results are well known from text-book examples [26]; most, however, have not previously been considered in this context.

There are obvious differences from SV analysis, including: (i) Rather than single data points, in SV we would measure a whole scan at each time-point, and rather than a single baseline there would be a complete baseline profile across the whole cell; (ii) The parameters of primary interest in SV are often sedimentation coefficients, which are non-linear parameters in the model that depend in a more complex way on the evolution of the profiles, rather than being simply a slope from the propagation with time; and (iii) the number and spacing of time-points differ. In spite of this, we believe that the insights from this simple model still reflect some salient features of time-differencing in comparison with the direct boundary modeling approach in SV analysis. At the very least, we hope that the analysis presented here will clarify the discussion and focus it on the question of how any aspects of real SV analysis could possibly cause properties of the differencing schemes to be fundamentally different from those exhibited here in the simpler context.

Method

The theoretical analysis is based on basic statistical principles, as described by Bevington [26]. Due to the linearity of the model, all aspects can be analytically solved. Results were confirmed analytically using the symbolic math toolbox of MATLAB (The Mathworks, Natick, MA), and key statistical quantities were compared with the result of Monte-Carlo analyses, based on normally distributed samples with between 100 and 100,000 data points, typically using 10,000 to 100,000 iterations.

Outline of the Analysis Approaches

When applied to the classical text-book problem of fitting a straight line, some aspects of the approaches discussed here may appear trivial. Nevertheless, this is a worthwhile exercise that can clarify the concepts and relationships in light of the analogy to SV analysis. Further, it will lead to interesting results which have to our knowledge not yet been considered in this context.

Direct boundary modeling

Here, we directly consider the original problem (Eq. 1) and (Eq. 2) in the raw data space, explicitly accounting for all unknowns (in the SV problem typically including baselines, concentration factors, sedimentation coefficients and diffusion coefficients, but here only represented by a and b). This leads to the minimization problem

| $\min_{a,b} S_1(a,b) = \min_{a,b} \sum_{i=1}^{N} \left( y_i - a\,t_i - b \right)^2$ | (Eq. 3) |

where S1(a, b) denotes the error surface formed by the sum of squared deviations in the original data space that depends on the two unknowns a and b. For simplicity, we assume all data points have equal weight.

As introduced in [5], the direct boundary modeling approach proceeds by applying the principle of “separation of linear and non-linear parameters” [14]. This separates out the linear parameters (e.g. the concentration factors and baselines in SV), and finds a formula for their optimum as a function of the remaining non-linear parameters. In our fitting analogy, all parameters are linear. Nevertheless, we can apply the same technique and first optimize for b, a linear parameter, and defer the calculation of the slope a to a separate optimization in a second stage. In the present context, it is irrelevant how the second-stage optimization takes place (non-linear regression in SV, analytically here), and therefore this separation can be viewed as analogous to that in real SV analyses.

To this end, the original problem is split up into

| $\min_{a,b} S_1(a,b) = \min_{a} \left[ \min_{b} S_1(a,b) \right]$ | (Eq. 4) |

and we can define a second error surface S2 which is of lower dimension than S1 and arises from S1 by choosing for each point a the best-possible b(a)

| $S_2(a) = \min_{b} S_1(a,b) = S_1\bigl(a, b(a)\bigr)$ | (Eq. 5) |

For the first problem, the minimization over b at fixed a, a necessary condition for optimality is dS1/db = 0, which leads to

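| $b(a) = \bar{y} - a\,\bar{t}, \quad \bar{y} = \frac{1}{N}\sum_{i=1}^{N} y_i, \quad \bar{t} = \frac{1}{N}\sum_{i=1}^{N} t_i$ | (Eq. 6) |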

This solves the first problem unambiguously. When this result is inserted in S1, it follows that

| $S_2(a) = \sum_{i=1}^{N} \left[ (y_i - \bar{y}) - a\,(t_i - \bar{t}) \right]^2$ | (Eq. 7) |

We are then left with the remaining problem, the minimization over a:

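| $\min_{a} S_2(a) = \min_{a} \sum_{i=1}^{N} \left[ (y_i - \bar{y}) - a\,(t_i - \bar{t}) \right]^2$ | (Eq. 8) |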

We denote the best estimate of a obtained in this way as ad (with the subscript ‘d’ for direct modeling). Once we have determined ad, we go back to (Eq. 6), use our knowledge b(a) of the dependence of the optimal b given any a, and calculate the best-fit b.
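The two-stage procedure can be sketched numerically. The following Python snippet is only a minimal illustration under stated assumptions, not the implementation used in any SV software: the function name `direct_fit`, the random seed, and the simulated values (borrowed from the Figure 1 caption) are choices made here. It assumes equal weights, so that the condition dS1/db = 0 gives b(a) = ȳ − a·t̄.

```python
import numpy as np

def direct_fit(t, y):
    """Two-stage least-squares fit of y = a*t + b (hypothetical helper)."""
    t_bar, y_bar = t.mean(), y.mean()
    # Second stage: minimize S2(a) = sum(((y - y_bar) - a*(t - t_bar))**2),
    # the error surface in which b is already replaced by its optimum b(a).
    a_d = np.sum((y - y_bar) * (t - t_bar)) / np.sum((t - t_bar) ** 2)
    # Back-substitution: optimal baseline for the estimated slope.
    b_d = y_bar - a_d * t_bar
    return a_d, b_d

rng = np.random.default_rng(1)              # fixed seed, illustration only
t = np.arange(1.0, 11.0)                    # 10 equidistant time points (assumed)
y = 0.2 * t + 1.0 + rng.normal(0.0, 1.0, t.size)

a_d, b_d = direct_fit(t, y)
a_ref, b_ref = np.polyfit(t, y, 1)          # joint fit of both parameters
print(a_d, b_d, a_ref, b_ref)
```

In this sketch the two-stage result agrees with the joint two-parameter fit, which is the point of the separation: pre-optimizing the linear baseline does not change the best-fit slope.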

‘Delta-c’ approach

Here, the goal is also to obtain a best-fit estimate for the slope a, but without explicitly calculating the baseline b. To this end, the data yi(ti) are ‘transformed’ to Δyi(Δti),

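| $\Delta y_i = y_i - y_{i-\Delta N}, \quad \Delta t_i = t_i - t_{i-\Delta N}, \quad i = \Delta N + 1, \dots, N$ | (Eq. 9) |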

such that the pair-wise differencing removes b from the correspondingly ‘transformed’ model. Now, we can fit the ‘transformed’ data with the new function

| $\Delta f(\Delta t) = a\,\Delta t$ | (Eq. 10) |

resulting in the minimization problem

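| $\min_{a} S_\Delta(a) = \min_{a} \sum_{i=\Delta N+1}^{N} \left( \Delta y_i - a\,\Delta t_i \right)^2$ | (Eq. 11) |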

where SΔ(a) is defined here as the sum of squared deviations of the ‘transformed’ data and model, i.e. the error surface. Let us denote by aΔ the best-fit slope based on (Eq. 11).
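As a hedged illustration of the pair-wise differencing, the sketch below forms the differences between points that are ΔN indices apart and fits a line through the origin to them. The helper name `delta_c_slope` and the test values are assumptions introduced here.

```python
import numpy as np

def delta_c_slope(t, y, dN):
    """Least-squares slope from pair-wise differences dN points apart (hypothetical helper)."""
    dy = y[dN:] - y[:-dN]        # y_i - y_(i-dN): a constant offset b cancels
    dt = t[dN:] - t[:-dN]        # t_i - t_(i-dN)
    # Minimizing sum((dy - a*dt)**2) over a gives this closed form.
    return np.sum(dy * dt) / np.sum(dt ** 2)

t = np.arange(1.0, 11.0)
y_clean = 0.2 * t + 1.0                       # noise-free line with offset
slope = delta_c_slope(t, y_clean, 5)
slope_shifted = delta_c_slope(t, y_clean + 100.0, 5)
print(slope, slope_shifted)
```

Shifting all data by a constant leaves the estimated slope unchanged, which shows that the scheme is insensitive to the value of the baseline but still presupposes that a constant baseline may be present.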

Comparison

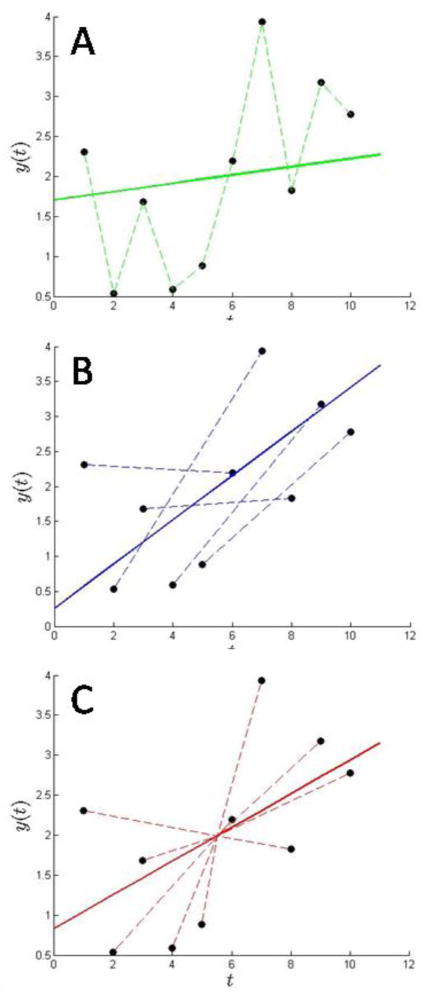

Figure 1 shows a graphical illustration of the approaches, for the case of N = 10 noisy data points. The ‘delta-c’ approach is depicted in green (Panel A) and blue (Panel B): the slope aΔ (solid line) is the average of the slopes from the pair-wise differences (dotted lines), with ΔN = 1 (green, Panel A) and ΔN = N/2 (blue, Panel B). The former provides more lines with higher fluctuation, the latter fewer lines with less fluctuation. The slope ad determined with the direct boundary approach is shown by the red line (Panel C). Its slope is the average of the slopes between each data point and the average ȳ at the average time t̄.

Figure 1.

Illustration of different schemes for estimating the baseline and slope of noisy data points. Circles show 10 points calculated as y = 0.2t + 1 superimposed with normally distributed noise with σ = 1.0. The best-fit models based on pair-wise differencing are shown with ΔN = 1 and ΔN = 5, with the dashed lines indicating the slopes of the individual data point pairs, yielding 9 differences (Panel A, green) and 5 differences (Panel B, blue), respectively. Their average slope determines the slope of the best-fit results (solid lines). Their implicit baseline offset was calculated by (Eq. 13). In red is shown the best-fit model from the direct fit (Panel C, solid red line), which has a slope that is determined by the average between the differences (10 dashed red lines) of each data point and the mean signal ȳ at t̄, as indicated by the intersection of the solid lines.

Despite the fact that b does not occur explicitly in the formulas of the ‘delta-c’ approach, it is important to realize that this approach still implies that there can be a baseline offset in the original data, and that this baseline is unknown but constant with time. (Even though hypothetical functions for straight lines without offsets, f*(t) = at, would lead to the same expression on the right-hand side of (Eq. 10) for their time-difference, use of (Eq. 10) and (Eq. 11) does not imply the model f*.)

We note that S2 and SΔ are very much alike: both do not depend on b. Both arise from differencing the data. Interestingly, just like the differencing scheme in (Eq. 9) was postulated without further justification, one could have guessed different starting points for a ‘delta-c’ approach. In particular, based on the notion that the average scan will certainly represent the baseline at least equally well as any particular scan, one could have arbitrarily taken the more refined form of (Eq. 8) upfront.

Although the current ‘delta-c’ implementations in SV choose to leave the baseline undetermined, once we estimate aΔ from minimizing SΔ, we can go back and ask the new question of what b might be. For this, however, we have to go back into the original data space, minimizing S1 given aΔ. (Certainly we should believe that aΔ is an excellent estimate for the value of a.) The result was already given above in (Eq. 6), and we can define

| $b_\Delta = \bar{y} - a_\Delta\,\bar{t}$ | (Eq. 12) |

This has been proposed, for example, for calculating TI profiles from g(s*) analyses [27]. In SV practice, this is an important step, as it allows one to compare the baseline profile implicit in the particular boundary interpretation with the expected TI noise profile from water blanks or from the profiles at the start of sedimentation.

Having determined aΔ and bΔ, we can make explicit the model that was silently underlying the ‘delta-c’ approach, and trace the fitted function in the original data space

| $f_\Delta(t) = a_\Delta\,(t - \bar{t}) + \bar{y}$ | (Eq. 13) |

Likewise, we can insert for the ‘direct modeling’ (Eq. 6) into the model (Eq. 2)

| $f_d(t) = a_d\,(t - \bar{t}) + \bar{y}$ | (Eq. 14) |

which is identical except for the possible difference in the estimated slope (Figure 1). Thus, just like the two approaches lead to optimization problems of similar structure in the ‘transformed’ data space (just with different referencing), they are also equivalent in the raw data space once the analytically optimized baseline is inserted. Since the baseline offsets are always linear parameters, their analytical optimization and elimination can be achieved easily for any real SV model.
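The step of making the implicit baseline explicit can be sketched as follows. This is an illustrative example with assumed values (seed, N = 10, ΔN = 5); it uses the relation b = ȳ − a·t̄ for the least-squares baseline at a given slope, and all variable names are introduced here.

```python
import numpy as np

rng = np.random.default_rng(3)               # fixed seed, illustration only
N, dN = 10, 5
t = np.arange(1.0, N + 1)
y = 0.2 * t + 1.0 + rng.normal(0.0, 1.0, N)

# 'delta-c' slope from pair-wise differences
dy, dt = y[dN:] - y[:-dN], t[dN:] - t[:-dN]
a_delta = np.sum(dy * dt) / np.sum(dt ** 2)
# Baseline implied by that slope, obtained in the raw data space
b_delta = y.mean() - a_delta * t.mean()

# Direct fit for comparison
a_d, b_d = np.polyfit(t, y, 1)

def S1(a, b):
    """Sum of squared deviations in the raw data space."""
    return np.sum((y - a * t - b) ** 2)

print(a_delta, b_delta, S1(a_delta, b_delta))
print(a_d, b_d, S1(a_d, b_d))
```

Both fitted lines pass through the point (t̄, ȳ) and differ only in slope; in the raw data space the direct fit has, by construction, the smaller or equal sum of squared deviations.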

Solving the Problems

Since we chose at the outset to study a problem where all parameters are linear, we can analytically solve the problems. First, for the direct boundary approach, the problem (Eq. 8) can be solved by requiring that dS2/da = 0, leading to

| $a_d = \dfrac{\sum_{i=1}^{N} (y_i - \bar{y})(t_i - \bar{t})}{\sum_{i=1}^{N} (t_i - \bar{t})^2}$ | (Eq. 15) |

We can insert here in (Eq. 15) the definitions of the averages (Eq. 6), which after reordering leads to

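| $a_d = \dfrac{N \sum_i t_i y_i - \sum_i t_i \sum_i y_i}{N \sum_i t_i^2 - \left( \sum_i t_i \right)^2}$ | (Eq. 16) |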

The latter expression is the well-known standard expression for the slope resulting from least-squares fit of a straight line (see, e.g., Eq. 6.12 in [26], noting the switched terminology of a and b). We can rewrite this under the assumption of equidistant time points, ti = t1 + (i − 1) δt, as

| $a_d = \dfrac{12}{\delta t\, N (N^2 - 1)} \sum_{i=1}^{N} \left( i - \dfrac{N+1}{2} \right) y_i$ | (Eq. 17) |

For the ‘delta-c’ approach, we solve (Eq. 11) by requiring that dSΔ/da = 0. This leads to

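| $a_\Delta = \dfrac{\sum_{i=\Delta N+1}^{N} \Delta y_i\, \Delta t_i}{\sum_{i=\Delta N+1}^{N} \Delta t_i^2}$ | (Eq. 18) |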

For equidistant time-points it is (ti − ti−ΔN) = ΔNδt, and

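| $a_\Delta = \dfrac{1}{\Delta N\, \delta t\, (N - \Delta N)} \sum_{i=\Delta N+1}^{N} \left( y_i - y_{i-\Delta N} \right)$ | (Eq. 19) |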

or

| $a_\Delta = \dfrac{1}{N - \Delta N} \sum_{i=\Delta N+1}^{N} \dfrac{\Delta y_i}{\Delta t_i}$ | (Eq. 20) |

i.e., aΔ is the average slope (compare Figure 1), or the average difference in y divided by the corresponding time-difference. Not surprisingly, the best-fit estimate ad (Eq. 17) is different from aΔ (Eq. 20).

Statistical Error Estimates

A key question is the statistical accuracy of the different estimates. We can determine the errors of the estimates aΔ and ad by applying the standard error propagation formula for determining the error σp of a parameter p (Eq. 6.19 of [26]). If the data points all have the same errors σ, this takes the form

| $\sigma_p^2 = \sigma^2 \sum_{i=1}^{N} \left( \dfrac{\partial p}{\partial y_i} \right)^2$ | (Eq. 21) |

(we assume only errors in y, not in t).

For the error of ad the resulting value from applying (Eq. 21) to (Eq. 16) has been reported by Bevington ([26] Eq. 6.23) as

| $\sigma_{a,d}^2 = \dfrac{N \sigma^2}{N \sum_i t_i^2 - \left( \sum_i t_i \right)^2}$ | (Eq. 22) |

In the case of equidistant time points, ti = t1 + (i − 1) δt, this simplifies to

| $\sigma_{a,d}^2 = \dfrac{12\, \sigma^2}{\delta t^2\, N (N^2 - 1)}$ | (Eq. 23) |

If one were interested in the uncertainty of the baseline parameter, once we know σa,d a convenient approach is via (Eq. 6) applying (Eq. 21) (assuming equidistant time points):

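| $\sigma_{b,d}^2 = \dfrac{\sigma^2}{N} + \bar{t}^{\,2}\, \sigma_{a,d}^2, \quad \bar{t} = t_1 + \dfrac{(N-1)\,\delta t}{2}$ | (Eq. 24) |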

We can compare the errors σa,d with those that would occur if there were no baseline offset. In this case, $\sigma_a^2 = \sigma^2 / \sum_i t_i^2$, which in the case of δt = 1 and t1 = 1 simplifies to

| $\sigma_a^2 = \dfrac{6\, \sigma^2}{N (N+1)(2N+1)}$ | (Eq. 25) |

As one might expect, this is much smaller than (Eq. 23).

For the error of aΔ, we apply (Eq. 21) to (Eq. 19). For ΔN ≤ N/2, contributions with ΔN < n ≤ N − ΔN cancel out, which leads to

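| $\sigma_{a,\Delta}^2 = \dfrac{2\, \sigma^2}{\Delta N\, \delta t^2\, (N - \Delta N)^2}$ for $\Delta N \le N/2$, and $\sigma_{a,\Delta}^2 = \dfrac{2\, \sigma^2}{\Delta N^2\, \delta t^2\, (N - \Delta N)}$ for $\Delta N \ge N/2$ | (Eq. 26) |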
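The cancellation mentioned above can be written out explicitly. The following is a short worked derivation for equidistant time points, assuming that aΔ is the sum of all pair-wise differences divided by ΔN δt (N − ΔN); the index n runs over the raw data points.

```latex
\begin{align*}
a_\Delta &= \frac{1}{\Delta N\,\delta t\,(N-\Delta N)}
  \left[\, \sum_{n=\Delta N+1}^{N} y_n \;-\; \sum_{n=1}^{N-\Delta N} y_n \right] \\[4pt]
\frac{\partial a_\Delta}{\partial y_n} &= \frac{1}{\Delta N\,\delta t\,(N-\Delta N)} \times
  \begin{cases}
    -1 & n \le \Delta N \\
    \phantom{-}0 & \Delta N < n \le N-\Delta N \\
    +1 & n > N-\Delta N
  \end{cases}
  \qquad (\Delta N \le N/2) \\[4pt]
\sigma_{a,\Delta}^2 &= \sigma^2 \sum_{n=1}^{N}
  \left( \frac{\partial a_\Delta}{\partial y_n} \right)^2
  = \frac{2\,\Delta N\,\sigma^2}{\left[\Delta N\,\delta t\,(N-\Delta N)\right]^2}
  = \frac{2\,\sigma^2}{\Delta N\,\delta t^2\,(N-\Delta N)^2}
\end{align*}
```

For ΔN = 1, for instance, the sum telescopes so that only the first and last data points contribute, and aΔ = (yN − y1)/((N − 1) δt).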

The dependence of the error of aΔ on the choice of ΔN is illustrated in Figure 2. For example, if we assume ΔN = N/2 (for even N), this is

| $\sigma_{a,\Delta}^2 = \dfrac{16\, \sigma^2}{\delta t^2\, N^3}$ | (Eq. 27) |

Figure 2.

Dependence of the uncertainty of the slope aΔ in the pair-wise differencing scheme on the choice of ΔN, following (Eq. 26). The value of σa,Δ is normalized relative to the uncertainty of the slope parameter from the direct fit (Eq. 23). The discontinuity and symmetry relative to the point ΔN/N = 1/2 arise from the cancellation of errors from statistically non-independent difference data Δy. If they were independent data points, the error would follow the dashed line (Eq. 29).

This is not the best choice for ΔN, since (Eq. 26) has minima for ΔN = N/3 and ΔN = 2N/3, both of which would lead to only

| $\sigma_{a,\Delta}^2 = \dfrac{27\, \sigma^2}{2\, \delta t^2\, N^3}$ | (Eq. 28) |

These seem to be a better compromise between the signal/noise ratio of the Δyi in (Eq. 9) and the number of ‘transformed’ data points available.
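The relative loss of precision for different choices of ΔN can be checked with a small numerical sketch. It applies the error propagation rule directly to the coefficients with which each raw data point enters the slope estimate, and adds a Monte-Carlo cross-check. The function names, seed, and problem sizes are assumptions made for this illustration; they are not the settings used for the results reported here.

```python
import numpy as np

def var_direct(N, sigma=1.0, dt=1.0):
    """Variance of the direct-fit slope: sigma^2 / sum((t - t_mean)^2)."""
    t = dt * np.arange(1, N + 1)
    return sigma**2 / np.sum((t - t.mean()) ** 2)

def var_delta(N, dN, sigma=1.0, dt=1.0):
    """Variance of the pair-wise differencing slope by error propagation."""
    n = np.arange(1, N + 1)
    # y_n enters with +1 as the later member of a pair, -1 as the earlier one
    coef = (n > dN).astype(float) - (n <= N - dN).astype(float)
    coef /= dN * dt * (N - dN)
    return sigma**2 * np.sum(coef**2)

N = 600
ratio_half = np.sqrt(var_delta(N, N // 2) / var_direct(N))
ratio_third = np.sqrt(var_delta(N, N // 3) / var_direct(N))

# Monte-Carlo cross-check for dN = N/2 on a smaller problem
rng = np.random.default_rng(0)
Nmc, trials = 60, 20000
t = np.arange(1.0, Nmc + 1)
Y = 0.2 * t + 1.0 + rng.normal(0.0, 1.0, (trials, Nmc))
tc = t - t.mean()
a_d = (Y - Y.mean(axis=1, keepdims=True)) @ tc / np.sum(tc**2)
dN = Nmc // 2
a_delta = (Y[:, dN:] - Y[:, :-dN]).mean(axis=1) / dN   # average slope, dt = 1
ratio_mc = a_delta.std() / a_d.std()

print(ratio_half, ratio_third, ratio_mc)
```

In this sketch the ratio of standard errors is close to 1.155 for ΔN = N/2 and close to 1.061 for ΔN = N/3.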

In the case of ΔN < N/2, each original data point may participate in two pairs (making positive and negative contributions). Therefore, the pair-wise differences are not all independent data points, but are instead subject to serial autocorrelation. However, statistical independence of the data points is a prerequisite of the error analysis based on standard χ² and F-statistics [28]. In this case, if one mistakenly regarded the differences Δy simply as (pre-processed) new data points, each having an uncertainty σΔ² = 2σ², the apparent error σaΔ′ would follow

| $\sigma_{a,\Delta}'^{\,2} = \dfrac{2\, \sigma^2}{\Delta N^2\, \delta t^2\, (N - \Delta N)}$ | (Eq. 29) |

which is indicated in Figure 2 as the dashed line. This coincides with the true error (Eq. 26) only for ΔN ≥ N/2. The Monte-Carlo approach to determine parameter uncertainties does not suffer from this potential difficulty.
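The discrepancy between the apparent and the true error can be illustrated numerically. The sketch below compares the variance obtained by propagating errors through the raw data points with the variance one would compute when treating the N − ΔN differences as independent, each with variance 2σ². Function names and the chosen N are assumptions for illustration.

```python
import numpy as np

def true_var(N, dN, sigma=1.0, dt=1.0):
    """Error propagation over the raw data points (accounts for shared points)."""
    n = np.arange(1, N + 1)
    coef = (n > dN).astype(float) - (n <= N - dN).astype(float)
    coef /= dN * dt * (N - dN)
    return sigma**2 * np.sum(coef**2)

def apparent_var(N, dN, sigma=1.0, dt=1.0):
    """Average of N - dN slopes, each wrongly assumed independent with
    variance 2*sigma^2 / (dN*dt)^2."""
    return 2 * sigma**2 / ((dN * dt) ** 2 * (N - dN))

N = 100
for dN in (10, 25, 50, 75):
    ratio = np.sqrt(apparent_var(N, dN) / true_var(N, dN))
    print(dN, ratio)
```

In this sketch the two estimates coincide for ΔN ≥ N/2, whereas for ΔN = N/10 the apparent standard error differs from the true one by a factor of 3.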

Thus, in summary, the ‘delta-c’ approach with the differencing scheme at ΔN = N/2 will have a 15% larger error (factor 2/√3 ≈ 1.155) than the ‘direct modeling’ approach. If the ‘delta-c’ approach were conducted with differences of ΔN = N/3 or ΔN = 2N/3, the loss in precision would be only 6%. This is independent of the number of data points.

Effect of Systematic Deviations

Although the baseline stability in SV is remarkably good, it is certainly not absolute. For example, the ‘aging’ procedure that the cell components are customarily subjected to prior to sedimentation equilibrium experiments with interference optical detection aims to mechanically stabilize the cell assembly and prevent baseline shifts, possibly arising from the compression of the window cushions [29]. Whether such baseline changes occur continually during the long time-range of the sedimentation experiment, accompany a relatively sudden collapse of a window cushion, or occur during rotor acceleration and deceleration, is unclear. Also, unmatched buffer salts can contribute signals that appear as continuous shifts in the baseline, if unaccounted for in the model of the sedimenting species (manuscript submitted). Finally, periodic oscillations of the baseline patterns can occasionally be discerned in the residual bitmaps of SV experiments, possibly arising from temperature fluctuations [13] or rotor precession [25].

Therefore, we ask if there is any difference in the two approaches in the extent to which systematic errors in the model may go undetected and/or may bias the outcome of the analysis. Often when analyzing experimental data, a fit with a simpler model is tested against a more complex model and statistical criteria are applied whether the fits are significantly different and justify inclusion of the additional parameter.

For example, we can simulate data that have a small quadratic component, i.e., yi = c ti² + a ti + b + σ, as a model for data with a slight baseline drift bdrift = b + c ti², and fit these with the same model as above, y = at + b or Δy = aΔt, respectively. We observed the increase in the χ² value of the fit with increasing c. When the change in χ² relative to the correct case c = 0 reaches the Δχ² = 1 level [26], inclusion of corrective terms would be justified and the more complex behavior could possibly be correctly recognized. To this end, we determined the critical level of c by 10⁴ Monte-Carlo simulations for data sets of N = 10⁴ equidistant data points from t = 1 to 1000 with normally distributed noise of σ = 1. For the quadratic model, we obtained a critical value of c = 5.57×10⁻⁷ for a possible detection of a quadratic term with the direct modeling approach. For the ‘delta-c’ approach we assumed ΔN = N/2 and evaluated the quality of fit from SΔ(a) in (Eq. 11), i.e. treating the difference data as if they were the data points to be modeled. This yielded a critical value of 5.71×10⁻⁷, i.e. only 2.5% higher than with the direct modeling approach.

For other systematic disturbances the two methods exhibit larger differences in their potential for detection. As an extreme example, when an offset in the middle of the time-interval occurs in the form of a step-function, c·H(t − tmax/2), the direct boundary approach offers possible detection at c > 0.071, whereas it obviously cannot be detected at all in the ‘delta-c’ approach with ΔN = N/2 for any c, as it can be fully compensated by corresponding offsets in aΔ.
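The compensation of a mid-interval step by the slope can be seen in a noise-free sketch. The values of N, the step height c, and the slope and offset are arbitrary assumptions for illustration, and the step is placed between points N/2 and N/2 + 1. The example does not attempt to reproduce the critical amplitudes quoted above.

```python
import numpy as np

N, c = 1000, 0.5
t = np.arange(1.0, N + 1)
y = 0.2 * t + 1.0 + c * (t > N / 2)          # line plus step in the middle, no noise

# Direct fit in the raw data space: the step leaves systematic residuals
A = np.column_stack([t, np.ones(N)])
coef, *_ = np.linalg.lstsq(A, y, rcond=None)
ssq_direct = np.sum((y - A @ coef) ** 2)

# Pair-wise differencing with dN = N/2: every pair straddles the step
dN = N // 2
dy, dt = y[dN:] - y[:-dN], t[dN:] - t[:-dN]
a_delta = np.sum(dy * dt) / np.sum(dt ** 2)
ssq_delta = np.sum((dy - a_delta * dt) ** 2)

print(ssq_direct, ssq_delta, a_delta)
```

Because each difference contains the full step, the differenced data are again exactly proportional to Δt, and the step is absorbed as a bias c/(ΔN δt) in the slope without leaving any residual.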

For periodic systematic disturbances c sin(2πt/τ), resembling a baseline oscillation, a strong dependence of the critical value of c on the period τ is observed for the ‘delta-c’ approach (see Figure 3), whereas a nearly uniform value of ~0.025 was found for the direct modeling approach.

Figure 3.

Critical amplitude c for an oscillatory signal offset c sin(2πt/τ) to create a significant decrease in the quality of the best-fit straight line. The critical amplitude was determined as the value that causes an increase in the χ² of the fit by an amount Δχ² = 1, which could make it potentially detectable. 10,000 data points were simulated with constant time-interval Δt = 1 and normally distributed noise with σ = 1.0. The critical amplitude is nearly independent of the period of oscillation for the ‘direct modeling’ approach (solid line), but shows sharp maxima for the ‘delta-c’ approach calculated at ΔN/N = 1/2 (crosses and interpolated dotted line).
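One plausible origin of the sharp maxima can be illustrated with a noise-free sketch: when the differencing interval ΔN δt is an integer multiple of the period τ, the oscillation cancels identically in the pair-wise differences. The helper names and the values of N and τ below are assumptions chosen for illustration, and the example does not reproduce the critical amplitudes of Figure 3.

```python
import numpy as np

def ssq_direct(y, t):
    """Residual sum of squares after a straight-line fit in the raw data space."""
    A = np.column_stack([t, np.ones_like(t)])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return np.sum((y - A @ coef) ** 2)

def ssq_delta(y, t, dN):
    """Residual sum of squares of the differenced data after fitting dy = a*dt."""
    dy, dt = y[dN:] - y[:-dN], t[dN:] - t[:-dN]
    a = np.sum(dy * dt) / np.sum(dt ** 2)
    return np.sum((dy - a * dt) ** 2)

N = 1000
t = np.arange(1.0, N + 1)
results = {}
for tau in (100.0, 143.0):                  # dN*dt = 500 is a multiple of 100 only
    y = np.sin(2 * np.pi * t / tau)         # unit-amplitude oscillation, no noise
    results[tau] = (ssq_direct(y, t), ssq_delta(y, t, N // 2))
    print(tau, results[tau])
```

In this sketch the residuals of the direct fit are similar for both periods, whereas the residuals of the differenced data vanish for τ = 100 and are large for τ = 143.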

Discussion

The unknowns in SV analysis can be divided in macromolecular parameters and baseline parameters. The baseline parameters often make very large signal contributions, but they are linear and therefore easy to eliminate. This can be accomplished either analytically in the original data space through the procedure termed ‘algebraic noise decomposition’ (allowing ‘direct boundary modeling’), or the model can be ‘transformed’ into a differential form where the constant baseline terms disappear. Both have corresponding models in the original data space and in the ‘transformed’, differenced data spaces.

For the direct boundary modeling approach, it is key to understand that the baselines are analytically pre-optimized, such that for the macromolecular parameters the error surface represents a subspace S2 of the original error surface S1, in which the baseline parameters are locally optimal for each macromolecular parameter combination. This simplification is possible due to the fact that analytical solutions for the baseline parameters can be derived algebraically as a function of macromolecular parameters.1 The workflow consists first of the optimization of the macromolecular parameters in the pre-optimized subspace S2, and then, as a second step, in S1 the analytical determination of the baseline values that belong to (i.e. are optimal for) the estimated macromolecular parameters.

Interestingly, once in the subspace of algebraically pre-optimized baselines, S2, the direct boundary modeling approach appears to be a differencing scheme that fits differences of data points. However, it is distinct from the ‘delta-c’ approach in that the difference is not taken between pairs of data points, but rather between each data point and the average signal from data points (at averaged position). This has significant statistical advantages, as it suppresses the noise-amplification inherent in the pair-wise subtraction. However, it is otherwise very similar to the ‘delta-c’ approach in the fact that both the optimal values of macromolecular parameters as well as their error estimates are completely independent of the values of the baseline parameters (the latter being eliminated in the subspace S2).

Vice versa, we note that the differencing scheme of the ‘delta-c’ approach could not be conceived without implying the existence of a baseline term and the constancy of the latter. (Obviously, we know it has direct physical meaning). Therefore, just like differential equations can define the solution to a problem only up to integration constants, any differencing scheme allows for calculating the macromolecular sedimentation parameters but leaves the baseline parameters undetermined. Explicit values for b can be determined, though, by going back to S1. This allows us trivially to calculate the least-squares best-fit values of the baseline for any set of macromolecular parameter estimates, which is a very useful step (see below).

The fact that the baseline parameters have not been determined in the historic delta-c based analysis approaches changes nothing about the existence of these unknown baseline parameters, and the effect that they exert on the error surface (as demonstrated here for SΔ and S2 in our linear analogue). The delta-c based historic methods simply do not completely determine the parameters of the sedimentation model in the raw data space. The differencing step does rephrase the problem in a way that is independent of the baseline value, but leaves it an unknown. This is a property that all differencing schemes share.

Just like the pair-wise differencing with ΔN = N/2 was postulated as a model, taken among many possible differencing schemes, one could have guessed upfront that the differences between particular and average scans would give statistical advantages. If one would postulate the latter differencing model directly for use in the differenced data space, without explicitly deriving it from its root in the raw data space (and ignore the determination of baselines), we would arrive at the same (Eq. 8), whether or not the baseline parameters are subsequently made explicit. Again, our choice to restrict the analysis to the parameters that appear in the differenced data space does not affect the true mathematical relationship of the model with its integral form in the raw data space.

The premise of the present contribution is that the linear model retains key properties of the differencing schemes of SV data. Clearly the higher dimensionality of the SV data, which requires typically hundreds of baseline parameters (one at each radial point), and the complex macromolecular sedimentation model, which exhibits non-linear parameters in the solution of the Lamm equation, present a different problem. However, we believe that the differencing of the data a(r, ti) to model Δa = a(r, ti) − a(r, ti−ΔN) in the ‘delta-c’ approach exhibits similar statistical properties, even though it would appear as an array of one-dimensional problems (side-by-side for each radius value, all sharing the non-linear macromolecular parameters describing the evolution of signal at each radius). Likewise, we have shown previously that for any sedimentation model in SV the direct boundary fitting problem allows for the algebraic elimination of baseline parameters, and the optimization of the sedimentation parameters in a sub-space of the original error surface, completely analogous to the strategy outlined for the straight line problem. In particular, for any macromolecular sedimentation model Mrt({p}) (generally depending on the macromolecular parameter set {p}), it is

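| $\min_{\{p\},\, b_r} \sum_{r,t} \left[ a_{rt} - b_r - M_{rt}(\{p\}) \right]^2 = \min_{\{p\}} \sum_{r,t} \left[ (a_{rt} - \bar{a}_r) - \left( M_{rt}(\{p\}) - \bar{M}_r(\{p\}) \right) \right]^2$ | (Eq. 30) |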

where pre-optimization of all time-invariant noise parameters br from the original error surface (left-hand side of (Eq. 30)) leads to an error surface (right-hand side of (Eq. 30)) in which the data art and the model Mrt({p}) appear as differences from their respective time-averaged scans, ār and M̄r({p}) [12]. (Eq. 30) amounts to fitting the differences art − ār, similar to those examined here in the straight line model, except for the extension of the single baseline to an array of baseline values br. It is difficult to see how these differences between the SV problem and the straight line problem could fundamentally change the relationships between the ‘delta-c’ and the ‘direct modeling’ approach.
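The equivalence between optimizing one baseline value per radius and subtracting the time-averaged scans can be checked numerically. In the sketch below, a random array stands in for the sedimentation model Mrt({p}) at fixed parameters; the array sizes, noise levels, and variable names are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(2)                  # fixed seed, illustration only
R, T = 50, 20                                   # radial points and scans (arbitrary)
M = rng.normal(size=(R, T))                     # stand-in for M_rt at fixed {p}
b_true = rng.normal(scale=5.0, size=(R, 1))     # one baseline value per radius
a = M + b_true + rng.normal(scale=0.01, size=(R, T))

# Least-squares baseline at each radius: time-average of (data - model)
b_opt = (a - M).mean(axis=1, keepdims=True)
S1_opt = np.sum((a - b_opt - M) ** 2)

# Same quantity written as differences from the time-averaged scans
a_bar = a.mean(axis=1, keepdims=True)
M_bar = M.mean(axis=1, keepdims=True)
S2 = np.sum(((a - a_bar) - (M - M_bar)) ** 2)

print(S1_opt, S2)
```

The two sums of squares agree to numerical precision, and any other choice of baseline values gives a larger value in the raw data space.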

Therefore, we conclude that for SV, too, there are no ‘philosophical’ differences, nor differences in ‘model-dependence’, between the ‘delta-c’ and ‘direct boundary modeling’ approaches, contrary to previous suggestions [22–25]. Just as for the straight line problem outlined above, both approaches are equally model dependent: they are based on the same model, expressed in either differential or integral form. Unfortunately, the step of explicitly calculating the baseline parameters that are implied by the macromolecular sedimentation model is sometimes ignored, and, to the author’s knowledge, it is currently not implemented in software packages such as SEDANAL and DCDT+. With the exception of differences in the error surface arising from noise amplification and possible distortions from data transformations [12], essentially the same models will fit the data similarly in both integral and differential formulations. This specifically includes models that, due to correlations of the sedimentation and baseline parameters, would imply baselines that we would dismiss as unreliable (along with the sedimentation model) once they were available for inspection. Examples are strongly curving baseline profiles inconsistent with the usually relatively flat fringe profile of well-aligned interference optics with empty cells. The fact that the baseline is neither explicitly calculated nor displayed should not obscure that it does constitute an unknown parameter that increases the flexibility of the fit. Conversely, Stafford’s suggestion [25] that offering this information about the implicit baselines for inspection would affect the “validity” of the model or cause higher parameter correlation is erroneous. On the contrary, the ability to discern from the explicit baseline values that a certain model is invalid is an important advantage, providing additional diagnostic information when searching for the true macromolecular parameters.

The ‘delta-c’ approach has historical roots in the time-derivative method for SV to determine an apparent distribution of non-diffusing particles g(s*) [7], which relies on time differences to approximate the rate dc/dt. However, distributions of non-diffusing particles can also be determined directly [11]. Similarly, models using explicit Lamm equation solutions can be naturally phrased in the raw data space, and there is no particular justification for the differencing approach. There is no other biophysical technique besides SV where a differential form of the fitting function is advocated to provide less parameter correlation and greater ‘model-independence’ due to the ‘elimination’ of the baseline parameters. If such an advantage existed, one should exploit it also in sedimentation equilibrium (SE) analytical ultracentrifugation, where baseline correlations can be very significant, and one would consequently be led to fit the pair-wise signal differences at different radii a(ri) − a(rj), rather than the raw equilibrium profiles a(r). Yet, despite decades of study of the baseline problem in the SE literature, to our knowledge such a strategy has not been proposed (for obvious reasons).

While the approaches are based on the same underlying model and assumptions, the integral and differential forms differ significantly in performance. Partly, this arises from the fact that differencing leads to noise amplification. In our simple model, for pair-wise differencing, the loss of precision of the slope estimate depends on the time interval. Obviously, longer time intervals Δt = ti − tj provide smaller relative errors of the difference data Δy = yi − yj. This is balanced by the fact that fewer difference data points are available at longer time intervals. Shorter time intervals are not as bad as they may appear at first sight, since two difference data points that share the same raw data point (once positive and once negative) are not statistically independent. In our linear model with equidistant time points, the equally optimal choices were time intervals of 1/3 and 2/3 of the total observation time. The choice of dividing the total number of data points in half [8] (the default in SEDANAL and DCDT+) exhibits a local maximum of the error amplification. In contrast, the best choice is differencing between each point and the average, yi − ȳ, since the statistical precision of ȳ improves with the number of data points. We believe this is the optimal choice among all possible differencing schemes, noting that it arises directly as a computational result from direct boundary modeling, and following the axiom that fitting the raw data is optimal.
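The dependence of the noise amplification on the differencing interval can be illustrated with a short calculation. This is a sketch under stated assumptions, not the derivation used in this work: the data are N equidistant points with independent noise of equal variance, the slope is estimated from the pair-wise differences by unweighted least squares (which, for a constant interval, reduces to the mean difference divided by the interval), and the function names and N = 30 are chosen only for illustration. Because the slope estimate is a linear combination of the raw data points, its variance follows from the sum of squared weights, which accounts for difference points that share a raw data point.

```python
import numpy as np

def slope_variance_direct(n, dt=1.0, sigma=1.0):
    """Variance of the slope from a straight-line fit (slope + baseline) to raw data."""
    t = dt * np.arange(n)
    return sigma**2 / np.sum((t - t.mean()) ** 2)

def slope_variance_pairwise(n, lag, dt=1.0, sigma=1.0):
    """Variance of the slope estimated from differences y[i+lag] - y[i]."""
    rows = np.arange(n - lag)
    diff = np.zeros((n - lag, n))          # maps raw data to difference data
    diff[rows, rows + lag] = 1.0
    diff[rows, rows] = -1.0
    # slope estimate = mean(diff @ y) / (lag * dt) = weights @ y
    weights = diff.sum(axis=0) / ((n - lag) * lag * dt)
    return sigma**2 * np.sum(weights**2)

n = 30
direct = slope_variance_direct(n)
ratios = {lag: slope_variance_pairwise(n, lag) / direct for lag in range(1, n)}
best = min(ratios.values())

for lag in (1, n // 3, n // 2, 2 * n // 3, n - 1):
    print(f"lag {lag:2d}: slope variance relative to direct fit = {ratios[lag]:.3f}")
```

The printed ratios can be compared with the statements above: values above 1 indicate a loss of precision relative to the fit of the raw data, and the intervals of N/3, N/2 and 2N/3 can be inspected directly.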

In our simple model, we could also demonstrate a lower potential of pair-wise differencing for detecting systematic deviations of the data from the model. For example, it appears that a greater flexibility of the pair-wise differencing model can arise when data pairs from different segments of the experiment yield similar slopes but imply different baselines. Specifically, there appears to be a high susceptibility to oscillations, since addition of an arbitrary periodic offset z that fulfills z(ti) = z(ti−ΔN) leaves the Δyi = yi − yi−ΔN data completely invariant. Similar effects may be relevant in SV in practice, considering the possibility of signal oscillations from the vibration of optical components or rotor precession. This is not the case for the ‘direct modeling’ approach, which is therefore a more stringent model.
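The invariance of the difference data under a periodic offset is easy to reproduce. The sketch below assumes a straight line with arbitrary slope, baseline and noise level, and a sinusoidal offset whose period equals the differencing interval ΔN (named `lag` here); all numerical values and function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n, lag = 30, 10
t = np.arange(n, dtype=float)
y = 0.5 * t + 2.0 + 0.05 * rng.standard_normal(n)   # straight line plus noise

# Periodic offset with period equal to the differencing interval: z[i] == z[i - lag]
z = 0.4 * np.sin(2.0 * np.pi * t / lag)
y_osc = y + z

def pairwise_differences(values, lag):
    """Difference data: values[i] - values[i - lag]."""
    return values[lag:] - values[:-lag]

def direct_fit_rms(t, values):
    """Rms residual of a straight-line fit (slope and baseline) to the raw data."""
    coeffs = np.polyfit(t, values, 1)
    return np.sqrt(np.mean((values - np.polyval(coeffs, t)) ** 2))

# Largest change of any difference data point caused by the oscillation
diff_change = np.max(np.abs(pairwise_differences(y_osc, lag)
                            - pairwise_differences(y, lag)))
rms_clean = direct_fit_rms(t, y)
rms_osc = direct_fit_rms(t, y_osc)
print(diff_change, rms_clean, rms_osc)
```

In this example the difference data change only at the level of floating-point rounding, so no fit to them can reveal the oscillation, whereas the rms residual of the direct fit increases well above the noise level.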

A further problem in SV analysis is that the data suffer not only from time-invariant baseline profiles, but also from radial-invariant but time-dependent noise contributions βt, which play the same role in the time domain as the br in the spatial domain. We have shown previously that the same principle of separation of linear and non-linear variables can be applied, and equations equivalent to (Eq. 30) can be found in the presence of radial-invariant offsets βt, as well as for simultaneous br and βt baseline offsets [5]. In contrast, the ‘delta-c’ approach does not seem to allow the equivalent treatment of these orthogonal baseline parameters. In conjunction with the ‘delta-c’ approach, the temporal offsets must be eliminated empirically by ‘aligning’ the scans in small regions either outside the solution column or where the solutes are believed to be depleted. Clearly, this is a source of potential bias.
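For simultaneous br and βt offsets, the elimination of the linear parameters can be sketched with the generic least-squares identity for a two-way additive offset. This is an illustration only and is not claimed to reproduce the exact formulation of [5]: the random array `resid` stands in for art − Mrt({p}) at some trial parameters, and the brute-force design matrix is included merely to check the closed form.

```python
import numpy as np

rng = np.random.default_rng(2)
n_radii, n_scans = 12, 8
# Hypothetical stand-in for a_rt - M_rt(p) at trial parameters p
resid = rng.standard_normal((n_radii, n_scans))

# Closed form: subtract radial-wise and scan-wise means, add back the grand mean
row_mean = resid.mean(axis=1, keepdims=True)   # average over time at each radius
col_mean = resid.mean(axis=0, keepdims=True)   # average over radius in each scan
centered = resid - row_mean - col_mean + resid.mean()
chi2_closed = np.sum(centered**2)

# Brute force: linear least squares over all b_r and beta_t together
n_points = n_radii * n_scans
radius_index, scan_index = np.divmod(np.arange(n_points), n_scans)
design = np.zeros((n_points, n_radii + n_scans))
design[np.arange(n_points), radius_index] = 1.0            # columns for b_r
design[np.arange(n_points), n_radii + scan_index] = 1.0    # columns for beta_t
offsets, *_ = np.linalg.lstsq(design, resid.ravel(), rcond=None)
chi2_lstsq = np.sum((resid.ravel() - design @ offsets) ** 2)
print(chi2_closed, chi2_lstsq)
```

The design matrix is rank deficient, because a constant can be shifted between the br and the βt without changing the fit; `np.linalg.lstsq` returns one of the equivalent solutions, and the minimized sum of squares is unaffected by this ambiguity.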

In the practice of SV analytical ultracentrifugation, the higher statistical errors of the ‘delta-c’ approach should be relevant for problems such as the determination of trace aggregates in protein pharmaceuticals [30–35], or the estimation of kinetic rate constants from sedimentation profiles of reacting systems [13, 19], both problems where the boundary shapes need to be assessed to a level of detail where the signal/noise ratio becomes limiting. However, perhaps the most important conclusion of the present work is that there is no difference in the ‘model-dependence’ of the two approaches, and that making explicit the baseline profiles that are equally part of both approaches provides a useful diagnostic tool. The theoretical difference in their statistical performance may be small compared to the increase in parameter uncertainty arising from the arbitrary exclusion of data from consideration (subset analysis), and from sources of systematic error such as convection, unaccounted hydrodynamic non-ideality, signal offsets from buffer mismatch (Zhao et al., submitted), bias from constraining the meniscus parameter to incorrect values, and/or systematic errors arising from unaccounted time-resolution of the absorbance scanner [12].

Acknowledgments

This work was supported by the Intramural Research Program of the National Institute of Biomedical Imaging and Bioengineering, NIH.

Footnotes

If one were interested in exploring the uncertainties of the baseline parameters, this principle is incompatible with pre-constraining the baselines to non-optimal parameters by the commonly used projection method.


References

- 1. Howlett GJ, Minton AP, Rivas G. Analytical ultracentrifugation for the study of protein association and assembly. Curr Opin Chem Biol. 2006;10:430–6. doi: 10.1016/j.cbpa.2006.08.017.

- 2. Schuck P. Sedimentation velocity in the study of reversible multiprotein complexes. In: Schuck P, editor. Protein Interactions: Biophysical Approaches for the study of complex reversible systems. Springer; New York: 2007. pp. 469–518.

- 3. Cole JL, Lary JW, Moody TP, Laue TM. Analytical ultracentrifugation: sedimentation velocity and sedimentation equilibrium. Methods Cell Biol. 2008;84:143–79. doi: 10.1016/S0091-679X(07)84006-4.

- 4. Stafford WF. Sedimentation Boundary Analysis of Interacting Systems; Use of the Apparent Sedimentation Coefficient Distribution Function. In: Modern Analytical Ultracentrifugation. Birkhäuser; Boston: 1994. pp. 119–137.

- 5. Schuck P, Demeler B. Direct sedimentation analysis of interference optical data in analytical ultracentrifugation. Biophys J. 1999;76:2288–2296. doi: 10.1016/S0006-3495(99)77384-4.

- 6. Kar SR, Kingsbury JS, Lewis MS, Laue TM, Schuck P. Analysis of transport experiments using pseudo-absorbance data. Anal Biochem. 2000;285:135–142. doi: 10.1006/abio.2000.4748.

- 7. Stafford WF. Boundary analysis in sedimentation transport experiments: a procedure for obtaining sedimentation coefficient distributions using the time derivative of the concentration profile. Anal Biochem. 1992;203:295–301. doi: 10.1016/0003-2697(92)90316-y.

- 8. Stafford WF. Boundary analysis in sedimentation velocity experiments. Methods Enzymol. 1994;240:478–501. doi: 10.1016/s0076-6879(94)40061-x.

- 9. Stafford WF. 2009 http://www.bbri.org/faculty/stafford/Thumb-8.html.

- 10. Philo JS. A method for directly fitting the time derivative of sedimentation velocity data and an alternative algorithm for calculating sedimentation coefficient distribution functions. Anal Biochem. 2000;279:151–163. doi: 10.1006/abio.2000.4480.

- 11. Schuck P, Rossmanith P. Determination of the sedimentation coefficient distribution by least-squares boundary modeling. Biopolymers. 2000;54:328–341. doi: 10.1002/1097-0282(20001015)54:5<328::AID-BIP40>3.0.CO;2-P.

- 12. Brown PH, Balbo A, Schuck P. On the analysis of sedimentation velocity in the study of protein complexes. Eur Biophys J. 2009;38:1079–1099. doi: 10.1007/s00249-009-0514-1.

- 13. Stafford WF, Sherwood PJ. Analysis of heterologous interacting systems by sedimentation velocity: curve fitting algorithms for estimation of sedimentation coefficients, equilibrium and kinetic constants. Biophys Chem. 2004;108:231–243. doi: 10.1016/j.bpc.2003.10.028.

- 14. Ruhe A, Wedin PÅ. Algorithms for separable nonlinear least squares problems. SIAM Review. 1980;22:318–337.

- 15. Schuck P. Size distribution analysis of macromolecules by sedimentation velocity ultracentrifugation and Lamm equation modeling. Biophys J. 2000;78:1606–1619. doi: 10.1016/S0006-3495(00)76713-0.

- 16. Schuck P, Perugini MA, Gonzales NR, Howlett GJ, Schubert D. Size-distribution analysis of proteins by analytical ultracentrifugation: strategies and application to model systems. Biophys J. 2002;82:1096–1111. doi: 10.1016/S0006-3495(02)75469-6.

- 17. Balbo A, Minor KH, Velikovsky CA, Mariuzza R, Peterson CB, Schuck P. Studying multi-protein complexes by multi-signal sedimentation velocity analytical ultracentrifugation. Proc Natl Acad Sci U S A. 2005;102:81–86. doi: 10.1073/pnas.0408399102.

- 18. Brown PH, Schuck P. Macromolecular Size-And-Shape Distributions by Sedimentation Velocity Analytical Ultracentrifugation. Biophys J. 2006;90:4651–4661. doi: 10.1529/biophysj.106.081372.

- 19. Dam J, Velikovsky CA, Mariuzza R, Urbanke C, Schuck P. Sedimentation velocity analysis of protein-protein interactions: Lamm equation modeling and sedimentation coefficient distributions c(s). Biophys J. 2005;89:619–634. doi: 10.1529/biophysj.105.059568.

- 20. Dam J, Schuck P. Calculating sedimentation coefficient distributions by direct modeling of sedimentation velocity profiles. Methods Enzymol. 2004;384:185–212. doi: 10.1016/S0076-6879(04)84012-6.

- 21. Vistica J, Dam J, Balbo A, Yikilmaz E, Mariuzza RA, Rouault TA, Schuck P. Sedimentation equilibrium analysis of protein interactions with global implicit mass conservation constraints and systematic noise decomposition. Anal Biochem. 2004;326:234–256. doi: 10.1016/j.ab.2003.12.014.

- 22. Philo JS. Improved methods for fitting sedimentation coefficient distributions derived by time-derivative techniques. Anal Biochem. 2006;354:238–46. doi: 10.1016/j.ab.2006.04.053.

- 23. Demeler B, Brookes E. Modeling conformational and molecular weight heterogeneity with analytical ultracentrifugation experiments. In: Demeler B, Brookes E, editors. From Computational Biophysics to Systems Biology. Vol. 40. John von Neumann Institute for Computing; Juelich: 2008. pp. 73–76.

- 24. Philo JS. 2009 http://jphilo.mailway.com/dcdt+.htm.

- 25. Stafford WF 3rd. Protein-protein and ligand-protein interactions studied by analytical ultracentrifugation. Methods Mol Biol. 2009;490:83–113. doi: 10.1007/978-1-59745-367-7_4.

- 26. Bevington PR, Robinson DK. Data Reduction and Error Analysis for the Physical Sciences. McGraw-Hill; New York: 1992.

- 27. Schuck P. On the analysis of protein self-association by sedimentation velocity analytical ultracentrifugation. Anal Biochem. 2003;320:104–124. doi: 10.1016/s0003-2697(03)00289-6.

- 28. Johnson ML, Straume M. Comments on the analysis of sedimentation equilibrium experiments. In: Johnson ML, Straume M, editors. Modern Analytical Ultracentrifugation. Birkhäuser; Boston: 1994. pp. 37–65.

- 29. Ansevin AT, Roark DE, Yphantis DA. Improved ultracentrifuge cells for high-speed sedimentation equilibrium studies with interference optics. Anal Biochem. 1970;34:237–261. doi: 10.1016/0003-2697(70)90103-x.

- 30. Berkowitz SA. Role of analytical ultracentrifugation in assessing the aggregation of protein biopharmaceuticals. AAPS J. 2006;8:E590–605. doi: 10.1208/aapsj080368.

- 31. Liu J, Andya JD, Shire SJ. A critical review of analytical ultracentrifugation and field flow fractionation methods for measuring protein aggregation. AAPS J. 2006;8:E580–9. doi: 10.1208/aapsj080367.

- 32. Gabrielson JP, Randolph TW, Kendrick BS, Stoner MR. Sedimentation velocity analytical ultracentrifugation and SEDFIT/c(s): Limits of quantitation for a monoclonal antibody system. Anal Biochem. 2007;361:24–30. doi: 10.1016/j.ab.2006.11.012.

- 33. Pekar A, Sukumar M. Quantitation of aggregates in therapeutic proteins using sedimentation velocity analytical ultracentrifugation: practical considerations that affect precision and accuracy. Anal Biochem. 2007;367:225–37. doi: 10.1016/j.ab.2007.04.035.

- 34. Brown PH, Balbo A, Schuck P. A Bayesian approach for quantifying trace amounts of antibody aggregates by sedimentation velocity analytical ultracentrifugation. AAPS J. 2008;10:481–93. doi: 10.1208/s12248-008-9058-z.

- 35. Gabrielson JP, Arthur KK, Kendrick BS, Randolph TW, Stoner MR. Common excipients impair detection of protein aggregates during sedimentation velocity analytical ultracentrifugation. J Pharm Sci. 2009;98:50–62. doi: 10.1002/jps.21403.