Abstract

This study examined whether the measurement constructs behind reading-related tests for struggling adult readers are similar to what is known about measurement constructs for children. The sample included 371 adults reading between the third and fifth grade levels, including 127 males and 153 English Speakers of Other Languages. Using measures of skills and subskills, confirmatory factor analyses were conducted to test child-based theoretical measurement models of reading: an achievement model of reading skills, a core deficit model of reading subskills, and an integrated model containing achievement and deficit variables. Although the findings identify the best-fitting measurement models, the main contribution of this paper is its description of the difficulties encountered when applying child-based assumptions to the development of measurement models for struggling adult readers.

As indicated by the 2003 National Assessment of Adult Literacy, 43% of adults in the United States have difficulty reading materials encountered in their homes, neighborhoods, and workplaces (Kutner et al., 2007). Because there is a paucity of research on struggling adult readers, researchers interested in investigating their reading skills and processes rely on the extensive literature describing children's reading development (e.g., Greenberg, Ehri, & Perin, 1997; Kruidenier, 2002). The appropriateness of this reliance has not been tested; therefore, the purpose of this study is to evaluate child-based measurement models of reading constructs with struggling adult readers. Such an evaluation will help elucidate reading skills and subskills, their interrelationships, and their measures for this specific group of struggling readers. Three measurement models are investigated: a reading achievement skill model, a reading subskill-based model referred to as the core deficit model, and an integrated model of both reading achievement skills and reading subskills. Reading achievement skills, including word reading, nonword reading, reading fluency, and reading comprehension, are important areas of reading performance. Reading subskills are underlying processes, including phonological awareness, rapid automatic naming (RAN), and oral vocabulary, that affect overall reading performance but do not involve actual reading.

This study used confirmatory factor analysis to test child-based theoretical measurement models of reading constructs with struggling adult readers. Measurement models, as tested with confirmatory factor analysis, specify the number of factors (or constructs), reveal how the factors correlate, and show how the indicators (or observed variables) relate to the factors. Based on theory, these models are specified a priori and then tested for fit. When a tested model meets fit criteria, it is an indication that the observed variables are measuring the constructs as specified in the model. Measurement model assessment is a crucial data analysis step prior to developing causal models, especially for populations for which there is so little research that it is impossible to know whether the measures used actually form the expected constructs. One such population is that of struggling adult readers. The limited current research in adult literacy is based on previously conducted reading research with children. Measurement modeling of critical reading skills and subskills for struggling adult readers will therefore help determine whether reading skills and subskills and their associated measures form constructs as they commonly do with children. Findings from this type of research will help indicate whether the reliance adult literacy researchers place on child-based reading development theory is appropriate.
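For readers less familiar with CFA, the measurement models described above can be summarized by the standard linear factor model. The notation below (observed scores x, latent factors ξ, loadings Λ, factor covariances Φ, error covariances Θ_δ) is the conventional LISREL-style notation and is introduced here only for illustration; the last two lines assume the common special case in which each test loads on a single factor and measurement errors are uncorrelated.

```latex
\begin{aligned}
\mathbf{x} &= \boldsymbol{\Lambda}\,\boldsymbol{\xi} + \boldsymbol{\delta}, \\
\boldsymbol{\Sigma} &= \boldsymbol{\Lambda}\,\boldsymbol{\Phi}\,\boldsymbol{\Lambda}^{\top} + \boldsymbol{\Theta}_{\delta}, \\
\sigma_{ij} &= \lambda_i \lambda_j \,\phi_{kl} \quad (i \neq j;\ x_i \text{ loads on } \xi_k,\ x_j \text{ loads on } \xi_l), \\
\sigma_{ii} &= \lambda_i^{2}\,\phi_{kk} + \theta_{ii}.
\end{aligned}
```

Fit criteria then quantify how closely this model-implied covariance matrix Σ reproduces the observed covariance matrix of the test scores: two tests assigned to the same construct should covary in proportion to the product of their loadings, and tests assigned to different constructs should covary only through the factor correlation.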

Reading Achievement Measurement Model

Children's reading literature indicates that reading achievement skills such as word reading, nonword reading, reading fluency, and reading comprehension are important components of reading (National Institute of Child Health and Human Development, 2000). There is limited research on these achievement constructs with struggling adult readers. Researchers comparing struggling adult readers to children often use word reading to match participants from the two groups (e.g., Greenberg et al., 1997; Read & Ruyter, 1985; Thompkins & Binder, 2003). The fact that the adults are reading at levels comparable to children highlights the deficits these adults have in word reading. However, compared to children with similar word reading levels, adult readers have a relative strength in orthographic skills such as sight word reading (Greenberg et al.; Thompkins & Binder).

Despite the relative strength in sight word reading, many adults struggling with reading have significant deficits in nonword reading. Comparisons of adults and children matched on word reading levels reveal that the adults perform worse than the children on nonword reading (Greenberg et al., 1997). Greenberg and colleagues hypothesized that the poor nonword reading skills of struggling adult readers resemble those of children with reading disabilities. In fact, many struggling adult readers with deficits in nonword reading report having a learning disability (Strucker, Yamamoto, & Kirsch, 2007).

Reading fluency also seems to be problematic for struggling adult readers (Winn, Skinner, Oliver, Hale, & Ziegler, 2006). Mudd (1987) compared struggling adult readers to two groups of reading age-matched children. One group of children included skilled readers whose actual age was less than or equal to their reading age while the other group of children included less skilled readers whose actual age was at least two months greater than their reading age. Mudd found that the struggling adults read faster than the less skilled children but slower than the more skilled children.

Reading comprehension also poses a problem for adults struggling with reading. In fact, struggling adult readers have difficulty on a variety of reading comprehension tasks that resemble real-world uses of literacy (Kutner, Greenberg, & Baer, 2006). Chall (1994) hypothesized that adults struggling with reading comprehension may simply lack the basic skills such as word reading, nonword reading, and reading fluency necessary to read at a level required for comprehension.

Reading achievement skills including word reading, nonword reading, reading fluency, and reading comprehension are vital to overall reading performance. However, there is limited research on these reading achievement constructs for struggling adult readers. One purpose of this study is to analyze how a measurement model with constructs of word reading, nonword reading, reading fluency, and reading comprehension and their associated observed measures fits for struggling adult readers.

Core Deficit Measurement Model

There is some evidence that struggling adult readers have deficits in the same reading subskills that differentiate children struggling with reading: phonological awareness, RAN, and oral vocabulary. For example, researchers comparing struggling adult readers to typically developing children matched on reading age found that the struggling adults possessed poorer phonological skills (Greenberg et al., 1997; Thompkins & Binder, 2003). Read and Ruyter's (1985) work proposed that the phonological skills of struggling adults are similar to those of children with reading disabilities. Similar to phonological skills, RAN also appears to be a deficit for struggling adult readers. In a study investigating the naming speed of adults who were good and poor readers, Sabatini (2002) found that compared to the good readers, adults reading at lower levels had slower naming rates.

Prior to 1980, researchers generally thought that adults struggling with reading would not necessarily have deficits in oral language because these adults had accumulated a lifetime of oral language experiences (Hoffman, 1978). Since 1980, some research has emerged indicating that struggling adult readers are actually weak in oral language skills. For example, Greenberg and colleagues (1997) found very low receptive vocabulary skills for adults reading at the third through fifth grade levels, with age-based norms placing the adults at the first percentile rank. They also found that the adults reading at the third and fourth grade levels exhibited better vocabulary skills than reading-level-matched children. However, the vocabulary advantage for the adults disappeared when comparing adults and children reading at the fifth grade level. Greenberg and colleagues hypothesized that vocabulary growth at fifth grade and beyond may be greatly influenced by reading experience; therefore, adults lacking reading skills may have deficits in vocabulary.

While we have some evidence that struggling adult readers, like children struggling with reading, perform poorly on phonological awareness, RAN, and vocabulary tasks, we do not know whether tasks measuring these core deficit skills form constructs as they do with children. This uncertainty about whether constructs based on children's reading theory can be modeled with struggling adult readers leads to the second purpose of this research: to determine how well the core deficit measurement model, with constructs of phonological awareness, RAN, and oral vocabulary and their associated measures, fits for struggling adult readers.

Integrated Measurement Model

The achievement model of reading skills and the core deficit model of reading subskills each include different tasks important to overall reading. However, reading researchers indicate that reading skills and subskills work together in integrated models of reading (Adams, 1990; Vellutino, Tunmer, Jaccard, & Chen, 2007). Prior to testing causal pathways in integrated models, a measurement model must first be verified. What remains unknown and will be addressed with the third purpose is how such an integrated measurement model including assessments of reading skills from the achievement model and reading subskills from the core deficit model fits for struggling adult readers.

Nonnative English Speaking Adults Struggling with Reading

The heterogeneity of struggling adult readers complicates the investigation of their reading skills and subskills. In the United States, the population of adults struggling with reading consists of both native English speakers (NES) as well as English Speakers of Other Languages (ESOL) (Kutner et al., 2007). Therefore, studies on struggling adult readers should include an examination of the possible differences between NES and ESOL participants.

There are many gaps in the research on struggling adult readers who are ESOL (Kruidenier, 2002). The limited research that has been conducted with this special population indicates that when comparing struggling adult readers who are ESOL and NES, ESOL readers have different profiles of relative strengths and weaknesses (Strucker et al., 2007). Unlike NES readers, the ESOL group tends to have a relative strength in decoding (Chall, 1994; Strucker et al.). This relative strength in decoding is highlighted by research investigating the errors in word recognition of NES and ESOL struggling adult readers matched on nonword reading. Even though the two groups exhibited the same decoding skills, the ESOL readers relied more on their decoding skills for word reading as evidenced by their abundance of phonetically plausible incorrect responses (Davidson & Strucker, 2002).

Despite relative strengths in decoding, ESOL struggling adult readers have extensive difficulties with reading and tend to be overrepresented in the lowest ranks of comprehension skills (Kutner et al., 2007). Their comprehension difficulties may be due to their limited experience with English and their resulting poor English vocabularies. Specifically, their limited vocabularies may hinder their overall reading ability even when they do not have significant problems with decoding (Chall, 1994; Strucker et al., 2007).

Summary and Research Questions

While there are many gaps in the research literature on struggling adult readers, some research indicates that struggling adult readers perform poorly on achievement skills of word reading (Greenberg et al., 1997), nonword reading (Greenberg et al.), reading fluency (Mudd, 1987), and reading comprehension (Kutner et al., 2007), with particularly poor performance on nonword reading. Furthermore, research indicates that struggling adult readers, like children struggling with reading, also have difficulties in the core deficit subskills of phonological awareness (Greenberg et al.), RAN (Sabatini, 2002), and oral vocabulary (Greenberg et al.).

The study of struggling adult readers is complicated by the prevalence of ESOL readers. Compared with the NES group, the ESOL group tends to have a different profile of strengths and weaknesses, including a relative strength in decoding and large deficits in oral vocabulary and comprehension (Strucker et al., 2007). What remains unknown is whether the tasks commonly used to assess constructs from the achievement, core deficit, and integrated measurement models will measure these constructs for NES and ESOL struggling adult readers.

Because of the lack of research on struggling adult readers, adult literacy researchers rely, perhaps inappropriately, on constructs and measures based on children's reading research. The purpose of this study was to examine measurement models of constructs behind tests of reading skills and subskills for struggling adult readers to determine whether the constructs prevalent in children's reading research are evident in struggling adult readers. This research used confirmatory factor analyses to test three child-based measurement models of reading constructs with adults reading between the third and fifth grade levels. The models include: 1) an achievement measurement model with constructs of word reading, nonword reading, reading fluency, and reading comprehension and their associated assessments; 2) a core deficit measurement model with constructs of phonological awareness, RAN, and oral vocabulary and their associated assessments; and 3) an integrated measurement model combining the constructs and assessments from the achievement and core deficit models. For each of the three measurement models, the following questions were investigated:

How does the measurement model fit for NES struggling adult readers?

How does the measurement model fit for ESOL struggling adult readers?

Is the measurement model different for struggling adult readers who are ESOL compared to those who are NES?

Based on reading research with children, one might expect these measurement models to fit as they include constructs commonly studied with children. However, due to the lack of research in adult literacy, it is unknown how these measurement models will fit.

Method

Participants

This study used reading assessment data from 371 struggling adult readers ages 16 and older who attended adult literacy programs. The participants included 218 NES and 153 ESOL individuals who were recruited from adult literacy programs in a large southeastern city and volunteered to take part in a study investigating the effectiveness of various instructional strategies. To participate in this larger study, participants were screened and invited to take part if they possessed word reading skills from the third through the fifth grade levels as measured by the Letter-Word Identification subtest of the Woodcock-Johnson III: Tests of Achievement (WJ-III; Woodcock, McGrew, & Mather, 2001). Table 1 includes demographic characteristics of the participants. These characteristics are representative of the adult literacy programs from which the participants were recruited.

Table 1.

Frequencies and Descriptive Statistics for Demographic Characteristics of the NES (n = 218) and ESOL (n = 153) Participants

| Sample characteristic | Category | NES | ESOL |
|---|---|---|---|
| Gender (frequency) | Male | 62 | 65 |
| | Female | 156 | 88 |
| Race (frequency) | Black | 202 | 46 |
| | Hispanic | 3 | 59 |
| | Asian | 0 | 39 |
| | White | 10 | 9 |
| | Other/Mixed | 3 | 0 |
| Word reading level (frequency) | 3rd grade | 95 | 49 |
| | 4th grade | 74 | 48 |
| | 5th grade | 49 | 56 |
| Age (years) | Range | 16–72 | 16–62 |
| | Mean | 34.89 | 31.45 |
| | Standard deviation | 15.70 | 10.61 |
| Years of education | Range | 5–14 | 0–21 |
| | Mean | 10.08 | 11.67 |
| | Standard deviation | 1.55 | 3.89 |

Measures

Each measure was selected based on its psychometric properties and the age range of intended examinees. While each test has excellent psychometric properties for its norm group, none of the norm groups included samples of struggling adult readers. Because it is unclear whether standard scores are appropriate for struggling adult readers and because some assessments do not have standard scores for all ages included in this investigation, raw scores were used for all the analyses, unless otherwise specified.

For the achievement model, data were analyzed for the following assessments:

Word reading

To assess word reading skills, two different tests were administered: the WJ-III Letter-Word Identification subtest (Woodcock et al., 2001) and the Adams and Huggins (1985) Sight Word Reading Test. The WJ-III Letter-Word Identification subtest was normed on people ages 2 to 80+ with a reliability of .94. This subtest requires examinees to read lists of words that gradually increase in difficulty. The Adams and Huggins Sight Word Reading Test is an unstandardized test assessing examinees' ability to read words with atypical spellings.

Word reading and reading fluency

The Sight Word Efficiency subtest of the Test of Word Reading Efficiency (TOWRE; Torgesen & Wagner, 1999) was administered. This subtest was normed on people ages 6 through 24 with reliability of .93. In this assessment, examinees read as many words as they can in 45 seconds from a list of words that continually increases in difficulty.

Nonword reading

To assess nonword reading skills, the WJ-III Word Attack subtest was administered (Woodcock et al., 2001). WJ-III Word Attack was normed on people ages 4 to 80+ with a reliability of .87. For the first few items, examinees demonstrate basic sound-symbol correspondences. For the remaining items, examinees read aloud progressively more difficult nonwords.

Nonword reading and reading fluency

The Phonemic Decoding Efficiency subtest of the TOWRE (Torgesen & Wagner, 1999) was administered. This subtest was normed on people ages 6 through 24 with reliability of .94. In this assessment, examinees read as many nonwords as they can in 45 seconds from a list of nonwords that continually increases in difficulty.

Reading fluency

To assess reading fluency, two different tests were administered: the WJ-III Reading Fluency subtest (Woodcock et al., 2001), which was normed on people ages 6 to 80+ with a reliability of .90, and the Gray Oral Reading Tests – Fourth Edition (GORT-4; Wiederholt & Bryant, 2001), which was normed on people ages 6 through 18 with a reliability of .93. In the WJ-III Reading Fluency subtest, examinees silently read as many statements as they can in three minutes, decide while reading whether each statement is true or false, and mark their decisions in their test booklets. In the GORT-4, examinees read stories aloud while the examiner marks errors and times the reading; the errors and times are then converted into fluency scores.

Reading comprehension

Two measures assessing reading comprehension were used: the WJ-III Passage Comprehension subtest (Woodcock et al., 2001), which was normed on people ages 2 to 80+ with a reliability of .88, and the GORT-4 (Wiederholt & Bryant, 2001), which was normed on people ages 6 through 18 with a reliability of .97. The WJ-III Passage Comprehension subtest is a cloze reading comprehension procedure in which the examinee reads a passage silently and supplies a word to fill in a blank in the passage. The GORT-4 includes increasingly difficult passages, each with five multiple-choice comprehension questions. Examinees read a story aloud, listen and follow along while the comprehension questions and answer options are read to them, and select an answer option.

For the core deficit model, data were analyzed for the following assessments:

Phonological awareness

The Elision and Blending Words subtests of the Comprehensive Test of Phonological Processing (CTOPP; Wagner, Torgesen, & Rashotte, 1999) were used to assess phonological awareness. The Elision subtest was normed on people ages 5 to 24 with a reliability of .89. This subtest assesses the ability to manipulate sounds in words. The examinee listens to an orally presented word, says the word, listens to an orally presented sound in that word, removes that sound from the word, and says the resulting word. The Blending Words subtest was normed on people ages 5 to 24 with a reliability of .84. This subtest assesses the ability to combine sounds to form words. The examinee listens to orally presented individual sounds in a word, combines those sounds, and says the resulting word. For the NES group (but not the ESOL group), CTOPP Elision had questionable normality, with a skewness of 1.33 and a kurtosis of 6.01. A square root transformation (the square root of the raw score plus 1) reduced the skewness to .04 and the kurtosis to 2.98. Therefore, the transformed CTOPP Elision variable was used in analyses for the NES group, and the original raw CTOPP Elision score was used for the ESOL group.
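The normality check and transformation described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: it uses population moment estimators and assumes the reported kurtosis is the non-excess (Pearson) version, for which a normal distribution gives 3 (the paper does not state which convention was used). The scores are hypothetical, not study data.

```python
import math
from statistics import fmean


def moments(xs: list[float]) -> tuple[float, float]:
    """Return (skewness, kurtosis) from population moments.

    Kurtosis is the non-excess (Pearson) version: a normal distribution
    gives 3.0. Which convention the study used is an assumption here.
    """
    n = len(xs)
    m = fmean(xs)
    m2 = sum((x - m) ** 2 for x in xs) / n
    m3 = sum((x - m) ** 3 for x in xs) / n
    m4 = sum((x - m) ** 4 for x in xs) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2


def sqrt_plus_one(xs: list[float]) -> list[float]:
    """Elision transformation: square root of (raw score + 1)."""
    return [math.sqrt(x + 1) for x in xs]


# Hypothetical right-skewed raw Elision scores (NOT the study's data).
raw = [2, 3, 3, 4, 4, 4, 5, 5, 6, 6, 7, 8, 10, 14, 19]
skew_raw, kurt_raw = moments(raw)
skew_t, kurt_t = moments(sqrt_plus_one(raw))
print(f"raw:        skew = {skew_raw:.2f}, kurtosis = {kurt_raw:.2f}")
print(f"sqrt(x+1):  skew = {skew_t:.2f}, kurtosis = {kurt_t:.2f}")
```

Adding 1 before taking the square root keeps zero scores defined and compresses the long right tail, which is why the transformation reduces positive skew.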

Rapid automatic naming (RAN)

The Rapid Letter Naming and Rapid Color Naming subtests of the CTOPP (Wagner et al., 1999) were used to evaluate RAN. Each subtest was normed on people ages 5 to 24 with a reliability of .82. In each subtest, examinees name the targets (lowercase letters in Rapid Letter Naming and colored squares in Rapid Color Naming) as quickly as they can while being timed. The CTOPP Rapid Letter Naming and Rapid Color Naming times were converted to rate scores by taking the inverse of the raw times. Rate scores are advantageous because, unlike times, higher values indicate better performance.
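The time-to-rate conversion is a single reciprocal, sketched below. The unit (seconds) is an assumption, since the text does not state it, and the times are hypothetical.

```python
def naming_rate(seconds: float) -> float:
    """Convert a rapid-naming completion time into a rate score (1 / time).

    A faster completion (smaller time) yields a larger rate, so higher
    rate scores indicate better performance.
    """
    if seconds <= 0:
        raise ValueError("completion time must be positive")
    return 1.0 / seconds


# Hypothetical completion times for three examinees (not study data).
times = [25.0, 31.25, 50.0]
rates = [naming_rate(t) for t in times]
print(rates)  # [0.04, 0.032, 0.02]
```

Because the reciprocal is monotonically decreasing, the transformation reverses the ordering of examinees without changing which examinee is fastest, so higher scores mean better performance on every measure in the battery.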

Oral vocabulary

To assess receptive vocabulary, the Peabody Picture Vocabulary Test – Third Edition (PPVT-III; Dunn & Dunn, 1998) was administered. The PPVT-III was normed on people ages 2 to 90+ with reliability of .95. In the PPVT-III, the examinee looks at a template with four pictures, listens to the word presented orally by the examiner, and chooses the picture that best represents the word. To assess expressive vocabulary, the Boston Naming Test (BNT; Kaplan, Goodglass, & Weintraub, 2001) was administered. In this unstandardized assessment, the examinee orally labels individually presented drawings. If the examinee does not know or answers incorrectly, the examiner provides cues including a stimulus cue which states information about the item in the picture and then a phonemic cue stating the beginning sound of the target response. The raw score used for this study was the total number correct which includes items answered correctly with initial presentation or with the stimulus cue.
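To make the three specifications concrete, the sketch below encodes which measures load on which constructs and counts the degrees of freedom each CFA would have. The indicator labels are hypothetical shorthand. The TOWRE cross-loadings are inferred from the "Word reading and reading fluency" and "Nonword reading and reading fluency" headings above, and the identification choices (factor variances fixed at 1, all factors correlated, uncorrelated errors) are assumptions rather than the authors' documented specification.

```python
# Indicator-to-factor assignments read off the Measures headings.
# ASSUMPTION: the TOWRE subtests cross-load on a decoding factor and on
# reading fluency; the authors' exact specification may differ.
ACHIEVEMENT = {
    "word_reading": ["WJ_LWID", "SightWordReading", "TOWRE_SWE"],
    "nonword_reading": ["WJ_WordAttack", "TOWRE_PDE"],
    "reading_fluency": ["WJ_ReadingFluency", "GORT_Fluency", "TOWRE_SWE", "TOWRE_PDE"],
    "reading_comprehension": ["WJ_PassageComp", "GORT_Comp"],
}
CORE_DEFICIT = {
    "phonological_awareness": ["CTOPP_Elision", "CTOPP_Blending"],
    "ran": ["CTOPP_LetterRate", "CTOPP_ColorRate"],
    "oral_vocabulary": ["PPVT", "BNT"],
}
INTEGRATED = {**ACHIEVEMENT, **CORE_DEFICIT}


def cfa_degrees_of_freedom(model: dict[str, list[str]]) -> int:
    """df = unique variances/covariances minus free parameters.

    Assumes factor variances fixed at 1, all factor pairs correlated,
    and one uncorrelated error variance per observed indicator.
    """
    indicators = {ind for inds in model.values() for ind in inds}
    p = len(indicators)                                 # observed tests
    k = len(model)                                      # latent factors
    moments = p * (p + 1) // 2                          # unique (co)variances
    loadings = sum(len(inds) for inds in model.values())  # counts cross-loadings
    factor_corrs = k * (k - 1) // 2
    error_vars = p
    return moments - (loadings + factor_corrs + error_vars)


for name, model in [("achievement", ACHIEVEMENT),
                    ("core deficit", CORE_DEFICIT),
                    ("integrated", INTEGRATED)]:
    print(f"{name}: df = {cfa_degrees_of_freedom(model)}")
```

Under these assumptions the achievement, core deficit, and integrated models have 19, 6, and 67 degrees of freedom, respectively. Fixing one loading per factor to 1 instead of fixing the factor variance gives the same counts; freeing correlated errors would reduce them.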

Procedure

The tests were individually administered by trained graduate research assistants in the following order: PPVT-III, BNT, WJ-III Reading Fluency, WJ-III Passage Comprehension, WJ-III Word Attack, GORT-4 Fluency and Comprehension, TOWRE Sight Word Efficiency, TOWRE Phonemic Decoding Efficiency, Adams and Huggins (1985) Sight Word Reading Test, CTOPP Elision, CTOPP Blending Words, CTOPP Rapid Letter Naming, and CTOPP Rapid Color Naming. Test order was based on the authors' previous testing experience with this population. For example, tests with pictures were administered first, task duration and demand were balanced to vary the pace of testing, and examinee fatigue was considered. Testing was completed in one session lasting one and a half to two hours with frequent breaks.

Results

The means, standard deviations, and reliabilities of each assessment for the NES and ESOL groups are shown in Table 2. To assess reliability, coefficient alphas (Cronbach, 1951) were computed for all untimed subtests with available item-level data. For the remaining tests, test-retest reliability is reported, with approximately four months between test and retest. In addition, Table 2 presents the results of one-way ANOVAs comparing the NES and ESOL groups on each subtest. Based on effect sizes greater than .20, the NES group performed better on the PPVT-III, BNT, WJ-III Passage Comprehension subtest, and Sight Word Reading Test, while the ESOL group performed better on the TOWRE Phonemic Decoding Efficiency subtest.
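Coefficient alpha can be computed from item-level data as sketched below. The data layout (one list per item, one entry per examinee) and the scores are hypothetical; the paper does not specify the software used.

```python
from statistics import pvariance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Coefficient alpha: k/(k-1) * (1 - sum of item variances / total-score variance).

    items[i][j] is examinee j's score on item i. Population variances are
    used throughout; the n vs. n - 1 choice cancels in the ratio.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # each examinee's total
    sum_item_var = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_var / pvariance(totals))


# Hypothetical right/wrong (1/0) scores: 3 items x 4 examinees.
items = [
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [1, 0, 0, 0],
]
print(round(cronbach_alpha(items), 2))  # 0.75
```

Alpha requires scores on individual items, which is why it could be computed only for untimed subtests with item-level records; timed tests such as the TOWRE and RAN tasks yield a single score per administration, so test-retest correlations were used instead.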

Table 2.

Means, Standard Deviations, and Reliability Estimates for Tests for NES (n = 218) and ESOL Participants (n = 153), and Test Statistics for NES and ESOL Mean Test Score Comparisons

| Measure | NES M | NES SD | NES reliability | ESOL M | ESOL SD | ESOL reliability | F | Effect size |
|---|---|---|---|---|---|---|---|---|
| PPVT | 134.06 | 18.01 | .95ᵈ | 94.07 | 31.99 | .98ᵈ | 234.75*** | .39 |
| BNT | 35.78 | 7.27 | .89ᵈ | 19.31 | 8.84 | .92ᵈ | 385.38*** | .51 |
| WJ Passage Comprehension | 25.97 | 4.19 | .77ᵈ | 19.20 | 3.85 | .79ᵈ | 251.38*** | .41 |
| GORT Comprehension | 24.76 | 9.73 | .58ᵉ | 19.78 | 9.48 | .55ᵉ | 24.05*** | .06 |
| WJ Letter-Word Identification | 49.41 | 4.46ᶜ | .75ᵈ | 50.94 | 4.59ᶜ | .69ᵈ | 10.37** | .03 |
| Sight Word Reading Test | 28.57 | 7.51 | .87ᵈ | 20.88 | 7.19 | .86ᵈ | 97.67*** | .21 |
| TOWRE Sight Word | 64.06 | 11.32 | .84ᵉ | 62.41 | 10.99 | .75ᵉ | 1.96 | .01 |
| WJ Reading Fluency | 38.45 | 9.47 | .82ᵉ | 31.54 | 9.93 | .83ᵉ | 46.10*** | .11 |
| GORT Fluency | 43.88 | 12.86 | .65ᵉ | 38.22 | 11.93 | .74ᵉ | 18.46*** | .05 |
| CTOPP Rapid Letter Naming Rateᵃ | .032 | .008 | .83ᵉ | .031 | .007 | .72ᵉ | 3.90* | .01 |
| CTOPP Rapid Color Naming Rateᵃ | .020 | .005 | .79ᵉ | .017 | .004 | .77ᵉ | 26.13*** | .07 |
| TOWRE Phonemic Decoding | 16.81 | 10.71 | .79ᵉ | 32.50 | 11.03 | .77ᵉ | 188.37*** | .34 |
| WJ Word Attack | 12.56 | 6.17 | .89ᵈ | 17.72 | 5.78 | .83ᵈ | 66.05*** | .15 |
| CTOPP Elision | 6.25 | 2.47 | .80ᵈ | 7.17 | 4.61 | .92ᵈ | 6.13* | .02 |
| CTOPP Elision Square Rootᵇ | 2.65 | .46 | na | 2.73 | .84 | na | 1.39 | .004 |
| CTOPP Blending Words | 5.53 | 3.05 | .84ᵈ | 7.29 | 4.32 | .88ᵈ | 21.05*** | .05 |

Note. PPVT = Peabody Picture Vocabulary Test – Third Edition; BNT = Boston Naming Test; WJ = Woodcock Johnson-III: Tests of Achievement; TOWRE = Test of Word Reading Efficiency; GORT = Gray Oral Reading Tests – Fourth Edition; CTOPP = Comprehensive Test of Phonological Processing; na = not applicable.

ᵃ Rate score is the inverse of the raw time score. This transformation results in higher scores that indicate better performance.

ᵇ The transformation is the square root of the sum of the CTOPP Elision raw score and 1. This transformation improves normality for the NES participants.

ᶜ Range is limited on this test because all participants read from the third through fifth grade levels according to this assessment.

ᵈ Reliability coefficient is Cronbach's alpha.

ᵉ Reliability coefficient is test-retest for 108 NES participants and 88 ESOL participants, with approximately four months between testing points.

*p < .05. **p < .01. ***p < .001.
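The Effect size column is not defined further in the table, but its values are consistent with eta-squared recovered from each F statistic for a two-group one-way ANOVA (for example, PPVT: 234.75 / (234.75 + 369) ≈ .39). The sketch below shows that relationship; treating the column as eta-squared, and assuming no missing data (so the within-groups df is 369), are inferences from the reported numbers rather than statements of the authors' procedure.

```python
def eta_squared_from_f(f: float, df_between: int, df_within: int) -> float:
    """Proportion of variance explained, recovered from a one-way ANOVA F.

    From F = (SS_b / df_b) / (SS_w / df_w) it follows that
    eta^2 = SS_b / (SS_b + SS_w) = F*df_b / (F*df_b + df_w).
    """
    return f * df_between / (f * df_between + df_within)


# Two groups, NES (n = 218) and ESOL (n = 153); assumes complete data.
DF_BETWEEN = 1              # number of groups - 1
DF_WITHIN = 218 + 153 - 2   # N - number of groups = 369

for measure, f in [("PPVT", 234.75), ("BNT", 385.38), ("WJ Word Attack", 66.05)]:
    print(f"{measure}: eta^2 = {eta_squared_from_f(f, DF_BETWEEN, DF_WITHIN):.2f}")
```

This also shows why a large F (e.g., TOWRE Phonemic Decoding, 188.37) corresponds to a substantial effect (.34) while a significant but small F (e.g., CTOPP Rapid Letter Naming Rate, 3.90) explains only about 1% of the variance.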

To further explore the performance of struggling adult readers on the assessments, standard scores were computed for each norm-referenced test. Table 3 shows the standard scores for each assessment using norms at the participants' actual ages when available. For tests without norms for the ages of the participants in this investigation, norms for age 18 were used instead.

Table 3.

Standard Score Means, Standard Deviations, and Minimum and Maximum Values for Tests for NES (n = 218) and ESOL participants (n = 153)

| Measure | NES M | NES SD | NES minimum | NES maximum | ESOL M | ESOL SD | ESOL minimum | ESOL maximum |
|---|---|---|---|---|---|---|---|---|
| PPVTᵃᶜ | 72.64 | 9.72 | 40 | 94 | 51.14 | 15.13 | 40 | 87 |
| WJ Passage Comprehensionᵃᶜ | 76.42 | 11.66 | 28 | 95 | 66.64 | 12.74 | 21 | 91 |
| GORT Comprehensionᵇᵈ | 3.80 | 1.52 | 1 | 8 | 3.10 | 1.42 | 1 | 7 |
| WJ Letter-Word Identificationᵃᶜ | 75.56 | 8.68 | 52 | 89 | 77.40 | 8.18 | 55 | 89 |
| TOWRE Sight Wordᵇᶜ | 72.33 | 7.55 | 50 | 92 | 71.27 | 7.17 | 50 | 86 |
| WJ Reading Fluencyᵃᶜ | 79.07 | 6.38 | 63 | 100 | 76.32 | 6.57 | 58 | 90 |
| GORT Fluencyᵇᵈ | 1.28 | 0.63 | 1 | 4 | 1.09 | 0.35 | 1 | 3 |
| CTOPP Rapid Letter Namingᵇᵈ | 6.20 | 2.95 | 1 | 14 | 5.50 | 2.85 | 1 | 15 |
| CTOPP Rapid Color Namingᵇᵈ | 6.45 | 2.65 | 1 | 18 | 5.10 | 2.35 | 1 | 10 |
| TOWRE Phonemic Decodingᵇᶜ | 61.12 | 11.19 | 50 | 96 | 76.93 | 9.78 | 50 | 100 |
| WJ Word Attackᵃᶜ | 73.77 | 11.03 | 20 | 97 | 81.47 | 10.72 | 33 | 98 |
| CTOPP Elisionᵇᵈ | 2.82 | 1.42 | 1 | 10 | 3.50 | 2.48 | 1 | 12 |
| CTOPP Blending Wordsᵇᵈ | 3.36 | 1.92 | 1 | 11 | 4.48 | 2.74 | 1 | 12 |

Note. PPVT = Peabody Picture Vocabulary Test – Third Edition; WJ = Woodcock Johnson-III: Tests of Achievement; TOWRE = Test of Word Reading Efficiency; GORT = Gray Oral Reading Tests – Fourth Edition; CTOPP = Comprehensive Test of Phonological Processing.

ᵃ Assessment has a standard score mean of 100 and standard deviation of 15 in the norm group.

ᵇ Assessment has a standard score mean of 10 and standard deviation of 3 in the norm group.

ᶜ Standard score computed based on norms for the participant's actual age.

ᵈ Standard score computed based on norms for 18-year-olds because norms were not available at participants' actual ages.
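Table 3 mixes two standard-score metrics (mean 100 and SD 15 versus mean 10 and SD 3). Converting a score to a z score puts both on a common scale, as sketched below. The percentile conversion assumes a normal curve, which is only an approximation to each test's own norm tables, and applying it to a group mean describes the typical examinee rather than the average percentile of the group.

```python
from statistics import NormalDist


def to_z(standard_score: float, norm_mean: float, norm_sd: float) -> float:
    """Distance from the norm-group mean in standard-deviation units."""
    return (standard_score - norm_mean) / norm_sd


def normal_percentile(standard_score: float, norm_mean: float, norm_sd: float) -> float:
    """Percentile rank implied by a normal curve (an approximation)."""
    return 100 * NormalDist(norm_mean, norm_sd).cdf(standard_score)


# NES group means from Table 3, each on its own scale.
examples = [
    ("PPVT (mean 100, SD 15)", 72.64, 100, 15),
    ("CTOPP Elision (mean 10, SD 3)", 2.82, 10, 3),
]
for label, score, mean, sd in examples:
    print(f"{label}: z = {to_z(score, mean, sd):.2f}, "
          f"normal-curve percentile = {normal_percentile(score, mean, sd):.1f}")
```

On this common scale, the NES mean PPVT score lies about 1.8 SD below the norm-group mean and the NES mean Elision score lies about 2.4 SD below it.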

Correlation coefficients among all assessments were computed separately for the NES and ESOL groups. As seen in Table 4, correlations were generally low: only 15% and 14% of correlations were larger than .50 for the NES and ESOL groups, respectively. Fisher z transformations (Fisher, 1921) were used to test the differences between the two groups' correlation coefficients. Of the 105 correlation coefficients, 10 were significantly larger for the NES group and 23 were significantly larger for the ESOL group at the .05 level.

Table 4.

Correlation Coefficients for Tests for NES Participants (n = 218) Below the Diagonal and ESOL Participants (n = 153) Above the Diagonal

| Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14ᶜ | 15ᵈ | 16 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. PPVT | - | .72 | .51 | .39 | .25 | .32 | .25ᵇ | .48ᵇ | .16ᵇ | .10ᵇ | .28ᵇ | .15ᵇ | .03 | .25ᵇ | .24 | .25ᵇ |
| 2. BNT | .76 | - | .59ᵇ | .49 | .28 | .34 | .22ᵇ | .46ᵇ | .26ᵇ | .17ᵇ | .28ᵇ | .05 | .03 | .23 | .25 | .13 |
| 3. WJ Passage Comprehension | .39 | .42 | - | .60ᵇ | .30 | .43 | .28 | .54 | .42ᵇ | .20 | .32ᵇ | .05 | .11 | .26 | .27 | .18 |
| 4. GORT Comprehension | .37 | .38 | .34 | - | .13 | .30ᵇ | .24ᵇ | .46ᵇ | .35ᵇ | .16 | .22ᵇ | .01 | .04 | .21 | .23 | .18 |
| 5. WJ Letter-Word Identification | .17 | .22 | .31 | .08 | - | .48 | .36 | .38 | .45 | .16 | .15 | .43 | .39 | .29 | .27 | .16 |
| 6. Sight Word Reading Test | .19 | .18 | .31 | .02 | .52 | - | .52 | .40 | .56 | .26 | .20 | .51ᵇ | .44 | .30 | .26 | .09 |
| 7. TOWRE Sight Word | −.06 | −.03 | .26 | −.01 | .57ᵃ | .53 | - | .51 | .65 | .41 | .36 | .61 | .26 | .33 | .32 | .11 |
| 8. WJ Reading Fluency | .05 | .11 | .37 | .17 | .41 | .41 | .68ᵃ | - | .54 | .26 | .45 | .36 | .20 | .42 | .46ᵇ | .13 |
| 9. GORT Fluency | −.08 | −.05 | .23 | −.01 | .44 | .48 | .63 | .56 | - | .35 | .30 | .51 | .43 | .27 | .26 | .15 |
| 10. CTOPP Letter Naming Rate | −.13 | −.12 | .12 | .01 | .18 | .10 | .59ᵃ | .52ᵃ | .41 | - | .31 | .13 | .10 | .12 | .17 | .00 |
| 11. CTOPP Color Naming Rate | −.12 | −.01 | .11 | .01 | .19 | .07 | .39 | .43 | .36 | .53ᵃ | - | .24 | .14 | .28 | .28 | .15 |
| 12. TOWRE Phonemic Decoding | −.20 | −.06 | .19 | −.08 | .52 | .32 | .60 | .43 | .49 | .35ᵃ | .27 | - | .58 | .37 | .32 | .17 |
| 13. WJ Word Attack | −.11 | .03 | .27 | .01 | .59ᵃ | .34 | .46ᵃ | .39 | .44 | .16 | .24 | .73ᵃ | - | .34 | .31 | .23 |
| 14. CTOPP Elisionᶜ | .04 | .18 | .23 | .18 | .31 | .13 | .21 | .28 | .17 | .05 | .18 | .37 | .53ᵃ | - | .97 | .32 |
| 15. CTOPP Elision Square Rootᵈ | .04 | .20 | .24 | .19 | .31 | .12 | .20 | .27 | .16 | .06 | .17 | .39 | .52ᵃ | .98 | - | .30 |
| 16. CTOPP Blending Words | .03 | .20 | .12 | .10 | .17 | −.04 | .07 | .13 | .01 | .05 | .10 | .24 | .37 | .44 | .45 | - |

Note. PPVT = Peabody Picture Vocabulary Test – Third Edition; BNT = Boston Naming Test; WJ = Woodcock-Johnson III: Tests of Achievement; TOWRE = Test of Word Reading Efficiency; GORT = Gray Oral Reading Tests – Fourth Edition; CTOPP = Comprehensive Test of Phonological Processing. CTOPP Letter Naming Rate is the inverse of CTOPP Rapid Letter Naming Time. CTOPP Color Naming Rate is the inverse of CTOPP Rapid Color Naming Time. CTOPP Elision Square Root is used for NES participants and CTOPP Elision is used for ESOL participants.

ᵃCorrelation for NES participants is significantly larger than that for ESOL participants (α = .05).

ᵇCorrelation for ESOL participants is significantly larger than that for NES participants (α = .05).

ᶜCTOPP Elision raw scores are used for ESOL participants.

ᵈCTOPP Elision raw scores with a square root transformation are used for NES participants to improve the normality of the variable for this group.

NES correlations > .134 are significant at the .05 level, and NES correlations > .176 are significant at the .01 level.

ESOL correlations > .161 are significant at the .05 level, and ESOL correlations > .220 are significant at the .01 level.
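Cutoffs like these are conventionally derived from the t distribution with n − 2 degrees of freedom. The relation below is the standard one for a two-tailed test at level α. The text does not state which table or approximation produced the specific values above, so values recomputed this way may not match them exactly.

```latex
r_{\text{crit}} = \frac{t_{1-\alpha/2,\; n-2}}{\sqrt{t_{1-\alpha/2,\; n-2}^{2} + (n - 2)}}
```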

The primary purpose of the study was to test three child-based theoretical models of reading with struggling adult readers. The main analyses were confirmatory factor analyses (CFA) of the achievement model, the core deficit model, and the integrated model. These were conducted in LISREL 8.72 (Joreskog & Sorbom, 2005) for NES and ESOL adults reading at the third- through fifth-grade levels. Good model fit was defined as an RMSEA below .05 and NFI and CFI values above .95 (MacCallum, Browne, & Sugawara, 1996).
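The fit criteria above can be expressed as a simple check. The sketch below also computes RMSEA from a model's chi-square, degrees of freedom, and sample size using the common point-estimate formula. LISREL's own computation may differ in detail, for example in which chi-square statistic it uses. The function names are illustrative.

```python
import math


def rmsea(chi_square: float, df: int, n: int) -> float:
    """Point estimate of the root mean square error of approximation.

    RMSEA = sqrt(max(chi2 - df, 0) / (df * (n - 1))); a chi-square at or
    below its degrees of freedom gives 0.
    """
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


def meets_fit_criteria(rmsea_value: float, nfi: float, cfi: float) -> bool:
    """Cutoffs stated in the text: RMSEA below .05, NFI and CFI above .95."""
    return rmsea_value < 0.05 and nfi > 0.95 and cfi > 0.95


# Chi-square, df, and n reported for the NES achievement model (Figure 1).
value = rmsea(14.93, 11, 218)
print(round(value, 2), meets_fit_criteria(value, 0.99, 0.99))
```

Applied to the values reported for Figure 1, this prints `0.04 True`, which matches the reported RMSEA of .04.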

In the confirmatory factor analyses, the hypothesized models were evaluated first. The resulting models were then inspected for theoretically justifiable areas of improvement. Where a model had low factor loadings, the variables with low loadings were removed from their associated factors and allowed to load elsewhere. Where factor correlations were very high, models combining those factors were considered. In addition, the modification indices for each model were reviewed to see whether loading variables on other factors or including error covariances would be appropriate. Many problems arose in testing and modifying the hypothesized models:

- matrices that were not positive definite
- negative error variances
- poor overall fit
- models that did not work for both the NES and ESOL groups

Matrices that are not positive definite have one or more eigenvalues that are zero or negative, which indicates a set of values that cannot occur together in a proper covariance or correlation matrix. With zero or negative eigenvalues, mathematical operations required for estimation cannot be performed, and the solution cannot be determined. Negative error variances, known as Heywood cases, are problematic because a variance cannot be negative. The best-fitting models are presented below.
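Positive definiteness can be checked directly. The sketch below is a plain-Python illustration, not LISREL's internal check. It attempts a Cholesky factorization, which succeeds with strictly positive pivots exactly when a symmetric matrix is positive definite, that is, when all of its eigenvalues are greater than zero. The same check applies to an input correlation matrix or to an estimated matrix such as the error (theta-delta) matrix. The function name and tolerance are illustrative.

```python
import math


def is_positive_definite(matrix: list[list[float]], tol: float = 1e-12) -> bool:
    """Return True if a symmetric matrix is positive definite.

    Uses a Cholesky factorization: it succeeds with every pivot strictly
    positive exactly when all eigenvalues of the matrix are greater than zero.
    """
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                pivot = matrix[i][i] - s
                if pivot <= tol:
                    # A non-positive pivot (within tolerance) means the matrix
                    # has a zero or negative eigenvalue.
                    return False
                lower[i][i] = math.sqrt(pivot)
            else:
                lower[i][j] = (matrix[i][j] - s) / lower[j][j]
    return True


# PPVT with BNT for the NES group (Table 4): a valid 2 x 2 correlation matrix.
print(is_positive_definite([[1.0, 0.76], [0.76, 1.0]]))
# Hypothetical: x correlates .9 with both y and z, yet y and z correlate -.9.
print(is_positive_definite([[1.0, 0.9, 0.9], [0.9, 1.0, -0.9], [0.9, -0.9, 1.0]]))
```

The first call prints `True`. The second prints `False`, because those three correlations cannot coexist in any real data set.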

Achievement Measurement Model

The achievement models included variables assessing word reading, nonword reading, reading fluency, and reading comprehension.

NES Participants

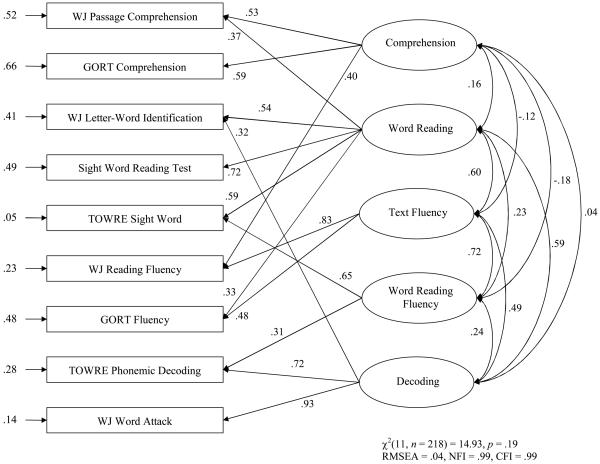

Figure 1 presents the factor loadings, interfactor correlations, and error variances for the best-fitting CFA model of achievement variables for the NES participants. Observed variables are shown in rectangles and latent factors in ovals. The straight and curved lines, with their associated values, make up the estimated solution:

- Each observed variable has straight arrows pointing to it from two directions.
- The arrows from the factors carry factor loadings, which indicate the extent to which each factor contributes to performance on the variable.
- The straight arrows entering the observed variables from the left carry error variances.
- The curved double-headed arrows carry the estimated correlations between factors.

The model in Figure 1 fit well: χ²(11, n = 218) = 14.93, p = .19, RMSEA = .04, NFI = .99, and CFI = .99.

Figure 1.

CFA results for the achievement model for NES participants
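In the LISREL notation used for models like these, the quantities in a path diagram such as Figure 1 together define the covariance matrix implied for the observed variables. Here Λx holds the factor loadings, Φ holds the interfactor correlations, and Θδ holds the error variances (plus any error covariances). Estimation chooses these values so that the implied matrix reproduces the observed matrix as closely as possible.

```latex
\Sigma(\theta) = \Lambda_x \, \Phi \, \Lambda_x^{\top} + \Theta_\delta
```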

ESOL Participants

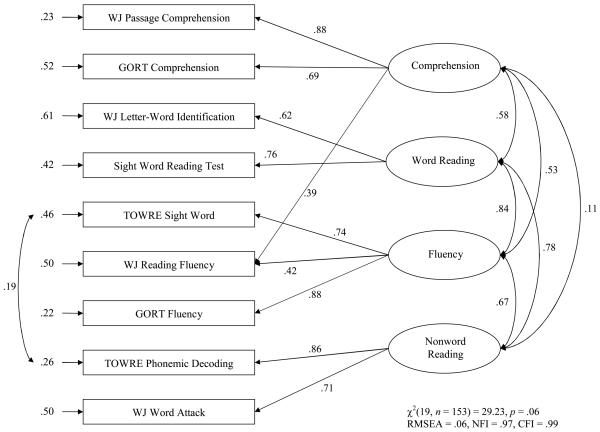

Figure 2 presents the factor loadings, interfactor correlations, and error variances for the best-fitting CFA model of achievement variables for the ESOL participants. Model fit statistics included χ²(19, n = 153) = 29.23, p = .06, RMSEA = .06, NFI = .97, and CFI = .99.

Figure 2.

CFA results for the achievement model for ESOL participants

Differences between NES and ESOL Participants

To address whether the CFA models would fit differently for NES and ESOL participants, the best-fitting NES achievement model shown in Figure 1 was also tested for the ESOL group. This model converged for the ESOL group, but TOWRE Sight Word Efficiency had standardized loadings greater than one and an accompanying negative error variance. Likewise, the best-fitting ESOL achievement model presented in Figure 2 was assessed for the NES group. For the NES participants, this model produced a negative error variance for WJ-III Passage Comprehension. Because no single model fit both groups, a multigroup CFA could not be completed.
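A standardized loading above one produces a negative error variance because of how the standardized solution works. Each observed variable has variance 1, so its error variance is 1 minus the variance explained by the factors. The sketch below illustrates this with a hypothetical loading of 1.05; the text does not report the actual value. The function name is also illustrative.

```python
def standardized_error_variance(loadings: list[float],
                                factor_corr: list[list[float]]) -> float:
    """Error variance of one indicator in a standardized CFA solution.

    loadings[j] is the indicator's standardized loading on factor j
    (0.0 where it does not load), and factor_corr is the factor
    correlation matrix. The indicator's total variance is 1, so the error
    variance is 1 minus the variance explained by the factors; a negative
    result is a Heywood case.
    """
    explained = sum(
        loadings[j] * loadings[k] * factor_corr[j][k]
        for j in range(len(loadings))
        for k in range(len(loadings))
    )
    return 1.0 - explained


# Hypothetical loading of 1.05 on a single factor: error variance about -0.10.
print(standardized_error_variance([1.05], [[1.0]]))
# Hypothetical double loading (.5 and .4) on factors correlated .30: about 0.47.
print(standardized_error_variance([0.5, 0.4], [[1.0, 0.3], [0.3, 1.0]]))
```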

Core Deficit Measurement Model

The core deficit model included variables measuring phonological awareness, RAN, and oral vocabulary.

NES Participants

The confirmatory factor analysis of the core deficit model for NES participants did not converge. The preliminary solution, provided to help identify problems, revealed two issues: a theta-delta (error variance) matrix that was not positive definite, and negative error variances for the BNT and CTOPP Rapid Color Naming rate. Other CFA models of the core deficit variables were attempted for the NES group, including one- and two-factor models, but similar problems arose. One one-factor CFA model did converge: in it, all six variables loaded on one factor and the three pairs of variables had correlated error variances. However, this model was not acceptable, because the error variances for the PPVT and CTOPP Rapid Letter Naming rate approached one while their loadings were close to zero.

ESOL Participants

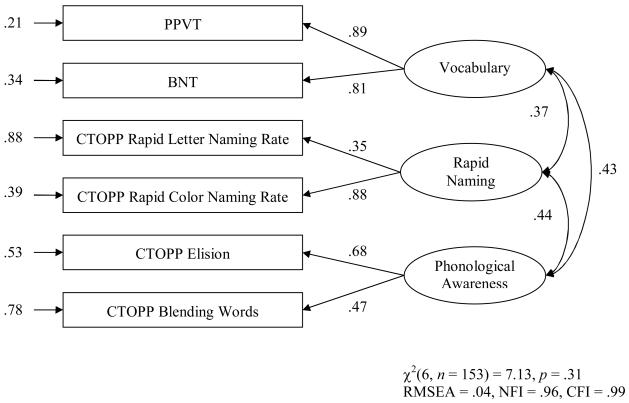

For the ESOL group, the hypothesized core deficit model converged and met criteria for good fit. Figure 3 shows the core deficit model for the ESOL participants and the associated fit statistics.

Figure 3.

CFA results for the core deficit model for ESOL participants

Differences between NES and ESOL Participants

Because no core deficit model fit the NES participants, the question of whether the model fits differently for NES and ESOL readers could not be addressed using multigroup CFA.

Integrated Measurement Model

The integrated model included constructs and associated measures from both the achievement and core deficit models.

NES Participants

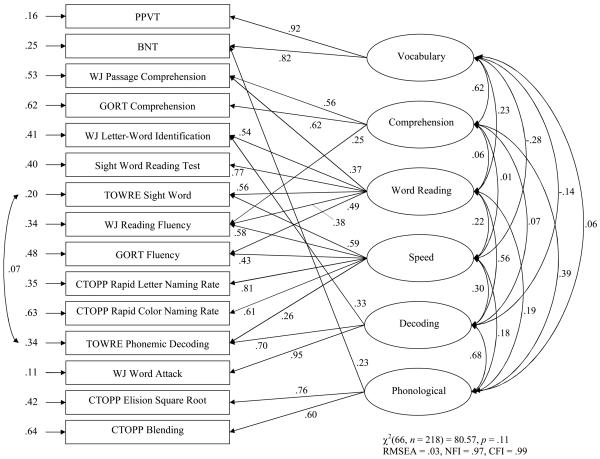

A best-fitting integrated model for NES participants was identified with factors of vocabulary, comprehension, word reading, speed, decoding, and phonological awareness (χ²(66, n = 218) = 80.57, p = .11, RMSEA = .03, NFI = .97, and CFI = .99). Figure 4 presents the factor loadings, interfactor correlations, and error variances for this best-fitting integrated CFA model for the NES participants.

Figure 4.

CFA results for the integrated model for NES participants

ESOL Participants

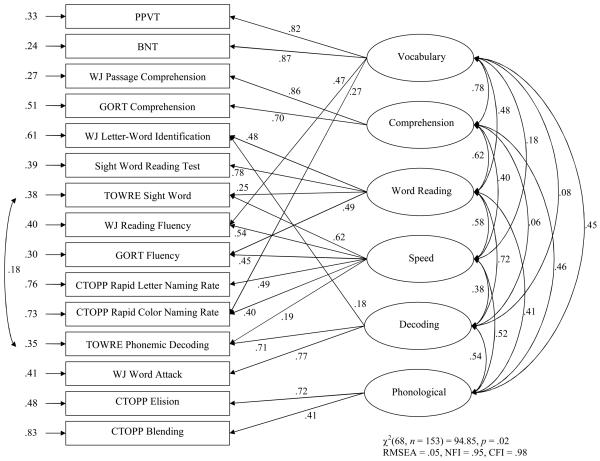

A best-fitting integrated model for the ESOL group, as seen in Figure 5, was identified with factors of vocabulary, comprehension, word reading, speed, decoding, and phonological awareness (χ²(68, n = 153) = 94.85, p = .02, RMSEA = .05, NFI = .95, and CFI = .98).

Figure 5.

CFA results for the integrated model for ESOL participants

Differences between NES and ESOL Participants

Testing whether the CFA models fit differently for NES and ESOL readers requires a model that fits each group independently. The best-fitting integrated model for the NES group (Figure 4) did not converge for the ESOL group. In addition, the best-fitting integrated model for the ESOL group (Figure 5) did not meet fit criteria for the NES group. Because of the difficulty of obtaining well-fitting CFA models for both groups with all the variables, multigroup CFA was not completed.

Discussion

Due to a lack of research on struggling adult readers, adult literacy researchers have relied, perhaps inappropriately, on reading research with children. The purpose of this study was to examine measurement models of the constructs behind tests of reading skills and subskills for struggling adult readers. The goal was to determine whether the constructs prevalent in children's reading research are evident in struggling adult readers. Confirmatory factor analyses were conducted to test three child-based theoretical models of reading:

- an achievement model of word reading, nonword reading, reading fluency, and reading comprehension
- a core deficit model of vocabulary, naming speed, and phonological awareness
- an integrated model containing both achievement and core deficit variables

Overall, the measurement models were difficult to fit for the struggling adult readers. Brief interpretations of the three models for NES and ESOL participants are followed by a discussion of why these models were so problematic.

The achievement measurement model included tests commonly used in reading research and practice to measure word reading, nonword reading, reading fluency, and reading comprehension. It is therefore surprising that this model was difficult to fit for struggling adult readers. The achievement model was problematic for the NES participants. After testing numerous alternatives, a best-fitting five-factor CFA model with numerous double loadings was identified, as seen in Figure 1. In this model, the nonword reading factor is renamed decoding because WJ-III Letter-Word Identification loaded with the nonword reading tasks. In addition, fluency split into two factors, connected-text fluency and word reading fluency, rather than forming a single fluency construct.

For the ESOL group, both the initially hypothesized achievement model and the best-fitting NES model were problematic. After modifications to the initial four-factor model, a best-fitting ESOL achievement model was identified, as seen in Figure 2. This model was simpler than the best-fitting NES achievement model. It did not separate word and text fluency, and it did not include many double loadings, even though some could be theoretically justified. The only double loading included for both groups was WJ-III Reading Fluency, which loaded on both fluency and comprehension. This test required examinees to read sentences silently and decide whether each sentence was true or false. Although judging the sentences was not intended to be difficult, it did require language and comprehension skills. Caution is therefore warranted in using this test as a primary measure of fluency for struggling adult readers, as results may be confounded by comprehension skills.

The core deficit measurement model was based on research with children who struggle with reading. Some researchers have hypothesized that struggling adult readers are similar to children struggling with reading (Greenberg et al., 1997), so this model was expected to fit for struggling adult readers. In particular, one could argue that some of the NES struggling child readers on whom the model was based grow up to be NES struggling adult readers. Unexpectedly, the core deficit model was problematic for the NES group, with estimated matrices that were not positive definite, negative error variances, and poor overall fit. However, it fit very well for the ESOL group, as seen in Figure 3.

The integrated measurement model combining the core deficit and achievement constructs did not work initially for either group. For the NES group, the hypothesized model had estimated matrices that were not positive definite. Several modifications, including allowing all timed measures to load on a speed factor, produced a best-fitting six-factor integrated model for the NES participants, as seen in Figure 4. The speed factor was positively related to the word reading, decoding, and phonological awareness factors. This suggests that general processing speed may be a critical component of many timed tasks of reading skills and subskills, and that this component is related to the skills and subskills themselves. This is consistent with Sabatini (2002), who found that both domain-general and domain-specific processing speed tasks were related to reading level for struggling adult readers.

For the ESOL participants, numerous problems were encountered when fitting both the initial integrated model and the best-fitting integrated model for the NES group. A model combining the best-fitting ESOL core deficit and achievement models was evaluated but did not meet criteria for good fit. Instead, a six-factor model, as seen in Figure 5, fit best for the ESOL group. In this model, CTOPP Rapid Color Naming loaded on both the speed and vocabulary factors. Although this subtest was expected to be a good speed measure, it may also assess vocabulary in the form of color names.

The main structure of the integrated models was similar for the NES and ESOL groups; the differences lay primarily in the double loadings. This suggests that the overall structure of the measures is similar across groups, but that individual assessments behave somewhat differently for each group of struggling adult readers. For example, the WJ-III Reading Fluency subtest loaded on the speed, word reading, and comprehension factors for the NES group, but on the speed and vocabulary factors for the ESOL group. The WJ-III Passage Comprehension loadings further highlight group differences. This subtest had a single strong loading on comprehension for the ESOL group but a double loading on comprehension and word reading for the NES group. This supports the conclusion of Strucker et al. (2007) that poor word reading skills may limit the performance of NES readers on comprehension tasks.

Overall, there was great difficulty in obtaining models with good fit. Prevalent problems included matrices that were not positive definite, negative error variances, poor overall fit, and models not working for both NES and ESOL participants. There are many possible explanations for these types of problems including lack of normality in the measures, poor reliability of the measures, lack of variability in the measures, low correlations between measures, and possible real differences between NES and ESOL participants who struggle with reading. Each of these possible reasons was explored.

Lack of normality is one factor that can cause problems with model convergence in CFA, particularly when samples are not especially large. Results revealed adequate normality overall, with the exception of CTOPP Elision for the NES participants. It is possible that the nonnormality of the CTOPP Elision distribution, even after transformation, hindered convergence of the core deficit and integrated models for the NES group.
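The sketch below shows how a square-root transformation reduces positive skew. It uses hypothetical raw scores, not the study's data, and the moment-based skewness coefficient g1. Statistical packages may instead report a bias-adjusted version. The function names are illustrative.

```python
import math
import statistics


def skewness(values: list[float]) -> float:
    """Moment-based skewness g1 = m3 / m2 ** 1.5."""
    mean = statistics.fmean(values)
    m2 = statistics.fmean([(v - mean) ** 2 for v in values])
    m3 = statistics.fmean([(v - mean) ** 3 for v in values])
    return m3 / m2 ** 1.5


def sqrt_transform(values: list[float]) -> list[float]:
    """Square-root transformation; requires non-negative scores."""
    return [math.sqrt(v) for v in values]


# Hypothetical right-skewed raw scores (many low scores, a few high ones).
raw = [0, 0, 1, 1, 4, 9, 16, 25]
print(round(skewness(raw), 2), round(skewness(sqrt_transform(raw)), 2))
```

For these toy scores, skewness drops from about 1.08 to about 0.43 after the transformation. It is reduced but not eliminated, which parallels the text's observation that CTOPP Elision remained nonnormal for the NES group even after transformation.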

Perhaps analysis difficulties occurred because the tests were not reliable for these participants. None of the tests was developed for, or normed specifically with, struggling adult readers. In fact, some tests, such as the CTOPP and GORT-4, were not intended for people aged 25 or older. However, the reliability coefficients for the participants (Table 2) were high for both groups, with all alpha values exceeding .75 except those for WJ-III Letter-Word Identification. Lower reliabilities were expected for this subtest because of the restriction of range created by its use in participant selection. Some test-retest reliabilities were low, as might be expected given the four months between testing sessions. In particular, the test-retest reliabilities of the GORT-4 Fluency and Comprehension subtests were low and may have hindered convergence of the achievement and integrated models. Future research should evaluate each measure to explore its appropriateness for struggling adult readers. In addition, test designers should include norm subgroups of struggling adult readers so that researchers and practitioners can choose the tests most appropriate for their samples.
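Coefficient alpha (Cronbach, 1951), the internal-consistency index reported in Table 2, compares the sum of the item variances with the variance of the total scores. The sketch below computes it for toy inputs; the function name and data layout are illustrative.

```python
import statistics


def cronbach_alpha(items: list[list[float]]) -> float:
    """Coefficient alpha (Cronbach, 1951).

    items[i][p] is the score of person p on item i; every item must list
    the same persons in the same order.
    """
    k = len(items)
    item_variances = sum(statistics.variance(item) for item in items)
    # Total score for each person across all items.
    totals = [sum(scores) for scores in zip(*items)]
    total_variance = statistics.variance(totals)
    return (k / (k - 1)) * (1 - item_variances / total_variance)


# Two identical items are perfectly consistent, so alpha is 1.0.
print(cronbach_alpha([[1, 2, 3], [1, 2, 3]]))
```

Items that do not covary yield an alpha near zero. This is why alpha values above .75 indicate that the items within each test hang together well.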

Lack of variability in measures can also be problematic for model convergence and fit. The sample selection criteria for this study may have restricted the range of test scores, leading to low variability. The means and standard deviations in Table 2 show a lack of variability on the WJ-III Letter-Word Identification subtest. This is not surprising, since only participants with grade-equivalent scores from the third through fifth grade levels were invited to participate. This lack of variability on WJ-III Letter-Word Identification likely affected the variability of other measures. However, those measures had larger standard deviations relative to their means than WJ-III Letter-Word Identification did. The restriction of range created by participant selection may have affected model convergence and fit for all models, but particularly for the achievement and integrated models that contained WJ-III Letter-Word Identification.

Low correlations between variables can also lead to problems with model convergence and fit. In this study, the correlations for both groups were low overall. The low correlations could reflect genuinely weak relationships between the tests for struggling adult readers, or they could result from the restriction of range created by participant selection. Greenberg et al. (1997) reported lower correlations between reading measures for struggling adult readers than for children when all participants were reading at the third- through fifth-grade levels on word identification. Because the low correlations were not seen for the children, Greenberg et al. hypothesized that low correlations between reading measures for struggling adult readers may be evidence that these readers have not integrated the skills and subskills needed for reading. Participants in this study may also have struggled with reading because of a lack of skill and subskill integration. Regardless of cause, the low correlations found for the participants in this study may have resulted in poor model convergence and fit.
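Thorndike's Case II formula illustrates how selecting participants on one test attenuates that test's correlations with other measures. It gives the correlation expected after direct selection on a variable, assuming a linear, homoscedastic relationship. Here u is the ratio of the selected sample's standard deviation to the unrestricted standard deviation on the selection test. The formula applies most directly to correlations involving the selection test itself, here WJ-III Letter-Word Identification. The values below are hypothetical, and the function names are illustrative.

```python
import math


def restricted_correlation(r_full: float, u: float) -> float:
    """Correlation expected after direct selection on one variable.

    r_full: correlation in the unrestricted population.
    u: selected SD divided by population SD on the selection variable
       (0 < u <= 1). Assumes linearity and homoscedasticity
       (Thorndike's Case II).
    """
    return r_full * u / math.sqrt(1 - r_full ** 2 + (r_full * u) ** 2)


def corrected_correlation(r_restricted: float, u: float) -> float:
    """Inverse of restricted_correlation: estimate the unrestricted r."""
    ratio = 1.0 / u
    return r_restricted * ratio / math.sqrt(
        1 - r_restricted ** 2 + (r_restricted * ratio) ** 2
    )


# Hypothetical: r = .60 in the full population, selected SD half the full SD.
r_sel = restricted_correlation(0.60, 0.50)
print(round(r_sel, 2), round(corrected_correlation(r_sel, 0.50), 2))
```

In this hypothetical case, a population correlation of .60 shrinks to about .35 when the selected sample's standard deviation is half the population's. The correction function recovers the original .60.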

Difficulty fitting the same model to both groups may reflect real differences between the NES and ESOL participants. Preliminary analyses of the performance of NES and ESOL struggling adult readers showed that the NES participants performed better than the ESOL participants on oral vocabulary tests, a reading comprehension test, and a sight word reading test. However, they performed worse than the ESOL group on nonword reading fluency. These results are consistent with Strucker et al. (2007), who found that the two groups have different patterns of strengths and weaknesses. ESOL readers were weak in vocabulary and comprehension but strong in decoding, while NES readers were very weak in decoding.

The correlations between the tests differed strikingly between the NES and ESOL readers in this study. Thirty-one percent of the correlations (33 of 105) differed significantly between the two groups. The NES group had higher correlations among speeded tasks, and higher correlations between nonword reading tasks and other assessments. The ESOL group had higher correlations between vocabulary and comprehension tasks and other assessments. Overall, the measures in this study appear to interrelate differently for the NES and ESOL participants, which is likely to hinder fitting identical measurement models to both groups.

The differences between NES and ESOL participants could stem from the different origins of their reading problems. The NES group may have a high prevalence of learning disabilities that impeded their reading development, while the ESOL group may face a language barrier hindering their reading. Alternatively, the differences may simply reflect differences in language level rather than true differences in the measures and their associated constructs, since the ESOL group had lower receptive and expressive oral vocabulary than the NES group. Although it is difficult, researchers may want to match NES and ESOL participants on language skills to investigate whether language level accounts for some group differences. However, such matching may produce results that reflect the statistical limitations of a restricted range rather than actual differences. Monte Carlo modeling of a fuller distribution for each group is one way for researchers to address this issue. In addition, teasing out the differences between groups might be easier if researchers include reading and language tests in the ESOL participants' native language.
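The sketch below shows one simple form such a simulation could take. It assumes bivariate normal scores, a population correlation of .60, and a matched sample that keeps only cases within half a standard deviation of the mean on the matching variable. All of these values, and the function names, are hypothetical choices for illustration.

```python
import math
import random
import statistics


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equal-length lists."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate_matching(rho: float, lower: float, upper: float,
                      n: int = 20000, seed: int = 1) -> tuple[float, float]:
    """Compare a full-range correlation with one computed after matching.

    Draws n (x, y) pairs from a standard bivariate normal distribution with
    correlation rho, then keeps only cases with lower <= x <= upper.
    Returns (full-sample r, matched-sample r).
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        # Mixing x with independent noise gives corr(x, y) = rho.
        y = rho * x + math.sqrt(1.0 - rho ** 2) * rng.gauss(0.0, 1.0)
        xs.append(x)
        ys.append(y)
    kept = [(x, y) for x, y in zip(xs, ys) if lower <= x <= upper]
    matched_x = [x for x, _ in kept]
    matched_y = [y for _, y in kept]
    return pearson_r(xs, ys), pearson_r(matched_x, matched_y)


full_r, matched_r = simulate_matching(0.60, -0.5, 0.5)
print(f"full range r = {full_r:.2f}, matched r = {matched_r:.2f}")
```

Under these assumptions, the matched correlation comes out far lower than the full-range value even though the underlying relationship is unchanged. A design that matches groups would have to account for this.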

Several limitations of this study should be noted. The study did not include several variables of potential interest in these models, such as listening comprehension and working memory, because these were not administered to the sample. In addition, the participant selection criteria restricted the range of performance to a degree that may have reduced variability in the sample. Furthermore, the criteria were based only on word reading and perhaps should have included a language threshold for the ESOL participants.

In conclusion, this study found that fitting child-based theoretical measurement models to struggling adult readers is very challenging. While there were minor problems with test normality, reliability, and variability, low correlations were pervasive and may have hindered model convergence and fit. It is also possible that there are true differences in models for NES and ESOL struggling adult readers. This paper presents the best models found with the reading skill variables, the reading subskill variables, and a combination of skill and subskill variables. However, the major finding is the difficulty of fitting measurement models of constructs from children's research to struggling adult readers. These results illustrate the care that must be taken when applying assumptions based on research on children's reading development to struggling adult readers. More research focused specifically on struggling adult readers is needed. Such research is crucial to advancing our understanding of the difficulties that 43% of adults have with reading (Kutner et al., 2007). That understanding can inform adult literacy instruction so that this percentage can be reduced.

References

- Adams MJ. Beginning to read: Thinking and learning about print. MIT Press; Cambridge, MA: 1990.

- Adams MJ, Huggins AW. The growth of children's sight vocabulary: A quick test with educational and theoretical implications. Reading Research Quarterly. 1985;20:262–281.

- Chall JS. Patterns of adult reading. Learning Disabilities: A Multidisciplinary Journal. 1994;5(1):29–33.

- Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16:297–334.

- Davidson RK, Strucker J. Patterns of word-recognition errors among adult basic education native and nonnative speakers of English. Scientific Studies of Reading. 2002;6:299–316.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test - Third Edition. American Guidance Service; Circle Pines, MN: 1998.

- Fisher RA. On the probable error of a coefficient of correlation deduced from a small sample. Metron. 1921;1:3–32.

- Greenberg D, Ehri LC, Perin D. Are word-reading processes the same or different in adult literacy students and third-fifth graders matched for reading level? Journal of Educational Psychology. 1997;89:262–275.

- Hoffman L. Reading errors among skilled and unskilled adult readers. Community/Junior College Research Quarterly. 1978;2:151–162.

- Joreskog K, Sorbom D. LISREL 8.72. Scientific Software International; Chicago: 2005.

- Kaplan E, Goodglass H, Weintraub S. Boston Naming Test. Lippincott Williams & Wilkins; Baltimore: 2001.

- Kruidenier J. Research-based principles for adult basic education reading instruction. National Institute for Literacy; Washington, DC: 2002.

- Kutner M, Greenberg E, Baer J. A first look at the literacy of America's adults in the 21st century. (NCES 2006-470) National Center for Education Statistics; Washington, DC: 2006.

- Kutner M, Greenberg E, Jin Y, Boyle B, Hsu Y, Dunleavy E. Literacy in everyday life: Results from the 2003 National Assessment of Adult Literacy (NCES 2007-490) National Center for Education Statistics; Washington, DC: 2007.

- MacCallum RC, Browne MW, Sugawara HM. Power analysis and determination of sample size for covariance structure modeling. Psychological Methods. 1996;1:130–149.

- Mudd N. Strategies used in the early stages of learning to read: A comparison of children and adults. Educational Research. 1987;29:83–94.

- National Institute of Child Health and Human Development. Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction: Reports of the subgroups (NIH Publication No. 00-4754) U.S. Government Printing Office; Washington, DC: 2000. Report of the National Reading Panel.

- Read C, Ruyter L. Reading and spelling skills in adults of low literacy. Remedial & Special Education. 1985;6(6):42–53.

- Sabatini JP. Efficiency in word reading of adults: Ability group comparisons. Scientific Studies of Reading. 2002;6:267–298.

- Strucker J, Yamamoto K, Kirsch I. The relationship of the component skills of reading to IALS performance: Tipping points and five classes of adult literacy learners. National Center for the Study of Adult Learning and Literacy; Cambridge, MA: 2007.

- Thompkins AC, Binder KS. A comparison of the factors affecting reading performance of functionally illiterate adults and children matched by reading level. Reading Research Quarterly. 2003;38:236–255.

- Torgesen JK, Wagner R. Test of Word Reading Efficiency. Pro-Ed; Austin, TX: 1999.

- Vellutino FR, Tunmer WE, Jaccard JJ, Chen R. Components of reading ability: Multivariate evidence for a convergent skills model of reading development. Scientific Studies of Reading. 2007;11:3–32.

- Wagner RK, Torgesen JK, Rashotte CA. Comprehensive Test of Phonological Processing. Pro-Ed; Austin, TX: 1999.

- Wiederholt JL, Bryant BR. Gray Oral Reading Tests-Fourth Edition. Pro-Ed; Austin, TX: 2001.

- Winn BD, Skinner CH, Oliver R, Hale AD, Ziegler M. The effects of listening while reading and repeated reading on the reading fluency of adult learners. Journal of Adolescent & Adult Literacy. 2006;50:196–205.

- Woodcock RW, McGrew KS, Mather N. Woodcock-Johnson III: Tests of Achievement. Riverside Publishing; Itasca, IL: 2001.