ABSTRACT

Many decisions involve a trade-off between the quality of an outcome and the time at which that outcome is received. In psychology and behavioral economics, the most widely studied models hypothesize that the values of future gains decline as a roughly hyperbolic function of delay from the present. Recently, it has been proposed that this hyperbolic-like decline in value arises from the interaction of two separate neural systems: one specialized to value immediate rewards and the other specialized to value delayed rewards. Here we report behavioral and functional magnetic resonance imaging results that are inconsistent with both the standard behavioral models of discounting and the hypothesis that separate neural systems value immediate and delayed rewards. Behaviorally, we find that human subjects do not necessarily make the impulsive preference reversals predicted by hyperbolic-like discounting. We also find that blood oxygenation level dependent activity in ventral striatum, medial prefrontal, and posterior cingulate cortex does not track whether an immediate reward was present, as proposed by the separate neural systems hypothesis. Activity in these regions was correlated with the subjective value of both immediate and delayed rewards. Rather than encoding only the relative value of one reward compared with another, these values are represented on a more absolute scale. These data support an alternative behavioral–neural model (which we call “ASAP”), in which subjective value declines hyperbolically relative to the soonest currently available reward and a small number of valuation areas serve as a final common pathway through which these subjective values guide choice.

INTRODUCTION

Many decisions involve a trade-off between the quality of an outcome and the time at which that outcome is received. This is because the subjective value of a potential reward falls as the delay to that reward lengthens, a phenomenon called temporal discounting (Bickel and Marsch 2001; Frederick et al. 2002; Green and Myerson 2004). Since the 1930s, the standard economic model has assumed that the subjective value of future rewards must, in principle, decline exponentially (at a constant rate) as a function of delay from the present (Samuelson 1937). Exponential discounters remain consistent as they move through time, making the same choices about future gains regardless of when they make those choices (Strotz 1956). They do not make impulsive preference reversals—initially committing to the larger-and-later of two rewards when both options are in the future, but then switching and taking the smaller-but-sooner reward when that option becomes immediately available. Nor do they impulsively overweight the desirability of immediately available rewards, always consuming a greater portion of their resources now than later.

Despite its normative appeal, there is overwhelming evidence that people and other animals are not exponential discounters (Bickel and Marsch 2001; Frederick et al. 2002; Green and Myerson 2004). In particular, their choices exhibit decreasing impatience with delay: subjective value declines at a rate that decreases as the delay between two options grows larger, a kind of “overweighting” of immediately available rewards. Starting from this well-described phenomenon, models in psychology and behavioral economics assume that subjective value declines hyperbolically (Mazur 1987) or quasi-hyperbolically (Laibson 1997) from the present. Since these two models have similar behavioral predictions but very different mathematical implications, we use the term “(quasi-)hyperbolic” discounting when making statements that apply to both models. Importantly, (quasi-)hyperbolic models predict both that choosers will overweight the desirability of immediately available rewards and that they will make impulsive preference reversals.
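The contrast between these discounting models can be made concrete with a short sketch. The functional forms are the standard ones from the cited literature (exponential, Mazur's hyperbola, and Laibson's β–δ form); the dollar amounts, delays, and parameter values are hypothetical and chosen only so that the predicted preference reversal is visible.

```python
import math

def exponential(amount, delay, k):
    """Exponential discounting: value falls at a constant rate k per day."""
    return amount * math.exp(-k * delay)

def hyperbolic(amount, delay, k):
    """Hyperbolic discounting (Mazur 1987): amount / (1 + k * delay)."""
    return amount / (1.0 + k * delay)

def quasi_hyperbolic(amount, delay, beta, delta):
    """Beta-delta discounting (Laibson 1997): every delayed reward is scaled by beta."""
    return amount if delay == 0 else beta * (delta ** delay) * amount

def prefers_later(value_fn, sooner, later, shift=0):
    """True if the later option is valued more after adding `shift` days to both delays."""
    (a_s, d_s), (a_l, d_l) = sooner, later
    return value_fn(a_l, d_l + shift) > value_fn(a_s, d_s + shift)

# Hypothetical options (dollars, days) and hypothetical parameter values.
sooner, later = (20.0, 0), (30.0, 30)
models = {
    "exponential": lambda a, d: exponential(a, d, k=0.02),
    "hyperbolic": lambda a, d: hyperbolic(a, d, k=0.03),
    "quasi-hyperbolic": lambda a, d: quasi_hyperbolic(a, d, beta=0.6, delta=0.999),
}
reversals = {}
for name, fn in models.items():
    near = prefers_later(fn, sooner, later, shift=0)    # sooner option is immediate
    far = prefers_later(fn, sooner, later, shift=60)    # both options pushed 60 days out
    reversals[name] = (near != far)
    print(f"{name}: later preferred now={near}, with +60 days={far}")
```

With these illustrative values, only the exponential chooser makes the same choice in both cases; both (quasi-)hyperbolic choosers take the sooner reward when it is immediate and the later reward when both options are in the future.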

Hyperbolic and quasi-hyperbolic discounting are descriptive—or in the language of economics positive—models of behavior, rather than hypotheses about psychological or neural mechanisms. However, perhaps the best known quasi-hyperbolic model, the β–δ model (Laibson 1997), has inspired the mechanistic hypothesis that discounting behavior arises from the interaction of two competing systems: 1) an impatient emotional system (“β”) that exclusively or primarily values immediate rewards and 2) a patient rational system (“δ”) that values both immediate and delayed rewards. Previous imaging studies have found some support for this hypothesis, associating each of the two systems with specific brain regions (McClure et al. 2004a, 2007). In these studies the β system was associated with ventral striatum, ventromedial prefrontal, and posterior cingulate cortex, since these regions exhibited greater activity when an immediate reward could be chosen, compared with when all potential rewards were delayed. The δ system was associated with dorsolateral prefrontal and posterior parietal cortex, since these regions exhibited increased activity for all choices, with greater activity when the choice was more difficult or when a delayed reward was selected. To distinguish this behavioral–neural model from the behavioral quasi-hyperbolic model, we refer to this specific behavioral–neural model as the “separate neural systems” hypothesis.

We recently reported evidence inconsistent with the separate neural systems hypothesis (Kable and Glimcher 2007). In our study, participants chose between a fixed immediate gain and a second gain that varied in amount and delay from trial to trial. We found that blood oxygenation level dependent (BOLD) activity in ventral striatum, medial prefrontal cortex, and posterior cingulate cortex (all part of the proposed β system) was correlated with the subject-specific subjective value of the delayed reward, regardless of whether that reward was to be obtained in hours or in months. We hypothesized that rather than representing an impulsive signal that exclusively or primarily values immediate rewards, these regions encode the subjective value of rewards at any delay. Other recent studies have also found that these brain regions are sensitive to changes in the value of delayed rewards, in a manner that is not predicted by the separate neural systems hypothesis (Ballard and Knutson 2009; Pine et al. 2009; Tanaka et al. 2004).

An often-noted drawback of our previous study, however, was that participants always chose between a future gain and a fixed immediate reward. Thus we could not test one of the key behavioral implications of (quasi-)hyperbolic discounting: that people will make impulsive preference reversals. Nor could we test a key neural claim regarding the hypothesized β system: that mean neural activity is greater when an immediate reward is available compared with when only delayed rewards can be chosen. On a more global level, we were also not able to test whether the value signals we observed were related to the relative subjective value of the later gain compared with the sooner reward or whether these signals encoded subjective value on a more absolute scale.

Here we report two experiments that allow us to test the key behavioral implications of (quasi-)hyperbolic models and the key neural predictions of the separate neural systems hypothesis. Our behavioral findings are inconsistent with (quasi-)hyperbolic models and our neural findings are inconsistent with the separate neural systems hypothesis (see also Glimcher et al. 2007). Behaviorally, our subjects did exhibit decreasing impatience as the later reward was delayed in time [the first of the two choice anomalies¹ predicted by (quasi-)hyperbolic models]. However, this was a function of the difference in delay between two rewards. Participants overweighted the value of the soonest available reward regardless of whether it was offered immediately or after a delay. The degree of their decreasing impatience was thus anchored to the soonest possible reward, not simply to the present. This meant that participants in our study did not make impulsive preference reversals in a consistent or statistically significant fashion [the second choice anomaly predicted by (quasi-)hyperbolic models].

Neurally, we did not find robust increases in mean activity in the proposed β areas when an immediate reward could be chosen, compared with when only delayed rewards were available, nor did we find evidence consistent with the existence of a δ system. We did, however, replicate our previous finding that BOLD activity in ventral striatum, medial prefrontal, and posterior cingulate cortex encodes the subjective value of immediate and delayed rewards. Further, we found that these regions encode subjective value on an absolute scale that depends on the delay from the present, rather than only encoding the relative subjective value of the later reward compared with the sooner reference, as might have been inferred from behavior. Our results question the generality of both standard (quasi-)hyperbolic behavioral models (although not necessarily the generality of hyperbolic discounting) and the proposal that separate neural systems value immediate and delayed rewards. We propose an alternative behavioral–neural model that can account for the behavioral and neural data we have gathered. In this “as soon as possible” (ASAP) model (Glimcher et al. 2007), discounting arises from a unitary value signal that declines with the delay from the present and is also modulated by what other options are currently available. This model can account for our behavioral findings that impatience declines as a function of delay from the soonest possible reward and that impulsive preference reversals do not occur, and our neural findings that activity accurately tracks the overall delay to all rewards.
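The behavioral prediction of the ASAP account can be sketched as follows. This is a minimal illustration that assumes the form implied by the description above: a hyperbolic decline measured from the delay of the soonest available reward, multiplied by a gain term. The function names, the `gain` placeholder (fixed at 1 here, which gives relative subjective value), and all numbers are assumptions made for illustration.

```python
def asap_value(amount, delay, soonest_delay, k, gain=1.0):
    """Hyperbolic decline measured from the soonest available reward.

    `gain` is a placeholder for an overall scaling factor; gain=1 gives
    relative subjective value, which is all that matters for choice here.
    """
    return gain * amount / (1.0 + k * (delay - soonest_delay))

def asap_prefers_later(sooner, later, k):
    """True if the later option has the higher ASAP value."""
    (a_s, d_s), (a_l, d_l) = sooner, later
    soonest = min(d_s, d_l)
    return asap_value(a_l, d_l, soonest, k) > asap_value(a_s, d_s, soonest, k)

k = 0.03  # hypothetical discount rate per day
choice_now = asap_prefers_later((20.0, 0), (30.0, 30), k)    # sooner option is immediate
choice_60 = asap_prefers_later((20.0, 60), (30.0, 90), k)    # same pair, 60 days later
print(choice_now, choice_60)
```

Because only the interval between the two options enters the comparison, adding a common delay to both options leaves the choice unchanged, so this sketch produces no preference reversal.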

A conference proceeding containing a preliminary description of these conclusions was previously published (Glimcher et al. 2007).

METHODS

Participants

Twenty-five paid volunteers participated in the first experiment (13 women, 12 men, all right-handed; mean age = 22.2 yr). One of these volunteers was unable to return for the scanning session. Data from two participants' scanning sessions were discarded because these individuals' intertemporal choice behavior was not stable across sessions and this led to an underconstrained estimate of the discount function in the scanning session. Thus data from 25 individuals were included in the behavioral analyses, whereas data from 22 individuals were included in the neural analyses. Four of these 22 had previously participated in a similar experiment in our laboratory (Kable and Glimcher 2007). Ten participants (3 women, 7 men; mean age = 22.4 yr) with the highest discount rates from the first experiment were selected for participation in the second experiment. All 10 participants were included in both the behavioral and neural analyses. Two of these 10 had also participated in the previously published study (Kable and Glimcher 2007). We selected only these 10 individuals to participate because high discount rates provide more statistical power in behavioral and neural tests of hyperbolic discounting, since it is only when discount functions are steep that the distinction between exponential and (quasi-)hyperbolic is detectable. All participants gave informed consent in accordance with the procedures of the University Committee on Activities Involving Human Subjects of New York University.

Experimental design

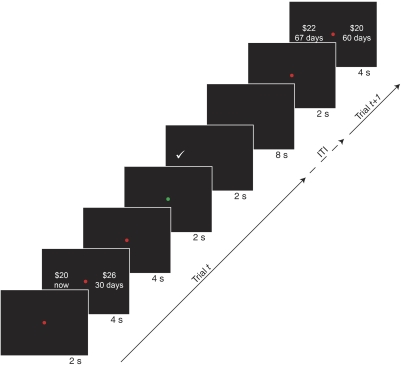

A trial began with a red dot appearing in the center of the screen for 2 s (Fig. 1). Then, the two choice options were presented, one on each side of the red dot. After 4 s, the two options disappeared. After an additional 4 s, the red dot turned green, which cued participants to indicate their choice. Participants indicated their choice by pressing a button with the hand corresponding to the side of the screen on which their choice appeared.

Fig. 1.

The sequence of events within a trial is shown. First, a red dot appeared in the center of the screen for 2 s, then the 2 options were presented on either side of the screen for 4 s. The options disappeared and, after another 4 s, the red dot in the center turned green to cue participants to indicate their choice. Participants pushed a button with their left (right) hand to choose the left (right) option and received feedback indicating the option chosen. Each trial was a fixed length of 22 s. The left/right location of the sooner option was randomized across trials and the trials from the 2 experimental conditions were completely intermixed.

Each trial involved a choice between a smaller amount of money paid at a sooner time and a larger amount paid at a later time. In the first experiment, there were two conditions. In the NOW condition, the choice was between $20 available immediately and a larger amount available at a later date. The larger-later option was constructed using one of five delays (1 day to 120 days) and one of five amounts ($21–$116). Thus there were 25 unique choices in this condition. In the 60 DAY condition, the choices were constructed by adding a fixed delay of 60 days to both options in the NOW condition. Thus choices in this condition were between $20 in 60 days and $21–$116 in 61–180 days. Each choice was presented four times over the course of a session, for a total of 100 choices per condition and 200 choices per session. The side of the screen on which each option was presented was counterbalanced across the four repetitions. Delays were the same for each participant. Amounts were the same for each participant in the initial two behavioral sessions, and chosen individually for each participant in the scanning session based on their previous behavior so that (as much as possible) an approximately equal number of sooner and later options were chosen. The five amounts chosen were the five predicted indifference points for the delays used, based on the participant's previous sessions and rounded to the nearest whole dollar, or those predicted for a discount rate of kASAP = 0.005 if the participant discounted at a lower rate than this (see Eq. 3 in the following text). This important manipulation ensured that the sooner and later options were of roughly equal average value for each subject. Participants were not told how options would change across sessions. Critically, choices from the two conditions (NOW and 60 DAY) were randomly intermixed and participants were not explicitly informed of the structure of the sets of choices offered to them.
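The structure of a session in the first experiment can be sketched as below. This is an illustration under stated assumptions rather than the code used in the study: the five delay values are hypothetical (the text gives only their range), indifference amounts are assumed to follow amount = 20 × (1 + k × delay), and the counterbalancing scheme simply alternates sides across the four repetitions.

```python
import random

SOONER_AMOUNT = 20                 # dollars, fixed sooner reward in experiment 1
DELAYS = [1, 10, 30, 60, 120]      # days; hypothetical values within the stated 1-120 day range
K_FLOOR = 0.005                    # minimum discount rate used to set amounts

def later_amounts(k, delays=DELAYS):
    """Predicted indifference amounts, assuming amount = 20 * (1 + k * delay),
    rounded to whole dollars."""
    k = max(k, K_FLOOR)
    return [round(SOONER_AMOUNT * (1 + k * d)) for d in delays]

def build_session(k, shift=60, repeats=4, seed=0):
    """Cross 5 delays with 5 amounts, repeat each choice, and add the 60 DAY copies."""
    amounts = later_amounts(k)
    trials = []
    for condition, offset in (("NOW", 0), ("60 DAY", shift)):
        for delay in DELAYS:
            for amount in amounts:
                for rep in range(repeats):
                    trials.append({
                        "condition": condition,
                        "sooner": (SOONER_AMOUNT, offset),
                        "later": (amount, delay + offset),
                        # alternate sides so each choice appears twice per side
                        "sooner_side": "left" if rep % 2 == 0 else "right",
                    })
    random.Random(seed).shuffle(trials)   # intermix the two conditions
    return trials

session = build_session(k=0.04)
print(later_amounts(0.04), len(session))
```

For the illustrative rate k = 0.04, the amounts span $21 to $116, and the session contains 100 trials per condition.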

In the second experiment, the NOW condition again involved choices between $20 available immediately and $21–$86 available in 1–120 days. The SCALED 60 DAY condition, however, involved choices between a fixed amount greater than $20 (ranging from $32 to $56 across participants) in 60 days and an even larger amount (up to $224) in 61–180 days. Amounts for the SCALED 60 DAY condition were chosen as follows: First, for each participant, the fixed amount in 60 days was chosen based on that individual's previously acquired behavioral data as the smallest amount that the individual reliably preferred in 60 days over $20 immediately. In this way, we identified a reward at 60 days that was roughly equivalent in subjective value to a reward of $20 now. Then, the other five amounts paired with the larger-later rewards were scaled in the same manner; if subjects were roughly indifferent between $20 immediately and $35 at a delay of 60 days, all later rewards were increased by 75%. So, for example, the NOW condition might involve a choice between $20 now and {$21, $22, $26, $32, $44} in 1–120 days, whereas the SCALED 60 DAY condition involved a choice between $35 and {$36, $37, $46, $56, $77} in 61–180 days.

In the first experiment, subjects participated in two 1 h behavioral sessions and one 2 h scanning session. Sessions were completed over 6–197 days (median = 34 days), with consecutive sessions separated by 2–191 days (median = 14 days). The 10 participants in the second experiment completed an additional behavioral session of the first experiment, 8–427 days after the first scanning session (median = 42 days). They then completed the second experiment, which involved one 2 h scanning session, after an additional 2–62 days (median = 8 days). Since people's decisions involving real gains may differ from those involving hypothetical gains (Camerer and Hogarth 1999; Collier and Williams 1999; Smith and Walker 1993), participants were paid according to four randomly selected trials per session (except for the first behavioral session, which subjects were informed involved only hypothetical choices), using commercially available prepaid debit cards (see Kable and Glimcher 2007 for details). The four subjects who had previously participated in a similar experiment in our laboratory skipped the first session of the first experiment, which involved purely hypothetical choices.

Behavioral analysis

In each condition, we estimated a discount function that described how the subjective value of the larger-later reward declined as the delay from the fixed sooner reward increased. We estimated these discount functions individually for each participant. First, for the five amounts measured at each delay interval (in each condition), we estimated an indifference point from that individual's choices at that delay. This indifference point was estimated by fitting a logistic curve to the proportion of choices of the larger-later reward, plotted as a function of the larger-later amount. The indifference point was thus the amount, at that delay interval, for which the individual would be predicted to choose the smaller-sooner and larger-later rewards with equal frequency. To obtain a discount curve, these five delay/amount indifference points were then divided into $20 (or the amount of the fixed sooner reward in the SCALED 60 DAY data set) and a single-parameter hyperbolic (Eq. 3) or exponential (Eq. 4) function was fit to the resulting curve.
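The two-stage estimation described above can be sketched on synthetic data. The sketch uses plain grid searches in place of a proper optimizer, and every number (delays, amounts, slopes, the generating discount rate) is hypothetical; it is meant only to show the order of operations, not to reproduce the fitting routine used in the study.

```python
import math

def logistic(x, midpoint, slope):
    """Probability of choosing the larger-later reward at amount x."""
    return 1.0 / (1.0 + math.exp(-slope * (x - midpoint)))

def fit_indifference(amounts, p_later, slopes=(0.1, 0.2, 0.5, 1.0, 2.0), steps=200):
    """Least-squares grid search; returns the amount chosen 50% of the time."""
    span = max(amounts) - min(amounts)
    lo, hi = min(amounts) - 0.5 * span, max(amounts) + 0.5 * span
    best_err, best_mid = float("inf"), lo
    for i in range(steps + 1):
        mid = lo + (hi - lo) * i / steps
        for slope in slopes:
            err = sum((logistic(a, mid, slope) - p) ** 2 for a, p in zip(amounts, p_later))
            if err < best_err:
                best_err, best_mid = err, mid
    return best_mid

def fit_hyperbolic_k(delays, fractions, k_max=0.2, steps=2000):
    """Grid search for k in fraction = 1 / (1 + k * delay)."""
    best_err, best_k = float("inf"), 0.0
    for i in range(steps + 1):
        k = k_max * i / steps
        err = sum((1.0 / (1.0 + k * d) - f) ** 2 for d, f in zip(delays, fractions))
        if err < best_err:
            best_err, best_k = err, k
    return best_k

# Synthetic chooser with a known (hypothetical) discount rate.
true_k, sooner_amount = 0.02, 20.0
delays = [1, 10, 30, 60, 120]
amounts = [21, 24, 32, 44, 68]
indifference = []
for d in delays:
    true_mid = sooner_amount * (1 + true_k * d)
    p_later = [logistic(a, true_mid, 0.5) for a in amounts]   # noiseless choice proportions
    indifference.append(fit_indifference(amounts, p_later))

# Divide the sooner amount by each indifference amount to get the discount curve.
fractions = [sooner_amount / amt for amt in indifference]
k_hat = fit_hyperbolic_k(delays, fractions)
print(indifference, k_hat)
```

On these noiseless synthetic choices the recovered rate lies close to the generating value; with real choice data the logistic fit would typically be done by maximum likelihood on the individual trials.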

This follows standard practice in the psychological literature on discounting (Green and Myerson 2004; Mazur 1987). We should note that interpreting these curves as pure discount functions relies on the (almost certainly false) assumption that the subjective value, or utility, of money (independent of delay) increases linearly with objective amount. Although this assumption is a standard one in previous experimental studies of discounting, we do not expect it to hold generally outside a limited range of monetary amounts, and it is likely violated to some degree in our data set. Violations of this assumption, however, would not alter the fact that these curves reflect the subjective desirability of delayed gains, although such violations would mean that these functions are better described as discounted utility functions because they incorporate effects of amount as well as delay.²

Imaging

Imaging data were collected with a Siemens Allegra 3T head-only scanner equipped with a head coil from Nova Medical (Wakefield, MA). T2*-weighted functional images were collected using an echoplanar imaging sequence (repetition time [TR] = 2 s, time to echo [TE] = 30 ms, 35 axial slices acquired in ascending interleaved order, 3 × 3 × 3 mm, 64 × 64 matrix in a 192 mm field of view [FOV]). Each scan consisted of 275 images. The first two images were discarded to avoid T1 saturation effects. There were 25 choice trials during each scan; each trial lasted 14 s with an 8 s intertrial interval (Fig. 1). Each experiment involved one session of eight scans. High-resolution, T1-weighted anatomical images were also collected using a magnetization-prepared rapid gradient-echo sequence (TR = 2.5 s, TE = 3.93 ms, inversion time [TI] = 900 ms, flip angle = 8°, 144 sagittal slices, 1 × 1 × 1 mm, 256 × 256 matrix in a 256 mm FOV).
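The timing figures above are mutually consistent, as a quick arithmetic check shows. The constants are taken from the text; the variable names are ours.

```python
TR_S = 2                  # repetition time in seconds
TRIAL_S = 14              # trial duration in seconds
ITI_S = 8                 # intertrial interval in seconds
TRIALS_PER_SCAN = 25
SCANS_PER_SESSION = 8

trial_total_s = TRIAL_S + ITI_S                       # 22 s from one trial onset to the next
volumes_per_trial = trial_total_s // TR_S             # 11 volumes per trial
volumes_per_scan = TRIALS_PER_SCAN * volumes_per_trial
trials_per_session = TRIALS_PER_SCAN * SCANS_PER_SESSION

print(volumes_per_trial, volumes_per_scan, trials_per_session)
```

The 275 volumes per scan and 200 trials per session match the image count given here and the number of choices per session given in the experimental design.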

fMRI analysis

Functional imaging data were analyzed using BrainVoyager QX (Brain Innovation, Maastricht, The Netherlands). Functional images were sinc-interpolated in time to adjust for staggered slice acquisition, corrected for any head movement by realigning all volumes to the first volume of the scanning session using six-parameter rigid-body transformations, and detrended and high-pass filtered (cutoff of 3 cycles/scan, or 0.0055 Hz) to remove low frequency drift in the functional magnetic resonance imaging (fMRI) signal. Images were then coregistered with each person's high-resolution anatomical scan, rotated into the anterior commissure–posterior commissure plane, and normalized into Talairach space using piecewise affine Talairach grid scaling. All spatial transformations of the functional data used trilinear interpolation. Before group-level random-effects analyses, data were also spatially smoothed with a Gaussian kernel of 8 mm (full-width half-maximum).

Statistical analyses were performed using a general linear model, estimated with ordinary least squares. To address the temporal autocorrelation in the noise, data were “prewhitened” by estimating a first-order autoregressive [AR(1)] model on the residuals. We used a variant of a “finite-impulse response” (FIR) or “deconvolution” model. In our main analysis, the two conditions in each experiment (NOW and 60 DAY or SCALED 60 DAY) were modeled separately. For each condition, the model included ten covariates that fit the mean activity, across all trials, for each of the first ten time points in a trial. Each of these ten covariates was a one-TR impulse of unit height, occurring at a specific time point in each trial. Ten additional covariates fit the deviations from the mean at each time point that were correlated with the relative subjective value (SVASAPg=1 in Eq. 3 in the following text) of the later reward across trials. Each of these ten covariates was a one-TR impulse, occurring at a specific time point in each trial, with a height corresponding to the mean-centered relative subjective value of the later reward on that trial, as determined individually for each subject using Eq. 3. We chose such a FIR model because it could flexibly capture subjective value effects at any point in the trial, without making assumptions about their precise timing a priori (i.e., for the duration of option presentation, during the delay, preceding the motor response, etc.). This model also did not require any assumptions about the shape of the hemodynamic response function.
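The structure of this design matrix can be sketched for a single scan. This is an illustration only: the trial ordering and the subjective values are hypothetical, nuisance terms are omitted, and `fir_block` is our name for a helper that builds the 20 covariates for one condition (ten mean impulses followed by ten value-modulated impulses).

```python
def fir_block(onsets, values, n_volumes, n_points=10):
    """Build one condition's covariates: n_points unit impulses for mean activity,
    then n_points impulses scaled by the mean-centered subjective value."""
    mean_value = sum(values) / len(values)
    rows = [[0.0] * (2 * n_points) for _ in range(n_volumes)]
    for onset, value in zip(onsets, values):
        for t in range(n_points):
            if onset + t < n_volumes:
                rows[onset + t][t] = 1.0                            # mean activity at time point t
                rows[onset + t][n_points + t] = value - mean_value  # parametric modulation
    return rows

# Hypothetical scan: 25 trials, 11 volumes from one trial onset to the next.
n_volumes, volumes_per_trial = 275, 11
onsets = [i * volumes_per_trial for i in range(25)]
conditions = ["NOW" if i % 2 == 0 else "60 DAY" for i in range(25)]   # hypothetical ordering
values = [12.0 + (i % 5) for i in range(25)]                          # hypothetical relative SVs

blocks = []
for cond in ("NOW", "60 DAY"):
    idx = [i for i, c in enumerate(conditions) if c == cond]
    blocks.append(fir_block([onsets[i] for i in idx], [values[i] for i in idx], n_volumes))

# Concatenate the two conditions column-wise: 20 + 20 = 40 covariates.
design = [now_row + day_row for now_row, day_row in zip(*blocks)]
print(len(design), len(design[0]))
```

Because each modulated covariate is mean-centered within its condition, it captures only trial-to-trial deviations from the mean response at that time point.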

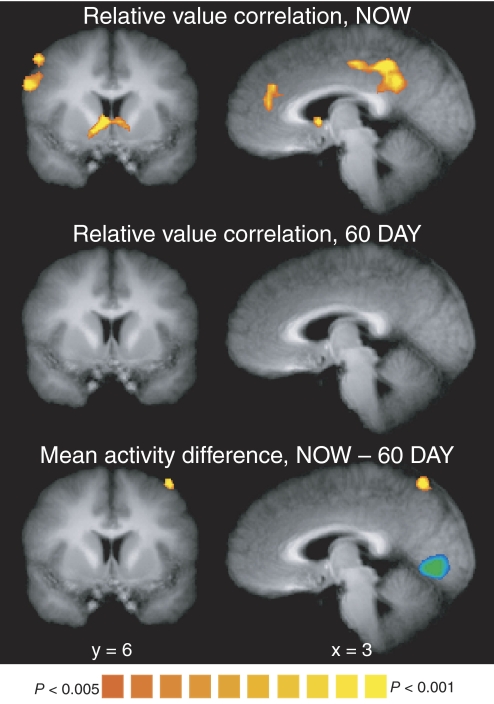

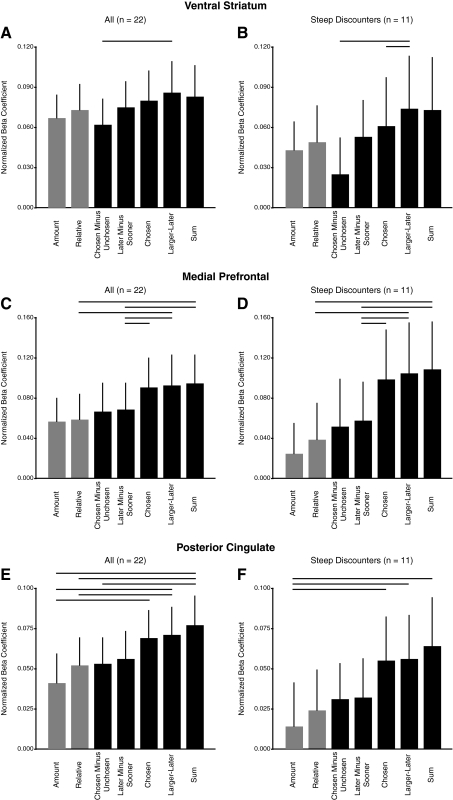

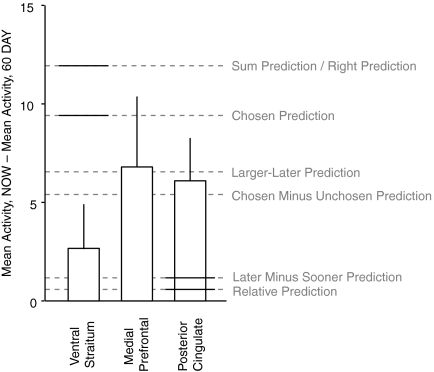

Figure 4 shows three effects that were of primary interest: the effect of relative subjective value (SVASAPg=1) at time points 4–6 in the NOW condition, the same effect in the 60 DAY condition, and the difference in mean activity at time points 4–6 between the two conditions. We focus on this particular time window based on the results of our previous study; there were no additional significant effects at other points in the trial. Figure 8 reports the effects of mean activity at time points 4–6 for both conditions (NOW + 60 DAY), as well as the results of two additional analyses, which replaced the relative subjective value regressor in the two conditions with either 1) the participant's reaction time on that trial or 2) a binary variable indicating the participant's choice (later or sooner reward). The additional analyses in Figs. 6 and 7 examined both conditions (NOW and 60 DAY) together. These analyses use the same FIR structure (with 20 covariates modeling both conditions combined rather than 40 modeling the two separately), with a subjective value covariate (SVASAP) constructed assuming different combination rules (see Table 2 in the following text). In each case, this covariate assumes the ASAP discounting function (Eq. 2) with the gain factor described in Eq. 5, although the covariates differ in how the subjective values of the two options are combined (Table 2). Two additional analyses are included for comparison: one that tests for correlations with the amount of the delayed reward alone and one that tests for correlations with relative subjective value alone (i.e., SVASAPg=1, where the gain factor in Eq. 2 is fixed at one). To be able to compare beta coefficients across analyses in Figs. 6 and 7, as well as across experiments in Fig. 9, the values used to construct each covariate were mean-centered and divided by the SD. The beta coefficients on such normalized covariates have been used previously for model comparison in fMRI (Hampton et al. 2008; Hare et al. 2008).

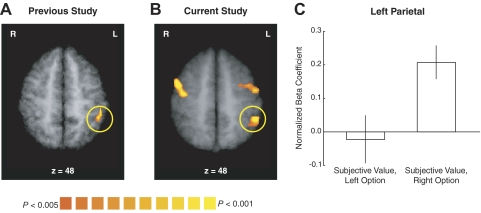

Fig. 4.

Group random-effects results are shown for the correlation with relative subjective value (i.e., SVASAPg=1) in the NOW (top) or 60 DAY (middle) condition and for the difference in mean activity between the NOW and 60 DAY conditions (bottom). Each contrast is for time points 4–6 in the trial, which corresponds to a neural effect at the time both options are shown. Maps are thresholded at P < 0.005 (uncorrected), with a spatial extent threshold determined for each map so that cluster-level inference is at P < 0.05 (corrected). Ventral striatum (coronal) and medial prefrontal and posterior cingulate cortex (sagittal) all exhibited a significant correlation with relative subjective value in the NOW condition, but no significant correlation in the 60 DAY condition and no significant differences in mean activity.

Fig. 8.

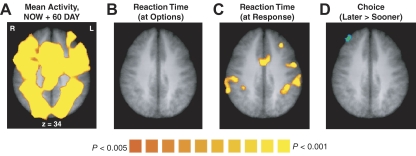

Group random-effects results are shown, for increased activity compared with rest in both the NOW and 60 DAY conditions (A), for the correlation between activity and reaction time (B and C), and for greater activity when the later reward was chosen (D). The contrasts in A, B, and D are for time points 4–6 in the trial, which corresponds to a neural effect at the time both options are shown. The contrast in C is for time points 8–10 in the trial, which corresponds to a neural effect at the time when the participant responds. All maps are thresholded at P < 0.005 (uncorrected), with a spatial extent threshold so that cluster-level inference is at P < 0.05 (corrected).

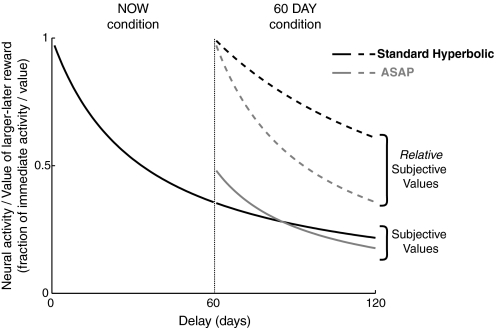

Fig. 6.

A model comparison is shown for the ventral striatum (A and B), medial prefrontal (C and D), and posterior cingulate (E and F) ROIs. Normalized beta coefficients for the subjective value covariate [specifically, SVASAP with g(DASAP) equal to Eq. 5] are plotted for 7 different combination rules, which differ in how they assume the subjective values of 2 options are combined. These coefficients are for the contrast involving time points 4–6 in the trial, which corresponds to a neural effect at the time both options are shown. Comparisons including all participants are shown in A, C, and E. Since the correlation between the predictions of different rules is high in patient participants, comparisons for the 11 most impatient participants are shown in B, D, and F. Lines indicate paired comparisons where one combination rule provides a significantly better fit (P < 0.05, paired t-test, one-tailed).

Fig. 7.

A: group random-effects results from our previous study are shown, illustrating a correlation with the subjective value of the changing delayed reward in left anterior parietal cortex. These results are from the analysis assuming a hemodynamic response function reported in the Supplemental Materials of Kable and Glimcher (2007). The map is thresholded at P < 0.005 (uncorrected), with a spatial extent threshold of 100 mm³. B: group random-effects results from the current study are shown, for the correlation with relative subjective value in the NOW condition. This contrast is for time points 4–6 in the trial, which corresponds to a neural effect at the time both options are shown. The map is thresholded at P < 0.005 (uncorrected), with a spatial extent threshold so that cluster-level inference is at P < 0.05 (corrected). Correlations in left anterior parietal cortex as well as premotor areas bilaterally can be seen in this slice. C: results from an analysis in which the subjective values of the left and right options were modeled separately. As in Fig. 6, we calculate the subjective values of the left and right options using the ASAP model [specifically, SVASAP with g(DASAP) equal to Eq. 5] and model the NOW and 60 DAY conditions together. In the left anterior parietal region shown in B, only the correlation with the subjective value of the right option is significant and this correlation is significantly greater than that with the left option. This contrast is for time points 4–6 in the trial.

Table 2.

Possible combination rules for the subjective values of two options

| BOLD Combination Rule | Value |
|---|---|
| “Sum” | SV1 + SV2 |
| “Larger-Later” | SVL |
| “Later Minus Sooner” | SVL − SVS |
| “Chosen” | SVC |
| “Chosen Minus Unchosen” | SVC − SVU |
| “Right” or “Left” | SVR or SVL |

The superscript on SV is omitted here for clarity, since the combinations could apply to any subjective value function (Eqs. 1–4). The subscripts refer to how the two options are distinguished. Further possible combinations are listed in Supplemental Table S1 and are used in Supplemental Figs. S1 and S2.
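The combination rules in Table 2 can be expressed as a small function that maps the two options' subjective values on a trial to each candidate covariate. The sketch below is illustrative: the function and argument names are ours, the trial values are hypothetical, and the use of the sample SD for normalization is an assumption (the text says only that covariates were mean-centered and divided by the SD).

```python
import statistics

def combination_covariates(sv_sooner, sv_later, later_side, chose_later):
    """Return one trial's value under each Table 2 rule.
    later_side is 'left' or 'right'; chose_later is True or False."""
    sv_chosen, sv_unchosen = (sv_later, sv_sooner) if chose_later else (sv_sooner, sv_later)
    sv_left, sv_right = (sv_later, sv_sooner) if later_side == "left" else (sv_sooner, sv_later)
    return {
        "Sum": sv_sooner + sv_later,
        "Larger-Later": sv_later,
        "Later Minus Sooner": sv_later - sv_sooner,
        "Chosen": sv_chosen,
        "Chosen Minus Unchosen": sv_chosen - sv_unchosen,
        "Right": sv_right,
        "Left": sv_left,
    }

def zscore(xs):
    """Mean-center and divide by the SD (sample SD assumed)."""
    m, sd = statistics.mean(xs), statistics.stdev(xs)
    return [(x - m) / sd for x in xs]

# Hypothetical trials: (SV of sooner, SV of later, side of later option, chose later?)
trials = [(20.0, 15.0, "left", False), (20.0, 24.0, "right", True),
          (12.0, 10.0, "right", False), (12.0, 16.0, "left", True)]
per_trial = [combination_covariates(*t) for t in trials]
normalized = {rule: zscore([row[rule] for row in per_trial]) for rule in per_trial[0]}
print(per_trial[0])
```

Normalizing each covariate in this way puts the resulting beta coefficients on a common scale, which is what allows them to be compared across rules.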

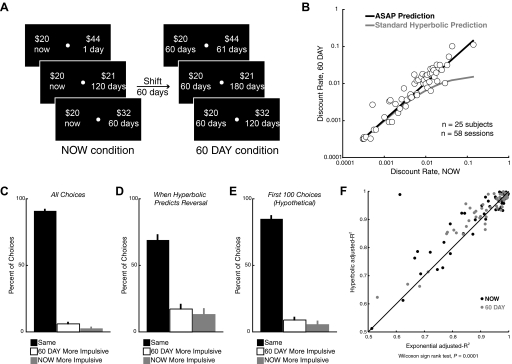

Fig. 9.

A: schematic illustrating how the rewards in the SCALED 60 DAY condition were determined in experiment 2. For each participant, a discount function was estimated in the NOW condition in experiment 1, which describes how the subjective value of a reward declines with delay compared with an immediate reward reference. This hyperbolic discount function corresponds to a line in indifference point space, which connects the amount of money at each delay that is subjectively equivalent to $20 immediately. In the second experiment, the amount of the fixed 60 day reward was chosen so that that reward would be subjectively equivalent to $20 now, according to that participant's discount function. In the example, this amount was $35 in 60 days. B: schematic illustrating the conditions in experiment 1 and experiment 2. C–E: for the 10 subjects that participated in both experiments, the normalized beta coefficients are shown for the correlation with relative subjective value in the NOW and 60 DAY conditions in the first experiment and for the SCALED 60 DAY condition in the second experiment. Each contrast is for time points 4–6 in the trial, which corresponds to a neural effect at the time both options are shown. Scaling the amounts in experiment 2 recovers a correlation with relative subjective value in ventral striatum (C), medial prefrontal (D), and posterior cingulate cortex (E).

As discussed in the following text, some of our analyses speak to an important issue, which is distinguishing neural signals that track subjective value from those that track only a component of subjective value, such as the reward magnitude or the discount factor associated with the delay. If a region encoded only magnitude, then it should show a similar correlation with relative subjective value in the NOW and 60 DAY conditions (Fig. 4), since the magnitudes are exactly the same in these conditions. Also, activity in such a region should be best fit by the magnitude alone model in the comparison reported in Fig. 6. Another, perhaps stronger, test would be to include magnitude, discount factor, and subjective value in the same statistical model (Ballard and Knutson 2009; Pine et al. 2009). We also estimated several such combined models: 1) a model including all three variables (magnitude, discount factor, and subjective value) with no orthogonalization; 2) a model in which discount factor was orthogonalized with respect to magnitude and subjective value was orthogonalized with respect to magnitude and discount factor, so that all shared variance was attributed to the magnitude covariate; and 3) a model in which discount factor was orthogonalized with respect to subjective value and magnitude was orthogonalized with respect to subjective value and discount factor, so that all shared variance was attributed to the subjective value covariate. Since the results of these analyses were not conclusive, we do not discuss them in detail here, although the trends observed were consistent with the conclusions drawn here. We suspect that some of the difference between our results and those of other investigators may be due to a higher correlation between the three variables in our data set, driven by the presence of several very patient individuals (if a person does not discount at all, the reward magnitude is the subjective value).
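The two orthogonalization orders described above can be illustrated with sequential Gram-Schmidt on mean-centered covariates. This is a sketch under stated assumptions: the trial values and discount rate are hypothetical, subjective value is taken to be magnitude times a hyperbolic discount factor, and the helper names are ours.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def center(v):
    m = sum(v) / len(v)
    return [x - m for x in v]

def orthogonalize_in_order(columns):
    """Gram-Schmidt: each column keeps only the part not explained by the columns
    before it, so shared variance is credited to the earliest column."""
    result = []
    for col in columns:
        out = list(col)
        for basis in result:
            denom = dot(basis, basis)
            if denom > 0:
                coef = dot(out, basis) / denom
                out = [o - coef * b for o, b in zip(out, basis)]
        result.append(out)
    return result

# Hypothetical trials.
magnitudes = [21.0, 28.0, 44.0, 68.0, 116.0, 30.0, 50.0, 90.0]
delays = [1, 10, 30, 60, 120, 120, 10, 30]
k = 0.02
discount = [1.0 / (1.0 + k * d) for d in delays]
value = [m * f for m, f in zip(magnitudes, discount)]
mag_c, disc_c, val_c = center(magnitudes), center(discount), center(value)

# Model 2: shared variance goes to magnitude.
mag_first = orthogonalize_in_order([mag_c, disc_c, val_c])
# Model 3: shared variance goes to subjective value.
val_first = orthogonalize_in_order([val_c, disc_c, mag_c])
print(dot(mag_first[0], mag_first[2]), dot(val_first[0], val_first[2]))
```

In each ordering the first covariate is left untouched and the later ones are reduced to their unique components, which is why the two models can attribute the same shared variance to different variables.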

Group random-effects analyses were performed using the summary statistics approach, which tests whether the mean effect at each voxel is significantly different from zero across subjects. We report all effects that were significant at P < 0.05 (corrected) using cluster-level inference. Contrast maps were initially thresholded at P < 0.005 (uncorrected) and the appropriate spatial extent threshold for corrected cluster-level inference at P < 0.05 was determined for each contrast. Spatial extent thresholds were determined using Monte Carlo simulations on noisy data sets that were artificially generated with the same spatial smoothness as the contrast maps. The cluster threshold estimator implemented in BrainVoyager is thus conceptually similar to the AlphaSim program in Analysis of Functional NeuroImages (AFNI). To investigate whether the whole brain analysis might have missed weaker effects, we also defined regions of interest (ROIs) in ventral striatum, medial prefrontal, and posterior cingulate cortex. These ROIs encompassed all of the voxels in the significant cluster in that region for the relative subjective value effect in the NOW condition (ventral striatum ROI = 1,115 mm³, medial prefrontal ROI = 1,065 mm³, posterior cingulate ROI = 8,695 mm³) and we took the average signal across all of these voxels as the time series for that ROI. We used the relative subjective value effect in the NOW condition because this was the only contrast that generated significant effects in these regions and because it was conceptually identical to the effects reported in our previous study. Also, defining the ROIs in this way does not bias the two statistical tests we perform using the same data set—whether there is a significant correlation with relative subjective value in the 60 DAY condition and whether there is a significant difference in mean activity between the NOW and 60 DAY conditions. We also use the same ROIs in analyzing the independent data set from experiment 2.

RESULTS

Hyperbolic and “as soon as possible” discounting

(Quasi-)hyperbolic discounting models assume that subjective value declines roughly hyperbolically relative to the present. Specifically, the standard hyperbolic function for the subjective value is

$$SV_{H} = \frac{A}{1 + k_{H} D} \tag{1}$$

where SVH is subjective value estimated from this model, A is the objective amount of the reward, D is the delay to the reward, and kH is a subject-specific constant. However, as several others have noted (Green et al. 2005; Read 2001; Read and Roelofsma 2003; Scholten and Read 2006), most previous studies have used choices where one reward is always immediately available (similar to our NOW condition). Thus at least in principle, one alternative to (quasi-)hyperbolic models is one in which subjective value declines hyperbolically relative to the soonest possible reward, rather than with regard to the present. We call this possibility “as soon as possible” (ASAP) discounting, to distinguish it from (quasi-)hyperbolic discounting (Glimcher et al. 2007). The most general form of an ASAP function for subjective value is

$$SV_{ASAP} = g(D_{ASAP}) \times \frac{A}{1 + k_{ASAP}\,(D - D_{ASAP})} \tag{2}$$

where SVASAP is the subjective value estimated from this model, DASAP is the delay to the soonest possible reward, and g(DASAP) is a gain factor that is a function of the delay to the soonest possible reward.
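As a minimal sketch of the two valuation rules just described, the following Python functions compute subjective value under the standard hyperbolic model and under ASAP. The function names, the argument names, and the default gain of 1 are illustrative assumptions, and the ASAP form assumes that the hyperbolic decline is measured over the interval between a reward's delay and the delay to the soonest possible reward.

```python
def sv_hyperbolic(amount, delay, k_h):
    """Standard hyperbolic model: value declines with delay from the present."""
    return amount / (1.0 + k_h * delay)


def sv_asap(amount, delay, d_asap, k_asap, gain=lambda d: 1.0):
    """ASAP model (assumed form): hyperbolic decline measured from the soonest
    available reward (d_asap), scaled by a gain factor g(d_asap).

    `gain` is a hypothetical callable standing in for g; the default is g = 1.
    """
    return gain(d_asap) * amount / (1.0 + k_asap * (delay - d_asap))


# In a NOW-type choice the soonest reward is immediate (d_asap = 0), so with
# g(0) = 1 the two models assign the same value to the delayed reward.
now_hyperbolic = sv_hyperbolic(40.0, 30.0, 0.03)
now_asap = sv_asap(40.0, 30.0, 0.0, 0.03)
```

With a common 60 day delay added to both options, the two functions diverge: the hyperbolic value is computed over the full 90 day delay, whereas the ASAP value is computed over the 30 day interval from the soonest reward and then scaled by the gain.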

This ASAP discounting function is closely related to several previous proposals. Ok and Masatlioglu (2005) described a class of “relative discounting” functions, in which the difference in delay between two options, rather than the delay from the present, is the key variable. The difference in delay is also a critical variable in Read's “subadditive” (Read 2001; Read and Roelofsma 2003) and “discounting by intervals” (Scholten and Read 2006) models, in the “elimination-by-aspects” and “common-aspect attenuation” models discussed by Green and colleagues (2005) and in the similarity model reported by Rubinstein (2003). The assumption of a hyperbolic form makes ASAP most similar to “elimination-by-aspects.” However, ASAP differs from these previous models in two critical ways. ASAP is less broad in its psychological claims. It is not committed to the discount function arising from a particular psychological process, such as subadditivity or the serial comparison of dimensions. ASAP is also unique in that it makes explicit neural claims and is thus falsifiable on both behavioral and neural grounds. These other related models describe only behavior. As discussed in the following text, this aspect of ASAP means that Eq. 2 includes novel testable components that would be irrelevant in a behavior-only model.

Figure 2 provides a graphical comparison between the hyperbolic and ASAP models. There are three important things to note about this comparison. First, both models make the same predictions about the NOW condition, where the soonest reward is available immediately (DASAP = 0). Second, for the ASAP model, the subjective value of a larger-later reward in the 60 DAY condition will not necessarily match the subjective value of the same exact reward in the NOW condition.3 This is because in the ASAP model subjective value depends on the other options that are available, particularly on the delay to the soonest reward in the choice set. A subjective value in the ASAP model is consistent only across choices within a single “condition” in which the soonest possible reward is always offered at the same delay. This choice-set dependence has broader implications that we mention in the discussion and can explain the deviations between the ASAP and hyperbolic predictions for behavior and neural activity in the 60 DAY condition. Third, if the only goal is to make behavioral predictions, then the function that serves as the gain factor, g(DASAP), is an unnecessary addition to the model. In our hands, ASAP is meant to be a model of both choices and the neurally encoded subjective values that drive choices. The gain function scales these neurally encoded subjective values within a “condition,” where a “condition” is a set of choices in which the time to the soonest possible option is held constant. The gain function in ASAP thus serves to condition the numerical subjective value of an option on the earliest available option in the choice set. This means that, at a behavioral level, the value of g(DASAP) (which is constant within a choice set with a fixed earliest reward) does not change what is preferred.4 There is therefore no way, in principle, to estimate the gain function behaviorally or to distinguish between different gain functions based on the behavioral data alone. 
Models with different gain functions make the same behavioral predictions.5
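The behavioral irrelevance of the gain factor can be illustrated with a short sketch. The helper names are hypothetical, the ASAP form is the assumed one in which decline is measured from the soonest reward, and the gain is passed as a single positive number because it is constant within a choice set.

```python
def sv_asap(amount, delay, d_asap, k_asap, g):
    """ASAP value (assumed form) with a constant gain g for this choice set."""
    return g * amount / (1.0 + k_asap * (delay - d_asap))


def prefers_later(sooner, later, k_asap, g):
    """Return True if the larger-later option has the higher ASAP value.

    `sooner` and `later` are (amount, delay) pairs; g must be positive.
    """
    d_asap = min(sooner[1], later[1])  # delay to the soonest possible reward
    return sv_asap(*later, d_asap, k_asap, g) > sv_asap(*sooner, d_asap, k_asap, g)


# Both options are multiplied by the same positive g, so the comparison
# (and therefore the predicted choice) is unchanged by the value of g.
choice_unit_gain = prefers_later((20.0, 60.0), (45.0, 90.0), 0.03, 1.0)
choice_small_gain = prefers_later((20.0, 60.0), (45.0, 90.0), 0.03, 0.495)
```

Because the gain multiplies both options equally, only a measure that is sensitive to the magnitude of the values, such as neural activity, could distinguish between gain functions.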

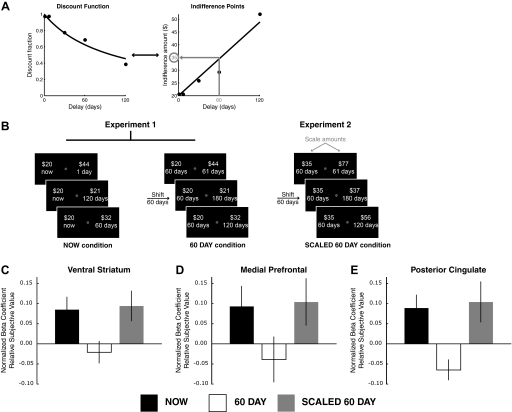

Fig. 2.

Schematic illustrating standard hyperbolic discounting vs. “as soon as possible” (ASAP) discounting. Plotted is how the subjective value and relative subjective value of the larger-later reward change in the 60 DAY condition. The 2 models make the same predictions in the NOW condition. Standard hyperbolic discounting predicts decreased impatience in the 60 DAY condition, as evidenced by the shallower decline in relative subjective value, whereas ASAP discounting predicts the same degree of impatience, as evidenced by the same steepness in the decline of relative subjective value. Standard hyperbolic discounting also assumes that subjective values are consistent between the 2 conditions, as evidenced by the continuity of the curves in the 2 conditions, whereas ASAP discounting assumes that subjective values depend on other options available and this choice-set dependence leads to the discontinuity in the 2 curves. All of the features illustrated here for hyperbolic discounting are shared by quasi-hyperbolic discounting as well. The subjective values shown assume kH = kASAP = 0.03, g(0) = 1, and g(60) = 0.495.

Although the inclusion of g(DASAP) may for this reason seem odd to purely behavioral scientists, it serves a powerful intuition about both mental state and brain function. Even though in ASAP someone's preference between two options will depend only on the difference in delay between the two, the brain (and the psychological processes it underlies) might still keep track of how much both options are delayed from the present. When choosing $21 in 61 days over $20 in 60 days, the internal subjective values of these options may be less than the values when choosing $21 tomorrow over $20 today. In other words, subjects may know that a choice between $21 in 61 days and $20 in 60 days is worse than a choice between $21 tomorrow and $20 today, even when given no opportunity to express that fact. In any case, we include the gain function in the model here because it does affect the neural predictions. Neural representations of subjective value would differ for different gain functions in this model.

Subjective value of delayed rewards declines hyperbolically relative to the soonest possible reward (experiment 1)

For the purposes of the behavioral analyses alone, we can make the simplifying assumption that the gain function g(DASAP) is always equal to one, effectively omitting it from the behavior-only predictions of the model

$$SV_{ASAP}^{g=1} = \frac{A}{1 + k_{ASAP}\,(D - D_{ASAP})} \tag{3}$$

Note that this simplified form of ASAP corresponds to the “elimination-by-aspects” model discussed by Green and colleagues (2005), as well as to one parameterization of the more general “discounting by intervals” model presented by Scholten and Read (2006). One important thing to note about this simplified parameterization of ASAP is that SVASAPg=1 is now the subjective value of a reward, relative to the soonest possible reward. Thus the distinction between an ASAP model in which the gain factor [g(DASAP)] varies with the delay to the soonest possible reward versus one in which the gain factor is a constant (i.e., g = 1, Eq. 3) corresponds to a distinction between encoding subjective values across choice sets6 versus encoding only relative subjective values within choice sets. For this reason, we refer in the following text to the subjective values calculated using Eq. 3 (SVASAPg=1) as relative subjective values.

Another nice feature of the simplified parameterization of ASAP (Eq. 3) is that it highlights an important general feature of ASAP models. When these models are fit to the NOW and 60 DAY choices separately, the standard hyperbolic and ASAP models make clearly distinguishable predictions about the resulting estimates of the discount rate from the two conditions (k0ASAP, k60ASAP). ASAP predicts that the discount rates estimated from the two conditions should be the same (k60ASAP = k0ASAP). This prediction also reflects the fact that in ASAP the hyperbolic decline in subjective value occurs relative to the soonest possible delay, so people should exhibit the same degree of impatience in the NOW and 60 DAY conditions. An illustration of this prediction can also be seen in Fig. 2, which shows that the decline in relative subjective value for the ASAP model is identical in the NOW and 60 DAY conditions.

In contrast, in (quasi-)hyperbolic discounting, the rate of decline in subjective value decreases as rewards move farther out from the present. (Quasi-)hyperbolic discounting thus predicts that people should be more impatient in the NOW condition compared with the 60 DAY condition. This means that the discount rate estimated in this analysis for the NOW condition (k0ASAP) should be greater than the discount rate estimated for the 60 DAY condition (k60ASAP). Again, this prediction is illustrated in Fig. 2, which shows that the decline in relative subjective value for the hyperbolic model is less steep in the 60 DAY condition than that in the NOW condition. It can be shown algebraically using Eqs. 1 and 3 that standard hyperbolic discounting predicts that the two discount rates estimated in this way should be precisely related by the following (see Green et al. 2005 or Supplemental Note for a derivation)7

$$k^{60}_{ASAP} = \frac{k^{0}_{ASAP}}{1 + 60\,k^{0}_{ASAP}}$$

where 60 is the common delay, in days, added to both options in the 60 DAY condition.

We separated out the choices in the NOW and 60 DAY conditions and fit Eq. 3 separately for each condition. Consistent with ASAP, but not with (quasi-)hyperbolic discounting, our empirical data indicate that participants discounted at similar rates in both conditions (Fig. 3B; see also Glimcher et al. 2007). [These data are thus also inconsistent with models intermediate between ASAP and (quasi-)hyperbolic discounting, such as “common-aspect attenuation”; see Supplemental Note and Supplemental Fig. S1.] The discount rate we observed in the 60 DAY condition was close to the discount rate we observed in the NOW condition for each subject (k60ASAP ≈ k0ASAP) and the discount rates in the 60 DAY condition were significantly larger than those predicted by standard hyperbolic discounting (Wilcoxon signed-rank test, P < 1 × 10^−8).
Perhaps surprisingly though, the discount rate we observed in the 60 DAY condition was actually slightly larger than the discount rate we observed in the NOW condition on a subject-by-subject basis (k60ASAP > k0ASAP; Wilcoxon signed-rank test, P = 0.008). Thus if anything, participants were more impatient in the 60 DAY condition than in the NOW condition, an effect that goes in the opposite direction from that predicted by (quasi-)hyperbolic models. The size of this effect, however, was very small compared with the discount rates in the two conditions (median difference between k60ASAP and k0ASAP = 0.0002, median k0ASAP = 0.006), suggesting this difference might best be considered trivial.
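The relation that standard hyperbolic discounting predicts between the two fitted discount rates can be sketched in a few lines. This is an illustrative derivation under stated assumptions, not a reproduction of the Supplemental Note: A_S and A_L denote the smaller-sooner and larger-later amounts at an indifference point, d is the interval between them, and the fitted function is assumed to have the simplified ASAP form with g = 1.

```latex
% Indifference in the 60 DAY condition under standard hyperbolic discounting (Eq. 1):
\frac{A_S}{1 + 60\,k_H} = \frac{A_L}{1 + k_H\,(60 + d)}
\quad\Longrightarrow\quad
\frac{A_L}{A_S} = 1 + \frac{k_H}{1 + 60\,k_H}\,d

% Fitting the simplified ASAP function (Eq. 3) to the same indifference point:
A_S = \frac{A_L}{1 + k^{60}_{ASAP}\,d}
\quad\Longrightarrow\quad
\frac{A_L}{A_S} = 1 + k^{60}_{ASAP}\,d

% Equating the two, and noting that in the NOW condition k^{0}_{ASAP} = k_H:
k^{60}_{ASAP} = \frac{k^{0}_{ASAP}}{1 + 60\,k^{0}_{ASAP}}

% Example with k_H = 0.03 (the value used in Fig. 2):
k^{60}_{ASAP} = \frac{0.03}{1 + 1.8} \approx 0.0107
```

Under these assumptions, a hyperbolic discounter with k = 0.03 in the NOW condition should appear roughly one-third as impatient once both options are pushed 60 days into the future.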

Fig. 3.

A: schematic illustrating the 2 conditions in experiment 1. The NOW condition involved choices between a fixed immediate reward of $20 and a larger variable reward available after some delay. The 60 DAY condition was constructed from the choices in the NOW condition by adding a fixed delay of 60 days to both options. B: the discount rate (kASAP estimated from Eq. 3) in the 60 DAY condition is plotted as a function of the discount rate in the NOW condition, for all participants and all sessions. Consistent with the ASAP hypothesis, the points lie predominantly on the diagonal, indicating that the discount rates are similar in the 2 conditions. C: for pairs of choices differing only by the addition of the fixed 60 day delay, the percentage of choices is shown for which participants made the same choice (both smaller-sooner or larger-later), were more impulsive in the NOW condition (chose smaller-sooner in NOW and larger-later in 60 DAY), or were more impulsive in the 60 DAY condition (chose larger-later in NOW and smaller-sooner in 60 DAY). Participants predominantly made the same choice in both conditions. D: same analysis as in C, for only those choices in which standard hyperbolic discounting would predict an impulsive preference reversal. Again participants predominantly made the same choice in both conditions, consistent with ASAP but not standard hyperbolic discounting. E: same analysis as in C and D, for only the first 100 choices the participants faced in the very first session, which involved only hypothetical choices. Even when they did not have extensive experience with the task, participants predominantly made the same choice in both conditions, consistent with ASAP but not (quasi-)hyperbolic discounting. F: plotted is the adjusted-R-squared for the ASAP-hyperbolic discount function (Eq. 3) vs. the adjusted-R-squared for the exponential discount function (Eq. 4). 
Each point represents the fit from a single participant's data from a single session for either the NOW or the 60 DAY condition. Across both conditions, the ASAP-hyperbolic equation provides better fits than the exponential.

Participants do not make impulsive preference reversals (experiment 1)

In addition to comparing the discount rates estimated from the NOW and 60 DAY data, we also compared the choices in these two conditions directly, to test whether participants made impulsive preference reversals as predicted by (quasi-)hyperbolic discounting. To do this, we considered pairs of choices across the two conditions that differed only in the addition of a common 60 day delay to both options. (Quasi-)hyperbolic discounting predicts that people will sometimes make impulsive preference reversals between two such choices: taking the larger-later reward from a pair in the 60 DAY condition, while also picking the smaller-sooner reward in the corresponding pair in the NOW condition. ASAP (and the behavioral models like it) differs from (quasi-)hyperbolic models in that it does not predict such reversals. Under ASAP participants should make the same choice in both conditions, on average. Under ASAP only stochasticity in choice could yield changes between the NOW and 60 DAY conditions and these changes should be randomly distributed, rather than systematically distributed toward more impulsive choices in the NOW condition. Across all pairs of choices in the two conditions, we found that participants made the same choice in both conditions >90% of the time (Fig. 3C). In addition, even when participants' choices did differ, the differences appeared randomly distributed. Subjects, if anything, were slightly more likely to be more impulsive (choosing smaller-sooner) in the 60 DAY condition, rather than in the NOW condition (paired t-test, P = 0.0001). Thus the preference reversals we observed in our data set were more likely to go in the opposite direction from that predicted by (quasi-)hyperbolic discounting. This effect is completely consistent, however, with the trivially larger discount rates in the 60 DAY condition described earlier.

(Quasi-)hyperbolic discounting, though, predicts preference reversals for only a subset of choice pairs, so including all choice pairs (as we did in Fig. 3C) may not be the fairest test of this prediction of more standard models. Therefore using the discount rate estimated for each individual in the NOW condition, we isolated only those choice pairs where standard hyperbolic discounting (Eq. 1) specifically predicts that a participant should make an impulsive preference reversal. The predicted number of reversals ranged across participants from 0 to 56% of choice pairs (mean: 8.00 ± 1.36%), with the number of predicted reversals depending on the steepness of the discount function and increasing for more impatient participants. We examined this subset of the behavioral data, which included data from 17 subjects and 36 sessions, for evidence of systematic preference reversals. Critically, even for these choices where the standard hyperbolic model clearly predicts a reversal, participants still made the same choice in both conditions the majority of the time (70% of choices, Fig. 3D), as predicted by ASAP. Even when they did make a reversal, these reversals were apparently randomly distributed. Participants were as likely to be more impulsive in the 60 DAY condition as in the NOW condition, even in this subset of the data where they should have been most likely to exhibit preference reversals according to (quasi-)hyperbolic models (paired t-test, P = 0.51).
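The selection of choice pairs for which standard hyperbolic discounting predicts an impulsive preference reversal can be sketched as follows. The function names and the example amounts are hypothetical; the $20 smaller-sooner reward and the 60 day common delay follow the design of experiment 1, and the discount rate is assumed to be the one estimated for that participant in the NOW condition.

```python
def sv_hyperbolic(amount, delay, k_h):
    """Standard hyperbolic value (Eq. 1), with delay measured from the present."""
    return amount / (1.0 + k_h * delay)


def predicts_reversal(later_amount, later_delay, k_h,
                      sooner_amount=20.0, shift=60.0):
    """True if Eq. 1 predicts smaller-sooner in the NOW pair but larger-later
    once the common delay `shift` is added to both options."""
    now_takes_sooner = sv_hyperbolic(later_amount, later_delay, k_h) < sooner_amount
    shifted_takes_later = (
        sv_hyperbolic(later_amount, later_delay + shift, k_h)
        > sv_hyperbolic(sooner_amount, shift, k_h)
    )
    return now_takes_sooner and shifted_takes_later


def fraction_predicted_reversals(pairs, k_h):
    """Share of (later_amount, later_delay) pairs with a predicted reversal."""
    flags = [predicts_reversal(amount, delay, k_h) for amount, delay in pairs]
    return sum(flags) / len(flags)


# Hypothetical larger-later options for a participant with k = 0.03 per day.
example_pairs = [(25.0, 30.0), (30.0, 30.0), (45.0, 30.0)]
example_fraction = fraction_predicted_reversals(example_pairs, 0.03)
```

In this toy example only the middle pair ($30 in 30 days) falls in the window where the hyperbolic model predicts a reversal; the first is rejected in both conditions and the third is accepted in both.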

Since the absence of preference reversals in this data set seemed odd, given the prominence of (quasi-)hyperbolic discounting in the literature, we also performed an additional analysis to see whether some aspect of our procedure had actively eliminated preference reversals from the behavior of our subjects. The analyses in Fig. 3, B–D described earlier included only paid sessions. All participants had made ≥200 practice choices by this point. To examine whether practice with the task could explain our failure to observe preference reversals, we also analyzed the first 100 choices of each participant in the very first practice session involving hypothetical choices. This is the smallest number of choices in which all 50 pairs are represented, thus constituting the smallest data set in which one could systematically search for the existence of preference reversals. (This analysis also excluded the four subjects who had previously participated in a similar experiment in our laboratory, so n = 21.) As shown in Fig. 3E, the pattern observed in the first 100 hypothetical choices for these subjects is the same as that observed in the full data set of paid sessions. The majority of the time (85% of choices), participants made the same choice in each condition and did not reverse their preference. When the choices did differ, these differences appeared random. Again, there were more instances where participants were more impulsive in the 60 DAY condition, although this difference was not statistically significant (paired t-test, P = 0.28).

Participants overweight soonest possible rewards (experiment 1)

Of course the ASAP model is not the only model that can explain a failure to observe preference reversals. Another possible explanation for this pattern of results shown in Fig. 3 is that subjective value may decline exponentially with delay for our participants, rather than hyperbolically. Although previous meta-analyses have shown that hyperbolic functions almost always account for intertemporal choice data better than exponential functions (Bickel and Marsch 2001; Frederick et al. 2002; Green and Myerson 2004; Laibson 1997), our unexpected failure to find preference reversals led us to compare the fit of ASAP to that of the traditional economic model of exponential discounting. The exponential function for subjective value is

$$SV_{E} = A\,e^{-k_{E} D} \tag{4}$$

We fit this equation separately in each of the two conditions and therefore obtained different estimates of the exponential discount rate from the NOW and 60 DAY data sets. Since an exponential curve declines at a constant proportional rate, the exponential discounting model predicts that the discount rates estimated in this way should be the same in the two conditions. In other words, exponential discounting, like ASAP, also predicts similar discount rates in the two conditions and no impulsive preference reversals. However, although the exponential Eq. 4 provided an acceptable fit to the behavioral discount functions in both conditions, the ASAP-hyperbolic Eq. 3 provided a better fit overall (Fig. 3F, Wilcoxon signed-rank test, across both conditions, P = 0.0001; for the 60 DAY condition alone, P = 0.0002; for the NOW condition alone, P = 0.086). Thus the model that best accounted for our participants' choices in our task was the ASAP model, in which choosers hyperbolically discount the value of delayed rewards, but this hyperbolic decline is anchored to the soonest possible reward.
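The reason exponential discounting predicts no preference reversals can be seen by computing the indifference amount for a later reward before and after a common delay is added. The sketch below assumes the conventional exponential form (amount times exp of minus rate times delay); the function names and parameter values are illustrative.

```python
import math


def indifference_exponential(sooner_amount, sooner_delay, interval, k_e):
    """Later amount equal in value to the sooner reward under exponential
    discounting. The sooner delay cancels, so it does not affect the result."""
    sooner_value = sooner_amount * math.exp(-k_e * sooner_delay)
    return sooner_value / math.exp(-k_e * (sooner_delay + interval))


def indifference_hyperbolic(sooner_amount, sooner_delay, interval, k_h):
    """Same quantity under standard hyperbolic discounting from the present."""
    sooner_value = sooner_amount / (1.0 + k_h * sooner_delay)
    return sooner_value * (1.0 + k_h * (sooner_delay + interval))


# $20 sooner reward, 30 day interval, evaluated with and without a 60 day shift.
exp_now = indifference_exponential(20.0, 0.0, 30.0, 0.01)
exp_shifted = indifference_exponential(20.0, 60.0, 30.0, 0.01)
hyp_now = indifference_hyperbolic(20.0, 0.0, 30.0, 0.03)
hyp_shifted = indifference_hyperbolic(20.0, 60.0, 30.0, 0.03)
```

The exponential indifference amount is unchanged by the shift, whereas the hyperbolic indifference amount falls (from $38 to about $26.43 with these illustrative parameters), which is what produces predicted reversals. Distinguishing exponential discounting from ASAP therefore depends on the shape of the discount function within a condition rather than on the comparison across conditions.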

Ventral striatum, medial prefrontal, and posterior cingulate cortex encode subjective value, rather than an immediacy signal (experiment 1)

The behavioral data demonstrate, perhaps surprisingly, that our participants did not make the impulsive preference reversals predicted by (quasi-)hyperbolic models. Our first analyses of the fMRI data were therefore aimed at testing the hypothesis, inspired by the quasi-hyperbolic discounting model, that separate neural systems value immediate and delayed rewards. We constructed a general linear model of the fMRI data that estimated two effects in each condition (NOW or 60 DAY): the mean level of neural activity in that condition, across all trials; and trial-to-trial deviations from that mean that were correlated with the relative subjective value of the larger-later reward (SVASAPg=1, as estimated behaviorally using Eq. 3).
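The two effects estimated per condition can be pictured as two trial-wise covariates: an indicator for trials in that condition and a mean-centered value covariate. The sketch below is a simplified illustration only. The function name and the toy inputs are hypothetical, mean-centering within condition is an assumption about how the deviations were defined, and the step of convolving with a hemodynamic response function is omitted.

```python
def condition_regressors(conditions, rel_sv, target):
    """Build trial-wise covariates for one condition.

    Returns (mean_regressor, value_modulator): an indicator that is 1 on
    trials in `target`, and the relative subjective value on those trials
    after subtracting its within-condition mean (0 on all other trials).
    """
    in_condition = [c == target for c in conditions]
    values = [v for v, m in zip(rel_sv, in_condition) if m]
    mean_value = sum(values) / len(values)
    mean_regressor = [1.0 if m else 0.0 for m in in_condition]
    value_modulator = [
        (v - mean_value) if m else 0.0 for v, m in zip(rel_sv, in_condition)
    ]
    return mean_regressor, value_modulator


# Toy trial sequence with made-up relative subjective values.
toy_conditions = ["NOW", "60 DAY", "NOW", "60 DAY"]
toy_values = [10.0, 5.0, 20.0, 15.0]
now_mean, now_modulator = condition_regressors(toy_conditions, toy_values, "NOW")
```

Because the value covariate sums to zero within its condition, it is uncorrelated with the indicator, so the mean-activity effect and the subjective value effect can be interpreted separately.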

The separate neural systems hypothesis proposes that ventral striatum, medial prefrontal, and posterior cingulate cortex encode an immediacy signal, which is high when an immediate reward can be chosen and low in all other situations (McClure et al. 2004a, 2007). This hypothesis predicts that the level of mean activity in the NOW condition should be significantly greater than that in the 60 DAY condition in these regions. Also, according to this hypothesis, neural activity in these regions tracks only whether an immediate reward is available, so changes in the value of the larger-later reward should not affect activity in these regions and there should be no correlation with relative subjective value in either the NOW or the 60 DAY condition.

In contrast, the hypothesis that these regions encode subjective value (as in the ASAP model) predicts a strong correlation with relative subjective value. This hypothesis is also consistent with increased mean activity in the NOW condition compared with the 60 DAY condition, since sooner rewards will have greater subjective values. However, the absolute predicted size of this difference depends on the parameters of the ASAP model, such as whether the gain factor g(DASAP) is a constant and how impatient each subject is (kASAP).

Our results were consistent with the hypothesis that ventral striatum, medial prefrontal, and posterior cingulate cortex encode subjective value, but inconsistent with the hypothesis that these regions encode an immediacy signal (see also Glimcher et al. 2007). Replicating our previous findings (Kable and Glimcher 2007), we found that there was a significant effect of relative subjective value (SVASAPg=1) in the NOW condition in ventral striatum, medial prefrontal cortex, and posterior cingulate cortex (P < 0.05, corrected; see Fig. 4 and Table 1). However, when we explicitly searched for an “immediacy signal,” we found no significant difference in these regions between the mean levels of activity in the NOW and 60 DAY conditions at the whole brain level (at P < 0.05, corrected; see Fig. 4, bottom and Table 1). Since the whole brain analysis might have missed weaker effects, we also defined ROIs in the ventral striatum, medial prefrontal, and posterior cingulate cortex. ROIs were defined based on the relative subjective value effect in the NOW condition (see methods for details). In each ROI, the difference in mean activity between the NOW and the 60 DAY conditions was positive. This difference was statistically significant in the posterior cingulate cortex (P = 0.01, Fig. 5), borderline in medial prefrontal cortex (P = 0.07, Fig. 5), and nonsignificant in ventral striatum (P = 0.25, Fig. 5; see also Supplemental Fig. S2). As we discuss in more detail in the following text, the size of these differences in mean activity is consistent with what would be predicted if neural activity tracked subjective value. However, the overall pattern of effects fails to support the predictions of the immediacy signal hypothesis. This reinforces our earlier conclusion (Kable and Glimcher 2007) regarding this hypothesis in a new and larger set of participants.

Table 1.

Location of significant effects in experiment 1 (P < 0.05, corrected, cluster-wise inference)

| Anatomical Description | Center of Gravity x (Talairach) | Center of Gravity y | Center of Gravity z | Size, mm³ | Peak Location x (Talairach) | Peak Location y | Peak Location z | Peak z-Score |
|---|---|---|---|---|---|---|---|---|
| Correlation with relative subjective value, NOW (P < 0.005 and cluster size > 924 mm³) | | | | | | | | |
| Posterior cingulate (BA 31/23) | −1 | −39 | 36 | 8,695 | −12 | −37 | 34 | 4.18 |
| Precentral gyrus/sulcus (BA 6) | 47 | 0 | 42 | 5,655 | 39 | −13 | 49 | 3.76 |
| Inferior frontal gyrus/lateral orbital (BA 47) | 40 | 23 | −10 | 2,176 | 33 | 17 | −11 | 3.54 |
| Precentral gyrus/sulcus (BA 6) | −42 | −4 | 47 | 1,855 | −48 | −10 | 43 | 3.33 |
| Anterior intraparietal sulcus (BA 40) | −44 | −44 | 52 | 1,395 | −45 | −43 | 49 | 3.79 |
| Caudate/ventral striatum | 5 | 8 | 2 | 1,115 | 3 | 5 | 4 | 3.55 |
| Medial prefrontal/anterior cingulate (BA 32/24) | 2 | 36 | 23 | 1,065 | 0 | 35 | 28 | 3.13 |
| Inferior frontal gyrus (BA 44) | −50 | 14 | 7 | 1,042 | −54 | 11 | 7 | 3.36 |
| Correlation with relative subjective value, 60 DAY (P < 0.005 and cluster size > 473 mm³) | | | | | | | | |
| None | | | | | | | | |
| Mean activity difference, NOW − 60 DAY (P < 0.005 and cluster size > 782 mm³) | | | | | | | | |
| *Medial occipital/calcarine sulcus (BA 17/18)* | *0* | *−73* | *2* | *14,063* | *−12* | *−76* | *4* | *−4.36* |
| Posterior middle temporal gyrus/superior temporal sulcus (BA 21/22) | −58 | −41 | 5 | 2,540 | −63 | −25 | −2 | 3.57 |
| Posterior parietal (BA 40/7) | −44 | −62 | 46 | 1,574 | −42 | −67 | 46 | 3.90 |
| Temporal–parietal junction (BA 39/40) | 55 | −51 | 27 | 1,462 | 54 | −46 | 31 | 3.53 |
| Posterior parietal (BA 40/7) | 40 | −48 | 58 | 1,387 | 42 | −49 | 58 | 3.64 |
| Temporal–parietal junction (BA 39/40) | −63 | −44 | 27 | 1,080 | −63 | −43 | 28 | 3.68 |
| Anterior intraparietal sulcus (BA 40) | −48 | −32 | 56 | 933 | −57 | −25 | 52 | 3.45 |
| Angular gyrus (BA 39) | −38 | −70 | 28 | 901 | −39 | −70 | 28 | 4.45 |
| Precuneus (BA 7) | 0 | −64 | 57 | 882 | 3 | −64 | 55 | 3.59 |
| Middle frontal gyrus (BA 6/8) | −34 | 14 | 55 | 808 | −30 | 20 | 52 | 3.86 |

Negative effects are in italics. BA, Brodmann Area.

Fig. 5.

The difference in mean activity between the NOW and 60 DAY conditions is plotted for the ventral striatum, medial prefrontal, and posterior cingulate regions of interest (ROIs), for time points 4–6 in the trial. In each case, this effect is normalized relative to that for the relative subjective value effect in the NOW condition. The dotted lines show the predicted size of this effect for each of the 6 combination rules tested in Fig. 6, illustrating that the size of this effect is consistent with the hypothesis that activity in these regions tracks subjective value [i.e., SVASAP when g(DASAP) is not a constant]. Solid horizontal lines indicate when the observed effect is significantly different from that predicted by the given combination rule. Note that the “Sum” and “Right” combination rules make almost identical predictions regarding the difference in mean activity and are thus not shown separately.

Ventral striatum, medial prefrontal, and posterior cingulate cortex encode subjective value on an absolute rather than a relative scale (experiment 1)

The same statistical model also allowed us to begin to examine whether ventral striatum, medial prefrontal, and posterior cingulate cortex track the subjective value or the relative subjective value of immediate and delayed rewards (see Fig. 2). Single neurons in posterior parietal cortex have been found to track relative subjective values (Dorris and Glimcher 2004; Sugrue et al. 2004), whereas neurons in orbitofrontal cortex and striatum appear to track subjective value on a more absolute scale (Padoa-Schioppa and Assad 2006, 2008; Samejima et al. 2005). However, the issue of absolute versus relative valuation has received less attention in human fMRI studies of the subjective values that drive choice, although the issue has been studied in the context of the experienced values of outcomes (Breiter et al. 2001; Nieuwenhuis et al. 2005). Remember that, in the ASAP model, the distinction between subjective value and relative subjective value corresponds to a distinction between a model in which the gain factor [g(DASAP) in Eq. 2] varies as a function of the delay to the soonest possible reward and a model in which the gain factor is always a constant (i.e., g = 1, as in Eq. 3). This then provides a potential neural parameterization of this aspect of the ASAP model.

If these regions track only the relative subjective value of the later reward compared with the sooner reward [a situation in which g(DASAP) is always equal to a constant], then neural activity should show a similarly sized effect (of relative subjective value, SVASAPg=1, as estimated behaviorally from Eq. 3) in both the NOW and the 60 DAY conditions. However, even though we found a robust effect of relative subjective value in these regions in the NOW condition, this effect did not reach significance in the 60 DAY condition in any region (at P < 0.05, corrected; see Fig. 4, middle and Table 1). Even when using a more sensitive ROI analysis, the relative subjective value effect in the 60 DAY condition did not reach significance in the ventral striatum (P = 0.11), medial prefrontal cortex (P = 0.52), or posterior cingulate cortex (P = 0.28; see also Supplemental Fig. S2). Thus we failed to find evidence that ventral striatum, medial prefrontal, and posterior cingulate cortex encode only relative subjective value. (Note that this finding taken alone is also inconsistent with these regions encoding only the magnitude of rewards and ignoring delays, since the monetary amounts are also exactly the same in the NOW and the 60 DAY conditions.)

However, although these results suggest that ventral striatum, medial prefrontal, and posterior cingulate cortex do not encode relative subjective value [i.e., they are incompatible with an ASAP model in which g(DASAP) is equal to a constant], they are consistent with the possibility that these regions encode a more absolute form of subjective value [i.e., they are compatible with an ASAP model in which g(DASAP) varies as a function of delay; see Fig. 2]. If these regions track a more absolute form of subjective value, then the effect of relative subjective value (SVASAPg=1) as a regressor should be greater in the NOW condition than in the 60 DAY condition, since the neural modulations in the NOW condition would be greater than those in the 60 DAY condition. This is what we observed.
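The contrast between the two parameterizations can be illustrated with a minimal numerical sketch. It assumes that the ASAP value takes the form gain × amount / (1 + k × (delay − DASAP)), which is our reading of Eqs. 2 and 3 rather than a quotation of them. The discount rate `k`, the dollar amounts, and the decreasing gain function used here are hypothetical values chosen only for illustration.

```python
def sv_asap(amount, delay, d_asap, k, gain=lambda d: 1.0):
    """ASAP subjective value under an ASSUMED functional form:
    gain(d_asap) * amount / (1 + k * (delay - d_asap)).
    With the default gain (always 1) this is the relative subjective value."""
    return gain(d_asap) * amount / (1.0 + k * (delay - d_asap))


k = 0.01  # hypothetical discount rate per day


def decreasing_gain(d):
    # Illustrative gain that falls with the delay to the soonest reward.
    return 1.0 / (1.0 + k * d)


# The same $40 option, 30 days after the soonest option, in each condition.
rel_now = sv_asap(40.0, 30.0, 0.0, k)    # NOW: soonest reward is immediate
rel_60 = sv_asap(40.0, 90.0, 60.0, k)    # 60 DAY: soonest reward at 60 days
abs_now = sv_asap(40.0, 30.0, 0.0, k, decreasing_gain)
abs_60 = sv_asap(40.0, 90.0, 60.0, k, decreasing_gain)

print("relative:", rel_now, rel_60)   # identical across conditions
print("absolute:", abs_now, abs_60)   # smaller in the 60 DAY condition
```

With a constant gain the two conditions yield the same value, so a region encoding only relative subjective value should show similarly sized effects in both. With a decreasing gain the 60 DAY value is compressed, which is the pattern described above.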

Testing BOLD combination rules: how does the BOLD signal reflect the subjective values of multiple options? (experiment 1)

If neural activity in ventral striatum, medial prefrontal, and posterior cingulate cortex does encode subjective value, as these data imply, this raises an additional question: How does the BOLD signal combine the subjective values of the multiple options presented to a subject on a given trial? Previous studies in which more than one option is presented at the same time have usually assumed that BOLD activity tracks the sum of the values of the two options or the value of the chosen option. There are other possibilities, however. Table 2 lists six possible BOLD combination rules for the current experiment: the sum of the values of both options (“Sum”), the value of the larger-later option alone (“Larger-Later”), the larger-later value minus the smaller-sooner value (“Later Minus Sooner”), the value of the chosen option (“Chosen”), the chosen value minus the unchosen value (“Chosen Minus Unchosen”), and the value of the option presented on the right/left (“Right”).
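The six rules named above can be written out explicitly for a single trial. The following is a minimal sketch; the function name, argument names, and example values are hypothetical and are not taken from the analysis code used in the study.

```python
def combination_rules(sv_later, sv_sooner, chose_later, later_on_right):
    """Return the trial-level regressor value implied by each of the six
    candidate BOLD combination rules.

    sv_later, sv_sooner : subjective values of the two options
    chose_later         : True if the larger-later option was chosen
    later_on_right      : True if the larger-later option was shown on the right
    """
    chosen = sv_later if chose_later else sv_sooner
    unchosen = sv_sooner if chose_later else sv_later
    return {
        "Sum": sv_later + sv_sooner,
        "Larger-Later": sv_later,
        "Later Minus Sooner": sv_later - sv_sooner,
        "Chosen": chosen,
        "Chosen Minus Unchosen": chosen - unchosen,
        "Right": sv_later if later_on_right else sv_sooner,
    }


# Hypothetical trial: later option worth 25, sooner worth 20,
# subject chose the later option, which was shown on the left.
rules = combination_rules(25.0, 20.0, chose_later=True, later_on_right=False)
for name, value in rules.items():
    print(name, value)
```

When the smaller-sooner value is the same on every trial, "Sum", "Larger-Later", and "Later Minus Sooner" differ only by a constant and therefore predict identical trial-to-trial modulations.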

These six combination rules were selected from a larger list of possible combination rules (Supplemental Table S1), based on the numerical predictions of each rule for the modulations in BOLD activity in both the NOW and the 60 DAY conditions. These predictions were calculated using the discount functions estimated for each individual subject and the choices presented to each participant. Only the six combinations of subjective values (i.e., SVASAP) listed in Table 2 would lead to differential modulations in the NOW and 60 DAY conditions, as we observed. The other combination rules tested predict similar modulations in these two conditions and thus can be discarded for this data set (Supplemental Fig. S3). Importantly, these calculations also showed that any combination of relative subjective values (i.e., SVASAPg=1) predicts similar modulations in the NOW and 60 DAY conditions and is therefore inconsistent with the results presented earlier (Supplemental Fig. S4).

Thus six different combinations of ASAP subjective values are broadly consistent with our finding of significant value-related modulations in the NOW condition but not in the 60 DAY condition. All of these combinations predict large trial-to-trial modulations in the NOW condition (in fact, “Sum,” “Larger-Later,” and “Later Minus Sooner” make exactly the same predictions when restricted to the NOW condition alone, as do “Chosen” and “Chosen Minus Unchosen”; see Supplemental Fig. S3) and smaller trial-to-trial modulations in the 60 DAY condition. Further testing between these combination rules, however, requires making some assumption about the gain function g(DASAP), since it cannot be estimated behaviorally. Here we assume, as the simplest possible parameterization, that g(DASAP) is the hyperbolic discount factor associated with the soonest possible reward:

$$g(D_{ASAP}) = \frac{1}{1 + k\,D_{ASAP}} \qquad (5)$$

We have examined several related gain functions, such as the exponential discount factor associated with the soonest possible reward. The results discussed in the following text do not hinge on this particular assumption (indeed there may be good theoretical reasons to assume the exponential form). We consider Eq. 5 an ad hoc assumption of a functional form necessary for testing between BOLD combination rules, rather than a critical aspect of the ASAP model.
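The two candidate gain functions mentioned here can be compared directly. The sketch below assumes the standard forms 1 / (1 + k × D) for the hyperbolic discount factor and exp(−k × D) for the exponential one. The discount rate `k` is a hypothetical value, and in practice the two forms need not share the same rate parameter.

```python
import math


def hyperbolic_gain(d_asap, k):
    """Hyperbolic discount factor for the soonest reward (assumed form)."""
    return 1.0 / (1.0 + k * d_asap)


def exponential_gain(d_asap, k):
    """Exponential discount factor for the soonest reward (assumed form)."""
    return math.exp(-k * d_asap)


k = 0.01  # hypothetical discount rate per day
for d in (0.0, 60.0):
    print(d, hyperbolic_gain(d, k), exponential_gain(d, k))
```

Both functions equal 1 when the soonest reward is immediate and fall below 1 when it is delayed by 60 days, so either choice predicts smaller value-related modulations in the 60 DAY condition than in the NOW condition.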

As discussed earlier, we observed modest differences in mean activity between the NOW and 60 DAY conditions in the ventral striatum, medial prefrontal, and posterior cingulate ROIs. Figure 5 shows that the six BOLD combination rules listed in Table 2 are broadly consistent with this finding. The differences in mean activity presented in Fig. 5 are normalized for each participant by the relative subjective value effect in the NOW condition, so that the units of these differences can be interpreted as “subjective dollars immediately.” In all three ROIs, the difference in mean activity we observed was within the range of that expected if these regions represented some combination of subjective values (i.e., SVASAP) and, in each case, was consistent with most of the six combination rules.

We next directly tested which BOLD combination rule best accounted for trial-by-trial modulations in neural activity in the ventral striatum, medial prefrontal, and posterior cingulate ROIs. To do this, we performed an additional set of analyses that tested for correlations with different combinations of ASAP subjective values, combining data across both the NOW and the 60 DAY conditions. The subjective value regressors in this case were normalized so that the beta values from these different analyses could be compared (Hampton et al. 2008; Hare et al. 2008). Figure 6 reports this test for the first five of the BOLD combination rules (the results of the right-only or left-only combination rule are presented in the following text) and includes two control analyses for comparison: 1) one testing for correlations with only the relative subjective value of the larger-later reward (i.e., SVASAPg=1, which we call “Relative”) and 2) one testing for correlations with only the magnitude of the larger-later reward, ignoring the delay (“Amount”). In all three regions, the sum, larger-later, and chosen combination rules provided the best fits and could not be statistically distinguished (at P < 0.05, paired t-test, one-tailed). In ventral striatum, the best-fitting combination was the subjective value of the larger-later reward, whereas in medial prefrontal and posterior cingulate cortex the best-fitting combination was the sum of the subjective values. In all three regions, the best-fitting combination provided a significantly better fit than the worst-fitting combination and, in some cases, further differences were statistically significant (at P < 0.05, paired t-test, one-tailed). The differences between many pairs of these combination rules, however, were small. These tests do reinforce our earlier conclusion that these regions encode subjective value [i.e., g(DASAP) is not constant] rather than relative subjective value [i.e., g(DASAP) is constant]. However, we are unable to draw definitive conclusions about the precise combination rule expressed by the BOLD signal in these areas.

Anterior parietal cortex encodes the subjective value of the contralateral option (experiment 1)

In addition to the three regions discussed earlier, there were five additional areas in which activity was significantly correlated with the relative subjective value of the changing later reward (SVASAPg=1) in the NOW condition (P < 0.05, corrected). These were left anterior parietal cortex and dorsal premotor and ventrolateral prefrontal cortices bilaterally (Table 1, Fig. 7). In our previous study (Kable and Glimcher 2007), we did find an effect of subjective value in left anterior parietal cortex in some analyses, but we did not find such an effect previously in any of the prefrontal areas (Fig. 7). In the four frontal areas observed here, neither the relative subjective value effect in the 60 DAY condition nor the difference in mean activity between the two conditions reached significance (Table 1). There was a different pattern of effects in left anterior parietal cortex, in which the difference in mean activity between the two conditions did reach significance (Table 1). Left anterior parietal cortex was also the only region that displayed a laterality effect: neural activity was significantly more correlated with the subjective value (SVASAP) of the option presented on the right (and requiring a right-hand motor response), compared with the option presented on the left (Fig. 7). Note that we could not have tested for such a laterality effect in our previous study, in which options were presented centrally and a right-handed “go”–“no go”-style response was intentionally used. Thus the pattern of effects in left anterior parietal cortex we observed here is consistent with activity in the posterior parietal cortex tracking the subjective value of the option presented on the contralateral side or associated with a contralateral motor response (Glimcher 2009; Glimcher et al. 2007).

Is there evidence for a δ system? (experiment 1)

In addition to proposing that ventral striatum, ventromedial prefrontal, and posterior cingulate cortex carry an immediacy signal, the separate neural systems hypothesis also proposes that dorsolateral prefrontal and posterior parietal regions carry an opponent signal that more equally values immediate and delayed rewards. We did find prefrontal and parietal regions in which neural activity scaled with subjective value. However, the hypothesized δ system was defined based on three properties (McClure et al. 2004a, 2007): increased activity for all choices compared with rest, increased activity for difficult compared with easy choices, and greater activity when a delayed reward is chosen compared with when an immediate reward is chosen. In our data set, we saw increased activity across much of the brain, including prefrontal and parietal regions, for all choices compared with rest (P < 0.05, corrected, Fig. 8A). Using reaction time as an index of difficulty, we also saw a correlation between activity and difficulty in prefrontal, parietal, and anterior cingulate regions, although this occurred at the time of the response rather than when the choice options appeared (P < 0.05, corrected; Fig. 8, B and C). However, we found no regions in which activity was significantly greater on trials where the later reward was chosen, compared with when the sooner reward was chosen (at P < 0.05, corrected; Fig. 8D). We consider this last contrast the key prediction regarding the δ system—the first two effects have many possible interpretations. We therefore conclude that there is not strong evidence in our data set for a δ system or a set of regions that shows uniformly increased activity for all choices of delayed rewards over immediate rewards; however, this does not preclude a role for these regions in a more specific subset of choices (Hare et al. 2009).

Ventral striatum, medial prefrontal, and posterior cingulate cortex do carry a value signal during choices between delayed rewards (experiment 2)

To summarize, the results of the first experiment suggest that ventral striatum, medial prefrontal, and posterior cingulate cortex track the subjective value of immediate and delayed rewards, on a more absolute rather than purely relative scale. However, this hypothesis predicts that there should be some measurable value signal in these regions during choices involving only delayed options, yet the relative subjective value effect was not significant in any of these three regions in the 60 DAY condition alone. This observation suggests either that no signal occurs in these areas for the 60 DAY condition alone or that the signal exists but is beneath our measurement threshold. Although the latter seems likely and is consistent with our findings and reasoning so far, it would be reassuring to have some positive evidence for a value signal during choices between delayed options. The search for this positive evidence thus motivated our second experiment. Ten subjects from the first experiment participated in this second experiment, which involved one session in the MRI scanner. (The behavioral data for this experiment, which by design do not provide as clean a test between standard hyperbolic discounting and ASAP as that in experiment 1, are shown in Supplemental Fig. S5.)

The parameterization of the ASAP model that we found support for in the first experiment assumes that the subjective value of the later reward varies with delay differences in a similar manner in the NOW and 60 DAY conditions, except that the absolute size of these neural signals is reduced in the 60 DAY condition by some gain function [g(DASAP)]. If the small absolute size of these signals is the reason that the relative subjective value correlation failed to reach significance in the 60 DAY condition, then increasing the gain on these signals by scaling the amounts of the rewards should recover a significant correlation, further supporting the ASAP model. Accordingly, to construct the options for the SCALED 60 DAY condition, we not only added a delay of 60 days to both options from the NOW condition, but also increased the reward amounts proportionally by a scaling factor. This scaling factor was chosen individually for each participant based on previous sessions, so that the sooner reference option ($X in 60 days) would be weakly preferred (almost identical in subjective value) to $20 now. By scaling up all of the amounts in the SCALED 60 DAY set of choices in this way, we should make the overall subjective values of the SCALED 60 DAY choice set roughly equivalent to the subjective values of the NOW choice set. The critical question in this experiment is therefore whether scaling the amounts in this way improves the correlation between BOLD and relative subjective value in the SCALED 60 DAY condition, as would be predicted by the ASAP model with a nonconstant gain function.
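The logic of the scaling factor can be sketched numerically. This example assumes that a participant's indifference between $X in 60 days and $20 now is described by a hyperbolic discount factor, so that X / (1 + 60k) = 20. The actual factors were set from each participant's earlier sessions, and the rate `k` and the amounts below are hypothetical.

```python
def scaled_60_day_amounts(now_amounts, k, added_delay=60.0):
    """Scale NOW-condition amounts so that, under an ASSUMED hyperbolic
    discount factor, each amount delayed by `added_delay` days has the same
    subjective value as the unscaled amount had without that delay."""
    scale = 1.0 + k * added_delay
    return scale, [amount * scale for amount in now_amounts]


k = 0.01  # hypothetical discount rate per day
scale, amounts = scaled_60_day_amounts([20.0, 30.0, 40.0], k)

# Subjective value of the scaled sooner reference option ($X in 60 days).
sv_reference = amounts[0] / (1.0 + k * 60.0)
print(scale, amounts, sv_reference)
```

Under these assumptions the scaled reference option has roughly the same subjective value as $20 now, which is the sense in which the SCALED 60 DAY choice set is matched to the NOW choice set.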