Abstract

While several techniques are available in proteomics, LC-MS-based analysis of complex protein/peptide mixtures has become a mainstream technique for quantitative proteomics. Significant technical advances in both sample preparation/separation and mass spectrometry have revolutionized comprehensive proteome analysis. Moreover, automation and robotics in sample handling permit high-throughput processing of multiple samples.

For LC-MS-based quantitative proteomics, sample preparation is a critical step, as it can significantly influence the sensitivity of downstream analysis. Several sample preparation strategies exist, including depletion of high-abundance proteins or enrichment steps that facilitate protein quantification, but at the cost of focusing on a smaller subset of the proteome. While several experimental strategies have emerged, certain limitations, such as the physicochemical properties of a peptide/protein, protein turnover in a sample, and the analytical platforms used for sample analysis and data processing, still pose challenges to quantitative proteomics. Other factors that make proteome analysis challenging include the dynamic nature of the proteome, the need for fast and efficient analysis because proteins are constantly modified inside the cell, protein concentration ranges that exceed the dynamic range of any single analytical method, and the lack of appropriate bioinformatics tools for analyzing large-volume, high-dimensional data.

This paper gives an overview of various LC-MS methods currently used in quantitative proteomics and their potential for detecting differential protein expression. Fundamental steps such as sample preparation, LC separation, mass spectrometry, quantitative assessment and protein identification are discussed.

For quantitative assessment of protein expression, both label-based and label-free approaches are evaluated for their merits and drawbacks. While most of these methods provide relative abundance information, absolute quantification is also achievable, but it is limited to fewer proteins. Isotope labeling is extensively used for quantifying differentially expressed proteins, but it is severely limited by the efficiency of heavy-label incorporation. Lengthy labeling protocols also restrict the number of samples that can be labeled and processed. Alternatively, the label-free approach appears promising, as it can process many samples and allows any number of comparisons, but it requires highly reproducible experimental data.

Keywords: Liquid chromatography-mass spectrometry (LC-MS), Quantitative proteomics, Labeling, Label-free, Tandem mass spectrometry (MS/MS)

Proteomics

The genomics era arrived with the promise of providing the complete genome sequences needed for comprehensive analysis of an organism. However, it was later recognized that a genome is predominantly static and largely unaltered in response to extra- and intracellular influences (Souchelnytskyi, 2005). The obvious next step was to explore transcriptomics, as both the genome and the proteome are dynamically linked to it. Transcriptomic studies were successful in providing quantitative information on the mRNA transcripts present at a given point in time. However, the poor correlation observed between mRNA and protein expression levels eventually led investigators to focus directly on proteins, often referred to as the ultimate effectors of the cell.

Proteomics plays a central role in discovery research owing to its diverse applications, including studies of disease mechanisms, drug target identification, nutritional and environmental science, and functional genomics. Proteomics focuses on identifying and quantifying proteins and characterizing them in terms of interactions, pre-translational and post-translational modifications, subcellular localization, and structure under physiological conditions. Based on its underlying approach, proteomics is divided into three categories: expression, structural, and functional proteomics. Expression proteomics deals with the quantitative comparison of proteins between samples that differ in an experimental condition. Proteins are profiled for changes in expression level or for any modifications that may have occurred between the groups being compared (Souchelnytskyi, 2005). Structural proteomics, on the other hand, aims at mapping the structure of protein complexes or of specific proteins isolated from a system. Likewise, functional proteomics characterizes a selected group of proteins and assigns functions derived from protein signaling and/or drug interaction mechanisms. In this article, we focus on the quantitative aspects of expression proteomics and its workflow. Because it can reflect the dynamic nature of cellular processes and provide a global, integrated view of them, quantitative proteomics has been used extensively for monitoring both physiological phenomena and pathological conditions (Souchelnytskyi, 2005).

One area that has benefited greatly from quantitative comparisons is biomarker discovery in cancer research. Biomarker discovery entails the quantitative analysis and identification of proteins that can be mapped back to the cause of a condition. The goal of biomarker discovery is to develop diagnostic techniques that facilitate early detection and treatment. Despite its enormous clinical importance, biomarker discovery is a lengthy process that requires validation at several stages (Chambers et al., 2000). Irrespective of the individual objectives of a proteome study, most researchers are interested in identifying relevant proteins or comparing protein abundances under different conditions (e.g., normal and diseased), with mass spectrometry (MS) as the enabling technology. Recently, several protein profiling technologies have been developed that allow the identification of hundreds of proteins and facilitate quantitative comparison of analytes from cell, tissue, or body fluid samples (Graves and Haystead, 2002). This progress has resulted primarily from the continuous development of instrumentation and analytical methods, such as mass spectrometry and chromatographic and electrophoretic separation, as well as of data analysis tools. Since protein identification and quantification are complementary, most proteomics studies include the following steps: i) extraction and isolation of proteins, ii) separation of proteins/peptides (2D gel or non-gel), iii) acquisition of fragmentation patterns by mass spectrometry, and iv) database searching for protein identification.
While these steps are still being improved to accommodate multidimensional data (Graves and Haystead, 2002), inherent factors that still make proteome investigations difficult are the broad dynamic range of protein concentrations in a proteome and the inability to capture its constantly changing state (Graves and Haystead, 2002).

Quantitative Proteomics

Quantitative proteomics is an extension of expression proteomics that provides quantitative information (relative or absolute) on the proteins present in a sample. Since biological processes are mainly controlled by proteins, it is desirable to study and compare proteins directly. Accurate protein-level information is crucial, because changes in response to external influences point to the proteins that control the underlying biological mechanisms. In addition, quantitative protein data can be used to model biochemical networks. Absolute protein concentrations help build high-definition models, whereas relative quantitative data can be used to compare protein expression levels among samples, provided the expression levels are normalized to a reference protein within each sample (Souchelnytskyi, 2005).
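As an illustration of normalizing to a reference protein, the sketch below computes log2 fold changes between two samples after dividing each protein's intensity by that of a reference protein measured in the same sample. It is a minimal sketch assuming one summarized intensity per protein per sample; the function name, the protein names (`REF`, `P1`, `P2`), and the intensities are hypothetical.

```python
import math


def normalized_log2_ratios(sample_a, sample_b, reference):
    """Return log2(B/A) per protein after normalizing each sample to a reference protein.

    sample_a, sample_b: dicts mapping protein name -> measured intensity (assumed > 0).
    reference: name of the protein used as the within-sample reference.
    """
    ref_a = sample_a[reference]
    ref_b = sample_b[reference]
    ratios = {}
    # Only proteins quantified in both samples can be compared.
    for protein in sorted(sample_a.keys() & sample_b.keys()):
        if protein == reference:
            continue
        norm_a = sample_a[protein] / ref_a
        norm_b = sample_b[protein] / ref_b
        ratios[protein] = math.log2(norm_b / norm_a)
    return ratios


# Hypothetical intensities: sample B was loaded at twice the amount of sample A.
sample_a = {"REF": 1000.0, "P1": 500.0, "P2": 200.0}
sample_b = {"REF": 2000.0, "P1": 2000.0, "P2": 400.0}
print(normalized_log2_ratios(sample_a, sample_b, "REF"))
```

Here the raw intensity of P2 doubles only because more of sample B was loaded, so its normalized log2 ratio is 0, whereas P1 shows a genuine twofold increase (log2 ratio of 1).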

Even though current quantitative proteomics is far from characterizing a complete proteome, several techniques succeed, within their own limits, in extracting quantitative information on proteins. These include two-dimensional electrophoresis, protein microarrays, microfluidics, and liquid chromatography coupled with mass spectrometry, as outlined below.

Two-dimensional electrophoresis (2DE) resolves proteins as spots on a gel, each spot defined by its molecular weight (MW) and isoelectric point (pI). Widely used for complex protein mixtures, this technique has been successfully employed to examine the progression of pathological processes such as Alzheimer’s disease and schizophrenia (neuropathology) and cardiac hypertrophy and cardiomyopathy (cardiovascular diseases) (Souchelnytskyi, 2005). However, 2DE has certain drawbacks: difficulty in accommodating hydrophobic proteins or extracting poorly soluble proteins (e.g., membrane proteins), and an inability to represent an entire proteome. To broaden the range of proteins covered and improve loading amounts, protocols can include sequential extraction of proteins by fractionation; however, this leads to an extensive workflow, which is a major bottleneck of the 2DE methodology. Bottlenecks also occur at the protein detection and quantitation steps, as intensive image analysis is needed for singly or doubly stained gels. In view of these limitations, many gel-free techniques have been developed that can also accommodate insoluble proteins and cover a more global set of proteins.
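The first 2DE dimension separates proteins by pI. The sketch below estimates a pI from sequence by finding, through bisection, the pH at which the Henderson-Hasselbalch net charge is zero. The pKa values are an assumed approximate set (published scales differ), modifications are ignored, and the function names and example sequences are hypothetical, so the result only indicates roughly where a spot might focus.

```python
# Approximate pKa values (assumed); published scales differ by a few tenths of a unit.
N_TERM_PKA = 8.6
C_TERM_PKA = 3.6
BASIC_PKA = {"K": 10.8, "R": 12.5, "H": 6.5}            # +1 when protonated
ACIDIC_PKA = {"D": 3.9, "E": 4.1, "C": 8.5, "Y": 10.1}  # -1 when deprotonated


def net_charge(sequence, ph):
    """Net charge of a peptide/protein at a given pH (Henderson-Hasselbalch)."""
    charge = 1.0 / (1.0 + 10 ** (ph - N_TERM_PKA))
    charge -= 1.0 / (1.0 + 10 ** (C_TERM_PKA - ph))
    for residue in sequence:
        if residue in BASIC_PKA:
            charge += 1.0 / (1.0 + 10 ** (ph - BASIC_PKA[residue]))
        elif residue in ACIDIC_PKA:
            charge -= 1.0 / (1.0 + 10 ** (ACIDIC_PKA[residue] - ph))
    return charge


def isoelectric_point(sequence, tol=1e-4):
    """Find the pH where the net charge is zero by bisection.

    Net charge falls monotonically as pH rises; for typical sequences the
    root lies between pH 0 and 14.
    """
    low, high = 0.0, 14.0
    while high - low > tol:
        mid = (low + high) / 2.0
        if net_charge(sequence, mid) > 0:
            low = mid  # still positive: the pI lies at a higher pH
        else:
            high = mid
    return (low + high) / 2.0


print(round(isoelectric_point("DDDDE"), 2))  # acidic peptide, low pI
print(round(isoelectric_point("KKKRR"), 2))  # basic peptide, high pI
```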

A protein microarray is one such gel-free technique; it consists of a library of peptides, proteins, or other analytes of interest spotted on a solid support. Protein samples are labeled with a fluorescent tag so that their binding to the individual spotted targets can be measured and quantified (Veenstra and Yates, 2006). In addition to fluorescence, chromogenic, chemiluminescent, and radioisotopic labels have also been used for detection. Even though protein arrays are flexible and have great potential to complement other prevalent proteomic technologies, their use and development have been severely limited by several technical challenges (Hall et al., 2007). These include: a) the lack of a wider variety of affinity reagents (beyond monoclonal antibodies and recombinant proteins), b) the need for improved surface chemistry to facilitate immobilization and capture of affinity reagents, and c) the need for self-assembling protein array platforms (Veenstra and Yates, 2006). While a protein microarray is a high-throughput method for probing an entire collection of proteins, it is only as good as the quality of the proteins fixed on the chip.

Microfluidics is a miniaturized technique that has advanced rapidly with the aim of analyzing small volumes of protein samples. Recent advances have been made in implementing microfluidics for protein sample treatment, cell manipulation, sample cleanup, protein fractionation, and on-chip proteolytic digestion (Veenstra and Yates, 2006). With dramatic advances in microfabrication and microfluidic applications, several protein profiling strategies have emerged, such as microfluidics-based isoelectric focusing and microdialysis of small protein volumes, that have the potential for improved sample delivery to the MS. Both electrospray ionization (ESI) and matrix-assisted laser desorption/ionization (MALDI) interfaces have been investigated for microfabricated microfluidic devices, enabling protein/peptide separation based on chromatographic and/or electrokinetic principles. While ESI emitters have been effective for infusion analysis, the emitter tips can contribute to peak broadening, which is not conducive to microfluidics-based separation. For MALDI, crystallized peptides have been presented along the edge of a disc for MS analysis. In such devices, the increased surface-to-volume ratio allows protein digestion by proteolytic enzymes immobilized on the chip surface (Veenstra and Yates, 2006). One successful application is the multidimensional separation of yeast cell lysate proteins using a microfluidic interface (Veenstra and Yates, 2006).

Liquid chromatography coupled with mass spectrometry (LC-MS) is another approach toward a fully integrated proteomic system for analyzing protein components, and it is the main topic of this discussion. LC is the most commonly used method for separating peptides and proteins, and the separated analytes are detected and identified by mass spectrometry. Inside the mass spectrometer, ionized biomolecules are further separated by their mass-to-charge ratio (m/z) before fragmentation. The following sections discuss the various LC-MS methods currently used in quantitative expression proteomics.

Sample Preparation

Given the large differences in concentration among the thousands of proteins present in a sample, it is imperative to generate consistent and reliable data. To achieve this, an optimal protocol is needed to minimize the impact of the various factors that influence data quality. These include the sample type (body fluid, cells, tissue, etc.), sample collection method, sample storage, the physicochemical properties of the analytes extracted and/or solubilized, and the reagents used.

Human samples are the most studied specimens for biomolecular profiling, as they carry physiological information on the onset of disease. Monitored for their diagnostic potential, human specimens include serum, plasma, cerebrospinal fluid, bile, urine, milk, seminal fluid, hair, skin, saliva, etc. Careful selection of samples is necessary, as certain specimens are more conducive to proteomic investigations than others. For example, serum and plasma have a high protein content compared with saliva, which is 99% water and 0.3% protein, making the former more suitable for proteomic studies. Conversely, urine, which is composed mainly of metabolites and end products filtered from the blood, is more appropriate for metabolomic studies. Similarly, hair is recognized as an attractive specimen for drug analysis. Hence, a compromise between sample availability and study objective is needed, as not all analytes appear in all body fluids (Rieux, 2006). Once samples are selected, their integrity is maintained under optimal storage conditions to minimize sample variability before analysis. Storage conditions vary according to the storage duration and the kind of analytes to be preserved. Most collected body fluids can be stored at −80°C. Likewise, mammalian cell lines and tissue samples collected at biopsy are usually stored long term in cryovials; these materials are frozen in liquid nitrogen and can be maintained at low temperatures for years. Protein loss can occur through adsorption to surfaces or through aggregation. In such cases, samples sensitive to certain surfaces or to light can be stored in special dark (polypropylene) vials under a regulated environment (Rieux, 2006).

Processing fluid samples can be difficult, as they may contain cells, proteins, peptides, nucleic acids, lipids, sugars, metabolites, and other small molecules. An initial step is to separate cells and cellular debris from soluble components by low-speed centrifugation and to clarify the sample by filtration. This is followed by steps that ensure proper processing, sampling, and storage of specimens. Since incorrect sampling can activate endogenous processes and alter analyte composition, optimal handling conditions after sample collection are essential. One example is serum preparation from whole blood through coagulation: because coagulation is a cascade of proteolytic events, it is difficult to control and ultimately affects the composition of the resulting serum. Experimental variation due to erroneous sampling can be minimized by careful selection of the sample population, fewer pretreatments after collection, and analysis of an appropriate number of samples.

In the absence of a universal sample preparation protocol, extraction of proteins from samples is accomplished by a combination of mechanisms such as cell lysis, density gradient centrifugation, fractionation, ultrafiltration, depletion/enrichment, and precipitation (Wells et al., 2003).

Cell lysis can be carried out in an appropriate solubilization buffer by mechanical or chemical disruption. Gentle lysis methods include osmotic, freeze-thaw, and detergent-based lysis, whereas vigorous methods include sonication, grinding, mechanical homogenization, and glass bead disruption (Wells et al., 2003).

Density gradient centrifugation can resolve complex cell lysates by separating intact organelles based on their density, size, and shape. This approach has been successful in isolating specific protein complexes and/or proteins from particular subcellular compartments such as nuclei and mitochondria. Proteins from the resulting fractions are then analyzed further for protein identification (Graves and Haystead, 2002). Because of their complexity, cell lysates need further fractionation to separate low-abundance proteins from high-abundance ones.

Fractionation reduces sample complexity by enriching a specific subset of proteins before separation and MS analysis (Wells et al., 2003). While fractionation can help detect more proteins by mass spectrometry, it is limited to soluble proteins, which can be easily recovered (Graves and Haystead, 2002).

Ultrafiltration can also reduce sample complexity by removing high-molecular-weight (MW) proteins above a MW cutoff, thus increasing the relative concentration of low-MW proteins in a sample. It cannot be used for targeted protein profiling; however, when combined with other protein depletion/enrichment techniques, it can extend the dynamic range of proteomic analysis (Graves and Haystead, 2002). Magnetic beads are one such method used in conjunction with ultrafiltration. This method not only enriches and desalts peptides from a mixture but also suppresses noise, enabling quantification of low-mass analytes. The magnetic beads are coated with functional groups, such as reversed-phase C8, and peptide recovery from the beads is reproducible when performed by an automated sample processing robot (Orvisky et al., 2006).

Besides ultrafiltration, depletion of high-abundance proteins and/or enrichment of low-abundance proteins are other methods aimed primarily at low-abundance proteins, which can be potential biomarkers. High-abundance proteins are removed using antibody-based or affinity dye-based resins. Different suppliers offer matrices targeting a variety of proteins; one therefore needs to evaluate several methodologies (MARS-Agilent, ProteoMiner-BioRad, Sigma-16 protein depletion) before deciding on the method that gives the best results. For the label-free approach, depletion has been shown to improve the linearity of protein intensity within the dynamic range of an MS instrument (Wang et al., 2006a).
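To illustrate why depletion helps, the sketch below computes the concentration range, in orders of magnitude, that a measurement must span before and after removing the most abundant proteins. It assumes idealized, complete removal of the top-N proteins with no co-depletion of bound low-abundance proteins; the function names, protein names, and concentrations are hypothetical.

```python
import math


def dynamic_range_orders(concentrations):
    """Orders of magnitude spanned: log10(max / min) of the positive concentrations."""
    values = [c for c in concentrations.values() if c > 0]
    return math.log10(max(values) / min(values))


def deplete_top_n(concentrations, n):
    """Idealized depletion: remove the n most abundant proteins completely."""
    ranked = sorted(concentrations, key=concentrations.get, reverse=True)
    return {name: concentrations[name] for name in ranked[n:]}


# Hypothetical protein concentrations (mg/mL); names are placeholders.
sample = {"HAP1": 40.0, "HAP2": 10.0, "MID1": 2.5, "LOW1": 1e-3, "LOW2": 1e-6}
before = dynamic_range_orders(sample)
after = dynamic_range_orders(deplete_top_n(sample, 2))
print(f"{before:.2f} -> {after:.2f} orders of magnitude")
```

In this toy case, removing the two most abundant proteins narrows the span from about 7.6 to about 6.4 orders of magnitude; real depletion is incomplete and can also remove proteins bound to the depleted carriers.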

Another technique commonly used for concentrating proteins in sample extracts is protein/peptide precipitation, which removes contaminating species such as nucleic acids, lipids, and salts (Graves and Haystead, 2002). Salting out, isoelectric precipitation, and organic solvent precipitation are additional methods commonly used in protein sample preparation (Graves and Haystead, 2002). Organic solvents or denaturing conditions release smaller proteins, peptides, and hormones bound to large carrier proteins, which could otherwise be lost during sample preparation. Cleanup kits can be used instead of precipitation to remove insoluble components from a sample before enzymatic digestion. Furthermore, for sensitive MS analysis, salts, detergents, and electrolytes need to be removed, as they cause ion suppression (Graves and Haystead, 2002).

Once the analytes of interest are extracted, peptides are generated by enzymatic or chemical cleavage of the intact proteins and subjected to MS analysis for detection and identification. Trypsin, the enzyme most commonly used for proteolytic digestion, cleaves at the carboxyl side of lysine and arginine residues. Because of this specificity, the resulting peptides can be predicted, and they are generated reproducibly and efficiently. The peptides are then cleaned up/desalted using a C18 cartridge and/or ZipTip before downstream MS analysis (Graves and Haystead, 2002).
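Trypsin's predictable specificity is what allows peptides to be generated in silico for database searching. The sketch below is a minimal illustration: it cleaves after K or R and, as an additional assumption beyond the K/R rule, applies the widely used convention of not cleaving when the next residue is proline. The function name and example sequence are hypothetical.

```python
import re


def tryptic_digest(sequence, missed_cleavages=0):
    """In silico trypsin digest.

    Cleaves on the C-terminal side of K or R, except when the next residue is P
    (a commonly applied rule). Peptides containing up to `missed_cleavages`
    uncleaved internal sites are also returned.
    """
    # Zero-width split after K/R that is not followed by P (Python 3.7+).
    fragments = [f for f in re.split(r"(?<=[KR])(?!P)", sequence) if f]
    peptides = []
    for start in range(len(fragments)):
        last = min(start + missed_cleavages, len(fragments) - 1)
        for end in range(start, last + 1):
            peptides.append("".join(fragments[start:end + 1]))
    return peptides


print(tryptic_digest("AKPLRGKVE"))
print(tryptic_digest("AKPLRGKVE", missed_cleavages=1))
```

The first call yields `['AKPLR', 'GK', 'VE']`: the K followed by P is left intact, while the R and the second K are cleaved.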

LC Separation Mechanisms

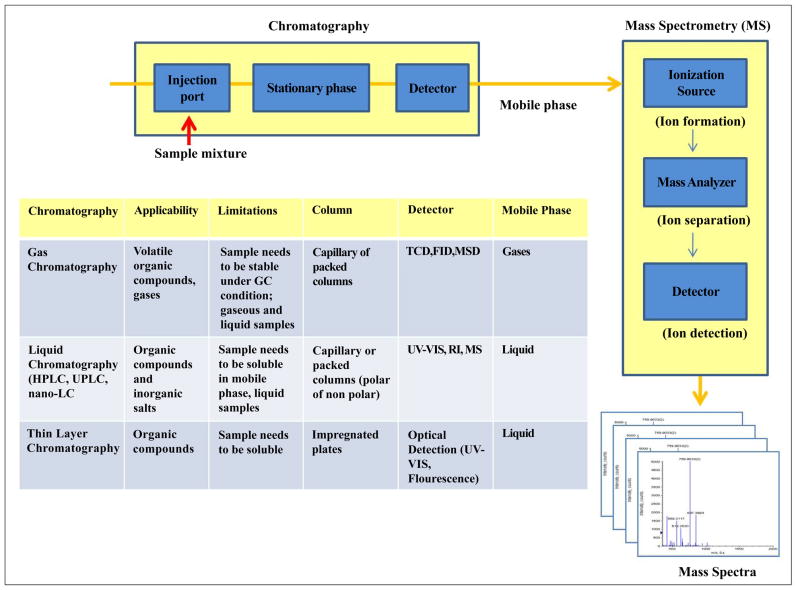

Since the proteome of an organism is too complex to be analyzed with a single separation step, multidimensional separation is needed to achieve greater selectivity and peak capacity. Chromatography is one technique that can separate proteins/peptides before downstream analysis. Broadly, chromatography can be categorized as gas chromatography (GC), LC, or thin-layer chromatography (TLC) (Fig. 1).

Figure 1. Basic LC-MS system and different kinds of chromatography (GC, LC, TLC).

(TCD: thermal conductivity detector, FID: flame ionization detector, MSD: mass selective detector, UV-VIS: ultraviolet-visible, RI: refractive index).
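The gain from multidimensional separation can be estimated with the product rule: for two fully orthogonal dimensions, the combined peak capacity is at most the product of the individual peak capacities. The sketch below also uses the common gradient approximation n = 1 + t_g/w for one dimension. The orthogonality factor, the treatment of each SCX fraction as one first-dimension "peak", the function names, and all numbers are simplifying, hypothetical assumptions.

```python
def peak_capacity_1d(gradient_time, peak_width):
    """Gradient peak capacity n = 1 + t_g / w (same time units for both)."""
    return 1.0 + gradient_time / peak_width


def peak_capacity_2d(n_first, n_second, orthogonality=1.0):
    """Product rule for two dimensions, scaled by the assumed fraction of the
    2D separation space actually used (1.0 = fully orthogonal, an upper bound)."""
    if not 0.0 < orthogonality <= 1.0:
        raise ValueError("orthogonality must be in (0, 1]")
    return n_first * n_second * orthogonality


# Hypothetical setup: 20 SCX fractions, each run on a 60 min RP gradient
# with an average base peak width of 0.3 min.
n_rp = peak_capacity_1d(60.0, 0.3)
print(round(n_rp))                             # RP dimension alone
print(round(peak_capacity_2d(20, n_rp)))       # ideal SCX x RP
print(round(peak_capacity_2d(20, n_rp, 0.5)))  # only half the 2D space used
```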

Liquid chromatographic separation involves two phases: a liquid mobile phase and a stationary phase. The mobile phase carries the analytes through the stationary phase, often at high pressure, separating them before they are analyzed by mass spectrometry. The stationary phase is typically a column packed with irregular or spherical particles, or a porous monolith. Separation can be performed by standard liquid chromatography, high-performance liquid chromatography (HPLC), or ultra-performance liquid chromatography (UPLC). UPLC is a newer technique similar to HPLC, but with shorter run times and lower solvent consumption. UPLC separations are performed at high pressure on columns packed with small (sub-2 μm) particles, which improve separation efficiency. Parameters affecting LC performance include solvent strength, pH, organic modifier, ion-pairing reagent, buffer type, and ionic strength. Based on the relative polarity of the mobile and stationary phases, LC is further divided into two subclasses: reverse phase and normal phase. Reverse phase uses a polar mobile phase, such as a water-methanol mixture, with a non-polar stationary phase, such as C18 packing, whereas normal phase uses a more polar stationary phase with a non-polar mobile phase (e.g., toluene).
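The link between particle size and efficiency can be illustrated with the van Deemter equation, H = A + B/u + C·u, where H is the plate height and u the linear velocity. The sketch writes it in a common reduced form in which the A term scales with particle diameter and the mass-transfer term with its square, then compares a 5 μm packing with a 1.7 μm packing. The coefficients a, b, c and the diffusion coefficient are assumed, typical-order values not fitted to any real column, and the function names are hypothetical.

```python
import math


def plate_height(u, dp, dm, a=1.0, b=2.0, c=0.1):
    """van Deemter plate height H = A + B/u + C*u, written in reduced form.

    H = dp * (a + b/nu + c*nu), with reduced velocity nu = u * dp / dm.
    u: linear velocity (m/s), dp: particle diameter (m), dm: analyte diffusion
    coefficient (m^2/s). a, b, c are assumed dimensionless coefficients.
    """
    nu = u * dp / dm
    return dp * (a + b / nu + c * nu)


def van_deemter_optimum(dp, dm, a=1.0, b=2.0, c=0.1):
    """Velocity minimizing H (from dH/du = 0) and the corresponding minimum H."""
    u_opt = math.sqrt(b / c) * dm / dp
    h_min = dp * (a + 2.0 * math.sqrt(b * c))
    return u_opt, h_min


dm = 1e-9  # assumed diffusion coefficient, m^2/s
for dp in (5e-6, 1.7e-6):
    u_opt, h_min = van_deemter_optimum(dp, dm)
    print(f"dp={dp * 1e6:.1f} um: u_opt={u_opt * 1e3:.2f} mm/s, H_min={h_min * 1e6:.2f} um")
```

Under these assumptions, the smaller particles give a roughly threefold lower minimum plate height and a roughly threefold higher optimal velocity, so comparable efficiency can be reached in a shorter run, at the cost of higher back-pressure.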

Discussed below are some of the most commonly employed LC-MS based separation methodologies (adsorption, partition, ion exchange, affinity, size exclusion) currently used in quantitative proteomics.

Reverse phase (RP) chromatography is a commonly used liquid-based separation that exploits the physicochemical properties of proteins/peptides to generate its chromatographic profile. Because the column medium differentially retards the migration of peptides, RP-LC is well suited to complex peptide mixtures. Parameters such as the stationary and mobile phases, retention mode, analyte charge, hydrophobicity, and analyte conformation are critical for RP-LC separation (Rieux, 2006). Typically, differences in hydrophobicity are the driving force for separation. Analytes are eluted by a mobile phase in which the proportion of organic modifier relative to water is varied. Depending on the organic modifier used, different solute-solvent interactions occur, which allow orthogonal selectivity in a two-dimensional RP-RP LC setup (Rieux, 2006). In a multidimensional setup, RP is the last dimension, because the volatility of the organic mobile phase facilitates coupling to the MS interface. Advantages include fewer ion suppression effects during LC elution and efficient retention of polar compounds (Rieux, 2006).
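Since hydrophobicity drives RP separation, a crude way to anticipate elution order is to rank peptides by their grand average of hydropathy (GRAVY) on the Kyte-Doolittle scale. This is only a rough proxy assumed for illustration: actual RP retention also depends on peptide length, sequence context, ion pairing, and gradient conditions, and dedicated retention-time predictors do considerably better. The function names and example peptides are hypothetical.

```python
# Kyte-Doolittle hydropathy values for the 20 standard amino acids.
KYTE_DOOLITTLE = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5,
    "Q": -3.5, "E": -3.5, "G": -0.4, "H": -3.2, "I": 4.5,
    "L": 3.8, "K": -3.9, "M": 1.9, "F": 2.8, "P": -1.6,
    "S": -0.8, "T": -0.7, "W": -0.9, "Y": -1.3, "V": 4.2,
}


def gravy(peptide):
    """Grand average of hydropathy: mean Kyte-Doolittle value over residues."""
    return sum(KYTE_DOOLITTLE[aa] for aa in peptide) / len(peptide)


def rough_rp_elution_order(peptides):
    """Order peptides from least to most hydrophobic as a crude proxy for RP elution."""
    return sorted(peptides, key=gravy)


print(rough_rp_elution_order(["LLVF", "DEKS", "GAST"]))
```

The charged, polar peptide DEKS is ranked first (expected to elute earliest) and the hydrophobic LLVF last.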

Normal phase (NP) chromatography is a less commonly used separation method that targets compounds insoluble in water/organic solvent mixtures, and it has been applied to isomer separation (McMaster, 2005). NP uses stationary phases of silica, amino, cyano, or diol packing, with a mainly organic mobile phase (Rieux, 2006). NP offers good retention of polar compounds and fine separation capabilities, but it suffers from solubility issues and poor reproducibility (Rieux, 2006).

Ion exchange (IEX) chromatography is another method commonly used for purifying proteins or pre-fractionating peptides. It uses the charge and surface charge distribution of analytes to determine their interaction with the solid phase. Since pH affects the charges on amino acids, pH control of the mobile phase is critical, as it determines which IEX mode can be exploited. Ion exchange chromatography can be categorized as weak anion exchange (WAX), weak cation exchange (WCX), strong anion exchange (SAX), or strong cation exchange (SCX). Weak ion exchangers are ionized only over a relatively narrow pH range, whereas strong ion exchangers remain ionized over a wide pH range. Elution is achieved by varying the pH or salt concentration of the mobile phase, which displaces peptides bound to the stationary phase (Rieux, 2006). At low pH (< 3), the N-terminal amino group and basic amino acid residues contribute a net positive charge to an analyte, which can then be separated by cation exchange chromatography. Anion exchange chromatography, however, requires higher pH values. Besides buffers of different pH, solvents with stepwise increases in salt concentration can also be used to elute peptides. Salt displacers can lead to adduct formation; this can be minimized by using ammonium acetate or ammonium formate, which are preferred over Na+ and K+ salts. Several studies using ion exchange chromatography find SCX the preferred mode of fractionation, as it allows buffer exchange before separation in a second-dimension RP-LC. Among the 2D LC separations evaluated, SCX-RP and RP-RP LC were found to be comparable (Delmotte et al., 2007). Groups using SAX-RP reported similar results on switching to RP-RP and SCX-RP 2D LC (Rieux, 2006). Advantages of IEX include high resolving power, fast separation, compatibility with downstream chromatographic separations or assays, high recovery, and the ability to concentrate proteins from dilute solutions.
However, certain constraints apply: the sample must be applied at low ionic strength and under controlled pH conditions, the chromatographic instrumentation must be protected against salt-induced corrosion, and recovered proteins require post-chromatographic concentration and are often in high salt concentrations (>1 M), which makes them unsuitable for downstream applications (Stanton, 2003).
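Because SCX retention at low pH depends mainly on how many positive charges a peptide carries, peptides can be roughly grouped by expected charge. The sketch below assumes a simplified charge model (N-terminal amine and every K, R, and H fully protonated; carboxyl groups neutral), which ignores partial protonation near pH 3 and structural effects. Higher-charge groups are generally expected to bind more strongly and elute at higher salt. The function names and example sequences are hypothetical.

```python
from collections import defaultdict


def approx_charge_low_ph(peptide):
    """Approximate net charge at pH < 3.

    Simplification: the N-terminal amine and every K, R, and H are counted as
    fully protonated (+1 each); carboxyl groups are treated as neutral.
    """
    return 1 + sum(peptide.count(residue) for residue in "KRH")


def group_by_scx_charge(peptides):
    """Group peptides by approximate low-pH charge, lowest charge first."""
    groups = defaultdict(list)
    for peptide in peptides:
        groups[approx_charge_low_ph(peptide)].append(peptide)
    return dict(sorted(groups.items()))


print(group_by_scx_charge(["LVNELTEFAK", "HLVDEPQNLIK", "AEFVEVTK"]))
```

Typical tryptic peptides ending in K or R land in the +2 group, while an extra histidine, as in HLVDEPQNLIK, moves a peptide to +3.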

Affinity chromatography enriches a mixture of analytes for proteins with particular structural features, such as phosphorylation, glycosylation, nitration, or histidine residues. Selection is based on the interaction of analytes with molecules immobilized on the solid packing material. Depending on the immobilized molecules used, affinity chromatography is described as immunoaffinity chromatography (antibodies), immobilized metal affinity chromatography (IMAC), or ligand affinity chromatography (dyes, lectins, hexapeptides, etc.). Immunoaffinity chromatography uses antibody specificity to select analytes (Rieux, 2006). Amino acids in the antibody’s binding site engage in various non-covalent interactions with amino acids of the peptides/proteins. The three-dimensional structure of the immobilized antibodies is preserved by using a buffer whose composition and pH resemble physiological conditions, and acidic buffers are used to disrupt the interactions and elute the analytes of interest. For example, protein A and protein G form complexes with immunoglobulins and are used to deplete serum/plasma of antibodies (Rieux, 2006). Similarly, IMAC relies on complex formation between metal ions and specific amino acids and their functional groups, particularly histidine. For example, phosphoproteins and phosphoamino acids bind to immobilized Fe3+ ions and to oxides of metals such as aluminium, zirconium, and titanium (Rieux, 2006), which is exploited to retain phosphoproteins/peptides from protein mixtures. Likewise, glycosylated analytes can be selected using lectin-packed columns that recognize glycosylation motifs on peptides/proteins. Such columns use buffers with a higher NaCl concentration to which 0.2–0.8 M sugar is added (Rieux, 2006). Affinity chromatography is applicable to most organic and non-volatile compounds and offers a wide range of adjustable parameters (solid and liquid phase) to improve separation.
However, affinity chromatography can be time consuming and can be used only with certain analytical detectors (Stanton, 2003). In addition, IMAC can be biased toward proteins and peptides containing histidine residues (Rieux, 2006).

Size exclusion chromatography (SEC) uses matrices of defined pore sizes to separate analytes by size. Proteins/peptides small enough to penetrate the pores elute toward the end of the run, which separates them from high-MW compounds and polymers. Restricted access media (RAM), one kind of SEC, are used extensively in pharmaceutical analysis to separate low-molecular-weight analytes from macromolecular sample components. Porous silica-based packings are used to deplete samples of albumin by a combination of size exclusion and adsorption (McMaster, 2005). Advantages include less sample loss due to minimal interaction with the stationary phase, low-molecular-weight sample cleanup, and the ability to screen for small molecules in a sample mix. Since analytes are separated by size, a 10% difference between the molecular masses of peptides/proteins is needed for good resolution. In addition, only a limited number of bands can be accommodated in a single run (McMaster, 2005).
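In practice, SEC columns are often calibrated with standards of known MW, because log10(MW) is approximately linear in the partition coefficient Kav = (Ve − V0)/(Vt − V0) over the column's fractionation range, where Ve is the elution volume, V0 the void volume, and Vt the total column volume. The sketch below fits that line by least squares and uses it to estimate an unknown's MW. The column volumes, standards, and function names are hypothetical, and the standards were chosen to lie exactly on a line; real proteins deviate, particularly non-globular ones.

```python
import math


def kav(ve, v0, vt):
    """Partition coefficient Kav = (Ve - V0) / (Vt - V0), all volumes in the same units."""
    return (ve - v0) / (vt - v0)


def fit_calibration(standards, v0, vt):
    """Least-squares fit of log10(MW) = slope * Kav + intercept.

    standards: list of (elution_volume, molecular_weight) pairs.
    """
    xs = [kav(ve, v0, vt) for ve, _ in standards]
    ys = [math.log10(mw) for _, mw in standards]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def estimate_mw(ve, v0, vt, slope, intercept):
    """Estimate the MW of an unknown from its elution volume via the calibration line."""
    return 10 ** (slope * kav(ve, v0, vt) + intercept)


# Hypothetical column (volumes in mL) and calibration standards (MW in Da).
V0, VT = 8.0, 24.0
standards = [(11.2, 10 ** 5.4), (14.4, 10 ** 4.8), (17.6, 10 ** 4.2)]
slope, intercept = fit_calibration(standards, V0, VT)
print(round(estimate_mw(16.0, V0, VT, slope, intercept)))
```

The negative slope reflects that larger molecules are excluded from more of the pore volume and therefore elute earlier.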

Hydrophilic interaction liquid chromatography (HILIC) and hydrophobic interaction chromatography (HIC) are two further separation modes. HILIC is a variant of normal phase chromatography that uses water-miscible solvents on a polar stationary phase; analytes adsorbed on the stationary phase are partitioned into the mobile phase. In HILIC, charged polar compounds also undergo cation exchange with the silanol groups of the stationary phase (silica particles coated with hydrophilic moieties) (McMaster, 2005). HIC, on the other hand, is more closely related to RP, except that it exploits the hydrophobic properties of proteins in a more polar and less denaturing environment. It assumes that all proteins precipitate at high concentrations of neutral salts and are released from adsorbing surfaces at lower salt concentrations: protein binding in HIC is promoted by high concentrations of anti-chaotropic salts, and elution is carried out by decreasing the salt concentration of the adsorption buffer (McMaster, 2005). HILIC and HIC share the following advantages: (i) they are complementary to RP-LC, (ii) they enhance ESI-MS, as a higher organic content improves sensitivity, and (iii) they allow sample preparation from the liquid phase. Both techniques suffer from limited versatility and cannot analyze non-polar compounds (McMaster, 2005).

Ion pair chromatography (IPC) differs from reverse phase chromatography in that it focuses on separating polar or ionic biomolecules. Analyte selectivity is determined by the mobile phase, in which the organic eluent is supplemented with an ion-pairing reagent carrying a charge opposite to that of the analyte of interest. The ion-pairing reagent in the eluent is retained on the stationary phase through its hydrophobic moiety and forms an ion pair with the oppositely charged analyte (McMaster, 2005). Because the interactions between the various analytes and the IPC reagent differ, each ion pair is retained differently, which yields sharper separation. One successful application of IPC is the separation of biogenic amines such as adrenaline, tryptamine, and dopamine, which have similar retention times on their own but acquire distinct retention times as ion pairs. The choice of IPC reagent is dictated by the counter ion required and is further optimized by adjusting the pH and the reagent concentration (McMaster, 2005). Disadvantages include short column life and poor reproducibility.

Protein fractionation two dimensional (PF2D) is an alternative to the classical proteome approach, in which protein fractions are separated in the first dimension by isoelectric point and in the second dimension by hydrophobicity (Ruelle et al., 2007). PF2D is thus a two dimensional liquid phase separation technique (analogous to 2-DE) whose collected fractions can be analyzed by mass spectrometry: samples separated on the first dimension column are passed through second dimension reverse phase HPLC (Ruelle et al., 2007). Limitations of PF2D include the need for large sample volumes and frequent replacement of the separation column. PF2D has been applied successfully to characterizing immunogens from the nonpathogenic bacterium Bacillus subtilis, in conjunction with polyclonal antibodies and tandem mass spectrometry (Ruelle et al., 2007). This setup, aptly referred to as “i-F2D-MS/MS”, integrated analytical 2D LC (PF2D) with immunoblotting and mass spectrometry (Ruelle et al., 2007).

Mass Spectrometry

While significant advances have been made at the liquid chromatography level, mass spectrometry remains an integral tool for protein identification and quantitation. A mass spectrometer consists of (i) an ionization source at the front end that converts eluting peptides into gas phase ions; (ii) a mass analyzer that separates ions by their mass-to-charge (m/z) ratio; and (iii) a detector that registers the relative abundance of ions at discrete m/z values. Two ionization methods that have revolutionized the use of mass spectrometers are MALDI and ESI. Both are soft-ionization techniques that form intact gas-phase ions before their molecular masses are measured by the mass analyzer. Mass analyzers currently used in mass spectrometers include the ion trap (IT), time-of-flight (TOF), quadrupole (Q), ion cyclotron resonance (ICR) and Orbitrap (Yates et al., 2009). The mass analyzer plays a critical role in mass spectrometry, since it stores and separates ions by m/z. Mass analyzers are compared in terms of sensitivity, mass resolution, mass accuracy, analysis speed, ion transmission and dynamic range (Yates et al., 2009). A mass spectrometer can combine an ion source with one mass analyzer or with several; instruments with more than one analyzer are referred to as hybrid instruments. The most common instrument configurations include ESI-Q-Q-Q, ESI-Q-TOF, Q-IT, IT-TOF, TOF-TOF, IT-FTICR, IT-Orbitrap, MALDI-TOF and MALDI-QIT-TOF (Veenstra and Yates, 2006; Panchaud et al., 2008).

Based on how they perform the analysis, mass analyzers are categorized as scanning (TOF), ion beam (Q) or trapping (Orbitrap, IT, ICR) analyzers (Yates et al., 2009). Scanning analyzers are coupled to pulsed ionization techniques (MALDI), whereas ion beam and trapping analyzers are coupled to a continuous ESI source (Yates et al., 2009). Instruments with ion trap analyzers feature high sensitivity, fast scan rates, a high duty cycle and the ability to perform multiple stages of MS, although with relatively modest resolution and mass accuracy (about 100 ppm). Mass spectrometers with a single ion trap are therefore well suited to the bottom-up approach, mainly because of their high sensitivity and fast scan rates. Because it can select and trap ions and control ion reactions, the ion trap is mostly used as the front end of Orbitrap and Fourier transform-ion cyclotron resonance (FT-ICR) instruments (Yates et al., 2009). The Orbitrap, a recent addition to mass spectrometry instrumentation, and FT-ICR are discussed later in this section.

Once ions pass through the mass analyzer, they are detected and converted into a signal by a detector. Three kinds of detector are available: i) the electron multiplier, ii) the photomultiplier conversion dynode and iii) the Faraday cup. Each converts the incident ion signal into an output current that is then measured directly; the multiplier-based detectors additionally amplify the signal (Graves and Haystead, 2002).

MALDI is derived from laser desorption ionization (LDI). Whereas in LDI the sample is simply air-dried on a metal surface, MALDI uses a matrix compound that absorbs energy from the laser and transfers it to the analyte. A variety of matrices, including aromatic acids, can be used for this purpose: the aromatic group absorbs energy at the laser wavelength, which results in proton transfer to the analyte (Veenstra and Yates, 2006). MALDI ionizes peptides and proteins quickly and efficiently, but the quality of its spectra depends greatly on matrix preparation. Because the sample co-crystallizes with an excess of matrix solution, many sample-matrix preparation methods have been tried. All of them aim at a homogeneous layer of analyte crystals, since nonuniform sample/matrix crystals give low resolution and a poor correlation between analyte concentration and signal intensity. In general, a MALDI ion source interfaced with a TOF mass analyzer yields an average of 30–40% sequence coverage from a tryptic digest of a target protein.

ESI, on the other hand, forms ions at atmospheric pressure. A peptide/protein solution is passed through a fine needle held at a high potential, which produces charged droplets; these shrink by solvent evaporation, increasing their charge density until gas-phase analyte ions are released. The ESI source tends to produce multiply charged peptide ions, depending on the number of groups on the polypeptide chain that are available for ionization (Veenstra and Yates, 2006). Tandem MS of polypeptides is usually performed in positive ionization mode, although negative ionization can be applied to identify sulfated or phosphorylated peptides. A common ESI setup couples reverse phase liquid chromatography (RP-LC) to ESI-MS/MS. The flow rate is adjusted to the column format: typical flow rates are 100–300 nL/min for nano LC and 1–100 μL/min for micro LC (Veenstra and Yates, 2006).
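Because ESI produces a series of multiply charged ions, the neutral mass of an analyte has to be inferred from its m/z values. The following minimal Python sketch shows the underlying arithmetic, assuming positive-mode protonation ([M + zH]z+) and two adjacent peaks from one charge-state envelope; the function names are illustrative and do not correspond to any particular software package.

```python
PROTON = 1.007276  # mass of a proton (Da)


def mz_for_charge(neutral_mass: float, z: int) -> float:
    """m/z of an [M + zH]z+ ion produced by positive-mode ESI."""
    return (neutral_mass + z * PROTON) / z


def deconvolve_adjacent(mz_low_charge: float, mz_high_charge: float) -> tuple[int, float]:
    """Estimate charge z and neutral mass M from two adjacent envelope peaks.

    mz_low_charge carries charge z; mz_high_charge carries z + 1 and therefore
    appears at lower m/z. Solving both [M + zH]z+ equations for z gives the
    expression below.
    """
    z = round((mz_high_charge - PROTON) / (mz_low_charge - mz_high_charge))
    neutral_mass = z * (mz_low_charge - PROTON)
    return z, neutral_mass


# A hypothetical 10 kDa protein observed at charge states 10+ and 11+.
m10 = mz_for_charge(10000.0, 10)
m11 = mz_for_charge(10000.0, 11)
print(round(m10, 4), round(m11, 4))
print(deconvolve_adjacent(m10, m11))
```

Real deconvolution software uses the whole charge envelope rather than a single pair of peaks, which makes the estimate more robust to noise.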

Comparisons between MALDI and ESI show that the two strategies are complementary, each having its own strengths and weaknesses. MALDI mainly produces singly charged peptide ions, which makes mass spectra very straightforward to interpret. It is sensitive and more tolerant of buffers, salts and detergents than ESI. It works best with simpler protein mixtures, for which high ion yields of intact analyte can be achieved with high accuracy. However, its application is limited by: i) the inability of certain peptides to co-crystallize with the matrix; ii) differences in ionization efficiency, so that not all expected tryptic peptides are observed; and iii) the need for homogeneous sample-matrix crystals as a good target (Veenstra and Yates, 2006). LC-ESI, for its part, generates multiply charged ions directly from the sample solution. When coupled to LC, continuously separated peptides can be examined sequentially with high efficiency and increased throughput. However, ESI is less tolerant of interfering compounds in the sample matrix, and when peptides elute from the chromatographic column faster than they can be selected for MS/MS, some peptides present in the sample can be severely under-sampled (Veenstra and Yates, 2006).

Fourier transform-ion cyclotron resonance (FT-ICR) is a mass analyzer with high resolution, peak capacity and resolving power. In FT-ICR, ions circulating in the cyclotron are irradiated with an electromagnetic wave at their own cyclotron frequency, which leads to resonant absorption of the wave (Yates et al., 2009). The energy transferred to an ion increases its kinetic energy and hence the radius of its trajectory. All ions in the cell are then excited simultaneously by a rapid scan over a large frequency range within a span of about 1 microsecond (Yates et al., 2009). A Fourier transform mass spectrometer can acquire a spectrum in about 1 s. These instruments combine high sensitivity with a dynamic range of up to 10^6 and the ability to hold ions for longer durations. The ability to select ions of a single mass based on their resonance frequencies raises the resolution of these instruments to between 100,000 and 500,000 (Yates et al., 2009).
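The measurement principle of FT-ICR rests on the cyclotron equation, f = zeB/(2πm): an ion's frequency is inversely proportional to its m/z. The minimal sketch below converts between m/z and frequency for an assumed 7 T magnet; it deliberately ignores the trapping-field corrections that real instruments handle through calibration, so it illustrates the relationship rather than an instrument's actual calibration law.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19  # C
DALTON = 1.66053906660e-27           # kg per unified atomic mass unit


def cyclotron_frequency(mz: float, field_tesla: float) -> float:
    """Ideal cyclotron frequency (Hz) of an ion with the given m/z (Da per charge)."""
    return ELEMENTARY_CHARGE * field_tesla / (2 * math.pi * mz * DALTON)


def mz_from_frequency(freq_hz: float, field_tesla: float) -> float:
    """Invert f = zeB / (2*pi*m) to recover m/z from a measured frequency."""
    return ELEMENTARY_CHARGE * field_tesla / (2 * math.pi * freq_hz * DALTON)


# Hypothetical 7 T magnet: higher m/z ions circulate more slowly.
for mz in (500.0, 1000.0, 2000.0):
    print(f"m/z {mz:7.1f} -> {cyclotron_frequency(mz, 7.0) / 1e3:8.2f} kHz")
```

For m/z 1000 at 7 T this gives roughly 107 kHz, and doubling m/z halves the frequency.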

The Orbitrap is the most recent addition to the pool of available mass analyzers and is commonly used in the hybrid LTQ-Orbitrap configuration. It traps ions in an electrostatic field in which they move in complex spiral patterns (Yates et al., 2009). Their m/z values are measured accurately from the oscillation frequencies of ions of different masses, which are extracted by Fourier transform. The Orbitrap mass analyzer offers a dynamic range greater than 10^3, high resolution (150,000), high mass accuracy (2–5 ppm) and an m/z range of 6000 (Yates et al., 2009). When coupled to an LTQ ion trap, the hybrid instrument provides high resolution and mass accuracy together with fast scans and high sensitivity. The Orbitrap has been used successfully for large-scale analysis of the Mycobacterium tuberculosis proteome and has been applied to a virtual multiple reaction monitoring (MRM) approach (Yates et al., 2009). Because the hybrid instrument contains two complete mass analyzers, each capable of detecting and recording ions, it can be operated for both top-down and bottom-up analyses (Scigelova and Makarov, 2006). Large-scale bottom-up proteomics is plagued by false identifications, which can be minimized if all data are acquired with high mass accuracy. In such experiments, the linear ion trap of the LIT-Orbitrap isolates and fragments the ions selected for analysis, while the full scan and the subsequent fragment ion spectra are recorded in the Orbitrap (Scigelova and Makarov, 2006), which considerably increases mass accuracy. MS/MS spectra recorded in the ion trap are similar to those recorded in the Orbitrap; the most significant difference lies in the resolution and mass accuracy of the peaks. The high resolving power of the Orbitrap also facilitates the analysis of intact proteins and helps locate modifications on fragment sequences (Scigelova and Makarov, 2006). Moreover, the Orbitrap has been applied to extensive characterization of phosphopeptides by means of MS^3 and de novo sequencing with algorithms such as PEAKS (Scigelova and Makarov, 2006).
Overall, the high mass accuracy of the Orbitrap benefits the quantification of low-abundance peptides, the profiling of complex samples and the identification of proteins from proteomes with limited sequence information (Yates et al., 2009).
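In an ideal Orbitrap, the axial oscillation frequency of an ion is proportional to the square root of z/m, so m/z can be recovered as C/f², where C is a constant set by the trap geometry and voltage. The sketch below assumes such an ideal trap, estimates C from a single hypothetical calibrant ion and expresses mass accuracy in ppm, the unit of the 2–5 ppm figure quoted above. Real instruments use more elaborate multi-point calibration, and the calibrant values here are invented.

```python
def calibration_constant(calibrant_mz: float, calibrant_freq_hz: float) -> float:
    """Ideal Orbitrap: f**2 * (m/z) is constant, so one known ion fixes C."""
    return calibrant_freq_hz ** 2 * calibrant_mz


def mz_from_axial_frequency(freq_hz: float, c: float) -> float:
    """Convert an axial frequency (extracted by Fourier transform) into m/z."""
    return c / freq_hz ** 2


def ppm_error(measured_mz: float, theoretical_mz: float) -> float:
    """Mass error in parts per million."""
    return (measured_mz - theoretical_mz) / theoretical_mz * 1e6


# Hypothetical calibrant at m/z 500.0 oscillating at 500 kHz.
c = calibration_constant(500.0, 500_000.0)
unknown = mz_from_axial_frequency(400_000.0, c)
print(unknown)                    # lower frequency -> higher m/z
print(ppm_error(500.001, 500.0))  # an error of 0.001 at m/z 500 is about 2 ppm
```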

Comparisons show that the Orbitrap offers mass accuracy comparable to that of FT-ICR instruments, but at much lower cost and with less maintenance. While the Orbitrap has been used in both bottom-up and top-down approaches, FT-ICR offers a broader m/z range that is better suited to top-down protein analysis (Yates et al., 2009).

Protein/Peptide Identification

Protein identification by mass spectrometry follows either a top-down or a bottom-up approach. In top-down proteomics, intact proteins or large protein fragments are introduced into the mass spectrometer, whereas in bottom-up proteomics, peptides are introduced as ESI ions. In top-down analysis, the precursor ions carry multiple charges and are fragmented into product ions by electron capture dissociation (ECD) and/or electron transfer dissociation (ETD). Interpreting top-down MS/MS spectra can be difficult, because each multiply charged precursor can generate a set of multiply charged product ions. This limitation is circumvented by charge state manipulation or by high mass accuracy instruments such as FT-ICR. While charge state manipulation is easily accomplished by ion/ion proton transfer reactions, access to an FT-ICR instrument is not easy to obtain. Although top-down proteomics is still an emerging field, its major advantages include complete protein sequence information, characterization and localization of post-translational modifications (PTMs), and no time spent on protein digestion, since intact proteins are analyzed. However, it is mainly restricted to proteins smaller than 50 kDa, requires high-end mass spectrometers (FT-ICR, Orbitrap) and generates composite spectra that are better suited to simple protein mixtures (Mikesh et al., 2006).

In the bottom-up approach, by contrast, peptides separated by online chromatography are ionized in a mass spectrometer. The peptide masses from the first (survey) scan form a peptide mass fingerprint (PMF) that is either searched directly against a theoretical database for protein identification or subjected to tandem MS by collision induced dissociation. Mass spectrometers typically used for the bottom-up approach include ion traps and hybrid Q-TOF and TOF-TOF instruments. Bottom-up is the most widely used approach and has been applied successfully to identifying proteins from complex mixtures. With online reverse phase LC coupled to MS, the whole setup can be automated, minimizing experimental variation. However, certain limitations exist: partial sequence coverage, since only a small fraction of peptides are identified; loss of PTM information; extended run times for multidimensional LC; and loss of low-abundance peptides masked by peptides from high-abundance proteins.

Although both approaches end with identification by mass spectrometry, top-down analysis uses offline separation, because coupling online chromatography to FT-ICR and other high mass accuracy spectrometers is difficult. Commonly used separation methods for top-down analysis are pre-fractionation, protein fractionation and purification. Affinity capture and solution phase isoelectric focusing, which separate proteins based on sequence and pI information, are also used. Ion exchange, size exclusion, hydrophilic or hydrophobic interaction chromatography and capillary electrophoresis are other viable options for protein fractionation (Mikesh et al., 2006). Gel electrophoresis can also be used, but sparingly, because proteins are difficult to extract from gels and the detergents involved interfere with MS analysis. The bottom-up approach, in turn, uses either gel electrophoresis (1-D or 2-D GE), with peptides extracted from in-gel digested proteins and separated by reverse phase LC, or multidimensional LC of the complex peptide mixture. Gel separation offers the advantage of additional protein information such as mass, pI and PTMs. Its drawbacks, however, include labor-intensive gel analysis, predominance of high-abundance proteins and poor recovery of hydrophobic proteins.

With common mass spectrometers, peptides introduced by ESI are sequenced by tandem mass spectrometry (MS/MS). By far the most common method is low energy collision activated dissociation (CAD), which cleaves amide bonds of the peptide backbone to produce b and y ions. However, CAD is poorly suited to characterizing post-translational modifications (PTMs), owing to missed cleavages by trypsin, low charge peptides and the size limitation imposed by CAD (Mikesh et al., 2006). Modifications of cellular proteins are widespread, and any protein alteration indicates a possible role in a biological phenomenon; because PTMs provide insights into the function of a protein, analyzing them is critical for disease investigations (Mikesh et al., 2006). To improve peptide sequence identification while retaining labile PTMs, two alternative dissociation methods have been developed: ETD and ECD. ETD uses ion/ion chemistry to fragment peptides by transferring an electron to multiply charged positive precursor ions. ECD, in contrast, relies on peptide cations held in the magnetic field of an FT-ICR to capture free low-energy electrons directly. Both reactions yield peptide cations containing an odd electron, which subsequently dissociate. ECD is largely indifferent to peptide sequence and length and therefore breaks the peptide backbone at random positions while retaining labile modifications. Although both techniques ultimately produce c and z ions by cleaving the Cα-N bond, they differ in the instruments required and in the way peptides are dissociated (Mikesh et al., 2006). ETD uses a radio frequency quadrupole ion trapping instrument that is relatively inexpensive, low maintenance and widely accessible, whereas ECD requires FT-ICR, a high mass accuracy instrument that is expensive and much less accessible (Mikesh et al., 2006).
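The b/y and c ion series mentioned above follow directly from residue masses. The minimal sketch below computes singly charged b, y and c ions for an unmodified peptide using standard monoisotopic residue masses. It takes c ions as b + NH3, one common convention, and omits z ions because their notation (z versus z•) varies between sources; the function name is illustrative.

```python
PROTON = 1.007276
WATER = 18.010565
AMMONIA = 17.026549

# Monoisotopic residue masses (Da) of the 20 standard amino acids.
RESIDUE = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276,
    "V": 99.06841, "T": 101.04768, "C": 103.00919, "L": 113.08406,
    "I": 113.08406, "N": 114.04293, "D": 115.02694, "Q": 128.05858,
    "K": 128.09496, "E": 129.04259, "M": 131.04049, "H": 137.05891,
    "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}


def fragment_ions(peptide: str) -> dict[str, list[float]]:
    """Singly charged b, c and y ion m/z values for every backbone cleavage.

    b and c are listed from b1/c1 upwards; y is listed from y(n-1) down to y1,
    so b[k] and y[k] come from the same cleavage site.
    """
    masses = [RESIDUE[aa] for aa in peptide]
    ions = {"b": [], "c": [], "y": []}
    for i in range(1, len(masses)):
        prefix = sum(masses[:i])  # N-terminal fragment with i residues
        suffix = sum(masses[i:])  # complementary C-terminal fragment
        ions["b"].append(prefix + PROTON)
        ions["c"].append(prefix + AMMONIA + PROTON)
        ions["y"].append(suffix + WATER + PROTON)
    return ions


ions = fragment_ions("PEPTIDE")
print([round(m, 3) for m in ions["b"]])
print([round(m, 3) for m in ions["y"]])
```

Each b/y pair from the same cleavage sums to the precursor mass plus two protons, a property search engines can exploit when checking assignments.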

PMF-based identification relies on the fact that each protein produces a characteristic pattern of peptide masses in the mass spectrometer. The fingerprint is searched against a proteome database, and the best match between the experimentally obtained peptide map and the theoretical PMFs of individual proteins in the database identifies the unknown protein. Factors that affect peptide mapping results can be grouped into fingerprint-constructing and fingerprint-searching factors (Veenstra and Yates, 2006). Factors that influence fingerprint construction include i) the noise level of the peptide set, ii) the number of peptides in a given fingerprint and iii) mass accuracy, which depends on instrument calibration. When searching the database, the characteristics of the organism being studied and specific PTMs are two factors that need attention. The query PMF is compared with every sequence in the specified database, and each match is evaluated by an algorithm that returns a probability-based score. For a tryptic digest, the theoretical protein sequence is cleaved after every K and R, the mass of each resulting fragment is calculated from the masses of its amino acids (in daltons), and a submitted peak whose mass matches a calculated mass is scored as either a random match or a true protein identification (Veenstra and Yates, 2006). The mass accuracy for an unknown sample can vary from 1 to 1000 ppm, with 100 to 400 ppm being most typical in many laboratories. The search tolerance should reflect how well the instrument is calibrated: a search that is more stringent than the calibration warrants will cause the algorithm to miss matches between observed peaks and database fragment masses, resulting in no identification. Sequence coverage (the percentage of the protein sequence covered by matched peptides) indicates how well a protein is represented in the query PMF. Fragments below 600 Da or above 3000 Da, and peaks below an intensity threshold, are excluded from the query PMF used in the database search.
PMF also has limitations: some peptides tend to ionize at the expense of others, and signals can arise from modified peptides that are not predicted by in silico digestion and therefore cannot be matched unless the modification is taken into account (Palcy and Chevet, 2006). Database size, the frequency distribution of peptide masses for a given protein and the distribution of mass accuracy are parameters that influence the specificity of a search; careful choice of these parameters lets the user achieve high specificity without missing true positive proteins (Veenstra and Yates, 2006). Because of suppression effects and ionization bias, spectral intensities do not correlate with the amount of peptide in the sample, so it is worth checking whether the intense peaks have been used for protein identification. For large proteins, a large number of peptides should be matched, whereas for a small protein a low number of peptide matches can still give reasonable coverage (Veenstra and Yates, 2006).
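The PMF procedure described above (in silico tryptic digestion, a ppm mass tolerance, a 600–3000 Da window and sequence coverage) can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not the scoring used by any search engine: it matches singly charged [M+H]+ values against a single protein, applies the common simplification "cleave after K or R unless followed by P", and ignores modifications and scoring statistics. The protein sequence in the example is an arbitrary test input.

```python
import re

WATER = 18.010565
PROTON = 1.007276
RESIDUE = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276,
    "V": 99.06841, "T": 101.04768, "C": 103.00919, "L": 113.08406,
    "I": 113.08406, "N": 114.04293, "D": 115.02694, "Q": 128.05858,
    "K": 128.09496, "E": 129.04259, "M": 131.04049, "H": 137.05891,
    "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}


def tryptic_digest(sequence: str, missed_cleavages: int = 0) -> list[str]:
    """Cleave after K or R unless the next residue is P."""
    pieces = [p for p in re.split(r"(?<=[KR])(?!P)", sequence) if p]
    peptides = []
    for start in range(len(pieces)):
        for end in range(start, min(start + missed_cleavages + 1, len(pieces))):
            peptides.append("".join(pieces[start:end + 1]))
    return peptides


def mh_plus(peptide: str) -> float:
    """Monoisotopic [M+H]+ of an unmodified peptide."""
    return sum(RESIDUE[aa] for aa in peptide) + WATER + PROTON


def pmf_matches(observed, sequence, tol_ppm=100.0, mass_range=(600.0, 3000.0)):
    """Pair each observed peak with theoretical peptides within tol_ppm."""
    lo, hi = mass_range
    theoretical = {pep: mh_plus(pep) for pep in tryptic_digest(sequence)}
    matches = []
    for mz in observed:
        if not lo <= mz <= hi:
            continue  # outside the allowed window: not used in the query
        for pep, mass in theoretical.items():
            if abs(mz - mass) / mass * 1e6 <= tol_ppm:
                matches.append((mz, pep))
    return matches


def coverage_percent(sequence: str, peptides) -> float:
    """Percentage of residues covered by at least one matched peptide."""
    covered = [False] * len(sequence)
    for pep in set(peptides):
        start = sequence.find(pep)
        while start != -1:
            for k in range(start, start + len(pep)):
                covered[k] = True
            start = sequence.find(pep, start + 1)
    return 100.0 * sum(covered) / len(sequence)


protein = "MKWVTFISLLFLFSSAYSRGVFRRDTHK"  # arbitrary test sequence
# One peak measured 50 ppm high, plus a small peptide below the 600 Da window.
observed = [mh_plus("WVTFISLLFLFSSAYSR") * (1 + 50e-6), mh_plus("GVFR")]
hits = pmf_matches(observed, protein)
print(hits)
print(coverage_percent(protein, [pep for _, pep in hits]))
```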

Tandem MS (MS/MS), on the other hand, reveals additional information on peptide sequences. Peptide samples can be separated by one- or multi-dimensional LC and subjected to tandem mass spectrometry for peptide sequencing. Database search parameters include the ion types selected, the method of mass calculation, the peptide charge state and the parent ion tolerance. The ion types used to generate theoretical data depend on the instrument used for fragmentation: ion trap, quadrupole and Q-TOF mass spectrometers produce b and y ions, whereas instruments using high energy collision induced dissociation (CID) can generate a, c, x and z ions as well. Peptide masses can be calculated as monoisotopic or average masses, because mass spectrometers do not measure the masses of peptides but rather their m/z values (Veenstra and Yates, 2006). The monoisotopic mass of a peptide is the mass of the isotopic peak in which every element is present as its most abundant isotope, whereas the average mass uses the abundance-weighted average of all isotopic masses of each element. High resolution mass spectrometers can use monoisotopic masses, whereas with (low resolution) ion traps it is better to use average masses. In high resolution instruments, the peptide ion charge state can be determined from the isotopic distribution pattern observed in the MS spectrum; with low resolution instruments the exact charge cannot be determined, although singly and multiply charged ions can easily be distinguished. The parent ion tolerance determines which peptides from the sequence database are scored against the experimental spectrum, and specifying the enzyme used for digestion further reduces the number of candidate peptides, which shortens the search time significantly.
Modifications such as those introduced by reduction and alkylation of gel-based proteins are made prior to analysis; other modifications, categorized as static or variable, are specified as parameters of the database search. A static modification applies to every occurrence of a residue, whereas a variable modification may or may not be present on a given residue (Veenstra and Yates, 2006).
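Several of the search parameters listed above (charge state from isotope spacing, monoisotopic precursor mass, parent ion tolerance, static and variable modifications) can be combined into a small candidate-filtering step. The sketch below assumes a high resolution instrument, treats carbamidomethylation of cysteine as a static modification and methionine oxidation as a variable one; these modification choices, the candidate peptides and the 10 ppm tolerance are illustrative assumptions. Real search engines go on to score the fragment spectrum of each surviving candidate.

```python
PROTON = 1.007276
WATER = 18.010565
ISOTOPE_SPACING = 1.003355   # 13C - 12C mass difference (Da)
CARBAMIDOMETHYL = 57.021464  # static: applied to every Cys
OXIDATION = 15.994915        # variable: each Met may or may not carry it

RESIDUE = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276,
    "V": 99.06841, "T": 101.04768, "C": 103.00919, "L": 113.08406,
    "I": 113.08406, "N": 114.04293, "D": 115.02694, "Q": 128.05858,
    "K": 128.09496, "E": 129.04259, "M": 131.04049, "H": 137.05891,
    "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}


def charge_from_isotopes(mz_peaks: list[float]) -> int:
    """Charge from the spacing of adjacent isotope peaks (about 1.003/z)."""
    return round(ISOTOPE_SPACING / (mz_peaks[1] - mz_peaks[0]))


def neutral_mass(mono_mz: float, z: int) -> float:
    """Neutral monoisotopic mass of an [M + zH]z+ precursor."""
    return z * (mono_mz - PROTON)


def peptide_mass(peptide: str, n_oxidized: int = 0) -> float:
    mass = sum(RESIDUE[aa] for aa in peptide) + WATER
    mass += CARBAMIDOMETHYL * peptide.count("C")  # static modification
    return mass + OXIDATION * n_oxidized          # variable modification


def candidates(precursor_mass, peptides, tol_ppm=10.0, max_variable=2):
    """Peptides (with a number of oxidized Met) within the parent ion tolerance."""
    hits = []
    for pep in peptides:
        # Try 0, 1, ... oxidized methionines, up to the allowed maximum.
        for n_ox in range(min(pep.count("M"), max_variable) + 1):
            theo = peptide_mass(pep, n_ox)
            if abs(precursor_mass - theo) / theo * 1e6 <= tol_ppm:
                hits.append((pep, n_ox))
    return hits


# Simulated doubly charged precursor of ACMDEK carrying one oxidized Met.
true_mass = peptide_mass("ACMDEK", 1)
mono = (true_mass + 2 * PROTON) / 2
peaks = [mono, mono + ISOTOPE_SPACING / 2]
z = charge_from_isotopes(peaks)
print(z, candidates(neutral_mass(mono, z), ["ACMDEK", "GGGGGGK"]))
```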

For very complex protein mixtures (more than 10,000 proteins), fragment-ion matching is used instead of PMF. Peptides from a protein digest are fragmented in the mass spectrometer, and the resulting fragment-ion spectra are searched against a database to identify the precursor peptide. This approach is particularly useful because, when a peptide sequence is unique, the protein of origin can be identified from the MS/MS fragmentation of that peptide alone. Tandem mass spectrometry has been found to have a higher success rate in protein identification than MS-based identification (Gulcicek et al., 2005). Another database search method is the “sequence tag” approach, in which short amino acid tags read from the tandem MS spectrum are searched against database peptides generated by the same enzymatic cleavage. For a protein with no previous sequence information, de novo interpretation at the tandem MS level is useful (Veenstra and Yates, 2006). Since all fragment ions and their masses can be predicted for a given peptide sequence, de novo sequencing works in reverse, assembling the amino acid sequence of a peptide from its spectral pattern.
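The idea behind sequence tags and de novo interpretation is that the mass difference between consecutive ions of one fragment series equals the mass of a single amino acid residue. The sketch below reads such tags from a list of fragment m/z values, assuming that all peaks are singly charged members of one ion series (here b ions) and using a fixed 0.02 Da tolerance; real spectra contain mixed ion types, charge states and noise, so this only illustrates the principle. Leucine and isoleucine have identical masses and are reported as L; the peptide SAMPLER is a made-up example.

```python
# Monoisotopic residue masses (Da); "L" stands for leucine or isoleucine.
RESIDUE = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276,
    "V": 99.06841, "T": 101.04768, "C": 103.00919, "L": 113.08406,
    "N": 114.04293, "D": 115.02694, "Q": 128.05858, "K": 128.09496,
    "E": 129.04259, "M": 131.04049, "H": 137.05891, "F": 147.06841,
    "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}
PROTON = 1.007276


def read_tags(fragment_mz: list[float], tol: float = 0.02) -> list[str]:
    """Split a fragment ladder into runs of residues read from mass gaps."""
    peaks = sorted(fragment_mz)
    tags, current = [], []
    for low, high in zip(peaks, peaks[1:]):
        gap = high - low
        aa, mass = min(RESIDUE.items(), key=lambda item: abs(gap - item[1]))
        if abs(gap - mass) <= tol:
            current.append(aa)
        else:  # gap is not a single residue: close the current tag
            if current:
                tags.append("".join(current))
            current = []
    if current:
        tags.append("".join(current))
    return tags


# b1..b6 of the hypothetical peptide SAMPLER.
b_ions = []
running = PROTON
for aa in "SAMPLE":
    running += RESIDUE[aa]
    b_ions.append(running)

print(read_tags(b_ions))                   # complete ladder
print(read_tags(b_ions[:2] + b_ions[3:]))  # b3 missing: the tag is broken
```

Read from ascending b ions, tags run N- to C-terminal; a ladder of y ions would give the sequence in reverse.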

Several MS search engines have been developed to identify peptides by searching experimental mass spectra against in silico digested protein databases. SEQUEST (BioWorks), MASCOT and ProteinProspector are among the algorithms used for peptide identification. Peptides matching a protein entry are clustered together and reported as a protein hit (Palcy and Chevet, 2006). The database score is computed with a scoring function that measures the similarity between the experimental spectrum and the peptide pattern predicted by theoretical fragmentation. SEQUEST, one of the most commonly used programs, calculates a cross-correlation score (XCorr) for all queried peptides. In addition, a derived score (ΔCn), which measures the relative difference between the best and second best XCorr, is computed and is useful for discriminating between correct and incorrect identifications. MASCOT uses a probability-based score that estimates the probability of a match occurring by chance, given the number of peaks in the experimental spectrum and the distribution of predicted ions. With SEQUEST, manual review of the data is needed to avoid accepting false positives. Because MASCOT's probability-based scoring assigns a score to every identification, it is up to the researcher to decide which protein identifications are significant (Gulcicek et al., 2005).
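The SEQUEST ΔCn and the MASCOT score can be illustrated with simple arithmetic. ΔCn is commonly computed as (XCorr1 - XCorr2)/XCorr1, and MASCOT expresses the probability P that a match is random as the score -10·log10(P). The sketch below only transforms already computed values (the inputs are made up); it does not reproduce either program's spectrum-matching algorithm.

```python
import math


def delta_cn(xcorr_scores: list[float]) -> float:
    """Relative gap between the best and second-best XCorr for one spectrum."""
    best, second = sorted(xcorr_scores, reverse=True)[:2]
    return (best - second) / best


def probability_to_score(p_random: float) -> float:
    """MASCOT-style score: -10 * log10(probability that the match is random)."""
    return -10.0 * math.log10(p_random)


# Hypothetical XCorr values for the top candidates of one spectrum.
print(delta_cn([3.2, 2.4, 1.1]))
# A 1-in-1000 chance of a random match corresponds to a score of about 30.
print(probability_to_score(0.001))
```

A large ΔCn means the best candidate stands clearly apart from the runner-up, whereas a higher MASCOT score corresponds to a lower probability of a chance match.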

Alternatively, spectral libraries (instead of theoretical spectra) can be used for database searches. Peptide mass spectral libraries (of MS/MS spectra) provide a standardized resource for robust peptide identification, since they are based on actual physical measurements of peptides identified in previous experiments (Kienhuis and Geerdink, 2002). This approach has several advantages over the traditional one. First, it is fast, because it searches only experimental spectra rather than all possible peptide sequences generated from the genomic sequence. Second, peptides and proteins are identified with higher sensitivity, because an experimental spectrum is more likely to match a library spectrum well (i.e. with higher confidence) than a theoretically predicted spectrum. Finally, spectral libraries can provide a common reference point that allows researchers to objectively analyze and compare datasets generated in different experiments (Kienhuis and Geerdink, 2002).
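Spectral library searching compares a query spectrum with stored experimental spectra rather than with predicted ones. A widely used similarity measure is a normalized dot product (cosine similarity) over binned peaks. The sketch below assumes 1 m/z-unit bins and square-root intensity scaling, which are common but not universal choices; the spectra and entry names are invented for illustration.

```python
import math


def to_vector(spectrum, bin_width=1.0):
    """Bin (m/z, intensity) pairs; square roots damp the dominant peaks."""
    vector = {}
    for mz, intensity in spectrum:
        key = int(mz // bin_width)
        vector[key] = vector.get(key, 0.0) + math.sqrt(intensity)
    return vector


def dot_product(a, b, bin_width=1.0):
    """Normalized dot product (cosine similarity) of two binned spectra."""
    va, vb = to_vector(a, bin_width), to_vector(b, bin_width)
    shared = sum(v * vb.get(k, 0.0) for k, v in va.items())
    norm_a = math.sqrt(sum(v * v for v in va.values()))
    norm_b = math.sqrt(sum(v * v for v in vb.values()))
    return shared / (norm_a * norm_b) if norm_a and norm_b else 0.0


def best_match(query, library):
    """Return (entry name, score) of the most similar library spectrum."""
    scores = {name: dot_product(query, spec) for name, spec in library.items()}
    name = max(scores, key=scores.get)
    return name, scores[name]


library = {
    "PEPTIDE_A/2": [(175.1, 40.0), (262.1, 100.0), (375.2, 65.0)],
    "PEPTIDE_B/2": [(147.1, 80.0), (248.2, 30.0), (500.3, 90.0)],
}
query = [(175.12, 35.0), (262.14, 110.0), (375.21, 60.0), (611.0, 5.0)]
print(best_match(query, library))
```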

LC–MS Based Quantitative Assessment

Mass spectrometry identifies unknown biomolecules based on their accurate mass and fragmentation pattern. For proteomic studies, however, this is possible only if the sample is a simple mixture or has previously been divided into simpler parts by high resolution separation methods. Liquid chromatography interfaced with tandem mass spectrometry has been shown to be well suited for such gel-free quantitation. However, LC-MS data depend on factors such as instrument sensitivity, detection coverage, dynamic range, mass accuracy and resolution (Listgarten and Emili, 2005). One high resolution method that accommodates most of the separation methodologies mentioned above is nano-liquid chromatography (nano-LC). While proteins present at reasonable levels are easily detected, measuring low quantities of proteins in complex mixtures has always been challenging. To achieve maximum sensitivity, the concentration of low quantity proteins must be within the detection limits of the instrument, which calls for small volumes. This requirement led to the development of nano-LC, in which LC pumps deliver samples at flow rates of nL/min to separation columns with inner diameters below 100 μm (Qian et al., 2006). Samples can additionally be enriched for low-abundance proteins by depletion, pre-fractionation and concentration techniques prior to nano-LC-MS detection. This is highly beneficial for samples whose protein concentrations span a range of 10^5–10^10 or more (tissue, plasma, serum, etc.). Nano-LC offers several advantages, such as low sample volumes, improved electrospray efficiency due to the formation of small droplets, high efficiency packed columns that can be operated with MS-friendly solvent systems, and reproducible delivery of solvents to nano columns (Qian et al., 2006). However, certain disadvantages limit its potential as a high throughput technique.
These include a less robust LC-MS interface, since nano-flow components and the connection between the analytical column and the nanospray emitter (5 μm) are frequently prone to failure; small-volume leaks that go undetected; dispersion due to dead volume between components; and, of course, the longer runs needed for better separation (Qian et al., 2006). Overall, in the absence of a single platform for global profiling of a proteome, nano-LC has the upper hand in maximizing the number of identifications reported, provided samples are pre-fractionated prior to analysis. Importantly, other new techniques such as fast LC, gas phase separations and improved nano-ESI interfaces also hold promise for discovery applications (Qian et al., 2006).
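The benefit of narrow columns can be made concrete with the usual geometric scaling rule: at constant linear velocity, flow rate scales with the square of the column inner diameter, and for the same injected amount the peak concentration reaching the detector scales roughly with the inverse square of the diameter. The sketch below assumes columns of equal length and packing and ideal chromatographic behavior, so its numbers are order-of-magnitude estimates rather than measured gains.

```python
def scaled_flow_rate(flow_ref: float, id_ref_um: float, id_new_um: float) -> float:
    """Flow rate that keeps the linear velocity constant on a column of new inner diameter."""
    return flow_ref * (id_new_um / id_ref_um) ** 2


def concentration_gain(id_ref_um: float, id_new_um: float) -> float:
    """Approximate increase in peak concentration for the same injected amount."""
    return (id_ref_um / id_new_um) ** 2


# Conventional 4.6 mm i.d. column at 1 mL/min (1000 uL/min) scaled to a 75 um nano column.
flow_ul_min = scaled_flow_rate(1000.0, 4600.0, 75.0)
print(f"{flow_ul_min * 1000:.0f} nL/min")  # falls within the nano-LC range quoted earlier
print(f"~{concentration_gain(4600.0, 75.0):.0f}x concentration gain")
```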

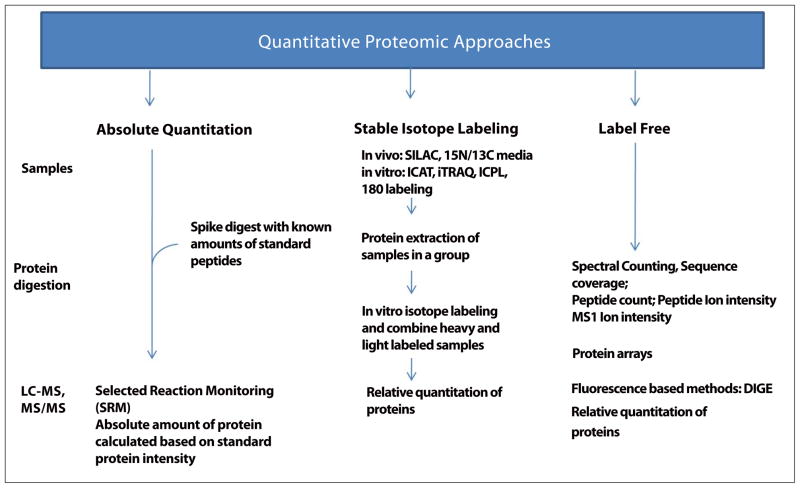

Because mass spectrometry is not intrinsically quantitative, strategies have been developed for differential mass-labeling of analytes prior to mass spectrometry. Although these methods are “gold standards” for protein quantitation, they have not been widely used for large-scale multiplexed analyses, mainly because of their relatively high cost, the limited availability of different mass-coded labels and the frequent under-sampling associated with MS/MS. Moreover, because peptide identification typically precedes quantification, numerous peptides are identified whose abundance does not change between samples. As a result, much instrument time and data analysis effort is spent on proteins that may have little biological significance. Commonly used methods for absolute quantification and for relative quantification (using stable isotope labeling and label-free methods) are discussed below (Fig. 2).

Figure 2.

Different approaches for quantitative assessment of proteins in biological samples.

Absolute quantitation

Absolute quantitation of proteins, commonly performed with the AQUA strategy, is achieved by adding internal standards of known quantity to a protein digest; the signals of these standards are then compared with the mass spectrometric signals of the corresponding peptides in the sample. The method uses synthetic peptides carrying a differential isotopic label, which are spiked into the sample prior to LC-MS. These synthetic peptides match the experimentally observed sequence but are synthesized with heavy analogues of amino acids. Quantification is achieved by calculating the intensity ratio of the endogenous (light) peptide to the reference (heavy) peptide; the two share the same physicochemical properties, including chromatographic elution, ionization efficiency and fragmentation pattern, but are distinguished by their mass difference (Pan et al., 2009). While this approach is attractive for validation purposes, it has a number of limitations: very few proteins can be quantified, the amount of labeled standard needs to be determined before spiking, and multiple isobaric peptides in the mixture can cause ambiguity. Some of these issues can be resolved by the selected reaction monitoring (SRM) method, which compares the intensities of precursor and fragment ions of the “heavy” standard with those of the “light” peptides of a test protein (Raghothama, 2007). The combination of peptide retention time, mass and fragment mass removes ambiguities in peptide assignment and generally broadens the quantification range (Bantscheff et al., 2007). SRM has been applied to studying low-abundance yeast proteins involved in gene silencing (Bantscheff et al., 2007). A variation of SRM is multiple reaction monitoring (MRM), which uses multiple peptides for multiplexed quantitation. Samples include light- and heavy-labeled internal standard peptides, so that each peptide pair is treated in an identical fashion (Okulate et al., 2007).
In addition to individual tryptic peptides, a recombinant protein made up of many tryptic peptides can be used as a standard protein. While prior information on the protein helps decide which masses to look for, it is important to realize that the amount of protein determined in an experiment may not reflect its true expression level in the cell (Bantscheff et al., 2007). Protein standard absolute quantification (PSAQ) is another method, which uses an intact, stable isotope labeled protein as the internal standard for quantification. PSAQ has been applied successfully to quantify staphylococcal superantigen toxins in urine and drinking water and to determine absolute levels of alcohol dehydrogenase in human liver samples (Bantscheff et al., 2007). Metal coded tags (MeCAT) are yet another method: a metal bound by the MeCAT reagent is attached to a protein or other biomolecule and detected by elemental mass spectrometry (inductively coupled plasma mass spectrometry, ICP-MS) for absolute quantification (Pan et al., 2009).
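The AQUA calculation itself is a simple ratio: the endogenous amount equals the light/heavy signal ratio multiplied by the known amount of spiked heavy peptide. The sketch below applies this to one peak pair and then to several SRM/MRM transitions of the same peptide pair, combining them by the median ratio, which is one robust choice among several. The peak areas and the 50 fmol spike are invented for illustration.

```python
from statistics import median


def aqua_amount(light_area: float, heavy_area: float, spiked_fmol: float) -> float:
    """Endogenous peptide amount from one light/heavy peak-area ratio."""
    return light_area / heavy_area * spiked_fmol


def aqua_amount_from_transitions(transitions, spiked_fmol):
    """Median light/heavy ratio across transitions, given (light, heavy) peak areas."""
    ratios = [light / heavy for light, heavy in transitions]
    return median(ratios) * spiked_fmol


# Three hypothetical transitions (light area, heavy area); 50 fmol heavy spike.
transitions = [(2.0e5, 1.0e5), (3.1e5, 1.5e5), (0.9e5, 0.5e5)]
print(aqua_amount(2.0e5, 1.0e5, 50.0))
print(aqua_amount_from_transitions(transitions, 50.0))
```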

Stable isotope labeling

For the past several decades, stable isotope labeling has been recognized as an accurate method for MS-based protein quantification. Several methods exist, and they differ mainly in the way heavy labels are introduced into a peptide or protein. Based on how the labels are introduced into the analytes, isotope labeling is categorized as metabolic (in vivo or in culture) or chemical (in vitro).

Metabolic labeling relies on growing cells in culture media containing isotopically labeled amino acids or nutrients, so that proteins are labeled in vivo as the cells grow. Relative quantitation is determined by comparing the “heavy” and “light” labeled cells. Peptides of identical sequence from the two samples carry different masses and therefore appear in the mass spectrum as peak pairs separated by a distinct mass shift. There are several kinds of metabolic labeling.

Stable isotope labeling by means of metabolites (15N or 13C) is achieved by enriching the media with 15N ammonium salts, to replace all 14N atoms, or with 13C glucose, to replace 12C atoms. The “light” and “heavy” samples are combined in a 1:1 ratio so that both are processed together and experimental variation between them is minimized. The 14N/15N or 12C/13C labeled peptides are identified in mass spectra as doublet ion clusters, separated by the mass shift introduced by the heavy nitrogen or carbon isotopes. Peak intensities or peak areas are compared to quantify the protein samples relative to each other. Because the number of N or C atoms varies with the length and composition of a peptide, the heavy isotope produces a different mass shift for each peptide, which makes the mass spectral data highly complex. 15N labeling has been used for simpler organisms such as bacteria and yeast, and has also been applied to A. thaliana and mammalian cell culture (Gulcicek et al., 2005).
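The peptide-dependent mass shift of 15N labeling follows directly from counting nitrogen atoms. The sketch below, using hypothetical helper names, assumes complete (100%) 15N incorporation and uses the standard nitrogen content of each amino acid residue; at charge z the doublet spacing on the m/z axis is the mass shift divided by z.

```python
# Nitrogen atoms per amino acid residue (standard residue compositions).
N_ATOMS = {
    "G": 1, "A": 1, "S": 1, "P": 1, "V": 1, "T": 1, "C": 1, "L": 1, "I": 1,
    "D": 1, "E": 1, "M": 1, "F": 1, "Y": 1, "N": 2, "Q": 2, "K": 2, "W": 2,
    "H": 3, "R": 4,
}
DELTA_15N = 0.997035  # Da, mass difference between 15N and 14N


def n15_shift(peptide: str) -> float:
    """Mass shift (Da) of a fully 15N-labeled peptide relative to its 14N form."""
    return sum(N_ATOMS[aa] for aa in peptide.upper()) * DELTA_15N


def doublet_spacing(peptide: str, charge: int) -> float:
    """m/z spacing between the 14N and 15N peaks of a doublet at a given charge."""
    return n15_shift(peptide) / charge


# Adding a single Arg (4 N atoms) changes the shift by about 4 Da.
for pep in ("PEPTIDE", "PEPTIDER"):
    print(pep, round(n15_shift(pep), 3), round(doublet_spacing(pep, 2), 3))
```

Because every peptide has its own spacing, software has to predict the partner peak per sequence, which is one reason the resulting spectra are harder to interpret than SILAC data.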

Stable isotope labeling by amino acids in cell culture (SILAC) is another labeling approach, which uses an amino acid added to the culture media during cell growth as the labeling precursor (Monteoliva and Albar, 2004). Originally developed to generate mass-tagged peptides for accurate and specific protein identification via peptide mass fingerprinting, this method is now established for quantitative proteomics. The approach makes tandem MS interpretation much easier, because the mass differences between labeled and unlabeled peptides are easily predictable (Monteoliva and Albar, 2004). Unlike 14N/15N peptide pairs, peptides in SILAC exhibit mass differences defined by the number and type of isotopically labeled amino acids they contain. First described for a yeast model, SILAC has been applied to studying protein-protein interactions, identifying post-translational modifications and assessing protein expression levels (Monteoliva and Albar, 2004). To achieve complete labeling of the proteome, generally only amino acids that are essential to the organism, or for which it is genetically auxotrophic, are used. Relatively abundant amino acids such as arginine, leucine and lysine are employed to produce a high number of labeled peptides, thus providing information on multiple peptide pairs. The isotopes generally used are 13C and 2H. Being a simple process, SILAC has also been used to identify and analyze post-translational modifications and signal transduction events in the yeast pheromone pathway (Monteoliva and Albar, 2004).
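Because SILAC mass differences depend only on how many labeled residues a peptide contains, they can be predicted from the sequence. The sketch below uses hypothetical names and an assumed example label set (13C6-Arg, 13C6/15N4-Arg, 13C6-Lys and 2H3-Leu, built from standard isotope mass differences); it is not tied to any particular SILAC kit.

```python
from __future__ import annotations

# Isotope mass differences (Da).
C13_C12 = 1.0033548
N15_N14 = 0.9970349
H2_H1 = 1.0062767

# Assumed example label set: residue code and mass added per labeled residue.
SILAC_LABELS = {
    "13C6-Arg": ("R", 6 * C13_C12),
    "13C6,15N4-Arg": ("R", 6 * C13_C12 + 4 * N15_N14),
    "13C6-Lys": ("K", 6 * C13_C12),
    "2H3-Leu": ("L", 3 * H2_H1),
}


def silac_shift(peptide: str, labels: list[str]) -> float:
    """Predicted heavy-light mass difference (Da) for the labels used.

    Pass at most one label per residue type; each occurrence of a labeled
    residue in the peptide adds that label's mass increment.
    """
    total = 0.0
    for name in labels:
        residue, delta = SILAC_LABELS[name]
        total += peptide.count(residue) * delta
    return total


print(round(silac_shift("LVNELTEFAK", ["13C6-Lys"]), 4))  # one labeled Lys
print(round(silac_shift("LVNELTEFAK", ["2H3-Leu"]), 4))   # two labeled Leu
```

With Arg and Lys labels, every tryptic peptide (except the protein C terminus) carries at least one label, which is why these two residues are popular choices.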

Several studies have effectively applied metabolic labeling with stable isotopes to comparative proteomic investigations. Examples include human HeLa cells labeled with 13C6-Arg and 2H3-Leu followed by LC-MS/MS analysis, S. cerevisiae cells labeled with 2H10-Leu for protein identification by MALDI, and mice fed a 3C2Gly-labeled diet to map peptides to proteins based on mass and leucine content information (Beynon and Pratt, 2005). SILAC has been successful for labeling mammalian cell cultures, where isotope-labeled essential amino acids are fully incorporated into the proteome, for plant cell cultures with 70%–80% incorporation, and for auxotrophic yeast mutants (Engelsberger et al., 2006). Areas of quantitative proteomics that have benefited greatly from SILAC include the formation of signal-dependent protein complexes, modification-dependent protein-protein interaction screens and the dynamics of signal-dependent phosphorylation events (Engelsberger et al., 2006). Major advantages over other stable isotope labeling strategies include: labels are biosynthesized and present in live cells; labeling is compatible with cell culture conditions; no affinity purification step is needed; cells from the two states can be mixed into one sample, providing an internal control that reveals real proteomic differences independent of variability from processing steps; and side reactions are absent, because the labels are incorporated by highly specific enzymes. Limitations include tagging of only a small subset of tryptic peptides, the need for substantial isotope incorporation for effective labeling, and experimental variability introduced during the labeling process (Beynon and Pratt, 2005).
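Incomplete incorporation, such as the 70%–80% reported for plant cell cultures, biases heavy/light ratios downward because unlabeled protein from the heavy sample adds to the light peak. The sketch below illustrates a correction under a simplified model that is an assumption here, not a published protocol: each peptide carries one labeled residue, the incorporation fraction f is estimated from an unmixed heavy-grown sample, and the unincorporated fraction appears entirely at the light mass. Function names are hypothetical.

```python
def incorporation(heavy: float, light: float) -> float:
    """Label incorporation estimated from an unmixed heavy-grown sample."""
    return heavy / (heavy + light)


def corrected_ratio(heavy: float, light: float, f: float) -> float:
    """Heavy/light abundance ratio corrected for incorporation fraction f.

    Simplified model (assumption): a fraction (1 - f) of the heavy sample's
    peptide stays unlabeled and adds to the light peak, so
      light_obs = a + (1 - f) * b   and   heavy_obs = f * b.
    Solving for b / a gives heavy / (f * light - (1 - f) * heavy).
    """
    denom = f * light - (1.0 - f) * heavy
    if denom <= 0:
        raise ValueError("intensities inconsistent with this incorporation level")
    return heavy / denom


f = incorporation(heavy=80.0, light=20.0)  # 80% incorporation
# A true 1:1 mixture then appears as heavy 0.8, light 1.2.
print(0.8 / 1.2)                     # naive ratio, underestimates
print(corrected_ratio(0.8, 1.2, f))  # approximately 1.0 after correction
```

Peptides with several labeled residues also produce partially labeled species, which this one-label model does not handle.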

For samples that are less amenable to metabolic labeling, chemical reactions have been exploited to introduce isotope-encoded tags into proteins or peptides. The chosen chemical tag targets a specific functional group of an amino acid residue, to which it becomes covalently bonded. The choice of labeling method depends on factors such as sample complexity, protein quantity and the downstream instrumentation employed. Chemical tagging approaches can be categorized by the group they target: sulfhydryl groups (ICAT), primary amines (iTRAQ and ICPL) and peptide C termini (18O labeling).

Isotope-coded affinity tags (ICAT), a widely accepted quantitative technique, allows protein quantification by means of light or heavy isotope tags that bind to the sulfhydryl groups of cysteine residues; the tagged peptides are then identified by microcapillary LC-ESI-MS/MS (Monteoliva and Albar, 2004). The isotope tags are typically incorporated chemically after protein extraction: control and experimental samples are derivatized with the light and heavy ICAT reagents, respectively, followed by trypsin digestion (Monteoliva and Albar, 2004). The ICAT reagent has three components: a biotin tag, an oxyethylene linker region and a thiol-specific iodoacetyl group that derivatizes Cys residues in proteins. The biotin tag allows the labeled peptides to be isolated by avidin affinity chromatography. Labeled peptides are fractionated by strong cation exchange liquid chromatography, followed by RP-HPLC and tandem mass spectrometry to identify and quantify ICAT peptide pairs (Gulcicek et al., 2005). ICAT is analogous to the two-dye approach of microarrays or to DIGE protein expression analysis, since changes in expression are determined from differences in observed intensity. ICAT technology has reportedly been applied to several proteomic studies, including total proteome characterization of yeast and Pseudomonas aeruginosa (Monteoliva and Albar, 2004). Because labeling depends on the sulfhydryl groups of cysteine residues, the technique is less suitable for proteins that lack Cys residues. The limitation arises at the mass spectra and database search levels, since proteins that lack cysteine cannot be included in the analysis (Monteoliva and Albar, 2004). The advantage, however, is the enrichment of Cys-containing peptides, which reduces sample complexity before MS analysis (Monteoliva and Albar, 2004).
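Both the enrichment and the cysteine limitation of ICAT can be seen with an in silico digest. The sketch below is illustrative: the helper names are hypothetical, the trypsin rule is the simplified "cleave after K or R unless followed by P", and the per-cysteine mass difference assumes a cleavable ICAT reagent with a 13C9 heavy linker (the original reagent used d0/d8 linkers instead).

```python
from __future__ import annotations

import re

C13_C12 = 1.0033548
# Assumption: cleavable ICAT, 13C9 heavy vs 12C light, about 9.03 Da per labeled Cys.
ICAT_DELTA = 9 * C13_C12


def tryptic_digest(protein: str) -> list[str]:
    """Simplified trypsin rule: cleave after K or R unless followed by P."""
    return [p for p in re.split(r"(?<=[KR])(?!P)", protein) if p]


def icat_pairs(protein: str) -> list[tuple[str, int, float]]:
    """Cys-containing tryptic peptides with their Cys count and heavy-light shift."""
    pairs = []
    for pep in tryptic_digest(protein):
        n_cys = pep.count("C")
        if n_cys:
            pairs.append((pep, n_cys, n_cys * ICAT_DELTA))
    return pairs


print(icat_pairs("MKPAGCKLLEPRTVCCRDDK"))  # only the Cys peptides survive enrichment
print(icat_pairs("MKLLEPRDDK"))            # [] : a Cys-free protein is invisible
```

Only two of the four tryptic peptides of the first (made-up) sequence are retained, which shows both the reduction in sample complexity and the loss of information for Cys-free regions.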

Another strategy in quantitative MS-based proteomics is the derivatization of primary amines, including the amino termini of proteins and peptides. Two techniques currently established in this category are iTRAQ and ICPL:

Isobaric tags for relative and absolute quantification (iTRAQ) specifically aim at multiplexing samples without generating clusters of peptide pairs. The technique combines amine-modifying labeling chemistry with an MS/MS-based quantification mode. iTRAQ reagents consist of three principal components: a reporter group based on N-methylpiperazine, a carbonyl balance group and a peptide-reactive group (an N-hydroxysuccinimide ester) (McMaster, 2005). Through selective inclusion of 13C, 15N and 18O atoms, the reporter and balance groups are combined so that every reagent has the same total mass; differentially labeled peptides therefore appear as single peaks in MS spectra and are quantified from the reporter ions released in the second stage of mass analysis. Since iTRAQ-labeled peptides must be analyzed by tandem MS, the strategy relies entirely on reporter ions that can be observed in MS/MS scans. Because the reporter ions appear in the low m/z range, below the low-mass cutoff of conventional ion trap instruments operated in CID mode, iTRAQ cannot be used with these instruments.
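Reporter-ion quantification amounts to reading peak intensities at fixed low m/z positions in each MS/MS scan. The sketch below uses hypothetical function names and the commonly quoted 4-plex reporter m/z values (114.1112 to 117.1150), which should be checked against the reagent documentation; the 0.01 tolerance assumes a high-resolution analyzer, and the isotopic impurity correction that real workflows apply is omitted.

```python
# Commonly quoted monoisotopic m/z of the iTRAQ 4-plex reporter ions (assumed).
ITRAQ4 = {"114": 114.1112, "115": 115.1083, "116": 116.1116, "117": 117.1150}


def reporter_intensities(peaks, tol=0.01):
    """Most intense peak within +/- tol of each reporter m/z in one MS/MS scan.

    peaks is a list of (m/z, intensity) pairs; channels with no peak get 0.0.
    """
    return {
        ch: max((i for mz, i in peaks if abs(mz - target) <= tol), default=0.0)
        for ch, target in ITRAQ4.items()
    }


def relative_to(reporters, reference="114"):
    """Express every channel relative to a reference channel."""
    ref = reporters[reference]
    if ref <= 0:
        raise ValueError("reference channel has no signal")
    return {ch: v / ref for ch, v in reporters.items()}


scan = [(114.1110, 1000.0), (115.1081, 2000.0), (116.1119, 500.0),
        (117.1150, 1000.0), (175.1190, 8000.0)]  # last peak: a peptide fragment
print(relative_to(reporter_intensities(scan)))
# {'114': 1.0, '115': 2.0, '116': 0.5, '117': 1.0}
```

All four samples are read from one fragment spectrum, which is what lets iTRAQ multiplex without splitting the precursor signal into peak pairs.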

Isotope-coded protein labeling (ICPL) uses an isotope-coded N-nicotinoyloxy-succinimide tag to incorporate amine-reactive tags into intact proteins. The samples are reduced and alkylated before derivatization with ICPL; however, tryptic cleavage of ICPL-labeled proteins occurs only C-terminal to Arg residues and not at the modified Lys residues. ICPL has been used for MS-based quantitative proteomics in various differential analysis studies, including exposure of rat hepatoma cells to a carcinogenic toxin and the Halobacterium membrane proteome (McMaster, 2005).
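The consequence of blocking Lys can be illustrated with an in silico digest. In the sketch below (hypothetical names), trypsin cleaves only after Arg, and because labeling happens on the intact protein, labels sit on Lys side chains and on the protein N terminus only, assumed here to be unblocked. The per-label mass difference assumes a 13C6 heavy versus 12C6 light reagent.

```python
from __future__ import annotations

import re

C13_C12 = 1.0033548
# Assumption: 13C6 heavy vs 12C6 light ICPL reagent, about 6.02 Da per label.
ICPL_DELTA = 6 * C13_C12


def arg_only_digest(protein: str) -> list[str]:
    """Trypsin on an ICPL-labeled protein: Lys is blocked, so cleave only after R (not before P)."""
    return [p for p in re.split(r"(?<=R)(?!P)", protein) if p]


def icpl_peptides(protein: str) -> list[tuple[str, int, float]]:
    """Peptides with their ICPL label count and heavy-light mass shift.

    Labeling precedes digestion, so only Lys side chains and the protein
    N terminus (assumed free) carry a tag; new peptide N termini do not.
    """
    result = []
    for idx, pep in enumerate(arg_only_digest(protein)):
        n_labels = pep.count("K") + (1 if idx == 0 else 0)
        result.append((pep, n_labels, n_labels * ICPL_DELTA))
    return result


for pep, n, shift in icpl_peptides("MKPAGCKLLEPRTVCCRDDK"):
    print(pep, n, round(shift, 3))
```

Under this model the made-up sequence yields a longer N-terminal peptide than plain trypsin would, and a peptide without Lys (here TVCCR) carries no tag, so it gives no heavy/light pair to quantify.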

18O labeling: peptide C termini can be selectively derivatized by incorporating heavy oxygen atoms (18O) using serine proteases in heavy-labeled water, either during protein digestion or after completion of amide bond hydrolysis. Trypsin can exchange both C-terminal carboxyl oxygens, so a fully labeled peptide is 4 Da heavier than its unlabeled counterpart. For relative quantification, two samples digested in parallel in 18O-water and 16O-water are mixed in a 1:1 ratio prior to chromatographic separation and MS analysis. Relative abundance is determined by comparing the signal intensities or peak areas of the 16O/18O-encoded peptide pairs (McMaster, 2005).
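Since peptides can take up one or two 18O atoms, the heavy signal is spread over the +2 and +4 Da peaks, which also contain natural isotope peaks of the light peptide. The sketch below is a simplified linear deconvolution, not a published algorithm: the function name is hypothetical, r2 and r4 are assumed to come from a theoretical isotope distribution, the singly labeled species is assumed to share the light peptide's relative M+2 abundance, and isotope peaks beyond +4 Da are ignored.

```python
def o18_ratio(i0: float, i2: float, i4: float, r2: float, r4: float) -> float:
    """18O/16O abundance ratio from the M, M+2 and M+4 peaks of a peptide pair.

    r2 and r4 are the natural M+2 and M+4 abundances of the unlabeled peptide
    relative to its monoisotopic peak. Assumes the singly labeled (+2 Da)
    species has the same relative M+2 abundance r2; ignores peaks beyond +4 Da.
    """
    if i0 <= 0:
        raise ValueError("monoisotopic 16O peak must be positive")
    single = i2 - r2 * i0                # one 18O atom: +2 Da
    double = i4 - r4 * i0 - r2 * single  # two 18O atoms: +4 Da
    return (single + double) / i0


# Simulated 1:1 pair: 100 (16O), 10 (one 18O), 90 (two 18O); r2 = 0.1, r4 = 0.01,
# giving observed peaks M = 100, M+2 = 10 + 10, M+4 = 1 + 1 + 90.
print(o18_ratio(i0=100.0, i2=20.0, i4=92.0, r2=0.1, r4=0.01))  # about 1.0
```

Summing the single- and double-labeled species avoids underestimating the heavy sample when 18O exchange is incomplete.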

Chemical labeling of protein samples is mainly achieved by post-synthetic modification of proteins and tryptic peptides through chemical or enzymatic derivatization. While chemical labeling has been advantageous for highly complex samples, it has certain limitations. Concerns that limit its application include: incomplete labeling of peptides, which incorporate the label at different rates and make data analysis a formidable task; dependence on cysteine residues (ICAT) or lysine residues (ICPL), which makes these techniques less suitable for proteins with few or no such residues and for identifying post-translational modifications and splice isoforms; labeling kinetics that depend on protein turnover; modified lysine that is not cleaved by trypsin, resulting in longer peptides that complicate MS analysis; the need for high labeling efficiency prior to separation, since incomplete labeling impairs resolving power; and side reactions during labeling that can lead to unforeseen products that confound data interpretation (Bantscheff et al., 2007).

Label-free

While labeling protocols (e.g., ICAT, iTRAQ, 18O- or 15N-labeling) remain the core technologies in MS-based proteomic quantification, increasing effort has been directed toward label-free approaches. Label-free methods are attractive to investigators because of their cost effectiveness, simpler experimental protocols and fewer measurement artifacts, and because isotope-labeled references are of limited availability (Goodlett and Yi, 2003; Lill, 2003). The most common label-free methods include the following:

Spectral count method: the total number of MS/MS spectra acquired for peptides from a given protein in a given LC-MS/MS analysis is used to compare differential abundance between cases and controls (Old et al., 2005). The approach rests on the observation that the more abundant a protein is in a sample, the more MS/MS spectra are collected for its peptides. The method simply counts the number of spectra identified for a given peptide in the different samples and combines the results of all measured peptides for the protein being quantified. It can be used for quantitative protein profiling because extensive MS/MS data are collected across the chromatographic time scale.

Sequence coverage method: uses the total coverage of a protein sequence by its identified peptides (Florens et al., 2002).

Peptide count method: uses the total number of peptides identified from a protein (Gao et al., 2003).

Peptide ion intensity method: peptide ion intensities are measured by integrating the area under the curve and compared to determine relative abundance. This requires MS data to be collected in “data dependent” mode (MS scan, zoom scan and MS/MS scan).

Comparison of ion intensities: LC-MS runs are compared to identify differentially abundant ions at specific m/z and retention time (RT) points. This approach is based on precursor signal intensity (MS1) and is applicable to data acquired on high mass accuracy spectrometers. The high resolution facilitates extraction of peptide signals at the MS1 level and thus uncouples quantification from identification. The approach relies on the observation that ion intensity in ESI-MS is linearly proportional to the concentration of the detected ions, within the linear dynamic range of the instrument.

The key requirements for label-free methods are reproducible LC-MS runs and proper alignment of those runs, without which differences cannot be detected reliably. Because label-free methods can go beyond pairwise comparisons, they rely heavily on computational analysis.
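The three identification-based measures (spectral count, peptide count and sequence coverage) can all be derived from the same list of peptide-spectrum matches. The sketch below uses hypothetical names and made-up sequences, assumes each identified spectrum has already been assigned to exactly one protein, and does not handle peptides shared between proteins.

```python
from __future__ import annotations

from collections import defaultdict


def label_free_measures(psms: list[tuple[str, str]], proteins: dict[str, str]) -> dict:
    """Spectral count, peptide count and sequence coverage per protein.

    psms holds one (protein_id, peptide) pair per identified MS/MS spectrum.
    """
    spectra = defaultdict(int)
    peptides = defaultdict(set)
    for prot, pep in psms:
        spectra[prot] += 1
        peptides[prot].add(pep)

    measures = {}
    for prot, seq in proteins.items():
        covered = [False] * len(seq)
        for pep in peptides[prot]:
            start = seq.find(pep)
            while start != -1:  # mark every occurrence of the peptide
                for i in range(start, start + len(pep)):
                    covered[i] = True
                start = seq.find(pep, start + 1)
        measures[prot] = {
            "spectral_count": spectra[prot],
            "peptide_count": len(peptides[prot]),
            "coverage": sum(covered) / len(seq),
        }
    return measures


proteins = {"P1": "MKLLEPRTVCCRDDK", "P2": "AGHIKWWR"}
psms = [("P1", "LLEPR"), ("P1", "LLEPR"), ("P1", "TVCCR"), ("P2", "AGHIK")]
for prot, m in label_free_measures(psms, proteins).items():
    print(prot, m)
```

Repeated spectra of the same peptide raise the spectral count but not the peptide count or coverage, which is why the three measures can rank proteins differently.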

The first three methods relate relative protein abundance to the sampling statistics observed in tandem MS. However, data-dependent acquisition is not fast enough to select every ion detected in the first stage of mass spectrometry, so much of the information available at that stage is discarded, especially for low-abundance ions. Direct comparison of LC-MS peaks without the corresponding MS/MS data makes it possible to examine all biomolecules present in the entire LC-MS profiles. To estimate the relative abundance of biomolecules from multiple LC-MS runs, some investigators directly compare MS1 ions over their entire retention profiles (Prakash et al., 2006; Radulovic et al., 2004), while others use monoisotopic masses and the peak apex of the elution profiles (Kearney and Thibault, 2003; Pierce et al., 2005; Wang et al., 2003). These approaches rest on the principle that the relative abundance of the same biomolecule in different samples can be estimated from its precursor ion signal intensity across consecutive LC-MS runs, provided the measurements are performed under identical conditions (Kuhner and Gavin, 2007).
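Direct MS1 comparison needs two basic operations: integrating an extracted ion chromatogram and matching the same feature across runs by m/z and retention time. The sketch below uses hypothetical names; the 10 ppm and 0.5 min tolerances are assumptions to be tuned per instrument, and the greedy matching is a deliberately simple stand-in for the alignment algorithms used in practice.

```python
from __future__ import annotations

import math


def xic_area(rt: list[float], intensity: list[float]) -> float:
    """Area under an extracted ion chromatogram (trapezoidal rule)."""
    return sum((rt[i + 1] - rt[i]) * (intensity[i] + intensity[i + 1]) / 2
               for i in range(len(rt) - 1))


def match_features(run_a, run_b, ppm=10.0, rt_tol=0.5):
    """Pair MS1 features of two runs within m/z (ppm) and RT (min) tolerances.

    Each feature is an (m/z, rt, area) tuple. Greedy: each feature in run_a
    takes the closest-in-m/z unused feature of run_b inside both tolerances.
    """
    pairs, used = [], set()
    for fa in run_a:
        best = None
        for j, fb in enumerate(run_b):
            if j in used:
                continue
            dmz = abs(fa[0] - fb[0])
            if dmz / fa[0] * 1e6 <= ppm and abs(fa[1] - fb[1]) <= rt_tol:
                if best is None or dmz < abs(fa[0] - run_b[best][0]):
                    best = j
        if best is not None:
            used.add(best)
            pairs.append((fa, run_b[best]))
    return pairs


print(xic_area([0.0, 1.0, 2.0, 3.0], [0.0, 100.0, 100.0, 0.0]))
run_a = [(500.2500, 30.1, 1.0e6), (722.8000, 45.0, 2.0e5)]
run_b = [(500.2520, 30.3, 2.0e6), (722.9000, 45.1, 2.0e5)]  # second: ~138 ppm off
for fa, fb in match_features(run_a, run_b):
    print(fa[0], math.log2(fb[2] / fa[2]))  # log2 fold change of matched feature
```

Only features that pass both tolerances are compared, so mass accuracy and retention time reproducibility directly limit how many biomolecules can be quantified.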

Labeling efficiency is also not consistent and can vary between samples, which makes label-free estimation of relative abundance from precursor ion signal intensity an attractive alternative. Its underlying assumption, however, is that all LC-MS measurements are performed under identical conditions (Kuhner and Gavin, 2007). A critical challenge in using this method for biomarker discovery therefore lies in normalizing and aligning the LC-MS data from different runs, so that the same biological entities are compared across multiple spectra without bias.
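Normalization and alignment can be sketched with two common simple choices, both assumptions here rather than methods named in the cited work: median normalization, which presumes that most features do not change between samples, and a least-squares linear retention time correction fitted on landmark features found in both runs. Real data often need nonlinear alignment; the function names are hypothetical.

```python
import statistics


def median_normalize(runs):
    """Scale each run so its median feature area equals the median of run medians.

    runs maps run name -> {feature_id: area}. Assumes most features do not
    change between samples, the usual premise of median normalization.
    """
    medians = {name: statistics.median(areas.values()) for name, areas in runs.items()}
    target = statistics.median(medians.values())
    return {name: {f: a * target / medians[name] for f, a in areas.items()}
            for name, areas in runs.items()}


def fit_rt_alignment(rt_run, rt_ref):
    """Least-squares linear map from one run's retention times onto a reference
    run, fitted on landmark features identified in both runs."""
    n = len(rt_run)
    mx, my = sum(rt_run) / n, sum(rt_ref) / n
    sxx = sum((x - mx) ** 2 for x in rt_run)
    sxy = sum((x - mx) * (y - my) for x, y in zip(rt_run, rt_ref))
    slope = sxy / sxx
    intercept = my - slope * mx
    return lambda rt: slope * rt + intercept


runs = {"A": {"f1": 100.0, "f2": 200.0, "f3": 300.0},
        "B": {"f1": 200.0, "f2": 400.0, "f3": 600.0}}  # B loaded twice as much
norm = median_normalize(runs)
print(norm["A"]["f1"], norm["B"]["f1"])  # 150.0 150.0
align = fit_rt_alignment([10.0, 20.0, 30.0], [10.5, 20.5, 30.5])
print(align(25.0))  # 25.5
```

If a large fraction of features genuinely changes between conditions, the median assumption fails and normalization can introduce the very bias it is meant to remove.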