Abstract

Standard statistical techniques often require transforming data to have mean 0 and standard deviation 1. Typically, this process of “standardization” or “normalization” is applied across subjects when each subject produces a single number. High throughput genomic and financial data often come as rectangular arrays, where each coordinate in one direction concerns subjects, who might have different status (case or control, say); and each coordinate in the other designates “outcome” for a specific feature, for example “gene,” “polymorphic site,” or some aspect of financial profile. It may happen when analyzing data that arrive as a rectangular array that one requires BOTH the subjects and features to be “on the same footing.” Thus, there may be a need to standardize across rows and columns of the rectangular matrix. There arises the question as to how to achieve this double normalization. We propose and investigate the convergence of what seems to us a natural approach to successive normalization, which we learned from colleague Bradley Efron. We also study the implementation of the method on simulated data and also on data that arose from scientific experimentation.

Keywords and phrases: normalization, standardization, backwards martingale convergence theorem

1. Introduction

This paper is about a method for normalization, or regularization, of large rectangular sets of numbers. In recent years many statistical efforts have been directed towards inference on such rectangular arrays. The exact geometry of the array matters little to the theory that follows. Positive results apply to the situation where there are at least three rows and at least three columns. We explain difficulties that arise when either numbers only two. Scenarios to which methodology studied here applies tend to have many more rows than columns. Data can be from gene expression microarrays, SNP (single nucleotide polymorphism) arrays, protein arrays, alternatively from large scale problems in imaging. Often there is one column per subject, with rows consisting of real numbers (as in expression) or numbers 0, 1, 2 (as with SNPs). Subjects from whom data are gathered may be “afflicted” or not, with a condition that while heritable is far from Mendelian. A goal is to find rows, better groups of rows, by which to distinguish afflicted from other subjects. One can be led to testing many statistical hypotheses simultaneously, thereby separating rows into those that are “interesting” for further follow-up and those that seem not to be. Genetic data tend to be analyzed by test “genes” (rows), beginning with their being “embedded” in a chip, perhaps a bead. There may follow a subsequent molecule that binds to the embedded “gene”/molecule. A compound that makes use of the binding preferences of nucleotides and to which some sort of “dye” is attached is then “poured.” The strength of binding depends upon affinity of the “gene” or attached molecule and the compound. Laser light is shined on the object into which the test “gene” has been embedded, and from its bending, the amount of bound compound is assessed, from which the amount of the “gene” is inferred. The basic idea is that different afflicted status may lead to different amounts of “gene”.

With the cited formulation and ingenious technology, data may still suffer from problems that have nothing to do with differences between groups of subjects or with differences between “genes” or groups of them. There may be differences in background, by column, or even by row. Perhaps also “primers” (compounds) vary across columns for a given row. For whatever reasons, scales by row or column may vary in ways that do not enable biological understanding. Variability across subjects could be unrelated to afflicted status.

Think now of the common problem of comparing variables that can vary in their affine scales. Because covariances are not scale-free, it can make sense to compare in dimensionless coordinates that are centered at 0, that is, where values of each variable have respective means subtracted off, and are scaled by respective standard deviations. That way, each variable is somehow “on the same footing”.

Standardization, or normalization, studied here is done precisely so that both “subjects” and “genes” are “on the same footing”. We recognize one might require only that “genes” (or some “genes”) be on the same footing, and the same for “subjects.” The successive transformations studied here apply when one lacks a priori opinions that might limit goals. Thus, “genes” that result from the standardization we study are transformed to have mean 0 and standard deviation 1 across all subjects, while the same is true for subjects across all “genes”. How to normalize? One approach is to begin with, say, row, though one could as easily begin with columns. Subtract respective row means and divide by respective standard deviations. Now do the same operation on columns, then on rows, and so on. Remarkably, this process tends to converge, even rapidly in terms of numbers of iterations, and to a set of numbers that have the described good limiting properties in terms of means and standard deviations, by row and by column.

In this paper we show by examples how the process works and demonstrate for them that indeed it converges. We also include rigorous mathematical arguments as to why convergence tends to occur. Readers will see that the process and perhaps especially the mathematics that underlies it are not as simple as we had hoped they would be. This paper is only about convergence, which is demonstrated to be exponentially fast (or faster) for examples. The mathematics here does not apply directly to “rates”. The Hausdorff dimension of the limit set seems easy enough to study. Summaries will be reported elsewhere.

2. Motivating Example

We introduce a motivating example to ground the problem that we address in this paper. Consider a simple 3-by-3 matrix with entries generated from a uniform distribution on [0,1]. We standardize the initial matrix X(0) by row and column, first subtracting the row mean from each entry and then dividing each entry in a given row by its row standard deviation. The matrix is then column standardized by subtracting the column mean from each entry and then by dividing each entry by the respective column standard deviation. In this section, these four steps of row mean polishing, row standard deviation polishing, column mean polishing and column standard deviation polishing entail one iteration in the process of attempting to row and column standardize the matrix. After one such iteration, the same process is applied to resulting matrix X(1) and the process repeated with the hope that successive renormalization will eventually yield a row and column standardized matrix. Hence these fours steps are repeated until “convergence” - which we define as the difference in the Frobenius norm between two consecutive iterations being less than 10−8.

In order to illustrate this numerically, we start with the following 3-by-3 matrix with independent entries generated from a uniform distribution on [0,1]and repeat the process described above.

| (1) |

The successive normalization algorithm took 9 iterations to converge. The initial matrix, the final solution, and relative (and log relative) difference for the 9 iterations are given below (see also figure 1):

| (2) |

| (3) |

Fig 1.

Relative differences at each iteration on the log scale - 3-by-3 dimensional example

The whole procedure of 9 iterations takes less than 0.15 seconds on a standard modern laptop computer. We also note that the final solution has effectively 3 distinct entries. When other random starting values are used, we observe that convergence patterns can vary in the sense that convergence may not be monotonic. The plots below (see Figure 2) capture the type of convergence patterns that are observed in our simple 3-by-3 example.

Fig 2.

Convergence patterns for the 3-by-3 example

Despite the different convergence patterns that are observed, when our successive renormalization is repeated with different starting values - a surprising phenomenon surfaces. The process seems always to converges, and moreover the convergence is very rapid. One is led naturally to ask whether this process will always converge and if so under what conditions. These questions lay the foundation for the work in this paper.

3. Preliminaries

We establish the notation that we will use by revisiting a normalization/standardization method that is traditional for multivariate data. If the main goal of a normalization of a rectangular array is achieving zero row and and column averages, then a natural approach is to “mean polish” the row (i.e., subtract the row mean from every entry of the rectangular array), followed by a column “mean polish”. This cycle of successive row and column polishes is repeated until the resulting rectangular array has zero row and and column averages. The following theorem proves that this procedure attains a double mean standardized rectangular array in one iteration where an iteration is defined as constituting one row mean polish followed by one column mean polish.

Lemma 3.1

Given an initial matrix X(0), an iterative procedure to cycle through repetitions of a row mean polish followed by a column mean polish until convergence terminates in one step.

Proof

Let X(0) be an n × k matrix and define the following:

Now the first part of the iteration, termed as a “row mean polish” subtracts from each element its respective row mean:

The second step of the iteration, termed a “column mean polish” subtracts from each element of the current matrix its respective column mean:

where

After the second step of the iteration it is clear that the columns sum to zero; the previous operation enforces this. In order to prove that the iterative procedure terminates at the second part of the iteration it is sufficient to show that the rows of the current iterate sum to zero. Now note that

The remaining is to show that the row sum of this matrix X(2) expressed as the elements of X(0) sum to zero. So,

Note that the above double standardization is implicit in a 2-way ANOVA, and though not explicitly stated it can be deduced from the work of Scheffé [9]. It is nevertheless presented here first in order to introduce notation, second as it is not available in this form above in the ANOVA framework, but third for the intuition it gives since it is a natural precursor to the subject of work in the remainder of this paper.

3.1. Example 1(cont.): A 3-by-3 example with only mean polishing

We proceed to illustrate the previous theorem on the motivating example given after the introduction and draw contrasts between the two approaches. As expected the successive normalization algorithm terminates in one iteration. The initial matrix, the final solution, and the column and row standard deviations of the final matrix are given below:

| (4) |

| (5) |

| (6) |

| (7) |

| (8) |

We note unlike in the motivating example, and as expected, the row and column means are both 0; but the standard deviations of the rows and the columns are not identical, let alone identically 1. Since mean polishing has already been attained, and we additionally require that row and column standard deviations to be 1, it is rather tempting to row and column standard deviation polish the terminal matrix Y(final) above. We conclude this example by observing the simple fact that doing so results in the loss of the zero row and column averages.

3.2. The 2-by-2 problem

We now examine the successive row and column mean and standard deviation polishing for a 2 × 2 matrix and hence illustrate that for the results in this paper to hold true, the minimum of row(k) and column dimension(n) of the matrix under consideration must be at least 3, that is min(k, n) ≥ 3. Consider a general 2 × 2 matrix: . If a < b and c < d, then after one row normalization, ; so . Therefore, allowing for both inequalities to be reversed, and, assuming that, for example, a, b, c, and d are iid with continuous distribution(s), then , in which case the procedure is no longer well-defined.

A moment’s reflection shows that if X is n × 2, with n odd, then after each row normalization, each column has an odd number of entries, each entry being −1 or +1. However, each row has exactly one −1 and one +1. Thus occurs only on a set of product Lebesgue measure 0. With min(k, n) ≥ 3, both tend to 1 a.e. as m ↗ ∞. Henceforth, assume min(k, n) ≥ 3.

4. Theoretical Considerations

For a matrix X as defined, take x ∈ ℛn×k. Let λ denote Lebesgue measure on ℛn×k, and P be the probability on the coordinates that renders them iid N (0, 1). That is, Xij (xij) = xij. Xij are iid N (0, 1). What is more, for any orthogonal linear transformation  that preserves orthogonality,

that preserves orthogonality,  X ~ X, that is,

X ~ X, that is,  X is distributed as X Muirhead [8], Anderson [1].

X is distributed as X Muirhead [8], Anderson [1].

Because λ and P are mutually absolutely continuous, if  = {algorithm for successive row and column normalization converges}, then P (

= {algorithm for successive row and column normalization converges}, then P ( ) = 1 iff λ(ℛn×k\

) = 1 iff λ(ℛn×k\ ) = 0, though only one direction is used.

) = 0, though only one direction is used.

For the remainder, assume that P governs X. Positive results will be obtained for 3 ≤ min(n, k) ≤ max (n, k) < ∞. Our arguments require notation, to which we turn attention now. We redefine the notation and symbols introduced in Lemma 3.1 as we now bring in row and column standard deviation polishing. Define X = X(0).

, where ; a.s. since k ≥ 3 > 1.

By analogy, set

Arguments sketched in what follows entail that a.s. since n ≥ 3.

For m odd, , with and defined by analogy to previous definitions.

For m even, , again with and defined by analogy to earlier definitions.

Back to the general problem

We first note that because the process we study is a coordinate process, there is no difference between regular conditional probabilities and regular conditional distributions (see Durrett [4], Section 4.1.c, p33 and pp. 229–331 for more details). They can be computed as densities with respect to Lebesgue measure on a finite Cartesian product ×Sph(q), where q = k refers to after row normalization and where q = n if subsequent to column normalization. In a slight abuse of notation, for any positive integer q we define Sph(q) = {x ∈ ℛq: ||x||2 = q}, where the norm is the usual Euclidean norm.

Let {rij: i = 1, …, n; j = 1, …, k} be a set of n · k rational numbers, not all 0. Obviously,

An inductive argument involving conditional densities shows that

| (9) |

Consequently

Further, a.s. X(m) is defined and finite for every m.

What we know about the t distribution Efron [5] and geometric arguments entail that X(1) can be viewed as having probability distribution on ℛn×k that is the n-fold product of independent uniform distributions on Sph(k) × … × Sph(k). For the sake of clarity we note again that conditional probabilities are always taken to be “regular” and concentrated on the relevant product of spheres. Readers will note that in the cited arguments for , and , relevant conditional probabilities and densities are used. Of course, after the mth row standardization , and analogously for column standardization and X(2m).

As an aside, write g1(X) = X(1) on {

}. Elsewhere, let g1(X) = 0. Necessarily g1 depends on X only through its direction in ℛn×k. Equivalently, g1 is a function of X that is homogeneous of degree 0. Moreover, g1(X) ~ g1( X) for all orthogonal linear

X) for all orthogonal linear  on ℛn×k. X(1) has independent rows, each uniformly distributed on Sph(k).

on ℛn×k. X(1) has independent rows, each uniformly distributed on Sph(k).

We turn now to study as m increases without bound. Note first that for m = 1, 2, … X(2m−1) has joint distribution that is unchanged if two columns of X are transposed, therefore if two columns of X(2m−1) are transposed. Since every permutation is a product of transpositions, X(2m−1) is column exchangeable. Each is also row exchangeable.

Write π for a permutation of the integers {1, …, k}; let be the finite σ-field of all subsets of {π}. The marginal probability induced on {π} from the joint distribution of (X, {π}) is discrete and uniform, assigning probability 1/k! to each π.

Write to be the σ-field

THEOREM 3.2

a.s. for m = 1, 2, …

Proof

Write , where IA is the indicator function of the event A. Obviously, is measurable; {αq1,q2} is a set of real numbers, doubly indexed by {αq1,q2) ∈ F; F is a finite subset of {1, …, k} × {1, 2,…}. Form .

Note that is generated by {B × Q}, B of the cited form and Q ∈ Π. In particular, each . Proof of our claim is complete if we show that for m = 2, 3, …

The left hand side of the display can be expressed

Now, for any π, the expression inside the sum is (k)(1/k) = 1 if π ∈ Q and 0 if not. That is, the expression constituting the sum is k I[π(1)=l] I[π∈Q]. Now the, expectation factors into P (B)P (π(1) = l|π ∈ Q) P (π ∈ Q) = P (B) P (Q).Retracing steps shows clearly that in the computation just completed could be replaced by with all equalities remaining true. The claim is now proven.

Convergence of

The backwards martingale convergence theorem Doob [3] entails that converges a.s. as m → ∞. So, for each fixed j ∈ {1, …, k}, converges a.s. It follows that [ ] converges a.s. as m → ∞.

If previous arguments are perturbed so that π denotes a permutation of {1, …, n}, with replaced by by by , and by , then one concludes that also [ ] converges a.s. as m →; ∞. Without further argument it is unclear the a.s. limits along odd, respectively even, indices are the same; and it is crucial to what remains that this is in fact true.

Obviously, , so in a certain sense measurability is the same. Obviously, too, randomization of index is by columns in the first case and by rows in the second. But now a path to the required conclusion presents itself. Given success in proving a.s. convergence along odd indices after randomizing columns and along even indices randomizing rows, and given a requirement of our approach is that these two limits be identical a.s., perhaps there is a path by simultaneously randomizing both columns and rows? Fortunately, that is the case. Thus, let π1 be a permutation of {1, …, n} and π2 be a permutation of {1, …, k}. With obvious product formulation of governing probability mechanism and further obvious formulation of decreasing σ-fields, as an example of what can be proved,

From this arguments for the display there are several paths by which one concludes that a.s., simultaneously for all (i, j), [ ] converges. Dominated convergence entails that the limit random matrix has expectation 1 in all coordinates. As a consequence of this convergence, a.s. and simultaneously for all (i, j), as m → ∞.

Convergence of [ ]

We turn now to key ideas in extending our argument that [ ] converges almost surely simultaneously to the same conclusion with the square removed. To limit notational complexity, we study first only odd indices as m grows without bound. Conclusions are identical for even indices, and by extension for indices not constrained to be odd or even.

A first necessary step is to show that for arbitrary j,

To that end, let A be the event [ ]. Obviously, regardless of square roots taken. We show that P {A} = 0.

By way of contradiction, suppose that P{A} > 0. Write

We know that the first term tends to 1 a.s. on A. Therefore, also the second term tends to 1 a.s. on A. Since for m > 1, is bounded a.s., is a finite-valued random variable C = C(x). Simple considerations show that the only possibilities are that for all l, C = +1 or C = −1 a.s. Similar arguments show that is +1 or −1. It is clear that there is no sequence {mk} of {m} along which

It follows that exists a.s. on A, and that the limit of the sequence is +1 or −1 on A.

Recall that X ~ −X, and this equality is inherited by all joint distributions of X(m). However, when X is replaced by its negative, any limit of becomes its negative; a.s. convergence is a property of the probability distribution of X; and . Therefore, the only possibility is that C = 0, which entails that and . The upshot is that P {A} = 0; .

Again, let us fix j. Consider a sample path of {X(2mq−1)} along which . Clearly, . Indeed, let . Row and column exchangeability of X(m) entail that necessarily the cardinality of E is at least 2.

Let i0≠ i1 ∈ E. Because min(n, k) ≥ 3, there is a further subsequence of {{mq (i)}} – for simplicity again write it {mq} – along which

The first two requirements can always be met off of the set of probability 0 implicit in eqn(9). That the third can be met as well is a consequence of the argument just concluded. In any case, if there were no such subsequence, then our proof would be complete because all for j fixed tend to the same number. But now, write

Since a.s., and likewise with i0 replaced by i1, the first expression of the immediately previous display has limit 0. Thus, so too does the second expression. This is possible only if (where we have taken the positive square root). Further,

As a corollary to the above, one sees now that . Since the original {mq} could be taken to be an arbitrary subsequence of {m}, we conclude that:

a.s.;

a.s.; and

converges a.s.

Now replace arguments for (i) and (ii) on columns by analogous arguments on rows. Deduce that every infinite subsequence of positive integers has a subsequence along which our desired conclusion obtains.

5. Properties of successive normalization

We now comment on theoretical properties of successive normalization. In particular, we elaborate on the generality of the result by showing that the Gaussian assumption is not necessary and serves only as a convenient choice of measure. We also discuss convergence in Lebesgue measure and the domains of attraction of successive normalization.

5.1. Choice of probability measure

Write λ for Lebesgue measure on ℛn×k. Thus, x ∈ ℛn×k is an n × k rectangular array of real numbers. Let P be a measure on the Borel sets of ℬ of ℛn×k that is mutually absolutely continuous with respect of λ. By this is meant that if B ⊂ ℛn×k is Borel measurable, then λ (B) = 0 iff P (B) = 0. One obvious example of such a P is the measure on ℬ that renders its nk coordinates iid Gaussian. If xrc is the (r, c) coordinate of X ∈ ℛn×k, and P (r,c) is the marginal measure of P on its (r, c) real coordinate, then P (r,c)((−∞, x0]) = Φ (x0), where Φ is the standard normal cumulative distribution function. This is what we mean by P henceforth. The iid specification entails that P is an nk-fold product of identical probabilities, and because P is now defined uniquely for all product rectangles, necessarily it is defined uniquely for all B ∈ ℬ. It is obvious that Φ being mutually absolutely continuous with respect to one-dimensional Lebesgue measure means that the product measure P is mutually absolutely continuous with respect to the nk-fold Borel product measure λ.

Now, let f1, f2, … be a sequence of ℬ-measurable functions, ℛn×k → ℛn×k. Then . When fm(x) converges, then , where for any set C, IC is its indicator. Because each fm is ℬ-measurable, lim fm(x) is also ℬ-measurable. If we are given that {fm} converges P -almost everywhere, then {fm converges} is a Borel set of P -measure 1. Necessarily its complement has P -measure 0, therfore λ measure 0. It follows that {fm} converges except for a Borel subset of ℛn×k of Lebesgue measure 0.

In the present paper, the (r, c) coordinate of {fm} is the set of successive normalizations of the initial real entry multiplied by the indicator of the subset of ℛn×k that is {successive normalizations possible}. The latter is clearly a Borel subset of ℛn×k of P -measure 1, so its complement is a Borel set of λ -measure 0. It is immaterial to convergence whether the first initialization is by row or by column. Readers should note that any study of asymptotic properties of {fm} under P may entail that {fm(x)} has properties such as row and column exchangeability, where the coordinate functions are taken to be random variables. They should note, too, that: (i) changing the original measure to one more conducive to computation is standard, and is what happens with “exponential tilting” as it applies to the study of large deviations of sums; and (ii) the interplay between measure and topology, as is utilized here, is a standard approach in probability theory that is applied seldom to statistical arguments; the lack thus far owes only to necessity.

5.2. Convergence in pth mean for Lebesgue measure

Whenever standardization is possible, after one standardization the sum over all nk coordinates of squares of respective values is bounded by nk it follows from dominated convergence that as m grows without bound, each term converges not only P-almost everywhere but also in pth mean for every ∞ > p ≥ 1 so long as P remains the applicable measure. Because λ is not a finite measure, convergence in pth mean for underlying measure λ does not follow. It is impossible for such convergence to apply to Lebesgue measure on the full Euclidean space ℛn×k. This is because there is a set of positive Lebesgue measure whose members are not fixed points for normalization by both rows and columns. No matter which normalization comes first in any infinite, alternating sequence, the normalization is invariant to fixed scale multiples of each x ∈ ℛn×k, in particular to fixed scale multiples of x in the set of positive Lebesgue measure just described. Obviously, no further arguments are required, and convergence in pth mean is immediate if Lebesgue measure λ is restricted to a bounded subset of ℛn×k.

5.3. Domains of attraction

Reviewers of research presented here have wondered if we can describe simply what successive and alternating normalization does to rectangular arrays of data, beyond introductory comments about putting rows and columns on an equal footing and the analogy to computing correlation from covariance. We begin our reply here, though details await further research and a subsequent paper. Please remember invariance to either row or column normalization (when possible), to scale multiples of x ∈ ℛn×k. In other words, results of normalization are constant along rays defined by these multiples, and without loss of generality we can assume that x lies in an n-fold product of Sph(k), where each of the n components is orthogonal to the linear one-dimensional subspace of ℛk consisting of its equiangular line; call it Sph(k)\{1}. Thus, without loss we assume that the object under study is X(m) subject to a row normalization of the process of successive normalization. We study only subsets of the n-fold product space that was described and that is complementary to { }, where {rij } are rational. The set in braces just before the comma has P-measure 0; and we know that on its complement, X(m) can be defined for all m = 1, 2, …

Because normalization always involves subtraction of a mean and division by a standard deviation, and because each X(m) is row and column exchangeable, the limiting process we study here when P applies seems on superficial glance to be analogous to “domains of attraction” as that notion applies to sequences of iid random variables. One obvious difference is that here limits are almost sure rather than in distribution. While a.s. limits of X(m) are shown to have row and column means 0 and row and column standard deviations 1, n × k arrays of real numbers with this property are obviously the only fixed points of the alternating process studied here. The Hausdorff dimension of the set of fixed points is not difficult to compute, but we have been unable thus far to give rigorously supported conditions for the domain of attraction (in the sense described) of each fixed point. The simple case for which domains of attraction for limits in distribution were described was a major development in the history of probability (see Feller [6], Gnedenko and Kolmogorov [7], Zolotarev [10]). We report some intuitive results, and next a mathematical question that arose in our study of domains of attraction for which at present we have only a heuristic argument.

Is there a set E ⊂ ℛn×k for which P (E) = 1; X(m)(x) converges for x ∈ E; and for each fixed i lim Xij (x) ≠ lim Xij′(x) for all j ≠ j′ if x ∈ E? Clearly one could ask the equivalent question for each fixed j, and a corresponding subset E′ ⊂ ℛn×k. We conjecture the existence of E ∩ E′. However, arguments available thus far do not confirm existence rigorously. Therefore, suggestions regarding domains of attraction as X(m) grows without bound should be taken as only heuristic for now.

Given that on a subset of ℛn×k with complementary P-measure 0, therefore Lebesgue measure 0, almost surely ultimately (meaning for m = m(x) large enough), row normalization does not perturb the (strict) sort of any row. By analogy, a.s. ultimately column normalization does not perturb the (strict) sort of any column. Thus, a.s. ultimately, successive and alternative row and column normalization do not perturb any row or column sort. This joint strict sort determines an open subset of ℛn×k. If we restrict ourselves to rows, then we may speak more precisely of n-fold products of sorts of members of Sph(k)\{1}. For a fixed row there are k! strict sorts of the squared entries. Given a fixed sort of the squares there are, say, f (k) assignments of sign to the square roots of the k entries so that the sum of entries for that row is 0. For any assignments of signs to the necessarily non-trivial values of the k squares that renders the sum of entries for the row 0, there is always a scaling so that the sum of squares is any fixed value, therefore so that the variance of the set of numbers in that row is 1. One computes f (3) = 2; f (4) = 4; and so on. Computation of the exact value of f (k) is slightly tricky and is not reported here. For all k, f (k) ≥ 2. To summarize, for each fixed row there are a.s. ultimately f (k)k! disjoint (open) invariant sets following row normalization, making for [f (k)k!]n a.s. ultimately (open) invariant sets simultaneously for all n rows. If we count a.s. ultimately invariant sets subsequent to column normalization, then entirely analogous arguments result in a.s. ultimately [f (n)n!]k disjoint (open) sets simultaneously for all columns.

From computations, after a row normalization the surface area of the sphere in k-space orthogonal to the equiangular line - that corresponds to only one row of for k ≥ 21. The expression → 0 as k ↗ ∞. Even for k = 4, the quantity is only about 14.7 (larger than the actual value). Remember that there are at most [f (k)k!] a.s. ultimately non-empty “invariant sets” for row normalization. Thus, one sees that for k large the quantity is nearly 0.

6. Computational Results and Applications

We include three examples to highlight and illustrate some computational aspects of our iterative procedure. The first two examples are studies by simulation whereas the third example is an implementation on a real dataset.

For the simulation study, we consider a 3-by-3 matrix and a 10-by-10 matrix both with entries generated independently from a uniform distribution on [0,1]. For a given matrix, the algorithm computes/calculates the following 4 steps at each iteration:

Mean polish the column

Stand deviation polish the column

Mean polish the row

Stand deviation polish the row

These fours steps, which constitute one iteration, are repeated until “convergence” - which we define as when the difference in some norm-squared (the quadratic/Frobenius norm in our case) between two consecutive iterations is less than some small prescribed value - which we take to be 0.00000001 or 10−8.

6.1. Example 2: Simulation study on a 10-by-10 dimensional example

We proceed now to illustrate the convergence of the successive row and column mean-standard deviation polishing for the simple 10-by-10 dimensional example cited. The algorithm took 15 iterations to converge. The initial matrix, the final solution, and relative(and log relative) difference for the 15 iterations are:

| (10) |

| (11) |

| (12) |

We note once more how the relative differences decrease linearly on the log scale(though empirically) and is once again suggestive of the rate of convergence. As both the figure (see Fig. 3) and the vector of relative differences indicate, there is a substantial jump at iteration 2; and then the curve behaves linearly.

Fig 3.

Relative differences at each iteration on the log scale - 10-by-10 dimensional example

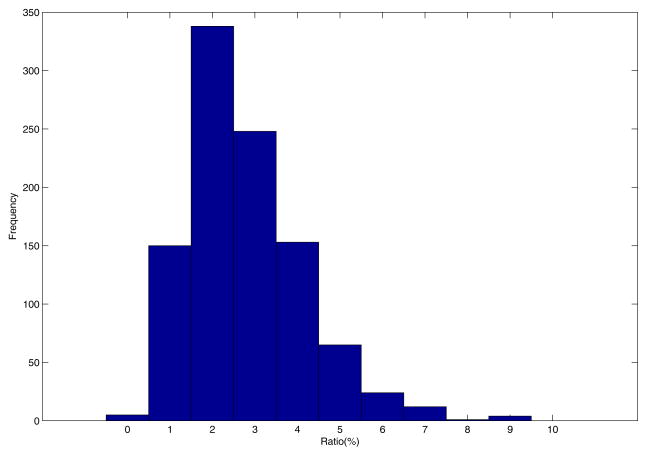

The whole procedure takes about 0.37 seconds on a standard modern laptop computer and terminates after 15 iterations. It might appear that the increase in the number of iterations increases with increase in dimension. For instance, the number of iterations goes from 9 to 15 as we go from dimension 3 to 10. We should however bear in mind that when we go from dimension 3 to 10 the “tolerance level” is kept constant at 0.00000001. The number of elements that must be close to their respective limiting values, however, goes from 9 in the 3-dimensional case, to 100 in the 10-dimensional case. The rapidity of convergence was explored further, and the process above was repeated over 1000 simulations. The convergence proves to be stable in the sense that the mean and standard deviation of the number of steps till convergence over the 1000 simulations are 14.5230 and 2.0331 respectively. A histogram of the number of steps till convergence is given below (fig. 4).

Fig 4.

Distribution of the number of steps to convergence

A closer look at the vector of successive differences suggests that the “bulk of the convergence” is achieved during the first iteration. This seems reasonable since necessarily the first steps render the resulting columns, then, rows, members of their respective unit spheres (and even within cited subsets of them). Convergence then is only within these spheres. Our numerical results also indicate the the sequence of matrices X(i) changes most drastically during this first iteration. It suggests that mean polishing to a larger extent is responsible for the rapidity of convergence and is reminiscent of the result in Lemma 1 - which states that if only row and column mean polishing are performed, then convergence is achieved immediately. We explore this issue further by looking at the distance between X(1) and X(final), and compare it to the distance between X(0) and X(final). The ratio of these two distance is defined below:

For our 10-by-10 example, we simulated 1000 initial starting values and implemented our successive normalization procedure. The average value of the distance from the first iterate to the limit, as a proportion of the total distance to the limit from the starting value, is only 2.78%. One could interpret this heuristically as saying that on average the crucial first step does as much as 97.2% of the work towards convergence. We therefore confirm that the bulk of the convergence is indeed achieved in the first step (termed as a “one-step analysis”’ from now onwards). The distribution of the ratio defined above is graphed in the histogram below (fig. 5). We also note that none of the 1000 simulations yielded a ratio of over 10%.

Fig 5.

Distribution of distance to limit after 1-step as a proportion of distance to limit from initial-step

Yet a another illuminating perspective of our successive normalization technique is obtained when we track the number of sign changes in the individual entries of the matrices from one iteration to the next. Please remember that this is related to the “invariant sets” that were described in subsection 5.3. Naturally, one would expect the vast majority of sign changes to occur in the first step as the bulk of the convergence is achieved during this first step. We record the number of sign changes at each iteration, as a proportion of the total number of sign changes until convergence, over 1000 simulations, in our 10-by-10 case. The results are illustrated in the table below1 (see Table 1). An empirical study of the occurrence of the sign changes reveals interesting heuristics. We note that on average 95% of sign changes occur during the first step and an additional 3% in the next step. The table also demonstrates that as much as 99% of sign changes occur during the first three iterations. When we examine infrequent cases where there is a sign change well after the first few iterations, we observe that the corresponding limiting value is close to zero, thus indicating that a sign change well into the successive normalization technique (i.e., a change from positive to negative or vice versa) amounts to a very small change in the actual magnitude of the corresponding value of the matrix.

Table 1.

Distribution of the occurrence of sign changes

| Iteration no. | relative frequency | relative cumulative frequency |

|---|---|---|

| 1 | 94.97% | 94.97% |

| 2 | 3.15% | 98.12% |

| 3 | 1.03% | 99.15% |

| 4 | 0.40% | 99.55% |

| 5 | 0.20% | 99.75% |

| 6 | 0.12% | 99.87% |

| 7 | 0.05% | 99.92% |

| 8 | 0.03% | 99.95% |

| 9 | 0.02% | 99.97% |

| 10 and above | 0.03% | 100.00% |

| Total | 100% | — |

We conclude this example by investigating more thoroughly whether the dimensions of the matrices have an impact on either the rapidity of convergence and/or on the one-step analysis. The following table gives the mean and standard deviations of the number of iterations needed for convergence for various values of the dimension of the matrix - denoted by p and n when keeping the total number of cells in the matrix constant 2. Once more our successive normalization procedure is applied to 1000 uniform random starting values. Results of this exercise are given in the table below (see Table 2).

Table 2.

Rapidity of convergence and one-step analysis for various p and n combinations

| p | 3 | 4 | 5 | 10 |

|---|---|---|---|---|

| n | 33 | 25 | 20 | 10 |

| mean(count) | 34.0870 | 22.3500 | 18.0720 | 14.5790 |

| std(count) | 5.3870 | 3.3162 | 2.5477 | 2.1099 |

| mean(ratio) | 2.7026 | 2.5812 | 2.6237 | 2.7207 |

| std(ratio) | 1.8483 | 1.5455 | 1.4768 | 1.2699 |

We find that when n and p are close convergence appears to be faster, keeping everything else constant. Interestingly enough, a one-step analysis performed for the different scenarios above tends to suggest that the onestep ratio, defined as the distance from the first iterate to the limit as a proportion of the total distance to the limit from the starting value, seems largely unaffected by the row or column dimension of the problem.

6.2. Example 3: Simulation study on a 5-by-5 dimensional example

We now proceed to further investigate the successive normalization procedure when one begins with column mean-standard deviation polishing followed by row mean-standard deviation polishing or vice versa on a simple 5-by-5 dimensional example. The theory developed in the previous sections proves convergence of the successive normalization procedure whether the first normalization that is performed on the matrix is row polishing or column polishing.

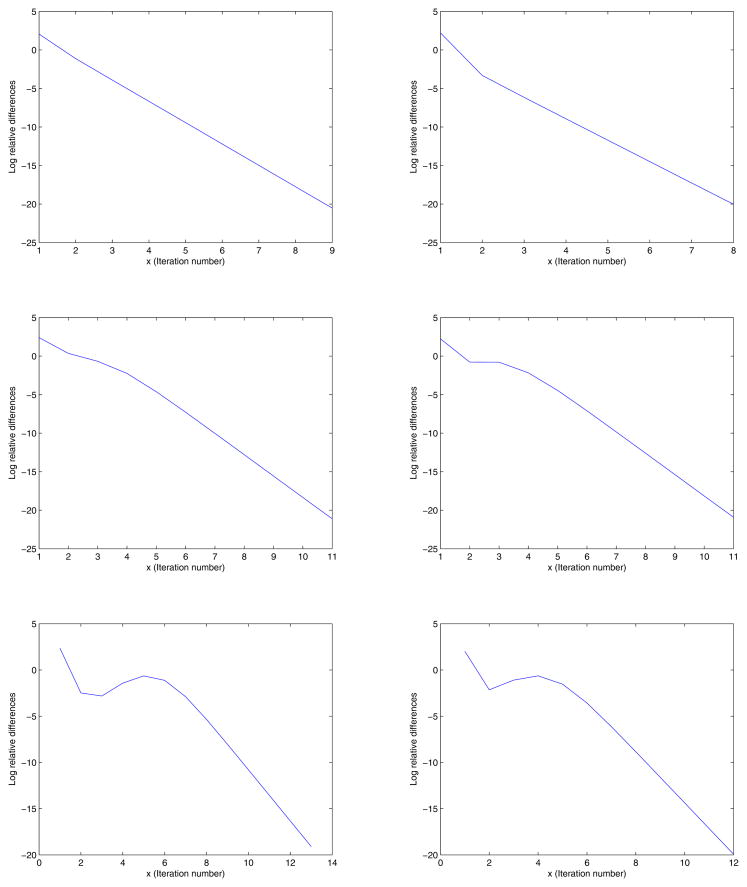

The algorithm took 30 iterations to converge when one begins with column mean-standard deviation polishing, and when one begins with row mean-standard deviation polishing it took 26 iterations to converge. The initial matrix, the final solutions, log relative differences and their respective plots for both approaches are given below (see Fig. 6).

Fig 6.

Relative differences at each iteration on the log scale for 5-by-5 dimensional example (a) starting with column polishing (b) starting with row polishing

| (13) |

| (14) |

| (15) |

| (16) |

As expected the final solutions are different. The simulations were repeated with different initial values, and we note that the convergence patterns (as illustrated in Fig. 6) are similar whether the procedure starts with column polishing or row polishing, though actual the number of iterations required to converge can vary.

6.3. Example 4: Gene expression data set: 20426-by-63 dimensional example

We now illustrate the convergence of the successive row and column mean-standard deviation polishing for a real life gene expression example -a 20426-by-63 dimensional example. This dataset arose originally from a study of human in-stent restenosis by Ashley et al. [2]. The algorithm took considerably longer in terms of time and computer resources but converged in eight iterations. The initial matrix and the final are too large to display, but the relative(and log relative) difference for the eight iterations are given subsequently:

| (17) |

Note once more how the relative differences decreases linearly on the log scale(though empirically) and is once again suggestive of the rate of convergence. As both the figure (see Fig. 7) and the vector of relative differences indicates, there is a jump between iteration 1 and 2 and then the curve behaves linearly.

Fig 7.

Relative differences at each iteration on the log scale - 20426-by-63 dimensional example

Additionally the whole procedure takes about 853.2 seconds or approximately 14.22 minutes on a desktop computer3 vs 0.4 seconds for the 10-by-10 example. However, the algorithm terminates after only eight iterations. In this example the number of iterations does NOT change with the increase in dimensionality. It may make sense to investigate this behavior more thoroughly, empirically, using simulation for rectangular but not square matrices. It seems that the ratio of the two dimensions or the minimum of the two dimensions may play a role. We should also bear in mind that the tolerance level, which is the sum of the individual differences squared, has been kept constant at 0.00000001.

7. Conclusion

In this section we attempt to lend perspective to our results and to point the way for future developments. Readers please note that for rectangular n × k arrays of real numbers with min(k, n) ≥ 3, the technique beginning with rows (alternatively columns) and successively subtracting row (column) means and dividing resulting differences, respectively, by row (column) standard deviations converges for a subset of Euclidean ℛn×k whose complement has Lebesgue measure 0. The limit is row and column exchangeable given the Gaussian probability mechanism that applies in our theoretical arguments. We do not offer other information on the nature of the exact set on which successive iterates converge. A single “iteration” of the process we study has four steps, two each respectively for rows and columns. Note that on the set for which the algorithm converges, convergence seems remarkably rapid, exponential or even faster, perhaps because after a half an iteration, the rows (alternately columns) lie as n (respectively k) points on the surface of the product of relevant unit spheres. Further iterations adjust only directions, not lengths.

Viewing the squares of the entries as the terms of a backwards martingale entails maximal inequalities for them, and therefore implicitly contains information on “rates of convergence” of the squares; but these easy results appear far from the best one might establish. Our arguments for (almost everywhere) convergence of the original signed entries do not have information regarding rates of convergence. One argues easily that if successive iterates converge, and no limiting entry is 0, then after finitely many steps, (the number depending on the original values and the limiting values) signs are unchanged. In our examples of small dimension, evidence of this can be made explicit. In particular we observe empirically that the vast majority of sign changes that are observed do indeed take place in the first few iterations. Any sign changes that are observed well after the first few iterations correspond to sign changes around entries with limiting values close to zero. We also have no information on optimality in any sense of the iterated transformations we study. One reason for our thinking that our topic is inherently difficult is that we were unable to view successive iterates as “contractions” in any sense familiar to us.

If we take any original set of numbers, and multiply each number by the realized value of a positive random variable with arbitrarily heavy tails, then convergence is unchanged. Normalization entails that after half a single iteration the same points on the surface of the relevant unit spheres are attained, no matter the multiple. The message is that what matters to convergence are the distributions induced on the surfaces of spheres after each half iteration, and not otherwise common heaviness of the tails of the probability distributions of individual entries.

Acknowledgments

The authors gratefully acknowledge Bradley Efron for introducing them to the research question addressed in the paper. They also thank Johann Won and Thomas Quertermous for useful discussions and Thomas Quertermous for granting us access to and understanding of data we study and reference; Bonnie Chung and Cindy Kirby are also acknowledged for administrative assistance. Richard Olshen was supported in part by NIH MERIT Award R37EB02784, and Bala Rajaratnam was supported in part by NSF grant DMS 0505303 DMS 0906392 and NIH grant 5R01 EB001988-11REV

Footnotes

Since the number of iterations to convergence depends on the starting point, the length of the vector of the number of sign changes will vary accordingly. We summarize this vector by averaging over all the 1000 simulations the relative frequency of the number of sign changes for the first nine iterations. The first nine iterations were chosen as each of the 1000 simulations required at least 9 iterations to converge.

or approximately constant

2GHz Core 2 Duo processor and 2GB of RAM

Contributor Information

Richard A. Olshen, Email: olshen@stanford.edu, Depts. of Health Research and Policy, Electrical Engineering, and Statistics, Stanford, CA 94305-5405, U.S.A.

Bala Rajaratnam, Email: brajarat@stanford.edu, Department of Statistics, Stanford, CA 94305-4065, U.S.A..

References

- 1.Anderson TW. An introduction to multivariate statistical analysis. 3. Wiley; New Jersey: 2003. [Google Scholar]

- 2.Ashley EA, Ferrara R, King JY, Vailaya A, Kuchinsky A, He X, Byers B, Gerckens U, Oblin S, Tsalenko A, Soito A, Spin J, Tabibiazar R, Connolly AJ, Simpson JB, Grube E, Quertermous T. Network analysis of human in-stent restenosis, in. Circulation. 2006;114(24):2644–2654. doi: 10.1161/CIRCULATIONAHA.106.637025. [DOI] [PubMed] [Google Scholar]

- 3.Doob JL. Regularity properties of certain functions of chance variables, in. Transactions of the American Mathematical Society. 1940;47(2):455–486. [Google Scholar]

- 4.Durrett R. Probability: Theory and Examples. 2. Duxbury Press; Belmont: 1995. [Google Scholar]

- 5.Efron B. Student’s t-test under symmetry conditions, in. Journal of the American Statistical Association. 1969;64(328):1278–1302. [Google Scholar]

- 6.Feller W. An Introduction to Probability Theory and Its Applications. 2. Vol. 2. Wiley; New York: 1966. [Google Scholar]

- 7.Gnedenko BV, Kolmogorov AN. Limit Distributions for Sums of Independent Random Variables. Addison-Wesley; Boston: 1954. [Google Scholar]

- 8.Muirhead RJ. Aspects of Multivariate Statistical Theory. Wiley; New York: 1999. [Google Scholar]

- 9.Scheffé H. The Analysis of Variance. Wiley; New York: 1999. (Reprint) [Google Scholar]

- 10.Zolotarev VM. One-dimensional Stable Distributions. American Mathematical Society; Providence: 1986. [Google Scholar]