Usability testing in the evaluation of an electronic health record system could improve the chances that the design is integrated with existing workflow and business processes in a clear, efficient way.

Abstract

Purpose:

An oncology electronic health record (EHR) was implemented without prior usability testing. Before expanding the system to new clinics, this study was initiated to examine the role of usability testing in the evaluation of an EHR product and whether novice users could identify usability issues that resonated with more experienced users of the system. In addition, our study evaluated whether usability issues with an already implemented system affect users' efficiency and satisfaction.

Methods:

A general usability guide was developed by a group of five informaticists. Using this guide, four novice users evaluated an EHR product and identified issues. A panel of five experts reviewed the identified issues to determine agreement with and applicability to the already implemented system. A survey of 42 experienced users of the previously implemented EHR was also performed to assess efficiency and general satisfaction.

Results:

The novice users identified 110 usability issues. Our expert panel agreed with 90% of the issues and recommendations for correction identified by the novice users. Our survey had a 54% response rate. The majority of the experienced users of the previously implemented system, which did not benefit from upfront usability testing, had a high degree of dissatisfaction with efficiency and general functionality but higher overall satisfaction than expected.

Conclusion:

In addition to reviewing features and content of an EHR system, usability testing could improve the chances that the EHR design is integrated with existing workflow and business processes in a clear and efficient way.

Introduction

Since 2006, there has been a national push to “make wider use of electronic records and other health information technology, to help control costs and reduce dangerous medical errors.”1 ASCO has also recognized that the electronic health record (EHR) is an essential vehicle for advancing quality of care and has taken progressive steps toward educating and supporting its membership in identifying EHR technology for their oncology practices.2–17 Amid all of the discussion surrounding product features, functions, and which product is right for which practice setting, one important component that warrants a closer look is usability. This article discusses the importance of usability and also shares data and analysis from one form of usability testing, called heuristic evaluation, applied to an institutional EHR implementation.

The usability of a product is determined by a combination of its features, functionality, visual appeal, and usefulness. The product must be tailored to the context in which it is used, and it must take into consideration the characteristics of the people who use it. The purpose of an EHR is to handle the medical information essential for patient care and improve the efficiency and accessibility of that information. Those who choose to implement an EHR system must first gain a solid understanding of the tasks in which users engage to accomplish their work. These tasks involve the information people need, the point in the patient care process at which it is needed, and the reason it is needed. Clinical tasks can be mapped as a sequence of events with respect to business activities, regulations, and other related entities. Diagrams may be developed to capture workflow and dependencies and clarify the factors that will influence success or failure of a product in the given environment.
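As a minimal sketch of the workflow mapping described above, the Python below records a hypothetical outpatient visit as a dependency graph, in which each task lists the tasks that must finish before it, and uses the standard library's `graphlib.TopologicalSorter` to derive an order that satisfies every dependency. The task names are invented for illustration and are not drawn from the study; a real map would come from observing the clinic.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical outpatient visit: each task maps to the tasks that must be
# finished before it can start. Names are illustrative only.
VISIT_WORKFLOW: dict[str, set[str]] = {
    "check_in": set(),
    "record_vitals": {"check_in"},
    "review_allergies": {"check_in"},
    "review_labs": {"check_in"},
    "physician_exam": {"record_vitals", "review_allergies", "review_labs"},
    "write_progress_note": {"physician_exam"},
    "assign_billing_level": {"write_progress_note"},
}


def task_order(workflow: dict[str, set[str]]) -> list[str]:
    """Return one ordering of tasks that satisfies every dependency.

    Raises graphlib.CycleError if the map is circular, a sign that the
    workflow diagram itself needs another look.
    """
    return list(TopologicalSorter(workflow).static_order())


def prerequisites(workflow: dict[str, set[str]], task: str) -> set[str]:
    """Collect every task that must happen, directly or indirectly, before `task`."""
    seen: set[str] = set()
    stack = list(workflow[task])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(workflow[current])
    return seen


if __name__ == "__main__":
    print(task_order(VISIT_WORKFLOW))
    # Everything the exam depends on, i.e., what the EHR must surface by then.
    print(sorted(prerequisites(VISIT_WORKFLOW, "physician_exam")))
```

Listing a task's prerequisites is one way to ask, step by step, which information the EHR must already have on screen when that task begins.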

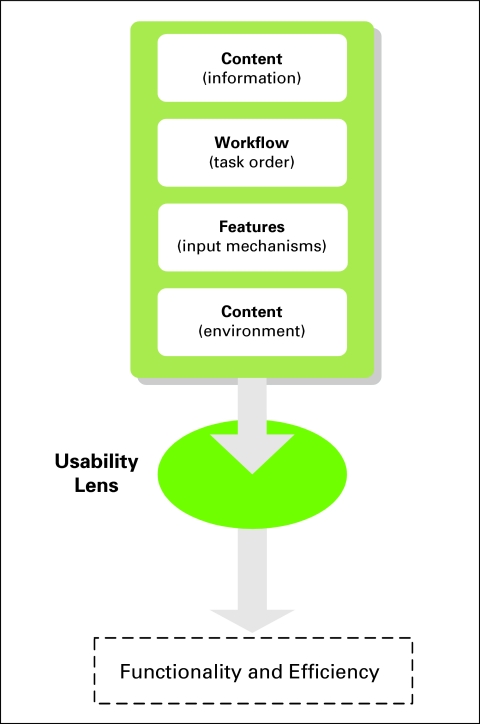

This is the point at which usability analysis comes in. Once a foundational understanding of the processes and people of a clinic has been gained, attention can shift to the available vendor products that may meet the identified needs. To evaluate EHR products effectively, reviewers need to examine several components that can significantly affect user satisfaction. As illustrated in Figure 1, these components should be viewed through a usability lens that allows products to be evaluated against known guidelines for usable systems, with the goal of improving the functionality and efficiency of the implemented product. One does not have to be an expert to incorporate at least some usability principles into an EHR implementation project, and even a small effort can help improve user satisfaction with the system.

Figure 1. Electronic health record components affecting user satisfaction.

Usability testing and analysis are increasingly used in the medical community to develop and improve telemedicine systems, computer-based patient records, and medical devices.18–20 Usability analysis has been shown to reduce the number of end-user problems with systems and to lower the cost of change requests: problems that go undetected because no usability analysis was conducted can cost up to 100 times more to fix later.21

There are several forms of discount usability analyses that require fewer resources and time than formal usability testing. The methods are collectively known as usability inspection, the goals of which are to identify usability problems in software design and provide recommendations on how to improve the system.22 One particularly popular method of usability inspection is called heuristic evaluation.

Heuristic evaluation is the systematic inspection of a software design for its compliance with known guidelines (or heuristics) of usable systems.21 Problems are identified and rated for severity by usability and subject-matter experts. Problems are analyzed and reformulated into suggestions that can be incorporated into new design iterations. For example, through heuristic evaluation, it may be discovered that the software under analysis does not associate a date when weight measurements are entered. This identified problem can be reformulated into a requirement that the software designers add an automatic date stamp when a weight measurement is entered into the system. This requirement would then be incorporated into the next software update release.
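The date-stamp requirement in the example above can be sketched as a small data model. The `WeightMeasurement` class and its fields are hypothetical, not the vendor's schema: the timestamp is filled in automatically when a weight is recorded, so the user cannot omit it, and an impossible weight is rejected at entry.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class WeightMeasurement:
    """A weight entry stamped with the time it was recorded (hypothetical model)."""
    patient_id: str
    weight_kg: float
    # The caller never supplies a date: the stamp is added automatically at
    # creation time, which is the fix the heuristic finding calls for.
    recorded_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self) -> None:
        # Reject obviously invalid input at entry (an Error-heuristic safeguard).
        if self.weight_kg <= 0:
            raise ValueError("weight must be positive")
```

Because `recorded_at` has a default factory, `WeightMeasurement("MRN-0001", 72.4)` is enough to create a dated entry; the patient identifier shown is made up.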

Heuristic evaluation, as with all usability inspection techniques, aims to identify defects in a software design that may cause problems for users once the software is implemented. If problems can be found and fixed during the initial (or customization) phase of development, then the final product should be more usable and of better quality than it would be otherwise. For EHR implementations, heuristic evaluation can be applied to give users greater control over the functionality of their EHR system and potentially save them time and money by avoiding critical changes after the system is implemented. Furthermore, applying this technique during the vendor selection process allows users to compare features and functionality on a more objective basis while taking into account the characteristics of their practices and people.

Methods

Heuristic Evaluation

Heuristic evaluation was conducted in summer and fall 2006 on a test (demonstration) system provided by an EHR vendor. The software had been implemented in several clinics of the Division of Hematology-Oncology at the University of California, Los Angeles, and expansion was planned. A team of five individuals comprising two technical informaticists (one senior, one junior), two medical informaticists (one senior, one junior), and one lay individual contributed, at varying times, to preparing and administering the evaluation. All members of the team were familiar with usability principles, and the senior technical informaticist had prior knowledge and training regarding usability testing methods.

The functionality of the test system that the vendor provided was largely geared toward physicians; therefore, four physicians were recruited to serve as evaluators of the system. These physicians had no previous exposure to the EHR system, so they were considered novice users with subject-matter expertise. Scenario-based exercises of common tasks were created by the junior medical informaticist. The evaluation protocol included a think-aloud technique to have the novice users speak their thoughts as they worked through the exercises. The junior technical informaticist served as an observer during the sessions, which were conducted individually with each novice user. Using a mock patient profile, the novice users were expected to create and populate a new outpatient progress note and work with other components including allergies, medications, problems, and visit history. The scenario focused on data review and did not include drug-ordering components.

On the basis of published generalized heuristic principles,20,23,24 the technical informaticists compiled an EHR heuristic evaluation template (Data Supplement, online only) to educate novice users on the topic of heuristic evaluation. The packet included a brief summary of the technique, a description of the evaluation process, details of the heuristics tailored for this study, and narrative and graphic examples of each heuristic violation. The packets were distributed to the novice users several days before the scheduled evaluation along with instructions to review the packet before the session.

Each evaluation session lasted approximately 1 to 2 hours. The novice users were encouraged to talk aloud as they progressed through the tasks so the informaticists could gain insight into their thought processes. The junior technical informaticist transcribed the novice users' verbalizations, took notes on their actions, and answered their questions. The entire session was also audio recorded to ensure accuracy and completeness of the observation.

Spreadsheets were created to store the data collected from the novice users. Each entry in the spreadsheet represented a comment given about the system. To organize the comments by the heuristic each entry represented, a separate column for heuristic category was added to the spreadsheet. The two technical informaticists and lay individual separately analyzed each entry and assigned a heuristic category. A one-best-choice approach was taken to map each comment to a single heuristic violation. System bugs that were encountered but not part of the software design were handled separately. Table 1 lists the heuristic categories used.
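A minimal sketch of the coding spreadsheet, assuming one row per comment and one category code per coder, is shown below. The article states that each comment was mapped to a single heuristic but does not say how disagreements among the three coders were settled, so the majority-vote rule and the `CommentRow`, `resolve_category`, and `tally` names are assumptions; the coder assignments in `ROWS` are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# The eight categories from Table 1.
HEURISTICS = frozenset({"Match", "Visibility", "Memory", "Minimalist",
                        "Error", "Consistency", "Control", "Flexibility"})


@dataclass
class CommentRow:
    """One spreadsheet entry: a novice user's comment and each coder's category."""
    text: str
    codes: tuple[str, ...] = ()  # one-best-choice category from each coder
    is_bug: bool = False         # system bugs are handled separately

    def __post_init__(self) -> None:
        unknown = set(self.codes) - HEURISTICS
        if unknown:
            raise ValueError(f"unknown heuristic category: {sorted(unknown)}")


def resolve_category(row: CommentRow) -> Optional[str]:
    """Return the category chosen by a majority of coders, or None if there is none.

    Assumption: None marks rows that would go back to the coders for discussion.
    """
    if not row.codes:
        return None
    category, votes = Counter(row.codes).most_common(1)[0]
    return category if votes > len(row.codes) / 2 else None


def tally(rows: list[CommentRow]) -> tuple[Counter, list[CommentRow]]:
    """Count resolved comments per heuristic; return unresolved rows separately."""
    counts: Counter = Counter()
    unresolved: list[CommentRow] = []
    for row in rows:
        if row.is_bug:
            continue  # bugs are not part of the software design
        category = resolve_category(row)
        if category is None:
            unresolved.append(row)
        else:
            counts[category] += 1
    return counts, unresolved


# Hypothetical coder assignments for comments quoted in Table 2.
ROWS = [
    CommentRow("Name of tab is not reflective of its content",
               ("Match", "Match", "Consistency")),
    CommentRow("Page layout is overwhelming",
               ("Minimalist", "Minimalist", "Minimalist")),
    CommentRow("Allergies can be deleted", ("Error", "Control", "Memory")),
    CommentRow("Medication entries cannot be updated", is_bug=True),
]
```

Here `tally(ROWS)` counts one Match and one Minimalist comment, skips the bug, and returns the allergy comment as unresolved because no two coders agreed.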

Table 1. Categories of Heuristic Violations

| Category | Description | Related Questions |
|---|---|---|
| Match | Use words, terms, and phrases familiar to user, especially for abbreviations, acronyms; ensure task components match user's mental model for activity | • Are system options, tasks described in terminology familiar to user? • Are system objects, tasks ordered in most logical way? |
| Visibility | Inform user of what system is doing and how it is processing data input; also includes response times for system processes | • Is there visual feedback in menus or dialog boxes indicating which choices are selectable? • If there are observable delays in system response time, is user kept informed of system progress? |
| Memory | Ensure all information user needs to complete task is at hand; allow information to be carried forward through process; provide helpful default fields and menus | • Are all data needed by user on display at each step in multistep tasks? • Are there appropriate menu selection/data entry defaults? |
| Minimalist | Avoid too much information on screen at one time; this will detract from task at hand; avoid clutter, extraneous information | • Has unnecessary duplication of information been eliminated? • Can user choose to display or hide details of system elements (ie, expanding, collapsing lists)? |
| Error | Offer informational messages to help user solve current problem; avoid error-producing situations; provide mechanisms to check for errors before task completion | • Does system warn user if potentially serious error is about to be made? • Do error messages inform user of error severity, suggest cause of problem? |
| Consistency | Use consistent themes for colors, styles, fonts, headers, buttons; user should be able to visually distinguish parts of interface | • Are buttons/commands that perform same action named consistently across all screens in system? • Are menu names consistent in grammatical style, terminology, within each menu, across screens? |
| Control | Avoid trapping user in situations in which action cannot be canceled, undone | • Can user cancel actions/operations in progress? • Can user easily reverse actions? |
| Flexibility | Provide ability to reuse interaction history, use templates, shortcut keys, jump directly to desired locations | • Does system provide shortcuts for high-frequency actions? • Is user able to avoid unnecessary steps through use of templates? |

To confirm the findings from the novice users, the informaticists verified the results of the heuristic evaluation with a panel of five experts who each had advanced knowledge of the implemented product. The expert panel reviewed the findings of the heuristic evaluation and rated by consensus whether the identified problems, if solved, would improve the current production version of the system.

Survey of Experienced Users

After the expert-panel verification, the informaticists conducted an individual user satisfaction survey of all experienced users of the EHR system. To qualify as experienced, a user had to have used the system for at least 1 year. Forty-two physicians (12 hematology-oncology fellows and 30 attendings) were surveyed across three community oncology clinics and one academic hospital clinic. The 14-question survey instrument covered topics dealing with the efficiency, flexibility, and accessibility of the EHR system. Specific items explored users' satisfaction with functionality such as documenting care, ordering and administering chemotherapy, and maintaining lists of medication and problems. The survey was administered via an online survey tool over a 3-week period in May 2008. E-mail messages were sent to prospective participants no more than three times requesting their completion of the survey.
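The reminder protocol above (at most three e-mail requests within a 3-week window) can be sketched as follows. The weekly spacing, the May 1, 2008 start date used in the usage example, and the function names are assumptions for illustration; the article reports only the cap and the window.

```python
from datetime import date, timedelta

MAX_REQUESTS = 3   # from the text: no more than three e-mail requests per person
WINDOW_DAYS = 21   # the survey ran over a 3-week period


def request_dates(start: date, interval_days: int = 7) -> list[date]:
    """Dates on which a non-respondent would receive a request.

    Assumption: requests go out at a fixed interval from `start`; the actual
    schedule used in the study is not reported.
    """
    if interval_days <= 0:
        raise ValueError("interval_days must be positive")
    dates: list[date] = []
    current = start
    # Stop at the cap or when the next request would fall outside the window.
    while len(dates) < MAX_REQUESTS and (current - start).days < WINDOW_DAYS:
        dates.append(current)
        current += timedelta(days=interval_days)
    return dates


def still_to_contact(invited: set[str], completed: set[str]) -> set[str]:
    """Invitees who have not completed the survey and so get the next request."""
    return invited - completed
```

For example, `request_dates(date(2008, 5, 1))` yields May 1, 8, and 15; with a 15-day interval only two requests fit inside the window.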

Both the heuristic evaluation and the survey were reviewed and approved by the institutional review board of the University of California, Los Angeles. The data were de-identified before analysis, which was primarily descriptive.

Results

Heuristic Evaluation

The heuristic evaluation yielded 167 distinct comments. There were 110 unique problems identified as well as three system bugs, five missing items, and four unaccommodated regulatory requirements. As noted in Table 2, the largest number of heuristic violations was found in the categories of Match and Visibility. Most of the problems found in the Match category dealt with discordance between the novice users' mental model and how a specified task was performed using the system. For example, three users commented on how the navigational elements of the software were arranged. This was related to having the appropriate type and location of links to efficiently move around the system. In another example, all four novice users found that a particular section of the progress note form was named incorrectly. The title of the section did not accurately reflect its contents, and a more appropriate term should have been used instead to avoid confusion.

Table 2. Heuristic Violations by Category

| Category | No. of Violations | Problems Found by Novice Users |
|---|---|---|
| Match | 24 | • “Name of tab is not reflective of its content” • “Clicking on date link under ‘Visit Summary’ should bring up physician notes from that date” |
| Visibility | 21 | • “Relationship between checkboxes and text boxes is not readily apparent” • “Was unsure of what hitting ‘Enter’ did after typing in problem” |
| Memory | 15 | • “Does not have information on prior assessments/plans from previous note (one of the most important elements)” • “Problem list should be visible or at least available on the screen as one is filling out the discussion/plan” |
| Minimalist | 12 | • “Page layout is overwhelming” • “There are space utilization issues in the printable format of note—it shows everything regardless of whether or not the information was actually collected from patient (this would actually constitute fraud)” |
| Error | 11 | • “System needs to ensure that physicians enter primary diagnosis” • “Allergies can be deleted” |
| Consistency | 9 | • “It is difficult to distinguish between the field names and options, also between fields” • “Options for ‘DM’ are inconsistent with other options on the same page” |
| Control | 9 | • “Cannot undo deletions from ‘Current Medications’ or ‘Prior Medications’ list” • “Having the system generate a printable view of the note after user selects the billing level is unexpected and confusing” |
| Flexibility | 9 | • “Need ability to refill multiple prescriptions at once” • “How will we deal with the issue of generating triplicates for controlled substances?” |

Abbreviation: DM, diabetes mellitus.
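As a quick arithmetic check on Table 2, the per-category counts sum to the 110 unique problems reported in the text, and the expert panel's 99 accepted problems correspond to its 90% agreement rate. The sketch below simply recomputes these figures from the published counts; the `shares` helper is a hypothetical name.

```python
# Violations per heuristic category, as reported in Table 2.
VIOLATIONS = {
    "Match": 24, "Visibility": 21, "Memory": 15, "Minimalist": 12,
    "Error": 11, "Consistency": 9, "Control": 9, "Flexibility": 9,
}


def shares(counts: dict[str, int]) -> dict[str, float]:
    """Percentage of all violations that fall in each category, to one decimal."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


total = sum(VIOLATIONS.values())
print(total)  # 110, the number of unique problems reported in the text
print(shares(VIOLATIONS))
# Expert-panel agreement: 99 of the 110 problems were accepted as valid.
print(round(100 * 99 / total))  # 90
```

Match and Visibility together account for 45 of the 110 violations, or about 41%.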

The Visibility heuristic violations highlighted an abundance of missed opportunities for the system to provide meaningful visual cues. For example, novice users found an unclear usage of checkboxes in four different sections of the interface. The purpose of the checkboxes was not readily apparent to the users, and they functioned differently in each section (also a Consistency violation). Several additional problems were discovered by the novice users during their sessions. All of the novice users found a system bug in the medication section that did not allow certain information to be updated or modified. Furthermore, three of the four users identified that the system did not accommodate documenting whether other chronic illnesses were active. Additional examples of problems found in each category can be seen in Table 2.

Expert Panel

The expert panel reviewed the usability findings and agreed that 99 (90%) of the 110 unique problems identified in the heuristic evaluation by the novice users were valid. Rejected items reflected issues that the expert panel felt the experienced users had become accustomed to in the configuration of the implemented system and, therefore, were not viewed as problems.

Survey

Of the 42 experienced physician users invited to participate, 23 responded to part or all of the survey, for a response rate of 54%. However, only 17 (74%) and 18 (78%) of the 23 respondents completed the sections on efficiency and satisfaction, respectively. Most of these experienced users disagreed that the EHR improved efficiency by reducing time spent looking for forms and documents (82.3% disagreed), improving data access and accountability (70.6% disagreed), improving data organization (64.7% disagreed), or facilitating more efficient documentation (64.7% disagreed; Table 3). In addition, a substantial percentage of respondents were dissatisfied with how the system created notes (44%), managed data (50%), and tracked data (50%). Moreover, only 66.7% of respondents would choose the currently configured and implemented version of the EHR if they could choose again, yet only 44.4% would be neutral about or prefer returning to paper rather than using the EHR system (Table 3).

Table 3. Survey Results

| Characteristic | Disagree/Dissatisfied, No. | Disagree/Dissatisfied, % | Agree/Neutral/Satisfied, No. | Agree/Neutral/Satisfied, % |
|---|---|---|---|---|
| Efficiency (n = 17) | | | | |
| Reduces time spent looking for forms, documents | 14 | 82.3 | 3 | 17.7 |
| Improves data access, accountability | 12 | 70.6 | 5 | 29.4 |
| Improves data organization | 11 | 64.7 | 6 | 35.3 |
| Facilitates efficient documentation | 11 | 64.7 | 6 | 35.3 |
| Satisfaction (n = 18) | | | | |
| Creating notes | 8 | 44 | 10 | 56 |
| Managing data | 9 | 50 | 9 | 50 |
| Tracking data | 9 | 50 | 9 | 50 |
| Preference (n = 18) | | | | |
| Would choose EHR again if he/she could choose again | 12 | 66.7 | 6 | 33.3 |
| Would prefer paper over EHR | 10 | 55.6 | 8 | 44.4 |

Abbreviation: EHR, electronic health record.
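The percentages in Table 3 and in the surrounding text are proportions of the respondents who completed each section (17 for efficiency and 18 for satisfaction and preference, out of 23 respondents overall). The hypothetical `pct` helper below recomputes several of the reported figures from their counts.

```python
def pct(count: int, n: int, digits: int = 1) -> float:
    """Share of a section's n respondents, as a percentage rounded to `digits`."""
    if n <= 0:
        raise ValueError("n must be positive")
    return round(100 * count / n, digits)


RESPONDENTS = 23  # physicians who answered part or all of the survey

# Completion of the efficiency and satisfaction sections (17 and 18 completers).
print(pct(17, RESPONDENTS, 0), pct(18, RESPONDENTS, 0))  # 74.0 78.0
# Share of respondents who skipped each section, as cited in the Discussion.
print(pct(RESPONDENTS - 17, RESPONDENTS, 0),
      pct(RESPONDENTS - 18, RESPONDENTS, 0))  # 26.0 22.0
# "Creating notes": 8 dissatisfied vs 10 neutral or satisfied, n = 18.
print(pct(8, 18, 0), pct(10, 18, 0))  # 44.0 56.0
# "Would prefer paper over EHR": 10 disagree vs 8 agree or neutral, n = 18.
print(pct(10, 18), pct(8, 18))  # 55.6 44.4
```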

Discussion

Our study demonstrated that novice EHR users, given modest training on a simple, generalizable guide, were able to identify a substantial number of usability issues when reviewing a system. Importantly, 90% of these issues resonated with the EHR expert panel and were recommended for resolution. The system had been implemented without an upfront usability analysis, and the majority of our survey respondents reported problems with efficiency and satisfaction of the kind that usability testing before implementation might have identified and resolved.

The survey of experienced users identified dissatisfaction with the efficiency, flexibility, and accessibility of the implemented system. Some respondents (26% and 22%, respectively) chose not to complete the questions about efficiency and satisfaction. Because the survey was de-identified, it was not possible to probe the reasons why these sections were skipped by some respondents. However, one self-identified individual expressed that the efficiency and satisfaction questions were skipped because of a high level of frustration with the system. One surprising finding was that the overall satisfaction of survey respondents with the EHR was higher than expected, given their dissatisfaction with the efficiency, flexibility, and accessibility of the system. This could have been a result of their adapting to the implemented system and feeling that having an EHR was better than the paper alternative (Table 3).

The results presented in this report have several limitations. First, they are specific to a single institution and a single EHR product, which, importantly, does not hold a large share of the oncology EHR market. Second, our usability analysis did not rate the severity of the identified problems. Severity ratings would have given a clearer picture of how the problems discovered by heuristic evaluation ranked in priority or criticality to system functionality. Despite these limitations and our small sample size, our analysis yielded important lessons that may generalize to other EHR selections and implementations.
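The severity ratings that this study omitted could be recorded and used for prioritization along the lines sketched below. The sketch assumes the 0-to-4 severity scale commonly attributed to Nielsen (0, not a usability problem; 4, usability catastrophe) and averages independent evaluator ratings; the `Finding` and `prioritize` names and the ratings assigned to the two problems quoted from Table 2 are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Nielsen-style 0-4 severity scale (assumed; this study did not rate severity)."""
    NOT_A_PROBLEM = 0
    COSMETIC = 1
    MINOR = 2
    MAJOR = 3
    CATASTROPHE = 4


@dataclass
class Finding:
    description: str
    heuristic: str
    ratings: list[Severity]  # one independent rating per evaluator

    @property
    def mean_severity(self) -> float:
        if not self.ratings:
            raise ValueError("finding has no ratings")
        return sum(self.ratings) / len(self.ratings)


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Most severe first, so fixes can be scheduled by criticality."""
    return sorted(findings, key=lambda f: f.mean_severity, reverse=True)


# Hypothetical ratings for two problems quoted in Table 2.
FINDINGS = [
    Finding("Page layout is overwhelming", "Minimalist",
            [Severity.MINOR, Severity.MINOR, Severity.COSMETIC]),
    Finding("Allergies can be deleted", "Error",
            [Severity.CATASTROPHE, Severity.MAJOR, Severity.CATASTROPHE]),
]

for f in prioritize(FINDINGS):
    print(f"{f.mean_severity:.2f}  {f.heuristic:<10} {f.description}")
```

Averaging independent ratings, rather than relying on one evaluator, is one way to dampen individual bias; a consensus rating, like the one the expert panel used to judge validity, would be an alternative.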

EHR systems have the potential to make patient care more efficient while improving access to needed medical information. The cost of implementing an EHR, whether the system is large or small, constitutes an investment for users of the product. The resources invested in the system include human and financial capital as well as the hours of technical setup and training needed for the system to work. Like any preventive measure, usability testing before system selection and implementation could save money, time, and effort down the road. Usability testing offers the most value when applied early in the planning process, when problems are easier and less expensive to fix than they would be after implementation of the EHR.

Heuristic evaluation is a simple, effective general tool that requires only modest training for assessment of an EHR and can be incorporated into the process of selecting and implementing an EHR system. Implementers should know their users and the tasks that need to be accomplished through the EHR for their practice. This information, combined with heuristic evaluation, can be used to compare the features and functionality among competing vendor systems to make the most informed choice about which product to purchase. Furthermore, heuristic evaluation conducted before implementation of a system can identify problems that could be resolved before they negatively affect the efficiency and user satisfaction of the system.


Acknowledgment

Supported by Grant No. G08LM007851 from the National Library of Medicine. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Library of Medicine or the National Institutes of Health.

Authors' Disclosures of Potential Conflicts of Interest

The authors indicated no potential conflicts of interest.

Author Contributions

Conception and design: Natalie J. Corrao, Alan G. Robinson, Michael A. Swiernik, Arash Naeim

Financial support: Alan G. Robinson, Arash Naeim

Administrative support: Alan G. Robinson, Arash Naeim

Provision of study materials or patients: Michael A. Swiernik, Arash Naeim

Collection and assembly of data: Natalie J. Corrao, Arash Naeim

Data analysis and interpretation: Natalie J. Corrao, Alan G. Robinson, Michael A. Swiernik, Arash Naeim

Manuscript writing: Natalie J. Corrao, Alan G. Robinson, Michael A. Swiernik, Arash Naeim

Final approval of manuscript: Natalie J. Corrao, Alan G. Robinson, Michael A. Swiernik, Arash Naeim

References

- 1. President Bush's State of the Union Address [transcript]. http://www.washingtonpost.com/wp-dyn/content/article/2006/01/31/AR2006013101468.html.

- 2. Wolfe TE. Making the case for electronic health records: A report from ASCO's EHR symposium. J Oncol Pract. 2008;4:41–42.

- 3. Shulman LN, Miller RS, Ambinder EP, et al. Principles of safe practice using an oncology EHR system for chemotherapy ordering, preparation, and administration: Part 2 of 2. J Oncol Pract. 2008;4:254–257. doi: 10.1200/JOP.0857501.

- 4. Shulman LN, Miller RS, Ambinder EP, et al. Principles of safe practice using an oncology EHR system for chemotherapy ordering, preparation, and administration: Part 1 of 2. J Oncol Pract. 2008;4:203–206. doi: 10.1200/JOP.0847501.

- 5. Miller RS. Electronic health records for the practicing oncologist: 2007 update on ASCO's role. J Oncol Pract. 2007;3:106–107. doi: 10.1200/JOP.0727501.

- 6. Korn JM. Electronic health records: How the new Stark law and anti-kickback rules may help speed adoption. J Oncol Pract. 2007;3:76–77. doi: 10.1200/JOP.0722501.

- 7. Karp DD. Selecting an electronic health record system. J Oncol Pract. 2007;3:172–173. doi: 10.1200/JOP.0737501.

- 8. Jordan WM. Electronic health records: A community practitioner's perspective. J Oncol Pract. 2007;3:231–232. doi: 10.1200/JOP.0747501.

- 9. Goldwein JW, Rose CM. QOPI, EHRs, and quality measurement. J Oncol Pract. 2007;3:340. doi: 10.1200/JOP.0769001.

- 10. Goldwein JW, Rose CM. QOPI, EHRs, and quality measures. J Oncol Pract. 2006;2:262. doi: 10.1200/jop.2006.2.5.262.

- 11. Goldwein J. Using an electronic health record for research. J Oncol Pract. 2007;3:278–279. doi: 10.1200/JOP.0757501.

- 12. Cox JV. ASCO's commitment to a better electronic health record: We need your help! J Oncol Pract. 2008;4:43–44. doi: 10.1200/JOP.0817501.

- 13. Basch P. The physicians' electronic health record coalition. J Oncol Pract. 2007;3:321–322. doi: 10.1200/JOP.0768001.

- 14. Electronic medical records. Possibilities and uncertainties abound. J Oncol Pract. 2006;2:75–76. doi: 10.1200/jop.2006.2.2.75.

- 15. Ambinder EP. ASCO's role in facilitating the adoption of electronic records in oncology. J Oncol Pract. 2005;1:64–65. doi: 10.1200/jop.2005.1.2.64.

- 16. Information technology in oncology offices. Advice based on experience. J Oncol Pract. 2006;2:67–69. doi: 10.1200/jop.2006.2.2.67.

- 17. The Oncology Electronic Health Record Field Guide: Selecting and Implementing an EHR. Am Soc Clin Oncol Ed Book. 2008.

- 18. Tang Z, Johnson TR, Tindall RD, et al. Applying heuristic evaluation to improve the usability of a telemedicine system. Telemed J E Health. 2006;12:24–34. doi: 10.1089/tmj.2006.12.24.

- 19. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform. 2004;37:56–76. doi: 10.1016/j.jbi.2004.01.003.

- 20. Zhang J, Johnson TR, Patel VL, et al. Using usability heuristics to evaluate patient safety of medical devices. J Biomed Inform. 2003;36:23–30. doi: 10.1016/s1532-0464(03)00060-1.

- 21. Nielsen J. Usability Engineering. San Francisco, CA: Morgan Kaufmann; 1993.

- 22. Nielsen J, Mack RL. Usability Inspection Methods. New York, NY: John Wiley & Sons; 1994. p. 413.

- 23. Holbrook A, Keshavjee K, Troyan S, et al. Applying methodology to electronic medical record selection. Int J Med Inform. 2003;71:43–50. doi: 10.1016/s1386-5056(03)00071-6.

- 24. Krug S. Don't Make Me Think! A Common Sense Approach to Web Usability. Indianapolis, IN: Que; 2000. p. 195.
