Abstract

We formalize and provide tests of a set of logical-rule models for predicting perceptual classification response times (RTs) and choice probabilities. The models are developed by synthesizing mental-architecture, random-walk, and decision-bound approaches. According to the models, people make independent decisions about the locations of stimuli along a set of component dimensions. Those independent decisions are then combined via logical rules to determine the overall categorization response. The time course of the independent decisions is modeled via random-walk processes operating along individual dimensions. Alternative mental architectures are used as mechanisms for combining the independent decisions to implement the logical rules. We derive fundamental qualitative contrasts for distinguishing among the predictions of the rule models and major alternative models of classification RT. We also use the models to predict detailed RT distribution data associated with individual stimuli in tasks of speeded perceptual classification.

A fundamental issue in cognitive science concerns the manner in which people represent categories in memory and the decision processes that they use to determine category membership. In early research in the field, it was assumed that people represent categories in terms of sets of logical rules. Research focused on issues such as the difficulty of learning different rules and on the hypothesis-testing strategies that might underlie rule learning (e.g., Bourne, 1970; Levine, 1975; Neisser & Weene, 1962; Trabasso & Bower, 1968). Owing to the influence of researchers such as Posner and Keele (1968) and Rosch (1973), who suggested that many natural categories have “ill-defined” structures that do not conform to simple rules or definitions, alternative theoretical approaches were developed. Modern theories of perceptual classification, for example, include exemplar models and decision-bound models. According to exemplar models, people represent categories in terms of stored exemplars of categories, and classify objects based on their similarity to these stored exemplars (Hintzman, 1986; Medin & Schaffer, 1978; Nosofsky, 1986). Alternatively, according to decision-bound models, people may use (potentially complex) decision bounds to divide up a perceptual space into different category regions. Classification is determined by the category region into which a stimulus is perceived to fall (Ashby & Townsend, 1986; Maddox & Ashby, 1993).

Although the original types of logical rule-based models no longer dominate the field, the general idea that people may use rules as a basis for classification has certainly not disappeared. Indeed, prominent models that posit rule-based forms of category representation, at least as an important component of a fuller system, continue to be proposed and tested (e.g., Ashby, Alfonso-Reese, Turken, & Waldron, 1998; Erickson & Kruschke, 1998; Feldman, 2000; Goodman, Tenenbaum, Feldman, & Griffiths, 2008; Nosofsky, Palmeri, & McKinley, 1994). Furthermore, such models are highly competitive with exemplar and decision-bound models, at least in certain paradigms.

A major limitation of modern rule-based models of classification, however, is that, to date, they have not been used to predict or explain the time course of classification decision making.1 By contrast, one of the major achievements of modern exemplar and decision-bound models is that they provide detailed quantitative accounts of classification response times (RTs) (e.g., Ashby, 2000; Ashby & Maddox, 1994; Cohen & Nosofsky, 2003; Lamberts, 1998, 2000; Nosofsky & Palmeri, 1997a).

The major purpose of the present research is to begin to fill this gap by formalizing logical-rule models designed to account for the time course of perceptual classification. Of course, we do not claim that the newly developed rule models reflect human performance universally, across all testing conditions and participants. Instead, according to modern views (e.g., Ashby & Maddox, 2005), there are multiple systems and modes of classification, and alternative testing conditions may induce reliance on different classification strategies. Furthermore, even within the same testing conditions, there may be substantial individual differences in which classification strategies are used. Nevertheless, it is often difficult to tell apart the predictions from models that are intended to represent these alternative classification strategies (e.g., Nosofsky & Johansen, 2000). By studying the time course of classification, and requiring the models to predict classification RTs, more power is gained in telling the models apart. Thus, the present effort is important because it provides a valuable tool and a new arena in which rule-based models can be contrasted with alternative models of perceptual category representation and processing.

As will be seen, en route to developing these rule-based models, we combine two major general approaches to the modeling of RT data. One approach has focused on alternative “mental architectures” of information processing (e.g., Kantowitz, 1974; Schweickert, 1992; Sternberg, 1969; Townsend, 1984). This approach asks questions such as whether information from multiple dimensions is processed in serial or parallel fashion, and whether the processing is self-terminating or exhaustive. The second major approach uses “diffusion” or “random-walk” models of RT, in which perceptual information is sampled until a criterial amount of evidence has been obtained to make a decision (e.g., Busemeyer, 1985; Link, 1992; Luce, 1986; Ratcliff, 1978; Ratcliff & Rouder, 1998; Townsend & Ashby, 1983). The present proposed logical-rule models of classification RT will combine the mental-architecture and random-walk approaches within an integrated framework (for examples of such approaches in other domains, see Palmer & McLean, 1995; Ratcliff, 1978; Thornton & Gilden, 2007). Fific, Nosofsky, and Townsend (2008, Appendix A) applied some special cases of the newly proposed models in preliminary fashion to assess some very specific qualitative predictions related to formal theorems of information processing. The present work has the far more ambitious goals of (i) using these architectures as a means of formalizing logical-rule models of classification RT; (ii) deriving an extended set of fundamental qualitative contrasts for distinguishing among the models; (iii) comparing the logical-rule models to major alternative models of classification RT; and (iv) testing the ability of the logical-rule models to provide quantitative accounts of detailed RT distribution data and error rates associated with individual stimuli in tasks of speeded classification.

Conceptual Overview of the Rule-Based Models

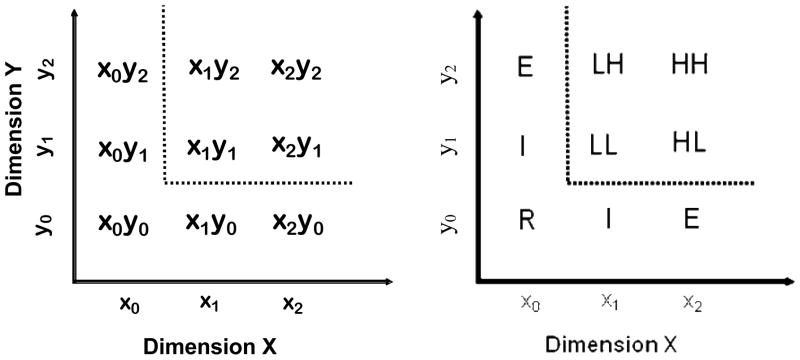

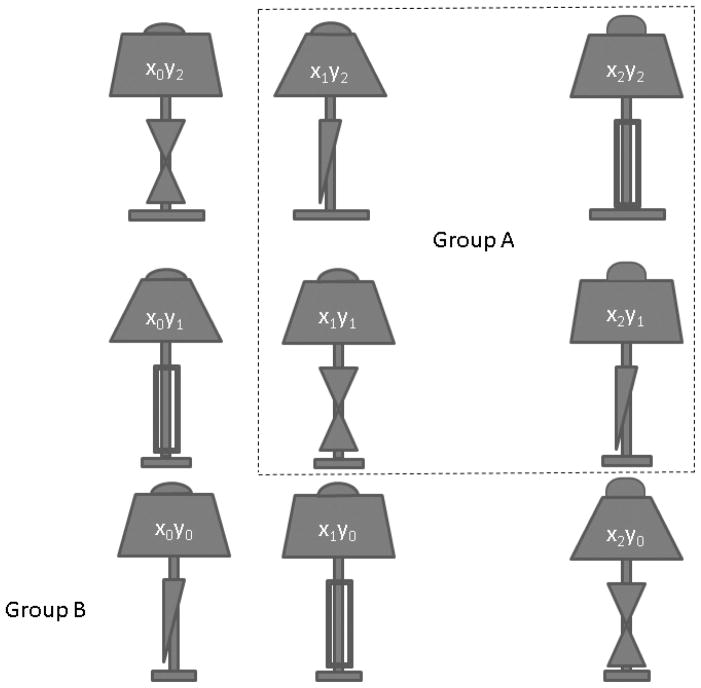

It is convenient to introduce the proposed rule-based models of classification RT by means of the concrete example illustrated in Figure 1 (left panel). This example turns out to be highly diagnostic for distinguishing among numerous prominent models of classification RT and will guide all of our ensuing empirical tests. In the example, the stimuli vary along two continuous dimensions, x and y. In the present case, there are three values per dimension, and the values are combined orthogonally to produce the nine stimuli in the set. The four stimuli in the upper right quadrant of the space belong to the “target” category (A), whereas the remaining stimuli belong to the “contrast” category (B).

Figure 1.

Left Panel: Schematic illustration of the structure of the stimulus set used for introducing and testing the logical-rule models of classification. The stimuli are composed of two dimensions, X and Y, with three values per dimension, combined orthogonally to produce the nine members of the stimulus set. The stimuli in the upper-right quadrant of the space (x1y1, x1y2, x2y1, and x2y2) are the members of the “target” category (Category A), whereas the remaining stimuli are the members of the “contrast” category (Category B). Membership in the target category is described in terms of a conjunctive rule, and membership in the contrast category is described in terms of a disjunctive rule. The dotted boundaries illustrate the decision boundaries for implementing these rules. Right Panel: Shorthand nomenclature for identifying the main stimulus types in the category structure.

Following previous work, by a “rule” we mean that an observer makes independent decisions regarding a stimulus’s value along multiple dimensions and then combines these separate decisions by using logical connectives such as “AND”, “OR”, and “NOT” to reach a final classification response (Ashby & Gott, 1988; Feldman, 2000; Nosofsky, Clark, & Shin, 1989). The Figure 1 category structure provides an example in which the target category can be defined in terms of a conjunctive rule. Specifically, a stimulus is a member of the target category if it has a value greater than or equal to x1 on Dimension x AND a value greater than or equal to y1 on Dimension y. Conversely, the contrast category can be described in terms of a disjunctive rule: A stimulus is a member of the contrast category if it has a value less than x1 on Dimension x OR a value less than y1 on Dimension y. A reasonable idea is that a human classifier may make his or her classification decisions by implementing these logical rules.

Indeed, this type of logical rule-based strategy has been formalized, for example, within the decision-boundary framework (e.g., Ashby & Gott, 1988; Ashby & Townsend, 1986). Within that formalization, one posits that the observer establishes two decision boundaries in the perceptual space, as is illustrated by the dotted lines in Figure 1. The boundaries are orthogonal to the coordinate axes of the space, thereby implementing the logical rules described above. That is, the vertical boundary establishes a fixed criterion along Dimension x and the horizontal boundary establishes a fixed criterion along Dimension y. A stimulus is classified into the target category if it is perceived as exceeding the criterion on Dimension x AND is perceived as exceeding the criterion on Dimension y; otherwise, it is classified into the contrast category. In the language of decision-bound theory, the observer is making independent decisions along each of the dimensions and then combining these separate decisions to determine the final classification response.

Decision-bound theory provides an elegant language for expressing the structure of logical rules (as well as other strategies of classification decision making). In our view, however, to date, researchers have not offered an information-processing account of how such logical rules may be implemented. Therefore, rigorous processing theories of rule-based classification RT remain to be developed. The main past hypothesis stemming from decision-bound theory is known as the “RT-distance hypothesis” (Ashby, Boynton, & Lee, 1994; Ashby & Maddox, 1994). According to this hypothesis, classification RT is a decreasing function of the distance of a stimulus from the nearest decision bound in the space. In our view, this hypothesis seems most applicable in situations in which a single decision bound has been implemented to divide the psychological space into category regions. The situation depicted in Figure 1, however, is supposed to represent a case in which separate, independent decisions are made along each dimension, with these separate decisions then being combined to determine a classification response. Accordingly, a fuller processing model would formalize the mechanisms by which such independent decisions are made and combined. As will be seen, our newly proposed logical-rule models make predictions that differ substantially from past distance-from-boundary accounts.

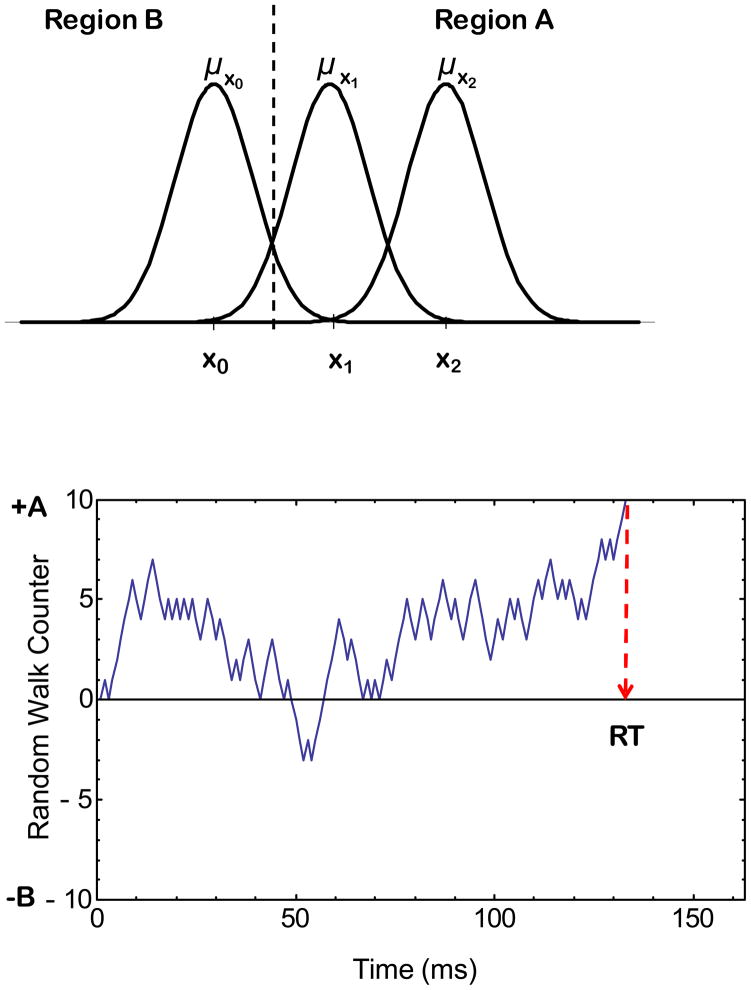

To begin this effort of developing these rule-based processing models, we combine two extremely successful general approaches to the modeling of RT data, namely random-walk and mental-architecture approaches. In the present models, the independent decisions along Dimensions x and y are each presumed to be governed by a separate, independent random-walk process. The nature of the process along Dimension x is illustrated schematically in Figure 2. In accord with decision-bound theory, on each individual dimension, there is a (normal) distribution of perceptual effects associated with each stimulus value (top panel of Figure 2). Furthermore, as illustrated in the figure, the observer establishes a decision boundary to divide the dimension into decision regions. On each step of the process, a perceptual effect is randomly and independently sampled from the distribution associated with the presented stimulus. The sampled perceptual effects drive a random-walk process (bottom panel of Figure 2). In the random walk, there is a counter that is initialized at zero, and the observer establishes criteria representing the amount of evidence that is needed to make an A or B decision. If the sampled perceptual effect falls in Region A, then the random walk takes a unit step in the direction of Criterion A; otherwise, it takes a unit step in the direction of Criterion B. The sampling process continues until either Criterion A or B has been reached. The time to complete each individual-dimension decision process is determined by the number of steps that are required to complete the random walk. Note that, in accord with the RT-distance hypothesis, stimuli with values that lie far from the decision boundary (i.e., x2 in the present example) will tend to result in faster decision times along that dimension.
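The single-dimension process of Figure 2 can be sketched as a short simulation. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `dimension_random_walk`, the use of Python's `random` module, and the illustrative settings in the usage lines (standard deviation 0.5, boundary at 0.5, both criteria at 3 steps) are all hypothetical. Region A is taken to be the side of the boundary with larger values, as it is for Dimension x in Figure 1.

```python
import random

def dimension_random_walk(stim_mean, sigma, bound, crit_a, crit_b, rng):
    """Simulate one individual-dimension decision (a sketch of Figure 2).

    stim_mean, sigma: mean and SD of the normal perceptual-effect distribution
    bound:            decision boundary (assumed: region A lies above it)
    crit_a, crit_b:   magnitudes of the +A and -B criteria, in unit steps
    Returns the decision ('A' or 'B') and the number of steps taken.
    """
    counter, steps = 0, 0                       # counter starts at zero
    while -crit_b < counter < crit_a:
        effect = rng.gauss(stim_mean, sigma)    # independent perceptual sample
        counter += 1 if effect > bound else -1  # unit step toward A or toward B
        steps += 1
    return ("A" if counter >= crit_a else "B"), steps

# Hypothetical usage: x1 (mean 1) lies near the boundary, x2 (mean 2) far from it
rng = random.Random(2024)

def mean_steps(mean, n=2000):
    """Average number of steps over n simulated walks for one stimulus value."""
    return sum(dimension_random_walk(mean, 0.5, 0.5, 3, 3, rng)[1]
               for _ in range(n)) / n

print(mean_steps(1.0), mean_steps(2.0))  # x2 should need fewer steps on average
```

Under these illustrative settings, the expected number of steps is roughly 4.3 for x1 and 3.0 for x2, so the simulated averages should land near those values, in line with the RT-distance intuition described above.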

Figure 2.

Schematic illustration of the perceptual-sampling and random-walk processes that govern the decision process on Dimension X.

The preceding paragraph described how classification decision making takes place along each individual dimension. The overall categorization response, however, is determined by a mental architecture that implements the logical rules by combining the individual-dimension decisions. That is, the observer classifies a stimulus into the target category (A) only if both independent decisions point to region A (such that the conjunctive rule is satisfied). By contrast, the observer classifies a stimulus into the contrast category (B) if either independent decision points to B (such that the disjunctive rule is satisfied).

In the present research, we consider five main candidate architectures for how the independent decisions are combined to implement the logical rules. The candidate architectures are drawn from classic work in other domains such as simple detection and visual/memory search (Sternberg, 1969; Townsend, 1984). To begin, processing of each individual dimension may take place in serial fashion or in parallel fashion. In serial processing, the individual-dimension decisions take place sequentially. A decision is first made along one dimension, say, Dimension x; then, if needed, a second decision is made along Dimension y. By contrast, in parallel processing, the random-walk decision processes operate simultaneously, rather than sequentially, along Dimensions x and y.2 A second fundamental distinction pertains to the stopping rule, which may be either self-terminating or exhaustive. In self-terminating processing, the overall categorization response is made once either of the individual random-walk processes has reached a decision that allows an unambiguous response. For example, in Figure 1, suppose that stimulus x0y2 is presented, and the random-walk decision process on Dimension x reaches the correct decision that the stimulus falls in Region B of Dimension x. Then the disjunctive rule that defines the contrast category (B) is already satisfied, and the observer does not need to receive information concerning the location of the stimulus on Dimension y. By contrast, if an exhaustive stopping rule is used, then the final categorization response is not made until the decision processes have been completed on both dimensions.

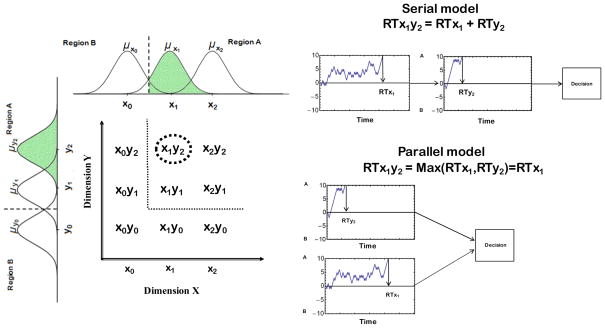

Combining the possibilities described above, there are thus far four main mental architectures that may implement the logical rules -- serial exhaustive, serial self-terminating, parallel exhaustive, and parallel self-terminating. It is straightforward to see that if processing is serial exhaustive, then the total decision time is just the sum of the individual-dimension decision times generated by each individual random walk. Suppose instead that processing is serial self-terminating. Then, if the first processed dimension allows a decision, total decision time is just the time for that first random walk to complete; otherwise, it is the sum of both individual-dimension decision times. In the case of parallel exhaustive processing, the random walks take place simultaneously, but the final categorization decision is not made until the slower of the two random walks has completed. Therefore, total decision time is the maximum of the two individual-dimension decision times generated by each random walk. And in the case of parallel self-terminating processing, total decision time is the minimum of the two individual-dimension decision times (assuming that the first-completed decision allows an unambiguous categorization response to be made). A schematic illustration of the serial-exhaustive and parallel-exhaustive possibilities for stimulus x1y2 from the target category is provided in Figure 3.
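The way each architecture turns two individual-dimension outcomes into an overall response and decision time can be sketched as follows. The function `combine` and its arguments are hypothetical names; times are in arbitrary units (step counts, before scaling). In the full serial self-terminating model the processing order is drawn on each trial, whereas here it is simply passed in as `first`.

```python
def combine(architecture, dec_x, t_x, dec_y, t_y, first="x"):
    """Overall response and decision time for the Figure-1 rules.

    Respond A only if both dimensions point to A (conjunctive rule);
    otherwise respond B (disjunctive rule). dec_x/dec_y are 'A' or 'B',
    and t_x/t_y are the individual-dimension decision times.
    """
    response = "A" if dec_x == "A" and dec_y == "A" else "B"
    if architecture == "serial-exhaustive":
        return response, t_x + t_y                  # sum of the two walks
    if architecture == "parallel-exhaustive":
        return response, max(t_x, t_y)              # wait for the slower walk
    if architecture == "serial-self-terminating":
        d1, t1, t2 = (dec_x, t_x, t_y) if first == "x" else (dec_y, t_y, t_x)
        # A B decision on the first dimension already satisfies the OR rule
        return response, (t1 if d1 == "B" else t1 + t2)
    if architecture == "parallel-self-terminating":
        # The faster walk can end processing only if it reached a B decision
        fast_dec = dec_x if t_x <= t_y else dec_y
        return response, (min(t_x, t_y) if fast_dec == "B" else max(t_x, t_y))
    raise ValueError(f"unknown architecture: {architecture}")

# Figure 3 example, x1y2: slow walk on x (60), fast walk on y (15)
print(combine("serial-exhaustive", "A", 60, "A", 15))        # ('A', 75)
print(combine("parallel-exhaustive", "A", 60, "A", 15))      # ('A', 60)
# x0y2 with an incorrect 'A' decision on x, which is processed first
print(combine("serial-self-terminating", "A", 40, "A", 10))  # ('A', 50)
```

The last line reproduces the error case discussed below for x0y2: because the first decision points to A, processing cannot self-terminate, and the two times add.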

Figure 3.

Schematic illustration of the serial-exhaustive and parallel-exhaustive architectures, using stimulus x1y2 as an example. In the serial example, we assume that Dimension X is processed first. The value x1 lies near the decision boundary on Dimension X, so the random-walk process on that dimension tends to take a long time to complete. The value y2 lies far from the decision boundary on Dimension Y, so the random-walk process on that dimension tends to finish quickly. For the serial-exhaustive architecture, the two random walks operate sequentially, so the total decision time is just the sum of the two individual-dimension random-walk times. For the parallel-exhaustive architecture, the two random walks operate simultaneously, and processing is not completed until decisions have been made on both dimensions, so the total decision time is the maximum (i.e., slower) of the two individual-dimension random-walk times.

Finally, a fifth possibility that we consider in the present work is that a “coactive” mental architecture is used for implementing the logical rules (e.g., Diederich & Colonius, 1991; Miller, 1982; Mordkoff & Yantis, 1993; Townsend & Nozawa, 1995). In coactive processing, the observer does not make separate “macro-level” decisions along each of the individual dimensions. Instead, “micro-level” decisions from each individual dimension are pooled into a common processing channel, and it is this pooled channel that drives the macro-level decision-making process. Specifically, to formalize the coactive rule-based process, we assume that the individual dimensions contribute their inputs to a pooled random-walk process. On each step, if the sampled perceptual effects on Dimensions x and y both fall in the target-category region (A), then the pooled random walk steps in the direction of criterion A. Otherwise, if either sampled perceptual effect falls in the contrast category region (B), then the pooled random walk steps in the direction of criterion B. The process continues until either criterion A or B has been reached.
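A minimal sketch of the pooled (coactive) random walk follows. As before, the function name and all numerical settings are illustrative assumptions, and region A on each dimension is assumed to lie above that dimension's boundary. The only change from the single-dimension walk is the step rule: the pooled counter moves toward A only when both sampled effects fall in region A.

```python
import random

def coactive_random_walk(mean_x, mean_y, sigma_x, sigma_y,
                         bound_x, bound_y, crit_a, crit_b, rng):
    """Simulate one pooled (coactive) random walk; returns (decision, steps)."""
    counter, steps = 0, 0
    while -crit_b < counter < crit_a:
        in_a_x = rng.gauss(mean_x, sigma_x) > bound_x  # micro-decision on x
        in_a_y = rng.gauss(mean_y, sigma_y) > bound_y  # micro-decision on y
        # Pooled channel: toward A only if both samples fall in region A
        counter += 1 if (in_a_x and in_a_y) else -1
        steps += 1
    return ("A" if counter >= crit_a else "B"), steps

# Hypothetical usage with the redundant stimulus x0y0 and the target x2y2
rng = random.Random(7)
print(coactive_random_walk(0.0, 0.0, 0.5, 0.5, 0.5, 0.5, 3, 3, rng))
print(coactive_random_walk(2.0, 2.0, 0.5, 0.5, 0.5, 0.5, 3, 3, rng))
```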

Regarding the terminology used in this article, we should clarify that when we say that an observer is using a “self-terminating” strategy, we mean that processing terminates only when it has the logical option to do so. For example, for the Figure-1 structure, in order for an observer to correctly classify a member of the target category into the target category, logical considerations dictate that processing is always exhaustive (or coactive), because the observer must verify that both independent decisions satisfy the conjunctive rule. Therefore, for the Figure-1 structure, the serial exhaustive and serial self-terminating models make distinctive predictions only for the members of the contrast category (and likewise for the parallel models). All models assume exhaustive processing for correct classification of the target-category members.

Finally, note that for all of the models, error probabilities and RTs are predicted using the same mechanisms as correct RTs.3 For example, suppose that processing is serial self-terminating and that Dimension x is processed first. Suppose further that x0y2 is presented (see Figure 1), but the random walk leads to an incorrect decision that the stimulus falls in the target-category region (A) on Dimension x. Then processing cannot self-terminate, because neither the disjunctive rule that defines Category B nor the conjunctive rule that defines Category A has yet been satisfied. The system therefore needs to wait until the independent decision process on Dimension y has been completed. Thus, in this case, the total (incorrect) decision time for the serial self-terminating model will be the sum of the decision times on Dimensions x and y.

Free Parameters of the Logical-Rule Models

Specific parametric assumptions are needed to implement the logical-rule models described above. For purposes of getting started, we introduce various simplifying assumptions. First, in the to-be-reported experiments, the stimuli will vary along highly separable dimensions (Garner, 1974; Shepard, 1964). Furthermore, preliminary scaling work indicated that adjacent dimension values were roughly equally spaced. Therefore, a reasonable simplifying assumption is that the psychological representation of the stimuli mirrors the 3×3 grid structure illustrated in Figure 1. Specifically, we assume that associated with each stimulus is a bivariate normal distribution of perceptual effects, with the perceptual effects along Dimensions x and y being statistically independent for each stimulus. Furthermore, the means of the distributions are set at 0, 1, and 2, respectively, for stimuli with values of x0, x1, and x2 on Dimension x; and likewise for Dimension y. All stimuli have the same perceptual-effect variability along Dimension x, and likewise for Dimension y. To allow for the possibility of differential attention to the component dimensions, or that one dimension is more discriminable overall than the other, the variance of the distribution of perceptual effects (Figure 2, top panel) is allowed to be a separate free parameter for each dimension, σx² and σy², respectively.
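Under these assumptions, what drives each individual-dimension walk is the probability that a single perceptual sample falls in region A. The sketch below computes that probability with Python's `statistics.NormalDist`; the means 0, 1, and 2 come from the text, whereas the values chosen for σx and Dx (0.5 each) and the helper name `p_region_a` are illustrative assumptions, as is the convention that region A lies above the boundary.

```python
from statistics import NormalDist

def p_region_a(stim_mean, sigma, bound):
    """P(a single perceptual sample falls above the decision bound)."""
    return 1.0 - NormalDist(stim_mean, sigma).cdf(bound)

# Means 0, 1, 2 as in the text; sigma_x and D_x are illustrative values
SIGMA_X, D_X = 0.5, 0.5
for label, mean in (("x0", 0.0), ("x1", 1.0), ("x2", 2.0)):
    print(label, round(p_region_a(mean, SIGMA_X, D_X), 4))
# x0 0.1587, x1 0.8413, x2 0.9987
```

Because this probability depends on (mean − Dx)/σx, a larger σx (or a bound placed closer to a stimulus value) pushes it toward 0.5 and slows that dimension's random walk.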

In addition, to implement the perceptual-sampling process that drives the random walk (Figure 2, top panel), the observer establishes a decision bound along Dimension x, Dx, and a decision bound along Dimension y, Dy. Furthermore, the observer establishes criteria, +A and −B, representing the amount of evidence needed for making an A or a B decision on each dimension (Figure 2, bottom panel). A scaling parameter k is used for transforming the number of steps in each random walk into milliseconds.

Each model assumes that there is a residual base time, not associated with decision-making processes (e.g., reflecting encoding and motor-execution stages). The residual base time is assumed to be log-normally distributed with mean μR and variance σR².
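To sample a log-normal base time, one typically needs the mean and standard deviation of the underlying normal distribution of log times. The sketch below assumes that μR and σR² denote the mean and variance of the base time itself (the text does not say which parameterization is meant) and converts them with the standard log-normal moment identities before calling `random.lognormvariate`. The values 300 ms and 50 ms are hypothetical.

```python
import math
import random

def lognormal_params(mean_r, var_r):
    """Convert the mean and variance of a log-normal variable into the
    mu and sigma of the underlying normal distribution (assumed parameterization)."""
    sigma2 = math.log(1.0 + var_r / mean_r**2)
    mu = math.log(mean_r) - sigma2 / 2.0
    return mu, math.sqrt(sigma2)

# Hypothetical residual base time: mean 300 ms, SD 50 ms
mu, s = lognormal_params(300.0, 50.0**2)
rng = random.Random(0)
base_times = [rng.lognormvariate(mu, s) for _ in range(5)]
print([round(t) for t in base_times])
```

If the model instead treats μR and σR² as the parameters of log time, the conversion step would simply be dropped.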

Finally, the serial self-terminating model requires a free parameter px representing the probability that, on each individual trial, the dimensions are processed in the order x-then-y (rather than y-then-x).

In sum, in the present applications, the logical-rule models use the following nine free parameters: σx², σy², Dx, Dy, +A, −B, k, μR, and σR². The serial self-terminating model additionally uses px, for a total of ten. The adequacy of these simplifying assumptions can be assessed, in part, from the fits of the models to the reported data. Some generalizations of the models are considered in later sections of the article.
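The parameter bookkeeping above can be summarized in a small container. This is a hypothetical sketch: the class name, field names, and example values are illustrative, the −B criterion is stored as a positive magnitude, and the step-to-millisecond conversion is assumed to be a simple multiplication by k.

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class RuleModelParams:
    """Free parameters of a logical-rule model (illustrative field names)."""
    sigma2_x: float   # perceptual-effect variance on Dimension x
    sigma2_y: float   # perceptual-effect variance on Dimension y
    d_x: float        # decision bound on Dimension x
    d_y: float        # decision bound on Dimension y
    crit_a: int       # +A criterion, in unit steps
    crit_b: int       # -B criterion, stored as a positive magnitude
    k: float          # milliseconds per random-walk step
    mu_r: float       # mean of the residual base time
    sigma2_r: float   # variance of the residual base time
    p_x: Optional[float] = None  # P(x processed first); serial self-terminating only

    def n_free(self) -> int:
        """Nine shared parameters, plus p_x when it is used."""
        return 9 + (self.p_x is not None)

    def decision_ms(self, n_steps: int) -> float:
        """Scale a random-walk step count into milliseconds."""
        return self.k * n_steps

    def first_dimension(self, rng: random.Random) -> str:
        """Draw the processing order for one trial of the serial self-terminating model."""
        if self.p_x is None:
            raise ValueError("p_x is only defined for the serial self-terminating model")
        return "x" if rng.random() < self.p_x else "y"

params = RuleModelParams(0.25, 0.25, 0.5, 0.5, 3, 3, 30.0, 300.0, 2500.0)
serial_st = RuleModelParams(0.25, 0.25, 0.5, 0.5, 3, 3, 30.0, 300.0, 2500.0, p_x=0.5)
print(params.n_free(), serial_st.n_free())  # 9 10
print(params.decision_ms(4))                # 120.0
```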

Fundamental Qualitative Predictions

In our ensuing experiments, we test the Figure-1 category structure under a variety of conditions. In all cases, individual subjects participate for multiple sessions, and detailed RT-distribution and error data are collected for each individual stimulus for each individual subject. The primary goal is to test the ability of the alternative logical-rule models to quantitatively fit the detailed RT-distribution and error data and to compare their fits with well-known alternative models of classification RT. As an important complement to the quantitative-fit comparisons, the Figure-1 structure turns out to be highly diagnostic: the alternative models make contrasting qualitative predictions of classification RT for it. Indeed, as will be seen, the complete sets of qualitative predictions serve not only to distinguish among the rule-based models, but also to distinguish the rule models from previous decision-bound and exemplar models of classification RT. By considering the patterns of qualitative predictions made by each of the models, we gain insight into the reasons why one model might yield better quantitative fits than the others. In this section, we describe these fundamental qualitative contrasts. They are derived under the assumption that responding is highly accurate, which holds true under the initial testing conditions established in our experiments.

Target-Category Predictions

First, consider the members of the target category (A). The category has a 2×2 factorial structure, formed by the combination of values x1 and x2 along Dimension x, and y1 and y2 along Dimension y. Values x1 and y1 lie close to their respective decision boundaries, so they tend to be hard to discriminate from the contrast-category values. We will refer to them as the “low-salience” (L) dimension values. The values x2 and y2 lie farther from the decision boundaries, so they are easier to discriminate. We will refer to them as the “high-salience” (H) values. Thus, the target-category stimuli x1y1, x1y2, x2y1, and x2y2 will be referred to as the LL, LH, HL, and HH stimuli, respectively, as depicted in the right panel of Figure 1. This structure forms part of what is known as the double-factorial paradigm in the information-processing literature (Townsend & Nozawa, 1995). The double-factorial paradigm has been used in the context of other cognitive tasks (e.g., detection and visual/memory search) for contrasting the predictions from the alternative mental architectures described above. Here, we take advantage of these contrasts for helping to distinguish among the alternative logical rule-based models of classification RT. In the following, we provide a brief summary of the predictions along with intuitive explanations for them. For rigorous proofs of the assertions (along with a statement of more technical background assumptions), see Townsend and Nozawa (1995).

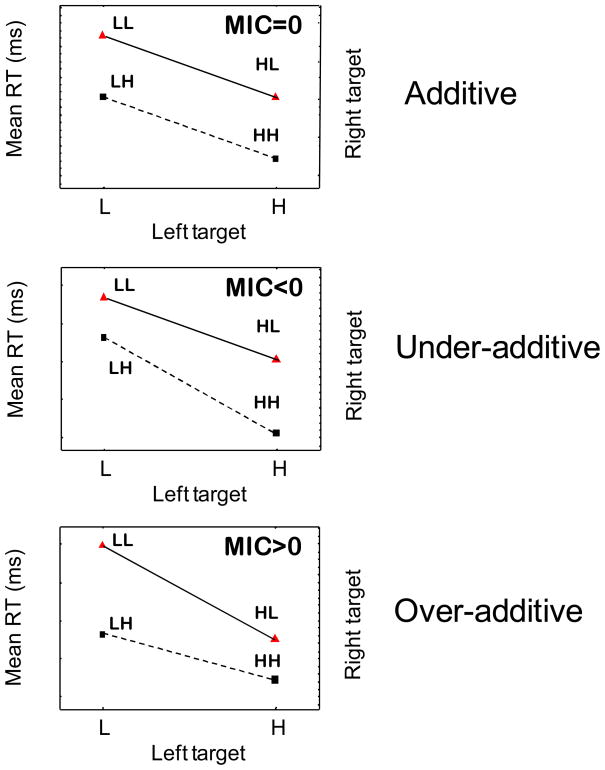

To begin, assuming that the H values are processed more rapidly than are the L values (as is predicted, for example, by the random-walk decision process represented in Figure 2), then there are three main candidate patterns of mean RTs that one might observe. These candidate patterns are illustrated schematically in Figure 4. The patterns have in common that LL has the slowest mean RT, LH and HL intermediate mean RTs, and HH the fastest mean RT. The RT patterns illustrated in the figure can be summarized in terms of an expression known as the mean interaction contrast (MIC):

$$\mathrm{MIC} = [\mathrm{RT}(LL) - \mathrm{RT}(LH)] - [\mathrm{RT}(HL) - \mathrm{RT}(HH)] \qquad (1)$$

where RT(LL) stands for the mean RT associated with the LL stimulus, and so forth. The MIC is simply the difference between two distances: the distance between the leftmost points on the two lines [RT(LL) - RT(LH)] and the distance between the rightmost points on the two lines [RT(HL) - RT(HH)]. It is straightforward to see that the pattern of “additivity” in Figure 4 is reflected by an MIC equal to 0. Likewise, “under-additivity” is reflected by MIC < 0, and “over-additivity” is reflected by MIC > 0.
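Equation 1 and the three patterns of Figure 4 translate directly into code. The mean RTs below are hypothetical numbers chosen only to produce each pattern, not data from the experiments, and the fixed tolerance is a simplification: with real data, deciding whether an MIC differs from 0 calls for a statistical test.

```python
def mic(rt_ll, rt_lh, rt_hl, rt_hh):
    """Mean interaction contrast (Equation 1)."""
    return (rt_ll - rt_lh) - (rt_hl - rt_hh)

def mic_pattern(value, tol=1e-9):
    """Label an MIC value with the Figure 4 pattern it corresponds to."""
    if abs(value) <= tol:
        return "additive"
    return "over-additive" if value > 0 else "under-additive"

# Hypothetical mean RTs in ms
print(mic_pattern(mic(800, 700, 700, 600)))  # additive
print(mic_pattern(mic(760, 720, 720, 600)))  # under-additive
print(mic_pattern(mic(900, 700, 700, 600)))  # over-additive
```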

Figure 4.

Schematic illustration of the three main candidate patterns of mean RTs for the members of the target category, assuming that the “high-salience” values on each dimension are processed more quickly than are the “low-salience” values. The patterns are summarized in terms of the mean-interaction-contrast, MIC = [RT(LL)-RT(LH)] − [RT(HL)-RT(HH)]. When MIC=0 (top panel), the mean RTs are “additive”; when MIC<0 (middle panel), the mean RTs are “under-additive”; and when MIC>0 (bottom panel), the mean RTs are “over-additive”.

The serial rule models predict MIC=0, that is, an additive pattern of mean RTs. Recall that, for the Figure-1 structure, correct classification of the target-category items requires exhaustive processing, so the serial self-terminating and serial-exhaustive models make the same predictions for the target-category items. In general, LH trials will show some slowing relative to HH trials due to slower processing of the x dimension. Likewise, HL trials will show some slowing relative to HH trials due to slower processing of the y dimension. If processing is serial exhaustive, then the increase in mean RTs for LL trials relative to HH trials will simply be the sum of the two individual sources of slowing, resulting in the pattern of additivity that is illustrated in Figure 4.

The parallel models predict MIC<0, that is, an under-additive pattern of mean RTs. (Again, correct processing is always exhaustive for the target-category items, so in the present case there is no need to distinguish between the parallel-exhaustive and parallel self-terminating models.) If processing is parallel-exhaustive, then processing of both dimensions takes place simultaneously; however, the final classification decision is not made until decisions have been made on both dimensions. Thus, RT will be determined by the slower (i.e., maximum time) of the two individual-dimension decisions. Clearly, LH and HL trials will lead to slower mean RTs than will HH trials, because one of the individual-dimension decisions will be slowed. LL trials will lead to the slowest mean RTs of all, because the more opportunities for an individual decision to be slow, the slower on average will be the final response. The intuition, however, is that the individual decisions along each dimension begin to “run out of room” for further slowing. That is, although the RT distributions are unbounded, once one individual-dimension decision has been slowed, the probability of sampling a still slower decision on the other dimension diminishes. Thus, one observes the under-additive increase in mean RTs in the parallel-exhaustive case.
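The serial and parallel predictions can be checked numerically for the random-walk version of the models without simulation. The sketch below computes each individual-dimension walk's first-passage distribution by dynamic programming, truncated at `t_max` steps. It then forms mean decision times for the target-category items as the sum of the two walks (serial exhaustive) or their maximum (parallel exhaustive, using P(max ≤ t) = Fx(t)·Fy(t) for independent walks). All parameter values and helper names are illustrative assumptions. Means are taken over all trials, including the rare errors. The scaling k and the residual time are omitted because a constant residual cancels in the MIC and k > 0 only rescales it.

```python
from statistics import NormalDist

def p_region_a(stim_mean, sigma, bound):
    """P(a perceptual sample falls above the bound, i.e., in region A)."""
    return 1.0 - NormalDist(stim_mean, sigma).cdf(bound)

def first_passage_cdf(p, crit_a, crit_b, t_max=500):
    """F[t] = P(walk from 0 has reached +crit_a or -crit_b by step t), t = 0..t_max."""
    n = crit_a + crit_b            # index 0 is -crit_b, index n is +crit_a
    mass = [0.0] * (n + 1)
    mass[crit_b] = 1.0             # counter starts at zero
    cdf, absorbed = [0.0], 0.0
    for _ in range(t_max):
        new = [0.0] * (n + 1)
        for i in range(1, n):      # only interior states still move
            new[i + 1] += p * mass[i]
            new[i - 1] += (1.0 - p) * mass[i]
        absorbed += new[0] + new[n]
        new[0] = new[n] = 0.0      # absorbed mass leaves the walk
        mass = new
        cdf.append(absorbed)
    return cdf

def expected(cdf_values):
    """E[T] = sum over t >= 0 of P(T > t) for an integer-valued T >= 0."""
    return sum(1.0 - f for f in cdf_values)

def mic(rt):
    return (rt["LL"] - rt["LH"]) - (rt["HL"] - rt["HH"])

SIGMA, BOUND, CRIT = 0.5, 0.5, 3   # illustrative, equal on both dimensions
cdf = {lvl: first_passage_cdf(p_region_a(m, SIGMA, BOUND), CRIT, CRIT)
       for lvl, m in (("L", 1.0), ("H", 2.0))}

serial, parallel = {}, {}
for stim in ("LL", "LH", "HL", "HH"):
    fx, fy = cdf[stim[0]], cdf[stim[1]]                        # x level, y level
    serial[stim] = expected(fx) + expected(fy)                 # sum of the times
    parallel[stim] = expected([u * v for u, v in zip(fx, fy)]) # max of the times

print(round(mic(serial), 6), round(mic(parallel), 3))  # ~0 and a negative value
```

When both dimensions share the same parameters, as here, the parallel-exhaustive MIC works out to minus the sum over t of (F_H(t) − F_L(t))², which makes the "running out of room" intuition concrete: it cannot be positive.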

Finally, Townsend and Nozawa (1995) have provided a proof that the coactive architecture predicts MIC>0, i.e., the over-additive pattern of mean RTs shown in Figure 4. Fific et al. (2008, Appendix A) corroborated this assertion by means of computer simulation of the coactive model. Furthermore, their computer simulations showed that the just-summarized MIC predictions for all of these models hold when error rates are low (and, for some of the models, even in the case of moderate error rates).
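For the pooled random walk specifically, the over-additive prediction can be illustrated with a closed-form calculation rather than simulation. If the perceptual samples on the two dimensions are independent, the pooled walk steps toward A with probability pX·pY. The expected number of steps to reach +A or −B then follows from the classic gambler's-ruin duration formula. Parameter values and helper names are illustrative assumptions, as in the earlier sketches.

```python
from statistics import NormalDist

def p_region_a(stim_mean, sigma, bound):
    """P(a perceptual sample falls above the bound, i.e., in region A)."""
    return 1.0 - NormalDist(stim_mean, sigma).cdf(bound)

def expected_steps(p, crit_a, crit_b):
    """Expected number of unit steps for a walk starting at 0 to reach
    +crit_a or -crit_b (classic gambler's-ruin duration formula)."""
    q = 1.0 - p
    n, i = crit_a + crit_b, crit_b   # shift: lower barrier at 0, start at crit_b
    if abs(p - q) < 1e-12:
        return float(i * (n - i))    # symmetric walk
    r = q / p
    return i / (q - p) - (n / (q - p)) * (1 - r**i) / (1 - r**n)

SIGMA, BOUND, CRIT = 0.5, 0.5, 3     # illustrative, equal on both dimensions
p = {"L": p_region_a(1.0, SIGMA, BOUND), "H": p_region_a(2.0, SIGMA, BOUND)}

# Coactive pooling: step toward A only if BOTH samples fall in region A
coactive = {s: expected_steps(p[s[0]] * p[s[1]], CRIT, CRIT)
            for s in ("LL", "LH", "HL", "HH")}
mic = (coactive["LL"] - coactive["LH"]) - (coactive["HL"] - coactive["HH"])
print(round(mic, 2))  # positive (about 0.59 with these values)
```

These illustrative values are not arbitrary in one respect. With σ = 1 and the same boundary, pX·pY for the LL stimulus would fall below 0.5, so the pooled walk would drift toward B and mostly misclassify LL. A parameter search for the coactive model therefore has to respect that constraint.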

A schematic summary of the target-category mean RT predictions made by each of the logical-rule architectures is provided in the left panels of Figure 5. We should note that the alternative rule models make distinct qualitative predictions of patterns of target-category RTs considered at the distributional level as well (see Fific et al., 2008, for a recent extensive review). However, for purposes of getting started, we restrict initial consideration to comparisons at the level of RT means.

Figure 5.

Summary predictions of mean RTs from the alternative logical-rule models of classification. The left panels show the pattern of predictions for the target-category members, and the right panels show the pattern of predictions for the contrast-category members. Left panels: L = low-salience dimension value, H = high-salience dimension value, D1 = Dimension 1, D2 = Dimension 2. Right panels: R = redundant stimulus, I = interior stimulus, E = exterior stimulus.

Contrast-Category Predictions

The target-category contrasts summarized above are well known in the information-processing literature. It turns out, however, that the various rule-based models also make contrasting qualitative predictions for the contrast-category, and these contrasts have not been considered in previous work. Although the contrast-category predictions are not completely parameter free, they hold over a wide range of the plausible parameter space for the models. Furthermore, parameter settings that allow some of the models to “undo” some of the predictions then result in other extremely strong constraints for telling the models apart. We used computer simulation to corroborate the reasoning that underlies the key predictions listed below. To help keep track of the ensuing list of predictions, they are summarized in canonical form in the right panels of Figure 5. The reader is encouraged to consult Figures 1 and 5 as the ensuing predictions are explained.

For ease of discussion (see Figure 1, left and right panels), in the following we will sometimes refer to stimuli x0y1 and x0y2 as the left-column members of the contrast category; to stimuli x1y0 and x2y0 as the bottom-row members of the contrast category; and to stimulus x0y0 as the redundant (R) stimulus. Also, we will sometimes refer to stimuli x0y1 and x1y0 as the interior (I) members of the contrast category, and to stimuli x0y2 and x2y0 as the exterior (E) members. In our initial experiment, subjects were provided with instructions to use a fixed-order serial self-terminating strategy as a basis for classification. For example, they may be instructed to always process the dimensions in the order x-then-y. In this case, we will refer to Dimension x as the first-processed dimension and to Dimension y as the second-processed dimension. Of course, for the parallel and coactive models, the dimensions are assumed to be processed simultaneously. Thus, this nomenclature refers to the instructions, not to the processing characteristics assumed by the models.

As will be seen, in Experiment 1, RTs were much faster for contrast-category stimuli that satisfied the disjunctive rule on the first-processed dimension as opposed to the second-processed dimension. To allow each of the logical-rule models to be at least somewhat sensitive to the instructional manipulation, in deriving the following predictions, we assume that the processing rate on the first-processed dimension is faster than on the second-processed dimension. This assumption was implemented in the computer simulations by setting the perceptual noise parameter in the individual-dimension random walks (Figure 2) at a lower value on the first-processed dimension than on the second-processed dimension.

Fixed-Order Serial Self-Terminating Model

Suppose that an observer makes individual-dimension decisions for the contrast category in serial self-terminating fashion. To begin, imagine that the observer uses a fixed order of processing, always processing Dimension x first and then, if needed, processing Dimension y. Note that presentations of the left-column stimuli (x0y1 and x0y2) will generally lead to a correct classification response after only the first dimension (x) has been processed. The reason is that the value x0 satisfies the disjunctive OR rule, so processing can terminate after the initial decision. (Note as well that this fixed-order serial self-terminating model predicts that the redundant stimulus, x0y0, which satisfies the disjunctive rule on both of its dimensions, should have virtually the same distribution of RTs as do stimuli x0y1 and x0y2.) By contrast, presentations of the bottom-row stimuli (x1y0 and x2y0) will require the observer to process both dimensions in order to make a classification decision. That is, after processing only Dimension x, there is insufficient information to determine whether the stimulus is a member of the target category or the contrast category (i.e., both include members with values greater than or equal to x1 on Dimension x). Because the observer first processes Dimension x and then processes Dimension y, the general prediction is that classification responses to the bottom-row stimuli will be slower than to the left-column stimuli. More interesting, however, is the prediction from the serial model that RT for the exterior stimulus x2y0 will be somewhat faster than for the interior stimulus x1y0. Upon first checking Dimension x, it is easier to determine that x2 does not fall to the left of the decision criterion than it is to determine the same for x1. Thus, in the first stage of processing, x2y0 has an advantage compared to x1y0. In the second stage of processing, the time to determine that these stimuli have value y0 on Dimension y (and so are members of the contrast category) is the same for x1y0 and x2y0. Because the total decision time in this case is just the sum of the individual-dimension decision times, the serial self-terminating rule model therefore predicts faster RTs for the exterior stimulus x2y0 than for the interior stimulus x1y0.
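The fixed-order predictions can be reproduced with a small calculation. The sketch below assumes, for illustration only, that the stimulus values on each dimension are 0, 1, and 2; that each decision criterion sits at 0.5 (midway between the redundant and interior values); that percepts are normally distributed, with less noise on the first-processed Dimension x; and that each individual-dimension random walk has criteria at +3 and −3. Mean walk durations come from the standard gambler’s-ruin formula, errors are not treated separately (the mean is taken over all walks), and times are in random-walk steps rather than ms.

```python
from statistics import NormalDist


def expected_steps(p_up: float, a: int, b: int) -> float:
    """Mean duration of a +1/-1 random walk that starts at 0, steps up with
    probability p_up, and stops at +a or -b (classic gambler's-ruin result)."""
    q = 1.0 - p_up
    if abs(p_up - q) < 1e-12:
        return float(a * b)
    r = q / p_up
    prob_up = (1 - r**b) / (1 - r**(a + b))  # probability of absorbing at +a
    return (b - (a + b) * prob_up) / (q - p_up)


def decision_steps(value: float, sigma: float, criterion: float = 0.5,
                   a: int = 3, b: int = 3) -> float:
    """Mean steps for one individual-dimension walk. Each step moves toward the
    'value 0' criterion when the sampled percept falls below `criterion`."""
    p_zero = NormalDist(mu=value, sigma=sigma).cdf(criterion)
    return expected_steps(p_zero, a, b)


def fixed_order_serial_st(x: int, y: int, sx: float, sy: float) -> float:
    """Mean steps for a contrast-category item when Dimension x is always first."""
    tx = decision_steps(x, sx)
    if x == 0:                         # x0 satisfies the OR rule: stop now
        return tx
    return tx + decision_steps(y, sy)  # otherwise Dimension y must be checked


SX, SY = 0.4, 0.6  # hypothetical: less noise on the first-processed dimension
for x, y in [(0, 0), (0, 1), (0, 2), (1, 0), (2, 0)]:
    print(f"x{x}y{y}: {fixed_order_serial_st(x, y, SX, SY):.2f} steps")
```

With these settings the three left-column items share one predicted time, the bottom-row items are slower, and x1y0 comes out slower than x2y0.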

In sum (see Figure 5, top-right panel), the fixed-order serial self-terminating model predicts virtually identical fast RTs for the exterior, interior, and redundant stimuli on the first-processed dimension; slower RTs for the interior and exterior stimuli on the second-processed dimension; and, for that second-processed dimension, that the interior stimulus will be somewhat slower than the exterior one.

Mixed-Order Serial Self-Terminating Model

A more general version of the serial self-terminating model assumes that instead of a fixed order of processing the dimensions, there is a mixed probabilistic order of processing. Using the reasoning above, it is straightforward to verify that (except for unusual parameter settings) this model predicts an RT advantage for the redundant stimulus (x0y0) compared to all other members of the contrast category; and that both exterior stimuli should have an RT advantage compared to their neighboring interior stimuli. Also, to the extent that one dimension tends to be processed before the other, stimuli on the first-processed dimension will have faster RTs than stimuli on the second-processed dimension. (See Figure 5.)
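The mixed-order model is a probability mixture of the two fixed orders. The sketch below keeps the same illustrative assumptions as the fixed-order calculation (values 0, 1, 2; criteria at 0.5; hypothetical noise values; walk criteria at ±3) and adds a hypothetical parameter, p_x_first, giving the probability that Dimension x is processed first.

```python
from statistics import NormalDist


def expected_steps(p_up: float, a: int, b: int) -> float:
    """Gambler's-ruin mean duration: +1/-1 walk from 0, absorbing at +a or -b."""
    q = 1.0 - p_up
    if abs(p_up - q) < 1e-12:
        return float(a * b)
    r = q / p_up
    prob_up = (1 - r**b) / (1 - r**(a + b))
    return (b - (a + b) * prob_up) / (q - p_up)


def decision_steps(value, sigma, criterion=0.5, a=3, b=3):
    """Mean steps for one dimension; steps go toward 'value 0' when the percept is below criterion."""
    return expected_steps(NormalDist(value, sigma).cdf(criterion), a, b)


def mixed_order_serial_st(x, y, sx, sy, p_x_first):
    """Mean steps for a contrast-category item under a probabilistic processing order."""
    tx, ty = decision_steps(x, sx), decision_steps(y, sy)
    x_first = tx if x == 0 else tx + ty  # can stop after x only if the value is x0
    y_first = ty if y == 0 else ty + tx  # can stop after y only if the value is y0
    return p_x_first * x_first + (1 - p_x_first) * y_first


# Hypothetical settings: x is usually processed first and is less noisy.
SX, SY, P_X_FIRST = 0.4, 0.6, 0.7
rt = {(x, y): mixed_order_serial_st(x, y, SX, SY, P_X_FIRST)
      for x, y in [(0, 0), (0, 1), (0, 2), (1, 0), (2, 0)]}
for (x, y), steps in rt.items():
    print(f"x{x}y{y}: {steps:.2f} steps")
```

Under these settings the redundant item is fastest, each exterior item beats its interior neighbor, and the left-column items (x usually first) are faster than the bottom-row items.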

Parallel Self-Terminating Model

The parallel self-terminating rule-based model yields a markedly different set of qualitative predictions than does the serial self-terminating model. According to the parallel self-terminating model, decisions along dimensions x and y are made simultaneously, and processing terminates as soon as a decision is made that a stimulus has either value x0 or y0. Thus, total decision time is the minimum of those individual-dimension processing times that lead to contrast-category decisions. The time to determine that x0y1 has value x0 is the same as the time to determine that x0y2 has value x0 (and analogously for x1y0 and x2y0). Thus, the parallel self-terminating rule model predicts identical RTs for the interior and exterior members of the contrast category, in marked contrast to the serial self-terminating model. Like the mixed-order serial model, it predicts an RT advantage for the redundant stimulus x0y0, because the more opportunities to self-terminate, the faster the minimum RT tends to be. Also, as long as the rate of processing on each dimension is allowed to vary as described above, it naturally predicts faster RTs for stimuli that satisfy the disjunctive rule on the “first-processed” dimension rather than on the “second-processed” one.
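Because the parallel self-terminating model depends on the minimum of two finishing times, its mean RTs cannot be obtained by adding single-walk means, so the sketch below simulates the two individual-dimension random walks directly. The stimulus coordinates, criterion placement, noise values, and walk criteria are the same illustrative assumptions used in the serial calculations; the seed and number of trials are arbitrary.

```python
import random
from statistics import NormalDist, fmean


def p_contrast(value, sigma, criterion=0.5):
    """Probability that a percept falls on the 'value 0' side of the criterion."""
    return NormalDist(mu=value, sigma=sigma).cdf(criterion)


def walk(rng, p, a=3, b=3):
    """One individual-dimension walk: (steps taken, True if it decided 'value 0')."""
    pos = steps = 0
    while -b < pos < a:
        pos += 1 if rng.random() < p else -1
        steps += 1
    return steps, pos >= a


def parallel_st_rt(rng, px, py):
    """Stop at the first channel that decides 'value 0' (OR rule satisfied);
    if neither does, the target response must wait for both channels."""
    tx, x_zero = walk(rng, px)
    ty, y_zero = walk(rng, py)
    stops = [t for t, zero in ((tx, x_zero), (ty, y_zero)) if zero]
    return min(stops) if stops else max(tx, ty)


def mean_rt(x, y, sx=0.4, sy=0.6, n=20_000, seed=1):
    rng = random.Random(seed)
    px, py = p_contrast(x, sx), p_contrast(y, sy)
    return fmean(parallel_st_rt(rng, px, py) for _ in range(n))


rt = {(x, y): mean_rt(x, y) for x, y in [(0, 0), (0, 1), (0, 2), (1, 0), (2, 0)]}
for (x, y), steps in rt.items():
    print(f"x{x}y{y}: {steps:.2f} steps")
```

In this simulation the interior and exterior items on each arm come out essentially tied, whereas the redundant item x0y0 is faster.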

Serial Exhaustive Model

For the same reason as in the serial self-terminating model, the serial exhaustive model (Figure 5, Row 4) predicts longer RTs for the interior stimuli on each dimension than for the exterior stimuli. Interestingly, and in contrast to the previous models, it also predicts a longer RT for the redundant stimulus than for the exterior stimuli. The reasoning is as follows. Because processing is exhaustive, both the exterior stimuli and the redundant stimulus require that individual-dimension decisions be completed on both dimensions x and y. The total RT is just the sum of those individual-dimension RTs. Consider, for example, the redundant stimulus (x0y0) and the bottom-row exterior stimulus (x2y0). Both stimuli are the same distance from the decision bound on Dimension y, so the independent decision on Dimension y takes the same amount of time for these two stimuli. However, assuming a reasonable placement of the decision bound on Dimension x (i.e., one that allows for above-chance performance on all stimuli), the exterior stimulus is farther than is the redundant stimulus from the x boundary. Thus, the independent decision on Dimension x is faster for the exterior stimulus than for the redundant stimulus. Accordingly, the predicted total RT for the exterior stimulus is faster than for the redundant one. Analogous reasoning leads to the prediction that the left-column exterior stimulus (x0y2) will also have a faster RT than the redundant stimulus. The predicted RT of the redundant stimulus compared to the interior stimuli depends on the precise placement of the decision bounds on each dimension. The canonical predictions shown in the figure are for the case in which the decision bounds are set midway between the means of the redundant and interior stimuli on each dimension.
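Under the serial exhaustive rule the mean decision time is simply the sum of the two individual-dimension means, so the gambler’s-ruin calculation suffices. The sketch below uses the same illustrative coordinates, criteria, noise values, and walk criteria as the earlier calculations. Because both criteria are placed midway between the redundant and interior values, the redundant and interior items tie in this sketch.

```python
from statistics import NormalDist


def expected_steps(p_up: float, a: int, b: int) -> float:
    """Gambler's-ruin mean duration: +1/-1 walk from 0, absorbing at +a or -b."""
    q = 1.0 - p_up
    if abs(p_up - q) < 1e-12:
        return float(a * b)
    r = q / p_up
    prob_up = (1 - r**b) / (1 - r**(a + b))
    return (b - (a + b) * prob_up) / (q - p_up)


def decision_steps(value, sigma, criterion=0.5, a=3, b=3):
    """Mean steps for one dimension; steps go toward 'value 0' when the percept is below criterion."""
    return expected_steps(NormalDist(value, sigma).cdf(criterion), a, b)


def serial_exhaustive(x, y, sx, sy):
    """Both dimensions are always processed, one after the other."""
    return decision_steps(x, sx) + decision_steps(y, sy)


SX, SY = 0.4, 0.6  # hypothetical noise values
rt = {(x, y): serial_exhaustive(x, y, SX, SY)
      for x, y in [(0, 0), (0, 1), (0, 2), (1, 0), (2, 0)]}
for (x, y), steps in rt.items():
    print(f"x{x}y{y}: {steps:.2f} steps")
```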

Parallel-Exhaustive Model

The parallel-exhaustive model (Figure 5, Row 5) requires that both dimensions be processed, and the total decision time is the slower (maximum) of the two individual-dimension decision times. For the interior stimuli and the redundant stimulus, both individual-dimension decisions tend to be slow (because all of these stimuli lie near both the x and y decision bounds). However, for the exterior stimuli, only one individual-dimension decision tends to be slow (because the exterior stimuli lie far from one decision bound, and close to the other). Thus, the interior stimuli and redundant stimulus should tend to have roughly equal RTs that are longer than those for the exterior stimuli. Again, the precise prediction for the redundant stimulus compared to the interior stimuli depends on the precise placement of the decision bounds on each dimension. The canonical predictions in Figure 5 are for the case in which the decision bounds are set midway between the means of the redundant and interior stimuli.
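The parallel-exhaustive rule takes the maximum of the two finishing times, so again the two walks are simulated. The assumptions (coordinates 0, 1, 2; criteria at 0.5; hypothetical noise values; walk criteria at ±3; arbitrary seed and trial count) are the same illustrative ones used in the parallel self-terminating simulation.

```python
import random
from statistics import NormalDist, fmean


def p_contrast(value, sigma, criterion=0.5):
    """Probability that a percept falls on the 'value 0' side of the criterion."""
    return NormalDist(mu=value, sigma=sigma).cdf(criterion)


def walk_steps(rng, p, a=3, b=3):
    """Number of steps one individual-dimension walk takes to reach +a or -b."""
    pos = steps = 0
    while -b < pos < a:
        pos += 1 if rng.random() < p else -1
        steps += 1
    return steps


def mean_rt(x, y, sx=0.4, sy=0.6, n=20_000, seed=1):
    """Mean of max(Tx, Ty): the response waits for both dimensional decisions."""
    rng = random.Random(seed)
    px, py = p_contrast(x, sx), p_contrast(y, sy)
    return fmean(max(walk_steps(rng, px), walk_steps(rng, py)) for _ in range(n))


rt = {(x, y): mean_rt(x, y) for x, y in [(0, 0), (0, 1), (0, 2), (1, 0), (2, 0)]}
for (x, y), steps in rt.items():
    print(f"x{x}y{y}: {steps:.2f} steps")
```

Here the exterior items are fastest, while the interior and redundant items come out roughly equal.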

Coactive Rule-Based Model

Just as is the case for the target category, the coactive model (Figure 5, Row 6) yields different predictions than do all of the other rule-based models for the contrast category. Specifically, it predicts faster RTs for the interior members of the contrast category (x1y0 and x0y1) than for the exterior members (x2y0 and x0y2). The coactive model also predicts that the redundant stimulus will have the very fastest RTs. The intuitive reason for these predictions is that the closer a stimulus gets to the lower-left corner of the contrast category, the higher is the probability that at least one of its sampled percepts will fall in the contrast-category region. Thus, the rate at which the pooled random walk marches towards the contrast-category criterion tends to increase. The same intuition can explain why contrast-category members that satisfy the disjunctive rule on the “first-processed” dimension are classified faster than those on the “second-processed” dimension (i.e., by assuming reduced perceptual variability along the first-processed dimension).
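For this category structure the coactive model can be sketched as a single pooled walk: on every step a percept is sampled on both dimensions, and the counter moves toward the contrast-category criterion if either percept falls on its “value 0” side of the criterion. With independent percepts, that step probability is 1 − (1 − p_x)(1 − p_y). Treating the coactive model this way is our simplifying assumption, motivated by its equivalence with the orthogonal-boundary RW-DFB model discussed below; the coordinates, criteria, and noise values are the same illustrative ones used earlier.

```python
from statistics import NormalDist


def expected_steps(p_up: float, a: int, b: int) -> float:
    """Gambler's-ruin mean duration: +1/-1 walk from 0, absorbing at +a or -b."""
    q = 1.0 - p_up
    if abs(p_up - q) < 1e-12:
        return float(a * b)
    r = q / p_up
    prob_up = (1 - r**b) / (1 - r**(a + b))
    return (b - (a + b) * prob_up) / (q - p_up)


def coactive_mean_steps(x, y, sx=0.4, sy=0.6, cx=0.5, cy=0.5, a=3, b=3):
    """Pooled walk: each step moves toward the contrast criterion if EITHER
    independently sampled percept falls on its 'value 0' side."""
    px = NormalDist(x, sx).cdf(cx)
    py = NormalDist(y, sy).cdf(cy)
    p_step_contrast = 1 - (1 - px) * (1 - py)
    return expected_steps(p_step_contrast, a, b)


rt = {(x, y): coactive_mean_steps(x, y)
      for x, y in [(0, 0), (0, 1), (0, 2), (1, 0), (2, 0)]}
for (x, y), steps in rt.items():
    print(f"x{x}y{y}: {steps:.2f} steps")
```

Unlike the serial sketches, this one makes interior items faster than exterior items, with the redundant item fastest of all.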

Comparison Models and Relations Among Models

As a source of comparison for the proposed logical rule-based models, we consider some of the major extant alternative models of classification RT.

RT-Distance Model of Decision-Boundary Theory

Recall that in past applications, decision-bound theory has been used to predict classification RTs by assuming that RT is a decreasing function of the distance of a percept from a multidimensional decision boundary. To provide a process interpretation for this hypothesis, and to improve comparability among alternative models, Nosofsky and Stanton (2005) proposed a random-walk version of decision-bound theory that implements the RT-distance hypothesis (see also Ashby, 2000). We will refer to the model as the RW-DFB (random-walk, distance-from-boundary) model. The RW-DFB model is briefly described here for the case of stimuli varying along two dimensions. The observer is assumed to establish (two-dimensional) decision boundaries for partitioning perceptual space into decision regions. On each step of a random-walk process, a percept is sampled from a bivariate normal distribution associated with the presented stimulus. If the percept falls in region A of the two-dimensional space, then the random-walk counter steps in the direction of criterion A, otherwise it steps in the direction of criterion B. The sampling process continues until either criterion is reached. In general, the farther a stimulus is from the decision boundaries, the faster are the RTs that are predicted by the model. (Note that, in our serial and parallel logical-rule models, this RW-DFB process is assumed to operate at the level of individual dimensions, but not at the level of multiple dimensions.)
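A minimal Monte Carlo sketch of the RW-DFB process follows. The region test is passed in as a function, so any two-dimensional boundary could be plugged in; the example uses orthogonal bounds at 0.5 on each dimension, with hypothetical stimulus coordinates (0, 1, 2), independent normal perceptual noise (a zero-correlation bivariate normal), and criteria at ±3. It illustrates the RT-distance relation: a target item far from both bounds (x2y2) finishes in fewer steps than one close to them (x1y1).

```python
import random
from statistics import fmean


def rw_dfb_trial(rng, stimulus, sigmas, in_region_a, crit_a=3, crit_b=3):
    """One RW-DFB trial. Each step samples a percept from independent normals
    centered on the stimulus and moves the counter toward criterion A if the
    percept lies in region A, otherwise toward criterion B."""
    counter = steps = 0
    while -crit_b < counter < crit_a:
        percept = [rng.gauss(mu, sd) for mu, sd in zip(stimulus, sigmas)]
        counter += 1 if in_region_a(percept) else -1
        steps += 1
    return steps, counter >= crit_a


def orthogonal_bounds(percept, cx=0.5, cy=0.5):
    """Hypothetical Figure-1-style bounds: region A (target) is x > cx and y > cy."""
    return percept[0] > cx and percept[1] > cy


def mean_steps(stimulus, sigmas=(0.4, 0.6), n=20_000, seed=2):
    rng = random.Random(seed)
    return fmean(rw_dfb_trial(rng, stimulus, sigmas, orthogonal_bounds)[0]
                 for _ in range(n))


print(f"x2y2: {mean_steps((2, 2)):.2f} steps, x1y1: {mean_steps((1, 1)):.2f} steps")
```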

A wide variety of two-dimensional decision boundaries could be posited for the Figure-1 category structure, including models that assume general linear boundaries, quadratic boundaries, and likelihood-based boundaries (see, e.g., Maddox & Ashby, 1993). Because the stimuli in our experiments are composed of highly separable dimensions, however, a reasonable representative from this wide class of models assumes simply that the observer uses the orthogonal decision boundaries that are depicted in Figure 1. (We consider alternative types of multidimensional boundaries in our General Discussion. Crucially, we will be able to argue that our conclusions hold over a very broad class of random-walk, distance-from-boundary models.)

Given the assumption of the use of these orthogonal decision boundaries, as well as our previous parametric assumptions involving statistical independence of the stimulus representations, it turns out that, for the present category structure, the coactive rule-based model is formally identical to this previously proposed multidimensional RW-DFB model.4 Therefore, the coactive model will serve as our representative of using the multidimensional RT-distance hypothesis as a basis for predicting RTs. Thus, importantly, comparisons of the various alternative serial and parallel rule models to the coactive version can provide an indication of the utility of adding “mental-architecture” assumptions to decision-boundary theory.

EBRW Model

A second comparison model is the exemplar-based random walk (EBRW) model (Nosofsky & Palmeri, 1997a,b; Nosofsky & Stanton, 2005), which is a highly successful exemplar-based model of classification. The EBRW model has been discussed extensively in previous reports, so only a brief summary is provided here. According to the model, people represent categories by storing individual exemplars in memory. When a test item is presented, it causes the stored exemplars to be retrieved. The higher the similarity of an exemplar to the test item, the higher is its retrieval probability. If a retrieved exemplar belongs to Category A, then a random-walk counter takes a unit step in the direction of criterion A; otherwise it steps in the direction of criterion B. The exemplar-retrieval process continues until either criterion A or criterion B is reached. In general, the EBRW model predicts that the greater the “summed similarity” of a test item to one category, and the less its summed similarity to the alternative category, the faster will be its classification RT.
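The sketch below computes mean EBRW random-walk durations for the contrast-category items. It keeps only the sensitivity c, the attention weight wx, and the walk criteria; the background-noise parameter, the scaling constant k, and the residual-time parameters are omitted. We assume grid coordinates 0, 1, 2, equal memory strengths for all exemplars, and similarity that decays exponentially with attention-weighted city-block distance (a common choice for separable dimensions). With equal strengths and no background noise, each retrieval steps toward the contrast criterion with probability S_B/(S_A + S_B), where S_A and S_B are the summed similarities to the target and contrast categories, and the mean number of steps follows from the gambler’s-ruin formula.

```python
import math


def expected_steps(p_up: float, a: int, b: int) -> float:
    """Gambler's-ruin mean duration: +1/-1 walk from 0, absorbing at +a or -b."""
    q = 1.0 - p_up
    if abs(p_up - q) < 1e-12:
        return float(a * b)
    r = q / p_up
    prob_up = (1 - r**b) / (1 - r**(a + b))
    return (b - (a + b) * prob_up) / (q - p_up)


# Figure-1 structure on a hypothetical 0/1/2 grid.
CONTRAST = [(0, 0), (0, 1), (0, 2), (1, 0), (2, 0)]  # Category B
TARGET = [(1, 1), (1, 2), (2, 1), (2, 2)]            # Category A


def similarity(i, j, c, wx):
    """Exponential decay of attention-weighted city-block distance (assumed form)."""
    d = wx * abs(i[0] - j[0]) + (1 - wx) * abs(i[1] - j[1])
    return math.exp(-c * d)


def ebrw_mean_steps(probe, c=2.0, wx=0.5, a=3, b=3):
    """Mean walk steps for a probe; each retrieval steps toward the contrast
    criterion with probability S_B / (S_A + S_B)."""
    s_b = sum(similarity(probe, e, c, wx) for e in CONTRAST)
    s_a = sum(similarity(probe, e, c, wx) for e in TARGET)
    return expected_steps(s_b / (s_a + s_b), a, b)


rt = {stim: ebrw_mean_steps(stim) for stim in CONTRAST}
for (x, y), steps in rt.items():
    print(f"x{x}y{y}: {steps:.2f} steps")
```

With wx = 0.5, the redundant item is fastest and each interior item is faster than its exterior neighbor; raising wx above 0.5 speeds the left-column items relative to the bottom-row items.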

In the present applications, the EBRW uses 8 free parameters (see Nosofsky & Palmeri, 1997a, for detailed explanations): an overall sensitivity parameter c for measuring discriminability between exemplars; an attention-weight parameter wx representing the amount of attention given to Dimension x; a background-noise parameter back; random-walk criteria +A and −B; a scaling constant k for transforming the number of steps in the random walk into milliseconds; and residual-time parameters μR and σR² that play the same role in the EBRW model as in the logical rule-based models. Adapting ideas from Lamberts (1995), Cohen and Nosofsky (2003) proposed an elaborated version of the EBRW that includes additional free parameters for modeling the time course with which individual dimensions are perceptually encoded. For simplicity in getting started, however, in this research we limit consideration to the baseline version of the model.

We have conducted extensive investigations that indicate that, over the vast range of its parameter space, the EBRW model makes predictions that are similar to those of the coactive rule model for the target category (i.e., overadditivity in the MIC). These investigations are reported in Appendix A. In addition, like the coactive model, the EBRW model predicts that, for the contrast category, the interior stimuli will have faster RTs than will the exterior stimuli; and that the redundant stimulus will have the fastest RTs of all. The intuition, as can be gleaned from Figure 1, is that the closer a stimulus gets to the lower-left corner of the contrast category, the greater is its overall summed similarity to the contrast-category exemplars. Finally, the EBRW model can predict faster RTs for contrast-category members that satisfy the disjunctive rule on the first-processed dimension by assigning a larger “attention weight” to that dimension (Nosofsky, 1986).

Because the EBRW model and the coactive rule model make the same qualitative predictions for the present paradigm, we expect that they may yield similar quantitative fits to the present data. However, based on previous research (Fific et al., 2008), we do not expect to see much evidence of coactive processing for the highly separable-dimension stimuli used in the present experiments. Furthermore, as can be verified from inspection of Figure 5, the EBRW model makes predictions that contrast sharply with those of all of the serial and parallel logical-rule models of classification RT. Thus, to the extent that observers do indeed use logical rules as a basis for classification in the present experiments, the results should clearly favor one of the rule-based models over the EBRW model.5

Free Stimulus-Drift-Rate Model

As a final source of comparison, we also consider a random-walk model in which each individual stimulus is allowed to have its own freely estimated step-probability (or “drift rate”) parameter. That is, for each individual stimulus i, we estimate a free parameter pi that gives the probability that the random walk steps in the direction of criterion A. This approach is similar to past applications of Ratcliff’s (1978) highly influential diffusion model. (The diffusion model is a continuous version of a discrete-step random walk.) In that approach, the “full” diffusion model is fitted by estimating separate drift-rate parameters for each individual stimulus or condition, and investigations are then often conducted to discover reasons why the estimated drift rates may vary in systematic ways (e.g., Ratcliff & Rouder, 1998). The present free stimulus-drift-rate random-walk model uses 14 free parameters: the 9 individual-stimulus step-probability parameters, and 5 parameters that play the same role as in the previous models (+A, −B, k, μR, and σR²).

The free stimulus-drift-rate random-walk model is more descriptive in form than are the logical-rule models or the EBRW model, in the sense that it can describe any of the qualitative patterns involving the mean RTs that are illustrated in Figure 5. Nevertheless, because our model-fit procedures penalize models for the use of extra free parameters (see the Model-Fit section for details), the free stimulus-drift-rate model is not guaranteed to provide a better quantitative fit to the data than do the logical rule models or the EBRW model. To the extent that the logical-rule models (or the EBRW model) capture the data in parsimonious fashion, they should provide better penalty-corrected fits than does the free stimulus-drift-rate model. By contrast, dramatically better fits of the free stimulus-drift-rate model could indicate that the logical-rule models or exemplar model are missing key aspects of performance.

Finally, it is important to note that, even without imposing a penalty for its extra free parameters, the free stimulus-drift-rate model could in principle provide worse absolute fits to the data than do some of the logical-rule models.6 The reason is that our goal is to fit the detailed RT-distribution data associated with the individual stimuli. Although the focus of our discussion has been on the predicted pattern of mean RTs, there is likely to also be a good deal of structure in the RT-distribution data that is useful for distinguishing among the models. For example, consider a case in which an observer uses a mixed-order serial self-terminating strategy. On trials in which Dimension x is processed first, the left-column members of the contrast category should have fast RTs. But on trials in which Dimension x is processed second, the left-column members should have slow RTs. Thus, the mixed-order serial self-terminating model allows the possibility of observing a bimodal distribution of RTs.7 By contrast, a random-walk model that associates a single “drift rate” with each individual stimulus predicts that the RT distributions should be unimodal in form (see also Ashby et al., 2007, p. 647). As will be seen, other aspects of the RT-distribution data also impose interesting constraints on the alternative models.

Ratcliff and McKoon (2008) have recently emphasized that single-channel diffusion models are appropriate only for situations in which a “single stage” of decision making governs performance. To the extent that subjects adopt the present kinds of logical rule-based strategies in our classification task, “multiple stages” of decision making are taking place, so even the present free stimulus-drift-rate model may fail to yield good quantitative fits.

Experiment 1

The goal of our experiments was to provide initial tests of the ability of the logical-rule models to account for speeded classification performance, using the category structure depicted in Figure 1. Almost certainly, the extent to which rule-based strategies are used will depend on a variety of experimental factors. In these initial experiments, the idea was to implement factors that seemed strongly conducive to the application of the rules. Thus, the experiments serve more as “validation tests” of the newly proposed models than as inquiries into when rule-based classification does or does not occur. If preliminary support is obtained in favor of the rule-based models under these initial conditions, then later research can examine boundary conditions on their application.

Clearly, one critical factor is whether the category structure itself affords the application of logical rules. Because an observer can classify all objects in the present Figure-1 structure perfectly with the hypothesized rule-based strategies, this aspect of the experiments would seem to promote the application of the rules. The use of more complex category structures might require that subjects supplement a rule-based strategy with the memory of individual exemplars, exceptions to the rule, more complex decision boundaries, and so forth.

A second factor involves the types of dimensions that are used to construct the stimuli. In the present experiments, the stimuli varied along a set of highly separable dimensions (Garner, 1974; Shepard, 1964). In particular, following Fific et al. (2008), the stimuli were composed of two rectangular regions, one to the left and one to the right. The left rectangle was a constant shade of red and varied only in its overall level of saturation. The right rectangle was uncolored and had a vertical line drawn inside it. The line varied only in its left-right positioning within the rectangle. One reason why we used these highly separable dimensions was to ensure that the psychological structure of the set of to-be-classified stimuli matched closely the schematic 3×3 factorial design depicted in Figure 1. A second reason is that use of the rule-based strategies entails that separate independent decisions are made along each dimension. Such an independent-decisions strategy would seem to be promoted by the use of stimuli varying along highly separable dimensions. That is, for the present stimuli, it seems natural for an observer to make a decision about the extent to which an object is saturated, to make a separate decision about the extent to which the line is positioned to the left, and then to combine these separate decisions to determine whether or not the logical rule is satisfied.

Finally, to most strongly induce the application of the logical rules, subjects were provided with explicit knowledge of the rule-based structure of the categories and were provided with explicit instructions to use a fixed-order serial self-terminating strategy as a basis for classification. The instructions spelled out in step-by-step fashion the application of the strategy (see Method section). Of course, there is no certainty that observers can follow the instructions, and there is a good possibility that other automatic types of classification processes may override attempts to use the instructed strategy (e.g., Brooks, Norman, & Allen, 1991; Logan, 1988; Logan & Klapp, 1991; Palmeri, 1997). Nevertheless, we felt that the present conditions could potentially place the logical-rule models in their best possible light and that it was a reasonable starting point. In a subsequent experiment, we test a closely related design, with some subjects operating under more open-ended conditions.

Method

Subjects

The subjects were 5 graduate and undergraduate students associated with Indiana University. All subjects were under 40 years of age and had normal or corrected-to-normal vision. The subjects were paid $8 per session plus up to a $3 bonus per session depending on performance.

Stimuli

Each stimulus consisted of two spatially separated rectangular regions. The left rectangle had a red hue that varied in its saturation, and the right rectangular region had an interior vertical line that varied in its left-right positioning. (For an illustration, see Fific et al., 2008, Figure 5.)

As illustrated in Figure 1, there were nine stimuli composed of the factorial combination of three values of saturation and three values of vertical-line position. The saturation values were derived from the Munsell color system and were generated on the computer by using the Munsell color conversion program (WallkillColor, version 6.5.1). According to the Munsell system, the colors were of a constant red hue (5R) and of a constant lightness (value 5), but had saturation (chroma) values equal to 10, 8, and 6 (for dimension values x0, x1, and x2, respectively). The distances of the vertical line relative to the leftmost side of the right rectangle were 30, 40, and 50 pixels (for dimension values y0, y1, and y2, respectively). The size of each rectangle was 133 × 122 pixels. The rectangles were separated by 45 pixels, and each pair of rectangles subtended a horizontal visual angle of about 6.4 degrees and a vertical visual angle of about 2.3 degrees. The study was conducted on a Pentium PC with a CRT monitor at a display resolution of 1024 × 768. The stimuli were presented on a white background.

Procedure

The stimuli were divided into two categories, A and B, as illustrated in Figure 1. On each trial, a single stimulus was presented, the subject was instructed to classify it into Category A or B as rapidly as possible without making errors, and corrective feedback was then provided.

The experiment was conducted over 5 sessions, one session per day, with each session lasting approximately 45 minutes. In each session, subjects received 27 practice trials and then were presented with 810 experimental trials. Trials were grouped into six blocks, with rest breaks between blocks. Each stimulus was presented the same number of times within each session. Thus, for each subject, each stimulus was presented 93 times per session (counting the practice trials, 837 trials in all) and 465 times over the course of the experiment. The order of presentation of the stimuli was randomized anew for each subject and session. Subjects made their responses by pressing the right (Category A) and left (Category B) buttons on a computer mouse. The subjects were instructed to rest their index fingers on the mouse buttons throughout the testing session. RTs were recorded from the onset of a stimulus display up to the time of a response. Each trial started with the presentation of a fixation cross for 1770 ms. Beginning 1070 ms after the initial appearance of the fixation cross, a warning tone was sounded for 700 ms. The stimulus was then presented on the screen and remained visible until the subject’s response was recorded. In the case of an error, the feedback “INCORRECT” was displayed on the screen for 2 s. The intertrial interval was 1870 ms.

At the start of the experiment, subjects were shown a picture of the complete stimulus set (in the form illustrated in Figure 1, except with the actual stimuli displayed). While viewing this display, subjects read explicit instructions to use a serial self-terminating rule-based strategy to classify the stimuli into the categories. [Subjects 1 and 2 were given instructions to process Dimension y (vertical-line position) first, whereas Subjects 3–5 were given instructions to process Dimension x (saturation) first.] The instructions for the “saturation-first” subjects were as follows:

We ask you to use the following strategy ON ALL TRIALS to classify the stimuli. First, focus on the colored square. If the colored square is the most saturated with red, then classify the stimulus into Category B immediately. If the colored square is not the most saturated with red, then you need to focus on the square with the vertical line. If the line is furthest to the left, then classify the stimulus into Category B. Otherwise classify the stimulus into Category A. Make sure to use this same sequential strategy on each and every trial of the experiment.

Analogous instructions were provided to the subjects who processed Dimension y (vertical-line position) first. Finally, because the a priori qualitative contrasts for discriminating among the models are derived under the assumption that error rates are low, the instructions emphasized that subjects needed to be highly accurate in making their classification decisions. In a subsequent experiment, we also test subjects with a speed-stress emphasis and examine error RTs.

Results

Session 1 was considered practice, and these data were not included in the analyses. In addition, separately for each subject and stimulus, we removed from the analysis RTs greater than 3 standard deviations above the mean, as well as RTs of less than 100 ms. This procedure led to dropping less than 1.2% of the trials from analysis.
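The trimming rule can be written out as a short function. The sketch assumes that the data arrive as (subject, stimulus, RT in ms) records and that the mean and standard deviation for each subject-stimulus cell are computed once, on the untrimmed cell, before both cutoffs are applied; the text does not specify that order, so it is an assumption here. Each cell needs at least two RTs for the standard deviation to be defined.

```python
from collections import defaultdict
from statistics import mean, stdev


def trim_cell(rts, sd_cutoff=3.0, floor_ms=100.0):
    """Keep RTs that are at least floor_ms and no more than sd_cutoff SDs above the cell mean."""
    upper = mean(rts) + sd_cutoff * stdev(rts)
    return [rt for rt in rts if floor_ms <= rt <= upper]


def trim_by_cell(trials):
    """trials: iterable of (subject, stimulus, rt_ms) records.
    Returns the kept records and the proportion of trials dropped."""
    cells = defaultdict(list)
    for subject, stimulus, rt in trials:
        cells[(subject, stimulus)].append(rt)
    kept = []
    for (subject, stimulus), rts in cells.items():
        kept.extend((subject, stimulus, rt) for rt in trim_cell(rts))
    n_total = sum(len(rts) for rts in cells.values())
    return kept, 1 - len(kept) / n_total
```

For instance, a cell holding twenty 500-ms RTs plus one 5000-ms RT and one 50-ms RT keeps only the twenty 500-ms values.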

We examined the mean correct RTs for the individual subjects as a function of sessions of testing. Although we observed a significant effect of sessions for all subjects (usually, a slight overall speedup effect), the basic patterns for the target-category and contrast-category stimuli remained constant across sessions. Therefore, we combine the data across Sessions 2–5 in illustrating and modeling the results.

The mean correct RTs and error rates for each individual stimulus for each subject are reported in Table 1. In general, error rates were low and mirrored the patterns of mean correct RTs. That is, stimuli associated with slower mean RTs had a higher proportion of errors. Therefore, our initial focus will be on the results involving the RTs.

Table 1.

Experiment 1: Mean correct RTs (ms) and error rates for individual stimuli, observed and predicted by the best-fitting model.

| Measure | x2y2 | x2y1 | x1y2 | x1y1 | x2y0 | x1y0 | x0y2 | x0y1 | x0y0 |
|---|---|---|---|---|---|---|---|---|---|
| Subject 1 | | | | | | | | | |
| RT Observed | 501 | 585 | 564 | 637 | 467 | 476 | 578 | 552 | 446 |
| RT Parallel Self-Terminating | 491 | 590 | 580 | 631 | 469 | 468 | 565 | 564 | 467 |
| p(e) Observed | .00 | .02 | .00 | .01 | .00 | .01 | .03 | .01 | .00 |
| p(e) Parallel Self-Terminating | .00 | .01 | .00 | .01 | .00 | .00 | .00 | .00 | .00 |
| Subject 2 | | | | | | | | | |
| RT Observed | 609 | 682 | 687 | 748 | 476 | 479 | 635 | 658 | 452 |
| RT Serial Self-Terminating | 618 | 681 | 674 | 737 | 478 | 476 | 632 | 686 | 480 |
| p(e) Observed | .00 | .04 | .01 | .04 | .02 | .03 | .03 | .02 | .01 |
| p(e) Serial Self-Terminating | .00 | .04 | .03 | .08 | .01 | .01 | .02 | .02 | .00 |
| Subject 3 | | | | | | | | | |
| RT Observed | 596 | 664 | 665 | 709 | 707 | 721 | 559 | 548 | 531 |
| RT Serial Self-Terminating | 606 | 659 | 658 | 707 | 699 | 745 | 548 | 553 | 534 |
| p(e) Observed | .01 | .00 | .01 | .01 | .01 | .02 | .01 | .00 | .00 |
| p(e) Serial Self-Terminating | .00 | .00 | .00 | .01 | .01 | .01 | .00 | .00 | .00 |
| Subject 4 | | | | | | | | | |
| RT Observed | 590 | 618 | 619 | 657 | 624 | 657 | 424 | 423 | 432 |
| RT Serial Self-Terminating | 591 | 617 | 620 | 647 | 628 | 659 | 431 | 433 | 432 |
| p(e) Observed | .00 | .01 | .01 | .03 | .02 | .04 | .01 | .01 | .00 |
| p(e) Serial Self-Terminating | .00 | .01 | .01 | .02 | .01 | .01 | .00 | .00 | .00 |
| Subject 5 | | | | | | | | | |
| RT Observed | 546 | 618 | 596 | 687 | 630 | 615 | 484 | 481 | 464 |
| RT Serial Self-Terminating | 548 | 626 | 596 | 674 | 611 | 658 | 478 | 481 | 476 |
| p(e) Observed | .00 | .02 | .01 | .05 | .02 | .03 | .01 | .00 | .00 |
| p(e) Serial Self-Terminating | .00 | .04 | .01 | .05 | .03 | .03 | .00 | .00 | .00 |

Note. p(e) = proportion of errors. RT = mean correct response time.

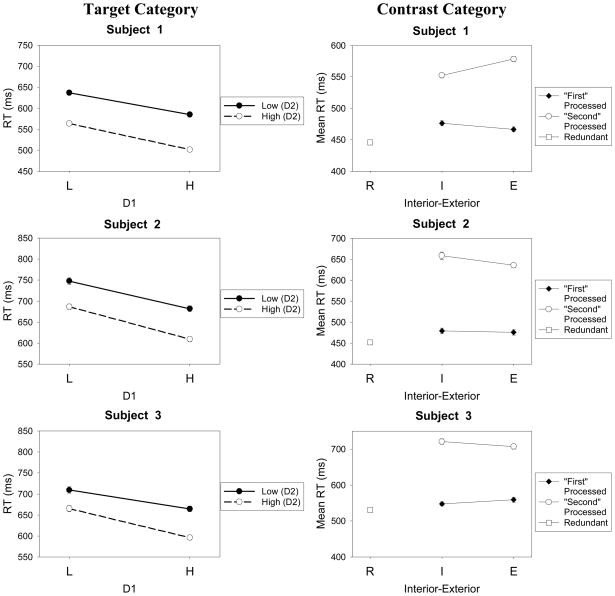

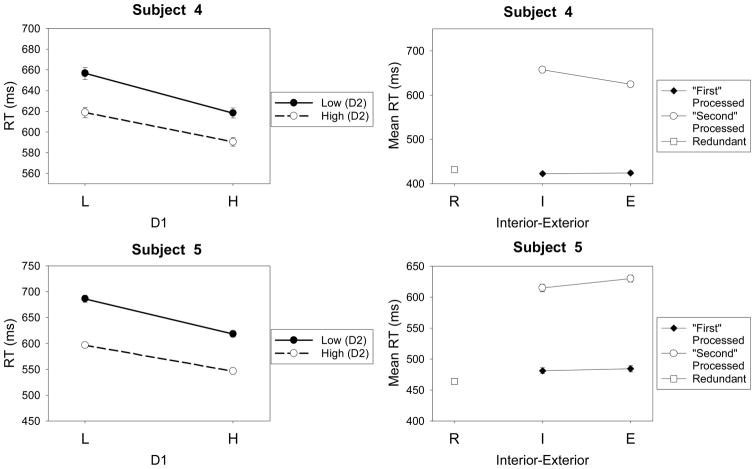

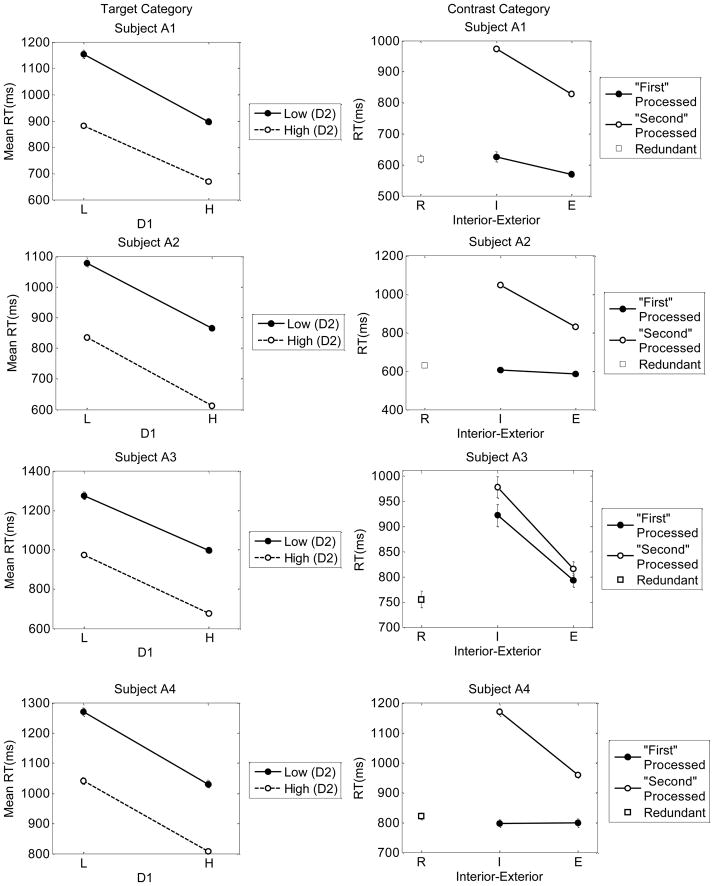

The mean correct RTs for the individual subjects and stimuli are displayed graphically in the panels in Figure 6. The left panels show the results for the target-category stimuli and the right panels show the results for the contrast-category stimuli. Regarding the contrast-category stimuli, for ease of comparing the results to the canonical-prediction graphs in Figure 5, the means in the figure have been arranged according to whether a stimulus satisfied the disjunctive rule on the instructed “first-processed” dimension or the “second-processed” dimension. For example, Subject 1 was instructed to process Dimension y first. Therefore, for this subject, the interior and exterior stimuli on the “first-processed” dimension are x1y0 and x2y0 (see Figure 1).

Figure 6.

Observed mean RTs for the individual subjects and stimuli in Experiment 1. Error bars represent one standard error. Left panels show the results for the target-category stimuli and right panels show the results for the contrast-category stimuli. Left panels: L = low-salience dimension value, H = high-salience dimension value, D1 = Dimension 1, D2 = Dimension 2. Right panels: R = redundant stimulus, I = interior stimulus, E = exterior stimulus.

Regarding the target-category stimuli, note first that for all five subjects, the manipulations of salience (high versus low) on both the saturation and line-position dimensions had the expected effects on the overall pattern of mean RTs, in the sense that the high-salience (H) values led to faster RTs than did the low-salience (L) values. Regarding the contrast-category stimuli, not surprisingly, stimuli that satisfied the disjunctive rule on the first-processed dimension were classified with faster RTs than those on the second-processed dimension. These global patterns of results are in general accord with the predictions from all of the logical-rule models as well as the EBRW model (compare to Figure 5).

The more fine-grained arrangement of RT means, however, allows for an initial assessment of the predictions from the competing models. To the extent that subjects were able to follow the instructions, our expectation is that the data should tend to conform to the predictions from the fixed-order serial self-terminating model of classification RT. For Subjects 2, 3, and 4, the overall results seem reasonably clear-cut in supporting this expectation (compare the top panels in Figure 5 to those for Subjects 2–4 in Figure 6). First, as predicted by the model, the mean RTs for the target-category members are approximately additive (MIC = 0). Second, for the contrast-category members, the mean RTs for the stimuli that satisfy the disjunctive rule on the first-processed dimension are faster than those for the second-processed dimension. Third, for those stimuli that satisfy the disjunctive rule on the second-processed dimension, RTs for the exterior stimulus are faster than for the interior stimulus (whereas there is little difference between the interior and exterior stimuli that satisfy the disjunctive rule on the first-processed dimension). Fourth, the mean RT for the redundant stimulus is almost the same as (or perhaps slightly faster than) the mean RTs for the stimuli on the first-processed dimension. These qualitative patterns of results are all in accord with the predictions from the fixed-order serial self-terminating model. Furthermore, considered collectively, they violate the predictions from all of the other competing models.
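The mean interaction contrast (MIC) can be computed directly from the observed target-category means in Table 1. The sketch below assumes the standard definition MIC = [RT(LL) − RT(LH)] − [RT(HL) − RT(HH)], with x2 and y2 taken as the high-salience (H) values and x1 and y1 as the low-salience (L) values (consistent with x2y2 having the fastest target-category means in Table 1). MIC = 0 indicates additivity, MIC < 0 under-additivity, and MIC > 0 over-additivity.

```python
from typing import Dict

# Observed mean correct RTs (ms) for the target-category stimuli, copied from Table 1.
TARGET_MEANS: Dict[int, Dict[str, int]] = {
    1: {"x2y2": 501, "x2y1": 585, "x1y2": 564, "x1y1": 637},
    2: {"x2y2": 609, "x2y1": 682, "x1y2": 687, "x1y1": 748},
    3: {"x2y2": 596, "x2y1": 664, "x1y2": 665, "x1y1": 709},
    4: {"x2y2": 590, "x2y1": 618, "x1y2": 619, "x1y1": 657},
    5: {"x2y2": 546, "x2y1": 618, "x1y2": 596, "x1y1": 687},
}


def mic(means: Dict[str, int]) -> int:
    """Mean interaction contrast, assuming level 2 = high salience (H), level 1 = low (L)."""
    ll, lh = means["x1y1"], means["x1y2"]
    hl, hh = means["x2y1"], means["x2y2"]
    return (ll - lh) - (hl - hh)


for subject, means in TARGET_MEANS.items():
    print(f"Subject {subject}: MIC = {mic(means):+d} ms")
```

On these means, the MIC is −11, −12, −24, +10, and +19 ms for Subjects 1 through 5, respectively: small relative to the RTs themselves, and most negative for Subject 3. Whether any of these point estimates differs reliably from zero is a question for the saturation × line-position interaction test, not for the MIC value alone.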

The results for Subjects 1 and 5 are less clear-cut. On the one hand, for both subjects, the mean RTs for the target category are approximately additive, in accord with the predictions from the serial model. (There is a slight tendency toward under-additivity for Subject 1 and toward over-additivity for Subject 5.) In addition, for the contrast category, both stimuli that satisfy the disjunctive rule on the first-processed dimension are classified faster than those on the second-processed dimension. On the other hand, for contrast-category stimuli that satisfy the disjunctive rule on the second-processed dimension, RTs for the exterior stimulus are slower than for the interior stimulus, contrary to the predictions from the serial self-terminating model. The qualitative results from these two subjects do not converge on any single one of the contending models (although, overall, the serial and parallel self-terminating models appear to be the best candidates). We revisit all of these results in the section on Quantitative Model Fitting.

We conducted various statistical tests to corroborate the descriptions of the data provided above. The main results are reported in Table 2. For the target-category stimuli, we conducted a separate three-way ANOVA on each subject's RT data, with session (2–5), level of saturation (H or L), and level of vertical-line position (H or L) as factors. As expected, the main effects of saturation and line position (not reported in the table) were highly significant for all subjects, reflecting the fact that the H values were classified more rapidly than the L values. The main effect of session was statistically significant for all subjects, usually reflecting either a slight speeding up or slowing down of performance with practice. However, there were no significant interactions of session with the other factors, indicating that the overall pattern of RTs was fairly stable throughout testing.

Table 2.

Experiment 1 statistical-test results table.

| Target-Category Factor | df | F | Contrast-Category Comparison | M | t |
|---|---|---|---|---|---|
| Subject 1 | | | | | |
| Session | 3 | 24.33 ** | E1-I1 | −9.6 | −1.70 |
| Sat. × LP | 1 | 1.73 | E2-I2 | 25.9 | 4.46 ** |
| Sat. × LP × Session | 3 | 0.59 | E1-R | 20.8 | 4.20 ** |
| Error | 1401 | | I1-R | 30.5 | 6.21 ** |
| Subject 2 | | | | | |
| Session | 3 | 2.86 * | E1-I1 | −3.7 | −0.39 |
| Sat. × LP | 1 | .80 | E2-I2 | −23.0 | −2.18 * |
| Sat. × LP × Session | 3 | .21 | E1-R | 23.7 | 2.72 ** |
| Error | 1373 | | I1-R | 27.4 | 3.14 ** |
| Subject 3 | | | | | |
| Session | 3 | 82.44 ** | E1-I1 | 11.4 | 1.26 |
| Sat. × LP | 1 | 3.52 ± | E2-I2 | −13.5 | −1.31 |
| Sat. × LP × Session | 3 | .04 | E1-R | 28.2 | 3.27 ** |
| Error | 1392 | | I1-R | 16.8 | 2.22 * |
| Subject 4 | | | | | |
| Session | 3 | 139.74 ** | E1-I1 | 1.5 | 0.32 |
| Sat. × LP | 1 | 1.21 | E2-I2 | −33.0 | −4.55 ** |
| Sat. × LP × Session | 3 | .71 | E1-R | −7.5 | −1.52 |
| Error | 1386 | | I1-R | −9.0 | −1.85 ± |
| Subject 5 | | | | | |
| Session | 3 | 8.88 ** | E1-I1 | 3.0 | 0.44 |
| Sat. × LP | 1 | 2.63 | E2-I2 | 15.0 | 1.80 ± |
| Sat. × LP × Session | 3 | .92 | E1-R | 20.4 | 3.23 ** |
| Error | 1383 | | I1-R | 17.4 | 2.84 ** |

Note. Sat. = Saturation, LP = Line Position, E1 = Exterior stimulus on first-processed dimension, I1 = Interior stimulus on first-processed dimension, E2 = Exterior stimulus on second-processed dimension, I2 = Interior stimulus on second-processed dimension, R = redundant stimulus, M = Mean RT difference (ms).

Significance: ** p < .01; * p < .05; ± p < .075.

For the contrast-category t-tests, df ranged from 687 to 712, so the critical values of t are essentially those of z.

The most important question is whether there was an interaction between the factors of saturation and line position, because the interaction test assesses whether the mean RTs show a pattern of additivity, under-additivity, or over-additivity. The interaction between level of saturation and level of line position did not approach statistical significance for Subjects 1, 2, 4, or 5, supporting the conclusion of mean RT additivity. This finding is consistent with the predictions from the logical-rule models that assume serial processing of the dimensions. The interaction was marginally significant for Subject 3 (p < .075), in the direction of under-additivity. Therefore, for Subject 3, the contrast between the serial and parallel-exhaustive models is not clear-cut for the target-category stimuli.
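The link between serial processing and additivity can be made explicit with a short derivation. A target-category stimulus must satisfy the conjunctive rule on both dimensions, so a serial process must complete a decision on each dimension before responding. The symbols here are introduced for illustration: $T_0$ is the residual (base) time, $\bar{D}_x(s)$ and $\bar{D}_y(s)$ are the mean decision times on each dimension at salience level $s \in \{H, L\}$, and the salience of one dimension is assumed not to affect decision time on the other (selective influence).

$$
\overline{RT}(s_x, s_y) = T_0 + \bar{D}_x(s_x) + \bar{D}_y(s_y), \qquad s_x, s_y \in \{H, L\}
$$

$$
\begin{aligned}
\text{MIC} &= \left[\overline{RT}(L,L) - \overline{RT}(L,H)\right] - \left[\overline{RT}(H,L) - \overline{RT}(H,H)\right] \\
&= \left[\bar{D}_y(L) - \bar{D}_y(H)\right] - \left[\bar{D}_y(L) - \bar{D}_y(H)\right] = 0.
\end{aligned}
$$

Because the two mean decision times simply add, the double difference cancels. A parallel-exhaustive architecture instead predicts under-additivity (MIC < 0), which is why a marginal under-additive interaction leaves the serial versus parallel-exhaustive contrast unresolved for Subject 3.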

Regarding the contrast-category stimuli, we conducted a series of focused t-tests for each individual subject on the stimulus comparisons most relevant to distinguishing among the models. The results, reported in Table 2, generally corroborate the summary descriptions provided above. Specifically, for all subjects, the RT difference between the interior and exterior stimuli on the first-processed dimension was small and not statistically significant. For all subjects except Subject 4, the redundant stimulus was classified significantly faster than both the interior and exterior stimuli on the first-processed dimension. On the second-processed dimension, the exterior stimulus was classified faster than the interior stimulus for Subjects 2, 3, and 4 (significantly so for Subjects 2 and 4), whereas the reverse held for Subjects 1 and 5 (significantly for Subject 1 and marginally for Subject 5).
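The reported df (687 to 712) are consistent with an independent-samples t-test with pooled variance, df = n1 + n2 − 2, given roughly 350 trials per stimulus (Table B1). The sketch below assumes that test. The function name is hypothetical, and M is computed as the mean of the first-named stimulus minus the second (e.g., E2 − I2), matching the sign convention in Table 2.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def pooled_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float, int]:
    """Return (mean difference a - b, t statistic, df) for a pooled-variance two-sample t-test."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pooled variance: each sample variance weighted by its own degrees of freedom.
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    diff = mean(a) - mean(b)
    t = diff / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return diff, t, df
```

Because df is near 700 here, comparing |t| with the normal critical values (1.96 for p < .05, two-tailed) gives essentially the same decisions as using the t distribution.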

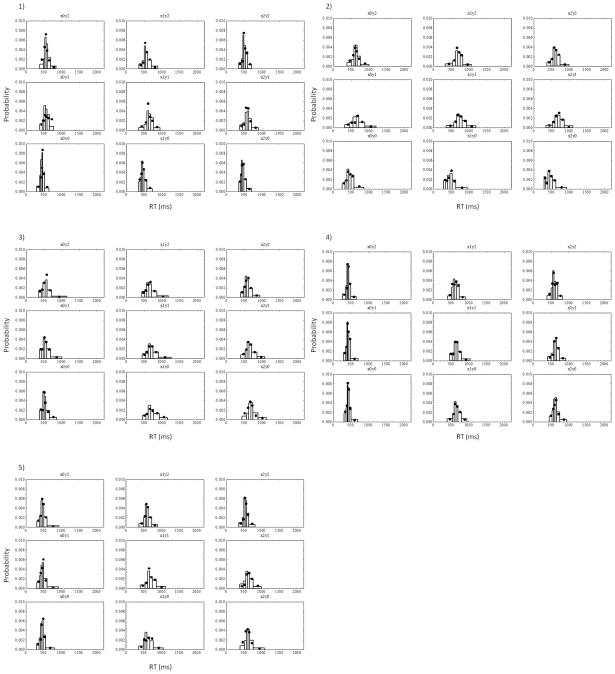

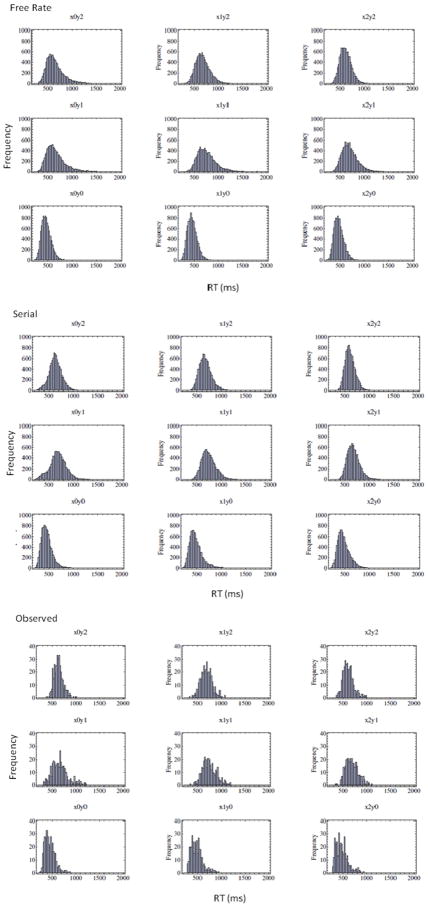

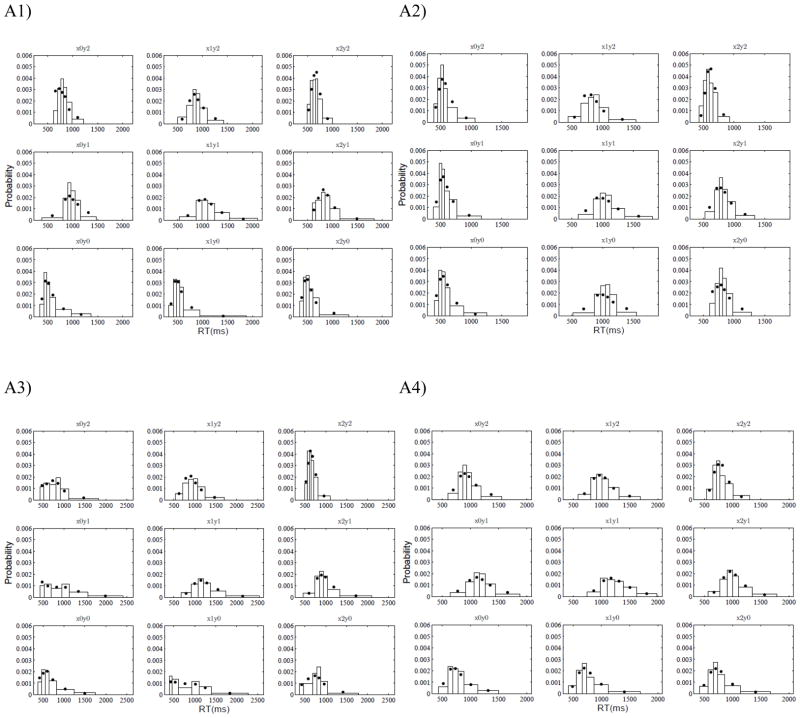

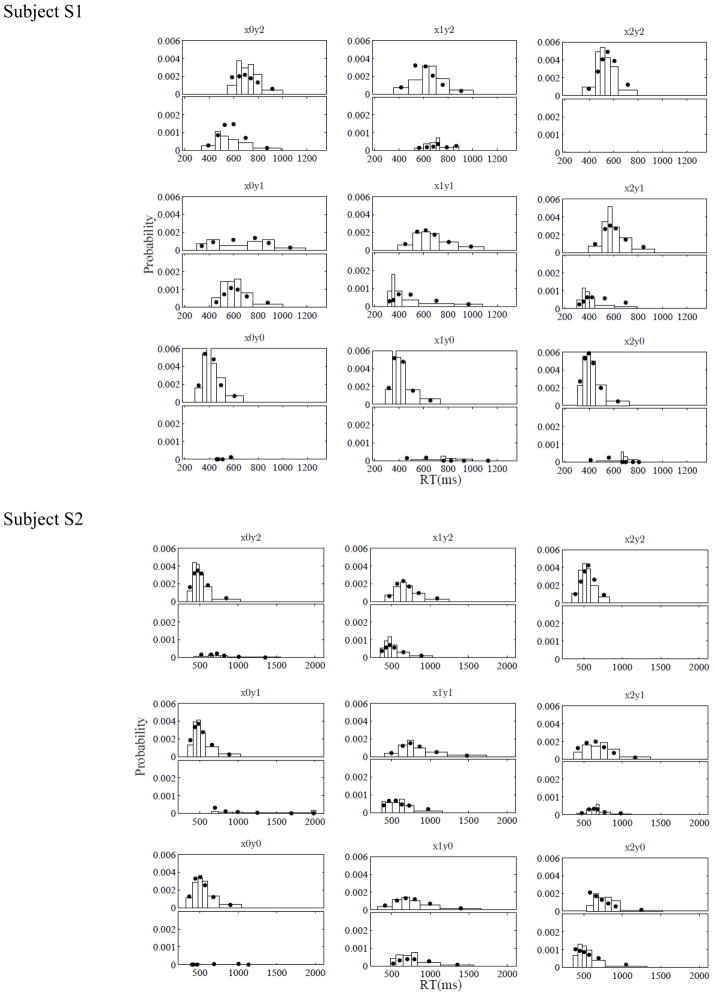

Quantitative Model-Fitting Comparisons

We turn now to the major goal of the studies, which is to test the alternative models on their ability to account in quantitative detail for the complete RT-distribution and choice-probability data associated with each individual stimulus. We fitted the models to the data using two methods. The first was a minor variant of quantile-based maximum-likelihood estimation (QMLE; Heathcote, Brown, & Mewhort, 2002). Specifically, for each individual stimulus, the observed correct RTs were divided into six quantile-based bins: the fastest 10% of correct RTs, the next four 20% intervals of correct RTs, and the slowest 10% of correct RTs. (The observed RT-distribution data, summarized in terms of the RT cutoffs that marked each RT quantile, are reported for each individual subject and stimulus in Appendix B.) Because error proportions were low, it was not feasible to fit error-RT distributions. However, the error data still provide a major source of constraint, because the models are required to fit simultaneously the relative frequency of errors for each individual stimulus and the distributions of correct RTs.
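The binning step and the resulting likelihood can be sketched as follows. This is an illustration under stated assumptions, not the authors' fitting code. The model is represented by a hypothetical callable `model_correct_cdf(t)` giving the predicted probability of a correct response with RT ≤ t (a defective CDF that approaches 1 − p(error)). In addition, `statistics.quantiles` with its default "exclusive" method stands in for whatever quantile convention was actually used.

```python
import math
from bisect import bisect_left
from statistics import quantiles
from typing import Callable, List, Sequence, Tuple


def observed_bins(correct_rts: Sequence[float]) -> Tuple[List[float], List[int]]:
    """Cutoffs at the .1, .3, .5, .7, .9 quantiles and counts in the six bins they define."""
    deciles = quantiles(correct_rts, n=10)  # nine cut points: .1, .2, ..., .9
    cutoffs = deciles[0::2]                 # keep .1, .3, .5, .7, .9
    counts = [0] * (len(cutoffs) + 1)
    for rt in correct_rts:
        # Bin k holds cutoffs[k-1] < rt <= cutoffs[k], matching a CDF of the form P(RT <= t).
        counts[bisect_left(cutoffs, rt)] += 1
    return cutoffs, counts


def predicted_probs(model_correct_cdf: Callable[[float], float],
                    cutoffs: Sequence[float], p_error: float) -> List[float]:
    """Predicted probability of each correct-RT bin, followed by the predicted error probability."""
    edges = [0.0] + [model_correct_cdf(c) for c in cutoffs] + [1.0 - p_error]
    return [hi - lo for lo, hi in zip(edges, edges[1:])] + [p_error]


def multinomial_log_likelihood(counts: Sequence[int], probs: Sequence[float]) -> float:
    """Sum of n_k * log(p_k); empty cells contribute nothing."""
    total = 0.0
    for n, p in zip(counts, probs):
        if n == 0:
            continue
        if p <= 0.0:
            return -math.inf
        total += n * math.log(p)
    return total
```

The observed error count is appended as a seventh cell alongside the six correct-RT bins. As a result, a model is penalized both for misplacing the correct-RT distribution across the bins and for mispredicting the error rate, which is how the error data can constrain the fit even though error-RT distributions are not modeled. In a full fit, this log-likelihood would be summed over stimuli and maximized over the model's parameters.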

Table B1.

Correct RT quantiles and error probabilities for each individual stimulus and subject in Experiment 1.

| Stimulus | RT .1 | RT .3 | RT .5 | RT .7 | RT .9 | N | p(e) |
|---|---|---|---|---|---|---|---|
| Subject 1 | | | | | | | |
| x2y2 | 419 | 466 | 494 | 535 | 600 | 358 | .00 |
| x2y1 | 459 | 525 | 578 | 628 | 711 | 357 | .02 |
| x1y2 | 446 | 505 | 547 | 603 | 711 | 355 | .00 |
| x1y1 | 505 | 583 | 632 | 691 | 768 | 358 | .01 |
| x2y0 | 391 | 421 | 452 | 488 | 575 | 357 | .00 |
| x1y0 | 395 | 428 | 460 | 503 | 582 | 353 | .01 |
| x0y2 | 489 | 535 | 565 | 603 | 689 | 355 | .03 |
| x0y1 | 460 | 506 | 544 | 590 | 651 | 353 | .01 |
| x0y0 | 383 | 413 | 442 | 467 | 516 | 357 | .00 |
| Subject 2 | | | | | | | |
| x2y2 | 482 | 545 | 595 | 656 | 744 | 354 | .00 |
| x2y1 | 523 | 598 | 672 | 749 | 864 | 356 | .04 |
| x1y2 | 533 | 621 | 682 | 750 | 834 | 355 | .01 |
| x1y1 | 577 | 659 | 728 | 810 | 940 | 358 | .04 |
| x2y0 | 332 | 401 | 455 | 527 | 634 | 353 | .02 |
| x1y0 | 338 | 396 | 465 | 527 | 632 | 352 | .03 |
| x0y2 | 514 | 581 | 626 | 673 | 767 | 354 | .03 |
| x0y1 | 468 | 557 | 644 | 721 | 889 | 357 | .02 |
| x0y0 | 333 | 388 | 436 | 500 | 581 | 356 | .01 |
| Subject 3 | | | | | | | |
| x2y2 | 486 | 529 | 574 | 629 | 738 | 356 | .01 |
| x2y1 | 527 | 584 | 640 | 711 | 858 | 356 | .00 |
| x1y2 | 515 | 575 | 639 | 711 | 866 | 352 | .01 |
| x1y1 | 550 | 616 | 681 | 758 | 906 | 354 | .01 |
| x2y0 | 563 | 634 | 692 | 748 | 888 | 356 | .01 |
| x1y0 | 553 | 624 | 689 | 780 | 938 | 359 | .02 |
| x0y2 | 422 | 478 | 541 | 595 | 720 | 351 | .01 |
| x0y1 | 429 | 487 | 533 | 588 | 686 | 352 | .00 |
| x0y0 | 427 | 481 | 514 | 556 | 658 | 355 | .00 |
| Subject 4 | | | | | | | |
| x2y2 | 502 | 544 | 577 | 630 | 694 | 356 | .00 |
| x2y1 | 505 | 566 | 613 | 659 | 731 | 355 | .01 |
| x1y2 | 521 | 560 | 606 | 663 | 737 | 354 | .01 |
| x1y1 | 533 | 593 | 641 | 694 | 799 | 354 | .03 |
| x2y0 | 528 | 569 | 611 | 650 | 745 | 354 | .02 |