Abstract

Purpose:

Implementation strategies of imaging guidelines can assist in reducing the number of radiographic examinations. This study aimed to compare the perceived need for diagnostic imaging before and after an educational intervention strategy.

Methods:

One hundred sixty Swiss chiropractors attending a conference were randomized to either receive a radiology workshop, reviewing appropriate indications for diagnostic imaging for adult spine disorders (n = 80), or be in a control group (CG). One group of 40 individuals dropped out from the CG due to logistic reasons. Participants in the intervention group were randomly assigned to three subgroups to evaluate the effect of an online reminder at midpoint. All participants underwent a pretest and a final test at 14–16 weeks. A posttest was administered to two subgroups at 8–10 weeks.

Results:

There was no difference between baseline scores, and overall scores for the pretest and the final tests for all four groups were not significantly different. However, the subgroup provided with access to a reminder performed significantly better than the subgroup with whom they were compared (F = 4.486; df = 1 and 30; p = .043). Guideline adherence was 50.5% (95% CI, 39.1–61.8) for the intervention group and 43.7% (95% CI, 23.7–63.6) for the CG at baseline. Adherence at follow-up was lower, but mean group differences remained insignificant.

Conclusions:

Online access to specific recommendations while making a clinical decision may favorably influence the intention to either order or not order imaging studies. However, a didactic presentation alone did not appear to change the perception for the need of diagnostic imaging studies.

Key Indexing Terms: Diagnostic Imaging; Diagnostic X-Ray; Education, Continuing; Guidelines; Knowledge Acquisitions (Computer); Radiology; Randomized Controlled Trial

Introduction

Imaging technology can improve patient outcomes by allowing greater precision in diagnosing and treating patients. However, evidence of overuse, underuse, and misuse of imaging services has been reported in the literature.1–4 Although an integral part of chiropractic practice for over a century, the role of diagnostic imaging remains a source of controversy.5–7 We previously developed diagnostic imaging guidelines for chiropractors and other primary health care professionals to assist clinical decision making and to allow more selective use of imaging studies for adult spine disorders.8 Clinical guidelines are particularly useful where significant variation in practice exists, because they aim to describe appropriate care based on the best available scientific evidence and broad consensus while promoting efficient use of resources.9, 10

Current guideline dissemination and implementation strategies can encourage practitioners to conform to best practices and lead to improvements in care.11 However, high-quality studies documenting effectiveness and efficiency of guideline dissemination and implementation strategies are scarce.12 Interventions designed to improve professional practice and the delivery of effective health services may include continuing education, quality assurance programs, computer-based information and recalls, financial incentives, and organizational and regulatory interventions.13 Educational strategies are thought to have mixed effects. These include the distribution of educational materials to professionals, guideline implementation information, printed educational materials, continuing education activities and small group interactive education with active participation, educational outreach by experts or trained facilitators, and use of local opinion leaders.14 Used alone, two of the most common strategies for dissemination of new knowledge (publication of educational material and meetings, including seminars and conferences) appear to have a small impact on practice.15–18 However, multifaceted interventions, such as didactic presentations combined with interactive workshops, can result in moderately large changes in professional practice.17 Among a group of chiropractors, an educational intervention strategy emphasizing the use of evidence-based diagnostic imaging guidelines was shown to decrease the perceived need for plain-film radiography in uncomplicated low back pain patients in specific case scenarios.19 Furthermore, information recall has been shown to be important in achieving behavior change in interventions providing information,20 and “online” support may be an effective way to deliver reminders.21 Rationale for selecting these interventions is further discussed in the latter part of this article.

Rationale for the Study

Introducing new scientific findings or best practice or clinical guidelines into routine daily practice is challenging. The authors were interested in exploring educational strategies that would facilitate the use of recently developed evidence-based diagnostic imaging guidelines for chiropractors and other health care providers.22 Ultimately, application of these guidelines should help avoid unnecessary radiographs, increase examination precision, and decrease health care costs without compromising the quality of care. These guidelines suggest that imaging studies should be reserved for patients with “red flags” or clinical indicators suggestive of serious underlying pathologies. For instance, a combination of the following four red flags has a 100% sensitivity for cancer: considerable low back pain starting after age 50, a history of cancer/carcinoma in the last 15 years, unexplained weight loss, and failure of conservative care.23

Objectives

The aim of this study was to compare the self-reported diagnostic imaging ordering practices of a group of Swiss chiropractors before and after an educational intervention strategy using a multifaceted educational intervention (a radiology workshop plus an online reminder), a radiology workshop alone, and a control group. The following hypotheses were tested: 1) between the pre- and the posttest, participants receiving a radiology workshop alone will demonstrate greater adherence to diagnostic imaging guideline recommendations for selected case scenarios compared to the control group; and 2) participants receiving a multifaceted educational intervention will have a greater improvement in the self-reported need for diagnostic imaging compared to those receiving a radiology workshop alone.

Methods

Participants

Eligibility

Of all 254 chiropractors licensed in Switzerland, 207 attended a continuing education conference in the city of Davos in September 2007; all 207 attendees spoke English and were candidates for this study. Inclusion criteria were 1) to be a member in good standing of the Swiss Chiropractic Association, 2) to attend the September 2007 continuing education conference in Davos, 3) to be willing to complete the consent form to participate, and 4) to be available at the time of the pretest.

Setting and Location

All 254 licensed chiropractors in Switzerland work in private practice, providing ambulatory care. To be a member in good standing, Swiss chiropractors are required to 1) have completed their undergraduate training in an accredited school as listed on the Swiss government registry, 2) have completed a mandatory 4-month hospital training program and a 2-year postgraduate training program in a private chiropractic practice, and 3) have successfully passed the Swiss National Board Exams. Participants were therefore trained in diagnostic imaging studies according to the standards of the European Council on Chiropractic Education and had hospital-based training, including the ordering of specialized diagnostic imaging such as computed tomography, magnetic resonance imaging, bone scanning, and ultrasound.

Intervention

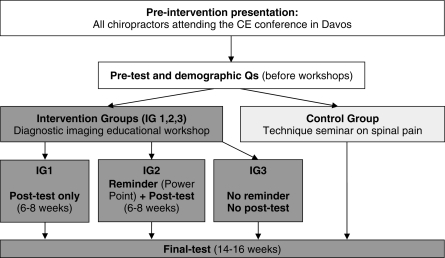

A flow diagram of interventions and measurements is shown in Figure 1. Registered participants received a conference package from the Swiss Chiropractic Association indicating the schedule (time, room, and title) of presentations to which they were assigned and were asked to sign a list of attendance. All participants also signed a consent form for the current study before completing the pretest during the 30-minute afternoon break immediately following a preintervention presentation. For all measurements (pretest, posttest, and final test), participants were instructed to complete questionnaires by themselves and to select a single answer per question.

Figure 1.

Flow diagram of interventions and measurements.

Preintervention Presentation

All participants in both the experimental and control groups first attended a 20-minute lecture entitled Diagnostic Imaging Practice Guidelines for Musculoskeletal Complaints in Adults: An Evidence-Based Approach. The objectives of this presentation were 1) to familiarize the audience with the methodology used to develop a new set of diagnostic imaging guidelines, 2) to improve understanding of factors involved in clinical decision making for diagnostic imaging studies, and 3) to briefly discuss potential risks associated with ionizing radiation exposure. None of the recommendations contained in the diagnostic imaging guidelines were specifically discussed during this first presentation.

Intervention Group

A 90-minute educational workshop was presented to the intervention group by two chiropractic specialists, one in clinical sciences and one in radiology. Topics covered included evidence-based recommendations contained in diagnostic imaging guidelines for spine disorders,8 which were illustrated with 10 case scenarios. Information provided to participants pertained to appropriate indications for the ordering of imaging studies. There were 80 participants in this group. Participants from the intervention group were randomly assigned to three subgroups [intervention group 1 (IG1), intervention group 2 (IG2), and intervention group 3 (IG3)], each composed of 26 or 27 participants. This strategy made it possible to evaluate the effect of introducing a reminder at midpoint. Participants were assigned to interventions by using random allocation, according to the test number distributed at random before the pretest. Only one subgroup (IG2) was invited to review the PowerPoint presented at the educational workshop 6–8 weeks after the conference, acting as a reminder. Other subgroups were not informed of this reminder/additional intervention (Table 1).

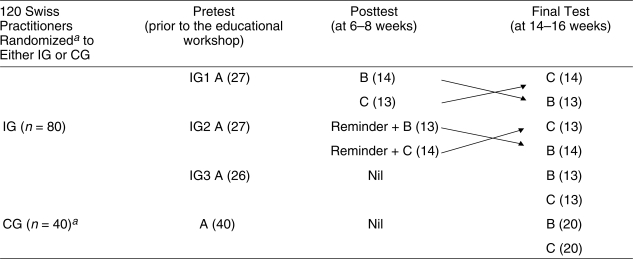

Table 1.

Administration of three competency tests for diagnostic imaging guidelines implementation study

Arrows: Versions B and C of the questionnaires were crossed over within each of the subgroups undergoing both the posttest and the final test (IG1 and IG2), so that for the posttest, half of participants within each subgroup were first assigned to version B of the questionnaire, while the remaining participants completed version C, and vice versa at the final test. (a) One group of 40 participants dropped out because they were not available for the pretest. IG, intervention group; CG, control group; IG1–3, intervention subgroups 1–3. A, B, and C are the three equivalent versions of the competency test.

Control Group

In order to determine if the proposed guideline implementation strategy was effective, the control group, composed of 40 Swiss practitioners, did not attend the radiology workshop, but instead, attended a chiropractic technique seminar on spinal pain where no discussion on the use of imaging took place. This seminar was entitled Unique Neuro-mobilization Technique for Treating Spinal Pain. The control group (CG) completed the pretest and the final test at 14–16 weeks.

Outcomes

All participants were asked to answer seven demographic questions at the pretest. Baseline clinical characteristics included the following: 1) year of graduation, 2) postgraduate degree, 3) practice hours, 4) type of practice, 5) on-site access to radiography, 6) average number of spine x-ray series ordered per week, and 7) average number of referrals for special imaging per month.

The primary outcome measures with respect to rate of appropriate responses for the use of diagnostic imaging were three questionnaires (versions A, B, and C), each consisting of 10 different spine case scenarios, all different from those presented during the educational intervention workshop, included in a pretest, a posttest at 6–8 weeks, and a final test at 14–16 weeks after the conference (Fig 1). The clinician's decision to provide any of the listed clinical services [plain-film radiography, computed tomography (CT), magnetic resonance imaging (MRI), or ultrasound; urgent referral; conservative therapy without imaging] was also assessed for consistency with the guidelines for each clinical vignette. The evidence-based Diagnostic Imaging Guidelines for Spinal Disorders represented the gold standard. These were published soon after administering the final test of this study, decreasing the risk of between-group contaminations. Plain-film radiographs were consistent with the guidelines when “ordered” in the presence of any indicators of potentially serious pathologies (red flags). CT, MRI, bone densitometry (DEXA), bone scan, and ultrasound were consistent with the guidelines at any time in the presence of progressive neurologic deficits, painful or progressive structural deformity, potentially serious pathology (suspected cauda equina syndrome, neoplasia, infection, fracture, abdominal aortic aneurysm, and nonmusculoskeletal causes of chest wall pain including disorders of the heart and lungs), or failed conservative care after 4–6 weeks. Urgent referrals were consistent with the guidelines at any time in the presence of potentially serious pathology. Conservative therapy without imaging was consistent with the guidelines in the presence of uncomplicated musculoskeletal disorders (nontraumatic pain without neurologic deficits or indicators of potentially serious pathologies).

The pretest and the final test were administered to all participants included in the intervention and in the control groups. In order to evaluate the reminder (access to the PowerPoint at midpoint), a posttest was administered at 6–8 weeks to subgroups IG1 and IG2. Subgroup IG2 was instructed to review the PowerPoint, either prior to or while answering the 10 spine case scenarios (Fig 1).

In addition, versions B and C of the questionnaires were crossed over within each of the subgroups undergoing both the posttest and the final test (IG1 and IG2), so that for the posttest, half of participants within each subgroup were first assigned to version B of the questionnaire, while the remaining participants completed version C, and vice versa at the final test. Similarly, half of the participants who were not administered a posttest at midpoint (subgroup IG3 and controls) were assigned to either version B or version C of the questionnaires at the final test (Table 1). This strategy aimed to compensate for possible dissimilarities between successive test versions. All three versions of the questionnaires were balanced and evaluated for face validity by four chiropractic experts in the field of radiology (Diplomates of the American Chiropractic Board of Radiology), who determined the difficulty level. For all versions of the questionnaires, approximately one third of cases did not require any imaging prior to administering conservative care, plain-film radiography was in order for one third of cases, and the remaining scenarios called for specialized imaging studies. All 10 questions were answered using either yes/no or multiple choice (A–E). Each appropriate answer was used to generate a sum score.
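The crossover of questionnaire versions described above can be sketched in a few lines. This is only an illustration of the counterbalancing idea: the function name `assign_crossover`, the participant labels, and the seed are assumptions made for the sketch and do not describe the investigators' actual procedure.

```python
import random


def assign_crossover(participant_ids, seed=None):
    """Assign questionnaire versions B and C in a two-period crossover.

    Half of the participants get version B at the posttest and C at the
    final test; the other half get the reverse order.
    """
    ids = list(participant_ids)
    rng = random.Random(seed)  # seed is an assumption, used only for repeatability
    rng.shuffle(ids)
    half = len(ids) // 2
    schedule = {}
    for position, pid in enumerate(ids):
        first = "B" if position < half else "C"
        second = "C" if first == "B" else "B"
        schedule[pid] = {"posttest": first, "final_test": second}
    return schedule


# Hypothetical subgroup of 26 participants labeled 1..26
example_schedule = assign_crossover(range(1, 27), seed=1)
```

With an odd subgroup size, one order would receive one extra participant in this sketch; the article does not state how such cases were handled.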

Implementation

Each participant was asked to indicate whether or not imaging studies were indicated for each of the 10 cases presented. The estimated time to complete each of the tests was 15–20 minutes. The pretest was administered to the intervention and control groups simultaneously on September 7, 2007 before workshops took place. The radiology workshop was provided on site to the intervention group only. Versions B and C of the questionnaires were administered electronically on a protected website after the conference at 6–8 weeks (posttest) and at 14–16 weeks (final test).

Data Quality Assurance

A username and password were provided to all participants either by e-mail or mail before launching the website. Two electronic reminders were sent to participants failing to complete the online questionnaires by the due date. Assigned questionnaires were mailed to the remaining participants and to those who could not be reached by e-mail due to incorrect addresses. Completion of the pretest, posttest, and final test required participants to enter the following information: 1) group number, 2) username, and 3) password. Responses were later transcribed onto a Microsoft Excel spreadsheet by the principal investigator. Appropriate responses to case scenarios were compared to the gold standard by two independent evaluators. Other methods used to enhance the quality of the data included checking for accuracy, completion, and cross-form consistency of data forms after each measure.

Blinding

While participants and those administering the intervention could not be blinded to the assigned workshop, all were blinded to the version of the questionnaire received, and subgroups IG1 and IG3 were blinded to the reminder that IG2 received at midpoint (online access to the PowerPoint presentation). Those assessing the outcomes were blinded to group assignment. The success of blinding was not evaluated.

Randomization

We conducted a randomized trial with postal follow-ups among a group of Swiss chiropractors attending a continuing education conference. From a separate study taking place simultaneously on an unrelated topic (Patient Safety and Critical Incident Reporting), investigators made a list of all members of the Swiss Chiropractic Association who had agreed to participate.

Sequence Generation

One hundred sixty participants were randomly assigned to one of four groups of 40 individuals according to a computerized random-number generator (http://www.randomizer.org/form.htm). Numbers were randomly generated using a “Math.random” method within the JavaScript programming language by use of a complex algorithm (seeded by the computer's clock).
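For readers who want to reproduce this kind of allocation, a minimal sketch follows. The study used the online tool cited above; the Python function `allocate_equal_groups`, the fixed seed, and the participant identifiers below are assumptions introduced for illustration only.

```python
import random


def allocate_equal_groups(participant_ids, n_groups, seed=None):
    """Shuffle participants and deal them into n_groups equal-sized groups."""
    ids = list(participant_ids)
    if len(ids) % n_groups != 0:
        raise ValueError("participants must divide evenly into groups")
    rng = random.Random(seed)  # a seed is used here only for reproducibility
    rng.shuffle(ids)
    size = len(ids) // n_groups
    # Consecutive slices of the shuffled list form the groups
    return [ids[i * size:(i + 1) * size] for i in range(n_groups)]


# Hypothetical identifiers 1..160, split into four groups of 40
groups = allocate_equal_groups(range(1, 161), n_groups=4, seed=2007)
```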

Allocation Concealment and Implementation

Allocation sequence was concealed until the interventions were assigned. Investigators of the patient safety study generated the allocation sequence, enrolled participants, and assigned participants to their groups. All participants were asked to return a signed consent form. One group of 40 practitioners initially randomized to the control group dropped out from the current study for logistic reasons. For the purpose of a separate study, these practitioners had been assigned to a presentation on patient safety while participants of the current study were undergoing a pretest. Due to the busy conference schedule, it was not possible for this group to undertake the pretest at a later time. The remaining 120 participants were assigned to either the intervention (n = 80) or the control group (n = 40). The intervention group was further randomized into three subgroups, two of which received a posttest at 6–8 weeks. All participants underwent a pretest and a final test at 14–16 weeks. Ethical approval was obtained from the Université du Québec à Trois-Rivières. All participants were instructed not to discuss or share any information related to the study to limit group contamination.

Statistical Analysis

Primary Analysis

All data analyses were carried out according to a pre-established plan (two-way factorial design with repeated measures, A × BR). Chi-squared tests were used to compare demographic data between participants from the intervention and control groups (year of graduation, postgraduate degree, practice frequency, type of practice, on-site access to radiography, average number of spine x-ray series ordered per week, and average number of referrals for special imaging per month). Mean differences before and after the intervention at midpoint and end point were tested using analysis of variance. Differences were considered significant at p < .05. Where such differences were found to be significant, follow-up analyses of single items were performed using unpaired t tests. Post-hoc breaking down of a complex interaction term was performed using Dunnett's control-group criterion.
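As a rough illustration of the chi-squared comparison of a dichotomous baseline characteristic between two groups, the Pearson statistic for a 2 × 2 table can be computed as below. The function name and the example counts are hypothetical, and no continuity correction is applied; the article does not state which variant or software the authors used, so values computed this way may differ from those reported.

```python
def chi_square_2x2(table):
    """Pearson chi-squared statistic (no continuity correction) for a 2x2 table.

    `table` is [[a, b], [c, d]]: rows are groups, columns are yes/no counts.
    Assumes every expected cell count is greater than zero.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    statistic = 0.0
    for i, observed_row in enumerate(table):
        for j, observed in enumerate(observed_row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    return statistic


CRITICAL_VALUE_DF1_ALPHA_05 = 3.841  # chi-squared critical value, df = 1, p < .05

# Hypothetical counts, not taken from the study
example_statistic = chi_square_2x2([[10, 20], [20, 10]])
example_significant = example_statistic > CRITICAL_VALUE_DF1_ALPHA_05
```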

Secondary Analysis

The measure of adherence to guideline recommendations was estimated by calculating (using a 2 × 2 contingency table) the proportion of patients who were not recommended for radiography among cases who did not present any red flags, because we were interested in determining if imaging had not been ordered unnecessarily.24 Since the guidelines state that all patients recommended for radiography should have at least one red flag, the proportion of participants who indicated the need for imaging studies in agreement with guideline recommendations was also calculated (Table 2). Both primary and secondary measures of adherence were calculated using 95% confidence intervals.

Table 2.

A 2 × 2 contingency table outlining how secondary measure of adherence to imaging guidelines was calculated

| Red Flags Based on Guidelines | Self-Reported Radiography: Yes | Self-Reported Radiography: No | Total |
|---|---|---|---|
| Yes | a | b | a + b |
| No | c | d | c + d |
| Total | a + c | b + d | |

Adherence = percentage of cases without red flags not recommended for radiography among all cases without red flags = d / (c + d) × 100%.
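The adherence formula lends itself to a short worked sketch. The confidence interval below uses the normal-approximation (Wald) formula, which is an assumption: the article reports 95% confidence intervals but does not name the method. The function name and the example counts are likewise hypothetical.

```python
import math


def adherence_with_ci(c, d, z=1.96):
    """Return adherence d / (c + d) and a Wald 95% CI, all as percentages.

    c: cases without red flags for which radiography was recommended
    d: cases without red flags for which radiography was not recommended
    """
    total = c + d
    if total == 0:
        raise ValueError("no cases without red flags")
    proportion = d / total
    half_width = z * math.sqrt(proportion * (1 - proportion) / total)
    lower = max(0.0, proportion - half_width)
    upper = min(1.0, proportion + half_width)
    return 100 * proportion, 100 * lower, 100 * upper


# Hypothetical counts, not taken from the study
adherence, ci_low, ci_high = adherence_with_ci(c=50, d=50)
```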

Results

Participant Flow and Recruitment

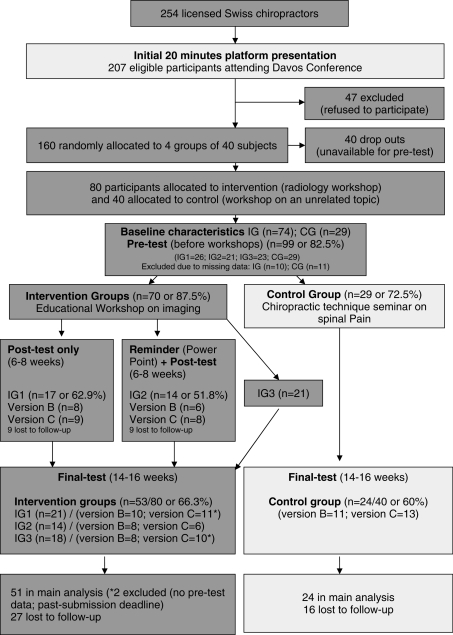

The flow of participants through each stage is shown in Figure 2. Among the 207 licensed Swiss chiropractors registered to attend a continuing education conference in Davos in September of 2007, 160 were randomly assigned to four groups of 40 participants; one group dropped out from the current study because they could not attend the pretest during the afternoon break. Of the remaining 120 participants, 99 (82.5%) participants completed the pretest in Davos, and 79 (65.8%) respondents returned the final tests (53 in the intervention group and 26 in the control group). The intervention group was further divided into three subgroups. Only two subgroups were asked to complete a test at midpoint. Seventeen of the 27 participants (62.9%) from subgroup IG1 and 14 of 27 participants (51.8%) from subgroup IG2 completed and returned the posttest. The final test was administered at 14–16 weeks, between January 31 and February 15, 2008. The total number of questionnaires sent by mail, either because e-mails failed to reach participants or they had not yet responded, was 27 for the posttest and 35 for the final test. Among those, 8 and 14 participants, respectively, answered and mailed back the questionnaires. Six participants were excluded from the main analysis: four questionnaires in the intervention group were incomplete at pretest, one participant in subgroup IG1 returned the final test but failed to complete the pretest, and one in subgroup IG3 passed the submission deadline for the final test.

Figure 2.

Flow diagram of participants through each stage.

Baseline Data

Baseline clinical characteristics of participants in the intervention groups and control groups are presented in Table 3. Globally, 58% of participants graduated after 1991 and less than 20% had a postgraduate degree. Over two thirds of participants were in full-time practice, approximately 30% ordered more than five spine x-ray series per week, and between 14% and 21% referred patients for special imaging of the spine each month. Those in solo practice tended to have on-site access to radiography. A larger proportion of practitioners in the intervention group were in group or multidisciplinary practice. Differences were found for baseline clinical characteristics on two items: on-site access to radiography (χ2 = 5.80, df = 1, p < .05), where the experimental group had greater access (82.4%) than the control group (58.6%), and type of practice (χ2 = 5.03, df = 1, p < .05), where the experimental group was more likely to be in group or multidisciplinary practice (63.5%) than the control group (37.9%). The effect of this on scores obtained for the pretest, the posttest, and the final test for both intervention and control groups was further investigated. For the pretest, means and standard deviations were, respectively, 4.872 ± 1.242 (n = 78) and 4.680 ± 1.492 (n = 25). The Student t test was not significant (t = 0.640, df = 102, p > .05), suggesting that having on-site radiography did not influence general attitude pertaining to ordering imaging studies based on case scenarios presented to participants.

Table 3.

Baseline clinical characteristics (number and percentage) of study sample

| Characteristics | Intervention Group (n = 74) No. (%) | Control Group (n = 29) No. (%) |
|---|---|---|
| 1. Year of graduation | | |
| A) <1960–1980 | 8 (10.8) | 6 (20.7) |
| B) 1981–1990 | 23 (31.1) | 6 (20.7) |
| C) 1991–2000 | 26 (35.1) | 9 (31.0) |
| D) 2001–2007 | 17 (22.9) | 8 (27.6) |
| 2. Postgraduate degree | | |
| A) Yes | 13 (17.6) | 4 (13.8) |
| B) No | 61 (82.4) | 25 (86.2) |
| 3. Practice | | |
| A) Full time | 49 (66.2) | 21 (72.4) |
| B) Part time | 25 (33.8) | 8 (27.6) |
| 4. Type of practice | | |
| A) Solo | 27 (36.5) | 18 (62.1) |
| B) Group or multidisciplinary | 47 (63.5) | 11 (37.9) |
| 5. On-site access to radiography | | |
| A) Yes | 61 (82.4) | 17 (58.6) |
| B) No | 13 (17.6) | 12 (41.4) |
| 6. Average number of spine x-ray series ordered per week | | |
| A) Less than 5 | 42 (56.8) | 21 (72.4) |
| B) Over 5 | 32 (43.2) | 8 (27.6) |
| 7. Average number of referrals for special imaging of the spine per month | | |
| A) Less than 5 | 58 (78.4) | 25 (86.2) |
| B) Over 5 | 16 (21.6) | 4 (13.8) |

Furthermore, scores obtained at the pretest versus the final test, for both intervention and control groups combined, when considering on-site radiography did not appear to differ (t = 0.933, df = 71, p > .05). To test for variability between the intervention and the control groups at the pretest and at the final test, interactions were further dissected using Dunnett's control group method.25 Subgroup interactions with the control group were not significant (t = 0.398 for IG1, t = 1.1255 for IG2, and t = −0.213 for IG3) with Dunnett's criteria (critical t = 2.097, df = 73, k = 4). Similarly, for the pretest, means and standard deviations for both intervention and control groups were, respectively, 5.013 ± 1.566 (n = 43) and 5.121 ± 1.061 (n = 58) for type of practice. The Student t test was not significant (t = 0.375, df = 99, p > .05), suggesting that being in solo practice did not influence general attitude pertaining to ordering imaging studies based on case scenarios presented to participants. Furthermore, differences between scores obtained at the pretest and the final test (intervention and control groups combined) when considering type of practice did not reach significance (t = 1.197, df = 73, p > .05).

Respondents and nonrespondents, both in the intervention and control groups, had similar characteristics, whether the nonresponse occurred for the posttest or the final test (Tables 4 and 5). Nonrespondents (dropouts) typically fall into one of two groups: people who refuse to participate in the survey and those who cannot be reached during data collection. We were unable to distinguish between refusal and noncontact, however.

Table 4.

Comparison of practice characteristics of respondents and nonrespondents to both pretest and posttest

| Characteristics | IG1 Respondents (n = 17) No. (%) | IG1 Nonrespondents (n = 9) No. (%) | IG2 Respondents (n = 14) No. (%) | IG2 Nonrespondents (n = 9) No. (%) |
|---|---|---|---|---|
| 1. Graduated after 1991 | 12 (70.6) | 5 (55.5) | 7 (50.0) | 4 (44.4) |
| 2. Postgraduate degree | 1 (5.9) | 2 (22.2) | 2 (11.7) | 3 (33.3) |
| 3. Full-time practice | 12 (70.6) | 8 (88.8) | 7 (50.0) | 6 (66.7) |
| 4. Solo practice | 7 (41.2) | 4 (44.4) | 4 (28.6) | 3 (33.3) |
| 5. On-site access to radiography | 13 (76.5) | 7 (77.7) | 12 (85.7) | 8 (88.9) |
| 6. Between 5 and 14 spine x-ray series ordered per week | 16 (94.1) | 9 (100) | 9 (64.3) | 5 (55.6) |
| 7. Less than 5 referrals for special imaging of the spine per month | 15 (88.2) | 6 (66.7) | 12 (85.7) | 6 (66.7) |

IG, intervention groups (IG1, subgroup unexposed to reminder but undergoing a posttest at midpoint; IG2, subgroup exposed to reminder and a posttest at midpoint).

Table 5.

Comparison of practice characteristics of respondents and nonrespondents to both pretest and final test

| Characteristics | Intervention Respondents (n = 53) No. (%) | Intervention Nonrespondents (n = 21) No. (%) | Control Respondents (n = 24) No. (%) | Control Nonrespondents (n = 5) No. (%) |
|---|---|---|---|---|
| 1. Graduated after 1991 | 32 (60.4) | 10 (47.6) | 15 (62.5) | 2 (40.0) |
| 2. Postgraduate degree | 8 (15.0) | 5 (23.8) | 4 (16.6) | 0 (0.0) |
| 3. Full-time practice | 34 (64.1) | 15 (71.4) | 18 (75.0) | 3 (60.0) |
| 4. Solo practice | 18 (34.0) | 9 (42.8) | 14 (58.3) | 4 (80.0) |
| 5. On-site access to radiography | 43 (81.1) | 18 (85.7) | 14 (58.3) | 3 (60.0) |
| 6. Between 5 and 14 spine x-ray series ordered per week | 23 (43.3) | 9 (42.8) | 5 (20.8) | 2 (40.0) |
| 7. Less than 5 referrals for special imaging of the spine per month | 40 (75.5) | 16 (76.2) | 21 (87.5) | 4 (80.0) |

IG, intervention groups; CG, control group.

Numbers Analyzed and Outcomes and Estimation

Primary Analysis

Number of participants (denominator) in each group included in each analysis and summary results for each study group obtained at the pretest, posttest, and final tests are presented in Tables 6–8. There was no difference between scores obtained at baseline for the intervention group and the control group (unpaired Student t test = 0.065, df = 98, p > .05) (Table 6). However, a significant increase in guideline-consistent behavior among clinicians assigned to receive the multifaceted educational intervention (ie, online reminder at midpoint in addition to the radiology workshop) was found. Scores for the subgroup that was provided access to the online reminder (PowerPoint presentation) at 8–10 weeks (IG2) increased at the posttest by 16.4% compared to baseline. Mean difference reached 5% significance level (t = 1.924, df = 13, p = .077) and this performance was significantly greater than the comparison group (IG1) for the same period [0.647 (2.06), 95% CI, −0.33 to 1.63] (F = 4.486, df = 1 and 30, p = .043) (Table 7).

Table 6.

Summary results (mean scores and standard deviations) for each study group for the three measurements (pretest, posttest, and final test)

| Group | Pretest n | Pretest Mean Score (SD) | Posttest n | Posttest Mean Score (SD) | Final Test n | Final Test Mean Score (SD) |
|---|---|---|---|---|---|---|
| IG1 | 26 | 5.35 (1.52) | 17 | 4.82 (1.55) | 21 | 4.86 (1.53) |
| IG2 | 21 | 4.70 (0.93) | 14 | 5.57 (1.02) | 14 | 5.43 (1.60) |
| IG3 | 23 | 4.84 (1.40) | X | X | 17 | 4.65 (1.37) |
| CG | 29 | 4.45 (1.15) | X | X | 24 | 4.79 (1.38) |

IG, intervention groups (IG1, subgroup unexposed to reminder but undergoing a posttest at midpoint; IG2, subgroup exposed to reminder and a posttest at midpoint; IG3, subgroup unexposed to reminder and receiving no posttest); CG, control group; n, number of participants completing the competency tests; SD, standard deviation; X, subgroups IG3 and CG did not undergo a posttest.

Table 7.

Summary results (mean scores and standard deviations) for two subgroups exposed to an educational intervention (IG1 and IG2) for pretest and posttest at 8–10 weeks where only one subgroup (IG2) had a reminder at midpoint

| Groups^a | Pretest Mean (SD) | Posttest Mean (SD) | Change Mean (SD) | 95% CI | Percent Change | p Value |
|---|---|---|---|---|---|---|
| IG1 (n = 17) | 5.412 (1.33) | 4.765 (1.52) | 0.647 (2.06) | −0.33 to 1.63 | −11.9% | |
| IG2 (n = 14) | 4.786 (0.89) | 5.571 (1.02) | 0.785 (1.53) | −0.92 to 1.59 | +16.4% | |
| Between-group comparison | | | | | | F = 4.486, p = .043 |

^a Includes respondents who completed both pretest and posttest. IG, intervention groups (IG1, subgroup unexposed to reminder but undergoing a posttest at 8–10 weeks; IG2, subgroup exposed to reminder at midpoint and to a posttest at 8–10 weeks); n, number of participants completing the competency tests; SD, standard deviation. A higher score at the posttest indicates performance improved over time.
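As a rough check on the within-group result for IG2, the t statistic can be recomputed from the change score summary in Table 7. The sketch below assumes the reported test was a paired (one-sample) t test on the change scores, which is consistent with df = 13 for n = 14 but is not stated explicitly in this section. The function name and the hard-coded critical values (taken from standard Student t tables for df = 13) are illustrative choices, not part of the original analysis.

```python
import math


def paired_t(mean_change, sd_change, n):
    """t statistic for a mean change tested against zero, from summary statistics."""
    return mean_change / (sd_change / math.sqrt(n))


# IG2 change score as reported in Table 7: mean 0.785, SD 1.53, n = 14
t_ig2 = paired_t(0.785, 1.53, 14)
df = 14 - 1

# Critical values of Student's t for df = 13 at alpha = .05 (standard tables)
T_CRIT_TWO_TAILED = 2.160
T_CRIT_ONE_TAILED = 1.771

print(f"t = {t_ig2:.3f}, df = {df}")
print("exceeds two-tailed critical value:", abs(t_ig2) > T_CRIT_TWO_TAILED)
print("exceeds one-tailed critical value:", t_ig2 > T_CRIT_ONE_TAILED)
```

With the rounded table values, the statistic comes out close to the reported t = 1.924; small discrepancies are expected because the published means and standard deviations are rounded.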

Table 8.

Mean scores and standard deviations obtained in pretest and final test (14–16 weeks) for four groups of practitioners: all those exposed to a radiology workshop (IG1, IG2, and IG3) and control group (CG)

| Groups^a | Pretest Mean (SD) | Final Test Mean (SD) | Change Mean (SD) | 95% CI | Percent Change | p Value |
|---|---|---|---|---|---|---|
| IG1 (n = 20) | 5.200 (1.28) | 4.900 (1.55) | 0.300 (1.45) | −0.34 to 0.94 | −5.8% | |
| IG2 (n = 14) | 4.857 (0.95) | 5.500 (1.56) | 0.643 (1.55) | −0.17 to 1.45 | +13.2% | |
| IG3 (n = 17) | 4.588 (1.46) | 4.647 (1.37) | 0.059 (1.71) | −0.75 to 0.87 | +1.3% | |
| CG (n = 24) | 4.542 (1.07) | 4.791 (1.38) | 0.250 (0.89) | −0.11 to 0.61 | +5.5% | |
| Between-group comparison | | | | | | F = 1.117, p = .348 |

^a Includes respondents who completed both pretest and final test. IG, intervention groups (IG1, subgroup unexposed to reminder but undergoing a posttest at midpoint; IG2, subgroup exposed to reminder and undergoing a posttest at midpoint; IG3, subgroup unexposed to reminder and receiving no posttest); CG, control group; n, number of participants completing the competency tests; SD, standard deviation. A higher score at the final test indicates performance improved over time.
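The confidence intervals reported in Table 8 appear consistent with a normal-approximation interval around the mean change, that is, mean ± 1.96 × SD/√n. This is an inference from the published numbers rather than a method stated in this section, so the sketch below should be read as an assumption-based check; the function and variable names are illustrative.

```python
import math

Z_95 = 1.96  # normal quantile for a two-sided 95% interval (assumed method)


def change_ci(mean_change, sd_change, n, z=Z_95):
    """Normal-approximation confidence interval for a mean change score."""
    half_width = z * sd_change / math.sqrt(n)
    return mean_change - half_width, mean_change + half_width


# (mean change, SD of change, n) as reported in Table 8
table8 = {
    "IG1": (0.300, 1.45, 20),
    "IG2": (0.643, 1.55, 14),
    "IG3": (0.059, 1.71, 17),
    "CG": (0.250, 0.89, 24),
}

intervals = {group: change_ci(*stats) for group, stats in table8.items()}
for group, (low, high) in intervals.items():
    print(f"{group}: {low:.2f} to {high:.2f}")
```

Every interval includes zero, which agrees with the nonsignificant overall comparison at the final test.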

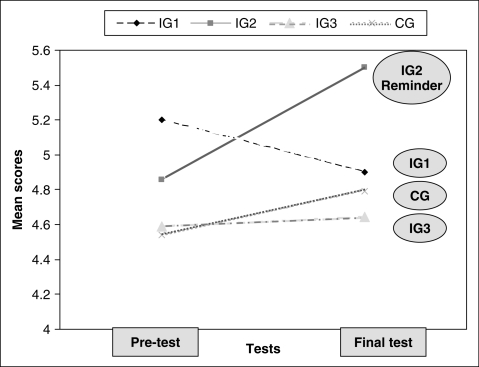

The subgroup with access to the reminder at midpoint (IG2) continued to perform better at 14–16 weeks compared to baseline (13.2% improvement), although overall scores for the pretest and the final test for all four groups were not significantly different (F = 1.117, df = 1 and 74, p = .348). In contrast, the performance of the comparison group (IG1) that was not provided access to a reminder at midpoint had decreased by 5.8% at the final test compared to baseline. There were virtually no changes in performance for the intervention group receiving no reminder and no posttest (IG3 = 1.3%), and a slight (nonsignificant) increase in guideline-consistent performance from baseline for the control group at the end of the study (5.5%).
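The percentage changes quoted above follow from the pretest and final test means in Table 8. A minimal sketch of that arithmetic, assuming percent change is defined as (final − pretest) / pretest × 100 (the definition is inferred from the reported figures, and the names used are illustrative):

```python
def percent_change(pretest_mean, later_mean):
    """Relative change from the pretest mean, expressed as a percentage."""
    return 100.0 * (later_mean - pretest_mean) / pretest_mean


# (pretest mean, final test mean) as reported in Table 8
final_means = {
    "IG1": (5.200, 4.900),
    "IG2": (4.857, 5.500),
    "IG3": (4.588, 4.647),
    "CG": (4.542, 4.791),
}

changes = {group: percent_change(pre, post) for group, (pre, post) in final_means.items()}
for group, change in changes.items():
    print(f"{group}: {change:+.1f}%")
```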

Mean scores obtained for each study group for the pretest and posttest, and for the pretest and final test, are presented in Tables 7 and 8, respectively. Pretest data differ slightly between the two tables because some participants were excluded from the main analysis [missing data at the final test for one participant from an intervention group (IG1) and two from the control group; one other participant from subgroup IG3 was excluded because the questionnaire was received after the deadline].

Secondary Analysis

Adherence rates to guideline recommendations (percentage agreement of clinicians' responses compared with the gold standard) are presented in Tables 9 and 10. Each of the competency tests contained three case studies without mention of any red flags and where no disease was expected. We used a 2 × 2 contingency table to estimate the adherence to guideline recommendations (gold standard) as the proportion of cases not recommended for radiography among cases without red flags. Adherence rates were 50.5% (95% CI, 39.1–61.8) for the intervention group and 43.7% (95% CI, 23.7–63.6) for the control group at baseline (Table 9). The difference was not significant (Student t test = 1.260, df = 101, p > .05). At midpoint, the adherence rate for the subgroup with access to the online reminder (IG2) was 38.1% (95% CI, 12.4–63.8) compared to 29.3% (95% CI, 7.5–51.4) for the subgroup without access (IG1) (unpaired Student t test = 0.604, df = 29, p > .05). Adherence rates at the final test were 33.9% (95% CI, 20.4–46.3) for the intervention group compared to 19.5% (95% CI, 3.5–35.4) for the control group (unpaired Student t test = 1.840, df = 73, p = .07) (Table 10).

Table 9.

Percentage agreement of clinicians' responses compared with gold standard (adherence rate) at pretest for intervention and control groups

| | Case 1 | Case 2 | Case 3 | Case 4 | Case 5 | Case 6 | Case 7 | Case 8 | Case 9 | Case 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Intervention (n = 74) | | | | | | | | | | |
| Yes | 6 | 40 | 16 | 62 | 64 | 40 | 38 | 72 | 6 | 33 |
| No | 68 | 34 | 58 | 12 | 10 | 34 | 36 | 2 | 68 | 41 |
| Gold standard | No | No | Rx | CT/MRI | No | US | MRI | MRI | Rx | Rx + add |
| % agreement | 91.9 | 45.9 | 21.6 | 83.8 | 13.5 | 54 | 51.4 | 97.3 | 8.1 | 44.6 |
| Control (n = 29) | | | | | | | | | | |
| Yes | 4 | 18 | 6 | 19 | 27 | 14 | 13 | 29 | 7 | 17 |
| No | 25 | 11 | 23 | 10 | 2 | 15 | 16 | 0 | 22 | 12 |
| Gold standard | No | No | Rx | CT/MRI | No | US | MRI | MRI | Rx | Rx + add |
| % agreement | 86.2 | 37.9 | 20.7 | 65.5 | 6.9 | 48.3 | 44.8 | 100 | 24 | 58.6 |

Rx, plain films; Rx + add, plain films conventional views and additional views; CT, computed tomography scans; MRI, magnetic resonance scans; US, diagnostic ultrasound.
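The percentage agreement values in Table 9, and the primary adherence rate derived from them, can be recomputed from the Yes/No counts. The sketch below assumes that "Yes" means the respondent would order imaging, so a response agrees with the gold standard when it is "No" for a no-imaging case and "Yes" otherwise. It also assumes the primary adherence rate pools the three cases whose gold standard is "No"; both readings are inferred from the table and the reported rates, and all names are illustrative.

```python
GOLD = ["No", "No", "Rx", "CT/MRI", "No", "US", "MRI", "MRI", "Rx", "Rx + add"]

# Yes/No counts per case at pretest, as reported in Table 9
INTERVENTION_YES = [6, 40, 16, 62, 64, 40, 38, 72, 6, 33]
INTERVENTION_NO = [68, 34, 58, 12, 10, 34, 36, 2, 68, 41]
CONTROL_YES = [4, 18, 6, 19, 27, 14, 13, 29, 7, 17]
CONTROL_NO = [25, 11, 23, 10, 2, 15, 16, 0, 22, 12]


def agreement(yes, no, gold):
    """Percentage of respondents whose decision matches the gold standard."""
    matches = no if gold == "No" else yes
    return 100.0 * matches / (yes + no)


def per_case_agreement(yes_counts, no_counts):
    """Agreement for each case scenario, in table order."""
    return [agreement(y, n, g) for y, n, g in zip(yes_counts, no_counts, GOLD)]


def pooled_no_imaging_adherence(yes_counts, no_counts):
    """Share of 'No' responses pooled over cases where no imaging is indicated."""
    cases = [i for i, g in enumerate(GOLD) if g == "No"]
    correct = sum(no_counts[i] for i in cases)
    total = sum(yes_counts[i] + no_counts[i] for i in cases)
    return 100.0 * correct / total


intervention_cases = per_case_agreement(INTERVENTION_YES, INTERVENTION_NO)
intervention_rate = pooled_no_imaging_adherence(INTERVENTION_YES, INTERVENTION_NO)
control_rate = pooled_no_imaging_adherence(CONTROL_YES, CONTROL_NO)

print([round(value, 1) for value in intervention_cases])
print(f"intervention: {intervention_rate:.1f}%, control: {control_rate:.1f}%")
```

Under these assumptions the pooled rates land close to the 50.5% and 43.7% baseline adherence figures reported in the text.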

Table 10.

Percentage agreement of clinicians' responses compared with gold standard (adherence rate) at final test for intervention and control groups

| Case | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Intervention | | | | | | | | | | |
| Version B (n = 26) | | | | | | | | | | |
| Yes | 24 | 24 | 17 | 3 | 12 | 19 | 15 | 11 | 22 | 6 |
| No | 2 | 2 | 9 | 23 | 14 | 7 | 11 | 15 | 4 | 20 |
| Gold standard | MRI | Ref | No | Rx | Bone S | Rx + add | US | Rx | No | No |
| % agreement | 92.3 | 92.3 | 34.6 | 11.5 | 46.2 | 73.1 | 60.0 | 42.3 | 15.4 | 76.9 |
| Version C (n = 25) | | | | | | | | | | |
| Yes | 18 | 11 | 11 | 21 | 7 | 21 | 20 | 11 | 20 | 11 |
| No | 8 | 15 | 24 | 5 | 19 | 4 | 5 | 14 | 6 | 22 |
| Gold standard | No | Rx | MRI | No | DEXA | Rx | Ref | MRI | No | Rx |
| % agreement | 30.8 | 42.3 | 45.8 | 19.2 | 26.9 | 84 | 80 | 44 | 23 | 42.1 |
| Control | | | | | | | | | | |
| Version B (n = 11) | | | | | | | | | | |
| Yes | 11 | 10 | 8 | 2 | 2 | 6 | 6 | 1 | 10 | 4 |
| No | 0 | 1 | 3 | 9 | 9 | 4 | 5 | 10 | 1 | 7 |
| Gold standard | MRI | Ref | No | Rx | Bone S | Rx + add | US | Rx | No | No |
| % agreement | 100 | 90.9 | 27.3 | 15.4 | 18.2 | 54.5 | 54.5 | 9.1 | 9.1 | 63.6 |
| Version C (n = 13) | | | | | | | | | | |
| Yes | 12 | 7 | 9 | 11 | 6 | 13 | 10 | 9 | 13 | 8 |
| No | 1 | 6 | 4 | 2 | 7 | 0 | 3 | 4 | 0 | 5 |
| Gold standard | No | Rx | MRI | No | DEXA | Rx | Ref | MRI | No | Rx |
| % agreement | 7.7 | 53.8 | 69.2 | 15.4 | 46.2 | 100 | 76.9 | 69.2 | 0 | 61.5 |

Rx, plain films; Rx + add, plain films conventional views and additional views; CT, computed tomography scans; MRI, magnetic resonance scans; US, diagnostic ultrasound; Bone S, bone scans; DEXA, dual-energy x-ray absorptiometry; Ref, urgent medical referral.

The secondary measure of adherence to guideline recommendations (gold standard) is defined as the proportion of cases containing at least one red flag among all case scenarios where imaging studies were recommended. Adherence rates were 51.5% (95% CI, 40.2–62.9) for the intervention group and 51.7% (95% CI, 33.5–69.9) for the control group at the pretest. For the posttest, this value was 63.3% (95% CI, 37.8–88.6) in the group (IG2) having access to the reminder at midpoint versus 56.3% (95% CI, 32.6–79.9) in the comparison group (IG1). For the final test, the results were 56.3% (95% CI, 43.0–69.9) for the intervention group compared to 59.5% (95% CI, 39.8–79.2) for the control group (Tables 9 and 10).

Protocol Deviation from Study as Planned

The posttest and the reminder were administered 2 weeks after the intended date (at 8–10 weeks) due to technical problems encountered.

Discussion

Interpretation

This study aimed to compare the self-reported diagnostic imaging ordering practices of a group of Swiss chiropractors before and after a multifaceted educational intervention. Forty-four percent of participants were in solo practice, while 56% were in either a group or multidisciplinary practice. Results from a 2003 survey among 254 Swiss chiropractors suggested that 60% were working in a solo practice, 30% in a practice with more than one chiropractor, and 10% in a multidisciplinary environment.26 This difference, significant at the 1% level (z = 2.844), may reflect a recent trend to join group or multidisciplinary practices.27, 28

Group characteristics were similar for both the intervention and control groups, except for the number of practitioners having on-site radiography and for type of practice (solo or group/multidisciplinary). It has previously been shown that self-referral increases utilization of diagnostic imaging29, 30; however, in our study, intention to prescribe imaging studies based on case scenarios provided at the pretest or at the final test was not influenced by having on-site access to radiography or by the type of practice. Nonetheless, the fact that groups were not balanced does not rule out the possibility of high-level interactions.

An educational intervention strategy alone did not improve self-reported decision-making ability as to whether or not imaging studies were needed based on the case scenarios presented, whereas a multifaceted intervention consisting of an educational workshop on radiology and reminder at midpoint significantly improved the appropriate response rate (Table 7). While previous studies have suggested that online continuing education (CE) can stimulate knowledge transfer,21 our results should be interpreted with caution in light of the reverse relationship between scores obtained for the two subgroups receiving the original educational intervention (Fig. 3). Furthermore, results at end point were not statistically significant (Table 8).

Figure 3.

Scores obtained in the pretest (1) and in the final test (2) for all three intervention groups (subgroups IG1, IG2, IG3) and the control group (CG).

Overall performance of the subgroup who could not access the PowerPoint presentation at 8–10 weeks (IG1) declined over time, whereas scores for subgroup IG3 and the control group did not truly change compared to baseline. Failing to measure the intention to prescribe diagnostic imaging studies immediately or soon after the initial lecture presentation somewhat limits the interpretation of the lower performance of subgroup IG1. It could be argued that the on-site presentation (radiology workshop) actually confused some participants, suggesting that the radiology workshop lacked relevance or that the format used to disseminate recommendations was inappropriate. Since the workshop was provided by academic experts in the fields of radiology and clinical sciences, both primary authors of the recently published diagnostic imaging guidelines31 that were used as the gold standard to compare responses for the current study, one may assume that the content was relevant. Although the level of appreciation from participants assigned to this workshop was high according to the conference organizing committee evaluation, level of satisfaction does not necessarily translate into better understanding or an intention to change practice. Alternatively, the radiology workshop may have sensitized participants to the importance of x-ray, resulting in fewer prescriptions of specialized imaging studies even when required.

High baseline scores for the pretest in both the intervention and the control groups would suggest that adherence to guidelines was already high, possibly explaining the lack of difference after the intervention (little room for further improvement). In addition to a mandatory 2-year postgraduate training prior to obtaining a full license in Switzerland, the number of mandatory CE hours per year for Swiss chiropractors is among the highest in the world. Such characteristics influence guideline acceptance, an important feature of knowledge transfer.14 More training could lead to better overall performance, thereby causing a ceiling effect. This did not seem to be the case, however, because the proportion of vignettes without red flags where no radiography was prescribed (primary measure of adherence) and the proportion of those with red flags where imaging studies were appropriately requested (secondary measure of adherence) were approximately 50% for all groups at baseline. This suggests that adherence to evidence-based diagnostic imaging guidelines was fair to moderate at the onset of the study. Secondary analysis of intervention effectiveness revealed a 7% increase in guideline-consistent behavior among clinicians assigned to receive the radiology workshop and the reminder at midpoint (posttest). Measures of guideline adherence did not differ significantly at end point.

Another explanation for the absence of significance of the study results may be that the questionnaires themselves failed to test similar domains or to measure a unified construct, because Cronbach alpha scores were quite low for all three questionnaires, suggesting a lack of internal consistency. Furthermore, the preintervention platform presentation offered to all practitioners prior to the workshop may have influenced participants' attitudes toward the behavior we intended to change, that is, the self-reported practice of ordering images. Attitudes toward behavior constitute one of three variables which the theory of planned behavior suggests will predict the intention to perform a behavior.32 According to this motivational theory, intentions are the precursors of behavior. These intentions are determined largely by perceived social norms, perceived behavior control, and attitudes toward behavior. The radiology workshop may have influenced perceived social norms (social pressure to perform or not perform the target behavior considering the continuing education conference was organized by the Swiss Chiropractic Association) and perceived control related to the behavior (extent to which a person feels able to enact the behavior considering the interactive format of the case presentations during the workshop). The preintervention presentation discussed the various phases involved in the guideline development process and reviewed basic concepts relating to clinical decision making and ionizing radiation exposure, thereby aiming to convince practitioners that the proposed recommendations were sound and that appropriate use of imaging was important. Such discussion may have influenced participants' attitudes toward imaging. 
In addition, attitudes toward behavior are proposed to arise from a combination of beliefs about its consequences (behavioral beliefs) and evaluations of those consequences (outcome evaluations).33 Information provided during the preintervention also aimed to address potential questions from the audience, such as the following: What would happen if no x-rays were ordered? What is the risk/benefit ratio of imaging? What are the costs of imaging and what are the costs of the consequences? Additionally, does the evidence suggest that routine imaging is a good practice? These questions compose the theoretical constructs of the beliefs about consequences, one of the 12 domains recently identified to explain behavior change.34 While normally included in interviews of health care professionals to assist in explaining a behavior and in designing a behavior change intervention,34, 35 information covering these various topics during the preintervention may have had an important role to play. Attitude toward prescribing imaging studies and beliefs about consequences were shown to be significant components of behavior change in previous studies.33, 36 It is therefore possible that the preintervention platform presentation significantly influenced participants' attitudes toward self-reported ordering practice and beliefs about consequences, both apparent determinants of the intention to perform a simulated behavior. Between-group contamination may explain the lack of differences observed in our study. However, this post-hoc interpretation remains speculative because variables underlying behavioral theories were not measured in our study.

Using multiple interventions and focusing only on one or two recommendations at a time may be preferable. In a quasi-experimental study comparing outcomes before and after an educational intervention with those of a control community, a significant reduction in self-reported need for plain radiography for uncomplicated acute low back pain (LBP) and for patients with acute LBP of less than 1 month was seen in the intervention community compared to controls.19 The interventions in that study included the following strategies: focus group session, workshop meeting, handout material (key research papers), decision aid tool (checklist for x-ray use), one-on-one meeting with researcher, and news release (educating the public). Unfortunately, the size of the audience, time constraints, long distances, and budget constraints prevented several of the strategies found useful in the study by Ammendolia et al19 from being applied to the current study.

Chiropractic students undergo extensive training in the field of radiology in all accredited colleges. Recently, interns in their final year were shown to have the ability to detect and recognize the need to x-ray patients according to published evidence-based guidelines.37 While interns were not consistent in choosing the correct views, agreement with the gold standards for the question of whether or not they would take x-rays ranged from 63.2% to 100%. A high level of adherence to guidelines was also reported in a clinical cohort study for patients with a new episode of LBP who presented to one of six outpatient teaching chiropractic clinics.24 The proportion of patients without red flags who were not recommended for radiography ranged from 89.4% to 94.7%, suggesting a strong adherence to radiography evidence-based guidelines. Of interest, radiography was only recommended for 12.3% of patients, although the proportion of patients with red flags ranged from 45.3% to 70.5%. This low utilization rate may be partly explained by the fact that many common red flags are nonpathologic, such as age over 50, decision regarding career or athletics, and pain worst lying down and/or at night in bed.23 Current utilization rates observed in community practice are estimated at approximately 25% in Switzerland6 and 37% in Canada.27 It is envisioned that adherence to imaging guidelines in professional practice will continue to improve in the upcoming years as new graduates enter practice and more effective educational intervention strategies are implemented.

Study Limitations

This study has several limitations. There were no measurements immediately or soon after the educational intervention, thereby limiting interpretation of the immediate effect of the intervention. This study was underpowered. Sample size estimates for a larger trial with a group receiving a reminder at midpoint suggest that 71 subjects would be needed for a power level of 80% at a significance level of .05, and 222 subjects for a power level of 90%. In addition, half of the participants randomized to the control group dropped out of the current study for logistic reasons, having been assigned to a presentation on patient safety by organizers of the conference at the time the pretest was administered. The loss of the data for this group was not related to the intervention; hence there is no attrition bias in this study. Although some questions from the pretest were included in both the posttest and final test, and some questions from the posttest and from the final test were included in each other, it was not possible to include questions from the two posttests into the pretest. This was partially compensated for, however, by the fact that versions B and C of the questionnaires were crossed over at the posttest and at the final test. In addition, while levels of difficulty for all questions were assessed qualitatively by four independent experts, quantitative assessments were not done systematically, and a thorough study of the content and concurrent validity of the questionnaires was missing. A written clinical vignette does not replace patient–doctor interactions and can only measure intention to prescribe. Finally, technical difficulties encountered may have disenchanted some participants, partly explaining the lower level of participation at midpoint and possibly at end-point measurements.

Generalizability (external validity) of the trial findings is limited due to the study limitations previously discussed, including lack of internal consistency and absence of test–retest reliability of the questionnaires, small sample size, and unbalanced study groups.

It is now suggested that the choice of dissemination and implementation strategies be based on characteristics of the evidence or the guidelines themselves, the obstacles and incentives for change, and the likely costs and benefits of different strategies.14, 38, 39 Interventions tailored to prospectively identify barriers may improve care and patient outcomes.40 While barriers to the diagnostic imaging guidelines were partly addressed in a previous study,22 the interventions were not underpinned by a health psychology theory. Since the interaction of factors at multiple levels may influence the success or failure of quality improvement interventions, an understanding of these factors, including the theoretical assumptions and hypotheses behind these factors, is a recommended initial step, because it enables the consideration of theory-based interventions for quality improvement.41, 42

Future studies should limit the number of behaviors that are targeted, aim to identify perceived barriers and facilitators to the utilization of diagnostic imaging guidelines for management of adult musculoskeletal disorders according to a theoretical approach, identify the best strategies to overcome these barriers, and apply tailored interventions to influence implementation of evidence-based practice.11, 12, 40, 43

Conclusion

Results from this study suggest that online access to specific recommendations while making a clinical decision to prescribe diagnostic imaging studies for adult spinal disorders deserves further study. The findings agree with the current understanding that a didactic presentation alone has limited ability to bring about behavior change. These findings should be interpreted with caution considering the small sample size.

Conflict of Interest

The authors have no conflicts of interest to declare.

Acknowledgments

We are very grateful to Beatrice Zaugg, DC, MME, and Martin Wangler, DC, MME, from the Swiss Academy for Chiropractic for making this study possible by providing access to groups previously randomized for a study on patient safety attitude change and for coordinating the schedule for the pretest and workshops. We wish to acknowledge all four chiropractic radiologists who agreed to evaluate case scenarios and the three versions of the questionnaires. Special thanks to all study participants, as well as to Yves Girouard, PhD, for his input on the study design.

Contributor Information

André E. Bussières, Université du Québec à Trois-Rivières and University of Ottawa.

Louis Laurencelle, Université du Québec à Trois-Rivières.

Cynthia Peterson, Swiss Academy for Chiropractic.

References

- 1.Owen JP, Rutt G, Keir MJ, et al. Survey of general practitioners' opinions on the role of radiology in patients with low back pain. Br J Gen Pract. 1990;40(332):98–101. [PMC free article] [PubMed] [Google Scholar]

- 2.Halpin SF, Yeoman L, Dundas DD. Radiographic examination of the lumbar spine in a community hospital: an audit of current practice. Br Med J (Clin Res Ed) 1991;303(6806):813–5. doi: 10.1136/bmj.303.6806.813. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Houben PHH, der van Weijden T, Sijbrandij J, Grol RPTM, Winkens RA. Reasons for ordering spinal x-ray investigations: how they influence general practitioners' management. Can Fam Physician. 2006;52(10):1266–7. [PMC free article] [PubMed] [Google Scholar]

- 4.Dunnick NR, Applegate KE, Arenson RL. The inappropriate use of imaging studies: a report of the 2004 Intersociety Conference. J Am Coll Radiol. 2005;2(5):401–6. doi: 10.1016/j.jacr.2004.12.008. [DOI] [PubMed] [Google Scholar]

- 5.Ammendolia C, Bombardier C, Hogg-Johnson S, Glazier R. Views on radiography use for patients with acute low back pain among chiropractors in an Ontario community. J Manipulative Physiol Ther. 2002;25(8):511–20. doi: 10.1067/mmt.2002.127075. [DOI] [PubMed] [Google Scholar]

- 6.Aroua A, Decka I, Robert J, Vader JP, Valley JF. Chiropractor's use of radiography in Switzerland. J Manipulative Physiol Ther. 2003;26(1):9–16. doi: 10.1067/mmt.2003.10. [DOI] [PubMed] [Google Scholar]

- 7.Beck RW, Holt KR, Fox MA, Hurtgen-Grace KL. Radiographic anomalies that may alter chiropractic intervention strategies found in a New Zealand population. J Manipulative Physiol Ther. 2004;27(9):554–9. doi: 10.1016/j.jmpt.2004.10.008. [DOI] [PubMed] [Google Scholar]

- 8.Bussières A, Taylor JA, Peterson C. Diagnostic imaging practice guidelines for musculoskeletal complaints in adults—an evidence-based approach. Part 3: Spinal disorders. J Manipulative Physiol Ther. 2008;31(1):33–88. doi: 10.1016/j.jmpt.2007.11.003. [DOI] [PubMed] [Google Scholar]

- 9.Field MJ, Lohr KN. Clinical practice guidelines: directions for a new program. Washington, DC: National Academy Press; 1990. p. 38. [PubMed] [Google Scholar]

- 10.Woolf SH, Grol R, Hutchinson A, Eccles M, Grimshaw J. Clinical guidelines: potential benefits, limitations, and harms of clinical guidelines. Br Med J (Clin Res Ed) 1999;318(7182):527–30. doi: 10.1136/bmj.318.7182.527. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Grimshaw JM, Eccles M, Thomas R, et al. Toward evidence-based quality improvement. Evidence (and its limitations) of the effectiveness of guideline dissemination and implementation strategies 1966–1998. J Gen Intern Med. 2006;21(Suppl 2):S14–20. doi: 10.1111/j.1525-1497.2006.00357.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Grimshaw JM, Thomas RE, Maclennan G, et al. Effectiveness and efficiency of guideline dissemination and implementation strategies. Health Technol Assess. 2004;8(6):iii. doi: 10.3310/hta8060. [DOI] [PubMed] [Google Scholar]

- 13.Cochrane Effective Practice and Organisation of Care Group [homepage on the Internet] Ottowa: the association; © 2007–2009 [cited 2007 Oct 5]; Available from: http://www.epoc.cochrane.org/en/index.html. [Google Scholar]

- 14.Grol R, Grimshaw J. From best evidence to best practice: effective implementation of change in patients' care. Lancet. 2003;362(9391):1225–30. doi: 10.1016/S0140-6736(03)14546-1. [DOI] [PubMed] [Google Scholar]

- 15.Freemantle N, Harvey EL, Wolf F, Grimshaw JM, Grilli R, Bero LA. Printed educational materials: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2000;(2):CD000172. doi: 10.1002/14651858.CD000172. [DOI] [PubMed] [Google Scholar]

- 16.Doherty S. Evidence-based implementation of evidence-based guidelines. Int J Health Care Qual Assur Inc Leadersh Health Serv. 2006;19(1):32–41. doi: 10.1108/09526860610642582. [DOI] [PubMed] [Google Scholar]

- 17.Forsetlund L, Bjørndal A, Rashidian A, et al. Continuing education meetings and workshops: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2009;(2):CD003030. doi: 10.1002/14651858.CD003030.pub2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Coomarasamy A, Khan KS. What is the evidence that postgraduate teaching in evidence based medicine changes anything? A systematic review. Br Med J (Clin Res Ed) 2004;329(7473):1017. doi: 10.1136/bmj.329.7473.1017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Ammendolia C, Hogg-Johnson S, Pennick V, Glazier R, Bombardier C. Implementing evidence-based guidelines for radiography in acute low back pain: a pilot study in a chiropractic community. J Manipulative Physiol Ther. 2004;27(3):170–9. doi: 10.1016/j.jmpt.2003.12.021. [DOI] [PubMed] [Google Scholar]

- 20.Eccles MP, Grimshaw J, Walker A, Johnston M, Pitts N. Changing the behavior of healthcare professionals: the use of theory in promoting the uptake of research findings. J Clin Epidemiol. 2005;58(2):107–12. doi: 10.1016/j.jclinepi.2004.09.002. [DOI] [PubMed] [Google Scholar]

- 21.Vollmar HC, Schurer-Maly C, Frahne J, Lelgemann M, Butzlaff M. An e-learning platform for guideline implementation—evidence- and case-based knowledge translation via the Internet. Methods Inf Med. 2006;45(4):389–96. [PubMed] [Google Scholar]

- 22.Bussières A, Peterson C, Taylor JA. Diagnostic imaging practice guidelines for musculoskeletal complaints in adults—an evidence-based approach: introduction. J Manipulative Physiol Ther. 2007;30(9):617–83. doi: 10.1016/j.jmpt.2007.10.003. [DOI] [PubMed] [Google Scholar]

- 23.Simmons ED, Guyer RD, Graham-Smith A, Herzog R. Radiographic assessment for patients with low back pain. Spine. 1995;20(16):1839–41. doi: 10.1097/00007632-199508150-00017. [DOI] [PubMed] [Google Scholar]

- 24.Ammendolia C, Côté P, Hogg-Johnson S, Bombardier C. Do chiropractors adhere to guidelines for back radiographs? A study of chiropractic teaching clinics in Canada. Spine. 2007;32(22):2509–14. doi: 10.1097/BRS.0b013e3181578dee. [DOI] [PubMed] [Google Scholar]

- 25.Winer BJ. Statistical principles in experimental design. New York: McGraw-Hill Book Company; 1971. p. 907. [Google Scholar]

- 26.Robert J. The multiple facets of the Swiss chiropractic profession. Eur J Chiropr. 2003;50:199–210. [Google Scholar]

- 27.Buhlman MA Canadian Chiropractic Resources Databank. National Report. Toronto: Intellipulse Inc., Public Affairs and Marketing Research; 2007. p. 98. [Google Scholar]

- 28.Christensen MG, Kollasch MW, Ward R, et al. Job analysis of chiropractic 2005: a project report, survey analysis, and summary of the practice of chiropractic within the United States. Greeley, CO: National Board of Chiropractic Examiners; 2005. [Google Scholar]

- 29.Levin DC, Rao VM. Turf wars in radiology: the overutilization of imaging resulting from self-referral. J Am Coll Radiol. 2004;1(3):169–72. doi: 10.1016/j.jacr.2003.12.009. [DOI] [PubMed] [Google Scholar]

- 30.Gazelle GS, Halpern EF, Ryan HS, Tramontano AC. Utilization of diagnostic medical imaging: comparison of radiologist referral versus same-specialty referral. Radiology. 2007;245(2):517–22. doi: 10.1148/radiol.2452070193. [DOI] [PubMed] [Google Scholar]

- 31.Bussières A, Peterson C, Taylor JA. Diagnostic imaging guideline for musculoskeletal complaints in adults— an evidence-based approach. Part 2: Upper extremity disorders. J Manipulative Physiol Ther. 2008;31(1):2–32. doi: 10.1016/j.jmpt.2007.11.002. [DOI] [PubMed] [Google Scholar]

- 32.Ajzen I. Nature and operation of attitudes. Annu Rev Psychol. 2001;52:27–58. doi: 10.1146/annurev.psych.52.1.27. [DOI] [PubMed] [Google Scholar]

- 33.Bonetti D, Eccles M, Johnston M, et al. Guiding the design and selection of interventions to influence the implementation of evidence-based practice: an experimental simulation of a complex intervention trial. Soc Sci Med. 2005;60(9):2135–47. doi: 10.1016/j.socscimed.2004.08.072. [DOI] [PubMed] [Google Scholar]

- 34. Michie S, Johnston M, Abraham C, Lawton R, Parker D, Walker A. Making psychological theory useful for implementing evidence based practice: a consensus approach. Qual Saf Health Care. 2005;14(1):26–33. doi: 10.1136/qshc.2004.011155.

- 35. Francis JE, Johnston MP, Walker A, et al. Constructing questionnaires based on the theory of planned behavior: a manual for health services researchers. Newcastle upon Tyne: Centre for Health Services Research; 2004. p. 42.

- 36. Bonetti D, Pitts NB, Eccles M, et al. Applying psychological theory to evidence-based clinical practice: identifying factors predictive of taking intra-oral radiographs. Soc Sci Med. 2006;63(7):1889–99. doi: 10.1016/j.socscimed.2006.04.005.

- 37. Butt A, Clarfield-Henry J, Bui L, Butler K, Peterson C. Use of radiographic imaging protocols by Canadian Memorial Chiropractic College interns. J Chiropr Educ. 2007;21(2):144–52. doi: 10.7899/1042-5055-21.2.144.

- 38. Reardon RL, Lavis J. From research to practice: a knowledge transfer planning guide. Toronto: Institute for Work and Health; 2006. p. 19.

- 39. Siddiqi K, Newell J, Robinson M. Getting evidence into practice: what works in developing countries? Int J Qual Health Care. 2005;17(5):447–54. doi: 10.1093/intqhc/mzi051.

- 40. Shaw B, Cheater F, Baker R. Tailored interventions to overcome identified barriers to change: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2005;(3):CD005470. doi: 10.1002/14651858.CD005470.

- 41. Grol R, Bosch MC, Hulscher MEJL, Eccles MP, Wensing M. Planning and studying improvement in patient care: the use of theoretical perspectives. Milbank Q. 2007;85(1):93–138. doi: 10.1111/j.1468-0009.2007.00478.x.

- 42. Improved Clinical Effectiveness Through Behavioural Research Group (ICEBeRG). Designing theoretically-informed implementation interventions. Implement Sci. 2006;1:4. doi: 10.1186/1748-5908-1-4.

- 43. Bosch M, van der Weijden T, Wensing M, Grol R. Tailoring quality improvement interventions to identified barriers: a multiple case analysis. J Eval Clin Pract. 2007;13(2):161–8. doi: 10.1111/j.1365-2753.2006.00660.x.