Abstract

This study tested the usability of a touch-screen enabled “Personal Education Program” (PEP) with Advanced Practice Registered Nurses (APRNs). The PEP is designed to enhance medication adherence and reduce adverse self-medication behaviors in older adults with hypertension. An iterative research process was employed, which involved the use of: (1) pre-trial focus groups to guide the design of system information architecture, (2) two different cycles of think-aloud trials to test the software interface, and (3) post-trial focus groups to gather feedback on the think-aloud studies. Results from this iterative usability testing process were utilized to systematically modify and improve the three PEP prototype versions: the pilot, Prototype-1 and Prototype-2. Findings contrasting the two separate think-aloud trials showed that APRN users rated the PEP system usability, system information and system-use satisfaction at a moderately high level in both trials. In addition, errors using the interface were reduced by 76 percent and the interface time was reduced by 18.5 percent between the two trials. The usability testing processes employed in this study ensured an interface design adapted to APRNs' needs and preferences to allow them to effectively utilize the computer-mediated health-communication technology in a clinical setting.

Purpose

The purpose of this study was to conduct usability testing with Advanced Practice Registered Nurses (APRNs) of a computer-mediated “Personal Education Program” (PEP) that captures the self-medication behaviors of older adults with hypertension and delivers a tailored education program aimed at increasing medication adherence and reducing adverse self-medication behaviors. The PEP uses a touchscreen interface on a tablet PC. The APRN enters the patient's medication regimen, blood pressure and other study parameters before turning the PEP over to the patient in the waiting room. After the patient answers questions about his/her medication behaviors and interacts with the education component, a report is printed for both patient and provider listing patient-identified problems and symptoms, PEP-identified adverse self-medication behaviors, and corrective strategies.

Introduction

Preliminary studies have found computer-based interventions to be effective in both prevention and intervention programs to improve nutrition, reduce medication errors and increase medication adherence among older adults.1-5 To develop the program content and interface that will meet older patient needs, it is necessary to tailor the design with the use of age-appropriate information architecture and ergonomics as well as iterative usability testing of prototypes.6, 7 Likewise, design of computer-based interventions to be used by providers must not only involve providers in formative design research (e.g., expert panels, qualitative focus groups), but the usability of the intervention must also be tested by providers in a realistic setting. Koppel et al.8 and Garg et al.9 argue that the failure of computerized systems deployed in healthcare settings to effect change in practitioner performance may be due, in part, to a lack of provider participation in iterative usability studies and the failure to “focus on the organization of work” in which the technology is to be used.8

This study presents a methodologically rigorous participatory usability design aimed at eliciting characteristics of the provider interface on the PEP that is engaging (so providers will want to use it), easy to use (so providers will be able to use it) and simple (so providers will make minimal errors). The goal of the project was to ensure that providers would find the PEP to be usable, useful and enjoyable before the system is implemented in the actual clinical setting.

Background

In order to adequately assess antihypertensive medication adherence and efficacy, the primary care provider must not only assess medication outcome (e.g. blood pressure) but also inquire about any new symptoms, the frequency and time for taking the prescribed regimen, barriers to taking prescribed medications, and any other self-medicated agents (e.g., over-the-counter medications, nutritional and herbal supplements, and alcohol). The provider must then make a rapid assessment of the patient's adverse self-medication behaviors and deliver patient education to address those behaviors that could engender serious health risks to the patient.

When this type of care is being delivered to older adults, the barriers to success also rise significantly. Physical and perceptual changes with aging make older adults less able to hear, see and comprehend health information than younger individuals.10-14 Consequently, adults aged 65 and older also have the lowest health literacy level in the population.15 For older adults with hypertension, frequent health care visits do not ensure achievement of target blood pressure readings.16 Intensive, monthly one-on-one counseling can improve antihypertensive medication adherence, but the effect dissipates once the counseling sessions end.17

Gurwitz et al.18 maintain that the current primary health care environment contributes to inadequate patient education about safe medication use and, as a result, preventable adverse drug events continue. The National Ambulatory Medical Care Survey noted that the median length of time for primary care visits is 14 minutes, with a waiting room time of 40 minutes.19 This limited face time between the provider and patient makes it difficult to offer adequate patient education about medication safety, above and beyond providing basic care.

An alternative to circumvent limited face time between the provider and patient is to utilize the 40-minute waiting time as a “teachable moment,” prior to the patient's visit with the provider. In other words, allowing patients to access targeted and tailored medication-safety education materials, prior to their provider visit, could help prepare patients for more efficient patient-provider communication and help the provider improve patient care effectiveness. When these tailored medication-safety education materials are being provided to the patients via a computer-mediated health communication system, it is important to consider the social interaction aspects within a healthcare organization, which could influence the success or failure of a technology innovation.

According to Wears and Berg,20 while a new technology can change work practices (whether as intended or not), it can in turn change how the technology is used. The way the technology is used then forms a feedback loop whereby the technology's usage pattern can further change the functional applications of the technology. In the diffusion of innovations literature, this process is considered a “reinvention” of the technology application and the organizational functions that surround the use of the technology.21 In other words, when a computer-mediated information system is introduced, understanding how both providers and patients adopt and use the system is as important as the architectural engineering of the database and system interface.

The Current Study

The PEP is targeted to older adults with hypertension and includes a companion patient data-entry and analysis program aimed at providers. The interface architecture was informed by the physical and cognitive characteristics of older adults and builds on our team's prior visual design research with older adults (see Table 1).2, 3 The PEP is designed for patients to independently report current symptoms and medication use - including time and frequency of administration - during their waiting room time and prior to their clinical visit. It also allows the provider to enter the patient medication regimen, which provides the basis to evaluate patient self-medication errors. When implemented in the clinical setting, the PEP is deployed on a wireless, touch-sensitive tablet personal computer (tablet PC) placed on a cart that can be adjusted with respect to height and angle of the screen.

Table 1.

Characteristics of Older Adults that Informed the Architectural Design of the PEP Interface

| Domain | Characteristic |
|---|---|
| Cognitive | Ability to process information slows.22, 23 |
| | Attention, short-term memory, discourse comprehension, problem-solving, reasoning skills, interference construction and elucidation, memory encoding and retrieval diminish.24 |
| | Declines in spatial memory impair navigation of spatial objects from a map.25, 26 |
| | Easily distracted by task errors, features that jump out from the display (i.e. “pop-ups”).27, 28 |
| | Computer programs are more difficult to use when they include sounds; sound plus text increases cognitive load and is neither preferred nor effective.29, 30 |
| Spatial Visual | Sensitivity to color and contrast is reduced, as is the ability to accommodate to changes in illumination level. Sensitivity to glare is increased.31-33 |
| | Less likely to notice movement on screen or subtle screen changes.32, 34 |
| | Blue background with dark bold type minimizes perception of glare.35 |
| | Figures rendered as flat shapes with thick, dark outlines support diminished perception of color contrast and edge discrimination.35, 36 |
| | Reduced animation speed accommodates diminished ability to process visual information.35 |
| Motor Skills | Using a mouse to perform clicking, dragging, or scrolling is difficult; many have stiff fingers/hands and fine tremors. Light pen (stylus) is the preferred input device.32, 37-42 |
| | Prefer self-paced interaction tasks rather than computer-paced.34 |
| | Need more time to recover from disruptions stemming from making interface errors.34 |
| General Attributes | Graphics with minimal text sustain interest.1 |
| | Gender-, race- and age-neutral animated humanoids are preferred.35 |
| | Bold, Arial Black 20 point font preferred with large (3 cm) navigation buttons.35 |

Macromedia's Flash ActionScript language (Adobe, San Jose, CA) was used to program PEP text, graphic elements and animation materials to allow for a user interface with a touch-screen enabled tablet PC.1 The system was designed for older adults without previous experience using a computer. A stylus is used as the interface tool to interact with the program by pointing at and then pressing (clicking) either a large graphic object (3 cm high), a large letter (20 point size Arial Black font), an entire phrase (or sentence), or an entire text block, all written at a Flesch-Kincaid grade-5 reading level.44 The information, graphic and other interactive features of the program are all older-adult friendly.

For instance, the color of the text and background, illumination level and the graphic and animation style are all specifically tailored for older adults' declining vision.41 The speed of the display, object movements and animation sequence are also slowed to accommodate older adults' visual and cognitive processing capability. In addition, scroll bars are extra-wide and drop-down menus are displayed in blocks of eight lines so that they are easier to operate for users with reduced motor skills.

Data entry privacy on the PEP is protected by a HIPAA-compliant 3M (Boston, MA) privacy shield, which is attached to the screen to prevent the interface from being visible to others in the waiting room. Data collected are automatically coded and transferred to the database via a Virtual-Private-Network, which is HIPAA-compliant.45, 46 The PEP analyzes information entered by the patient and then delivers tailored, interactive educational content (“Medicine Facts” and “What You Can Do”) that addresses the three reported behaviors with the highest adverse risk.3 It then displays educational animations that show how the medicine can work in the human body to lower the blood pressure reading, followed by a display of interactive questions and answers. At the end of the PEP interface, a printout for both the patient and provider lists the reported symptoms and medication behaviors and provides suggested corrective strategies along with thumbnail illustrations from the animations. The printout provides education support for the provider during the one-on-one visit and can be entered into the medical record (paper for now, electronically in the future).

Findings from formal “Think Aloud” usability tests with older adults with hypertension indicate that their interface with the Prototype-2 version of the PEP had significantly fewer cognitive and motor errors compared to their interface with the Prototype-1 version (i.e., 201.91% decrease). Older adults also gave much higher ratings (means were all above 6.5 on a 7-point scale) to the Prototype-2 usability (129% increase), usefulness (120% increase), and their satisfaction (127% increase), compared to Prototype-1. Interface time between Prototype-1 and Prototype-2 showed a decrease of 24%, even though the latter had 1.26 times more interface screens than the former. These results suggest that older adults were able to navigate the final usability-study version (Prototype-2) of the PEP with minimal error and user “burden.” Usability study results of the PEP with older adults have been reported separately.47

Since the PEP intervention will undergo a clinical efficacy trial in primary care practices where APRNs deliver care to older adults with hypertension, we recruited APRNs and graduate APRN students to test the provider interface screens using our structured usability testing protocol. Following Nielsen's usability testing conventions,48 this study adopted an iterative approach to test the PEP prototypes of provider interface screens to ascertain user satisfaction, program-content usefulness and interface-design usability.

To test assumptions that the PEP system would achieve its usability testing goals, we hoped to find that APRN users would perceive: (1) the system interface features of the PEP as usable, (2) the system information provided by the PEP as useful, and (3) the system-use experience provided by the PEP as satisfactory. To confirm whether the modifications made to improve the system interface between trials were effective, we also explored whether there was a decrease in (1) interface time between trials and (2) interface errors between trials.

Methods

The research protocol was approved by the University's Human Subjects Review Committee; all researchers also completed an on-line research ethics program as required. Informed consent was obtained from all participants prior to participating in any study procedures.

The study adapted the usability testing guidelines proposed by Nielsen,48, 49 who recommended the use of 5-20 usability test participants for an iterative testing process, as more than 20 usability participants typically result in a saturated/diminishing return in measurement validity and reliability. The typical norm is to utilize 9-10 test participants for each iteration.50

Study Participants

Nurse participants were recruited via email (through the departmental APRN graduate student listserv) and flyers at the university Student Health Services. To participate in the study, nurses needed to be either APRNs practicing in primary care or RNs in the final year of their nurse practitioner program before obtaining licensure as an APRN. No prior experience in using a computer, the Internet or any interactive technologies (for electronic record keeping or care delivery in a clinical setting) was required or assumed. Participants were given $10 grocery gift cards for participating. The 28 study participants ranged in age from 24 to 65 and included 5 males and 23 females. All but 6 participants were Caucasian (non-Hispanic); of those 6, 2 were African American, 3 were Hispanic, and 1 was Asian American.

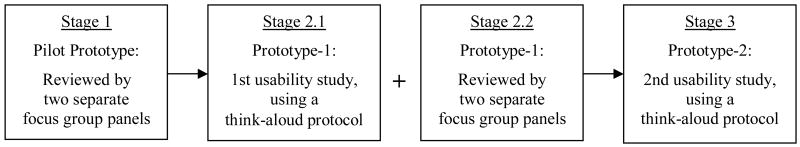

Research Procedures

Usability testing data collection was executed in three stages, as illustrated in Figure 1. Different groups of nurses participated in each stage. During Stage 1, formative research was conducted to gather data on participant opinions on the PEP design. This formative research involved two different focus group panels (one with 6 panelists and the other with 4 panelists) facilitated by a communication designer who was also a trained focus-group moderator. The panels reviewed and discussed, one page/screen at a time, the information architecture, graphic design, animation, color, font and screen size preference, and interactivity functions for data entry and retrieval, as well as ergonomic features including the stylus touch-screen interface. Results from these two focus groups were utilized to help modify the pilot prototype to create Prototype-1 for testing in Stage 2, nearly 4 months later.

Figure 1.

Usability Testing Procedures

During Stage 2, a different sample of 9 participants tested the revised program, Prototype-1. The usability test was conducted with a think-aloud procedure that followed a standard protocol.51 Each participant's interface action and think-aloud expressions were captured and recorded in digital video. This protocol also allowed participants to “think out loud” by verbalizing their thoughts during the system interface. A trained usability observer was present to encourage participants to think out loud during their interface session. During the think-aloud session, the usability observer did not guide or provide answers to the participants' interface, navigation and content questions.

The usability observer played a neutral observer role. When asked for help by participants, the observer only prompted participants to consider alternative ways to think about how to solve their interface problems. Examples of this “prompting” practice could be “Think again to decide what this question is asking.” or “Think again to decide what that window is for.” In essence, the participants were allowed to make their own mistakes and were prompted only when they asked for help. This think-aloud procedure then ensured the participants' independent thought processes and interface action. The usability observer also took observation notes to record any interface errors and critical incidents that occurred to be cross-validated with the digital video capture of the interface. These observation notes reflected the major obstacles or merits of the participant interface experience.

During the think-aloud interface session, study participants were required to enter a sample case of a “typical” older adult patient's age, gender and health literacy scores as well as the patient's blood pressure reading, in addition to the dosages, frequency, time of administration, and special instructions for three different types of medications that the patient might take, including the antihypertensive regimen. These medication entries were selected from a drug database constructed and programmed specifically for the PEP and presented in the form of an alphabetized keyboard-style table on screen. After selecting a letter, an extra-wide bright-color scroll bar and 8 medicines whose names start with that letter appear as a group. Any additional scrolling action will bring out another group of 7 new medicines, with the medicine name that appears last in the last group of 8 appearing first with these 7 new medicine names, and so on. The comprehensive drug database included all of the commonly taken over-the-counter (e.g., pain relievers or cold medicines) and prescription medicines (e.g., Celebrex®) that could interact with antihypertensive medications or medications commonly taken by individuals with hypertension such as low dose aspirin.
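The scrolling behavior described above can be read as a sliding window with a one-item overlap. The following is a minimal sketch of that reading, not the PEP's actual ActionScript implementation; the function name, parameter names and the sample drug names are hypothetical.

```python
def scroll_groups(names, first_size=8, new_per_scroll=7):
    """Return the groups of names shown after each scrolling action.

    The first group holds up to `first_size` names. Every later group
    starts with the last name of the previous group and then adds up to
    `new_per_scroll` names that have not been shown yet.
    """
    names = sorted(names)
    groups = [names[:first_size]]
    start = first_size
    while start < len(names):
        carried = groups[-1][-1]                      # last name already on screen
        fresh = names[start:start + new_per_scroll]   # names not yet displayed
        groups.append([carried] + fresh)
        start += new_per_scroll
    return groups


# Hypothetical list of 20 medicines filed under one letter.
sample = [f"A-drug-{i:02d}" for i in range(20)]
for group in scroll_groups(sample):
    print(group[0], "...", group[-1], f"({len(group)} names)")
```

Repeating the last visible name at the top of the next group gives the user a fixed reference point, which is one plausible way to reduce the skipped entries mentioned in the revision notes.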

Following the entry of the “patient's” medication information, the nurse participant proceeded to review the 5 basic “patient tutorial screens” to see what the patient will learn from the tutorial. These tutorial screens contained interface items that ask the patient to indicate their intake of an aspirin (as an example), the name of the aspirin, the strength of the aspirin, the medication frequency and the time the medication was taken.

At the end of the think-aloud session, participants completed a paper questionnaire adapted from the Post Study System Usability Questionnaire (PSSUQ),52 which was developed to assess the psychometric response associated with user interface with computer-mediated programs and tasks. The questionnaire was modified to contain 15 items that measure the interface experience associated with our tailored PEP prototypes; two items that query the participants' PC-user and Internet-user status were also added for a total of 17 items.

Two weeks following the think-aloud usability study, two post-trial focus group panels were convened - one group with 4 panelists and another group with 5 panelists. These panelists were the same nurses who participated in the previous think-aloud sessions. They reviewed and discussed the technical and content features of Prototype-1 to provide participatory design ideas based on the evaluation of their own interface experience. The think-aloud, post-trial survey and focus group data combined served as the basis for revising Prototype-1 to create Prototype-2 for the next round of usability testing. This revision process took about 2 months. Examples of technical improvements made based on these qualitative data sets included programming considerations associated with the system's information content/structure, interaction actions/functions, animation sequences/features, and ergonomic elements/designs.

Other, more specific examples include adding a list of commonly prescribed dosages to allow the APRNs to select and adding names of additional drugs for selection, in addition to improving the scroll-bar function to display only a limited set of choices for each scrolling action to prevent information overload and skipped information. Another example of system-interface improvement was to give the APRNs the option of using an attached keyboard to enter patient data, instead of confining them to use the stylus-enabled interface with the small keyboard display on screen. In addition, a number of visual presentation elements were also refined between prototype versions to create the most ergonomically functional design.

During Stage 3, a new sample of 9 APRNs was recruited to test Prototype-2. The think-aloud protocol and procedures used to conduct this second usability study were the same as those used to test Prototype-1 in the first usability study. Again, the iterative participatory design process dictated that the data collected for the second usability study be used as the basis for making final changes to Prototype-2 to create the Beta version of the PEP, which was subsequently beta tested in a clinical study with repeated measures at a later date.

Data Analysis

The think-aloud usability session data for the two usability studies were separately cross-validated by reviewing the screen-capture recording and the usability observers' notes. In particular, participant interface time and interface errors were independently verified by two graduate assistants who served as data coders. The data cross-validation results on these two measurement items yielded 100% agreement between the two different coders.

SPSS, v. 14.0 (Chicago, IL) was used for data analysis. The modified PSSUQ scale items were tested for their scale reliability (Cronbach's alpha) to help confirm the three a priori conceptual dimensions of: 1) system usability, 2) system usefulness and 3) system-use satisfaction. To determine whether participants deemed the Prototype-2 system as usable, useful or whether their system-use experience was satisfactory, the one-sample t-test procedure was used to compare user means on Prototype-2 with the midpoint (or “4”) of the 7-point scale. Independent-sample t-tests were used to compare means of participant perceived system usability, usefulness and satisfaction between Prototype-1 and Prototype-2.
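For readers who want to see what the scale-reliability step computes, the sketch below implements the standard Cronbach's alpha formula using only the Python standard library. It is an illustration rather than the SPSS procedure used in the study, and the ratings are hypothetical values invented for the example, not study data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row of item scores per participant)."""
    k = len(responses[0])                                   # number of items in the scale
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    total_var = variance([sum(row) for row in responses])   # variance of summed scale scores
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 7-point ratings from five participants on a 4-item scale.
hypothetical_ratings = [
    [6, 5, 6, 7],
    [4, 4, 5, 4],
    [7, 6, 7, 7],
    [5, 5, 4, 5],
    [3, 4, 3, 4],
]
print(round(cronbach_alpha(hypothetical_ratings), 2))
```

Alpha approaches 1 when the items rise and fall together across participants, which is the sense in which the coefficients reported in the Results support treating each set of items as a single dimension.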

In the process of reviewing and validating the think-aloud usability data, it was also revealed and confirmed that one APRN participant in the second usability study experienced an unusually high level of difficulty in navigating the information architecture, graphic displays and interactive features. The interface data associated with this participant's “case number” showed that his/her response pattern was highly skewed (on 87% of the items) and interface-completion time was unusually long (2.71 times longer than the average). This participant's case number was identified as a statistical outlier case and his/her interface data were removed from further analyses.

Results

The scale-reliability test results for the 15 modified PSSUQ items are reported as follows. With regard to the first usability study, reliability coefficients associated with the three conceptual dimensions were: (1) system usefulness - a 5-item scale (Cronbach's α=.85), (2) system usability - a 6-item scale (Cronbach's α= .95), and (3) system-use satisfaction - a 4-item scale (Cronbach's α=.86). For the second usability study, reliability coefficients for the three parallel scales were: (1) system usefulness (Cronbach's α= .87), (2) system usability (Cronbach's α=.96), and (3) system-use satisfaction (Cronbach's α= .91).

PC and Internet Use

All participants reported that they were users of personal computers and the Internet.

System Usability

The mean usability rating in the second usability trial (M = 5.41, SD = .93) was significantly above the midpoint of the 7-point scale (t = 4.01, p = .007), with a large effect size (d = 1.51, r = .60) according to the one-sample t-test (see Table 2), suggesting that the participants did find Prototype-2 to be usable. The mean usability value in the second trial was not statistically different from that of the first trial (see Table 3), indicating that participants in the two trials perceived the usability of Prototype-1 and Prototype-2 to be similar.

Table 2.

One-Sample T-Test Results for Prototype-2

| Measure | M | SD | N | t | p |
|---|---|---|---|---|---|
| System Usability (d = 1.51; r = .60) | 5.41 | .93 | 7 | 4.01 | .007 |
| System Usefulness (d = 1.31; r = .55) | 5.24 | .95 | 5 | 2.91 | .044 |
| System-Use Satisfaction (d = 1.75; r = .66) | 5.75 | 1.00 | 8 | 4.95 | .002 |
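The Table 2 statistics can be approximately recovered from the reported means, standard deviations and sample sizes. The sketch below is a check under stated assumptions: the comparison value is the scale midpoint of 4, and r is obtained from d with the common conversion r = d / sqrt(d² + 4), which appears consistent with the tabled values but is not named in the text. Small discrepancies in the second decimal are expected because the inputs are already rounded.

```python
import math


def one_sample_summary(mean, sd, n, reference=4.0):
    """Return (t, d, r) for a one-sample comparison against `reference`."""
    d = (mean - reference) / sd        # standardized distance from the midpoint
    t = d * math.sqrt(n)               # one-sample t statistic, n - 1 degrees of freedom
    r = d / math.sqrt(d ** 2 + 4)      # assumed d-to-r conversion
    return t, d, r


# Values taken from Table 2: (M, SD, N).
table2 = {
    "System Usability": (5.41, 0.93, 7),
    "System Usefulness": (5.24, 0.95, 5),
    "System-Use Satisfaction": (5.75, 1.00, 8),
}
for label, (m, sd, n) in table2.items():
    t, d, r = one_sample_summary(m, sd, n)
    print(f"{label}: t = {t:.2f}, d = {d:.2f}, r = {r:.2f}")
```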

Table 3.

Mean Comparisons between Prototype-1 and Prototype-2

| Measure | Prototype-1 M | Prototype-1 SD | Prototype-2 M | Prototype-2 SD | t | p | Levene's Test F | Levene's Test p |
|---|---|---|---|---|---|---|---|---|
| System Usability (d = .84; r = .38) | 6.19 (N = 7) | .92 | 5.41 (N = 7) | .93 | 1.59 | .138 | .05 | .826 |
| System Usefulness (d = .22; r = .10) | 5.45 (N = 4) | .99 | 5.24 (N = 5) | .95 | .32 | .757 | .02 | .893 |
| System-Use Satisfaction (d = .78; r = .36) | 6.53 (N = 9) | .68 | 5.75 (N = 8) | 1.00 | 1.90 | .077 | 1.48 | .242 |
| Interface Time (d = .50; r = .24) | 7.02 (N = 9) | 2.25 | 5.72 (N = 8) | 2.62 | 1.10 | .290 | .95 | .346 |
| Interface Error (d = 2.28; r = .75) | 4.22 (N = 9) | 1.99 | 1.00 (N = 8) | 1.41 | 3.81 | .002 | 1.42 | .252 |
| Interface Error per Minute (d = 1.15; r = .50) | .618 (N = 9) | .299 | .211 (N = 8) | .354 | 2.57 | .021 | .050 | .826 |
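The between-prototype comparisons in Table 3 can likewise be approximated from the summary statistics. The sketch below assumes an equal-variance (pooled) independent-samples t-test, which fits the non-significant Levene's tests. The effect-size function reflects an inference from the numbers rather than a method stated in the text: the tabled d values appear to equal the mean difference divided by the Prototype-2 standard deviation. Function names are hypothetical.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Equal-variance independent-samples t statistic from summary values."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))   # standard error of the mean difference
    return (m1 - m2) / se


def d_using_second_sd(m1, m2, sd2):
    """Mean difference scaled by the second group's SD (assumed reading of Table 3)."""
    return (m1 - m2) / sd2


# Interface Error row of Table 3.
t_err = pooled_t(4.22, 1.99, 9, 1.00, 1.41, 8)
d_err = d_using_second_sd(4.22, 1.00, 1.41)
print(f"Interface Error: t = {t_err:.2f}, d = {d_err:.2f}")

# Interface Time row of Table 3.
t_time = pooled_t(7.02, 2.25, 9, 5.72, 2.62, 8)
d_time = d_using_second_sd(7.02, 5.72, 2.62)
print(f"Interface Time: t = {t_time:.2f}, d = {d_time:.2f}")
```

The recomputed t values differ from the table by about .01 in places, as would be expected when working from rounded means and standard deviations.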

System Usefulness

The mean system usefulness rating (M =5.24, SD = .95) for Prototype-2 was significantly higher than the midpoint of the 7-point scale (t =2.91, p = .044), with a large effect size (d = 1.31, r = .55), indicating that the participants did find the information provided by Prototype-2 to be useful. There was no statistically significant difference in the mean system usefulness values between the two trials (see Table 3), indicating that participants from both trials found the system information provided by Prototype-1 and Prototype-2 to be equally useful.

System-Use Satisfaction

The mean rating of perceived system-use satisfaction (M = 5.75, SD = 1.00) for the second usability trial was significantly above the midpoint of the 7-point scale (t = 4.95, p = .002), with a very large effect size (d = 1.75, r = .66), signifying that the users found their interface experience with Prototype-2 satisfactory (see Table 2). There was no statistically significant difference in satisfaction ratings between the two trials (see Table 3), suggesting a comparably satisfactory experience from interfacing with Prototype-1 and Prototype-2.

System-Interface Time

There was an 18.5 percent reduction in mean interface time between the two usability trials, but this difference was not statistically significant. The mean interface time was 7.02 minutes (SD = 2.25) for Prototype-1 (the first usability trial) and 5.72 minutes (SD = 2.62) for Prototype-2 (the second usability trial) (see Table 3). This indicates that study participants took a statistically similar amount of time to complete their interface with both Prototype-1 and Prototype-2, even though Prototype-2 contains more interface features than Prototype-1.

System-Interface Errors

Study participants made 76 percent fewer system-interface errors with Prototype-2 than with Prototype-1, a statistically significant difference with a very large effect size (d = 2.28, r = .75). Additionally, the mean interface error per minute was significantly lower for Prototype-2 than for Prototype-1, with a very large effect size (d = 1.15, r = .50) (see Table 3).

Discussion

There are certain limitations associated with this study that should be noted. First, additional study iterations might produce minor usability improvements and added reliability for the study results.50 Second, the samples should have included APRN participants from a more diverse range of age, race and interactive technology efficacy levels to more closely reflect the APRN population. Third, the questionnaire was kept brief in order to emulate the realistic amount of time that a provider might have to complete an evaluation instrument when the system is being tested in the clinical setting later. As a result, measurement items53 that could have tested such constructs as computer efficacy, Internet efficacy, provider communication efficacy, and attitudes toward using interactive technology for health intervention were excluded.

Even with these inevitable shortcomings, the results of the study are nonetheless informative and valuable. In particular, the study followed well-established usability testing principles and procedures (including sample size and number of iterations) developed by Nielsen,48-50, 54 who contends that, when designing interactive communication programs, it is important to consider the attainment of system learnability, interface efficiency, low error rates and user satisfaction. When applying these standards50 to assess the present study results, it appears that the final PEP prototype had low error rates and was seen as generally learnable, efficient and satisfactory by the APRNs who participated in the two separate think-aloud trials.2

The criterion of system learnability, as reflected by perceived system usefulness and system usability measures in the present study, was moderately high in both Prototypes. While the mean values for both measures were higher in Prototype-1 than in Prototype-2, the differences were not statistically significant. The second participant sample might have had a lower efficacy in using the state-of-the-art interactive technology deployed in the present study and/or a more negative attitude toward the use of such technology, compared to the first sample. Measures of interactive technology efficacy, if implemented, could have served to isolate its potential confounding effect on the participants' responses.

System-use efficiency, as indicated by system-interface time in the present study, showed that the first trial took 22% longer than the second trial to complete, even though the difference was not statistically significant. Prototype-2 contains more features for medication input, including additional drop-down screens allowing the APRN to record special instructions for taking a medication and why a medication was discontinued and additional choices for routes and frequency of administration. The participants were able to complete their interface with a larger number of interface items in a shorter amount of time via Prototype-2, relative to the first participant sample, who took a longer amount of time to interface with fewer items on Prototype-1. This finding also attests to the merits of the more efficient system design of Prototype-2, compared to its predecessor, Prototype-1.
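The figure of roughly 22 percent given here and the 18.5 percent reduction reported in the Results describe the same pair of mean interface times; they differ only in which trial is used as the baseline. The short sketch below, with hypothetical function names, makes the arithmetic explicit using the Table 3 means.

```python
def percent_reduction(before, after):
    """Drop from `before` to `after`, as a percentage of `before`."""
    return 100 * (before - after) / before


def percent_longer(longer, shorter):
    """Excess of `longer` over `shorter`, as a percentage of `shorter`."""
    return 100 * (longer - shorter) / shorter


time_p1, time_p2 = 7.02, 5.72        # mean interface time in minutes (Table 3)
errors_p1, errors_p2 = 4.22, 1.00    # mean interface errors (Table 3)

print(f"Time reduction, Prototype-1 as baseline: {percent_reduction(time_p1, time_p2):.1f}%")
print(f"Extra time, Prototype-2 as baseline: {percent_longer(time_p1, time_p2):.1f}%")
print(f"Error reduction, Prototype-1 as baseline: {percent_reduction(errors_p1, errors_p2):.1f}%")
```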

Likewise, Nielsen's48-50 third usability criterion, lower error rates, was also achieved. The mean number of interface errors decreased by 76 percent from Prototype-1 to Prototype-2, and the number of interface errors per minute was significantly lower for Prototype-2 than for Prototype-1. From a usability design perspective, this suggests that Prototype-2 was less prone to induce interface errors than Prototype-1. A well-designed interactive technology system should allow even the novice user to navigate, interact with and manipulate the system to accomplish the task objectives with a minimal number of errors. Lastly, user satisfaction (Nielsen's fourth evaluation criterion48-50), measured by perceived system-use satisfaction, achieved a moderately high rating for both Prototype-1 and Prototype-2. The lower mean system-use satisfaction with Prototype-2 relative to Prototype-1 was not statistically significant.

Even though the qualitative comments recorded during the interface sessions were not the focus of the present analysis, it is worth mentioning the importance of these comments in contributing to the improvement of the various versions of the PEP prototypes. Moreover, these spontaneous “think aloud” comments also confirmed all of the pre-trial focus group system design suggestions and validated all of the post-trial focus group evaluations.

Henderson et al. 55 contend that the success of a prevention and/or intervention program delivered with the use of an interactive technology system in a clinical setting will be contingent upon whether patients and providers perceive the system as being objective, accurate, appropriate and useful in providing the program content as well as the ease of use of the interface design. The participatory design approach developed in this study, which integrated the focus group method and the iterative think-aloud usability trials, seems to reflect the Henderson et al. criteria for delivering a successful health-prevention communication program.

The think-aloud usability-testing method was a valuable approach to evaluate the user system-interface experience. In particular, it provided a direct means to observe and record how the users navigated the system, what errors they made and how they overcame those errors. The usability observer played a neutral role, prompting the users to think critically about how to solve their interface problems without providing any specific clues or assistive cues. This also made the think-aloud process a potentially more productive and enjoyable self-paced experience for the users.

Conclusion

The present study supports our previous older-adult usability study findings47 and shows that the interface architectural design was successfully tailored for both providers and older adult users. Results from the subsequent beta test of the PEP suggest that the PEP had a large effect size in increasing knowledge and self-efficacy for avoiding adverse self-medication behaviors over time, and that blood pressure declined over a 3-month period for 82% of the participants.56 The PEP is currently undergoing a clinical trial in 11 primary care practices.

In essence, these study findings help establish and confirm the need for conducting usability studies of interactive technology-based health communication systems prior to the procurement and implementation of such a system in a clinical setting. Moreover, they also reveal potentially inconsistent levels of interactive-technology efficacy among providers at a time when the entire health care system in the U.S. is moving toward the active use of these technologies for a wide variety of patient record keeping and transport, prescription dispensing, and other care-related functions.

As more than 90% of adults aged 65 and older reportedly use at least one medication and 57% take five or more medications daily,57 both medication adherence and self-medication safety are growing concerns. Given the 14-minute median face time during outpatient primary-care visits,19 providers have little opportunity, if any, to offer patient self-medication education. Interactive technology-based health education can be an alternative means to facilitate productive provider-patient communication about safe self-medication practices. Future studies could replicate the participatory usability design methodology developed here to help improve clinical efficiency in patient education by advancing the usability design of interactive health-communication systems.

Acknowledgments

The authors wish to thank Christian Rauh, MS and Yan Li, MS for creating the computer code for the PEP interface. The authors also wish to thank Zoe Strickler, MDes for conducting the focus groups and facilitating the usability sessions and Veronica Segarra, MS and Sheri Peabody, MS, RN for their assistance in coding the usability data. This work was supported by funding from the National Heart, Lung and Blood Institute, grant number 5R01HL084208, P. J. Neafsey, Principal Investigator.

Footnotes

The tablet PC (Motion LE 1600 Centrino) was manufactured by Motion Computing, Inc. in 2006. Technical specifications for this model include: Intel Pentium® M Processor LV 778 (1.6 GHz), Integrated Intel PRO Wireless 2915ABG, 512MB RAM, 30GB HDD with View Anywhere Display, 12.1″ wide view XGA TFT display, convertible keyboard, 3-M privacy filter, and Genuine Windows® XP.

The decision not to conduct additional testing was based on the following rationale: 1) the participants made only one error on average in their interface with Prototype-2; 2) Prototype-3 would most likely produce only marginal improvements over Prototype-2, judging from the current study results and the literature on usability testing; and 3) there was a lack of time and resources to test Prototype-3 (the Beta version) with both patients and APRNs in time for us to conduct beta testing in the field and the subsequent clinical trial.

References

- 1. Prochaska JJ, Marion MS, Zabinski F, Calfas KJ, Sallis JF, Patrick K. Interactive communication technology for behavior change in clinical settings. Am J Prev Med. 2000;19(2):127–131. doi: 10.1016/s0749-3797(00)00187-2.

- 2. Authors (2001)

- 3. Authors (2002a)

- 4. Alemagno SA, Niles SA, Treiber EA. Using computers to reduce medication misuse of community-based seniors: Results of a pilot intervention program. Geriatric Nurs. 2004;25(5):281–285. doi: 10.1016/j.gerinurse.2004.08.017.

- 5. Authors (2007)

- 6. Ellis RD, Kurniawan SH. Increasing the usability of online information for older users: A case study in participatory design. Int J Human-computer Interaction. 2000;12(2):263–276.

- 7. Nahm ES, Preece J, Resnick B, Mills ME. Usability of health web sites for older adults. Comput Inform Nurs. 2004;22(6):326–334. doi: 10.1097/00024665-200411000-00007.

- 8. Koppel R, Metlay JP, Cohen A, Abaluck B, Localio AR, Kimmel SE, Strom BL. Role of computerized physician order entry systems in facilitating medication errors. JAMA. 2005;293(10):1197–1203. doi: 10.1001/jama.293.10.1197.

- 9. Garg AX, Adhikari NK, McDonald H, Rosas-Arellano MP, Devereaux PJ, Beyene J, Sam J, Haynes RB. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes. A systematic review. JAMA. 2005;293(10):1223–1238. doi: 10.1001/jama.293.10.1223.

- 10. Bloom JA, Frank JW, Shafir MS, Martiquet P. Potentially undesirable prescribing and drug use among the elderly. Can Fam Physician. 1993;39:2337–2345.

- 11. Hanlon JT, Fillenbaum GG, Burchett B, Wall WE, Service C, Blazer DG, George LK. Drug-use patterns among black and nonblack community dwelling elderly. Ann Pharmacother. 1992;26:679–685. doi: 10.1177/106002809202600514.

- 12. Pollow RL, Stoller EP, Forster LE, Duniho TS. Drug combinations and potential for risk of adverse drug reaction among community-dwelling elderly. Nurs Res. 1994;43(1):44–49.

- 13. Salzman C. Medication compliance in the elderly. J Clin Psychol. 1995;56(supplement 1):18–22.

- 14. Wallsten S, Sullivan R, Hanlon J, Blazer D, Tyrey M, Westlund R. Medication taking behaviors in the high- and low-functioning elderly: MacArthur field studies of successful aging. Ann Pharmacother. 1995;29:359–364. doi: 10.1177/106002809502900403.

- 15. U.S. Department of Education. Institute of Education Sciences, Education Statistics, 2003 National Assessment of Adult Literacy. 2006. The Health Literacy of America's Adults: Results from the 2003 National Assessment of Adult Literacy.

- 16. Berlowitz DR, Ash AS, Hickey EC, Friedman RH, Glickman M, Kader B, Moskowitz MA. Inadequate management of blood pressure in a hypertensive population. N Engl J Med. 1998;339:1957–1963. doi: 10.1056/NEJM199812313392701.

- 17. Lee KL, Grace KA, Allen AJ. Effect of a pharmacy care program on medication adherence and persistence, blood pressure, and low-density lipoprotein cholesterol: A randomized controlled trial. JAMA. 2006;296(21):2563–2571. doi: 10.1001/jama.296.21.joc60162.

- 18. Gurwitz JH, Field TS, Harrold LR, Rothschild J, Debellis K, Seger AC, Cadoret C, Fish LS, Garber L, Kelleher M, Bates DW. Incidence and Preventability of Adverse Drug Events Among Older Persons in the Ambulatory Setting. JAMA. 2003;289:1107–1116. doi: 10.1001/jama.289.9.1107.

- 19. Hing E, Cherry DK, Woodwell DA. Advance data from vital and health Statistics No 374. Hyattsville, MD: National Center for Health Statistics; 2006. National Ambulatory Medical Care Survey: 2004 Summary.

- 20. Wears RL, Berg M. Computer technology and clinical work. Still waiting for Godot. JAMA. 2005;293(10):1261–1263. doi: 10.1001/jama.293.10.1261.

- 21. Authors (2003)

- 22. Salthouse TA. A theory of cognitive aging. Amsterdam: North-Holland; 1985.

- 23. Czaja SJ, Sharit J. Ability-performance relationships as a function of age and task experience for a data entry task. J Exp Psychol. 1998;4:332–351.

- 24. Park D. Applied cognitive aging research. In: Craik FIM, Salthouse TA, editors. The handbook of aging and cognition. Mahwah, NJ: Lawrence Erlbaum; 1992. pp. 449–494.

- 25. Pak R. A further explanation of the influence of spatial abilities on computer task performance in younger and older adults. Proceedings of the Human Factors and Ergonomics Society, 44th Annual Meeting; Santa Monica, CA: Human Factors and Ergonomics Society; 2001. Sep, pp. 1551–1555.

- 26. Vicente J, Hayes BC, Williges RC. Assaying and isolating individual differences in searching a hierarchical file system. Hum Factors. 1987;29:349–359. doi: 10.1177/001872088702900308.

- 27. Rogers WA, Fisk AD, Hertzog C. Do ability related performance relationships differentiate age and practice effects in visual search? J Exp Psychol Learn Mem Cogn. 1994;20:710–738. doi: 10.1037//0278-7393.20.3.710.

- 28. McDowd JM, Craik FIM. Effects of aging and task difficulty on divided attention performance. J Exp Psychol Hum Percept Perform. 1988;14:267–280. doi: 10.1037/0096-1523.14.2.267.

- 29. Nielsen J, Shaefer L. Sound effects as an interface element for older users. Behavioral Information Technology. 1993;12:208–215.

- 30. Koroghlanian CM, Sullivan HJ. Audio and text density. Computer-based instruction. 2000;22:217–230.

- 31. Kline DW, Scialfa CT. Sensory and perceptual functioning: Basic research and human factors implications. In: Fisk AD, Rogers WA, editors. Handbook of human factors and the older adults. San Diego, CA: Academic Press; 1997. pp. 27–54.

- 32. Morris JM. User interface design for older adults. Interact Comput. 1994;6(4):373–393.

- 33. Czaja SJ, Lee CC. Designing computer systems for older adults. In: Jacko JA, Sears A, editors. The human-computer interaction handbook: Fundamentals, evolving technologies and emerging applications. Mahwah, NJ: Lawrence Erlbaum; 2003. pp. 413–427.

- 34. Hawthorn D. Possible implications of aging for interface designers. Interact Comput. 2000;12:507–528.

- 35. Authors (2002b)

- 36. Morrell RW, Echt KV. Designing written instructions for older adults: Learning to use computers. In: Fisk AD, Rogers WA, editors. Handbook of Human Factors and the Older Adult. New York, NY: Academic Press; 1997. pp. 335–361.

- 37. Smith M, Sharit J, Czaja S. Aging, motor control, and the performance of computer mouse tasks. Hum Factors. 1999;41(3):389–396. doi: 10.1518/001872099779611102.

- 38. Charness N, Holley P. Human factors and environmental support in Alzheimer's disease. Aging Ment Health. 2001;5:S65–S73.

- 39. Charness N, Bosman E. Human factors in design. In: Birren JE, Schaie KW, editors. Handbook of the Psychology of Aging. 3rd ed. San Diego, CA: Academic Press; 1990. pp. 446–463.

- 40. Kelley CL, Charness N. Issues in training older adults to use computers. Behav Inf Technol. 1995;14:107–120.

- 41. Wood E, Willoughby T, Rushing A, Bechtel L, Gilbert J. Use of computer input devices by older adults. J Appl Gerontol. 2005;24(5):419–438.

- 42. Rau Pei-Luen P, Hsu Jia-Wen. Interaction devices and web design for novice older users. Educ Gerontol. 2005;31(1):19–40.

- 43. Sutherland LA, Campbell M, Ornstein K, Wildemuth B, Lobach D. Development of an adaptive multimedia program to collect patient health data. Am J Prev Med. 2001;21(4):320–324. doi: 10.1016/s0749-3797(01)00362-2.

- 44. Flesch R. A readability formula that saves time. Journal of Reading. 1968;11:513–516.

- 45. Federal Register. Standards for privacy of individually identifiable health information, proposed rule modifications. 45 CFR Parts 160 and 164. 2002 March 27:14776–14815.

- 46. de Meyer F, Lundgren P, de Moor G, Fiers T. Determination of user requirements for the secure communication of electronic medical record information. Int J Med Informat. 1998;49:125–130. doi: 10.1016/s1386-5056(98)00021-5.

- 47. Authors (2008a; In Press)

- 48. Nielsen J. Usability Engineering. San Diego, CA: Morgan Kaufmann; 1993.

- 49. Nielsen J. Why you need to test with 5 users. Alertbox. 2000 March 19: doc 20000319. Serial on-line.

- 50. Nielsen J. Iterative User-Interface Design. Computer. 1993;26(11):32–41.

- 51. Shneiderman B, Plaisant C. Designing the user interface: Strategies for effective human-computer interaction. 4th ed. New York, NY: Pearson/Addison Wesley; 2005.

- 52. Lewis JR. Psychometric evaluation of the PSSUQ using data from five years of usability studies. Int J Hum Comput Interact. 2002;14(3&4):463–488.

- 53. Brosnan MJ. The impact of computer anxiety and self-efficacy upon performance. J Computer Assisted Learning. 1998;14:223–234.

- 54. Nielsen J. Usability 101: Introduction to Usability. Alertbox. 2003 August 25: doc 20030825. Serial on-line.

- 55. Henderson J, Noell J, Reeves T, Robinson T, Strecher V. Developers and evaluation of interactive health communication applications. Am J Prev Med. 1999;16(1):30–34. doi: 10.1016/s0749-3797(98)00106-8.

- 56. Authors (2008b; In Press)

- 57. Kaufman DW, Kelly JP, Rosenberg L, Anderson TE, Mitchell AA. Recent patterns of medication use in the ambulatory adult population of the United States: The Slone survey. JAMA. 2002;287:337–344. doi: 10.1001/jama.287.3.337.