Abstract

Objective:

We evaluated the effect of performance feedback on acute ischemic stroke care quality in Minnesota hospitals.

Methods:

A cluster-randomized controlled trial design with hospital as the unit of randomization was used. Care quality was defined as adherence to 10 performance measures grouped into acute, in-hospital, and discharge care. Following preintervention data collection, all hospitals received a report on baseline care quality. Additionally, in experimental hospitals, clinical opinion leaders delivered customized feedback to care providers and study personnel worked with hospital administrators to implement changes targeting identified barriers to stroke care. Multilevel models examined experimental vs control, preintervention and postintervention performance changes and secular trends in performance.

Results:

Nineteen hospitals were randomized with a total of 1,211 acute ischemic stroke cases preintervention and 1,094 cases postintervention. Secular trends were significant with improvement in both experimental and control hospitals for acute (odds ratio = 2.7, p = 0.007) and in-hospital (odds ratio = 1.5, p < 0.0001) care but not discharge care. There was no significant intervention effect for acute, in-hospital, or discharge care.

Conclusion:

There was no definite intervention effect: both experimental and control hospitals showed significant secular trends with performance improvement. Our results illustrate the potential fallacy of using historical controls for evaluating quality improvement interventions.

Classification of evidence:

This study provides Class II evidence that informing hospital leaders of compliance with ischemic stroke quality indicators followed by a structured quality improvement intervention did not significantly improve compliance more than informing hospital leaders of compliance with stroke quality indicators without a quality improvement intervention.

GLOSSARY

- CI = confidence interval;
- HERF = Healthcare Evaluation and Research Foundation;
- ICC = intracluster correlation coefficient;
- ITT = intent-to-treat;
- MCCAP = Minnesota Clinical Comparison and Assessment Program;
- OR = odds ratio;
- PRISMM = Project for the Improvement of Stroke Care Management in Minnesota;
- PSC = Primary Stroke Center Certification;
- QI = quality improvement;
- RCT = randomized controlled trial;
- tPA = tissue plasminogen activator.

The well-documented evidence-practice gap in stroke care reflects a delay in the diffusion of new therapies into widespread clinical practice. For example, the nationwide rate of tissue plasminogen activator (tPA) use after acute ischemic stroke in the United States is estimated to be between 1.8% and 2.1%.1 Aspects of secondary prevention after stroke such as antithrombotic use, smoking cessation counseling, and hypertension control are also suboptimal.2–6

A previous collaborative project involving members from our group showed improved physician adherence to guideline-recommended therapies for acute myocardial infarction through performance audit and targeted feedback delivered by local opinion leaders, formal and informal education to change provider behavior, and system changes addressing existing barriers.7 In the Project for the Improvement of Stroke Care Management in Minnesota (PRISMM), we continued our work by evaluating the effectiveness of another intervention to improve acute ischemic stroke care quality in Minnesota hospitals. The goal of our research was to develop and test replicable interventions grounded in a theoretical framework of adult learning and behavior change to improve the quality of care for acute conditions.8–11

METHODS

The design was a cluster randomized controlled trial with the hospital as the unit of randomization, intervention, and inference. The design included preintervention data collection, an intervention, and postintervention data collection (figure 1).

Figure 1 Project for the Improvement of Stroke Care Management in Minnesota (PRISMM) study design

Reason for hospital attrition after randomization: 19 hospitals took part in the preintervention data collection. All hospitals had Institutional Review Board (IRB) approval at the start of PRISMM. The advent of the Health Insurance Portability and Accountability Act in Minnesota in 2002, while the study was underway, required all hospitals to reapply for IRB approval. Two hospitals, unable to obtain IRB reapproval for the second phase of data collection, dropped out.

Setting.

PRISMM was a joint effort involving the Healthcare Evaluation and Research Foundation (HERF), a nonprofit organization in St. Paul, MN, and the University of Minnesota. The Minnesota Clinical Comparison and Assessment Program (MCCAP) was a collaboration between HERF and hospitals throughout Minnesota, whereby HERF had access to hospital medical records to collect quality of care data for quality improvement (QI).12 MCCAP started in 1989 and all acute care Minnesota hospitals were invited to join MCCAP. At the time of the study, there were 45 participating MCCAP hospitals, accounting for 60% of all hospital admissions statewide. All MCCAP hospitals were invited to participate in PRISMM. Of these, 24 hospitals provided written agreements promising participation.

Power and sample sizes.

Power calculations, done to detect an overall intervention effect, assumed 12 hospitals for each study condition, an average of 100 patients per hospital, baseline performance ranging from 50% to 80%, a performance differential of 5% to 7% due to the intervention, and an intracluster correlation coefficient (ICC) ranging from 0.001 to 0.005. For a baseline performance of 50% and an ICC of 0.001, we had greater than 90% power (α = 0.05) to detect a 7% performance differential between experimental and control hospitals postintervention. Under the same assumptions, when the ICC was increased to 0.005, power was reduced to 80%. Power increased as baseline performance moved away from 50% in either direction and was symmetric about the 50% midpoint (i.e., assumptions of 20% or 80% baseline performances yielded the same power).
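The effect of the ICC on power can be illustrated with a design-effect approximation. The sketch below is not the authors' calculation, whose exact method is not stated: it assumes a simple postintervention comparison of two proportions, equal cluster sizes, and a normal approximation, and the function names are hypothetical.

```python
from statistics import NormalDist


def design_effect(cluster_size, icc):
    """Variance inflation from clustering: 1 + (m - 1) * ICC."""
    return 1.0 + (cluster_size - 1) * icc


def approx_power(p_control, p_experimental, clusters_per_arm,
                 cluster_size, icc, alpha=0.05):
    """Approximate two-sided power for comparing two proportions
    when patients are clustered within hospitals (assumed method)."""
    # Effective sample size per arm after deflating for clustering.
    n_eff = clusters_per_arm * cluster_size / design_effect(cluster_size, icc)
    # Standard error of the difference in proportions.
    variance = (p_control * (1 - p_control)
                + p_experimental * (1 - p_experimental)) / n_eff
    se = variance ** 0.5
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    z_effect = abs(p_experimental - p_control) / se
    return NormalDist().cdf(z_effect - z_crit)


# Assumptions taken from the text: 12 hospitals per arm, 100 patients each,
# 50% baseline performance, 7% differential.
for icc in (0.001, 0.005):
    print(icc, round(approx_power(0.50, 0.57, 12, 100, icc), 3))
```

Under these simplifying assumptions the approximation gives power just above 90% at an ICC of 0.001 and close to 80% at an ICC of 0.005, in line with the figures quoted above. It ignores the pretest/posttest structure of the trial.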

Approvals and consents.

PRISMM was approved by the institutional review boards of the University of Minnesota and each participating hospital. As part of the MCCAP collaborative framework, HERF had authorization to access patient records under legal business agreements with each hospital since data were collected as part of a QI initiative. Data were stripped of personal identifiers for research use.

Overall design and randomization.

A randomized-block design was used to group similar hospitals based on the presence of neurologists practicing on site as providers (rather than as consultants only). Within each block, hospitals were allocated to the experimental or control condition. Allocation was blinded except for one hospital where the medical director of the study practiced. This hospital was assigned to the experimental condition. Following allocation, study personnel in charge of the intervention were unblinded to assignment while hospitals and data abstractors remained blinded.

Study patients.

Included were patients aged 30-84 years discharged with International Classification of Diseases, 9th Revision codes 434.x and 436.x and confirmed as having an acute ischemic stroke by MD documentation. Only patients admitted through the emergency room were included. Preintervention data collection included patients discharged during July to December 2000 and postintervention data collection included discharges during July to December 2003.

Target quality of care measures for acute ischemic stroke.

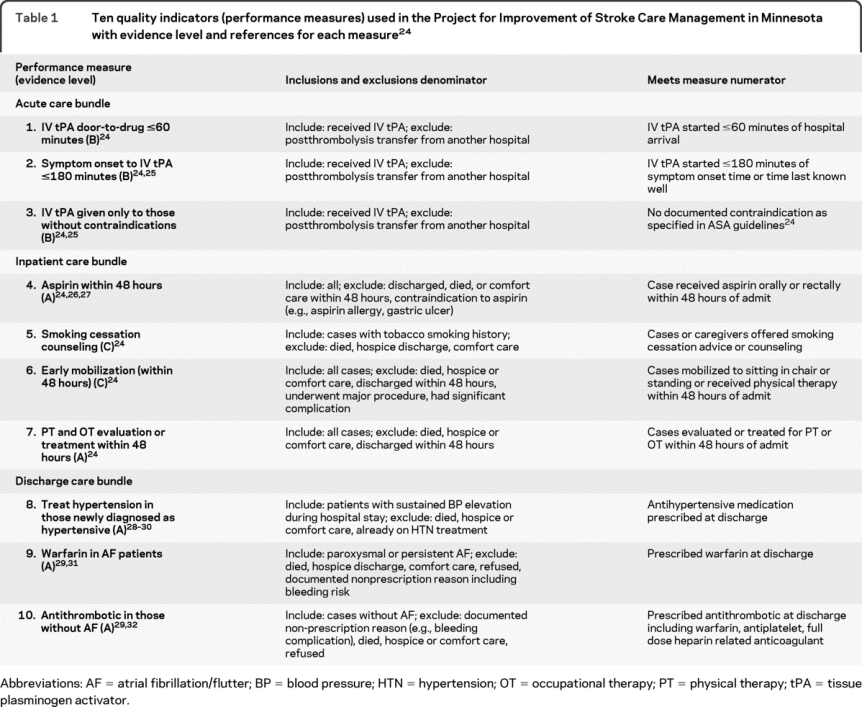

Ten measures for inclusion in our study (table 1) were identified from a list of acute stroke care performance measures developed by a national multidisciplinary expert panel.3 The measures were selected based on measurability, underlying level of evidence, and levels of noncompliance reported in other studies.2 Eligible subsets (denominator) were defined for each measure based on indications and contraindications to its application. Similarly, compliance with each measure (numerator) was defined. Stroke care quality was measured using the performance (numerator/denominator) on these 10 indicators.

Table 1 Ten quality indicators (performance measures) used in the Project for Improvement of Stroke Care Management in Minnesota with evidence level and references for each measure24

Data collection and quality control.

Data were abstracted from patient medical records by trained nurses using a laptop program and a manual. Abstraction reliability was examined by reabstraction of a randomly selected 5% sample from each abstractor's completed cases by an experienced gold standard abstractor. A 95% interrater reliability (using the κ statistic) was achieved on key variables used to calculate performance measures during both phases of data collection.
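The text does not say which variant of the κ statistic was used or how the 95% figure was derived. Purely as an illustration, Cohen's κ for one abstracted variable, comparing an abstractor with the gold standard reabstraction, could be computed as follows. The function name and the example labels are hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labeling the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed proportion of cases on which the raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance from each rater's marginal frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label]
                   for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical reabstraction of one yes/no variable on 8 cases.
abstractor = ["yes", "yes", "no", "no", "yes", "no", "yes", "yes"]
gold_standard = ["yes", "yes", "no", "no", "yes", "no", "no", "yes"]
print(cohen_kappa(abstractor, gold_standard))
```

Unlike raw percent agreement, κ discounts the agreement two abstractors would reach by chance alone.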

Control and experimental conditions.

Key elements of the QI intervention included 1) audit and written feedback of baseline performance; 2) analysis of structural and knowledge barriers to stroke care identified by provider questionnaires; 3) use of clinical opinion leaders to deliver customized feedback to care providers; 4) use of hospital management leaders to overcome identified barriers to stroke care. Control hospitals received the first item whereas experimental hospitals received all 4 elements.

Clinical opinion leader recruitment in all hospitals.

Neurologists were asked to rank order 3 colleagues with whom they often discussed ischemic stroke cases and whose personal characteristics were similar to attributes of informal opinion leaders.13 Candidates with the highest number of votes at each hospital were successfully recruited to participate in the study.

Management leader recruitment in experimental hospitals.

Each experimental hospital appointed a management leader to co-lead the project with the opinion leader. These individuals, typically nurses in management roles, were responsible for facilitating changes in care processes to address barriers in stroke care.

Physician and nurse surveys.

Nurses and physicians who cared for ischemic stroke patients were surveyed in order to identify key knowledge and skill deficits and other barriers to stroke care (questionnaires in appendix e-1 on the Neurology® Web site at www.neurology.org).

Control group.

Because of ethical concerns that a “pure” control group might be denied data on their current practice, the opinion leader of each control hospital received a report on the hospital's baseline care quality (figure 1).

Experimental group.

Salient features of the experimental intervention were as follows: 1) opinion leader-led feedback to providers and 2) management leader-led interventions to resolve barriers to stroke care. Following preintervention data collection, experimental hospital leaders took part in a retreat to review evidence and guidelines related to the target performance measures (table 1). They were then charged with presenting hospital-specific data to their neurologists, ER physicians, primary care physicians, and internists caring for stroke patients, as well as nurses and rehabilitative staff involved in stroke care. Data presented included hospital-specific baseline performance data and information on knowledge and organizational barriers to stroke care identified by the surveys. Accountability for corrective strategies was assigned during these meetings. The opinion leaders were selected for their ability to influence their peers; hence they were allowed to customize the feedback sessions to suit individual hospitals and clinical circumstances (i.e., ER vs inpatient care).

The management leaders worked on identified knowledge barriers among the multidisciplinary stroke staff (e.g., importance of warfarin in atrial fibrillation among nurses), organizational barriers such as lack of order sets and pathways, and process issues (e.g., implementing existing physical and occupational therapy orders). HERF QI staff held monthly phone calls with management leaders to discuss progress made on resolving barriers and provided copies of compiled examples of stroke care order sets, pathways, links to practice guidelines, relevant articles, and copies of tools (e.g., patient education tool).

The hypothesis underlying the hospital-specific feedback was that physicians and nurses were more likely to change their behavior if made aware that their practices fell short in providing best care as defined by clinically meaningful quality criteria agreed upon in advance by credible authorities in their hospitals' group.8,10,11

Statistical analysis.

PRISMM used a cluster-randomized, control group study design with repeated cross-sectional binary measures (pretest, posttest).14,15

Patient characteristics and hospital profiles were compared between intervention and control conditions using the χ² statistic for categorical variables and the median test for continuous non-normal variables. Survey results were analyzed qualitatively to categorize barriers to stroke care. Primary analysis was based on intent-to-treat (ITT). Secondary analysis excluded the 2 hospitals that left the study midway (as-treated analysis). We used a multilevel random-effects modeling framework to evaluate the intervention effect. This framework adjusts for the correlation between subjects (intracluster correlation) in the individual-level analysis, thereby capturing hierarchical clustering of the data (patients are nested within hospitals).14
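For a pretest/posttest design with two arms, one common way to write such a model is a random-intercept logistic regression. The form below is an illustrative sketch with assumed notation, not the exact model specification, which the text does not give.

```latex
\operatorname{logit}\,\Pr(Y_{ijt}=1)
  = \beta_0
  + \beta_1\,\mathrm{post}_t
  + \beta_2\,\mathrm{exp}_j
  + \beta_3\,(\mathrm{post}_t \times \mathrm{exp}_j)
  + u_j,
\qquad u_j \sim N(0,\sigma_u^2)
```

Here $Y_{ijt}$ indicates compliance for patient $i$ in hospital $j$ during period $t$, $\mathrm{post}_t$ is 1 for the postintervention period, $\mathrm{exp}_j$ is 1 for experimental hospitals, and $u_j$ is a hospital-level random intercept that induces the intracluster correlation. In this notation $\exp(\beta_3)$ compares the pre-to-post change in odds between experimental and control hospitals, and $\exp(\beta_1)$ in a model without the interaction term describes the change over time common to both arms.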

The 10 measures were grouped into 3 bundles (table 1): acute care, in-hospital treatment, and discharge treatment. Preintervention and postintervention performance change was examined for each bundle and for each measure. Bundle performance was calculated by weighting the contribution of each indicator by its denominator size and summing over all indicators in the bundle. The multilevel models yielded odds ratios that compared the direction and magnitude of change between experimental and control hospitals. The analyses tested the effect of our intervention by looking at the interaction between secular trends in stroke care quality and hospital assignment to experimental vs control conditions. In addition, secular trends were examined separately by looking at the main effect of the trend alone (adjusting for assignment) but without the interaction term. SAS (PROC GLIMMIX) was used for all analyses.
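The bundle calculation described above can be sketched as follows. The counts are hypothetical and the function name is an assumption. The sketch shows that weighting each indicator's performance by its denominator is equivalent to pooling numerators and denominators across the bundle.

```python
def bundle_performance(measures):
    """Denominator-weighted bundle performance.

    measures: list of (numerator, denominator) pairs, one per indicator,
    where the denominator is the number of eligible patients and the
    numerator is the number who received the indicated care.
    """
    total_eligible = sum(den for _, den in measures)
    if total_eligible == 0:
        raise ValueError("bundle has no eligible cases")
    # Each indicator's performance (num / den) is weighted by its share
    # of all eligible cases in the bundle (den / total_eligible).
    return sum((den / total_eligible) * (num / den)
               for num, den in measures if den > 0)


# Hypothetical bundle with two indicators.
example_bundle = [(30, 100), (45, 50)]
weighted = bundle_performance(example_bundle)
pooled = (sum(num for num, _ in example_bundle)
          / sum(den for _, den in example_bundle))
print(weighted, pooled)  # the two values agree up to rounding
```

A consequence of this weighting is that indicators with many eligible patients dominate the bundle score, while indicators with small eligible subsets contribute little.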

Process evaluation.

A qualitative process evaluation done following study completion examined the implementation of the intervention. In particular, postintervention adoption of standardized protocols and order sets by hospitals as well as the actual use of these in patient care were examined.

RESULTS

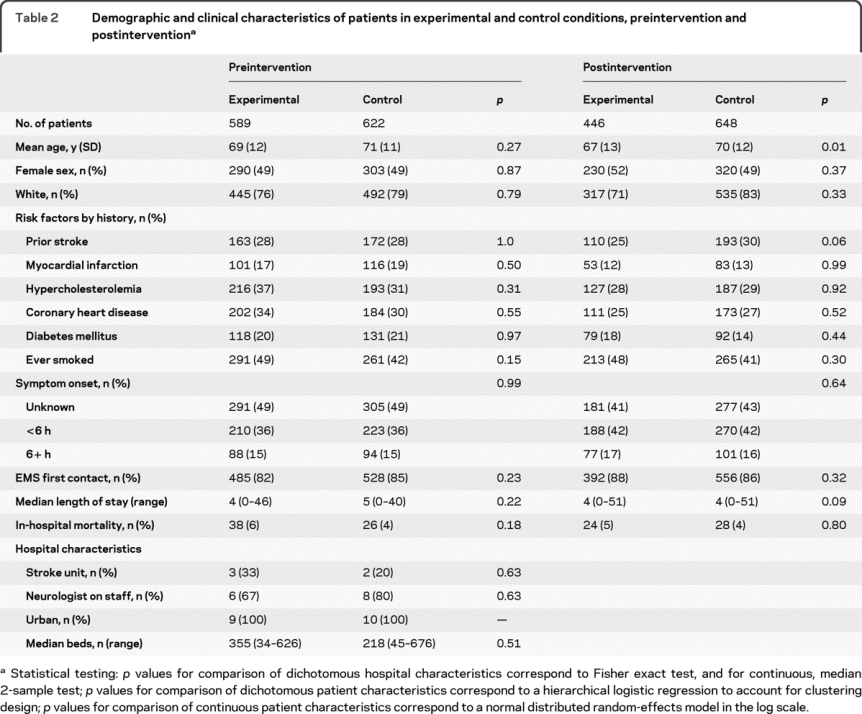

Twenty-four hospitals agreed to participate in the study from the 45 MCCAP hospitals. Nineteen hospitals participated in randomization and preintervention data collection. Postintervention data were provided by 17 hospitals. Figure 1 shows causes for hospital attrition. There were 1,211 acute ischemic stroke cases preintervention and 1,094 cases postintervention across all hospitals. Table 2 shows the demographic and clinical characteristics of experimental vs control patients before and after intervention. All hospitals were urban. Table e-1 compares clinical and other aspects of the 2 hospitals that left the study with the 17 that continued.

Table 2 Demographic and clinical characteristics of patients in experimental and control conditions, preintervention and postintervention

Table e-2 summarizes barriers to stroke care identified by preintervention provider surveys. Response to surveys was poor with only 32% of physicians and 36% of nurses responding after 3 mailings. Lack of standardized order sets was identified as a barrier to stroke care by 67% of physicians and 48% of nurses.

Intervention effect and secular trends.

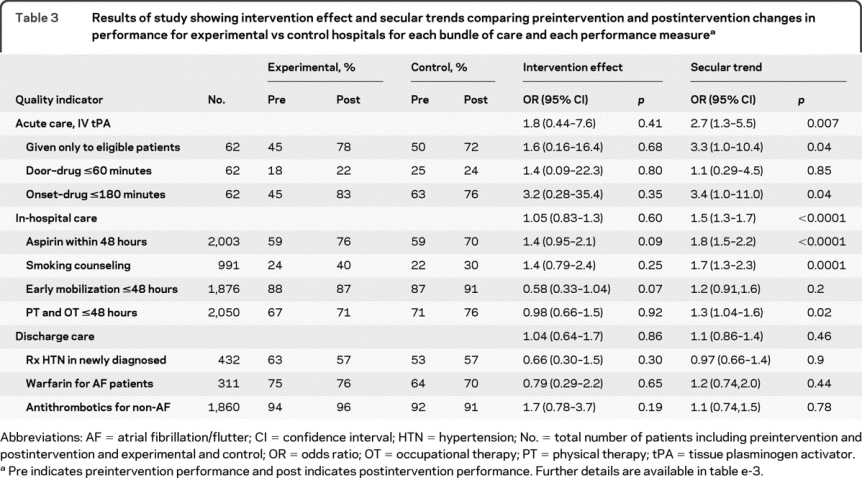

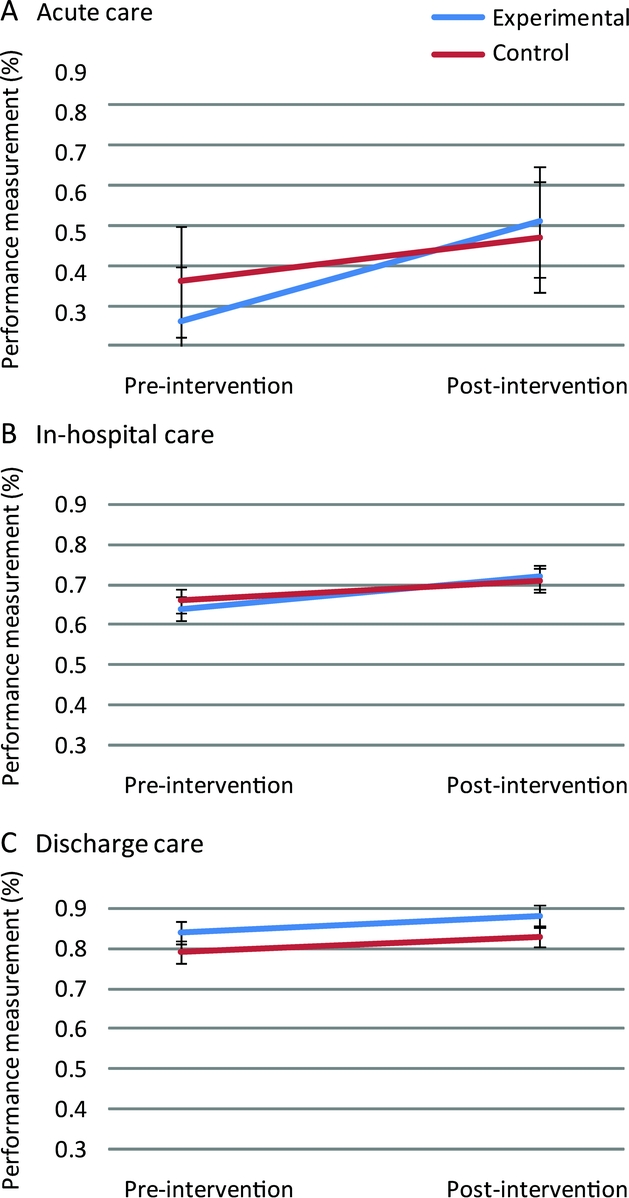

The ITT analysis indicated that there was no significant intervention effect for acute, in-hospital, or discharge care (table 3; table e-3 has details). In contrast, significant secular trends in the quality of stroke care were seen for acute care (odds ratio [OR] = 2.7; 95% confidence interval [CI] = 1.3-5.5; p = 0.007) and in-hospital care (OR = 1.5; 95% CI = 1.3-1.7; p < 0.0001) but not for discharge care (table 3). Figure 2 illustrates the interaction between hospital assignment and secular trend for each bundle.

Table 3 Results of study showing intervention effect and secular trends comparing preintervention and postintervention changes in performance for experimental vs control hospitals for each bundle of care and each performance measure

Figure 2 Change in acute (A), in-hospital (B), and discharge care (C) bundles preintervention and postintervention in experimental vs control hospitals

Bundle performance was calculated as a weighted average of performance on each measure in the bundle (table 1). The figure illustrates secular trends in both acute and in-hospital care and the lack of interaction of these trends with experimental vs control conditions (i.e., no intervention effect). Discharge care did not show any secular trend or intervention effect.

We explored the statistical implications of the 2 hospital dropouts. These 2 experimental hospitals contributed a total of 100 out of 1,211 cases (8%) to the preintervention data. The ITT and as-treated analyses were very similar, suggesting no significant bias due to the dropouts.

We examined the relation between the magnitude of postintervention improvement and baseline performance for in-hospital and discharge care measures. For all but one measure (PT, OT within 48 hours) hospitals with higher baseline performances showed a smaller magnitude of postintervention improvement and this was significant for early mobilization (Spearman rank correlation −0.6, p = 0.007) and warfarin in AF (Spearman rank correlation −0.7, p = 0.009).

Qualitative process evaluation data (table e-2) showed that despite the provision of order sets and other patient care tools to all intervention hospitals, the adoption of these was incomplete. Many control hospitals had order sets and protocols at baseline. However, the actual use of these patient care tools, even when available, was poor in both experimental and control hospitals.

Evidence level.

This study provides Class II evidence that informing hospital leaders of compliance with ischemic stroke performance measures followed by a structured QI intervention did not significantly improve compliance more than informing hospital leaders of compliance without this QI intervention. However, a potentially large benefit from the intervention for IV tPA use could not be excluded due to lack of statistical precision (OR [95% CI] = 1.8 [0.44-7.6]).

DISCUSSION

PRISMM failed to detect an intervention effect but demonstrated significant secular trends in care quality with performance changes in both experimental and control hospitals. Our results illustrate the potential fallacy of using historical controls for evaluating QI interventions as is current practice.16,17 Without contemporaneous controls, changes in performance, however substantial, cannot be causally attributed to the intervention.

The lack of a demonstrable intervention effect in the PRISMM trial could indicate that this specific intervention was ineffective or that the intervention would have worked but that its implementation was incomplete. Process evaluation data (table e-2) support the latter hypothesis. While stroke care order sets, protocols, and patient education materials were made available to all intervention hospitals, not all hospitals adopted them. Furthermore, the use of order sets in actual patient care was poor. Many control hospitals either had order sets at baseline or developed them on their own. Notably, actual order set usage appeared to be poor across the board, and future interventions should address order set use in addition to ensuring availability. Another possible explanation for the lack of an intervention effect is that it was swamped by large secular trends. There was a nationwide focus on the quality of acute stroke care coincident with PRISMM, including the publication in 2000 of the Brain Attack Coalition stroke center recommendations18 and the Primary Stroke Center Certification (PSC) program launched nationwide in 2003-2004.19 PRISMM quality indicators were similar to those proposed by these programs. Many hospitals, aware of these developments during the PRISMM trial, were preparing for PSC by 2003 when postintervention data were being collected. Four control hospitals vs 1 experimental hospital attained PSC in 2004, the first year disease-specific certification was offered for stroke (table e-3). This imbalance, unforeseen prior to trial design, persists to this day. These events likely contributed both to a dilution of the intervention effect and to the observed secular trends.

Strengths of our study design include the substantial number of participating hospitals. One weakness is that an intervention effect for the acute care bundle could have escaped detection due to loss of statistical power from hospital attrition (from 24 to 19 hospitals). Recruitment and retention of hospitals, schools, and organizations is a major difficulty in cluster-randomized trials. We were nevertheless able to detect significant secular trends in performance. A related issue is the balance of the study design after the postrandomization loss of 2 experimental hospitals. We addressed this by comparing the ITT and as-treated results, and there was no evidence of bias due to hospital dropouts. Another limitation is that at the time of study design there were no ICC estimates for acute care studies, so our power calculations were based on estimates reported from the primary care literature.20 Consequently, one contribution of our study is the reported ICC estimates for acute, in-hospital, and discharge care. These will be useful for future studies.

Cluster-randomized trials are uncommon in the neurologic literature despite being used in other fields of medicine, such as primary care, and in education, where schools are randomized to different curricula.15 Few randomized controlled trials (RCTs) have examined the efficacy of interventions to improve the quality of stroke care, particularly complex interventions targeting care providers and hospital organizations.21 PRISMM addresses this gap by systematically assessing a complex intervention based on a theory of behavior change8,10,11 to bring about improvement in care quality. Are QI interventions really necessary, given that secular trends apparently lead to improved care quality over time? Our postintervention data showed continued suboptimal performance on many measures. Hence, secular trends alone cannot be relied on to ensure optimal care, and interventions to improve care are needed.

An ongoing debate in the quality improvement field is whether RCTs such as PRISMM have a role in evaluating QI interventions given the difficulty and expense of such studies.22 While RCTs are the gold standard for evaluating simple therapeutic interventions, they have drawbacks when used to evaluate complex interventions such as PRISMM. Complex interventions have multiple interconnected parts, are difficult to implement, and aim to achieve outcomes that may be difficult to influence.21 The main drawback (in addition to the expense and effort needed for cluster-randomized studies) is that RCTs seek generalizable results; hence the goal is to strip away the local context in which the intervention is deployed. QI interventions, on the other hand, aim to bring about social change and depend heavily on the local context and interpersonal dynamics to bring about such change.22,23 Hence RCTs may miss contexts, mechanisms, and factors that affect the intervention outcome and therefore fail to identify factors that may influence generalizability.22,23 As a counterargument, RCTs are designed to guard against confounders. In PRISMM, had we used the experimental arm alone without control hospitals, we could have incorrectly concluded that there was a significant intervention effect because of the secular trends for the acute and in-hospital care bundles. We believe that a wide range of methodologies should be used to evaluate QI interventions. RCTs have a special role in that they guard against confounders and prevent fallacious conclusions about intervention efficacy. However, other evaluation paradigms that model the local context and mechanisms underlying process change are also important in identifying why certain QI interventions seem to work while others fail.22,23

AUTHOR CONTRIBUTIONS

Statistical analysis was conducted by Dr. G. Vazquez.

COINVESTIGATORS

Susan Duval, PhD (University of Minnesota, School of Public Health; study design, power analysis, randomization); Thomas Kiresuk, PhD (University of Minnesota and Minneapolis Medical Research Foundation; analysis of provider survey data); Edward Guadagnoli, PhD (Harvard Medical School, Department of Healthcare Policy; design of provider surveys); Helen Hansen (University of Minnesota, School of Nursing; development of leadership surveys, tracking leadership activities).

ACKNOWLEDGMENT

The authors thank the following contributors: Stephen Soumerai, ScD (Harvard University; consultation on research and intervention design); Andrew Van de Ven, PhD (University of Minnesota, Carlson School of Management; consultation on organizational change); Jerry Gurwitz, MD (Meyers Primary Care Institute; consultation on quality improvement trials); David Anderson (Hennepin County Medical Center; clinical opinion leader); Ronald Tarrel and Richard Shronts (Abbott Northwestern Hospital; clinical opinion leader); Allan Ingenito (Buffalo and Mercy Hospitals; clinical opinion leader); Sandra Hanson (Methodist Hospital; clinical opinion leader); Irfan Altafullah (North Memorial Hospital; clinical opinion leader); Moeen Masood (Regions Hospital; clinical opinion leader); Paul Schanfield (HealthEast St. John's, HealthEast St. Joseph's, and HealthEast Woodwinds Hospitals; clinical opinion leader); Frederick Strobl (Ridgeview Medical Center; clinical opinion leader); Lawrence Schut (St. Cloud Hospital; clinical opinion leader); Jack Hubbard (St. Francis Regional Medical Center and Fairview Ridges Hospitals); John Floberg (United Hospital; clinical opinion leader); David Dorn (Unity Hospital; clinical opinion leader); Karen Porth (Fairview Southdale Hospital; clinical opinion leader); Pat Janey (HERF; data abstraction and validation); Amy Boese (HERF; database management); and Dr. Robert L. Kane, MD (University of Minnesota, Health Policy and Management, review and comments on early manuscript drafts). This work was done in collaboration with the American Academy of Neurology; American Heart Association, Northland Affiliate; Minneapolis Medical Research Foundation; and University of Minnesota, School of Nursing and School of Public Health, Division of Epidemiology and Community Health.

DISCLOSURE

Dr. Lakshminarayan serves on a scientific advisory board for CVRx and receives research support from the NIH (K23NS051377 [PI]), the CDC (U58DP000857 [Co-I]), and the American Academy of Neurology (Fellowship 84500-2002). Dr. Borbas has received research support from the Agency of Healthcare Research and Quality. B. McLaughlin has received research support from the Agency of Healthcare Research and Quality. N.E. Morris has received research support from the Agency of Healthcare Research and Quality. Dr. Vazquez receives research support from the NIH (K23NS051377 [epidemiologist/analyst] and R01NS44976 [epidemiologist/analyst]), and from the American Heart Association. Dr. Luepker serves on scientific advisory boards for CVRx, Reynolds Foundation, Lund University, Sweden, and Claremont-McKenna University; served as Guest Editor of Circulation; receives research support from the NIH (NCRR K12 RR023247 [PI] and NHLBI R01-HL023727-25A1 [PI]), and from the CDC (U58DP000857 [Co-I]); and served as a plaintiff's expert witness in the Vioxx® case against Merck Serono. Dr. Anderson serves on a scientific advisory board for CVRx; has received travel expenses and/or honoraria for lectures or educational activities not funded by industry; serves on the event adjudication committees of AIM-HIGH (sponsored by NHLBI) and SAMMPRIS (sponsored by NINDS); and receives research support from the NIH (2U01NS38529-04A1 [Site PI], 1U10 NS058994-011R01 [Co-PI], and 1R01HD053153-01A2 [Co-I]).

Address correspondence and reprint requests to Dr. K. Lakshminarayan, Department of Neurology, MMC 295, 420 Delaware Street SE, Minneapolis, MN 55455; laksh004@umn.edu

Supplemental data at www.neurology.org

Study funding: Sponsored by the Agency for Healthcare Research and Quality (U18HS11073). Supported by the American Academy of Neurology (Clinical Training Fellowship 84500-2002 to K.L.) and the NIH K23NS051377 (K.L. and G.V.).

Disclosure: Author disclosures are provided at the end of the article.

Received July 11, 2009. Accepted in final form February 3, 2010.

REFERENCES

- 1.Kleindorfer D, Lindsell CJ, Brass L, Koroshetz W, Broderick JP. National US estimates of recombinant tissue plasminogen activator use: ICD-9 codes substantially underestimate. Stroke 2008;39:924–928. [DOI] [PubMed] [Google Scholar]

- 2.Holloway RG, Benesch C, Rush SR. Stroke prevention: narrowing the evidence-practice gap. Neurology 2000;54:1899–1906. [DOI] [PubMed] [Google Scholar]

- 3.Holloway RG, Vickrey BG, Benesch C, Hinchey JA, Bieber J, National Expert Stroke Panel. Development of performance measures for acute ischemic stroke. Stroke 2001;32:2058–2074. [DOI] [PubMed] [Google Scholar]

- 4.Kernan WN, Viscoli CM, Brass LM, Makuch RW, Sarrel PM, Horwitz RI. Blood pressure exceeding national guidelines among women after stroke. Stroke 2000;31:415–419. [DOI] [PubMed] [Google Scholar]

- 5.Reeves MJ, Arora S, Broderick JP, et al. Acute stroke care in the US: Results from 4 pilot prototypes of the Paul Coverdell National Acute Stroke Registry. Stroke 2005;36:1232–1240. [DOI] [PubMed] [Google Scholar]

- 6.Lakshminarayan K, Solid CA, Collins AJ, Anderson DC, Herzog CA. Atrial fibrillation and stroke in the general Medicare population: a 10-year perspective (1992 to 2002). Stroke 2006;37:1969–1974. [DOI] [PubMed] [Google Scholar]

- 7.Soumerai SB, McLaughlin TJ, Gurwitz JH, et al. Effect of local medical opinion leaders on quality of care for acute myocardial infarction: a randomized controlled trial. JAMA 1998;279:1358–1363. [DOI] [PubMed] [Google Scholar]

- 8.Greco PJ, Eisenberg JM. Changing physicians' practices. N Engl J Med 1993;329:1271–1273. [DOI] [PubMed] [Google Scholar]

- 9.Zimmerman JE, Shortell SM, Rousseau DM, et al. Improving intensive care: observations based on organizational case studies in nine intensive care units: a prospective, multicenter study. Crit Care Med 1993;21:1443–1451. [PubMed] [Google Scholar]

- 10.Doumit G, Gattellari M, Grimshaw J, O'Brien MA. Local opinion leaders: effects on professional practice and health care outcomes. Cochrane Database Syst Rev 2007;(1):CD000125. [DOI] [PubMed]

- 11.Shojania KG, Grimshaw JM. Evidence-based quality improvement: the state of the science. Health Aff 2005;24:138–150. [DOI] [PubMed] [Google Scholar]

- 12.Borbas C, McLaughlin DB, Schultz A. The Minnesota Clinical Comparison and Assessment Program: bridging the gap between clinical practice guidelines and patient care. In: Zablocki E, ed. Changing Physician Practice Patterns: Strategies for Success in a Capitated World. Gaithersburg, MD: Aspen Publishers Inc.; 1995:29. [Google Scholar]

- 13. Hiss RG, MacDonald R, David WR. Identification of physician educational influentials in small community hospitals. Res Med Educ 1978;17:283–288.

- 14. Ukoumunne OC, Thompson SG. Analysis of cluster randomized trials with repeated cross-sectional binary measurements. Stat Med 2001;20:417–433.

- 15. Murray DM. Design and Analysis of Group-Randomized Trials. New York: Oxford University Press; 1998.

- 16. Morgenstern LB, Bartholomew LK, Grotta JC, Staub L, King M, Chan W. Sustained benefit of a community and professional intervention to increase acute stroke therapy. Arch Intern Med 2003;163:2198–2202.

- 17. Schwamm LH, Fonarow GC, Reeves MJ, et al. Get With The Guidelines-Stroke is associated with sustained improvement in care for patients hospitalized with acute stroke or transient ischemic attack. Circulation 2009;119:107–115.

- 18. Alberts MJ, Hademenos G, Latchaw RE, et al. Recommendations for the establishment of primary stroke centers: Brain Attack Coalition. JAMA 2000;283:3102–3109.

- 19. JCAHO. Primary Stroke Center Certifications. Available at: http://www.jointcommission.org/CertificationPrograms/PrimaryStrokeCenters/. Accessed June 25, 2009.

- 20. Adams G, Gulliford MC, Ukoumunne OC, Eldridge S, Chinn S, Campbell MJ. Patterns of intra-cluster correlation from primary care research to inform study design and analysis. J Clin Epidemiol 2004;57:785–794.

- 21. Redfern J, McKevitt C, Wolfe CD. Development of complex interventions in stroke care: a systematic review. Stroke 2006;37:2410–2419.

- 22. Berwick DM. The science of improvement. JAMA 2008;299:1182–1184.

- 23. Pawson R, Tilley N. Realistic Evaluation. London: Sage Publications Ltd.; 1997.

- 24. Adams HP Jr, del Zoppo G, Alberts MJ, et al. Guidelines for the early management of adults with ischemic stroke: a guideline from the American Heart Association/American Stroke Association Stroke Council, Clinical Cardiology Council, Cardiovascular Radiology and Intervention Council, and the Atherosclerotic Peripheral Vascular disease and Quality of Care Outcomes in Research Interdisciplinary Working Groups: The American Academy of Neurology affirms the value of this guideline as an educational tool for neurologists. Stroke 2007;38:1655–1711.

- 25. The National Institute of Neurological Disorders and Stroke rt-PA Stroke Study Group. Tissue plasminogen activator for acute ischemic stroke. N Engl J Med 1995;333:1581–1587.

- 26. CAST (Chinese Acute Stroke Trial) collaborative group. CAST: Randomised placebo-controlled trial of early aspirin use in 20,000 patients with acute ischaemic stroke. Lancet 1997;349:1641–1649.

- 27. International Stroke Trial Collaborative Group. The International Stroke Trial (IST): a randomised trial of aspirin, subcutaneous heparin, both, or neither among 19435 patients with acute ischaemic stroke. Lancet 1997;349:1569–1581.

- 28. Chobanian AV, Bakris GL, Black HR, et al. The seventh report of the Joint National Committee on Prevention, Detection, Evaluation, and Treatment of High Blood Pressure: The JNC 7 report. JAMA 2003;289:2560–2572.

- 29. Sacco RL, Adams R, Albers G, et al. Guidelines for prevention of stroke in patients with ischemic stroke or transient ischemic attack: a statement for healthcare professionals from the American Heart Association/American Stroke Association Council on Stroke: Co-sponsored by the Council on Cardiovascular Radiology and Intervention: The American Academy of Neurology affirms the value of this guideline. Stroke 2006;37:577–617.

- 30. PROGRESS Collaborative Group. Randomised trial of a perindopril-based blood-pressure-lowering regimen among 6,105 individuals with previous stroke or transient ischaemic attack. Lancet 2001;358:1033–1041.

- 31. Adjusted-dose warfarin versus low-intensity, fixed-dose warfarin plus aspirin for high-risk patients with atrial fibrillation: Stroke Prevention in Atrial Fibrillation III randomised clinical trial. Lancet 1996;348:633–638.

- 32. Antiplatelet Trialists' collaboration. Collaborative overview of randomised trials of antiplatelet therapy: I: prevention of death, myocardial infarction, and stroke by prolonged antiplatelet therapy in various categories of patients. BMJ 1994;308:81–106.

Associated Data
