Abstract

Recent neuroimaging studies suggest lateralized cerebral mechanisms in the right temporal parietal junction are involved in complex social and moral reasoning, such as ascribing beliefs to others. Based on this evidence, we tested 3 anterior-resected and 3 complete callosotomy patients along with 22 normal subjects on a reasoning task that required verbal moral judgments. All 6 patients based their judgments primarily on the outcome of the actions, disregarding the beliefs of the agents. The similarity in performance between complete and partial callosotomy patients suggests that normal judgments of morality require full interhemispheric integration of information critically supported by the right temporal parietal junction and right frontal processes.

1. Introduction

Recent functional neuroimaging studies indicate that processes for ascribing beliefs and intentions to other people are lateralized to the right temporal parietal junction (TPJ) (Young, Cushman, Hauser, & Saxe, 2007; Young & Saxe, 2008). Specifically, Young and Saxe (2009) found that activity in the right TPJ, but not the left, correlated with moral judgments of accidental harms. These findings suggest that patients with disconnected hemispheres should make abnormal moral judgments of accidental harms and failed attempts to harm, because normal judgments in these cases require that information about beliefs and intentions from the right hemisphere reach the judgment processes of the left hemisphere.

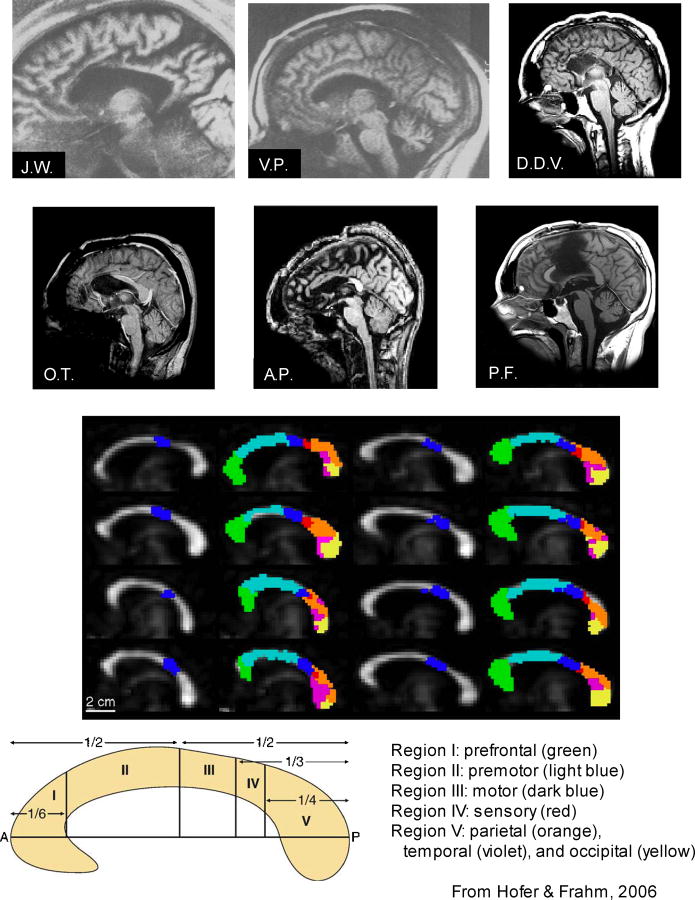

The present study examines this hypothesis by comparing the performance of 22 normal subjects with that of 6 patients: three with the corpus callosum completely severed and three with only anterior portions severed (see Table 1 and Figure 1). If normal moral judgments require transfer of information about an agent’s beliefs from the right TPJ (rTPJ), full split-brain patients should make abnormal judgments. Partial split-brain patients with an intact splenium and isthmus, however, might show normal moral reasoning because the fibers connecting the right TPJ with the left hemisphere are intact.

Table 1.

Split-Brain Patient Information

| patient | sex | handedness | year of birth | IQ | country | resection | procedure / extent | year of surgery |
|---|---|---|---|---|---|---|---|---|
| J.W. | M | R | 1954 | 96 | USA | full | 2-stage | 1979 |
| V.P. | F | R | 1953 | 91 | USA | full | 2-stage | 1980 |
| D.D.V. | M | R | 1964 | 81 | Italy | full | 2-stage | 1986/94 |
| O.T. | M | R | 1958 | 80 | Italy | partial | 1/3 anterior | 1995 |
| A.P. | M | R | 1965 | 90 | Italy | partial | 3/4 anterior | 1993 |
| P.F. | M | R | 1984 | n/a | Italy | partial | 1/3 central | 2008 |

Figure 1.

The top panel shows MR images of midsagittal brain slices from the 6 patients with either full (J.W., V.P., and D.D.V.) or partial (O.T., A.P., and P.F.) corpus callosum resections. The bottom panel, from Hofer & Frahm (2006), shows fractional anisotropy maps of the midsagittal corpus callosum from 4 female (left) and 4 male (right) subjects, together with their scheme for classifying callosal white matter projections by the brain regions from which they originate.

Patients and controls made moral judgments about scenarios used in previous neuroimaging studies (Young, Cushman, Hauser, & Saxe, 2007). In each scenario the agent’s action either caused harm or not, and the agent believed that the action would either cause harm or cause no harm. The crucial scenarios involved accidental harm (where the agent falsely believed that harm would not occur, but the outcome was harmful) and failed attempts (where the agent falsely believed that harm would occur, but the outcome was not harmful). After each scenario was read, subjects were asked to judge the agent’s action by responding aloud “permissible” or “forbidden”. For the patients, this testing did not require any lateralized procedures, because only the left hemisphere was assumed to produce the verbal responses.

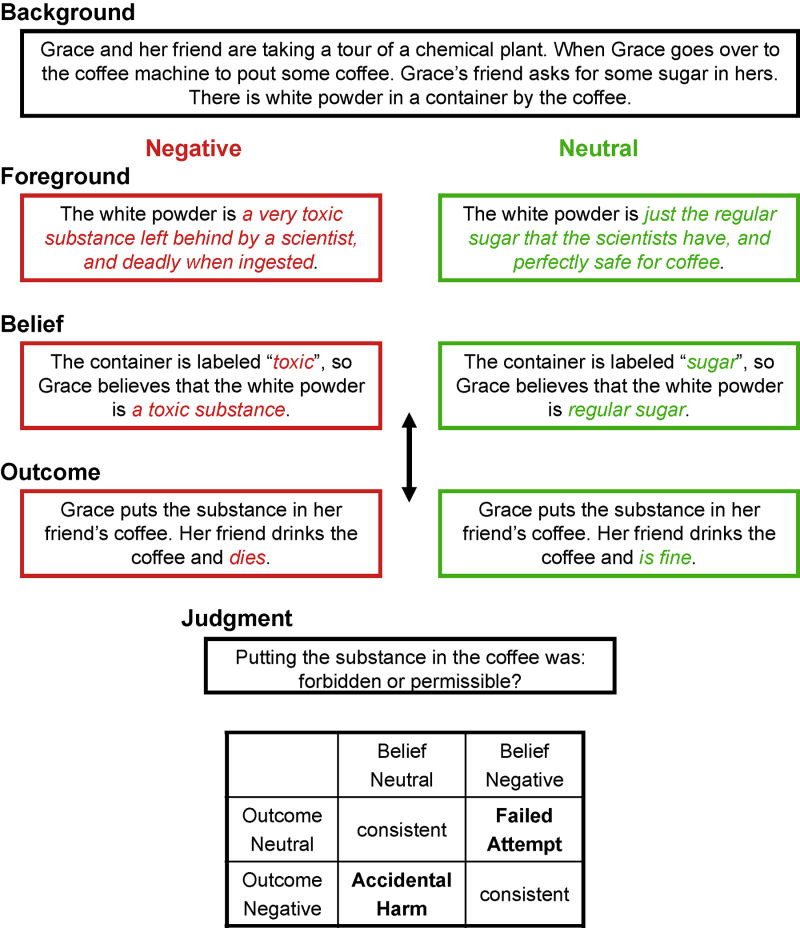

Previous studies show that normal subjects typically base their moral judgments on agents’ beliefs even when these are inconsistent with the actions’ outcomes (Young & Saxe, 2008). In the example in Figure 2, if Grace believed the powder was sugar but it was really poison, normal subjects judge Grace’s action to be morally permissible because of her neutral belief. In contrast, when Grace falsely believed the powder was poison, normal subjects judge Grace’s action to be morally impermissible because of her negative belief, even though no harm ensues. Given prior evidence that agents’ beliefs are processed by regions in the right hemisphere, whereas moral judgments are verbalized by the left hemisphere, we hypothesized that agents’ beliefs would have less impact on moral judgments when the hemispheres are disconnected, and therefore that this effect would appear only in full callosotomy patients.

Figure 2.

An example of the moral scenarios read to each patient and subject. Each scenario included background and foreshadowing information, either a negative or a neutral belief, and either a negative or a neutral outcome. The table at the bottom of the figure shows the four possible combinations of belief and outcome (adapted from Young & Saxe, 2008).

2. Methods

2.1 Participants

Twenty-eight participants (6 patients, 22 control subjects) provided prior informed consent and were treated according to APA ethical standards.

The six patients (four males, two females; ages 24-38) had undergone corpus callosum (CC) resection to treat drug-resistant epilepsy. Three patients (J.W., V.P., and D.D.V.) had complete CC resections, while the other three (O.T., A.P., and P.F.) had only partial CC resections (see Table 1). All patients were right-handed. Four resided in Italy, two in the United States.

Twenty-two neurologically normal control subjects participated in the study. Sixteen of these subjects (10 females, 6 males; ages 17-23, mean = 19.88) were recruited from an online subject pool at the University of California, Santa Barbara and paid $10 for participation. An additional six subjects (2 females, 4 males; ages 44-50) were recruited from the community to control for any differences due to age and/or level of education; they were matched to the patients by level of education (high school only or some college). English was a second language for three of these older subjects. All subjects were debriefed after the experiment.

2.2 Materials and Design

The moral reasoning task presented 48 scenarios in a 2 × 2 × 2 design (see Figure 2 for an example scenario). Each scenario began with background information and facts foreshadowing the eventual outcome, followed by the agent’s belief and the outcome. The first factor in the design was whether the agent’s action caused harm or not (outcome: O- or O+); the second factor was whether the agent believed or did not believe that the action would cause harm (belief: B- or B+); and the third factor was whether the agent’s belief was revealed before or after the description of the outcome (BO or OB). This design produced eight different versions of the task. Each version contained 6 trials of each of the eight trial types (O+B+, O+B-, O-B+, O-B-, B+O+, B+O-, B-O+, B-O-). The 6 split-brain patients and the 6 education-matched controls were each randomly assigned to a unique version of the task. For the remaining 16 normal control subjects, the 8 versions were counterbalanced across subjects. Scenarios were presented in the same order for all subjects.
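The 2 × 2 × 2 design, and the constraint that each of the eight versions contains six trials of each trial type, can be made concrete with a short Python sketch. The paper does not say how scenarios were assigned to conditions across versions; the Latin-square rotation in the hypothetical helper `build_versions` is one standard scheme that satisfies the stated constraints, and treating "+" as the harmful level of each factor is our assumption.

```python
from itertools import product

# Assumption: '+' marks the harmful/negative level of a factor and '-' the
# neutral level; the text names the factors but not which sign is which.
BELIEFS = ("B+", "B-")   # agent expects harm vs. expects no harm
OUTCOMES = ("O+", "O-")  # action causes harm vs. causes no harm
ORDERS = ("BO", "OB")    # belief revealed before vs. after the outcome


def trial_types():
    """Return the eight cells of the 2 x 2 x 2 design, e.g. 'B+O-' or 'O-B+'."""
    cells = []
    for order, belief, outcome in product(ORDERS, BELIEFS, OUTCOMES):
        cells.append(belief + outcome if order == "BO" else outcome + belief)
    return cells


def build_versions(n_scenarios=48):
    """Hypothetical Latin-square counterbalancing (not the authors' documented
    procedure): in version v, scenario s is shown in cell (s + v) mod 8."""
    cells = trial_types()
    k = len(cells)
    if n_scenarios % k:
        raise ValueError("number of scenarios must be a multiple of 8")
    return [[cells[(s + v) % k] for s in range(n_scenarios)] for v in range(k)]


if __name__ == "__main__":
    for v, version in enumerate(build_versions()):
        counts = {cell: version.count(cell) for cell in trial_types()}
        print(v, counts)  # each version: 6 trials of each of the 8 types
```

Under this rotation every scenario also appears in every cell exactly once across the eight versions, so scenario content is not confounded with condition when versions are counterbalanced across subjects.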

In half of the scenarios, the belief and the outcome were consistent with each other (neutral belief and outcome, or negative belief and outcome). In the other half, the belief and outcome were inconsistent: neutral belief and negative outcome (accidental harm) or negative belief and neutral outcome (failed attempt to harm).
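The belief/outcome conditions above, and the dependent measure used in the Results (the percentage of responses based on the agent’s belief), can be sketched in Python. The scoring rule is our reading of the text rather than a documented procedure: a response counts as belief-based when an agent with a negative belief is judged "forbidden" or an agent with a neutral belief is judged "permissible". All function names are hypothetical.

```python
from collections import defaultdict


def classify(belief, outcome):
    """Label a (belief, outcome) pair; each is 'negative' or 'neutral'."""
    if belief == outcome:
        return "consistent"
    if belief == "neutral":      # neutral belief, negative outcome
        return "accidental harm"
    return "failed attempt"      # negative belief, neutral outcome


def belief_based(belief, response):
    """Assumed rule: 'forbidden' matches a negative belief, 'permissible' a neutral one."""
    return (response == "forbidden") == (belief == "negative")


def percent_belief_based(trials):
    """trials: iterable of (belief, outcome, response) tuples.
    Returns {(belief, outcome): % of responses that follow the belief}."""
    counts = defaultdict(lambda: [0, 0])  # (belief, outcome) -> [belief-based, total]
    for belief, outcome, response in trials:
        cell = counts[(belief, outcome)]
        cell[0] += belief_based(belief, response)
        cell[1] += 1
    return {key: 100.0 * hits / total for key, (hits, total) in counts.items()}
```

Under this rule, an accidental-harm trial judged "forbidden" counts as outcome-based, which is the pattern the patients showed; in consistent trials the belief-based and outcome-based readings coincide.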

2.3 Procedure

The experimenter first read aloud instructions explaining that subjects would hear a series of fictional scenarios, each describing an action by a person that would affect somebody else, and that their task would be to judge whether the action was permissible or forbidden. “Permissible” was explained as meaning “morally acceptable,” whereas “forbidden” was described as meaning “not morally acceptable.” Experimenters emphasized that there was no right or wrong answer and that subjects should base their answers on their own moral judgment. Subjects were also encouraged to ask for clarification if they were ever confused or needed something repeated. After the experimenter read aloud each scenario, subjects indicated vocally whether they judged the action to be morally permissible or forbidden.

2.4 The Faux Pas Task

To determine whether any differences in performance reflected a general deficit in belief attribution, two of the split-brain patients (V.P. and J.W.) and the six education-matched control subjects were also tested on a social faux pas task previously used to assess theory of mind (Stone, Baron-Cohen, & Knight, 1998). Experimenters read aloud twenty scenarios, half of which involved a social faux pas (FP), such as telling somebody how much one dislikes a bowl while forgetting that this person had given the bowl as a wedding present. Subjects were then asked, “Did anyone say something they shouldn’t have said or something awkward?” If they answered “yes,” they were asked five more questions about the faux pas and two control questions assessing their comprehension of the story. If they answered “no,” they were asked only the two control questions. All responses were recorded by the experimenter.
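The branching question protocol and the detection score reported in the Results can be sketched as below. The record layout and helper names are hypothetical, and the scoring is simplified: the five follow-up questions from Stone, Baron-Cohen, and Knight (1998) are only counted here, not modeled.

```python
# Hypothetical record for one story: (story_contains_faux_pas, said_yes, controls_correct).
FP_FOLLOW_UPS = 5      # further faux pas questions after a "yes" (wording not given here)
CONTROL_QUESTIONS = 2  # comprehension questions asked after every story


def n_questions_after_screen(said_yes):
    """Number of questions that follow the screening question for one story."""
    return FP_FOLLOW_UPS + CONTROL_QUESTIONS if said_yes else CONTROL_QUESTIONS


def score(records):
    """Return (% faux pas stories detected, % stories with both controls correct)."""
    fp_stories = [r for r in records if r[0]]
    if not fp_stories:
        raise ValueError("no faux pas stories in records")
    detected = sum(1 for _, said_yes, _ in fp_stories if said_yes)
    comprehended = sum(1 for _, _, ok in records if ok)
    return 100.0 * detected / len(fp_stories), 100.0 * comprehended / len(records)
```

With ten faux pas stories, for example, six detections correspond to a 60% detection rate, while a 100% comprehension score indicates that missed detections are not explained by failing to follow the stories.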

3. Results

3.1 The Moral Reasoning Task

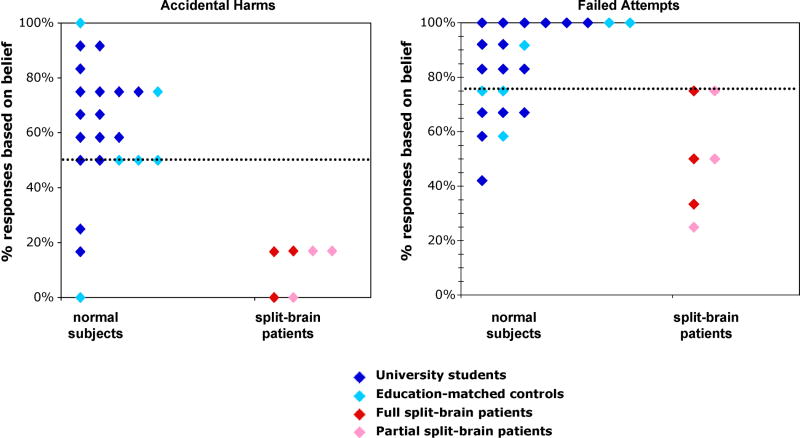

All 6 patients based their moral judgments primarily on the outcomes of the scenarios and not on the beliefs of the agents (see Figure 3 and Table 2). When beliefs and outcomes were inconsistent, the proportion of the patients’ judgments based on agents’ beliefs (mean = 31%) fell well below the 95% confidence interval of the normal subjects (mean = 72%). A repeated measures ANOVA with the percentage of belief-based responses as the dependent variable showed a significant main effect of group (patients versus normal controls; F(1,26) = 29.95, MSE = .04, p < .001), a significant main effect of whether beliefs and outcomes were consistent (F(1,26) = 105.04, MSE = .02, p < .001), and a significant interaction between consistency and group (F(1,26) = 16.89, MSE = .02, p < .001).
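In a 2 (group, between subjects) × 2 (consistency, within subjects) design like the one analyzed here, two of the three reported effects reduce to Student’s t-tests with F(1, n1 + n2 - 2) = t²: the group main effect is a pooled-variance t-test on each subject’s mean across the two consistency levels, and the group × consistency interaction is the same test on each subject’s consistent-minus-inconsistent difference. The sketch below, with hypothetical helper names, illustrates that equivalence; it is not the authors’ analysis code, and it omits the within-subject main effect, whose sum of squares depends on how unequal group sizes are weighted.

```python
import math
from statistics import mean, variance


def pooled_t(x, y):
    """Student's two-sample t-test with pooled variance; returns (t, df)."""
    nx, ny = len(x), len(y)
    df = nx + ny - 2
    sp2 = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / df
    t = (mean(x) - mean(y)) / math.sqrt(sp2 * (1 / nx + 1 / ny))
    return t, df


def group_effects(patients, controls):
    """Each argument: list of (consistent, inconsistent) scores, one pair per subject.
    Returns F for the group main effect and the group x consistency interaction."""
    def subject_means(group):
        return [(c + i) / 2 for c, i in group]

    def differences(group):
        return [c - i for c, i in group]

    t_group, df = pooled_t(subject_means(patients), subject_means(controls))
    t_inter, _ = pooled_t(differences(patients), differences(controls))
    return {"F_group": t_group ** 2, "F_interaction": t_inter ** 2, "df": (1, df)}
```

With 6 patients and 22 controls, `df` comes out as (1, 26), matching the degrees of freedom reported above; the same `pooled_t` also covers the two-group t-tests reported elsewhere in this section.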

Figure 3.

A plot of accidental harms (where the agent falsely believed that harm would not occur, but the harmful outcome did occur) and failed attempts (where the agent falsely believed that harm would occur but the harmful outcome did not occur). All the split-brain patients fall below the 95% confidence interval (the dotted line).
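Figure 3 compares each patient against a 95% confidence interval computed from the normal subjects. The paper does not state whether that interval is for the control mean or for individual control scores, so the hypothetical helper below supports both: a normal-approximation interval for the mean (z × SD / √n) and, with `individual=True`, a prediction interval for a single new score (z × SD × √(1 + 1/n)). Using z rather than a t critical value is a simplifying assumption that slightly narrows the interval for small samples.

```python
import math
from statistics import NormalDist, mean, stdev


def lower_bound(control_scores, level=0.95, individual=False):
    """Lower limit of a two-sided normal-approximation interval around the control mean."""
    n = len(control_scores)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level=0.95
    spread = math.sqrt(1 + 1 / n) if individual else math.sqrt(1 / n)
    return mean(control_scores) - z * stdev(control_scores) * spread


def falls_below(score, control_scores, level=0.95, individual=False):
    """True if a single participant's score lies below the interval."""
    return score < lower_bound(control_scores, level, individual)
```

The prediction-interval option is the more conservative comparison for a single patient, because it accounts for the spread of individual control scores rather than only the uncertainty of their mean.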

Table 2.

Percentage of responses based on agents’ beliefs (standard error in parentheses)

| | consistent: negative belief and outcome | consistent: neutral belief and outcome | inconsistent: negative belief, neutral outcome (failed attempts) | inconsistent: neutral belief, negative outcome (accidental harms) |
|---|---|---|---|---|
| normal subjects | 98% (1%) | 90% (2%) | 83% (4%) | 61% (5%) |
| split-brain patients | 92% (4%) | 74% (3%) | 51% (8%) | 11% (4%) |

The difference between the patient group and the control group was larger for accidental harm scenarios (neutral belief and negative outcome) than for failed attempt scenarios (negative belief and neutral outcome). Along with a significant main effect of negative versus neutral beliefs (F(1,26) = 43.47, MSE = .02, p < .001), there was also a significant interaction between group and belief valence (F(1,26) = 7.04, MSE = .01, p = .013); the 3-way interaction was not significant. This asymmetry might be due either to the emotional salience of negative versus neutral outcomes or to qualitative differences between neutral and negative beliefs. It has been suggested that the right TPJ mediates ascriptions of belief, whereas the ventromedial prefrontal cortex mediates ascriptions of desire and intent (Koenigs, Young, Adolphs, Tranel, Cushman, et al., 2007; Ciaramelli, Muccioli, Ladavas, & di Pellegrino, 2007; Young & Saxe, 2009). Judgments of accidental harm depend only on the former, whereas judgments of failed attempts may also involve the latter. Our results indicate that both attributions are affected, but that hemispheric disconnection might affect judgments of accidental harms more than judgments of failed attempts.

Also, the patients tended to respond “forbidden” more often than normal controls, even when the belief and outcome were both neutral (see Table 2; t(26) = 3.66, p = .001). Spontaneous verbalizations by the split-brain patients indicated that they often based judgments on safety (e.g., judging an act forbidden because “kids shouldn’t be playing near a swimming pool” even though the agent’s belief and the action’s outcome were both neutral). However, as noted above, the difference between the split-brain patients and the normal subjects in the proportion of belief-based responses was much more pronounced for the inconsistent scenarios than for the consistent scenarios.

Although previous studies have not shown a relationship between moral judgments and intelligence (Cima, Tonnaer, & Hauser, in press), one might worry that the low average IQ (87.6) of the callosotomy patients is the critical factor in their tendency not to base judgments of accidental harms and failed attempts on beliefs. For example, might confusion about the task have lowered the percentage of belief-based responses? We believe this is an unlikely explanation for three reasons. First, all of the patients performed normally on the control tasks, including the consistent scenarios, and they expressed no confusion about the task during informal debriefing sessions. Second, although we have IQ scores for only 5 of the 6 patients, there appears to be no relationship between IQ score and the percentage of belief-based responses for the inconsistent scenarios. For example, patient J.W. had the highest IQ score (96), yet he had the lowest percentage of belief-based responses (0% for accidental harms). Third, six of the control subjects were selected to match the patients for level of education (high school only or some college). Although level of education is a less reliable measure of intelligence than IQ, the difference between these two groups was nonetheless highly significant. As shown in Figure 3, the 6 education-matched controls based their responses on beliefs significantly more often than the 6 patients (t(10) = -3.51, p = .006). Further, the 6 education-matched controls did not differ from the 16 controls who were university students (t(20) = -0.53, n.s.).

Also, to rule out the possibility that patients based their judgments on the last piece of information they received, we counterbalanced the position of the belief in the scenarios (before the outcome in half, after it in the other half). When order was included as a factor in the analysis, there was no main effect of order (F(1,26) = 0.25, MSE = .03, n.s.) and no significant interaction with other factors, including group.

Unexpectedly, all of the results reported above were similar for the full and partial callosotomy patients. According to MRI scans of the partially disconnected patients, their surgical cut of the corpus callosum did not extend far enough posteriorly to transect any crossing fibers from the right TPJ (see Figure 1). While we predicted abnormal performance in the full callosotomy patients, we did not anticipate a similar effect in the partial callosotomy patients. The observed effect in the partial patients might be explained in three ways: (1) despite the MRI evidence, the cut extended far enough to affect callosal fibers crossing from the TPJ; (2) the true site for decoding beliefs is anterior to the TPJ; or (3) the TPJ is necessary for decoding beliefs but must work together with more anterior modules to function properly.

3.2 The Faux Pas Task

To determine whether the observed effects were isolated to a particular moral reasoning task, we also tested two of the full callosotomy patients on a social faux pas task. The patients failed to identify many of the faux pas: out of ten faux pas, patient V.P. detected only six, and patient J.W. only four. Both patients answered both control questions correctly in all ten of these scenarios, demonstrating intact comprehension. All control subjects in our study recognized 100% of the faux pas, as did the dorsal frontal patients (M = 100%, SD = 0, n = 4), the anterior temporal patient (M = 100%, SD = 0, n = 1), and the normal controls (M = 100%, SD = 0, n = 10) reported by Stone, Baron-Cohen, and Knight (1998); even their orbital frontal patients detected most faux pas (M = 82.5%, SD = 12.9, n = 5). Although our sample size was small, we observed a significant relationship between performance on the moral reasoning task and on the faux pas task across the 6 education-matched control subjects and the 2 callosotomy patients (r(7) = .65, p = .041, one-tailed). This finding suggests that the impairment in the callosotomy patients reflects a specific deficit in belief attribution, which in turn disrupts this specific aspect of moral reasoning.
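The correlation reported above pairs each participant’s moral-task score with their faux pas score. A minimal sketch of Pearson’s r and of the t statistic conventionally used to test it (with n - 2 degrees of freedom) follows; the helper names are hypothetical, and turning t into a one-tailed p value would additionally require the t distribution’s survival function (for example `scipy.stats.t.sf`), which is omitted to keep the example dependency-free.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)
```

Because the sample here is very small, a single participant can shift r substantially, which is one reason the text treats this relationship cautiously.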

4. Discussion

We found that full and partial callosotomy patients based their moral judgments primarily on actions’ outcomes, disregarding agents’ beliefs. Young children make similar judgments on comparable tasks (e.g., Piaget, 1965; Baird & Astington, 2004), often judging the wrongness of an action by the outcome and not by the intention of the actor. However, the patients appear to have no difficulty comprehending the scenarios, given their near-perfect responses to control questions and their explanations during debriefing sessions following testing.

It seems paradoxical that no real-life effects of impaired belief processing have been reported in the literature on callosotomy patients. There are three possible explanations: (1) Profound effects have gone unreported. This seems unlikely, though a few anecdotal but unpublished reports from spouses and family members describe personality changes after surgery. However, most reports from family members suggest no changes in mental functions or personality and early studies that thoroughly tested patients pre- and post-operatively reported no changes in cognitive functioning (LeDoux, Risse, Springer, Wilson, & Gazzaniga, 1977). (2) The tasks used in this study have little relevance to the real world. This also seems unlikely given that the effect was found in tasks commonly used for probing social cognition. (3) The impairment is compensated for by other mechanisms when patients have more time to think and process information. Some have postulated two separate systems for deriving moral judgments (Greene, 2007) and for belief reasoning (Apperly et al., 2006; Apperly & Butterfill, in press): a fast, automatic, implicit system and a slow, deliberate, explicitly-reasoned system. If the patients do not have access to the fast, implicit systems for ascribing beliefs to others, their initial, automatic moral judgments might not take into account beliefs of others.

The third explanation seems most likely to us, given the spontaneous verbalizations and rationalizations offered by some patients during testing. The spontaneous drive to interpret and rationalize events in the world is a well-known phenomenon in split-brain research (Gazzaniga, 2000), as is the rationalization of moral judgments in the general population (Haidt, 2001). In this study, it appears the patients sensed their judgments were not quite right, and they often offered rationalizations for why they judged the act as they did without any prompting. For example, in one scenario a waitress served sesame seeds to a customer while falsely believing that the seeds would cause a harmful allergic reaction. Patient J.W. judged the waitress’s action “permissible.” After a few moments, he spontaneously offered, “Sesame seeds are tiny little things. They don’t hurt nobody,” as though this justified his judgment. Out of the 24 scenarios read to patient J.W. in this testing session, he offered 5 spontaneous rationalizations for his judgments (see the supplementary material). Although these examples are not necessarily evidence of a slow, deliberate system of moral reasoning that is adjusting for a fast, automatic moral judgment, they are illustrative of how these previously hypothesized systems may operate (Haidt, 2001; Greene, 2007). The proposed explanation is also supported by evidence that high-functioning autistic children use cognitive processes like language and logical reasoning to compensate for deficits in belief reasoning or theory of mind (Tager-Flusberg, 2001).

The present study demonstrates that full and partial callosotomy patients fail to rely on agents’ beliefs when judging the moral permissibility of those agents’ actions. This finding supports the hypotheses that specialized belief-ascription mechanisms are lateralized to the right hemisphere and that disconnection from those mechanisms disrupts normal moral judgments. Moreover, the interhemispheric communication between key left and right hemisphere processes appears to be complex. Because the partial anterior callosotomy patients also showed the effect, it would appear that the right TPJ calls upon right frontal processes before communicating information to the speaking left hemisphere.

Supplementary Material

Acknowledgments

The authors thank Gabriella Venanzi, Claudia Passamonti, Chiara Pierpaoli, and Valentina Ranaldi for invaluable help in the testing of the Italian patients, and Guilia Signorini for translating the moral scenarios from English into Italian. We are also grateful to Steve Hillyard, Barry Giesbrecht, Alan Kingstone, Scott Grafton, Meghan Roarty, Tamsin German, Fiery Cushman, Rebecca Saxe, Jonathan Haidt, and Elisabetta Ladavas for insightful comments. Finally, the authors happily acknowledge that this research was supported by a grant from NINDS RO1 NS 031443-11 to MSG and MBM.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Apperly IA, Riggs KJ, Simpson A, Chiavarino C, Samson D. Is belief reasoning automatic? Psychological Science. 2006;17(10):841–844. doi: 10.1111/j.1467-9280.2006.01791.x.

- Apperly IA, Butterfill SA. Do humans have two systems to track beliefs and belief-like states? Psychological Review. in press. doi: 10.1037/a0016923.

- Baird JA, Astington JW. The role of mental state understanding in the development of moral cognition and moral action. New Directions for Child and Adolescent Development. 2004;103:37–49. doi: 10.1002/cd.96.

- Ciaramelli E, Muccioli M, Ladavas E, di Pellegrino G. Selective deficit in personal moral judgment following damage to ventromedial prefrontal cortex. Social, Cognitive, and Affective Neuroscience. 2007;2:84–92. doi: 10.1093/scan/nsm001.

- Cima M, Tonnaer F, Hauser MD. Psychopaths know right from wrong but don’t care. Social, Cognitive, and Affective Neuroscience. in press. doi: 10.1093/scan/nsp051.

- Gazzaniga MS. Cerebral specialization and interhemispheric communication: does the corpus callosum enable the human condition? Brain. 2000;123:1293–1326. doi: 10.1093/brain/123.7.1293.

- Greene JD. Why are VMPFC patients more utilitarian?: A dual-process theory of moral judgment explains. Trends in Cognitive Sciences. 2007;11(8):322–323. doi: 10.1016/j.tics.2007.06.004.

- Haidt J. The emotional dog and its rational tail: A social intuitionist approach to moral judgment. Psychological Review. 2001;108:814–834. doi: 10.1037/0033-295x.108.4.814.

- Hofer S, Frahm J. Topography of the human corpus callosum revisited – comprehensive fiber tractography using diffusion tensor magnetic resonance imaging. Neuroimage. 2006;32:989–994. doi: 10.1016/j.neuroimage.2006.05.044.

- Koenigs M, Young L, Adolphs R, Tranel D, Cushman F, et al. Damage to the prefrontal cortex increases utilitarian moral judgements. Nature. 2007;446:908–911. doi: 10.1038/nature05631.

- LeDoux JE, Risse GL, Springer SP, Wilson DH, Gazzaniga MS. Cognition and commissurotomy. Brain. 1977;100:87–104. doi: 10.1093/brain/100.1.87.

- Piaget J. The moral judgment of the child. New York: Free Press; 1965/1932.

- Stone VE, Baron-Cohen S, Knight RT. Frontal lobe contributions to theory of mind. Journal of Cognitive Neuroscience. 1998;10(5):640–656. doi: 10.1162/089892998562942.

- Tager-Flusberg H. A reexamination of the Theory of Mind hypothesis of autism. In: Burack JA, Charman T, Yirmiya N, Zelazo PR, editors. The Development of Autism: Perspectives from Theory and Research. Lawrence Erlbaum Associates; 2001.

- Young L, Saxe R. The neural basis of belief encoding and integration in moral judgment. Neuroimage. 2008;40:1912–1920. doi: 10.1016/j.neuroimage.2008.01.057.

- Young L, Saxe R. Innocent intentions: a correlation between forgiveness for accidental harm and neural activity. Neuropsychologia. 2009;47:2065–2072. doi: 10.1016/j.neuropsychologia.2009.03.020.

- Young L, Cushman F, Hauser M, Saxe R. The neural basis of the interaction between theory of mind and moral judgment. Proc Natl Acad Sci. 2007;104:8235–8240. doi: 10.1073/pnas.0701408104.
