Abstract

Background

The use of bilateral amplification is now common clinical practice for hearing aid users but not for cochlear implant recipients. In the past, most cochlear implant recipients were implanted in one ear and wore only a monaural cochlear implant processor. There has been recent interest in benefits arising from bilateral stimulation that may be present for cochlear implant recipients. One option for bilateral stimulation is the use of a cochlear implant in one ear and a hearing aid in the opposite nonimplanted ear (bimodal hearing).

Purpose

This study evaluated the effect of wearing a cochlear implant in one ear and a digital hearing aid in the opposite ear on speech recognition and localization.

Research Design

A repeated-measures correlational study was completed.

Study Sample

Nineteen adult Cochlear Nucleus 24 implant recipients participated in the study.

Intervention

The participants were fit with a Widex Senso Vita 38 hearing aid to achieve maximum audibility and comfort within their dynamic range.

Data Collection and Analysis

Soundfield thresholds, loudness growth, speech recognition, localization, and subjective questionnaires were obtained six–eight weeks after the hearing aid fitting. Testing was completed in three conditions: hearing aid only, cochlear implant only, and cochlear implant and hearing aid (bimodal). All tests were repeated four weeks after the first test session. Repeated-measures analysis of variance was used to analyze the data. Significant effects were further examined using pairwise comparison of means or in the case of continuous moderators, regression analyses. The speech-recognition and localization tasks were unique, in that a speech stimulus presented from a variety of roaming azimuths (140 degree loudspeaker array) was used.

Results

Performance in the bimodal condition was significantly better for speech recognition and localization compared to the cochlear implant–only and hearing aid–only conditions. Performance was also different between these conditions when the location (i.e., side of the loudspeaker array that presented the word) was analyzed. In the bimodal condition, the speech-recognition and localization tasks were equal regardless of which side of the loudspeaker array presented the word, while performance was significantly poorer for the monaural conditions (hearing aid only and cochlear implant only) when the words were presented on the side with no stimulation. Binaural loudness summation of 1–3 dB was seen in soundfield thresholds and loudness growth in the bimodal condition. Measures of the audibility of sound with the hearing aid, including unaided thresholds, soundfield thresholds, and the Speech Intelligibility Index, were significant moderators of speech recognition and localization. Based on the questionnaire responses, participants showed a strong preference for bimodal stimulation.

Conclusions

These findings suggest that a well-fit digital hearing aid worn in conjunction with a cochlear implant is beneficial to speech recognition and localization. The dynamic test procedures used in this study illustrate the importance of bilateral hearing for locating, identifying, and switching attention between multiple speakers. It is recommended that unilateral cochlear implant recipients, with measurable unaided hearing thresholds, be fit with a hearing aid.

Keywords: Bimodal hearing, cochlear implant, hearing aid, localization, speech recognition

Benefits that arise from having binaural hearing are found with normal-hearing and hearing-impaired individuals, with and without amplification. Whereas the type and magnitude of benefit are variable among hearing-impaired individuals, they are typically smaller than those seen for normal-hearing individuals (Gatehouse, 1976; Durlach and Colburn, 1978; Feston and Plomp, 1986; Noble and Byrne, 1990; Byrne et al, 1992; Noble et al, 1995; Naidoo and Hawkins, 1997). One type of benefit that can be quantified is an improvement in speech understanding in quiet and also in noise. This benefit is thought to arise from phenomena that include the acoustic head shadow effect, binaural summation, and binaural squelch (Litovsky et al, 2006). Another benefit that has been demonstrated is an improvement in the ability to localize sounds, which arises from the ability of the auditory system to compute the differences in timing and level of sounds that arrive at the two ears; this benefit depends on the extent to which these acoustic cues are preserved with integrity and processed appropriately by neurons in the binaural pathway. These effects are discussed at great length elsewhere (Causse and Chavasse, 1942; Hirsh, 1948; Licklider, 1948; Carhart, 1965; Dirks and Wilson, 1969; Mackeith and Coles, 1971; Durlach and Colburn, 1978; Libby, 1980; Haggard and Hall, 1982; Blauert, 1997).

The benefit received from bilateral amplification was a topic of debate for many years with regard to hearing aids. It is now standard clinical practice to fit hearing aids bilaterally. This debate is now ongoing with regard to cochlear implants. Cochlear implant recipients have two options for bilateral stimulation, the use of a cochlear implant in one ear and a hearing aid in the nonimplanted ear (bimodal hearing) or the use of a cochlear implant in each ear (bilateral cochlear implants). In recent years, there has been an increase in the amount of research on the potential benefits that might arise due to bilateral activation in cochlear implant recipients (for reviews, see van Hoesel, 2004; Ching et al, 2006; Brown and Balkany, 2007; Ching et al, 2007; Schafer et al, 2007). Bilateral cochlear implants are becoming more common but may not be an option or recommended for all adult recipients. This could be due to health issues that prevent a second surgery, lack of insurance coverage, or in many cases a notable amount of residual hearing in the nonimplanted ear. In these cases, the use of a hearing aid in the nonimplanted ear can represent a viable, affordable, and potentially beneficial option for bilateral stimulation.

Bimodal users have a complicated integration task: not only is there an asymmetry in hearing levels between the two ears, but the type of auditory input received by the two ears is also quite different. The cochlear implant provides electric stimulation, while the hearing aid provides acoustic stimulation, which in combination across the ears is likely to provide atypical interaural difference cues in time and level. These are the two primary cues used by the binaural system for segregating target speech from background noise and for localizing sounds. Past research shows that both normal-hearing and hearing-impaired individuals can learn to take advantage of atypical time and intensity cues (Bauer et al, 1966; Florentine, 1976; Noble and Byrne, 1990). It is feasible, therefore, that bimodal recipients could also obtain benefit from bimodal stimulation, despite the asymmetry in hearing between ears.

In the last few years, there has been an increasing amount of research on the effects of bimodal hearing in adult cochlear implant recipients (Shallop et al, 1992; Waltzman et al, 1992; Armstrong et al, 1997; Chute et al, 1997; Blamey et al, 2000; Tyler et al, 2002; Ching et al, 2004; Hamzavi et al, 2004; Iwaki et al, 2004; Seeber et al, 2004; Blamey, 2005; Ching et al, 2005; Dunn et al, 2005; Kong et al, 2005; Luntz et al, 2005; Morera et al, 2005; Mok et al, 2006). The majority of these studies show a benefit in speech recognition and sound localization, although the magnitude of benefit and the testing procedures used differ greatly among these studies.

One source of variability in bimodal studies is the fitting procedure, which is not always discussed in detail and/or varies among participants. Research has shown that the type of signal processing and the way a device is programmed can affect an individual’s performance with hearing aids and with cochlear implants (Skinner et al, 1982; Sullivan et al, 1988; Humes, 1996; Dawson et al, 1997; Ching et al, 1998; Skinner et al, 2002b; James et al, 2003; Skinner, 2003; Blamey, 2005; Holden and Skinner, 2006). It is thus important to carefully control and understand the extent to which signal processing and the fitting procedures affect performance in bimodal users. The first study to fit all participants using the same hearing aid and fitting procedure (NAL-NL1 prescriptive target, modified for user preference) is that of Ching et al (2004). Results showed improvement in speech recognition in noise and in sound localization in the bimodal condition compared with the cochlear implant alone condition.

An often recognized factor in hearing research is the inherent difficulty in effectively evaluating an individual’s performance in a way that reflects real-life listening situations. Typical measures of speech recognition evaluate intelligibility for a single voice, whose spatial position is static and predictable. This is unlike real-life situations in which listeners are asked to locate, identify, attend to, and switch attention between signals (Gatehouse and Noble, 2004). Accordingly, the current study attempts to move beyond static measures and investigate bimodal abilities using a dynamic speech stimulus.

In summary, the purpose of this study was to document the effects of wearing a well-fit cochlear implant and digital hearing aid in the nonimplanted ear (bimodal hearing) for a variety of measures: soundfield thresholds, loudness growth, speech recognition, localization, and subjective preferences. The speech-recognition and localization tasks were unique in that a speech stimulus presented from a variety of roaming azimuths was used.

METHOD

Participants

Nineteen adult Cochlear Nucleus 24 unilateral cochlear implant (CI) recipients were included in the study. The participants’ demographic data and hearing history information are listed in Table 1. The participants’ ages ranged from 26 to 79 years (mean = 49.8). Nine of the participants (2, 4, 6, 8, 9, 11, 13, 14, and 16) had some degree of hearing loss before the age of six. Only one participant (14), however, had a profound loss by age six. The difference between the mean age at the time the hearing loss was diagnosed and the mean age at the time the hearing loss became severe/profound was 20.9 years. For three participants (3, 14, and 17), the hearing loss became severe to profound within a year of the time it was identified.

Table 1.

Participant Demographic Information and Hearing Loss History

| Participant | Gender | Implanted Ear | Age (years) | Age Hearing Impairment Diagnosed (years) | Age of Severe-Profound Deafness (years) | Etiology |
|---|---|---|---|---|---|---|
| 1 | M | L | 71 | 26 | 36 | Unknown |
| 2 | F | R | 33 | 2 | 29 | Unknown |
| 3 | F | R | 29 | 23 | 24 | Autoimmune Disease |
| 4 | F | R | 26 | 6 | 19 | Genetic |
| 5 | M | L | 63 | 54 | 60 | Unknown |
| 6 | F | R | 55 | 5 | 46 | Genetic |
| 7 | F | R | 73 | 60 | 69 | Genetic |
| 8 | M | R | 46 | 1 | 12 | Unknown |
| 9 | M | R | 61 | 6 | 56 | Autoimmune Disease |
| 10 | M | R | 47 | 21 | 45 | Multiple Sclerosis |
| 11 | F | L | 27 | 2 | 21 | Unknown |
| 12 | F | R | 46 | 14 | 42 | Genetic |
| 13 | F | L | 46 | 1 | 38 | Unknown |
| 14 | F | L | 43 | 5 | 5 | Unknown |
| 15 | M | R | 52 | 12 | 33 | Unknown |
| 16 | M | L | 39 | 5 | 33 | Spinal Meningitis |
| 17 | F | L | 36 | 30 | 30 | Unknown |
| 18 | M | R | 79 | 29 | 76 | Unknown |
| 19 | F | L | 75 | 50 | 74 | Genetic |
| Mean | | | 49.8 | 18.5 | 39.4 | |
| SD | | | 16.7 | 18.7 | 20.4 | |

Note: Gender: 8 males, 11 females; implanted ear: 11 right, 8 left.
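The summary values quoted in the Participants text can be re-derived from the Table 1 columns. The following is a minimal sketch in Python, with the data typed in by hand from Table 1; the variable names are illustrative and are not part of the study. It assumes that the 20.9-year figure is the difference between the two rounded column means.

```python
from statistics import mean

# (age, age at diagnosis, age at severe-profound deafness) for participants 1-19,
# copied by hand from Table 1.
rows = [
    (71, 26, 36), (33, 2, 29), (29, 23, 24), (26, 6, 19), (63, 54, 60),
    (55, 5, 46), (73, 60, 69), (46, 1, 12), (61, 6, 56), (47, 21, 45),
    (27, 2, 21), (46, 14, 42), (46, 1, 38), (43, 5, 5), (52, 12, 33),
    (39, 5, 33), (36, 30, 30), (79, 29, 76), (75, 50, 74),
]

mean_age = round(mean(r[0] for r in rows), 1)
mean_diagnosed = round(mean(r[1] for r in rows), 1)
mean_severe = round(mean(r[2] for r in rows), 1)

# Assumption: the reported gap is computed from the rounded column means.
gap = round(mean_severe - mean_diagnosed, 1)

# Participants whose loss became severe-profound within a year of diagnosis.
within_a_year = [i + 1 for i, r in enumerate(rows) if r[2] - r[1] <= 1]

print(mean_age, mean_diagnosed, mean_severe, gap, within_a_year)
```

The column means match the Mean row of Table 1, and the participants flagged by the last step are the three named in the text.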

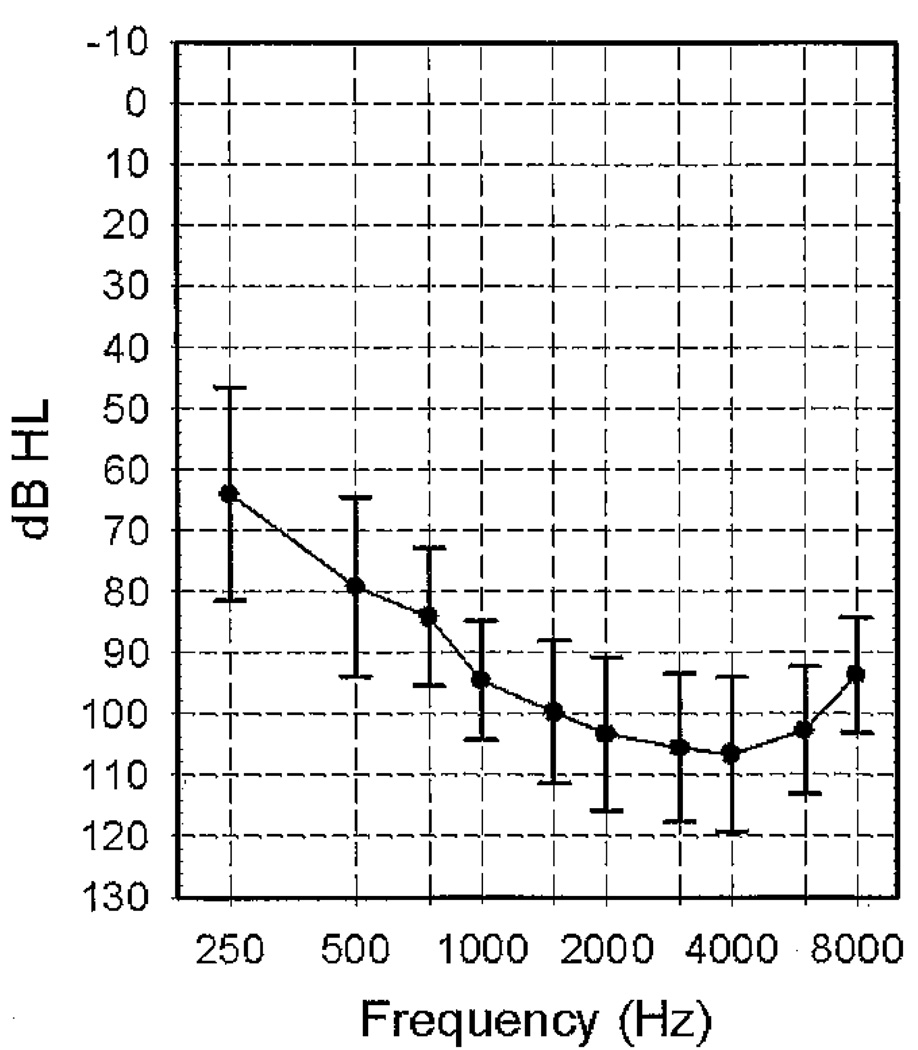

Plotted in Figure 1 are group means for unaided pure-tone thresholds at octave frequencies ranging from 250 to 8000 Hz, measured at the beginning of the study in the nonimplanted ear. Table 2 shows the mean aided preoperative scores for sentences obtained prior to the cochlear implant surgery, for both the nonimplanted (hearing aid [HA] ear) and the ear that was subsequently implanted (CI ear). Sentence testing was completed with either Central Institute for the Deaf (CID) Everyday Sentences (participants 6, 8, and 16 [Davis and Silverman, 1978]) or Hearing in Noise Test (HINT) sentences (Nilsson et al, 1994). Stimuli were presented at 60 (participants 5, 7, and 19) or 70 dB SPL. The CID sentences were used for testing when the audiologist was of the opinion that the candidate would not be able to understand the more difficult HINT sentences. The level for testing was also chosen by the audiologist. Two participants (14 and 17) were not tested with sentences in the ear that was subsequently implanted because the hearing loss was too severe. Two participants (10 and 15) were not tested in the nonimplanted ear due to time constraints at the time of the evaluation.

Figure 1.

Mean unaided thresholds (dB HL) and ±1 SD for the nonimplanted ear (hearing aid ear) measured with supra-aural earphones.

Table 2.

Preoperative Speech-Recognition Scores, Years of Hearing Aid (HA) Use, and Years of Cochlear Implant (CI) Use

| Participant | Pre-Op Sentences, HA Ear | Pre-Op Sentences, CI Ear | Years of HA Use, HA Ear | Years of HA Use, CI Ear | Years of CI Use | CI Strategy and Rate (pps/ch) | Maxima | Processor |
|---|---|---|---|---|---|---|---|---|
| 1 | 58 | 40 | 40 | 22 | 3.3 | ACE 1800 | 8 | SPrint |
| 2 | 38 | 10 | 29 | 28 | 1.0 | ACE 1800 | 8 | SPrint |
| 3 | 59 | 59 | 5 | 3 | 1.7 | ACE 1200 | 11 | Freedom |
| 4 | 60 | 15 | 19 | 18 | 1.7 | ACE 1800 | 8 | 3G |
| 5 | 59 | 52 | 8 | 7 | 1.2 | ACE 1800 | 8 | 3G |
| 6 | 17 | 3 | 46 | 41 | 4.5 | ACE 900 | 12 | 3G |
| 7 | 2 | 2 | 11 | 9 | 1.1 | ACE 1200 | 10 | 3G |
| 8 | 3 | 3 | 45 | 42 | 2.6 | ACE 1800 | 8 | SPrint |
| 9 | 45 | 15 | 8 | 28 | 2.4 | ACE 900 | 12 | 3G |
| 10 | DNT | 44 | 19 | 18 | 1.6 | ACE 1800 | 8 | 3G |
| 11 | 29 | 8 | 22 | 19 | 2.8 | SPEAK 250 | 9 | 3G |
| 12 | 52 | 31 | 31 | 30 | 1.6 | ACE 1800 | 8 | 3G |
| 13 | 25 | 36 | 41 | 5 | 2.7 | ACE 1800 | 8 | 3G |
| 14 | 45 | DNT | 37 | 33 | 4.9 | ACE 1200 | 10 | 3G |
| 15 | DNT | 2 | 35 | 35 | 3.9 | ACE 900 | 8 | 3G |
| 16 | 15 | 15 | 32 | 30 | 4.5 | ACE 1800 | 8 | SPrint |
| 17 | 53 | DNT | 3 | 1 | 2.5 | ACE 1800 | 8 | 3G |
| 18 | 4 | 10 | 49 | 49 | 0.6 | ACE 1800 | 8 | 3G |
| 19 | 5 | 8 | 0.4 | 24 | 0.4 | ACE 1200 | 8 | 3G |
| Mean | 33.5 | 20.8 | 25.3 | 23.2 | 2.4 | | | |
| SD | 22.3 | 18.8 | 15.9 | 13.8 | 1.3 | | | |

Note: Pre-op aided sentence score at 70 dB SPL with Hearing in Noise Test sentences; bold = input level 60 dB SPL; italics = Central Institute for the Deaf sentences; Years of HA Use, HA Ear = years of hearing aid experience in the nonimplanted ear prior to implantation of the opposite ear; Years of HA Use, CI Ear = years of hearing aid experience in the ear to be implanted with the cochlear implant.

The majority of participants had bilateral HA experience prior to receiving their cochlear implant (see Table 2). Only four participants (1, 9, 13, and 19) had a difference in duration of HA use between the two ears that was greater than five years. Participant 19 was the only one who had no hearing aid experience in the HA ear prior to implantation. This participant began wearing a hearing aid in the nonimplanted ear one month after receiving a cochlear implant. Four participants (13, 15, 16, and 17) were not wearing a hearing aid at the beginning of the study. These participants had stopped wearing a hearing aid after they received the cochlear implant due to lack of perceived benefit from the hearing aid.

Information about cochlear implant history and speech-processor programs that participants were using in everyday life is reported in Table 2. The participants had worn a cochlear implant for a minimum of four months to a maximum of 4.9 years (mean = 2.4 years). Sixteen participants had the CI24 receiver/stimulator with the Contour array, one participant (14) had a CI24 receiver/stimulator with a straight array, and two participants (3 and 7) had the Freedom receiver/stimulator with the Contour array. Surgeons affiliated with Washington University School of Medicine implanted the participants and reported a full insertion of the electrode array.

The advanced combination encoder (ACE) strategy (Skinner et al, 2002a) with various rates of stimulation on each electrode was used by 18 of the participants, and one participant (11) used spectral-peak (SPEAK) speech-coding strategy (Seligman and McDermott, 1995). All devices were programmed in a monopolar stimulation mode with 25 µsec/phase biphasic pulses. Most participants had the two or three most basal electrodes removed from their program to eliminate an irritating high-pitched sound or to accommodate the maximum 20 electrodes allowed in the ear level processor. The majority of participants used an ear level processor (ESPrit 3G or Freedom). The remaining participants used a body processor (SPrint).

All participants were seen pre- and postoperatively in the Adult Cochlear Implant and Aural Rehabilitation Division at Washington University School of Medicine. All programs had been optimized through a uniform behavioral fitting protocol. A detailed discussion of the fitting protocol can be found in Skinner et al (1995), Sun et al (1998), Skinner et al (1999), Holden et al (2002), Skinner et al (2002a), and Skinner et al (2002b) and is briefly summarized in the “Procedures” section. The research testing was completed using the participant’s preferred CI program that was worn in everyday life prior to the start of the study.

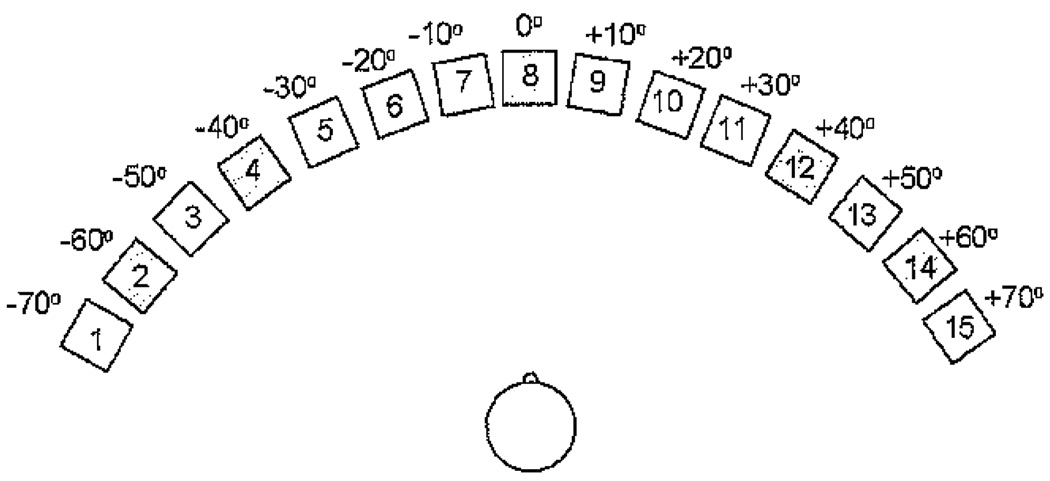

Test Environment

Participants were seated in a quiet room for programming of the HA and real-ear measurements. The soundfield threshold, speech-recognition, and localization testing were completed with the participant seated in a double-walled sound-treated booth (IAC, Model 404-A; 254 × 272 × 198 cm), and the loudness-growth testing was also completed in a double-walled sound-treated booth (IAC, Model 1204-A; 254 × 264 × 198 cm). Participants were seated with the center of the head at a distance of 1.5 m from the loudspeaker(s). For soundfield threshold and loudness-growth testing a single loudspeaker was positioned at 0 degrees (front). For speech-recognition and localization testing, participants were seated in the center of a 15-loudspeaker array; loudspeakers were positioned on a 140 degree horizontal arc with a radius of 137 cm and spaced in increments of 10 degrees from +70 degrees (left) to −70 degrees (right) at a height of 117 cm. This height was chosen to be ear level for a person who is 168 cm tall. For a diagram of the loudspeaker configuration, see Figure 2.

Figure 2.

Schematic of the 140 degree loudspeaker array used for the localized speech-recognition and localization of speech tasks, with 15 loudspeakers spaced 10 degrees apart in the frontal plane. The shaded loudspeakers represent loudspeakers that were not active during testing.

Signal Presentation Equipment

The standard clinical system developed by Cochlear Americas, which includes software (WINDPS, 126 [v2.1]), an interface unit (Processor Control Interface), and a computer, was used to program the CI speech processors prior to the start of the study. The HA was programmed at the start of the study with the Compass (v3.6) software supported by NOAH 3 on a Dell personal computer connected to the Hi-Pro interface box and programming cable.

A GSI 16 audiometer coupled to TDH-50P supra-aural earphones was used for pure-tone and unaided loudness-growth testing. Real-ear measurements were obtained with the AudioScan Verifit Real-Ear Hearing Aid Analyzer. A Dell personal computer with a sound card, a power amplifier (Crown, Model D-150), and a custom-designed mixing and amplifying network (Tucker-Davis Technologies) was utilized for presenting the soundfield warble tones and four-talker speech babble through loudspeakers (Urei Model 809 and JBL Model LSR32). For the speech-recognition and localization tasks, 15 Cambridge Sound Works Newton Series MC50 loudspeakers (frequency range from 150 to 16,000 Hz) were controlled by a Dell personal computer using Tucker-Davis Technologies hardware with a dedicated channel for each loudspeaker. Each channel included a digital-to-analog converter (TDT DD3-8), a filter with a cutoff frequency of 20 kHz (TDT FT5), an attenuator (TDT PA4), and a power amplifier (Crown, Model D-150).

Stimuli

Unaided threshold and loudness-scaling measurements were made with pulsed (1500 msec on/1500 msec off) pure tones at 250, 500, 750, 1000, 1500, 2000, 3000, 4000, 6000, and 8000 Hz. Pulsed tones were used to make the sound easier to discriminate, especially in the presence of tinnitus, and to control the duration of the signal. The female connected discourse on the AudioScan Verifit real-ear hearing aid analyzer was used for real-ear measures. It has three filters for various levels of vocal effort. The soft filter was applied to the 55 dB level; the average filter, to the 65 dB level; and the loud filter, to the 75 dB level. The female discourse was chosen because its long-term average spectra are most representative of everyday speech.

Soundfield thresholds were obtained with frequency-modulated warble tones (centered at 250, 500, 750, 1000, 1500, 2000, 3000, 4000, and 6000 Hz) with sinusoidal carriers modulated with a triangular function over the standard bandwidths. The modulation rate was 10 Hz. One-second segments of four-talker broadband speech babble were used to obtain loudness-growth judgments. This babble consisted of four individual speakers each reading a separate passage (Auditec of St. Louis recording).

For speech-recognition and localization testing, newly recorded lists of consonant–vowel nucleus–consonant (CNC) words (Peterson and Lehiste, 1962; Skinner et al, 2006) were used. The same speaker (male talker of American English) and the same recording company that had been used for the original CNC recording were also used for this recording. Skinner et al (2006) tested the equivalency of the 10 original CNC lists and the 33 new lists and found the mean scores on the new lists to be 22 percent poorer than those on the original lists. The use of this new recording allowed for greater flexibility in CNC testing with no repetition of any test list during the study and decreased the possibility of a ceiling effect. The use of a speech stimulus in a localization task has been shown to result in improved or equivalent localization compared to other noise stimuli for cochlear implant recipients (Verschuur et al, 2005; Grantham et al, 2007; Neuman et al, 2007).

Calibration

The sound pressure level of the soundfield stimuli was measured with the microphone (Bruel & Kjaer, Model 4155) of the sound-level meter (Bruel & Kjaer, Model 2230) and third-octave filter set (Bruel & Kjaer, Model 1625) at the position of the participant’s head during testing with the participant absent. The microphone was calibrated separately prior to soundfield calibration. For calibration of CNC words, the overall SPL of 50 words from list 1 was taken as the average of the peaks on the slow, root mean square, linear scale through the front loudspeaker. The carrier word “ready” was not used in the calibration. A daily check using a handheld sound-level meter (Realistic 33-2050) was completed. Each of the loudspeakers was calibrated independently with pink noise. The loudspeaker output was adjusted so that the SPL measured at the position of the participant’s head was within 1 dB for each of the 10 loudspeakers. A check of the linearity of each loudspeaker from 30 to 80 dB SPL was also completed.

An equivalent continuous sound-level measure was obtained for 10 minutes with the four-talker babble used in the loudness-growth test. The measure was 69.9 dB SPL for a presentation of 70 dB SPL. The linearity of the system was checked for the output range used in testing, that is, from 30 to 80 dB SPL.

PROCEDURES

Cochlear Implant Programming

The cochlear implant programming and aural rehabilitation regime followed in the Adult Cochlear Implant and Aural Rehabilitation Program at Washington University School of Medicine has evolved over the last 16 years and has been strongly influenced by clinical research findings (Skinner et al, 1995; Sun et al, 1998; Skinner et al, 1999). Recipients are programmed weekly for two months. First, the ACE 900 pps/ch and the ACE 1800 pps/ch are programmed, and the preferred rate is determined (Holden et al, 2002). Following this, SPEAK and CIS strategies as recommended by Skinner and colleagues (Skinner et al, 2002a; Skinner et al, 2002b) are programmed. Each cochlear implant recipient’s preferred strategy and parameters within that strategy are chosen based on the recipient’s report of the greatest benefit in everyday life and assessment in the weekly aural rehabilitation sessions.

Initially, the minimum (T) and maximum (C) stimulation levels for every electrode are programmed using ascending loudness judgments and counted thresholds. To assure that loudness is equalized across all electrodes, stimulation is swept across electrodes at T level, at C level, and at 50 percent between T and C levels. The patient judges the loudness of each sweep. This judgment should be in the “very soft” to “soft” range for a T-level sweep, in the “medium” range for the 50 percent sweep, and “medium loud” to “loud” for the C-level sweep. The programming adjustments are made to optimize the audibility of soft to loud speech so that conversational speech will be clear and comfortably loud, loud sounds will be tolerable, and soft sounds will be audible as verified by soundfield, frequency-modulated thresholds between 15 and 30 dB HL.
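The three loudness-balancing sweeps described above differ only in where they sit within each electrode's range between T and C. The following is a minimal Python sketch of that arithmetic. The function name and the T and C values are hypothetical, and the levels are treated as plain numbers rather than as the units of any particular programming software.

```python
def sweep_levels(t_levels, c_levels, fraction):
    """Return one stimulation level per electrode at a given fraction of the T-to-C range.

    fraction = 0.0 gives the T-level sweep, 1.0 the C-level sweep,
    and 0.5 the sweep at 50 percent between T and C.
    """
    return [t + fraction * (c - t) for t, c in zip(t_levels, c_levels)]


# Hypothetical T and C levels for three electrodes (illustration only).
t_levels = [100, 110, 120]
c_levels = [180, 190, 200]

t_sweep = sweep_levels(t_levels, c_levels, 0.0)
mid_sweep = sweep_levels(t_levels, c_levels, 0.5)
c_sweep = sweep_levels(t_levels, c_levels, 1.0)
print(t_sweep, mid_sweep, c_sweep)
```

The loudness judgment for each sweep is then compared with its target range ("very soft" to "soft", "medium", and "medium loud" to "loud", respectively).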

Each participant’s preferred program had been clinically evaluated within six months of the start of the study. There were no additional processing features active in any of the participants’ preferred programs (i.e., noise suppression, adaptive dynamic range optimization).

Unaided Measures in the Nonimplanted Ear

Unaided thresholds and loudness judgments were measured with TDH-50P supra-aural earphones for the nonimplanted ear. Threshold was measured in the modified Hughson-Westlake procedure (Carhart and Jerger, 1959) with ascents in a 2 dB step size and descents in a 4 dB step size. Loudness scaling was completed with an eight-point loudness scale including first hearing, very soft, soft, medium soft, medium, medium loud, loud, and very loud. The participant rated the loudness beginning 10 dB below threshold and ascending in 2 dB steps until a judgment of “very loud” was obtained. Due to the profound hearing losses of these participants, “very loud” was not reached at all frequencies.
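The bracketing logic of the threshold search, with 2 dB ascents and 4 dB descents, can be sketched as follows. This is an illustration only. The stopping rule (two responses at the same level on ascending presentations), the simulated listener, and all names are assumptions, since the text does not state the exact criterion used.

```python
def hughson_westlake(hears, start_db, up_step=2, down_step=4,
                     max_db=120, max_trials=200):
    """Simulate a modified Hughson-Westlake threshold search.

    hears(level) -> bool stands in for the listener's response.
    Assumed stopping rule: the first level that collects two responses
    on ascending presentations is taken as threshold.
    Returns None if no threshold is found within the limits.
    """
    level = start_db
    ascending = False      # was the current level reached by an ascent?
    ascending_hits = {}    # level -> number of responses on ascending presentations
    for _ in range(max_trials):
        if level > max_db:
            return None
        if hears(level):
            if ascending:
                ascending_hits[level] = ascending_hits.get(level, 0) + 1
                if ascending_hits[level] >= 2:
                    return level
            level -= down_step   # descend after a response
            ascending = False
        else:
            level += up_step     # ascend after no response
            ascending = True
    return None


# Hypothetical listener who responds to anything at or above 63 dB.
threshold = hughson_westlake(lambda level: level >= 63, start_db=50)
print(threshold)  # 64 on the 2 dB grid starting from 50 dB
```

Because the ascending step is 2 dB, the estimate is limited to that resolution; a true threshold of 63 dB is reported as 64 dB in this example.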

Hearing Aid Fitting

All participants were fit with the Senso Vita 38 hearing aid at the start of the study. The Vita 38 is a digital, three-channel hearing aid with a 138 dB SPL peak output and maximum insertion gain of 65 dB SPL in the low and high channels and 75 dB SPL in the midchannel and an adjustable crossover frequency. It has enhanced dynamic range compression that is similar to wide dynamic range compression but with a low compression threshold. The Vita 38 provides a uniform gain decrease (i.e., compression) that starts at the level of the first compression threshold (approximately 20 dB HL) and continues up to a second compression threshold, which is different for each of the three channels based on the fitting parameters. Above the second compression threshold, the gain is further reduced but maintained at a constant level (i.e., linear) in order to approximate the loudness functions of normal-hearing individuals at a high input level. This method of signal processing allows compression, working with other processing variables, to fit sound comfortably within an individual’s dynamic range with minimal distortion.

The initial hearing aid fitting was based on the fitting algorithm recommended by the manufacturer. The in situ threshold measurement using pulsed warble tones, called the Sensogram, was completed in four frequency bands (500, 1000, 2000, and 4000 Hz). These settings were verified using real-ear measurements and soundfield thresholds. The Verifit Speechmap system was used to optimize the hearing aid output within the participant’s dynamic range for soft (55 dB SPL), medium (65 dB SPL), and loud (75 dB SPL) input levels. The measured hearing aid output was programmed to be above threshold for all input levels and below the level of the “loud” rating for the 75 dB SPL input. Due to the profound hearing loss of these participants, especially in the high frequencies, the measured hearing aid output was not always above threshold. The Speech Intelligibility Index (SII [American National Standards Institute, 1997]) that is calculated by the Verifit system was also used to maximize audibility during the hearing aid fitting. In addition, the hearing aid was optimized so that soundfield thresholds were as low as possible. Adjustments to the hearing aid, however, were limited by the unaided hearing level and the maximum hearing aid gain. All participants were fit with earmolds designed for power hearing aids. Earmolds were remade from one to three times for each participant in an attempt to optimize available gain without feedback.

Soundfield Threshold and Loudness-Growth Measures

Frequency-modulated soundfield thresholds were obtained from 250 to 6000 Hz in a modified Hughson-Westlake procedure (Carhart and Jerger, 1959) with ascents in 2 dB steps and descents in 4 dB steps beginning below estimated threshold. A stimulus duration of 1–2 sec and an interstimulus interval of 20 sec were used to minimize variability that can occur with soundfield thresholds for nonlinear hearing aids (Kuk, 2000). Soundfield thresholds were obtained in three counterbalanced conditions: hearing aid monaurally, cochlear implant monaurally, and cochlear implant and hearing aid bimodally (CI&HA). Soundfield thresholds were completed at the beginning of each test session to verify the functioning of the devices.

Loudness growth was obtained in three counterbalanced conditions (HA, CI, and CI&HA). The measured threshold level for four-talker broadband speech babble was used to calculate 15 evenly spaced presentation levels from threshold to 80 dB SPL. These levels were presented in a randomized order. The participant responded to each presentation by choosing the appropriate loudness category (very soft, soft, medium soft, medium, medium loud, loud, and very loud). Each participant had one practice condition prior to testing.
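The construction of the loudness-growth presentation levels can be illustrated with a short Python sketch. The function name, the example threshold of 38 dB SPL, and the seeded shuffle are assumptions made for illustration; the text specifies only that 15 evenly spaced levels from threshold to 80 dB SPL were presented in randomized order.

```python
import random


def presentation_levels(threshold_db, max_db=80.0, n_levels=15, seed=None):
    """Return (ordered_levels, randomized_levels) in dB SPL.

    Levels are evenly spaced from the measured babble threshold up to max_db,
    then shuffled to give the presentation order.
    """
    step = (max_db - threshold_db) / (n_levels - 1)
    levels = [threshold_db + i * step for i in range(n_levels)]
    order = levels[:]
    random.Random(seed).shuffle(order)  # a seed makes the order reproducible
    return levels, order


# Hypothetical babble threshold of 38 dB SPL gives a 3 dB spacing.
levels, order = presentation_levels(38.0, seed=0)
print(levels)
print(order)
```

With a higher threshold the spacing shrinks, so listeners with a narrower range between threshold and 80 dB SPL are tested in finer steps.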

Speech-Recognition and Localization Measures

Testing was conducted separately for speech recognition and sound localization. On both tasks, the loudspeakers that were positioned in 10 degree increments were numbered from 1 (−70 degrees azimuth) to 15 (+70 degrees azimuth). The participants were not informed that five loudspeakers were inactive, so all loudspeakers were included in the participants’ responses. On the speech-recognition task, the participant repeated words that were presented randomly from the loudspeaker array at a roving level of 60 dB SPL (±3 dB). Stimuli were from two lists of 50 CNC words, and testing was conducted for each of the three listening conditions (HA, CI, and CI&HA). On each trial, the stimulus was presented for 1–2 sec with an interstimulus interval of 20 sec. Presentation of the 50 words was divided among 10 of the loudspeakers, referred to as the active loudspeakers, including #1 and 15 (±70 degrees azimuth), #3 and 13 (±50 degrees azimuth), #5 and 11 (±30 degrees azimuth), #6 and 10 (±20 degrees azimuth), and #7 and 9 (±10 degrees azimuth). The remaining five loudspeakers, #2 and 14 (±60 degrees azimuth), #4 and 12 (±40 degrees azimuth), and #8 (0 degrees azimuth), were not active but were visible to the participants. The participant was allowed to turn toward the loudspeaker from which the word was perceived and then return to 0 degrees azimuth prior to the next presentation. The condition order was counterbalanced, and the lists were randomly assigned.
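The loudspeaker numbering and trial structure can be made concrete with a small Python sketch. The mapping from loudspeaker number to azimuth follows directly from the numbering of 1 (−70 degrees) to 15 (+70 degrees) in 10 degree steps. The equal split of words across active loudspeakers, the uniform distribution of the level rove, and all names are assumptions for illustration only.

```python
import random

ACTIVE = [1, 3, 5, 6, 7, 9, 10, 11, 13, 15]            # loudspeakers that present words
INACTIVE = [n for n in range(1, 16) if n not in ACTIVE]  # visible but silent


def azimuth(speaker):
    """Map loudspeaker number (1-15) to azimuth in degrees (-70 to +70)."""
    return (speaker - 8) * 10


def trial_plan(words, base_db=60.0, rove_db=3.0, seed=None):
    """Assign each word a loudspeaker, azimuth, and roved presentation level.

    Assumes len(words) is a multiple of the number of active loudspeakers,
    so each loudspeaker presents the same number of words.
    """
    rng = random.Random(seed)
    speakers = ACTIVE * (len(words) // len(ACTIVE))
    rng.shuffle(speakers)
    return [(word, spk, azimuth(spk), base_db + rng.uniform(-rove_db, rove_db))
            for word, spk in zip(words, speakers)]


words = [f"word{i}" for i in range(50)]  # placeholder tokens, not actual CNC items
plan = trial_plan(words, seed=1)
print(INACTIVE, [azimuth(n) for n in INACTIVE])
print(plan[:3])
```

Under this numbering the inactive loudspeakers fall at 0, ±40, and ±60 degrees, and a 50-word list yields five presentations per active loudspeaker.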

The sound-localization task was also completed using CNC words, with stimuli presented randomly from the same set of 10 loudspeakers in the array. The same procedure used in the roaming speech-recognition task was followed, except the participants were asked to state the number of the loudspeaker from which the word was perceived to be coming. The condition order (HA, CI, and CI&HA) was counterbalanced, and the lists were randomly assigned to each condition. These tasks utilized words as targets in order to increase the difficulty of the tasks and thus minimize the likelihood that a ceiling effect would be observed. In addition, a speech stimulus was selected since it is generally more representative of stimuli that one encounters in everyday listening situations.

Questionnaires

At the conclusion of the study the Speech, Spatial, and Qualities of Hearing Scale (SSQ) questionnaire, version 3.1.1 (Gatehouse and Noble, 2004; Noble and Gatehouse, 2004), was taken home for completion by each participant. The purpose and content of the questionnaire were discussed with each participant prior to completion of the questionnaire.

Each participant completed an additional Bimodal Questionnaire designed for this study. Participants were asked to report the percentage of time they used the HA with the CI, if they would continue to use the HA after the conclusion of the study, and if they heard differently when listening bimodally. If the participant answered yes to the last question, he or she was asked to describe how the sound was different. Participants were also asked to report where sound was perceived for each of the conditions (HA, CI, and CI&HA). The choices were “Right Ear,” “Left Ear,” “In Your Head,” and “Center of Your Head.”

Schedule

At the initial visit, the participant’s audiogram and unaided loudness growth were measured, and an earmold impression was made. The participants were fit with the HA and earmold approximately two weeks later. The earmold was remade and/or the HA was reprogrammed over three to four visits spaced one to two weeks apart. After four to six weeks of optimized HA use, the participants completed the soundfield threshold, loudness-growth, roaming speech-recognition, and localization of speech testing. The testing required four to six hours to complete and was divided into two to three sessions to prevent participant fatigue. All measures were repeated approximately four to six weeks later.

The protocol was approved by the Human Studies Committee at Washington University School of Medicine (#04-110). Each participant signed an informed consent outlining the test procedures prior to enrollment in the study. The participants were allowed to keep the HA and earmold at the conclusion of the study.

Data Analysis

Major hypotheses were tested using repeated-measures analysis of variance. Mauchly’s test was used to assess the sphericity assumption. If the test of sphericity was violated, the Greenhouse-Geisser correction was used to adjust the degrees of freedom for the averaged tests of significance. Significant effects were further examined using pairwise comparison of means or in the case of continuous moderators, regression analyses. Correlations were used to determine the test–retest reliability between measures and sessions.
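The degrees-of-freedom adjustment can be illustrated with a brief Python sketch. It assumes three listening conditions and the 19 participants of this study; the function name is hypothetical, and the epsilon value of 0.63 is an illustrative input rather than output from the analysis software.

```python
def greenhouse_geisser_df(n_conditions, n_participants, epsilon=1.0):
    """Return (effect df, error df) for a one-way repeated-measures ANOVA.

    epsilon = 1.0 leaves the degrees of freedom unadjusted (sphericity met);
    a smaller Greenhouse-Geisser epsilon scales both values down.
    """
    lower_bound = 1.0 / (n_conditions - 1)
    if not lower_bound <= epsilon <= 1.0:
        raise ValueError("epsilon must lie between 1/(k - 1) and 1")
    df_effect = n_conditions - 1
    df_error = (n_conditions - 1) * (n_participants - 1)
    return epsilon * df_effect, epsilon * df_error


unadjusted = greenhouse_geisser_df(3, 19)
adjusted = greenhouse_geisser_df(3, 19, epsilon=0.63)
print(unadjusted)
print(tuple(round(df, 2) for df in adjusted))
```

The unadjusted values are 2 and 36; with an epsilon of 0.63 they shrink to about 1.26 and 22.68, so fractional degrees of freedom in a reported F test indicate that the correction was applied.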

RESULTS

Test-Retest Reliability

The test measures were administered twice approximately four–six weeks apart to assess test–retest reliability. The correlation coefficients for the roaming speech-recognition word score were very high and almost equivalent across conditions: HA condition, r = 0.96; CI condition, r = 0.95; CI&HA condition, r = 0.94. Correlation coefficients were also computed for the root mean square (RMS) errors obtained from the sound-localization task. Although these were slightly lower and more variable than the speech-recognition correlation values, the values were still quite high: HA condition, r = 0.90; CI condition, r = 0.80; CI&HA condition, r = 0.87. These correlation values suggest that, for these tasks, intrasubject variability is generally small within the four- to six-week period used in this study.

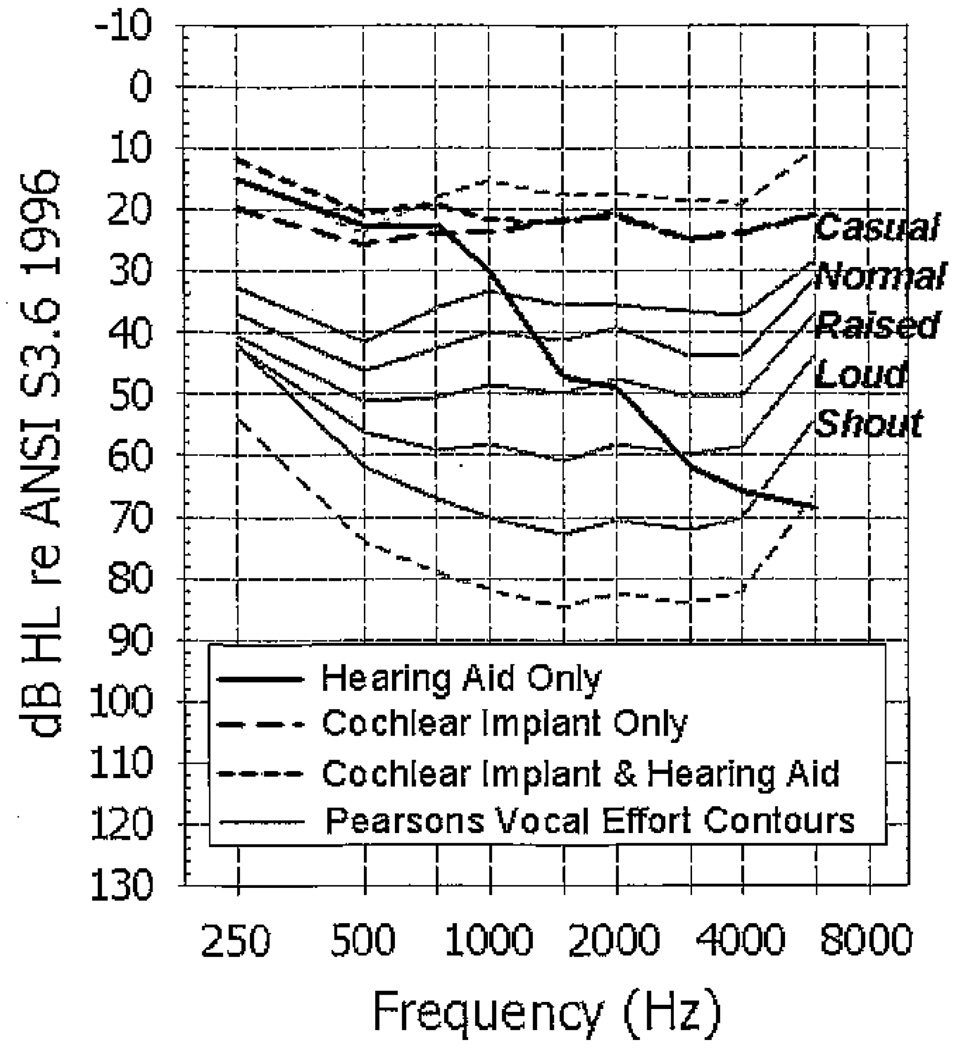

Soundfield Thresholds

For the warble-tone soundfield thresholds, mean values in dB HL for each condition are plotted in Figure 3. The soundfield thresholds are plotted in relation to long-term average speech spectra (solid gray lines) across men, women, and children for speech spoken at five vocal efforts (casual, normal, raised, loud, and shout [Pearsons et al, 1977]) measured in 1/3-octave band levels. The upper dashed gray line represents the long-term average speech level that is 18 dB less intense than the “casual” contour, and the lower dashed gray line represents the long-term average speech level that is 12 dB more intense than the “shout” contour (Skinner et al, 2002b). With the HA, the frequencies above 1000 Hz are not audible at a “casual” vocal effort (solid black line). It was expected that the soundfield thresholds would be elevated in the HA condition given the unaided thresholds in the HA ear. All participants had soundfield thresholds through 1500 Hz with their HA. The CI thresholds (large dashed black line) were audible across the frequency range well below the level of the long-term average “casual” vocal effort. The additional low-frequency amplification provided by the HA contributes to the 1–3 dB of binaural loudness summation seen in the bimodal CI&HA condition (small dashed black line).

Figure 3.

Mean soundfield thresholds (dB HL) for the three listening conditions. Long-term average speech spectra across men, women, and children for speech spoken at five vocal efforts (casual, normal, raised, loud, and shout [Pearsons et al, 1977]) are also shown. The upper gray dashed line represents the speech energy that is 18 dB less intense than the “casual” contour, and the lower gray dashed line represents the speech energy that is 12 dB more intense than the “shout” contour.

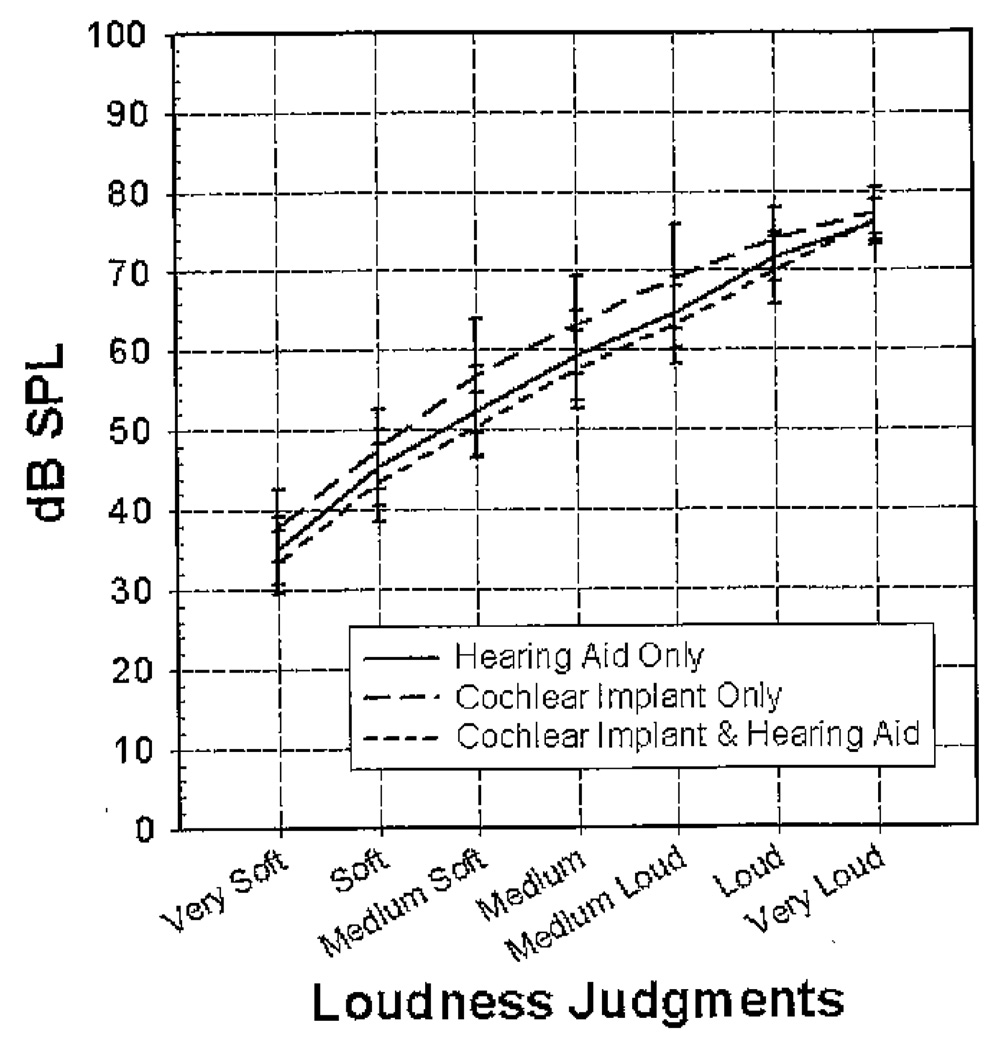

Loudness Growth

Mean loudness judgments (dB SPL) for four-talker broadband babble in the three conditions are shown as a function of level in Figure 4. In all conditions, there is steady growth of loudness from “very soft” to “very loud.” These curves, however, show distinct patterns of loudness growth for each of the conditions. Binaural loudness summation occurs at loudness categories from “very soft” to “loud” in the bimodal condition. The lower input levels in the bimodal condition suggest that the participant is receiving additional loudness with the HA in the opposite ear. The CI&HA condition has the lowest SPL for all loudness categories up to “very loud” (i.e., equivalent speech levels were rated louder than in the monaural conditions). The HA condition has an increased audibility of sound (2–5 dB lower) from “very soft” to “medium loud” compared to the CI condition. The CI condition had the highest input levels for each loudness category. All the conditions have almost identical “very loud” levels. The highest presentation level was 80 dB SPL, so this may have limited the rating of “very loud” for some participants.

Figure 4.

Mean loudness judgments (dB SPL) and ±1 SD measured with four-talker babble for the three listening conditions.

Roaming Speech Recognition

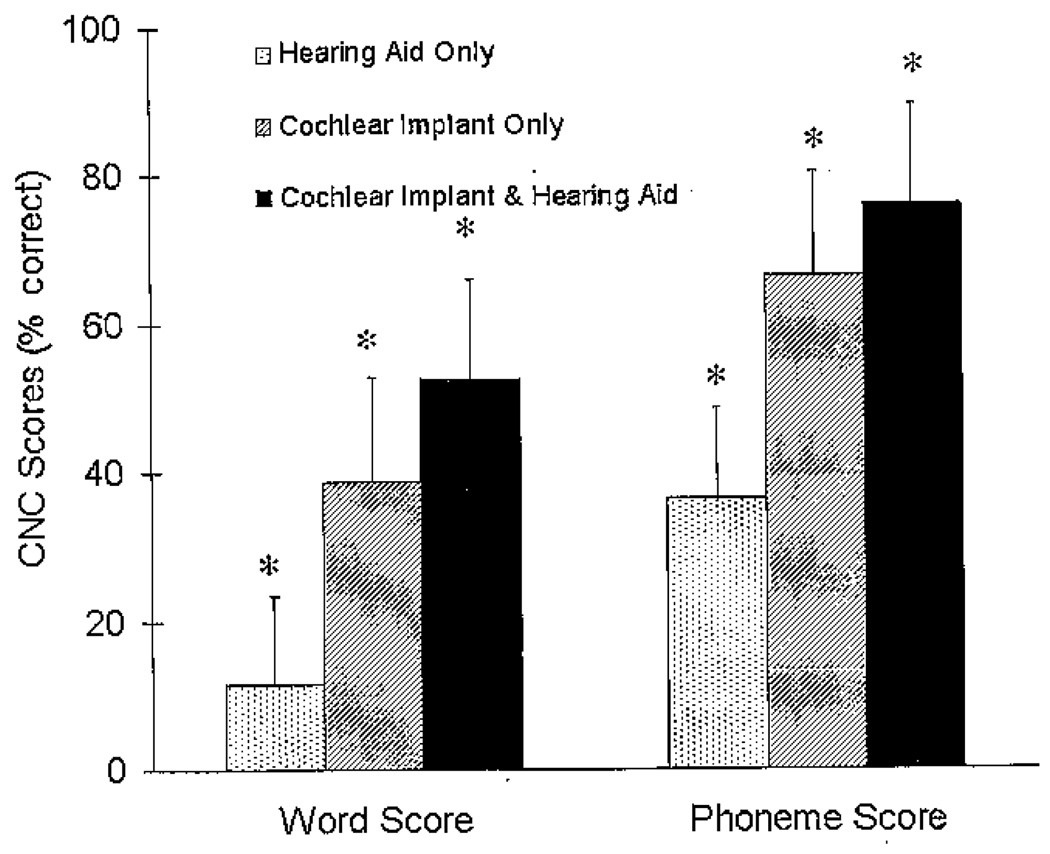

The repeated-measures analysis of variance for the roaming speech-recognition task showed a significant condition effect for words (F[1.26, 22.68] = 59.42, p < .001) and phonemes (F[1.05, 18.83] = 57.06, p < .001). The mean word and phoneme scores for each of the three conditions in percent correct are shown in Figure 5, and individual word scores are shown in Table 3. The scores for Session 1 and Session 2 were collapsed across session because there was no session effect and no session by condition interaction. The scores are, therefore, reported as averaged means in percent correct across sessions for each condition. The HA condition has much lower mean scores (word = 11.58%, phoneme = 36.55%) than the CI (word = 38.84%, phoneme = 66.44%) or CI&HA (word = 52.55%, phoneme = 76.01%) conditions.

Figure 5.

Mean consonant–nucleus vowel–consonant (CNC) word scores (left side of figure) and phoneme scores (right side of figure) in percent correct and +1 SD for the three listening conditions for the roaming speech-recognition task. The asterisks represent a significant difference between conditions (p < .05).

Table 3.

Individual Participant Consonant-Nucleus Vowel-Consonant Word Scores (% Correct) for Each Condition Collapsed across Sessions

| Participant | Hearing Aid (HA) | Cochlear Implant (CI) | Cochlear Implant and Hearing Aid (CI&HA) | CI&HA Score Minus Monaural HA Plus Monaural CI Scores |
|---|---|---|---|---|
| 1 | 16 | 60 | 69 | −6.5 |
| 2 | 46 | 24 | 60 | −9.5 |
| 3 | 12 | 63 | 78 | 3.0 |
| 4 | 6 | 67 | 76 | 4.0 |
| 5 | 11 | 40 | 53 | 2.5 |
| 6 | 12 | 41 | 47 | −6.0 |
| 7 | 3 | 53 | 66 | 10.0 |
| 8 | 1 | 31 | 40 | 8.5 |
| 9 | 3 | 52 | 63 | 9.0 |
| 10 | 18 | 41 | 68 | 8.5 |
| 11 | 31 | 17 | 52 | 4.0 |
| 12 | 5 | 39 | 45 | 2.0 |
| 13 | 4 | 25 | 30 | 2.0 |
| 14 | 5 | 33 | 33 | −4.0 |
| 15 | 6 | 27 | 41 | 8.0 |
| 16 | 2 | 49 | 49 | −1.5 |
| 17 | 20 | 21 | 43 | 2.0 |
| 18 | 22 | 43 | 62 | −3.5 |
| 19 | 2 | 17 | 27 | 8.0 |
| Mean | 11.58 | 38.84 | 52.55 | 2.13 |
| SD | 11.71 | 15.28 | 15.18 | 5.89 |

Note: The last column shows the measured CI&HA condition score minus a calculated score that was obtained by adding the HA condition and CI condition scores together (HA + CI).

The pairwise comparisons with a Bonferroni adjustment for multiple comparisons revealed that for both word and phoneme scores, conditions were significantly different from each other (p < .001). The range of improvement in speech recognition in the bimodal condition was from 0 to 27 percent with a mean improvement of 14 percent compared to the best monaural condition. Two participants (2 and 11) had higher scores with their HA monaurally than with their CI monaurally. Both of these participants had asymmetric hearing when they received their CI and had their poorer ear implanted. Two participants (14 and 16) had no difference in scores between the CI and CI&HA conditions. These two participants had minimal speech recognition with their hearing aids alone, scoring 5 percent and 2 percent, respectively, on CNC words. No participant had a poorer score with the CI&HA together than with the HA or CI monaurally.

The difference between the bimodal word score (CI&HA) and the summed monaural conditions (HA + CI) is shown in the last column of Table 3. The word scores when the HA and CI monaural scores are added together are almost equivalent to the word scores found in the bimodal condition. The difference scores range from 0 to ±10 percent with a mean difference of 2 percent. This suggests that the bimodal benefit found in speech recognition could possibly be estimated based on monaural HA and monaural CI speech-recognition scores.
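The additivity comparison can be sketched in Python from the word scores printed in Table 3. Because the printed scores are rounded to whole percentages, the differences computed here can deviate by up to about a point from the last column of the table; the variable and function names are hypothetical.

```python
# (HA, CI, CI&HA) CNC word scores in percent correct, as printed in Table 3.
TABLE_3 = {
    1: (16, 60, 69), 2: (46, 24, 60), 3: (12, 63, 78), 4: (6, 67, 76),
    5: (11, 40, 53), 6: (12, 41, 47), 7: (3, 53, 66), 8: (1, 31, 40),
    9: (3, 52, 63), 10: (18, 41, 68), 11: (31, 17, 52), 12: (5, 39, 45),
    13: (4, 25, 30), 14: (5, 33, 33), 15: (6, 27, 41), 16: (2, 49, 49),
    17: (20, 21, 43), 18: (22, 43, 62), 19: (2, 17, 27),
}


def additivity_difference(ha, ci, bimodal):
    """Measured bimodal score minus the sum of the two monaural scores."""
    return bimodal - (ha + ci)


differences = {p: additivity_difference(*scores) for p, scores in TABLE_3.items()}
mean_difference = sum(differences.values()) / len(differences)

print(min(differences.values()), max(differences.values()))
print(round(mean_difference, 2))
```

A difference near zero means that the sum of the monaural scores approximates the bimodal score for that participant.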

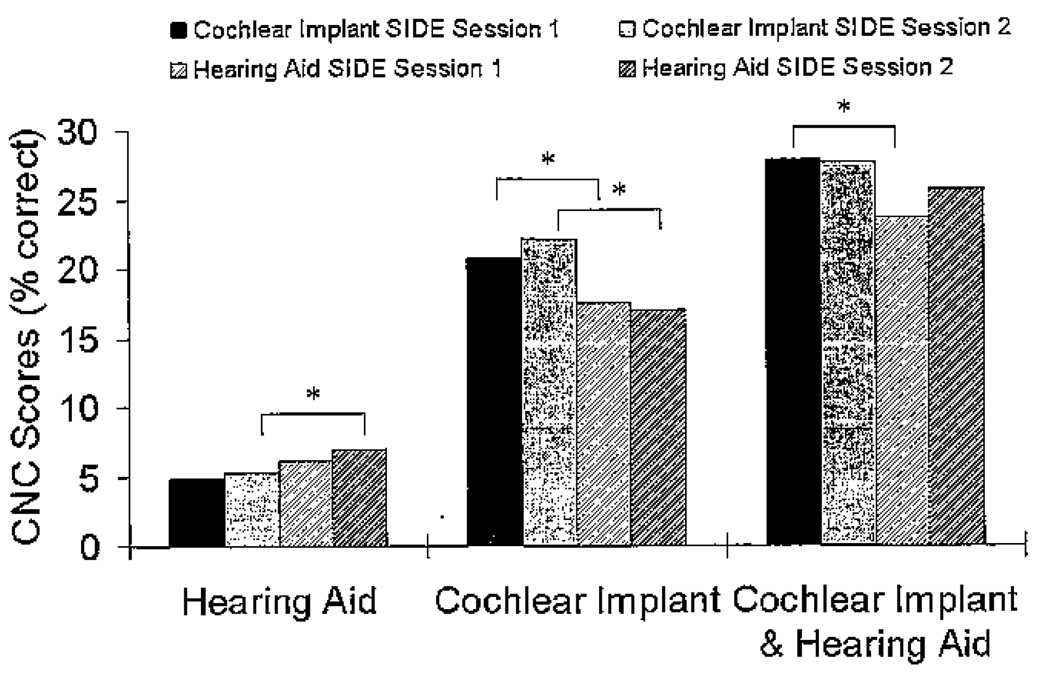

The roaming speech-recognition word scores were further analyzed based on the side of the loudspeaker array that presented the word. The three-way repeated-measures analysis of variance for the roaming speech-recognition task (2 [Session] × 2 [Side] × 3 [Condition]) showed a significant session by condition by side effect (F[2, 36] = 3.24, p = .05). Pairwise comparisons with Bonferroni correction were used to determine the nature of the three-way interaction. For the hearing aid condition there was not a significant side difference for Session 1 (p = .070), but a significant side difference did emerge for Session 2 (p = .047). For the CI condition, there were significant side differences for both sessions (Session 1, p = .006; Session 2, p < .001). For the bimodal (CI&HA) condition, there was a significant side difference for Session 1 (p = .003) but not for Session 2 (p = .136). The condition by side roaming speech-recognition word scores for Sessions 1 and 2 can be seen in Figure 6. The session differences speak to the possibility of improvement over time as participants’ experience with the hearing aid increased as well as better integration of the CI&HA input.

Figure 6.

Mean consonant–nucleus vowel–consonant (CNC) word scores (% correct) for the three listening conditions for the roaming speech-recognition task divided by the side of the loudspeaker array that presented the word for Session 1 and Session 2. The asterisks represent a significant difference for side of presentation (p < .05).

Localization of Speech

Raw data collected during the localization of speech task were analyzed by calculating RMS error for each of the conditions. The RMS error is the root-mean-square deviation of the responses from the target locations, irrespective of the direction of the deviation. It was computed as shown in the equation below:

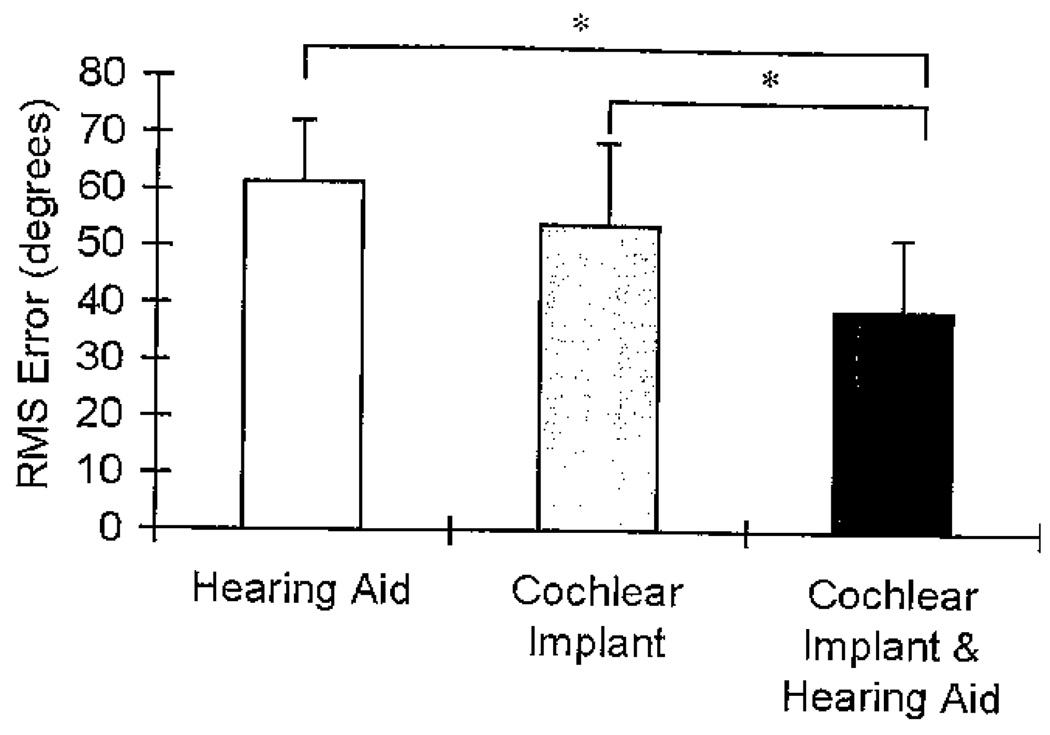

where x1 = target location, x2 = response location, and n = number of trials. The repeated-measures analysis of variance of the RMS errors showed a significant condition effect (F[2, 36] = 20.85, p < .001). The RMS errors for Session 1 and Session 2 were collapsed across session because there was no session effect and no session by condition interaction and are reported as averaged means across sessions. The average RMS errors (in degrees) for each of the three conditions are shown in Figure 7, and individual participant RMS errors are shown in Table 4. The smaller the RMS error, the more accurate the localization. An RMS error of 0 implies perfect performance. The HA condition had the highest RMS error (i.e., poorest localization) of the three conditions at 61.4 degrees (range 37.5–79.2 degrees), followed by the CI condition at 53.8 degrees (range 30.3–80.4 degrees), and then the CI&HA condition had the lowest RMS error (i.e., best localization) at 39.3 degrees (range 21.2–65.6 degrees).
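The RMS error calculation can be sketched in Python. The sketch assumes the standard root-mean-square definition (the square root of the mean squared difference between target and response) and assumes that loudspeaker numbers have already been converted to degrees; the function name and the four trials are hypothetical.

```python
import math


def rms_error(targets_deg, responses_deg):
    """Root-mean-square deviation (degrees) between target and response azimuths."""
    if len(targets_deg) != len(responses_deg) or not targets_deg:
        raise ValueError("need equal-length, non-empty target and response lists")
    squared = [(x1 - x2) ** 2 for x1, x2 in zip(targets_deg, responses_deg)]
    return math.sqrt(sum(squared) / len(squared))


# Four made-up trials: two exact responses and two that are off by 20 degrees.
targets = [-70, -30, 10, 50]
responses = [-50, -30, 30, 50]
error = rms_error(targets, responses)
print(round(error, 2))
```

Because the deviations are squared before averaging, a 20 degree error to the left counts the same as a 20 degree error to the right, and large errors weigh more heavily than small ones.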

Figure 7.

Mean root mean square (RMS) error (in degrees) and +1 SD for the three listening conditions for the localization of speech task. The asterisks represent a significant difference between conditions (p < .05).

Table 4.

Individual Root Mean Square Error for the Three Conditions Collapsed across Sessions

| Participant | Hearing Aid | Cochlear Implant | Cochlear Implant and Hearing Aid |
|---|---|---|---|
| 1 | 73.42 | 72.68 | 35.24 |
| 2 | 37.53 | 61.28 | 21.19 |
| 3 | 60.50 | 45.96 | 28.30 |
| 4 | 53.29 | 30.29 | 23.42 |
| 5 | 47.72 | 56.27 | 51.65 |
| 6 | 55.01 | 51.31 | 28.45 |
| 7 | 79.19 | 42.24 | 43.65 |
| 8 | 53.01 | 33.77 | 40.29 |
| 9 | 75.99 | 36.39 | 39.46 |
| 10 | 73.34 | 72.11 | 38.90 |
| 11 | 55.62 | 52.62 | 34.16 |
| 12 | 71.87 | 65.63 | 41.49 |
| 13 | 55.80 | 38.56 | 35.98 |
| 14 | 65.62 | 58.81 | 51.52 |
| 15 | 64.16 | 63.84 | 65.61 |
| 16 | 56.65 | 37.90 | 36.75 |
| 17 | 51.66 | 57.47 | 26.59 |
| 18 | 77.92 | 80.42 | 41.59 |
| 19 | 58.09 | 63.75 | 62.74 |
| Mean | 61.39 | 53.75 | 39.32 |
| SD | 11.43 | 14.50 | 12.05 |

Pairwise comparisons with a Bonferroni adjustment for multiple comparisons showed that the bimodal condition was significantly different from both monaural conditions (HA, p < .001; CI, p = .003). The mean RMS error for the CI&HA condition was 22.07 degrees lower than the HA condition and 14.45 degrees lower than the CI condition. The HA and CI monaural conditions were not significantly different from each other (p = .14). The RMS error difference between the two monaural conditions was 7.63 degrees, which is less than the distance of one loudspeaker.

The data shown in Table 4 reveal notable differences in RMS error between individual participants and also across conditions. There were three participants (5, 15, and 19) who did not show a difference in their localization ability between the three conditions (HA, CI, and CI&HA). Only one participant (2) had better localization with the HA monaurally than with the CI monaurally. There were four participants (7, 9, 13, and 16) who had a minimal difference (1–3 degrees) between their CI and CI&HA conditions. For these participants their bimodal localization appeared to be determined by their ability to localize with their CI. There were no participants whose bimodal CI&HA localization ability appeared to be determined by their ability to localize with their HA alone. Unlike with the roaming speech-recognition task, the bimodal localization errors could not be estimated based on either monaural HA or CI errors.

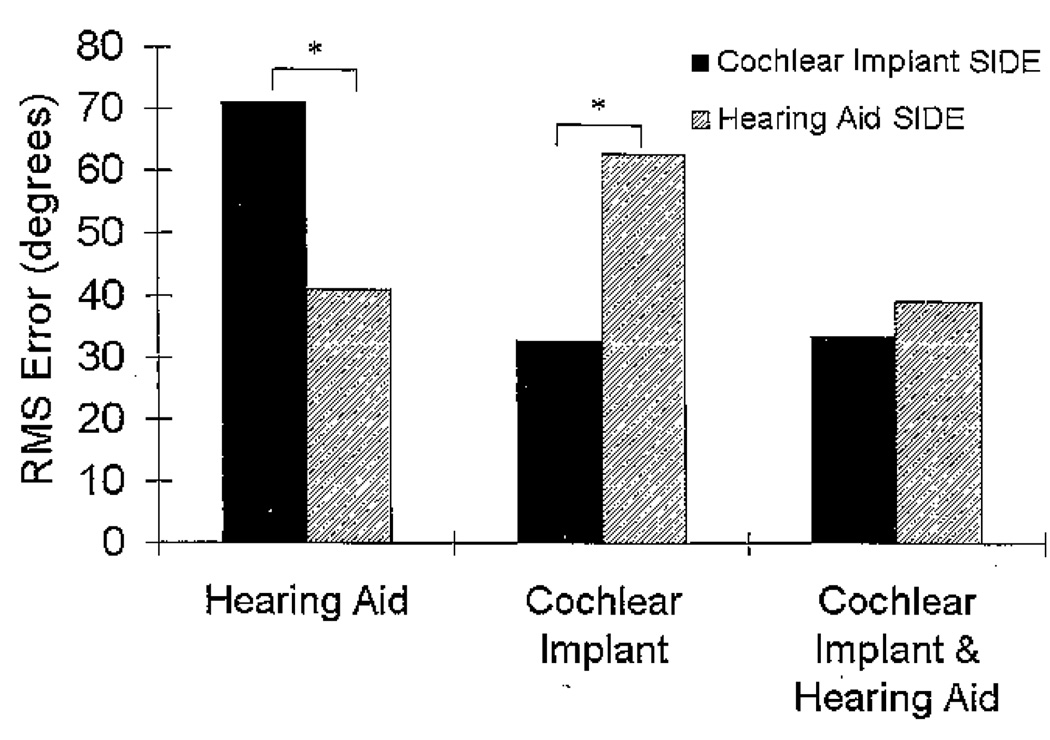

The RMS errors were further analyzed according to the side of the loudspeaker array from which the word was presented. A three-way repeated-measures analysis of variance for Session (2) × Side (2) × Condition (3) showed a significant interaction of side by condition (F[1.32, 23.66] = 27.02, p < .001), with no other interaction effects. Pairwise comparisons with a Bonferroni adjustment for multiple comparisons showed significant side differences for the HA and CI conditions but not for the CI&HA condition. The mean RMS errors are shown in Figure 8 by side. When listening monaurally, the RMS error is significantly lower on the stimulated side of the array compared to the nonstimulated side, for both the HA (p < .001) and CI (p < .001) conditions. Localization errors decreased by approximately 30 degrees when sound was presented from the stimulated side of the array compared with the nonstimulated side of the array, for both the HA and CI conditions. RMS error was not significantly different between sides of the array for the CI&HA condition (p = .130). That is, when input to both ears was provided, the large error differences between the sides of the array were not found.

Figure 8.

Mean root mean square (RMS) error (in degrees) for the three listening conditions for the localization of speech task divided by the side of the loudspeaker array that presented the word. The asterisks represent a significant difference between sides (p < .05).

Correlation between Roaming Speech Recognition and Localization of Speech

There were several significant correlations between roaming speech-recognition scores (% correct CNC words) and localization of speech (RMS error). The significant Pearson correlations always involved the bimodal condition (CI&HA). The CI&HA roaming speech recognition in Session 1 was significantly correlated with the CI&HA localization of speech in both Session 1 (r = −0.58) and Session 2 (r = −0.48). The roaming speech recognition in Session 2 for the CI&HA condition was significantly correlated with localization of speech in Session 1 for the CI&HA (r = −0.46) but not the CI&HA localization error for Session 2. These correlations can be seen in the individual data as well. For example, participant 4 had the second highest CI&HA speech recognition (76%) and also the second best CI&HA localization (23.42 degrees). The HA speech recognition in Session 1 (r = −0.54) and Session 2 (r = −0.57) was also significantly correlated with localization in Session 2 for the CI&HA condition. This correlation is represented by participant 2, who had the highest speech recognition with the HA alone (46%) and the best localization bimodally (21.19 degrees). These correlations could suggest that there are similar underlying mechanisms involved in speech recognition and localization improvements when listening bimodally.
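The direction of the bimodal relation can be illustrated with a Python sketch that computes a Pearson correlation from the CI&HA columns of Tables 3 and 4. These values are collapsed across sessions, so the result is not expected to reproduce the session-specific coefficients reported above; the function and variable names are hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# CI&HA word scores (% correct, Table 3) for participants 1-19.
bimodal_words = [69, 60, 78, 76, 53, 47, 66, 40, 63, 68,
                 52, 45, 30, 33, 41, 49, 43, 62, 27]
# CI&HA RMS localization errors (degrees, Table 4) for participants 1-19.
bimodal_rms = [35.24, 21.19, 28.30, 23.42, 51.65, 28.45, 43.65, 40.29, 39.46,
               38.90, 34.16, 41.49, 35.98, 51.52, 65.61, 36.75, 26.59, 41.59,
               62.74]

r = pearson_r(bimodal_words, bimodal_rms)
print(round(r, 2))
```

A negative coefficient indicates that participants with higher bimodal word scores tended to have smaller bimodal localization errors.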

The roaming speech-recognition scores and localization of speech errors did not correlate significantly for the monaural HA or CI conditions. Therefore, with monaural device use, there appears to be no observable relation between speech recognition and localization. This lack of correlation can be seen in the individual data. Participant 8, for example, had the third best localization value with the HA alone but had the lowest HA speech-recognition score (1%). Participant 8 also had the second best localization with the CI alone, but the CI speech-recognition score was below average (31%).

Significant Moderators

Additional analyses were performed to determine if the results for the roaming speech-recognition and localization of speech tasks were moderated by other variables. Demographic variables examined included age, gender, age hearing impairment suspected, age hearing impairment diagnosed, age hearing impairment became severe/profound, years of HA use in HA ear, years of HA use in CI ear, years of binaural hearing aid use, ear wearing HA or CI, and age at CI surgery. Several audiologic variables were also examined. These included unaided hearing thresholds under headphones (dB HL); slope of the hearing loss (calculated from 250 and 500 Hz through 4000 Hz for each octave and interoctave); unaided speech discrimination with Northwestern University Auditory Test No. 6 words (under headphones); HA soundfield thresholds (250–6000 Hz); and the SII calculated by the AudioScan Verifit at 55, 65, and 75 dB SPL. Finally, the mean SSQ scores for each of the three scales were analyzed.

In these analyses, the previously described statistical design was modified to include one additional moderator (no more than one could be included due to sample size restrictions) for the roaming speech-recognition and localization of speech tasks separately. There were no significant demographic variables. The moderators that were significant are related to the hearing in the HA ear and/or the audibility with the HA, which influenced the HA and/or the CI&HA conditions. In addition, the significant moderators are almost identical between the roaming speech-recognition and localization tasks.

First, the analyses of the roaming speech-recognition task revealed interactions with condition and unaided thresholds in the mid- and high frequencies: 1500 Hz, F(1.31, 22.32) = 9.41, p = .003; 2000 Hz, F(1.39, 23.65) = 10.00, p = .002; 3000 Hz, F(1.34, 22.77) = 5.11, p = .025; 4000 Hz, F(1.35, 22.99) = 5.81, p = .017; and 6000 Hz, F(1.33, 22.64) = 4.24, p = .041. As Appendix A shows, the relation of unaided thresholds to roaming speech recognition was uniformly negative in the HA condition, uniformly positive in the CI condition, and near zero in the bimodal condition.

The analyses of the localization of speech task revealed interactions with condition and unaided thresholds in the mid- and high frequencies: 1000 Hz, F(2, 34) = 3.31, p = .049; 1500 Hz, F(2, 34) = 10.563, p < .001; 2000 Hz, F(2, 34) = 10.32, p = .001; 3000 Hz, F(2, 34) = 4.69, p = .016; and 4000 Hz, F(2, 34) = 5.11, p = .012. As Appendix B indicates, the relation of unaided thresholds to localization of speech task outcome was positive in the HA condition (except for at 1000 Hz) and the bimodal condition but was negative in the CI condition.

Analyses of roaming speech recognition and localization of speech using HA soundfield thresholds as moderators indicated significant interactions with condition at 1500 Hz (F[1.54, 26.26] = 16.27, p < .001; F[2, 34] = 12.71, p < .001) and 2000 Hz (F[1.42, 18.42] = 5.20, p < .025; F[2, 26] = 8.43, p = .002). Appendix A shows that HA soundfield thresholds were negatively related to roaming speech recognition in the HA condition, positively related in the CI condition, and positively (though less so) related in the bimodal condition. Appendix B shows that HA soundfield threshold was positively related to localization in the HA and bimodal conditions but negatively related to localization in the CI condition.

The SII score interacted significantly with condition at each of the three measured input levels (55, 65, and 75 dB SPL) for roaming speech-recognition (SII55, F[1.33, 22.59] = 10.72, p = .003; SII65, F[1.34, 22.82] = 10.38, p = .002; SII75, F[1.30, 22.09] = 9.52, p = .003) and localization of speech tasks (SII55, F[2, 34] = 6.63, p = .004; SII65, F[2, 34] = 6.77, p = .003; SII75, F[2, 34] = 9.21, p = .001 [see Appendixes A and B]). For both tasks, the SII at each input level was strongly related to the outcome in the HA condition. This was an expected finding as the SII is a measure of HA audibility. The SII at each input level was moderately related to the CI&HA condition, which speaks to the contribution of the hearing aid in the bimodal condition and the importance of maximizing the SII at all input levels.

Speech, Spatial, and Qualities of Hearing Questionnaire

The three SSQ scales were analyzed as separate moderators, as each scale represents notably different environments. The Spatial and Sound Qualities scales were found to be significant moderators of roaming speech-recognition (F[1.35, 22.93] = 5.97, p = .015; F[1.39, 23.71] = 7.96, p = .005) and localization of speech tasks (F[2, 34] = 3.65, p = .037; F[2, 34] = 3.14, p = .056 [see Appendixes A and B]). Participants who had higher speech recognition and better localization in the CI and CI&HA conditions reported better spatial perception and more satisfactory sound quality.

The Spatial scale addresses the participants’ perception regarding their own ability to localize sounds and orient in the direction of sounds in the environment. The mean rating for the Spatial scale was 4.7, with a range of 3.7–6.2. The question on which the participants rated themselves the highest (#3) asks about a relatively simple left/right discrimination task in a quiet environment. The questions on which the participants rated themselves the lowest (#8, 10, and 11) involved situations that were outdoors and/or had a moving stimulus. The Spatial scale contains more dynamic environments than are found in the other scales, which is reflected in the overall lower ratings.

The Sound Qualities scale was designed to assess the quality and clarity of various types of sounds, including music and voices. The mean for the Sound Qualities scale was 6.4 (range = 3.5–7.9), which was the highest mean rating for the three scales. The question the participants rated themselves the lowest on was “Can you easily ignore other sounds when trying to listen to something?” This rating accurately reflects the difficulty reported by hearing-impaired individuals in complex environments (background noise/multiple speakers). The four questions the participants rated themselves the highest on asked about the clarity and naturalness of speech (#8, 9, 10, and 11). These ratings reflect the participants’ ability to process sound with their CI and HA as well as their ability to integrate the electric and acoustic signals.

The mean for the SSQ Speech scale was 5.9, with a range from 3.3 to 9.3. Although the Speech scale was not a significant moderator, the ratings still reflect performance in everyday life for hearing-impaired individuals. The question that the participants rated themselves the highest on asked about an easy one-on-one conversation (question #2), while the questions the participants rated themselves the lowest on (#6, 10, and 14) involved multiple speakers and/or noise.

Bimodal Questionnaire

The participants’ responses to the Bimodal Questionnaire are shown in Table 5. All participants reported that they would continue to use the HA after the conclusion of the study. Only two participants (13 and 15) reported wearing the HA less than 90 percent of the time. All participants stated that sound is different when wearing the CI and HA together. The participants’ descriptions of how the sound is different when wearing the HA are listed in the last column. Some of these include descriptions of sound being clearer, more natural, and more balanced and having more bass.

Table 5.

Individual Participant Responses to the Bimodal Questionnaire

| Participant | Where Sound Is Heard When Wearing Cochlear Implant (CI) Only | Where Sound Is Heard When Wearing Hearing Aid (HA) Only | Where Sound Is Heard When Wearing CI and HA | Percentage of Time HA Worn | Continue to Use HA after Study | Hear Differently with HA | How Sound Is Different When Wearing HA |
|---|---|---|---|---|---|---|---|
| 1 | CI Left Ear | HA Right Ear | Center of Head | 95 | Y | Y | Sound is like what the participant remembers normal hearing being |
| 2 | CI Right Ear | HA Left Ear | Center of Head | 100 | Y | Y | Sound is louder, clearer, and more natural |
| 3 | CI Right Ear | HA Left Ear | Center of Head | 100 | Y | Y | Sound is more balanced; more bass and soft sounds |
| 4 | CI Right Ear | HA Left Ear | Center of Head | 100 | Y | Y | Sound is more balanced and louder |
| 5 | CI Left Ear | Center of Head | Center of Head | 100 | Y | Y | Sound is more complete; HA rounds out the sound |
| 6 | In Your Head | HA Left Ear | Center of Head | 100 | Y | Y | Hear better and do not miss as much |
| 7 | CI Right Ear | HA Left Ear | Center of Head | 99 | Y | Y | Sound is clearer |
| 8 | CI Right Ear | Center of Head | CI Right Ear | 100 | Y | Y | Sound has a little extra depth, richness, and volume |
| 9 | CI Right Ear | HA Left Ear | Center of Head | 100 | Y | Y | Sound is fuller; more comfortable |
| 10 | CI Right Ear | HA Left Ear | Center of Head | 95 | Y | Y | Sounds are stronger and lower in pitch |
| 11 | CI Left Ear | HA Right Ear | Center of Head | 100 | Y | Y | Sounds are a little louder |
| 12 | CI Right Ear | HA Left Ear | In Your Head | 100 | Y | Y | Sound quality is better and more balanced |
| 13 | CI Left Ear | In Your Head | CI Left Ear | 50 | Y | Y | Sound is enhanced |
| 14 | CI Left Ear | HA Right Ear | In Your Head | 100 | Y | Y | Sound is fuller, clearer, and louder |
| 15 | CI Right Ear | HA Left Ear | Right and Left Ears | 75 | Y | Y | Without HA, only hear on one side |
| 16 | CI Left Ear | HA Right Ear | Center of Head | 95 | Y | Y | Sound is more uniform and natural |
| 17 | In Your Head | In Your Head | Center of Head | 90 | Y | Y | Sound is clearer |
| 18 | CI Right Ear | HA Left Ear | Center of Head | 100 | Y | Y | Could not describe |
| 19 | CI Left Ear | HA Right Ear | Right and Left Ears | 100 | Y | Y | Speech is more understandable |

The answers to where sound was perceived for each listening condition are fairly consistent among the participants. When stimulated monaurally the majority of participants reported sound was heard in that ear. When stimulated bimodally, sound was reported as “in their head” or “in the center of their head” by all but four of the participants. Of these four, participants 8 and 13 reported that sound was heard in their CI ear when stimulated bimodally. These two had the poorest unaided pure-tone averages (in the HA ear) of all the participants in the study. The limited hearing may explain why they reported sound as heard in the CI ear when they were bimodally stimulated. The other two participants (#15 and 19) who did not report sound as being “in their head/center of their head” when stimulated bimodally were different from participants 8 and 13. They reported hearing sound in the monaurally stimulated ear, like the majority of the other participants, but they chose “right and left ears” when stimulated bimodally. These were the only two participants who did not report hearing sound as “in their head/center of their head” in some condition. They were also the two participants with the poorest localization ability across all three conditions.

DISCUSSION

The results of this study cannot be discussed without first emphasizing the importance of the cochlear implant and hearing aid fittings. This study differs from several prior bimodal studies in which the type of hearing aid is not specified and in which no information about the hearing aid or cochlear implant fitting process is given (Shallop et al, 1992; Waltzman et al, 1992; Chmiel et al, 1995; Tyler et al, 2002; Iwaki et al, 2004; Dunn et al, 2005; Kong et al, 2005; Luntz et al, 2005; Morera et al, 2005). By narrowing down details of the amplification and fitting process, the present study could provide a more complete picture regarding the effects of specific stimulation approaches on performance. The goals of this study, with regard to both the cochlear implant and hearing aid fitting, were to make very soft sounds audible, loud sounds loud but tolerable, and average conversational speech clear and comfortably loud. These goals are not new, as they have been described and implemented for many years (Pascoe, 1975; Skinner, 1988). When these goals are achieved, speech recognition improves with a cochlear implant (James et al, 2003; Skinner, 2003; Firszt et al, 2004; Holden and Skinner, 2006) and with a hearing aid (Pascoe, 1988; Sullivan et al, 1988; Humes, 1996; Ching et al, 1998; Skinner et al, 2002b).

The goodness of fit for the devices used in this study is demonstrated in the soundfield thresholds, SII, loudness growth, and subjective reports. The mean cochlear implant and bimodal soundfield thresholds approximate 20 dB HL, which is the target level recommended by Mueller and Killion (1990). The amount of binaural loudness summation (3 dB) agrees with that found with pure tones at threshold by Hirsh (1948) and Causse and Chavasse (1942). Binaural loudness summation is also reflected in the subjective comments on the Bimodal Questionnaire, as participants reported that the addition of the hearing aid made sounds louder, added more bass, and made soft sounds more audible (see Table 5).

The SIIs obtained with the hearing aid are well below 1.0, the level of maximum audibility. Despite relatively low values, the SIIs were still significant moderators for speech-recognition and localization tasks at all three input levels. This significant finding is supported by the steep growth in intelligibility predicted on the Connected Speech Test for low SII values (i.e., a 64% increase in intelligibility from an SII of 0.2 to 0.4 [Cox et al, 1987; Hornsby, 2004]). It is possible, however, that maximization of the SII may not be appropriate in all cases (Ricketts, 1996; Stelmachowicz et al, 1998; Mok et al, 2006) and should be evaluated for each individual based on frequency-specific threshold and loudness-growth information.

Loudness growth measured with four-talker broadband babble showed binaural loudness summation of 1.5–2 dB (see Figure 4), which is less than the 3–5 dB found with speech stimuli for normal-hearing (Haggard and Hall, 1982) and hearing-impaired individuals (Hawkins et al, 1987). This difference is probably due to the compression characteristics of the devices, as the individuals in the previous studies were unaided. The level of binaural loudness summation found in this study does agree closely with that found for bimodal devices by Blamey et al (2000).

The highest roaming speech-recognition score was found in the bimodal condition for 17 of the 19 participants (see Table 3). The remaining two participants had equal speech recognition between the cochlear implant and bimodal conditions. No participant, therefore, had a decrease in speech recognition with the addition of the hearing aid. For many years, there was concern that a hearing aid would provide no benefit or actually detract from a cochlear implant recipient’s speech recognition. Several early bimodal studies showed no improvement or minimal improvement with the addition of a hearing aid (Waltzman et al, 1992; Armstrong et al, 1997; Chute et al, 1997; Tyler et al, 2002). It is probable that these early studies used linear hearing aids with high levels of distortion that can have detrimental effects on speech recognition (Naidoo and Hawkins, 1997; Bentler and Duve, 2000). Given the digital hearing aids that are available today, distortion should not be an issue.

A loudspeaker array was used to present the words for the speech-recognition task to approximate a real-life environment. In everyday situations, listeners are asked to locate, identify, attend to, and switch attention between speakers (Gatehouse and Noble, 2004). When speech is presented from directly in front of the listener, as is commonly done for speech testing in a clinic, there is one sound source and it is static and predictable. This testing environment would be similar to a one-on-one conversation, which most hearing-impaired individuals report as a relatively easy listening situation. The roaming speech-recognition task used in this study had multiple sound sources, making the spatial position not static or predictable but, rather, dynamic and like that in the real world. This testing environment would be similar to a group situation, which most hearing-impaired individuals report as a challenging listening situation.

In the roaming speech-recognition task, the location of the loudspeaker was important in determining if the word would be identified correctly across sessions. For both the hearing aid and the cochlear implant, the words presented from the stimulated side of the loudspeaker array resulted in significantly better speech recognition than those words that were presented from the nonstimulated side of the array (see Figure 6). In the bimodal condition (stimulation on both sides), the speech recognition was equal regardless of which side of the loudspeaker array presented the word. This improvement suggests that a bimodally stimulated individual would be able to understand equally well regardless of whether the speaker is on the individual’s right or left side. This is in contrast to when the individual has stimulation on only one side and speech understanding is poorer on the side with no stimulation. This was an unexpected finding given the large difference in speech recognition between the cochlear implant and the hearing aid. This suggests that a hearing aid improves bimodal speech recognition over time even when there is minimal speech recognition with the hearing aid.
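The side-of-array comparison described above can be illustrated with a minimal sketch. It assumes that each trial is recorded as a loudspeaker azimuth in degrees (negative for the listener's left, positive for the right) together with whether the word was repeated correctly. The function name, the sign convention, the exclusion of a 0 degree loudspeaker, and the trial values are all hypothetical and are not taken from the study.

```python
def percent_correct_by_side(trials, stimulated_side):
    """Split roaming speech-recognition trials by side of the loudspeaker array.

    trials: iterable of (azimuth_deg, correct) pairs; negative azimuth = left
            (an assumed convention, not the study's documented one).
    stimulated_side: "left" or "right", the ear wearing the device.
    Returns percent correct for the stimulated and nonstimulated sides,
    or None for a side that has no trials.
    """
    if stimulated_side not in ("left", "right"):
        raise ValueError("stimulated_side must be 'left' or 'right'")

    # [number correct, number presented] for each side of the array
    counts = {"stimulated": [0, 0], "nonstimulated": [0, 0]}
    for azimuth_deg, correct in trials:
        if azimuth_deg == 0:
            continue  # a centre loudspeaker belongs to neither side
        side = "left" if azimuth_deg < 0 else "right"
        key = "stimulated" if side == stimulated_side else "nonstimulated"
        counts[key][0] += int(correct)
        counts[key][1] += 1

    return {
        key: (100.0 * n_correct / n_total if n_total else None)
        for key, (n_correct, n_total) in counts.items()
    }


# Hypothetical trials for a listener with a device on the left ear only
example_trials = [(-60, True), (-20, True), (20, False), (60, True), (0, True)]
example_scores = percent_correct_by_side(example_trials, "left")
```

In a bimodal condition neither side is "nonstimulated," so the same split would simply compare left-side and right-side presentations.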

It has long been accepted that bilateral input is required to detect differences in interaural information and that these differences provide cues required for localization. Overall, this study found the best localization with bilateral input (i.e., in the bimodal condition). The mean RMS error of 39 degrees in the bimodal condition, however, is notably poorer than that found for normal-hearing (Butler et al, 1990; Makous and Middlebrooks, 1990; Noble et al, 1998) and other hearing-impaired individuals (Durlach et al, 1981; Hausler et al, 1983; Byrne et al, 1998). This discrepancy is most likely due to the difference in signals (electric vs. acoustic) as well as an asymmetry in hearing levels found with bimodal hearing. The mean bimodal RMS error found in this study is very close (within 4 degrees) to values reported in two other bimodal studies (Ching et al, 2004; Dunn et al, 2005). This similarity suggests that the reduction in localization ability in the present participants is not due to inherent differences between subjects across studies but is more likely a reflection of abnormal and asymmetric cues inherent in bimodal hearing and the limits that these impose on localization ability.
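For readers unfamiliar with the metric, the RMS localization error discussed above can be sketched as follows. This is a generic illustration of a root-mean-square error in degrees, not the scoring procedure documented for this study. The function name, the azimuth convention (degrees relative to straight ahead, spanning a 140 degree array from -70 to +70), and the example values are assumptions.

```python
import math


def rms_error_degrees(target_azimuths, response_azimuths):
    """Root-mean-square difference between presented and reported azimuths."""
    if len(target_azimuths) != len(response_azimuths) or not target_azimuths:
        raise ValueError("need equal-length, non-empty azimuth lists")

    # Square each trial's signed error so left and right errors do not cancel
    squared_errors = [
        (response - target) ** 2
        for target, response in zip(target_azimuths, response_azimuths)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical trials: azimuths in degrees, negative = listener's left
targets = [-70, -30, 0, 30, 70]
responses = [-50, -30, 10, 60, 40]
example_rms = rms_error_degrees(targets, responses)
```

Because the errors are squared before averaging, a few large mislocalizations raise the RMS error more than many small ones, which is one reason mean values across studies are compared cautiously.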

It seems that for a small number of cochlear implant recipients, for reasons not yet understood, there may be an inability to use acoustic and/or electric signals to localize sound. Seeber et al (2004) report four participants who showed very poor localization abilities monaurally and bimodally. In this study, there were three participants who did not have an improvement in localization in the bimodal condition and who also had poor monaural localization. Two of these participants were the only ones who did not report hearing sound “in their head” on the Bimodal Questionnaire. This suggests that subjective reports used in conjunction with localization testing could possibly provide insight into an individual’s binaural processing.

Nonetheless, the addition of the hearing aid to the cochlear implant is clearly beneficial when contrasted with use of the cochlear implant alone. Thus, the bimodal condition reflects an intermediary step between normal hearing function and monaural hearing. An additional step that is not the focus of the present study, but that lies on that continuum between unilateral hearing and normal binaural hearing, is the use of bilateral cochlear implants, which are being provided to a growing number of cochlear implant users worldwide. On average, users of bilateral cochlear implants can localize sounds with an RMS error of less than 30 degrees (e.g., van Hoesel and Tyler, 2003; Nopp et al, 2004; Grantham et al, 2007; Litovsky et al, in press). Follow-up investigations on bimodal users who transition to bilateral cochlear implants and the effects of the transition on performance using tasks such as those used here would be important.

The current study used a speech stimulus that consisted of a carrier word (“ready”) followed by a monosyllabic word. This stimulus was chosen so that the participant could begin turning toward the perceived location during the carrier word and then finalize the location with the presentation of the monosyllabic word. This same orienting action occurs in everyday life when an individual hears his or her name called and turns to focus on the incoming speech signal. Several studies have suggested that localization improves if the participant’s head is moving while listening to a sound (Wallach, 1940; Thurlow et al, 1967; Blauert, 1997; Noble et al, 1998; Noble and Perrett, 2002). In addition, Noble et al (1998) have suggested that information obtained by searching and orienting the head may be especially valuable to hearing-impaired individuals, particularly when normal phase, time, or intensity differences are unclear or missing.

The effects of loudspeaker side found in the localization task are similar to those found in the speech-recognition task, in which the stimulated side had better performance than the nonstimulated side. This side difference occurred for both monaural conditions (see Figure 8). The side difference disappeared, however, when both ears were stimulated. This suggests that a bimodally stimulated individual would be able to locate a talker equally well whether the talker is on the individual’s right or left side. The improvement in localization in the bimodal condition is supported by subjective comments from the Bimodal Questionnaire, as many participants reported that sound was more balanced when using both devices. One participant even stated that without the hearing aid, he only hears on one side (see Table 5).

The difference in performance between the stimulated side and the nonstimulated side of the loudspeaker array highlights the importance of bilateral/bimodal hearing. In everyday situations if the individual is unable to locate the talker quickly and accurately, the message may be finished before the individual can attend to it. The individual with bimodal devices can turn and face the talker more effectively and efficiently. The ability to locate and visually attend to the talker is especially important for a hearing-impaired individual who requires the combination of listening and speechreading to maximize understanding.

There were no demographic or hearing history variables that were significant moderators of outcome. This is most likely due to sample size restrictions. The moderators that were significant were primarily related to the hearing aid ear. In addition, the significant moderators were almost identical between the roaming speech-recognition and the localization of speech tasks. One significant moderator, unaided hearing thresholds in the mid- and high frequencies, has also been shown to be significantly correlated with speech recognition in other bimodal studies (Seeber et al, 2004; Morera et al, 2005). The Ching and colleagues studies (Ching et al, 2001; Ching et al, 2004), however, did not find a correlation between unaided hearing thresholds and speech recognition but only analyzed thresholds up to 1000 Hz. It is possible that if the mid- and high-frequency thresholds had been examined, a correlation would have been found.

The importance of maximizing amplification from the hearing aid is highlighted by the soundfield thresholds (1500 and 2000 Hz) and SII, which were also significant moderators. Given the heavy weighting the mid-frequencies receive in the SII calculation, every attempt to maximize amplification through 2000 Hz with a hearing aid should be made. This finding is in contrast to that of Mok et al (2006), who found that participants with poorer aided thresholds at 1 and 2 kHz had better speech recognition in the bimodal condition. The disparity between the findings could be due to differences in hearing aid technology and/or fitting procedures. These studies do agree, however, that there was minimal if any benefit to speech recognition from high-frequency amplification (above 2000 Hz). Because of the severity of the hearing loss, a truncated range problem may exist.

There was a significant correlation found between speech recognition and localization in this study. This correlation was also found by Ching et al (2004) for both quiet and noise in their adult bimodal study. Hirsh (1950) also found a correlation between a normal-hearing person’s speech recognition in noise and the ability to localize. Noble et al (1997), on the other hand, show a weak correlation between speech recognition in noise and localization for hearing-impaired individuals. The relation between speech recognition and localization needs to be examined further.

Last, the agreement found between the questionnaires and the speech-recognition and localization testing suggests that the use of a speech stimulus presented through a loudspeaker array provides a dynamic listening situation similar to that encountered in real life. In addition, the answers on the questionnaires reflect advantages of bilateral hearing such as greater ease of listening, better auditory spatial organization, and better sound quality (Byrne, 1980). It is possible that these benefits may be just as important as speech recognition and localization for hearing-impaired individuals.

CONCLUSION

All participants in this study were traditional cochlear implant candidates with both ears meeting cochlear implantation criteria. The benefit received from wearing a hearing aid in the nonimplanted ear was, therefore, expected to be limited. In spite of this, the improvement in speech recognition and localization in the bimodal condition was significant. The soundfield thresholds, loudness judgments, speech recognition, localization, and subjective comments all suggest that cochlear implant recipients with residual hearing should use a hearing aid in the nonimplanted ear.