SYNOPSIS

Objectives

We compared national and state-based estimates for the prevalence of mammography screening from the National Health Interview Survey (NHIS), the Behavioral Risk Factor Surveillance System (BRFSS), and a model-based approach that combines information from the two surveys.

Methods

At the state and national levels, we compared the three estimates of prevalence for two time periods (1997–1999 and 2000–2003) and the estimated difference between the periods. We included state-level covariates in the model-based approach through principal components.

Results

The national mammography screening prevalence estimate based on the BRFSS was substantially larger than the NHIS estimate for both time periods. This difference may have been due to nonresponse and noncoverage biases, response mode (telephone vs. in-person) differences, or other factors. However, the estimated change between the two periods was similar for the two surveys. Consistent with the model assumptions, the model-based estimates were more similar to the NHIS estimates than to the BRFSS prevalence estimates. The state-level covariates (through the principal components) were shown to be related to the mammography prevalence with the expected positive relationships for socioeconomic status and urbanicity. In addition, several principal components were significantly related to the difference between NHIS and BRFSS telephone prevalence estimates.

Conclusions

Model-based estimates that draw on both surveys are useful tools for combining their information about mammography prevalence. The model-based approach adjusts for the possible nonresponse and noncoverage biases of the telephone survey while using the large BRFSS state sample size to increase precision.

The U.S. Preventive Services Task Force (USPSTF) recommends screening mammography, with or without clinical breast examination, every one to two years for women aged 40 years and older.1 Approximately 50% of the recent decrease in breast cancer mortality can be attributed to mammography screening, with adjuvant treatments contributing the remaining half.2 Population estimates of mammography prevalence rates in the U.S. are primarily obtained from national surveys such as the National Health Interview Survey (NHIS) and the Behavioral Risk Factor Surveillance System (BRFSS) survey.3,4 Both surveys are important in tracking cancer screening and other national health objectives.5 Prevalence estimates for geographical areas, such as states, are also important, as much of public health policy is carried out at the state or local level. While the most recent prevalence-level estimates are used for intervention planning,6–8 trends in prevalence are also important for surveillance and resource allocation.9

Both the NHIS and the BRFSS have advantages and disadvantages for providing state-level prevalence estimates. The NHIS is a high-response-rate, nationally representative survey of all households that collects information based on face-to-face interviews. Although the NHIS samples from all 50 U.S. states and the District of Columbia (DC) each year, it is designed to produce reliable estimates for the nation and Census regions but not necessarily for all states.10 In contrast to the NHIS, the BRFSS is conducted by telephone with a single, randomly selected adult resident of each surveyed household and is designed to produce state-based estimates. The strength of the BRFSS is the large sample taken in each state, but the BRFSS has potential biases due to noncoverage and nonresponse,11 although it uses post-stratification weights to mitigate such potential biases.

A recent study for the period 1997–2000 suggested differences of practical significance in prevalence estimates for mammography screening between the NHIS and the BRFSS.12 Due to the large differences between the estimates from the two surveys, non-sampling causes should be investigated. Numerous factors could cause bias in survey estimates for a particular year, over time for the same survey, and between surveys over time.13 The different response modes of the BRFSS (telephone) vs. the NHIS (in-person) could cause a difference. Other factors that could cause survey bias or a difference between the two survey estimates include differences in survey design, data collection operations, question and response wording, proxy responses, noncoverage, and nonresponse.

Telephone coverage varies among the states and is also lower among minority and low-income households.14 Telephone survey estimates could be biased for outcomes in which there is a substantial difference concerning the outcome of interest between telephone-equipped and non-telephone-equipped households. Weighting adjustments have been proposed to reduce the potential noncoverage bias due to non-telephone-equipped households.15,16 Survey response rates have been falling for all types of surveys, both nationally and internationally.17,18 However, survey nonresponse does not necessarily imply survey bias.19,20

The study goals were to (1) demonstrate the difference between the estimated mammography prevalence levels and trends from the NHIS and the BRFSS at both the national and state levels and (2) demonstrate the utility of model-based (MB) state-level estimates based on the use of data from both surveys simultaneously.12 The MB approach seeks to incorporate the surveys' strengths while minimizing the effects of their weaknesses. In particular, the MB approach adjusts for the possible nonresponse and noncoverage biases of the telephone survey while using the large BRFSS state sample size to increase precision.

METHODS

Data

Consistent with USPSTF guidelines for mammography screening,1 we used results from the BRFSS and the NHIS for the period 1997–2003 to estimate the proportion of women who have had a mammogram in the past two years, among those aged 40 years or older. The mammography questions were available for four of the seven years from the NHIS (1998, 1999, 2000, and 2003) and for five of the seven years from the BRFSS (1997–2000 and 2002). BRFSS data were used only for the years in which the mammography questions were part of the core questionnaire (i.e., asked in all states).

There are slight differences in the mammography questions on the two surveys. Both surveys use a lead-in question that defines a mammogram and asks the woman if she has ever had one. If the woman responds “no,” the second question eliciting the time since the last mammogram is skipped in both surveys. The BRFSS and the NHIS forms of the second question, used to determine whether the woman is on schedule (i.e., mammogram within the last two years), are as follows:

BRFSS form: How long has it been since you had your last mammogram? Possible responses include:

1. Within past year (one to 12 months ago)

2. Within past two years (one to two years ago)

3. Within past three years (two to three years ago)

4. Within past five years (three to five years ago)

5. Five or more years ago

6. Don't know/not sure

7. Refused

NHIS form: When did you have your most recent mammogram? Was it a year ago or less, more than one year but not more than two years, more than two years but not more than three years, more than three years but not more than five years, or more than five years ago? Possible responses include:

1. A year ago or less

2. More than one year but not more than two years

3. More than two years but not more than three years

4. More than three years but not more than five years

5. More than five years ago

6. Refused

7. Don't know

For both surveys, women who chose response 1 or 2 were deemed to have had a mammogram within the last two years and, hence, to be on schedule; responses 6 and 7 (don't know or refused) were excluded in the estimation of rates. In some survey years, the NHIS also offered additional response formats that allowed the woman to specify the date of her last mammogram or a specific length of time since it.
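The on-schedule coding rule above can be sketched in Python. This minimal illustration assumes responses are stored as the option codes 1 to 7 in the order listed for each survey, plus a hypothetical code 0 for women who answered “no” to the lead-in question (and are therefore counted as not on schedule); the NHIS date and elapsed-time formats are not handled, and the function names are hypothetical.

```python
from typing import Optional, Sequence

NEVER_SCREENED = 0  # hypothetical code for "no" on the lead-in question


def on_schedule(code: int) -> Optional[bool]:
    """Map a mammography-timing response code to an on-schedule flag.

    1-2 (within the past two years)            -> True
    0 (never screened), 3-5 (over two years)    -> False
    6-7 (don't know / refused)                  -> None (excluded from the rate)
    """
    if code in (1, 2):
        return True
    if code in (NEVER_SCREENED, 3, 4, 5):
        return False
    if code in (6, 7):
        return None
    raise ValueError(f"unexpected response code: {code}")


def weighted_rate(codes: Sequence[int], weights: Sequence[float]) -> float:
    """Weighted share on schedule among women with a usable response."""
    num = 0.0
    den = 0.0
    for code, w in zip(codes, weights):
        flag = on_schedule(code)
        if flag is None:
            continue  # dropped from both numerator and denominator
        den += w
        if flag:
            num += w
    return num / den


# Toy example with hypothetical survey weights.
print(weighted_rate([1, 2, 3, 0, 6, 7], [2, 1, 1, 1, 5, 5]))
```

Real estimates would use the full survey weights and design-based variance methods; the sketch only shows how the response categories map to the numerator and denominator.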

To increase sample size for state estimation, we aggregated data over years to produce NHIS and BRFSS state and national prevalence estimates (using established methodology) for two time periods: 1997–1999 and 2000–2003.21,22 Each estimate represents a mean value for the time period. We calculated standard errors of parameter estimates for the two surveys using statistical methods that accounted for the survey design, and assessed statistical significance of various differences by using 95% confidence intervals (CIs) for the differences.23
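A significance check based on the 95% CI of a difference can be sketched as below. The study used design-based standard errors; this Python sketch shows only the final step and assumes the two estimates are independent and approximately normal. That is reasonable for estimates from two separate surveys but not, for example, for two periods of the same survey that share sampled design units, where a covariance term would be needed. All numbers are hypothetical.

```python
import math

Z_95 = 1.959963984540054  # 97.5th percentile of the standard normal


def diff_ci(est_a: float, se_a: float, est_b: float, se_b: float, z: float = Z_95):
    """Difference est_a - est_b with a normal-theory confidence interval.

    Assumes independent estimates, so Var(a - b) = se_a**2 + se_b**2.
    """
    diff = est_a - est_b
    se = math.sqrt(se_a ** 2 + se_b ** 2)
    return diff, diff - z * se, diff + z * se


def is_significant(lower: float, upper: float) -> bool:
    """True when the interval excludes zero."""
    return lower > 0 or upper < 0


# Hypothetical prevalence estimates (percent) and standard errors.
diff, lo, hi = diff_ci(75.0, 0.3, 70.0, 0.6)
print(f"difference {diff:.1f} (95% CI {lo:.1f}, {hi:.1f}); significant: {is_significant(lo, hi)}")
```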

We included the information from 26 socioeconomic-demographic state-level covariates (obtained from U.S. Census, survey, and administrative record sources) considered to be related to mammography screening propensity or to survey response propensity in the model.24–29 To minimize the impact of influential values and to eliminate computational problems that have occurred with hierarchical models, covariates were log transformed and standardized to have a mean of 0 and standard deviation of 1.30 To reduce the number of covariates and eliminate the possibility of multicollinearity, we conducted a principal component analysis (using maximum likelihood estimation) on the initial set of 26 covariates, which resulted in the use of eight principal components. The BRFSS state response rate, which was computed as a mean of the state-level yearly rates over the time period, was included as an additional covariate,31 along with an indicator variable for the time period.
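The covariate preprocessing can be sketched in Python with NumPy. This is an illustration under stated assumptions, not the authors' procedure: it substitutes ordinary principal component analysis via the singular value decomposition for the maximum likelihood estimation used in the study, assumes all covariates are strictly positive so the log transform is defined, and retains components until a hypothetical cumulative-variance threshold of 90% is reached. The random matrix stands in for a 51 × 26 table of state covariates.

```python
import numpy as np


def log_standardize(X: np.ndarray) -> np.ndarray:
    """Log-transform strictly positive covariates, then z-score each column."""
    if np.any(X <= 0):
        raise ValueError("log transform requires strictly positive covariates")
    L = np.log(X)
    return (L - L.mean(axis=0)) / L.std(axis=0, ddof=1)


def principal_components(Z: np.ndarray, min_share: float = 0.90):
    """Ordinary PCA of standardized data via SVD.

    Returns (scores, loadings, explained_share) for the smallest number of
    components whose cumulative explained variance reaches min_share.
    Rows of `loadings` are components; scores are mutually uncorrelated.
    """
    _, S, Vt = np.linalg.svd(Z, full_matrices=False)
    share = S ** 2 / np.sum(S ** 2)
    k = int(np.searchsorted(np.cumsum(share), min_share)) + 1
    k = min(k, len(S))  # guard against rounding when min_share is near 1
    scores = Z @ Vt[:k].T
    return scores, Vt[:k], share[:k]


# Hypothetical covariates: 51 "states" by 26 positive variables.
rng = np.random.default_rng(0)
X = rng.lognormal(size=(51, 26))
Z = log_standardize(X)
scores, loadings, share = principal_components(Z)
print(scores.shape, round(float(share.sum()), 3))
```

Because the retained scores are orthogonal, using them as regressors removes multicollinearity among the covariates, which is the motivation given in the text.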

Model-based estimation

We constructed MB mammography estimates for all states and the nation using a hierarchical model that was documented previously;12 the method is outlined in this article, with the model summarized in Figure 1. In the first stage of the model, we used an approximate sampling distribution for the three state-level direct-prevalence estimates (NHIS telephone, NHIS non-telephone, and BRFSS) conditional on state-level population parameters. We used the NHIS question “Is there at least one telephone INSIDE your house that is currently working?” to determine the respondent's household telephone status.

Figure 1.

A brief summary of the methodology used to construct model-based mammography estimates using a hierarchical model

We obtained design-unbiased NHIS state estimates for telephone and non-telephone households using weights that adjust for selection probability and nonresponse. A key assumption in the first stage of the model was that the NHIS telephone (and non-telephone) estimates were unbiased, whereas the BRFSS estimates were possibly biased. We expressed potential BRFSS nonresponse and noncoverage biases in terms of differences in the expected values of the sampling distributions of the direct estimates. In the second stage, we expressed the between-state variation in the population parameters in terms of state-level covariates. In the analysis, we used NHIS state identifiers for respondents, which are not available in the NHIS public-use files.

We used a Bayesian approach to estimate the model parameters (and obtain standard errors) via the Markov chain Monte Carlo method.32 We estimated the state mammography prevalence rates for the populations of households with and without telephones and combined these as $M\hat{p}_{t} + (1-M)\hat{p}_{nt}$, where $\hat{p}_{t}$ and $\hat{p}_{nt}$ were the MB state prevalence estimates for telephone and non-telephone households, respectively, and $M$ was the state proportion of telephone households obtained from the 2000 U.S. Census.
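The combination step can be written as a short Python function. In this sketch, the prevalence inputs and M are hypothetical values and M is treated as fixed; applying the formula draw by draw to MCMC output is one way, assumed here, to carry the posterior uncertainty of the two components into the combined estimate.

```python
import numpy as np


def combine_by_phone_status(p_t: float, p_nt: float, m: float) -> float:
    """State prevalence as M * p_t + (1 - M) * p_nt."""
    if not 0.0 <= m <= 1.0:
        raise ValueError("telephone share M must lie in [0, 1]")
    return m * p_t + (1.0 - m) * p_nt


def combine_draws(pt_draws, pnt_draws, m: float):
    """Apply the combination to each posterior draw (M treated as known).

    Returns the posterior mean and standard deviation of the combined rate.
    """
    combined = m * np.asarray(pt_draws) + (1.0 - m) * np.asarray(pnt_draws)
    return combined.mean(), combined.std(ddof=1)


# Hypothetical values for one state.
print(combine_by_phone_status(0.72, 0.45, 0.95))
print(combine_draws([0.70, 0.72, 0.74], [0.40, 0.45, 0.50], 0.9))
```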

The MB approach seeks to correct for potential biases in BRFSS estimates in two ways, while taking advantage of their precision. Using information on differences between NHIS state-level estimates for telephone households and BRFSS estimates, we modeled possible biases in BRFSS estimates of the telephone-household component. Also, based on NHIS state-level estimates for non-telephone households, we modeled an adjustment for noncoverage of such households in the BRFSS.
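To convey the intuition behind the telephone-household correction, the following Python sketch implements a deliberately simplified, non-Bayesian analogue. It is not the authors' hierarchical model: it assumes a single additive BRFSS bias shared by all states, normal sampling errors with known variances, and it ignores the uncertainty in the estimated bias. The function name and inputs are hypothetical.

```python
import numpy as np


def bias_adjusted_composite(y_nhis, v_nhis, y_brfss, v_brfss):
    """Toy two-step estimator for telephone-household prevalence by state.

    Step 1: estimate a common BRFSS bias as the precision-weighted mean of
            state differences (BRFSS - NHIS telephone).
    Step 2: combine NHIS and bias-corrected BRFSS estimates by
            inverse-variance weighting, so the large BRFSS samples add precision.
    """
    y_nhis = np.asarray(y_nhis, dtype=float)
    v_nhis = np.asarray(v_nhis, dtype=float)
    y_brfss = np.asarray(y_brfss, dtype=float)
    v_brfss = np.asarray(v_brfss, dtype=float)

    d = y_brfss - y_nhis
    w_d = 1.0 / (v_nhis + v_brfss)
    b_hat = np.sum(w_d * d) / np.sum(w_d)

    w_n, w_b = 1.0 / v_nhis, 1.0 / v_brfss
    composite = (w_n * y_nhis + w_b * (y_brfss - b_hat)) / (w_n + w_b)
    return b_hat, composite


# Hypothetical estimates for three states (proportions) and sampling variances.
b_hat, comp = bias_adjusted_composite(
    [0.70, 0.65, 0.72], [4e-4] * 3, [0.77, 0.73, 0.79], [1e-4] * 3
)
print(round(float(b_hat), 4), np.round(comp, 4))
```

In the hierarchical model, by contrast, the bias term can vary with state-level covariates, its uncertainty is propagated through the MCMC draws, and the non-telephone component is estimated separately from NHIS data.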

Evaluation of estimates

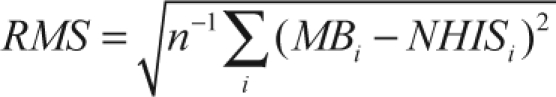

Due to model assumptions regarding bias, we expected the agreement between the MB and the NHIS estimates to increase with the NHIS sample size.33,34 To evaluate whether this occurred, we partitioned the states into three groups (Groups 1–3) based on the NHIS sample size, where Group 1 comprised the 17 states with the largest NHIS state sample sizes and Group 3 comprised the 17 states with the smallest NHIS state sample sizes. We compared the differences between the MB and the NHIS state prevalence estimates graphically and by root mean square (RMS) differences by group. The RMS deviation for the MB and NHIS estimates was computed using

$$\mathrm{RMS} = \sqrt{\frac{1}{17}\sum_{i=1}^{17}\left(\mathrm{MB}_i - \mathrm{NHIS}_i\right)^2},$$

where the NHIS direct and the MB prevalence estimates for state i were labeled as $\mathrm{NHIS}_i$ and $\mathrm{MB}_i$, respectively, and the summation was over the 17 states in the group. We contrasted the graphical and RMS comparisons between the MB and NHIS estimates with the differences between the MB and BRFSS estimates.
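The grouped RMS comparison can be sketched in Python as follows. This is an illustrative sketch, not the authors' code: it assumes state-level arrays aligned by state, ranks states by NHIS sample size with Group 1 as the largest, and uses the hypothetical name `rms_by_nhis_size`. Passing BRFSS estimates as `direct_est` gives the corresponding MB-versus-BRFSS comparison.

```python
import numpy as np


def rms_by_nhis_size(nhis_n, direct_est, mb_est, n_groups=3):
    """RMS of (MB - direct) within groups ranked by NHIS sample size.

    Group 1 holds the states with the largest NHIS samples; with 51 states
    and 3 groups, each group has 17 states.
    """
    nhis_n = np.asarray(nhis_n)
    direct_est = np.asarray(direct_est, dtype=float)
    mb_est = np.asarray(mb_est, dtype=float)
    order = np.argsort(-nhis_n, kind="stable")  # largest NHIS sample first
    groups = np.array_split(order, n_groups)
    return [float(np.sqrt(np.mean((mb_est[g] - direct_est[g]) ** 2))) for g in groups]


# Hypothetical example: MB - NHIS deviations of 1, 2, 3 points by group.
nhis_n = np.arange(51, 0, -1)
direct = np.zeros(51)
mb = np.repeat([1.0, 2.0, 3.0], 17)
print(rms_by_nhis_size(nhis_n, direct, mb))
```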

RESULTS

National-level results

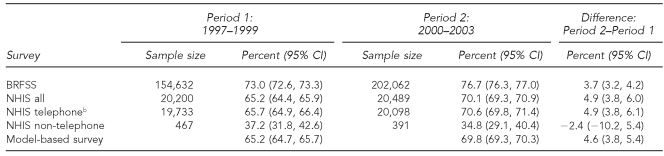

We observed large differences between the NHIS and the BRFSS national-level prevalence estimates (Table 1). The BRFSS estimate exceeded the NHIS estimate by 7.8 percentage points (95% CI 7.0, 8.6) and 6.6 percentage points (95% CI 5.7, 7.4) in the two time periods (1997–1999 and 2000–2003), respectively (Table 2). In contrast, the estimates of change (trend) from the two surveys were more similar; the estimated increase in mammography screening between the two time periods was 3.7 percentage points (95% CI 3.2, 4.2) for the BRFSS and 4.9 percentage points (95% CI 3.8, 6.0) for the NHIS (Table 1). Although these two trend estimates differed by only 1.2 percentage points, the difference was statistically significant (p=0.04) due to the large sample sizes.

Table 1.

National estimates for prevalence rates of mammography within the last two years for women aged ≥40 years during 1997–1999 and 2000–2003ᵃ

ᵃ Sources: BRFSS and NHIS

ᵇ For NHIS vs. BRFSS comparisons, the NHIS telephone sample in its own right was not reweighted to the same national controls as was the BRFSS.

CI = confidence interval

BRFSS = Behavioral Risk Factor Surveillance System

NHIS = National Health Interview Survey

Table 2.

Selected differences of national estimates for prevalence rates of mammography within the last two years for women aged ≥40 years during 1997–1999 and 2000–2003ᵃ

ᵃ Sources: BRFSS and NHIS

ᵇ CIs were not calculated for functions of both direct and MB estimates.

CI = confidence interval

BRFSS = Behavioral Risk Factor Surveillance System

NHIS = National Health Interview Survey

MB = model-based

As shown in Table 2, the differences between the national MB and NHIS prevalence estimates were small in both time periods (–0.1 and 0.3 percentage points, respectively). In contrast, the differences between the MB and BRFSS estimates were of practical significance in both time periods (7.8 and 6.8 percentage points, respectively). The differences between estimated national prevalence for NHIS telephone and non-telephone households were large: 28.5 percentage points (95% CI 23.0, 34.0) and 35.8 percentage points (95% CI 30.2, 41.5) in the two time periods, respectively, indicating the potential for noncoverage bias from the exclusion of non-telephone households. However, at the national level, the potential noncoverage bias of a telephone-only sample was not large, as evidenced by the small prevalence difference between the NHIS telephone-only and all-household estimates (0.5 percentage points in each time period).
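Why a large telephone versus non-telephone gap can coexist with a small telephone-only bias follows directly from the combination formula used for the MB estimates. Applied to NHIS direct estimates, with $M$ taken as the (weighted) share of telephone households, a short derivation gives:

```latex
\begin{aligned}
\hat{p}_{\text{all}} &= M\,\hat{p}_{t} + (1-M)\,\hat{p}_{nt} \\
\hat{p}_{t} - \hat{p}_{\text{all}} &= \hat{p}_{t} - M\,\hat{p}_{t} - (1-M)\,\hat{p}_{nt}
  = (1-M)\,\bigl(\hat{p}_{t} - \hat{p}_{nt}\bigr)
\end{aligned}
```

The telephone-only bias is the telephone versus non-telephone gap scaled by the non-telephone share $1-M$. With the reported first-period values (0.5 and 28.5 percentage points), the identity implies a national non-telephone share of roughly $0.5/28.5 \approx 0.018$; this is an implied, not a reported, figure. The same identity indicates that the bias can be larger in states where $1-M$ is larger.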

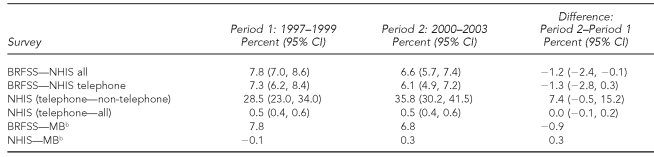

State-level results

The RMS differences between the MB estimates and each of the two direct estimates for the three state groups are shown in Table 3. For both time periods and for the change between them, the RMS values were smaller for the NHIS than for the BRFSS within all groups, showing the expected greater fidelity of the MB estimates to the NHIS estimates than to the BRFSS estimates. Also, the fidelity of the MB estimates to both the NHIS and the BRFSS estimates increased with NHIS sample size for both prevalence levels and trend (i.e., the RMS values decreased with increasing NHIS state sample size). However, for the change between periods (in contrast to the prevalence levels), the RMS values for the BRFSS were closer to those for the NHIS.

Table 3.

Root mean square deviation of model-based estimates from the BRFSS and NHIS estimates computed by groups of 17 states, for the prevalences for the two time periods (1997–1999 and 2000–2003) and for the difference between the two time periodsᵃ

ᵃ Sources: BRFSS and NHIS

ᵇ The 51 states (including Washington, D.C.) are divided into three ranked groups of 17 states, whereby Group 1 denotes the 17 states with the largest NHIS sample size and Group 3 denotes the 17 states with the smallest NHIS sample size.

BRFSS = Behavioral Risk Factor Surveillance System

NHIS = National Health Interview Survey

RMS = root mean square

MB = model-based

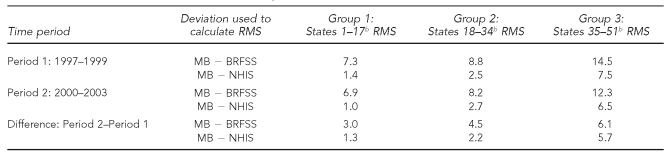

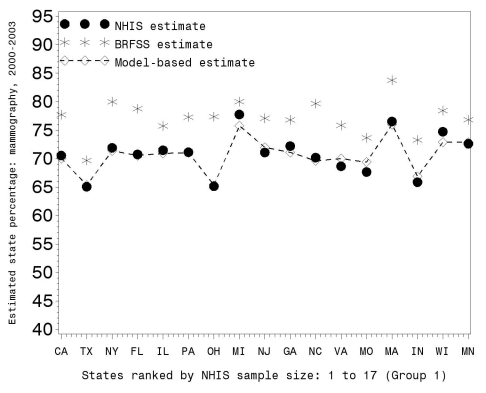

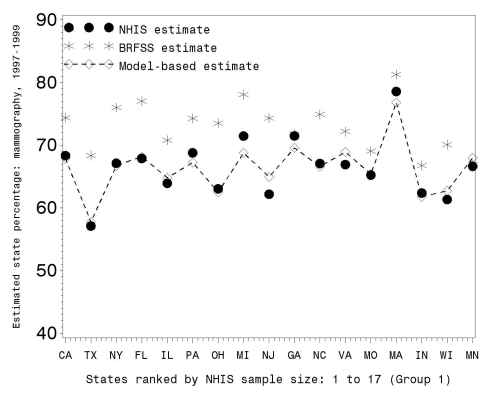

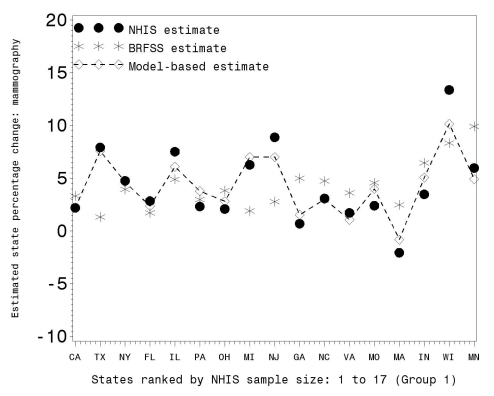

Figures 2, 3, and 4 illustrate the RMS comparisons graphically using only Group 1 states (the 17 states with the largest NHIS sample size; graphical results for the other groups are available upon request). Figures 2 and 3 show the three prevalence estimates for 2000–2003 and 1997–1999, respectively, while Figure 4 shows the change between these two time periods. Figures 2 and 3 demonstrate that (1) the BRFSS state prevalence estimates were uniformly larger than the NHIS direct estimates and (2) the MB prevalence estimates tracked the NHIS direct estimates.

Figure 2.

BRFSS, NHIS, and model-based estimates of percent mammography usage within the last two years for women ≥40 years of age during 2000–2003 for Group 1 states (with the largest NHIS sample size)

BRFSS = Behavioral Risk Factor Surveillance System

NHIS = National Health Interview Survey

Figure 3.

BRFSS, NHIS, and model-based estimates of percent mammography usage within the last two years for women ≥40 years of age during 1997–1999 for Group 1 states (with the largest NHIS sample size)

BRFSS = Behavioral Risk Factor Surveillance System

NHIS = National Health Interview Survey

Figure 4.

BRFSS, NHIS, and model-based estimates of mammography prevalence change for women ≥40 years of age between 1997–1999 and 2000–2003 in percent for Group 1 states

BRFSS = Behavioral Risk Factor Surveillance System

NHIS = National Health Interview Survey

Figure 4 shows that while the MB estimates of change tracked the NHIS direct estimates, the BRFSS estimates fluctuated around the NHIS values. This is consistent with the national-level results (Table 2), which showed a considerable difference between the two surveys in prevalence levels but a smaller difference in trends.

Impact of the covariates

The first eight principal components (labeled PC1 to PC8), which explained more than 90% of the total variation, were used as the primary covariates. The coefficients (available upon request) have the following interpretations:

PC1: Higher scores for states that have higher socioeconomic status (SES), as measured through higher education, higher per capita income, and higher home value (highest state scores for DC and New Jersey; lowest scores for West Virginia and South Dakota)

PC2: Higher scores for states that have an older population (larger percentage of the population of retirement age and with Social Security benefits), small mean household size, and small percentage of households with children (highest scores for DC and West Virginia; lowest scores for Utah and Alaska)

PC3: Higher scores for states that have a higher newspaper readership rate, more social services, lower African American population, and lower poverty level (highest scores for New Hampshire and Vermont; lowest scores for Mississippi and Louisiana)

PC4: Higher scores for more prosperous (lower unemployment rate) and more urban (large population and population density) states (highest scores for New Jersey and Connecticut; lowest scores for Alaska and Montana)

PC5: Higher scores for states that have an older and Hispanic population and smaller percentage of African Americans (highest scores for Florida and Hawaii; lowest score for Alaska)

PC6: Higher scores for states that have a higher percentage of blue-collar workers and higher median home value and lower percentages of high school graduates (highest scores for Colorado and North Carolina; lowest scores for West Virginia and Hawaii)

PC7: Higher scores for states that have a larger population and lower values of serious crimes per capita and retail sales per capita (highest scores for New York and Texas; lowest scores for Delaware and Hawaii)

PC8: Higher scores for states with a high percentage of high school graduates but a low percentage of college graduates and a lower percentage of people who commute more than 30 minutes to work (highest scores for Indiana and California; lowest scores for Vermont and New York)
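Interpretations such as those above depend on a sign convention, because a principal component and its negation describe the same direction. A minimal Python sketch, assuming scores with one column per component and loadings with one row per component (both hypothetical inputs), fixes each component's sign using a chosen anchor covariate and then lists the highest- and lowest-scoring states. The function names and the toy data are illustrative.

```python
import numpy as np


def orient_components(scores, loadings, anchor_idx):
    """Flip each component so covariate anchor_idx[j] loads positively on PC j.

    (scores, loadings) and (-scores, -loadings) describe the same component,
    so the flip changes only the direction of interpretation.
    """
    scores = np.array(scores, dtype=float)  # copies; inputs are not modified
    loadings = np.array(loadings, dtype=float)
    for j, a in enumerate(anchor_idx):
        if loadings[j, a] < 0:
            scores[:, j] *= -1.0
            loadings[j, :] *= -1.0
    return scores, loadings


def extremes(component_scores, states, k=2):
    """Return (highest k, lowest k) state labels for one component."""
    order = np.argsort(component_scores)  # ascending
    highest = [states[i] for i in order[::-1][:k]]
    lowest = [states[i] for i in order[:k]]
    return highest, lowest


# Toy example: 4 hypothetical states, 1 component, 2 covariates.
states = ["A", "B", "C", "D"]
scores, loadings = orient_components(
    [[1.0], [3.0], [-2.0], [0.5]], [[-0.8, 0.6]], anchor_idx=[0]
)
print(extremes(scores[:, 0], states))
```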

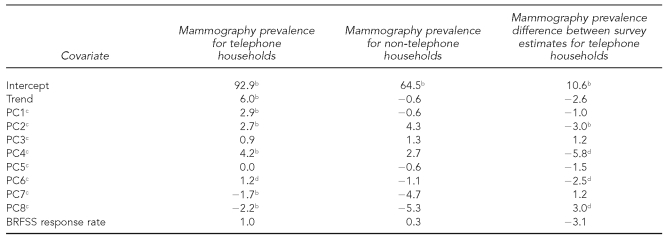

Table 4 shows estimated coefficients and tests of statistical significance for the model parameters, which measure the impact of the principal components and response rate on the mammography propensity. For telephone households, six components were statistically significant: four (PC1, PC2, PC4, and PC6) were positively associated and two (PC7 and PC8) were negatively associated with mammography propensity. None of the coefficients was statistically significant for non-telephone households, possibly due to small sample sizes. Four of the principal components (PC2, PC4, PC6, and PC8) had statistically significant coefficients for prevalence differences between the two surveys for telephone households. The coefficient of the BRFSS state response rate was not statistically significant.
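The text does not spell out how statistical significance was assessed within the Bayesian fit. One common convention, assumed here rather than taken from the article, is a two-sided posterior tail probability computed from the MCMC draws of each coefficient, reported alongside a central posterior interval. A minimal Python sketch with simulated draws:

```python
import numpy as np


def posterior_two_sided_p(draws) -> float:
    """2 * min(P(beta > 0), P(beta < 0)), estimated from MCMC draws."""
    draws = np.asarray(draws, dtype=float)
    p_pos = np.mean(draws > 0)
    p_neg = np.mean(draws < 0)
    return float(min(1.0, 2.0 * min(p_pos, p_neg)))


def central_interval(draws, level=0.95):
    """Equal-tailed posterior interval from the draws."""
    lo, hi = np.quantile(draws, [(1 - level) / 2, (1 + level) / 2])
    return float(lo), float(hi)


# Simulated draws standing in for one coefficient's MCMC output.
rng = np.random.default_rng(0)
draws = rng.normal(0.3, 0.1, 20000)
print(posterior_two_sided_p(draws), central_interval(draws))
```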

Table 4.

Estimates of model parameters for mammography prevalence for telephone households, for non-telephone households, and for the difference between survey estimates for telephone householdsᵃ

ᵃ Sources: BRFSS and National Health Interview Survey

ᵇ Coefficient differs from zero (p<0.01).

ᶜ PC1–PC8 denote the first eight principal components for the 26 initial covariates.

ᵈ Coefficient differs from zero (p<0.05).

BRFSS = Behavioral Risk Factor Surveillance System

PC = principal component

DISCUSSION

NHIS and BRFSS direct estimate comparison

In each time period (1997–1999 and 2000–2003), the BRFSS national-level prevalence estimate for mammography was at least 6.6 percentage points larger than the NHIS estimate. These national-level differences are larger than those published previously for other outcome measures,21 are of practical significance for this important measure in the war on cancer, and should be studied further. Similarly, the BRFSS state estimates were consistently larger than the NHIS state estimates for both time periods. In contrast to the prevalence-level estimates, the national estimates for trend (computed as the differences of the estimates for the two time periods) for the two surveys were more comparable. In addition, the state estimates for trend were comparable and did not exhibit a consistent pattern (e.g., systematically larger BRFSS values).

Limitations

Differences in data collection time, proxy responses, question wording, coverage rates, response modes, response rates, and weighting methodology could cause differences between two survey estimates.35 Due to the intermittent inclusion of the mammography questions in both the NHIS and the BRFSS, different years were used in the analysis for the two surveys. Within the two time periods, the BRFSS survey years used were earlier on average than the NHIS years. Because the mammography rates increased during this period, the differences in prevalence between the two surveys would have been even greater than those observed if data from the same years had been available for analysis.
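The timing argument can be checked with simple arithmetic. This Python sketch assumes each period estimate weights its survey years equally, which simplifies the pooled estimation; the trend slope in the example is hypothetical.

```python
from statistics import mean

# Survey years with mammography questions used in each period (from the Methods).
YEARS = {
    ("BRFSS", "1997-1999"): [1997, 1998, 1999],
    ("NHIS", "1997-1999"): [1998, 1999],
    ("BRFSS", "2000-2003"): [2000, 2002],
    ("NHIS", "2000-2003"): [2000, 2003],
}


def timing_gap(period: str) -> float:
    """Mean NHIS year minus mean BRFSS year (positive: BRFSS data are older)."""
    return mean(YEARS[("NHIS", period)]) - mean(YEARS[("BRFSS", period)])


def understated_gap(period: str, slope_pts_per_year: float) -> float:
    """Extra BRFSS-minus-NHIS gap expected if both surveys covered the same
    years, assuming prevalence rises linearly by slope_pts_per_year."""
    return timing_gap(period) * slope_pts_per_year


for period in ("1997-1999", "2000-2003"):
    print(period, timing_gap(period), understated_gap(period, slope_pts_per_year=1.0))
```

Under a rising trend, the older BRFSS years pull the BRFSS estimate down relative to a same-year comparison, so the observed gap understates the gap at matched timing.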

The use of proxy responses by the NHIS could have caused a slight bias. However, because less than 1% of the NHIS responses were by proxy, proxy reporting could not have explained the large difference between the prevalence estimates for the two surveys. The mammography question and response wording also differs between the two surveys. Although further study is warranted, it is unlikely that this wording difference alone explains the large difference in prevalence estimates between the two surveys.

The large difference in national-level mammography prevalence estimates for telephone and non-telephone households indicates the potential for noncoverage bias for areas or domains with large proportions of non-telephone households. However, because the NHIS “telephone only” prevalence estimate differed substantially from the BRFSS prevalence estimate in both time periods, the potential noncoverage bias does not appear to be the primary cause of the national-level prevalence difference.

The increasing use of cellular telephones poses an additional noncoverage problem for telephone surveys. Cellular telephones are excluded from telephone surveys such as the BRFSS due to legal, ethical, and technical concerns.36 The U.S. percentage of cellular-only households was less than 1% until the end of 2001; however, by the second half of 2003 it had reached 4%.37,38 Because the use of cellular telephones is less common among older adults,36 the impact on the mammography prevalence estimates for women aged 40 and older should be small for the time period studied (1997–2003). However, to increase its overall coverage, the BRFSS has experimented recently with augmenting the random-digit-dialing survey results with those obtained from mail and Web modes.39–41

The difference in response modes (telephone vs. in-person) could cause differences between the BRFSS and NHIS estimates. Comparisons of national-level direct-prevalence estimates from these two surveys for a variety of outcome measures using 1997 data indicated good correspondence,21 while similar comparisons using 2004 data indicated more substantial differences.42 The most consistent finding from early research was that mode effects were not significant.43,44 In particular, no mode effect was found using telephone and in-person interviews in a Florida mammography screening study.45 However, more recent research has indicated possible mode effects for a variety of outcomes and related them to aspects of the questions and/or interviewer/respondent interaction.

Higher current-smoking prevalence rates have been obtained from in-person than from telephone interviews in both the U.S. and Canada.46,47 In addition, randomized experiments have demonstrated that telephone respondents were more likely than face-to-face respondents to present themselves in socially desirable ways.48 A portion of the prevalence difference could therefore be attributable to a mode effect, if telephone respondents are more likely to give the socially desirable report of having had a mammogram within the past two years.

There are also methodological differences between the BRFSS and the NHIS in the development of survey weighting adjustments. Another cause of the mammography prevalence difference that cannot be dismissed is a combination of response and coverage differences that yields a disproportionate number of middle-class respondents in the BRFSS. People at either end of the socioeconomic spectrum are less likely to respond to a telephone survey, owing to a lack of understanding of the concept of the survey, concerns about privacy, a lack of time, and other reasons. Estimation errors caused by differences of this type have been termed the “middle-class bias” of telephone surveys.28,49 A larger percentage of middle-class respondents in the BRFSS than in the NHIS has been demonstrated for the period 1997–2000.11

In addition, those with lower education were found to be underrepresented in the BRFSS when compared with the Current Population Survey.50 Without a weighting or other adjustment that uses SES, the BRFSS may provide too little statistical weight to those with low SES. Because lower-SES individuals have low mammography screening rates,24 the absence of a weighting adjustment may lead to prevalence estimates for mammography screening that are too large. Recently, to remedy this general problem, researchers commissioned by the BRFSS included sociodemographic variables in the BRFSS statistical weighting procedure and found a substantial reduction in nonresponse bias for outcomes, such as mammography, that are strongly correlated with SES.51 The sociodemographic variables will be incorporated in the BRFSS weights beginning in 2011.
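One widely used way to add sociodemographic margins such as education to survey weights is raking (iterative proportional fitting). The Python sketch below is a generic illustration with hypothetical respondents and population totals; it is not the BRFSS production procedure, whose variables and settings are not described here. It shows how aligning the weights with an education margin can lower a weighted screening prevalence when low-education women, who screen less often, are underrepresented.

```python
import numpy as np


def rake(weights, categories, targets, max_iter=100, tol=1e-10):
    """Iterative proportional fitting of survey weights to population margins.

    weights    : initial weights, shape (n,)
    categories : dict variable -> category label per respondent, shape (n,)
    targets    : dict variable -> dict label -> population total
    """
    w = np.asarray(weights, dtype=float).copy()
    for _ in range(max_iter):
        max_change = 0.0
        for var, labels in categories.items():
            labels = np.asarray(labels)
            for label, total in targets[var].items():
                mask = labels == label
                current = w[mask].sum()
                if current == 0:
                    raise ValueError(f"no respondents in {var}={label}")
                factor = total / current
                w[mask] *= factor
                max_change = max(max_change, abs(factor - 1.0))
        if max_change < tol:  # all margins already matched on this pass
            break
    return w


# Hypothetical sample in which low-education women are underrepresented.
edu = np.array(["low", "high", "high", "high", "low", "high"])
age = np.array(["40-64", "40-64", "65+", "40-64", "65+", "65+"])
screened = np.array([0, 1, 1, 1, 0, 1])
w = rake(
    np.ones(6),
    {"edu": edu, "age": age},
    {"edu": {"low": 3.0, "high": 3.0}, "age": {"40-64": 3.0, "65+": 3.0}},
)
print("unweighted:", screened.mean(), "raked:", np.sum(w * screened) / w.sum())
```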

MB estimates

As expected given the model assumptions about bias, the MB prevalence estimates were closer to the NHIS estimates than to the BRFSS estimates for both time periods and for the change (trend) between the two time periods—both for the nation and for the vast majority of states. The agreement of the MB state estimates with both the NHIS and the BRFSS state estimates was shown to increase with increasing NHIS state sample size for both periods and for the change between the two periods. While the MB estimates were closer to the NHIS estimates than to the BRFSS estimates, the difference was less pronounced for the change between the two time periods than for each of the time periods.

The state-level covariates (through the principal components) were shown to be significantly related to mammography prevalence for telephone households, with the expected positive relationships for SES, urbanicity, and age.24–27 In addition, four principal components were significantly related to the difference between NHIS and BRFSS telephone prevalence estimates, a result not previously demonstrated and worthy of further research. It may also be useful to study the robustness of these conclusions to alternative estimation techniques for principal components.52,53 After adjusting for the other covariates, the BRFSS response rate was not a statistically significant predictor of the difference, which is consistent with literature suggesting that low survey response rates do not by themselves imply nonresponse bias.20

CONCLUSIONS

MB estimates that draw on both surveys are a useful tool for combining their information about mammography prevalence. Our MB approach adjusted for the possible nonresponse and noncoverage biases of the telephone survey while using the large BRFSS state sample size to increase precision. The approach is general and could be applied to other health outcome measures, such as colonoscopy, Papanicolaou testing, and other disease prevention activities, as well as smoking.12

Acknowledgments

The authors thank Kevin Dodd, Robin Yabroff, Rong Wei, Meena Khare, Jennifer Madans, and Tom Krenzke for contributions to this research.

The findings and conclusions in this article are those of the authors and do not necessarily represent the official position of the National Cancer Institute, the Centers for Disease Control and Prevention, or the Social Security Administration.

REFERENCES

- 1. U.S. Preventive Services Task Force. Guide to clinical preventive services. 3rd ed. Baltimore: Williams & Wilkins; 2001.

- 2. Berry DA, Cronin KA, Plevritis SK, Fryback DG, Clarke L, Zelen M, et al. Effect of screening and adjuvant therapy on mortality from breast cancer. N Engl J Med. 2005;353:1784–92. doi: 10.1056/NEJMoa050518.

- 3. Centers for Disease Control and Prevention (US). National Health Interview Survey. [cited 2009 Sep 25]. Available from: URL: http://www.cdc.gov/nchs/nhis.htm.

- 4. CDC (US). Behavioral Risk Factor Surveillance System. [cited 2009 Sep 25]. Available from: URL: http://www.cdc.gov/brfss.

- 5. Department of Health and Human Services (US). Healthy People 2010: understanding and improving health. 2nd ed. Washington: DHHS, Office of the Surgeon General (US); 2000.

- 6. Legler J, Breen N, Meissner H, Malec D, Coyne C. Predicting patterns of mammography use: a geographic perspective on national needs for intervention research. Health Serv Res. 2002;37:929–47. doi: 10.1034/j.1600-0560.2002.59.x.

- 7. Wells BL, Horm JW. Targeting the underserved for breast and cervical cancer screening: the utility of ecological analysis using the National Health Interview Survey. Am J Public Health. 1998;88:1484–9. doi: 10.2105/ajph.88.10.1484.

- 8. Andersen MR, Yasui Y, Meischke H, Kuniyuki A, Etzioni R, Urban N. The effectiveness of mammography promotion by volunteers in rural communities. Am J Prev Med. 2000;18:199–207. doi: 10.1016/s0749-3797(99)00161-0.

- 9. Breen N, Cronin KA, Meissner HI, Taplin SH, Tangka FK, Tiro JA, et al. Reported drop in mammography: is this cause for concern? Cancer. 2007;109:2405–9. doi: 10.1002/cncr.22723.

- 10. Design and estimation for the National Health Interview Survey, 1995–2004. Vital Health Stat 2. 2003;(130).

- 11. Elliott MR, Davis WW. Obtaining cancer risk factor prevalence estimates in small areas: combining data from two surveys. J Royal Stat Soc Ser C. 2005;54:595–609.

- 12. Raghunathan TE, Xie D, Schenker N, Parsons VL, Davis WW, Dodd KW, et al. Combining information from two surveys to estimate county-level prevalence rates of cancer risk factors and screening. J Am Stat Assoc. 2007;102:474–86.

- 13. Kish L. Statistical design for research. New York: John Wiley & Sons; 2004.

- 14. Anderson JE, Nelson DE, Wilson RW. Telephone coverage and measurement of health risk indicators from the National Health Interview Survey. Am J Public Health. 1998;88:1392–5. doi: 10.2105/ajph.88.9.1392.

- 15. Frankel MR, Srinath KP, Battaglia MP, Hoaglin DC, Smith PJ, Wright RA. Using data on interruptions in telephone service to reduce nontelephone bias in a random-digit-dialing survey. Proc Surv Res Methods Sec Am Stat Assoc. 2000:647–52.

- 16. Frankel MR, Srinath KP, Hoaglin DC, Battaglia MP, Smith PJ, Wright RA, et al. Adjustments for non-telephone bias in random-digit-dialing surveys. Stat Med. 2003;22:1611–26. doi: 10.1002/sim.1515.

- 17. de Leeuw E, de Heer W. Trends in household survey nonresponse: a longitudinal and international comparison. In: Groves RM, Dillman DA, Eltinge JL, Little RJA, editors. Survey nonresponse. New York: John Wiley & Sons; 2002. pp. 41–54.

- 18. Curtin R, Presser S, Singer E. Changes in telephone survey nonresponse over the past quarter century. Public Opin Q. 2005;69:87–98.

- 19. Keeter S, Miller C, Kohut A, Groves RM, Presser S. Consequences of reducing nonresponse in a national telephone survey. Public Opin Q. 2000;64:125–48. doi: 10.1086/317759.

- 20. Groves RM. Nonresponse rates and nonresponse bias in household surveys. Public Opin Q. 2006;70:646–75.

- 21. Nelson DE, Powell-Griner E, Town M, Kovar MG. A comparison of national estimates from the National Health Interview Survey and the Behavioral Risk Factor Surveillance System. Am J Public Health. 2003;93:1335–41. doi: 10.2105/ajph.93.8.1335.

- 22. National Center for Health Statistics (US). National Health Interview Survey: questionnaires, datasets, and related documentation. [cited 2009 Sep 25]. Available from: URL: http://www.cdc.gov/nchs/nhis/nhis_questionnaires.htm.

- 23. Schenker N, Gentleman JF. On judging the significance of differences by examining the overlap between confidence intervals. Am Stat. 2001;55:182–6.

- 24. Hiatt RA, Klabunde C, Breen N, Swan J, Ballard-Barbash R. Cancer screening practices from National Health Interview Surveys: past, present, and future. J Natl Cancer Inst. 2002;94:1837–46. doi: 10.1093/jnci/94.24.1837.

- 25. Coughlin SS, Thompson TD, Hall HI, Logan P, Uhler RJ. Breast and cervical carcinoma screening practices among women in rural and nonrural areas of the United States, 1998–1999. Cancer. 2002;94:2801–12. doi: 10.1002/cncr.10577.

- 26. Dailey AB, Kasl SV, Holford TR, Calvocoressi L, Jones BA. Neighborhood-level socioeconomic predictors of nonadherence to mammography screening guidelines. Cancer Epidemiol Biomarkers Prev. 2007;16:2293–303. doi: 10.1158/1055-9965.EPI-06-1076.

- 27. Coughlin SS, Leadbetter S, Richards T, Sabatino SA. Contextual analysis of breast and cervical cancer screening and factors associated with health care access among United States women, 2002. Soc Sci Med. 2008;66:260–75. doi: 10.1016/j.socscimed.2007.09.009.

- 28. Goyder J, Warriner K, Miller S. Evaluating socio-economic status (SES) bias in survey nonresponse. J Official Stat. 2002;18:1–11.

- 29. Groves RM, Couper MP. Nonresponse in household interview surveys. New York: John Wiley & Sons; 1998.

- 30. Breiman L, Spector P. Submodel selection and evaluation in regression: the X-random case. Int Stat Rev. 1992;60:291–319.

- 31. CDC (US). Behavioral Risk Factor Surveillance System annual survey data: summary data quality reports. [cited 2009 Sep 25]. Available from: URL: http://www.cdc.gov/brfss/technical_infodata/quality.htm.

- 32. Gilks WR, Richardson S, Spiegelhalter D. Markov Chain Monte Carlo in practice. New York: Chapman and Hall; 1995.

- 33. Lindley DV, Smith AFM. Bayes estimates for the linear model. J Royal Stat Soc Ser B. 1972;34:1–41.

- 34. Fay RE III, Herriot RA. Estimates of income for small places: an application of James-Stein procedures to census data. J Am Stat Assoc. 1979;74:269–77.

- 35. Weisberg HF. The total survey error approach: a guide to the new science of survey research. Chicago: University of Chicago Press; 2005.

- 36. Blumberg SJ, Luke JV, Cynamon ML, Frankel MR. Recent trends in household telephone coverage in the United States. In: Lepkowski JM, Tucker C, Brick JM, de Leeuw ED, Japec L, Lavrakas PJ, et al., editors. Advances in telephone survey methodology. New York: John Wiley & Sons; 2008. pp. 56–86.

- 37. Tucker C, Brick JM, Meekins B. Household telephone service and usage patterns in the United States in 2004: implications for telephone samples. Public Opin Q. 2007;71:3–22.

- 38. Blumberg SJ, Luke JV, Cynamon ML. Telephone coverage and health survey estimates: evaluating the need for concern about wireless substitution. Am J Public Health. 2006;96:926–31. doi: 10.2105/AJPH.2004.057885.

- 39. Link MW, Mokdad AH. Can Web and mail survey modes improve participation in an RDD-based national health surveillance? J Official Stat. 2006;22:293–312.

- 40. Link MW, Mokdad AH. Alternative modes for health surveillance surveys: an experiment with Web, mail, and telephone. Epidemiology. 2005;16:701–4. doi: 10.1097/01.ede.0000172138.67080.7f.

- 41. Link MW, Battaglia MP, Frankel MR, Osborn L, Mokdad AH. Address-based versus random-digit-dial surveys: comparison of key health and risk indicators. Am J Epidemiol. 2006;164:1019–25. doi: 10.1093/aje/kwj310.

- 42. Fahimi M, Link M, Mokdad A, Schwartz DA, Levy P. Tracking chronic disease and risk behavior prevalence as survey participation declines: statistics from the Behavioral Risk Factor Surveillance System and other national surveys. Prev Chronic Dis. 2008;5:A80.

- 43. Groves RM, Miller PV, Cannell CF. Chapter II: differences between the telephone and personal interview data. Vital Health Stat 2. 1987;2:11–9.

- 44. Groves RM. Survey errors and survey costs. New York: John Wiley & Sons; 1989.

- 45. Mickey RM, Worden JK, Vacek PM, Skelly JM, Costanza MC. Comparability of telephone and household breast cancer screening surveys with differing response rates. Epidemiology. 1994;5:462–5. doi: 10.1097/00001648-199407000-00014.

- 46. Simile CM, Stussman B, Dahlhamer JM. Exploring the impact of mode on key health estimates in the National Health Interview Survey. Proc Stat Canada Symposium 2006. [cited 2009 Sep 25]. Available from: URL: http://www.statcan.gc.ca/pub/11-522-x/2006001/article/10421-eng.pdf.

- 47. St-Pierre M, Beland Y. Mode effects in the Canadian Community Health Survey: a comparison of CAPI and CATI. Proc Surv Res Methods Sec Am Stat Assoc. 2004:4438–44.

- 48. Holbrook AL, Green MC, Krosnick JA. Telephone versus face-to-face interviewing of national probability samples with long questionnaires: comparisons of respondent satisficing and social desirability response bias. Public Opin Q. 2003;67:79–125.

- 49. Van Goor H, Rispens S. A middle class image of society. Quality and Quantity. 2004;38:35–49.

- 50. Rao RS, Link MW, Battaglia MP, Frankel MR, Giambo P, Mokdad AH. Assessing representativeness in RDD surveys: coverage and nonresponse in the Behavioral Risk Factor Surveillance System. Proc Surv Res Methods Sec Am Stat Assoc. 2005:3495–501.

- 51. Battaglia MP, Frankel MR, Link MW. Improving standard poststratification techniques for random-digit-dialing telephone surveys. Surv Res Methods. 2008;2:11–9.

- 52. Muthen B, Kaplan D. A comparison of some methodologies for the factor analysis of non-normal Likert variables. Br J Math Stat Psychol. 1985;38:171–89.

- 53. Muthen B, Kaplan D. A comparison of some methodologies for the factor analysis of non-normal Likert variables: a note on the size of the model. Br J Math Stat Psychol. 1992;45:19–30.