Abstract

Background

Neurophysiological evidence from primates has demonstrated the presence of mirror neurons, with visual and motor properties, that discharge both when an action is performed and during observation of the same action. A similar system for observation-execution matching may also exist in humans. We postulate that behavioral stimulation of this parietal-frontal system may play an important role in motor learning for speech and thereby aid language recovery after stroke.

Aims

The purpose of this article is to describe the development of IMITATE, a computer-assisted system for aphasia therapy based on action observation and imitation. We also describe briefly the randomized controlled clinical trial that is currently underway to evaluate its efficacy and mechanism of action.

Methods and Procedures

IMITATE therapy consists of silent observation of audio-visually presented words and phrases spoken aloud by six different speakers, followed by a period during which the participant orally repeats the stimuli. We describe the rationale for the therapeutic features, stimulus selection, and delineation of treatment levels.

The clinical trial is a randomized single blind controlled trial in which participants receive two pre-treatment baseline assessments, six weeks apart, followed by either IMITATE or a control therapy. Both treatments are provided intensively (90 minutes per day). Treatment is followed by a post-treatment assessment, and a six-week follow-up assessment.

Outcomes & Results

Thus far, five participants have completed IMITATE. We expect the results of the randomized controlled trial to be available by late 2010.

Conclusions

IMITATE is a novel computer-assisted treatment for aphasia that is supported by theoretical rationales and previous human and primate data from neurobiology. The treatment is feasible, and preliminary behavioral data are emerging. However, the efficacy of IMITATE cannot be fully evaluated until the clinical trial data are available; these data should also inform theories about the mechanism of action and the role of a human mirror system in aphasia treatment.

Recovery from aphasia occurs over a period of time ranging from several months to many years (Benson & Geschwind, 1989; Goodglass, 1993; Kertesz & McCabe, 1977; Lecours, Lhermitte, & Bryans, 1983). Although this recovery is accompanied by changes in brain physiology, basic neurobiology has not yet had significant impact on clinical practice, and rehabilitation measures for persons with aphasia remain rooted in educational rather than biological models (Small, 2004a). In this article, we outline a therapeutic approach, based on basic principles from neurophysiology, that we believe can play an important role in the treatment of aphasia.

Efforts to apply neurophysiological principles to rehabilitation in aphasia are limited by the lack of animal models of language use and the current state of knowledge of human neuroscience (Aichner, Adelwohrer, & Haring, 2002; Raymer, Beeson, Holland, Kendall, Maher, Martin, Murray, Rose, Thompson, Turkstra, Altmann, Boyle, Conway, Hula, Kearns, Rapp, Simmons-Mackie, & Gonzalez Rothi, 2008; Small, 2004b; Turkstra, Holland, & Bays, 2003). Only very recently has the study of human systems neurobiology led to even a basic understanding of the neural networks that support the basic perceptual functions and higher cortical functions that enable language.

Recent neurophysiological evidence from nonhuman primates suggests important interactions between brain regions traditionally associated with either language comprehension or production. Regions traditionally considered responsible for motor planning and motor control appear to play a role in perception and comprehension of action (Graziano, Taylor, Moore, & Cooke, 2002; Romanski & Goldman-Rakic, 2002). Certain neurons with visual and/or auditory and motor properties in these regions discharge both when an action is performed and during perception of another person performing the same action (Gallese, Fadiga, Fogassi, & Rizzolatti, 1996; Kohler, Keysers, Umilta, Fogassi, Gallese, & Rizzolatti, 2002; Rizzolatti, Fadiga, Gallese, & Fogassi, 1996). These neurons are called mirror neurons and have been shown to exist for both manual and oral actions, and for both auditory and visual sensation. Action observation is thought to induce a re-enactment of similar actions stored in human brains (Buccino, Binkofski, Fink, Fadiga, Fogassi, Gallese, Seitz, Zilles, Rizzolatti, & Freund, 2001; Fadiga, Fogassi, Pavesi, & Rizzolatti, 1995; Rizzolatti & Craighero, 2004), possibly by inducing simulation of the ongoing actions (Gallese, 2003). It is likely that action observation leads to organizational changes in the brain (Fadiga et al., 1995) and may participate via the mirror neuron system in learning of motor skills (Buccino, Vogt, Ritzl, Fink, Zilles, Freund, & Rizzolatti, 2004b).

In our work, we focus on observation-execution matching (via imitation) as an aid to language recovery after stroke. Imitation permits the visual system to provide input into oral speech mechanisms, and the brain appears to have circuits particularly active in imitation (Gallese et al., 1996; Iacoboni, Woods, Brass, Bekkering, Mazziotta, & Rizzolatti, 1999; Murata, Fadiga, Fogassi, Gallese, Raos, & Rizzolatti, 1997). These circuits may play a special role in motor development (Tomasello, Savage-Rumbaugh, & Kruger, 1993), speech (Fadiga, Craighero, Buccino, & Rizzolatti, 2002; Rizzolatti & Arbib, 1999), and language (Rizzolatti & Arbib, 1998; Tettamanti, Buccino, Saccuman, Gallese, Danna, Scifo, Fazio, Rizzolatti, Cappa, & Perani, 2005). This system exists both in non-human primates (Gallese et al., 1996) and humans (Iacoboni et al., 1999), with a relatively precise anatomy in the F5 region of the lateral frontal lobe in macaque monkeys (Gallese et al., 1996) and a corresponding anatomy at the interface of the ventral premotor and frontal opercular regions in humans (Iacoboni et al., 1999; Rizzolatti, Fogassi, & Gallese, 2002).

Imitation has played an important role in many treatments for non-fluent aphasia (Duffy, 1995), with the rationale that visual input complements other sensory information for use in oral speech mechanisms. Research has only recently demonstrated that the brain has circuits particularly active in motor imitation (Gallese et al., 1996; Iacoboni et al., 1999; Murata et al., 1997), including oral motor imitation (Buccino et al., 2001; Buccino, Lui, Canessa, Patteri, Lagravinese, Benuzzi, Porro, & Rizzolatti, 2004a; Rizzolatti & Arbib, 1998; Tettamanti et al., 2005). Imitation depends on connections between the inferior parietal lobule and the ventral premotor/inferior frontal homologue of the macaque mirror neuron locus (Buccino et al., 2004b).

The human mirror system appears critical for observation/execution matching in oral motor actions (Skipper, Goldin-Meadow, Nusbaum, & Small, 2007; Skipper, Nusbaum, & Small, 2006; Skipper, van Wassenhove, Nusbaum, & Small, 2007), and thus could be of significant benefit in aphasia therapy after stroke. Furthermore, the role of this system in predicting the consequences of motor activity (Iacoboni, 2003; Iacoboni, Koski, Brass, Bekkering, Woods, Dubeau, Mazziotta, & Rizzolatti, 2001) and in contributing to comprehension of sentences describing actions (Tettamanti et al., 2005) gives this system major potential for aiding language recovery more generally.

Using this system to effect neural changes in the premotor and frontal opercular cortices requires more than a reasonable physiological rationale. The implementation of the treatment must draw heavily on previous work in both treatment of aphasia and motor speech disorders and on theoretical work in learning. Our approach includes oral repetition of words and sentences in an ecological setting (i.e., with visualization of the speaker), intensively, with graded incremental changes in stimulus difficulty.

Intensity of therapy is an important component of therapeutic success (Basso, 1993; Bhogal, Teasell, & Speechley, 2003; Huber, Springer, & Willmes, 1993; Robey, 1998). Given current healthcare constraints, computer-based treatment can be used to provide intensive aphasia therapy at a reasonable cost. Despite the large number of computer programs and web-based systems for language practice, there is a paucity of theory-driven computational systems for aphasia therapy per se (Weinrich, 1997), although some good research has been done (Canseco-Gonzalez, Shapiro, Zurif, & Baker, 1990; Cherney, Halper, Holland, & Cole, 2008; Crerar, Ellis, & Dean, 1996; Fitch, 1983; Grawemeyer, Cox, & Lum, 2000; Katz & Wertz, 1997; Naeser, Baker, Palumbo, Nicholas, Alexander, Samaraweera, Prete, Hodge, & Weissman, 1998; Steele, Weinrich, Wertz, Kleczewska, & Carlson, 1989; Weinrich, McCall, Boser, & Virata, 2002; Weinrich, Shelton, Cox, & McCall, 1997; Weinrich, Shelton, McCall, & Cox, 1997).

In this article, we have three goals. First, we describe the development of a novel computer-based treatment for aphasia based on action observation and imitation, Intensive Mouth Imitation and Talking for Aphasia Therapeutic Effect, or IMITATE. The therapeutic features are outlined and a detailed account of the method of stimuli selection and delineation of treatment levels is provided. Second, we offer a description of the computer-assisted treatment program, including depictions of both the user and clinician/administrator interface. Lastly, we describe the randomized controlled clinical trial that is currently underway to evaluate the efficacy of IMITATE in aphasia therapy.

The IMITATE Approach

One of the overarching goals in the development of IMITATE was to create an innovative aphasia treatment supported by theoretical rationales and neuroanatomical and neurophysiological data. The therapy as a whole has been designed to stimulate the human parietal-frontal system for observation-execution matching, thought to be the homologue of the macaque mirror neuron system. The treatment approach consists of observation of audiovisual presentations of words and phrases followed by oral repetition of the stimuli. Treatment is provided at a high intensity, incorporates ecological stimuli, includes both stimulus and speaker variability, and presents graded incremental changes in stimulus complexity.

Given the importance of intensity in therapeutic success, IMITATE is designed to provide intense treatment via the computer. Since the number of hours provided in a week appears to be significantly correlated with greater improvement on language outcome measures (Bhogal et al., 2003), IMITATE requires 90 minutes of daily therapy.

In addition to high intensity, a second key therapeutic feature of IMITATE is the exclusive use of ecologically valid stimuli. The therapeutic tasks use only stimuli that are part of normal speech (e.g., words, sentences) and are uttered with normal prosody by a speaker whose face, lips, and mouth are visible. This need for ecological validity is based on the physiology: Those neurons that discharge when an action is performed and during perception of another person performing the same action (i.e., mirror neurons) may work by matching an observed action onto an internal motor representation of that action (Kohler et al., 2002; Rizzolatti et al., 1996). Such neurons are not active on tasks that are not part of the normal motor repertoire of the animal or person tested.

Thirdly, IMITATE treatment incorporates the principle of graded, incremental learning through changes in stimulus complexity. As a patient improves and becomes able to imitate, successively, monosyllabic words, disyllabic words, two- to three-word phrases, and longer utterances, the stimuli are incrementally made more difficult, and the rate is increased, in a process referred to as incremental learning (Sutton & Barto, 1998), adaptive training (Merzenich, Jenkins, Johnston, Schreiner, Miller, & Tallal, 1996; Tallal, Miller, Bedi, Byma, Wang, Nagarajan, Schreiner, Jenkins, & Merzenich, 1996), or “shaping” (Taub, Crago, Burgio, Groomes, Cook, DeLuca, & Miller, 1994). Work in neural network computer models also reinforces the notion that gradual, incremental learning has theoretical advantages (Elman, 1993). Graded, incremental learning is integrated into the IMITATE therapy as patients advance through increasingly complex treatment levels.

Finally, the IMITATE approach includes variability as a fundamental design feature. Although stimulus complexity increases over the course of treatment, based on the improving skills of the patient, the words and phrases presented are selected randomly from a database with a probabilistic favoring of stimuli near the appropriate level. Thus, early in treatment, a patient will occasionally be presented with a relatively complex word or even a phrase, and later in treatment, a patient will sometimes be asked to imitate a very simple word. We believe that such variability represents the best learning strategy and reconciles the two conflicting sets of data that suggest on one hand that “starting small” improves learning (Elman, 1990), and on the other, that complexity is the desirable starting point (Kiran & Thompson, 2003). We have also included speaker variability, since this is a fundamental component of speech perception (Magnuson & Nusbaum, 2007; Wong, Nusbaum, & Small, 2004).
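The probabilistic favoring of stimuli near the patient's level can be illustrated with a minimal sketch. This is not the IMITATE selection algorithm itself, which is a Java program whose weighting scheme is not specified here; the function names, the `spread` parameter, and the particular weighting formula are assumptions chosen only to show the idea that every stimulus remains selectable while those near the current level are drawn most often.

```python
import random

def level_weights(stimulus_levels, current_level, spread=1.5):
    """Weight each stimulus by how close its level is to the current level.

    Hypothetical kernel: weight 1.0 at the current level, falling off smoothly
    with distance but never reaching zero, so off-level stimuli stay possible.
    """
    return [1.0 / (1.0 + (abs(lv - current_level) / spread) ** 2)
            for lv in stimulus_levels]

def pick_stimulus(stimuli, current_level, rng):
    """Draw one stimulus text from a list of (text, level) pairs."""
    weights = level_weights([lv for _, lv in stimuli], current_level)
    texts = [text for text, _ in stimuli]
    return rng.choices(texts, weights=weights, k=1)[0]

# Toy database: three stimuli tagged with an illustrative level.
stimuli = [("man", 1), ("pocket", 5), ("directory assistance", 12)]
rng = random.Random(0)
print(pick_stimulus(stimuli, current_level=1, rng=rng))
```

With this kind of weighting, a patient at Level 1 would mostly receive Level 1 items but would occasionally see a more complex word or phrase, which is the behavior described above.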

The IMITATE System

Overview

After much discussion and careful selection of stimulus criteria, we established a pool of treatment stimuli consisting of 2,636 words and 405 phrases, ranging in length and complexity. We produced audiovisual recordings of each stimulus, spoken by six individuals differing in gender, age, and race. We constructed a (platform-independent) JAVA-based computer program that incorporated various treatment levels, based on explicit collections of stimulus parameters, and including a simple and straightforward patient interface, designed specifically for ease of use by individuals with aphasia.

IMITATE therapy consists of a period of observation followed by imitation. Each stimulus (i.e., word or phrase) is spoken aloud in succession by each of the six distinct speakers, as their image is presented on the computer screen. The subject, sitting opposite the computer screen, is instructed to look at and listen to the six successive audiovisual clips of the same stimulus (observation) and then to say it aloud (imitation). The computer records audiovisual information from the subject during the thirty-minute treatment session. The final system is deceptively simple to the user, despite the intricate set of stimuli and stimulus selection algorithms, and internal bookkeeping.

Stimulus Selection and Delineation of Treatment Levels

Lexical and Phrasal Stimuli

To select the pool of words and phrases for IMITATE, we first established parameters that would be used by the stimulus selection algorithm, including number of letters, phonemes, and syllables, part of speech, written frequency, familiarity, frontal and total visibility, and phonemic complexity. Many of these values were derived from the MRC Psycholinguistic Database (Coltheart, 1981), and others came from a diverse set of sources, including the Kucera and Francis corpus (Kucera & Francis, 1967), the Hoosier mental lexicon (Nusbaum, Pisoni, & Davis, 1984), and various measures of viseme content (Bement, Wallber, DeFilippo, Bochner, & Garrison, 1988; Owens & Blazek, 1985).

Some measures were not available explicitly and were created as we developed the stimulus presentation algorithm. Determining phonemic complexity involved coding stimuli for presence of consonant blends in the initial position. In terms of visibility, both consonants and vowels were assessed on a four-point scale, with high visibility productions, like consonants /p/, /b/, /m/, /f/, and /v/, receiving the highest scores on the scale. Taking all criteria together, we arrived at a final pool of 2,568 words. An additional 68 words that did not meet the selection criteria were added because of their high functional utility (e.g., “blue”, “March”, “chair”, “Monday”). Each word contained between one and four syllables (Mean = 1.42) and between one and twelve phonemes (Mean = 4.09).
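As an illustration of what coding for an initial consonant blend involves, the following sketch flags a word whose first two phonemes are both consonants. It assumes that each word is available as a list of ARPAbet phoneme symbols (with optional stress digits); this representation, and the function name, are assumptions made for the example and are not a description of how the IMITATE stimuli were actually coded.

```python
# ARPAbet vowel symbols (stress digits removed); any other symbol is treated
# as a consonant. The transcription format is an assumption of this sketch.
ARPABET_VOWELS = {
    "AA", "AE", "AH", "AO", "AW", "AY", "EH", "ER",
    "EY", "IH", "IY", "OW", "OY", "UH", "UW",
}

def has_initial_blend(phonemes):
    """Return True if the word begins with two or more consonant phonemes."""
    stripped = [p.rstrip("012") for p in phonemes]  # drop stress markers
    if len(stripped) < 2:
        return False
    return all(p not in ARPABET_VOWELS for p in stripped[:2])

print(has_initial_blend(["B", "L", "UW1"]))   # "blue"
print(has_initial_blend(["CH", "EH1", "R"]))  # "chair"
```

Note that working from phonemes rather than letters matters: "chair" begins with two consonant letters but a single consonant phoneme, so it is not counted as a blend.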

In addition to the words, the stimulus set included 405 phrases. We chose phrases that were commonly used and had high functional utility for people with aphasia (e.g., “sit down”, “watch out”, “nice to see you”, “please pass the salt”). Phrases were drawn from a wide variety of English language textbooks and travel guides, as well as from our own intuition. Each phrase was coded for number of words and syllables, and verb and preposition (if applicable) frequency. Each phrase contained between two and nine syllables (Mean = 4.03) and between two and five words (Mean = 3.36).

Treatment Levels

IMITATE was designed to be appropriate for a range of speech and language deficit severities. Consistent with principles of incremental learning, a key element of IMITATE involves increasing the complexity of stimuli presented at each level. Ideally, patients advance to the next complexity level with each week of therapy. Furthermore, patients begin treatment at the level most suited to the severity of their language and/or motor speech deficit. Because we sought to include individuals with varying degrees of aphasia severity, we developed twelve unique treatment levels, even though patients only perform six weeks of therapy. In doing so, a patient with severe aphasia and apraxia of speech, for example, could begin treatment at level 1, advancing through level 6, while a patient with less severe deficits could begin at level 7 and advance through level 12.
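The ideal progression described above, one level per week over six weeks from a severity-appropriate starting level, amounts to simple arithmetic. The sketch below is illustrative only: the function name is hypothetical, and in practice the clinician assigns each week's level, so actual progressions may differ from this idealized schedule.

```python
def weekly_levels(start_level, weeks=6, max_level=12):
    """Idealized schedule: advance one treatment level per week of therapy."""
    if not 1 <= start_level <= max_level:
        raise ValueError("start_level must be between 1 and max_level")
    # Cap at the highest level in case a patient starts above Level 7.
    return [min(start_level + week, max_level) for week in range(weeks)]

print(weekly_levels(1))  # [1, 2, 3, 4, 5, 6]
print(weekly_levels(7))  # [7, 8, 9, 10, 11, 12]
```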

Different sets of stimulus selection parameters defined the twelve distinct levels for observation-imitation treatment, including familiarity, part of speech, visibility, syllable length, and phonemic complexity (the presence of an initial consonant blend in a word). We also included a pool of high utility words to be incorporated across levels regardless of complexity, due to their highly useable, contextual nature. In addition, we defined the selection of familiar phrases based on syllable and word length. Once we had selected the appropriate parameters, we needed to determine a method for gradually increasing the complexity of treatment levels with objective measures. It also became necessary to limit the number of parameters used to define treatment levels in order to maintain a large enough sample of words from which to draw.

Word Selection

Table 2 outlines the delineation of parameters for words across the twelve treatment levels in terms of the following objective measures: familiarity (Nusbaum et al., 1984), part of speech, front visibility score, average visibility, syllable length, and presence of a blended phoneme. Level 1 was intended for participants with severe aphasia, while Level 12 was created for participants with a milder presentation.

TABLE 2.

Delineation of parameters for words across treatment levels: Familiarity (FAM); percent of nouns, verbs, and other parts of speech; front visibility score (FV) of 4 (high) or 3 (low); syllable length (LS) of 1, 2, 3, or 4; and percent of blended phonemes (BL)

| Level | FAM | % Nouns | % Verbs | % Other | % FV4 | % FV3 | Avg FV score | % LS1 | % LS2 | % LS3 | % LS4 | % BL |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 620 | 80 | 20 | 0 | 100 | 0 | | 95 | 5 | | | |
| 2 | 600 | 70 | 20 | 10 | 95 | 5 | | 90 | 10 | | | |
| 3 | 580 | 65 | 25 | 10 | 85 | 15 | | 85 | 15 | | | |
| 4 | 570 | 60 | 25 | 15 | 75 | 25 | | 75 | 25 | | | 5 |
| 5 | 560 | 50 | 25 | 25 | 70 | 30 | | 60 | 40 | | | 10 |
| 6 | 550 | 40 | 30 | 30 | 60 | 40 | | 50 | 50 | | | 15 |
| 7 | 540 | | | | | | 3.5 | 40 | 50 | 10 | | 20 |
| 8 | 530 | | | | | | 3.5 | 30 | 50 | 10 | 10 | 20 |
| 9 | 520 | | | | | | 3.5 | 30 | 40 | 20 | 10 | 20 |
| 10 | 510 | | | | | | 3.0 | 25 | 35 | 30 | 10 | 25 |
| 11 | 500 | | | | | | 2.9 | 20 | 30 | 40 | 10 | 30 |
| 12 | 500 | | | | | | 2.8 | 15 | 25 | 50 | 10 | 40 |

The treatment gradually increases in the complexity of presented words and phrases from Levels 1 to 12. Although determination of parameters was initially made on theoretical grounds, some redefinition was required based on the experience of several clinicians and individuals with aphasia who tested the program for us. Thus a bit of trial and error was ultimately used to refine the stimulus sets for each level. We required that words at Level 1 have the highest level of familiarity possible (FAM=620) without compromising the size of the pool of words. We also wanted Level 1 to contain a preponderance of nouns (80%), all of which had high front visibility (score=4). Level 2 words were relatively similar to Level 1, incorporating words that were highly familiar and visible, but this level gradually introduces a greater percentage of verbs and other parts of speech. Word length was also a key component for delineation of treatment levels. At Levels 1 and 2, the majority of words presented in observation-imitation treatment were one syllable in length. Levels 1 through 3 did not contain words with initial consonant blends, though blends were gradually introduced at Level 4 (5%). Short phrases were introduced at Level 3 and increased gradually in syllable and word length with treatment level.

There is a clear distinction in complexity between Levels 1 through 6 and Levels 7 through 12. By Level 7, greater syllable length and presence of an initial consonant blend became a more essential consideration for selection than part of speech. The issue of initial phoneme visibility was also less vital in the higher treatment levels, as visuomotor information gradually becomes more automatic for the patients. Words at Levels 7 through 12 thus were selected based on their average visibility, with slightly decreasing gradation at each level. By Level 12, the most complex treatment level for our observation-imitation therapy, 50% of the words available for presentation are three syllables in length, while 40% of the available words contain an initial blended consonant cluster. In Levels 8 through 12, 10% of the words available are four syllables. Increasing the proportion of four-syllable words further would have narrowed the potential pool of words from which to draw and compromised the variety of words we wished to maintain.
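To make the graded increase in word length concrete, the sketch below encodes the syllable-length percentages reported for each level in Table 2 and uses them to draw a target syllable count. The data structure and function names are hypothetical, and treating blank table cells as 0% is an assumption of this example; the sketch is a reading of the table, not the authors' implementation.

```python
import random

# Percent of words with 1, 2, 3, and 4 syllables at each level, as read from
# the % LS1 to % LS4 columns of Table 2 (blank cells assumed to mean 0).
SYLLABLE_MIX = {
    1: (95, 5, 0, 0), 2: (90, 10, 0, 0), 3: (85, 15, 0, 0),
    4: (75, 25, 0, 0), 5: (60, 40, 0, 0), 6: (50, 50, 0, 0),
    7: (40, 50, 10, 0), 8: (30, 50, 10, 10), 9: (30, 40, 20, 10),
    10: (25, 35, 30, 10), 11: (20, 30, 40, 10), 12: (15, 25, 50, 10),
}

def sample_syllable_length(level, rng):
    """Draw a target syllable count (1 to 4) using the level's percentages."""
    return rng.choices((1, 2, 3, 4), weights=SYLLABLE_MIX[level], k=1)[0]

def mean_syllables(level):
    """Expected syllable count per word implied by the level's percentages."""
    mix = SYLLABLE_MIX[level]
    return sum(n * pct for n, pct in zip((1, 2, 3, 4), mix)) / 100

rng = random.Random(0)
print(sample_syllable_length(12, rng))
print(mean_syllables(1), mean_syllables(12))
```

Under these percentages the expected word length rises from about 1.05 syllables at Level 1 to about 2.55 syllables at Level 12.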

Phrase Selection

The selection algorithm also included specific criteria for the composition of phrases, as well as for the relative proportion of words to phrases across treatment levels. Phrases are minimally introduced at Level 3, whereas by Levels 11 and 12, 45 and 50 percent, respectively, of the treatment sessions consist of phrases. Phrase and syllable length were the key considerations rather than grammatical complexity. Table 3 outlines the syllable and word length constraints assigned to each treatment level.

TABLE 3.

Delineation of parameters for phrases across levels based on minimum (Min) and maximum (Max) number of syllables and words

| Level | Syllable Min | Syllable Max | Words Min | Words Max |
|---|---|---|---|---|
| 3–4 | 2 | 2 | | |
| 5–6 | 3 | 3 | | |
| 7–8 | 4 | 4 | | |
| 9 | 4 | 6 | 4 | 5 |
| 10 | 4 | 7 | 4 | 6 |
| 11 | 5 | 8 | | |
| 12 | 6 | 9 | | |

Phrases used at Levels 3–4 and 5–6 contained between two and three words each, whereas phrases at the highest treatment levels were the longest. While the maximum number of words in a given phrase is six, the maximum number of syllables per phrase reaches nine by Level 12. It was important to constrain phrases by syllable length at the higher levels. For example, a Level 12 phrase like “directory assistance” is only two words but seven syllables, an appropriate level of complexity for the most difficult treatment period.
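The way syllable and word limits jointly constrain phrase eligibility can be sketched as a simple range check. The example covers only Levels 9, 10, and 12, and it reads the Level 12 row of Table 3 as a syllable range of six to nine with no word limit, which is our interpretation based on the “directory assistance” example. The class and function names are hypothetical, and syllable and word counts are supplied by hand rather than computed.

```python
from typing import NamedTuple, Optional

class PhraseRule(NamedTuple):
    syl_min: int
    syl_max: int
    words_min: Optional[int] = None  # None means no word-count constraint
    words_max: Optional[int] = None

# Constraints as read from Table 3 (Level 12 assumed to be syllable-only).
RULES = {
    9: PhraseRule(4, 6, 4, 5),
    10: PhraseRule(4, 7, 4, 6),
    12: PhraseRule(6, 9),
}

def fits_level(n_syllables, n_words, level):
    """Check whether a phrase satisfies the length constraints for a level."""
    rule = RULES[level]
    if not rule.syl_min <= n_syllables <= rule.syl_max:
        return False
    if rule.words_min is not None and n_words < rule.words_min:
        return False
    if rule.words_max is not None and n_words > rule.words_max:
        return False
    return True

# "directory assistance": seven syllables, two words.
print(fits_level(7, 2, level=12))  # True
print(fits_level(7, 2, level=9))   # False: too many syllables, too few words
```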

In terms of the relative proportion of phrases to words across treatment levels, we established that the proportion of phrases should increase with treatment level. While Levels 1 and 2 did not include phrases, Level 12 had a higher proportion of phrases than words, 50 percent and 40 percent respectively. From Level 3 to Level 12, the proportion of phrases increased by five percentage points with each level, while the proportion of words decreased by the same amount, as shown in Table 4.

TABLE 4.

Proportion of high utility words, all words, and all phrases across treatment levels

| Level | % High Utility Words | % Words | % Phrases |
|---|---|---|---|
| 1 | 10 | 90 | 0 |
| 2 | 10 | 90 | 0 |
| 3 | 10 | 85 | 5 |
| 4 | 10 | 80 | 10 |
| 5 | 10 | 75 | 15 |
| 6 | 10 | 70 | 20 |
| 7 | 10 | 65 | 25 |
| 8 | 10 | 60 | 30 |
| 9 | 10 | 55 | 35 |
| 10 | 10 | 50 | 40 |
| 11 | 10 | 45 | 45 |
| 12 | 10 | 40 | 50 |
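The proportions in Table 4 follow a simple rule: high utility words are fixed at 10%, phrases start at 5% at Level 3 and rise by five percentage points per level, and the remainder is ordinary words. The sketch below reproduces the table from that rule; the function name and the dictionary keys are hypothetical and are used only for illustration.

```python
def session_mix(level):
    """Percentages of high utility words, other words, and phrases at a level."""
    if not 1 <= level <= 12:
        raise ValueError("level must be between 1 and 12")
    utility = 10                        # constant across all levels
    phrases = max(0, 5 * (level - 2))   # 0% at Levels 1-2, then +5 per level
    words = 100 - utility - phrases     # remainder is ordinary words
    return {"utility_words": utility, "words": words, "phrases": phrases}

print(session_mix(3))   # {'utility_words': 10, 'words': 85, 'phrases': 5}
print(session_mix(12))  # {'utility_words': 10, 'words': 40, 'phrases': 50}
```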

Utility Word Selection

We coded a total of 230 stimuli as utility words, defined as words with high functional utility to people with aphasia. Examples of high utility words include colors, numbers, common household objects, days of the week, and months. This set included 68 words not in the original set, which were added to the original observation-imitation treatment word pool because of their high functional utility. The addition of high utility words speaks to one of the fundamental goals of IMITATE, the need for ecologically valid stimuli. High utility words, including words that are both frequent and helpful in everyday language, are incorporated within each level of treatment, regardless of complexity level. Therefore, as shown in Table 4, high utility words comprise 10% of each of the therapy levels.

Table 5 provides some sample words and phrases for the twelve IMITATE treatment levels. The sample words and phrases illustrate the range of stimuli and carefully graded complexity levels that comprise a key component of the program development. Note that these words and phrases are examples only. The algorithm makes random selections from the database, guided by the parameter specifications for the particular level, but with a variable component, such that stimuli from outside these parameter settings will occasionally be selected. Thus, a Level 1 session commonly will include a phrase (or even two phrases) and a Level 12 session will commonly include one or more simpler words and phrases.

TABLE 5.

Sample words and phrases across all twelve IMITATE treatment levels

| Treatment Level | Sample Words | Sample Phrases |
|---|---|---|
| 1 | man, pie, moon, bed, meat | N/A |
| 2 | pear, matter, buy, mouth, food | N/A |
| 3 | mile, peer, voice, pear, outside, four | run over, come in, pull through, plan for |
| 4 | church, die, side, deep, pause, beam | point out, give in, stand up, take off |
| 5 | pocket, repair, famous, jaw, choose | call in, see you soon, stressed out, hear about |
| 6 | greatly, bell, tube, van, machine, blush | find out, one more time, figure out, go for it |
| 7 | market, smart, comfort, further, foolish, strain | raise your hand, come off, text message, don’t pout |
| 8 | former, danger, motor, admire, stranger, shrimp | take care of, wait a second, dressing room, wait a minute |
| 9 | thunder, preaching, loose, show, welfare, division | it rained all night, close your eyes, have a safe trip |
| 10 | teacher, ceiling, military, committee, literature | I need exact change, fix the heater, the town is very small, it is Memorial Day |
| 11 | officer, reaction, medicine, prize, utter | hand me my ID card, a glass of orange juice, Please accept my apology, may I leave a message? |
| 12 | principle, highway, empire, determine, medicine | directory assistance, he went to the museum, the student produced poor work, Could you fill this prescription? |

Audiovisual Recordings

Six versions of each audiovisual stimulus were recorded, with each lexical or phrasal stimulus spoken by six different individuals of varying ages and ethnicities. All six speakers were native speakers of Standard American English. These included a Caucasian male in his 70’s, a Caucasian female in her 50’s, an Asian-American male in his 20’s, a Caucasian female in her 30’s, an African-American female in her 70’s, and an African-American male in his 70’s. Only the speaker’s upper body and head were recorded, and the hands were specifically excluded from the recordings. Each speaker was centered in the frame for all stimuli.

The speakers were instructed to say the words and phrases as they would occur in everyday language. Speakers were told to start and end each clip with the mouth closed, looking directly at the camera. It was very important that the stimuli be as ecological as possible. For each stimulus, after the speaker appears, there is a brief delay before the initiation of speech, followed by production of the word or phrase and finally a brief delay after the speaker has completed voicing of the word or phrase. Each stimulus is between approximately 1.5 and 3 seconds in duration, with words a bit shorter (~1.5 to 2.5 seconds) than phrases (~2.5 to 3.0 seconds).

Software Details

The IMITATE computer system consists of three platform-independent JAVA programs: (a) the Imitate Configuration Utility, which specifies the therapy program in terms of parameter settings and treatment levels; (b) Imitate Therapy, which presents the stimuli to the user with aphasia; and (c) the Imitate Session Viewer, which manages individual patient sessions by setting treatment levels and collecting and managing response data. The IMITATE system was designed to be versatile: the configuration utility simplifies therapy specification so that it can be tailored to individual users’ needs; the therapy program has a simple user interface, designed specifically for individuals with aphasia of varying severities; and the session viewer allows the researcher to easily manage the data collected during the therapy sessions.

IMITATE Utility Programs

The configuration utility is generally not intended to be used by clinicians, since the therapy program is specified in advance, as described previously. In principle, it is possible to change the definitions of levels and stimulus selection criteria for each patient, but in practice, changes should only be made for particular groups of patients. We use the same treatment specification for all patients in the current research study evaluating this system.

The session viewer allows setting the configuration for a single therapy session or a series of sessions. Each session is associated with a week number and a day number. The week number corresponds to a complexity level and therefore ranges from 1 to 12. The day number specifies the day during the week in which the session configuration will be used and potentially can range from 1 to 7.

Once treatment begins, the clinician assigns a level of therapy (1 – 12) to a subject for the upcoming week in the session viewer utility. The session viewer also permits viewing and manipulating the data collected during the sessions. The system will automatically chart trends about the therapy sessions over the course of a week or all of the defined weeks. The trends program, for example, can illustrate, with a simple line graph, the increase in phonemic complexity of the stimuli presented to a user throughout the six weeks of treatment.

IMITATE Therapy Program

The therapy application was designed to be as easy to use for participants as possible, with minimal training. The program resides on a Macintosh laptop computer, which is loaned to the patient for the duration of the therapy. The therapy application automatically starts when the laptop is turned on, and the laptop automatically shuts down when the therapy program exits. The application can be controlled using only the spacebar; the subject is prompted to press the spacebar to start the program, and again to proceed after each stimulus is presented and the response period for the stimulus has elapsed. When the laptop is started the first time during a therapy week, the first therapy session of the week will begin. When a subject completes a therapy session, the application will record that fact, exit, and shut down the laptop. The next time the patient turns on the laptop, the next session will automatically begin. If the subject turns on the computer after completing all therapy sessions, the application will indicate this and, after a short period, exit and shut down the laptop.

A subject can exit the application at any time by hitting the Escape key. The application will also exit if the subject does not respond for a pre-determined period of time after a response period has ended. In either case, the laptop will shut down, and the subject will need to repeat the session in which he or she was participating.
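The sequencing rules described above (the next pending session starts automatically, and an interrupted session must be repeated) can be sketched as follows. This is a minimal illustration under stated assumptions: `next_session` and `run_session` are hypothetical names, and sessions are assumed to be numbered from 1 within a week.

```python
from typing import Optional


def next_session(total_sessions: int, completed: set[int]) -> Optional[int]:
    """Return the lowest-numbered session not yet completed, or None if all are done."""
    for number in range(1, total_sessions + 1):
        if number not in completed:
            return number
    return None


def run_session(number: int, completed: set[int], finished: bool) -> None:
    """Record a session only if it ran to the end.

    A session ended early (Escape key or inactivity timeout) is not recorded,
    so the same session is offered again at the next start-up.
    """
    if finished:
        completed.add(number)
```

For example, with 18 sessions in a trial week, an aborted first session leaves `next_session(18, completed)` returning 1 again, whereas a finished one advances it to 2. A return value of `None` corresponds to the case in which all sessions for the week are complete.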

While the application is running, it will capture an audio/video recording of the subject participating in the therapy. These recordings can be viewed by the therapist later in the session viewer and analyzed to determine whether the subject is participating fully in the therapy. These recordings can also be used for research purposes.

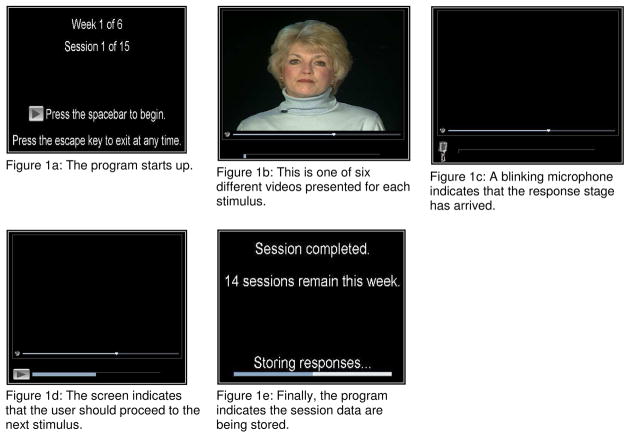

When the therapy application starts, a screen appears indicating the week and session in which the user is participating and reminding the user how to start and exit the application (Figure 1a). To begin, the user needs only to press the spacebar, and the therapy application presents the videos for the first stimulus (Figure 1b). After the videos for each stimulus have been presented, the video section of the screen goes blank, and a microphone icon appears and blinks for a few seconds (Figure 1c), prompting the user to respond by repeating the stimulus word or phrase as many times as he or she can. After the configured period of time, the proceed icon appears, prompting the user to press the spacebar to proceed to the next stimulus (Figure 1d).

Figure 1.

Screen shots of the IMITATE therapy program.

1a. The program starts up.

1b. This is one of six different videos presented for each stimulus.

1c. A blinking microphone indicates that the response stage has arrived.

1d. The screen indicates that the user should proceed to the next stimulus.

1e. Finally, the program indicates the session data are being stored.

A progress bar indicates the percentage of the session's total allocated stimulus/response time that the user has completed thus far. When the “proceed” icon appears, the progress bar is updated. If a user does not press the spacebar to proceed after a preset wait time, an audio clip is played saying, “Please push the spacebar to continue.” If the user still does not press the spacebar within the configured time, the application exits. After completion of all stimuli for a session, an end screen appears indicating the end of the session. A progress bar appears while the user’s responses are being stored and analyzed (Figure 1e). After all the responses have been processed, the progress bar is replaced with an “Exiting…” message, and the application automatically exits and shuts down the computer.
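The progress and time-out behavior described above can be sketched in a few lines. The function names and the threshold parameters (`prompt_after`, `exit_after`) are hypothetical; the article states only that the wait times are preset and configurable, not what their values are.

```python
def progress_percent(elapsed_seconds: float, allocated_seconds: float) -> float:
    """Percentage of the session's allocated stimulus/response time used so far."""
    if allocated_seconds <= 0:
        raise ValueError("allocated_seconds must be positive")
    # Cap at 100 so the bar never overshoots the end of the session.
    return min(100.0, 100.0 * elapsed_seconds / allocated_seconds)


def idle_action(idle_seconds: float, prompt_after: float, exit_after: float) -> str:
    """Decide what to do while waiting for the spacebar after a response period.

    prompt_after and exit_after are assumed, configurable thresholds in seconds,
    with exit_after greater than prompt_after.
    """
    if idle_seconds >= exit_after:
        return "exit"         # the application exits
    if idle_seconds >= prompt_after:
        return "play_prompt"  # play the spoken reminder to press the spacebar
    return "wait"
```

With a 30-minute session, `progress_percent(900, 1800)` gives 50.0 at the halfway point.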

IMITATE Clinical Trial

We are currently conducting a randomized clinical trial evaluating the efficacy of the computer-assisted IMITATE treatment approach. For the purposes of the trial, subjects are required to work with the program at home for three 30-minute sessions per day, six days a week (i.e., 9 hours/week), for a period of six weeks. The first of the 18 weekly sessions is completed with the therapist during the participant’s weekly visit. We concluded that six weeks was sufficient treatment time to facilitate clinical changes without sacrificing compliance. While the dosage of therapy is in itself intensive, the treatment design and the mechanism by which stimuli are presented also provide opportunities for massed practice. The levels were designed with the intention that participants could move through six levels during the six-week treatment period. However, a participant could also repeat one or more treatment levels for an additional week if the clinician judged it to be necessary.
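The dosage figures above follow from simple arithmetic, worked through here for reference. The constant names are illustrative, and the six-week total is a derived figure that assumes full compliance; it is not reported in the article.

```python
SESSIONS_PER_DAY = 3       # from the text
MINUTES_PER_SESSION = 30   # from the text
DAYS_PER_WEEK = 6          # from the text
TREATMENT_WEEKS = 6        # from the text

minutes_per_day = SESSIONS_PER_DAY * MINUTES_PER_SESSION  # 90 minutes/day
sessions_per_week = SESSIONS_PER_DAY * DAYS_PER_WEEK      # 18 sessions/week
hours_per_week = minutes_per_day * DAYS_PER_WEEK / 60     # 9.0 hours/week

# Derived total, assuming every scheduled session is completed.
total_hours = hours_per_week * TREATMENT_WEEKS            # 54.0 hours
```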

For the IMITATE trial, participants are randomly assigned to either the IMITATE therapy or a control therapy. The control therapy is similar to the IMITATE therapy in many respects. However, when participants hear a word or phrase, instead of seeing the full audiovisual clip of the word or phrase, they see only a static image of each speaker and are asked to repeat one word or phrase at a time. In the static images, speakers are shown with the mouth closed, looking directly at the camera. The same single static image is used for each speaker, regardless of the word or phrase that he or she is saying (i.e., there is not a different static image for each speaker for each word or phrase).

Table 6 outlines the treatment protocol for the current study. The protocol timeline, which is the same for both the experimental and control groups, includes two pre-treatment baseline assessments, six weeks of computer-assisted therapy, a post-treatment assessment, and a six-week follow-up assessment.

TABLE 6.

Treatment Protocol Timeline

| Phase | Week | Activity |
|---|---|---|
| Baseline Assessment | −6 | Baseline assessment of speech, language, and cognition; neurological screening |
| Pre-treatment Assessment | 0 | Pre-treatment assessment; computer training on use of program and completion of practice log |
| Treatment | 1–6 | Home practice 90 minutes/day, 6 days/week |
| Post-treatment Assessment | 6 | Post-treatment assessment of speech and language |
| Follow-up Assessment | 12 | Follow-up assessment of speech, language, and quality of life |

Our primary language outcome measure is the Aphasia Quotient of the Western Aphasia Battery (Kertesz, 1982). Secondary speech and language outcome measures include selected subtests from the Apraxia Battery for Adults (Dabul, 2000), the Boston Naming Test (Kaplan, Goodglass, & Weintraub, 1983), and the “cookie theft” picture description task from the Boston Diagnostic Aphasia Examination (Goodglass & Kaplan, 1972; Goodglass, Kaplan, & Barresi, 2000). In addition, participants undergo neurological and cognitive screening, including the Spatial Span subtest of the Wechsler Memory Scale (Wechsler, 1997), three subtests of the Behavioral Inattention Test (Wilson, Cockburn, & Halligan, 1987), the National Institutes of Health Stroke Scale (Pallicino, Snyder, & Granger, 1992), and the Mini-Mental State Examination (Folstein, Folstein, & McHugh, 1975). These measures serve not only to characterize participants but also to ensure sufficient visual attention, memory span, and language comprehension for participation in the treatment protocol. Baseline testing also includes the Center for Epidemiological Studies Depression Scale (Radloff, 1977) and a motor assessment using the Box and Block Test (Mathiowetz, Volland, Kashman, & Weber, 1985). Finally, the Stroke-Specific Quality of Life Test (Hilari, Byng, Lamping, & Smith, 2003; Williams, Weinberger, Harris, Clark, & Biller, 1999) is administered at baseline and follow-up to determine whether the treatment affects quality of life.

Although formal data analysis has yet to be completed, five participants with varying aphasia types and severities have completed the treatment protocol thus far. Each of the five participants learned the mechanics of the program easily after one initial training session with the speech-language pathologist. During the training, the speech-language pathologist occasionally needed to cue participants to press the spacebar (the only key required to control the application), either verbally or by highlighting the key with a colored sticker. Participants met weekly with the speech-language pathologist to ensure treatment was proceeding correctly and (if possible) to advance to the next treatment level. None of the participants reported problems with the computer’s automatic start-up and shut-down mechanisms. Although the program automatically tracks treatment sessions completed throughout the week, participants also recorded sessions completed on weekly practice logs. Four of the participants were fully compliant with the treatment schedule, completing the required sessions and successfully advancing a treatment level during each of the six weeks of treatment. The participant who was not fully compliant with the protocol completed only five of the six weeks of treatment. This participant reported frustration with the treatment tasks while the other four reported general satisfaction. Nevertheless, this participant advanced through four levels based on his severity level and compliance with the practice schedule (i.e., he repeated level 3 and level 4, performing each twice).

Summary and Conclusions

In this article, we describe the development of IMITATE, an innovative computer-assisted treatment for aphasia based on action observation and imitation. We describe in detail the development process, including the selection of lexical and phrasal stimuli and the delineation of stimuli across twelve treatment levels. In addition, we summarize the treatment approach, which consists of observation of audiovisual presentations of words and phrases followed by oral repetition of the stimuli, and offer depictions of both the user and clinician/administrator interface. Lastly, we describe briefly the randomized controlled clinical trial that is currently underway to evaluate the efficacy of IMITATE treatment.

Our goal was to create a novel treatment for aphasia that was supported by theoretical rationale and neuroanatomical and neurophysiologic data. First, we devised IMITATE to incorporate features of language treatment that are based on current knowledge in clinical, behavioral, and neural sciences: The therapy is provided at a high intensity, incorporates ecological stimuli, includes both stimulus and speaker variability, and presents graded incremental changes in stimulus complexity. Second, while aphasia recovery is accompanied by changes in brain physiology, few clinical treatments for aphasia are based on biological models. IMITATE, in contrast, was designed to stimulate the human parietal-frontal system for observation-execution matching, thought to be the homologue of the macaque mirror neuron system and to play a significant role in observational learning. In conclusion, IMITATE is a therapeutic approach, based on basic principles from neurophysiology, that we believe can play an important role in aphasia treatment and recovery. Results from the clinical trial will provide valuable information regarding treatment efficacy and the potential impact of biologically based aphasia interventions on brain physiology.

TABLE 1.

IMITATE: Important Therapeutic Features

| Therapeutic feature |
|---|
| Visual observation |
| Oral repetition |
| Speaker variability |
| High intensity |
| Ecological stimuli |
| Graded incremental learning |
| Variability in gradation |

Acknowledgments

This work was supported by the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health under Grant R01-DC007488. Their support is gratefully acknowledged. We thank Edna Babbitt for her input on treatment development, Howard Nusbaum for valuable discussions regarding the stimulus sets, and Ana Solodkin for advice on neuroanatomy and neurophysiology. Finally, we would like to thank Audrey Holland for her guidance, mentorship, and friendship over the past 20 years.

References

- Aichner F, Adelwohrer C, Haring HP. Rehabilitation approaches to stroke. Journal of Neural Transmission. Supplementum. 2002;63:59–73. doi: 10.1007/978-3-7091-6137-1_4.

- Basso A. Therapy for Aphasia in Italy. In: Holland AL, Forbes MM, editors. Aphasia Treatment: World Perspectives. San Diego, California: Singular Publishing Group; 1993. pp. 1–24.

- Bement L, Wallber J, DeFilippo C, Bochner J, Garrison W. A new protocol for assessing viseme perception in sentence context: the lipreading discrimination test. Ear and Hearing. 1988;9(1):33–40. doi: 10.1097/00003446-198802000-00014.

- Benson DF, Geschwind N. The Aphasias and Related Disturbances. In: Joynt R, editor. Clinical Neurology. Philadelphia: J. B. Lippincott Company; 1989. pp. 1–28.

- Bhogal SK, Teasell R, Speechley M. Intensity of aphasia therapy, impact on recovery. Stroke. 2003;34(4):987–993. doi: 10.1161/01.STR.0000062343.64383.D0.

- Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, Seitz RJ, Zilles K, Rizzolatti G, Freund HJ. Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. European Journal of Neuroscience. 2001;13(2):400–404.

- Buccino G, Lui F, Canessa N, Patteri I, Lagravinese G, Benuzzi F, Porro CA, Rizzolatti G. Neural circuits involved in the recognition of actions performed by nonconspecifics: an fMRI study. Journal of Cognitive Neuroscience. 2004a;16(1):114–126. doi: 10.1162/089892904322755601.

- Buccino G, Vogt S, Ritzl A, Fink GR, Zilles K, Freund HJ, Rizzolatti G. Neural circuits underlying imitation learning of hand actions: an event-related fMRI study. Neuron. 2004b;42(2):323–334. doi: 10.1016/s0896-6273(04)00181-3.

- Canseco-Gonzalez E, Shapiro LP, Zurif EB, Baker E. Predicate-argument structure as a link between linguistic and nonlinguistic representations. Brain and Language. 1990;39(3):391–404. doi: 10.1016/0093-934x(90)90147-9.

- Cherney LR, Halper AS, Holland AL, Cole R. Computerized script training for aphasia: preliminary results. American Journal of Speech-Language Pathology. 2008;17(1):19–34. doi: 10.1044/1058-0360(2008/003).

- Coltheart M. The MRC Psycholinguistic Database. Quarterly Journal of Experimental Psychology. 1981;33A:497–505.

- Crerar MA, Ellis AW, Dean EC. Remediation of sentence processing deficits in aphasia using a computer-based microworld. Brain and Language. 1996;52(1):229–275. doi: 10.1006/brln.1996.0010.

- Dabul BL. Apraxia Battery for Adults (ABA-2). 2nd ed. Austin, Texas: PRO-ED, Inc; 2000.

- Duffy JR. Motor Speech Disorders: Substrates, Differential Diagnosis, and Management. 1st ed. Philadelphia, PA: Mosby; 1995.

- Elman JL. Finding Structure in Time. Cognitive Science. 1990;14:179–211.

- Elman JL. Learning and Development in Neural Networks: The Importance of Starting Small. Cognition. 1993;48:71–99. doi: 10.1016/0010-0277(93)90058-4.

- Fadiga L, Craighero L, Buccino G, Rizzolatti G. Speech listening specifically modulates the excitability of tongue muscles: a TMS study. European Journal of Neuroscience. 2002;15(2):399–402. doi: 10.1046/j.0953-816x.2001.01874.x.

- Fadiga L, Fogassi L, Pavesi G, Rizzolatti G. Motor facilitation during action observation: a magnetic stimulation study. Journal of Neurophysiology. 1995;73(6):2608–2611. doi: 10.1152/jn.1995.73.6.2608.

- Fitch JL. Telecomputer treatment for aphasia. Journal of Speech and Hearing Disorders. 1983;48(3):335–336. doi: 10.1044/jshd.4803.335.

- Folstein MF, Folstein SE, McHugh PR. ‘Mini-Mental State’: A Practical Method for Grading the Cognitive State of Patients for the Clinician. Journal of Psychiatric Research. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Gallese V. A neuroscientific grasp of concepts: from control to representation. Philosophical Transactions of the Royal Society of London Series B: Biological Sciences. 2003;358(1435):1231–1240. doi: 10.1098/rstb.2003.1315.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119(Pt 2):593–609. doi: 10.1093/brain/119.2.593.

- Goodglass H. Understanding Aphasia. San Diego, California: Academic Press; 1993.

- Goodglass H, Kaplan E. The Assessment of Aphasia and Related Disorders. Philadelphia: Lea and Febiger; 1972.

- Goodglass H, Kaplan E, Barresi B. The Assessment of Aphasia and Related Disorders (Revised). Philadelphia: Lippincott Williams & Wilkins; 2000.

- Grawemeyer B, Cox R, Lum C. AUDIX: a knowledge-based system for speech-therapeutic auditory discrimination exercises. Studies in Health Technology and Informatics. 2000;77:568–572.

- Graziano MS, Taylor CS, Moore T, Cooke DF. The cortical control of movement revisited. Neuron. 2002;36(3):349–362. doi: 10.1016/s0896-6273(02)01003-6.

- Hilari K, Byng S, Lamping DL, Smith SC. Stroke and Aphasia Quality of Life Scale-39 (SAQOL-39): evaluation of acceptability, reliability, and validity. Stroke. 2003;34(8):1944–1950. doi: 10.1161/01.STR.0000081987.46660.ED.

- Huber W, Springer L, Willmes K. Approaches to Aphasia Therapy in Aachen. In: Holland AL, Forbes MM, editors. Aphasia Treatment: World Perspectives. San Diego, California: Singular Publishing Group; 1993. pp. 55–86.

- Iacoboni M. Neural circuitry of imitation: the human homologues of monkey STS, PF, and F5. Paper presented at Human Brain Mapping; 2003; New York, NY.

- Iacoboni M, Koski LM, Brass M, Bekkering H, Woods RP, Dubeau MC, Mazziotta JC, Rizzolatti G. Reafferent copies of imitated actions in the right superior temporal cortex. Proceedings of the National Academy of Sciences of the United States of America. 2001;98(24):13995–13999. doi: 10.1073/pnas.241474598.

- Iacoboni M, Woods RP, Brass M, Bekkering H, Mazziotta JC, Rizzolatti G. Cortical mechanisms of human imitation. Science. 1999;286(5449):2526–2528. doi: 10.1126/science.286.5449.2526.

- Kaplan E, Goodglass H, Weintraub S. Boston Naming Test. Philadelphia: Lea and Febiger; 1983.

- Katz RC, Wertz RT. The efficacy of computer-provided reading treatment for chronic aphasic adults. Journal of Speech, Language, and Hearing Research. 1997;40(3):493–507. doi: 10.1044/jslhr.4003.493.

- Kertesz A. The Western Aphasia Battery. New York: Grune and Stratton; 1982.

- Kertesz A, McCabe P. Recovery Patterns and Prognosis in Aphasia. Brain. 1977;100(1):1–18. doi: 10.1093/brain/100.1.1.

- Kiran S, Thompson CK. The role of semantic complexity in treatment of naming deficits: Training semantic categories in fluent aphasia by controlling exemplar typicality. Journal of Speech, Language, and Hearing Research. 2003;46:608–622. doi: 10.1044/1092-4388(2003/048).

- Kohler E, Keysers C, Umilta MA, Fogassi L, Gallese V, Rizzolatti G. Hearing sounds, understanding actions: action representation in mirror neurons. Science. 2002;297(5582):846–848. doi: 10.1126/science.1070311.

- Kucera H, Francis WN. Computational Analysis of Present Day American English. Providence, Rhode Island: Brown University Press; 1967.

- Lecours AR, Lhermitte F, Bryans B. Aphasiology. London, England: Baillière Tindall; 1983.

- Magnuson JS, Nusbaum HC. Acoustic differences, listener expectations, and the perceptual accommodation of talker variability. Journal of Experimental Psychology: Human Perception and Performance. 2007;33(2):391–409. doi: 10.1037/0096-1523.33.2.391.

- Mathiowetz V, Volland G, Kashman N, Weber K. Adult norms for the Box and Block Test of manual dexterity. American Journal of Occupational Therapy. 1985;39(6):386–391. doi: 10.5014/ajot.39.6.386.

- Merzenich MM, Jenkins WM, Johnston P, Schreiner C, Miller SL, Tallal P. Temporal processing deficits of language-learning impaired children ameliorated by training. Science. 1996;271(5245):77–81. doi: 10.1126/science.271.5245.77.

- Murata A, Fadiga L, Fogassi L, Gallese V, Raos V, Rizzolatti G. Object representation in the ventral premotor cortex (area F5) of the monkey. Journal of Neurophysiology. 1997;78(4):2226–2230. doi: 10.1152/jn.1997.78.4.2226.

- Naeser MA, Baker EH, Palumbo CL, Nicholas M, Alexander MP, Samaraweera R, Prete MN, Hodge SM, Weissman T. Lesion site patterns in severe, nonverbal aphasia to predict outcome with a computer-assisted treatment program. Archives of Neurology. 1998;55(11):1438–1448. doi: 10.1001/archneur.55.11.1438.

- Nusbaum HC, Pisoni DB, Davis C. Sizing up the Hoosier mental lexicon: Measuring the familiarity of 20,000 words (Research on Speech Perception Progress Report No. 10). Bloomington, Indiana: Speech Research Laboratory, Department of Psychology, Indiana University; 1984.

- Owens E, Blazek B. Visemes observed by hearing-impaired and normal-hearing adult viewers. Journal of Speech and Hearing Research. 1985;28(3):381–393. doi: 10.1044/jshr.2803.381.

- Pallicino P, Snyder W, Granger C. The NIH stroke scale and the FIM in stroke rehabilitation. Stroke. 1992;23(6):919.

- Radloff LS. The CES-D Scale: A self-report depression scale for research in the general population. Applied Psychological Measurement. 1977;1(3):385–401.

- Raymer AM, Beeson P, Holland A, Kendall D, Maher LM, Martin N, Murray L, Rose M, Thompson CK, Turkstra L, Altmann L, Boyle M, Conway T, Hula W, Kearns K, Rapp B, Simmons-Mackie N, Gonzalez Rothi LJ. Translational research in aphasia: from neuroscience to neurorehabilitation. Journal of Speech, Language, and Hearing Research. 2008;51(1):S259–275. doi: 10.1044/1092-4388(2008/020).

- Rizzolatti G, Arbib MA. Language within our grasp. Trends in Neurosciences. 1998;21(5):188–194. doi: 10.1016/s0166-2236(98)01260-0.

- Rizzolatti G, Arbib MA. From grasping to speech: imitation might provide a missing link: reply. Trends in Neurosciences. 1999;22(4):152. doi: 10.1016/s0166-2236(98)01389-7.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annual Review of Neuroscience. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Brain Research Cognitive Brain Research. 1996;3(2):131–141. doi: 10.1016/0926-6410(95)00038-0.

- Rizzolatti G, Fogassi L, Gallese V. Motor and cognitive functions of the ventral premotor cortex. Current Opinion in Neurobiology. 2002;12(2):149–154. doi: 10.1016/s0959-4388(02)00308-2.

- Robey RR. A meta-analysis of clinical outcomes in the treatment of aphasia. Journal of Speech, Language, and Hearing Research. 1998;41(1):172–187. doi: 10.1044/jslhr.4101.172.

- Romanski LM, Goldman-Rakic PS. An auditory domain in primate prefrontal cortex. Nature Neuroscience. 2002;5(1):15–16. doi: 10.1038/nn781.

- Skipper JI, Goldin-Meadow S, Nusbaum HC, Small SL. Speech-associated gestures, Broca’s area, and the human mirror system. Brain and Language. 2007;101(3):260–277. doi: 10.1016/j.bandl.2007.02.008.

- Skipper JI, Nusbaum HC, Small SL. Lending a helping hand to hearing: A motor theory of speech perception. In: Arbib MA, editor. Action To Language via the Mirror Neuron System. Cambridge: Cambridge University Press; 2006. pp. 250–286.

- Skipper JI, van Wassenhove V, Nusbaum HC, Small SL. Hearing lips and seeing voices: how cortical areas supporting speech production mediate audiovisual speech perception. Cerebral Cortex. 2007;17(10):2387–2399. doi: 10.1093/cercor/bhl147.

- Small SL. A Biological Model of Aphasia Rehabilitation: Pharmacological Perspectives. Aphasiology. 2004a;18(5/6/7):473–492.

- Small SL. Therapeutics in cognitive and behavioral neurology. Annals of Neurology. 2004b;56(1):5–7. doi: 10.1002/ana.20194.

- Steele R, Weinrich M, Wertz RT, Kleczewska MK, Carlson GS. Computer-Based Visual Communication in Aphasia. Neuropsychologia. 1989;27(4):409–426. doi: 10.1016/0028-3932(89)90048-1.

- Sutton RS, Barto AG. Reinforcement Learning: An Introduction. Cambridge, MA: MIT Press; 1998.

- Tallal P, Miller SL, Bedi G, Byma G, Wang X, Nagarajan SS, Schreiner C, Jenkins WM, Merzenich MM. Language comprehension in language-learning impaired children improved with acoustically modified speech. Science. 1996;271(5245):81–84. doi: 10.1126/science.271.5245.81.

- Taub E, Crago JE, Burgio LD, Groomes TE, Cook EW, 3rd, DeLuca SC, Miller NE. An operant approach to rehabilitation medicine: overcoming learned nonuse by shaping. Journal of the Experimental Analysis of Behavior. 1994;61(2):281–293. doi: 10.1901/jeab.1994.61-281.

- Tettamanti M, Buccino G, Saccuman MC, Gallese V, Danna M, Scifo P, Fazio F, Rizzolatti G, Cappa SF, Perani D. Listening to Action-related Sentences Activates Fronto-parietal Motor Circuits. Journal of Cognitive Neuroscience. 2005;17(2):273–281. doi: 10.1162/0898929053124965.

- Tomasello M, Savage-Rumbaugh S, Kruger AC. Imitative learning of actions on objects by children, chimpanzees, and enculturated chimpanzees. Child Development. 1993;64(6):1688–1705.

- Turkstra LS, Holland AL, Bays GA. The neuroscience of recovery and rehabilitation: what have we learned from animal research? Archives of Physical Medicine and Rehabilitation. 2003;84(4):604–612. doi: 10.1053/apmr.2003.50146.

- Wechsler D. Wechsler Memory Scale-III. San Antonio, Texas: The Psychological Corporation; 1997.

- Weinrich M. Computer rehabilitation in aphasia. Clinical Neuroscience. 1997;4(2):103–107.

- Weinrich M, McCall D, Boser KI, Virata T. Narrative and procedural discourse production by severely aphasic patients. Neurorehabilitation and Neural Repair. 2002;16(3):249–274. doi: 10.1177/154596802401105199.

- Weinrich M, Shelton JR, Cox DM, McCall D. Remediating production of tense morphology improves verb retrieval in chronic aphasia. Brain and Language. 1997;58(1):23–45. doi: 10.1006/brln.1997.1757.

- Weinrich M, Shelton JR, McCall D, Cox DM. Generalization from single sentence to multisentence production in severely aphasic patients. Brain and Language. 1997;58(2):327–352. doi: 10.1006/brln.1997.1759.

- Williams LS, Weinberger M, Harris LE, Clark DO, Biller J. Development of a stroke-specific quality of life scale. Stroke. 1999;30(7):1362–1369. doi: 10.1161/01.str.30.7.1362.

- Wilson B, Cockburn J, Halligan P. Development of a behavioral test of visuospatial neglect. Archives of Physical Medicine and Rehabilitation. 1987;68(2):98–102.

- Wong PC, Nusbaum HC, Small SL. Neural bases of talker normalization. Journal of Cognitive Neuroscience. 2004;16(7):1173–1184. doi: 10.1162/0898929041920522.