Abstract

Since its first discovery in the prefrontal cortex, persistent activity during the interval between a transient sensory stimulus and a subsequent behavioral response has been identified in many cortical and subcortical areas. Such persistent activity is thought to reflect the maintenance of working memory representations that bridge past events with future contingent plans. Indeed, the term persistent activity is sometimes used interchangeably with working memory. In this review, we argue that persistent activity observed broadly across many cortical and subcortical areas reflects not only working memory maintenance, but also a variety of other cognitive processes, including perceptual and reward-based decision making.

Properties of persistent activity

For decades now we have known that neuronal activity persists in the prefrontal cortex when the subject is in an active state of remembering a recent event [1–3], a process termed working memory [4,5]. Working memory allows animals to use information that is not currently available in the environment but is crucial for adaptive behavior. In other words, an internal representation of relevant information must be created and maintained until it can be used later to guide behavior.

Typical working memory experiments involve the brief presentation of a stimulus to be remembered, which is then followed by a short delay, or retention interval. Performance is probed by having the subject make a response that is contingent on successful maintenance of the memoranda. Neural activity in the prefrontal cortex persists during the delay period without being contaminated by sensory and motor processes, so it is thought to reflect the maintenance of a working memory representation [1–3].

Several key features of persistent activity suggest that it is a neural mechanism critical for working memory maintenance and presumably other high-level cognitive processes such as decision making. First, neural activity, measured by a variety of methodologies, such as single-neuron recording and neuroimaging, persists during the time epoch when a representation is thought to be maintained in an active state (e.g. when a stimulus needs to be remembered) [1–3,5–7]. Second, sustained neural activity dissipates when the representation is no longer needed (e.g. after a memory-guided response has been generated) [3,8,9]. Third, when activity does not persist throughout a retention interval, memory performance is compromised [3,10]. Fourth, persistent activity scales with working memory load. For example, as the number of items that need to be maintained increases, the level of delay period activity also increases until it plateaus near the limits of the individual's short-term memory capacity [11–13]. Fifth, persistent activity is selective, such that specific information about the contents of working memory can be decoded from the population activity [14–16]. For example, persistent activity is selective for the location of the memoranda during spatial working memory tasks [3,9] (Figure 1). Finally, although much of the relevant research has focused on the prefrontal cortex, persistent activity has been reported in many other cortical and subcortical areas [17,18], suggesting that its generation and maintenance might depend on a broadly distributed circuit mechanism (Box 1). In this review, we argue that the persistence of neural activity in the absence of external stimulation is the general mechanism by which internal representations are maintained in an active state. Moreover, these representations go beyond traditional notions of working memory and include variables useful for decision making [19,20].

Figure 1.

Persistent activity for spatial working memory. (a) Left, lateral view of the rhesus monkey brain. Right, spike density functions of a single neuron in the dorsolateral prefrontal cortex of a rhesus monkey during the delay period (gray background), with colors indicating the remembered location of the visual stimulus (see inset) briefly flashed during the cue period (yellow background). During the experiment, all peripheral stimuli were presented in the same color [20,48]. (b) Left, maintenance of a spatial location during a working memory task evoked BOLD activity in the frontal and parietal cortices, shown as a statistical map overlaid on the inflated cortex (sulci, dark gray; gyri, white). Top right, a BOLD signal persisted during a spatial working memory task in the dorsolateral prefrontal cortex (dotted circle in the left panel) and was greater for memoranda in the contralateral visual field (solid line) than for those in the ipsilateral field (dashed line). The yellow bar represents presentation of the sample cue and the gray background depicts the memory delay. Bottom right, time course of BOLD signals from the dorsolateral prefrontal cortex aligned on presentation of the sample cue during a spatial working memory task. Separate lines represent the different delay lengths (indicated by colored bars at the bottom). Importantly, persistent activity bracketed by the phasic BOLD signals increased in duration with the delay length and was sustained until the working memory representation could be used to guide the response [10].

Persistent activity and working memory

The development of neurobiological theories of working memory critically depends on the measurement of neural activity during the delay period. As a result, persistent activity has essentially become a proxy for working memory and the two are often used interchangeably. This is not surprising because persistent neural activity during delay periods seems to carry useful information about the features of the memoranda. Persistent activity arises while the subject maintains information about the spatial location [3,9,10,17,18,21,22], object identity [23–25], word [26], sound [27,28] or haptic sensation [29] of the memorandum. Theoretically, the short-term maintenance of such features is necessary for a variety of high-level cognitive functions because it facilitates temporal integration of past sensory events with future actions [5,30]. Importantly, working memory paradigms have been the test bed for studies addressing the neural mechanisms of persistent activity (Box 1).

Despite its logical fallibility, the reverse inference that the presence of persistent activity indicates the maintenance of a past stimulus continues to tempt researchers. The problem, however, is that persistent activity has been linked to several other cognitive processes in addition to working memory [6,31,32]. Furthermore, neural activity in the prefrontal cortex still persists and encodes the properties of visual stimuli even in the absence of working memory demands [33]. Persistent activity can also reflect not the maintenance of past events, but the anticipation of future ones. During an interval prior to or in preparation for an action, persistent activity is thought to represent the metrics or goal of a forthcoming planned action [34,35]. Similarly, persistent activity has been linked to stimuli that are anticipated but not yet encountered [25,36]. Therefore, persistent activity underlies the representation of retrospective, current and prospective information. Moreover, persistent activity can be observed in the prefrontal cortex during the active representation of more abstract information, such as rules, associations, categories and strategies [37–39]. Therefore, in addition to traditional notions of working memory representations, persistent activity can reflect a wider variety of cognitive processes, some of which are used for making decisions and to which we now turn our attention.

Persistent activity reflecting accumulation of sensory evidence

As described above, activity of individual neurons and blood-oxygen-level-dependent (BOLD) signals in multiple brain areas can be sustained during the delay period of a working memory task, and therefore might provide the substrate for working memory and storage of other behaviorally relevant information. It is often thought that such persistent activity might arise from a network of recurrently connected neurons [19]. However, persistent activity related to a past sensory event might also arise indirectly from the short-term changes in synaptic efficacy induced by transient neural activity [40,41] (Box 1). Regardless of its mechanism, such persistent activity observed during a working memory task can result from temporal integration of a transient input (Figure 2a). Thus, the neurons or networks displaying persistent activity during a working memory task might be involved in other cognitive processes that require temporal integration [19,42,43]. For example, neurons displaying persistent activity might be used to average out sensory noise during perceptual decision making (Figure 2b). According to the so-called random-walk models of decision making, a behavioral response is triggered when accumulated evidence reaches a certain threshold and therefore it takes more time to identify a weak noisy sensory stimulus than a salient stimulus. Consistent with this model, during a choice reaction time task, both the speed and accuracy of responses decrease as the stimuli become more difficult to discriminate [44]. Moreover, the same neurons in the prefrontal cortex and posterior parietal cortex that show persistent activity during a working memory task might contribute to the integration of sensory evidence because their activity tends to increase more slowly during the reaction time following presentation of a relatively weak stimulus [42,45]. Similarly, fMRI studies on perceptual decision-making tasks have also found an increase in BOLD activity in the prefrontal cortex and posterior parietal cortex when the stimulus can be more easily discriminated [46].
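The random-walk account described above can be illustrated with a short simulation. The following is only a minimal sketch under stated assumptions, not a model taken from the cited studies: evidence is a Gaussian sample added at each time step, the two decision bounds are symmetric, and the names and values (`drift`, `threshold`, `noise_sd`, the trial counts) are hypothetical choices made for illustration. A smaller `drift` stands in for a weaker, harder-to-discriminate stimulus.

```python
import random


def simulate_trial(drift, threshold=10.0, noise_sd=1.0, max_steps=10_000, rng=None):
    """Accumulate noisy evidence until one of two symmetric bounds is reached.

    Returns (choice, n_steps): choice is +1 for the bound favored by the
    stimulus, -1 for the opposite bound, and 0 if no bound was reached.
    """
    rng = rng or random.Random()
    evidence = 0.0
    for step in range(1, max_steps + 1):
        # Each step adds the mean signal (drift) plus zero-mean sensory noise.
        evidence += drift + rng.gauss(0.0, noise_sd)
        if evidence >= threshold:
            return 1, step
        if evidence <= -threshold:
            return -1, step
    return 0, max_steps


def summarize(drift, n_trials=2000, seed=0):
    """Return (accuracy, mean number of steps to a decision) for one drift."""
    rng = random.Random(seed)
    results = [simulate_trial(drift, rng=rng) for _ in range(n_trials)]
    accuracy = sum(1 for choice, _ in results if choice == 1) / n_trials
    mean_steps = sum(steps for _, steps in results) / n_trials
    return accuracy, mean_steps


# Hypothetical "weak" and "strong" stimuli (assumed drift values).
weak_accuracy, weak_steps = summarize(drift=0.05)
strong_accuracy, strong_steps = summarize(drift=0.5)
print(f"weak:   accuracy={weak_accuracy:.2f}, mean steps={weak_steps:.1f}")
print(f"strong: accuracy={strong_accuracy:.2f}, mean steps={strong_steps:.1f}")
```

Under these assumptions the weak stimulus produces slower and less accurate decisions than the strong one, which is the qualitative pattern the random-walk models are meant to capture.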

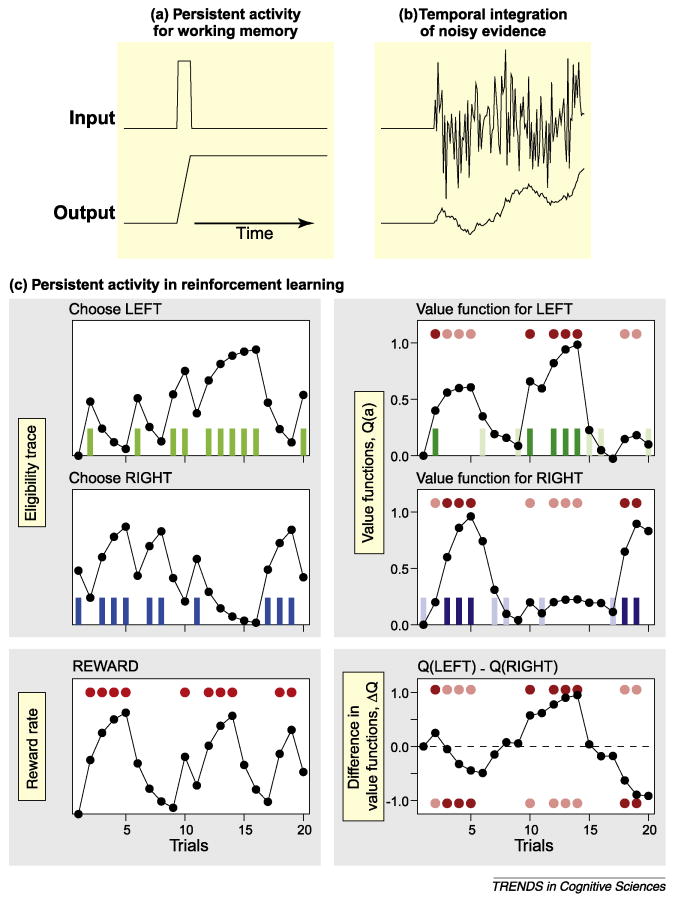

Figure 2.

Persistent activity resulting from temporal integration can subserve multiple types of cognitive processes, including working memory, accumulation of noisy sensory evidence, and the creation of eligibility traces and other computations in reinforcement learning. (a,b) Schematic illustration of (a) the time course of inputs leading to persistent activity during a working memory task and (b) buildup activity related to the accumulation of noisy sensory inputs. (c) Hypothetical signals related to the animal's actions (green and blue vertical bars for leftward and rightward actions, respectively) can be temporally integrated to generate eligibility traces (upper and middle left panels), whereas temporal integration of reward (red disks) can lead to signals related to average reward rate (bottom left panel). The value function for each action is incremented by the reward prediction error, namely, the discrepancy between the reward earned and the reward predicted from the current value function, weighted by its eligibility trace. As a result, reward delivered in a given trial increases the value functions according to the recency of each action (upper and middle right panels), even when it was not chosen in the last trial. Dark red disks and colored vertical bars indicate the trials in which the leftward or rightward action led to immediate reward. The difference in value functions for the two alternative actions is also shown [bottom right panel; dark red circles indicate the trials in which reward was obtained after choosing the leftward (top) and rightward (bottom) actions, respectively].

Persistent activity in reinforcement learning

In most neurophysiological experiments conducted in behaving animals, neural activity is recorded while the animal performs a particular behavioral task repeatedly in a large number of trials separated by short inter-trial intervals. As a result, properties of persistent activity have been largely studied in relation to behaviorally relevant events within a single trial. For example, persistent activity during the delay period of a working memory task has been viewed as the substrate of working memory for the items presented at the beginning of a trial [3,8–10]. Similarly, gradual changes in neural activity observed during a perceptual decision-making task have been linked to the process of evidence accumulation within a trial [19,42,45,46]. However, the network of neurons capable of temporal integration might be exploited in tasks in which the animal is required to integrate information about the events in multiple trials. Recently, this possibility has been tested during several behavioral tasks in which animals need to change their decision-making strategies based on their previous experience [20,47–51].

The process of decision making, namely, selecting a particular action among a number of alternative actions, is trivial if the outcomes of all the alternative actions are fully known and can be evaluated along a single dimension. Often, however, the outcomes of actions vary along multiple dimensions, such as the magnitude, probability and immediacy of reward expected from each action, and decision makers need to resolve potential conflicts to maximize the magnitude and probability of their reward and to obtain it as soon as possible. Nevertheless, as long as all the information about the expected outcomes is available, such conflicts can be resolved without any additional inputs from the environment. However, it is a much more challenging task to discover an optimal decision-making strategy in an unfamiliar and stochastic environment for which the probabilities of different outcomes from alternative actions are not known. In such cases, the desirability of each action needs to be estimated through experience. The framework of reinforcement learning theory [52] parsimoniously accounts for dynamic changes in the behaviors of humans and animals as they experience unpredictable outcomes [53–55]. In addition, the same framework has played an important role in elucidating cortical and subcortical mechanisms involved in evaluating the action outcomes and exploiting this information to optimize decision-making strategies [47–49,54–58].

In reinforcement learning theory, the entire history of previous actions and their outcomes is abstracted and represented by a set of quantities, referred to as value functions. The value function (Q in Figure 2c) is an estimate of future rewards expected from a particular state and action, and is continuously adjusted according to the animal's experience. Accordingly, decision makers can make choices at any moment simply by selecting the action with the maximum value function. Value functions are increased (decreased) when the outcome from a given action is better (worse) than expected. The discrepancy between the expected and actual outcomes is referred to as the reward prediction error and might be encoded by the activity of midbrain dopamine neurons [56]. In addition, neurons whose activity changes according to the value functions of specific actions have been identified in a number of brain areas, including the prefrontal cortex, posterior parietal cortex and basal ganglia [47–49,54,59–61].
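The value update described above can be sketched in a few lines. This is an illustrative sketch only, assuming a simple two-action task with binary rewards; the learning rate, the occasional random exploration (`epsilon`), the reward probabilities and all function names are assumptions introduced here, not details of the cited experiments.

```python
import random


def update_value(q, reward, learning_rate=0.05):
    """Return (new value, reward prediction error) after one outcome."""
    # Prediction error: actual outcome minus the outcome predicted by q.
    prediction_error = reward - q
    # The value moves up when the outcome is better than expected, down otherwise.
    return q + learning_rate * prediction_error, prediction_error


def run_two_action_task(reward_probs, n_trials=1000, epsilon=0.1, seed=1):
    """Choose mostly the action with the maximum value and learn from outcomes."""
    rng = random.Random(seed)
    q = {action: 0.0 for action in reward_probs}
    for _ in range(n_trials):
        if rng.random() < epsilon:
            action = rng.choice(list(q))   # occasional exploration (assumed)
        else:
            action = max(q, key=q.get)     # action with the maximum value
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        q[action], _ = update_value(q[action], reward)
    return q


# Hypothetical reward probabilities for the two actions.
values = run_two_action_task({"left": 0.3, "right": 0.7})
print(values)
```

Because each update is a weighted average of the old value and the latest reward, the value function summarizes the whole outcome history without storing it explicitly.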

As in working-memory and perceptual decision-making tasks, persistent activity has been observed in multiple brain areas during reward-based decision-making tasks. Activity related to value functions is often manifest as persistent activity [43,47–49,51,61] and therefore can be used to guide the selection of subsequent actions. Moreover, many of the key computations in reinforcement learning correspond to temporal integration and might be implemented in the form of persistent activity. For example, the outcomes from a particular action are often revealed to the animal after substantial delays. In some cases, the animal might execute multiple actions before receiving a reward or penalty. This makes it difficult to determine how the value functions should be adjusted for multiple actions, creating a problem of temporal credit assignment [52,62]. In the so-called temporal-difference (TD) learning algorithms, this problem is addressed by comparing the value functions of two successive states. Therefore, a particular action can produce a positive reward prediction error and increase its value function, even without receiving any reward immediately, if it allows the decision maker to move to a state with a higher value function than that for the current state. Although TD learning provides a solution to the problem of temporal credit assignment, the time course of reward prediction errors encoded by dopamine neurons does not match the pattern predicted by simple TD learning algorithms, suggesting that additional mechanisms for temporal credit assignment exist [63]. Indeed, some reinforcement learning algorithms rely on the memory of previous actions and states visited by the decision makers. These memory signals are referred to as eligibility traces [52] (Figure 2c). Persistent activity in the prefrontal cortex [20,47,49], posterior parietal cortex [48] and basal ganglia [51,64,65] can be modulated by previous actions of the animal across multiple trials and might correspond to the eligibility traces (Figure 3a,c).
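To make the two ideas in this paragraph concrete, the sketch below shows a TD error computed from two successive state values, followed by a trial-by-trial value update weighted by eligibility traces. It is a simplified illustration under assumptions of our own choosing: the traces are reset to 1 for the chosen action and decay geometrically across trials, and the parameter values and function names are hypothetical rather than drawn from the cited work.

```python
def td_error(reward, value_next, value_current, discount=1.0):
    """TD error: reward plus the discounted next-state value, minus the current value."""
    return reward + discount * value_next - value_current


def update_with_traces(values, traces, action, reward,
                       learning_rate=0.1, trace_decay=0.5):
    """Update all action values in place, weighting each by its eligibility trace."""
    # Memory of earlier actions fades on every trial.
    for a in traces:
        traces[a] *= trace_decay
    # The action just taken becomes fully eligible (assumed "replacing" trace).
    traces[action] = 1.0
    prediction_error = reward - values[action]
    # Every action is credited in proportion to how recently it was chosen.
    for a in values:
        values[a] += learning_rate * prediction_error * traces[a]
    return prediction_error


# Moving to a more valuable state yields a positive error without any reward.
unrewarded_error = td_error(reward=0.0, value_next=0.8, value_current=0.5)

# Two trials: an unrewarded leftward choice, then a rewarded rightward choice.
values = {"left": 0.0, "right": 0.0}
traces = {"left": 0.0, "right": 0.0}
update_with_traces(values, traces, "left", reward=0.0)
update_with_traces(values, traces, "right", reward=1.0)
print(unrewarded_error, values)
```

In this toy example the leftward action gains some value from the reward on the second trial even though it was not the action chosen on that trial, which mirrors the recency-weighted credit illustrated in Figure 2c.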

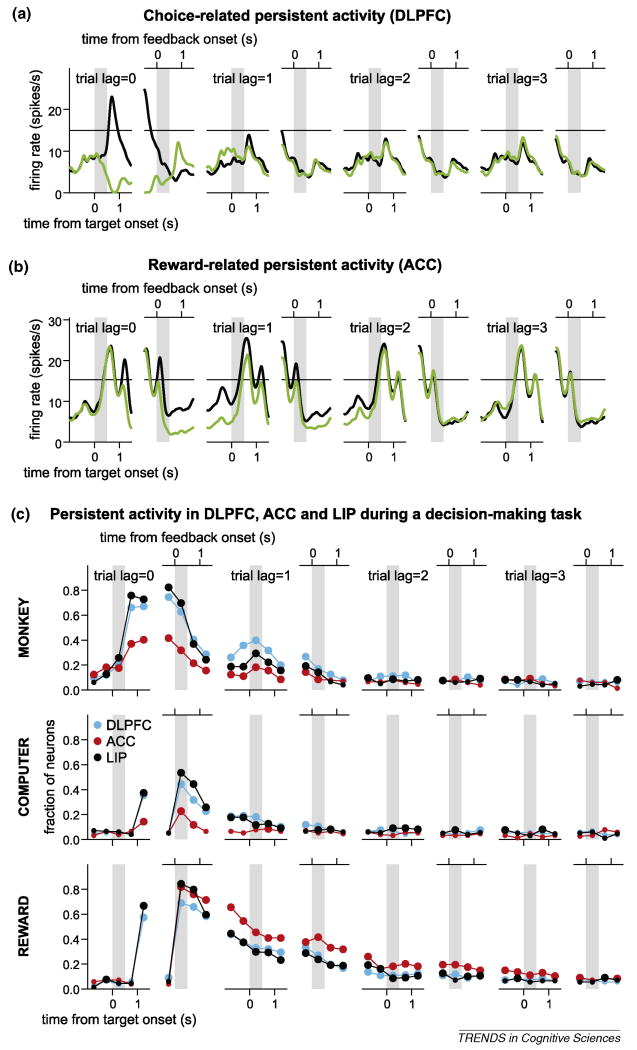

Figure 3.

Persistent activity for decision making and reinforcement learning. (a) Persistent activity related to the animal's choice [20]. The spike density function of the same neuron illustrated in Figure 1a during a binary decision-making task is plotted separately according to the position of the target chosen in the current trial (trial lag=0) or in each of the previous three trials (trial lag=1–3). Black and green lines indicate the activity for the trials in which the animal chose the leftward and rightward targets, respectively. (b) Persistent activity related to the outcome of the animal's decision [43]. The spike density function of a neuron in the anterior cingulate cortex is plotted separately according to whether the animal was rewarded (green) or not (black) in the current (trial lag=0) or in each of the previous three trials (trial lag=1–3) using the same format as in (a). (c) Proportion of neurons in the dorsolateral prefrontal cortex (DLPFC), anterior cingulate cortex (ACC) and posterior parietal cortex (lateral intra-parietal area, LIP) that showed a significant change in activity according to the animal's choice (top), the choice of the computer opponent (middle), and outcome of the animal's choice (bottom) in the current (trial lag=0) and previous three trials (trial lag=1–3). Two subpanels for each trial lag illustrate the activity aligned at the target onset (left) and feedback onset (right), and gray bars correspond to the 0.5-s cue period and feedback period, respectively. The decision-making task used in these studies simulated a matching-pennies task in which the animal was rewarded only when it chose the same target as the computer opponent [20,43,47–49,53].

Persistent activity related to the outcomes of previous choices has also been observed in the same regions of the cortex and basal ganglia that showed persistent changes in neural activity according to the animal's previous choices [20,43,47–50,64,65] (Figures 2c and 3b,c). These results suggest that the signals related to previous actions and their outcomes might influence the ways in which sensory stimuli are encoded and transformed to behavioral responses in multiple regions of the brain. Similar to the activity related to previous choices, persistent activity related to previous outcomes often continues during inter-trial intervals. In addition, in contrast to persistent activity observed during the delay period of a working memory task, persistent activity related to the animal's previous choices and outcomes tends to decay gradually [43,48,51]. Reward-related persistent activity might provide the substrate for temporal integration of rewards in multiple trials, and could therefore be used to encode the average reward rate [43]. Information about the average reward rate plays an important role in some reinforcement learning algorithms. For example, it might be used to calculate the reward prediction error and influence how the value functions would be updated, as in so-called R-learning [52]. Indeed, the primate anterior cingulate cortex contains neurons encoding reward prediction errors in addition to neurons with persistent activity related to previous rewards [43,66]. A sudden change in the average reward rate is likely to indicate unexpected changes in the animal's environment that require appropriate adjustment in the animal's behavioral strategies [55]. Thus, persistent activity related to previous rewards might provide a substrate for monitoring the productivity of the animal's behavior and thereby facilitate flexible behavior.
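One simple way to picture how gradually decaying, reward-related activity could encode the average reward rate is a leaky integrator of trial-by-trial rewards. The sketch below is a deliberately reduced illustration and not the full R-learning algorithm: the update rule, the step size `rate_step` and the reward sequence are assumptions chosen only to show the idea.

```python
def update_reward_rate(rate, reward, rate_step=0.1):
    """Leaky integration: move the running estimate a fraction toward the latest reward."""
    return rate + rate_step * (reward - rate)


def reward_rate_history(rewards, rate_step=0.1, initial_rate=0.0):
    """Return the running reward-rate estimate after each trial."""
    rate = initial_rate
    history = []
    for reward in rewards:
        rate = update_reward_rate(rate, reward, rate_step)
        history.append(rate)
    return history


# Hypothetical environment change: 30 rewarded trials, then 30 unrewarded trials.
rewards = [1.0] * 30 + [0.0] * 30
history = reward_rate_history(rewards)

# Error of a reward relative to the running average (a simplification of the
# average-reward prediction error; state values are omitted here).
error_after_change = rewards[30] - history[29]
print(round(history[29], 3), round(history[-1], 3), round(error_after_change, 3))
```

In this sketch each past reward contributes with a weight that shrinks geometrically with trial lag, so the estimate falls gradually rather than abruptly once rewards stop, and the large negative error on the first unrewarded trial flags the change in the environment.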

Concluding remarks

The brain uses the information obtained from previous experience to make predictions about the future and to select behaviors that are likely to result in the most beneficial outcomes. The length of the retention interval during which the brain has to store information about past events varies greatly according to the properties of the environment and the animal's behavioral needs. Presumably, structural changes in the nervous system are necessary for long-term information storage. By contrast, persistent neural activity might underlie short-term storage of behaviorally relevant information. Such persistent activity can support diverse cognitive processes, ranging from working memory maintenance to computation of value functions. An attractive hypothesis is that persistent activity arises from neural circuitry capable of temporal integration of its inputs, and might flexibly subserve a multitude of functions. Therefore, characterizing persistent activity in more detail under a diverse set of behavioral conditions and understanding its underlying biophysical mechanisms represent important goals if we are to uncover the biological basis of cognition (Box 2).

Box 1. Mechanisms of persistent activity.

Precisely how persistent activity is generated and controlled in the mammalian brain is not known, but several possible, and not mutually exclusive, mechanisms have been proposed. Given the ubiquity of persistent activity, it remains an important area of future research to determine the exact relationship between each of these mechanisms and a specific form of persistent activity observed during a particular behavioral task.

Persistent activity from reverberations in a recurrent network. Persistent activity might be initiated and sustained by reciprocal positive feedback within a population of neurons. Persistent activity observed in a given cortical area (e.g. prefrontal cortex) might arise locally within a population of neighboring neurons [19], within a population of neurons distributed across multiple cortical regions [21] or from a network of areas including both cortical and subcortical systems, such as the corticobasal ganglia–thalamus–cortical loops [17,18]. At each of these network levels, activation of separate networks could specify different stimuli, different actions or stimulus–response mappings, different behavioral strategies and reward expectancies.
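The role of positive feedback can be illustrated with a single-unit firing-rate sketch. This is a toy linear model under assumptions of our own, with a hypothetical time constant, feedback strength and input pulse; realistic recurrent network models are nonlinear and considerably more detailed, and this sketch is not the model of any cited study.

```python
def simulate_rate(feedback, input_current, dt=0.001, tau=0.02):
    """Euler integration of tau * dr/dt = -r + feedback * r + input."""
    rate = 0.0
    rates = []
    for current in input_current:
        # Leak (-rate) is opposed by recurrent excitation (feedback * rate).
        rate += dt / tau * (-rate + feedback * rate + current)
        rates.append(rate)
    return rates


# A 0.1-s stimulus pulse followed by a 1-s delay with no input (1-ms steps).
pulse = [1.0] * 100 + [0.0] * 1000

# Feedback that exactly balances the leak turns the unit into an integrator.
with_feedback = simulate_rate(feedback=1.0, input_current=pulse)
# Without feedback the response decays with the time constant tau.
without_feedback = simulate_rate(feedback=0.0, input_current=pulse)

print(with_feedback[99], with_feedback[-1])
print(without_feedback[99], without_feedback[-1])
```

When the feedback exactly offsets the leak, the unit holds the integrated value of the transient input throughout the delay, whereas without feedback the activity returns to baseline within a few time constants. The need for such fine balance in a linear model is one reason the exact circuit mechanism remains an open question.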

Persistent activity relying on intracellular signals. Individual cortical neurons might display persistent activity due to specific membrane currents or cumulative changes in the concentration of intracellular calcium. For example, neurons in the entorhinal cortex can display a step-like depolarization following brief stimulation. Increased spiking can lead to calcium influx through voltage-gated calcium channels at dendrites, causing subsequent depolarization and thereby closing a positive feedback loop. This form of persistent activity seems to rely on nonspecific calcium-sensitive cationic currents [67].

Persistent activity and short-term synaptic plasticity. When a particular synapse is repeatedly stimulated, the amount of neurotransmitter released in response to a given presynaptic action potential can increase in some synapses and decrease in others [68]. The pattern of activity sustained in a network is a function of synaptic weights among its constituent neurons, so short-term synaptic changes induced by a particular pattern of activity might make it possible for the network to resume specific patterns of persistent activity even after a quiescent period [40,41].

Box 2. Questions for future research.

What are the precise functions of persistent activity during working memory? Does the persistent activity observed in different brain areas perform different functions, such as selection and maintenance of remembered items? Does persistent activity reflect active control processes as well as maintenance, and how can we characterize these functions separately?

How is persistent activity modified to store information about multiple sensory items that are presented simultaneously or sequentially [69]? How are the inputs to the neural circuits that generate persistent activity gated so that only behaviorally relevant information is stored?

What are the mechanisms of persistent activity? Does persistent activity depend on intracellular signals, short-term synaptic plasticity or feedback loops formed between multiple brain regions, including the basal ganglia and thalamus?

What is the relationship between persistent activity and synaptic plasticity? Does persistent activity play a critical role in inducing synaptic plasticity and hence long-term memory? Conversely, what is the contribution of short-term synaptic plasticity to persistent activity?

How is the time course of persistent activity controlled? Does persistent activity related to the animal's previous choices and their outcomes depend on the statistics of the task and environment? How is persistent activity terminated?

What are the functions of neuromodulators, such as dopamine and norepinephrine, in controlling the strength and time course of persistent activity [70]?

Acknowledgments

We are grateful to Hyojung Seo, Min Whan Jung and Xiao-Jing Wang for helpful discussions. This research was supported by National Institutes of Health Grants R01-EY016407 (C.E.C.), RL1-DA024855, R01-MH05916 and P05-NS048328 (D.L.).


References

- 1.Fuster JM, Alexander GE. Neuron activity related to short-term memory. Science. 1971;173:652–654. doi: 10.1126/science.173.3997.652. [DOI] [PubMed] [Google Scholar]

- 2.Kubota K, Niki H. Prefrontal cortical unit activity and delayed alternation performance in monkeys. J Neurophysiol. 1971;34:337–347. doi: 10.1152/jn.1971.34.3.337. [DOI] [PubMed] [Google Scholar]

- 3.Funahashi S, et al. Mnemonic coding of visual space in the monkey's dorsolateral prefrontal cortex. J Neurophysiol. 1989;61:331–349. doi: 10.1152/jn.1989.61.2.331. [DOI] [PubMed] [Google Scholar]

- 4.Baddeley A. Working Memory. Oxford University Press; 1986. [Google Scholar]

- 5.Goldman-Rakic PS. Cellular basis of working memory. Neuron. 1995;14:477–485. doi: 10.1016/0896-6273(95)90304-6. [DOI] [PubMed] [Google Scholar]

- 6.Curtis CE, D'Esposito M. Persistent activity in the prefrontal cortex during working memory. Trends Cogn Sci. 2003;7:415–423. doi: 10.1016/s1364-6613(03)00197-9. [DOI] [PubMed] [Google Scholar]

- 7.Curtis CE, D'Esposito M. Working memory. In: Cabezza R, Kingstone A, editors. Handbook of Functional Neuroimaging of Cognition. 2nd. MIT Press; 2006. pp. ??–??. [Google Scholar]

- 8.Schluppeck D, et al. Sustained activity in topographic areas of human posterior parietal cortex during memory-guided saccades. J Neurosci. 2006;26:5098–5108. doi: 10.1523/JNEUROSCI.5330-05.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Srimal R, Curtis CE. Persistent neural activity during the maintenance of spatial position in working memory. Neuroimage. 2008;39:455–468. doi: 10.1016/j.neuroimage.2007.08.040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Curtis CE, et al. Maintenance of spatial and motor codes during oculomotor delayed response tasks. J Neurosci. 2004;24:3944–3952. doi: 10.1523/JNEUROSCI.5640-03.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Todd JJ, Marois R. Capacity limit of visual short-term memory in human posterior parietal cortex. Nature. 2004;428:751–754. doi: 10.1038/nature02466. [DOI] [PubMed] [Google Scholar]

- 12.Vogel EK, et al. Neural measures reveal individual differences in controlling access to working memory. Nature. 2005;438:500–503. doi: 10.1038/nature04171. [DOI] [PubMed] [Google Scholar]

- 13.Xu YD, Chun MM. Dissociable neural mechanisms supporting visual short-term memory for objects. Nature. 2006;440:91–95. doi: 10.1038/nature04262. [DOI] [PubMed] [Google Scholar]

- 14.Baeg EH, et al. Dynamics of population code for working memory in the prefrontal cortex. Neuron. 2003;40:177–188. doi: 10.1016/s0896-6273(03)00597-x. [DOI] [PubMed] [Google Scholar]

- 15.Averbeck BB, Lee D. Coding and transmission of information by neural ensembles. Trends Neurosci. 2004;27:225–230. doi: 10.1016/j.tins.2004.02.006. [DOI] [PubMed] [Google Scholar]

- 16.Averbeck BB, Lee D. Prefrontal neural correlates of memory for sequences. J Neurosci. 2007;27:2204–2211. doi: 10.1523/JNEUROSCI.4483-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Hikosaka O, et al. Role of the basal ganglia in the control of purposive saccadic eye movements. Physiol Rev. 2000;80:953–978. doi: 10.1152/physrev.2000.80.3.953. [DOI] [PubMed] [Google Scholar]

- 18.Watanabe Y, Funahashi S. Neuronal activity throughout the primate mediodorsal nucleus of the thalamus during oculomotor delayed-responses. I. Cue-, delay- and response-period activity. J Neurophysiol. 2004;92:1738–1755. doi: 10.1152/jn.00994.2003. [DOI] [PubMed] [Google Scholar]

- 19.Wang XJ. Decision making in recurrent neuronal circuits. Neuron. 2008;60:215–234. doi: 10.1016/j.neuron.2008.09.034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Seo H, et al. Dynamic signals related to choices and outcomes in the dorsolateral prefrontal cortex. Cereb Cortex. 2007;17:i110–i117. doi: 10.1093/cercor/bhm064. [DOI] [PubMed] [Google Scholar]

- 21.Chafee MV, Goldman-Rakic PS. Matching patterns of activity in primate prefrontal area 8a and parietal area 7ip neurons during a spatial working memory task. J Neurophysiol. 1998;79:2919–2940. doi: 10.1152/jn.1998.79.6.2919. [DOI] [PubMed] [Google Scholar]

- 22.Courtney SM, et al. An area specialized for spatial working memory in human frontal cortex. Science. 1998;279:1347–1351. doi: 10.1126/science.279.5355.1347. [DOI] [PubMed] [Google Scholar]

- 23.Miller EK, et al. A neural mechanism for working and recognition memory in inferior temporal cortex. Science. 1991;254:1377–1379. doi: 10.1126/science.1962197. [DOI] [PubMed] [Google Scholar]

- 24.Ó Scalaidhe SP, et al. Face-selective neurons during passive viewing and working memory performance of rhesus monkeys: evidence for intrinsic specialization of neuronal coding. Cereb Cortex. 1999;9:459–475. doi: 10.1093/cercor/9.5.459. [DOI] [PubMed] [Google Scholar]

- 25.Ranganath C, et al. Inferior temporal, prefrontal, and hippocampal contributions to visual working memory maintenance and associative memory retrieval. J Neurosci. 2004;24:3917–3925. doi: 10.1523/JNEUROSCI.5053-03.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Jonides J, et al. The role of parietal cortex in verbal working memory. J Neurosci. 1998;18:5026–5034. doi: 10.1523/JNEUROSCI.18-13-05026.1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Alain C, et al. The contribution of the inferior parietal lobe to auditory spatial working memory. J Cogn Neurosci. 2008;20:285–295. doi: 10.1162/jocn.2008.20014.

- 28.Tark KJ, Curtis CE. Persistent neural activity in the human frontal cortex when maintaining space that is off the map. Nat Neurosci. 2009;12:1463–1468. doi: 10.1038/nn.2406.

- 29.Romo R, et al. Neuronal correlates of parametric working memory in the prefrontal cortex. Nature. 1999;399:470–473. doi: 10.1038/20939.

- 30.Fuster JM. The prefrontal cortex--an update: time is of the essence. Neuron. 2001;30:319–333. doi: 10.1016/s0896-6273(01)00285-9.

- 31.Passingham D, Sakai K. The prefrontal cortex and working memory: physiology and brain imaging. Curr Opin Neurobiol. 2004;14:163–168. doi: 10.1016/j.conb.2004.03.003.

- 32.Wise SP. Forward frontal fields: phylogeny and fundamental function. Trends Neurosci. 2008;31:599–608. doi: 10.1016/j.tins.2008.08.008.

- 33.Meyer T, et al. Persistent discharges in the prefrontal cortex of monkeys naive to working memory tasks. Cereb Cortex. 2007;17:i70–i76. doi: 10.1093/cercor/bhm063.

- 34.Andersen RA, Buneo CA. Intentional maps in posterior parietal cortex. Annu Rev Neurosci. 2002;25:189–220. doi: 10.1146/annurev.neuro.25.112701.142922.

- 35.Curtis CE, Connolly JD. Saccade preparation signals in the human frontal and parietal cortices. J Neurophysiol. 2008;99:133–145. doi: 10.1152/jn.00899.2007.

- 36.Rainer G, et al. Prospective coding for objects in primate prefrontal cortex. J Neurosci. 1999;19:5493–5505. doi: 10.1523/JNEUROSCI.19-13-05493.1999.

- 37.Freedman DJ, et al. Categorical representation of visual stimuli in the primate prefrontal cortex. Science. 2001;291:312–316. doi: 10.1126/science.291.5502.312.

- 38.Wallis JD, et al. Single neurons in prefrontal cortex encode abstract rules. Nature. 2001;411:953–956. doi: 10.1038/35082081.

- 39.Bunge SA, et al. Neural circuits subserving the retrieval and maintenance of abstract rules. J Neurophysiol. 2003;90:3419–3428. doi: 10.1152/jn.00910.2002.

- 40.Hempel CM, et al. Multiple forms of short-term plasticity at excitatory synapses in rat medial prefrontal cortex. J Neurophysiol. 2000;83:3031–3041. doi: 10.1152/jn.2000.83.5.3031.

- 41.Mongillo G, et al. Synaptic theory of working memory. Science. 2008;319:1543–1546. doi: 10.1126/science.1150769.

- 42.Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- 43.Seo H, Lee D. Temporal filtering of reward signals in the dorsal anterior cingulate cortex during a mixed-strategy game. J Neurosci. 2007;27:8366–8377. doi: 10.1523/JNEUROSCI.2369-07.2007.

- 44.Laming DRJ. Information Theory of Choice-Reaction Times. Academic Press; 1968.

- 45.Roitman JD, Shadlen MN. Response of neurons in the lateral intraparietal area during a combined visual discrimination reaction time task. J Neurosci. 2002;22:9475–9489. doi: 10.1523/JNEUROSCI.22-21-09475.2002.

- 46.Heekeren HR, et al. The neural systems that mediate human perceptual decision making. Nat Rev Neurosci. 2008;9:467–479. doi: 10.1038/nrn2374.

- 47.Barraclough DJ, et al. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- 48.Seo H, et al. Lateral intraparietal cortex and reinforcement learning during a mixed-strategy game. J Neurosci. 2009;29:7278–7289. doi: 10.1523/JNEUROSCI.1479-09.2009.

- 49.Seo H, Lee D. Behavioral and neural changes after gains and losses of conditioned reinforcers. J Neurosci. 2009;29:3627–3641. doi: 10.1523/JNEUROSCI.4726-08.2009.

- 50.Histed MH, et al. Learning substrates in the primate prefrontal cortex and striatum: sustained activity related to successful actions. Neuron. 2009;63:244–253. doi: 10.1016/j.neuron.2009.06.019.

- 51.Kim H, et al. Role of striatum in updating values of chosen actions. J Neurosci. 2009;29:14701–14712. doi: 10.1523/JNEUROSCI.2728-09.2009.

- 52.Sutton RS, Barto AG. Reinforcement Learning: An Introduction. MIT Press; 1998.

- 53.Lee D, et al. Reinforcement learning and decision making in monkeys during a competitive game. Cogn Brain Res. 2004;22:45–58. doi: 10.1016/j.cogbrainres.2004.07.007.

- 54.Samejima K, et al. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi: 10.1126/science.1115270.

- 55.Behrens TEJ, et al. Learning the value of information in an uncertain world. Nat Neurosci. 2007;10:1214–1221. doi: 10.1038/nn1954.

- 56.Schultz W. Behavioral theories and the neurophysiology of reward. Annu Rev Psychol. 2006;57:87–115. doi: 10.1146/annurev.psych.56.091103.070229.

- 57.Lee D. Neural basis of quasi-rational decision making. Curr Opin Neurobiol. 2006;16:191–198. doi: 10.1016/j.conb.2006.02.001.

- 58.Daw ND, Doya K. The computational neurobiology of learning and reward. Curr Opin Neurobiol. 2006;16:199–204. doi: 10.1016/j.conb.2006.03.006.

- 59.Kim S, et al. Prefrontal coding of temporally discounted values during inter-temporal choice. Neuron. 2008;59:161–172. doi: 10.1016/j.neuron.2008.05.010.

- 60.Lau B, Glimcher PW. Value representations in the primate striatum during matching behavior. Neuron. 2008;58:451–463. doi: 10.1016/j.neuron.2008.02.021.

- 61.Sugrue LP, et al. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304:1782–1787. doi: 10.1126/science.1094765.

- 62.Walton ME, et al. Separable learning systems in the macaque brain and the role of orbitofrontal cortex in contingent learning. Neuron. In press. doi: 10.1016/j.neuron.2010.02.027.

- 63.Pan WX, et al. Dopamine cells respond to predicted events during classical conditioning: evidence for eligibility traces in the reward-learning network. J Neurosci. 2005;25:6235–6242. doi: 10.1523/JNEUROSCI.1478-05.2005.

- 64.Kim Y, et al. Encoding of action history in the rat ventral striatum. J Neurophysiol. 2007;98:3548–3556. doi: 10.1152/jn.00310.2007.

- 65.Ito M, Doya K. Validation of decision-making models and analysis of decision variables in the rat basal ganglia. J Neurosci. 2009;29:9861–9874. doi: 10.1523/JNEUROSCI.6157-08.2009.

- 66.Matsumoto M, et al. Medial prefrontal cell activity signaling prediction errors of action values. Nat Neurosci. 2007;10:647–656. doi: 10.1038/nn1890.

- 67.Fransén E, et al. Mechanism of graded persistent cellular activity of entorhinal cortex layer V neurons. Neuron. 2006;49:735–746. doi: 10.1016/j.neuron.2006.01.036.

- 68.Zucker RS, Regehr WG. Short-term synaptic plasticity. Annu Rev Physiol. 2002;64:355–405. doi: 10.1146/annurev.physiol.64.092501.114547.

- 69.Warden MR, Miller EK. The representation of multiple objects in prefrontal neuronal delay activity. Cereb Cortex. 2007;17:i41–i50. doi: 10.1093/cercor/bhm070.

- 70.Robbins TW, Arnsten AFT. The neuropsychopharmacology of fronto-executive function: monoaminergic modulation. Annu Rev Neurosci. 2009;32:267–287. doi: 10.1146/annurev.neuro.051508.135535.