Abstract

The effects of self-monitoring on the procedural integrity of token economy implementation by 3 staff in a special education classroom were evaluated. The subsequent changes in academic readiness behaviors of 2 students with low-incidence disabilities were measured. Multiple baselines across staff and students showed that procedural integrity increased when staff used monitoring checklists, and students' academic readiness behavior also increased. Results are discussed with respect to the use of self-monitoring and the importance of procedural integrity in public school settings.

Keywords: autism, developmental disabilities, early childhood special education, procedural integrity, self-monitoring, token economy

Procedural integrity is the degree to which an intervention is implemented as intended (e.g., Najdowski et al., 2008). Accurate implementation of evidence-based interventions allows data-based decision making regarding the effectiveness of an intervention and increases the likelihood of improving student outcomes (for a review, see Hagermoser-Sanetti & Kratochwill, 2008). Effective training practices, such as didactic instruction, behavioral rehearsal, and brief coaching, must be combined with contingencies following training to ensure accurate implementation of behavioral interventions (DiGennaro, Martens, & Kleinman, 2007; DiGennaro, Martens, & McIntyre, 2005). In these two studies, performance feedback and negative reinforcement were combined to increase the procedural integrity of a behavioral intervention implemented by elementary school teachers. Improved integrity was correlated negatively with student problem behavior. These studies are unique because they report both educator and student data for an intervention in a public school setting. However, an external researcher was needed to provide the feedback necessary for changes to procedural integrity, and ongoing external support may not be feasible in many school settings.

Self-monitoring is a procedure that has been shown to increase the accuracy with which direct service providers implement a variety of protocols in human service organizations (Allen & Blackston, 2003; Richman, Riordan, Reiss, Pyles, & Bailey, 1988). Seligson-Petscher and Bailey (2006) examined the effects of self-monitoring on the accurate implementation of a behavior intervention plan by public school personnel. Although procedural integrity increased, experimenters provided performance feedback throughout all conditions, so the effects of self-monitoring on procedural integrity could not be isolated from those of feedback. The purposes of the current study were (a) to identify the effects of a self-monitoring checklist on the procedural integrity of token economy implementation by early childhood special education (ECSE) classroom staff and (b) to identify any changes in academic readiness behaviors of ECSE students when experimental manipulations were applied to token economy implementation.

METHOD

Participants, Setting, and Materials

Three Caucasian staff members and 2 Hispanic students participated in this research study. Ingrid (teacher), Teri, and Rita (paraprofessionals) were educators in a 10-hr-per-week ECSE program. Ingrid requested experimenter support for 2 students in the ECSE program who often required one-to-one adult support to participate in group activities. Toby (4 years old) had been diagnosed with autism, and Kendra (3 years old) had been diagnosed with Williams syndrome and specific language impairment.

Measurement and Interobserver Agreement

Staff

Experimenters used a discrete categorization procedure (Kazdin, 1982) to score the percentage of token economy components implemented correctly. A component was scored correct if it occurred in the correct order and as written on a token economy procedural checklist (available from the first author). A component was scored incorrect if it was not implemented as written, was implemented in the wrong order, or was replaced by another response. Experimenters conducted 15-min observations 2 to 3 days each week and recorded data with paper and pencil during small- and large-group activities.
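The scoring rule above can be expressed as a short function. The following is a minimal sketch only. It assumes that each observed checklist component is recorded with three yes/no judgments: implemented as written, implemented in order, and replaced by another response. The `ComponentRecord` structure, its field names, and the step labels are hypothetical and do not reproduce the study's checklist. Under this rule a component counts as correct only if all three criteria are met, and procedural integrity is the percentage of correct components in the observation.

```python
from dataclasses import dataclass


@dataclass
class ComponentRecord:
    """One checklist component as scored by an observer (hypothetical structure)."""
    name: str
    as_written: bool   # implemented exactly as written on the checklist
    in_order: bool     # occurred in the order the checklist specifies
    substituted: bool  # another response was implemented in its place


def is_correct(rec: ComponentRecord) -> bool:
    # Discrete categorization: correct only if every criterion is met.
    return rec.as_written and rec.in_order and not rec.substituted


def procedural_integrity(records: list[ComponentRecord]) -> float:
    """Percentage of checklist components scored correct in one observation."""
    if not records:
        raise ValueError("at least one component must be scored")
    correct = sum(is_correct(r) for r in records)
    return 100.0 * correct / len(records)


# Hypothetical four-component session; step labels are placeholders only.
session = [
    ComponentRecord("step 1", True, True, False),
    ComponentRecord("step 2", True, True, False),
    ComponentRecord("step 3", True, False, False),  # implemented out of order
    ComponentRecord("step 4", True, True, False),
]
print(procedural_integrity(session))  # 75.0
```

In this example one of the four components is implemented out of order, so it is scored incorrect and the session receives 75% procedural integrity.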

Students

The dependent measure was the percentage of intervals in which students engaged in two academic readiness behaviors (i.e., appropriate sitting and appropriate vocalizing) simultaneously. Appropriate sitting was defined as sitting in a staff-designated location, in the manner instructed by staff, with minimal movement for the entire interval. Appropriate vocalizing was defined as talking at or below conversational volume (i.e., speech that could not be heard from more than 3 m away). Data were collected with paper and pencil using a 30-s whole-interval procedure, with MotivAider vibrating timers signaling the end of each interval. Engagement in academic readiness behavior was scored only when a student demonstrated both target behaviors for the entire interval. Following the pretraining phase of baseline, staff participants delivered token reinforcers to students for engaging in these behaviors. Observations lasted 10 to 15 min and occurred during small- and large-group activities 2 or 3 days each week.
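The whole-interval rule combines two behaviors, so its logic is easy to misread. The following is a minimal sketch only. It assumes, for illustration, that each behavior is represented as a hypothetical sequence of 1-s samples (`True` if the behavior met its definition during that second). Observers in the study scored intervals directly with paper and pencil, not from per-second samples. An interval counts as engagement only if both behaviors hold for every second of it.

```python
def whole_interval_percentage(sitting: list[bool], vocalizing: list[bool],
                              interval_s: int = 30) -> float:
    """Percentage of whole intervals in which BOTH behaviors occurred throughout.

    sitting[i] / vocalizing[i] are hypothetical 1-s samples: True if the
    behavior met its definition during second i of the observation.
    """
    if len(sitting) != len(vocalizing):
        raise ValueError("both records must cover the same seconds")
    n_intervals = len(sitting) // interval_s  # a trailing partial interval is ignored
    if n_intervals == 0:
        raise ValueError("observation shorter than one interval")
    engaged = 0
    for k in range(n_intervals):
        window = slice(k * interval_s, (k + 1) * interval_s)
        # Whole-interval rule: any lapse in either behavior disqualifies the interval.
        if all(sitting[window]) and all(vocalizing[window]):
            engaged += 1
    return 100.0 * engaged / n_intervals


# Hypothetical 2-min observation (four 30-s intervals).
sitting = [True] * 120
vocalizing = [True] * 120
vocalizing[40] = False  # inappropriate vocalizing during interval 2
sitting[100] = False    # leaves the designated location during interval 4
print(whole_interval_percentage(sitting, vocalizing))  # 50.0
```

A single 1-s lapse in either behavior removes the whole interval. This strictness is what distinguishes whole-interval recording from partial-interval recording.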

Two independent observers scored 28% of staff observations and 38% of student observations to evaluate interobserver agreement. An agreement was scored when both observers recorded the same response for a component (staff participants) or an interval (student participants), and a disagreement was scored when their records differed. Agreement was calculated by dividing the number of agreements by the number of agreements plus disagreements and multiplying by 100. Mean agreement for procedural integrity was 84% (range, 64% to 100%), 84% (range, 64% to 91%), and 95% (range, 82% to 100%) for Rita, Teri, and Ingrid, respectively. Mean agreement for academic readiness behavior was 83% (range, 60% to 100%) and 94% (range, 70% to 100%) for Toby and Kendra, respectively. Low agreement occurred during initial observations and was resolved after the observers briefly discussed the response definitions.
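The agreement formula described above is a point-by-point (component-by-component or interval-by-interval) comparison. The following is a minimal sketch, assuming each observer's record for one observation is a list with one score per component or interval. The function name and the example scores are hypothetical.

```python
def point_by_point_ioa(observer_a: list, observer_b: list) -> float:
    """Agreements / (agreements + disagreements) x 100 for one observation.

    Each list holds one score per component (staff) or per interval (students),
    e.g. True = scored correct / engaged, False = incorrect / not engaged.
    """
    if len(observer_a) != len(observer_b):
        raise ValueError("observers must score the same components or intervals")
    if not observer_a:
        raise ValueError("nothing to compare")
    agreements = sum(a == b for a, b in zip(observer_a, observer_b))
    disagreements = len(observer_a) - agreements
    return 100.0 * agreements / (agreements + disagreements)


# Hypothetical scores for five intervals from one student observation.
primary = [True, True, False, True, False]
secondary = [True, False, False, True, False]
print(point_by_point_ioa(primary, secondary))  # 80.0
```

Agreement on nonoccurrence counts the same as agreement on occurrence. The per-participant means and ranges reported above would then be computed across the sessions scored by both observers.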

Design and Procedure

The effects of self-monitoring on procedural integrity were analyzed using a multiple baseline design across staff participants, similar to that used by DiGennaro et al. (2005). A second multiple baseline design, across students, was used to assess the effects of any changes in staff behavior on student behavior.

Staff baseline

Staff baseline consisted of three phases: pretraining, training, and implementation. Consultants developed a token economy procedure prior to the pretraining phase and measured the number of components completed when staff participants addressed student engagement in academic readiness behavior as they had throughout the school year prior to the start of research activities. Following pretraining, the experimenters conducted the training phase, which consisted of two 1-hr training sessions and used procedures similar to those of DiGennaro et al. (2007) to train staff participants to implement each item on the token economy checklist. The first session involved staff participants only and consisted of didactic review, modeling, and role play. The second session involved staff and student participants. During the second training session, the experimenter first modeled token economy implementation with student participants and then provided coaching and feedback to staff participants who implemented the procedure. Once a staff participant demonstrated procedural integrity of 80% for one token economy session, coaching and feedback were terminated, and the staff participant implemented the intervention independently. The implementation phase continued for each staff participant until procedural integrity was stable or downward trending, and the experimenter and individual staff participant could meet to review the self-monitoring procedure.

Staff self-monitoring

Prior to beginning self-monitoring, the experimenter met briefly with each staff participant and explained the monitoring checklist, reviewed the token economy procedure, and answered any questions. During self-monitoring, staff participants continued to implement the token economy and were instructed to complete a token economy checklist (identical to that of the experimenters) following two token sessions of their choice each day. Completed checklists were left in a file folder for the experimenters to collect following observations.

Student conditions

Experimenters observed and recorded the occurrence or nonoccurrence of academic readiness behavior during all experimental conditions. The independent variable in effect for students was the token economy system as implemented by staff participants in each condition.

RESULTS AND DISCUSSION

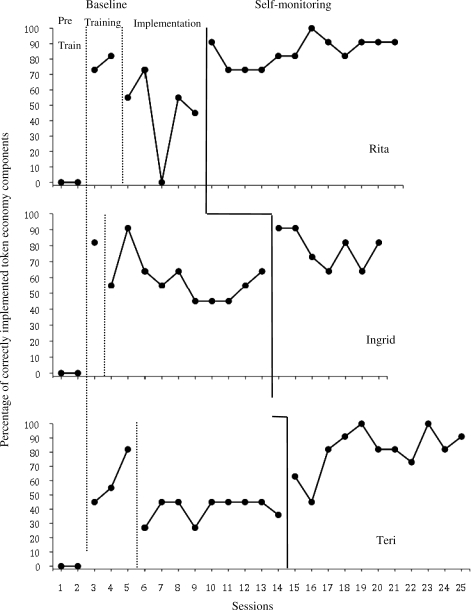

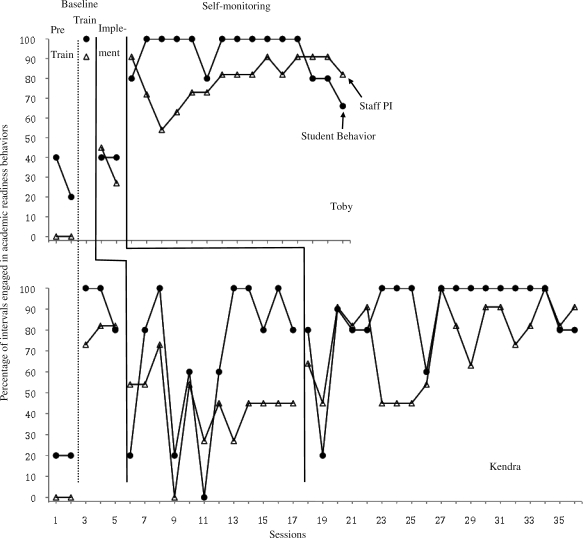

Figure 1 shows the percentage of token economy steps implemented correctly across staff participants. Token economy implementation did not occur during pretraining, so the combined procedural integrity for all staff was 0%. During training, implementation, and self-monitoring, the mean percentages of token economy steps implemented correctly across all staff participants were 70% (range, 45% to 82%), 45% (range, 0% to 91%), and 84% (range, 45% to 100%), respectively. Figure 2 shows the relation between levels of procedural integrity and student behavior across experimental conditions and participants. Mean engagement in academic readiness behaviors during pretraining, training, implementation, and self-monitoring was 25% (range, 20% to 40%), 95% (range, 80% to 100%), 52% (range, 0% to 100%), and 89% (range, 20% to 100%), respectively.
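The summary statistics above are per-phase means and ranges of session percentages, pooled across participants. The following is a minimal sketch of that arithmetic. It assumes session data are available as (phase, percentage) pairs. The function name and every value in the example are hypothetical and are not the study's data.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_phase(sessions):
    """Mean and range of session percentages for each phase.

    sessions: iterable of (phase_name, percentage) pairs, pooled across
    participants. Returns {phase: (mean, minimum, maximum)} in first-seen
    phase order.
    """
    by_phase = defaultdict(list)
    for phase, pct in sessions:
        by_phase[phase].append(pct)
    return {phase: (mean(v), min(v), max(v)) for phase, v in by_phase.items()}


# Hypothetical session values (NOT the study's data).
data = [
    ("implementation", 30), ("implementation", 60), ("implementation", 90),
    ("self-monitoring", 80), ("self-monitoring", 100),
]
for phase, (m, low, high) in summarize_by_phase(data).items():
    print(f"{phase}: M = {m:.0f}% (range, {low}% to {high}%)")
```

Pooling sessions in this way weights each participant by the number of sessions they contributed. This is one reason a pooled mean can differ from the average of the individual participants' means.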

Figure 1.

The percentage of accurately completed token economy steps by each staff participant throughout all conditions. Phases in baseline are pretraining, training, and implementation, and the intervention condition is self-monitoring.

Figure 2.

The percentage of intervals students engaged in academic readiness behaviors during corresponding staff conditions. Phases in baseline are pretraining, training, and implementation, and the intervention condition is self-monitoring.

The results suggest that self-monitoring can improve the implementation of a token economy system in a public school setting. Consistent with the findings of DiGennaro et al. (2007), behavioral interventions were not implemented accurately following initial training. This investigation extends previous procedural integrity research by demonstrating the effectiveness of self-monitoring for increasing posttraining procedural integrity levels with minimal support provided by external agents. Future researchers should evaluate long-term implementation while having school personnel (e.g., school psychologists) collect self-monitoring data and evaluate procedural integrity.

Although these results demonstrate positive outcomes, some limitations should be considered. First, staff and student participants showed variable responding after self-monitoring procedures were introduced. The cause of this variability is unknown. Procedural integrity may have been controlled by competing demands. Academic readiness behavior may have been controlled by the accurate or inaccurate implementation of specific token economy components rather than by overall procedural integrity within a token economy session. Future researchers could isolate the effects of individual components on student outcomes. Second, Ingrid's accelerating trend in procedural integrity during the implementation phase suggests that an unknown variable contributed to this increase. This may have been due to observation of other educators implementing the procedure accurately or to practice effects over the extended baseline period. Future research could address this limitation by examining the effects of observing accurate implementation on levels of procedural integrity.

Despite these limitations, this investigation extends previous research by demonstrating the efficacy of self-monitoring for supporting accurate token economy implementation in a public school setting. In addition, student participants demonstrated improvements in academic readiness behaviors following the introduction of the token economy. The use of self-management practices in school settings may be an effective and efficient approach to improve implementation of school-based interventions.

Acknowledgments

This investigation was funded by the Office of Special Education and Rehabilitative Services of the U.S. Department of Education (Grant H325D030060). We thank Mary Mariage for her collaboration and support.

REFERENCES

- Allen S.J, Blackston A.R. Training preservice teachers in collaborative problem solving: An investigation of the impact on teacher and student behavior change in real-world settings. School Psychology Quarterly. 2003;18:22–51.

- DiGennaro F.D, Martens B.K, Kleinman A.E. A comparison of performance feedback procedures on teachers' treatment implementation integrity and students' inappropriate behavior in special education classrooms. Journal of Applied Behavior Analysis. 2007;40:447–461. doi: 10.1901/jaba.2007.40-447.

- DiGennaro F.D, Martens B.K, McIntyre L.L. Increasing treatment integrity through negative reinforcement: Effects on teacher and student behavior. School Psychology Review. 2005;34:220–231.

- Hagermoser-Sanetti L.M, Kratochwill T.R. Treatment integrity in behavioral consultation: Measurement, promotion, and outcomes. International Journal of Behavioral Consultation and Therapy. 2008;4:95–114.

- Kazdin A.E. Single-case research designs. New York: Oxford; 1982.

- Najdowski A.C, Wallace M.D, Penrod B, Tarbox J, Reagon K, Higbee T.S. Caregiver-conducted experimental functional analyses of inappropriate mealtime behavior. Journal of Applied Behavior Analysis. 2008;41:459–465. doi: 10.1901/jaba.2008.41-459.

- Richman G.S, Riordan M.R, Reiss M.L, Pyles D.M, Bailey J.S. The effects of self-monitoring and supervisor feedback on staff performance in a residential setting. Journal of Applied Behavior Analysis. 1988;21:401–409. doi: 10.1901/jaba.1988.21-401.

- Seligson-Petscher E, Bailey J.S. Effects of training, prompting, and self-monitoring on staff behavior in a classroom for students with disabilities. Journal of Applied Behavior Analysis. 2006;39:215–226. doi: 10.1901/jaba.2006.02-05.