Abstract

Speech reception in noise is an especially difficult problem for listeners with hearing impairment as well as for users of cochlear implants (CIs). One likely cause of this is an inability to ‘glimpse’ a target talker in a fluctuating background, which has been linked to deficits in temporal fine-structure processing. A fine-structure cue that has the potential to be beneficial for speech reception in noise is fundamental frequency (F0). A challenging problem, however, is delivering the cue to these individuals. The benefits to speech intelligibility of F0 for both listeners with hearing impairment and users of CIs are reviewed, as well as various methods of delivering F0 to these listeners.

Keywords: fundamental frequency, F0, speech, noise, glimpsing, temporal fine structure

I. The Problem of Speech In Noise

The deleterious effects of background noise on speech intelligibility are well-documented. Although background noise has adverse effects on speech intelligibility for all individuals, those with hearing impairment (HI) are often more severely affected (Bacon et al., 1998; Carhart and Tillman, 1970; Carhart et al., 1969; Festen and Plomp, 1983; Houtgast and Festen, 2008; Plomp, 1978). For example, when speech intelligibility was measured for monosyllables in competing sentences in listeners with normal hearing and listeners with HI, the group with HI exhibited performance that was as much as 20-50 percentage points lower than the group with normal hearing at equivalent signal-to-noise ratios (SNRs) (Carhart and Tillman, 1970). The authors suggested that the effects of sensorineural hearing loss are similar to an increase in masker level relative to the level of the target (i.e., a decrease in SNR) resulting from increased thresholds.

It has since been established, however, that the reduction in intelligibility exhibited by listeners with HI cannot be explained solely by elevated thresholds (Plomp, 1978). This seems to indicate that there are supra-threshold deficits associated with HI. This additional component to the deficits exhibited by listeners with HI was described by a model (Plomp, 1986) that contained two independent factors: an attenuation factor, and a distortion factor. The attenuation factor represents the threshold shift of a listener with HI. A model based solely on the attenuation factor would show differences in speech intelligibility only at lower noise levels, and a convergence in performance once the level of the noise increased. The distortion factor represents supra-threshold deficits, and specifies that performance will always be worse for listeners with HI, once the level of the noise rises to such a level that it becomes the limiting factor in the audibility of the target speech.

One example of the supra-threshold processing deficits exhibited by listeners with HI can be observed when fluctuating (amplitude-modulated) maskers are used, such as competing speech. It has been known for some time that normal-hearing listeners can take advantage of the momentary favorable SNRs that occur in the temporal valleys of fluctuating maskers to improve speech intelligibility performance while listeners with HI are less able to (Bacon et al., 1998; Duquesnoy, 1983; Festen and Plomp, 1990; Miller and Licklider, 1950; Peters et al., 1998; Plomp, 1994). This ability has been termed “listening in the dips” or “glimpsing.”

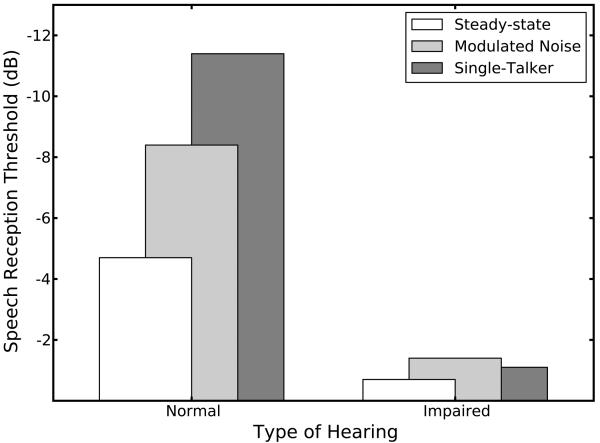

One report (Festen and Plomp, 1990) that looked at this difference in glimpsing abilities between listeners with normal hearing and listeners with HI measured the SNR required for 50 percent-correct speech recognition (the speech reception threshold, or SRT) for both groups of individuals. The background was varied to be steady-state noise, noise modulated with the amplitude envelope of speech, or a competing single talker. The SRTs for the participants with normal hearing were −4.7 dB in steady-state noise, −8.4 dB for speech-modulated noise, and −11.4 dB for competing speech (see Fig. 1). Thus, the corresponding masking release (the decline in the effectiveness of the masker) due to an ability to glimpse the target in the valleys of the fluctuating maskers was about 4 dB in speech-modulated noise, and about 7 dB for competing speech. On the other hand, in addition to higher overall SRTs than listeners with normal hearing, listeners with HI showed no release from masking, as evidenced by their nearly equivalent SRTs regardless of the background (Festen and Plomp, 1990).

Fig. 1.

Data estimated from Fig. 6 in Festen and Plomp (1990). Bars in the left column represent SRT performance by normal-hearing listeners, bars in the right column by listeners with HI. White bars indicate SRTs in steady-state noise, light gray bars indicate SRTs in modulated noise, and dark gray bars indicate SRTs in a single-talker background. For each group of listeners, the difference between the white and gray bars represents the release from masking due to modulation.
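The masking-release values quoted above for normal-hearing listeners follow directly from the reported SRTs. The short sketch below only reproduces that arithmetic; the dictionary keys and the function name are illustrative choices, not terms from Festen and Plomp (1990).

```python
# SRTs (dB SNR) for normal-hearing listeners, as quoted in the text
# from Festen and Plomp (1990).
srt_db = {
    "steady_noise": -4.7,
    "speech_modulated_noise": -8.4,
    "competing_talker": -11.4,
}


def masking_release(srt_steady, srt_fluctuating):
    """Masking release in dB: how much lower (better) the SRT is in the
    fluctuating masker than in steady-state noise."""
    return srt_steady - srt_fluctuating


for masker in ("speech_modulated_noise", "competing_talker"):
    release = masking_release(srt_db["steady_noise"], srt_db[masker])
    print(f"{masker}: {release:.1f} dB")
```

A listener group with no masking release, as reported for listeners with HI, would yield values near 0 dB for both fluctuating maskers.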

As adverse as the effects of background noise are for individuals with HI, they are perhaps more adverse for users of cochlear implants (CIs), due to the reduced frequency resolution and lack of fine-structure cues provided by the devices (Cullington and Zeng, 2008; Fu et al., 1998; Nelson and Jin, 2004; Nelson et al., 2003). CIs work by filtering the incoming signal into a number of frequency bands, and extracting the amplitude envelope in each of the bands (Loizou, 1998). Electrodes, positioned at various points along the basilar membrane, emit trains of electrical pulses that are modulated in amplitude with each extracted envelope. Because of this process, fine-structure cues are largely discarded.
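The envelope-extraction step for a single channel can be sketched as rectification followed by low-pass smoothing. The sketch below is a minimal illustration, not the processing of any particular device: the one-pole smoother, the 50-Hz cutoff, the 16-kHz sample rate, and the test tone are all assumptions chosen for brevity.

```python
import math


def extract_envelope(band_signal, sample_rate_hz, cutoff_hz):
    """Full-wave rectify, then smooth with a one-pole low-pass filter."""
    # Smoothing coefficient of a one-pole low-pass with the given cutoff.
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate_hz)
    envelope, state = [], 0.0
    for sample in band_signal:
        state += alpha * (abs(sample) - state)
        envelope.append(state)
    return envelope


# Hypothetical band signal: a 1-kHz tone whose level steps up halfway through.
fs = 16000
tone = [
    (0.2 if n < fs // 2 else 1.0) * math.sin(2 * math.pi * 1000 * n / fs)
    for n in range(fs)
]
env = extract_envelope(tone, fs, cutoff_hz=50.0)

# The envelope follows the slow level change; the 1-kHz fine structure
# of the carrier is smoothed away.
quiet_level = sum(env[fs // 4 : fs // 2]) / (fs // 4)
loud_level = sum(env[3 * fs // 4 :]) / (fs // 4)
print(f"quiet segment: {quiet_level:.2f}, loud segment: {loud_level:.2f}")
```

Only the slowly varying level survives this step, which is why the pulse trains carry envelope cues but little of the original fine structure.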

In one study that examined the effects of background noise under CI processing (Nelson et al., 2003), speech intelligibility was measured in three groups of participants: users of CIs, normal-hearing listeners listening to broadband stimuli, and normal-hearing listeners who heard stimuli processed with an envelope vocoder, which simulates CI processing. Target speech was heard either in quiet, in a steady noise, or in a gated noise that used a 50% duty cycle. For the gated noise, the modulation rate was varied from 1 to 32 Hz. Normal-hearing listeners showed releases from masking of as much as 70 percentage points. On the other hand, both normal-hearing listeners in CI simulations and CI patients themselves showed little to no masking release. The authors argue that their results cannot be explained by forward masking, because of the relatively high SNRs used for these groups (+8 and +16 dB). Rather, they suggest that it is the spectrally impoverished nature of CI processing that prevents a coherent auditory image from forming even in relatively low-level noise, and thus inhibits the listeners' ability to glimpse the target speech (Nelson et al., 2003).

Another study (Stickney et al., 2004) examined speech intelligibility in normal-hearing listeners listening to a CI simulation, and used either speech maskers or noise shaped with the long-term spectra of each respective speech masker. They found no release from masking with speech maskers relative to speech-shaped noise, and in some cases, the speech maskers produced more masking than the noise.

II. The Importance of Fundamental Frequency (F0)

It has been suggested that the lack of masking release in fluctuating maskers (such as competing speech) in both listeners with HI (Festen and Plomp, 1990; Summers and Leek, 1998) and in CI patients (Nelson and Jin, 2004; Qin and Oxenham, 2003; Qin and Oxenham, 2005; Stickney et al., 2007; Stickney et al., 2004) is due at least in part to an inability to code F0. This inability likely arises from reduced temporal fine-structure processing (Hopkins and Moore, 2007; Hopkins et al., 2008; Lorenzi et al., 2009; Lorenzi et al., 2006; Moore, 2008; Moore et al., 2006; Stickney et al., 2007). The inability of listeners with HI to make use of temporal fine-structure cues is currently a topic of considerable study, and current CI processing strategies are well-known for discarding all (or nearly all) temporal fine-structure information, and providing only envelope cues to the user.

Although there is ample evidence to support the importance of temporal fine structure and F0 for speech understanding, it is important to note that the redundancy of speech makes temporal fine structure (and F0) neither necessary nor sufficient for good speech reception. It is also the case that not all researchers have argued for the importance of temporal fine structure in the release from masking in modulated backgrounds. For example, when a speech-plus-noise mixture was high-pass filtered at 1500 Hz, the release from masking obtained when the background was modulated was similar to that obtained when the same mixture was low-pass filtered at 1200 Hz (Oxenham and Simonson, 2009). The authors also measured frequency selectivity by obtaining F0 difference limens (DLs) of harmonic complexes that were filtered using the same filter characteristics, and found that the DLs for the high-passed complexes were worse than those for the low-pass complexes by about a factor of 10 (F0 DLs increased from about 1% in the low-pass condition to about 10% in the high-pass condition). Thus, they concluded that F0 was likely not a useful cue in the high-pass conditions, and therefore was not responsible for the release from masking they observed (Oxenham and Simonson, 2009). The results are difficult to interpret, however. Although the DLs are considerably poorer in the high-pass condition than in the low-pass condition, differences in F0 of 10% or more in the high-passed complex were nevertheless detectable. The authors report that the mean F0 of the target talker was 110 Hz, and the standard deviation was 24 Hz, indicating that F0 variation in the target speech commonly exceeded 10% of the mean F0, which was the average DL for the high-pass condition. Thus, this seems to indicate that the F0 variation may have been detectable at least some of the time, even in the high-pass condition where frequency selectivity was greatly reduced.
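The arithmetic behind this argument can be made explicit. The sketch below converts the 10% difference limen into Hz and estimates how often the talker's F0 would lie more than one DL from the mean. The normal distribution of F0 values is an assumption introduced here for illustration; Oxenham and Simonson (2009) report only the mean and standard deviation.

```python
import math

mean_f0_hz = 110.0   # target talker's mean F0, from the text
sd_f0_hz = 24.0      # standard deviation of F0, from the text
dl_fraction = 0.10   # high-pass F0 difference limen (about 10%)

# Smallest F0 excursion from the mean that should be detectable.
dl_hz = dl_fraction * mean_f0_hz

# ASSUMPTION: F0 values are normally distributed around the mean.
z = dl_hz / sd_f0_hz
fraction_exceeding = 1.0 - math.erf(z / math.sqrt(2.0))

print(f"DL = {dl_hz:.0f} Hz, SD/mean = {sd_f0_hz / mean_f0_hz:.0%}")
print(f"Fraction of F0 values beyond one DL of the mean: {fraction_exceeding:.2f}")
```

Under that assumption, roughly two thirds of the F0 values fall more than one DL away from the mean, which is consistent with the suggestion that F0 variation was detectable at least some of the time.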

Despite the conclusions drawn by Oxenham and Simonson (2009), there is evidence from many sources that the availability of the target talker's F0 helps improve intelligibility, particularly in competing speech. F0 has been shown to be important for several linguistic cues, including voicing (Holt et al., 2001; Whalen et al., 1993), lexical boundaries (Spitzer et al., 2007), and manner of articulation (Faulkner and Rosen, 1999). However, it is also an important acoustic cue. In this case, it is useful to consider separately two cues that are often classified under the general term F0: the static mean F0, and the dynamic variations in F0. Mean F0 is what is typically thought of when describing the differences in F0 between males and females. F0 variation is the change in F0 that occurs in time across an utterance, and can be thought of as what is removed by speaking in monotone.

A. Mean F0

Mean F0 is a well-known cue for speech intelligibility in the presence of competing speech, and it has been shown that intelligibility in listeners with normal hearing increases as mean F0 separation (the difference in mean F0 between target and masker) increases for both vowels (Assmann and Summerfield, 1990; Culling and Darwin, 1993b) and sentences (Assmann, 1999; Bird and Darwin, 1997; Brokx and Nooteboom, 1982; Oxenham and Simonson, 2009; Summers and Leek, 1998). However, there has generally been no benefit to intelligibility from mean F0 separation between target and masker observed in listeners with HI (Summers and Leek, 1998) or in either real or simulated CI listening conditions (Stickney et al., 2004). On the other hand, CI patients have shown some ability to discriminate gender when mean F0 separation was large (Fu et al., 2005). In this study, a closed set of monosyllables was produced by 10 males and 10 females. The 5 males with the highest mean F0 were grouped with the 5 females with the lowest mean F0, and the other 10 talkers formed a second group. Thus, there was one group in which the average mean F0 difference between males and females was small (10 Hz), and another group in which the average mean F0 difference between males and females was large (101 Hz). The participants' task was to identify the gender of the talker. Results showed that identification performance by CI patients was about 94 percent correct when mean F0 difference between males and females was large, and about 68 percent correct when it was small (Fu et al., 2005). It is interesting to note that even in the more difficult condition, performance was still above chance, although the small number of patients tested precluded any statistical analysis.

There have also been a few simulated CI listening studies that have varied mean F0 separation between target and masker by using different speech maskers (Brown and Bacon, 2009b; Cullington and Zeng, 2008). Although both of these studies showed significant differences in speech intelligibility performance with differences in mean F0 separation, they both also used vocoders having sinusoidal carriers, which may provide more pitch information than vocoders using noise-band carriers. In addition, neither of these studies manipulated mean F0 separation per se, but rather used different background talkers. Because the speech materials in these experiments were sentences, this makes interpretation of the effects of mean F0 separation difficult, because more than just mean F0 may vary using this manipulation. For example, speech from different talkers may have been produced with different speaking rates. Talkers may also differ in speaking style (‘conversational’ versus ‘clear’), which has been shown to be a significant factor for speech understanding in both actual CI processing and in simulations of CI processing (Liu et al., 2004). Indeed, both studies conclude that there is not a simple relationship between the amount of masking from speech maskers and mean F0 separation (Brown and Bacon, 2009b; Cullington and Zeng, 2008).

B. F0 Variation

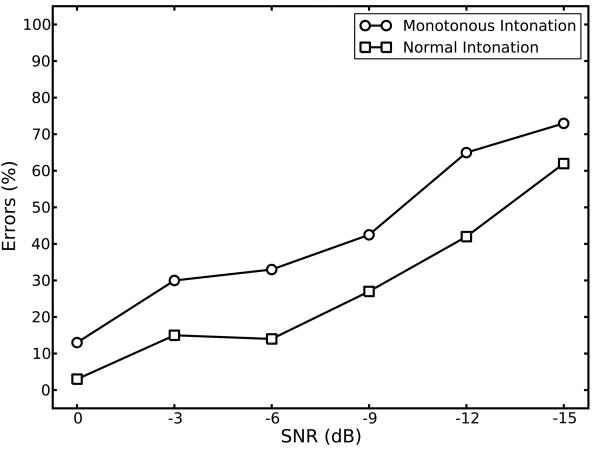

The effects of F0 variation have been shown to be beneficial for speech reception as well. For example, a relatively early paper demonstrates this effect for listeners with normal hearing (Brokx and Nooteboom, 1982), although it is not a point of emphasis in the paper. A comparison of their Figures 3 and 5 shows that when the target and masker had different mean F0s (‘DIFFERENT PITCH’ conditions), the percentage of errors decreased by about 15 percentage points on average across SNRs when intonation was normal (with F0 variations) compared to monotonous productions (without F0 variations). Fig. 2 re-plots these data for convenience. The fact that almost no benefit from F0 variation is observed in the ‘SAME PITCH’ conditions is not surprising, and is likely due to confusion arising from an inability of listeners to discriminate the variations of the target F0 from those of the masker F0. More recently, F0 variation was removed from target speech, which was then presented in a background of either speech-shaped noise or a single talker (Binns and Culling, 2007). While SRTs in speech-shaped noise were equivalent whether the target talker's F0 variation was or was not present, they improved by 2 dB in the single-talker background when the F0 of the target speech varied normally, as compared to when it did not vary at all.

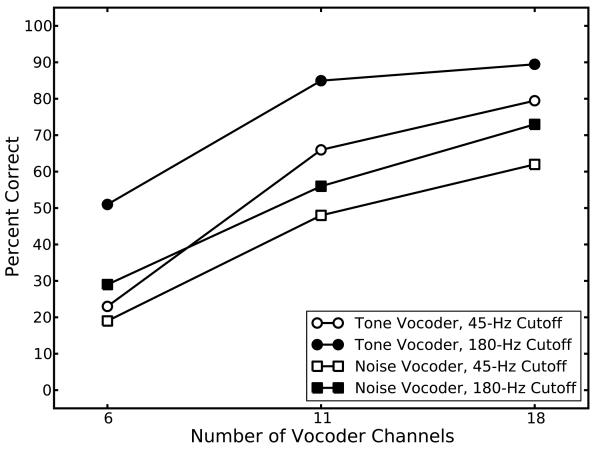

Fig. 3.

Data estimated from Fig. 3 in Stone et al. (2008). Mean percent correct intelligibility as a function of number of vocoder channels. Plots with square symbols represent performance when the vocoder used noise band carriers; plots with circles represent sinusoidal carriers. Open symbols represent performance when the cutoff frequency of the envelope extraction filter was 45 Hz, whereas filled symbols represent performance when the cutoff was 180 Hz.

Fig. 2.

Data estimated from Figs. 3 and 5 in Brokx and Nooteboom (1982). Percent errors as a function of SNR when intonation was either monotonous (circles) or normal (squares). The percent errors decreased by an average of about 15 percentage points across SNRs with the addition of normal intonation.

Indirect evidence for a benefit to speech reception from F0 variation may be seen in the results of a few experiments that have noted significant differences in performance depending on the vocoder implementation used. Broadly, vocoders that use sinusoidal carriers have shown more benefit from F0 variation than those that use noise-band carriers (Stone et al., 2008; Whitmal et al., 2007), perhaps because of better F0 representation provided by the sinusoidal carriers (Stone et al., 2008). For example, speech intelligibility in the presence of a competing talker was measured using both tone and noise vocoders (Stone et al., 2008). Although mean F0s are not reported, the target and masker were both males who had considerable overlap in the reported ranges of F0. In addition to vocoder carrier type, the low-pass cutoff frequency of the envelope extraction filters was also varied to be either 45 Hz or 180 Hz. This manipulation provided a signal that either contained (180 Hz) or did not contain (45 Hz) F0 information from periodicity cues in the envelope. While the differences in performance due to envelope cutoff frequency were relatively small (around 10 percentage points) when the vocoder used noise-band carriers, the same differences were as much as 20-25 percentage points with the sinusoidal vocoder (see Fig. 3). These results seem to indicate that the F0 cue was better preserved when the vocoder carriers were tones. In addition to cutoff frequency, the slope of the envelope extraction filter has also been shown to affect intelligibility (Healy and Steinbach, 2007) when sinusoidal carriers are used. In this study, speech reception was measured using a 3-channel sinusoidal vocoder and a male target talker. The cutoff frequency of the envelope extraction filter was either 16 or 100 Hz. When the slope of the envelope extraction filter was decreased from 96 to 12 dB/octave, performance improved significantly (Healy and Steinbach, 2007).
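Why the cutoff and slope of the envelope filter matter for F0 can be illustrated by computing how much a low-pass filter attenuates a component at F0. The sketch below assumes a Butterworth magnitude response (6 dB/octave per filter order) and an F0 of 110 Hz; neither assumption is taken from Stone et al. (2008) or Healy and Steinbach (2007), whose filter designs are not described here.

```python
import math


def butterworth_gain(freq_hz, cutoff_hz, slope_db_per_octave):
    """Magnitude response of a Butterworth low-pass filter whose
    asymptotic slope is 6 dB/octave per filter order."""
    order = slope_db_per_octave / 6.0
    return 1.0 / math.sqrt(1.0 + (freq_hz / cutoff_hz) ** (2.0 * order))


def gain_db(gain):
    """Convert a linear gain to decibels."""
    return 20.0 * math.log10(gain)


f0_hz = 110.0  # ASSUMPTION: illustrative male F0, not from either study

# Envelope cutoff below vs. above F0 (a 12 dB/octave slope is assumed).
for cutoff in (45.0, 180.0):
    g = butterworth_gain(f0_hz, cutoff, 12.0)
    print(f"cutoff {cutoff:5.0f} Hz: {gain_db(g):6.1f} dB at F0")

# Steep vs. shallow slope with a 100-Hz cutoff just below F0.
for slope in (96.0, 12.0):
    g = butterworth_gain(f0_hz, 100.0, slope)
    print(f"slope {slope:4.0f} dB/oct: {gain_db(g):6.1f} dB at F0")
```

Under these assumptions, a cutoff above F0 or a shallower slope leaves more F0-rate periodicity in the envelope, which is in line with the direction of the effects reported in both studies.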

Another recent study (Chatterjee and Peng, 2008) compared the ability of listeners with normal hearing listening to a vocoder, and of CI users, to discriminate intonation patterns in speech. English-speaking listeners heard the word ‘popcorn,’ which was resynthesized to contain either a rising intonation pattern, which in English would indicate a question, or a falling intonation pattern, which would indicate a statement. Their task was to indicate whether the stimulus was a statement or question. Results clearly demonstrated that listeners could discriminate the different intonation patterns. These researchers (Chatterjee and Peng, 2008) found a similar pattern of results for listeners with CIs as well, despite considerable variability across users in their ability to discriminate the patterns of intonation.

One report (Wei et al., 2004) that examined the ability to use F0 variation in both listeners with CIs and listeners with normal hearing listening to vocoded stimuli used native speakers of Mandarin Chinese, which is a tonal language. An advantage of Mandarin is that it contains words that vary in meaning, but differ acoustically only in F0 contour. For example, the syllable /ma/ can have one of four different meanings depending on the contour. Under both CI and vocoder processing, the authors varied the number of channels from 1 to 20, and asked listeners to perform a tone recognition task. In CI patients, they found that performance ranged from near chance (25 percent correct) with only one active electrode to about 70 percent correct with 10 active channels (Wei et al., 2004). Not every CI patient could perform the task, however, as one patient never achieved better than chance performance. In vocoder simulations, tone recognition was about 70 percent correct even with a single channel. These data indicate that it is at least possible for F0 variation to be used as a cue by some CI patients.

However, these data were collected in quiet and it is unclear whether, with current CI and hearing aid technologies, the F0 cue can be as salient in background noise. A considerable amount of attention has been paid over the years to developing ways of providing F0 to listeners who stand to benefit. These efforts are outlined in the next section.

III. Providing F0 as a Cue for Listeners with Impairment

As we have seen, F0 is important for speech understanding, particularly in difficult listening conditions, such as in the presence of a competing background. However, the individuals who perform the poorest in background noise and thus stand to benefit the most from F0 (i.e., listeners with HI and users of CIs) are often the ones who are least able to take advantage of the cue. As a result, over the years many researchers have explored ways of providing F0 information, in addition to other cues, to these listeners.

A. Listeners with HI

Various studies have examined the efficacy of delivering F0 to listeners with HI as an aid to lipreading. One possible advantage to providing an F0 cue as opposed to simply amplifying speech is that the narrow-band nature of the F0 cue (which is typically delivered using a pure-tone carrier) makes it possible for higher sound pressure levels to be achieved for a given amount of gain, because all of the amplification can be concentrated in the frequency region in which F0 occurs.

In one study, lipreading performance by profoundly deafened adults was measured either alone, or augmented by acoustically delivered F0, which was extracted using a laryngograph (Rosen et al., 1981). Subjects practiced with another participant over a number of sessions on a connected discourse task. Each partner was in an adjoining sound booth, with a window between so they could see each other. The job of the participant with HI was to read the lips of his or her partner, and repeat what they were saying. The talker could receive auditory feedback from the receiver, but not vice-versa, so that if there was an error in communication, the receiver could indicate this to the talker and they could try again. Performance was measured in the number of words accurately repeated per minute. While only small improvements to lipreading speed were observed over time without the F0 cue, the rate of lipreading increased consistently over time in the presence of F0, and by the end of testing, was shown to be faster than lipreading alone by as much as 2-3 times (Rosen et al., 1981).

Others have examined similar procedures, using F0 extracted from the acoustic signal, rather than with a laryngograph. Although less accurate, this type of extraction is a more realistic implementation, since laryngographs are somewhat infeasible for everyday interactions. For example, when lipreading performance was measured in listeners with normal hearing, the addition of F0 information provided 50 percentage points of enhancement over lipreading alone (Kishon-Rabin et al., 1996). These researchers also examined the efficacy of delivering the F0 cue to individuals with HI using a tactile display (Kishon-Rabin et al., 1996). Users wore the device on the forearm, and it delivered F0 variation via both vibro-tactile means (the rate of a pulse train used to vibrate the device was modulated in frequency) and spatial-tactile means (the pulse train was delivered via one of 16 solenoids, arranged linearly on the device). In this way, users received both a rate cue, in the form of the modulation rate of the pulse train, and a place cue, conveyed as the place of stimulation on the forearm. Results showed that the device provided an average of 11 percentage points of benefit over lipreading alone. The authors ruled out the accuracy of the extraction method as a limiting factor, and thus concluded that there were limitations inherent in the tactile delivery method that were responsible for the modest enhancements they observed with the device (Kishon-Rabin et al., 1996).

It has also been shown that in addition to F0 variation, voicing and low-frequency amplitude envelope information can provide benefit as cues to lip reading (Grant et al., 1985). Along these lines, another study examined the benefits to lipreading from a wearable aid that presented both F0 and amplitude envelope information acoustically to profoundly deafened individuals (Faulkner et al., 1992). The aid was shown to provide more benefit than simple amplification for 3 of 5 subjects tested in both consonant identification and connected discourse tracking.

B. CI Patients

Providing pitch information to CI users is a difficult problem. In designing the devices, tradeoffs have had to be made and as we have seen, one tradeoff has been the near elimination of fine-structure cues in favor of envelope cues. As a result, most pitch cues are unusable for most CI patients. There are several reasons for this. First, there is a limit to the number of useful concurrent electrodes. Of course, more electrodes can be added to the electrode array, but they will not necessarily benefit the user. This is because of a phenomenon known as current spread. It is difficult to control the electrical current once it is discharged from the electrode, and a broad region of the basilar membrane is usually stimulated. Thus, if two electrodes are very close in proximity, the user will not be able to distinguish which electrode was stimulated. As a result, there are only a certain number of effective channels that can be used along the length of the basilar membrane. When one considers that the electrodes are fixed in place, it becomes clear that only very gross frequency resolution is possible using standard CI configurations.

Numerous attempts have been made over the years to provide an improved representation of F0 in CIs. Early devices used various techniques to deliver F0, as well as F1 and F2 cues to patients fitted with a CI (Blamey et al., 1987; Blamey et al., 1984; Clark et al., 1987; Dowell et al., 1985; Dowell et al., 1986; Seligman et al., 1984). One technique uses what is known as rate pitch. Typically, the train of electrical pulses delivered to each electrode has a fixed rate. When F0 is represented by rate pitch in a CI, the pulse train of an electrode is modulated in frequency, such that the rate of the electric pulses varies with changes in F0. Another method can be described as place pitch. This method uses a group of contiguous electrodes, and changes in frequency are coded by changes in the electrode being discharged. Early devices demonstrated significant benefits in speech reception by using a combination of these two methods. For example, some early CIs were designed to deliver F0 in the form of rate pitch (Blamey et al., 1984; Clark et al., 1987; Dowell et al., 1985), and the first and second formants (F1, F2) in the form of place pitch (Blamey et al., 1987; Clark et al., 1987; Dowell et al., 1986; Seligman et al., 1984). Results showed that speech reception was best when all three cues (F0, F1, and F2) were represented, rather than either F0 alone, or F0 and F2 (Blamey et al., 1984).
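The rate-pitch idea, in which the pulse rate on an electrode follows F0, can be sketched with a simple phase accumulator. This is an illustration only and is not drawn from any of the devices cited above; the function name, the 10-kHz time base, and the rising F0 contour are assumptions.

```python
def rate_pitch_pulses(f0_per_sample_hz, sample_rate_hz):
    """Return the sample indices of pulses whose rate follows an F0 contour."""
    pulses, phase = [], 0.0
    for n, f0 in enumerate(f0_per_sample_hz):
        phase += f0 / sample_rate_hz  # cycles elapsed during this sample
        if phase >= 1.0:              # one full F0 period has passed
            pulses.append(n)
            phase -= 1.0
    return pulses


# Hypothetical contour: F0 rises linearly from 100 to 200 Hz over 1 s.
fs = 10000
contour = [100.0 + 100.0 * n / fs for n in range(fs)]
pulses = rate_pitch_pulses(contour, fs)
intervals = [b - a for a, b in zip(pulses, pulses[1:])]

# Inter-pulse intervals shrink as F0 rises.
print(f"first interval: {intervals[0] / fs * 1000:.1f} ms")
print(f"last interval:  {intervals[-1] / fs * 1000:.1f} ms")
```

A place-pitch scheme would instead keep the pulse rate fixed and change which electrode in a contiguous group is stimulated.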

An interesting place-pitch strategy has been employed recently to encode F0 with some success (Geurts and Wouters, 2004). This technique involves delivering current to a pair of adjacent electrodes, and weighting the current to each. If the current to both electrodes is weighted equally, then the pitch percept will be between those obtained when either electrode is stimulated alone (McDermott and McKay, 1994). As the weighting shifts to the more basal electrode, for example, the pitch percept will shift correspondingly higher. This type of stimulation has been called current steering (Koch et al., 2007) or virtual channels (Wilson et al., 2004).
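The weighting scheme described above can be sketched as a split of a fixed total current between two adjacent electrodes. The steering function reflects the description in the text; the mapping from F0 to the weighting coefficient is purely hypothetical (a log-frequency interpolation between two assumed bounds) and is not the method of Geurts and Wouters (2004).

```python
import math


def steer_current(total_current, alpha):
    """Split a total current between two adjacent electrodes.

    alpha = 0 puts all current on the apical electrode and alpha = 1 puts
    all of it on the basal electrode; intermediate values are intended to
    evoke intermediate pitch percepts.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    apical = (1.0 - alpha) * total_current
    basal = alpha * total_current
    return apical, basal


def f0_to_alpha(f0_hz, f_low_hz, f_high_hz):
    """HYPOTHETICAL mapping: position of F0 on a log-frequency axis
    between two bounds, clipped to [0, 1]."""
    x = math.log(f0_hz / f_low_hz) / math.log(f_high_hz / f_low_hz)
    return min(1.0, max(0.0, x))


# Higher F0 shifts the weighting toward the basal electrode (higher pitch).
for f0 in (100.0, 140.0, 200.0):
    alpha = f0_to_alpha(f0, 100.0, 200.0)
    apical, basal = steer_current(1.0, alpha)
    print(f"F0 {f0:5.0f} Hz: apical {apical:.2f}, basal {basal:.2f}")
```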

Another place-pitch implementation is known as current focusing, or tripolar stimulation (Miyoshi et al., 1996). This strategy is an attempt to address the problem of current spread. Three adjacent electrodes are used, with the two flanking electrodes carrying the same current load as the center electrode, but reduced in amplitude, and inverted in phase. The idea is that the phase-inverted current from the flanking electrodes will cancel the current from the center electrode as it spreads laterally, thus ‘focusing’ the current. An examination of cortical activity has confirmed a narrowed current field from tripolar stimulation (Bierer and Middlebrooks, 2002), and better spectral resolution than standard (monopolar) stimulation strategies (Berenstein et al., 2008) has also been reported. However, there has been little, if any, improvement to speech intelligibility as a result of this strategy reported thus far (Mens and Berenstein, 2005). The nature of tripolar stimulation seems to make it a potentially useful mechanism for delivering a pitch cue to CI patients, since in theory, it would be possible to dynamically adjust the current to the two flanking electrodes to ‘steer’ the current from the center electrode according to changes in the F0 cue. However, the efficacy of tripolar stimulation in this regard remains to be seen.
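The current pattern of tripolar stimulation, and the steering idea raised at the end of the paragraph above, can be sketched as follows. Both parameters are hypothetical and introduced only for illustration: `compensation` sets how much of the center current is returned through the flanking electrodes, and `steer` shifts that return current toward one flank. Neither is taken from the cited studies.

```python
def tripolar_currents(center_current, compensation=1.0, steer=0.0):
    """Return currents on (apical flank, center, basal flank).

    compensation: HYPOTHETICAL fraction of the center current returned
        through the two flanking electrodes (1.0 = all of it).
    steer: HYPOTHETICAL value in [-1, 1] that shifts the return current
        toward the apical (-) or basal (+) flank.
    """
    if not -1.0 <= steer <= 1.0:
        raise ValueError("steer must lie in [-1, 1]")
    flank_total = -compensation * center_current  # inverted in phase
    apical = flank_total * (1.0 - steer) / 2.0
    basal = flank_total * (1.0 + steer) / 2.0
    return apical, center_current, basal


# Symmetric case: each flank carries half the center current, inverted.
print(tripolar_currents(100.0))

# Asymmetric case: more of the return current flows through the basal flank.
print(tripolar_currents(100.0, steer=0.5))
```

Whether such an asymmetry would produce a usable pitch cue is an open question, as noted above.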

Finally, various attempts have been made to improve the F0 representation in CIs in the form of envelope pitch, in which the electric pulses are amplitude modulated with an extracted F0 (Green et al., 2004; Green et al., 2005; Milczynski et al., 2009). While improvements have been shown for pitch ranking and melody recognition (Laneau et al., 2004; Milczynski et al., 2009), there has been limited benefit to speech reception reported thus far (Green et al., 2005).

The most common obstacle to the widespread adoption of F0-based solutions may be the difficulty of extracting features (including F0) in noise. Although this is an issue that has received a great deal of attention, and many signal processing refinements have been made over the years to improve the accuracy of feature extraction algorithms, a robust solution has not yet been found.

C. EAS Patients

We have established that the limited representation of F0 by modern CIs makes it difficult for CI users to understand speech in the presence of background noise. While much work has been devoted to finding ways to improve F0 representation by the devices, perhaps the best way to deliver F0 to those CI patients who retain some residual hearing is acoustically. For both simulated and real implant processing, the addition of low-frequency acoustic stimulation often enhances speech understanding, particularly when listening to speech in the presence of competing speech (Dorman et al., 2005; Kong et al., 2005; Turner et al., 2004). The benefit of this so-called electric-acoustic stimulation (EAS) occurs even when the acoustic stimulation alone provides little or no intelligibility (i.e., no words correctly identified).

Several recent papers have demonstrated that CI patients may need residual hearing only up to about 125 Hz to achieve an EAS benefit (Cullington and Zeng, 2009; Zhang et al., 2009). In one study, for example, EAS patients listened to both sentences in noise and monosyllables in quiet (Zhang et al., 2009). The acoustically delivered stimuli were low-pass filtered at 750, 500, 250, or 125 Hz, or presented broadband and filtered by each listener's audiometric configuration. Results showed that the majority of the EAS benefit was derived from information present below 125 Hz. With monosyllables, for example, scores in the electric-only, EAS-125, and EAS-broadband conditions were 56, 78, and 88 percent correct, respectively (Zhang et al., 2009). Given that the talker was a male with a mean F0 of 123 Hz, it seems clear that F0 provided the majority of the EAS benefit observed in this study.
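The claim that most of the EAS benefit came from below 125 Hz can be checked against the monosyllable scores quoted above. The sketch below simply carries out that arithmetic; the variable names are illustrative.

```python
# Monosyllable scores (percent correct) quoted from Zhang et al. (2009).
electric_only = 56.0
eas_125 = 78.0
eas_broadband = 88.0

# Benefit, in percentage points, relative to electric-only stimulation.
benefit_125 = eas_125 - electric_only
benefit_broadband = eas_broadband - electric_only

# Share of the full EAS benefit already obtained with the 125-Hz low-pass.
share_below_125 = benefit_125 / benefit_broadband

print(f"EAS-125 benefit: {benefit_125:.0f} points")
print(f"EAS-broadband benefit: {benefit_broadband:.0f} points")
print(f"Share of benefit from below 125 Hz: {share_below_125:.0%}")
```

On these figures, a little over two thirds of the total benefit is already present when the acoustic signal is limited to frequencies below 125 Hz.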

Segregation has been suggested as a possible mechanism for the benefits of EAS in noise (Chang et al., 2006; Kong et al., 2005; Qin and Oxenham, 2006). According to this theory, listeners are able to combine the relatively weak pitch information conveyed by the electric stimulation with the stronger pitch cue from the low-frequency acoustic region to segregate target and masker. This is a reasonable hypothesis, because there are results in other contexts that show that F0 aids in the segregation of competing talkers (Assmann, 1999; Assmann and Summerfield, 1990; Bird and Darwin, 1997; Brokx and Nooteboom, 1982; Culling and Darwin, 1993a). Recent reports (Chang et al., 2006; Qin and Oxenham, 2006) have provided evidence that F0 is likely to play an important role independent of any role that the first formant may play. For example, significant benefit was observed when vocoder stimulation was supplemented by 300-Hz low-pass speech (Chang et al., 2006; Qin and Oxenham, 2006). This seems to suggest that F0 is important, since it is very unlikely that the first formant is present below 300 Hz (Hillenbrand et al., 1995).

The majority of the published work on this topic points to F0 as a useful cue under EAS conditions. Much of the evidence supporting F0 has been rather indirect, however, and there is not complete agreement as to its importance. For example, the first published work that directly examined the contributions of F0 did so under simulated EAS conditions (Kong and Carlyon, 2007), and showed that F0 provided no benefit. These researchers extracted the dynamic changes in F0 from target speech, and used the F0 information to frequency modulate a harmonic complex having an F0 equal to the target talker's mean F0. The complex was then combined with a target-plus-background mixture processed through a vocoder which simulated electric stimulation. The rationale was that if an EAS benefit is observed using the modulated complex, it can be concluded that F0 is a useful cue. The authors also examined the independent contributions of the voicing cue (by turning an unmodulated complex on and off with voicing) and the amplitude envelope (by amplitude modulating the complex with the amplitude envelope of the target speech), and found that while both of those cues provided benefit over vocoder-only stimulation, F0 did not. They concluded that because the F0 cue was not shown to be beneficial in their study, segregation was likely not the mechanism for the benefits of EAS. Instead, they suggested glimpsing as an explanation, wherein the low-frequency stimulus (target speech or the amplitude-modulated harmonic complex) provides an indication of when to listen in the vocoder region. Because of comodulation inherent in speech, when the level of the target is high in the low-frequency region, there is a greater likelihood that the signal-to-noise ratio is more favorable in the vocoder region.
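The frequency-modulated harmonic complex described above can be illustrated with a minimal sketch. It is not the stimulus-generation code of Kong and Carlyon (2007); the function name `fm_harmonic_complex`, the number of harmonics, the sampling rate, and the synthetic F0 contour are all assumptions chosen for illustration. The sketch integrates the instantaneous F0 to obtain the phase of the fundamental, so that every harmonic follows the contour.

```python
import numpy as np

def fm_harmonic_complex(f0_contour_hz, fs, n_harmonics=5):
    """Synthesize a harmonic complex whose fundamental follows f0_contour_hz.

    f0_contour_hz holds one F0 value (Hz) per output sample.
    n_harmonics is an illustrative choice, not a value from the source.
    """
    f0 = np.asarray(f0_contour_hz, dtype=float)
    # Integrate instantaneous frequency to obtain the phase of the fundamental.
    phase = 2.0 * np.pi * np.cumsum(f0) / fs
    # Harmonic k uses k times the fundamental phase, so all components glide together.
    harmonics = [np.sin(k * phase) for k in range(1, n_harmonics + 1)]
    complex_tone = np.sum(harmonics, axis=0)
    # Normalize to a peak amplitude of 1.
    return complex_tone / np.max(np.abs(complex_tone))

fs = 16000
t = np.arange(int(0.5 * fs)) / fs
# Hypothetical contour: 120-Hz mean F0 with a slow +/-10-Hz excursion.
f0_contour = 120.0 + 10.0 * np.sin(2.0 * np.pi * 2.0 * t)
stimulus = fm_harmonic_complex(f0_contour, fs)
```

In an actual experiment the contour would come from an F0 tracker applied to the target speech, and the complex would be gated off during unvoiced segments.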

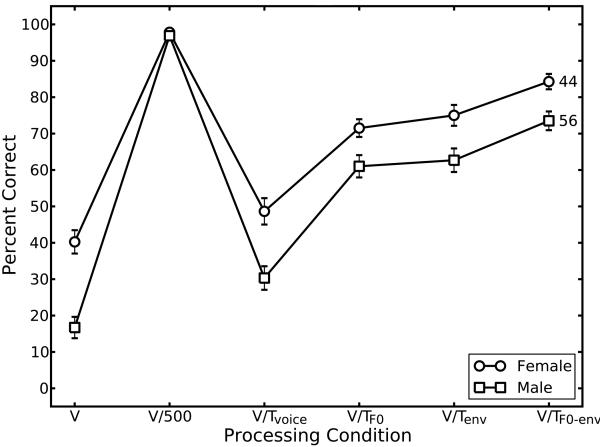

A similar experimental design was employed by Brown and Bacon (2009b) using a pure-tone carrier, as opposed to a harmonic complex. These simulation data showed that each of the three cues tested (voicing, amplitude envelope, and F0) contributed a significant amount of benefit under simulated EAS conditions, and that combining all three produced the greatest benefit. The amount of benefit to speech intelligibility was between 24 and 57 percentage points over vocoder-only stimulation, depending on the target and masker materials used. In particular, the tone provided more benefit when the sentence context was high. Fig. 4 shows data collected using a procedure that was identical to that used by Brown and Bacon (2009b), but with high-context sentence materials. Each plot represents percent correct word recognition for CUNY sentences, spoken by a male talker, in either a different male background (squares) or a female background (circles). Processing conditions are depicted along the x axis, and include vocoder only (V), vocoder plus 500-Hz low-pass target speech (V/500), vocoder plus a tone with a frequency equal to the mean F0 of the target talker that was turned on and off with voicing (V/Tvoice), vocoder plus a tone modulated in frequency with the target talker's F0 (V/TF0), vocoder plus a tone modulated in amplitude with the amplitude envelope of the low-pass target speech (V/Tenv), and vocoder plus a tone carrying all three cues (V/TF0-env). Note that the voicing cue is present in all of the tone conditions. The values to the right of each plot are the amounts of improvement from the frequency- and amplitude-modulated tone in each background over vocoder-only performance. Although the benefit due to F0 and the amplitude envelope is greater here with the high-context sentences than we observed previously with low-context sentences (Brown and Bacon, 2009b), the pattern of results is the same: both F0 and the amplitude envelope contribute nearly equivalent amounts of benefit over vocoder only, and the combination of the two cues provides more benefit than either cue alone.

Fig. 4.

Mean percent correct intelligibility. Squares represent performance in a male background (different talker from the target), circles represent a female background. Processing conditions are depicted along the x axis, and include vocoder only (V), vocoder plus 500-Hz low-pass target speech (V/500), vocoder plus a tone with a frequency equal to the mean F0 of the target talker that was turned on and off with voicing (V/Tvoice), vocoder plus a tone modulated in frequency with the target talker's F0 (V/TF0), vocoder plus a tone modulated in amplitude with the amplitude envelope of the low-pass target speech (V/Tenv), and vocoder plus a tone carrying all three cues (V/TF0-env). Note that the voicing cue is present in all of the tone conditions. The values to the right of each plot are the amounts of improvement from the frequency- and amplitude-modulated tone (V/TF0-env) in each background over vocoder-only performance (V).
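The four tone conditions listed in the caption differ only in which cues the tone carries. The sketch below shows one way such tones could be constructed; it is an illustration under stated assumptions, not the processing used by Brown and Bacon (2009b). The helper names `smooth` and `cue_tone`, the moving-average envelope smoother, and the synthetic F0, voicing, and envelope tracks are all hypothetical stand-ins for quantities that would be measured from the target speech.

```python
import numpy as np

def smooth(x, fs, window_ms=20.0):
    """Moving-average smoother standing in for a low-pass envelope filter."""
    n = max(1, int(fs * window_ms / 1000.0))
    return np.convolve(x, np.ones(n) / n, mode="same")

def cue_tone(f0_hz, voiced, envelope, fs, use_f0=True, use_env=True):
    """Build a tone gated by voicing that optionally carries F0 and envelope cues.

    f0_hz, voiced (0 or 1), and envelope are per-sample arrays of equal length.
    """
    f0_hz = np.asarray(f0_hz, dtype=float)
    voiced = np.asarray(voiced, dtype=float)
    mean_f0 = f0_hz[voiced > 0].mean()
    if use_f0:
        # Follow the F0 contour during voiced segments.
        inst_freq = np.where(voiced > 0, f0_hz, mean_f0)
    else:
        # Without the F0 cue the tone stays fixed at the talker's mean F0.
        inst_freq = np.full_like(f0_hz, mean_f0)
    phase = 2.0 * np.pi * np.cumsum(inst_freq) / fs
    # The voicing gate is always applied; the envelope cue is optional.
    gain = voiced * (np.asarray(envelope, dtype=float) if use_env else 1.0)
    return gain * np.sin(phase)

fs = 16000
t = np.arange(fs) / fs
# Synthetic stand-ins for tracks that would be extracted from target speech.
voiced = ((t > 0.2) & (t < 0.8)).astype(float)
f0 = 110.0 + 15.0 * np.sin(2.0 * np.pi * 1.5 * t)
envelope = smooth(np.abs(np.sin(2.0 * np.pi * 4.0 * t)), fs)

conditions = {
    "Tvoice": cue_tone(f0, voiced, envelope, fs, use_f0=False, use_env=False),
    "TF0": cue_tone(f0, voiced, envelope, fs, use_f0=True, use_env=False),
    "Tenv": cue_tone(f0, voiced, envelope, fs, use_f0=False, use_env=True),
    "TF0-env": cue_tone(f0, voiced, envelope, fs, use_f0=True, use_env=True),
}
```

Each tone would then be added to the vocoder output to form the corresponding V/T condition.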

The effectiveness of the tone has also been confirmed with EAS patients (Brown and Bacon, 2009a), who showed an average benefit over electric-only stimulation of 46 percentage points when the frequency- and amplitude-modulated tone was presented acoustically, as compared to 55 percentage points when the acoustic stimulus was target speech. It has also been reported that the presence of a second tone that carried F0 and amplitude envelope cues from the masker sentence had little to no effect on performance (Brown and Bacon, 2009b), and that the F0 and amplitude envelope cues of the target speech can be carried by a tone much lower in frequency than the mean F0 of the target talker with no loss in the amount of EAS benefit (Brown et al., 2009). This result in particular is strong evidence against segregation as an explanation for EAS. If listeners are indeed segregating target and masker by combining the strong F0 cue in the acoustic stimulation with the relatively weak pitch cue from the electric stimulation, then altering the pitch cue by shifting it down in frequency should reduce or eliminate the benefit. But these researchers showed that even when the F0 variation from a female target talker whose mean F0 was 213 Hz was applied to a tone with a frequency of 113 Hz, there was no decrease in the benefit due to the tone (Brown et al., 2009). As a result, the authors have argued against segregation as an explanation for the benefits of EAS, and support the glimpsing account proposed previously (Kong and Carlyon, 2007; Li and Loizou, 2008).

These results seem to indicate that it may be possible to provide F0 (and the amplitude envelope) to EAS patients who have very little residual hearing. For example, if a CI patient has residual hearing only up to about 100 Hz, typically no EAS benefit is observed, even with amplification. One reason for this lack of benefit is that the F0 of most talkers, particularly women and children (Peterson and Barney, 1952), would be too high in frequency to be audible. However, the benefits of EAS may be possible if F0 and amplitude envelope cues are applied to a tone that has been shifted down into a frequency region of audibility (Brown et al., 2009).
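The downward shift of an F0 contour can be sketched as follows. The text does not specify how the shift was computed, so the sketch offers two assumed alternatives: a linear shift that subtracts a constant number of Hz, and a ratio shift that scales the contour by a constant factor. The function name `shift_f0_contour` and the synthetic contour are hypothetical; only the 213-Hz and 113-Hz values come from the example in the text.

```python
import numpy as np

def shift_f0_contour(f0_hz, target_mean_hz, mode="linear"):
    """Move an F0 contour so that its mean equals target_mean_hz.

    The mean is taken over all supplied samples; in practice only voiced
    frames would be included.
    """
    f0 = np.asarray(f0_hz, dtype=float)
    mean_f0 = f0.mean()
    if mode == "linear":
        # Subtract a constant offset: excursions keep their size in Hz.
        return f0 - (mean_f0 - target_mean_hz)
    if mode == "ratio":
        # Scale by a constant factor: excursions keep their size in semitones.
        return f0 * (target_mean_hz / mean_f0)
    raise ValueError("mode must be 'linear' or 'ratio'")

fs = 16000
t = np.arange(fs) / fs
# Synthetic contour for a talker with a mean F0 near 213 Hz (illustrative).
f0_female = 213.0 + 20.0 * np.sin(2.0 * np.pi * 2.0 * t)
linear = shift_f0_contour(f0_female, 113.0, mode="linear")
ratio = shift_f0_contour(f0_female, 113.0, mode="ratio")
```

The two modes give the same mean but different excursion sizes, so the choice matters if the size of the F0 variation is itself thought to carry the benefit.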

IV. Conclusion

Complex waveforms such as speech contain, to a greater or lesser degree, both envelope information and temporal fine-structure information. In favorable listening situations, providing envelope cues only is often sufficient for good intelligibility. This point is illustrated clearly by the success of the modern CI, which is well known for discarding most fine-structure information in favor of amplitude envelope information. Users of CIs often perform quite well on speech intelligibility tasks in quiet (Dorman and Loizou, 1997; Dorman and Loizou, 1998). On the other hand, speech intelligibility by patients with CIs is typically poor in the presence of background noise (Nelson et al., 2003; Stickney et al., 2004). Indeed, background noise is a significant problem for listeners with HI and CI users, and these difficulties have been linked to reduced fine-structure processing, a common characteristic in both listeners with HI (Hall and Wood, 1984; Lorenzi et al., 2006; Moore et al., 2006), and those fitted with a CI (Fu and Nogaki, 2005; Stickney et al., 2007).

An important consequence of reduced fine-structure processing is an inability to glimpse a target in a fluctuating masker, which leads to poor performance on speech reception tasks in modulated noise (Lorenzi et al., 2009; Lorenzi et al., 2006). Although F0 has been shown to be important for speech understanding in a variety of settings, the cue seems to be particularly useful in difficult listening conditions in which fine-structure cues may be limited, such as those experienced by listeners with HI and users of CIs. Several researchers have cited an inadequate representation of F0 as one of the causes of the reduced speech reception often observed in these impaired populations (Festen and Plomp, 1990; Nelson and Jin, 2004; Qin and Oxenham, 2003; Qin and Oxenham, 2005; Stickney et al., 2007; Stickney et al., 2004; Summers and Leek, 1998), and although numerous attempts have been made to provide them with the F0 cue, much more work needs to be done.

Acknowledgments

This work was supported by grant DC008329 from the National Institute on Deafness and Other Communication Disorders. The authors would like to thank William A. Yost for helpful suggestions on an earlier version of the manuscript.

List of Abbreviations Used

- CI: Cochlear implant

- dB: Decibel

- DL: Difference limen

- EAS: Electric-acoustic stimulation

- F0: Fundamental frequency

- F1: First formant

- F2: Second formant

- HI: Hearing impairment

- SNR: Signal-to-noise ratio

- SRT: Speech reception threshold

Footnotes

For clarity, listeners with HI and CI patients are discussed separately throughout, despite the fact that both groups of individuals have HI.

The study of the perception of speech and other acoustic signals by users of CIs has been aided and accelerated by the use of envelope vocoders, which use a processing scheme similar to that of CIs. The main difference between CI processing and vocoder processing is that the electrical pulse train carriers of the CI are replaced with carriers consisting of either bands of noise or pure tones. Because vocoder processing is similar in many respects to CI processing, the vocoder is thought to be a reasonable simulation of CI processing (Dorman et al., 1997; Shannon et al., 1995).
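The vocoder processing described in this footnote can be sketched in a few lines. This is a simplified illustration, not the processing used in any of the cited studies: the brick-wall FFT filters, the 50-Hz envelope cutoff, the four-channel band edges, and the function names `bandpass_fft` and `tone_vocoder` are all assumptions. Pure-tone carriers are used here; noise-band carriers would replace the sinusoid with band-limited noise.

```python
import numpy as np

def bandpass_fft(x, fs, lo_hz, hi_hz):
    """Zero-phase brick-wall band-pass via the FFT (a simplification)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < lo_hz) | (freqs >= hi_hz)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))

def tone_vocoder(x, fs, edges_hz, env_cutoff_hz=50.0):
    """Envelope vocoder with a sine carrier at the geometric centre of each band."""
    x = np.asarray(x, dtype=float)
    t = np.arange(len(x)) / fs
    out = np.zeros_like(x)
    for lo, hi in zip(edges_hz[:-1], edges_hz[1:]):
        band = bandpass_fft(x, fs, lo, hi)
        # Envelope: full-wave rectification followed by low-pass filtering.
        env = bandpass_fft(np.abs(band), fs, 0.0, env_cutoff_hz)
        env = np.maximum(env, 0.0)  # clip small negative ripple
        carrier = np.sin(2.0 * np.pi * np.sqrt(lo * hi) * t)
        # The band's fine structure is discarded; only its envelope survives.
        out += env * carrier
    return out

fs = 16000
t = np.arange(fs) / fs
# Synthetic input: two tones with a slow 4-Hz amplitude modulation.
signal = (1.0 + np.sin(2.0 * np.pi * 4.0 * t)) * (
    np.sin(2.0 * np.pi * 300.0 * t) + 0.5 * np.sin(2.0 * np.pi * 2500.0 * t)
)
edges = np.geomspace(100.0, 6000.0, 5)  # four hypothetical analysis bands
vocoded = tone_vocoder(signal, fs, edges)
```

Because each band is reduced to its slowly varying envelope, the output preserves temporal envelope cues while removing most temporal fine structure, including a strong representation of F0.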

References

- Assmann PF. Fundamental frequency and the intelligibility of competing voices. Proc. 14th Int. Cong. of Phonetic Sci. 1999:179–182.

- Assmann PF, Summerfield Q. Modeling the perception of concurrent vowels: vowels with different fundamental frequencies. J. Acoust. Soc. Am. 1990;88:680–697. doi: 10.1121/1.399772.

- Bacon SP, Opie JM, Montoya DY. The effects of hearing loss and noise masking on the masking release for speech in temporally complex backgrounds. J Speech Lang Hear Res. 1998;41:549–563. doi: 10.1044/jslhr.4103.549.

- Berenstein CK, Mens LHM, Mulder JJS, Vanpoucke FJ. Current steering and current focusing in cochlear implants: comparison of monopolar, tripolar, and virtual channel electrode configurations. Ear Hear. 2008;29:250–260. doi: 10.1097/aud.0b013e3181645336.

- Bierer JA, Middlebrooks JC. Auditory cortical images of cochlear-implant stimuli: dependence on electrode configuration. J Neurophysiol. 2002;87:478–492. doi: 10.1152/jn.00212.2001.

- Binns C, Culling JF. The role of fundamental frequency contours in the perception of speech against interfering speech. J. Acoust. Soc. Am. 2007;122:1765. doi: 10.1121/1.2751394.

- Bird J, Darwin CJ. Effects of a difference in fundamental frequency in separating two sentences. Paper for the 11th Int. Conf. on Hear. 1997.

- Blamey PJ, Dowell RC, Brown AM, Clark GM, Seligman PM. Vowel and consonant recognition of cochlear implant patients using formant-estimating speech processors. J. Acoust. Soc. Am. 1987;82:48–57. doi: 10.1121/1.395436.

- Blamey PJ, Dowell RC, Tong YC, Brown AM, Luscombe SM, Clark GM. Speech processing studies using an acoustic model of a multiple-channel cochlear implant. J. Acoust. Soc. Am. 1984;76:104–110. doi: 10.1121/1.391104.

- Brokx J, Nooteboom S. Intonation and the perceptual separation of simultaneous voices. J. Phonetics. 1982;10:23–36.

- Brown CA, Bacon SP. Achieving Electric-Acoustic Benefit with a Modulated Tone. Ear Hear. 2009a;30:489–493. doi: 10.1097/AUD.0b013e3181ab2b87.

- Brown CA, Bacon SP. Low-frequency speech cues and simulated electric-acoustic hearing. J. Acoust. Soc. Am. 2009b;125:1658–1665. doi: 10.1121/1.3068441.

- Brown CA, Scherrer NM, Bacon SP. Shifting fundamental frequency in simulated electric-acoustic listening. J. Acoust. Soc. Am. 2009 doi: 10.1121/1.3463808. In preparation.

- Carhart R, Tillman TW. Interaction of competing speech signals with hearing losses. Arch Otolaryngol. 1970;91:273–279. doi: 10.1001/archotol.1970.00770040379010.

- Carhart R, Tillman TW, Greetis ES. Perceptual masking in multiple sound backgrounds. J Acoust Soc Am. 1969;45:694–703. doi: 10.1121/1.1911445.

- Chang JE, Bai JY, Zeng F. Unintelligible low-frequency sound enhances simulated cochlear-implant speech recognition in noise. IEEE Trans. Biomed. Eng. 2006;53:2598–2601. doi: 10.1109/TBME.2006.883793.

- Chatterjee M, Peng S. Processing F0 with cochlear implants: Modulation frequency discrimination and speech intonation recognition. Hear Res. 2008;235:143–156. doi: 10.1016/j.heares.2007.11.004.

- Clark GM, Blamey PJ, Brown AM, Gusby PA, Dowell RC, Franz BK, Pyman BC, Shepherd RK, Tong YC, Webb RL, et al. The University of Melbourne--nucleus multi-electrode cochlear implant. Adv Otorhinolaryngol. 1987;38:V–IX. 1-181.

- Culling JF, Darwin CJ. The role of timbre in the segregation of simultaneous voices with intersecting F0 contours. Percept. Psychophys. 1993a;54:303–309. doi: 10.3758/bf03205265.

- Culling JF, Darwin CJ. Perceptual separation of simultaneous vowels: within and across-formant grouping by F0. J. Acoust. Soc. Am. 1993b;93:3454–3467. doi: 10.1121/1.405675.

- Cullington HE, Zeng F. Speech recognition with varying numbers and types of competing talkers by normal-hearing, cochlear-implant, and implant simulation subjects. J. Acoust. Soc. Am. 2008;123:450–461. doi: 10.1121/1.2805617.

- Cullington HE, Zeng F. Bimodal hearing benefit for speech recognition with competing voice in cochlear implant subject with normal hearing in contralateral ear. Ear Hear. 2009 doi: 10.1097/AUD.0b013e3181bc7722. In Press.

- Dorman MF, Loizou PC. Speech intelligibility as a function of the number of channels of stimulation for normal-hearing listeners and patients with cochlear implants. Am. J. Otol. 1997;18:S113–4.

- Dorman MF, Loizou PC. The identification of consonants and vowels by cochlear implant patients using a 6-channel continuous interleaved sampling processor and by normal-hearing subjects using simulations of processors with two to nine channels. Ear Hear. 1998;19:162–166. doi: 10.1097/00003446-199804000-00008.

- Dorman MF, Loizou PC, Rainey D. Speech intelligibility as a function of the number of channels of stimulation for signal processors using sine-wave and noise-band outputs. J. Acoust. Soc. Am. 1997;102:2403–2411. doi: 10.1121/1.419603.

- Dorman MF, Spahr AJ, Loizou PC, Dana CJ, Schmidt JS. Acoustic simulations of combined electric and acoustic hearing (EAS). Ear Hear. 2005;26:371–380. doi: 10.1097/00003446-200508000-00001.

- Dowell RC, Martin LF, Clark GM, Brown AM. Results of a preliminary clinical trial on a multiple channel cochlear prosthesis. Ann Otol Rhinol Laryngol. 1985;94:244–250.

- Dowell RC, Mecklenburg DJ, Clark GM. Speech recognition for 40 patients receiving multichannel cochlear implants. Arch Otolaryngol Head Neck Surg. 1986;112:1054–1059. doi: 10.1001/archotol.1986.03780100042005.

- Duquesnoy AJ. Effect of a single interfering noise or speech source upon the binaural sentence intelligibility of aged persons. J Acoust Soc Am. 1983;74:739–743. doi: 10.1121/1.389859.

- Faulkner A, Rosen S. Contributions of temporal encodings of voicing, voicelessness, fundamental frequency, and amplitude variation to audio-visual and auditory speech perception. J. Acoust. Soc. Am. 1999;106:2063–2073. doi: 10.1121/1.427951.

- Faulkner A, Ball V, Rosen S, Moore BC, Fourcin A. Speech pattern hearing aids for the profoundly hearing impaired: speech perception and auditory abilities. J. Acoust. Soc. Am. 1992;91:2136–2155. doi: 10.1121/1.403674.

- Festen JM, Plomp R. Relations between auditory functions in impaired hearing. J Acoust Soc Am. 1983;73:652–662. doi: 10.1121/1.388957.

- Festen JM, Plomp R. Effects of fluctuating noise and interfering speech on the speech-reception threshold for impaired and normal hearing. J Acoust Soc Am. 1990;88:1725–1736. doi: 10.1121/1.400247.

- Fu Q, Nogaki G. Noise susceptibility of cochlear implant users: the role of spectral resolution and smearing. J. Assoc. Res. Otolaryngol. 2005;6:19–27. doi: 10.1007/s10162-004-5024-3.

- Fu Q, Chinchilla S, Nogaki G, Galvin JJ3. Voice gender identification by cochlear implant users: the role of spectral and temporal resolution. J. Acoust. Soc. Am. 2005;118:1711–1718. doi: 10.1121/1.1985024.

- Fu QJ, Shannon RV, Wang X. Effects of noise and spectral resolution on vowel and consonant recognition: acoustic and electric hearing. J Acoust Soc Am. 1998;104:3586–3596. doi: 10.1121/1.423941.

- Geurts L, Wouters J. Better place-coding of the fundamental frequency in cochlear implants. J. Acoust. Soc. Am. 2004;115:844–852. doi: 10.1121/1.1642623.

- Grant KW, Ardell LH, Kuhl PK, Sparks DW. The contribution of fundamental frequency, amplitude envelope, and voicing duration cues to speechreading in normal-hearing subjects. J Acoust Soc Am. 1985;77:671–677. doi: 10.1121/1.392335.

- Green T, Faulkner A, Rosen S. Enhancing temporal cues to voice pitch in continuous interleaved sampling cochlear implants. J. Acoust. Soc. Am. 2004;116:2298–2310. doi: 10.1121/1.1785611.

- Green T, Faulkner A, Rosen S, Macherey O. Enhancement of temporal periodicity cues in cochlear implants: effects on prosodic perception and vowel identification. J. Acoust. Soc. Am. 2005;118:375–385. doi: 10.1121/1.1925827.

- Hall JW, Wood EJ. Stimulus duration and frequency discrimination for normal-hearing and hearing-impaired subjects. J. Speech. Hear. Res. 1984;27:252–256. doi: 10.1044/jshr.2702.256.

- Healy EW, Steinbach HM. The effect of smoothing filter slope and spectral frequency on temporal speech information. J Acoust Soc Am. 2007;121:1177–1181. doi: 10.1121/1.2354019.

- Hillenbrand J, Getty LA, Clark MJ, Wheeler K. Acoustic characteristics of American English vowels. J. Acoust. Soc. Am. 1995;97:3099–3111. doi: 10.1121/1.411872.

- Holt LL, Lotto AJ, Kluender KR. Influence of fundamental frequency on stop-consonant voicing perception: a case of learned covariation or auditory enhancement? J. Acoust. Soc. Am. 2001;109:764–774. doi: 10.1121/1.1339825.

- Hopkins K, Moore BCJ. Moderate cochlear hearing loss leads to a reduced ability to use temporal fine structure information. J Acoust Soc Am. 2007;122:1055–1068. doi: 10.1121/1.2749457.

- Hopkins K, Moore BCJ, Stone MA. Effects of moderate cochlear hearing loss on the ability to benefit from temporal fine structure information in speech. J Acoust Soc Am. 2008;123:1140–1153. doi: 10.1121/1.2824018.

- Houtgast T, Festen JM. On the auditory and cognitive functions that may explain an individual's elevation of the speech reception threshold in noise. Int J Audiol. 2008;47:287–295. doi: 10.1080/14992020802127109.

- Kishon-Rabin L, Boothroyd A, Hanin L. Speechreading enhancement: a comparison of spatial-tactile display of voice fundamental frequency (F0) with auditory F0. J Acoust Soc Am. 1996;100:593–602. doi: 10.1121/1.415885.

- Koch DB, Downing M, Osberger MJ, Litvak L. Using current steering to increase spectral resolution in CII and HiRes 90K users. Ear Hear. 2007;28:38S–41S. doi: 10.1097/AUD.0b013e31803150de.

- Kong Y, Carlyon RP. Improved speech recognition in noise in simulated binaurally combined acoustic and electric stimulation. J. Acoust. Soc. Am. 2007;121:3717–3727. doi: 10.1121/1.2717408.

- Kong Y, Stickney GS, Zeng F. Speech and melody recognition in binaurally combined acoustic and electric hearing. J. Acoust. Soc. Am. 2005;117:1351–1361. doi: 10.1121/1.1857526.

- Laneau J, Wouters J, Moonen M. Relative contributions of temporal and place pitch cues to fundamental frequency discrimination in cochlear implantees. J. Acoust. Soc. Am. 2004;116:3606–3619. doi: 10.1121/1.1823311.

- Li N, Loizou PC. A glimpsing account for the benefit of simulated combined acoustic and electric hearing. J. Acoust. Soc. Am. 2008;123:2287–2294. doi: 10.1121/1.2839013.

- Liu S, Del Rio E, Bradlow AR, Zeng F. Clear speech perception in acoustic and electric hearing. J. Acoust. Soc. Am. 2004;116:2374–2383. doi: 10.1121/1.1787528.

- Loizou PC. Mimicking the Human Ear. IEEE Sig. Proc. Mag. 1998:101–129.

- Lorenzi C, Debruille L, Garnier S, Fleuriot P, Moore BCJ. Abnormal processing of temporal fine structure in speech for frequencies where absolute thresholds are normal. J Acoust Soc Am. 2009;125:27–30. doi: 10.1121/1.2939125.

- Lorenzi C, Gilbert G, Carn H, Garnier S, Moore BCJ. Speech perception problems of the hearing impaired reflect inability to use temporal fine structure. Proc Natl Acad Sci U S A. 2006;103:18866–18869. doi: 10.1073/pnas.0607364103.

- McDermott HJ, McKay CM. Pitch ranking with nonsimultaneous dual-electrode electrical stimulation of the cochlea. J Acoust Soc Am. 1994;96:155–162. doi: 10.1121/1.410475.

- Mens LHM, Berenstein CK. Speech perception with mono- and quadrupolar electrode configurations: a crossover study. Otol Neurotol. 2005;26:957–964. doi: 10.1097/01.mao.0000185060.74339.9d.

- Milczynski M, Wouters J, van Wieringen A. Improved fundamental frequency coding in cochlear implant signal processing. J Acoust Soc Am. 2009;125:2260–2271. doi: 10.1121/1.3085642.

- Miller GA, Licklider JCR. The Intelligibility of Interrupted Speech. J Acoust Soc Am. 1950;22:167–173.

- Miyoshi S, Iida Y, Shimizu S, Matsushima J, Ifukube T. Proposal of a new auditory nerve stimulation method for cochlear prosthesis. Artif Organs. 1996;20:941–946. doi: 10.1111/j.1525-1594.1996.tb04574.x.

- Moore BCJ. The role of temporal fine structure processing in pitch perception, masking, and speech perception for normal-hearing and hearing-impaired people. J Assoc Res Otolaryngol. 2008;9:399–406. doi: 10.1007/s10162-008-0143-x.

- Moore BCJ, Glasberg BR, Hopkins K. Frequency discrimination of complex tones by hearing-impaired subjects: Evidence for loss of ability to use temporal fine structure. Hear Res. 2006;222:16–27. doi: 10.1016/j.heares.2006.08.007.

- Nelson PB, Jin S. Factors affecting speech understanding in gated interference: cochlear implant users and normal-hearing listeners. J. Acoust. Soc. Am. 2004;115:2286–2294. doi: 10.1121/1.1703538.

- Nelson PB, Jin S, Carney AE, Nelson DA. Understanding speech in modulated interference: cochlear implant users and normal-hearing listeners. J. Acoust. Soc. Am. 2003;113:961–968. doi: 10.1121/1.1531983.

- Oxenham AJ, Simonson AM. Masking release for low- and high-pass-filtered speech in the presence of noise and single-talker interference. J Acoust Soc Am. 2009;125:457–468. doi: 10.1121/1.3021299.

- Peters RW, Moore BCJ, Baer T. Speech reception thresholds in noise with and without spectral and temporal dips for hearing-impaired and normally hearing people. J Acoust Soc Am. 1998;103:577–587. doi: 10.1121/1.421128.

- Peterson GE, Barney HL. Control Methods Used in a Study of the Vowels. J. Acoust. Soc. Am. 1952;24:175–184.

- Plomp R. Auditory handicap of hearing impairment and the limited benefit of hearing aids. J Acoust Soc Am. 1978;63:533–549. doi: 10.1121/1.381753.

- Plomp R. A signal-to-noise ratio model for the speech-reception threshold of the hearing impaired. J Speech Hear Res. 1986;29:146–154. doi: 10.1044/jshr.2902.146.

- Plomp R. Noise, amplification, and compression: considerations of three main issues in hearing aid design. Ear Hear. 1994;15:2–12.

- Qin MK, Oxenham AJ. Effects of simulated cochlear-implant processing on speech reception in fluctuating maskers. J. Acoust. Soc. Am. 2003;114:446–454. doi: 10.1121/1.1579009.

- Qin MK, Oxenham AJ. Effects of envelope-vocoder processing on F0 discrimination and concurrent-vowel identification. Ear Hear. 2005;26:451–460. doi: 10.1097/01.aud.0000179689.79868.06.

- Qin MK, Oxenham AJ. Effects of introducing unprocessed low-frequency information on the reception of envelope-vocoder processed speech. J. Acoust. Soc. Am. 2006;119:2417–2426. doi: 10.1121/1.2178719.

- Rosen SM, Fourcin AJ, Moore BCJ. Voice pitch as an aid to lipreading. Nature. 1981;291:150–152. doi: 10.1038/291150a0.

- Seligman PM, Patrick JF, Tong YC, Clark GM, Dowell RC, Crosby PA. A signal processor for a multiple-electrode hearing prosthesis. Acta Otolaryngol Suppl. 1984;411:135–139.

- Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science. 1995;270:303–304. doi: 10.1126/science.270.5234.303.

- Spitzer SM, Liss JM, Mattys SL. Acoustic cues to lexical segmentation: a study of resynthesized speech. J. Acoust. Soc. Am. 2007;122:3678–3687. doi: 10.1121/1.2801545.

- Stickney GS, Assmann PF, Chang J, Zeng F. Effects of cochlear implant processing and fundamental frequency on the intelligibility of competing sentences. J Acoust Soc Am. 2007;122:1069–1078. doi: 10.1121/1.2750159.

- Stickney GS, Zeng F, Litovsky R, Assmann P. Cochlear implant speech recognition with speech maskers. J. Acoust. Soc. Am. 2004;116:1081–1091. doi: 10.1121/1.1772399.

- Stone MA, Füllgrabe C, Moore BCJ. Benefit of high-rate envelope cues in vocoder processing: effect of number of channels and spectral region. J Acoust Soc Am. 2008;124:2272–2282. doi: 10.1121/1.2968678.

- Summers V, Leek MR. F0 processing and the separation of competing speech signals by listeners with normal hearing and with hearing loss. J Speech Lang Hear Res. 1998;41:1294–1306. doi: 10.1044/jslhr.4106.1294.

- Turner CW, Gantz BJ, Vidal C, Behrens A, Henry BA. Speech recognition in noise for cochlear implant listeners: benefits of residual acoustic hearing. J. Acoust. Soc. Am. 2004;115:1729–1735. doi: 10.1121/1.1687425.

- Wei C, Cao K, Zeng F. Mandarin tone recognition in cochlear-implant subjects. Hear. Res. 2004;197:87–95. doi: 10.1016/j.heares.2004.06.002.

- Whalen DH, Abramson AS, Lisker L, Mody M. F0 gives voicing information even with unambiguous voice onset times. J Acoust Soc Am. 1993;93:2152–2159. doi: 10.1121/1.406678.

- Whitmal NA, Poissant SF, Freyman RL, Helfer KS. Speech intelligibility in cochlear implant simulations: Effects of carrier type, interfering noise, and subject experience. J. Acoust. Soc. Am. 2007;122:2376–2388. doi: 10.1121/1.2773993.

- Wilson B, Sun X, Schatzer R, Wolford R. Representation of fine structure or fine frequency information with cochlear implants. International Congress Series. 2004;1273:3–6.

- Zhang T, Dorman MF, Spahr AJ. Information from the voice fundamental frequency (F0) region accounts for the majority of the benefit when acoustic stimulation is added to electric stimulation. Ear Hear. 2009 doi: 10.1097/aud.0b013e3181b7190c. In Press.